ERP Markers of Valence Coding in Emotional Speech Processing

Alice Mado Proverbio

Sacha Santoni

Roberta Adorni

Corresponding author: mado.proverbio@unimib.it

Lead Contact

Received 2019 Oct 11; Revised 2019 Dec 20; Accepted 2020 Feb 19; Collection date 2020 Mar 27.

This is an open access article under the CC BY-NC-ND license (http://creativecommons.org/licenses/by-nc-nd/4.0/).

Summary

How is auditory emotional information processed? The study's aim was to compare cerebral responses to emotionally positive or negative spoken phrases matched for structure and content. Twenty participants listened to 198 vocal stimuli while detecting filler phrases containing first names. EEG was recorded from 128 sites. Three event-related potential (ERP) components were quantified and found to be sensitive to emotional valence from 350 ms of latency onward. P450 and late positivity (LP) were enhanced by positive content, whereas anterior negativity was larger to negative content. A similar set of markers (P300, N400, LP) was found previously for the processing of positive versus negative affective vocalizations, prosody, and music, which suggests a common neural mechanism for extracting the emotional content of auditory information. SwLORETA applied to potentials recorded between 350 and 550 ms showed that negative speech activated the right temporo/parietal areas (BA40, BA20/21), whereas positive speech activated the left homologous and inferior frontal areas.

Subject Areas: Neuroscience, Behavioral Neuroscience, Cognitive Neuroscience

Graphical Abstract

Highlights

- Specific ERP markers reflecting negative/positive speech processing were investigated
- Positive valence enhanced P450 and LP responses
- Negative valence enhanced N400-like anterior negativity
- Similar ERP markers were found for the processing of music and affective vocalizations


Introduction

It is known that speech prosody conveys highly informative cues requiring complex processing. Several event-related potential (ERP) studies have shown how the affective content of speech can modulate ERP responses as early as 200 ms for auditory stimuli very short in length (e.g., Paulmann and Kotz, 2008, Paulmann et al., 2010, Paulmann and Pell, 2010, Paulmann et al., 2013, Schirmer et al., 2013, Schirmer and Kotz, 2006) and at about 300–400 ms for more complex and longer stimuli (see the review by Kotz and Paulmann, 2011). Zinchenko et al. (2015, 2017) used an auditory processing task with dynamic multisensory stimuli and showed that negative emotions can have a modulatory effect as early as 100 ms after stimulus onset (as well as subsequently influencing P200 and N200 ERP components).

The P200 modulation is assumed to reflect enhanced attention to emotional stimuli so that they can be preferentially processed (Paulmann et al., 2013). Later ERP components (N400 and LPC) are also modulated by emotional prosody (e.g., Bostanov and Kotchoubey, 2004, Schirmer et al., 2002, Schirmer et al., 2005a, Schirmer and Kotz, 2003, Paulmann and Pell, 2010, Kanske and Kotz, 2007, Schirmer et al., 2013), but the pattern of results is strongly dependent on the stimuli, tasks, and experimental designs used. Generally, ERP responses display larger amplitudes for emotional or arousing stimuli than for neutral stimuli (e.g., Schapkin et al., 2000). For example, in the pioneering psychophysiological study by Begleiter and Platz (1969) it was found that taboo words (such as swear words) elicited larger negative and positive peaks measured at three latency stages (from about 100 to 400 ms) than neutral words. More recently, Yao et al. (2016) explored the effect of word valence and arousal on auditory ERPs and reported that emotionally positive words elicited an enhancement of the late positive complex (LPC, 450–750 ms), whereas negative words elicited an enhancement of the N400 (300–410 ms) response. In addition, low-arousal positive words were associated with a reduction of LPC amplitudes compared with high-arousal positive words, thus suggesting higher responses for more arousing stimuli and a positive/negative modulation of ERP components as a function of words' affective valence. Vallat et al. (2017) found enhanced late positive and late negative components (peaking between 800 and 1000 ms) at posterior and frontal areas for arousing names (but not neutral names).

Although a large literature agrees on a general effect of emotional versus neutral (affective “valence” coding, e.g., Kissler et al., 2007, Herbert et al., 2008) or arousing versus less arousing stimuli (“arousal” coding), especially for P300 (Burleson, 1986, Cuthbert et al., 2000, Hajcak et al., 2010), it is still not very clear whether it is possible to distinguish the “polarity” (happy versus sad) of the emotion expressed, by looking at specific electrophysiological markers.

This study investigated whether it is possible to identify specific ERP markers of emotional prosody in response to ecological vocal utterances expressing a general positive or negative feeling. A few ERP studies in the literature have found greater positive potentials (P2 or P300) to positive prosody and larger N400s or negative shifts to negative prosody and music (Grossmann et al., 2005, Proverbio et al., 2019, Proverbio and Piotti, nd, Yao et al., 2016), but there is no general consensus on this matter.

As for the neural circuits subtending the ability to understand speech prosody, regardless of language semantic content, the key structures seem to be the superior temporal gyrus (STG, also for being able to merge multimodal information such as body language and facial mimicry), the middle temporal gyrus (MTG), and the inferior frontal gyrus (IFG), in addition to the limbic system. For example, an fMRI study by Kotz et al. (2003) analyzed the brain response to words spoken with different vocal intonations: positive (joy), negative (anger), and neutral. In this study an IFG activation was observed for processing both positive and negative intonations. Comparing normal and prosodic speech across all three emotional intonations revealed bilateral (but stronger left) activation of temporal and subcortical regions (putamen and thalamus), as well as activation of left inferior frontal regions, for normal speech. Prosodic speech resulted in bilateral inferior frontal, prefrontal, and caudate activation (Kotz et al., 2003). Again, an fNIRS (functional near-infrared spectroscopy) study by Zhang et al. (2018a) recorded brain activity during passive listening to sentences consisting of non-words, i.e., lacking semantic content, but with a regular subject-verb-object syntactic structure and characterized by a positive (joy), negative (fear and anger), or emotionally neutral prosody. The authors found that the superior temporal cortex, especially the right, middle, and posterior parts of the superior temporal gyrus (BA 22/42), discriminated between emotional and neutral prosodies. Furthermore, brain activation patterns were distinct when positive (happy) prosody was contrasted with negative (fearful and angry) prosody in the left inferior frontal gyrus (BA 45) and the frontal eye field (BA 8), and when angry prosody was contrasted with neutral prosody in bilateral orbital frontal areas (BA 10/11).

Overall, the STG and MTG seem to be the core cortical structures for the vocal processing of emotions (Ethofer et al., 2006, Frühholz and Grandjean, 2013, Zhang et al., 2018b, Johnstone et al., 2006). Its anterior portion has shown activation peaks for emotional expressions compared with neutral expressions in many neuroimaging studies (Bach et al., 2008, Brück et al., 2011, Witteman et al., 2012, Wildgruber et al., 2005, Sander et al., 2005, Kreifelts et al., 2010).

A marked lateralization in favor of the right superior temporal cortex has been observed, which is consistent with the greater sensitivity of the right hemisphere to the slower variations of the acoustic profiles typical of emotional expressions (Liebenthal et al., 2016, Witteman et al., 2012, Beaucousin et al., 2006, Vigneau et al., 2011). Other studies have shown a left bias for processing positive emotions (Davidson, 1992, Canli et al., 1998, Flores-Gutiérrez et al., 2007). Indeed, a large body of research indicates that approach and avoidance reactions are asymmetrically instantiated in the left and right hemisphere, respectively (e.g., Davidson, 1992). Furthermore, a considerable body of neuropsychological and psychophysiological evidence suggests that the left hemisphere is related to positive emotions, whereas the right hemisphere is related to negative emotions (e.g., Maxwell and Davidson, 2007, Roesmann et al., 2019, Wyczesany et al., 2018, Simonov, 1998).

In a previous psychophysiological work (Proverbio et al., 2019) the neural processes involved in the coding of musical stimuli and non-verbal vocalizations were investigated. Both stimulus types could be either negative or positive in emotional valence. The aim was to explore the existence of common neural bases for the extraction and comprehension of the emotional content of auditory information. We wondered why a sad or happy piece of music might be universally categorized as such by people of different age, culture, education, or musical preference. Specifically, it was hypothesized that the comprehension of the emotional significance of musical stimuli (with positive and negative content) might tap into the same neural mechanism involved in the processing of speech prosody and affective vocalizations (Panksepp and Bernatzky, 2002). It has been hypothesized (Cook et al., 2006) that minor chords share some harmonic relationships that are found in sad speech prosody. Paquette and coworkers (2018) proposed that music could involve neuronal circuits devoted to the processing of biologically relevant emotional vocalizations, and a few studies have indeed found common brain activations (including middle and superior temporal cortex, medial frontal cortex, and cingulate cortex) during listening to both vocalizations and music (Aubé et al., 2015, Escoffier et al., 2013, Belin et al., 2008). Although from these studies it can surely be argued that similar mechanisms support the comprehension of the emotional content of vocalizations and music, there is no precise indication of the specific nature and timing of these neural processes, because of the different structure of the stimuli usually compared. It is known that both types of complex stimuli are processed within the secondary auditory cortex (Whitehead and Armony, 2018).

In a previous ERP study (Proverbio et al., 2019) positive and negative emotional vocalizations (e.g., laughing, crying) and digitally extracted musical tones (played on the violin) were used as stimuli. They shared the same melodic profile and main pitch/frequency characteristics. Participants listened to vocalizations or music while detecting rare auditory targets (bird tweeting or piano arpeggios). The analyses of ERP responses showed that P200 and the later late positivity (LP) components were larger to positive stimuli (either voice or music based), whereas negative stimuli increased the amplitude of the anterior N400 component. Furthermore, notation obtained from acoustic signals showed how emotionally negative stimuli tended to be in a minor key, and positive stimuli in a major key, thus shedding some light on the brain's ability to understand music. Interestingly, Chen et al. (2008) found larger negativities (500–700 ms) when participants listened to negative music, and Schapkin and coworkers (2000) found larger P2 and P3 positive peaks to positive than to negative words, which is compatible with the aforementioned pattern of electrophysiological results.

The aim of the present study was therefore to further investigate whether similar markers of emotional prosody (namely positive versus negative) might be found for speech processing, as for music and vocalizations, and whether their source reconstruction revealed distinct or shared neural circuits. We hypothesized that the mechanisms supporting the comprehension of the emotional content (negative versus positive) of music, speech, and vocalizations share common neural circuits. We also expected a possible right lateralization for the processing of negative emotions and a left lateralization for positive emotions (Davidson, 1992, Canli et al., 1998, Flores-Gutiérrez et al., 2007, Proverbio et al., 2019).

Results

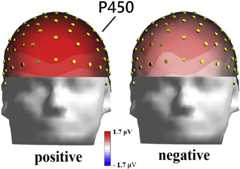

P450 Response

The repeated measures ANOVA performed on P450 amplitude values, recorded between 350 and 550 ms at AF7-AF8, F7-F8, and FT7-FT8 electrode sites, showed a significant effect of Emotional Valence [F (1,19) = 7.21, p < 0.015, ηp² = 0.28], with larger P450 responses to positive (1.29 μV, SD = 0.16) than negative stimuli (0.60 μV, SD = 0.25).

Also significant was the Hemisphere factor [F (1,19) = 8.83, p < 0.005, ηp² = 0.32], with greater P450 responses over the right than left hemisphere. The significant interaction of Valence x Hemisphere [F (1,19) = 7.99, p < 0.01, ηp² = 0.30] and relative post hoc comparisons indicated larger responses over the right than left hemisphere (p < 0.01) for negative stimuli, as can be appreciated by looking at the ERP waveforms of Figure 1 and the topographical maps of Figure 2.
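The effect sizes reported next to each F statistic in this section are consistent with partial eta squared, which for a single effect can be recovered from F and its degrees of freedom as (F × df_effect) / (F × df_effect + df_error). The following is a minimal sketch of that arithmetic check, not the authors' analysis code; the function name is hypothetical, and reading the reported values as partial eta squared is an assumption supported only by this calculation.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Recover partial eta squared from an F statistic and its degrees of freedom."""
    if f_value < 0 or df_effect <= 0 or df_error <= 0:
        raise ValueError("F must be non-negative and degrees of freedom positive.")
    effect_term = f_value * df_effect
    return effect_term / (effect_term + df_error)


# F values and degrees of freedom as reported for the P450 analysis.
p450_effects = {
    "valence": (7.21, 1, 19),
    "hemisphere": (8.83, 1, 19),
    "valence x hemisphere": (7.99, 1, 19),
}

for name, (f_value, df_effect, df_error) in p450_effects.items():
    eta = partial_eta_squared(f_value, df_effect, df_error)
    print(f"{name}: partial eta squared = {eta:.2f}")
```

For the three P450 effects this yields 0.28, 0.32, and 0.30, in agreement with the values given in the text.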

Figure 1.

ERP Waveforms Recorded at Left and Right Anterior Sites in Response to Positive or Negative Sentences

Figure 2.

Isocolour Topographical Maps of P450 (Front View) Recorded between 350 and 550 ms in the Two Valence Conditions

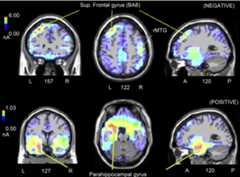

Source Reconstruction

To identify the neural generators of the surface activity within the P450 latency range, a standardized weighted low-resolution electromagnetic tomography (swLORETA) was applied to ERPs recorded between 350 and 550 ms, separately for positive and negative stimuli. In this way we were able to observe neural processing of speech of different emotional valence in the same time window, also allowing comparison with similar ERP findings on music, vocalizations, and prosody (Proverbio et al., 2019, Proverbio and Piotti, nd). The inverse solution is visible in Figure 3, whereas a complete list of active electromagnetic dipoles is reported in Table 1. A further swLORETA (visible in Figure 4) was applied to the difference waves obtained by subtracting ERPs to negative stimuli from ERPs to positive stimuli in the P450 latency range. The homologous “negative minus positive” differential activity was not explored because anterior negativity occurred considerably later in time (1,100–1,200 ms).
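The difference wave mentioned above is a sample-by-sample subtraction of one condition's ERP from the other's, which can then be summarized within a latency window. The sketch below illustrates only that subtraction and windowing step; it does not implement swLORETA. The function names and the amplitude values are made up for illustration and are not taken from the study.

```python
from typing import List, Sequence


def difference_wave(erp_a: Sequence[float], erp_b: Sequence[float]) -> List[float]:
    """Return the sample-by-sample difference erp_a - erp_b."""
    if len(erp_a) != len(erp_b):
        raise ValueError("Both ERPs must have the same number of samples.")
    return [a - b for a, b in zip(erp_a, erp_b)]


def window_mean(times_ms: Sequence[float], values: Sequence[float],
                start_ms: float, end_ms: float) -> float:
    """Mean amplitude of the samples whose latency lies in [start_ms, end_ms]."""
    if len(times_ms) != len(values):
        raise ValueError("Time axis and values must have the same length.")
    selected = [v for t, v in zip(times_ms, values) if start_ms <= t <= end_ms]
    if not selected:
        raise ValueError("No samples fall inside the requested window.")
    return sum(selected) / len(selected)


# Hypothetical amplitudes (microvolts) at one electrode, sampled every 50 ms.
times_ms = [300, 350, 400, 450, 500, 550, 600]
erp_positive = [0.2, 0.9, 1.4, 1.6, 1.3, 1.0, 0.5]
erp_negative = [0.1, 0.4, 0.7, 0.8, 0.6, 0.5, 0.3]

# Positive minus negative, then averaged over the P450 window (350-550 ms).
pos_minus_neg = difference_wave(erp_positive, erp_negative)
p450_diff = window_mean(times_ms, pos_minus_neg, 350, 550)
print(f"Mean positive-minus-negative amplitude, 350-550 ms: {p450_diff:.2f} uV")
```

In practice the same subtraction would be applied at every electrode before the inverse solution is computed on the resulting difference potentials.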

Figure 3.

(Top) Coronal, Axial, and Sagittal Brain Sections Showing Some of the Sources of Activation for P450 Response (350–550 ms) to Negative Sentences According to swLORETA

Since negative sentences were non-targets, the prefrontal activation possibly indicates increased attention and enhanced stimulus encoding. Note also the right activation of the middle temporal gyrus. (Bottom) Coronal, axial, and sagittal brain sections showing some of the sources of activation for the P450 response (350–550 ms) to positive sentences according to swLORETA. The strong activity of the parahippocampal place area (PPA) compared with the prefrontal cortex possibly suggests reduced attention to positive auditory stimuli and a stronger encoding of the visual place stimulus. Note that the scale is different across conditions. A, anterior; P, posterior; L, left hemisphere; R, right hemisphere.

Table 1.

Active Electromagnetic Dipoles Explaining the P450 Surface Voltage Recorded between 350 and 550 ms according to swLORETA in Response to Negative and Positive Stimuli and the P450 Differential Activity (Positive – Negative)

| Magn | Hem | Lobe | Gyrus | BA | Presumed function |
|---|---|---|---|---|---|
| NEGATIVE | | | | | |
| 6.91 | L | F | Superior frontal | 8 | Semantic working memory |
| 6.81 | R | F | Middle frontal | 10 | Attention |
| 6.13 | R | F | Medial frontal | 10 | |
| 6.74 | L | T | Fusiform | 37 | Visual perception of the living room (place, colors, shapes, etc.) |
| 5.62 | R | T | Fusiform | 20 | |
| 6.03 | L | T | Superior temporal gyrus | 42 | Speech processing |
| 5.95 | L | F | Precentral | 6 | |
| 5.72 | R | P | Inferior parietal lobule | 40 | Language-evoked imagery (NEGATIVE) |
| 5.47 | R | P | Inferior parietal lobule | 39 | |
| 5.15 | R | T | Middle temporal | 21 | Prosody (NEGATIVE) |
| 5.13 | R | T | Inferior temporal | 20 | |
| 4.55 | L | F | Superior frontal | 9 | |
| 4.2 | L | Limbic | Cingulate | 31 | Emotion |
| POSITIVE | | | | | |
| 10.35 | L | Limbic | Parahippocampal | 34 | Parahippocampal place area (PPA) |
| 9.58 | R | Limbic | Parahippocampal | 34 | |
| 9.47 | L | F | Superior frontal | 8 | Semantic working memory |
| 8.55 | R | Limbic | Uncus | 20 | Emotion |
| 6.32 | R | Limbic | Cingulate | 23 | |
| 8.47 | L | F | Superior frontal | 10 | Attention |
| 4.9 | R | T | Fusiform | 37 | Visual perception of the living room (place, colors, shapes, etc.) |
| 8.48 | L | T | Fusiform | 20 | |
| 4.9 | R | O | Fusiform | 37 | |
| 4.74 | L | O | Lingual | 18 | |
| 6.07 | L | P | Postcentral | 3 | Language-evoked imagery (POSITIVE) |
| 4.79 | L | P | Superior parietal lobule | 7 | |
| POSITIVE - NEGATIVE | | | | | |
| 9.39 | L | F | Inferior frontal | 6 | Positive emotions |
| 8.27 | L | P | Inferior parietal | 40 | Language-evoked imagery (POSITIVE) |
| 0.21 | L | T | Middle temporal | 21 | Prosody (POSITIVE) |
| 0.18 | R | T | Inferior temporal | 20 | Prosody |

Magn, magnitude in nA; Hem, hemisphere; L, left; R, right; BA, Brodmann area; F, Frontal; T, Temporal; P, Parietal; O, Occipital. Power RMS = 2.

Figure 4.

swLORETA Solution Relative to the Positive - Negative Difference

Left sagittal brain section (centered on the left middle temporal source) showing the electromagnetic activity explaining the differential voltage elicited by positive – negative stimuli in the P450 latency range (350–550 ms) according to swLORETA. A, anterior; P, posterior.

During inattentive perception of negative speech the most active neural sources (ordered by magnitude) were the left superior frontal gyrus (BA8), the right medial frontal gyrus, the fusiform gyrus bilaterally, the speech-specific left superior temporal gyrus (BA42), the right middle and inferior temporal gyri, and parietal and limbic areas.

Table 1 also provides a description of the presumed functions associated with these areas. During inattentive perception of positive speech the most active neural sources were the left and right parahippocampal place area (BA34), the superior frontal gyrus (BA8), as well as limbic, visual, and parietal areas (please see Table 1 for details).

The swLORETA applied to the positive – negative difference in brain activity showed a largely left hemispheric circuit for representing positive emotional hues, including the left inferior frontal area, the left inferior parietal area, and the left middle temporal area, as can be appreciated in Figure 4.

Anterior Negativity

The repeated measures ANOVA performed on anterior negativity mean area amplitude recorded between 1,100 and 1,200 ms at AFp3h-AFp4h, FC3-FC4, and FCC3h-FCC4h electrode sites yielded a significant effect of emotional valence [F (1,19) = 8.92, p < 0.008, ηp² = 0.32], with greater anterior negativity to negative (−1.5 μV, SD = 0.38) than positive speech (−0.74 μV, SD = 0.44). The further interaction of valence x hemisphere [F (1,19) = 5.63, p < 0.03, ηp² = 0.23] and relative post hoc comparisons showed that anterior negativity was larger to negative than positive sentences. Furthermore, over the left hemisphere it was smaller (more positive) to positive than negative sentences (LH-neg = −1.5 μV, SD = 0.38; RH-neg = −1.46 μV, SD = 0.39; LH-pos = −0.63 μV, SD = 0.44; RH-pos = −0.84 μV, SD = 0.44). This can be appreciated by looking at the ERP waveforms of Figure 1 and the topographical maps of Figure 5.

Figure 5.

Isocolour Topographical Maps of Anterior Negativity Voltages (Top and Front Views) Recorded between 1,100 and 1,200 ms in the Two Valence Conditions

The LP focus over the left inferior frontal area can also be observed.

The electrode factor was also significant [F (2,38) = 3.53, p < 0.035, ηp² = 0.16], showing greater anterior negativity amplitudes over frontocentral than anterior frontal sites.
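In a balanced within-subject design, the mean for each valence condition equals the average of its left- and right-hemisphere cell means. The short sketch below applies this to the four anterior negativity cell means reported above as a consistency check; it is an illustrative calculation rather than the authors' procedure, and the function and variable names are hypothetical.

```python
from statistics import mean
from typing import Dict, Tuple

# Cell means (microvolts) as reported for the anterior negativity (1,100-1,200 ms).
cell_means_uv: Dict[Tuple[str, str], float] = {
    ("negative", "left"): -1.50,
    ("negative", "right"): -1.46,
    ("positive", "left"): -0.63,
    ("positive", "right"): -0.84,
}


def marginal_mean(cells: Dict[Tuple[str, str], float], valence: str) -> float:
    """Average the hemisphere cell means belonging to one valence condition."""
    values = [v for (cell_valence, _hemisphere), v in cells.items()
              if cell_valence == valence]
    if not values:
        raise ValueError(f"No cells found for valence '{valence}'.")
    return mean(values)


negative_mean = marginal_mean(cell_means_uv, "negative")
positive_mean = marginal_mean(cell_means_uv, "positive")
print(f"negative: {negative_mean:.3f} uV, positive: {positive_mean:.3f} uV")
```

The two averages come out at roughly −1.48 μV for negative speech and −0.735 μV for positive speech, both negative in sign and consistent in magnitude with the 1.5 μV and 0.74 μV valence means given in the text.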

Late Positivity

The repeated measures ANOVA performed on LP mean area amplitude recorded between 1,300 and 1,500 ms over F1-F2, Fpz-FZ electrode sites showed a significant effect of emotional valence [F (1, 19) = 12.1, p < 0.0025, ηp² = 0.38], with larger LP responses to positive (−0.48 μV, SD = 0.34) than negative stimuli (−1.36 μV, SD = 0.36), as can be seen in Figures 1 and 5. The electrode factor was also significant [F (3, 57) = 3.6, p < 0.02, ηp² = 0.20], indicating greater responses over Fpz than other sites.

Discussion

The aim of the present study was to investigate the neural correlates involved in the understanding of emotional language by analyzing the bioelectrical response to speech as a function of its emotional and prosodic content (positive versus negative).

The first component showing a valence-dependent modulation was P450 (350–550 ms), which was greater in amplitude to positive than negative sentences. Similarly, previous studies have shown an enhancement of the earlier P200 response to positive vs. negative prosody (Paulmann and Kotz, 2008). Again, Kanske and Kotz (2007) found that semantically positive words elicited larger P2 responses compared with neutral words. In a recent study by Proverbio et al. (2019) contrasting ERP responses to positive versus negative vocalizations (for example, laughter and crying) it was found that positive stimuli elicited larger P200 responses than negative stimuli. It is possible that differences in the latency of the first modulated positive response (P200, P300, or P450) depend on stimulus duration or complexity.

ERP analyses also showed that P450 to negative stimuli was greater over the right than left frontal areas, whereas no significant hemispheric difference was observed for positive stimuli. This right hemispheric asymmetry for negatively valenced stimuli fits well with the “valence hypothesis” by Davidson et al. (1999), according to which there would be a right hemispheric dominance for the processing of emotionally negative stimuli.

The second component considered, a negative deflection recorded between 1,100 and 1,200 ms and here named anterior negativity response, was significantly larger in response to emotionally negative verbal expressions. Since sentences did not differ in syntactic structure or word number/frequency it is reasonable to think that this difference might be ascribed to the different emotional content and valence (including prosodic inflections). Similar enhanced negativity to emotional prosody was found in an ERP study by Schirmer et al. (2002) and by Grossmann et al. (2005), who reported a greater negativity shift, maximal over frontal and central sites, to negative stimuli. In the latter study, 7-month-old infants listened to words characterized by a happy, angry, or neutral prosody. Their ERP responses showed that the negative prosody elicited more negative potentials than the other prosodic intonations. An enhanced N400 response to negatively rather than positively valenced stimuli was also found by Proverbio et al. (2019), who reported larger N400s to human vocalizations, as well as to music obtained by transforming those vocalizations into instrumental music. Intriguingly, a similar N400 enhancement during processing of negative vs. positive stimuli has been reported by Proverbio and Piotti (nd), who recently compared the processing of viola-played music obtained by digitally transforming positive versus negative speech utterances into music. Again, Yao et al. (2016) found that emotionally positive words elicited an enhancement of the late positive complex (LPC, 450–750 ms), whereas negative words elicited an enhancement of the N400 (300–410 ms) response. This similarity suggests the existence of a common mechanism for extracting and comprehending the emotional content of music, speech, and vocalizations, based on the harmonic spectrum and melodic structure of prosody, voice, and music.

The unique contribution of this research compared with previous findings in the ERP literature on emotional prosody is the comparison of bioelectrical responses to speech or vocalizations (Proverbio et al., 2019) with those elicited by musical stimuli (played by violin/viola) and the discovery of a unitary set of ERP markers of emotional valence that is not dependent on stimulus modality.

The last component modulated by emotional valence was late positivity, which was quantified at a relatively early onset (1,300–1,500 ms) but was long lasting. LP response was larger to positive than to negative sentences. Again, this effect was also found by the previously mentioned studies examining the brain response to vocalizations and music (Proverbio et al., 2019, Proverbio and Piotti, nd). Other ERP studies in the literature support our finding of an increased late positivity to positively valenced stimuli (Carretié et al., 2008, Hinojosa et al., 2010, Kanske and Kotz, 2007, Schacht and Sommer, 2009, Herbert et al., 2006, Herbert et al., 2008). Bayer and Schacht (2014) observed similar results for LP in a study in which the participants were visually presented with words, faces, or images of positive, negative, or neutral valence. As for a functional interpretation, the modulations of LP could reflect a prolonged evaluation of the stimulus, probably caused by its intrinsic emotional relevance (Cuthbert et al., 2000, Schupp et al., 2004).

To investigate the existence of distinct neural circuits for processing different speech emotional contents, a swLORETA source reconstruction was performed on the earliest modulation, corresponding to the peak of the P450 response (350–550 ms). In reviewing dipole localizations and approximate Brodmann areas (the estimated spatial accuracy of swLORETA being 5 mm), it should be noted that the technique has limited spatial resolution, as compared, for example, to MEG or fMRI. The strongest generator active for both valence conditions was the superior frontal gyrus of the left hemisphere (BA 8). The left PFC seems involved in stimulus encoding and semantic working memory (Tulving et al., 1994). According to Gabrieli et al. (1998) it plays a crucial role in semantic working memory. For example, patients with left prefrontal focal lesions (du Boisgueheneuc et al., 2006) show a clear deficit in working memory. It should be mentioned, however, that the same region (BA8) is implicated in more general memory functions, being also involved in the processing of musical stimuli (Flores-Gutiérrez et al., 2007, Platel et al., 2003, Proverbio and De Benedetto, 2018). The activation of the superior frontal gyrus (BA 8) was in fact also found in the previously mentioned ERP studies by Proverbio and coauthors (Proverbio et al., 2019, Proverbio and Piotti, nd) in response to music and vocalizations, with greater signals for negative stimuli. Also active during listening to negative speech was the left superior temporal gyrus (BA 42), also known as secondary auditory cortex (Wernicke area), devoted to phoneme and word recognition (e.g., Ardila et al., 2016). Heschl's gyri (BA41) were not found active at this late stage of processing. Also active was the so-called extended Wernicke area (BA20 and BA37 of the left hemisphere). According to meta-analytical studies (Ardila et al., 2016) these regions would be implicated in the reception and understanding of speech. However, they might also indicate enhanced visual processing of the background: objects, colors, shapes, and place (and lesser orienting toward the auditory channel). Both interpretations need further data before a definitive conclusion can be reached.

The second strongest focus of activity during listening to negative sentences was the medial/superior frontal cortex of the right hemisphere, which has been characterized in the literature as involved in sustaining attention (Velanova et al., 2003), as well as in attentional control and allocation (Pollmann et al., 2000, Pollmann, 2001), and context updating (Zinchenko et al., 2019). Since the task expressly required participants to ignore non-target stimuli, this effect might indicate stronger attention-attracting properties of negative speech. This interpretation is also supported by the much stronger activity of frontal and speech-devoted areas during listening to negative speech, as opposed to the activation of visual areas and parahippocampal place areas (possibly reflecting the processing of the visual background: a place) during listening to positive speech. Other areas involved in speech processing were parietal regions, with a different hemispheric asymmetry as a function of valence. More specifically, the right inferior parietal lobule (BA 39 and BA 40) was found to be active in response to negative speech, whereas the left superior parietal lobule (BA 7) and postcentral gyrus (BA 3) were found to be active during processing of positive speech. This asymmetry might be interpreted in the light of the valence hemispheric specialization (e.g., Davidson et al., 1999), whereas their activation might be linked to their role in language-evoked imagery (Just et al., 2004).

Specifically active for processing negative speech were also the right middle and inferior temporal gyri (BA21 and BA20). These areas seem to respond to both human and animal negative vocalizations (Belin et al., 2008), as well as to music extracted from human negative vocalizations (Proverbio et al., 2019). A similar finding was reported by Altenmüller et al. (2002), who found that listening to negative melodies engaged the right superior temporal gyrus (BA 42 and BA 22) and the right anterior cingulate cortex (BA 24), whereas listening to positive music engaged the left middle temporal gyrus (BA 21) and left inferior temporal gyrus (BA 20). This pattern of lateralization fits with the hypothesis proposed by various authors according to which positive emotions and stimuli that induce this type of emotion would be processed mainly by the left hemisphere, whereas negative emotions would be mainly processed by the right hemisphere (Davidson, 1992, Canli et al., 1998). Quite similarly, Flores-Gutiérrez et al. (2007) had participants listen to music with positive or negative emotional value and found that "unpleasant" music was mainly processed by the right hemisphere, whereas "pleasant" music mainly activated the left hemisphere.

Conversely, specifically active for processing positive speech was the parahippocampal gyrus (PHG), bilaterally but with a slight left-hemisphere asymmetry. Since positive speech was task irrelevant (and less biologically relevant than negative speech) and participants were required to visually fixate on the center of a picture displaying a place (namely, a living room), this activation might be linked to the role of the parahippocampal gyrus in place processing (parahippocampal place area, PPA; Epstein, 1998). However, the role of the PHG in the semantic processing of verbal stimuli with positive emotional value cannot be disregarded. Indeed, an fMRI study in which participants silently read positive, negative, and neutral adjectives showed that adjectives with positive emotional value elicited more pronounced amygdala and PHG activation than emotionally neutral and negative adjectives (Herbert et al., 2009). Furthermore, an fMRI study (Johnstone et al., 2006) identified the neural circuits (including the parahippocampal area) involved in processing voices with an emotionally positive prosody. A further swLORETA analysis applied to the differential positive – negative response (in the P450 latency range) allowed us to investigate the neural circuits specifically involved in the processing of positive emotional connotations of auditory information, regardless of other factors, such as the processing of visual background, task-related factors (working memory, attention), and speech processing. Source reconstruction data supported the existence of a strongly left-lateralized neural network including the left inferior frontal area (which agrees with the available literature on positive emotions carried by auditory stimuli, such as Proverbio et al., 2019, Belin et al., 2008, Kotz et al., 2003, Koelsch et al., 2006, and Zhang et al., 2018a, Zhang et al., 2018b), the left inferior parietal area (e.g., Flores-Gutiérrez et al., 2007), and the left middle temporal gyrus (e.g., Proverbio and Piotti, n.d., Altenmüller et al., 2002).
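As a rough illustration of the differential positive – negative response mentioned above, the following sketch subtracts a negative-condition average from a positive-condition average and takes the mean amplitude within a latency window. The function names, the single-channel list representation, and the default 350–550 ms window are assumptions made for illustration only; this is not the authors' analysis pipeline, and the swLORETA source reconstruction itself is not shown.

```python
def difference_wave(erp_positive, erp_negative):
    """Sample-by-sample positive-minus-negative difference for one channel.

    Both inputs are sequences of amplitudes (e.g., in microvolts) taken from
    condition-averaged ERPs sampled at the same latencies.
    """
    if len(erp_positive) != len(erp_negative):
        raise ValueError("condition averages must have the same number of samples")
    return [p - n for p, n in zip(erp_positive, erp_negative)]


def mean_amplitude(wave, times_ms, window_ms=(350.0, 550.0)):
    """Mean amplitude of `wave` over the samples whose latency lies in `window_ms`.

    The default window is a hypothetical choice for this sketch.
    """
    if len(wave) != len(times_ms):
        raise ValueError("wave and times_ms must have the same length")
    start, end = window_ms
    in_window = [v for v, t in zip(wave, times_ms) if start <= t <= end]
    if not in_window:
        raise ValueError("no samples fall inside the requested window")
    return sum(in_window) / len(in_window)


# Toy values for a single electrode (not real data).
times = [300.0, 400.0, 500.0, 600.0]
positive = [1.0, 3.0, 5.0, 2.0]
negative = [1.0, 1.0, 2.0, 2.0]
diff = difference_wave(positive, negative)
p450_effect = mean_amplitude(diff, times)
```

In practice the same subtraction would be repeated for every electrode, and the resulting difference topography would then be passed to the source reconstruction step.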

In conclusion, the data outlined above have identified three ERP markers indexing speech valence processing, namely, enhanced early and late anterior positivities (P450 and LP) for processing speech that conveyed positive emotions and an enhanced anterior negativity for speech that conveyed negative emotions. It should be noted that a somewhat similar set of ERP components (P300, N400, and LP) was also seen in a series of ERP studies involving the perception of non-verbal vocalizations and music, as well as in a study investigating the brain response to music extracted from the same speech stimuli used in the present study. It is possible that differences in the latency of these ERP responses across studies depend on differences in stimulus nature, duration, and complexity.

The similarity hints at a common mechanism for extracting emotional cues from auditory information. Furthermore, according to source reconstruction, and in line with recent fMRI studies, the key role of right medial temporal cortex for processing negative prosodic/melodic information was supported (Davidson, 1992, Canli et al., 1998, Flores-Gutiérrez et al., 2007, Proverbio et al., 2019, Proverbio and Piotti, n.d., Belin et al., 2008). In addition, a later activation of the left inferior frontal area (at LP level) for processing positive prosodic/melodic information was found, which was also predicted on the basis of previous research (Proverbio et al., 2019, Belin et al., 2008, Kotz et al., 2003, Koelsch et al., 2006, Zhang et al., 2018a, Zhang et al., 2018b).

In fact, according to the literature (Ethofer et al., 2006, Bach et al., 2008, Lattner et al., 2005, Brück et al., 2011, Zhang et al., 2018a, Zhang et al., 2018b), after sensory processing and the extraction of acoustic parameters, the right superior temporal cortex would identify the affective nature of prosody by means of multimodal integration, whereas the bilateral inferior frontal gyrus would perform a sophisticated evaluation and semantic understanding of the vocally expressed emotions at a later stage. A general right hemispheric dominance for processing negative emotional content of auditory information was also found in the present study.

Limitations of the Study

The study is somewhat limited by the reduced spatial resolution of the EEG technique as compared with other neuroimaging techniques such as fMRI or MEG. Another potential limitation might be the uncommon choice of time windows for ERP quantification. However, the relatively late time windows were justified by the prolonged duration of the stimuli.

Methods

All methods can be found in the accompanying Transparent Methods supplemental file.

Acknowledgments

This study was funded by 2017-ATE-0058 grant no. 16940 by University of Milano-Bicocca to A.M.P., entitled “The processing of emotional valence in language and music: an ERP investigation.” The authors are deeply grateful to Francesco de Benedetto for his technical support. We are also grateful to Dr. Patricia Klaas for the final English editing.

Author Contributions

A.M.P. designed the study, interpreted the data, and wrote the paper. S.S. created and validated the stimuli. S.S. and R.A. recorded and analyzed EEG/ERP data. All authors have approved the submitted version of the manuscript.

Declaration of Interests

The authors declare that the research was conducted in the absence of any real or perceived conflict of interest.

Published: March 27, 2020

Footnotes

Supplemental Information can be found online at https://doi.org/10.1016/j.isci.2020.100933.

References

- Altenmüller E., Schürmann K., Lim V., Parlitz D. Hits to the left - flops to the right. Different emotions during music listening are reflected in cortical lateralisation patterns. Neuropsychologia. 2002;40:1017–1025. doi: 10.1016/s0028-3932(02)00107-0.

- Ardila A., Bernal B., Rosselli M. How localized are language brain areas? A review of Brodmann areas involvement in oral language. Arch. Clin. Neuropsychol. 2016;31:112–122. doi: 10.1093/arclin/acv081.

- Aubé W., Angulo-Perkins A., Peretz I., Concha L., Armony J.L. Fear across the senses: brain responses to music, vocalizations and facial expressions. Soc. Cogn. Affect. Neurosci. 2015;10:399–407. doi: 10.1093/scan/nsu067.

- Bach D.R., Grandjean D., Sander D., Herdener M., Strik W.K., Seifritz E. The effect of appraisal level on processing of emotional prosody in meaningless speech. Neuroimage. 2008;42:919–927. doi: 10.1016/j.neuroimage.2008.05.034.

- Bayer M., Schacht A. Event-related brain responses to emotional words, pictures, and faces–a cross-domain comparison. Front. Psychol. 2014;5:1106. doi: 10.3389/fpsyg.2014.01106.

- Beaucousin V., Lacheret A., Turbelin M.R., Morel M., Mazoyer B., Tzourio-Mazoyer N. FMRI study of emotional speech comprehension. Cereb. Cortex. 2006;17:339–352. doi: 10.1093/cercor/bhj151.

- Begleiter H., Platz A. Cortical evoked potentials to semantic stimuli. Psychophysiology. 1969;6:91–100. doi: 10.1111/j.1469-8986.1969.tb02887.x.

- Belin P., Fecteau S., Charest I., Nicastro N., Hauser M.D., Armony J.L. Human cerebral response to animal affective vocalizations. Proc. R. Soc. B Biol. Sci. 2008;275:473–481. doi: 10.1098/rspb.2007.1460.

- Bostanov V., Kotchoubey B. Recognition of affective prosody: continuous wavelet measures of event-related brain potentials to emotional exclamations. Psychophysiology. 2004;41:259–268. doi: 10.1111/j.1469-8986.2003.00142.x.

- Brück C., Kreifelts B., Wildgruber D. Emotional voices in context: a neurobiological model of multimodal affective information processing. Phys. Life Rev. 2011;8:383–403. doi: 10.1016/j.plrev.2011.10.002.

- du Boisgueheneuc F., Levy R., Volle E., Seassau M., Duffau H., Kinkingnehun S., Samson Y., Zhang S., Dubois B. Functions of the left superior frontal gyrus in humans: a lesion study. Brain. 2006;129(Pt 12):3315–3328. doi: 10.1093/brain/awl244.

- Burleson M.H. Multiple P3s to emotional stimuli and their theoretical significance. Psychophysiology. 1986;23:684–694. doi: 10.1111/j.1469-8986.1986.tb00694.x.

- Canli T., Desmond J.E., Zhao Z., Glover G., Gabrieli J.D.E. Hemispheric asymmetry for emotional stimuli detected with fMRI. Neuroreport. 1998;9:3233–3239. doi: 10.1097/00001756-199810050-00019.

- Carretié L., Hinojosa J.A., Albert J., López-Martín S., De La Gándara B.S., Igoa J.M., Sotillo M. Modulation of ongoing cognitive processes by emotionally intense words. Psychophysiology. 2008;45:188–196. doi: 10.1111/j.1469-8986.2007.00617.x.

- Chen J., Yuan J., Huang H., Chen C., Li H. Music-induced mood modulates the strength of emotional negativity bias: an ERP study. Neurosci. Lett. 2008;445:135–139. doi: 10.1016/j.neulet.2008.08.061.

- Cook N.D., Fujisawa T.X., Takami K. Evaluation of the affective valence of speech using pitch substructure. IEEE Trans. Audio Speech Lang. Process. 2006;14:142–151.

- Cuthbert B.N., Schupp H.T., Bradley M.M., Birbaumer N., Lang P.J. Brain potentials in affective picture processing: covariation with autonomic arousal and affective report. Biol. Psychol. 2000;52:95–111. doi: 10.1016/s0301-0511(99)00044-7.

- Davidson R.J. Anterior cerebral asymmetry and the nature of emotion. Brain Cogn. 1992;20:125–151. doi: 10.1016/0278-2626(92)90065-t.

- Davidson R.J., Abercrombie H., Nitschke J.B., Putnam K. Regional brain function, emotion and disorders of emotion. Curr. Opin. Neurobiol. 1999;9:228–234. doi: 10.1016/s0959-4388(99)80032-4.

- Epstein R. A cortical representation of the local visual environment. Nature. 1998;392:598–601. doi: 10.1038/33402.

- Escoffier N., Zhong J., Schirmer A. Emotional expressions in voice and music: same code, same effect? Hum. Brain Mapp. 2013;34:1796–1810. doi: 10.1002/hbm.22029.

- Ethofer T., Anders S., Erb M., Herbert C., Wiethoff S., Kissler J., Grodd W., Wildgruber D. Cerebral pathways in processing of affective prosody: a dynamic causal modeling study. Neuroimage. 2006;30:580–587. doi: 10.1016/j.neuroimage.2005.09.059.

- Flores-Gutiérrez E.O., Díaz J.L., Barrios F.A., Favila-Humara R., Guevara M.Á., del Río-Portilla Y., Corsi-Cabrera M. Metabolic and electric brain patterns during pleasant and unpleasant emotions induced by music masterpieces. Int. J. Psychophysiol. 2007;65:69–84. doi: 10.1016/j.ijpsycho.2007.03.004.

- Frühholz S., Grandjean D. Multiple subregions in superior temporal cortex are differentially sensitive to vocal expressions: a quantitative meta-analysis. Neurosci. Biobehav. Rev. 2013;37:24–35. doi: 10.1016/j.neubiorev.2012.11.002.

- Gabrieli J.D., Poldrack R.A., Desmond J.E. The role of left prefrontal cortex in language and memory. Proc. Natl. Acad. Sci. U S A. 1998;95:906–913. doi: 10.1073/pnas.95.3.906.

- Grossmann T., Striano T., Friederici A.D. Infants’ electric brain responses to emotional prosody. Neuroreport. 2005;16:1825–1828. doi: 10.1097/01.wnr.0000185964.34336.b1.

- Hajcak G., MacNamara A., Olvet D.M. Event-related potentials, emotion, and emotion regulation: an integrative review. Dev. Neuropsychol. 2010;35(2):129–155. doi: 10.1080/87565640903526504.

- Herbert C., Kissler J., Junghöfer M., Peyk P., Rockstroh B. Processing of emotional adjectives: evidence from startle EMG and ERPs. Psychophysiology. 2006;43:197–206. doi: 10.1111/j.1469-8986.2006.00385.x.

- Herbert C., Junghofer M., Kissler J. Event related potentials to emotional adjectives during reading. Psychophysiology. 2008;45:487–498. doi: 10.1111/j.1469-8986.2007.00638.x.

- Herbert C., Ethofer T., Anders S., Junghofer M., Wildgruber D., Grodd W., Kissler J. Amygdala activation during reading of emotional adjectives - an advantage for pleasant content. Soc. Cogn. Affect. Neurosci. 2009;4:35–49. doi: 10.1093/scan/nsn027.

- Hinojosa J.A., Méndez-Bértolo C., Pozo M.A. Looking at emotional words is not the same as reading emotional words: behavioral and neural correlates. Psychophysiology. 2010;47:748–757. doi: 10.1111/j.1469-8986.2010.00982.x.

- Johnstone T., Van Reekum C.M., Oakes T.R., Davidson R.J. The voice of emotion: an FMRI study of neural responses to angry and happy vocal expressions. Soc. Cogn. Affect. Neurosci. 2006;1:242–249. doi: 10.1093/scan/nsl027.

- Just M.A., Newman S.D., Keller T.A., McEleney A., Carpenter P.A. Imagery in sentence comprehension: an fMRI study. Neuroimage. 2004;21:112–124. doi: 10.1016/j.neuroimage.2003.08.042.

- Kanske P., Kotz S.A. Concreteness in emotional words: ERP evidence from a hemifield study. Brain Res. 2007;1148:138–148. doi: 10.1016/j.brainres.2007.02.044.

- Kissler J., Herbert C., Peyk P., Junghofer M. Buzzwords: early cortical responses to emotional words during reading. Psychol. Sci. 2007;18:475–480. doi: 10.1111/j.1467-9280.2007.01924.x.

- Koelsch S., V Cramon D.Y., Müller K., Friederici A.D. Investigating emotion with music: an fMRI study. Hum. Brain Mapp. 2006;27:239–250. doi: 10.1002/hbm.20180.

- Kotz S.A., Meyer M., Alter K., Besson M., von Cramon D.Y., Friederici A.D. On the lateralization of emotional prosody: an event-related functional MR investigation. Brain Lang. 2003;86:366–376. doi: 10.1016/s0093-934x(02)00532-1.

- Kotz S.A., Paulmann S. Emotion, language, and the brain. Lang. Linguist. Compass. 2011;5:108–125.

- Kreifelts B., Ethofer T., Huberle E., Grodd W., Wildgruber D. Association of trait emotional intelligence and individual fMRI-activation patterns during the perception of social signals from voice and face. Hum. Brain Mapp. 2010;31:979–991. doi: 10.1002/hbm.20913.

- Lattner S., Meyer M., Friederici A.D. Voice perception: sex, pitch, and the right hemisphere. Hum. Brain Mapp. 2005;24:11–20. doi: 10.1002/hbm.20065.

- Liebenthal E., Silbersweig D.A., Stern E. The language, tone and prosody of emotions: neural substrates and dynamics of spoken-word emotion perception. Front. Neurosci. 2016;10:506. doi: 10.3389/fnins.2016.00506.

- Maxwell J.S., Davidson R.J. Emotion as motion: asymmetries in approach and avoidant actions. Psychol. Sci. 2007;18:1113–1119. doi: 10.1111/j.1467-9280.2007.02033.x.

- Panksepp J., Bernatzky G. Emotional sounds and the brain: the neuro-affective foundations of musical appreciation. Behav. Process. 2002;60:133–155. doi: 10.1016/s0376-6357(02)00080-3.

- Paulmann S., Kotz S.A. Early emotional prosody perception based on different speaker voices. Neuroreport. 2008;19:209–213. doi: 10.1097/WNR.0b013e3282f454db.

- Paulmann S., Pell M.D. Contextual influences of emotional speech prosody on face processing: how much is enough? Cogn. Affect. Behav. Neurosci. 2010;10:230–242. doi: 10.3758/CABN.10.2.230.

- Paulmann S., Seifert S., Kotz S.A. Orbito-frontal lesions cause impairment during late but not early emotional prosodic processing. Soc. Neurosci. 2010;5:59–75. doi: 10.1080/17470910903135668.

- Paulmann S., Bleichner M., Kotz S.A. Valence, arousal, and task effects in emotional prosody processing. Front. Psychol. 2013;4:345. doi: 10.3389/fpsyg.2013.00345.

- Platel H., Baron J.C., Desgranges B., Bernard F., Eustache F. Semantic and episodic memory of music are subserved by distinct neural networks. Neuroimage. 2003;20:244–256. doi: 10.1016/s1053-8119(03)00287-8.

- Pollmann S., Weidner R., Müller H.J., von Cramon D.Y. A fronto-posterior network involved in visual dimension changes. J. Cogn. Neurosci. 2000;12:480–494. doi: 10.1162/089892900562156.

- Pollmann S. Switching between dimensions, locations, and responses: the role of the left frontopolar cortex. Neuroimage. 2001;14:118–124. doi: 10.1006/nimg.2001.0837.

- Proverbio A.M., De Benedetto F. Auditory enhancement of visual memory encoding is driven by emotional content of the auditory material and mediated by superior frontal cortex. Biol. Psychol. 2018;132:164–175. doi: 10.1016/j.biopsycho.2017.12.003.

- Proverbio A.M., De Benedetto F., Guazzone M. Shared neural mechanisms for processing emotions in music and vocalizations. Eur. J. Neurosci. 2019 doi: 10.1111/ejn.14650.

- Proverbio A.M., Piotti E. Common neural bases for processing speech prosody and music: an integrated model. Psychol. Sci. n.d.

- Roesmann K., Dellert T., Junghoefer M., Kissler J., Zwitserlood P., Zwanzger P., Dobel C. The causal role of prefrontal hemispheric asymmetry in valence processing of words - insights from a combined cTBS-MEG study. Neuroimage. 2019;191:367–379. doi: 10.1016/j.neuroimage.2019.01.057.

- Sander D., Grandjean D., Pourtois G., Schwartz S., Seghier M.L., Scherer K.R., Vuilleumier P. Emotion and attention interactions in social cognition: brain regions involved in processing anger prosody. Neuroimage. 2005;28:848–858. doi: 10.1016/j.neuroimage.2005.06.023.

- Schacht A., Sommer W. Time course and task dependence of emotion effects in word processing. Cogn. Affect. Behav. Neurosci. 2009;9:28–43. doi: 10.3758/CABN.9.1.28.

- Schapkin S.A., Gusev A.N., Kuhl J. Categorization of unilaterally presented emotional words: an ERP analysis. Acta Neurobiol. Exp. (Wars) 2000;60:17–28. doi: 10.55782/ane-2000-1321.

- Schirmer A., Kotz S.A., Friederici A.D. Sex differentiates the role of emotional prosody during word processing. Cogn. Brain Res. 2002;14:228–233. doi: 10.1016/s0926-6410(02)00108-8.

- Schirmer A., Kotz S.A. ERP evidence for a sex-specific Stroop effect in emotional speech. J. Cogn. Neurosci. 2003;15:1135–1148. doi: 10.1162/089892903322598102.

- Schirmer A., Striano T., Friederici A.D. Sex differences in the preattentive processing of vocal emotional expressions. Neuroreport. 2005;16:635–639. doi: 10.1097/00001756-200504250-00024.

- Schirmer A., Kotz S.A. Beyond the right hemisphere: brain mechanisms mediating vocal emotional processing. Trends Cogn. Sci. 2006;10:24–30. doi: 10.1016/j.tics.2005.11.009.

- Schirmer A., Chen C.B., Ching A., Tan L., Hong R.Y. Vocal emotions influence verbal memory: neural correlates and interindividual differences. Cogn. Affect. Behav. Neurosci. 2013;13:80–93. doi: 10.3758/s13415-012-0132-8.

- Schupp H.T., Junghöfer M., Weike A.I., Hamm A.O. The selective processing of briefly presented affective pictures: an ERP analysis. Psychophysiology. 2004;41:441–449. doi: 10.1111/j.1469-8986.2004.00174.x.

- Simonov P.V. The functional asymmetry of the emotions. Zh. Vyssh. Nerv. Deiat. Im. I P Pavlova. 1998;48:375–380.

- Tulving E., Kapur S., Craik F.I.M., Moscovitch M., Houle S. Hemispheric encoding/retrieval asymmetry in episodic memory: positron emission tomography findings. Proc. Natl. Acad. Sci. U S A. 1994;91:2016–2020. doi: 10.1073/pnas.91.6.2016.

- Vallat R., Lajnef T., Eichenlaub J.B., Berthomier C., Jerbi K., Morlet D., Ruby M. Increased evoked potentials to arousing auditory stimuli during sleep: implication for the understanding of dream recall. Front. Hum. Neurosci. 2017;21:132. doi: 10.3389/fnhum.2017.00132.

- Velanova K., Jacoby L.L., Wheeler M.E., McAvoy M.P., Petersen S.E., Buckner R.L. Functional-anatomic correlates of sustained and transient processing components engaged during controlled retrieval. J. Neurosci. 2003;17:8460–8470. doi: 10.1523/JNEUROSCI.23-24-08460.2003.

- Vigneau M., Beaucousin V., Hervé P.Y., Jobard G., Petit L., Crivello F., Mellet E., Zago L., Mazoyer B., Tzourio-Mazoyer N. What is right-hemisphere contribution to phonological, lexico-semantic, and sentence processing?: insights from a meta-analysis. Neuroimage. 2011;54:577–593. doi: 10.1016/j.neuroimage.2010.07.036.

- Whitehead J.C., Armony J.L. Singing in the brain: neural representation of music and voice as revealed by fMRI. Hum. Brain Mapp. 2018;39:4913–4924. doi: 10.1002/hbm.24333.

- Wildgruber D., Riecker A., Hertrich I., Erb M., Grodd W., Ethofer T., Ackermann H. Identification of emotional intonation evaluated by fMRI. Neuroimage. 2005;24:1233–1241. doi: 10.1016/j.neuroimage.2004.10.034.

- Witteman J., Van Heuven V.J., Schiller N.O. Hearing feelings: a quantitative meta-analysis on the neuroimaging literature of emotional prosody perception. Neuropsychologia. 2012;50:2752–2763. doi: 10.1016/j.neuropsychologia.2012.07.026.

- Wyczesany M., Capotosto P., Zappasodi F., Prete G. Hemispheric asymmetries and emotions: evidence from effective connectivity. Neuropsychologia. 2018;121:98–105. doi: 10.1016/j.neuropsychologia.2018.10.007.

- Yao Z., Yu D., Wang L., Zhu X., Guo J., Wang Z. Effects of valence and arousal on emotional word processing are modulated by concreteness: behavioral and ERP evidence from a lexical decision task. Int. J. Psychophysiol. 2016;110:231–242. doi: 10.1016/j.ijpsycho.2016.07.499.

- Zhang D., Zhou Y., Yuan J. Speech prosodies of different emotional categories activate different brain regions in adult cortex: an fNIRS study. Sci. Rep. 2018;8:218. doi: 10.1038/s41598-017-18683-2.

- Zhang M., Ge Y., Kang C., Guo T., Peng D. ERP evidence for the contribution of meaning complexity underlying emotional word processing. J. Neurolinguist. 2018;45:110–118.

- Zinchenko A., Kanske P., Obermeier C., Schröger E., Kotz S.A. Emotion and goal-directed behavior: ERP evidence on cognitive and emotional conflict. Soc. Cogn. Affect. Neurosci. 2015;10:1577–1587. doi: 10.1093/scan/nsv050.

- Zinchenko A., Obermeier C., Kanske P., Schröger E., Kotz S.A. Positive emotion impedes emotional but not cognitive conflict processing. Cogn. Affect. Behav. Neurosci. 2017;17:665–677. doi: 10.3758/s13415-017-0504-1.

- Zinchenko A., Conci M., Taylor P., Müller H.J., Geyer T. Taking attention out of context: frontopolar transcranial magnetic stimulation abolishes the formation of new context memories in visual search. J. Cogn. Neurosci. 2019;31:442–452. doi: 10.1162/jocn_a_01358.
