pygad.torchga Module

This section of the PyGAD library documentation discusses the pygad.torchga module.

The pygad.torchga module has a helper class and 3 functions to train PyTorch models using the genetic algorithm (PyGAD).

The contents of this module are:

- TorchGA: A class for creating an initial population of all parameters in the PyTorch model.
- model_weights_as_vector(): A function to reshape the PyTorch model weights to a single vector.
- model_weights_as_dict(): A function to restore the PyTorch model weights from a vector.
- predict(): A function to make predictions based on the PyTorch model and a solution.

More details are given in the next sections.

Steps Summary

The steps used to train a PyTorch model using PyGAD are as follows:

1. Create a PyTorch model.
2. Create an instance of the pygad.torchga.TorchGA class.
3. Prepare the training data.
4. Build the fitness function.
5. Create an instance of the pygad.GA class.
6. Run the genetic algorithm.

Create PyTorch Model

Before training a PyTorch model using PyGAD, the first step is to create the PyTorch model. To get started, please check the PyTorch library documentation.

Here is an example of a PyTorch model.

    import torch

    input_layer = torch.nn.Linear(3, 5)
    relu_layer = torch.nn.ReLU()
    output_layer = torch.nn.Linear(5, 1)

    model = torch.nn.Sequential(input_layer,
                                relu_layer,
                                output_layer)

Feel free to add the layers of your choice.

pygad.torchga.TorchGA Class

The pygad.torchga module has a class named TorchGA for creating an initial population for the genetic algorithm based on a PyTorch model. The constructor, methods, and attributes within the class are discussed in this section.

__init__()

The pygad.torchga.TorchGA class constructor accepts the following parameters:

- model: An instance of the PyTorch model.
- num_solutions: Number of solutions in the population. Each solution has different parameters of the model.

Instance Attributes

All parameters of the pygad.torchga.TorchGA class constructor are stored as instance attributes, along with a new attribute called population_weights.

Here is a list of all instance attributes:

- model
- num_solutions
- population_weights: A nested list holding the weights of all solutions in the population.

Methods in the TorchGA Class

This section discusses the methods available for instances of the pygad.torchga.TorchGA class.

create_population()

The create_population() method creates the initial population of the genetic algorithm as a list of solutions where each solution represents different model parameters. The list of solutions is assigned to the population_weights attribute of the instance.
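To make the size of each solution concrete, the sketch below counts the parameters of the example model created earlier (two Linear layers). It assumes each solution holds exactly one value per model parameter. The helper linear_param_count is a hypothetical name written for this illustration and is not part of PyGAD.

```python
def linear_param_count(in_features, out_features):
    """Weights plus biases of one fully connected layer."""
    return in_features * out_features + out_features

# Layer sizes of the example model: Linear(3, 5) -> ReLU -> Linear(5, 1).
# The ReLU layer has no trainable parameters.
layer_sizes = [(3, 5), (5, 1)]
genes_per_solution = sum(linear_param_count(i, o) for i, o in layer_sizes)

# Assumption: the population holds one flat solution per row.
num_solutions = 10
population_shape = (num_solutions, genes_per_solution)
print(population_shape)
```

Under these assumptions, a larger model lengthens every solution, which in turn enlarges the search space the genetic algorithm has to explore.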

Functions in the pygad.torchga Module

This section discusses the functions in the pygad.torchga module.

pygad.torchga.model_weights_as_vector()

The model_weights_as_vector() function returns a vector holding all model weights. The reason for representing the model weights as a vector is that the genetic algorithm expects all parameters of any solution to be in a 1D vector form.

The function accepts the following parameters:

- model: The PyTorch model.

It returns a 1D vector holding the model weights.

pygad.torchga.model_weights_as_dict()

The model_weights_as_dict() function accepts the following parameters:

- model: The PyTorch model.
- weights_vector: The model parameters as a vector.

It returns the restored model weights in the same form used by the state_dict() method. The returned dictionary is ready to be passed to the load_state_dict() method for setting the PyTorch model's parameters.
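The idea behind flattening weights to a vector and restoring them can be illustrated without PyTorch. The sketch below flattens a dictionary of named parameter lists into one vector and then splits it back using the recorded sizes. It is a simplified stand-in that uses plain Python lists (real state_dict() entries are multi-dimensional tensors), and flatten_params and restore_params are hypothetical names, not PyGAD functions.

```python
def flatten_params(params):
    """Concatenate every parameter list into a single 1D vector."""
    vector = []
    for values in params.values():
        vector.extend(values)
    return vector

def restore_params(template, vector):
    """Split a 1D vector back into named chunks sized like the template."""
    restored = {}
    start = 0
    for name, values in template.items():
        size = len(values)
        restored[name] = vector[start:start + size]
        start += size
    return restored

# Made-up parameter values, keyed the way a Sequential model names its layers.
params = {
    "0.weight": [0.1, -0.2, 0.3, 0.4],
    "0.bias": [0.0, 0.5],
    "2.weight": [1.0, -1.0],
    "2.bias": [0.25],
}

vector = flatten_params(params)
restored = restore_params(params, vector)
print(len(vector), restored == params)
```

The round trip works because both directions walk the parameters in the same order, which is why the model itself is needed as a template when restoring.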

pygad.torchga.predict()

The predict() function makes a prediction based on a solution. It accepts the following parameters:

- model: The PyTorch model.
- solution: The solution evolved.
- data: The test data inputs.

It returns the predictions for the data samples.

Examples

This section gives the complete code of some examples that build and train a PyTorch model using PyGAD. Each subsection builds a different network.

Example 1: Regression Example

The next code builds a simple PyTorch model for regression. The next subsections discuss each part of the code.

    import torch
    import pygad
    import pygad.torchga

    def fitness_func(ga_instance, solution, sol_idx):
        global data_inputs, data_outputs, torch_ga, model, loss_function

        predictions = pygad.torchga.predict(model=model,
                                            solution=solution,
                                            data=data_inputs)

        # The small constant avoids division by zero when the error is exactly 0.
        abs_error = loss_function(predictions, data_outputs).detach().numpy() + 0.00000001

        solution_fitness = 1.0 / abs_error

        return solution_fitness

    def on_generation(ga_instance):
        print(f"Generation = {ga_instance.generations_completed}")
        print(f"Fitness    = {ga_instance.best_solution()[1]}")

    # Create the PyTorch model.
    input_layer = torch.nn.Linear(3, 5)
    relu_layer = torch.nn.ReLU()
    output_layer = torch.nn.Linear(5, 1)

    model = torch.nn.Sequential(input_layer,
                                relu_layer,
                                output_layer)
    # print(model)

    # Create an instance of the pygad.torchga.TorchGA class to build the initial population.
    torch_ga = pygad.torchga.TorchGA(model=model,
                                     num_solutions=10)

    loss_function = torch.nn.L1Loss()

    # Data inputs
    data_inputs = torch.tensor([[0.02, 0.1, 0.15],
                                [0.7, 0.6, 0.8],
                                [1.5, 1.2, 1.7],
                                [3.2, 2.9, 3.1]])

    # Data outputs
    data_outputs = torch.tensor([[0.1],
                                 [0.6],
                                 [1.3],
                                 [2.5]])

    # Prepare the PyGAD parameters. Check the documentation for more information: https://pygad.readthedocs.io/en/latest/pygad.html#pygad-ga-class
    num_generations = 250  # Number of generations.
    num_parents_mating = 5  # Number of solutions to be selected as parents in the mating pool.
    initial_population = torch_ga.population_weights  # Initial population of network weights

    ga_instance = pygad.GA(num_generations=num_generations,
                           num_parents_mating=num_parents_mating,
                           initial_population=initial_population,
                           fitness_func=fitness_func,
                           on_generation=on_generation)

    ga_instance.run()

    # After the generations complete, a plot is shown that summarizes how the fitness values evolve over generations.
    ga_instance.plot_fitness(title="PyGAD & PyTorch - Iteration vs. Fitness", linewidth=4)

    # Returning the details of the best solution.
    solution, solution_fitness, solution_idx = ga_instance.best_solution()
    print(f"Fitness value of the best solution = {solution_fitness}")
    print(f"Index of the best solution : {solution_idx}")

    # Make predictions based on the best solution.
    predictions = pygad.torchga.predict(model=model,
                                        solution=solution,
                                        data=data_inputs)
    print("Predictions : \n", predictions.detach().numpy())

    abs_error = loss_function(predictions, data_outputs)
    print("Absolute Error : ", abs_error.detach().numpy())

Create a PyTorch model

According to the steps mentioned previously, the first step is to create a PyTorch model. Here is the code that builds the model using torch.nn.Sequential.

    import torch

    input_layer = torch.nn.Linear(3, 5)
    relu_layer = torch.nn.ReLU()
    output_layer = torch.nn.Linear(5, 1)

    model = torch.nn.Sequential(input_layer,
                                relu_layer,
                                output_layer)

Create an Instance of the pygad.torchga.TorchGA Class

The second step is to create an instance of the pygad.torchga.TorchGA class. There are 10 solutions per population. Change this number according to your needs.

    import pygad.torchga

    torch_ga = pygad.torchga.TorchGA(model=model,
                                     num_solutions=10)

Prepare the Training Data

The third step is to prepare the training data inputs and outputs. Here is an example where there are 4 samples. Each sample has 3 inputs and 1 output. The data is created as PyTorch tensors, matching the complete code above, because it is passed directly to the PyTorch model.

    import torch

    # Data inputs
    data_inputs = torch.tensor([[0.02, 0.1, 0.15],
                                [0.7, 0.6, 0.8],
                                [1.5, 1.2, 1.7],
                                [3.2, 2.9, 3.1]])

    # Data outputs
    data_outputs = torch.tensor([[0.1],
                                 [0.6],
                                 [1.3],
                                 [2.5]])

Build the Fitness Function

The fourth step is to build the fitness function. This function must accept 3 parameters: the pygad.GA instance, the solution, and the solution's index within the population.

The next fitness function calculates the mean absolute error (MAE) of the PyTorch model based on the parameters in the solution. The reciprocal of the MAE is used as the fitness value, so a lower error gives a higher fitness. Feel free to use any other loss function to calculate the fitness value.

    loss_function = torch.nn.L1Loss()

    def fitness_func(ga_instance, solution, sol_idx):
        global data_inputs, data_outputs, torch_ga, model, loss_function

        predictions = pygad.torchga.predict(model=model,
                                            solution=solution,
                                            data=data_inputs)

        # The small constant avoids division by zero when the error is exactly 0.
        abs_error = loss_function(predictions, data_outputs).detach().numpy() + 0.00000001

        solution_fitness = 1.0 / abs_error

        return solution_fitness

Create an Instance of the pygad.GA Class

The fifth step is to instantiate the pygad.GA class. Note how the initial_population parameter is assigned the initial weights of the PyTorch models.

For more information, please check the parameters this class accepts.

    # Prepare the PyGAD parameters. Check the documentation for more information: https://pygad.readthedocs.io/en/latest/pygad.html#pygad-ga-class
    num_generations = 250  # Number of generations.
    num_parents_mating = 5  # Number of solutions to be selected as parents in the mating pool.
    initial_population = torch_ga.population_weights  # Initial population of network weights

    ga_instance = pygad.GA(num_generations=num_generations,
                           num_parents_mating=num_parents_mating,
                           initial_population=initial_population,
                           fitness_func=fitness_func,
                           on_generation=on_generation)

Run the Genetic Algorithm

The sixth and last step is to run the genetic algorithm by calling the run() method.

    ga_instance.run()

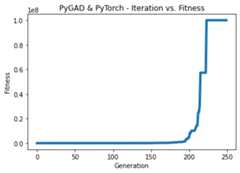

After the PyGAD completes its execution, then there is a figure thatshows how the fitness value changes by generation. Call theplot_fitness() method to show the figure.

ga_instance.plot_fitness(title="PyGAD & PyTorch - Iteration vs. Fitness",linewidth=4)

Here is the figure.

To get information about the best solution found by PyGAD, use the best_solution() method.

    # Returning the details of the best solution.
    solution, solution_fitness, solution_idx = ga_instance.best_solution()
    print(f"Fitness value of the best solution = {solution_fitness}")
    print(f"Index of the best solution : {solution_idx}")

    Fitness value of the best solution = 145.42425295191546
    Index of the best solution : 0

The next code passes the best solution to the predict() function, which applies the solution's weights to the model and calculates the predicted values.

    predictions = pygad.torchga.predict(model=model,
                                        solution=solution,
                                        data=data_inputs)
    print("Predictions : \n", predictions.detach().numpy())

    Predictions :
    [[0.08401088]
     [0.60939324]
     [1.3010881]
     [2.5010352]]

The next code measures the trained model error.

    abs_error = loss_function(predictions, data_outputs)
    print("Absolute Error : ", abs_error.detach().numpy())

    Absolute Error : 0.006876422
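As a sanity check, the reported error and fitness can be recomputed by hand from the printed predictions. The sketch below does this in plain Python, assuming the default mean reduction of torch.nn.L1Loss and the same small constant used in the fitness function. Small differences from the printed values are expected because the original run uses 32-bit floats.

```python
# Predictions and targets copied from the output and training data above.
predictions = [0.08401088, 0.60939324, 1.3010881, 2.5010352]
targets = [0.1, 0.6, 1.3, 2.5]

# Mean absolute error: the average of |prediction - target| over all samples.
mae = sum(abs(p - t) for p, t in zip(predictions, targets)) / len(targets)

# Same formula as fitness_func(): reciprocal of the error plus a small constant.
fitness = 1.0 / (mae + 0.00000001)

print(round(mae, 6), round(fitness, 2))
```

The recomputed values agree with the reported absolute error (about 0.0069) and fitness (about 145.42), which confirms that the fitness is simply the reciprocal of the MAE.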

Example 2: XOR Binary Classification

The next code creates a PyTorch model to solve the XOR binary classification problem. Let's highlight the changes compared to the previous example.

    import torch
    import pygad
    import pygad.torchga

    def fitness_func(ga_instance, solution, sol_idx):
        global data_inputs, data_outputs, torch_ga, model, loss_function

        predictions = pygad.torchga.predict(model=model,
                                            solution=solution,
                                            data=data_inputs)

        # The small constant avoids division by zero when the loss is exactly 0.
        solution_fitness = 1.0 / (loss_function(predictions, data_outputs).detach().numpy() + 0.00000001)

        return solution_fitness

    def on_generation(ga_instance):
        print(f"Generation = {ga_instance.generations_completed}")
        print(f"Fitness    = {ga_instance.best_solution()[1]}")

    # Create the PyTorch model.
    input_layer = torch.nn.Linear(2, 4)
    relu_layer = torch.nn.ReLU()
    dense_layer = torch.nn.Linear(4, 2)
    output_layer = torch.nn.Softmax(1)

    model = torch.nn.Sequential(input_layer,
                                relu_layer,
                                dense_layer,
                                output_layer)
    # print(model)

    # Create an instance of the pygad.torchga.TorchGA class to build the initial population.
    torch_ga = pygad.torchga.TorchGA(model=model,
                                     num_solutions=10)

    loss_function = torch.nn.BCELoss()

    # XOR problem inputs
    data_inputs = torch.tensor([[0.0, 0.0],
                                [0.0, 1.0],
                                [1.0, 0.0],
                                [1.0, 1.0]])

    # XOR problem outputs
    data_outputs = torch.tensor([[1.0, 0.0],
                                 [0.0, 1.0],
                                 [0.0, 1.0],
                                 [1.0, 0.0]])

    # Prepare the PyGAD parameters. Check the documentation for more information: https://pygad.readthedocs.io/en/latest/pygad.html#pygad-ga-class
    num_generations = 250  # Number of generations.
    num_parents_mating = 5  # Number of solutions to be selected as parents in the mating pool.
    initial_population = torch_ga.population_weights  # Initial population of network weights.

    # Create an instance of the pygad.GA class
    ga_instance = pygad.GA(num_generations=num_generations,
                           num_parents_mating=num_parents_mating,
                           initial_population=initial_population,
                           fitness_func=fitness_func,
                           on_generation=on_generation)

    # Start the genetic algorithm evolution.
    ga_instance.run()

    # After the generations complete, a plot is shown that summarizes how the fitness values evolve over generations.
    ga_instance.plot_fitness(title="PyGAD & PyTorch - Iteration vs. Fitness", linewidth=4)

    # Returning the details of the best solution.
    solution, solution_fitness, solution_idx = ga_instance.best_solution()
    print(f"Fitness value of the best solution = {solution_fitness}")
    print(f"Index of the best solution : {solution_idx}")

    # Make predictions based on the best solution.
    predictions = pygad.torchga.predict(model=model,
                                        solution=solution,
                                        data=data_inputs)
    print("Predictions : \n", predictions.detach().numpy())

    # Calculate the binary crossentropy for the trained model.
    print("Binary Crossentropy : ", loss_function(predictions, data_outputs).detach().numpy())

    # Calculate the classification accuracy of the trained model.
    a = torch.max(predictions, axis=1)
    b = torch.max(data_outputs, axis=1)
    accuracy = torch.sum(a.indices == b.indices) / len(data_outputs)
    print("Accuracy : ", accuracy.detach().numpy())

Compared to the previous regression example, here are the changes:

- The PyTorch model is changed according to the nature of the problem. Now, it has 2 inputs and 2 outputs with an in-between hidden layer of 4 neurons.

    input_layer = torch.nn.Linear(2, 4)
    relu_layer = torch.nn.ReLU()
    dense_layer = torch.nn.Linear(4, 2)
    output_layer = torch.nn.Softmax(1)

    model = torch.nn.Sequential(input_layer,
                                relu_layer,
                                dense_layer,
                                output_layer)

- The training data is changed. Note that the output of each sample is a 1D vector of 2 values, 1 for each class.

    # XOR problem inputs
    data_inputs = torch.tensor([[0.0, 0.0],
                                [0.0, 1.0],
                                [1.0, 0.0],
                                [1.0, 1.0]])

    # XOR problem outputs
    data_outputs = torch.tensor([[1.0, 0.0],
                                 [0.0, 1.0],
                                 [0.0, 1.0],
                                 [1.0, 0.0]])

- The fitness value is calculated based on the binary cross entropy.

    loss_function = torch.nn.BCELoss()

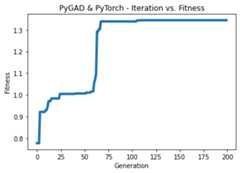

After the previous code completes, the next figure shows how the fitnessvalue change by generation.

Here is some information about the trained model. Its fitness value is100000000.0, loss is0.0 and accuracy is 100%.

Fitnessvalueofthebestsolution=100000000.0Indexofthebestsolution:0Predictions:[[1.0000000e+001.3627675e-10][3.8521746e-091.0000000e+00][4.2789325e-101.0000000e+00][1.0000000e+003.3668417e-09]]BinaryCrossentropy:0.0Accuracy:1.0
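The reported numbers can be checked by hand. The plain-Python sketch below recomputes the accuracy by comparing the index of the largest value in each prediction row with that of the matching target row, which mirrors the torch.max(...).indices comparison in the code above. It also shows why the fitness tops out near 100000000: once the loss is reported as 0.0, only the small constant remains in the denominator. The argmax helper is a hypothetical name introduced for this illustration.

```python
# Predictions copied from the output above; targets are the XOR labels.
predictions = [
    [1.0000000e+00, 1.3627675e-10],
    [3.8521746e-09, 1.0000000e+00],
    [4.2789325e-10, 1.0000000e+00],
    [1.0000000e+00, 3.3668417e-09],
]
targets = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0], [1.0, 0.0]]

def argmax(row):
    """Index of the largest value in a row."""
    return max(range(len(row)), key=lambda i: row[i])

# A sample counts as correct when the predicted class matches the target class.
correct = sum(argmax(p) == argmax(t) for p, t in zip(predictions, targets))
accuracy = correct / len(targets)

# With a reported loss of 0.0, the fitness is the reciprocal of the constant alone.
reported_loss = 0.0
fitness_ceiling = 1.0 / (reported_loss + 0.00000001)

print(accuracy, fitness_ceiling)
```

A fitness near this ceiling therefore signals that the loss has reached zero within floating-point precision, and further generations cannot improve it.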

Example 3: Image Multi-Class Classification (Dense Layers)

Here is the code.

    import torch
    import pygad
    import pygad.torchga
    import numpy

    def fitness_func(ga_instance, solution, sol_idx):
        global data_inputs, data_outputs, torch_ga, model, loss_function

        predictions = pygad.torchga.predict(model=model,
                                            solution=solution,
                                            data=data_inputs)

        # The small constant avoids division by zero when the loss is exactly 0.
        solution_fitness = 1.0 / (loss_function(predictions, data_outputs).detach().numpy() + 0.00000001)

        return solution_fitness

    def on_generation(ga_instance):
        print(f"Generation = {ga_instance.generations_completed}")
        print(f"Fitness    = {ga_instance.best_solution()[1]}")

    # Build the PyTorch model.
    input_layer = torch.nn.Linear(360, 50)
    relu_layer = torch.nn.ReLU()
    dense_layer = torch.nn.Linear(50, 4)
    output_layer = torch.nn.Softmax(1)

    model = torch.nn.Sequential(input_layer,
                                relu_layer,
                                dense_layer,
                                output_layer)

    # Create an instance of the pygad.torchga.TorchGA class to build the initial population.
    torch_ga = pygad.torchga.TorchGA(model=model,
                                     num_solutions=10)

    loss_function = torch.nn.CrossEntropyLoss()

    # Data inputs
    data_inputs = torch.from_numpy(numpy.load("dataset_features.npy")).float()

    # Data outputs
    data_outputs = torch.from_numpy(numpy.load("outputs.npy")).long()
    # The next 2 lines are equivalent to this Keras function to perform 1-hot encoding: tensorflow.keras.utils.to_categorical(data_outputs)
    # temp_outs = numpy.zeros((data_outputs.shape[0], numpy.unique(data_outputs).size), dtype=numpy.uint8)
    # temp_outs[numpy.arange(data_outputs.shape[0]), numpy.uint8(data_outputs)] = 1

    # Prepare the PyGAD parameters. Check the documentation for more information: https://pygad.readthedocs.io/en/latest/pygad.html#pygad-ga-class
    num_generations = 200  # Number of generations.
    num_parents_mating = 5  # Number of solutions to be selected as parents in the mating pool.
    initial_population = torch_ga.population_weights  # Initial population of network weights.

    # Create an instance of the pygad.GA class
    ga_instance = pygad.GA(num_generations=num_generations,
                           num_parents_mating=num_parents_mating,
                           initial_population=initial_population,
                           fitness_func=fitness_func,
                           on_generation=on_generation)

    # Start the genetic algorithm evolution.
    ga_instance.run()

    # After the generations complete, a plot is shown that summarizes how the fitness values evolve over generations.
    ga_instance.plot_fitness(title="PyGAD & PyTorch - Iteration vs. Fitness", linewidth=4)

    # Returning the details of the best solution.
    solution, solution_fitness, solution_idx = ga_instance.best_solution()
    print(f"Fitness value of the best solution = {solution_fitness}")
    print(f"Index of the best solution : {solution_idx}")

    # Fetch the parameters of the best solution and load them into the model.
    best_solution_weights = pygad.torchga.model_weights_as_dict(model=model,
                                                                weights_vector=solution)
    model.load_state_dict(best_solution_weights)
    predictions = model(data_inputs)
    # print("Predictions : \n", predictions)

    # Calculate the crossentropy loss of the trained model.
    print("Crossentropy : ", loss_function(predictions, data_outputs).detach().numpy())

    # Calculate the classification accuracy for the trained model.
    accuracy = torch.sum(torch.max(predictions, axis=1).indices == data_outputs) / len(data_outputs)
    print("Accuracy : ", accuracy.detach().numpy())

Compared to the previous binary classification example, this example has multiple classes (4) and thus the loss is measured using cross entropy.

    loss_function = torch.nn.CrossEntropyLoss()

Prepare the Training Data

Before building and training neural networks, the training data (input and output) needs to be prepared. The inputs and the outputs of the training data are stored as NumPy arrays, which the complete code above converts to PyTorch tensors.

The data used in this example is available as 2 files:

- dataset_features.npy: Data inputs. https://github.com/ahmedfgad/NumPyANN/blob/master/dataset_features.npy
- outputs.npy: Class labels. https://github.com/ahmedfgad/NumPyANN/blob/master/outputs.npy

The data consists of 4 classes of images. The image shape is (100, 100, 3). The number of training samples is 1962. The feature vector extracted from each image has a length of 360.

    import numpy

    data_inputs = numpy.load("dataset_features.npy")

    data_outputs = numpy.load("outputs.npy")

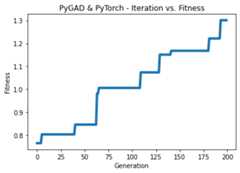

The next figure shows how the fitness value changes.

Here are some statistics about the trained model.

Fitnessvalueofthebestsolution=1.3446997034434534Indexofthebestsolution:0Crossentropy:0.74366045Accuracy:1.0
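One observation that may explain why the loss stays near 0.74 even at 100% accuracy: torch.nn.CrossEntropyLoss applies log-softmax to its inputs internally, while the model in this example already ends with a Softmax layer. The loss therefore receives values in the range 0 to 1 rather than raw scores, and even an exact one-hot prediction cannot push it to zero. The sketch below computes that floor for 4 classes in plain Python. It illustrates the arithmetic only and is not a measurement from the original run. The function name cross_entropy_on_probs is hypothetical.

```python
import math

def cross_entropy_on_probs(probs, target_index):
    """Cross entropy when log-softmax is applied to values that are already probabilities."""
    log_sum_exp = math.log(sum(math.exp(p) for p in probs))
    return log_sum_exp - probs[target_index]

# Best case: the Softmax layer outputs an exact one-hot vector for the correct class.
perfect_prediction = [1.0, 0.0, 0.0, 0.0]
loss_floor = cross_entropy_on_probs(perfect_prediction, target_index=0)

# Same fitness formula as fitness_func(): reciprocal of the loss plus a small constant.
best_fitness = 1.0 / (loss_floor + 0.00000001)

print(round(loss_floor, 4), round(best_fitness, 4))
```

The floor is about 0.7437 and the matching fitness about 1.3447, which agree with the reported values to roughly four decimal places; the remaining gap is presumably 32-bit rounding. Feeding raw scores to CrossEntropyLoss (that is, dropping the final Softmax layer) is the usual pairing in PyTorch and would let the loss approach zero.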

Example 4: Image Multi-Class Classification (Conv Layers)

Compared to the previous example that uses only dense layers, this example uses convolutional layers to classify the same dataset.

Here is the complete code.

    import torch
    import pygad
    import pygad.torchga
    import numpy

    def fitness_func(ga_instance, solution, sol_idx):
        global data_inputs, data_outputs, torch_ga, model, loss_function

        predictions = pygad.torchga.predict(model=model,
                                            solution=solution,
                                            data=data_inputs)

        # The small constant avoids division by zero when the loss is exactly 0.
        solution_fitness = 1.0 / (loss_function(predictions, data_outputs).detach().numpy() + 0.00000001)

        return solution_fitness

    def on_generation(ga_instance):
        print(f"Generation = {ga_instance.generations_completed}")
        print(f"Fitness    = {ga_instance.best_solution()[1]}")

    # Build the PyTorch model.
    input_layer = torch.nn.Conv2d(in_channels=3, out_channels=5, kernel_size=7)
    relu_layer1 = torch.nn.ReLU()
    max_pool1 = torch.nn.MaxPool2d(kernel_size=5, stride=5)

    conv_layer2 = torch.nn.Conv2d(in_channels=5, out_channels=3, kernel_size=3)
    relu_layer2 = torch.nn.ReLU()

    flatten_layer1 = torch.nn.Flatten()
    # The value 768 is pre-computed by tracing the sizes of the layers' outputs.
    dense_layer1 = torch.nn.Linear(in_features=768, out_features=15)
    relu_layer3 = torch.nn.ReLU()

    dense_layer2 = torch.nn.Linear(in_features=15, out_features=4)
    output_layer = torch.nn.Softmax(1)

    model = torch.nn.Sequential(input_layer,
                                relu_layer1,
                                max_pool1,
                                conv_layer2,
                                relu_layer2,
                                flatten_layer1,
                                dense_layer1,
                                relu_layer3,
                                dense_layer2,
                                output_layer)

    # Create an instance of the pygad.torchga.TorchGA class to build the initial population.
    torch_ga = pygad.torchga.TorchGA(model=model,
                                     num_solutions=10)

    loss_function = torch.nn.CrossEntropyLoss()

    # Data inputs
    data_inputs = torch.from_numpy(numpy.load("dataset_inputs.npy")).float()
    # Change the shape to (samples, channels, height, width) as Conv2d expects.
    # Note: reshape() keeps the flat element order rather than moving the channel
    # axis; permute(0, 3, 1, 2) is the usual conversion for channels-last data.
    data_inputs = data_inputs.reshape((data_inputs.shape[0], data_inputs.shape[3], data_inputs.shape[1], data_inputs.shape[2]))

    # Data outputs
    data_outputs = torch.from_numpy(numpy.load("dataset_outputs.npy")).long()

    # Prepare the PyGAD parameters. Check the documentation for more information: https://pygad.readthedocs.io/en/latest/pygad.html#pygad-ga-class
    num_generations = 200  # Number of generations.
    num_parents_mating = 5  # Number of solutions to be selected as parents in the mating pool.
    initial_population = torch_ga.population_weights  # Initial population of network weights.

    # Create an instance of the pygad.GA class
    ga_instance = pygad.GA(num_generations=num_generations,
                           num_parents_mating=num_parents_mating,
                           initial_population=initial_population,
                           fitness_func=fitness_func,
                           on_generation=on_generation)

    # Start the genetic algorithm evolution.
    ga_instance.run()

    # After the generations complete, a plot is shown that summarizes how the fitness values evolve over generations.
    ga_instance.plot_fitness(title="PyGAD & PyTorch - Iteration vs. Fitness", linewidth=4)

    # Returning the details of the best solution.
    solution, solution_fitness, solution_idx = ga_instance.best_solution()
    print(f"Fitness value of the best solution = {solution_fitness}")
    print(f"Index of the best solution : {solution_idx}")

    # Make predictions based on the best solution.
    predictions = pygad.torchga.predict(model=model,
                                        solution=solution,
                                        data=data_inputs)
    # print("Predictions : \n", predictions)

    # Calculate the crossentropy for the trained model.
    print("Crossentropy : ", loss_function(predictions, data_outputs).detach().numpy())

    # Calculate the classification accuracy for the trained model.
    accuracy = torch.sum(torch.max(predictions, axis=1).indices == data_outputs) / len(data_outputs)
    print("Accuracy : ", accuracy.detach().numpy())

Compared to the previous example, the only change is that the architecture uses convolutional and max-pooling layers. The shape of each input sample is 100x100x3.

    input_layer = torch.nn.Conv2d(in_channels=3, out_channels=5, kernel_size=7)
    relu_layer1 = torch.nn.ReLU()
    max_pool1 = torch.nn.MaxPool2d(kernel_size=5, stride=5)

    conv_layer2 = torch.nn.Conv2d(in_channels=5, out_channels=3, kernel_size=3)
    relu_layer2 = torch.nn.ReLU()

    flatten_layer1 = torch.nn.Flatten()
    # The value 768 is pre-computed by tracing the sizes of the layers' outputs.
    dense_layer1 = torch.nn.Linear(in_features=768, out_features=15)
    relu_layer3 = torch.nn.ReLU()

    dense_layer2 = torch.nn.Linear(in_features=15, out_features=4)
    output_layer = torch.nn.Softmax(1)

    model = torch.nn.Sequential(input_layer,
                                relu_layer1,
                                max_pool1,
                                conv_layer2,
                                relu_layer2,
                                flatten_layer1,
                                dense_layer1,
                                relu_layer3,
                                dense_layer2,
                                output_layer)
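The value 768 mentioned in the code comment can be traced by hand. The sketch below follows the spatial size of a 100x100 input through the two convolutions and the max-pooling layer, using the standard output-size formula for layers with no padding and no dilation. The helper conv_output_size is a hypothetical name written for this illustration.

```python
def conv_output_size(size, kernel_size, stride=1, padding=0):
    """Output length along one spatial dimension of a convolution or pooling layer."""
    return (size + 2 * padding - kernel_size) // stride + 1

size = 100                                              # Input images are 100x100.
size = conv_output_size(size, kernel_size=7)            # Conv2d(kernel_size=7)
size = conv_output_size(size, kernel_size=5, stride=5)  # MaxPool2d(kernel_size=5, stride=5)
size = conv_output_size(size, kernel_size=3)            # Conv2d(kernel_size=3)

out_channels = 3  # Channels produced by the second convolution.
flattened_features = out_channels * size * size
print(flattened_features)
```

The spatial size goes from 100 to 94, then 18, then 16, so the flattened vector has 3 x 16 x 16 = 768 values. Changing any kernel size, stride, or the input resolution means in_features of the first Linear layer must be recomputed the same way.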

Prepare the Training Data

The data used in this example is available as 2 files:

- dataset_inputs.npy: Data inputs. https://github.com/ahmedfgad/NumPyCNN/blob/master/dataset_inputs.npy
- dataset_outputs.npy: Class labels. https://github.com/ahmedfgad/NumPyCNN/blob/master/dataset_outputs.npy

The data consists of 4 classes of images. The image shape is (100, 100, 3) and there are 20 images per class for a total of 80 training samples. For more information about the dataset, check the Reading the Data section of the pygad.cnn module.

Simply download these 2 files and read them according to the next code.

    import numpy

    data_inputs = numpy.load("dataset_inputs.npy")

    data_outputs = numpy.load("dataset_outputs.npy")

The next figure shows how the fitness value changes.

Here are some statistics about the trained model. The model accuracy is 97.5% after the 200 generations. Note that running the code again may give different results.

    Fitness value of the best solution = 1.3009520689219258
    Index of the best solution : 0
    Crossentropy : 0.7686678
    Accuracy : 0.975