pygad.gann Module¶

This section of the PyGAD library documentation discusses the pygad.gann module.

The pygad.gann module trains neural networks (for either classification or regression) using the genetic algorithm. It makes use of 2 modules: pygad and pygad.nn.

pygad.gann.GANN Class¶

The pygad.gann module has a class named pygad.gann.GANN for training neural networks using the genetic algorithm. The constructor, methods, and attributes of the class are discussed in this section.

__init__()¶

To train a neural network using the genetic algorithm, the first thing to do is to create an instance of the pygad.gann.GANN class.

The pygad.gann.GANN class constructor accepts the following parameters:

num_solutions: Number of neural networks (i.e. solutions) in the population. Based on the value passed to this parameter, a number of identical neural networks are created whose parameters are optimized using the genetic algorithm.

num_neurons_input: Number of neurons in the input layer.

num_neurons_output: Number of neurons in the output layer.

num_neurons_hidden_layers=[]: A list holding the number of neurons in the hidden layer(s). If empty ([]), then no hidden layers are used. For each int value it holds, a hidden layer is created with the number of hidden neurons specified by that value. For example, num_neurons_hidden_layers=[10] creates a single hidden layer with 10 neurons. num_neurons_hidden_layers=[10, 5] creates 2 hidden layers with 10 neurons for the first and 5 neurons for the second hidden layer.

output_activation="softmax": The name of the activation function of the output layer, which defaults to "softmax".

hidden_activations="relu": The name(s) of the activation function(s) of the hidden layer(s). It defaults to "relu". If passed as a string, the specified activation function is used across all the hidden layers. If passed as a list, it must have the same length as the num_neurons_hidden_layers list. An exception is raised if their lengths are different. When hidden_activations is a list, a one-to-one mapping between the num_neurons_hidden_layers and hidden_activations lists occurs.
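As a rough illustration of how the layer-size parameters determine the network, the following standalone sketch lists the shape of each weights matrix implied by a given architecture. It does not call PyGAD; the helper name weight_shapes is hypothetical, and the (inputs x neurons) orientation follows the weights description given later in this document.

```python
def weight_shapes(num_neurons_input, num_neurons_hidden_layers, num_neurons_output):
    """Return the (rows, cols) shape of each weights matrix, from input to output.

    Hypothetical helper for illustration only; it is not part of PyGAD.
    """
    layer_sizes = [num_neurons_input, *num_neurons_hidden_layers, num_neurons_output]
    # Each pair of consecutive layers is connected by one weights matrix.
    return list(zip(layer_sizes[:-1], layer_sizes[1:]))


# Two hidden layers with 10 and 5 neurons, 4 inputs and 3 outputs.
shapes = weight_shapes(4, [10, 5], 3)
print(shapes)  # [(4, 10), (10, 5), (5, 3)]

# An empty list means the input layer connects directly to the output layer.
no_hidden = weight_shapes(4, [], 3)
print(no_hidden)  # [(4, 3)]
```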

To validate the parameters passed to the pygad.gann.GANN class constructor, the pygad.gann.validate_network_parameters() function is called.

Instance Attributes¶

All the parameters in the pygad.gann.GANN class constructor are used as instance attributes. Besides such attributes, the following attributes are added to instances of the pygad.gann.GANN class:

parameters_validated: If True, then the parameters passed to the GANN class constructor are valid. Its initial value is False.

population_networks: A list holding references to all the solutions (i.e. neural networks) used in the population.

Methods in the GANN Class¶

This section discusses the methods available for instances of the pygad.gann.GANN class.

create_population()¶

The create_population() method creates the initial population of the genetic algorithm as a list of neural networks (i.e. solutions). For each network to be created, the pygad.gann.create_network() function is called.

Each element in the list holds a reference to the last (i.e. output) layer of the network. The method does not accept any parameter and it accesses all the required details from the pygad.gann.GANN instance.

The method returns the list holding the references to the networks. This list is later assigned to the population_networks attribute of the instance.

update_population_trained_weights()¶

The update_population_trained_weights() method updates the trained_weights attribute of the layers of each network (check the documentation of the pygad.nn.DenseLayer class for more information) according to the weights passed in the population_trained_weights parameter.

Accepts the following parameters:

population_trained_weights: A list holding the trained weights of all networks as matrices. Such matrices are to be assigned to the trained_weights attribute of all layers of all networks.

Functions in the pygad.gann Module¶

This section discusses the functions in the pygad.gann module.

pygad.gann.validate_network_parameters()¶

Validates the parameters passed to the constructor of the pygad.gann.GANN class. If at least one invalid parameter exists, an exception is raised and the execution stops.

The function accepts the same parameters passed to the constructor of the pygad.gann.GANN class. Please check the documentation of such parameters in the section discussing the class constructor.

The function gives the num_solutions parameter a default value in order to distinguish whether a population of networks or a single network is to be created. If it is None, then a single network is created. If it is not None, then a population of networks is created.

If the value passed to the hidden_activations parameter is a string, not a list, then a list is created by replicating the passed name of the activation function a number of times equal to the number of hidden layers (i.e. the length of the num_neurons_hidden_layers parameter).

Returns a list holding the name(s) of the activation function(s) of the hidden layer(s).
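The replication rule for hidden_activations can be sketched in a few lines. This is a standalone approximation of the behavior described above, not the library's actual code; the function name normalize_hidden_activations and the choice of ValueError as the exception type are assumptions.

```python
def normalize_hidden_activations(hidden_activations, num_neurons_hidden_layers):
    """Return one activation name per hidden layer.

    Hypothetical helper that mimics the rule described in the text.
    """
    num_hidden_layers = len(num_neurons_hidden_layers)
    if isinstance(hidden_activations, str):
        # A single name is replicated once per hidden layer.
        return [hidden_activations] * num_hidden_layers
    if len(hidden_activations) != num_hidden_layers:
        # The exception type is an assumption; the text only says an exception is raised.
        raise ValueError(
            f"Expected {num_hidden_layers} activation names, got {len(hidden_activations)}."
        )
    return list(hidden_activations)


replicated = normalize_hidden_activations("relu", [10, 5])
print(replicated)  # ['relu', 'relu']

explicit = normalize_hidden_activations(["relu", "sigmoid"], [10, 5])
print(explicit)  # ['relu', 'sigmoid']
```

Passing a list whose length differs from the number of hidden layers, such as ["relu"] for [10, 5], raises the error in this sketch.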

pygad.gann.create_network()¶

Creates a neural network as a linked list between the input, hidden, and output layers, where the layer at index N (which is the last/output layer) references the layer at index N-1 (which is a hidden layer) using its previous_layer attribute. The input layer does not reference any layer because it is the last layer in the linked list.

In addition to the parameters_validated parameter, this function accepts the same parameters passed to the constructor of the pygad.gann.GANN class except for the num_solutions parameter, because only a single network is created by the create_network() function.

parameters_validated: If False, then the parameters are not validated yet and a call to the validate_network_parameters() function is made.

Returns the reference to the last layer in the network architecture, which is the output layer. Based on such a reference, all network layers can be fetched.
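The linked-list idea can be illustrated without PyGAD. In the sketch below, the Layer class and both helper functions are hypothetical stand-ins; only the previous_layer attribute name comes from the text. It shows how holding a reference to the output layer is enough to reach every other layer.

```python
class Layer:
    """Minimal stand-in for a network layer (not PyGAD's layer classes)."""

    def __init__(self, name, previous_layer=None):
        self.name = name
        self.previous_layer = previous_layer


def build_network(layer_names):
    """Chain the layers from input to output and return the last (output) layer."""
    layer = None
    for name in layer_names:
        layer = Layer(name, previous_layer=layer)
    return layer


def layers_from_last(last_layer):
    """Walk backward through previous_layer and return the names in input-to-output order."""
    names = []
    layer = last_layer
    while layer is not None:
        names.append(layer.name)
        layer = layer.previous_layer
    return names[::-1]


last_layer = build_network(["input", "hidden", "output"])
print(last_layer.name)               # output
print(layers_from_last(last_layer))  # ['input', 'hidden', 'output']
```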

pygad.gann.population_as_vectors()¶

Accepts the population as networks and returns a list holding all weights of the layers of each solution (i.e. network) in the population as a vector.

For example, if the population has 6 solutions (i.e. networks), this function accepts references to such networks and returns a list with 6 vectors, one for each network (i.e. solution). Each vector holds the weights for all layers for a single network.

Accepts the following parameters:

population_networks: A list holding references to the output (last) layers of the neural networks used in the population.

Returns a list holding the weights vectors for all solutions (i.e. networks).
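The conversion from per-layer matrices to a single vector can be pictured with plain Python lists. This sketch assumes the weights of each layer are flattened row by row and concatenated in layer order; that ordering, and the helper name matrices_to_vector, are assumptions made for illustration rather than a statement about PyGAD's internals.

```python
def matrices_to_vector(matrices):
    """Flatten a list of 2D weight matrices into one flat list (row-major, layer by layer)."""
    return [weight for matrix in matrices for row in matrix for weight in row]


# A toy population of 2 networks, each with two 2x2 weight matrices.
population_matrices = [
    [[[1, 2], [3, 4]], [[5, 6], [7, 8]]],
    [[[9, 10], [11, 12]], [[13, 14], [15, 16]]],
]

# One vector per network, as the genetic algorithm expects.
population_vectors = [matrices_to_vector(network) for network in population_matrices]
print(population_vectors[0])  # [1, 2, 3, 4, 5, 6, 7, 8]
```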

pygad.gann.population_as_matrices()¶

Accepts the population as both networks and weights vectors and returns the weights of all layers of each solution (i.e. network) in the population as a matrix.

For example, if the population has 6 solutions (i.e. networks), this function returns a list with 6 matrices, one for each network holding its weights for all layers.

Accepts the following parameters:

population_networks: A list holding references to the output (last) layers of the neural networks used in the population.

population_vectors: A list holding the weights of all networks as vectors. Such vectors are to be converted into matrices.

Returns a list holding the weights matrices for all solutions (i.e. networks).
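The reverse conversion needs to know the shape of each layer's weights, which is why the function takes the networks as well as the vectors. The following standalone sketch takes the shapes directly instead of reading them from network objects; the helper name vector_to_matrices and the row-major layout are assumptions for illustration.

```python
def vector_to_matrices(vector, shapes):
    """Split a flat weights vector into 2D matrices with the given (rows, cols) shapes."""
    matrices = []
    start = 0
    for rows, cols in shapes:
        chunk = vector[start:start + rows * cols]
        # Rebuild the matrix one row at a time (row-major layout assumed).
        matrices.append([chunk[r * cols:(r + 1) * cols] for r in range(rows)])
        start += rows * cols
    if start != len(vector):
        raise ValueError("The vector length does not match the given shapes.")
    return matrices


# 8 genes split into two 2x2 matrices, as in the XOR network of this document.
matrices = vector_to_matrices([1, 2, 3, 4, 5, 6, 7, 8], [(2, 2), (2, 2)])
print(matrices)  # [[[1, 2], [3, 4]], [[5, 6], [7, 8]]]
```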

Steps to Build and Train Neural Networks using Genetic Algorithm¶

The steps to use this project for building and training a neural network using the genetic algorithm are as follows:

Prepare the training data.

Create an instance of the pygad.gann.GANN class.

Fetch the population weights as vectors.

Prepare the fitness function.

Prepare the generation callback function.

Create an instance of the pygad.GA class.

Run the created instance of the pygad.GA class.

Plot the fitness values.

Information about the best solution.

Making predictions using the trained weights.

Calculating some statistics.

Let’s start covering all of these steps.

Prepare the Training Data¶

Before building and training neural networks, the training data (input and output) is to be prepared. The inputs and the outputs of the training data are NumPy arrays.

Here is an example of preparing the training data for the XOR problem.

For the input array, each element must be a list representing the inputs (i.e. features) for the sample. If there are 200 samples and each sample has 50 features, then the shape of the inputs array is (200, 50). The variable num_inputs holds the length of each sample, which is 2 in this example.

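    import numpy

    # XOR inputs: one row per sample, one column per feature.
    data_inputs = numpy.array([[1, 1],
                               [1, 0],
                               [0, 1],
                               [0, 0]])

    # XOR outputs: one class label per sample.
    data_outputs = numpy.array([0, 1, 1, 0])

    num_inputs = data_inputs.shape[1]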

For the output array, each element must be a single number representing the class label of the sample. The class labels must start at 0. So, if there are 200 samples, then the shape of the output array is (200,). If there are 5 classes in the data, then the values of all the 200 elements in the output array must range from 0 to 4 inclusive. Generally, the class labels run from 0 to N-1 where N is the number of classes.

For the XOR example, there are 2 classes and thus their labels are 0 and 1. The num_classes variable is assigned to 2.

Note that, for classification, the project only supports problems where each sample is assigned to only one class.
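A quick sanity check of the labels before training can catch labels that do not start at 0 or that skip a value. The sketch below is a standalone helper (infer_num_classes is a hypothetical name, not a PyGAD function) and it assumes that every class appears at least once in the data, which is stricter than the requirement stated above.

```python
def infer_num_classes(labels):
    """Return the number of classes, checking that the labels are exactly 0..N-1.

    Assumes every class appears at least once in the labels.
    """
    unique_labels = sorted(set(labels))
    num_classes = len(unique_labels)
    if unique_labels != list(range(num_classes)):
        raise ValueError("Class labels must be the integers 0 to N-1.")
    return num_classes


xor_num_classes = infer_num_classes([0, 1, 1, 0])
print(xor_num_classes)  # 2
```

Labels such as [1, 2, 1] are rejected by this check because they do not start at 0.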

Create an Instance of the pygad.gann.GANN Class¶

After preparing the input data, an instance of the pygad.gann.GANN class is created by passing the appropriate parameters.

Here is an example that creates a network for the XOR problem. The num_solutions parameter is set to 6, which means the genetic algorithm population will have 6 solutions (i.e. networks). All of these 6 neural networks will have the same architecture as specified by the other parameters.

The output layer has 2 neurons because there are only 2 classes (0 and 1).

    import pygad.gann
    import pygad.nn

    num_solutions = 6
    GANN_instance = pygad.gann.GANN(num_solutions=num_solutions,
                                    num_neurons_input=num_inputs,
                                    num_neurons_hidden_layers=[2],
                                    num_neurons_output=2,
                                    hidden_activations=["relu"],
                                    output_activation="softmax")

The architecture of the created network has the following layers:

An input layer with 2 neurons (i.e. inputs)

A single hidden layer with 2 neurons.

An output layer with 2 neurons (i.e. classes).

The weights of the network are as follows:

Between the input and the hidden layer, there is a weights matrix of size equal to (number of inputs x number of hidden neurons) = (2 x 2).

Between the hidden and the output layer, there is a weights matrix of size equal to (number of hidden neurons x number of outputs) = (2 x 2).

The activation function used for the output layer is softmax. The relu activation function is used for the hidden layer.

After creating the instance of the pygad.gann.GANN class, the next step is to fetch the weights of the population as a list of vectors.

Fetch the Population Weights as Vectors¶

For the genetic algorithm, the parameters (i.e. genes) of each solutionare represented as a single vector.

For the task of training the network for the XOR problem, the weights of each network in the population are not represented as a vector but as 2 matrices, each of size 2x2.

To create a list holding the population weights as vectors, one for each network, the pygad.gann.population_as_vectors() function is used.

    population_vectors = pygad.gann.population_as_vectors(population_networks=GANN_instance.population_networks)

After preparing the population weights as a set of vectors, the next step is to prepare 2 functions, which are:

Fitness function.

Callback function after each generation.

Prepare the Fitness Function¶

The PyGAD library works by allowing users to customize the genetic algorithm for their own problems. Because problems differ in how the fitness values are calculated, PyGAD allows the user to use a custom function as a maximization fitness function. As in the implementation shown in this section, this function accepts 3 positional parameters representing the following:

The instance of the pygad.GA class.

The solution.

The solution index in the population.

The fitness function must return a single number representing the fitness. The higher the fitness value, the better the solution.

Here is the implementation of the fitness function for training a neural network. It uses the pygad.nn.predict() function to predict the class labels based on the current solution’s weights. The pygad.nn.predict() function uses the trained weights available in the trained_weights attribute of each layer of the network for making predictions.

Based on such predictions, the classification accuracy is calculated. This accuracy is used as the fitness value of the solution. Finally, the fitness value is returned.

    def fitness_func(ga_instance, solution, sol_idx):
        global GANN_instance, data_inputs, data_outputs

        # Predict the class labels using the network that corresponds to this solution.
        predictions = pygad.nn.predict(last_layer=GANN_instance.population_networks[sol_idx],
                                       data_inputs=data_inputs)
        correct_predictions = numpy.where(predictions == data_outputs)[0].size
        # The fitness is the classification accuracy as a percentage.
        solution_fitness = (correct_predictions/data_outputs.size)*100

        return solution_fitness
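The accuracy calculation at the core of this fitness function can be tried in isolation. The sketch below is a pure-Python equivalent that takes predictions and labels as plain lists; the helper name accuracy_fitness is hypothetical and no network or PyGAD call is involved.

```python
def accuracy_fitness(predictions, labels):
    """Return the classification accuracy as a percentage (higher is better)."""
    if len(predictions) != len(labels) or not labels:
        raise ValueError("predictions and labels must be non-empty and of equal length.")
    correct = sum(1 for predicted, expected in zip(predictions, labels) if predicted == expected)
    return 100.0 * correct / len(labels)


xor_labels = [0, 1, 1, 0]
perfect = accuracy_fitness([0, 1, 1, 0], xor_labels)
partial = accuracy_fitness([0, 1, 0, 0], xor_labels)
print(perfect)  # 100.0
print(partial)  # 75.0
```

With only 4 samples, the fitness can take just 5 distinct values (0, 25, 50, 75, 100), so many different solutions share the same fitness during the run.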

Prepare the Generation Callback Function¶

After each generation of the genetic algorithm, the fitness function will be called to calculate the fitness value of each solution. Within the fitness function, the pygad.nn.predict() function is used for predicting the outputs based on the current solution’s trained_weights attribute. Thus, it is required that such an attribute is updated with the weights evolved by the genetic algorithm after each generation.

PyGAD 2.0.0 and higher has a new parameter accepted by the pygad.GA class constructor named on_generation. It could be assigned to a function that is called after each generation. The function must accept a single parameter representing the instance of the pygad.GA class.

This callback function can be used to update the trained_weights attribute of the layers of each network in the population.

Here is the implementation of a function that updates the trained_weights attribute of the layers of the population networks.

It works by converting the current population from the vector form to the matrix form using the pygad.gann.population_as_matrices() function. It accepts the population as vectors and returns it as matrices.

The population matrices are then passed to the update_population_trained_weights() method of the pygad.gann.GANN instance to update the trained_weights attribute of all layers for all solutions within the population.

    def callback_generation(ga_instance):
        global GANN_instance

        # Convert the evolved population from vectors back to per-layer matrices.
        population_matrices = pygad.gann.population_as_matrices(population_networks=GANN_instance.population_networks,
                                                                population_vectors=ga_instance.population)
        # Assign the matrices to the trained_weights attribute of all layers.
        GANN_instance.update_population_trained_weights(population_trained_weights=population_matrices)

        print(f"Generation = {ga_instance.generations_completed}")
        print(f"Fitness    = {ga_instance.best_solution()[1]}")

After preparing the fitness and callback functions, the next step is to create an instance of the pygad.GA class.

Create an Instance of the pygad.GA Class¶

Once the parameters of the genetic algorithm are prepared, an instance of the pygad.GA class can be created.

Here is an example.

    initial_population = population_vectors.copy()

    num_parents_mating = 4

    num_generations = 500

    mutation_percent_genes = 5

    parent_selection_type = "sss"

    crossover_type = "single_point"

    mutation_type = "random"

    keep_parents = 1

    init_range_low = -2
    init_range_high = 5

    ga_instance = pygad.GA(num_generations=num_generations,
                           num_parents_mating=num_parents_mating,
                           initial_population=initial_population,
                           fitness_func=fitness_func,
                           mutation_percent_genes=mutation_percent_genes,
                           init_range_low=init_range_low,
                           init_range_high=init_range_high,
                           parent_selection_type=parent_selection_type,
                           crossover_type=crossover_type,
                           mutation_type=mutation_type,
                           keep_parents=keep_parents,
                           on_generation=callback_generation)

The last step for training the neural networks using the genetic algorithm is calling the run() method.

Run the Created Instance of the pygad.GA Class¶

By calling the run() method of the pygad.GA instance, the genetic algorithm will iterate through the number of generations specified in its num_generations parameter.

    ga_instance.run()

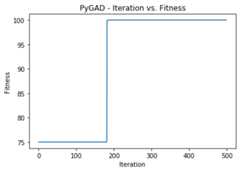

Plot the Fitness Values¶

After the run() method completes, the plot_fitness() method can be called to show how the fitness values evolve by generation. A fitness value (i.e. accuracy) of 100 is reached after around 180 generations.

    ga_instance.plot_fitness()

By running the code again, a different initial population is created and thus a classification accuracy of 100 can be reached in fewer generations. On the other hand, a different initial population might cause 100% accuracy to be reached after more generations or not reached at all.

Information about the Best Solution¶

The following information about the best solution in the last population is returned using the best_solution() method of the pygad.GA class.

Solution

Fitness value of the solution

Index of the solution within the population

Here is how such information is returned. The fitness value (i.e.accuracy) is 100.

    solution, solution_fitness, solution_idx = ga_instance.best_solution()
    print(f"Parameters of the best solution : {solution}")
    print(f"Fitness value of the best solution = {solution_fitness}")
    print(f"Index of the best solution : {solution_idx}")

    Parameters of the best solution : [3.55081391 -3.21562011 -14.2617784 0.68044231 -1.41258145 -3.2979315 1.58136006 -7.83726169]
    Fitness value of the best solution = 100.0
    Index of the best solution : 0

Using the best_solution_generation attribute of the pygad.GA instance, the generation number at which the best fitness is reached can be fetched. According to the result, the best fitness value is reached after 182 generations.

    if ga_instance.best_solution_generation != -1:
        print(f"Best fitness value reached after {ga_instance.best_solution_generation} generations.")

    Best solution reached after 182 generations.
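One plausible way to derive such a generation number from a history of best fitness values is sketched below. It is a standalone illustration, not PyGAD's implementation: the helper name is hypothetical, entry i of the list is assumed to hold the best fitness after generation i (with entry 0 for the initial population), and the -1 return value for an empty history mirrors the check against -1 in the code above.

```python
def first_generation_with_best_fitness(best_fitness_history):
    """Return the index of the first entry that holds the highest fitness, or -1 if empty."""
    if not best_fitness_history:
        return -1
    best_fitness = max(best_fitness_history)
    # list.index returns the first position at which the value occurs.
    return best_fitness_history.index(best_fitness)


history = [50.0, 50.0, 75.0, 100.0, 100.0]
best_generation = first_generation_with_best_fitness(history)
print(best_generation)  # 3
```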

Making Predictions using the Trained Weights¶

The pygad.nn.predict() function can be used to make predictions using the trained network. As printed, the network is able to predict the labels correctly.

    predictions = pygad.nn.predict(last_layer=GANN_instance.population_networks[solution_idx],
                                   data_inputs=data_inputs)
    print(f"Predictions of the trained network : {predictions}")

    Predictions of the trained network : [0. 1. 1. 0.]

Calculating Some Statistics¶

Based on the predictions the network made, some statistics can be calculated, such as the number of correct and wrong predictions in addition to the classification accuracy.

    num_wrong = numpy.where(predictions != data_outputs)[0]
    num_correct = data_outputs.size - num_wrong.size
    accuracy = 100 * (num_correct/data_outputs.size)
    print(f"Number of correct classifications : {num_correct}.")
    print(f"Number of wrong classifications : {num_wrong.size}.")
    print(f"Classification accuracy : {accuracy}.")

    Number of correct classifications : 4
    Number of wrong classifications : 0
    Classification accuracy : 100

Examples¶

This section gives the complete code of some examples that build and train neural networks using the genetic algorithm. Each subsection builds a different network.

XOR Classification¶

This example, which builds the XOR gate, is discussed in the Steps to Build and Train Neural Networks using Genetic Algorithm section. Its complete code is listed below.

    import numpy
    import pygad
    import pygad.nn
    import pygad.gann

    def fitness_func(ga_instance, solution, sol_idx):
        global GANN_instance, data_inputs, data_outputs

        # If adaptive mutation is used, sometimes sol_idx is None.
        if sol_idx is None:
            sol_idx = 1

        predictions = pygad.nn.predict(last_layer=GANN_instance.population_networks[sol_idx],
                                       data_inputs=data_inputs)
        correct_predictions = numpy.where(predictions == data_outputs)[0].size
        solution_fitness = (correct_predictions/data_outputs.size)*100

        return solution_fitness

    def callback_generation(ga_instance):
        global GANN_instance, last_fitness

        population_matrices = pygad.gann.population_as_matrices(population_networks=GANN_instance.population_networks,
                                                                population_vectors=ga_instance.population)

        GANN_instance.update_population_trained_weights(population_trained_weights=population_matrices)

        print(f"Generation = {ga_instance.generations_completed}")
        print(f"Fitness    = {ga_instance.best_solution()[1]}")
        print(f"Change     = {ga_instance.best_solution()[1] - last_fitness}")

        last_fitness = ga_instance.best_solution()[1].copy()

    # Holds the fitness value of the previous generation.
    last_fitness = 0

    # Preparing the NumPy array of the inputs.
    data_inputs = numpy.array([[1, 1],
                               [1, 0],
                               [0, 1],
                               [0, 0]])

    # Preparing the NumPy array of the outputs.
    data_outputs = numpy.array([0, 1, 1, 0])

    # The length of the input vector for each sample (i.e. number of neurons in the input layer).
    num_inputs = data_inputs.shape[1]
    # The number of neurons in the output layer (i.e. number of classes).
    num_classes = 2

    # Creating an initial population of neural networks. The return of the initial_population() function
    # holds references to the networks, not their weights. Using such references, the weights of all
    # networks can be fetched.
    num_solutions = 6 # A solution or a network can be used interchangeably.
    GANN_instance = pygad.gann.GANN(num_solutions=num_solutions,
                                    num_neurons_input=num_inputs,
                                    num_neurons_hidden_layers=[2],
                                    num_neurons_output=num_classes,
                                    hidden_activations=["relu"],
                                    output_activation="softmax")

    # population does not hold the numerical weights of the network; instead it holds a list of references
    # to each last layer of each network (i.e. solution) in the population. A solution or a network can be
    # used interchangeably.
    # If there is a population with 3 solutions (i.e. networks), then the population is a list with 3
    # elements. Each element is a reference to the last layer of each network. Using such a reference,
    # all details of the network can be accessed.
    population_vectors = pygad.gann.population_as_vectors(population_networks=GANN_instance.population_networks)

    # To prepare the initial population, there are 2 ways:
    # 1) Prepare it yourself and pass it to the initial_population parameter. This way is useful when the
    #    user wants to start the genetic algorithm with a custom initial population.
    # 2) Assign valid integer values to the sol_per_pop and num_genes parameters. If the initial_population
    #    parameter exists, then the sol_per_pop and num_genes parameters are useless.
    initial_population = population_vectors.copy()

    num_parents_mating = 4 # Number of solutions to be selected as parents in the mating pool.

    num_generations = 500 # Number of generations.

    mutation_percent_genes = [5, 10] # Percentage of genes to mutate. This parameter has no action if the parameter mutation_num_genes exists.

    parent_selection_type = "sss" # Type of parent selection.

    crossover_type = "single_point" # Type of the crossover operator.

    mutation_type = "adaptive" # Type of the mutation operator.

    keep_parents = 1 # Number of parents to keep in the next population. -1 means keep all parents and 0 means keep nothing.

    init_range_low = -2
    init_range_high = 5

    ga_instance = pygad.GA(num_generations=num_generations,
                           num_parents_mating=num_parents_mating,
                           initial_population=initial_population,
                           fitness_func=fitness_func,
                           mutation_percent_genes=mutation_percent_genes,
                           init_range_low=init_range_low,
                           init_range_high=init_range_high,
                           parent_selection_type=parent_selection_type,
                           crossover_type=crossover_type,
                           mutation_type=mutation_type,
                           keep_parents=keep_parents,
                           suppress_warnings=True,
                           on_generation=callback_generation)

    ga_instance.run()

    # After the generations complete, some plots are shown that summarize how the outputs/fitness values evolve over generations.
    ga_instance.plot_fitness()

    # Returning the details of the best solution.
    solution, solution_fitness, solution_idx = ga_instance.best_solution()
    print(f"Parameters of the best solution : {solution}")
    print(f"Fitness value of the best solution = {solution_fitness}")
    print(f"Index of the best solution : {solution_idx}")

    if ga_instance.best_solution_generation != -1:
        print(f"Best fitness value reached after {ga_instance.best_solution_generation} generations.")

    # Predicting the outputs of the data using the best solution.
    predictions = pygad.nn.predict(last_layer=GANN_instance.population_networks[solution_idx],
                                   data_inputs=data_inputs)
    print(f"Predictions of the trained network : {predictions}")

    # Calculating some statistics
    num_wrong = numpy.where(predictions != data_outputs)[0]
    num_correct = data_outputs.size - num_wrong.size
    accuracy = 100 * (num_correct/data_outputs.size)
    print(f"Number of correct classifications : {num_correct}.")
    print(f"Number of wrong classifications : {num_wrong.size}.")
    print(f"Classification accuracy : {accuracy}.")

Image Classification¶

In the documentation of the pygad.nn module, a neural network is created for classifying images from the Fruits360 dataset without being trained using an optimization algorithm. This section discusses how to train such a classifier using the genetic algorithm with the help of the pygad.gann module.

Please make sure that the training data files dataset_features.npy and outputs.npy are available. For downloading them, use these links:

dataset_features.npy: The features https://github.com/ahmedfgad/NumPyANN/blob/master/dataset_features.npy

outputs.npy: The class labels https://github.com/ahmedfgad/NumPyANN/blob/master/outputs.npy

After the data is available, here is the complete code that builds and trains a neural network using the genetic algorithm for classifying images from 4 classes of the Fruits360 dataset.

Because there are 4 classes, the output layer has 4 neurons, as set by the num_neurons_output parameter of the pygad.gann.GANN class constructor.

    import numpy
    import pygad
    import pygad.nn
    import pygad.gann

    def fitness_func(ga_instance, solution, sol_idx):
        global GANN_instance, data_inputs, data_outputs

        predictions = pygad.nn.predict(last_layer=GANN_instance.population_networks[sol_idx],
                                       data_inputs=data_inputs)
        correct_predictions = numpy.where(predictions == data_outputs)[0].size
        solution_fitness = (correct_predictions/data_outputs.size)*100

        return solution_fitness

    def callback_generation(ga_instance):
        global GANN_instance, last_fitness

        population_matrices = pygad.gann.population_as_matrices(population_networks=GANN_instance.population_networks,
                                                                population_vectors=ga_instance.population)

        GANN_instance.update_population_trained_weights(population_trained_weights=population_matrices)

        print(f"Generation = {ga_instance.generations_completed}")
        print(f"Fitness    = {ga_instance.best_solution()[1]}")
        print(f"Change     = {ga_instance.best_solution()[1] - last_fitness}")

        last_fitness = ga_instance.best_solution()[1].copy()

    # Holds the fitness value of the previous generation.
    last_fitness = 0

    # Reading the input data.
    data_inputs = numpy.load("dataset_features.npy") # Download from https://github.com/ahmedfgad/NumPyANN/blob/master/dataset_features.npy

    # Optional step of filtering the input data using the standard deviation.
    features_STDs = numpy.std(a=data_inputs, axis=0)
    data_inputs = data_inputs[:, features_STDs>50]

    # Reading the output data.
    data_outputs = numpy.load("outputs.npy") # Download from https://github.com/ahmedfgad/NumPyANN/blob/master/outputs.npy

    # The length of the input vector for each sample (i.e. number of neurons in the input layer).
    num_inputs = data_inputs.shape[1]
    # The number of neurons in the output layer (i.e. number of classes).
    num_classes = 4

    # Creating an initial population of neural networks. The return of the initial_population() function
    # holds references to the networks, not their weights. Using such references, the weights of all
    # networks can be fetched.
    num_solutions = 8 # A solution or a network can be used interchangeably.
    GANN_instance = pygad.gann.GANN(num_solutions=num_solutions,
                                    num_neurons_input=num_inputs,
                                    num_neurons_hidden_layers=[150, 50],
                                    num_neurons_output=num_classes,
                                    hidden_activations=["relu", "relu"],
                                    output_activation="softmax")

    # population does not hold the numerical weights of the network; instead it holds a list of references
    # to each last layer of each network (i.e. solution) in the population. A solution or a network can be
    # used interchangeably.
    # If there is a population with 3 solutions (i.e. networks), then the population is a list with 3
    # elements. Each element is a reference to the last layer of each network. Using such a reference,
    # all details of the network can be accessed.
    population_vectors = pygad.gann.population_as_vectors(population_networks=GANN_instance.population_networks)

    # To prepare the initial population, there are 2 ways:
    # 1) Prepare it yourself and pass it to the initial_population parameter. This way is useful when the
    #    user wants to start the genetic algorithm with a custom initial population.
    # 2) Assign valid integer values to the sol_per_pop and num_genes parameters. If the initial_population
    #    parameter exists, then the sol_per_pop and num_genes parameters are useless.
    initial_population = population_vectors.copy()

    num_parents_mating = 4 # Number of solutions to be selected as parents in the mating pool.

    num_generations = 500 # Number of generations.

    mutation_percent_genes = 10 # Percentage of genes to mutate. This parameter has no action if the parameter mutation_num_genes exists.

    parent_selection_type = "sss" # Type of parent selection.

    crossover_type = "single_point" # Type of the crossover operator.

    mutation_type = "random" # Type of the mutation operator.

    keep_parents = -1 # Number of parents to keep in the next population. -1 means keep all parents and 0 means keep nothing.

    ga_instance = pygad.GA(num_generations=num_generations,
                           num_parents_mating=num_parents_mating,
                           initial_population=initial_population,
                           fitness_func=fitness_func,
                           mutation_percent_genes=mutation_percent_genes,
                           parent_selection_type=parent_selection_type,
                           crossover_type=crossover_type,
                           mutation_type=mutation_type,
                           keep_parents=keep_parents,
                           on_generation=callback_generation)

    ga_instance.run()

    # After the generations complete, some plots are shown that summarize how the outputs/fitness values evolve over generations.
    ga_instance.plot_fitness()

    # Returning the details of the best solution.
    solution, solution_fitness, solution_idx = ga_instance.best_solution()
    print(f"Parameters of the best solution : {solution}")
    print(f"Fitness value of the best solution = {solution_fitness}")
    print(f"Index of the best solution : {solution_idx}")

    if ga_instance.best_solution_generation != -1:
        print(f"Best fitness value reached after {ga_instance.best_solution_generation} generations.")

    # Predicting the outputs of the data using the best solution.
    predictions = pygad.nn.predict(last_layer=GANN_instance.population_networks[solution_idx],
                                   data_inputs=data_inputs)
    print(f"Predictions of the trained network : {predictions}")

    # Calculating some statistics
    num_wrong = numpy.where(predictions != data_outputs)[0]
    num_correct = data_outputs.size - num_wrong.size
    accuracy = 100 * (num_correct/data_outputs.size)
    print(f"Number of correct classifications : {num_correct}.")
    print(f"Number of wrong classifications : {num_wrong.size}.")
    print(f"Classification accuracy : {accuracy}.")
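The optional filtering step in the listing keeps only the feature columns whose standard deviation exceeds 50, which also reduces the number of input neurons and therefore the number of genes. The following pure-Python sketch reproduces that idea on a tiny made-up table; the helper name and the sample values are illustrative only. It uses statistics.pstdev because numpy.std computes the population standard deviation by default.

```python
from statistics import pstdev


def filter_features_by_std(samples, threshold):
    """Keep the columns whose population standard deviation is above the threshold."""
    columns = list(zip(*samples))
    kept_indices = [i for i, column in enumerate(columns) if pstdev(column) > threshold]
    filtered = [[row[i] for i in kept_indices] for row in samples]
    return filtered, kept_indices


# Made-up data: column 0 varies a lot, column 1 varies a little, column 2 is constant.
samples = [
    [0, 100, 7],
    [200, 101, 7],
    [0, 99, 7],
    [200, 100, 7],
]
filtered, kept_indices = filter_features_by_std(samples, threshold=50)
print(kept_indices)  # [0]
print(filtered)      # [[0], [200], [0], [200]]
```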

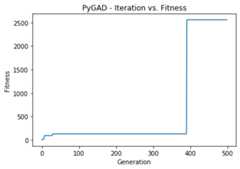

After training completes, here are the outputs of the print statements. The number of wrong classifications is only 1 and the accuracy is 99.949%. This accuracy is reached after 482 generations.

    Fitness value of the best solution = 99.94903160040775
    Index of the best solution : 0
    Best fitness value reached after 482 generations.
    Number of correct classifications : 1961.
    Number of wrong classifications : 1.
    Classification accuracy : 99.94903160040775.

The next figure shows how fitness value evolves by generation.

Regression Example 1¶

To train a neural network for regression, follow these instructions:

Set the output_activation parameter in the constructor of the pygad.gann.GANN class to "None". It is possible to use the ReLU function if all outputs are nonnegative.

    GANN_instance = pygad.gann.GANN(...
                                    output_activation="None")

Wherever the pygad.nn.predict() function is used, set the problem_type parameter to "regression".

    predictions = pygad.nn.predict(...,
                                   problem_type="regression")

Design the fitness function to calculate the error (e.g. mean absolute error). Because PyGAD maximizes the fitness, the reciprocal of the error is returned so that a smaller error gives a higher fitness.

    def fitness_func(ga_instance, solution, sol_idx):
        ...

        predictions = pygad.nn.predict(...,
                                       problem_type="regression")

        solution_fitness = 1.0/numpy.mean(numpy.abs(predictions - data_outputs))

        return solution_fitness
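The reciprocal-of-error fitness can be checked on its own with plain lists. The sketch below is a standalone illustration with a hypothetical helper name; the small eps term is an addition of this sketch (not part of the code above) that avoids a division by zero when the predictions match the targets exactly.

```python
def inverse_mae_fitness(predictions, targets, eps=1e-8):
    """Return 1 / (mean absolute error + eps), so a smaller error gives a higher fitness."""
    errors = [
        abs(predicted - target)
        for predicted_row, target_row in zip(predictions, targets)
        for predicted, target in zip(predicted_row, target_row)
    ]
    mean_absolute_error = sum(errors) / len(errors)
    # eps is an assumption of this sketch to keep the result finite for a perfect fit.
    return 1.0 / (mean_absolute_error + eps)


targets = [[0.1, 0.2], [1.8, 1.5]]
close_fitness = inverse_mae_fitness([[0.2, 0.3], [1.9, 1.6]], targets)  # every output off by 0.1
far_fitness = inverse_mae_fitness([[0.6, 0.7], [2.3, 2.0]], targets)    # every output off by 0.5
print(close_fitness > far_fitness)  # True
```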

The next code is a complete example of building a neural network for regression.

import numpy
import pygad
import pygad.nn
import pygad.gann

def fitness_func(ga_instance, solution, sol_idx):
    global GANN_instance, data_inputs, data_outputs

    predictions = pygad.nn.predict(last_layer=GANN_instance.population_networks[sol_idx],
                                   data_inputs=data_inputs,
                                   problem_type="regression")
    solution_fitness = 1.0/numpy.mean(numpy.abs(predictions - data_outputs))

    return solution_fitness

def callback_generation(ga_instance):
    global GANN_instance, last_fitness

    population_matrices = pygad.gann.population_as_matrices(population_networks=GANN_instance.population_networks,
                                                            population_vectors=ga_instance.population)

    GANN_instance.update_population_trained_weights(population_trained_weights=population_matrices)

    print(f"Generation = {ga_instance.generations_completed}")
    print(f"Fitness    = {ga_instance.best_solution(pop_fitness=ga_instance.last_generation_fitness)[1]}")
    print(f"Change     = {ga_instance.best_solution(pop_fitness=ga_instance.last_generation_fitness)[1] - last_fitness}")

    last_fitness = ga_instance.best_solution(pop_fitness=ga_instance.last_generation_fitness)[1].copy()

# Holds the fitness value of the previous generation.
last_fitness = 0

# Preparing the NumPy array of the inputs.
data_inputs = numpy.array([[2, 5, -3, 0.1],
                           [8, 15, 20, 13]])

# Preparing the NumPy array of the outputs.
data_outputs = numpy.array([[0.1, 0.2],
                            [1.8, 1.5]])

# The length of the input vector for each sample (i.e. number of neurons in the input layer).
num_inputs = data_inputs.shape[1]

# Creating an initial population of neural networks. The return of the initial_population() function
# holds references to the networks, not their weights. Using such references, the weights of all networks can be fetched.
num_solutions = 6 # A solution or a network can be used interchangeably.
GANN_instance = pygad.gann.GANN(num_solutions=num_solutions,
                                num_neurons_input=num_inputs,
                                num_neurons_hidden_layers=[2],
                                num_neurons_output=2,
                                hidden_activations=["relu"],
                                output_activation="None")

# population does not hold the numerical weights of the network. Instead, it holds a list of references
# to the last layer of each network (i.e. solution) in the population. A solution or a network can be used interchangeably.
# If there is a population with 3 solutions (i.e. networks), then the population is a list with 3 elements.
# Each element is a reference to the last layer of each network. Using such a reference, all details of the network can be accessed.
population_vectors = pygad.gann.population_as_vectors(population_networks=GANN_instance.population_networks)

# To prepare the initial population, there are 2 ways:
# 1) Prepare it yourself and pass it to the initial_population parameter. This way is useful when the user wants to start the genetic algorithm with a custom initial population.
# 2) Assign valid integer values to the sol_per_pop and num_genes parameters. If the initial_population parameter exists, then the sol_per_pop and num_genes parameters are useless.
initial_population = population_vectors.copy()

num_parents_mating = 4 # Number of solutions to be selected as parents in the mating pool.

num_generations = 500 # Number of generations.

mutation_percent_genes = 5 # Percentage of genes to mutate. This parameter has no action if the parameter mutation_num_genes exists.

parent_selection_type = "sss" # Type of parent selection.

crossover_type = "single_point" # Type of the crossover operator.

mutation_type = "random" # Type of the mutation operator.

keep_parents = 1 # Number of parents to keep in the next population. -1 means keep all parents and 0 means keep nothing.

init_range_low = -1
init_range_high = 1

ga_instance = pygad.GA(num_generations=num_generations,
                       num_parents_mating=num_parents_mating,
                       initial_population=initial_population,
                       fitness_func=fitness_func,
                       mutation_percent_genes=mutation_percent_genes,
                       init_range_low=init_range_low,
                       init_range_high=init_range_high,
                       parent_selection_type=parent_selection_type,
                       crossover_type=crossover_type,
                       mutation_type=mutation_type,
                       keep_parents=keep_parents,
                       on_generation=callback_generation)

ga_instance.run()

# After the generations complete, a plot is shown that summarizes how the fitness values evolve over generations.
ga_instance.plot_fitness()

# Returning the details of the best solution.
solution, solution_fitness, solution_idx = ga_instance.best_solution(pop_fitness=ga_instance.last_generation_fitness)
print(f"Parameters of the best solution : {solution}")
print(f"Fitness value of the best solution = {solution_fitness}")
print(f"Index of the best solution : {solution_idx}")

if ga_instance.best_solution_generation != -1:
    print(f"Best fitness value reached after {ga_instance.best_solution_generation} generations.")

# Predicting the outputs of the data using the best solution.
predictions = pygad.nn.predict(last_layer=GANN_instance.population_networks[solution_idx],
                               data_inputs=data_inputs,
                               problem_type="regression")
print(f"Predictions of the trained network : {predictions}")

# Calculating some statistics
abs_error = numpy.mean(numpy.abs(predictions - data_outputs))
print(f"Absolute error : {abs_error}.")

The next figure shows how the fitness value changes for the generations used.
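The example above moves the network weights between two representations: pygad.gann.population_as_vectors() produces the 1D gene vectors that the genetic algorithm evolves, and pygad.gann.population_as_matrices() converts them back to per-layer weight matrices. The following NumPy-only sketch illustrates that idea for a single network with 4 inputs, 2 hidden neurons, and 2 outputs. The helper names are hypothetical and this is not the library's implementation.

```python
import numpy

def matrices_to_vector(matrices):
    """Hypothetical helper: flatten a list of weight matrices into one 1D gene vector."""
    return numpy.concatenate([m.ravel() for m in matrices])

def vector_to_matrices(vector, shapes):
    """Hypothetical helper: rebuild matrices with the given shapes from a gene vector."""
    matrices = []
    start = 0
    for shape in shapes:
        size = int(numpy.prod(shape))
        matrices.append(vector[start:start + size].reshape(shape))
        start += size
    return matrices

# Weight shapes for a network with 4 inputs, one hidden layer of 2 neurons, and 2 outputs.
shapes = [(4, 2), (2, 2)]
rng = numpy.random.default_rng(0)
weights = [rng.uniform(-1, 1, size=s) for s in shapes]

genes = matrices_to_vector(weights)           # 4*2 + 2*2 = 12 genes
restored = vector_to_matrices(genes, shapes)  # same matrices as `weights`
print(genes.shape)
```

Under these assumptions, each solution in the population is a vector of 12 genes, and the round trip restores the original matrices exactly.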

Regression Example 2 - Fish Weight Prediction¶

This example uses the Fish Market Dataset available at Kaggle (https://www.kaggle.com/aungpyaeap/fish-market). Simply download the CSV dataset from this link (https://www.kaggle.com/aungpyaeap/fish-market/download). The dataset is also available at the GitHub project of the pygad.gann module: https://github.com/ahmedfgad/NeuralGenetic

The dataset is read using the read_csv() function of the Pandas library and converted to a NumPy array.

data = numpy.array(pandas.read_csv("Fish.csv"))

The last 5 columns in the dataset are used as inputs and the Weight column is used as output.

# Preparing the NumPy array of the inputs.
data_inputs = numpy.asarray(data[:, 2:], dtype=numpy.float32)

# Preparing the NumPy array of the outputs.
data_outputs = numpy.asarray(data[:, 1], dtype=numpy.float32) # Fish Weight
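The slicing above can be tried without downloading the file. The sketch below builds a tiny stand-in array with the column layout the slices imply (a label column, the weight, then five measurements). The two rows are placeholders for illustration and are not claimed to match the dataset.

```python
import numpy

# Two placeholder rows laid out as: species, weight, then five measurements.
# The values are illustrative only and are not claimed to match the dataset.
data = numpy.array([["Bream", 242.0, 23.2, 25.4, 30.0, 11.52, 4.02],
                    ["Roach", 40.0, 12.9, 14.1, 16.2, 4.15, 2.27]], dtype=object)

# Columns 2 onward are the inputs; column 1 is the output (weight).
data_inputs = numpy.asarray(data[:, 2:], dtype=numpy.float32)
data_outputs = numpy.asarray(data[:, 1], dtype=numpy.float32)

print(data_inputs.shape)
print(data_outputs.shape)
```

Note that data_outputs is a 1D array here, unlike the 2D data_outputs array in the first regression example.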

Note how the activation function at the last layer is set to "None". Moreover, the problem_type parameter in the pygad.nn.train() and pygad.nn.predict() functions is set to "regression". Remember to design an appropriate fitness function for the regression problem. In this example, the fitness value is calculated based on the mean absolute error.

solution_fitness = 1.0/numpy.mean(numpy.abs(predictions - data_outputs))
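One NumPy detail is worth checking with this expression, because data_outputs is a 1D array in this example. If the predictions carry a trailing axis, for example shape (n, 1), the subtraction broadcasts to shape (n, n) and the mean no longer measures the per-sample error. The shape of predictions used below is an assumption made for this sketch; inspect what pygad.nn.predict() actually returns in your version before relying on it.

```python
import numpy

targets = numpy.array([1.0, 2.0, 3.0])            # shape (3,), like data_outputs
predictions = numpy.array([[1.0], [2.0], [3.0]])  # shape (3, 1), an assumed layout

# Broadcasting (3, 1) against (3,) yields every pairwise difference, shape (3, 3).
diff_broadcast = predictions - targets
mae_broadcast = numpy.mean(numpy.abs(diff_broadcast))

# Flattening both sides compares each prediction with its own target only.
mae_flat = numpy.mean(numpy.abs(predictions.ravel() - targets.ravel()))

print(diff_broadcast.shape)
print(mae_broadcast, mae_flat)
```

Here the predictions are perfect, so the flattened error is zero, while the broadcast version reports a nonzero error. Printing the two shapes once before training is a cheap way to rule this out.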

Here is the complete code.

import numpy
import pygad
import pygad.nn
import pygad.gann
import pandas

def fitness_func(ga_instance, solution, sol_idx):
    global GANN_instance, data_inputs, data_outputs

    predictions = pygad.nn.predict(last_layer=GANN_instance.population_networks[sol_idx],
                                   data_inputs=data_inputs,
                                   problem_type="regression")
    solution_fitness = 1.0/numpy.mean(numpy.abs(predictions - data_outputs))

    return solution_fitness

def callback_generation(ga_instance):
    global GANN_instance, last_fitness

    population_matrices = pygad.gann.population_as_matrices(population_networks=GANN_instance.population_networks,
                                                            population_vectors=ga_instance.population)

    GANN_instance.update_population_trained_weights(population_trained_weights=population_matrices)

    print(f"Generation = {ga_instance.generations_completed}")
    print(f"Fitness    = {ga_instance.best_solution(pop_fitness=ga_instance.last_generation_fitness)[1]}")
    print(f"Change     = {ga_instance.best_solution(pop_fitness=ga_instance.last_generation_fitness)[1] - last_fitness}")

    last_fitness = ga_instance.best_solution(pop_fitness=ga_instance.last_generation_fitness)[1].copy()

# Holds the fitness value of the previous generation.
last_fitness = 0

data = numpy.array(pandas.read_csv("../data/Fish.csv"))

# Preparing the NumPy array of the inputs.
data_inputs = numpy.asarray(data[:, 2:], dtype=numpy.float32)

# Preparing the NumPy array of the outputs.
data_outputs = numpy.asarray(data[:, 1], dtype=numpy.float32)

# The length of the input vector for each sample (i.e. number of neurons in the input layer).
num_inputs = data_inputs.shape[1]

# Creating an initial population of neural networks. The return of the initial_population() function
# holds references to the networks, not their weights. Using such references, the weights of all networks can be fetched.
num_solutions = 6 # A solution or a network can be used interchangeably.
GANN_instance = pygad.gann.GANN(num_solutions=num_solutions,
                                num_neurons_input=num_inputs,
                                num_neurons_hidden_layers=[2],
                                num_neurons_output=1,
                                hidden_activations=["relu"],
                                output_activation="None")

# population does not hold the numerical weights of the network. Instead, it holds a list of references
# to the last layer of each network (i.e. solution) in the population. A solution or a network can be used interchangeably.
# If there is a population with 3 solutions (i.e. networks), then the population is a list with 3 elements.
# Each element is a reference to the last layer of each network. Using such a reference, all details of the network can be accessed.
population_vectors = pygad.gann.population_as_vectors(population_networks=GANN_instance.population_networks)

# To prepare the initial population, there are 2 ways:
# 1) Prepare it yourself and pass it to the initial_population parameter. This way is useful when the user wants to start the genetic algorithm with a custom initial population.
# 2) Assign valid integer values to the sol_per_pop and num_genes parameters. If the initial_population parameter exists, then the sol_per_pop and num_genes parameters are useless.
initial_population = population_vectors.copy()

num_parents_mating = 4 # Number of solutions to be selected as parents in the mating pool.

num_generations = 500 # Number of generations.

mutation_percent_genes = 5 # Percentage of genes to mutate. This parameter has no action if the parameter mutation_num_genes exists.

parent_selection_type = "sss" # Type of parent selection.

crossover_type = "single_point" # Type of the crossover operator.

mutation_type = "random" # Type of the mutation operator.

keep_parents = 1 # Number of parents to keep in the next population. -1 means keep all parents and 0 means keep nothing.

init_range_low = -1
init_range_high = 1

ga_instance = pygad.GA(num_generations=num_generations,
                       num_parents_mating=num_parents_mating,
                       initial_population=initial_population,
                       fitness_func=fitness_func,
                       mutation_percent_genes=mutation_percent_genes,
                       init_range_low=init_range_low,
                       init_range_high=init_range_high,
                       parent_selection_type=parent_selection_type,
                       crossover_type=crossover_type,
                       mutation_type=mutation_type,
                       keep_parents=keep_parents,
                       on_generation=callback_generation)

ga_instance.run()

# After the generations complete, a plot is shown that summarizes how the fitness values evolve over generations.
ga_instance.plot_fitness()

# Returning the details of the best solution.
solution, solution_fitness, solution_idx = ga_instance.best_solution(pop_fitness=ga_instance.last_generation_fitness)
print(f"Parameters of the best solution : {solution}")
print(f"Fitness value of the best solution = {solution_fitness}")
print(f"Index of the best solution : {solution_idx}")

if ga_instance.best_solution_generation != -1:
    print(f"Best fitness value reached after {ga_instance.best_solution_generation} generations.")

# Predicting the outputs of the data using the best solution.
predictions = pygad.nn.predict(last_layer=GANN_instance.population_networks[solution_idx],
                               data_inputs=data_inputs,
                               problem_type="regression")
print(f"Predictions of the trained network : {predictions}")

# Calculating some statistics
abs_error = numpy.mean(numpy.abs(predictions - data_outputs))
print(f"Absolute error : {abs_error}.")

The next figure shows how the fitness value changes for the 500 generations used.