WO2022257499A1 - Sound source localization method and apparatus based on microphone array, and storage medium - Google Patents

- Publication number

- WO2022257499A1 (PCT/CN2022/076825)

- Authority

- WO

- WIPO (PCT)

- Prior art keywords

- sound source

- azimuth

- data

- delay

- led

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Ceased

Classifications

- G—PHYSICS

- G01—MEASURING; TESTING

- G01S—RADIO DIRECTION-FINDING; RADIO NAVIGATION; DETERMINING DISTANCE OR VELOCITY BY USE OF RADIO WAVES; LOCATING OR PRESENCE-DETECTING BY USE OF THE REFLECTION OR RERADIATION OF RADIO WAVES; ANALOGOUS ARRANGEMENTS USING OTHER WAVES

- G01S5/00—Position-fixing by co-ordinating two or more direction or position line determinations; Position-fixing by co-ordinating two or more distance determinations

- G01S5/18—Position-fixing by co-ordinating two or more direction or position line determinations; Position-fixing by co-ordinating two or more distance determinations using ultrasonic, sonic, or infrasonic waves

Definitions

- the present invention relates to the technical field of sound source localization, in particular to a microphone array-based sound source localization method, device and storage medium.

- microphones are widely used in multimedia conferences, teaching, communications, vibration and noise detection of mechanical equipment, military command and reconnaissance, and other fields.

- audio collection products on the market mainly use a single microphone as the audio signal collection unit, and some high-end products use various forms of microphone arrays as sensors for audio signal collection and processing.

- Each microphone in the array is an "array element", and the redundant information collected by multiple array elements is used to obtain more information about the sound source.

- the position of the sound source in space can be determined through a sound source localization method.

- at present, sound source localization methods cannot denoise effectively, have high algorithmic complexity and poor real-time performance, and therefore cannot display the direction of the sound source accurately and effectively.

- the present application provides a sound source localization method, device and storage medium based on a microphone array, which can accurately and effectively display the direction of the sound source.

- the embodiment of the present application provides a sound source localization method based on a microphone array, applied to a sound source localization device that is electrically connected to both a microphone array module and an LED azimuth display module. The method includes: acquiring a voice signal from the microphone array module; preprocessing the voice signal to determine target data containing sound source information; obtaining sound source azimuth information from the target data through azimuth processing; obtaining a control signal for controlling the LED azimuth display module according to the sound source azimuth information; and sending the control signal to the LED azimuth display module, so that the LED azimuth display module displays the azimuth of the sound source.

- the step of obtaining sound source azimuth information from the target data through azimuth processing includes the following steps: calculating time delay data from the target data according to the time delay estimation method; determining effective time delay data from the time delay data according to the time delay screening method; and calculating the sound source azimuth information from the effective time delay data according to the azimuth solution algorithm.

- the step of calculating the time delay data from the target data includes the following steps: calculating time-delay frequency domain data from the target data according to the Fourier transform algorithm; calculating time-delay weighted data from the frequency domain data according to the signal-to-noise ratio (SNR) weighted generalized cross-correlation algorithm; calculating time-delay time-domain data from the weighted data according to the inverse Fourier transform algorithm; and determining the time delay data from the time-delay time-domain data according to the peak detection method.

- the step of determining the effective time delay data from the time delay data according to the time delay screening method includes the following steps: calculating a time delay error value from the time delay data according to the time delay error formula; and determining the effective time delay data from the time delay data according to a preset error threshold and the time delay error value.

- the step of preprocessing the voice signal to determine the target data containing sound source information includes the following steps: determining a start state and an end state from the voice signal according to the voice endpoint detection method; and determining the target data containing sound source information from the voice signal according to the start state and the end state.

- the step of obtaining a control signal for controlling the LED azimuth display module according to the sound source azimuth information includes the following steps: determining the azimuth angle according to the sound source azimuth information; and obtaining the control signal for controlling the LED azimuth display module from the azimuth angle according to the preset angle pairing table.

- the present application provides a sound source localization device that is electrically connected to a microphone array module and to an LED azimuth display module. The device includes: an acquisition module, used to acquire the voice signal from the microphone array module; a preprocessing module, used to preprocess the voice signal and determine the target data containing sound source information; an azimuth calculation module, used to obtain sound source azimuth information from the target data through azimuth processing; a control module, used to obtain a control signal for controlling the LED azimuth display module according to the sound source azimuth information; and a sending module, used to send the control signal to the LED azimuth display module, so that the LED azimuth display module displays the azimuth of the sound source.

- the embodiment of the present application also provides a terminal, including: a memory, a processor, and a computer program stored in the memory and operable on the processor, and the processor implements the following when executing the computer program.

- the present application provides a computer-readable storage medium that stores computer-executable instructions, and the computer-executable instructions are used to make a computer perform a microphone array-based sound source localization method.

- the present application also provides a computer program product that includes a computer program stored on a computer-readable storage medium; the computer program includes program instructions which, when executed by a computer, cause the computer to execute the sound source localization method based on the microphone array as described in the first aspect.

- the sound source localization method can effectively eliminate useless voice signals such as noise and silence, obtain target data containing sound source information, and obtain the control signal for controlling the LED azimuth display module according to the sound source azimuth information, thereby displaying the azimuth of the sound source on the LED azimuth display module with high processing efficiency and high real-time performance, so that the direction of the sound source is displayed accurately and effectively.

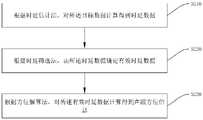

- Fig. 1 is the flow chart of the sound source localization method based on a microphone array provided by one embodiment of the present application;

- Fig. 2 is the specific method flowchart of step S130 in Fig. 1;

- Fig. 3 is the specific method flowchart of step S210 in Fig. 2;

- Fig. 4 is the specific method flowchart of step S220 in Fig. 2;

- Fig. 5 is the specific method flowchart of step S120 in Fig. 1;

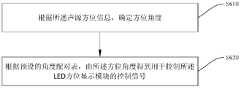

- Fig. 6 is the specific method flowchart of step S140 in Fig. 1;

- Fig. 7 is a device diagram of a sound source localization device provided by another embodiment of the present application;

- Fig. 8 is a device diagram of a terminal provided by another embodiment of the present application;

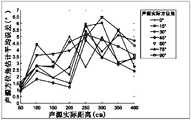

- Fig. 9 is a schematic diagram of the estimated mean value of the sound source azimuth obtained by the method of Fig. 1;

- Fig. 10 is a schematic diagram of the average error of the sound source azimuth estimation obtained by the method of Fig. 1.

- the present application provides a microphone array-based sound source localization method, device, and storage medium. The microphone array-based sound source localization method comprises: acquiring a voice signal from the microphone array module; preprocessing the voice signal to determine the target data containing sound source information; obtaining sound source azimuth information from the target data through azimuth processing; obtaining a control signal for controlling the LED azimuth display module according to the sound source azimuth information; and sending the control signal to the LED azimuth display module, so that the LED azimuth display module displays the azimuth of the sound source.

- the sound source localization method can effectively eliminate useless voice signals such as noise and silence, obtain target data containing sound source information, and obtain the control signal used to control the LED azimuth display module based on the sound source azimuth information, so as to display the azimuth of the sound source on the LED azimuth display module with high processing efficiency and high real-time performance, and can accurately and effectively display the azimuth of the sound source.

- a microphone array refers to an arrangement of microphones. Specifically, it refers to a system composed of a certain number of acoustic sensors (for example, microphones) used to sample and process the spatial characteristics of the sound field.

- acoustic sensors (for example, microphones)

- an electret microphone consists of two parts: acoustic-electric conversion and impedance conversion.

- the key component of the acoustic-electric conversion is the electret diaphragm.

- a thin film of pure gold is evaporated on one side of the electret diaphragm, and after electret treatment in a high-voltage electric field, opposite charges reside on the two sides of the electret diaphragm.

- the pure gold film is connected to the metal shell, and a capacitor is formed between the pure gold film and the metal plate; when the electret diaphragm encounters sound wave vibration, the electric field across the capacitor changes, producing an alternating voltage that varies with the sound wave.

- Fourier transform means that a function satisfying certain conditions can be expressed as a linear combination of trigonometric functions (sine and/or cosine functions) or their integrals.

- VAD: Voice Activity Detection

- Fig. 1 shows a sound source localization method based on a microphone array provided by an embodiment of the present application, applied to a sound source localization device that is electrically connected to the microphone array module and to the LED azimuth display module. The method includes but is not limited to step S110, step S120, step S130, step S140 and step S150.

- Step S110: acquiring a voice signal from the microphone array module

- the voice generated by the sound source can be effectively collected by the microphone array module and converted into a voice signal, so that the voice signal from the microphone array module can be obtained.

- Step S120: preprocessing the voice signal to determine target data containing sound source information

- Step S130: obtaining sound source azimuth information from the target data through azimuth processing

- Step S140: obtaining a control signal for controlling the LED azimuth display module according to the sound source azimuth information

- the control signal corresponds to the azimuth information of the sound source, which ensures the accuracy of the display on the LED azimuth display module.

- Step S150: sending the control signal to the LED azimuth display module, so that the LED azimuth display module displays the azimuth of the sound source.

- the direction of the sound source can be quickly and intuitively displayed by using the LED azimuth display module.

- the sound source localization method can effectively eliminate useless voice signals such as noise and silence, obtain target data containing sound source information, and obtain the control signal for controlling the LED azimuth display module according to the sound source azimuth information, so that the azimuth of the sound source is displayed on the LED azimuth display module with high processing efficiency and high real-time performance, and the azimuth of the sound source can be displayed accurately and effectively. As can be seen from Fig. 9 and Fig. 10, the average value of the sound source azimuth angle estimation is close to the actual value, and the average error of the sound source azimuth angle estimation is small. Therefore, the accuracy of the sound source localization method is high.

- step S130 specifically includes but is not limited to the following steps:

- Step S210: according to the time delay estimation method, calculate the time delay data from the target data

- Step S220: according to the time delay screening method, determine effective time delay data from the time delay data

- Step S230: according to the azimuth solution algorithm, calculate the azimuth information of the sound source from the effective time delay data.

- the time delay data of the target data can be effectively estimated by using the time delay estimation method; the time delay screening method is used to exclude abnormal data and obtain effective time delay data, ensuring the accuracy of subsequent operations.

- the azimuth angle of the sound source can be obtained through the azimuth solution algorithm; when the microphone array module uses four electret microphones, the solution formula is as follows:

- $\theta$ is the azimuth angle of the sound source

- $r_1$, $r_2$, $r_3$ and $r_4$ are the distances between the four electret microphones and the sound source respectively

- $\tau_{ij}$ is the effective time delay between the electret microphones $M_i$ and $M_j$

- $C$ is the speed of sound

- step S210 specifically includes but is not limited to the following steps:

- Step S310: according to the Fourier transform algorithm, calculate the time-delay frequency domain data from the target data

- Step S320: according to the signal-to-noise ratio weighted generalized cross-correlation algorithm, calculate the time-delay weighted data from the frequency domain data;

- Step S330: according to the inverse Fourier transform algorithm, calculate the time-delay time-domain data from the weighted data

- Step S340: according to the peak detection method, determine the time delay data from the time-delay time-domain data.

- the time-delay frequency domain data is obtained by using the Fourier transform algorithm and is then processed by the signal-to-noise ratio weighted generalized cross-correlation algorithm to ensure the accuracy of the time delay estimation; the inverse Fourier transform algorithm then returns the result to the time domain as time-delay time-domain data, from which the time delay data is conveniently determined by the peak detection method.

- the voice signals of the four electret microphones are sampled at a frequency of 10 kHz and stored in arrays with a length of 2048. The four groups of voice signals are recorded as target data $X_1(t)$, $X_2(t)$, $X_3(t)$ and $X_4(t)$. A fast Fourier transform is performed on the target data to obtain four sets of time-delay frequency domain data $X_1(\omega)$, $X_2(\omega)$, $X_3(\omega)$ and $X_4(\omega)$. Any two sets of time-delay frequency domain data are taken to determine one set of time delay data; for example, $X_1(\omega)$ and $X_2(\omega)$ determine the time delay data $\tau_{12}$. For the four sets of time-delay frequency domain data $X_1(\omega)$, $X_2(\omega)$, $X_3(\omega)$ and $X_4(\omega)$, six sets of time delay data need to be determined, namely $\tau_{12}$, …

- in step S320, the SNR-weighted generalized cross-correlation algorithm is as follows:

- $G_{12}(\omega) = X_1(\omega) X_2^*(\omega)$

- $G_{11}(\omega) = X_1(\omega) X_1^*(\omega)$

- $G_{22}(\omega) = X_2(\omega) X_2^*(\omega)$

- $A$ is the delay-weighted data

- SNR weighting functionis the SNR weighting function

- $X_1(\omega)$ is one set of frequency domain data

- $X_1^*(\omega)$ is the complex conjugate of $X_1(\omega)$

- $X_2(\omega)$ is another set of frequency domain data

- $X_2^*(\omega)$ is the complex conjugate of $X_2(\omega)$.

- the weighting function uses the PHAT function, which has certain anti-noise and anti-reverberation capabilities, but in practical applications the impact of additive noise easily leads to a sharp decline in the performance of the algorithm.
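The S310 to S340 chain (Fourier transform, weighted cross-spectrum, inverse transform, peak detection) can be sketched as follows. This is a minimal illustration, not the patent's implementation: the SNR weighting formula is not present in the extracted text, so the sketch substitutes the plain PHAT weighting mentioned above. The names `fft` and `gcc_phat_delay` are hypothetical, and the signal length is assumed to be a power of two (2048 in the text).

```python
import cmath


def fft(x, inverse=False):
    """Recursive radix-2 FFT (unnormalized); len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return list(x)
    even = fft(x[0::2], inverse)
    odd = fft(x[1::2], inverse)
    sign = 1 if inverse else -1
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(sign * 2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out


def gcc_phat_delay(x1, x2, fs):
    """Estimate the delay of x1 relative to x2 in seconds (positive if x1 lags)."""
    n = len(x1)
    X1, X2 = fft(x1), fft(x2)
    # Cross-power spectrum G12 = X1 * conj(X2), as defined in the text.
    G12 = [a * b.conjugate() for a, b in zip(X1, X2)]
    # ASSUMPTION: PHAT weighting (keep phase only) stands in for the
    # SNR weighting function, whose formula is not available here.
    weighted = [g / abs(g) if abs(g) > 1e-12 else 0j for g in G12]
    # Inverse transform back to the time (lag) domain.
    r = [v.real / n for v in fft(weighted, inverse=True)]
    # Peak detection: the index of the maximum gives the circular lag.
    peak = max(range(n), key=lambda k: r[k])
    lag = peak if peak < n // 2 else peak - n
    return lag / fs
```

For four microphones, the same function would be called once per microphone pair (six pairs in total) to produce the six delay values described in the text.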

- step S220 specifically includes but is not limited to the following steps:

- Step S410: according to the time delay error formula, calculate the time delay error value from the time delay data

- Step S420: determine valid time delay data from the time delay data according to a preset error threshold and the time delay error value.

- the time delay error formula is as follows:

- $\varepsilon$ is the error

- $i$, $j$ and $k$ are the numbers of any three electret microphones in the microphone array module

- $\tau_{ij}$, $\tau_{ik}$ and $\tau_{kj}$ are effective time delay data.

- when the microphone array module uses four electret microphones, six sets of time delay data can be obtained, namely $\tau_{12}$, $\tau_{13}$, $\tau_{14}$, $\tau_{23}$, $\tau_{24}$ and $\tau_{34}$. Because the time delay estimation method may introduce errors, the error value is used to screen the delays: for each set, check whether the error is within the error threshold, and discard the set if the threshold is exceeded, so that effective time delay data is filtered out of the time delay data.
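The screening step can be illustrated with a short sketch. The error formula itself is not present in the extracted text, so the code assumes a closure check over three microphones, error = |τ_ij − (τ_ik + τ_kj)|, which is consistent with the symbols defined above but is an assumption. The rule for which delays to keep (any delay that appears in at least one consistent triple) and the names `delay` and `screen_delays` are likewise hypothetical.

```python
from itertools import combinations


def delay(tau, a, b):
    """Signed delay between microphones a and b; tau stores pairs with a < b."""
    return tau[(a, b)] if a < b else -tau[(b, a)]


def screen_delays(tau, mics, threshold):
    """Keep delays that take part in at least one triple whose closure
    error is within the threshold (assumed screening rule)."""
    valid = set()
    for i, j, k in combinations(mics, 3):
        # ASSUMED error formula: |tau_ij - (tau_ik + tau_kj)|
        error = abs(delay(tau, i, j) - (delay(tau, i, k) + delay(tau, k, j)))
        if error <= threshold:
            valid.update({(i, j), (i, k), (j, k)})
    return {pair: tau[pair] for pair in sorted(valid)}
```

With four microphones each delay appears in two of the four triples, so a single corrupted delay fails both of its triples and is dropped while the other five survive.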

- step S120 specifically includes but is not limited to the following steps:

- Step S510: according to the voice endpoint detection method, determine the start state and the end state from the voice signal

- Step S520: according to the start state and the end state, determine target data containing sound source information from the voice signal.

- the short-term energy and zero-crossing rate of the voice signal are obtained in order to judge the start state and the end state of the voice signal. Specifically, if the short-term energy of the voice signal exceeds the threshold, the start state is entered; otherwise, the end state is entered, thereby eliminating useless voice signals such as noise and silence.
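The endpoint rule described above can be sketched as follows. This is a minimal illustration under stated assumptions: the frame length, the threshold value, non-overlapping frames and the function names are all hypothetical, and only the energy rule given in the text drives the decision. The zero-crossing rate is computed as a helper because the text mentions it, but the text does not say how it enters the decision.

```python
def short_time_energy(frame):
    """Sum of squared samples in one frame."""
    return sum(s * s for s in frame)


def zero_crossing_rate(frame):
    """Fraction of adjacent sample pairs whose signs differ."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return crossings / max(len(frame) - 1, 1)


def detect_endpoints(signal, frame_len, energy_threshold):
    """Return (start, end) sample indices of the first active segment, or None."""
    start = None
    for i in range(0, len(signal) - frame_len + 1, frame_len):
        active = short_time_energy(signal[i:i + frame_len]) > energy_threshold
        if active and start is None:
            start = i                      # start state
        elif not active and start is not None:
            return start, i                # end state
    if start is not None:
        return start, (len(signal) // frame_len) * frame_len
    return None
```

The target data for the later steps would then be `signal[start:end]`.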

- step S140 specifically includes but is not limited to the following steps:

- Step S610: determining the azimuth angle according to the azimuth information of the sound source

- Step S620: according to the preset angle pairing table, obtaining a control signal for controlling the LED azimuth display module from the azimuth angle.

- the azimuth information of the sound source includes the azimuth angle of the sound source, and the angle pairing table is the table that maps azimuth angles to the lighting sequence of the LED azimuth display module.

- the LED azimuth display module is lit by the control signal, which displays the direction of the sound source intuitively and effectively.

- the sound source localization system includes a casing and an LED azimuth display module arranged on the casing.

- the LED azimuth display module includes 16 LED lamp beads evenly arranged along the circumference of the casing, which is thereby divided into 16 sections; the angle between two adjacent LED lamp beads is 22.5 degrees, and a direction pointing out from the center of the casing is set to 0 degrees as the starting point of the first section. The angle ranges of the sections are [0 degrees, 22.5 degrees), [22.5 degrees, 45 degrees), [45 degrees, 67.5 degrees), [67.5 degrees, 90 degrees), [90 degrees, 112.5 degrees), [112.5 degrees, 135 degrees), [135 degrees, 157.5 degrees), [157.5 degrees, 180 degrees), [180 degrees, 202.5 degrees), [202.5 degrees, 225 degrees), [225 degrees, 247.5 degrees), [247.5 degrees, 270 degrees), [270 degrees, 292.5 degrees), [292.5 degrees, 315 degrees), [315 degrees, 337.5 degrees) and [33
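The angle pairing described above amounts to mapping an azimuth angle to one of 16 half-open sections of 22.5 degrees each. A minimal sketch follows; the function names and the one-bit-per-LED encoding of the control signal are assumptions for illustration, since the text does not specify the format of the control signal.

```python
def led_index(azimuth_deg, num_leds=16):
    """Zero-based section index for an azimuth angle in degrees.

    Sections are half-open, [k * 22.5, (k + 1) * 22.5) for 16 LEDs,
    and angles outside [0, 360) are wrapped around.
    """
    sector = 360.0 / num_leds
    return int((azimuth_deg % 360.0) // sector)


def led_mask(azimuth_deg, num_leds=16):
    """Hypothetical control word: one bit per LED, only the target LED set."""
    return 1 << led_index(azimuth_deg, num_leds)
```

For example, an azimuth of 45 degrees falls in the third section, so `led_index(45.0)` is 2 and `led_mask(45.0)` sets bit 2.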

- an embodiment of the present application also provides a sound source localization device 700. The microphone array module and the LED azimuth display module are each electrically connected to the sound source localization device 700, and the device includes but is not limited to: an acquisition module 710, a preprocessing module 720, an azimuth calculation module 730, a control module 740 and a sending module 750.

- the acquisition module 710 is configured to acquire the voice signal from the microphone array module

- the preprocessing module 720 is configured to preprocess the voice signal to determine target data containing sound source information

- the azimuth calculation module 730 is configured to obtain sound source azimuth information from the target data through azimuth processing

- the control module 740 is configured to obtain a control signal for controlling the LED azimuth display module according to the azimuth information of the sound source;

- the sending module 750 is configured to send the control signal to the LED azimuth display module, so that the LED azimuth display module displays the azimuth of the sound source.

- because the sound source localization device 700 in this embodiment is based on the same inventive concept as the above-mentioned microphone array-based sound source localization method, the corresponding content in the method embodiments also applies to this device embodiment and is not described in detail here.

- an embodiment of the present application also provides a terminal 800, and the terminal 800 may be any type of smart terminal, such as a mobile phone, a tablet computer, a personal computer, and the like.

- the terminal 800 includes: a memory 810, a processor 820, and a computer program stored in the memory 810 and operable on the processor 820.

- the processor 820 and the memory 810 may be connected through a bus or in other ways.

- the non-transitory software programs and instructions required to implement the microphone array-based sound source localization method of the above-mentioned embodiment are stored in the memory 810 and, when executed by the processor 820, perform the microphone array-based sound source localization method of the above-mentioned embodiment, for example, the method steps S110 to S150 in FIG. 1 described above, the method steps S210 to S230 in FIG. 2, the method steps S310 to S340 in FIG. 3, the method steps S410 to S420 in FIG. 4, the method steps S510 to S520 in FIG. 5, and the method steps S610 to S620 in FIG. 6.

- the memory 810 can be used to store non-transitory software programs, non-transitory computer-executable programs and modules, such as the program instructions/modules corresponding to the microphone array-based sound source localization method, for example, the acquisition module 710, the preprocessing module 720, the azimuth calculation module 730, the control module 740 and the sending module 750 shown in FIG. 7.

- the processor 820 executes various functional applications and data processing of the sound source localization device 700 by running the non-transitory software programs, instructions and modules stored in the memory 810, that is, it implements the microphone array-based sound source localization method.

- the memory 810 may include a program storage area and a data storage area, wherein the program storage area may store an operating system and an application program required by at least one function; the data storage area may store data created according to the use of the sound source localization device 700, and the like.

- the memory 810 may include a high-speed random access memory, and may also include a non-transitory memory, such as at least one magnetic disk storage device, a flash memory device, or other non-transitory solid-state storage devices.

- the memory 810 may optionally include remote memories that are remotely located relative to the processor 820, and these remote memories may be connected to the terminal 800 through a network. Examples of the aforementioned networks include, but are not limited to, the Internet, intranets, local area networks, mobile communication networks, and combinations thereof.

- the one or more modules are stored in the memory 810 and, when executed by the one or more processors 820, perform the microphone array-based sound source localization method of the above method embodiment, for example, the method steps S110 to S150 in FIG. 1 described above, the method steps S210 to S230 in FIG. 2, the method steps S310 to S340 in FIG. 3, the method steps S410 to S420 in FIG. 4, the method steps S510 to S520 in FIG. 5, and the method steps S610 to S620 in FIG. 6, and implement the functions of the modules 710 to 750 in FIG. 7.

- the embodiment of the present application also provides a computer-readable storage medium that stores computer-executable instructions. When the computer-executable instructions are executed by one or more processors 820, for example, by one processor 820, the one or more processors 820 can execute the microphone array-based sound source localization method of the above method embodiment, for example, the method steps S110 to S150 in FIG. 1 described above, the method steps S210 to S230 in FIG. 2, the method steps S310 to S340 in FIG. 3, the method steps S410 to S420 in FIG. 4, the method steps S510 to S520 in FIG. 5, and the method steps S610 to S620 in FIG. 6, and realize the functions of the modules 710 to 750 in FIG. 7.

- the device embodiments described above are only illustrative, and the units described as separate components may or may not be physically separated, that is, they may be located in one place, or may be distributed to multiple network units. Part or all of the modules can be selected according to actual needs to achieve the purpose of the solution of this embodiment.

- each implementation manner can be implemented by means of software plus a general hardware platform.

- all or part of the process in the method of the above-mentioned embodiments can be completed by instructing related hardware through a computer program, and the program can be stored in a computer-readable storage medium; when the program is executed, it may include the flow of the above method embodiments.

- the storage medium may be a magnetic disk, an optical disk, a read-only memory (Read-Only Memory, ROM) or a random access memory (Random Access Memory, RAM), etc.

Landscapes

- Physics & Mathematics (AREA)

- Engineering & Computer Science (AREA)

- General Physics & Mathematics (AREA)

- Radar, Positioning & Navigation (AREA)

- Remote Sensing (AREA)

- Circuit For Audible Band Transducer (AREA)

- Obtaining Desirable Characteristics In Audible-Bandwidth Transducers (AREA)

Abstract

Description

Translated from Chinese

The present invention relates to the technical field of sound source localization, in particular to a microphone array-based sound source localization method, device and storage medium.

As a common acoustic-electric transducer for collecting sound signals, especially voice signals, microphones are widely used in multimedia conferences, teaching, communications, vibration and noise detection of mechanical equipment, military command and reconnaissance, and other fields. At present, audio collection products on the market mainly use a single microphone as the audio signal collection unit, and some high-end products use various forms of microphone arrays as sensors for audio signal collection and processing. Each microphone in the array is an "array element", and the redundant information collected by multiple array elements is used to obtain more information about the sound source.

The position of the sound source in space can be determined through a sound source localization method. At present, sound source localization methods cannot denoise effectively, have high algorithmic complexity and poor real-time performance, and therefore cannot display the direction of the sound source accurately and effectively.

Contents of the invention

The following is an overview of the subject matter described in detail herein. This overview is not intended to limit the scope of protection of the claims.

The present application provides a sound source localization method, device and storage medium based on a microphone array, which can accurately and effectively display the direction of the sound source.

The solution by which the present application solves its technical problem is:

In the first aspect, the embodiment of the present application provides a sound source localization method based on a microphone array, applied to a sound source localization device that is electrically connected to both a microphone array module and an LED azimuth display module. The method includes: acquiring a voice signal from the microphone array module; preprocessing the voice signal to determine target data containing sound source information; obtaining sound source azimuth information from the target data through azimuth processing; obtaining a control signal for controlling the LED azimuth display module according to the sound source azimuth information; and sending the control signal to the LED azimuth display module, so that the LED azimuth display module displays the azimuth of the sound source.

进一步,所述根据所述目标数据,通过方位处理,得到声源方位信息这一步骤,包括以下步骤:根据时延估计法,对所述目标数据计算得到时延数据;根据时延筛选法,由所述时延数据确定有效时延数据;根据方位解算法,对所述有效时延数据计算得到声源方位信息。Further, the step of obtaining sound source azimuth information through azimuth processing according to the target data includes the following steps: according to the time delay estimation method, calculating the time delay data for the target data; according to the time delay screening method, Effective time delay data is determined from the time delay data; sound source azimuth information is obtained by calculating the effective time delay data according to an azimuth solution algorithm.

Further, the step of calculating time delay data from the target data according to the time delay estimation method includes: calculating time-delay frequency domain data from the target data according to a Fourier transform algorithm; calculating delay-weighted data from the frequency domain data according to an SNR-weighted generalized cross-correlation algorithm; calculating time-delay time domain data from the weighted data according to an inverse Fourier transform algorithm; and determining the time delay data from the time-delay time domain data according to a peak detection method.

Further, the SNR-weighted generalized cross-correlation algorithm is defined in terms of $G_{12}(\omega)=X_1(\omega)X_2^*(\omega)$, $G_{11}(\omega)=X_1(\omega)X_1^*(\omega)$ and $G_{22}(\omega)=X_2(\omega)X_2^*(\omega)$, where $A$ is the delay-weighted data obtained with the SNR weighting function, $X_1(\omega)$ is one set of frequency domain data, $X_1^*(\omega)$ is the complex conjugate of $X_1(\omega)$, $X_2(\omega)$ is another set of frequency domain data, and $X_2^*(\omega)$ is the complex conjugate of $X_2(\omega)$.

Further, the step of determining valid time delay data from the time delay data according to the time delay screening method includes: calculating a time delay error value from the time delay data according to a time delay error formula; and determining the valid time delay data from the time delay data according to a preset error threshold and the time delay error value.

Further, the step of preprocessing the voice signal to determine target data containing sound source information includes: determining a start state and an end state from the voice signal according to a voice endpoint detection method; and determining, according to the start state and the end state, the target data containing sound source information from the voice signal.

Further, the step of obtaining, from the sound source azimuth information, a control signal for controlling the LED azimuth display module includes: determining an azimuth angle from the sound source azimuth information; and obtaining the control signal for controlling the LED azimuth display module from the azimuth angle according to a preset angle pairing table.

In a second aspect, the present application provides a sound source localization device that is electrically connected to a microphone array module and to an LED azimuth display module. The device includes: an acquisition module for acquiring a voice signal from the microphone array module; a preprocessing module for preprocessing the voice signal to determine target data containing sound source information; an azimuth calculation module for obtaining sound source azimuth information from the target data through azimuth processing; a control module for obtaining, from the sound source azimuth information, a control signal for controlling the LED azimuth display module; and a sending module for sending the control signal to the LED azimuth display module so that the LED azimuth display module displays the azimuth of the sound source.

In a third aspect, an embodiment of the present application further provides a terminal, including a memory, a processor, and a computer program stored in the memory and executable on the processor, wherein the processor implements the sound source localization method based on a microphone array according to the first aspect when executing the computer program.

In a fourth aspect, the present application provides a computer-readable storage medium storing computer-executable instructions, the computer-executable instructions being used to cause a computer to perform the sound source localization method based on a microphone array according to the first aspect.

In a fifth aspect, the present application further provides a computer program product including a computer program stored on a computer-readable storage medium, the computer program including program instructions which, when executed by a computer, cause the computer to perform the sound source localization method based on a microphone array according to the first aspect.

One or more technical solutions provided in the embodiments of the present application have at least the following beneficial effects: the sound source localization method can effectively exclude useless voice signals such as noise and silence to obtain target data containing sound source information, and can obtain, from the sound source azimuth information, a control signal for controlling the LED azimuth display module, so that the azimuth of the sound source is displayed on the LED azimuth display module. The method offers high processing efficiency and good real-time performance, and displays the azimuth of the sound source accurately and effectively.

Other features and advantages of the present application will be set forth in the description that follows and will in part be apparent from the description, or may be learned by practicing the present application. The objectives and other advantages of the present application can be realized and obtained by the structures particularly pointed out in the description, the claims and the accompanying drawings.

The accompanying drawings provide a further understanding of the technical solution of the present application and constitute a part of the description. Together with the embodiments of the present application, they serve to explain the technical solution of the present application and do not limit it.

Fig. 1 is a flowchart of a sound source localization method based on a microphone array provided by an embodiment of the present application;

Fig. 2 is a detailed flowchart of step S130 in Fig. 1;

Fig. 3 is a detailed flowchart of step S210 in Fig. 2;

Fig. 4 is a detailed flowchart of step S220 in Fig. 2;

Fig. 5 is a detailed flowchart of step S120 in Fig. 1;

Fig. 6 is a detailed flowchart of step S140 in Fig. 1;

Fig. 7 is a device diagram of a sound source localization device provided by another embodiment of the present application;

Fig. 8 is a device diagram of a terminal provided by another embodiment of the present application;

Fig. 9 is a schematic diagram of the mean estimated sound source azimuth obtained with the method of Fig. 1;

Fig. 10 is a schematic diagram of the mean error of the sound source azimuth estimates obtained with the method of Fig. 1.

To make the objectives, technical solutions and advantages of the present invention clearer, the present application is described in further detail below with reference to the accompanying drawings and embodiments. It should be understood that the specific embodiments described here are only intended to explain the present application and not to limit it.

It should be noted that although functional modules are divided in the device diagrams and a logical order is shown in the flowcharts, in some cases the steps shown or described may be performed with a module division different from that in the device diagrams, or in an order different from that in the flowcharts. The terms "first", "second" and the like in the description, the claims and the accompanying drawings are used to distinguish similar objects and do not necessarily describe a specific order or sequence.

Unless otherwise defined, all technical and scientific terms used herein have the same meaning as commonly understood by those skilled in the technical field of the present invention. The terms used herein are only for the purpose of describing the embodiments of the present invention and are not intended to limit the present invention.

The present application provides a sound source localization method and device based on a microphone array, and a storage medium. The method includes: acquiring a voice signal from the microphone array module; preprocessing the voice signal to determine target data containing sound source information; obtaining sound source azimuth information from the target data through azimuth processing; obtaining, from the sound source azimuth information, a control signal for controlling the LED azimuth display module; and sending the control signal to the LED azimuth display module so that the LED azimuth display module displays the azimuth of the sound source. With the solution provided by the embodiments of the present application, the sound source localization method can effectively exclude useless voice signals such as noise and silence to obtain target data containing sound source information, and can obtain, from the sound source azimuth information, a control signal for controlling the LED azimuth display module, so that the azimuth of the sound source is displayed on the LED azimuth display module. The method offers high processing efficiency and good real-time performance, and displays the azimuth of the sound source accurately and effectively.

First, several terms used in the present application are explained:

Microphone array: an arrangement of microphones. Specifically, a system composed of a certain number of acoustic sensors (for example, microphones) that samples and processes the spatial characteristics of a sound field.

Electret microphone: a microphone consisting of two parts, acoustic-electric conversion and impedance conversion. The key element of the acoustic-electric conversion is the electret diaphragm. A thin film of pure gold is vapor-deposited on one side of the diaphragm, and the diaphragm is then polarized in a high-voltage electric field, after which opposite charges reside on its two sides. The gold film is connected to the metal housing, and a capacitor is formed between the gold film and the metal plate. When sound waves vibrate the electret diaphragm, the electric field across the capacitor changes, producing an alternating voltage that varies with the sound wave.

Fourier transform: expresses a function that satisfies certain conditions as a linear combination of trigonometric functions (sine and/or cosine functions) or of their integrals.

Inverse Fourier transform: the operation of recovering the original function f(t) from a given Fourier transform.

Voice activity detection (VAD): also called voice endpoint detection or voice boundary detection; it identifies and removes long periods of silence from an audio signal stream.

The embodiments of the present application are further described below with reference to the accompanying drawings.

Referring to Fig. 1, Fig. 9 and Fig. 10, Fig. 1 shows a sound source localization method based on a microphone array provided by an embodiment of the present application. The method is applied to a sound source localization device that is electrically connected to a microphone array module and to an LED azimuth display module, and includes, but is not limited to, steps S110, S120, S130, S140 and S150.

Step S110: acquire a voice signal from the microphone array module.

It can be understood that the sound produced by the sound source is captured by the microphone array module and converted into a voice signal, so that the voice signal can be acquired from the microphone array module.

Step S120: preprocess the voice signal to determine target data containing sound source information.

It can be understood that preprocessing excludes useless voice signals such as noise and silence, yielding target data containing sound source information and improving the accuracy and efficiency of subsequent signal processing.

Step S130: obtain sound source azimuth information from the target data through azimuth processing.

It can be understood that azimuth processing of the target data yields valid and accurate sound source azimuth information.

Step S140: obtain, from the sound source azimuth information, a control signal for controlling the LED azimuth display module.

It should be noted that the control signal corresponds to the sound source azimuth information, which ensures that the LED azimuth display module displays the azimuth accurately.

Step S150: send the control signal to the LED azimuth display module so that the LED azimuth display module displays the azimuth of the sound source.

It should be noted that the LED azimuth display module displays the azimuth of the sound source quickly and intuitively.

It can be understood that, with the solution provided by the embodiments of the present application, the sound source localization method can effectively exclude useless voice signals such as noise and silence to obtain target data containing sound source information, and can obtain, from the sound source azimuth information, a control signal for controlling the LED azimuth display module, so that the azimuth of the sound source is displayed on the LED azimuth display module with high processing efficiency, good real-time performance and an accurate, effective display. As Fig. 9 and Fig. 10 show, the mean estimated azimuth of the sound source is close to the actual value and the mean estimation error is small, so the sound source localization method is highly accurate.

In addition, referring to Fig. 2, in an embodiment, step S130 specifically includes, but is not limited to, the following steps:

Step S210: calculate time delay data from the target data according to a time delay estimation method.

Step S220: determine valid time delay data from the time delay data according to a time delay screening method.

Step S230: calculate the sound source azimuth information from the valid time delay data according to an azimuth solution algorithm.

It can be understood that the time delay estimation method effectively estimates the time delay data of the target data, and the time delay screening method excludes abnormal data to obtain valid time delay data, ensuring the accuracy of subsequent calculations.

It should be noted that the azimuth angle of the sound source can be obtained through the azimuth solution algorithm. When the microphone array module uses four electret microphones, the solution formula is as follows:

where $\theta$ is the azimuth angle of the sound source, $r_1$, $r_2$, $r_3$ and $r_4$ are the distances from the four electret microphones to the sound source, and $\tau_{ij}$ is the valid time delay data of electret microphones $M_i$ and $M_j$. The solution formula is derived as follows:

where $C$ is the speed of sound.

Rearranging formulas (1), (2) and (3) gives:

When the electret microphones are far from the sound source, it can be assumed that:

$r_4 + r_2 \approx r_3 + r_1$ (5)

Substituting formula (5) into formula (4) and taking the arctangent yields the solution formula.
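A minimal Python sketch of the far-field case may help make the arctangent step concrete. It assumes a hypothetical cross-shaped layout, which this text does not state: microphones $M_1$ to $M_4$ at $(d,0)$, $(0,d)$, $(-d,0)$ and $(0,-d)$, with the sign convention $\tau_{ij}=(r_i-r_j)/C$. Under that assumption $r_3^2-r_1^2=4Rd\cos\theta$ and $r_4^2-r_2^2=4Rd\sin\theta$ for a source at range $R$, so with the approximation of formula (5) the ratio reduces to $\tan\theta\approx\tau_{42}/\tau_{31}$. The function names, the layout and the speed-of-sound value are illustrative only and are not taken from the application.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s; assumed value, not given in the source


def far_field_azimuth(tau_31, tau_42):
    """Azimuth in degrees, in [0, 360), from the two diagonal-pair delays.

    Assumes the hypothetical cross layout M1=(d,0), M2=(0,d), M3=(-d,0),
    M4=(0,-d) and tau_ij = (r_i - r_j) / C.
    """
    angle = math.degrees(math.atan2(tau_42, tau_31)) % 360.0
    # Guard against floating-point rounding up to exactly 360.0.
    return 0.0 if angle >= 360.0 else angle


def simulate_delays(theta_deg, d, source_range):
    """Exact (tau_31, tau_42) for a point source, under the assumed layout."""
    theta = math.radians(theta_deg)
    sx, sy = source_range * math.cos(theta), source_range * math.sin(theta)
    mics = [(d, 0.0), (0.0, d), (-d, 0.0), (0.0, -d)]
    r1, r2, r3, r4 = (math.hypot(sx - mx, sy - my) for mx, my in mics)
    return (r3 - r1) / SPEED_OF_SOUND, (r4 - r2) / SPEED_OF_SOUND
```

Using `atan2` rather than a plain arctangent of the ratio keeps the quadrant information, which a display covering the full circle would need.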

In addition, referring to Fig. 3, in an embodiment, step S210 specifically includes, but is not limited to, the following steps:

Step S310: calculate time-delay frequency domain data from the target data according to a Fourier transform algorithm.

Step S320: calculate delay-weighted data from the frequency domain data according to an SNR-weighted generalized cross-correlation algorithm.

Step S330: calculate time-delay time domain data from the weighted data according to an inverse Fourier transform algorithm.

Step S340: determine the time delay data from the time-delay time domain data according to a peak detection method.

It can be understood that the Fourier transform algorithm yields the time-delay frequency domain data, on which the SNR-weighted generalized cross-correlation is computed to ensure the accuracy of the time delay estimate. The inverse Fourier transform algorithm then returns the result to the time domain as time-delay time domain data, from which the time delay data is readily determined by peak detection.

In practice, when the microphone array module uses four electret microphones, the voice signals of the four microphones are sampled at 10 kHz and each stored in an array of length 2048. The four voice signals are denoted as target data $X_1(t)$, $X_2(t)$, $X_3(t)$ and $X_4(t)$. A fast Fourier transform of the target data gives four sets of time-delay frequency domain data $X_1(\omega)$, $X_2(\omega)$, $X_3(\omega)$ and $X_4(\omega)$. Time delay data is determined from any two of these sets; for example, $X_1(\omega)$ and $X_2(\omega)$ determine the time delay data $\tau_{12}$. For the four sets $X_1(\omega)$, $X_2(\omega)$, $X_3(\omega)$ and $X_4(\omega)$, six sets of time delay data need to be determined: $\tau_{12}$, $\tau_{13}$, $\tau_{14}$, $\tau_{23}$, $\tau_{24}$ and $\tau_{34}$.

In addition, in an embodiment, the SNR-weighted generalized cross-correlation algorithm in step S320 is defined in terms of the following quantities:

$G_{12}(\omega)=X_1(\omega)X_2^*(\omega)$, $G_{11}(\omega)=X_1(\omega)X_1^*(\omega)$, $G_{22}(\omega)=X_2(\omega)X_2^*(\omega)$,

where $A$ is the delay-weighted data obtained with the SNR weighting function, $X_1(\omega)$ is one set of frequency domain data, $X_1^*(\omega)$ is the complex conjugate of $X_1(\omega)$, $X_2(\omega)$ is another set of frequency domain data, and $X_2^*(\omega)$ is the complex conjugate of $X_2(\omega)$.

It can be understood that a generalized cross-correlation weighted with the PHAT function has some robustness to noise and reverberation, but in practical applications additive noise can sharply degrade its performance. Introducing an SNR weighting function into the generalized cross-correlation algorithm improves recognition accuracy under different signal-to-noise ratio conditions.
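The frequency-domain pipeline of steps S310 to S340 can be sketched in Python as follows. Because the SNR weighting function of the application is not reproduced in this text, the sketch substitutes the plain PHAT weighting $1/|G_{12}(\omega)|$ that the paragraph above treats as the baseline; it is not the SNR-weighted variant. A naive $O(n^2)$ DFT stands in for the FFT to keep the example dependency-free, and the function names are illustrative.

```python
import cmath


def dft(x, inverse=False):
    """Naive O(n^2) discrete Fourier transform (a real system would use an FFT)."""
    n = len(x)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        acc = sum(x[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n)
                  for t in range(n))
        out.append(acc / n if inverse else acc)
    return out


def gcc_phat_delay(x1, x2):
    """Delay of x1 relative to x2 in samples (positive: x1 lags x2).

    Both inputs must have the same length; the correlation is circular.
    """
    n = len(x1)
    X1, X2 = dft(x1), dft(x2)                      # S310: to the frequency domain
    weighted = []
    for a, b in zip(X1, X2):
        g12 = a * b.conjugate()                    # cross-power spectrum G12
        mag = abs(g12)
        # S320 stand-in: PHAT weighting keeps only the phase of G12.
        weighted.append(g12 / mag if mag > 1e-12 else 0j)
    corr = [v.real for v in dft(weighted, inverse=True)]  # S330: back to time domain
    peak = max(range(n), key=lambda m: corr[m])    # S340: peak detection
    return peak if peak <= n // 2 else peak - n    # circular lag to signed lag
```

Dividing the returned lag by the sampling rate (10 kHz in the example above) would convert it to seconds.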

In addition, referring to Fig. 4, in an embodiment, step S220 specifically includes, but is not limited to, the following steps:

Step S410: calculate a time delay error value from the time delay data according to a time delay error formula.

Step S420: determine valid time delay data from the time delay data according to a preset error threshold and the time delay error value.

Specifically, the time delay error formula is as follows:

$\delta = |\tau_{ij} - (\tau_{ik} + \tau_{kj})|$,

where $\delta$ is the error, $i$, $j$ and $k$ are the numbers of any three electret microphones in the microphone array module, and $\tau_{ij}$, $\tau_{ik}$ and $\tau_{kj}$ are the time delay data of the corresponding microphone pairs.

It can be understood that when the microphone array module uses four electret microphones, six sets of time delay data are obtained: $\tau_{12}$, $\tau_{13}$, $\tau_{14}$, $\tau_{23}$, $\tau_{24}$ and $\tau_{34}$. Time delay estimation is subject to error, so the error value is used to screen the time delay values: each error is checked against the error threshold, and any set of time delay data whose error exceeds the threshold is discarded, leaving the valid time delay data.
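The screening rule can be sketched in Python as below. The check works because, if $\tau_{ij}$ is the difference of the arrival times at microphones $i$ and $j$, then $\tau_{ik}+\tau_{kj}=\tau_{ij}$ holds exactly, so a large $\delta$ signals a bad estimate. The sketch assumes antisymmetry ($\tau_{ji}=-\tau_{ij}$) and keeps a delay if it belongs to at least one microphone triple whose error is within the threshold. The text does not say how a failed check is attributed to individual delays, so that rule and the function names are assumptions.

```python
from itertools import combinations


def tau(delays, i, j):
    """Look up tau_ij, assuming antisymmetry tau_ji = -tau_ij."""
    return delays[(i, j)] if (i, j) in delays else -delays[(j, i)]


def screen_delays(delays, threshold):
    """Return the subset of `delays` that passes the consistency check.

    `delays` maps microphone pairs (i, j) with i < j to a delay estimate.
    """
    mics = sorted({m for pair in delays for m in pair})
    valid = set()
    for i, j, k in combinations(mics, 3):
        # delta = |tau_ij - (tau_ik + tau_kj)| for the triple (i, j, k)
        delta = abs(tau(delays, i, j) - (tau(delays, i, k) + tau(delays, k, j)))
        if delta <= threshold:
            valid.update({(i, j), (i, k), (j, k)})
    return {pair: value for pair, value in delays.items() if pair in valid}
```

With four microphones, a single corrupted delay fails both triples that contain it, while every other delay still belongs to one consistent triple and is kept.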

In addition, referring to Fig. 5, in an embodiment, step S120 specifically includes, but is not limited to, the following steps:

Step S510: determine a start state and an end state from the voice signal according to a voice endpoint detection method.

Step S520: determine, according to the start state and the end state, the target data containing sound source information from the voice signal.

It can be understood that the voice endpoint detection method computes the short-time energy and zero-crossing rate of the voice signal to determine its start state and end state. Specifically, if the short-time energy of the voice signal exceeds a threshold, the start state is entered; otherwise, the end state is entered. Useless voice signals such as noise and silence are thereby excluded.
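A minimal Python sketch of this energy-threshold endpoint detection follows. The frame length, the threshold value and the function names are hypothetical choices for illustration. The text does not say how the zero-crossing rate enters the decision, so the sketch only computes it and decides on short-time energy alone, as the rule above describes.

```python
def short_time_energy(frame):
    """Sum of squared samples in one frame."""
    return sum(s * s for s in frame)


def zero_crossing_rate(frame):
    """Fraction of adjacent sample pairs whose signs differ."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return crossings / max(len(frame) - 1, 1)


def detect_endpoints(signal, frame_len, energy_threshold):
    """Return (start, end) sample indices of the first active segment, or None.

    A frame whose short-time energy exceeds the threshold enters the start
    state; the first later frame at or below the threshold ends the segment.
    """
    start = None
    for pos in range(0, len(signal) - frame_len + 1, frame_len):
        active = short_time_energy(signal[pos:pos + frame_len]) > energy_threshold
        if active and start is None:
            start = pos
        elif not active and start is not None:
            return start, pos
    if start is not None:
        # Still active at the end: close the segment at the last full frame.
        return start, (len(signal) // frame_len) * frame_len
    return None
```

The slice `signal[start:end]` would then serve as the target data passed on to time delay estimation.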

In addition, referring to Fig. 6, in an embodiment, step S140 specifically includes, but is not limited to, the following steps:

Step S610: determine an azimuth angle from the sound source azimuth information.

Step S620: obtain the control signal for controlling the LED azimuth display module from the azimuth angle according to a preset angle pairing table.

It can be understood that the sound source azimuth information contains the azimuth angle of the sound source, and the angle pairing table maps azimuth angles to the lighting order of the LED azimuth display module. Lighting the LED azimuth display module by means of the control signal displays the azimuth of the sound source intuitively and effectively.

In practice, the sound source localization system includes a housing and an LED azimuth display module mounted on the housing. The LED azimuth display module includes 16 LEDs arranged evenly around the circumference of the housing, dividing it into 16 sectors, with an angle of 22.5° between adjacent LEDs. One direction pointing outward from the center of the housing is taken as 0° and as the start of the first sector, so the sectors cover, in order, [0°, 22.5°), [22.5°, 45°), [45°, 67.5°), [67.5°, 90°), [90°, 112.5°), [112.5°, 135°), [135°, 157.5°), [157.5°, 180°), [180°, 202.5°), [202.5°, 225°), [225°, 247.5°), [247.5°, 270°), [270°, 292.5°), [292.5°, 315°), [315°, 337.5°) and [337.5°, 360°). The 16 LEDs lie, in order, on the angle bisectors of the 16 sectors. The angle pairing table records the correspondence between LEDs and sectors: based on the sector that contains the azimuth of the sound source, a control signal for controlling the LED azimuth display module is obtained to light the LED corresponding to that sector, so that the azimuth of the sound source is displayed intuitively and effectively.
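Because the 16 sectors are equal and half-open, the angle pairing table can be replaced by integer division, as in the Python sketch below. The one-hot 16-bit control word is a hypothetical encoding chosen for illustration; the text does not specify the format of the control signal.

```python
NUM_LEDS = 16
SECTOR_DEG = 360.0 / NUM_LEDS  # 22.5 degrees per sector


def led_index(azimuth_deg):
    """0-based index k of the sector [k * 22.5, (k + 1) * 22.5) containing the azimuth."""
    # The final modulo guards against rounding that lands exactly on 360.0.
    return int((azimuth_deg % 360.0) // SECTOR_DEG) % NUM_LEDS


def control_word(azimuth_deg):
    """Hypothetical one-hot control word: bit k set lights LED k."""
    return 1 << led_index(azimuth_deg)
```

Angles outside [0°, 360°), including negative ones, wrap around before the sector lookup.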

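In addition, referring to Fig. 7, an embodiment of the present application further provides a sound source localization device 700. A microphone array module and an LED azimuth display module are each electrically connected to the sound source localization device 700, which includes, but is not limited to, an acquisition module 710, a preprocessing module 720, an azimuth calculation module 730, a control module 740 and a sending module 750.

The acquisition module 710 is configured to acquire a voice signal from the microphone array module.

The preprocessing module 720 is configured to preprocess the voice signal to determine target data containing sound source information.

The azimuth calculation module 730 is configured to obtain sound source azimuth information from the target data through azimuth processing.

The control module 740 is configured to obtain, from the sound source azimuth information, a control signal for controlling the LED azimuth display module.

The sending module 750 is configured to send the control signal to the LED azimuth display module so that the LED azimuth display module displays the azimuth of the sound source.

It should be noted that, since the sound source localization device 700 of this embodiment is based on the same inventive concept as the sound source localization method based on a microphone array described above, the corresponding content of the method embodiments also applies to this device embodiment and is not repeated here.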

In addition, referring to Fig. 8, an embodiment of the present application further provides a terminal 800. The terminal 800 may be any type of smart terminal, such as a mobile phone, a tablet computer or a personal computer.

Specifically, the terminal 800 includes a memory 810, a processor 820, and a computer program stored in the memory 810 and executable on the processor 820.

The processor 820 and the memory 810 may be connected by a bus or by other means.

It should be noted that the non-transitory software programs and instructions required to implement the sound source localization method based on a microphone array of the above embodiments are stored in the memory 810 and, when executed by the processor 820, perform the sound source localization method based on a microphone array applied to the sound source localization system in the above embodiments, for example, method steps S110 to S150 in Fig. 1, S210 to S230 in Fig. 2, S310 to S340 in Fig. 3, S410 to S420 in Fig. 4, S510 to S520 in Fig. 5, and S610 to S620 in Fig. 6.

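It should be noted that the memory 810, as a non-transitory computer-readable storage medium, can store non-transitory software programs, non-transitory computer-executable programs and modules, such as the program instructions/modules corresponding to the sound source localization method based on a microphone array in the embodiments of the present application, for example, the acquisition module 710, preprocessing module 720, azimuth calculation module 730, control module 740 and sending module 750 shown in Fig. 7. By running the non-transitory software programs, instructions and modules stored in the memory 810, the processor 820 performs the various functional applications and data processing of the sound source localization device 700, that is, implements the sound source localization method based on a microphone array of the above method embodiments.

The memory 810 may include a program storage area and a data storage area. The program storage area may store an operating system and an application program required by at least one function; the data storage area may store data created through use of the sound source localization device 700, and the like. In addition, the memory 810 may include high-speed random access memory and may also include non-transitory memory, such as at least one magnetic disk storage device, flash memory device or other non-transitory solid-state storage device. In some implementations, the memory 810 optionally includes memory located remotely from the processor 820, and such remote memory may be connected to the terminal 800 via a network. Examples of such networks include, but are not limited to, the Internet, intranets, local area networks, mobile communication networks and combinations thereof.

The one or more modules are stored in the memory 810 and, when executed by the one or more processors 820, perform the sound source localization method based on a microphone array of the above method embodiments, for example, method steps S110 to S150 in Fig. 1, S210 to S230 in Fig. 2, S310 to S340 in Fig. 3, S410 to S420 in Fig. 4, S510 to S520 in Fig. 5, and S610 to S620 in Fig. 6, and implement the functions of modules 710 to 750 in Fig. 7.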

An embodiment of the present application further provides a computer-readable storage medium storing computer-executable instructions. When executed by one or more processors 820, for example by one processor 820 in Fig. 8, the computer-executable instructions cause the one or more processors 820 to perform the sound source localization method based on a microphone array of the above method embodiments, for example, method steps S110 to S150 in Fig. 1, S210 to S230 in Fig. 2, S310 to S340 in Fig. 3, S410 to S420 in Fig. 4, S510 to S520 in Fig. 5, and S610 to S620 in Fig. 6, and to implement the functions of modules 710 to 750 in Fig. 7.

以上所描述的装置实施例仅仅是示意性的,其中所述作为分离部件说明的单元可以是或者也可以不是物理上分开的,即可以位于一个地方,或者也可以分布到多个网络单元上。可以根据实际的需要选择其中的部分或者全部模块来实现本实施例方案的目的。The device embodiments described above are only illustrative, and the units described as separate components may or may not be physically separated, that is, they may be located in one place, or may be distributed to multiple network units. Part or all of the modules can be selected according to actual needs to achieve the purpose of the solution of this embodiment.

From the above description of the implementations, those skilled in the art will clearly understand that each implementation can be realized by software together with a general-purpose hardware platform. Those skilled in the art will also understand that all or part of the processes in the methods of the above embodiments can be completed by a computer program instructing the relevant hardware. The program can be stored in a computer-readable storage medium and, when executed, may include the processes of the method embodiments described above. The storage medium may be a magnetic disk, an optical disc, a read-only memory (ROM), a random access memory (RAM), or the like.

The preferred implementations of the present application have been described in detail above, but the present application is not limited to these implementations. Those skilled in the art can make various equivalent modifications or substitutions without departing from the spirit of the present application, and all such equivalent modifications or substitutions fall within the scope defined by the claims of the present application.

Claims (10)

Translated from Chinese

Applications Claiming Priority (2)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202110656020.4A, CN113466793B (en) | 2021-06-11 | 2021-06-11 | Sound source positioning method and device based on microphone array and storage medium |
| CN202110656020.4 | 2021-06-11 | | |

Publications (1)

| Publication Number | Publication Date |
|---|---|
| WO2022257499A1 (en) | 2022-12-15 |

Family

ID=77869736

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |
|---|---|---|---|
| PCT/CN2022/076825 (Ceased), WO2022257499A1 (en) | Sound source localization method and apparatus based on microphone array, and storage medium | 2021-06-11 | 2022-02-18 |

Country Status (2)

| Country | Link |
|---|---|
| CN (1) | CN113466793B (en) |
| WO (1) | WO2022257499A1 (en) |

Cited By (6)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN116184320A (en)* | 2023-04-27 | 2023-05-30 | 之江实验室 | Unmanned aerial vehicle acoustic positioning method and unmanned aerial vehicle acoustic positioning system |
| CN116299179A (en)* | 2023-05-22 | 2023-06-23 | 北京边锋信息技术有限公司 | Sound source positioning method, sound source positioning device and readable storage medium |
| CN116359843A (en)* | 2023-03-07 | 2023-06-30 | 歌尔科技有限公司 | Sound source positioning method, device, intelligent equipment and medium |
| CN117008056A (en)* | 2023-10-07 | 2023-11-07 | 国网浙江省电力有限公司宁波供电公司 | Method for determining target sound source based on MEMS |
| CN118362977A (en)* | 2024-06-19 | 2024-07-19 | 北京远鉴信息技术有限公司 | Sound source positioning device and method, electronic equipment and storage medium |
| CN118707449A (en)* | 2024-08-27 | 2024-09-27 | 自然资源部第一海洋研究所 | A method, device, equipment and medium for finding direction of marine mammals by ticking sound |

Families Citing this family (6)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN113466793B (en)* | 2021-06-11 | 2023-10-17 | 五邑大学 | Sound source positioning method and device based on microphone array and storage medium |
| CN114442143A (en)* | 2022-01-25 | 2022-05-06 | 武汉新朗光电科技有限公司 | Audio-based life detection and positioning system, method, device and medium |
| CN115015940A (en)* | 2022-04-21 | 2022-09-06 | 歌尔科技有限公司 | Method, device and equipment for determining sound source position and storage medium |
| CN119024270A (en)* | 2024-07-30 | 2024-11-26 | 漳州立达信光电子科技有限公司 | Sound source localization method, device, equipment and storage medium |
| CN119247273A (en)* | 2024-09-14 | 2025-01-03 | 漳州立达信光电子科技有限公司 | A sound source localization method, device, equipment, storage medium and program product |
| CN119199741B (en)* | 2024-11-29 | 2025-05-13 | 科大讯飞股份有限公司 | Sound source positioning method, related device, equipment and storage medium |

Citations (2)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN104991573A (en)* | 2015-06-25 | 2015-10-21 | 北京品创汇通科技有限公司 | Locating and tracking method and apparatus based on sound source array |
| CN113466793A (en)* | 2021-06-11 | 2021-10-01 | 五邑大学 | Sound source positioning method and device based on microphone array and storage medium |

Family Cites Families (8)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN102854494B (en)* | 2012-08-08 | 2015-09-09 | Tcl集团股份有限公司 | A kind of sound localization method and device |
| CN104181506B (en)* | 2014-08-26 | 2016-08-17 | 山东大学 | A kind of based on improving the sound localization method of PHAT weighting time delay estimation and realizing system |
| CN105609112A (en)* | 2016-01-15 | 2016-05-25 | 苏州宾果智能科技有限公司 | Sound source positioning method and apparatus and time delay estimation method and apparatus |
| CN108089152B (en)* | 2016-11-23 | 2020-07-03 | 杭州海康威视数字技术股份有限公司 | Equipment control method, device and system |
| CN107102296B (en)* | 2017-04-27 | 2020-04-14 | 大连理工大学 | A sound source localization system based on distributed microphone array |
| CN107479030B (en)* | 2017-07-14 | 2020-11-17 | 重庆邮电大学 | Frequency division and improved generalized cross-correlation based binaural time delay estimation method |
| US10580429B1 (en)* | 2018-08-22 | 2020-03-03 | Nuance Communications, Inc. | System and method for acoustic speaker localization |
| CN109669159A (en)* | 2019-02-21 | 2019-04-23 | 深圳市友杰智新科技有限公司 | Auditory localization tracking device and method based on microphone partition ring array |

- 2021-06-11: CN, application CN202110656020.4A, patent CN113466793B (en), status: Active

- 2022-02-18: WO, application PCT/CN2022/076825, patent WO2022257499A1 (en), status: Ceased

Patent Citations (2)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN104991573A (en)* | 2015-06-25 | 2015-10-21 | 北京品创汇通科技有限公司 | Locating and tracking method and apparatus based on sound source array |
| CN113466793A (en)* | 2021-06-11 | 2021-10-01 | 五邑大学 | Sound source positioning method and device based on microphone array and storage medium |

Non-Patent Citations (2)

| Title |
|---|
| "Thesis : Harbin Institute of Technology", 15 March 2016, HARBIN INSTITUTE OF TECHNOLOGY, CN, article LI YANG: "The Design And Implementation of Sound Source Localization System Based on Small Size Microphone Array", pages: 1 - 66, XP093012688* |
| "Thesis Hubei University of Technology", 15 August 2020, HUBEI UNIVERSITY OF TECHNOLOGY, article ZHANG QI: "Research on Sound Source Localization Algorithm Based on Circular Microphone Array", pages: 1 - 77, XP093012684* |

Cited By (8)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN116359843A (en)* | 2023-03-07 | 2023-06-30 | 歌尔科技有限公司 | Sound source positioning method, device, intelligent equipment and medium |
| CN116184320A (en)* | 2023-04-27 | 2023-05-30 | 之江实验室 | Unmanned aerial vehicle acoustic positioning method and unmanned aerial vehicle acoustic positioning system |
| CN116299179A (en)* | 2023-05-22 | 2023-06-23 | 北京边锋信息技术有限公司 | Sound source positioning method, sound source positioning device and readable storage medium |
| CN116299179B (en)* | 2023-05-22 | 2023-09-12 | 北京边锋信息技术有限公司 | Sound source positioning method, sound source positioning device and readable storage medium |
| CN117008056A (en)* | 2023-10-07 | 2023-11-07 | 国网浙江省电力有限公司宁波供电公司 | Method for determining target sound source based on MEMS |
| CN117008056B (en)* | 2023-10-07 | 2024-01-12 | 国网浙江省电力有限公司宁波供电公司 | Method for determining target sound source based on MEMS |
| CN118362977A (en)* | 2024-06-19 | 2024-07-19 | 北京远鉴信息技术有限公司 | Sound source positioning device and method, electronic equipment and storage medium |
| CN118707449A (en)* | 2024-08-27 | 2024-09-27 | 自然资源部第一海洋研究所 | A method, device, equipment and medium for finding direction of marine mammals by ticking sound |

Also Published As

| Publication number | Publication date |
|---|---|
| CN113466793A (en) | 2021-10-01 |
| CN113466793B (en) | 2023-10-17 |

Similar Documents

| Publication | Publication Date | Title |
|---|---|---|
| WO2022257499A1 (en) | | Sound source localization method and apparatus based on microphone array, and storage medium |
| US11398235B2 (en) | | Methods, apparatuses, systems, devices, and computer-readable storage media for processing speech signals based on horizontal and pitch angles and distance of a sound source relative to a microphone array |
| CN111239687B (en) | | Sound source positioning method and system based on deep neural network |
| CN111624553B (en) | | Sound source localization method and system, electronic device and storage medium |
| CN111445920B (en) | | Multi-sound source voice signal real-time separation method, device and pickup |
| CN104254819B (en) | | Audio user interaction recognition and contextual refinement |
| WO2020151133A1 (en) | | Sound acquisition system having distributed microphone array, and method |
| WO2022142853A1 (en) | | Method and device for sound source positioning |
| WO2020024816A1 (en) | | Audio signal processing method and apparatus, device, and storage medium |
| CN113687305B (en) | | Sound source azimuth positioning method, device, equipment and computer readable storage medium |
| CN108231085A (en) | | A kind of sound localization method and device |
| WO2020043037A1 (en) | | Voice transcription device, system and method, and electronic device |
| CN107167770A (en) | | A kind of microphone array sound source locating device under the conditions of reverberation |
| Dang et al. | | A feature-based data association method for multiple acoustic source localization in a distributed microphone array |
| Zhang et al. | | Deep learning-based direction-of-arrival estimation for multiple speech sources using a small scale array |
| CN112346012A (en) | | Sound source position determining method and device, readable storage medium and electronic equipment |
| CN115600084A (en) | | Method and device for identifying acoustic non-line-of-sight signal, electronic equipment and storage medium |
| Dang et al. | | An iteratively reweighted steered response power approach to multisource localization using a distributed microphone network |
| CN115128544A (en) | | A sound source localization method, device and medium based on a linear dual array of microphones |
| WO2023056905A1 (en) | | Sound source localization method and apparatus, and device |
| Ðurković et al. | | Low latency localization of multiple sound sources in reverberant environments |
| CN114694667A (en) | | Voice output method, device, computer equipment and storage medium |
| CN117121104A (en) | | Estimating an optimized mask for processing acquired sound data |
| CN117037836B (en) | | Real-time sound source separation method and device based on signal covariance matrix reconstruction |
| Cano et al. | | Selective hearing: A machine listening perspective |

Legal Events

| Date | Code | Title | Description |
|---|---|---|---|
| | 121 | EP: the EPO has been informed by WIPO that EP was designated in this application | Ref document number: 22819106; Country of ref document: EP; Kind code of ref document: A1 |
| | NENP | Non-entry into the national phase | Ref country code: DE |
| | 122 | EP: PCT application non-entry in European phase | Ref document number: 22819106; Country of ref document: EP; Kind code of ref document: A1 |
| | 122 | EP: PCT application non-entry in European phase | Ref document number: 22819106; Country of ref document: EP; Kind code of ref document: A1 |