WO2021164147A1 - Artificial intelligence-based service evaluation method and apparatus, device and storage medium - Google Patents


- Publication number

- WO2021164147A1 (international application PCT/CN2020/093342)

- Authority

- WO

- WIPO (PCT)

- Prior art keywords

- recognized

- recognition

- voice stream

- target

- emotion


- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Ceased


Classifications

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06Q—INFORMATION AND COMMUNICATION TECHNOLOGY [ICT] SPECIALLY ADAPTED FOR ADMINISTRATIVE, COMMERCIAL, FINANCIAL, MANAGERIAL OR SUPERVISORY PURPOSES; SYSTEMS OR METHODS SPECIALLY ADAPTED FOR ADMINISTRATIVE, COMMERCIAL, FINANCIAL, MANAGERIAL OR SUPERVISORY PURPOSES, NOT OTHERWISE PROVIDED FOR

- G06Q30/00—Commerce

- G06Q30/02—Marketing; Price estimation or determination; Fundraising

- G06Q30/0282—Rating or review of business operators or products

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06Q—INFORMATION AND COMMUNICATION TECHNOLOGY [ICT] SPECIALLY ADAPTED FOR ADMINISTRATIVE, COMMERCIAL, FINANCIAL, MANAGERIAL OR SUPERVISORY PURPOSES; SYSTEMS OR METHODS SPECIALLY ADAPTED FOR ADMINISTRATIVE, COMMERCIAL, FINANCIAL, MANAGERIAL OR SUPERVISORY PURPOSES, NOT OTHERWISE PROVIDED FOR

- G06Q30/00—Commerce

- G06Q30/01—Customer relationship services

- G—PHYSICS

- G10—MUSICAL INSTRUMENTS; ACOUSTICS

- G10L—SPEECH ANALYSIS TECHNIQUES OR SPEECH SYNTHESIS; SPEECH RECOGNITION; SPEECH OR VOICE PROCESSING TECHNIQUES; SPEECH OR AUDIO CODING OR DECODING

- G10L25/00—Speech or voice analysis techniques not restricted to a single one of groups G10L15/00 - G10L21/00

- G10L25/48—Speech or voice analysis techniques not restricted to a single one of groups G10L15/00 - G10L21/00 specially adapted for particular use

- G10L25/51—Speech or voice analysis techniques not restricted to a single one of groups G10L15/00 - G10L21/00 specially adapted for particular use for comparison or discrimination

- G10L25/63—Speech or voice analysis techniques not restricted to a single one of groups G10L15/00 - G10L21/00 specially adapted for particular use for comparison or discrimination for estimating an emotional state

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04M—TELEPHONIC COMMUNICATION

- H04M3/00—Automatic or semi-automatic exchanges

- H04M3/42—Systems providing special services or facilities to subscribers

- H04M3/50—Centralised arrangements for answering calls; Centralised arrangements for recording messages for absent or busy subscribers ; Centralised arrangements for recording messages

- H04M3/51—Centralised call answering arrangements requiring operator intervention, e.g. call or contact centers for telemarketing

- H04M3/5175—Call or contact centers supervision arrangements

Definitions

- This application relates to the field of artificial intelligence technology, and in particular to an artificial intelligence-based service evaluation method, apparatus, device and storage medium.

- In order to improve its service capabilities and fully meet the different requirements of customers, an enterprise establishes an agent center, whose agents provide customers with the corresponding services. This improves service efficiency and spares customers the inconvenience of going to a counter to handle business. Since the agent is an important link between the customer and the company, the agent's service quality greatly affects the customer's satisfaction with the company.

- The inventor realizes that the current service evaluation of agents in an enterprise is mainly based on customers manually scoring the agents' services. Whether a customer gives a score at all, and the specific score given, are both determined subjectively by the customer, so the objectivity and accuracy of the service evaluation process are low.

- The embodiments of the present application provide an artificial intelligence-based service evaluation method, apparatus, device, and storage medium to solve the problem of low objectivity and accuracy in the current service evaluation process.

- An artificial intelligence-based service evaluation method, including:

- Fusion processing is performed on the text analysis result and the emotion analysis result corresponding to the voice stream to be recognized, and the service quality score corresponding to the target identity information is obtained.

- An artificial intelligence-based service evaluation apparatus, including:

- A to-be-recognized voice stream acquisition module, configured to acquire the to-be-recognized voice stream collected in real time during the service process;

- A target identity information acquisition module, configured to perform identity recognition on the voice stream to be recognized and determine the target identity information corresponding to the voice stream to be recognized;

- A text analysis result obtaining module, configured to perform text analysis on the voice stream to be recognized and obtain the text analysis result corresponding to the voice stream to be recognized;

- An emotion analysis result obtaining module, configured to perform emotion analysis on the voice stream to be recognized and obtain the emotion analysis result corresponding to the voice stream to be recognized;

- A service quality score obtaining module, configured to perform fusion processing on the text analysis result and the emotion analysis result corresponding to the voice stream to be recognized, and obtain the service quality score corresponding to the target identity information.

- A computer device, including a memory, a processor, and computer-readable instructions that are stored in the memory and executable on the processor, where the processor implements the following steps when executing the computer-readable instructions:

- One or more readable storage media storing computer-readable instructions.

- The computer-readable storage media store computer-readable instructions.

- When the computer-readable instructions are executed by one or more processors, the one or more processors perform the following steps:

- Fusion processing is performed on the text analysis result and the emotion analysis result corresponding to the voice stream to be recognized, and the service quality score corresponding to the target identity information is obtained.

- Identity recognition is performed on the voice stream to be recognized to determine its corresponding target identity information, so that the speaker of a voice stream whose identity is unknown can be identified.

- The text analysis result and the emotion analysis result are obtained separately and then fused to obtain the service quality score corresponding to the target identity information. Using artificial intelligence as the technical means, the service quality of the speaker in the voice stream to be recognized is analyzed objectively, which ensures the objectivity and accuracy of the resulting analysis and avoids the lack of objectivity of subjective human evaluation.

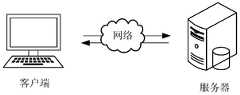

- FIG. 1 is a schematic diagram of an application environment of the artificial intelligence-based service evaluation method in an embodiment of the present application.

- FIG. 2 is a flowchart of the artificial intelligence-based service evaluation method in an embodiment of the present application.

- FIG. 3 is another flowchart of the artificial intelligence-based service evaluation method in an embodiment of the present application.

- FIG. 4 is another flowchart of the artificial intelligence-based service evaluation method in an embodiment of the present application.

- FIG. 5 is another flowchart of the artificial intelligence-based service evaluation method in an embodiment of the present application.

- FIG. 6 is another flowchart of the artificial intelligence-based service evaluation method in an embodiment of the present application.

- FIG. 7 is another flowchart of the artificial intelligence-based service evaluation method in an embodiment of the present application.

- FIG. 8 is a schematic diagram of an artificial intelligence-based service evaluation apparatus in an embodiment of the present application.

- FIG. 9 is a schematic diagram of a computer device in an embodiment of the present application.

- The artificial intelligence-based service evaluation method can be applied in the application environment shown in FIG. 1.

- The artificial intelligence-based service evaluation method is applied in an artificial intelligence-based service evaluation system.

- The artificial intelligence-based service evaluation system includes a client and a server as shown in FIG. 1, which communicate through a network. The system is used to objectively analyze the recordings collected while agents provide services to customers, ensuring the objectivity and accuracy of service evaluation.

- The client, also called the user terminal, refers to a program that corresponds to the server and provides local services to the user.

- The client can be installed on, but is not limited to, personal computers, notebook computers, smartphones, tablet computers, and portable wearable devices.

- The server can be implemented as an independent server or as a server cluster composed of multiple servers.

- An artificial intelligence-based service evaluation method is provided.

- The artificial intelligence-based service evaluation method is applied to the server shown in FIG. 1 and includes the following steps:

- S201: Obtain a voice stream to be recognized that is collected in real time during the service process.

- The voice stream to be recognized refers to the voice stream used for service evaluation.

- The voice stream to be recognized may be a voice stream recorded in real time while an agent provides a service to a customer; it is the object of information processing for service evaluation.

- The agent provides services to customers through the telemarketing system.

- The recording module of the telemarketing system collects, in real time, the voice stream to be recognized while the agent provides services to the customer, and sends it to the server.

- The server of the service evaluation system can receive the voice stream to be recognized recorded in real time by the recording module, and can also obtain from the database a voice stream to be recognized that needs service evaluation, so that the corresponding voice stream can be collected for every service an agent provides and then evaluated.

- Because the server obtains the voice stream recorded in real time while the agent serves the customer and performs the subsequent service evaluation on it, the evaluation no longer depends on whether the customer chooses to give a score. This ensures the completeness of the objects used for service evaluation and the objectivity and accuracy of the evaluation process.

- S202: Perform identity recognition on the voice stream to be recognized, and determine the target identity information corresponding to the voice stream to be recognized.

- Identity recognition on the voice stream to be recognized is used to identify the speaker of that voice stream.

- The target identity information is the identity information of the speaker identified from the voice stream to be recognized.

- Performing identity recognition on the voice stream to be recognized and determining the corresponding target identity information may specifically include the following steps: performing voiceprint feature extraction on the voice stream to be recognized to obtain the voiceprint feature to be recognized; calculating the similarity between the voiceprint feature to be recognized and the standard voiceprint feature corresponding to each agent in the database to obtain voiceprint similarities; and determining the identity information corresponding to the standard voiceprint feature with the largest voiceprint similarity as the target identity information.

- The voiceprint feature to be recognized is the voiceprint feature obtained by using a pre-trained voiceprint extraction model to extract the voiceprint from the voice stream to be recognized.

- The standard voiceprint feature is the voiceprint feature obtained by using the pre-trained voiceprint extraction model to extract the voiceprint from an agent's standard voice stream.

- The standard voice stream is a voice stream that carries the identity information of the agent, so that the extracted standard voiceprint feature is associated with the agent's identity information.

- The voiceprint extraction model can be, but is not limited to, a Gaussian mixture model.

- After acquiring the voice stream to be recognized in real time during the service process, the server performs identity recognition on it to determine the corresponding target identity information, so that the target identity information of each voice stream is identified by a machine. This ensures consistency between the agent corresponding to the voice stream and the target identity information, and enables identity recognition of agents whose identities are unknown.

- S203: Perform text analysis on the voice stream to be recognized, and obtain the text analysis result corresponding to the voice stream to be recognized.

- The text analysis result is a result reflecting service quality, obtained by analyzing the text content corresponding to the voice stream to be recognized.

- The server may pre-train a text analysis model for analyzing the speaker's emotion expressed in the text content.

- The text analysis model may be a model obtained by training a neural network model on training text data carrying different emotion labels.

- The text analysis model can be used to perform sentiment analysis on the text information to be recognized that is extracted from the voice stream to be recognized, to obtain the text analysis result. This processing is more efficient and the analysis result is more objective.

- S204: Perform emotion analysis on the voice stream to be recognized, and obtain the emotion analysis result corresponding to the voice stream to be recognized.

- The emotion analysis result is the result of emotion analysis performed on the voice stream to be recognized.

- A voice emotion recognition model is pre-stored in the service evaluation system; it is a model pre-trained for emotion recognition of voice streams.

- The server uses the pre-trained voice emotion recognition model to recognize the emotion of the voice stream to be recognized that the recording module of the telemarketing system collects in real time.

- Because this process is carried out by a machine, the objectivity and accuracy of the recognized result are ensured.

- S205: Perform fusion processing on the text analysis result and the emotion analysis result corresponding to the voice stream to be recognized, and obtain the service quality score corresponding to the target identity information.

- The service quality score is a service score determined by analyzing the voice stream to be recognized.

- Fusing the text analysis result and the emotion analysis result corresponding to the voice stream to be recognized means combining the two results to obtain a service quality score that objectively reflects the service quality of the agent corresponding to the voice stream.

- The text analysis result and the emotion analysis result can each take at least two values, such as positive and negative reviews, or ratings from 1 star to 5 stars.

- The service evaluation system pre-stores a score comparison table that maps each combination of text analysis result and emotion analysis result to a score. After obtaining the text analysis result and emotion analysis result for each voice stream to be recognized, the server queries the score comparison table with them to determine the corresponding service quality score. The resulting score therefore takes both results into account, which helps ensure the objectivity and accuracy of the service quality score.
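The score comparison table lookup described above can be sketched in Python as follows. It is a minimal illustration under stated assumptions: the positive/negative labels follow the example in this section, but the table contents, the score values, and the helper names `service_quality_score` and `record_score` are hypothetical, since the application does not publish its table.

```python
from collections import defaultdict

# Hypothetical score comparison table: (text result, emotion result) -> score.
# The score values are illustrative assumptions, not values from the application.
SCORE_COMPARISON_TABLE = {
    ("positive", "positive"): 100,
    ("positive", "negative"): 70,
    ("negative", "positive"): 60,
    ("negative", "negative"): 30,
}


def service_quality_score(text_result, emotion_result, table=SCORE_COMPARISON_TABLE):
    """Fuse the two analysis results by looking up their combination in the table."""
    key = (text_result, emotion_result)
    if key not in table:
        raise KeyError(f"no score defined for combination {key}")
    return table[key]


def record_score(scores_by_agent, agent_id, text_result, emotion_result):
    """Attach the fused score to the target identity information (agent_id)."""
    score = service_quality_score(text_result, emotion_result)
    scores_by_agent[agent_id].append(score)
    return score


scores = defaultdict(list)
record_score(scores, "agent_001", "positive", "negative")
record_score(scores, "agent_001", "positive", "positive")
print(dict(scores))  # {'agent_001': [70, 100]}
```

A lookup table keeps the fusion rule auditable: changing how the two results are weighted only means editing table rows, not retraining a model.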


- During the service process, short voice streams to be recognized such as "um" or "okay" may be collected from the agent. Identity recognition and emotion recognition on such short voice streams have low accuracy. Therefore, after step S201, that is, after obtaining the voice stream to be recognized that is collected in real time during the service process, the artificial intelligence-based service evaluation method further includes: obtaining the voice duration corresponding to the voice stream to be recognized, and, if the voice duration is greater than a duration threshold, performing identity recognition on the voice stream to be recognized and determining the corresponding target identity information.

- The voice duration corresponding to the voice stream to be recognized refers to its speaking duration.

- The voice duration is the speaking duration of the voice stream recorded in real time while the agent provides services to the customer, that is, the duration of the agent's service to the customer.

- The duration threshold is a preset threshold for judging whether the voice duration is long enough for service evaluation.

- After acquiring the voice stream to be recognized in real time during the service process, the server determines the voice duration of each voice stream and compares it with the duration threshold preset by the system. If the voice duration is greater than the duration threshold, the server performs identity recognition on the voice stream, determines its target identity information, and carries out the subsequent steps, that is, steps S202-S205. If the voice duration is not greater than the duration threshold, the server does not perform identity recognition on the voice stream or the subsequent steps, that is, steps S202-S205 are not executed.

- Identity recognition and emotion recognition are therefore performed only on voice streams whose voice duration is greater than the duration threshold. This ensures the accuracy of subsequent recognition and prevents inaccurate results from short voice streams from affecting the service evaluation. Because the server skips recognition for voice streams whose duration is not greater than the threshold, it also effectively reduces the amount of data to be recognized and improves processing efficiency.
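The duration gate can be sketched as below. This assumes the voice duration is simply the recording length (sample count divided by sample rate); a real system might instead measure only voiced segments. The 3-second threshold and the function names are hypothetical, since the application gives no value.

```python
# Hypothetical threshold: the application does not state a value.
DURATION_THRESHOLD_S = 3.0


def voice_duration_seconds(num_samples: int, sample_rate: int) -> float:
    """Duration of a PCM voice stream, assuming it equals the full recording length."""
    if sample_rate <= 0:
        raise ValueError("sample_rate must be positive")
    return num_samples / sample_rate


def should_evaluate(num_samples: int, sample_rate: int,
                    threshold_s: float = DURATION_THRESHOLD_S) -> bool:
    """Run steps S202-S205 only when the duration is strictly greater than the threshold."""
    return voice_duration_seconds(num_samples, sample_rate) > threshold_s


# A 1-second "um" at 16 kHz is skipped; a 12-second utterance is evaluated.
print(should_evaluate(16_000, 16_000))       # False
print(should_evaluate(12 * 16_000, 16_000))  # True
```

The comparison is strict (`>`), matching the text: a stream exactly at the threshold is not evaluated.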

- Step S202, that is, performing identity recognition on the voice stream to be recognized and determining the corresponding target identity information, specifically includes the following steps:

- S301: Perform feature extraction on the voice stream to be recognized, and obtain the MFCC feature and the pitch feature corresponding to the voice stream to be recognized.

- MFCC: Mel-scale Frequency Cepstral Coefficients.

- The Mel scale describes the non-linear frequency perception of the human ear; its relationship with frequency $f$ in hertz is commonly approximated as $\mathrm{Mel}(f) = 2595 \log_{10}(1 + f/700)$.

- The server performs pre-emphasis, framing, windowing, fast Fourier transform, triangular band-pass filtering, logarithm, and discrete cosine transform processing on the voice stream to be recognized collected in real time during the service process, to obtain the MFCC feature.
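The MFCC processing chain listed above can be sketched with NumPy alone. This is a minimal illustration, not the application's implementation: the 25 ms frame / 10 ms hop at 16 kHz, the Hamming window, 26 filters, and 13 coefficients are common defaults chosen here as assumptions. The 32-dimensional MFCC feature mentioned in the embodiment would need `n_ceps=32` with at least as many filters (for example `n_filters=40`).

```python
import numpy as np


def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mfcc(signal, sample_rate=16000, frame_len=400, hop=160, n_fft=512,
         n_filters=26, n_ceps=13, pre_emph=0.97):
    """Return an (n_frames, n_ceps) MFCC matrix for a mono signal."""
    if n_ceps > n_filters:
        raise ValueError("n_ceps cannot exceed n_filters")
    x = np.asarray(signal, dtype=float)
    # 1. Pre-emphasis boosts high frequencies: y[n] = x[n] - a * x[n-1].
    x = np.append(x[0], x[1:] - pre_emph * x[:-1])
    # 2. Framing (zero-pad short input so at least one frame exists).
    if len(x) < frame_len:
        x = np.pad(x, (0, frame_len - len(x)))
    n_frames = 1 + (len(x) - frame_len) // hop
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    # 3. Windowing with a Hamming window.
    frames = x[idx] * np.hamming(frame_len)
    # 4. FFT -> power spectrum, shape (n_frames, n_fft // 2 + 1).
    power = np.abs(np.fft.rfft(frames, n=n_fft)) ** 2 / n_fft
    # 5. Triangular band-pass filters spaced evenly on the Mel scale.
    mel_pts = np.linspace(hz_to_mel(0.0), hz_to_mel(sample_rate / 2.0), n_filters + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_pts) / sample_rate).astype(int)
    fbank = np.zeros((n_filters, n_fft // 2 + 1))
    for m in range(1, n_filters + 1):
        left, center, right = bins[m - 1], bins[m], bins[m + 1]
        for k in range(left, center):
            fbank[m - 1, k] = (k - left) / max(center - left, 1)
        for k in range(center, right):
            fbank[m - 1, k] = (right - k) / max(right - center, 1)
    # 6. Logarithm of the filterbank energies (floored to avoid log(0)).
    log_e = np.log(np.maximum(power @ fbank.T, 1e-10))
    # 7. Orthonormal DCT-II over the filter axis; keep the first n_ceps terms.
    n = np.arange(n_filters)
    dct = np.cos(np.pi / n_filters * (n[None, :] + 0.5) * np.arange(n_ceps)[:, None])
    dct *= np.sqrt(2.0 / n_filters)
    dct[0] /= np.sqrt(2.0)
    return log_e @ dct.T


t = np.arange(16000) / 16000.0              # 1 s at 16 kHz
features = mfcc(np.sin(2 * np.pi * 440.0 * t))
print(features.shape)  # (98, 13)
```

Each numbered comment corresponds to one stage in the list above; libraries such as librosa provide tuned equivalents for production use.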

- The pitch feature is a feature related to the fundamental frequency (F0) of the sound; it reflects pitch information, that is, the tone.

- F0: fundamental frequency.

- Algorithms for calculating F0 are called pitch detection algorithms (PDA).

- PDA: pitch detection algorithm.

- The system pre-stores a pitch detection algorithm, which estimates the pitch or fundamental frequency of a periodic signal and is widely used for speech and music signals.

- Such algorithms can be divided into two categories: time-domain methods and frequency-domain methods.

- After acquiring the voice stream to be recognized in real time during the service process, the server uses the pre-stored pitch detection algorithm to extract the pitch feature from it.
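As one example of a time-domain pitch detection algorithm, the sketch below estimates F0 for a single frame from the peak of its autocorrelation within a plausible speech pitch range. The application does not name its algorithm, so autocorrelation, the 60-400 Hz search range, and the function name are all assumptions. It returns one F0 value per frame; how the embodiment builds a 32-dimensional pitch feature is not described.

```python
import numpy as np


def estimate_f0_autocorr(frame, sample_rate=16000, f0_min=60.0, f0_max=400.0):
    """Estimate the fundamental frequency (Hz) of one frame; 0.0 means silent."""
    frame = np.asarray(frame, dtype=float)
    frame = frame - frame.mean()
    if not np.any(frame):
        return 0.0  # silent frame: treat as unvoiced
    # Autocorrelation at non-negative lags (index 0 is lag 0).
    ac = np.correlate(frame, frame, mode="full")[len(frame) - 1:]
    lag_min = int(sample_rate / f0_max)
    lag_max = min(int(sample_rate / f0_min), len(frame) - 1)
    if lag_max < lag_min:
        raise ValueError("frame too short for the requested pitch range")
    # The strongest self-similarity in the search range marks one pitch period.
    best_lag = lag_min + int(np.argmax(ac[lag_min:lag_max + 1]))
    return sample_rate / best_lag


sr = 16000
tone = np.sin(2 * np.pi * 200.0 * np.arange(960) / sr)  # 60 ms of a 200 Hz tone
print(estimate_f0_autocorr(tone, sr))  # 200.0
```

Frequency-domain alternatives (for example cepstral peak picking) follow the same idea of locating the period, but work on the spectrum instead.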

- MFCC features better reflect the speaker's tone and prosody information, making the voices of speakers of the same gender more distinguishable, which helps improve the accuracy of identity recognition based on the features extracted from the voice stream to be recognized.

- S302: Splice the MFCC feature and the pitch feature to obtain a target feature vector.

- Splicing the MFCC feature and the pitch feature refers to concatenating all dimensions of the MFCC feature and the pitch feature to form the target feature vector.

- The target feature vector is the feature vector formed after the MFCC feature and the pitch feature are spliced.

- For example, the server performs feature extraction on the voice stream to be recognized to obtain a 32-dimensional MFCC feature and a 32-dimensional pitch feature, and then splices them to form a 64-dimensional target feature vector.

- The spliced target feature vector contains the information of both the MFCC feature and the pitch feature, so it carries more information, which helps improve the accuracy of subsequent identity recognition.
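Splicing is a plain concatenation along the feature axis. The sketch below reproduces the 32 + 32 = 64 example from the text with placeholder arrays; the function name is hypothetical, and applying it per frame (shape `(T, 32)`) is an assumption about how the features are laid out.

```python
import numpy as np


def splice_features(mfcc_feat, pitch_feat):
    """Concatenate MFCC and pitch features along the last (feature) axis."""
    mfcc_feat = np.asarray(mfcc_feat, dtype=float)
    pitch_feat = np.asarray(pitch_feat, dtype=float)
    if mfcc_feat.shape[:-1] != pitch_feat.shape[:-1]:
        raise ValueError("MFCC and pitch features must have the same leading shape")
    return np.concatenate([mfcc_feat, pitch_feat], axis=-1)


# Utterance-level example matching the 32 + 32 = 64 dimensions in the text.
target = splice_features(np.zeros(32), np.ones(32))
print(target.shape)  # (64,)

# The same call also works frame by frame: (T, 32) + (T, 32) -> (T, 64).
frames = splice_features(np.zeros((100, 32)), np.ones((100, 32)))
print(frames.shape)  # (100, 64)
```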

- S303: Use an identity feature recognition model based on a time-delay neural network to process the target feature vector and obtain identity feature information.

- The identity feature recognition model is provided with a statistics pooling layer for calculating the mean and standard deviation of the features output by the hidden layer.

- The identity feature recognition model based on the time-delay neural network is obtained by pre-training a time-delay neural network on training samples.

- The time-delay neural network (TDNN) can adapt to dynamic time-domain changes in the speech signal and has few structural parameters, and speech recognition with it does not require phonetic labels to be aligned with the audio on the timeline in advance.

- The training samples include training speech and the speaker labels corresponding to the training speech.

- A traditional TDNN includes an input layer, a first hidden layer, a second hidden layer, and an output layer.

- The input layer, the first hidden layer, the second hidden layer, and the output layer are constructed in advance according to the requirements of the service evaluation system, and a statistics pooling layer (Statistic Pooling) is set between the second hidden layer and the output layer to calculate the mean and standard deviation of the features it receives from the second hidden layer.

- The mean vector μ and the standard deviation vector σ are combined by the "same or" operator.

- The identity feature recognition model, obtained by training a time-delay neural network with a statistics pooling layer set between the second hidden layer and the output layer, is used to process the target feature vector to obtain the identity feature information. The statistics pooling layer computes the mean vector μ and the standard deviation vector σ from the target feature vector after it has passed through the first and second hidden layers, and the output layer then processes μ and σ, which improves the accuracy of the extracted identity feature information.
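The forward pass of such a model can be sketched in NumPy: two time-delay layers that each splice neighbouring frames before an affine transform, a statistics pooling layer, and an output layer. This is an illustration under stated assumptions, not the trained model: the weights are random and untrained, the frame contexts `(-1, 0, 1)` and `(-2, 0, 2)` and layer sizes are hypothetical, and μ and σ are combined by concatenation (as in common x-vector-style speaker embeddings) because the operator in the text is not clearly recoverable.

```python
import numpy as np

rng = np.random.default_rng(0)


def tdnn_layer(x, weight, bias, context):
    """One time-delay layer: splice frames at the given offsets, then affine + ReLU.

    x: (T, D_in); weight: (len(context) * D_in, D_out). Frames without full
    context at either edge are dropped, so T - (left + right) rows remain.
    """
    left, right = -min(context), max(context)
    if x.shape[0] <= left + right:
        raise ValueError("too few frames for this context")
    spliced = np.stack([
        np.concatenate([x[t + c] for c in context])
        for t in range(left, x.shape[0] - right)
    ])
    return np.maximum(spliced @ weight + bias, 0.0)


def statistics_pooling(h):
    """Mean vector and standard deviation vector over time, concatenated: (T, D) -> (2D,)."""
    return np.concatenate([h.mean(axis=0), h.std(axis=0)])


def identity_embedding(features, params):
    h1 = tdnn_layer(features, params["w1"], params["b1"], context=(-1, 0, 1))
    h2 = tdnn_layer(h1, params["w2"], params["b2"], context=(-2, 0, 2))
    pooled = statistics_pooling(h2)  # [mu, sigma]
    return pooled @ params["w_out"] + params["b_out"]


# Randomly initialised (untrained) parameters, for shape illustration only.
D_IN, D_HID, D_EMB = 64, 128, 64
params = {
    "w1": rng.standard_normal((3 * D_IN, D_HID)) * 0.05, "b1": np.zeros(D_HID),
    "w2": rng.standard_normal((3 * D_HID, D_HID)) * 0.05, "b2": np.zeros(D_HID),
    "w_out": rng.standard_normal((2 * D_HID, D_EMB)) * 0.05, "b_out": np.zeros(D_EMB),
}
target_vectors = rng.standard_normal((200, D_IN))  # 200 frames of 64-dim target features
print(identity_embedding(target_vectors, params).shape)  # (64,)
```

Pooling over time is what turns a variable-length sequence of frames into one fixed-size vector, so utterances of any length map to comparable identity features.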

- S304: Calculate the similarity between the identity feature information and the standard feature information corresponding to each agent in the database to obtain feature similarities, and determine the target identity information corresponding to the voice stream to be recognized based on the feature similarities.

- The database stores data used or generated in the service evaluation process; it is connected to the server so that the server can access it.

- The standard feature information is feature information stored in the database in advance in association with each agent's identity tag.

- The standard voice stream of each agent can be input in advance into the time-delay neural network-based identity feature recognition model of step S303 to obtain the corresponding standard feature information, which is stored in association with the corresponding identity tag for subsequent identity recognition.

- The feature similarity is the value obtained by calculating the similarity between the identity feature information and the standard feature information with a preset similarity algorithm.

- The similarity algorithm includes, but is not limited to, the cosine similarity algorithm.

- Determining the target identity information corresponding to the voice stream to be recognized based on the feature similarity means: calculating the similarity between the identity feature information and at least one piece of standard feature information in the database to obtain at least one feature similarity, and determining the identity tag of the standard feature information with the largest feature similarity as the target identity information corresponding to the voice stream to be recognized.
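Using cosine similarity, the matching step reduces to an argmax over the enrolled agents. The sketch below assumes the database has already been loaded into a dictionary from identity tag to standard feature vector; the tags, vectors, and function names are hypothetical.

```python
import numpy as np


def cosine_similarity(a, b):
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return 0.0 if denom == 0 else float(a @ b / denom)


def identify_agent(identity_feature, standard_features):
    """Return (identity_tag, similarity) of the best-matching enrolled agent.

    standard_features maps each agent's identity tag to its standard feature
    information, enrolled in advance from the agent's standard voice stream.
    """
    if not standard_features:
        raise ValueError("no standard feature information enrolled")
    similarities = {
        tag: cosine_similarity(identity_feature, ref)
        for tag, ref in standard_features.items()
    }
    best_tag = max(similarities, key=similarities.get)
    return best_tag, similarities[best_tag]


enrolled = {"agent_001": [1.0, 0.0, 0.0], "agent_002": [0.0, 1.0, 0.0]}
print(identify_agent([0.9, 0.1, 0.0], enrolled)[0])  # agent_001
```

As written, the function always returns the closest enrolled agent. A deployment might also reject matches below a minimum similarity, which the text does not mention and would be an added assumption.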

- The MFCC feature and the pitch feature extracted from the voice stream to be recognized are spliced so that the resulting target feature vector carries more information, which helps ensure the accuracy of subsequent identity recognition.

- The time-delay neural network-based identity feature recognition model processes the target feature vector. The model is provided with a statistics pooling layer that calculates the mean and standard deviation of the features output by the hidden layer, so that processing fully considers the context information of the target feature vector, and the output layer then processes the pooled mean and standard deviation. This helps ensure both the processing efficiency and the accuracy of the recognition result.

- The similarity between the identity feature information and the standard feature information is calculated, and the target identity information corresponding to the voice stream to be recognized is determined according to the feature similarity, which ensures that this determination is objective.

- Step S203, that is, performing text analysis on the voice stream to be recognized and obtaining the corresponding text analysis result, specifically includes the following steps:

- S401: Use a speech recognition model to perform text recognition on the voice stream to be recognized, and obtain the text information to be recognized.

- The speech recognition model is a pre-trained model for recognizing text content in speech.

- The speech recognition model may be a static speech decoding network for recognizing text content in speech, obtained by model training on training speech data and training text data. Because such a network decodes quickly, using it to perform text recognition on the voice stream to be recognized yields the corresponding text information to be recognized quickly.

- The text information to be recognized is the text content recognized from the voice stream to be recognized.

- S402: Perform sensitive word analysis on the text information to be recognized, and obtain a sensitive word analysis result.

- The sensitive word analysis result reflects whether the text information to be recognized contains sensitive words and how any sensitive words present affect the service evaluation.

- Sensitive word analysis of the text information to be recognized includes the following steps: querying a sensitive word database with the text information to be recognized, obtaining the number of sensitive words in it, and determining the sensitive word analysis result according to that number.

- The sensitive word database pre-stores sensitive words that agents may use during the service process, so that sensitive word analysis can be performed on the text information to be recognized during service evaluation to obtain the sensitive word analysis result.

- The sensitive word analysis result can be determined by comparing the number of sensitive words with a first number threshold preset by the system.

- The first number threshold is a preset value used to evaluate the outcome of sensitive word analysis.

- Alternatively, a sensitive word score table can be queried with the number of sensitive words to determine the sensitive word analysis result.

- The sensitive word score table is a pre-stored information table that maps the number of sensitive words to the corresponding score or scoring result.
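The counting and score-table steps can be sketched as follows. The sensitive word list, the score table rows, and the function names are hypothetical placeholders, since the application does not publish its sensitive word database. Matching is naive case-insensitive substring counting, which suits unsegmented text but can over-count inside longer words in English.

```python
# Hypothetical sensitive word database; the real contents are not published.
SENSITIVE_WORDS = {"whatever", "not my problem", "calm down"}

# Hypothetical sensitive word score table: (maximum count, result) rows in order.
SCORE_TABLE = [(0, "good"), (2, "fair"), (float("inf"), "poor")]


def count_sensitive_words(text, lexicon=SENSITIVE_WORDS):
    """Count case-insensitive substring occurrences of every sensitive word."""
    lowered = text.lower()
    return sum(lowered.count(word) for word in lexicon)


def sensitive_word_result(text, lexicon=SENSITIVE_WORDS, table=SCORE_TABLE):
    """Return (count, result) by looking the count up in the score table."""
    n = count_sensitive_words(text, lexicon)
    for max_count, result in table:
        if n <= max_count:
            return n, result
    raise ValueError("score table must cover every count")


print(sensitive_word_result("Thank you for calling, how can I help?"))  # (0, 'good')
print(sensitive_word_result("Calm down, whatever you say."))            # (2, 'fair')
```

The single-threshold variant described above is the special case of a two-row table: counts up to the first number threshold map to one result, and everything above it to another.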

- The tone analysis result reflects the analysis of the speaker's tone in the text information to be recognized.

- The tone analysis of the text information to be recognized includes the following steps: use a tone analyzer to analyze the text information to be recognized to obtain the recognition tone, then query the service evaluation information table based on the recognition tone to obtain the tone analysis result.

- The tone analyzer is an analyzer that analyzes language and text to determine the tone they convey.

- The tone analyzer can be IBM's Watson Tone Analyzer.

- The recognition tone is the speaker's tone, recognized from the text information to be recognized by the tone analyzer.

- The service evaluation information table stores in advance the correspondence between different scoring standards and the corresponding emotion recognition results.

- The scoring standard contains multiple tone-related judgment conditions, such as a flat tone, a lack of enthusiasm, a stiff tone, a tone showing indifference and disdain, or a tone showing dissatisfaction with the customer, for example "Didn't I tell you about this just now?" or "Do you even need me to explain this?". After the server uses the tone analyzer to analyze the text information to be recognized and determines the recognition tone, it queries the service evaluation information table based on the recognition tone to obtain the corresponding tone analysis result.

- The text analysis comparison table stored in the system can be queried based on the sensitive word analysis result and the tone analysis result to obtain the text analysis result corresponding to the voice stream to be recognized collected in real time during the service process.

- The text analysis comparison table is a data table preset by the system that maps each combination of sensitive word analysis result and tone analysis result to a text analysis result, so that once both results are determined, the corresponding text analysis result can be found quickly by table lookup.
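
Both lookups above (recognition tone to tone analysis result, then the pair of sensitive word and tone results to a text analysis result) reduce to dictionary lookups. The sketch below is hypothetical throughout: the tone labels, result labels and table contents are placeholders rather than the contents of any real service evaluation table, and the tone labels are not claimed to match the output of IBM's Watson Tone Analyzer.

```python
from typing import Dict, Tuple

# Hypothetical service evaluation information table: recognition tone -> tone analysis result.
TONE_TABLE: Dict[str, str] = {
    "neutral": "qualified",
    "friendly": "qualified",
    "impatient": "unqualified",
    "disdainful": "unqualified",
}

# Hypothetical text analysis comparison table:
# (sensitive word analysis result, tone analysis result) -> text analysis result.
TEXT_ANALYSIS_TABLE: Dict[Tuple[str, str], str] = {
    ("pass", "qualified"): "good",
    ("pass", "unqualified"): "average",
    ("fail", "qualified"): "average",
    ("fail", "unqualified"): "poor",
}


def tone_analysis(recognition_tone: str) -> str:
    """Query the tone table; unknown tones default to "qualified" (an assumption)."""
    return TONE_TABLE.get(recognition_tone, "qualified")


def text_analysis(sensitive_word_result: str, tone_result: str) -> str:
    """Query the comparison table with the pair of upstream results."""
    return TEXT_ANALYSIS_TABLE[(sensitive_word_result, tone_result)]
```

Keying the comparison table by the pair of results is what makes the final step a single constant-time lookup, as the text describes.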

- Alternatively, the sensitive word analysis result and the tone analysis result can be normalized to obtain a sensitive word normalization result and a tone normalization result, converting dimensional quantities into dimensionless ones. A text analysis weighting algorithm is then applied to the two normalization results to obtain the text analysis result corresponding to the voice stream to be recognized collected in real time during the service process, so that the text analysis result is expressed as a quantitative feature.

- The sensitive word analysis weight w1 and the tone analysis weight w2 are weights preset by the service evaluation system.
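
The normalize-then-weight variant can be illustrated as below. The source does not give the normalization method or the exact form of the text analysis weighting algorithm; min-max normalization over a known range and a weighted sum w1 * s + w2 * t are assumptions chosen for illustration, as are the example ranges.

```python
from typing import Tuple


def min_max_normalize(value: float, value_range: Tuple[float, float]) -> float:
    """Map a raw score onto [0, 1], clipping values outside the range."""
    lo, hi = value_range
    if hi <= lo:
        raise ValueError("range upper bound must exceed lower bound")
    clipped = min(max(value, lo), hi)
    return (clipped - lo) / (hi - lo)


def text_analysis_score(
    sensitive_score: float,
    tone_score: float,
    w1: float,
    w2: float,
    sensitive_range: Tuple[float, float],
    tone_range: Tuple[float, float],
) -> float:
    """Weighted sum of the two dimensionless results (assumed form of the weighting)."""
    s = min_max_normalize(sensitive_score, sensitive_range)
    t = min_max_normalize(tone_score, tone_range)
    return w1 * s + w2 * t
```

For instance, a sensitive word score of 80 on a 0-100 scale and a tone score of 3 on a 0-5 scale, weighted 0.6 and 0.4, give 0.6 * 0.8 + 0.4 * 0.6 = 0.72.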

- A speech recognition model is used to perform text recognition on the voice stream to be recognized, converting speech information into text information and providing the basis for subsequent sensitive word and tone analysis. Sensitive word analysis and tone analysis are then performed on the text information to be recognized, and the text analysis result corresponding to the voice stream to be recognized is determined from the resulting sensitive word analysis result and tone analysis result. The text analysis result thus evaluates service quality along the two dimensions of sensitive words and speaker tone in the text information to be recognized, which ensures its objectivity and accuracy.

- Step S403, that is, using the voice emotion recognition model to analyze the voice stream to be recognized to obtain the emotion analysis result, specifically includes the following steps:

- S501: Perform voice segmentation on the voice stream to be recognized to obtain at least two target speech segments.

- A target speech segment is a speech segment formed by segmenting the voice stream to be recognized.

- The server applies a voice activity detection (VAD) algorithm to the voice stream to be recognized to detect the pause duration at each pause point, and takes every pause point whose pause duration exceeds a preset duration threshold as a voice segmentation point. It then segments the voice stream to be recognized at these points to obtain at least two target speech segments, on which emotion recognition and speech rate calculation are subsequently performed. Segmenting the stream in this way provides a basis for parallel processing and helps ensure the efficiency of subsequent analysis.
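
The pause-based segmentation can be sketched as follows. A simple frame-energy threshold is swapped in here as a stand-in for a production voice activity detection algorithm; the frame length, energy threshold and pause threshold are hypothetical defaults, and `samples` is assumed to be a mono signal of floats.

```python
from typing import List, Optional, Sequence, Tuple


def frame_energies(samples: Sequence[float], frame_len: int) -> List[float]:
    """Mean energy of consecutive non-overlapping frames (a trailing partial frame is dropped)."""
    return [
        sum(x * x for x in samples[i:i + frame_len]) / frame_len
        for i in range(0, len(samples) - frame_len + 1, frame_len)
    ]


def segment_by_pauses(
    samples: Sequence[float],
    sample_rate: int,
    frame_ms: int = 20,
    energy_threshold: float = 1e-4,
    min_pause_ms: float = 300.0,
) -> List[Tuple[int, int]]:
    """Split speech at pauses longer than min_pause_ms.

    Returns (start_sample, end_sample) pairs, end exclusive. Leading and
    trailing silence is excluded; shorter pauses stay inside a segment.
    """
    frame_len = int(sample_rate * frame_ms / 1000)
    voiced = [e > energy_threshold for e in frame_energies(samples, frame_len)]

    segments: List[Tuple[int, int]] = []
    seg_start: Optional[int] = None  # first voiced frame of the open segment
    last_voiced = -1                 # most recent voiced frame
    silence_run = 0                  # consecutive unvoiced frames since last_voiced
    for i, is_voiced in enumerate(voiced):
        if not is_voiced:
            silence_run += 1
            continue
        if seg_start is None:
            seg_start = i
        elif silence_run * frame_ms > min_pause_ms:
            # The pause that just ended exceeds the threshold: it is a segmentation point.
            segments.append((seg_start, last_voiced + 1))
            seg_start = i
        last_voiced = i
        silence_run = 0
    if seg_start is not None:
        segments.append((seg_start, last_voiced + 1))
    return [(s * frame_len, e * frame_len) for s, e in segments]
```

A 500 ms pause splits the stream, while a 100 ms pause (below the 300 ms default) is kept inside a segment, so natural short breaths do not fragment the speech.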

- S502: Perform emotion recognition on each target speech segment using a voice emotion recognition model, and obtain the recognition emotion corresponding to each target speech segment.

- The speech emotion recognition model is a pre-trained model for recognizing the speaker's emotion in speech.

- Specifically, the speech emotion recognition model may be a PAD emotion model, which describes emotion along three dimensions: pleasure, arousal and dominance. P stands for pleasure-displeasure and represents the positive or negative character of the individual's emotional state; A stands for arousal-nonarousal and represents the individual's level of neurophysiological activation; D stands for dominance-submissiveness and represents the individual's sense of control over the situation and others. The recognition emotion is the output of the speech emotion recognition model for each target speech segment.

- S503: Calculate the recognition speech rate corresponding to each target speech segment.

- The recognition speech rate of a target speech segment is the number of spoken words in the segment divided by its speech duration, i.e., the number of words spoken per unit time.

- The voice recognition model has already been used to perform text recognition on the voice stream to be recognized, yielding the text information to be recognized for the entire voice stream.

- The speech duration of each target speech segment can be determined from the timestamps of its first and last frames of data, and the number of spoken words in the segment can be determined from the portion of the text information to be recognized that falls between those timestamps.

- The number of spoken words and the speech duration are then used to determine the recognition speech rate of each target speech segment. Because the text information to be recognized is already available from the text analysis process, the recognition speech rate of each target speech segment can be calculated quickly, which improves efficiency.
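
The speech rate computation can be sketched as follows, assuming the recognizer returns word-level timestamps (the `Word` record is a hypothetical structure; for Chinese text each recognized character could be treated as a word). A word is counted only when it lies entirely inside the segment.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Word:
    text: str
    start: float  # seconds from the beginning of the voice stream
    end: float


def recognition_speech_rate(words: Sequence[Word], seg_start: float, seg_end: float) -> float:
    """Spoken words per second within [seg_start, seg_end].

    seg_start and seg_end would come from the timestamps of the first and
    last frames of the target speech segment.
    """
    duration = seg_end - seg_start
    if duration <= 0:
        raise ValueError("segment must have positive duration")
    n_words = sum(1 for w in words if w.start >= seg_start and w.end <= seg_end)
    return n_words / duration
```

Reusing the transcript from the text analysis step means no second recognition pass is needed; only the timestamp filter runs per segment.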

- If the agent speaks faster, this indicates that the agent is more impatient, which lowers the customer's satisfaction with the service provided. The agent's speech rate can therefore serve as an emotion analysis dimension for evaluating service quality, which is why the recognition speech rate of each target speech segment is calculated.

- S504: Obtain the emotion analysis result corresponding to the voice stream to be recognized based on the recognition speech rates and recognition emotions of the at least two target speech segments.

- The server may perform emotion analysis along the two dimensions of recognition speech rate and recognition emotion of the at least two target speech segments, and obtain the emotion analysis result for the voice stream to be recognized that those segments make up.

- For example, the recognition speech rates and recognition emotions of the at least two target speech segments can each be converted into scores and then weighted to obtain the emotion analysis result corresponding to the voice stream to be recognized collected in real time during the service process.
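
The score-and-weight variant of S504 might look like the sketch below. Everything specific here is an assumption for illustration: the emotion labels and their scores, the linear penalty above a comfortable speech rate, averaging over segments, and the weights `w_rate` and `w_emotion`.

```python
from statistics import mean
from typing import Dict, Sequence

# Hypothetical mapping from recognition emotion to a score in [0, 1].
EMOTION_SCORES: Dict[str, float] = {"positive": 1.0, "neutral": 0.6, "negative": 0.0}


def rate_score(rate: float, comfortable_rate: float) -> float:
    """1.0 up to the comfortable rate, falling linearly to 0.0 at twice that rate."""
    if rate <= comfortable_rate:
        return 1.0
    return max(0.0, 1.0 - (rate - comfortable_rate) / comfortable_rate)


def emotion_analysis_score(
    rates: Sequence[float],
    emotions: Sequence[str],
    comfortable_rate: float,
    w_rate: float = 0.4,
    w_emotion: float = 0.6,
) -> float:
    """Weighted combination of per-segment speech-rate and emotion scores."""
    if not rates or len(rates) != len(emotions):
        raise ValueError("need one rate and one emotion per target speech segment")
    avg_rate = mean(rate_score(r, comfortable_rate) for r in rates)
    avg_emotion = mean(EMOTION_SCORES[e] for e in emotions)
    return w_rate * avg_rate + w_emotion * avg_emotion
```

With a comfortable rate of 3 words/s, segments at 3.0 and 4.5 words/s score 1.0 and 0.5; paired with one positive and one negative emotion, the result is 0.4 * 0.75 + 0.6 * 0.5 = 0.6.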

- In this artificial intelligence-based service evaluation method, dividing the voice stream to be recognized into at least two target speech segments provides a basis for subsequently analyzing changes in speech rate and mood across those segments. Each target speech segment is then analyzed to determine its recognition speech rate and recognition emotion, and service quality is evaluated along these two dimensions, which ensures the objectivity and accuracy of the resulting emotion analysis result.

- Step S502, which uses a speech emotion recognition model to perform emotion recognition on each target speech segment and obtain the corresponding recognition emotion, specifically includes the following steps:

- S601: Perform feature extraction on each target speech segment to obtain the spectrogram feature and TEO feature corresponding to the target speech segment.

- The spectrogram is a speech spectrogram: a spectral analysis view obtained by processing a time-domain signal of sufficient length.

- The abscissa of the spectrogram is time, the ordinate is frequency, and the value at each coordinate point is the energy of the speech data.

- The spectrogram features are features extracted from the spectrogram.

- The server first obtains the spectrogram of the target speech segment and normalizes it to obtain a normalized grayscale spectrogram image. It then computes Gabor maps at different scales and orientations and uses local binary patterns (LBP) to extract texture features from each Gabor map. Finally, it concatenates the LBP texture features extracted from the Gabor maps of all scales and orientations to obtain the spectrogram feature.
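
The spectrogram feature pipeline can be sketched with NumPy as follows. It is a rough illustration under stated assumptions, not the patent's implementation: the STFT parameters, the real-valued Gabor kernel form, the scales and orientations, and the basic 8-neighbour LBP are choices made here. With two scales, two orientations and a 256-bin LBP histogram per Gabor map the result has 1024 dimensions, matching the dimensionality given in step S602, but the source does not say how its 1024 dimensions arise.

```python
import numpy as np


def spectrogram(signal: np.ndarray, n_fft: int = 256, hop: int = 128) -> np.ndarray:
    """Log-power spectrogram with frequency on rows (ordinate) and time on columns (abscissa)."""
    if len(signal) < n_fft:
        raise ValueError("signal shorter than one analysis window")
    window = np.hanning(n_fft)
    frames = np.array([signal[i:i + n_fft] * window
                       for i in range(0, len(signal) - n_fft + 1, hop)])
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2
    return np.log1p(power).T


def to_grayscale(spec: np.ndarray) -> np.ndarray:
    """Normalize the spectrogram to a 0-255 grayscale image."""
    lo, hi = spec.min(), spec.max()
    if hi == lo:
        return np.zeros_like(spec)
    return (spec - lo) / (hi - lo) * 255.0


def gabor_kernel(sigma: float, theta: float, wavelength: float,
                 gamma: float = 0.5, size: int = 15) -> np.ndarray:
    """Real part of a 2-D Gabor filter at scale sigma and orientation theta."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1]
    x_rot = x * np.cos(theta) + y * np.sin(theta)
    y_rot = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(x_rot ** 2 + (gamma * y_rot) ** 2) / (2 * sigma ** 2))
    return envelope * np.cos(2 * np.pi * x_rot / wavelength)


def convolve_same(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """2-D convolution with edge padding; output matches the image shape (odd-sized kernel)."""
    kh, kw = kernel.shape
    padded = np.pad(image, ((kh // 2, kh // 2), (kw // 2, kw // 2)), mode="edge")
    windows = np.lib.stride_tricks.sliding_window_view(padded, kernel.shape)
    return np.einsum("ijkl,kl->ij", windows, kernel[::-1, ::-1])


def lbp_histogram(image: np.ndarray) -> np.ndarray:
    """Normalized 256-bin histogram of basic 8-neighbour local binary pattern codes."""
    h, w = image.shape
    center = image[1:-1, 1:-1]
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros(center.shape, dtype=np.int64)
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = image[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        codes |= (neighbour >= center).astype(np.int64) << bit
    hist = np.bincount(codes.ravel(), minlength=256).astype(float)
    return hist / hist.sum()


def spectrogram_feature(signal: np.ndarray, scales=(2.0, 4.0), n_orientations: int = 2) -> np.ndarray:
    """Cascade the LBP histograms of Gabor maps over all scales and orientations."""
    gray = to_grayscale(spectrogram(signal))
    parts = []
    for sigma in scales:
        for k in range(n_orientations):
            theta = k * np.pi / n_orientations
            gabor_map = convolve_same(gray, gabor_kernel(sigma, theta, wavelength=2 * sigma))
            parts.append(lbp_histogram(gabor_map))
    return np.concatenate(parts)
```

Each histogram is normalized, so the cascaded vector is comparable across segments of different lengths; `sliding_window_view` requires NumPy 1.20 or later.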

- The spectrogram feature is a speech emotion feature; compared with traditional prosodic, frequency-domain and voice-quality features, it yields more accurate emotion recognition results.

- TEO: Teager Energy Operator.

- The Teager Energy Operator is a nonlinear operator that can track the instantaneous energy of a signal. It is a simple signal analysis algorithm proposed by H. M. Teager while studying nonlinear speech modeling.

- The TEO feature is the fundamental frequency feature obtained by analyzing the target speech segment with TEO. Owing to the properties of the Teager energy operator, the TEO feature extracted from the target speech segment is more stable in noisy environments and more discriminative, so it has good noise robustness.
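
The discrete Teager energy operator is commonly written psi[x(n)] = x(n)^2 - x(n-1) * x(n+1). The sketch below implements that operator; reducing the TEO profile to a fixed 20-dimensional vector by averaging 20 equal chunks is a hypothetical pooling step chosen only to match the 20 dimensions mentioned in step S602, not the patent's fundamental-frequency-based TEO feature.

```python
from typing import List, Sequence


def teager_energy(x: Sequence[float]) -> List[float]:
    """Discrete Teager energy operator: psi[n] = x[n]**2 - x[n-1] * x[n+1].

    Defined for the interior samples n = 1 .. len(x) - 2.
    """
    return [x[n] * x[n] - x[n - 1] * x[n + 1] for n in range(1, len(x) - 1)]


def teo_feature(x: Sequence[float], n_dims: int = 20) -> List[float]:
    """Hypothetical pooling: average the TEO profile over n_dims equal-length chunks."""
    psi = teager_energy(x)
    if len(psi) < n_dims:
        raise ValueError("signal too short for the requested number of dimensions")
    feature = []
    for i in range(n_dims):
        chunk = psi[i * len(psi) // n_dims:(i + 1) * len(psi) // n_dims]
        feature.append(sum(chunk) / len(chunk))
    return feature
```

A useful sanity check: for a pure tone A * cos(w * n) the operator returns the constant A^2 * sin(w)^2, so it tracks amplitude and frequency jointly using only three neighbouring samples.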

- S602: Splice the spectrogram feature and the TEO feature to obtain the target recognition feature corresponding to the target speech segment.

- Splicing the spectrogram feature and the TEO feature means concatenating all dimensions of the two features to form the target recognition feature.

- The target recognition feature is the feature formed by splicing the spectrogram feature and the TEO feature.

- For example, when the server performs feature extraction on the target speech segment, it can obtain a 1024-dimensional spectrogram feature and a 20-dimensional TEO feature and then splice them into a 1044-dimensional target recognition feature. The spliced target recognition feature carries the information of both the spectrogram feature and the TEO feature and therefore contains more information. Because it includes the TEO feature, which is stable in noisy environments, the final target recognition feature also inherits that noise robustness, which helps improve the accuracy of subsequent recognition.
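
Splicing is plain concatenation. A minimal sketch, assuming both features arrive as flat sequences of floats; the dimension check simply encodes the 1024 + 20 = 1044 example above and the `expected_dims` parameter is hypothetical.

```python
from typing import List, Sequence, Tuple


def splice_features(
    spectrogram_feat: Sequence[float],
    teo_feat: Sequence[float],
    expected_dims: Tuple[int, int] = (1024, 20),
) -> List[float]:
    """Concatenate the spectrogram and TEO features into one target recognition feature."""
    if (len(spectrogram_feat), len(teo_feat)) != tuple(expected_dims):
        raise ValueError("unexpected feature dimensions")
    # Spectrogram dimensions first, TEO dimensions appended after them.
    return list(spectrogram_feat) + list(teo_feat)
```

Keeping a fixed order (spectrogram first, TEO last) matters because the emotion model learns weights per position; the training samples must be spliced the same way.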

- S603: Use the voice emotion recognition model to perform emotion recognition on the target recognition feature of each target speech segment, and obtain the recognition emotion corresponding to each target speech segment.

- The speech emotion recognition model is a pre-trained model for recognizing the speaker's emotion in speech.

- Pre-training the speech emotion recognition model includes the following steps: (1) Obtain original speeches whose speech duration exceeds a preset duration, each carrying a corresponding emotion label. The preset duration is the minimum duration required for spectrogram feature processing; requiring the original speech to be longer than this ensures that subsequent spectrogram feature extraction is feasible. (2) Perform feature extraction on each original speech to obtain its spectrogram feature and TEO feature, and splice the two to form a training sample.

- A training sample is the training feature formed by splicing the spectrogram feature and TEO feature of an original speech; the training feature is paired with the emotion label of that original speech.

- The feature extraction and feature splicing used to acquire training samples are the same as in steps S601 and S602 above; to avoid repetition, details are not repeated here.
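
Training-sample construction can be sketched as follows. The feature extractors are passed in as callables so the sketch stays independent of any particular extractor; `min_duration_s` stands for the preset duration, and its value in any real system is an assumption here.

```python
from typing import Callable, List, Sequence, Tuple

Extractor = Callable[[Sequence[float]], Sequence[float]]


def build_training_samples(
    speeches: Sequence[Tuple[Sequence[float], str]],
    sample_rate: int,
    min_duration_s: float,
    extract_spectrogram: Extractor,
    extract_teo: Extractor,
) -> List[Tuple[List[float], str]]:
    """Turn labelled original speeches into (spliced feature, emotion label) pairs.

    Speeches not longer than min_duration_s are skipped, because they are too
    short for spectrogram feature extraction.
    """
    samples = []
    for signal, emotion_label in speeches:
        if len(signal) / sample_rate <= min_duration_s:
            continue
        # Same splicing order as at inference time: spectrogram, then TEO.
        feature = list(extract_spectrogram(signal)) + list(extract_teo(signal))
        samples.append((feature, emotion_label))
    return samples
```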

- Because the training samples of the speech emotion recognition model combine the information of the spectrogram feature and the TEO feature, emotion recognition is more accurate than with traditional prosodic, frequency-domain and voice-quality features. Moreover, the samples inherit the noise robustness of the TEO feature, so when the speech emotion recognition model is later used for emotion recognition on target recognition features, it performs well under noise, which helps improve recognition accuracy.

- The spectrogram feature and TEO feature extracted from the target speech segment are spliced, and the greater amount of information in the resulting target recognition feature helps ensure the accuracy and noise robustness of subsequent emotion recognition.

- The target recognition feature determined from the target speech segment is input into the speech emotion recognition model, so the recognition emotion of the target speech segment can be obtained quickly, with higher accuracy and better noise robustness.

- Step S504, which obtains the emotion analysis result corresponding to the voice stream to be recognized based on the recognition speech rates and recognition emotions of the at least two target speech segments, specifically includes the following steps:

- S701: Obtain the target emotion corresponding to the current target speech segment based on the recognition speech rate of the current target speech segment, the recognition speech rate of the previous target speech segment, and the recognition emotion of the current target speech segment.

- The current target speech segment is the target speech segment being analyzed at the current moment.

- The previous target speech segment is the target speech segment preceding the current target speech segment among the at least two target speech segments obtained by segmenting the voice stream to be recognized.

- The target emotion corresponding to the current target speech segment is the emotion, used in subsequent analysis, that is determined by considering the recognition speech rates of the previous and current target speech segments together with the recognition emotion of the current target speech segment.

- Step S701 specifically includes the following steps: (1) If there is no previous target speech segment, that is, the current target speech segment is the first one, obtain its target emotion from its recognition emotion: if the recognition emotion of the current target speech segment is negative, its target emotion is negative; if positive, its target emotion is positive. (2) If there is a previous target speech segment, the recognition speech rate of the current target speech segment is greater than that of the previous target speech segment, and the recognition emotion of the current target speech segment is negative, the target emotion corresponding to the current target speech segment is determined to be negative.

- If the recognition speech rate of the current target speech segment is not greater than that of the previous target speech segment, or it is greater but the recognition emotion of the current target speech segment is positive, the target emotion corresponding to the current target speech segment is positive.

- In other words, the target emotion of the current target speech segment is determined to be negative only when the speech rate has increased and the recognition emotion is negative; all other cases are treated as positive. The determined target emotion thus takes both emotion and speech rate into account, which helps improve the accuracy of subsequent analysis.
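
The S701 rule can be written as a small function. The sketch assumes the recognition emotion has already been mapped to one of two labels, `"positive"` or `"negative"`; the label strings are hypothetical.

```python
from typing import List, Optional, Sequence


def target_emotion(cur_rate: float, cur_emotion: str, prev_rate: Optional[float]) -> str:
    """Apply the S701 rule to one target speech segment."""
    if prev_rate is None:
        # First segment: the target emotion equals the recognition emotion.
        return cur_emotion
    if cur_rate > prev_rate and cur_emotion == "negative":
        # Speeding up while negative is the only case judged negative.
        return "negative"
    return "positive"


def target_emotions(rates: Sequence[float], emotions: Sequence[str]) -> List[str]:
    """Walk the segments in order, comparing each with its predecessor."""
    result = []
    prev_rate: Optional[float] = None
    for rate, emotion in zip(rates, emotions):
        result.append(target_emotion(rate, emotion, prev_rate))
        prev_rate = rate
    return result
```

For speech rates 3.0, 3.5, 3.2, 4.0 with recognition emotions negative, negative, negative, positive, the target emotions are negative, negative, positive, positive: the third segment slows down, and the fourth speeds up but is positive.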

- S702: Obtain the number of negative emotions corresponding to the voice stream to be recognized based on the target emotions of the at least two target speech segments.

- The target emotion can be a positive emotion or a negative emotion.

- A positive emotion is an emotion that accompanies a positive mental attitude or state, that is, a benign, positive, stable and constructive mental state, including but not limited to love, happiness, optimism, trust, acceptance and surprise.

- A negative emotion is an emotion, arising from external or internal factors during a specific behavior, that hinders continuing work or normal thinking. It is the opposite of a positive emotion and includes but is not limited to disgust, dislike, opposition, dissatisfaction, disregard and contempt.

- The number of negative emotions is the number of target speech segments, among the at least two target speech segments, whose target emotion is negative.

- S703: Obtain the emotion analysis result corresponding to the voice stream to be recognized based on its number of negative emotions.

- In one implementation, obtaining the emotion analysis result includes: if the number of negative emotions corresponding to the voice stream to be recognized is greater than a second number threshold, the emotion analysis result is negative; otherwise, it is positive.

- The second number threshold is a preset value.

- In another implementation, obtaining the emotion analysis result includes: calculating the probability of negative emotion from the number of negative emotions corresponding to the voice stream to be recognized; if this probability is greater than a preset probability threshold, the emotion analysis result is negative; otherwise, it is positive.

- The probability of negative emotion is the ratio of the number of negative emotions to the total number of target speech segments.

- The preset probability threshold is a preset probability value.

- In a further implementation, obtaining the emotion analysis result includes: querying an emotion score comparison table based on the number of negative emotions corresponding to the voice stream to be recognized to obtain the emotion analysis result of the voice stream to be recognized.

- The emotion score comparison table is a data table storing the emotion scores corresponding to different numbers of negative emotions.
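
Step S702 and the three alternative ways of obtaining the emotion analysis result in S703 can be sketched as follows. The threshold values, labels and score table used with it are hypothetical; the score table is assumed to be keyed by the lower bound of the negative-emotion count and to contain key 0.

```python
from typing import Dict, Sequence


def count_negative_emotions(target_emotions: Sequence[str]) -> int:
    """S702: number of target speech segments whose target emotion is negative."""
    return sum(1 for emotion in target_emotions if emotion == "negative")


def result_by_count(n_negative: int, second_number_threshold: int) -> str:
    """S703, first option: compare the count with the second number threshold."""
    return "negative" if n_negative > second_number_threshold else "positive"


def result_by_probability(n_negative: int, n_segments: int, probability_threshold: float) -> str:
    """S703, second option: compare the share of negative segments with a probability threshold."""
    if n_segments <= 0:
        raise ValueError("no target speech segments")
    return "negative" if n_negative / n_segments > probability_threshold else "positive"


def result_by_score_table(n_negative: int, score_table: Dict[int, float]) -> float:
    """S703, third option: emotion score from a table keyed by the lower bound of the count."""
    eligible = [lower for lower in score_table if lower <= n_negative]
    return score_table[max(eligible)]
```

The probability variant normalizes for call length: two negative segments out of five (0.4) stays positive under a 0.5 threshold, whereas a fixed count threshold of 1 would already flag it.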

- The target emotion of each current target speech segment is determined by considering both its recognition emotion and the recognition speech rates of two adjacent target speech segments, which helps improve the accuracy of subsequent analysis.

- The number of negative emotions corresponding to the voice stream to be recognized is determined from the target emotions of the at least two target speech segments, and the emotion analysis result is obtained from that number. The emotion analysis result therefore accounts for speech rate and negative emotion, two key dimensions of service quality, which helps improve the objectivity and accuracy of service evaluation.

- An artificial intelligence-based service evaluation device is provided, which corresponds one-to-one to the artificial intelligence-based service evaluation method in the foregoing embodiment.

- The artificial intelligence-based service evaluation device includes a to-be-recognized voice stream acquisition module 801, a target identity information acquisition module 802, a text analysis result acquisition module 803, a sentiment analysis result acquisition module 804, and a service quality score acquisition module 805.

- The functional modules are described in detail as follows:

- The to-be-recognized voice stream acquisition module 801 is used to acquire the voice stream to be recognized collected in real time during the service process.

- The target identity information acquisition module 802 is configured to perform identity recognition on the voice stream to be recognized and determine its corresponding target identity information.

- The text analysis result acquisition module 803 is configured to perform text analysis on the voice stream to be recognized and obtain the corresponding text analysis result.

- The sentiment analysis result acquisition module 804 is configured to perform emotion analysis on the voice stream to be recognized and obtain the corresponding emotion analysis result.

- The service quality score acquisition module 805 is configured to fuse the text analysis result and the emotion analysis result corresponding to the voice stream to be recognized and obtain the service quality score corresponding to the target identity information.

- The artificial intelligence-based service evaluation device further includes a voice duration judgment processing module, used to acquire the voice duration of the voice stream to be recognized and, if the voice duration is greater than a duration threshold, perform identity recognition on the voice stream to be recognized and determine its corresponding target identity information.

- The target identity information acquisition module 802 includes:

- The voice stream feature extraction unit is used to perform feature extraction on the voice stream to be recognized and obtain its MFCC feature and pitch feature.

- The target feature vector obtaining unit is used to splice the MFCC feature and the pitch feature to obtain the target feature vector.

- The identity feature information acquisition unit is used to process the target feature vector with an identity feature recognition model based on a time-delay neural network (TDNN) to obtain the identity feature information.

- The identity feature recognition model is equipped with a statistics pooling layer that computes the mean and standard deviation of its hidden-layer input features.

- The target identity information acquisition unit is used to calculate the similarity between the identity feature information and the standard feature information of each agent in the database to obtain feature similarities, and to determine the target identity information corresponding to the voice stream to be recognized based on those feature similarities.
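
Three of the identity-recognition operations listed above can be sketched with NumPy: splicing frame-level MFCC and pitch features, the statistics pooling layer (mean and standard deviation over time), and matching against enrolled agents. The TDNN layers themselves are omitted, cosine similarity is assumed as the similarity measure, and the `min_similarity` threshold and agent IDs are hypothetical.

```python
from typing import Dict, Optional, Tuple

import numpy as np


def splice_frame_features(mfcc: np.ndarray, pitch: np.ndarray) -> np.ndarray:
    """Concatenate per-frame MFCC (T, n_mfcc) and pitch (T, n_pitch) features."""
    if mfcc.shape[0] != pitch.shape[0]:
        raise ValueError("MFCC and pitch must have the same number of frames")
    return np.concatenate([mfcc, pitch], axis=1)


def statistics_pooling(hidden: np.ndarray) -> np.ndarray:
    """Collapse frame-level hidden features (T, D) into a (2D,) vector of mean and std."""
    return np.concatenate([hidden.mean(axis=0), hidden.std(axis=0)])


def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


def identify_agent(
    embedding: np.ndarray,
    enrolled: Dict[str, np.ndarray],
    min_similarity: float = 0.7,
) -> Tuple[Optional[str], float]:
    """Return the most similar enrolled agent, or None if no one is similar enough."""
    best_id, best_sim = None, -1.0
    for agent_id, reference in enrolled.items():
        sim = cosine_similarity(embedding, reference)
        if sim > best_sim:
            best_id, best_sim = agent_id, sim
    return (best_id, best_sim) if best_sim >= min_similarity else (None, best_sim)
```

Statistics pooling is what turns a variable-length utterance into a fixed-size vector, which is why embeddings from calls of different lengths can be compared directly against each agent's enrolled reference.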

- The text analysis result acquisition module 803 includes:

- The text information acquisition unit is configured to use a voice recognition model to perform text recognition on the voice stream to be recognized and obtain the text information to be recognized.

- The sensitive word analysis result obtaining unit is used to perform sensitive word analysis on the text information to be recognized and obtain the sensitive word analysis result.

- The tone analysis result obtaining unit is used to perform tone analysis on the text information to be recognized and obtain the tone analysis result.

- The text analysis result obtaining unit is used to obtain the text analysis result corresponding to the voice stream to be recognized based on the sensitive word analysis result and the tone analysis result.

- The sentiment analysis result acquisition module 804 includes:

- The target speech segment acquisition unit is configured to perform speech segmentation on the voice stream to be recognized to acquire at least two target speech segments.

- The recognition emotion acquisition unit is used to perform emotion recognition on each target speech segment with a voice emotion recognition model and obtain the recognition emotion corresponding to each target speech segment.

- The recognition speech rate calculation unit is used to calculate the recognition speech rate corresponding to each target speech segment.

- The emotion analysis result obtaining unit is configured to obtain the emotion analysis result corresponding to the voice stream to be recognized based on the recognition speech rates and recognition emotions of the at least two target speech segments.

- The recognition emotion acquisition unit includes:

- The voice segment feature extraction subunit is used to perform feature extraction on each target speech segment to obtain its spectrogram feature and TEO feature.

- The target recognition feature acquisition subunit is used to splice the spectrogram feature and TEO feature to acquire the target recognition feature corresponding to the target speech segment.

- The recognition emotion acquisition subunit is used to perform emotion recognition, with the voice emotion recognition model, on the target recognition feature of each target speech segment and obtain the recognition emotion corresponding to each target speech segment.

- The emotion analysis result obtaining unit includes:

- The target emotion acquisition subunit is used to obtain the target emotion corresponding to the current target speech segment based on the recognition speech rate of the current target speech segment, the recognition speech rate of the previous target speech segment, and the recognition emotion of the current target speech segment.

- The negative emotion quantity obtaining subunit is used to obtain the number of negative emotions corresponding to the voice stream to be recognized based on the target emotions of the at least two target speech segments.

- The emotion analysis result obtaining subunit is used to obtain the emotion analysis result corresponding to the voice stream to be recognized based on its number of negative emotions.

- Each module in the above artificial intelligence-based service evaluation devicecan be implemented in whole or in part by software, hardware, and a combination thereof.

- the above-mentioned modulesmay be embedded in the form of hardware or independent of the processor in the computer equipment, or may be stored in the memory of the computer equipment in the form of software, so that the processor can call and execute the operations corresponding to the above-mentioned modules.

- a computer deviceis provided.

- the computer devicemay be a server, and its internal structure diagram may be as shown in FIG. 9.

- the computer equipmentincludes a processor, a memory, a network interface, and a database connected through a system bus. Among them, the processor of the computer device is used to provide calculation and control capabilities.

- the memory of the computer deviceincludes a non-volatile storage medium and an internal memory.

- the non-volatile storage mediumstores an operating system, computer readable instructions, and a database.

- the internal memoryprovides an environment for the operation of the operating system and computer-readable instructions in the non-volatile storage medium.

- the database of the computer equipmentis used to store data used or generated during the execution of the artificial intelligence-based service evaluation method.

- the network interface of the computer deviceis used to communicate with an external terminal through a network connection. When the computer-readable instructions are executed by the processor, an artificial intelligence-based service evaluation method is realized.

- a computer deviceincluding a memory, a processor, and computer-readable instructions stored in the memory and capable of running on the processor.

- the processorexecutes the computer-readable instructions to implement the Artificial intelligence service evaluation methods, such as S201-S205 shown in Fig. 2, or shown in Figs. 2-7, are not repeated here to avoid repetition.

- the functions of the modules/units in the embodiment of the artificial intelligence-based service evaluation deviceare realized, for example, the to-be-recognized voice stream acquisition module 801 and the target identity information acquisition module shown in FIG. 8 802.

- the functions of the text analysis result acquisition module 803, the sentiment analysis result acquisition module 804, and the service quality score acquisition module 805are not repeated here in order to avoid repetition.

- One or more readable storage media storing computer-readable instructions are provided.

- When the computer-readable instructions are executed by one or more processors, the one or more processors implement the artificial intelligence-based service evaluation method of the foregoing embodiments, such as steps S201-S205 shown in FIG. 2 or the steps shown in FIGS. 2 to 7. To avoid repetition, these are not described again here.

- Alternatively, when the computer-readable instructions are executed by the processor, the functions of the modules/units in the embodiment of the artificial intelligence-based service evaluation apparatus are implemented, such as the to-be-recognized voice stream acquisition module 801, the target identity information acquisition module 802, the text analysis result acquisition module 803, the sentiment analysis result acquisition module 804, and the service quality score acquisition module 805 shown in FIG. 8. To avoid repetition, these are not described again here.

- The readable storage medium in this embodiment includes a non-volatile readable storage medium and a volatile readable storage medium.

- Non-volatile memory may include read-only memory (ROM), programmable ROM (PROM), electrically programmable ROM (EPROM), electrically erasable programmable ROM (EEPROM), or flash memory.

- Volatile memory may include random access memory (RAM) or an external cache memory.

- RAM is available in many forms, such as static RAM (SRAM), dynamic RAM (DRAM), synchronous DRAM (SDRAM), double data rate SDRAM (DDR SDRAM), enhanced SDRAM (ESDRAM), Synchlink DRAM (SLDRAM), Rambus direct RAM (RDRAM), direct Rambus dynamic RAM (DRDRAM), and Rambus dynamic RAM (RDRAM).

Landscapes

- Engineering & Computer Science (AREA)

- Business, Economics & Management (AREA)

- Strategic Management (AREA)

- Accounting & Taxation (AREA)

- Marketing (AREA)

- Development Economics (AREA)

- Physics & Mathematics (AREA)

- Finance (AREA)

- General Business, Economics & Management (AREA)

- Signal Processing (AREA)

- Health & Medical Sciences (AREA)

- Economics (AREA)

- General Physics & Mathematics (AREA)

- Theoretical Computer Science (AREA)

- Computational Linguistics (AREA)

- Multimedia (AREA)

- Acoustics & Sound (AREA)

- Entrepreneurship & Innovation (AREA)

- Human Computer Interaction (AREA)

- Game Theory and Decision Science (AREA)

- Audiology, Speech & Language Pathology (AREA)

- Psychiatry (AREA)

- Hospice & Palliative Care (AREA)

- General Health & Medical Sciences (AREA)

- Child & Adolescent Psychology (AREA)

- Telephonic Communication Services (AREA)

- Machine Translation (AREA)

Description

This application is based on, and claims priority to, Chinese invention patent application No. 202010102176.3, filed on February 19, 2020 and entitled "Artificial intelligence-based service evaluation method, apparatus, device and storage medium".

This application relates to the field of artificial intelligence technology, and in particular to an artificial intelligence-based service evaluation method, apparatus, device and storage medium.

To improve its service capability and fully meet the varied requirements of its customers, an enterprise sets up an agent center whose agents provide customers with the corresponding services. This improves service efficiency and spares customers the inconvenience of going to a counter to handle business. Because agents are an important link between customers and the enterprise, their service quality strongly affects customer satisfaction with the enterprise. The inventors realized that enterprises currently evaluate agents' service mainly on the basis of manual ratings given by customers. Whether a customer rates the service at all, and what score is given, are decided subjectively by the customer, so the service evaluation process lacks objectivity and accuracy.

Summary of the invention

The embodiments of this application provide an artificial intelligence-based service evaluation method, apparatus, device and storage medium to solve the problem of low objectivity and accuracy in the current service evaluation process.

An artificial intelligence-based service evaluation method, comprising:

acquiring a to-be-recognized voice stream collected in real time during a service process;

performing identity recognition on the to-be-recognized voice stream to determine target identity information corresponding to the to-be-recognized voice stream;

performing text analysis on the to-be-recognized voice stream to obtain a text analysis result corresponding to the to-be-recognized voice stream;

performing sentiment analysis on the to-be-recognized voice stream to obtain a sentiment analysis result corresponding to the to-be-recognized voice stream; and

performing fusion processing on the text analysis result and the sentiment analysis result corresponding to the to-be-recognized voice stream to obtain a service quality score corresponding to the target identity information.
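The five steps above can be pictured as a small pipeline. The sketch below is illustrative only: this text does not say how the text analysis, sentiment analysis or fusion are computed, so the three analyzers are injected stand-in functions, both analysis results are assumed to be normalized to [0, 1], and the fusion rule (a weighted average with a hypothetical `text_weight` of 0.6, scaled to 0-100) is an assumption rather than the method of this application. All names (`evaluate_service`, `fuse`, `EvaluationResult`) are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvaluationResult:
    """Output of one evaluation run (field names are hypothetical)."""
    identity: str            # target identity information, e.g. an agent ID
    text_score: float        # text analysis result, assumed normalized to [0, 1]
    sentiment_score: float   # sentiment analysis result, assumed normalized to [0, 1]
    quality_score: float     # fused service quality score on an assumed 0-100 scale


def fuse(text_score: float, sentiment_score: float, text_weight: float = 0.6) -> float:
    """Hypothetical fusion rule: weighted average of the two results, scaled to 0-100."""
    if not 0.0 <= text_weight <= 1.0:
        raise ValueError("text_weight must be in [0, 1]")
    fused = text_weight * text_score + (1.0 - text_weight) * sentiment_score
    return round(100.0 * fused, 2)


def evaluate_service(
    voice_stream: bytes,
    identify: Callable[[bytes], str],
    analyze_text: Callable[[bytes], float],
    analyze_sentiment: Callable[[bytes], float],
    text_weight: float = 0.6,
) -> EvaluationResult:
    """Run the described steps on one voice stream; the analyzers are injected stubs."""
    identity = identify(voice_stream)                     # identity recognition
    text_score = analyze_text(voice_stream)               # text analysis
    sentiment_score = analyze_sentiment(voice_stream)     # sentiment analysis
    quality = fuse(text_score, sentiment_score, text_weight)  # fusion processing
    return EvaluationResult(identity, text_score, sentiment_score, quality)


# Usage with stand-in analyzers (real recognition models would replace these lambdas).
result = evaluate_service(
    b"\x00\x01",  # placeholder audio bytes
    identify=lambda stream: "agent-007",
    analyze_text=lambda stream: 0.9,
    analyze_sentiment=lambda stream: 0.5,
)
print(result.quality_score)  # 74.0
```

Injecting the analyzers as callables keeps the sketch independent of any particular speech, text or emotion model, so each stage can be swapped or tested on its own.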

An artificial intelligence-based service evaluation apparatus, comprising:

a to-be-recognized voice stream acquisition module, configured to acquire a to-be-recognized voice stream collected in real time during a service process;

a target identity information acquisition module, configured to perform identity recognition on the to-be-recognized voice stream and determine target identity information corresponding to the to-be-recognized voice stream;

a text analysis result acquisition module, configured to perform text analysis on the to-be-recognized voice stream and obtain a text analysis result corresponding to the to-be-recognized voice stream;

a sentiment analysis result acquisition module, configured to perform sentiment analysis on the to-be-recognized voice stream and obtain a sentiment analysis result corresponding to the to-be-recognized voice stream; and

a service quality score acquisition module, configured to perform fusion processing on the text analysis result and the sentiment analysis result corresponding to the to-be-recognized voice stream and obtain a service quality score corresponding to the target identity information.

A computer device, comprising a memory, a processor, and computer-readable instructions stored in the memory and executable on the processor, wherein the processor performs the following steps when executing the computer-readable instructions:

acquiring a to-be-recognized voice stream collected in real time during a service process;

performing identity recognition on the to-be-recognized voice stream to determine target identity information corresponding to the to-be-recognized voice stream;

performing text analysis on the to-be-recognized voice stream to obtain a text analysis result corresponding to the to-be-recognized voice stream;

performing sentiment analysis on the to-be-recognized voice stream to obtain a sentiment analysis result corresponding to the to-be-recognized voice stream; and

performing fusion processing on the text analysis result and the sentiment analysis result corresponding to the to-be-recognized voice stream to obtain a service quality score corresponding to the target identity information.

One or more readable storage media storing computer-readable instructions, wherein when the computer-readable instructions are executed by one or more processors, the one or more processors perform the following steps:

acquiring a to-be-recognized voice stream collected in real time during a service process;

performing identity recognition on the to-be-recognized voice stream to determine target identity information corresponding to the to-be-recognized voice stream;

performing text analysis on the to-be-recognized voice stream to obtain a text analysis result corresponding to the to-be-recognized voice stream;

performing sentiment analysis on the to-be-recognized voice stream to obtain a sentiment analysis result corresponding to the to-be-recognized voice stream; and

performing fusion processing on the text analysis result and the sentiment analysis result corresponding to the to-be-recognized voice stream to obtain a service quality score corresponding to the target identity information.

In the above artificial intelligence-based service evaluation method, apparatus, device and storage medium, identity recognition is performed on the to-be-recognized voice stream to determine its target identity information, so that a voice stream from an initially unknown speaker is attributed to a specific identity. Text analysis and sentiment analysis are performed on the voice stream to obtain a text analysis result and a sentiment analysis result, which are then fused to obtain the service quality score corresponding to the target identity information. Artificial intelligence techniques are thus used to analyze the service quality of the speaker in the voice stream objectively, which ensures the objectivity and accuracy of the resulting evaluation and avoids the shortcomings of subjective human rating.
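This text states that identity recognition attributes a voice stream from an unknown speaker to target identity information, but does not say how. One common approach, assumed here purely for illustration, compares a voiceprint embedding of the stream with embeddings enrolled for each agent using cosine similarity, and rejects the best match if it falls below a threshold. Embedding extraction is out of scope; the names `identify_speaker` and `enrolled_agents`, the toy 3-dimensional vectors, and the 0.75 threshold are all hypothetical.

```python
import math
from typing import Dict, Optional, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def identify_speaker(
    embedding: Sequence[float],
    enrolled: Dict[str, Sequence[float]],
    threshold: float = 0.75,  # hypothetical acceptance threshold
) -> Optional[str]:
    """Return the enrolled identity closest to `embedding`, or None if no match clears `threshold`."""
    best_id: Optional[str] = None
    best_score = -1.0
    for speaker_id, reference in enrolled.items():
        score = cosine_similarity(embedding, reference)
        if score > best_score:
            best_id, best_score = speaker_id, score
    return best_id if best_score >= threshold else None


# Toy "voiceprints"; real embeddings would come from a speaker-recognition model.
enrolled_agents = {
    "agent-001": [1.0, 0.0, 0.0],
    "agent-002": [0.0, 1.0, 0.0],
}
print(identify_speaker([0.9, 0.1, 0.0], enrolled_agents))  # agent-001
print(identify_speaker([0.0, 0.0, 1.0], enrolled_agents))  # None
```

Returning None rather than the nearest enrolled identity avoids scoring a stream from an unenrolled speaker against the wrong agent.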

To describe the technical solutions of the embodiments of this application more clearly, the drawings used in the description of the embodiments are briefly introduced below. Obviously, the drawings described below show only some embodiments of this application; a person of ordinary skill in the art can derive other drawings from them without creative effort.

FIG. 1 is a schematic diagram of an application environment of the artificial intelligence-based service evaluation method in an embodiment of this application;

FIG. 2 is a flowchart of the artificial intelligence-based service evaluation method in an embodiment of this application;

FIG. 3 is another flowchart of the artificial intelligence-based service evaluation method in an embodiment of this application;

FIG. 4 is another flowchart of the artificial intelligence-based service evaluation method in an embodiment of this application;

FIG. 5 is another flowchart of the artificial intelligence-based service evaluation method in an embodiment of this application;

FIG. 6 is another flowchart of the artificial intelligence-based service evaluation method in an embodiment of this application;

FIG. 7 is another flowchart of the artificial intelligence-based service evaluation method in an embodiment of this application;

FIG. 8 is a schematic diagram of the artificial intelligence-based service evaluation apparatus in an embodiment of this application;

FIG. 9 is a schematic diagram of a computer device in an embodiment of this application.

The technical solutions in the embodiments of this application are described clearly and completely below with reference to the accompanying drawings. Obviously, the described embodiments are only some, rather than all, of the embodiments of this application. All other embodiments obtained by a person of ordinary skill in the art based on the embodiments of this application without creative effort shall fall within the protection scope of this application.