USRE49105E1 - Self-calibrated, remote imaging and data processing system - Google Patents

Self-calibrated, remote imaging and data processing system

- Publication number

- USRE49105E1 (application US16/661,868)

- Authority

- US

- United States

- Prior art keywords

- imaging sensor

- image

- mount unit

- imaging

- pixels

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Expired - Lifetime

Classifications

- G—PHYSICS

- G01—MEASURING; TESTING

- G01C—MEASURING DISTANCES, LEVELS OR BEARINGS; SURVEYING; NAVIGATION; GYROSCOPIC INSTRUMENTS; PHOTOGRAMMETRY OR VIDEOGRAMMETRY

- G01C11/00—Photogrammetry or videogrammetry, e.g. stereogrammetry; Photographic surveying

- G01C11/02—Picture taking arrangements specially adapted for photogrammetry or photographic surveying, e.g. controlling overlapping of pictures

- G—PHYSICS

- G01—MEASURING; TESTING

- G01C—MEASURING DISTANCES, LEVELS OR BEARINGS; SURVEYING; NAVIGATION; GYROSCOPIC INSTRUMENTS; PHOTOGRAMMETRY OR VIDEOGRAMMETRY

- G01C21/00—Navigation; Navigational instruments not provided for in groups G01C1/00 - G01C19/00

- G01C21/20—Instruments for performing navigational calculations

- G—PHYSICS

- G01—MEASURING; TESTING

- G01S—RADIO DIRECTION-FINDING; RADIO NAVIGATION; DETERMINING DISTANCE OR VELOCITY BY USE OF RADIO WAVES; LOCATING OR PRESENCE-DETECTING BY USE OF THE REFLECTION OR RERADIATION OF RADIO WAVES; ANALOGOUS ARRANGEMENTS USING OTHER WAVES

- G01S17/00—Systems using the reflection or reradiation of electromagnetic waves other than radio waves, e.g. lidar systems

- G01S17/86—Combinations of lidar systems with systems other than lidar, radar or sonar, e.g. with direction finders

- G—PHYSICS

- G01—MEASURING; TESTING

- G01S—RADIO DIRECTION-FINDING; RADIO NAVIGATION; DETERMINING DISTANCE OR VELOCITY BY USE OF RADIO WAVES; LOCATING OR PRESENCE-DETECTING BY USE OF THE REFLECTION OR RERADIATION OF RADIO WAVES; ANALOGOUS ARRANGEMENTS USING OTHER WAVES

- G01S17/00—Systems using the reflection or reradiation of electromagnetic waves other than radio waves, e.g. lidar systems

- G01S17/88—Lidar systems specially adapted for specific applications

- G—PHYSICS

- G01—MEASURING; TESTING

- G01S—RADIO DIRECTION-FINDING; RADIO NAVIGATION; DETERMINING DISTANCE OR VELOCITY BY USE OF RADIO WAVES; LOCATING OR PRESENCE-DETECTING BY USE OF THE REFLECTION OR RERADIATION OF RADIO WAVES; ANALOGOUS ARRANGEMENTS USING OTHER WAVES

- G01S17/00—Systems using the reflection or reradiation of electromagnetic waves other than radio waves, e.g. lidar systems

- G01S17/88—Lidar systems specially adapted for specific applications

- G01S17/89—Lidar systems specially adapted for specific applications for mapping or imaging

- G—PHYSICS

- G01—MEASURING; TESTING

- G01S—RADIO DIRECTION-FINDING; RADIO NAVIGATION; DETERMINING DISTANCE OR VELOCITY BY USE OF RADIO WAVES; LOCATING OR PRESENCE-DETECTING BY USE OF THE REFLECTION OR RERADIATION OF RADIO WAVES; ANALOGOUS ARRANGEMENTS USING OTHER WAVES

- G01S19/00—Satellite radio beacon positioning systems; Determining position, velocity or attitude using signals transmitted by such systems

- G01S19/01—Satellite radio beacon positioning systems transmitting time-stamped messages, e.g. GPS [Global Positioning System], GLONASS [Global Orbiting Navigation Satellite System] or GALILEO

- G01S19/13—Receivers

- G01S19/14—Receivers specially adapted for specific applications

- G—PHYSICS

- G03—PHOTOGRAPHY; CINEMATOGRAPHY; ANALOGOUS TECHNIQUES USING WAVES OTHER THAN OPTICAL WAVES; ELECTROGRAPHY; HOLOGRAPHY

- G03B—APPARATUS OR ARRANGEMENTS FOR TAKING PHOTOGRAPHS OR FOR PROJECTING OR VIEWING THEM; APPARATUS OR ARRANGEMENTS EMPLOYING ANALOGOUS TECHNIQUES USING WAVES OTHER THAN OPTICAL WAVES; ACCESSORIES THEREFOR

- G03B37/00—Panoramic or wide-screen photography; Photographing extended surfaces, e.g. for surveying; Photographing internal surfaces, e.g. of pipe

- G03B37/04—Panoramic or wide-screen photography; Photographing extended surfaces, e.g. for surveying; Photographing internal surfaces, e.g. of pipe with cameras or projectors providing touching or overlapping fields of view

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N17/00—Diagnosis, testing or measuring for television systems or their details

- H04N17/002—Diagnosis, testing or measuring for television systems or their details for television cameras

- G—PHYSICS

- G03—PHOTOGRAPHY; CINEMATOGRAPHY; ANALOGOUS TECHNIQUES USING WAVES OTHER THAN OPTICAL WAVES; ELECTROGRAPHY; HOLOGRAPHY

- G03B—APPARATUS OR ARRANGEMENTS FOR TAKING PHOTOGRAPHS OR FOR PROJECTING OR VIEWING THEM; APPARATUS OR ARRANGEMENTS EMPLOYING ANALOGOUS TECHNIQUES USING WAVES OTHER THAN OPTICAL WAVES; ACCESSORIES THEREFOR

- G03B2206/00—Systems for exchange of information between different pieces of apparatus, e.g. for exchanging trimming information, for photo finishing

Definitions

- The present invention relates, generally, to the field of remote imaging techniques and, more particularly, to a system for rendering high-resolution, high accuracy, low distortion digital images over very large fields of view.

- Remote sensing and imaging are broad-based technologies having a number of diverse and extremely important practical applications, such as geological mapping and analysis, and meteorological forecasting.

- Aerial and satellite-based photography and imaging are especially useful remote imaging techniques that have, over recent years, become heavily reliant on the collection and processing of data for digital images, including spectral, spatial, elevation, and vehicle location and orientation parameters.

- Spatial data characterizing real estate improvements and locations, roads and highways, environmental hazards and conditions, utilities infrastructures (e.g., phone lines, pipelines), and geophysical features can now be collected, processed, and communicated in a digital format to conveniently provide highly accurate mapping and surveillance data for various applications (e.g., dynamic GPS mapping).

- Elevation data may be used to improve the overall system's spatial and positional accuracy and may be acquired from either existing Digital Elevation Model (DEM) data sets or collected with the spectral sensor data from active, radiation-measuring, Doppler-based devices, or passive, stereographic calculations.

- DEM: Digital Elevation Model

- Photographic issues such as spherical aberrations, astigmatism, field curvature, distortion, and chromatic aberrations are well-known problems that must be dealt with in any sensor/imaging application.

- Certain applications require very high image resolution, often with tolerances of inches.

- An actual digital imaging device may be located anywhere from several feet to miles from its target, resulting in a very large scale factor. Providing images with very large scale factors, that also have resolution tolerances of inches, poses a challenge to even the most robust imaging system.

- Ortho-imaging is an approach that has been used in an attempt to address this problem.

- Ortho-imaging renders a composite image of a target by compiling varying sub-images of the target.

- A digital imaging device that has a finite range and resolution records images of fixed subsections of a target area sequentially. Those images are then aligned according to some sequence to render a composite of a target area.

- The present invention relates to a remote data collection and processing system using a variety of sensors.

- The system may include computer console units that control vehicle and system operations in real-time.

- The system may also include global positioning systems that are linked to and communicate with the computer consoles.

- Cameras and/or camera array assemblies can be employed for producing an image of a target viewed through an aperture.

- The camera array assemblies are communicatively connected to the computer consoles.

- The camera array assembly has a mount housing and a first imaging sensor, centrally coupled to the housing, having a first focal axis passing through the aperture.

- The camera array assembly also has a second imaging sensor, coupled to the housing and offset from the first imaging sensor along an axis, that has a second focal axis passing through the aperture and intersecting the first focal axis within an intersection area.

- The camera array assembly has a third imaging sensor, coupled to the housing and offset from the first imaging sensor along the axis, opposite the second imaging sensor, that has a third focal axis passing through the aperture and intersecting the first focal axis within the intersection area. Any number of one-to-n cameras may be used in this manner, where “n” can be any odd or even number.

- The system may also include an Attitude Measurement Unit (AMU), such as inertial, optical, or similar measurement units, communicatively connected to the computer consoles and the camera array assemblies.

- AMU: Attitude Measurement Unit

- The AMU may determine the yaw, pitch, and/or roll of the aircraft at any instant in time, and successive DGPS positions may be used to measure the vehicle heading with relation to geodesic north.

- The AMU data is integrated with the precision DGPS data to produce a robust, real-time AMU system.

- The system may further include a mosaicing module housed within the computer consoles.

- The mosaicing module includes a first component for performing initial processing on an input image.

- The mosaicing module also includes a second component for determining geographical boundaries of an input image, with the second component being cooperatively engaged with the first component.

- The mosaicing module further includes a third component for mapping an input image into the composite image with accurate geographical position.

- The third component is cooperatively engaged with the first and second components.

- A fourth component is also included in the mosaicing module for balancing color of the input images mapped into the composite image.

- The fourth component can be cooperatively engaged with the first, second and third components.

- The mosaicing module can include a fifth component for blending borders between adjacent input images mapped into the composite image. The fifth component is cooperatively engaged with the first, second, third and fourth components.

- A sixth component, an optional forward oblique and/or optional rear oblique camera array system, may be implemented that collects oblique image data and merges the image data with attitude and positional measurements in order to create a digital elevation model using stereographic techniques. The digital elevation model may be created in real-time onboard the vehicle or post-processed later.

- This sixth component works cooperatively with the other components. All components may be mounted to a rigid platform for the purpose of providing co-registration of sensor data. Vibrations, turbulence, and other forces may act on the vehicle in such a way as to create errors in the alignment relationship between sensors. Use of a common, rigid platform mount for the sensors provides a significant advantage over other systems that do not use this co-registration architecture.

- The present invention may employ a certain degree of lateral oversampling to improve output quality and/or co-mounted, co-registered oversampling to overcome physical pixel resolution limits.

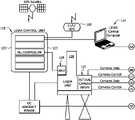

- FIG. 1 illustrates a vehicle based data collection and processing system of the present invention.

- FIG. 1A illustrates a portion of the vehicle based data collection and processing system of FIG. 1.

- FIG. 1B illustrates a portion of the vehicle based data collection and processing system of FIG. 1.

- FIG. 2 illustrates a vehicle based data collection and processing system of FIG. 1 with the camera array assembly of the present invention shown in more detail.

- FIG. 3 illustrates a camera array assembly in accordance with certain aspects of the present invention.

- FIG. 4 illustrates one embodiment of an imaging pattern retrieved by the camera array assembly of FIG. 1.

- FIG. 5 depicts an imaging pattern illustrating certain aspects of the present invention.

- FIG. 6 illustrates an image strip in accordance with the present invention.

- FIG. 7 illustrates another embodiment of an image strip in accordance with the present invention.

- FIG. 8 illustrates one embodiment of an imaging process in accordance with the present invention.

- FIG. 9 illustrates diagrammatically how photos taken with the camera array assembly can be aligned to make an individual frame.

- FIG. 10 is a block diagram of the processing logic according to certain embodiments of the present invention.

- FIG. 11 is an illustration of lateral oversampling looking down from a vehicle according to certain embodiments of the present invention.

- FIG. 12 is an illustration of lateral oversampling looking down from a vehicle according to certain embodiments of the present invention.

- FIG. 13 is an illustration of flight line oversampling looking down from a vehicle according to certain embodiments of the present invention.

- FIG. 14 is an illustration of flight line oversampling looking down from a vehicle according to certain embodiments of the present invention.

- FIG. 15 is an illustration of progressive magnification looking down from a vehicle according to certain embodiments of the present invention.

- FIG. 16 is an illustration of progressive magnification looking down from a vehicle according to certain embodiments of the present invention.

- FIG. 17 is an illustration of progressive magnification looking down from a vehicle according to certain embodiments of the present invention.

- FIG. 18 is a schematic of the system architecture according to certain embodiments of the present invention.

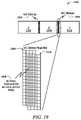

- FIG. 19 is an illustration of lateral co-mounted, co-registered oversampling in a sidelap sub-pixel area for a single camera array looking down from a vehicle according to certain embodiments of the present invention.

- FIG. 20 is an illustration of lateral co-mounted, co-registered oversampling in a sidelap sub-pixel area for two overlapping camera arrays looking down from a vehicle according to certain embodiments of the present invention.

- FIG. 21 is an illustration of fore and lateral co-mounted, co-registered oversampling in sidelap sub-pixel areas for two stereo camera arrays looking down from a vehicle according to certain embodiments of the present invention.

- A vehicle based data collection and processing system 100 of the present invention is shown in FIGS. 1, 1A, and 1B. Additional aspects and embodiments of the present invention are shown in FIGS. 2 and 18.

- System 100 includes one or more computer consoles 102.

- The computer consoles contain one or more computers 104 for controlling both vehicle and system operations.

- Examples of the functions of the computer console are controlling the digital color sensor systems that can be associated with the data collection and processing system, providing the display data to a pilot, coordinating the satellite-generated GPS pulse-per-second (PPS) event trigger (which may be 20 or more pulses per second), data logging, sensor control and adjustment, checking and alarming for error events, recording and indexing photos, storing and processing data, flight planning capability that automates the navigation of the vehicle, and providing a real-time display of pertinent information.

- PPS: GPS pulse-per-second

- A communications interface between the control computer console and the vehicle autopilot control provides the ability to actually control the flight path of the vehicle in real-time. This results in a more precise control of the vehicle's path than is possible by a human being. All of these functions can be accomplished by the use of various computer programs that are synchronized to the GPS PPS signals and take into account the various electrical latencies of the measurement devices.

- The computer is embedded within the sensor.

- One or more differential global positioning systems 106 are incorporated into the system 100.

- The global positioning systems 106 are used to navigate and determine precise flight paths during vehicle and system operations. To accomplish this, the global positioning systems 106 are communicatively linked to the computer console 102 such that the information from the global positioning systems 106 can be acquired and processed without flight interruption.

- Zero or more GPS units may be located at known survey points in order to provide a record of each sub-second's GPS satellite-based errors, so that the accuracy of the system 100 can be back-corrected.

- GPS and/or ground based positioning services may be used that eliminate the need for ground control points altogether. This technique results in greatly improved, sub-second by sub-second positional accuracy of the data capture vehicle.
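
The back-correction idea, in which a receiver at a known survey point records the GPS error at each epoch, can be sketched as follows. This is a minimal illustration under stated assumptions, not the method of the patent: positions are taken to be local east/north/up offsets in metres, the base and vehicle logs are assumed to share identical epoch timestamps, and both receivers are assumed to see the same error. The names `back_correct`, `base_log`, and `rover_log` are hypothetical.

```python
def back_correct(known_base, base_log, rover_log):
    """Subtract the base station's per-epoch GPS error from the vehicle's fixes.

    known_base: surveyed (east, north, up) of the base receiver, in metres.
    base_log / rover_log: dicts mapping epoch time -> reported (east, north, up).
    Epochs that have no base-station record are skipped rather than guessed.
    """
    corrected = {}
    for epoch, rover_fix in rover_log.items():
        base_fix = base_log.get(epoch)
        if base_fix is None:
            continue  # no error record for this epoch
        # Error observed at the known survey point for this epoch.
        error = tuple(b - k for b, k in zip(base_fix, known_base))
        # Apply the same error, with opposite sign, to the vehicle's fix.
        corrected[epoch] = tuple(r - e for r, e in zip(rover_fix, error))
    return corrected
```

A real differential solution works on satellite range measurements rather than on final positions; the position-domain subtraction above is only meant to show the bookkeeping.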

- One or more AMUs 108 that provide real-time yaw, pitch, and roll information that is used to accurately determine the attitude of the vehicle at the instant of data capture are also communicatively linked to the computer console 102.

- The present attitude measurement unit (e.g., Applanix POS AV) uses three high performance fiber optic gyros, one gyro each for yaw, pitch, and roll measurement.

- AMUs from other manufacturers, and AMUs that use other inertial measurement devices, can be used as well.

- An AMU may be employed to determine the instantaneous attitude of the vehicle and make the system more fault tolerant to statistical errors in AMU readings.

- Connected to the AMU can be one or more multi-frequency DGPS receivers 110.

- The multi-frequency DGPS receivers 110 can be integrated with the AMU's yaw, pitch, and roll attitude data in order to more accurately determine the location of the remote sensor platform in three dimensional space. Additionally, the direction of geodesic North may be determined by the vector created by successive DGPS positions, recorded in a synchronized manner with the GPS PPS signals.
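
As a rough illustration of deriving a direction relative to north from two successive DGPS fixes, the sketch below computes the initial great-circle bearing between them. It assumes a spherical Earth and fixes given as latitude/longitude in degrees; the function name `bearing_from_fixes` is hypothetical. Note that the vector between fixes gives the track over the ground, which matches the vehicle's heading only when there is no sideways drift.

```python
import math

def bearing_from_fixes(lat1, lon1, lat2, lon2):
    """Initial bearing from fix 1 to fix 2, in degrees clockwise from north.

    Assumes a spherical Earth; inputs are latitude/longitude in degrees.
    """
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    # Standard forward-azimuth formula on a sphere.
    y = math.sin(dlon) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlon))
    return math.degrees(math.atan2(y, x)) % 360.0
```

For fixes taken a fraction of a second apart the two positions are very close together, so measurement noise in each fix dominates; averaging the bearing over several PPS-synchronized fixes would be a natural refinement.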

- One or more camera array assemblies 112 for producing an image of a target viewed through an aperture are also communicatively connected to the one or more computer consoles 102.

- The camera array assemblies 112, which will be described in greater detail below, provide the data collection and processing system with the ability to capture high resolution, high precision progressive scan or line scan, color digital photography.

- The system may also include DC power and conditioning equipment 114 to condition DC power and to invert DC power to AC power in order to provide electrical power for the system.

- The system may further include a navigational display 116, which graphically renders the position of the vehicle versus the flight plan for use by the pilot (either onboard or remote) of the vehicle to enable precision flight paths in horizontal and vertical planes.

- The system may also include an EMU module comprised of LIDAR, SAR 118 or a forward and rear oblique camera array for capturing three dimensional elevation/relief data.

- The EMU module 118 can include a laser unit 120, an EMU control unit 122, and an EMU control computer 124.

- Temperature controlling devices, such as solid state cooling modules, can also be deployed as needed in order to provide the proper thermal environment for the system.

- The system also includes a mosaicing module, not depicted, housed with the computer console 102.

- The mosaicing module, which will be described in further detail below, provides the system the ability to gather data acquired by the global positioning system 106, the AMU 108, and the camera system 112 and process that data into useable orthomaps.

- The system 100 also can include a self-locking flight path technique that provides the ability to micro-correct the positional accuracy of adjacent flight paths in order to realize precision that exceeds the native precision of the AMU and DGPS sensors alone.

- A complete flight planning methodology is used to micro plan all aspects of missions.

- The inputs are the various mission parameters (latitude/longitude, resolution, color, accuracy, etc.) and the outputs are detailed on-line digital maps and data files that are stored onboard the data collection vehicle and used for real-time navigation and alarms.

- The ability to interface the flight planning data directly into the autopilot is an additional integrated capability.

- A computer program may be used that automatically controls the flight path, attitude adjustments, graphical display, moving maps of the vehicle path, checks for alarm conditions and corrective actions, notifies the pilot and/or crew of overall system status, and provides for fail-safe operations and controls. Safe operations parameters may be constantly monitored and reported. Whereas the current system uses a manned crew, the system is designed to perform equally well in an unmanned vehicle.

- FIG. 2 shows another depiction of the present invention.

- The camera array assembly 112 is shown in more detail.

- The camera array assembly 112 allows for images to be acquired from the rear oblique, the forward obliques and the nadir positions.

- FIG. 3 describes in more detail a camera array assembly of the present invention.

- FIG. 3 provides a camera array assembly 300 airborne over target 302 (e.g., terrain).

- The camera array assembly 300 comprises a housing 304 within which imaging sensors 306, 308, 310, 312 and 314 are disposed along a concave curvilinear axis 316.

- The radius of curvature of axis 316 may vary or be altered dramatically, providing the ability to effect very subtle or very drastic degrees of concavity in axis 316.

- Axis 316 may be completely linear, having no curvature at all.

- The imaging sensors 306, 308, 310, 312 and 314 couple to the housing 304, either directly or indirectly, by attachment members 318.

- Attachment members 318 may comprise a number of fixed or dynamic, permanent or temporary, connective apparatus.

- The attachment members 318 may comprise simple welds, removable clamping devices, or electro-mechanically controlled universal joints.

- The system 100 may have a real-time, onboard navigation system to provide a visual, bio-feedback display to the vehicle pilot, or remote display in the case of operations in an unmanned vehicle.

- The pilot is able to adjust the position of the vehicle in real-time in order to provide a more accurate flight path.

- The pilot may be onboard the vehicle or remotely located and using the flight display to control the vehicle through a communication link.

- The system 100 may also use highly fault-tolerant methods that have been developed to provide a software interleaved disk storage methodology that allows one or two hard drives to fail and still not lose target data that is stored on the drives.

- This software interleaved disk storage methodology provides superior fault-tolerance and portability versus other, hardware methodologies, such as RAID-5.

- The system 100 may also incorporate a methodology that has been developed that allows for a short calibration step just before mission data capture.

- The calibration methodology step adjusts the camera settings, mainly exposure time, based on sampling the ambient light intensity and setting near optimal values just before reaching the region of interest.

- A moving average algorithm is then used to make second-by-second camera adjustments in order to deliver improved, consistent photo results. This improves the color processing of the orthomaps.
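
The moving-average exposure adjustment can be sketched as below. The source does not give the algorithm's details, so everything here is an assumption for illustration: the window length, the target brightness level, the exposure limits, and the class name `ExposureSmoother` are all hypothetical. The idea shown is only that each new exposure is derived from an average of recent light readings rather than from the latest reading alone.

```python
from collections import deque

class ExposureSmoother:
    """Propose exposure times from a moving average of recent light readings."""

    def __init__(self, window=5, target_level=128.0, min_ms=0.05, max_ms=20.0):
        self.levels = deque(maxlen=window)  # most recent brightness readings
        self.target_level = target_level    # desired mean brightness (assumed scale)
        self.min_ms = min_ms                # shortest exposure allowed
        self.max_ms = max_ms                # longest exposure allowed

    def update(self, measured_level, current_exposure_ms):
        """Record one reading and return the exposure to use next."""
        self.levels.append(measured_level)
        average = sum(self.levels) / len(self.levels)
        # Scale exposure so the averaged brightness moves toward the target.
        proposed = current_exposure_ms * self.target_level / max(average, 1e-6)
        # Clamp to the camera's assumed exposure range.
        return min(self.max_ms, max(self.min_ms, proposed))
```

Calling `update` once per second with the latest brightness sample would give the second-by-second adjustment described, with the window length controlling how quickly the exposure reacts to a sudden change in scene brightness.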

- The calibration may be used to check or to establish the exact spatial position of each sensor device (cameras, DPG, AMU, EMU, etc.). In this manner, changes that may happen in the spatial location of these devices may be accounted for, maintaining overall system precision metrics.

- System 100 may incorporate a methodology that has been developed that allows for calibrating the precision position and attitude of each sensor device (cameras, DPG, AMU, EMU, etc.) on the vehicle by flying over an area that contains multiple known, visible, highly accurate geographic positions.

- A program takes this data as input and outputs the micro positional data that is then used to precisely process the orthomaps.

- Housing 304 comprises a simple enclosure inside of which imaging sensors 306, 308, 310, 312 and 314 are disposed.

- FIG. 3 depicts a 5-camera array.

- The system works equally well when utilizing any number of camera sensors from 1 to any number.

- Sensors 306 through 314 couple, via the attachment members 318, either collectively to a single transverse cross member, or individually to lateral cross members disposed between opposing walls of the housing 304.

- The housing 304 may itself comprise only a supporting cross member of concave curvature to which the imaging sensors 306 through 314 couple, via members 318.

- The housing 304 may comprise a hybrid combination of enclosure and supporting cross member.

- The housing 304 further comprises an aperture 320 formed in its surface, between the imaging sensors and target 302.

- The aperture 320 may comprise only a void, or it may comprise a protective screen or window to maintain environmental integrity within the housing 304.

- Where a protective transparent plate is used for any sensor, special coatings may be applied to the plate to improve the quality of the sensor data.

- The aperture 320 may comprise a lens or other optical device to enhance or alter the nature of the images recorded by the sensors.

- The aperture 320 is formed with a size and shape sufficient to provide the imaging sensors 306 through 314 proper lines of sight to a target region 322 on terrain 302.

- The imaging sensors 306 through 314 are disposed within or along housing 304 such that the focal axes of all sensors converge and intersect each other within an intersection area bounded by the aperture 320. Depending upon the type of image data being collected, the specific imaging sensors used, and other optics or equipment employed, it may be necessary or desirable to offset the intersection area or point of convergence above or below the aperture 320.

- The imaging sensors 306 through 314 are separated from each other at angular intervals. The exact angle of displacement between the imaging sensors may vary widely depending upon the number of imaging sensors utilized and on the type of imaging data being collected. The angular displacement between the imaging sensors may also be unequal, if required, so as to provide a desired image offset or alignment.

- The focal axes of all imaging sensors may intersect at exactly the same point, or may intersect at a plurality of points, all within close proximity to each other and within the intersection area defined by the aperture 320.

- The imaging sensor 310 is centrally disposed within the housing 304 along axis 316.

- The imaging sensor 310 has a focal axis 324, directed orthogonally from the housing 304 to align the line of sight of the imaging sensor with the image area 326 of the region 322.

- The imaging sensor 308 is disposed within the housing 304 along the axis 316, adjacent to the imaging sensor 310.

- The imaging sensor 308 is aligned such that its line of sight coincides with the image area 328 of the region 322, and such that its focal axis 330 converges with and intersects the axis 324 within the area bounded by the aperture 320.

- The imaging sensor 312 is disposed within the housing 304 adjacent to the imaging sensor 310, on the opposite side of the axis 316 as the imaging sensor 308.

- The imaging sensor 312 is aligned such that its line of sight coincides with the image area 332 of the region 322, and such that its focal axis 334 converges with and intersects axes 324 and 330 within the area bounded by the aperture 320.

- The imaging sensor 306 is disposed within the housing 304 along the axis 316, adjacent to the sensor 308.

- The imaging sensor 306 is aligned such that its line of sight coincides with the image area 336 of region 322, and such that its focal axis 338 converges with and intersects the other focal axes within the area bounded by aperture 320.

- The imaging sensor 314 is disposed within housing 304 adjacent to sensor 312, on the opposite side of axis 316 as sensor 306.

- The imaging sensor 314 is aligned such that its line of sight coincides with image area 340 of region 322, and such that its focal axis 344 converges with and intersects the other focal axes within the area bounded by aperture 320.

- The imaging sensors 306 through 314 may comprise a number of digital imaging devices including, for example, individual area scan cameras, line scan cameras, infrared sensors, hyperspectral and/or seismic sensors. Each sensor may comprise an individual imaging device, or may itself comprise an imaging array.

- The imaging sensors 306 through 314 may all be of a homogeneous nature, or may comprise a combination of varied imaging devices. For ease of reference, the imaging sensors 306 through 314 are hereafter referred to as cameras 306 through 314, respectively.

- Lens distortion is typically a source of imaging problems. Each individual lens must be carefully calibrated to determine precise distortion factors.

- Small-format digital cameras having lens angle widths of 17 degrees or smaller are utilized. This alleviates noticeable distortion efficiently and affordably.
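
To give a feel for what a 17-degree lens implies on the ground, the sketch below computes the swath width and per-pixel ground sample distance for a single nadir-pointing camera. It assumes that "lens angle width" means the full field of view, that the terrain is flat, and that pixels are evenly spaced across the swath; the altitude and pixel count used in any call are illustrative values, not figures from the source, and the function names are hypothetical.

```python
import math

def ground_swath_m(altitude_m, fov_deg):
    """Width of ground covered by a nadir camera with the given full field of view."""
    return 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)

def ground_sample_distance_m(altitude_m, fov_deg, pixels_across):
    """Approximate ground size of one pixel, assuming evenly spaced pixels."""
    return ground_swath_m(altitude_m, fov_deg) / pixels_across
```

Under these assumptions a narrow lens covers only a few hundred metres of ground from 1,000 m of altitude, which is one way to see why several such cameras are arrayed side by side to cover a wide region.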

- Cameras 306 through 314 are alternately disposed within housing 304 along axis 316 such that each camera's focal axis converges upon aperture 320, crosses focal axis 324, and aligns its field of view with a target area opposite its respective position in the array, resulting in a “cross-eyed”, retinal relationship between the cameras and the imaging target(s).
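
The "cross-eyed" arrangement can be illustrated with simple two-dimensional geometry. The sketch assumes that all focal axes pass through a single convergence point at the aperture, that the cameras sit on a circular arc centred on that point, and that the ground is flat; the tilt angles, arc radius, and height in any call are illustrative, and `cross_eyed_layout` is a hypothetical name. For each camera it returns the lateral mount position and the lateral position where the focal axis meets the ground.

```python
import math

def cross_eyed_layout(tilts_deg, arc_radius_m, height_m):
    """Return (tilt, mount_x, ground_x) for each camera in a convergent array.

    tilts_deg: tilt of each focal axis from nadir, in degrees (0 = principal camera).
    arc_radius_m: distance from each camera to the convergence point.
    height_m: height of the convergence point above flat ground.
    """
    layout = []
    for tilt in tilts_deg:
        angle = math.radians(tilt)
        # The camera sits on the arc on one side of the centre line...
        mount_x = -arc_radius_m * math.sin(angle)
        # ...and its axis, after crossing the convergence point, lands on the other side.
        ground_x = height_m * math.tan(angle)
        layout.append((tilt, mount_x, ground_x))
    return layout
```

For any non-zero tilt, `mount_x` and `ground_x` have opposite signs, which is the crossing relationship described: a camera mounted on one side of the array images the ground on the opposite side.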

- the camera array assembly 300is configured such that adjoining borders of image areas 326 , 328 , 332 , 336 and 340 overlap slightly.

- attachment members 318are of a permanent and fixed nature (e.g., welds)

- the spatial relationship between the aperture 320 , the cameras, and their lines of sightremain fixed as will the spatial relationship between image areas 326 , 328 , 332 , 336 and 340 .

- Such a configurationmay be desirable in, for example, a satellite surveillance application where the camera array assembly 300 will remain at an essentially fixed distance from region 322 .

- the position and alignment of the camerasis set such that areas 326 , 328 , 332 , 336 and 340 provide full imaging coverage of region 322 .

- attachment members 318are of a temporary or adjustable nature, however, it may be desirable to selectively adjust, either manually or by remote automation, the position or alignment of the cameras so as to shift, narrow or widen areas 326 , 328 , 332 , 336 and 340 —thereby enhancing or altering the quality of images collected by the camera array assembly 300 .

- the mount unit is any rigid structure to which at least one imaging sensor may be affixed.

- the mount unit is preferably a housing, which encloses the imaging sensor, but may be any rigid structure including a brace, tripod, or the like.

- an imaging sensor means any device capable of receiving and processing active or passive radiometric energy, i.e., light, sound, heat, gravity, and the like, from a target area.

- imaging sensors may include any number of digital cameras, including those that utilize a red-blue-green filter, a pushbroom filter, or a hyperspectral filter, LIDAR sensors, infrared sensors, heat-sensing sensors, gravitometers and the like.

- Imaging sensors do not include attitude measuring sensors such as gyroscopes, GPS devices, and similar devices, which serve to orient the vehicle with the aid of satellite data and/or inertial data.

- the multiple sensors are different.

- the mount unit preferably has an aperture through which light and/or energy may pass.

- the mount plate is preferably planar, but may be non-planar.

- the mount plate preferably has aperture(s) in alignment with the aperture(s) of the mount unit(s) through which light and/or energy may pass.

- a rigid structure is one that flexes less than about one-hundredth of a degree, preferably less than about one-thousandth of a degree, more preferably less than about one ten-thousandth of a degree while in use.

- the rigid structure is one that flexes less than about one-hundredth of a degree, preferably less than about one-thousandth of a degree, more preferably less than about one ten-thousandth of a degree while secured to an aircraft during normal, i.e., non-turbulent, flight.

- Objects are rigidly affixed to one another if during normal operation they flex from each other less than about one-hundredth of a degree, preferably less than about one-thousandth of a degree, more preferably less than about one ten-thousandth of a degree.

- Camera 310 is designated as the principal camera.

- the image plane 326 of camera 310 serves as a plane of reference.

- the orientations of the other cameras 306, 308, 312 and 314 are measured relative to the plane of reference.

- the relative orientations of each camera are measured in terms of the yaw, pitch and roll angles required to rotate the image plane of the camera to become parallel to the plane of reference.

- the order of rotations is preferably yaw, pitch, and roll.
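
The yaw, pitch, roll ordering can be sketched as a product of three elementary rotations. This is only an illustration: the axis assignment (yaw about z, pitch about y, roll about x), the fixed-axis convention, and the function names are assumptions, not taken from the patent.

```python
import math

def rot_z(angle):  # yaw, assumed to be a rotation about the z axis
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def rot_y(angle):  # pitch, assumed to be a rotation about the y axis
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]

def rot_x(angle):  # roll, assumed to be a rotation about the x axis
    c, s = math.cos(angle), math.sin(angle)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]

def matmul(a, b):
    """Multiply two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def attitude_matrix(yaw, pitch, roll):
    """Rotation that applies yaw first, then pitch, then roll to a column vector."""
    # The rightmost factor acts first on a column vector.
    return matmul(rot_x(roll), matmul(rot_y(pitch), rot_z(yaw)))
```

Because rotations do not commute, swapping the order of the three factors generally yields a different orientation, which is why a fixed order has to be agreed on before relative camera angles are compared.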

- the imaging sensors affixed to the mount unit(s) may not be aligned in the same plane. Instead, the angle of their mount relative to the mount angle of a first sensor affixed to the first mount unit, preferably the principal nadir camera of the first mount unit, may be offset. Accordingly, the imaging sensors may be co-registered to calibrate the physical mount angle offset of each imaging sensor relative to each other.

- multiple, i.e., at least two, rigid mount units are affixed to the same rigid mount plate and are co-registered.

- the cameras 306 through 314 are affixed to a rigid mount unit and co-registered.

- the geometric centerpoint of the AMU (preferably a gyroscope)

- the physical position of the first sensor affixed to the first mount unit, preferably the principal nadir camera of the first mount unit, is calculated relative to a reference point, preferably the geometric centerpoint of the AMU.

- the physical positions of all remaining sensors within all mount units are calculated, directly or indirectly, relative to the same reference point.

- the boresight angle of a sensor is defined as the angle from the geometric center of that sensor to a reference plane.

- the reference plane is orthogonal to the target area.

- the boresight angle of the first sensor may be determined using the ground target points.

- the boresight angles of subsequent sensors are preferably calculated with reference to the boresight angle of the first sensor.

- the sensors are preferably calibrated using known ground targets, which are preferably photo-identifiable, and alternatively calibrated using a self-locking flight path or any other method as disclosed in U.S. Publication No. 2004/0054488A1, now U.S. Pat. No. 7,212,938B2, the disclosure of which is hereby incorporated by reference in full.

- the imaging sensor within the second mount unit may be any imaging sensor, and is preferably a LIDAR.

- the second imaging sensor is a digital camera, or array of digital cameras.

- the boresight angle of the sensor(s) affixed to the second mount unit is calculated with reference to the boresight angle of the first sensor.

- the physical offset of the imaging sensor(s) within the second mount unit may be calibrated with reference to the boresight angle of the first sensor within the first mount unit.

- images of areas 336, 328, 326, 332 and 340 taken by cameras 306 through 314, respectively, are illustrated from an overhead view. Again, because of the “cross-eyed” arrangement, the image of area 336 is taken by camera 306, the image of area 340 is taken by camera 314, and so on. In one embodiment of the present invention, images other than those taken by the center camera 310 take on a trapezoidal shape after perspective transformation. Cameras 306 through 314 form an array along axis 316 that is, in most applications, pointed down vertically.

- a second array of cameras, configured similarly to the array of cameras 306 through 314, is aligned with respect to the first array of cameras to have an oblique view providing a “heads-up” perspective.

- the angle of declination from horizontal of the heads-up camera array assembly may vary due to mission objectives and parameters, but angles of 25-45 degrees are typical.

- Other alternative embodiments, varying the mounting of camera arrays, are similarly comprehended by the present invention. In all such embodiments, the relative positions and attitudes of the cameras are precisely measured and calibrated so as to facilitate image processing in accordance with the present invention.

- an external mechanism (e.g., a GPS timing signal)

- a mosaicing module then renders the individual input images from such an array into an ortho-rectified compound image (or “mosaic”), without any visible seams between the adjacent images.

- the mosaicing module performs a set of tasks comprising: determining the geographical boundaries and dimensions of each input image; projecting each input image onto the mosaic with accurate geographical positioning; balancing the color of the images in the mosaic; and blending adjacent input images at their shared seams.

- the exact order of the tasks performed may vary, depending upon the size and nature of the input image data.

- the mosaicing module performs only a single transformation to an original input image during mosaicing. That transformation can be represented by a 4 × 4 matrix. By combining multiple transformation matrices into a single matrix, processing time is reduced and original input image sharpness is retained.

- pixels in the mosaic may not be mapped to by any pixels in the input images (i.e., input pixels). Warped lines could potentially result as artifacts in the mosaic.

- Certain embodiments of the present invention overcome this with a super-sampling system, where each input and output pixel is further divided into an n × m grid of sub-pixels. Transformation is performed from sub-pixels to sub-pixels. The final value of an output pixel is the average value of its sub-pixels for which there is a corresponding input sub-pixel. Larger n and m values produce mosaics of higher resolution, but do require extra processing time.
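
The super-sampling idea can be illustrated with a small forward-mapping sketch. It assumes a single-channel image stored as nested lists and a caller-supplied `transform` function from input to output coordinates; the function name and the rule that the last write to a sub-pixel wins are hypothetical choices made for the example.

```python
import math

def supersample(src, transform, out_w, out_h, n=2, m=2):
    """Forward-map src into an out_w x out_h image using n x m sub-pixels.

    src is a list of rows of numbers; transform(x, y) maps input coordinates
    to output coordinates. Output pixels with no mapped sub-pixel stay None.
    """
    sub = {}  # (sub_col, sub_row) on the output sub-pixel grid -> value
    for y, row in enumerate(src):
        for x, value in enumerate(row):
            for j in range(m):
                for i in range(n):
                    # Centre of input sub-pixel (i, j) of input pixel (x, y).
                    ox, oy = transform(x + (i + 0.5) / n, y + (j + 0.5) / m)
                    sub[(math.floor(ox * n), math.floor(oy * m))] = value
    out = []
    for py in range(out_h):
        out_row = []
        for px in range(out_w):
            # Average only the output sub-pixels that received an input value.
            vals = [sub[(px * n + i, py * m + j)]
                    for j in range(m) for i in range(n)
                    if (px * n + i, py * m + j) in sub]
            out_row.append(sum(vals) / len(vals) if vals else None)
        out.append(out_row)
    return out
```

With a 2x enlargement, calling the function with `n=1, m=1` leaves unmapped output pixels (holes), while `n=2, m=2` fills every output pixel, at the cost of four times as many transformed points.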

- the mosaicing module may utilize the following information: the spatial position (e.g., x, y, z coordinates) of each camera's focal point at the time an input image is captured; the attitude (i.e., yaw, pitch, roll) of each camera's image plane relative to the target region's ground plane at the time an input image was captured; each camera's fields of view (i.e., along track and cross track); and the Digital Terrain Model (DTM) of the area.

- the attitude can be provided by the AMUs associated with the system.

- Digital terrain models (DTMs) or Digital surface models (DSMs) can be created from information obtained using a LIDAR module 118.

- LIDAR is similar to the more familiar radar, and can be thought of as laser radar.

- in radar, radio waves are transmitted into the atmosphere, which scatters some of the energy back to the radar's receiver.

- LIDAR also transmits and receives electromagnetic radiation, but at a higher frequency since it operates in the ultraviolet, visible and infrared region of the electromagnetic spectrum.

- LIDAR transmits light out to a target area. The transmitted light interacts with and is changed by the target area. Some of this light is reflected/scattered back to the LIDAR instrument where it can be analyzed. The change in the properties of the light enables some property of the target area to be determined. The time for the light to travel out to the target area and back to the LIDAR device is used to determine the range to the target.
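
The range calculation from round-trip time reduces to one line. The sketch below uses the vacuum speed of light and ignores the atmospheric refractive index, which is a simplifying assumption; the function name is illustrative.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # vacuum value; air is marginally slower

def lidar_range_m(round_trip_time_s):
    """Range to the target, given the out-and-back travel time of a pulse."""
    # The pulse covers the distance twice, so halve the total path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0
```

For example, a return received 2 microseconds after transmission corresponds to a target roughly 300 m away.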

- DTM and DSM data sets can also be captured from the camera array assembly.

- Traditional means of obtaining elevation data, such as stereographic techniques, may also be used.

- Range finder LIDAR is the simplest LIDAR and is used to measure the distance from the LIDAR device to a solid or hard target.

- DIAL LIDAR is used to measure chemical concentrations (such as ozone, water vapor, pollutants) in the atmosphere.

- a DIAL LIDAR uses two different laser wavelengths that are selected so that one of the wavelengths is absorbed by the molecule of interest while the other wavelength is not. The difference in intensity of the two return signals can be used to deduce the concentration of the molecule being investigated.

- Doppler LIDAR is used to measure the velocity of a target.

- the wavelength of the light reflected/scattered off the target will be changed slightly. This is known as a Doppler shift, hence the name Doppler LIDAR. If the target is moving away from the LIDAR, the return light will have a longer wavelength (sometimes referred to as a red shift); if moving towards the LIDAR, the return light will be at a shorter wavelength (blue shifted).

- the target can be either a hard target or an atmospheric target (e.g., microscopic dust and aerosol particles that are carried by the wind).

- a camera's focal point is preferably used as a perspective transformation center. Its position in space may be determined, for example, by a multi-frequency carrier phase post-processed GPS system mounted on the host craft.

- the offsets, in three dimensions, of a camera's focal point are preferably carefully measured against the center of the GPS antenna. These offsets may be combined with the position of the GPS antenna, and the orientation of the host craft, to determine the exact position of the camera's focal point.
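
Combining the antenna position, the craft orientation and the measured offsets is a lever-arm correction. A minimal sketch follows, assuming the orientation is already available as a 3 × 3 body-to-world rotation matrix and that the offset is expressed in the craft's body frame; the parameter names are illustrative.

```python
def focal_point_position(antenna_position, body_to_world, offset_body):
    """World position of the focal point from the GPS antenna position.

    antenna_position: (x, y, z) of the antenna in world coordinates.
    body_to_world:    3x3 rotation matrix for the craft's current orientation.
    offset_body:      measured antenna-to-focal-point offset in the body frame.
    """
    # Rotate the body-frame offset into world axes, then add it to the antenna.
    rotated = [sum(body_to_world[i][k] * offset_body[k] for k in range(3))
               for i in range(3)]
    return tuple(antenna_position[i] + rotated[i] for i in range(3))
```

With the craft yawed by 90 degrees, an offset of 1 m along the body x axis shows up as 1 m along the world y axis, which is why the orientation has to enter the calculation.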

- the position of the GPS antenna is preferably determined by processing of collected GPS data against similar ground-based GPS antennas deployed at precisely surveyed points.

- One or more AMUs are preferably mounted onboard for attitude determination.

- the attitude of the AMU reference plane relative to the target region's ground plane is preferably measured and recorded at short intervals, with accuracy better than one-hundredth of one degree.

- the attitude of the AMU reference plane may be defined as the series of rotations that can be performed on the axes of this plane to make it parallel to the ground plane. The term “align” could also be used to describe this operation.

- the attitude of center camera 310 (i.e., its image plane), relative to the AMU, is preferably precisely calibrated.

- the attitude of each of the other cameras, relative to center camera 310, is preferably also carefully calibrated. This dependent calibration is more efficient than directly calibrating each camera.

- When the camera array assembly 300 is remounted, only center camera 310 needs to be recalibrated. Effectively, a series of two transformations is applied to an input image from center camera 310. First, the center camera's image plane is aligned to the AMU plane. Then, the AMU plane is aligned again to the ground plane. These transformations, however, combine into a single operation by multiplying their respective transformation matrices. For images from each of the other cameras, an additional transformation is first performed to align it with the center camera's image plane.
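
Folding a chain of alignments into one matrix can be sketched with homogeneous 4 × 4 matrices. The helper names are hypothetical, and the individual matrices (for example camera-to-center, center-to-AMU, AMU-to-ground) are assumed to be supplied by calibration.

```python
def identity4():
    return [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]

def matmul4(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def compose(*transforms):
    """Fold transforms, listed in the order they are applied, into one matrix."""
    combined = identity4()
    for t in transforms:
        combined = matmul4(t, combined)  # later steps multiply on the left
    return combined

def apply(matrix, point):
    """Apply a 4x4 homogeneous transform to an (x, y, z) point."""
    col = [point[0], point[1], point[2], 1.0]
    out = [sum(matrix[i][k] * col[k] for k in range(4)) for i in range(4)]
    return tuple(v / out[3] for v in out[:3])
```

A call such as `compose(camera_to_center, center_to_amu, amu_to_ground)` would then be evaluated once per image, after which every pixel is transformed by a single matrix rather than resampled three times.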

- the position of the focal point of center camera 310 may be determined as described above.

- the x and y components of this position preferably determine the position of the mosaic's nadir point 400 on the ground.

- Field of view (FOV) angles of each camera are known, thus the dimensions of each input image may be determined by the z component of that camera's focal point.
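
For a camera pointing straight down over flat ground, the relationship between FOV, height and ground footprint is simple trigonometry. The sketch below makes those two simplifying assumptions (nadir pointing, flat terrain); the function name is illustrative.

```python
import math

def footprint_m(height_above_ground_m, fov_deg):
    """Ground extent covered along one axis by a nadir-pointing camera."""
    # Half the footprint is the height times the tangent of half the FOV.
    return 2.0 * height_above_ground_m * math.tan(math.radians(fov_deg) / 2.0)
```

The function would be called once with the along-track FOV and once with the cross-track FOV to obtain both image dimensions on the ground.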

- An average elevation of the ground is preferably determined by computing the average elevation of points in the DTMs of the area, and then each input image is projected to an imaginary horizontal plane at this elevation. Relief displacement is then preferably applied using the DTMs of the area.

- the DTMs can be obtained from many sources including: the USGS 30- or 10-meter DTMs available for most of the US; commercial DTMs; or DTMs obtained by a LIDAR or SAR EMU device mounted on the host craft that captures data concurrently with the cameras.

- the resulting compound image also needs to have radiometric consistency throughout, and no visible seams at the joints between two adjacent images.

- the present invention provides a number of techniques for achieving this goal.

- Exposure time, i.e., the time the shutter is open to collect light onto the image plane. The longer the exposure time, the lighter the resultant image becomes. Exposure time must adapt to changes in ambient lighting caused by conditions such as: cloud coverage; the angle and position of the sun relative to the camera; and so forth. Optimal exposure time may also depend on a camera's orientation with respect to lighting sources (e.g., cameras pointing towards a sunlit object typically receive more ambient light than those pointing towards a shaded object). Exposure time is adjusted to keep the average intensity of an image within a certain desired range. For example, in 24-bit color images each Red, Green and Blue component can have intensity values from 0 to 255. In most instances, however, it is desirable to keep the average intensity at a mean value (i.e., 127).

- an exposure control module controls exposure time for each of the cameras or imaging sensors. It examines each input image and calculates average image intensity. Based on a moving average (i.e., average intensity of the last X number of images), the exposure control module determines whether to increase or decrease exposure time. The module can use a longer running average to effect a slower reaction to changes in lighting conditions, with less susceptibility to unusually dark or light images (e.g., asphalt roads or water). The exposure control module controls exposure time for each camera separately.

- the exposure control module computes the average intensity of an image by selecting only green-dominant pixels. For example, if an image has 1 million pixels and 300,000 are green-dominant, only those 300,000 green-dominant pixels are included in the calculation of average intensity. This results in an imaging process that is less susceptible to biasing caused by man-made structures and water bodies, whose pixels are usually not green-dominant. As previously noted, it is desirable to maintain an intensity value of about 127. When intensity value is over 127 (i.e., over-exposed), exposure time is reduced so that less light is captured. Similarly, when intensity value is under 127 (i.e., under-exposed), exposure time is increased so that more light is captured.
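
The exposure logic described above can be sketched as a small controller. Several details are assumptions made for the example: pixel intensity is taken as the mean of R, G and B, "green-dominant" means G exceeds both R and B, and exposure is nudged by a fixed 5% step. The class and parameter names are hypothetical.

```python
from collections import deque

def green_dominant_mean(pixels):
    """Mean intensity over green-dominant (r, g, b) pixels, or None if none exist."""
    values = [(r + g + b) / 3.0 for r, g, b in pixels if g > r and g > b]
    return sum(values) / len(values) if values else None

class ExposureController:
    """Per-camera exposure control driven by a moving average of image intensity."""

    def __init__(self, exposure_s, window=5, target=127.0, step=0.05):
        self.exposure_s = exposure_s
        self.target = target
        self.step = step                      # fractional change per update (assumed)
        self.history = deque(maxlen=window)   # intensities of the last `window` images

    def update(self, pixels):
        """Record one image and return the exposure time to use next."""
        mean = green_dominant_mean(pixels)
        if mean is not None:
            self.history.append(mean)
        if self.history:
            running = sum(self.history) / len(self.history)
            if running > self.target:         # over-exposed: collect less light
                self.exposure_s *= 1.0 - self.step
            elif running < self.target:       # under-exposed: collect more light
                self.exposure_s *= 1.0 + self.step
        return self.exposure_s
```

A larger `window` makes the controller react more slowly, so a single frame dominated by dark asphalt or water changes the exposure less. One controller instance would be kept per camera.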

- the exposure control module reduces intensity differences between input images. Nonetheless, further processing is provided to enhance tonal balance.

- factors (e.g., lens physics, atmospheric conditions, spatial/positional relationships of imaging devices)

- More light is received in the center of a camera or sensor than at the edges.

- the mosaicing module of the present invention addresses this with an anti-vignetting function, illustrated now with reference to FIG. 5.

- a number of focal columns 500, 502, 504, 506 and 508 converge from image plane 509 and cross through focal point 510 as they range across imaging target area 512 (e.g., ground terrain).

- Columns 500 through 508 may comprise individual resolution columns of a single camera or sensor, or may represent the focal axes of a number of independent cameras or sensors.

- column 504 serves as the axis, and point 513, at which column 504 intersects image plane 509, serves as a principal point.

- the exposure control module applies an anti-vignetting function, multiplying the original intensity of an input pixel with a column-dependent anti-vignetting factor.

- the off-axis angle 514 is: zero for center column 504; larger for columns 502 and 506; and larger still for columns 500 and 508.

- the overall field of view angle 516 (FOVx angle) is depicted between columns 504 and 508.

- f(c1) and f(c2) are the f function values of the off-axis angles at columns c1 and c2, respectively.
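
A column-dependent factor of this kind can be sketched as follows. The text here does not give the function itself, so everything in the sketch is an assumption: the off-axis angle is derived from pinhole geometry, the compensation uses the inverse of the familiar cosine-fourth illumination falloff, and the factor for a column between two sampled columns is obtained by linear interpolation.

```python
import math

def off_axis_angle(col, principal_col, pixel_pitch, focal_length):
    """Angle between the optical axis and the ray through a given column."""
    return math.atan(abs(col - principal_col) * pixel_pitch / focal_length)

def falloff_compensation(angle):
    """Assumed gain that undoes a cos^4 illumination falloff at this angle."""
    return 1.0 / math.cos(angle) ** 4

def column_factor(c, c1, c2, f_c1, f_c2):
    """Linearly interpolate the factor for column c between columns c1 and c2."""
    return f_c1 + (f_c2 - f_c1) * (c - c1) / (c2 - c1)

def adjust_intensity(original_intensity, factor):
    """Multiply a pixel intensity by its column's anti-vignetting factor."""
    return original_intensity * factor
```

Interpolating between a handful of sampled columns avoids evaluating the trigonometric function for every column of every image.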

- Each set of input images needs to be stitched into a mosaic image. Even though the exposure control module regulates the amount of light each camera or sensor receives, the resulting input images may still differ in intensity.

- the present invention provides an intensity-balancing module that compares overlapping area between adjacent input images, to further balance the relative intensities. Because adjoining input images are taken simultaneously, the overlapping areas should, in theory, have identical intensity in both input images. However, due to various factors, the intensity values are usually not the same. Some such factors causing intensity difference could include, for example, the exposure control module being biased by unusually bright or dark objects present in the field of view of only a particular camera, or the boresight angles of cameras being different (i.e., cameras that are more slanted receive less light than those more vertical).

- a correlation vector (fR, fG, fB) is determined using, for example, the following process. Let V be a 3 × 1 vector representing the values (R, G and B) of a pixel:

- a correlation matrix C may be derived as:

- the correlation matrix scales pixel values of the secondary image so that the average intensity of the overlapping area of the secondary image becomes identical to the average intensity of the overlapping area of the reference image.

- the second image can be balanced to the reference image by multiplying its pixel values by the correlation matrix.

- a center image is considered the reference image.

- the reference image is first copied to the compound image (or mosaic). Overlapping areas between the reference image and an adjoining image (e.g., the near left image) are correlated to compute a balancing correlation matrix (BCM).

- BCM: balancing correlation matrix

- the BCM will be multiplied with vectors representing pixels of the adjoining image to make the intensity of the overlapping area identical in both images.

- This relationship may be expressed as:

- the balancing factor for each color channel (i.e., red, green and blue) is independently computed. These three values form the BCM.
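
Since each channel is balanced independently, the BCM behaves like a diagonal matrix holding three scale factors. A minimal sketch, assuming each factor is the ratio of the reference image's mean to the adjoining image's mean over the shared overlap (the function names are hypothetical):

```python
def channel_means(pixels):
    """Per-channel mean of a non-empty list of (r, g, b) pixels."""
    n = len(pixels)
    return tuple(sum(p[ch] for p in pixels) / n for ch in range(3))

def balancing_factors(reference_overlap, adjoining_overlap):
    """Factors (fR, fG, fB) that bring the adjoining overlap to the reference mean."""
    ref = channel_means(reference_overlap)
    adj = channel_means(adjoining_overlap)
    # Leave a channel unscaled if the adjoining overlap is entirely zero there.
    return tuple(r / a if a else 1.0 for r, a in zip(ref, adj))

def balance_pixel(pixel, factors):
    """Equivalent to multiplying the pixel vector by diag(fR, fG, fB)."""
    return tuple(v * f for v, f in zip(pixel, factors))
```

The factors are computed from the overlap only but are then applied to every pixel of the adjoining image before it is copied into the mosaic.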

- the now-balanced adjoining image is copied to the mosaic. Smooth transitioning at the border of the copied image is provided by “feathering” with a mask.

- This mask has the same dimension as the adjoining image and comprises a number of elements. Each element in the mask indicates the weight of the corresponding adjoining image pixel in the mosaic. The weight is zero for pixels at the boundary (i.e., the output value is taken from the reference image), and increases gradually in the direction of the adjoining image until it becomes unity, after a chosen blending width has been reached. Beyond the blending area, the mosaic will be entirely determined by the pixels of the adjoining image. Similarly, the overlaps between all the other constituent input images are analyzed and processed to compute the correlation vectors and to balance the intensities of the images.
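
The feathering mask can be sketched as a weight that ramps from zero at the boundary to one at the blending width. The text says only that the weight "increases gradually", so the linear ramp used here is an assumption, as are the function names.

```python
def feather_weight(distance, blend_width):
    """Weight of the adjoining image at a given distance past the boundary."""
    if blend_width <= 0:
        return 1.0
    # Clamp so the weight is 0 at the boundary and 1 beyond the blending width.
    return max(0.0, min(1.0, distance / blend_width))

def feather_blend(reference_value, adjoining_value, distance, blend_width):
    """Mosaic value as a weighted mix of the reference and adjoining pixels."""
    w = feather_weight(distance, blend_width)
    return (1.0 - w) * reference_value + w * adjoining_value
```

At the boundary the mosaic keeps the reference pixel, halfway through the blending width it is an even mix, and beyond the blending width it is the adjoining pixel alone.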

- FIG. 6 depicts a strip 600 being formed in accordance with the present invention.

- Let V be a vector that represents the R, G and B values of a pixel:

- V = [R G B]^T

- h: the transition width of region 608

- y: the along-track 606 distance from the boundary 610 of the overlapped region to a point A, whose pixel values are represented by V.

- C: the correlation matrix:

- the mosaic can be divided into a number of segments corresponding to the position of the original input images that make up the mosaic. The process described above is applied to each segment separately to provide better local color consistency.

- pixels at the border of two segments may create vertical seams (assuming north-south flight lines).

- balancing factors for pixels in this area have to be “transitioned” from that of one segment to the other. This is explained now with reference to FIG. 7.

- FIG. 7 depicts a strip 700 being formed in accordance with the present invention.

- a base mosaic 702 and a new segment 704 overlap in area 706.

- Mosaic 702 and another new segment 708 overlap in area 710.

- Segments 704 and 708 overlap in area 712, and areas 706, 710 and 712 all overlap and coincide at area 714.

- point 716 serves as an origin for y-axis 718 and x-axis 720. Movement along y-axis 718 represents movement along the flight path of the imaging system.

- Point 716 is located at the lower left of area 714.

- the dimensions of a strip are determined by the minimum and maximum x and y values of the constituent mosaics.

- An output strip is initialized to a background color.

- a first mosaic is transferred to the strip.

- the next mosaic (along the flight path) is processed next.

- Intensity values of the overlapping areas of the new mosaic and the first mosaic are correlated, separately for each color channel.

- the new mosaic is divided into a number of segments corresponding to the original input images that made up the mosaic.

- a mask matrix, comprising a number of mask elements, is created for the new mosaic.

- a mask element contains the correlation matrix for a corresponding pixel in the new mosaic. All elements in the mask are initialized to unity.

- the size of the mask can be limited to just the transition area of the new mosaic.

- the correlation matrix is calculated for the center segment.

- the mask area corresponding to the center segment is processed.

- the values of the elements at the edge of the overlap area are set to the correlation vector.

- gradually moving away from the first mosaic along the strip, the components of the correlation matrix are either increased or decreased (whether they are less or more than unity, respectively) until they become unity at a predetermined transition distance.
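
The gradual return of each correlation component to unity can be sketched per channel. A linear ramp over the transition distance is assumed here; the function and parameter names are illustrative.

```python
def transitioned_factor(factor_at_edge, distance, transition_distance):
    """Correlation component at a given distance from the overlap edge.

    Equals factor_at_edge at distance 0 and reaches 1.0 (no correction)
    once the transition distance has been covered.
    """
    if transition_distance <= 0 or distance >= transition_distance:
        return 1.0
    t = max(0.0, distance) / transition_distance
    # Components below unity rise toward 1.0; components above unity fall.
    return factor_at_edge + (1.0 - factor_at_edge) * t
```

Storing these values in the mask means each pixel of the new mosaic is simply multiplied by its own mask element, with pixels far from the first mosaic left unchanged.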

- the area of the mask corresponding to a segment adjoining the center segment is then processed similarly.

- the area 714 formed by the first mosaic and the center and adjoining segments of the new image requires special treatment. Because the correlation matrix for the adjoining segment may not be identical to that of the center segment, a seam may appear at the border of the two segments in the overlap area 714 with the first mosaic.

- the corner is influenced by the correlation matrices from both segments.

- its correlation matrix is the distance-weighted average of the two segments, evaluated as follows:

- a color fidelity (i.e., white-balance) filter is applied. This multiplies R and B components with a determinable factor to enhance color fidelity. The factor may be determined by calibrating the cameras and lenses.

- the color fidelity filter ensures that the colors in an image retain their fidelity, as perceived directly by the human eye. Within the image capture apparatus, the Red, Green and Blue light receiving elements may have different sensitivities to the color they are supposed to capture.

- a “white-balance” process is applied, where an image of a white object is captured. Theoretically, pixels in the image of that white object should have equivalent R, G and B values.

- the average color values for each R, G and B may be avgR, avgG and avgB, respectively.

- the R, G and B values of the pixels are multiplied by the following ratios:

- R values are multiplied by the ratio avgG/avgR;
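
The white-balance ratios can be sketched as per-channel gains derived from an image of a white object. The R gain follows the ratio stated above; treating B symmetrically (avgG/avgB) and leaving G unchanged is an assumption consistent with the filter multiplying only the R and B components. The function names are hypothetical.

```python
def white_balance_gains(white_patch):
    """Gains (gain_r, gain_g, gain_b) from (r, g, b) pixels of a white object."""
    n = len(white_patch)
    avg_r = sum(p[0] for p in white_patch) / n
    avg_g = sum(p[1] for p in white_patch) / n
    avg_b = sum(p[2] for p in white_patch) / n
    # Green is the reference channel; red and blue are scaled to match it.
    return (avg_g / avg_r, 1.0, avg_g / avg_b)

def apply_gains(pixel, gains):
    """Scale one (r, g, b) pixel by the calibrated gains."""
    return tuple(v * g for v, g in zip(pixel, gains))
```

After correction, a pixel of the white calibration target has equal R, G and B values, which is the property the calibration is meant to establish.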

- the present invention provides an intensity normalization module that normalizes the average intensity of each strip so that the mean and standard deviation are of a desired value. For example, a mean of 127 is the norm in photogrammetry. A standard deviation of 51 helps to spread the intensity value over an optimal range for visual perception of image features.

- Each strip may have been taken in different lighting conditions and, therefore, may have different imaging data profiles (i.e., mean intensity and standard deviation).

- This module normalizes the strips, such that all have the same mean and standard deviation. This enables the strips to be stitched together without visible seams.
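
Normalizing a strip to a target mean and standard deviation is a linear rescaling of its intensities. A minimal sketch, assuming the strip is given as a flat list of intensity values and that results are clipped to the 0 to 255 range (clipping slightly perturbs the statistics when many values saturate); the function name is illustrative.

```python
import statistics

def normalize_strip(values, target_mean=127.0, target_std=51.0):
    """Rescale intensities so their mean and standard deviation hit the targets."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        # A perfectly flat strip has no spread to rescale.
        return [target_mean for _ in values]
    scale = target_std / std
    return [min(255.0, max(0.0, (v - mean) * scale + target_mean))
            for v in values]
```

Applying the same targets to every strip gives them a common intensity profile before they are stitched together.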

- tiled mosaics for an area of interest.

- Finished tiles can correspond to the USGS quads or quarter-quads.

- Stitching strips into mosaics is similar to stitching mosaics together to generate strips, with strips now taking the role of the mosaics.

- problems may arise if the line crosses elevated structures such as buildings, bridges, etc. This classic problem in photogrammetry arises from the parallax caused by the same object being looked at from two different perspectives.

- one strip may present a view from one side of the building while another strip presents a view from another side of the building.

- the resulting mosaic may look like a tepee.

- a terrain-guided mosaicing process may be implemented to guide the placement of a seam line.

- LIDAR or DEM data collected with, or analyzed from, image data may be processed to determine the configuration and shaping of images as they are mosaiced together.

- a seam line may not be a straight line, instead comprising a seam line that shifts back and forth to snake through elevated structures.

- Process 800 begins with a series 802 of one, or more, raw collected images. Images 802 are then processed through a white-balancing process 804, transforming them into a series of intermediate images. Series 802 is then processed through anti-vignetting function 806 before progressing to the orthorectification process 808.

- orthorectification may rely on position and attitude data 810 from the imaging sensor system or platform, and on DTM data 812.

- DTM data 812 may be developed from position data 810 and from, for example, USGS DTM data 814 or LIDAR data 816.

- Series 802 is now orthorectified and processing continues with color balancing 818.

- series 802 is converted by mosaicing module 820 into compound image 822.

- Module 820 performs the mosaicing and feathering processes during this conversion.

- one or more compound images 822 are further combined in step 824, by mosaicing with a gradient and feathering, into image strip 826.

- Image strips are processed through intensity normalization 828.

- the now normalized strips 828 are mosaiced together in step 830, again by mosaicing with a gradient and feathering, rendering a finished tiled mosaic 832.

- the mosaicing performed in step 830 may comprise a terrain-guided mosaicing, relying on DTM data 812 or LIDAR data 816.

- FIG. 9 illustrates diagrammatically how photos taken with the camera array assembly may be aligned to make an individual frame.

- This embodiment shows a photo pattern illustration looking down from a vehicle, using data ortho-rectified from five cameras.

- FIG. 10 is a block diagram of the processing logic according to certain embodiments of the present invention.

- the processing logic accepts one or more inputs, which may include elevation measurements 1002, attitude measurements 1004 and/or photo and sensor imagery 1006.

- Certain inputs may be passed through an initial processing step prior to analysis, as is shown in block 1008, wherein the attitude measurements are combined with data from ground control points.

- Elevation measurements 1002 and attitude measurements 1004 may be combined to generate processed elevation data 1010.

- Processed elevation data 1010 may then be used to generate elevation DEM 1014 and DTM 1016.

- attitude measurements 1004 may be combined with photo and sensor imagery 1006 to generate georeferenced images 1012, which then undergo image processing 1018, which may include color balancing and gradient filtering.

- either DTM 1016 or a USGS DEM 1022 is combined with processed images 1018 to generate orthorectified imagery 1024.

- Orthorectified imagery 1024 then feeds into self-locking flightlines 1026.

- Balancing projection mosaicing 1028 then follows, to generate final photo output 1030.

- FIG. 11 is an illustration of a lateral oversampling pattern 1100 looking down from a vehicle according to certain embodiments of the present invention showing minimal lateral oversampling.

- the central nadir region 1102 assigned to the center camera overlaps only slightly with the left nadir region 1104 and right nadir region 1106, so that overlap is minimized.

- FIG. 12 is an illustration of a lateral oversampling pattern 1200 looking down from a vehicle according to certain embodiments of the present invention showing a greater degree of lateral oversampling.

- the central nadir region 1202 shows a high degree of overlap with left nadir region 1204 and right nadir region 1206.

- FIG. 13 is an illustration of a flight line oversampling pattern 1300 looking down from a vehicle according to certain embodiments of the present invention showing a certain degree of flight line oversampling but minimal lateral oversampling.

- Central nadir regions 1302 and 1304 overlap one another along the flight line, but do not overlap laterally with left nadir regions 1306 and 1308 or with right nadir regions 1310 and 1312.

- FIG. 14 is an illustration of flight line oversampling looking down from a vehicle according to certain embodiments of the present invention showing significant flight line oversampling as well as significant lateral oversampling. It can be seen that each of the central nadir regions 1402 through 1406 is significantly overlapped with one another as well as with left nadir regions 1408 through 1412 and right nadir regions 1414 through 1418. Left nadir regions 1408 through 1412 are overlapped with one another, as are right nadir regions 1414 through 1418. Accordingly, each point on the surface is sampled at least twice, and in some cases as many as four times.

- This technique uses the fact that in the area of an image that is covered twice, or more, by different camera sensors, a doubling of the image resolution is possible in both the lateral (across path) and flight line (along path) directions for an overall quadrupling of the resolution.

- FIG. 15 is an illustration of a progressive magnification pattern 1500 looking down from a vehicle according to certain embodiments of the present invention.

- Central nadir region 1502 is bounded on its left and right edges by inner left nadir region 1504 and inner right nadir region 1506, respectively.

- Inner left nadir region 1504 is bounded on its left edge by outer left nadir region 1508.

- inner right nadir region 1506 is bounded on its right edge by outer right nadir region 1510. Note that these regions exhibit a minimal degree of overlap and oversampling from one to another.

- FIG. 16 is an illustration of a progressive magnification pattern 1600 looking down from a vehicle according to certain embodiments of the present invention.

- Central nadir region 1602 is bounded on its left and right edges by inner left nadir region 1604 and inner right nadir region 1606, respectively.

- Inner left nadir region 1604 is bounded on its left edge by outer left nadir region 1608.

- inner right nadir region 1606 is bounded on its right edge by outer right nadir region 1610. Note that, as above, these regions exhibit a minimal degree of overlap and oversampling from one to another.

- Within each of the nadir regions 1604 through 1610 there is a central image region 1614 through 1620, shown shaded in grey.

- FIG. 17 is an illustration of a progressive magnification pattern 1700 looking down from a vehicle according to certain embodiments of the present invention.

- A left inner nadir region 1702 and a right inner nadir region 1704 overlap in the center.

- A left intermediate nadir region 1706 and a right intermediate nadir region 1708 are disposed partly outside of regions 1702 and 1704, respectively, each overlapping its respective adjacent region by approximately 50%.

- An outer left nadir region 1710 and an outer right nadir region 1712 are disposed partly outside of regions 1706 and 1708, respectively, each overlapping its respective adjacent region by approximately 50%.

- A central image region 1714 is disposed in the center of pattern 1700, comprised of the central portions of nadir regions 1702 through 1712.
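
The roughly 50% overlaps described for pattern 1700 can be pictured with a one-dimensional coverage count across the swath. This is only a sketch under stated assumptions: all six regions are modeled with equal width, and the two inner regions 1702 and 1704 are also assumed to overlap by 50%, whereas the source says only that they overlap in the center. The function name `coverage_profile` is hypothetical.

```python
from fractions import Fraction


def coverage_profile(left_edges, width, step):
    """Count how many regions cover each cell of size `step` across the swath."""
    counts = {}
    for left in left_edges:
        x = left
        while x < left + width:
            counts[x] = counts.get(x, 0) + 1
            x += step
    return counts


width = Fraction(1)   # assumed common region width (arbitrary units)
step = width / 2      # 50% overlap: each region starts half a width after its neighbour

# Left to right: 1710, 1706, 1702, 1704, 1708, 1712 (assumed ordering).
left_edges = [i * step for i in range(6)]
profile = coverage_profile(left_edges, width, step)

for cell in sorted(profile):
    print(float(cell), profile[cell])
```

Under these assumptions every interior cell is covered twice and only the two outermost half-widths are covered once.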

- FIG. 18 depicts a schematic of the architecture of a system 1800 according to certain embodiments of the present invention.

- System 1800 may include one or more GPS satellites 1802 and one or more SATCOM satellites 1804.

- One or more GPS location systems 1806 may also be included, operably connected to one or more modules 1808 collecting LIDAR, GPS and/or X, Y, Z location data and feeding such information to one or more data capture system applications 1812.

- One or more data capture system applications 1812 may also receive spectral data from a camera array 1822.

- A DGPS 1810 may communicate with one or more SATCOM satellites 1804 via a wireless communications link 1826.

- One or more SATCOM satellites 1804 may, in turn, communicate with one or more data capture system applications 1812.

- One or more data capture system applications 1812 may interface with an autopilot 1816, an SSD 1814 and/or a RealTime StitchG system 1820, which may also interact with one another.

- SSD 1814 may be operably connected to RealTime DEM 1818.

- RealTime DEM 1818 and RealTime StitchG 1820 may be connected to a storage device, such as disk array 1824.
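
The connections described for system 1800 can be summarized as a small directed graph. The sketch below is an illustrative reading, not a definitive model: the link directions are assumptions (the source says only that components "communicate" or "interface"), and the names `LINKS` and `downstream` are hypothetical.

```python
from collections import deque

# Assumed data-flow direction for each connection named in the text.
LINKS = {
    "GPS location system 1806": ["location module 1808"],
    "location module 1808": ["data capture application 1812"],
    "camera array 1822": ["data capture application 1812"],
    "DGPS 1810": ["SATCOM satellite 1804"],
    "SATCOM satellite 1804": ["data capture application 1812"],
    "data capture application 1812": [
        "autopilot 1816",
        "SSD 1814",
        "RealTime StitchG 1820",
    ],
    "SSD 1814": ["RealTime DEM 1818"],
    "RealTime DEM 1818": ["disk array 1824"],
    "RealTime StitchG 1820": ["disk array 1824"],
}


def downstream(start):
    """Return every component reachable from `start` (breadth-first search)."""
    seen, queue = set(), deque([start])
    while queue:
        node = queue.popleft()
        for nxt in LINKS.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


print(sorted(downstream("camera array 1822")))
```

Under this reading, both the spectral data from camera array 1822 and the location data from modules 1808 pass through the data capture system applications 1812 before reaching disk array 1824.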

- FIG. 19 is an illustration of a lateral co-mounted, co-registered oversampling configuration 1900 for a single camera array 112 looking down from a vehicle according to certain embodiments of the present invention, showing minimal lateral oversampling.

- The cameras overlap a few degrees in the vertical sidelap areas 1904 and 1908.

- Although FIG. 19 depicts a 3-camera array, these subpixel calibration techniques work equally well when utilizing any number of camera sensors, from two up to any number of cameras being calibrated.

- The camera sensors may be co-registered to calibrate the physical mount angle offset of each sensor relative to each other and/or to the nadir camera. This provides an initial, “close” calibration. These initial calibration parameters may be entered into an onboard computer system 104 in the system 100, and updated during flight using oversampling techniques.

- The rectangles labeled A, B, and C represent image areas 1902, 1906 and 1910 from a 3-camera array C-B-A (not shown). Images of areas 1902, 1906 and 1910 taken by cameras A through C (not shown), respectively, are illustrated from an overhead view. Again, similar to FIGS. 3 and 4, because of the “cross-eyed” arrangement, the image of area 1902 is taken by right camera A, the image of area 1906 is taken by center/nadir camera B, and the image of area 1910 is taken by left camera C. Cameras A through C form an array (not shown) that is, in most applications, pointed down vertically.

- The hatched areas labeled A/B and B/C sidelaps represent image overlap areas 1904 and 1908, respectively.

- The left image overlap area 1904 is where right camera A overlaps with the center/nadir camera B.

- The right image overlap area 1908 is where the left camera C overlaps with the center/nadir camera B.

- The camera sensor grid bisects each pixel in the overlap areas 1904 and 1908, which effectively quadruples the image resolution in these areas via the mechanism of co-mounted, co-registered oversampling.

- This quadrupling of alignment precision between adjacent cameras improves the alignment precision of the system 100 for all sensors affixed to a rigid mount plate.

- The cameras and sensors are affixed to a rigid mount unit, which is affixed to the rigid mount plate, as discussed above.

- As the angular alignment of adjacent cameras affixed to the rigid mount unit is improved, the angular alignment of the other sensors is also enhanced.

- This enhancement of alignment precision for the other sensors affixed to the rigid mount plate also improves the image resolution for those sensors.
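
The description of FIG. 19 mentions initial mount-angle calibration parameters that are updated during flight. The source does not give the update procedure, so the following is only a minimal sketch of one plausible approach: estimate the residual pixel shift between two cameras from the image content they share in a sidelap area, then convert that shift to an angle. The functions `best_shift` and `refine_mount_offset`, the sample profiles, the sign convention, and the per-pixel angle `ifov_deg` are all assumptions introduced for illustration.

```python
def best_shift(reference, observed, max_shift):
    """Integer shift s that minimises the mean squared difference between
    reference[i] and observed[i + s] over their common samples."""
    best, best_err = 0, float("inf")
    for s in range(-max_shift, max_shift + 1):
        pairs = [
            (reference[i], observed[i + s])
            for i in range(len(reference))
            if 0 <= i + s < len(observed)
        ]
        err = sum((a - b) ** 2 for a, b in pairs) / len(pairs)
        if err < best_err:
            best, best_err = s, err
    return best


def refine_mount_offset(initial_deg, shift_px, ifov_deg):
    """Add the angle implied by a pixel shift to the initial calibration."""
    return initial_deg + shift_px * ifov_deg


# Hypothetical intensity profiles of the same ground strip seen by two cameras.
nadir_profile = [0, 0, 1, 5, 9, 5, 1, 0, 0, 0]
side_profile = [0, 0, 0, 0, 1, 5, 9, 5, 1, 0]   # same feature, two pixels later

shift = best_shift(nadir_profile, side_profile, max_shift=3)
updated = refine_mount_offset(initial_deg=0.50, shift_px=shift, ifov_deg=0.01)
print(shift, updated)
```

A practical system would likely work on two-dimensional image patches and at subpixel precision; the one-dimensional integer search here is kept deliberately simple.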

- FIG. 20 is an illustration of a lateral co-mounted, co-registered oversampling configuration 2000 for two overlapping camera arrays 112 looking down from a vehicle according to certain embodiments of the present invention, showing maximum lateral oversampling.

- The adjacent cameras overlap a few degrees in the vertical sidelap areas 2006, 2008, 2014 and 2016, and the corresponding cameras overlap completely in the image areas 2002, 2010, 2018 and 2004, 2012, 2020.

- Although FIG. 20 depicts two 3-camera arrays, these subpixel calibration techniques work equally well when utilizing two overlapping camera arrays with any number of camera sensors, from two up to any number of cameras being calibrated.

- The camera sensors may be co-registered to calibrate the physical mount angle offset of each sensor relative to each other and/or to the nadir camera.

- Multiple, i.e., at least two, rigid mount units are affixed to a rigid mount plate and are co-registered. This provides an initial, “close” calibration. These initial calibration parameters may be entered into an onboard computer system 104 in the system 100, and updated during flight.

- The rectangles labeled A, B, and C represent image areas 2002, 2010, 2018, and 2004, 2012, 2020 from two overlapping 3-camera arrays C-B-A (not shown), respectively. Images of areas 2002, 2010, 2018, and 2004, 2012, 2020 taken by cameras A through C (not shown) and overlapping cameras A′ through C′ (not shown), respectively, are illustrated from an overhead view. Again, similar to FIGS. 3 and 4, because of the “cross-eyed” arrangement, the image of area 2002 is taken by right camera A, the image of area 2010 is taken by center/nadir camera B, and the image of area 2018 is taken by left camera C.

- The image of area 2004 is taken by right camera A′.

- The image of area 2012 is taken by center camera B′.

- The image of area 2020 is taken by left camera C′.

- Cameras A through C and overlapping cameras A′ through C′ form arrays (not shown) that are, in most applications, pointed down vertically.

- The hatched areas labeled A/B and B/C sidelaps represent two overlapping image overlap areas 2006, 2008 and 2014, 2016, respectively.

- The left image overlap areas 2006 and 2008 are where right camera A overlaps with the center/nadir camera B, and where right camera A′ overlaps with the center camera B′, respectively.

- The right image overlap areas 2014 and 2016 are where the left camera C overlaps with the center/nadir camera B, and where the left camera C′ overlaps with the center camera B′, respectively.

- The camera sensor grid bisects each pixel in the overlap areas 2006, 2008 and 2014, 2016, which effectively quadruples the image resolution in these areas via the mechanism of co-mounted, co-registered oversampling.

- The overlapping camera sensor grids bisect each pixel in the sidelap areas 2006 and 2008, which effectively quadruples the image resolution in these areas via the mechanism of co-mounted, co-registered oversampling.

- The overlapping camera sensor grids bisect each pixel in the sidelap areas 2014 and 2016, which effectively quadruples the image resolution in these areas.

- This 64 times improvement of alignment precision between adjacent and corresponding cameras enhances the alignment precision of the system 100 for all sensors affixed to a rigid mount plate.

- Cameras A through C and, optionally, other sensors are affixed to a first rigid mount unit, and cameras A′ through C′ and, optionally, other sensors are affixed to a second rigid mount unit; each mount unit is affixed to a rigid mount plate.

- As the angular alignment of adjacent and/or corresponding cameras affixed to the first and/or second rigid mount units is improved, the angular alignment of the other sensors is also enhanced.

- This enhancement of alignment precision for the other sensors affixed to the rigid mount plate also improves the image resolution for those sensors.

- The image resolution is effectively quadrupled for the entire image, not just for the A/B and B/C sidelap overlap areas.

- The overlapping grid detail labeled “OVERLAPPING GRID 4X” represents overlapping areas 2022 and 2024 in right image areas 2018 and 2020, respectively.

- The overlapping camera sensor grids bisect each pixel in the overlapping areas 2022 and 2024, which effectively quadruples the image resolution in these areas via the mechanism of co-mounted, co-registered oversampling.

- One camera array is monochrome, and another camera array is red-green-blue. Even though each array covers different color bands, simple image processing techniques are used so that all color bands realize the benefit of this increased resolution. Another advantage of these techniques arises where one camera array is red-green-blue and the other, overlapping camera array is infrared or near infrared (or some other bandwidth): the result is a superior multi-spectral image.
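
The source refers to "simple image processing techniques" for carrying the monochrome array's resolution into the color bands without naming one. As a hedged example of what such a technique could look like, the sketch below applies a ratio-based (Brovey-style) sharpening, a technique substituted here purely for illustration: each color pixel is rescaled so that its mean intensity matches the co-registered monochrome value. The function name `ratio_sharpen` is hypothetical, and the color image is assumed to have already been resampled onto the monochrome grid.

```python
def ratio_sharpen(rgb, mono, eps=1e-9):
    """Rescale each (r, g, b) pixel so its mean intensity matches `mono`.

    `rgb` is a list of rows of (r, g, b) tuples and `mono` a list of rows of
    intensities on the same grid (co-registration is assumed, not performed).
    """
    sharpened = []
    for rgb_row, mono_row in zip(rgb, mono):
        row = []
        for (r, g, b), m in zip(rgb_row, mono_row):
            intensity = (r + g + b) / 3.0
            gain = m / (intensity + eps)   # eps avoids division by zero
            row.append((r * gain, g * gain, b * gain))
        sharpened.append(row)
    return sharpened


# Hypothetical 1 x 2 image: the colour ratios are kept, the brightness
# detail comes from the monochrome band.
rgb_image = [[(30.0, 60.0, 90.0), (30.0, 60.0, 90.0)]]
mono_image = [[120.0, 30.0]]
result = ratio_sharpen(rgb_image, mono_image)
print(result)
```

The band ratios of each pixel are unchanged, so hue is preserved while the spatial detail follows the monochrome samples.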

- FIG. 21 is an illustration of a fore and lateral co-mounted, co-registered oversampling configuration 2100 for two overlapping camera arrays 112 looking down from a vehicle according to certain embodiments of the present invention, showing minimal fore and minimal lateral oversampling.

- The adjacent cameras overlap a few degrees in the vertical sidelap areas 2104, 2108, 2124 and 2128, and the corresponding cameras overlap a few degrees along the horizontal forelap areas 2112, 2116 and 2120.

- Although FIG. 21 depicts two 3-camera arrays, these subpixel calibration techniques work equally well when utilizing two overlapping camera arrays with any number of camera sensors, from two up to any number of cameras being calibrated.

- The camera sensors may be co-registered to calibrate the physical mount angle offset of each sensor relative to each other and/or to the nadir camera.

- Multiple, i.e., at least two, rigid mount units are affixed to a rigid mount plate and are co-registered. This provides an initial, “close” calibration. These initial calibration parameters may be entered into an onboard computer system 104 in the system 100, and updated during flight.

- The rectangles labeled A, B, and C represent image areas 2102, 2106 and 2110 from a 3-camera array C-B-A (not shown), and the rectangles D, E, and F represent image areas 2122, 2126 and 2130 from a 3-camera array F-E-D (not shown), respectively.

- Images of areas 2102, 2106 and 2110 taken by cameras A through C (not shown), and images of areas 2122, 2126 and 2130 taken by cameras D through F (not shown), respectively, are illustrated from an overhead view. Again, similar to FIGS.

- The rear, left image of area 2102 is taken by rear, right camera A.

- The rear, center image of area 2106 is taken by rear, center/nadir camera B.

- The rear, right image of area 2110 is taken by rear, left camera C.

- The forward, left image of area 2122 is taken by forward, right camera D.

- The forward, center image of area 2126 is taken by forward, center camera E.

- The forward, right image of area 2130 is taken by forward, left camera F.

- Cameras A through C and overlapping cameras D through F form arrays (not shown) that are, in most applications, pointed down vertically.

- The vertical hatched areas represent four image overlap areas 2104, 2108, 2124 and 2128.

- The rear, left image overlap area 2104 is where rear, right camera A overlaps with the center/nadir camera B.

- The rear, right image overlap area 2108 is where rear, left camera C overlaps with the center/nadir camera B.

- The forward, left image overlap area 2124 is where forward, right camera D overlaps with the center/nadir camera E.

- The forward, right image overlap area 2128 is where forward, left camera F overlaps with the center camera E.

- The overlapping grid detail labeled “SIDELAP AREA 4:1” represents overlapping sidelap areas 2104, 2108 and 2124, 2128.

- The camera sensor grid bisects each pixel in the overlap areas 2104, 2108, 2124 and 2128, which effectively quadruples the image resolution in these areas via the mechanism of co-mounted, co-registered oversampling.

- This quadrupling of alignment precision between adjacent cameras improves the alignment precision of the system 100 for all sensors affixed to a rigid mount plate.

- Cameras A through C and, optionally, other sensors are affixed to a first rigid mount unit, and cameras D through F and, optionally, other sensors are affixed to a second rigid mount unit; each mount unit is affixed to a rigid mount plate.
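
The sidelap and forelap areas described for configuration 2100 are the intersections of neighbouring image footprints. The sketch below computes them for axis-aligned rectangles. The footprint coordinates are invented for illustration, the helper `intersect` is hypothetical, and the pairing of forelap areas with camera pairs (A with D, B with E, C with F) is an assumption, since the source lists the forelap areas 2112, 2116 and 2120 without assigning them.

```python
def intersect(p, q):
    """Overlap of two rectangles given as (x0, y0, x1, y1), or None if disjoint."""
    x0, y0 = max(p[0], q[0]), max(p[1], q[1])
    x1, y1 = min(p[2], q[2]), min(p[3], q[3])
    return (x0, y0, x1, y1) if x0 < x1 and y0 < y1 else None


# Hypothetical ground footprints: x is across path, y is along path.
# Rear array (image areas A, B, C) and forward array (image areas D, E, F).
footprints = {
    "A": (-30.0, -12.0, -8.0, 1.0),
    "B": (-10.0, -12.0, 10.0, 1.0),
    "C": (8.0, -12.0, 30.0, 1.0),
    "D": (-30.0, -1.0, -8.0, 12.0),
    "E": (-10.0, -1.0, 10.0, 12.0),
    "F": (8.0, -1.0, 30.0, 12.0),
}

# Lateral overlaps between adjacent cameras of the same array.
sidelaps = {
    pair: intersect(footprints[pair[0]], footprints[pair[1]])
    for pair in ("AB", "BC", "DE", "EF")
}
# Fore overlaps between corresponding cameras of the two arrays (assumed pairing).
forelaps = {
    pair: intersect(footprints[pair[0]], footprints[pair[1]])
    for pair in ("AD", "BE", "CF")
}
print(sidelaps)
print(forelaps)
```

With these numbers each sidelap is a narrow vertical strip and each forelap a narrow horizontal strip, matching the "minimal fore and minimal lateral oversampling" case.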