US8164461B2 - Monitoring task performance - Google Patents

- Publication number

- US8164461B2 (application US11/788,178)

- Authority

- US

- United States

- Prior art keywords

- task

- individual

- sensors

- tasks

- performance

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Active, expires

Classifications

- G—PHYSICS
  - G08—SIGNALLING
    - G08B—SIGNALLING OR CALLING SYSTEMS; ORDER TELEGRAPHS; ALARM SYSTEMS
      - G08B25/00—Alarm systems in which the location of the alarm condition is signalled to a central station, e.g. fire or police telegraphic systems
        - G08B25/01—Alarm systems in which the location of the alarm condition is signalled to a central station, e.g. fire or police telegraphic systems characterised by the transmission medium
          - G08B25/016—Personal emergency signalling and security systems
      - G08B21/00—Alarms responsive to a single specified undesired or abnormal condition and not otherwise provided for
        - G08B21/02—Alarms for ensuring the safety of persons
          - G08B21/0202—Child monitoring systems using a transmitter-receiver system carried by the parent and the child
            - G08B21/0288—Attachment of child unit to child/article
          - G08B21/04—Alarms for ensuring the safety of persons responsive to non-activity, e.g. of elderly persons
            - G08B21/0407—Alarms for ensuring the safety of persons responsive to non-activity, e.g. of elderly persons based on behaviour analysis
              - G08B21/0423—Alarms for ensuring the safety of persons responsive to non-activity, e.g. of elderly persons based on behaviour analysis detecting deviation from an expected pattern of behaviour or schedule
            - G08B21/0438—Sensor means for detecting
              - G08B21/0453—Sensor means for detecting worn on the body to detect health condition by physiological monitoring, e.g. electrocardiogram, temperature, breathing
              - G08B21/0492—Sensor dual technology, i.e. two or more technologies collaborate to extract unsafe condition, e.g. video tracking and RFID tracking
      - G08B29/00—Checking or monitoring of signalling or alarm systems; Prevention or correction of operating errors, e.g. preventing unauthorised operation
        - G08B29/18—Prevention or correction of operating errors
          - G08B29/185—Signal analysis techniques for reducing or preventing false alarms or for enhancing the reliability of the system
  - G16—INFORMATION AND COMMUNICATION TECHNOLOGY [ICT] SPECIALLY ADAPTED FOR SPECIFIC APPLICATION FIELDS
    - G16H—HEALTHCARE INFORMATICS, i.e. INFORMATION AND COMMUNICATION TECHNOLOGY [ICT] SPECIALLY ADAPTED FOR THE HANDLING OR PROCESSING OF MEDICAL OR HEALTHCARE DATA
      - G16H40/00—ICT specially adapted for the management or administration of healthcare resources or facilities; ICT specially adapted for the management or operation of medical equipment or devices
        - G16H40/60—ICT specially adapted for the management or administration of healthcare resources or facilities; ICT specially adapted for the management or operation of medical equipment or devices for the operation of medical equipment or devices
          - G16H40/67—ICT specially adapted for the management or administration of healthcare resources or facilities; ICT specially adapted for the management or operation of medical equipment or devices for the operation of medical equipment or devices for remote operation
      - G16H50/00—ICT specially adapted for medical diagnosis, medical simulation or medical data mining; ICT specially adapted for detecting, monitoring or modelling epidemics or pandemics
        - G16H50/30—ICT specially adapted for medical diagnosis, medical simulation or medical data mining; ICT specially adapted for detecting, monitoring or modelling epidemics or pandemics for calculating health indices; for individual health risk assessment
- H—ELECTRICITY
  - H04—ELECTRIC COMMUNICATION TECHNIQUE
    - H04Q—SELECTING
      - H04Q9/00—Arrangements in telecontrol or telemetry systems for selectively calling a substation from a main station, in which substation desired apparatus is selected for applying a control signal thereto or for obtaining measured values therefrom
    - H04L—TRANSMISSION OF DIGITAL INFORMATION, e.g. TELEGRAPHIC COMMUNICATION
      - H04L67/00—Network arrangements or protocols for supporting network services or applications
        - H04L67/01—Protocols
          - H04L67/12—Protocols specially adapted for proprietary or special-purpose networking environments, e.g. medical networks, sensor networks, networks in vehicles or remote metering networks
        - H04L67/50—Network services
          - H04L67/535—Tracking the activity of the user

Definitions

- Methods, devices, and systems have been developed in various fields of technology for monitoring the movement and/or health of an individual. With respect to health monitoring, some methods, devices, and systems have been developed to aid in the diagnosis and treatment of individuals.

- In the field of remote health monitoring, for instance, systems have been developed that enable an individual to contact medical professionals from their dwelling about a medical emergency. In some such systems, a base station is equipped with an emergency call button that initiates a call or signal to an emergency call center over the user's home telephone. The idea is that an individual with a health-related problem can press the emergency call button, and emergency medical providers will respond to assist them.

- Some such systems also provide mobile devices, which generally include an emergency call button that transmits a signal to the base station in the dwelling indicating an emergency. Once the signal is received, the base station alerts a remote assistance center that can contact emergency medical personnel or a designated third party.

- Systems have also been developed that use sensors within the home to monitor an individual within a dwelling.

- These systems include, for example, motion sensors connected to a base control system that monitors areas within the dwelling for movement.

- When a lack of movement is detected, the system reports it to a remote assistance center that can contact a party to aid the individual. Such sensing systems can also monitor the health of the system itself, and of its sensors, based on individual sensor activations.
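
The lack-of-movement detection described above can be sketched as an inactivity timer that every motion-sensor activation resets. This is a minimal Python sketch, not the implementation of any particular system; the class name `InactivityMonitor`, the `threshold_s` parameter, and the choice to alert only once per quiet period are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class InactivityMonitor:
    """Hypothetical lack-of-movement check (illustrative only)."""
    threshold_s: float                  # assumed: allowed quiet period in seconds
    last_motion_s: Optional[float] = None
    alerted: bool = False

    def on_motion(self, timestamp_s: float) -> None:
        # Any motion-sensor activation restarts the quiet period.
        self.last_motion_s = timestamp_s
        self.alerted = False

    def check(self, now_s: float) -> bool:
        """Return True once per quiet period, when a lack-of-movement alert
        should be forwarded to the remote assistance center. Returns False
        if no motion has been seen yet."""
        if self.last_motion_s is None or self.alerted:
            return False
        if now_s - self.last_motion_s > self.threshold_s:
            self.alerted = True
            return True
        return False
```

With a four-hour threshold, `check` stays False until more than four hours pass without motion, fires once, and stays quiet until motion is seen again.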

- Some systems can be used to diagnose and/or improve brain functionality.

- In such systems, the individual uses a computer program that presents a number of exercises on the display of a computing device.

- The individual selects one of a number of choices presented on the display, and executable instructions within the computing device determine whether the answer is correct.

- Such systems can aid in recovering memory lost due to a traumatic brain injury, or in relearning such information, among other uses.

- Some systems also include functionality that aids the individual in their daily routine. For example, some systems provide a scheduling function that issues reminders to an individual who may have reduced brain functionality.

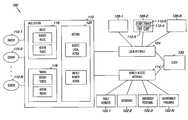

- FIG. 1 illustrates a monitoring system embodiment.

- FIG. 2 illustrates an embodiment of base station data flow.

- FIG. 3 illustrates an embodiment of a task performance monitoring system.

- FIG. 4 illustrates an embodiment of a task performance planning, execution, and validation framework for use with various embodiments of the present disclosure.

- FIG. 5 illustrates a task prompting routine for use with various embodiments of the present disclosure.

- Embodiments of the present disclosure can provide simple, cost-effective, privacy-respecting, and/or relatively non-intrusive methods, devices, and/or systems for monitoring task performance.

- Embodiments of the present disclosure can, for example, be used with, and can include, systems and devices as described in U.S. application Ser. No. 11/323,077, filed Dec. 20, 2005.

- The present disclosure provides detail on task performance concepts that can be used with the systems discussed in the above-referenced application, the present application, and/or other systems for monitoring one or more individuals.

- Embodiments can include systems, methods, and devices to monitor the activity of an individual within or around a dwelling.

- A “dwelling” can, for example, be a house, condominium, townhouse, apartment, or institution (e.g., hospital, assisted living facility, nursing home, prison, etc.).

- Embodiments can, for example, monitor the task performance of an individual within or around a dwelling.

- For example, an embodiment can use a fixed or mobile device to aid an individual in performing a kitchen function, such as making lunch or opening a drawer, among other functions.

- Various embodiments can be designed so that, when an individual successfully completes a task, information about a number of task performance factors is evaluated to determine whether the difficulty of that task (when repeated) and/or of the next task should be adjusted, either to better fit the individual or to challenge the individual being monitored.

- Various embodiments can include systems, methods, or devices that use a fixed or mobile device to monitor the activity of an individual within and/or outside a dwelling, such as monitoring the individual's task performance.

- For example, a mobile device can be used to aid an individual in running errands, among other functions.

- Various embodiments can provide automated detection of changes in activity within a dwelling and automated initiation of alerts asking third parties to check on and/or assist the individual where assistance is needed, thereby avoiding long delays before assistance is provided.

- Some embodiments can use multiple sensors, multiple timers, and/or multiple rules to determine whether to initiate an action, thereby increasing the certainty that the action is necessary and should be initiated.
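
One way to use multiple sensors, timers, and rules to increase certainty is to require corroboration from several distinct sources before initiating an action. The Python sketch below assumes a simple k-of-n rule over a sliding time window; the function `corroborated`, its parameters, and the source names used with it are hypothetical.

```python
from typing import Iterable, Tuple


def corroborated(events: Iterable[Tuple[str, float]], now_s: float,
                 window_s: float, min_sources: int) -> bool:
    """Return True when at least `min_sources` distinct sources (sensor IDs,
    expired timers, or fired rules) reported the alert condition within the
    last `window_s` seconds. Repeated reports from one source count once."""
    recent = {source for source, t in events if 0 <= now_s - t <= window_s}
    return len(recent) >= min_sources
```

Counting distinct sources, rather than raw reports, keeps a single chattering sensor from triggering an action on its own.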

- Various embodiments can also use multiple sensors, multiple timers, and/or multiple rules to make statistical correlations among a number of sensors, thereby increasing the certainty that the system is in satisfactory health.
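
A statistical correlation between sensors can also serve as a health check: if two sensors that normally fire together (say, a bathroom door sensor and a bathroom motion sensor) stop co-occurring, one of them may have failed. The Python sketch below illustrates that idea with a simple co-activation ratio; the statistic, the 0.5 drop factor, and all names are assumptions rather than the disclosed method.

```python
from typing import Sequence


def co_activation_ratio(a_times: Sequence[float], b_times: Sequence[float],
                        window_s: float) -> float:
    """Fraction of sensor-A activations followed by a sensor-B activation
    within `window_s` seconds (0.0 if sensor A never fired)."""
    if not a_times:
        return 0.0
    hits = sum(1 for ta in a_times
               if any(0 <= tb - ta <= window_s for tb in b_times))
    return hits / len(a_times)


def suspect_fault(baseline: float, recent: float, drop: float = 0.5) -> bool:
    """Flag sensor B when its recent co-activation ratio with a normally
    correlated sensor A falls below `drop` times the historical baseline."""
    return baseline > 0 and recent < drop * baseline
```

In practice the baseline would be computed over a long history and the recent ratio over, for example, the last few days.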

- The logic component can be rules-based and can initiate a timer that establishes a time period for making the determination.

- The system can then monitor sensors within the dwelling to determine whether a particular task has been completed within that period.

- The system can include memory to store such information, or can send the information to a remote server (e.g., at a remote monitoring site).
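
The timer-based determination can be sketched as a per-task record that is marked complete only if the expected sensor fires within the established time period. In this minimal Python sketch, the `TaskTimer` class, the use of a single completion sensor, and the `pending`/`completed`/`overdue` states are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TaskTimer:
    """Tracks one task against the time period set by the logic component."""
    task: str
    completion_sensor: str          # hypothetical: sensor whose activation counts as completion
    started_s: float
    period_s: float
    completed_s: Optional[float] = None

    def on_sensor(self, sensor_id: str, timestamp_s: float) -> None:
        # Only an activation of the completion sensor inside the period counts.
        in_period = self.started_s <= timestamp_s <= self.started_s + self.period_s
        if self.completed_s is None and sensor_id == self.completion_sensor and in_period:
            self.completed_s = timestamp_s

    def status(self, now_s: float) -> str:
        if self.completed_s is not None:
            return "completed"
        if now_s > self.started_s + self.period_s:
            return "overdue"        # a rule could now trigger a reminder or an alert
        return "pending"
```

The completion time is kept so it can be stored locally or sent to a remote server along with the task name.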

- A system embodiment can, for example, include a number of sensors for monitoring an individual performing a number of tasks from a list of tasks to be completed.

- The tasks can be large items, such as a list of errands to be accomplished in a given day, and/or small items, such as the steps for taking a bath.

- The level of detail of the tasks provided to the individual can be determined in a number of ways. For example, in some embodiments, it can be determined by the manufacturer, a system installer, a system administrator, a care provider, and/or the individual being monitored.

- In some embodiments, the system (e.g., through executable instructions executed by a logic component) can set the level of detail of the tasks presented to the individual.

- The level of detail can be adjustable based on one or more factors including, but not limited to, the length of time the individual has been monitored, the number of times a particular task has been performed, the success rate of task performance, and/or the percentage of correct versus incorrect steps in performing a task.

- Embodiments can be designed so that the number of tasks or steps is increased and/or decreased based on such factors.
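
Adjusting the level of detail from factors such as success rate and the percentage of correct steps might look like the following Python sketch. The detail scale (0 = coarsest, fewest steps), the thresholds, and the minimum number of attempts are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class TaskHistory:
    attempts: int        # times the task has been performed
    successes: int       # times it was completed successfully
    correct_steps: int   # steps performed correctly, across all attempts
    total_steps: int     # steps attempted, across all attempts


def next_detail_level(level: int, h: TaskHistory, min_level: int = 0,
                      max_level: int = 3, min_attempts: int = 5) -> int:
    """Return the detail level for the next occasion (assumed thresholds)."""
    if h.attempts < min_attempts or h.total_steps == 0:
        return level                          # not enough history yet
    success_rate = h.successes / h.attempts
    step_accuracy = h.correct_steps / h.total_steps
    if success_rate >= 0.9 and step_accuracy >= 0.9:
        return max(min_level, level - 1)      # doing well: coarser steps, more challenge
    if success_rate < 0.6 or step_accuracy < 0.7:
        return min(max_level, level + 1)      # struggling: break the task into finer steps
    return level
```

Leaving a middle band where the level is unchanged avoids oscillating between levels from one occasion to the next.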

- A task can include an associated number of steps to be completed by the individual; in some embodiments, each task includes multiple steps.

- The performance of a task from the list can be monitored using at least one of the sensors. This can be accomplished, for example, by waiting for a sensor response indicating that the task has been performed (such as the individual pressing a button when done), or by inferring that the task has been accomplished from the feedback provided by the sensors.

- For example, for a tooth-brushing task, the system can be designed with sensors that detect the opening of a drawer containing the toothpaste and toothbrush, the water being turned on and off, and the drawer being closed.

- From these sensor activations, the system can infer that the user opened the drawer, removed the toothpaste and toothbrush, ran the water to rinse, and returned the toothpaste and brush to the drawer.
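
The tooth-brushing inference can be expressed as matching an ordered pattern of sensor activations within a stream of time-stamped events. This Python sketch assumes hypothetical sensor names and a maximum task duration; a real system could use richer matching.

```python
from typing import Sequence, Tuple

# Hypothetical sensor names for the tooth-brushing example above.
BRUSH_TEETH = ("bathroom_drawer_open", "bathroom_water_on",
               "bathroom_water_off", "bathroom_drawer_close")


def infer_task_done(events: Sequence[Tuple[float, str]], pattern: Sequence[str],
                    max_duration_s: float) -> bool:
    """Return True if `pattern` appears, in order, within the time-sorted
    `events` and spans at most `max_duration_s` seconds. Unrelated
    activations in between (e.g. motion in another room) are ignored."""
    for start, (t0, name) in enumerate(events):
        if name != pattern[0]:
            continue
        matched = 1
        for t, sensor in events[start + 1:]:
            if matched == len(pattern) or t - t0 > max_duration_s:
                break
            if sensor == pattern[matched]:
                matched += 1
        if matched == len(pattern):
            return True
    return False
```

Each occurrence of the first sensor is tried as a possible start, so an earlier aborted attempt does not prevent a later complete one from being recognized.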

- Routines can be pre-selected, provided as executable instructions in the system, and displayed for the individual to follow, and/or can be designed around the individual's own routine and entered before, at, or after system installation.

- The individual can be provided with a number of step instruction prompts associated with the steps of the task.

- These prompts can be short, giving a brief instruction such as “open drawer,” or can carry more information, such as “You are now going to brush your teeth. Take your brush and paste out of the drawer, add paste to brush, place brush in mouth and start brushing motion, rinse mouth with water, and place brush and paste back in drawer.”

- In various embodiments, the level of detail of the prompts can be determined and provided before, at, and/or after system installation.

- These prompts can be provided in various formats, such as text, image, video, and/or audio.

- The system can obtain task performance information corresponding to the individual's performance of the task.

- Such information can be obtained via the sensors, either directly or by inference, as discussed above.

- In various embodiments, task performance information can include step prompt information (including the number of step instruction prompts provided during performance of the task) and sensor data from at least one of the sensors; the list of tasks to be completed can then be adjusted based on this information.

- Announcements can also be provided to the individual. These can carry any suitable information; for example, in various embodiments, an announcement can be provided upon accomplishment of a step or task.

- Adjusting the list of tasks can include changing the number of tasks on the list to be completed. Adjusting the list can additionally, or alternatively, include changing the number of steps associated with one or more particular tasks.

- An embodiment can include prompting the individual to perform a second task from the list when the first task has been completed.

- Prompting the individual can be accomplished via a mobile device.

- Providing the individual with step instruction prompts can include providing a reminder when a step of the first task remains uncompleted, which can encourage the individual to finish an unfinished task.

- Some embodiments can include scheduling the tasks to be completed by the individual. For example, the tasks can be provided in sequential order, among other ordering formats.
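
Sequential scheduling, prompting the next task once the current one is done, and reminding the individual about an uncompleted step can be combined in a small task-list structure. The Python sketch below is illustrative only; the `Task` and `TaskList` classes and the prompt strings are assumptions.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class Task:
    name: str
    steps: List[str]
    done_steps: Set[str] = field(default_factory=set)

    def remaining(self) -> List[str]:
        return [s for s in self.steps if s not in self.done_steps]


@dataclass
class TaskList:
    tasks: List[Task]        # tasks in the scheduled (sequential) order
    current: int = 0

    def current_task(self) -> Optional[Task]:
        return self.tasks[self.current] if self.current < len(self.tasks) else None

    def complete_step(self, step: str) -> Optional[str]:
        """Mark a step done; once the current task is finished, advance and
        return the prompt for the next task. Otherwise return None."""
        task = self.current_task()
        if task is None:
            return None
        task.done_steps.add(step)
        if not task.remaining():
            self.current += 1
            nxt = self.current_task()
            return f"Next task: {nxt.name}" if nxt else "All tasks completed"
        return None

    def reminder(self) -> Optional[str]:
        """Reminder for the first uncompleted step of the current task."""
        task = self.current_task()
        if task is None or not task.remaining():
            return None
        return f"Reminder: {task.name} - {task.remaining()[0]}"
```

The prompt strings would be delivered through whatever interface is in use, such as a mobile device.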

- A method can include providing a number of sensors for monitoring the individual performing a number of tasks from a list of tasks to be completed.

- Each of the tasks can include, for example, an associated number of steps to be completed by the individual. The method can further include initiating a first task based on one or more context items, monitoring the performance of the first task using at least one of the sensors, and/or providing a task completion indication upon determining that the first task has been completed.

- Initiating the first task based on one or more context items can include initiating it based on items such as the time of day, the day of the week, a list of completed tasks, a list of uncompleted tasks, the completion status of a particular task, and/or a determined location of the individual, among other items.

- Initiating the first task based on one or more context items can also include localizing the individual within a residence using at least one fixed sensor located in the residence. For example, motion sensors can be used to locate an individual.
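
Initiating a task from context items can be sketched as evaluating per-task trigger conditions against the current time, day, location, and completed tasks. In this Python sketch the `Trigger` fields and the first-match policy are assumptions; the context items themselves follow the list above.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import FrozenSet, List, Optional, Set


@dataclass(frozen=True)
class Trigger:
    task: str
    start_hour: int                  # earliest hour of day (0-23), inclusive
    end_hour: int                    # latest hour of day, exclusive
    weekdays: FrozenSet[int]         # datetime.weekday() values, Monday == 0
    location: Optional[str] = None   # room the individual must be in, if any
    after: Optional[str] = None      # task that must already be completed, if any


def task_to_initiate(triggers: List[Trigger], now: datetime, location: str,
                     completed: Set[str]) -> Optional[str]:
    """Return the first uncompleted task whose context conditions all hold."""
    for trig in triggers:
        if trig.task in completed:
            continue
        if not (trig.start_hour <= now.hour < trig.end_hour):
            continue
        if now.weekday() not in trig.weekdays:
            continue
        if trig.location is not None and trig.location != location:
            continue
        if trig.after is not None and trig.after not in completed:
            continue
        return trig.task
    return None
```

For example, a weekday "make lunch" trigger tied to the kitchen fires only after "brush teeth" has been completed.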

- An individual can be located based on the activation of one or more sensors. For example, activation of a number of sensors in the kitchen can indicate that the individual is in the kitchen, and the certainty can be increased by evaluating a combination of fixed and wearable sensors to verify whether the individual is in the kitchen.

- The combination of fixed and wearable sensors can be used to improve the accuracy of location detection and to distinguish between multiple individuals in the dwelling.
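
Combining fixed and wearable sensors for localization might look like this: fixed sensors report room activity without saying who caused it, while each person's wearable is heard most strongly by the receiver in the room they occupy. The Python sketch assumes per-room receivers reporting signal strength in dBm and a recent-activity window; both, and the function `locate`, are illustrative assumptions.

```python
from collections import Counter
from typing import Dict, List, Optional, Tuple


def locate(fixed_events: List[Tuple[float, str]], now_s: float, window_s: float,
           wearable_rssi: Dict[str, float]) -> Tuple[Optional[str], bool]:
    """Estimate one person's room.

    fixed_events: (timestamp, room) activations of fixed sensors (not person-specific).
    wearable_rssi: this person's wearable signal strength (dBm) as heard by a
        receiver in each room; a higher (less negative) value means closer.
    Returns (room, corroborated): corroborated is True when fixed sensors also
    saw recent activity in the room chosen from the wearable.
    """
    recent = Counter(room for t, room in fixed_events if 0 <= now_s - t <= window_s)
    if wearable_rssi:
        room = max(wearable_rssi, key=lambda r: wearable_rssi[r])
        return room, recent[room] > 0
    if recent:
        # No wearable data: fall back to the most active room, uncorroborated.
        return recent.most_common(1)[0][0], False
    return None, False
```

When two people are home, kitchen activity is only attributed to the person whose wearable is strongest in the kitchen; the other person's estimate remains uncorroborated.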

- Some embodiments can include monitoring the performance of the first task using at least one of a number of fixed sensors and at least one portable sensor worn by the individual. The portable sensor can also be used to determine whether the first task is being completed.

- In some embodiments, initiating the first task can include prompting the individual on an interface of a mobile device.

- The first task can be initiated based on integrated sensor data provided by at least one fixed sensor and at least one portable sensor.

- Some embodiments can include initiating a second task having an associated number of steps to be completed, based on one or more context items as discussed herein.

- A method can include monitoring the performance of the first task using integrated sensor data provided by at least one fixed sensor and at least one portable sensor. For example, the individual's position can be ascertained from the location of the portable sensor, while the fixed sensor indicates what the individual is doing (e.g., opening a drawer).

- A system embodiment for monitoring task performance can include a number of fixed sensors located throughout the individual's residence.

- A number of portable sensors can be worn or carried by the individual.

- A computing device can be used to communicate with the fixed sensors and the portable sensors.

- Computing devices can include memory having instructions stored thereon that are executable by a processor or other logic component to perform a method.

- An example method can include monitoring, using one or more sensors, the performance of a first task from a list of tasks to be completed. Each of the tasks can include an associated number of steps to be completed by the individual.

- The individual can be provided with a number of step instruction prompts corresponding to the steps of the first task.

- Task performance information corresponding to the individual's performance of the first task can be obtained.

- This task performance information can include, for example, step prompt information (including the number of step instruction prompts provided during performance of the first task) and/or sensor data from at least one of the sensors indicating, based on that data, that a particular step has been completed.

- The number of step instruction prompts provided to the individual when performing the first task on a subsequent occasion can then be adjusted. This can help to keep challenging the individual and to tailor the system to the individual's learning speed and/or appropriate level of granularity.

- The system can be designed to indicate whether a step or task has been completed. This can be done in a variety of ways, and the indication can be presented to the individual or be transparent to the individual. For example, a completed step can be indicated by placing a flag in a data file and/or by an announcement to the individual.

- The number of step instruction prompts can be adjusted in a number of ways. For example, the adjustment for a subsequent occasion can be made via a user interface (e.g., one used by a health professional, system administrator, etc.) and/or via an interface usable by the individual being monitored. The adjustment can increase or reduce the number of step instruction prompts provided to the individual when performing the first task on the subsequent occasion.

- A system can include instructions to analyze the task performance information and to initiate adjusting the number of step instruction prompts provided to the individual when performing the first task on a subsequent occasion.
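
Adjusting the number of step instruction prompts for a subsequent occasion can be sketched as fading the prompt for each step once it has been completed correctly several times in a row, and restoring it after an error. The `PromptPlan` class and the `fade_after` policy are hypothetical; the per-step correctness flags stand in for the sensor-based step completion flags described above.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class PromptPlan:
    steps: List[str]
    fade_after: int = 3   # assumed: consecutive correct occasions before a prompt is dropped
    streak: Dict[str, int] = field(default_factory=dict)

    def record_occasion(self, correct: Dict[str, bool]) -> None:
        """Update per-step streaks from one performance of the task
        (step -> True if it was completed correctly on that occasion)."""
        for step in self.steps:
            if correct.get(step, False):
                self.streak[step] = self.streak.get(step, 0) + 1
            else:
                self.streak[step] = 0   # an error restores the prompt

    def prompts_for_next_occasion(self) -> List[str]:
        """Steps that still receive an instruction prompt next time."""
        return [s for s in self.steps if self.streak.get(s, 0) < self.fade_after]
```

The same plan object could also be edited directly through a caregiver or administrator interface, for example by resetting a step's streak.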

- A system can include one or more instructions to schedule the tasks to be completed by the individual.

- Such systems can include a guidance module for providing guidance information for accomplishing at least one step.

- System embodiments can include a tracking module for tracking completion of the steps associated with at least one task.

- In some embodiments, the system can include an emotion sensing module.

- Such a module can be helpful, for example, in determining whether the individual is getting frustrated with a particular situation and needs assistance or a prompt.

- The system can include a solution module to provide information that may help the individual solve a particular problem they encounter.

- The solution module can, for example, include instructions executable to help the individual locate a particular item by providing a number of possible locations of the item.

- The possible locations can, for example, be based on data from at least one of the fixed sensors and/or on data from a database, among other data sources.
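
The solution module's list of possible item locations could be ranked by combining a database of usual storage places with the most recent activations of fixed sensors on those places. This Python sketch assumes hypothetical data shapes for both sources and a "most recently opened first" ranking.

```python
from typing import Dict, List


def likely_locations(item: str, item_db: Dict[str, List[str]],
                     last_opened: Dict[str, float]) -> List[str]:
    """Return candidate locations for `item`, most recently opened first.

    item_db: item -> places it is usually kept (hypothetical database).
    last_opened: place -> timestamp of the last activation of the fixed sensor
        on that place (e.g. a drawer sensor). Places without sensor data sort
        last, in their database order.
    """
    candidates = item_db.get(item, [])
    # sorted() is stable even with reverse=True, so ties keep database order.
    return sorted(candidates,
                  key=lambda place: last_opened.get(place, float("-inf")),
                  reverse=True)
```
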

- FIG. 1 illustrates a monitoring system embodiment.

- The figures herein follow a numbering convention in which the first digit or digits correspond to the drawing figure number and the remaining digits identify an element or component in the drawing. Similar elements or components in different figures may be identified by the use of similar digits.

- For example, 110 may reference element “10” in FIG. 1, and a similar element may be referenced as 210 in FIG. 2.

- Elements shown in the various embodiments herein can be added, exchanged, and/or eliminated so as to provide a number of additional embodiments.

- In FIG. 1, the system 100 uses the base station 110 to monitor the activities of a client (e.g., an individual) in and/or around a dwelling through a number of sensors 112-1, 112-2, and 112-N.

- The sensors can be fixed or portable sensors.

- Monitoring the performance of a particular task can include using at least one portable sensor to determine whether the task is being completed. These determinations can be based on responses to a prompt made by the system, or can be inferred from the individual's daily activities as sensed by one or more sensors.

- The system can prompt an individual for a specific response and/or can determine whether a task has been accomplished based on the individual's activity. For instance, a system can be designed to ask the individual whether he has brushed his teeth, and the individual can respond with a response that indicates yes (e.g., closing the drawer holding the toothbrush, pressing a button on a mobile or fixed device, or an audible response identified by an audio sensor).

- The base station 110 can also initiate a number of actions based on a number of rules that it implements. These rules use the information obtained from the sensors 112-1 through 112-N to determine whether or not to initiate an action. For example, a rule may be that if a yes response is received, the system goes to the next task on a task list.

- The base station 110 includes a number of components providing a number of functions, as discussed herein. In the embodiment of FIG. 1, the base station 110 is illustrated with respect to its various functionalities. For example, the base station 110 can use rules 116 and/or timers 118 to determine whether to initiate an action 120.
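
The rules 116 that drive actions 120 can be sketched as an ordered list of condition/action pairs evaluated against each incoming event, including the example rule above (a yes response advances to the next task). The event format, rule names, action labels, and first-match ordering below are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Event = Dict[str, str]   # assumed event format, e.g. {"response": "yes"}


@dataclass
class Rule:
    name: str
    condition: Callable[[Event], bool]
    action: str          # label of the action to initiate


def evaluate(rules: List[Rule], event: Event) -> Optional[str]:
    """Return the action of the first rule whose condition matches `event`,
    or None if no rule applies."""
    for rule in rules:
        if rule.condition(event):
            return rule.action
    return None


# Hypothetical rule set, ordered by priority.
RULES = [
    Rule("emergency", lambda e: e.get("sensor") == "call_button", "notify_third_party"),
    Rule("yes_response", lambda e: e.get("response") == "yes", "next_task"),
    Rule("timer_expired", lambda e: e.get("timer") == "expired", "send_reminder"),
]
```

Putting the emergency rule first means an emergency button press takes precedence even when it arrives together with a routine response.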

- The base station 110 includes executable instructions to receive signals from sensors 112-1 through 112-N that are generated by activation of those sensors.

- Embodiments of the disclosure can include various types of sensing devices, including on/off sensors and/or sensors whose signal strength scales with the magnitude of the sensed parameter, such as temperature, weight, or touch.

- The sensors can be of many different types.

- Types of sensors can include, but are not limited to: sensors to indicate the opening and closing of a door and/or drawer; sensors to indicate the movement of objects such as shades and/or blinds; current and/or voltage sensors to monitor appliances, lights, wells, etc.; pressure or fluid flow sensors to indicate the turning on and off of water; temperature sensors to indicate whether the furnace is on or off; force sensors, such as strain gauge sensors, to sense an individual walking over a pad, sitting in a chair, or lying in bed; motion sensors to sense the motion of objects within the dwelling; alert switches/buttons to signal an emergency or client input such as a cancellation request; and sensors to measure the signal strength between multiple sensors.

- Sensors may also include those carried or worn by the individual, such as vibration, temperature, audio, touch, humidity, electrocardiogram (ECG), electroencephalogram (EEG), and/or resistance (e.g., galvanic skin response) sensors, among other well-known sensing devices.

- A sensor can also be a button on the base station 110 and/or mobile device 126-2 that senses when someone actuates it.

- Sensors can be analog and/or digital and can include logic circuitry and/or executable instructions to transmit signal output to the base station 110.

- The base station 110 can use a remote assistance center device (indicated as Remote Access Interface) 114 to inform a third party 122-1 through 122-N that an alert condition exists and that aid may be needed. Aid can be a call to the individual 130, a visit by a third party 122-1 through 122-N to the location of the individual 130, or other such aid.

- Third parties 122-1 through 122-N can include hospital staff, emergency medical technicians, system technicians, doctors, neighbors, family members, friends of the individual 130, police, the fire department, and/or emergency 911 operators.

- The base station 110 and the remote assistance center device 114 can each be any type of computing device capable of receiving alert requests and initiating such requests.

- Suitable devices can include personal computers, mainframe computers, system servers, devices having computer components therein, and other such devices.

- The remote assistance center device 114 and a local interface 124 are accessible by an individual 130 (e.g., a client).

- Communication between the devices 110, 112-1 through 112-N, and 124 can be accomplished in various ways.

- The communications can be wired (e.g., telephone lines) and/or wireless (e.g., radio interface).

- The functionality of these devices can be provided in fewer or more devices than shown.

- System devices 126-1 through 126-N can also communicate with the base station 110 through the local interface 124.

- A system device can be in the form of a mobile device 126-1.

- In some embodiments, the mobile device 126-1 can provide access to and/or control of at least some of the functions of the base station 110 described herein. Embodiments of the mobile device 126-1 are discussed in greater detail herein.

- A logic component can be used to control the functions of the base station 110.

- The logic component can include executable instructions for functions such as handling information received from the sensors in the system and time-stamping received information (e.g., sensor activations and/or system health data), among others.

- The logic component can include RAM and/or ROM, a clock, an input/output, and a processor, among other things.

- a mobile devicecan be used with the base station 110 .

- the mobile devicecan be carried or worn by the individual 130 , as discussed herein.

- Mobile devices 126 - 2can be any type of device that is portable and that can provide the described functionalities.

- Examplescan include basic devices that have the capability to provide such functionalities, up to complex devices, having multiple functions.

- Examples of complex mobile devices 126 - 2can include mobile telephones and portable computing devices, such as personal digital assistants (PDAs), and the like.

- the mobile device can have home/away functionality to indicate whether the individual 130 is within a certain distance of the base station 110 of the system, for instance, through use of a sensor (e.g., sensor 112-5).

- a transceiver, transmitter, and/or receiver can be used to transmit signals to and/or receive signals from the base station 110 within the dwelling.

- a transmitter and a transceiver can be used interchangeably if a transmission functionality is desired. Additionally, a receiver and a transceiver can be used interchangeably if a reception functionality is desired.

- Short-range communication types for the sensors can include IEEE 802.15.4 and/or IEEE 802.11 protocols, among others.

- the mobile device 126-2 can communicate with the base station 110 using short-range communication signals.

- the mobile device 126-2 can use a short-range communication signal, and the local interface 124 can be incorporated into the base station 110.

- the mobile device 126-2 can utilize a long-range communication signal to communicate with the base station 110.

- the local interface 124 can be a mobile device, such as a mobile telephone, that can send the instructions from the mobile device 126-2 to the base station 110.

- the mobile device 126-2 can be separate from, associated with, or included in the mobile telephone.

- the mobile device can include executable instructions to enable the mobile device 126-2 to communicate with the mobile telephone in order to instruct the mobile telephone how to forward its base station message to the base station 110.

- the base station 110 can include executable instructions to enable a short-range communication signal to be translated into a long-range wireless signal.
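The routing options described above (a direct short-range link to the base station, or a relay through a mobile telephone acting as the local interface over a long-range link) can be sketched as a small decision function. This is a minimal illustration, not the patent's implementation: the function name, the hop labels, and the assumption that the mobile device reaches the telephone over a short-range link are all hypothetical.

```python
from typing import List, Tuple

# Each hop is (link type, destination). Labels are illustrative only.
Hop = Tuple[str, str]


def route_to_base_station(in_short_range: bool, phone_available: bool) -> List[Hop]:
    """Pick a path for a message from mobile device 126-2 to base station 110.

    Hypothetical policy: prefer a direct short-range link; otherwise relay through
    a mobile telephone acting as local interface 124 over a long-range link.
    """
    if in_short_range:
        return [("short-range", "base station 110")]
    if phone_available:
        # Assumption: the mobile device reaches the telephone over a short-range link.
        return [
            ("short-range", "mobile telephone (local interface 124)"),
            ("long-range", "base station 110"),
        ]
    raise ConnectionError("no path to base station 110")


print(route_to_base_station(in_short_range=False, phone_available=True))
```

Raising an error when neither path exists keeps the sketch honest about the failure case; a real device might instead queue the message until the next check-in.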

- the sensors 112-1 through 112-N can include a task sensor, where the task sensor is associated with a task assigned to the individual 130.

- for example, the individual 130 is assigned the task of retrieving a beverage.

- the task sensor would be the sensor that is activated when the refrigerator door is opened.

- the logic component can thus be designed to couple the task to the task sensor and to initiate a task-complete action when the task sensor is activated.

- the task-complete action can be to send a signal to the remote assistance center device that the task was completed successfully.

- one or more sensors can be used to identify when a task is complete and/or in progress. Such embodiments can accomplish this, for example, by monitoring the actuation of one or more sensors, the time between sensor activations, or other suitable indicators.
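The coupling of a task to its task sensor, with a task-complete action fired when that sensor activates, can be sketched as follows. This is a minimal illustration under stated assumptions: the `TaskMonitor` class, the sensor identifiers, and the use of a callback to stand in for the signal to the remote assistance center device are hypothetical, not taken from the patent.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class TaskMonitor:
    """Hypothetical logic component that couples tasks to task sensors."""
    task_sensors: Dict[str, str]          # task name -> id of its task sensor (illustrative ids)
    on_complete: Callable[[str], None]    # the task-complete action, e.g. notify a remote center
    pending: List[str] = field(default_factory=list)

    def assign(self, task: str) -> None:
        if task not in self.task_sensors:
            raise KeyError(f"no task sensor coupled to {task!r}")
        self.pending.append(task)

    def sensor_activated(self, sensor_id: str) -> None:
        # Complete every pending task whose coupled task sensor just fired.
        done = [t for t in self.pending if self.task_sensors[t] == sensor_id]
        for task in done:
            self.pending.remove(task)
            self.on_complete(task)


completed: List[str] = []
monitor = TaskMonitor({"retrieve beverage": "refrigerator_door"}, completed.append)
monitor.assign("retrieve beverage")
monitor.sensor_activated("hallway_motion")     # unrelated sensor: no action
monitor.sensor_activated("refrigerator_door")  # task sensor: task-complete action fires
print(completed)  # ['retrieve beverage']
```

Passing the task-complete action in as a callable keeps the coupling logic independent of how completion is reported.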

- the home/away sensor 112-5 and/or mobile device 126-2 can be equipped with an identification tag.

- the logic component can be designed to initiate a task-complete action when the task sensor is activated and when the signal strength between the task sensor and the home/away sensor 112-5 bearing the correct identification tag is greater than the signal strength between the task sensor and a home/away sensor 112-5 bearing a different identification tag.
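The signal-strength comparison described above can be sketched as a predicate. This is a minimal illustration, assuming that signal strength is reported per identification tag in dBm (higher is stronger) and that, with several other tags present, the assigned tag must be stronger than every one of them. The function and tag names are hypothetical.

```python
from typing import Mapping


def should_complete_task(task_sensor_fired: bool,
                         rssi_by_tag: Mapping[str, float],
                         assigned_tag: str) -> bool:
    """Decide whether to initiate the task-complete action for assigned_tag.

    rssi_by_tag maps each identification tag to the signal strength (e.g., dBm,
    higher is stronger) measured between that tag's device and the task sensor.
    """
    if not task_sensor_fired or assigned_tag not in rssi_by_tag:
        return False
    own = rssi_by_tag[assigned_tag]
    # The assigned individual's tag must be strictly stronger than every other tag
    # seen at the task sensor; with no other tags present, this is vacuously true.
    return all(own > rssi for tag, rssi in rssi_by_tag.items() if tag != assigned_tag)


readings = {"tag-A": -48.0, "tag-B": -71.0}
print(should_complete_task(True, readings, "tag-A"))  # True
print(should_complete_task(True, readings, "tag-B"))  # False
```

The strict comparison means a tie between two tags does not credit either individual, which is one conservative way to handle an ambiguous reading.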

- the mobile device can be equipped with components including, but not limited to, a display, a transceiver, a transmitter and/or a receiver, an antenna, a power source, a microprocessor, memory, input devices, and/or other output devices such as lights, speakers, and/or buzzers.

- the mobile device can include an “awaken” mechanism, where activating the awaken mechanism transmits a wireless signal indicative of a return of the mobile device to within the base range of the base station.

- when the awaken mechanism is activated, the mobile device can begin to send signals to the base station for the first predetermined amount of time of five (5) seconds at the first predefined time interval of thirty (30) seconds.

- Other first and second predetermined signal lengths and first and second predefined time intervals are also possible.

- the mobile device can be constructed to periodically check in with the base station device, such as by sending a ping signal to the base station device via radio frequency or in another such manner.

- the mobile device can be provided with energy-saving executable instructions that allow the mobile device to be in “sleep mode,” where power usage is reduced, and then to “awaken” periodically to send a ping signal to the base station device.

- the mobile device can then return to “sleep mode.”

- the client can awaken the mobile device manually, for instance, by pushing an emergency button.
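The sleep/awaken behavior described above can be sketched as a transmit schedule using the example values from the text (five-second signals at thirty-second intervals). This is a minimal illustration, assuming that "at the first predefined time interval" means the signal repeats once per interval and the device sleeps between transmissions. The class and method names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class AwakenSchedule:
    """Hypothetical transmit schedule for the mobile device after it is awakened."""
    signal_length_s: float = 5.0   # first predetermined amount of time (example value from the text)
    interval_s: float = 30.0       # first predefined time interval (example value from the text)

    def __post_init__(self) -> None:
        if not 0 < self.signal_length_s <= self.interval_s:
            raise ValueError("signal length must be positive and no longer than the interval")

    def transmit_windows(self, awaken_at: float, until: float) -> List[Tuple[float, float]]:
        """(start, end) times at which the device transmits; it sleeps in between."""
        windows = []
        start = awaken_at
        while start < until:
            windows.append((start, min(start + self.signal_length_s, until)))
            start += self.interval_s
        return windows

    def duty_cycle(self) -> float:
        # Fraction of time the radio is on, which drives battery life.
        return self.signal_length_s / self.interval_s


schedule = AwakenSchedule()
print(schedule.transmit_windows(0.0, 90.0))  # [(0.0, 5.0), (30.0, 35.0), (60.0, 65.0)]
```

With the example values, the radio is on for one sixth of the time, which illustrates why the text pairs periodic check-ins with a reduced-power sleep mode.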

- FIG. 2 illustrates an embodiment of base station data flow. This diagram illustrates the flow of information from various parts of the system.

- the system sensing information 242 can be used in a variety of functions provided by the system 200.

- system sensing information can be used to support emergency call functions 234, activity monitoring functions 232, and system health functions 238, among others.

- Each of these functions (i.e., 232, 234, and 238) utilizes information about either a sensor or an activity of an individual that activates a sensor.

- the blocks 232, 234, and/or 238 can process and interpret information from system sensing block 242 in order to provide information to the alert protocol manager functionality 236 and a system diagnostic alert protocol functionality 240. These functionalities can be provided at the base station and/or at a remote location, for example. Individually, blocks 232, 234, or 238 can pass system information directly to the alert protocol manager 236, or can process the information themselves to determine the need to initiate an alert request or other action request to the alert protocol manager 236.

- the alert protocol manager 236 can initiate an alert upon a request from 232, 234, or 238, or can further process the information received from 232, 234, or 238 to determine whether to initiate an alert or other action.

- the initiation of an alert by the alert protocol manager 236 can be implemented through use of functions within the system client interface 244 and/or the system remote interface 246.

- the system remote interface 246 can be a call center computer, such as a computer at an emergency call center.

- an alert process can include a notification of the client that an alert will be or has been initiated.

- the system client interface can be used to indicate the impending or existing alert condition and/or can be used by the client to confirm and/or cancel the alert.

- the system remote interface can be used to contact a third party, such as via a remote assistance center device, to inform the third party that an alert condition exists and that aid may be needed.

- Aid can be a call to the client of the system, a visit by a third party (e.g., doctor, emergency medical personnel, system technician, etc.) to the location of the client, or other such aid, as discussed herein.

- system information can be provided from the system platform services block 248 to the system health block 238. This information can be used to determine whether to issue an alert for a fault in the system, for a software/firmware update, or the like.

- the system diagnostic alert block 240 can be used to issue such an alert. This alert can then be effectuated through the system client interface 244 and/or the system remote interface 246.

- the alert can be sent to both the individual and a third party (e.g., via blocks 244 and 246). If the client changes the battery (e.g., in response to a low-battery alert), the alert can be canceled and notification of the cancellation can be provided to the third party.

- Although the embodiments illustrated herein may indicate a flow path, this is meant to be an example of flow and should not be viewed as limiting. Further, unless explicitly stated, the embodiments described herein are not constrained to a particular order or sequence. Additionally, some of the described embodiments and elements thereof can occur or be performed at the same point in time. Embodiments can be performed by executable instructions such as software and/or firmware.

- Activity monitoring alert protocol management functionality can be accomplished by a number of executable instructions and/or through use of logic circuitry.

- the executable instructions and/or, in some embodiments, the logic of the mobile device can be updated. For example, this can be accomplished wirelessly via communication with the base station, among other updating methods.
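The FIG. 2 alert flow described above (functions 232, 234, and 238 requesting alerts from the alert protocol manager 236, which notifies the client and a third party and can later cancel the alert) can be sketched as follows. This is a minimal illustration, not the patent's implementation: the class names, the message strings, and the use of in-memory lists to stand in for the system client interface 244 and system remote interface 246 are hypothetical, and the low-battery scenario is an assumed example.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Alert:
    source: str        # e.g. "activity_monitoring" (232), "emergency_call" (234), "system_health" (238)
    reason: str
    active: bool = True


@dataclass
class AlertProtocolManager:
    """Hypothetical sketch of the alert protocol manager (236) routing alerts."""
    client_log: List[str] = field(default_factory=list)   # stands in for system client interface 244
    remote_log: List[str] = field(default_factory=list)   # stands in for system remote interface 246
    alerts: List[Alert] = field(default_factory=list)

    def request(self, source: str, reason: str, notify_remote: bool = True) -> Alert:
        # Always notify the client; optionally inform a third party as well.
        alert = Alert(source, reason)
        self.alerts.append(alert)
        self.client_log.append(f"ALERT: {reason}")
        if notify_remote:
            self.remote_log.append(f"ALERT from {source}: {reason}")
        return alert

    def cancel(self, alert: Alert) -> None:
        # Cancellation is reported to both parties, and only once.
        if alert.active:
            alert.active = False
            self.client_log.append(f"CANCELED: {alert.reason}")
            self.remote_log.append(f"CANCELED: {alert.reason}")


mgr = AlertProtocolManager()
low = mgr.request("system_health", "low battery")
mgr.cancel(low)  # the client replaced the battery
print(mgr.remote_log)  # ['ALERT from system_health: low battery', 'CANCELED: low battery']
```

The `notify_remote` flag mirrors the "and/or" in the text: an alert can be effectuated through the client interface, the remote interface, or both.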

- FIG. 3 illustrates an embodiment of a task performance monitoring system.

- a number of functionalities that are provided by the system 300 are illustrated. These functionalities are grouped as user interface functions, data storage and access functions, assistive technologies functions, personal safety functions, and sensory input functions. Embodiments can utilize more or fewer functions and/or function types than are shown in the embodiment of FIG. 3.

- a system and/or device can include, for example, a speech processor 350, a remote multi-user interface 352, an in-home user interface 354, and/or a mobile user interface 356.

- a system or device can include a data center 358, external health resources 360, and data sources 362.

- embodiments can include a planning module 364, a memory aid module 366, and/or a task execution module 368.

- a system or device can include personal safety functions such as an automated personal emergency response system (a-pers) 370 and/or activities of daily living monitoring 372.

- embodiments can include in-home sensor devices 374, remote sensor devices 376, and localization devices 378.

- the sensory input functions can be utilized to collect information about the individual being monitored and/or be used in determining whether a task has been performed and/or performed successfully.

- in-home sensor devices are devices that are positioned within the dwelling in which the individual is being monitored.

- Remote sensor devices are those located outside the dwelling of the individual. These may be portable or fixed and, if portable, may be used within the dwelling in some situations.

- Localization devices are used to determine the location of the individual.

- Examples of localization devices that would be suitable for use in embodiments of the present disclosure are mobile communication devices (e.g., capable of positioning based upon proximity to one or more fixed receivers) and global positioning system (GPS) devices.

- Mobile phones and other portable devices can have such capabilities.

- FIG. 4 illustrates an embodiment of a task performance planning, execution, and validation framework for use with various embodiments of the present disclosure.

- the embodiment includes initiation of an activity from a day plan at 480.

- the initiation of the activity can, for example, be the monitoring of an activity that is being started by the individual being monitored, or the initiation of one or more instructions to aid in instructing the individual how to accomplish the activity.

- the instructions can be delivered as text, image, video, and/or audio information provided to the individual. This can be accomplished through the use of one or more files saved in memory and executable instructions that are executed to present the one or more files to the individual.

- the system can be designed such that the individual can select the format or formats in which the instructions are presented. For instance, the individual may select that the instructions are to be presented in text form, or in video form when available, among other format selection choices. In some embodiments, the selection can be made by a user, such as an administrator or the manufacturer.

- an announcement can be made to the individual at various times during such processes as, for example, illustrated in FIG. 4.

- an announcement signaling the completion of a task or activity can be provided in one or more formats such as text, image, audio, or video.

- the method embodiment of FIG. 4 also includes checking for the next pending activity or other item on the day plan. This can be beneficial in moving the individual to a next task/step once the current task/step has been completed.
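The FIG. 4 loop described above (initiate an activity from the day plan, present instructions in a selected format, validate completion, announce it, then check for the next pending item) can be sketched as follows. This is a minimal illustration under stated assumptions: the `Activity` type, the `run_day_plan` function, the format-preference fallback, and the completion callback are hypothetical, and the log strings stand in for real announcements.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Activity:
    name: str
    instructions: Dict[str, str]   # format ("text", "video", "audio", ...) -> content


def run_day_plan(plan: List[Activity], preferred_formats: List[str],
                 is_complete: Callable[[Activity], bool]) -> List[str]:
    """Initiate each activity, instruct, validate, announce, then move to the next item."""
    log: List[str] = []
    for activity in plan:
        # Present instructions in the first preferred format the activity provides.
        fmt = next((f for f in preferred_formats if f in activity.instructions), None)
        if fmt is not None:
            log.append(f"instruct[{fmt}]: {activity.instructions[fmt]}")
        # Validation step: announce completion or leave the activity pending.
        if is_complete(activity):
            log.append(f"announce: {activity.name} complete")
        else:
            log.append(f"pending: {activity.name}")
    return log


plan = [
    Activity("wake", {"audio": "Good morning"}),
    Activity("breakfast", {"text": "Eat breakfast", "video": "breakfast.mp4"}),
]
log = run_day_plan(plan, ["video", "text", "audio"], lambda a: a.name == "wake")
print(log)
```

The ordered preference list reflects the text's example of an individual choosing video "when available" and otherwise falling back to another format.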

- FIG. 5 illustrates a task prompting routine for use with various embodiments of the present disclosure.

- the day plan includes waking 590-1, bathing 590-2, eating breakfast 590-3, performing a planned leisure activity (hobby) 590-4, eating lunch 590-5, exercise 590-6, and other activities of daily living 590-M.

- the bathing activity is further defined in the table to the right.

- the table presents an example of the steps, sensing methodology, criteria for proceeding, and actuations, if any. This example provides a number of different steps of a task, a number of different sensor types used individually and in combination, and different types of criteria for completion of the steps and/or task, among other features.

- the first row includes the headings of the different information sections of the table.

- the headings are PROMPT/INTERVENTION, SENSOR(S) TO DETECT ACTION, CRITERIA TO PROCEED TO INITIATE SENDING TASK PROMPTS, and ACTUATORS.

- the first column indicates the various steps for the Bathing Activity of Daily Living (ADL).

- the prompt indicates that the individual is to start their bathing task.

- the sensor to detect action is a motion sensor in the bathroom.

- the criterion is to detect that the individual has successfully entered the bathroom.

- the prompt is a light emitting diode (LED) on the shaver indicating to the individual that they are to use the shaver.

- the sensor used is an accelerometer in the shaver.

- the criteria are the starting and/or stopping of the shaver.

- in step 3, an audio instruction is provided to the individual that indicates that they are to undress and enter the shower.

- a water flow sensor and/or temperature sensor is used in this example to detect action.

- the criterion is an audible acknowledgement from the individual that the task has been completed; in addition, an actuator can automatically adjust the shower temperature during this step of the task.

- an LED on the towel bar indicates that the individual should take the towel off the towel bar.

- some instructions were provided at a high level and others at a low level. This example indicates the versatility that can be provided to an individual based upon the implementation of embodiments discussed herein.
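The bathing table described above can be encoded as data, with each step holding its prompt, sensors, criterion to proceed, and optional actuator. This is a minimal sketch under stated assumptions: the event names (such as `"verbal_ack"`) and the `next_step_index` helper are hypothetical, and the towel-bar step's sensor and criterion are invented placeholders because the text does not specify them.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Set


@dataclass
class Step:
    prompt: str
    sensors: List[str]
    criterion: Callable[[Set[str]], bool]   # evaluated on the set of observed events
    actuator: Optional[str] = None


BATHING = [
    Step("Prompt: start bathing", ["bathroom_motion"],
         lambda ev: "bathroom_motion" in ev),                      # entered the bathroom
    Step("LED on shaver", ["shaver_accelerometer"],
         lambda ev: bool({"shaver_start", "shaver_stop"} & ev)),   # shaver started and/or stopped
    Step("Audio: undress and enter the shower", ["water_flow", "water_temperature"],
         lambda ev: "verbal_ack" in ev,                            # audible acknowledgement
         actuator="shower_temperature_adjust"),
    # Hypothetical: the text names only the towel-bar LED, not a sensor or criterion.
    Step("LED on towel bar", [], lambda ev: "towel_removed" in ev),
]


def next_step_index(steps: List[Step], current: int, events: Set[str]) -> int:
    """Advance past the current step only if its criterion to proceed is met."""
    if current < len(steps) and steps[current].criterion(events):
        return current + 1
    return current


i = 0
i = next_step_index(BATHING, i, {"bathroom_motion"})  # entered bathroom -> step index 1
i = next_step_index(BATHING, i, {"bathroom_motion"})  # shaver not used yet -> stays at 1
i = next_step_index(BATHING, i, {"shaver_start"})     # shaver started -> step index 2
print(BATHING[i].prompt)  # Audio: undress and enter the shower
```

Keeping the table as data rather than code makes it straightforward to mix high-level and low-level steps, as the text notes.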

- the executable instructions can determine which third party to contact.

- Other sensors can be used in combination with, or instead of, a sensor worn by the individual to determine whether the individual is within the dwelling. Examples of other sensors include motion sensors, sensors on the interior/exterior/garage doors, sensors on the individual's automobile, and the like.
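Combining a worn sensor with the other sensors named above (motion, door, and automobile sensors) to decide whether the individual is within the dwelling can be sketched as a simple rule. This is a minimal illustration only: the `PresenceEvidence` fields, the priority given to the worn sensor, and the "motion after the last door event" heuristic are all assumptions, not rules stated in the patent. The function returns `None` when the evidence is inconclusive.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PresenceEvidence:
    worn_device_in_range: Optional[bool]    # None if the worn sensor is unavailable
    last_exterior_door_ts: Optional[float]  # time (s) of last exterior/garage door event
    last_motion_ts: Optional[float]         # time (s) of last indoor motion event
    car_present: Optional[bool]             # from a sensor on the individual's automobile


def probably_home(e: PresenceEvidence) -> Optional[bool]:
    """Hypothetical fusion rule; returns None when the evidence is inconclusive."""
    if e.worn_device_in_range is not None:
        return e.worn_device_in_range   # the worn sensor, when present, takes priority
    if e.last_motion_ts is not None and (
        e.last_exterior_door_ts is None or e.last_motion_ts > e.last_exterior_door_ts
    ):
        return True                     # motion after the last door event suggests someone is inside
    if e.car_present is False:
        return False                    # car gone and no motion since the door: likely away
    return None


print(probably_home(PresenceEvidence(None, 100.0, 120.0, None)))  # True
print(probably_home(PresenceEvidence(None, 100.0, 50.0, False)))  # False
```

Returning `None` rather than guessing lets a caller fall back to other checks, such as contacting the individual, when the sensors disagree or are silent.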

Landscapes

- Health & Medical Sciences (AREA)

- Engineering & Computer Science (AREA)

- Business, Economics & Management (AREA)

- General Health & Medical Sciences (AREA)

- Physics & Mathematics (AREA)

- General Physics & Mathematics (AREA)

- Emergency Management (AREA)

- Gerontology & Geriatric Medicine (AREA)

- Medical Informatics (AREA)

- Biomedical Technology (AREA)

- Public Health (AREA)

- Epidemiology (AREA)

- Primary Health Care (AREA)

- Computer Security & Cryptography (AREA)

- Psychiatry (AREA)

- Life Sciences & Earth Sciences (AREA)

- Pulmonology (AREA)

- Psychology (AREA)

- Social Psychology (AREA)

- Child & Adolescent Psychology (AREA)

- Physiology (AREA)

- Physical Education & Sports Medicine (AREA)

- Heart & Thoracic Surgery (AREA)

- Multimedia (AREA)

- Biophysics (AREA)

- Cardiology (AREA)

- General Business, Economics & Management (AREA)

- Pathology (AREA)

- Databases & Information Systems (AREA)

- Data Mining & Analysis (AREA)

- Computer Networks & Wireless Communication (AREA)

- Alarm Systems (AREA)

- Telephonic Communication Services (AREA)

- Debugging And Monitoring (AREA)

Abstract

Description

Claims (33)

Priority Applications (7)

| Application Number | Publication | Priority Date | Filing Date | Title |
|---|---|---|---|---|
| US11/788,178 | US8164461B2 | 2005-12-30 | 2007-04-19 | Monitoring task performance |
| PCT/US2008/004850 | WO2008130542A2 | 2007-04-19 | 2008-04-15 | Monitoring task performance |
| US13/324,711 | US8872664B2 | 2005-12-30 | 2011-12-13 | Monitoring activity of an individual |
| US14/524,717 | US9396646B2 | 2005-12-30 | 2014-10-27 | Monitoring activity of an individual |
| US15/212,776 | US10115294B2 | 2005-12-30 | 2016-07-18 | Monitoring activity of an individual |
| US16/174,741 | US10475331B2 | 2005-12-30 | 2018-10-30 | Monitoring activity of an individual |
| US16/678,144 | US20200074840A1 | 2005-12-30 | 2019-11-08 | Monitoring activity of an individual |

Applications Claiming Priority (2)

| Application Number | Publication | Priority Date | Filing Date | Title |
|---|---|---|---|---|
| US11/323,077 | US7589637B2 | 2005-12-30 | 2005-12-30 | Monitoring activity of an individual |
| US11/788,178 | US8164461B2 | 2005-12-30 | 2007-04-19 | Monitoring task performance |

Related Parent Applications (1)

| Application Number | Relationship | Publication | Priority Date | Filing Date | Title |
|---|---|---|---|---|---|
| US11/323,077 | Continuation-In-Part | US7589637B2 | 2005-12-30 | 2005-12-30 | Monitoring activity of an individual |

Related Child Applications (1)

| Application Number | Relationship | Publication | Priority Date | Filing Date | Title |
|---|---|---|---|---|---|
| US13/324,711 | Continuation | US8872664B2 | 2005-12-30 | 2011-12-13 | Monitoring activity of an individual |

Publications (2)

| Publication Number | Publication Date |
|---|---|
| US20070192174A1 | 2007-08-16 |
| US8164461B2 | 2012-04-24 |

Family ID: 38973664

Family Applications (6)

| Application Number | Status | Publication | Priority Date | Filing Date | Title |
|---|---|---|---|---|---|
| US11/788,178 | Active, expires 2026-09-29 | US8164461B2 | 2005-12-30 | 2007-04-19 | Monitoring task performance |
| US13/324,711 | Active, expires 2026-01-17 | US8872664B2 | 2005-12-30 | 2011-12-13 | Monitoring activity of an individual |
| US14/524,717 | Active | US9396646B2 | 2005-12-30 | 2014-10-27 | Monitoring activity of an individual |
| US15/212,776 | Active | US10115294B2 | 2005-12-30 | 2016-07-18 | Monitoring activity of an individual |
| US16/174,741 | Active | US10475331B2 | 2005-12-30 | 2018-10-30 | Monitoring activity of an individual |
| US16/678,144 | Abandoned | US20200074840A1 | 2005-12-30 | 2019-11-08 | Monitoring activity of an individual |

Family Applications After (5)

| Application Number | Status | Publication | Priority Date | Filing Date | Title |
|---|---|---|---|---|---|
| US13/324,711 | Active, expires 2026-01-17 | US8872664B2 | 2005-12-30 | 2011-12-13 | Monitoring activity of an individual |
| US14/524,717 | Active | US9396646B2 | 2005-12-30 | 2014-10-27 | Monitoring activity of an individual |
| US15/212,776 | Active | US10115294B2 | 2005-12-30 | 2016-07-18 | Monitoring activity of an individual |
| US16/174,741 | Active | US10475331B2 | 2005-12-30 | 2018-10-30 | Monitoring activity of an individual |
| US16/678,144 | Abandoned | US20200074840A1 | 2005-12-30 | 2019-11-08 | Monitoring activity of an individual |

Country Status (2)

| Country | Link |

|---|---|

| US (6) | US8164461B2 (en) |

| WO (1) | WO2008130542A2 (en) |

Cited By (13)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| US20110275042A1 (en)* | 2010-02-22 | 2011-11-10 | Warman David J | Human-motion-training system |

| US9044543B2 (en) | 2012-07-17 | 2015-06-02 | Elwha Llc | Unmanned device utilization methods and systems |

| US9049168B2 (en)* | 2013-01-11 | 2015-06-02 | State Farm Mutual Automobile Insurance Company | Home sensor data gathering for neighbor notification purposes |

| US9061102B2 (en) | 2012-07-17 | 2015-06-23 | Elwha Llc | Unmanned device interaction methods and systems |

| US9361778B1 (en) | 2013-03-15 | 2016-06-07 | Gary German | Hands-free assistive and preventive remote monitoring system |

| US9396646B2 (en) | 2005-12-30 | 2016-07-19 | Healthsense, Inc. | Monitoring activity of an individual |

| US9426292B1 (en)* | 2015-12-29 | 2016-08-23 | International Business Machines Corporation | Call center anxiety feedback processor (CAFP) for biomarker based case assignment |

| US20190109947A1 (en)* | 2016-03-23 | 2019-04-11 | Koninklijke Philips N.V. | Systems and methods for matching subjects with care consultants in telenursing call centers |

| US10311694B2 (en) | 2014-02-06 | 2019-06-04 | Empoweryu, Inc. | System and method for adaptive indirect monitoring of subject for well-being in unattended setting |

| US20190213100A1 (en)* | 2016-07-22 | 2019-07-11 | Intel Corporation | Autonomously adaptive performance monitoring |

| US10475141B2 (en) | 2014-02-06 | 2019-11-12 | Empoweryu, Inc. | System and method for adaptive indirect monitoring of subject for well-being in unattended setting |

| US11169613B2 (en)* | 2018-05-30 | 2021-11-09 | Atheer, Inc. | Augmented reality task flow optimization systems |

| US11438435B2 (en) | 2019-03-01 | 2022-09-06 | Microsoft Technology Licensing, Llc | User interaction and task management using multiple devices |

Families Citing this family (72)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| KR100750999B1 (en)* | 2004-12-20 | 2007-08-22 | 삼성전자주식회사 | Device and method for processing call/message-related event in wireless terminal |

| US20080077020A1 (en) | 2006-09-22 | 2008-03-27 | Bam Labs, Inc. | Method and apparatus for monitoring vital signs remotely |

| US20080201158A1 (en) | 2007-02-15 | 2008-08-21 | Johnson Mark D | System and method for visitation management in a controlled-access environment |

| US8026814B1 (en) | 2007-07-25 | 2011-09-27 | Pinpoint Technologies Inc. | Wireless mesh network for an asset tracking system |

| US8400268B1 (en) | 2007-07-25 | 2013-03-19 | Pinpoint Technologies Inc. | End to end emergency response |

| US7893843B2 (en)* | 2008-06-18 | 2011-02-22 | Healthsense, Inc. | Activity windowing |

| NL1036271C2 (en)* | 2008-12-03 | 2010-06-07 | Irina Til | DEVICE FOR MEMORY ACTIVATION OF DEMENTING PEOPLE FOR INDEPENDENT PERFORMANCE OF EVERYDAY SELF-CARE TREATMENTS. |

| US20100262403A1 (en)* | 2009-04-10 | 2010-10-14 | Bradford White Corporation | Systems and methods for monitoring water heaters or boilers |

| US8164444B2 (en)* | 2009-04-29 | 2012-04-24 | Healthsense, Inc. | Position detection |

| EP2454921B1 (en)* | 2009-07-15 | 2013-03-13 | Koninklijke Philips Electronics N.V. | Activity adapted automation of lighting |

| US8917181B2 (en)* | 2010-05-07 | 2014-12-23 | Mikael Edlund | Method for monitoring an individual |

| US9064391B2 (en) | 2011-12-20 | 2015-06-23 | Techip International Limited | Tamper-alert resistant bands for human limbs and associated monitoring systems and methods |

| US8736447B2 (en) | 2011-12-20 | 2014-05-27 | Techip International Limited | Tamper-resistant monitoring systems and methods |

| WO2014100488A1 (en)* | 2012-12-19 | 2014-06-26 | Robert Bosch Gmbh | Personal emergency response system by nonintrusive load monitoring |

| US11872053B1 (en)* | 2013-02-22 | 2024-01-16 | Cloud Dx, Inc. | Systems and methods for monitoring medication effectiveness |

| US11612352B1 (en)* | 2013-02-22 | 2023-03-28 | Cloud Dx, Inc. | Systems and methods for monitoring medication effectiveness |

| US20140272847A1 (en)* | 2013-03-14 | 2014-09-18 | Edulock, Inc. | Method and system for integrated reward system for education related applications |

| US20140272894A1 (en)* | 2013-03-13 | 2014-09-18 | Edulock, Inc. | System and method for multi-layered education based locking of electronic computing devices |

| US20140255889A1 (en)* | 2013-03-10 | 2014-09-11 | Edulock, Inc. | System and method for a comprehensive integrated education system |

| US20140278895A1 (en)* | 2013-03-12 | 2014-09-18 | Edulock, Inc. | System and method for instruction based access to electronic computing devices |

| US9913003B2 (en)* | 2013-03-14 | 2018-03-06 | Alchera Incorporated | Programmable monitoring system |

| US9008890B1 (en) | 2013-03-15 | 2015-04-14 | Google Inc. | Augmented trajectories for autonomous vehicles |

| US8996224B1 (en) | 2013-03-15 | 2015-03-31 | Google Inc. | Detecting that an autonomous vehicle is in a stuck condition |

| US20140278686A1 (en)* | 2013-03-15 | 2014-09-18 | Desire2Learn Incorporated | Method and system for automatic task time estimation and scheduling |

| EP2989620A1 (en)* | 2013-04-22 | 2016-03-02 | Domosafety SA | System and method for automated triggering and management of alarms |

| KR20140134109A (en)* | 2013-05-13 | 2014-11-21 | 엘에스산전 주식회사 | Solitary senior people care system |

| WO2015054501A2 (en)* | 2013-10-09 | 2015-04-16 | Stadson Technology | Safety system utilizing personal area network communication protocols between other devices |

| CN103680061B (en)* | 2013-12-11 | 2016-06-08 | 苏州市职业大学 | A kind of indoor old solitary people security control device |

| US9355534B2 (en)* | 2013-12-12 | 2016-05-31 | Nokia Technologies Oy | Causing display of a notification on a wrist worn apparatus |

| US20160253910A1 (en)* | 2014-02-26 | 2016-09-01 | Cynthia A. Fisher | System and Method for Computer Guided Interaction on a Cognitive Prosthetic Device for Users with Cognitive Disabilities |

| US9460612B2 (en) | 2014-05-01 | 2016-10-04 | Techip International Limited | Tamper-alert and tamper-resistant band |

| US9293029B2 (en)* | 2014-05-22 | 2016-03-22 | West Corporation | System and method for monitoring, detecting and reporting emergency conditions using sensors belonging to multiple organizations |

| US10356649B2 (en)* | 2014-09-26 | 2019-07-16 | Intel Corporation | Multisensory change detection for internet of things domain |

| US9754465B2 (en)* | 2014-10-30 | 2017-09-05 | International Business Machines Corporation | Cognitive alerting device |

| US10671954B2 (en)* | 2015-02-23 | 2020-06-02 | Google Llc | Selective reminders to complete interrupted tasks |

| US20180053397A1 (en)* | 2015-03-05 | 2018-02-22 | Ent. Services Development Corporation Lp | Activating an alarm if a living being is present in an enclosed space with ambient temperature outside a safe temperature range |

| US9805587B2 (en) | 2015-05-19 | 2017-10-31 | Ecolink Intelligent Technology, Inc. | DIY monitoring apparatus and method |

| WO2017003764A1 (en) | 2015-07-02 | 2017-01-05 | Select Comfort Corporation | Automation for improved sleep quality |

| WO2017013608A1 (en)* | 2015-07-20 | 2017-01-26 | Opterna Technology Limited | Communications system having a plurality of sensors to remotely monitor a living environment |

| US9953511B2 (en)* | 2015-09-16 | 2018-04-24 | Honeywell International Inc. | Portable security device that communicates with home security system monitoring service |

| US11283877B2 (en) | 2015-11-04 | 2022-03-22 | Zoox, Inc. | Software application and logic to modify configuration of an autonomous vehicle |

| US9606539B1 (en) | 2015-11-04 | 2017-03-28 | Zoox, Inc. | Autonomous vehicle fleet service and system |

| US10248119B2 (en) | 2015-11-04 | 2019-04-02 | Zoox, Inc. | Interactive autonomous vehicle command controller |

| US9630619B1 (en) | 2015-11-04 | 2017-04-25 | Zoox, Inc. | Robotic vehicle active safety systems and methods |

| US10334050B2 (en)* | 2015-11-04 | 2019-06-25 | Zoox, Inc. | Software application and logic to modify configuration of an autonomous vehicle |

| US10401852B2 (en) | 2015-11-04 | 2019-09-03 | Zoox, Inc. | Teleoperation system and method for trajectory modification of autonomous vehicles |

| WO2017079341A2 (en) | 2015-11-04 | 2017-05-11 | Zoox, Inc. | Automated extraction of semantic information to enhance incremental mapping modifications for robotic vehicles |

| US9632502B1 (en) | 2015-11-04 | 2017-04-25 | Zoox, Inc. | Machine-learning systems and techniques to optimize teleoperation and/or planner decisions |

| US9754490B2 (en) | 2015-11-04 | 2017-09-05 | Zoox, Inc. | Software application to request and control an autonomous vehicle service |

| US12265386B2 (en) | 2015-11-04 | 2025-04-01 | Zoox, Inc. | Autonomous vehicle fleet service and system |

| US10572961B2 (en) | 2016-03-15 | 2020-02-25 | Global Tel*Link Corporation | Detection and prevention of inmate to inmate message relay |

| US9609121B1 (en) | 2016-04-07 | 2017-03-28 | Global Tel*Link Corporation | System and method for third party monitoring of voice and video calls |

| EP3516559A1 (en)* | 2016-09-20 | 2019-07-31 | HeartFlow, Inc. | Systems and methods for monitoring and updating blood flow calculations with user-specific anatomic and physiologic sensor data |

| US11061416B2 (en) | 2016-11-22 | 2021-07-13 | Wint Wi Ltd | Water profile used to detect malfunctioning water appliances |

| US20180302403A1 (en)* | 2017-04-13 | 2018-10-18 | Plas.md, Inc. | System and method for location-based biometric data collection and processing |

| US10225396B2 (en) | 2017-05-18 | 2019-03-05 | Global Tel*Link Corporation | Third party monitoring of a activity within a monitoring platform |

| US10860786B2 (en) | 2017-06-01 | 2020-12-08 | Global Tel*Link Corporation | System and method for analyzing and investigating communication data from a controlled environment |

| CN107707657B (en)* | 2017-09-30 | 2021-08-06 | 苏州涟漪信息科技有限公司 | Safety monitoring system based on multiple sensors |

| US10497475B2 (en) | 2017-12-01 | 2019-12-03 | Verily Life Sciences Llc | Contextually grouping sensor channels for healthcare monitoring |

| WO2019115308A1 (en)* | 2017-12-13 | 2019-06-20 | Koninklijke Philips N.V. | Personalized assistance for impaired subjects |

| EP3546153B1 (en) | 2018-03-27 | 2021-05-12 | Braun GmbH | Personal care device |

| EP3546151B1 (en) | 2018-03-27 | 2025-04-30 | Braun GmbH | BODY CARE DEVICE |

| US11410257B2 (en) | 2019-01-08 | 2022-08-09 | Rauland-Borg Corporation | Message boards |

| US11626010B2 (en)* | 2019-02-28 | 2023-04-11 | Nortek Security & Control Llc | Dynamic partition of a security system |

| US12165495B2 (en)* | 2019-02-28 | 2024-12-10 | Nice North America Llc | Virtual partition of a security system |

| US11270799B2 (en)* | 2019-08-20 | 2022-03-08 | Vinya Intelligence Inc. | In-home remote monitoring systems and methods for predicting health status decline |

| US11393326B2 (en)* | 2019-09-12 | 2022-07-19 | Rauland-Borg Corporation | Emergency response drills |

| US11482323B2 (en) | 2019-10-01 | 2022-10-25 | Rauland-Borg Corporation | Enhancing patient care via a structured methodology for workflow stratification |

| DE102019128456A1 (en)* | 2019-10-22 | 2021-04-22 | Careiot GmbH | Procedures for monitoring people in need |

| CN113781692A (en)* | 2020-06-10 | 2021-12-10 | 骊住株式会社 | space management device |

| WO2022010398A1 (en)* | 2020-07-10 | 2022-01-13 | Telefonaktiebolaget Lm Ericsson (Publ) | Conditional reconfiguration based on data traffic |

| US12112343B2 (en) | 2022-02-24 | 2024-10-08 | Klaviyo, Inc. | Detecting changes in customer (user) behavior using a normalization value |

Citations (39)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| US5447166A (en) | 1991-09-26 | 1995-09-05 | Gevins; Alan S. | Neurocognitive adaptive computer interface method and system based on on-line measurement of the user's mental effort |
| DE19522803A1 (en) | 1995-06-23 | 1997-01-02 | Klaus Vorschmitt | Safety monitoring of individuals requiring care |
| US5724987A (en) | 1991-09-26 | 1998-03-10 | Sam Technology, Inc. | Neurocognitive adaptive computer-aided training method and system |
| US5810747A (en)* | 1996-08-21 | 1998-09-22 | Interactive Remote Site Technology, Inc. | Remote site medical intervention system |
| US5890905A (en) | 1995-01-20 | 1999-04-06 | Bergman; Marilyn M. | Educational and life skills organizer/memory aid |
| US5905436A (en) | 1996-10-24 | 1999-05-18 | Gerontological Solutions, Inc. | Situation-based monitoring system |
| US6042383A (en) | 1998-05-26 | 2000-03-28 | Herron; Lois J. | Portable electronic device for assisting persons with learning disabilities and attention deficit disorders |
| US6108685A (en) | 1994-12-23 | 2000-08-22 | Behavioral Informatics, Inc. | System for generating periodic reports generating trend analysis and intervention for monitoring daily living activity |
| US6281790B1 (en)* | 1999-09-01 | 2001-08-28 | Net Talon Security Systems, Inc. | Method and apparatus for remotely monitoring a site |
| US6402520B1 (en) | 1997-04-30 | 2002-06-11 | Unique Logic And Technology, Inc. | Electroencephalograph based biofeedback system for improving learning skills |
| US20020198473A1 (en) | 2001-03-28 | 2002-12-26 | Televital, Inc. | System and method for real-time monitoring, assessment, analysis, retrieval, and storage of physiological data over a wide area network |
| US20030004652A1 (en) | 2001-05-15 | 2003-01-02 | Daniela Brunner | Systems and methods for monitoring behavior informatics |
| US6520905B1 (en) | 1998-02-26 | 2003-02-18 | Eastman Kodak Company | Management of physiological and psychological state of an individual using images portable biosensor device |
| US6524239B1 (en) | 1999-11-05 | 2003-02-25 | Wcr Company | Apparatus for non-instrusively measuring health parameters of a subject and method of use thereof |
| US6540674B2 (en) | 2000-12-29 | 2003-04-01 | Ibm Corporation | System and method for supervising people with mental disorders |
| US6558165B1 (en) | 2001-09-11 | 2003-05-06 | Capticom, Inc. | Attention-focusing device and method of use |
| US20030117279A1 (en) | 2001-12-25 | 2003-06-26 | Reiko Ueno | Device and system for detecting abnormality |
| US20030130590A1 (en) | 1998-12-23 | 2003-07-10 | Tuan Bui | Method and apparatus for providing patient care |
| US20030185436A1 (en)* | 2002-03-26 | 2003-10-02 | Smith David R. | Method and system of object classification employing dimension reduction |
| US20030189485A1 (en) | 2002-03-27 | 2003-10-09 | Smith Simon Lawrence | System for monitoring an inhabited environment |
| US20030216670A1 (en)* | 2002-05-17 | 2003-11-20 | Beggs George R. | Integral, flexible, electronic patient sensing and monitoring system |
| US20030229471A1 (en) | 2002-01-22 | 2003-12-11 | Honeywell International Inc. | System and method for learning patterns of behavior and operating a monitoring and response system based thereon |
| US20030236451A1 (en)* | 2002-04-03 | 2003-12-25 | The Procter & Gamble Company | Method and apparatus for measuring acute stress |
| US20040131998A1 (en) | 2001-03-13 | 2004-07-08 | Shimon Marom | Cerebral programming |
| US20040191747A1 (en)* | 2003-03-26 | 2004-09-30 | Hitachi, Ltd. | Training assistant system |
| US20040219498A1 (en)* | 2002-04-09 | 2004-11-04 | Davidson Lance Samuel | Training apparatus and methods |
| US20050024199A1 (en)* | 2003-06-11 | 2005-02-03 | Huey John H. | Combined systems user interface for centralized monitoring of a screening checkpoint for passengers and baggage |
| US20050057357A1 (en)* | 2003-07-10 | 2005-03-17 | University Of Florida Research Foundation, Inc. | Daily task and memory assistance using a mobile device |
| US20050065452A1 (en)* | 2003-09-06 | 2005-03-24 | Thompson James W. | Interactive neural training device |
| US20050073391A1 (en)* | 2003-10-02 | 2005-04-07 | Koji Mizobuchi | Data processing apparatus |
| US20050131736A1 (en) | 2003-12-16 | 2005-06-16 | Adventium Labs And Red Wing Technologies, Inc. | Activity monitoring |
| US20050137465A1 (en)* | 2003-12-23 | 2005-06-23 | General Electric Company | System and method for remote monitoring in home activity of persons living independently |
| US6950026B2 (en) | 2003-02-17 | 2005-09-27 | National Institute Of Information And Communication Technology Incorporated Administrative Agency | Method for complementing personal lost memory information with communication, and communication system, and information recording medium thereof |
| US20050244797A9 (en) | 2001-05-14 | 2005-11-03 | Torkel Klingberg | Method and arrangement in a computer training system |
| US20050264425A1 (en)* | 2004-06-01 | 2005-12-01 | Nobuo Sato | Crisis monitoring system |
| US20060161218A1 (en)* | 2003-11-26 | 2006-07-20 | Wicab, Inc. | Systems and methods for treating traumatic brain injury |
| US20070032738A1 (en)* | 2005-01-06 | 2007-02-08 | Flaherty J C | Adaptive patient training routine for biological interface system |
| US20070132597A1 (en)* | 2005-12-09 | 2007-06-14 | Valence Broadband, Inc. | Methods and systems for monitoring patient support exiting and initiating response |
| US20070152837A1 (en)* | 2005-12-30 | 2007-07-05 | Red Wing Technologies, Inc. | Monitoring activity of an individual |

Family Cites Families (16)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| US5400246A (en) | 1989-05-09 | 1995-03-21 | Ansan Industries, Ltd. | Peripheral data acquisition, monitor, and adaptive control system via personal computer |
| US6542076B1 (en) | 1993-06-08 | 2003-04-01 | Raymond Anthony Joao | Control, monitoring and/or security apparatus and method |
| US6160481A (en)* | 1997-09-10 | 2000-12-12 | Taylor, Jr.; John E | Monitoring system |
| US7277414B2 (en)* | 2001-08-03 | 2007-10-02 | Honeywell International Inc. | Energy aware network management |
| JP2003157481A (en) | 2001-11-21 | 2003-05-30 | Allied Tereshisu Kk | Aged-person support system using repeating installation and aged-person support device |
| US20030114763A1 (en)* | 2001-12-13 | 2003-06-19 | Reddy Shankara B. | Fusion of computerized medical data |
| US7091865B2 (en) | 2004-02-04 | 2006-08-15 | General Electric Company | System and method for determining periods of interest in home of persons living independently |
| US8894576B2 (en) | 2004-03-10 | 2014-11-25 | University Of Virginia Patent Foundation | System and method for the inference of activities of daily living and instrumental activities of daily living automatically |
| US20050231356A1 (en)* | 2004-04-05 | 2005-10-20 | Bish Danny R | Hands-free portable receiver assembly for use with baby monitor systems |
| JP3857278B2 (en) | 2004-04-06 | 2006-12-13 | Smk株式会社 | Touch panel input device |
| US7242305B2 (en) | 2004-04-09 | 2007-07-10 | General Electric Company | Device and method for monitoring movement within a home |
| US7154399B2 (en) | 2004-04-09 | 2006-12-26 | General Electric Company | System and method for determining whether a resident is at home or away |
| US20060055543A1 (en)* | 2004-09-10 | 2006-03-16 | Meena Ganesh | System and method for detecting unusual inactivity of a resident |
| US20060089538A1 (en)* | 2004-10-22 | 2006-04-27 | General Electric Company | Device, system and method for detection activity of persons |
| US7420472B2 (en)* | 2005-10-16 | 2008-09-02 | Bao Tran | Patient monitoring apparatus |
| US8164461B2 (en) | 2005-12-30 | 2012-04-24 | Healthsense, Inc. | Monitoring task performance |

- 2007-04-19: US application US11/788,178, published as US8164461B2 (Active)
- 2008-04-15: PCT application PCT/US2008/004850, published as WO2008130542A2 (Application Filing)
- 2011-12-13: US application US13/324,711, published as US8872664B2 (Active)
- 2014-10-27: US application US14/524,717, published as US9396646B2 (Active)
- 2016-07-18: US application US15/212,776, published as US10115294B2 (Active)
- 2018-10-30: US application US16/174,741, published as US10475331B2 (Active)
- 2019-11-08: US application US16/678,144, published as US20200074840A1 (Abandoned)

Patent Citations (42)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| US5724987A (en) | 1991-09-26 | 1998-03-10 | Sam Technology, Inc. | Neurocognitive adaptive computer-aided training method and system |
| US5447166A (en) | 1991-09-26 | 1995-09-05 | Gevins; Alan S. | Neurocognitive adaptive computer interface method and system based on on-line measurement of the user's mental effort |
| US6108685A (en) | 1994-12-23 | 2000-08-22 | Behavioral Informatics, Inc. | System for generating periodic reports generating trend analysis and intervention for monitoring daily living activity |
| US5890905A (en) | 1995-01-20 | 1999-04-06 | Bergman; Marilyn M. | Educational and life skills organizer/memory aid |
| DE19522803A1 (en) | 1995-06-23 | 1997-01-02 | Klaus Vorschmitt | Safety monitoring of individuals requiring care |
| US5810747A (en)* | 1996-08-21 | 1998-09-22 | Interactive Remote Site Technology, Inc. | Remote site medical intervention system |
| US5905436A (en) | 1996-10-24 | 1999-05-18 | Gerontological Solutions, Inc. | Situation-based monitoring system |
| US6626676B2 (en) | 1997-04-30 | 2003-09-30 | Unique Logic And Technology, Inc. | Electroencephalograph based biofeedback system for improving learning skills |
| US6402520B1 (en) | 1997-04-30 | 2002-06-11 | Unique Logic And Technology, Inc. | Electroencephalograph based biofeedback system for improving learning skills |
| US6520905B1 (en) | 1998-02-26 | 2003-02-18 | Eastman Kodak Company | Management of physiological and psychological state of an individual using images portable biosensor device |
| US6042383A (en) | 1998-05-26 | 2000-03-28 | Herron; Lois J. | Portable electronic device for assisting persons with learning disabilities and attention deficit disorders |
| US20030130590A1 (en) | 1998-12-23 | 2003-07-10 | Tuan Bui | Method and apparatus for providing patient care |
| US6281790B1 (en)* | 1999-09-01 | 2001-08-28 | Net Talon Security Systems, Inc. | Method and apparatus for remotely monitoring a site |
| US6821258B2 (en) | 1999-11-05 | 2004-11-23 | Wcr Company | System and method for monitoring frequency and intensity of movement by a recumbent subject |
| US6524239B1 (en) | 1999-11-05 | 2003-02-25 | Wcr Company | Apparatus for non-instrusively measuring health parameters of a subject and method of use thereof |
| US20030114736A1 (en) | 1999-11-05 | 2003-06-19 | Wcr Company | System and method for monitoring frequency and intensity of movement by a recumbent subject |
| US6540674B2 (en) | 2000-12-29 | 2003-04-01 | Ibm Corporation | System and method for supervising people with mental disorders |
| US20040131998A1 (en) | 2001-03-13 | 2004-07-08 | Shimon Marom | Cerebral programming |
| US20020198473A1 (en) | 2001-03-28 | 2002-12-26 | Televital, Inc. | System and method for real-time monitoring, assessment, analysis, retrieval, and storage of physiological data over a wide area network |
| US20050244797A9 (en) | 2001-05-14 | 2005-11-03 | Torkel Klingberg | Method and arrangement in a computer training system |
| US20030004652A1 (en) | 2001-05-15 | 2003-01-02 | Daniela Brunner | Systems and methods for monitoring behavior informatics |
| US6558165B1 (en) | 2001-09-11 | 2003-05-06 | Capticom, Inc. | Attention-focusing device and method of use |
| US20030117279A1 (en) | 2001-12-25 | 2003-06-26 | Reiko Ueno | Device and system for detecting abnormality |
| US20030229471A1 (en) | 2002-01-22 | 2003-12-11 | Honeywell International Inc. | System and method for learning patterns of behavior and operating a monitoring and response system based thereon |
| US20030185436A1 (en)* | 2002-03-26 | 2003-10-02 | Smith David R. | Method and system of object classification employing dimension reduction |
| US20030189485A1 (en) | 2002-03-27 | 2003-10-09 | Smith Simon Lawrence | System for monitoring an inhabited environment |
| US20030236451A1 (en)* | 2002-04-03 | 2003-12-25 | The Procter & Gamble Company | Method and apparatus for measuring acute stress |
| US20040219498A1 (en)* | 2002-04-09 | 2004-11-04 | Davidson Lance Samuel | Training apparatus and methods |
| US20030216670A1 (en)* | 2002-05-17 | 2003-11-20 | Beggs George R. | Integral, flexible, electronic patient sensing and monitoring system |