TWI792592B - Computer-assisted needle insertion system and computer-assisted needle insertion method - Google Patents


- Publication number: TWI792592B (application TW110136416A)

- Authority: TW (Taiwan)

- Prior art keywords: needle, breathing, processor, needle insertion, learning model


Description

The present disclosure relates to a computer-assisted needle insertion system and a computer-assisted needle insertion method.

With advances in technology, computed tomography (CT) images have become widely used in procedures such as precise visceral needle insertion and tumor ablation. However, CT-guided procedures have several drawbacks. For example, in visceral needle insertion the operator can usually only estimate the position, angle, or depth of the initial insertion from experience, so the insertion path is often insufficiently accurate. The subject may therefore need multiple CT scans so that the operator can correct the insertion path, and the radiation dose received by the subject may exceed the recommended limit. In addition, the subject's breathing motion may interfere with the operator's adjustment of the insertion path, and the operator can rely only on unquantified cues such as experience. These factors may increase the risk of the procedure.

The present disclosure provides a computer-assisted needle insertion system and a computer-assisted needle insertion method that can provide a suggested needle insertion time period and a suggested needle insertion path to the operator performing the insertion.

A computer-assisted needle insertion system of the present disclosure is adapted to control a needle. The system includes a storage medium and a processor. The storage medium stores a first machine learning model and a second machine learning model. The processor is coupled to the storage medium and is configured to: obtain a computed tomography image and a needle insertion path, generate a suggested needle insertion path according to the first machine learning model, the computed tomography image, and the needle insertion path, and guide the needle toward a needle entry point on a surface of a target, the needle entry point lying on the suggested needle insertion path; obtain a breathing signal of the target and estimate, according to the second machine learning model and the breathing signal, whether a future breathing state of the target is normal; and, in response to determining that the future breathing state is normal, output a suggested needle insertion time period according to the breathing signal.

In an embodiment of the present disclosure, the first machine learning model is a deep Q-learning model.

In an embodiment of the present disclosure, the computed tomography image includes the needle, a marker on the surface of the target, and a target object.

In an embodiment of the present disclosure, a state in the state set of the Q-learning model includes a first coordinate of the tip of the needle, a second coordinate of the marker, and a third coordinate of the target object.

In an embodiment of the present disclosure, the reward of the Q-learning model is associated with at least one of the following: a first distance between the first coordinate and the third coordinate; an angle between the needle insertion path and a unit vector formed by the first, second, and third coordinates; a second distance between the unit vector and the needle insertion path; the time for which the first coordinate stops being updated; and a third distance between the first coordinate and the surface.

In an embodiment of the present disclosure, the computer-assisted needle insertion system further includes a transceiver coupled to the processor. The processor is communicatively connected to a robotic arm through the transceiver and transmits instructions to the robotic arm through the transceiver to control the needle. An action in the action set of the Q-learning model includes a joint motion of the robotic arm.

In an embodiment of the present disclosure, the processor selects, from the action set, the action corresponding to the maximum expected cumulative reward of the Q-learning model, and updates the state of the Q-learning model according to that action to train the Q-learning model.

In an embodiment of the present disclosure, the processor determines the suggested needle insertion path according to the updated state.

In an embodiment of the present disclosure, the robotic arm includes a clamp that holds the needle, and the processor, through the transceiver, instructs the robotic arm to release the clamp outside the suggested needle insertion time period.

In an embodiment of the present disclosure, the processor is further configured to: determine a reference breathing cycle according to historical data and determine a sampling period according to the reference breathing cycle; sample an electrocardiogram (ECG) signal according to the sampling period; and convert the ECG signal into the breathing signal.

In an embodiment of the present disclosure, the storage medium further stores a third machine learning model, and the processor is further configured to: extract breathing parameters from the breathing signal; obtain a displacement of the second coordinate and determine time-domain parameters according to the displacement; perform a short-time Fourier transform on the breathing signal to generate frequency-domain parameters; determine, based on the third machine learning model and according to the breathing parameters, the time-domain parameters, and the frequency-domain parameters, whether a long-term breathing state of the target is normal; and, in response to the long-term breathing state being normal, estimate whether the future breathing state of the target is normal.

In an embodiment of the present disclosure, the marker includes a first sub-marker corresponding to a first sub-coordinate and a second sub-marker corresponding to a second sub-coordinate, and the time-domain parameters include at least one of the following: a first displacement of the first sub-coordinate along the X axis; a second displacement of the first sub-coordinate along the Y axis; a third displacement of the first sub-coordinate along the Z axis; a first sum of the first, second, and third displacements; a fourth displacement of the second sub-coordinate along the X axis; a fifth displacement of the second sub-coordinate along the Y axis; a sixth displacement of the second sub-coordinate along the Z axis; a second sum of the fourth, fifth, and sixth displacements; and the sum of the first sum and the second sum.

In an embodiment of the present disclosure, the processor is further configured to estimate, based on the second machine learning model and according to the time-domain parameters and the frequency-domain parameters, whether the future breathing state of the target is normal.

In an embodiment of the present disclosure, the processor is further configured to: determine the displacement of the second coordinate according to the breathing signal and determine time-domain parameters according to the displacement; generate a feature signal according to the time-domain parameters; in response to the feature signal being below a threshold during a time period, set that time period as a respiratory pause period; and determine the suggested needle insertion time period according to the respiratory pause period.

In an embodiment of the present disclosure, the storage medium further stores a third machine learning model, and the processor is further configured to: obtain a second breathing signal corresponding to the respiratory pause period; extract second breathing parameters from the second breathing signal; determine a second displacement of the second coordinate according to the second breathing signal and determine second time-domain parameters according to the second displacement; perform a short-time Fourier transform on the second breathing signal to generate frequency-domain parameters; determine, based on the third machine learning model and according to the second breathing parameters, the second time-domain parameters, and the frequency-domain parameters, whether a current breathing state of the target is normal; and, in response to the current breathing state being normal, set the respiratory pause period as the suggested needle insertion time period.

In an embodiment of the present disclosure, the processor determines a needle movement time and a needle movement distance according to the suggested needle insertion time period, and outputs the needle movement time and the needle movement distance.

A computer-assisted needle insertion method of the present disclosure is adapted to control a needle. The method includes: obtaining a first machine learning model and a second machine learning model; obtaining a computed tomography image and a needle insertion path, generating a suggested needle insertion path according to the first machine learning model, the computed tomography image, and the needle insertion path, and guiding the needle toward a needle entry point on a surface of a target, the needle entry point lying on the suggested needle insertion path; obtaining a breathing signal of the target and estimating, according to the second machine learning model and the breathing signal, whether a future breathing state of the target is normal; and, in response to determining that the future breathing state is normal, outputting a suggested needle insertion time period according to the breathing signal.

Based on the above, the present disclosure can bring the needle close to an ideal needle entry point on the surface of the target. The present disclosure can determine, using machine learning, whether the breathing state of the target is normal. The present disclosure can also identify respiratory pause periods of the target from its breathing signal and suggest that the operator perform the needle insertion during those periods.

To make the content of the present invention easier to understand, the following embodiments are given as examples by which the present invention can actually be implemented. Wherever possible, elements, components, or steps with the same reference numerals in the drawings and embodiments denote the same or similar parts.

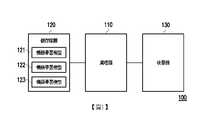

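FIG. 1 is a schematic diagram of a computer-assisted needle insertion system 100 according to an embodiment of the present disclosure. The computer-assisted needle insertion system 100 may be used to control a needle, which may be fixed to a robotic arm; the system 100 controls the needle by configuring the robotic arm. The computer-assisted needle insertion system 100 may include a processor 110, a storage medium 120, and a transceiver 130.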

The processor 110 is, for example, a central processing unit (CPU), or another programmable general-purpose or special-purpose micro control unit (MCU), microprocessor, digital signal processor (DSP), programmable controller, application-specific integrated circuit (ASIC), graphics processing unit (GPU), image signal processor (ISP), image processing unit (IPU), arithmetic logic unit (ALU), complex programmable logic device (CPLD), field-programmable gate array (FPGA), or another similar element, or a combination of the above. The processor 110 may be coupled to the storage medium 120 and the transceiver 130 and may access and execute the modules and applications stored in the storage medium 120.

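The storage medium 120 is, for example, any type of fixed or removable random access memory (RAM), read-only memory (ROM), flash memory, hard disk drive (HDD), solid state drive (SSD), or a similar element, or a combination of the above, and stores modules or applications executable by the processor 110. In this embodiment, the storage medium 120 may store modules including a machine learning model 121 (the "first machine learning model", used to suggest the needle insertion path), a machine learning model 122 (the "second machine learning model", used to estimate whether the future breathing state of the target is normal), and a machine learning model 123 (the "third machine learning model", used to determine whether the current or long-term breathing state of the target is normal). Their functions are described below.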

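The transceiver 130 transmits and receives signals wirelessly or by wire. The transceiver 130 may also perform operations such as low-noise amplification, impedance matching, mixing, up- or down-conversion, filtering, and amplification. The processor 110 may be communicatively connected through the transceiver 130 to the robotic arm that controls the needle.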

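FIG. 2 is a schematic diagram of needle insertion into a target (i.e., the subject) 20 according to an embodiment of the present disclosure. The robotic arm 30 may include a clamp 31 for holding the needle 40. The computer-assisted needle insertion system 100 may assist the operator in controlling the robotic arm 30 to insert the tip 41 of the needle 40 into the target object 60. The target object 60 is, for example, a target organ inside the target 20.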

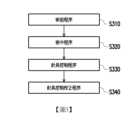

FIG. 3 is a flowchart of a computer-assisted needle insertion method according to an embodiment of the present disclosure; the method may be implemented by the computer-assisted needle insertion system 100 shown in FIG. 1. In step S310, the processor 110 may execute a preoperative procedure. In step S320, the processor 110 may execute an intraoperative procedure. In step S330, the processor 110 may execute a needle control procedure. In step S340, the processor 110 may execute a needle control correction procedure.

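The processor 110 may execute the preoperative procedure (step S310) to generate a CT image. Specifically, the processor 110 may be communicatively connected through the transceiver 130 to a CT scanner and control the CT scanner to scan the target 20 to generate the CT image. The CT image may include the target 20, the marker 50, and the target object 60. The processor 110 may receive the CT image from the CT scanner.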

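The processor 110 may execute the intraoperative procedure (step S320) to guide the needle 40 toward the needle entry point on the surface 21 of the target 20. The ideal entry point may be located at the intersection of the suggested needle insertion path 15 and the surface 21. The processor 110 may output the suggested needle insertion path 15 through the transceiver 130 for the operator's reference. FIG. 4 is a flowchart of step S320 according to an embodiment of the present disclosure. Step S320 may include steps S321 to S324.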

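In step S321, the processor 110 may receive the CT image and the needle insertion path 10 through the transceiver 130. For example, the processor 110 may receive the CT image from the CT scanner through the transceiver 130, and the operator may select the needle insertion path 10 on a terminal device, from which the processor 110 receives the needle insertion path 10 through the transceiver 130.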

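In step S322, the processor 110 may output the CT image and the needle insertion path 10 through the transceiver 130. Specifically, the processor 110 may be communicatively connected through the transceiver 130 to a display that shows the CT image for the operator's reference. Based on the CT image, the operator may decide whether to adopt the needle insertion path 10 and may attach the marker 50 to the surface 21 of the target 20 according to the needle insertion path 10, as shown in FIG. 2. For example, the operator may attach the marker 50 at the intersection of the needle insertion path 10 and the surface 21.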

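In step S323, after the marker 50 is attached to the target 20, the processor 110 may control the CT scanner to scan the target 20 to obtain a CT image including the target 20, the marker 50, and the target object 60. The processor 110 may receive this CT image from the CT scanner through the transceiver 130. In an embodiment, the processor 110 may output the CT image and the needle insertion path 10 to the display through the transceiver 130, and the display may show them for the operator's reference.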

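In step S324, the processor 110 may guide the tip 41 of the needle 40 toward the needle entry point on the surface 21 of the target 20 according to the CT image and the needle insertion path 10, where the entry point may be located within the marker 50. In an embodiment, the processor 110 may output prompt information (for example, a prompt image or prompt audio) through the transceiver 130 to prompt the operator to operate the robotic arm 30 so that the tip 41 of the needle 40 approaches the entry point. In an embodiment, the processor 110 may transmit instructions to the robotic arm 30 through the transceiver 130 to control the needle 40 so that the tip 41 approaches the entry point.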

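Specifically, the processor 110 may generate the suggested needle insertion path 15 according to the machine learning model 121, the CT image, and the needle insertion path 10, and guide the tip 41 of the needle 40 toward the entry point, where the CT image may include the target 20, the marker 50, and the target object 60. The entry point may lie on the suggested needle insertion path 15. The machine learning model 121 may be a reinforcement learning model; in this embodiment, it is a deep Q-learning (deep Q-network, DQN) model.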

Under the Q-learning algorithm, the agent of the machine learning model 121 moves from one state in the set of states S to another state in S by performing an action from the set of actions A. Performing an action in a particular state yields a reward. The agent of the machine learning model 121 may be implemented by the processor 110.

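A state in the state set S of the machine learning model 121 may include the coordinates of the needle tip 41, the coordinates of the marker 50, the coordinates of the target object 60, or a timestamp. States in S may be expressed in a Cartesian coordinate system or as quaternions. An action in the action set A of the machine learning model 121 may include a joint motion of the robotic arm 30: if the robotic arm 30 has one or more joints, a joint motion may drive one or more of those joints to change the coordinates of the needle tip 41. Notably, to prevent particular actions from damaging the robotic arm 30, the action set A may exclude joint motions corresponding to singularities of the robotic arm 30.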

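The algorithm used by the machine learning model 121 is given by Equations (1) and (2), where s_t is the state at time t, a_t is the action at time t, r_t is the reward at time t, and Q is the expected cumulative reward function. Equation (1) is used in the training phase and Equation (2) in the testing phase. Rewards are used only during training; testing uses the trained expected cumulative reward function Q. In an embodiment, Q may be a deep neural network. After collecting enough samples of states, actions, rewards, and next states, the processor 110 may form a training set TD = {(s_t, a_t, r_t, s_{t+1})} and train Q on N samples of TD (N a positive integer) according to Equation (1).

… (1)

… (2)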
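As a hedged illustration of the kind of training objective Equation (1) refers to, the sketch below computes the standard one-step deep Q-learning target y = r_t + γ·max_a' Q(s_{t+1}, a') and the mean squared error over N samples of TD. The discount factor γ (`gamma`), the function names, and the toy Q function are assumptions for illustration, not details from the disclosure; a practical DQN would also use a target network and terminal-state handling.

```python
from typing import Callable, List, Sequence, Tuple

State = Tuple[float, ...]
# One training sample (s_t, a_t, r_t, s_{t+1}) from the training set TD.
Transition = Tuple[State, int, float, State]
QFunc = Callable[[State, int], float]


def td_targets(batch: Sequence[Transition], q: QFunc,
               n_actions: int, gamma: float = 0.9) -> List[float]:
    """Standard one-step target y = r_t + gamma * max_a' Q(s_{t+1}, a')."""
    return [r + gamma * max(q(s_next, a) for a in range(n_actions))
            for (_, _, r, s_next) in batch]


def mse_loss(batch: Sequence[Transition], q: QFunc,
             n_actions: int, gamma: float = 0.9) -> float:
    """Mean squared TD error over the N samples; training lowers this by updating Q."""
    ys = td_targets(batch, q, n_actions, gamma)
    errors = [(y - q(s, a)) ** 2 for y, (s, a, _, _) in zip(ys, batch)]
    return sum(errors) / len(errors)


toy_q = lambda s, a: float(a)  # hypothetical stand-in for a trained network
batch = [((0.0,), 0, 1.0, (1.0,)), ((1.0,), 2, 0.0, (2.0,))]
print(td_targets(batch, toy_q, n_actions=3, gamma=0.5))  # [2.0, 1.0]
print(mse_loss(batch, toy_q, n_actions=3, gamma=0.5))    # 2.5
```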

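The processor 110 may determine the suggested needle insertion path 15 according to the state, where the suggested path 15 may approximate the needle insertion path 10, as shown in FIG. 2 (the operator selects path 10, and the suggested path 15 is driven toward path 10 through the expected cumulative reward function Q). Specifically, the processor 110 may optimize the coordinates of the needle tip 41, the marker 50, or the target object 60 according to Equation (2) so that the needle tip 41 approaches the entry point on the surface 21 of the target 20. For example, the state s_t may be a function of these coordinates at time t; conversely, the coordinates may be expressed as functions of the state s_t. From the state s_t, the processor 110 may choose the action a_t that maximizes the trained expected cumulative reward Q, and update s_t according to a_t to produce the state s_{t+1}. As these steps are repeated, the final state converges to an optimum, that is, the coordinates of the needle tip 41, the marker 50, or the target object 60 are optimized. The processor 110 may then determine the suggested needle insertion path 15 from the optimized coordinates; for example, the optimized coordinates of the needle tip 41, the marker 50, and the target object 60 together may define the suggested needle insertion path 15.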
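The test-time loop described above (pick the action that maximizes the trained Q, apply it, repeat until the state stops changing) can be sketched as a greedy rollout. The one-dimensional tip position, the three hypothetical actions, and the hand-written stand-in for Q are illustrative assumptions; in the disclosure the state holds the tip, marker, and target-object coordinates and the actions are robotic-arm joint motions.

```python
from typing import Callable

MOVES = (-1.0, 0.0, 1.0)   # hypothetical actions: move the tip -1, 0 or +1 mm along one axis
ENTRY_POINT = 5.0          # hypothetical entry-point coordinate (mm)


def greedy_rollout(s0: float, q: Callable[[float, int], float],
                   step: Callable[[float, int], float],
                   n_actions: int, max_steps: int = 100) -> float:
    """Apply a_t = argmax_a Q(s_t, a) repeatedly until the state stops changing."""
    s = s0
    for _ in range(max_steps):
        a = max(range(n_actions), key=lambda act: q(s, act))
        s_next = step(s, a)
        if s_next == s:    # converged: the best action leaves the state unchanged
            break
        s = s_next
    return s


def toy_step(s: float, a: int) -> float:
    """Hypothetical transition: apply the chosen move to the tip coordinate."""
    return s + MOVES[a]


def toy_q(s: float, a: int) -> float:
    """Stand-in for a trained Q: prefers actions that end closer to the entry point."""
    return -abs(toy_step(s, a) - ENTRY_POINT)


print(greedy_rollout(0.0, toy_q, toy_step, n_actions=3))  # 5.0
```

The `max_steps` cap is a design choice that keeps the loop bounded even if a learned Q oscillates between two states.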

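In an embodiment, the processor 110 may output, through the transceiver 130, reference information related to the states or actions of the machine learning model 121 to the operator, who may operate the robotic arm 30 according to that information so that the needle tip 41 approaches the entry point. In an embodiment, the processor 110 may transmit instructions corresponding to the states or actions of the machine learning model 121 to the robotic arm 30 through the transceiver 130, controlling the robotic arm 30 to move the needle 40 so that the needle tip 41 approaches the entry point.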

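In an embodiment, the reward of the machine learning model 121 may be associated with the distance between the coordinates of the needle tip 41 and the coordinates of the target object 60: the shorter the distance, the higher the reward. In other words, the reward may be used to train an expected cumulative reward function Q that minimizes the distance between the needle tip 41 and the target object 60 (the "first distance").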

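In an embodiment, the reward of the machine learning model 121 may be associated with the angle between a specific unit vector and the needle insertion path 10 through the marker 50, where the unit vector is formed by the coordinates of the needle tip 41, the marker 50, and the target object 60. The smaller the angle, the higher the reward. In other words, the reward may be used to train an expected cumulative reward function Q that makes the line segment through the needle tip 41, the marker 50, and the target object 60 parallel to the needle insertion path 10. In an embodiment, the reward may also be associated with the distance between that unit vector and the needle insertion path 10 (the "second distance"): the shorter the distance, the higher the reward. In other words, the reward may drive the line segment through the needle tip 41, the marker 50, and the target object 60 toward the needle insertion path 10.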

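In an embodiment, the reward of the machine learning model 121 may be associated with the time for which the coordinates of the needle tip 41 stop being updated: the longer they remain unchanged (for example, while the needle tip 41 is stationary), the lower the reward. In other words, the reward may be used to train an expected cumulative reward function Q that encourages the robotic arm 30 to keep moving the needle tip 41.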

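In an embodiment, the reward of the machine learning model 121 may be associated with the distance between the coordinates of the needle tip 41 and the surface 21 (the "third distance"): if this distance falls below a threshold, the reward drops sharply. In other words, the reward may be used to train an expected cumulative reward function Q that keeps the needle tip 41 away from the surface 21 so that they do not touch. Consequently, the robotic arm 30 does not bring the needle 40 into contact with the surface 21 before the operator operates the robotic arm 30 to pierce the surface 21 with the needle 40.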
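The five reward terms above could be combined into one shaped reward, for example as a weighted sum. In this minimal sketch the weights, the surface threshold, the penalty size, the reading of the "unit vector" as the direction from the needle tip toward the target object, and the "second distance" as the tip's perpendicular offset from the planned path line are all assumptions; the disclosure states only which way each term should move the reward.

```python
import math
from typing import List, Sequence

Vec = Sequence[float]


def _sub(a: Vec, b: Vec) -> List[float]:
    return [x - y for x, y in zip(a, b)]


def _dot(a: Vec, b: Vec) -> float:
    return sum(x * y for x, y in zip(a, b))


def _norm(a: Vec) -> float:
    return math.sqrt(_dot(a, a))


def shaped_reward(tip: Vec, target: Vec, path_point: Vec, path_dir: Vec,
                  stall_time: float, dist_to_surface: float,
                  weights=(1.0, 1.0, 1.0, 0.1),
                  surface_threshold: float = 2.0,
                  surface_penalty: float = 100.0) -> float:
    """Hypothetical weighted reward; each term pushes in the direction the text describes."""
    to_target = _sub(target, tip)
    d1 = _norm(to_target)                                   # first distance: tip to target object
    u = [x / d1 for x in to_target] if d1 > 0 else [0.0] * len(tip)
    p = [x / _norm(path_dir) for x in path_dir]             # unit direction of planned path 10
    angle = math.acos(max(-1.0, min(1.0, _dot(u, p))))      # angle term, radians
    rel = _sub(tip, path_point)
    d2 = _norm(_sub(rel, [_dot(rel, p) * x for x in p]))    # second distance: tip off the path line
    w1, w2, w3, w4 = weights
    reward = -w1 * d1 - w2 * angle - w3 * d2 - w4 * stall_time
    if dist_to_surface < surface_threshold:                 # third distance: avoid skin contact
        reward -= surface_penalty
    return reward
```

For a tip 10 mm from the target on the planned axis, with no stall and 5 mm of clearance, only the distance term contributes, giving -10.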

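The processor 110 may execute the needle control procedure (step S330) to output, according to the breathing signal of the target 20, a suggested needle insertion time period for the operator's reference. FIG. 5 is a flowchart of step S330 according to an embodiment of the present disclosure; step S330 may include steps S331 to S336.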

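In step S331, the processor 110 may obtain the breathing signal of the target 20 through the transceiver 130. Specifically, the processor 110 may determine a reference breathing cycle according to historical data, for example breathing signals recorded from multiple clinical subjects. The processor 110 may compute the average breathing cycle of these signals as the reference breathing cycle and determine a sampling period according to the reference breathing cycle.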
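A minimal sketch of the first half of step S331, assuming the historical data are lists of per-subject breathing periods in seconds, that each subject is weighted equally, and that the sampling period spans a fixed, hypothetical number of reference cycles (the disclosure does not say how the sampling period is derived from the reference cycle).

```python
from statistics import mean
from typing import Sequence


def reference_period(history: Sequence[Sequence[float]]) -> float:
    """Mean of per-subject average breathing periods (s); each subject weighs equally."""
    return mean([mean(periods) for periods in history])


def sampling_window(ref_period: float, n_cycles: int = 5) -> float:
    """Hypothetical rule: sample enough ECG to cover n_cycles reference breathing cycles."""
    return n_cycles * ref_period


ref = reference_period([[2.0], [4.0, 6.0]])   # per-subject means 2.0 and 5.0
print(ref, sampling_window(ref, n_cycles=4))  # 3.5 14.0
```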

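After the sampling period is determined, the processor 110 may receive electrocardiography (ECG) measurements of the target 20 through the transceiver 130 and sample an ECG signal from the measurements according to the sampling period. The processor 110 may then convert the ECG signal into the breathing signal of the target 20, for example using the R-size method or the RR method.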
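As a rough sketch of ECG-derived respiration, one can detect R peaks and then build either a series of R-peak amplitudes (our reading of the "R size" method) or of RR intervals, resampled onto a uniform time grid. The naive threshold-based peak detector, the threshold value, the 4 Hz output rate, and linear interpolation are simplifying assumptions; clinical systems use more robust QRS detection.

```python
import numpy as np


def detect_r_peaks(ecg: np.ndarray, threshold: float) -> np.ndarray:
    """Naive R-peak detector: local maxima that exceed a fixed amplitude threshold."""
    mid = ecg[1:-1]
    is_peak = (mid > ecg[:-2]) & (mid >= ecg[2:]) & (mid > threshold)
    return np.nonzero(is_peak)[0] + 1


def ecg_derived_respiration(ecg, fs: float, threshold: float,
                            method: str = "rr", out_fs: float = 4.0):
    """Return (times_s, surrogate breathing signal) resampled at out_fs Hz."""
    ecg = np.asarray(ecg, dtype=float)
    peaks = detect_r_peaks(ecg, threshold)
    t_peaks = peaks / fs
    if method == "rr":        # RR intervals: heart rate is modulated by breathing
        values, t_values = np.diff(t_peaks), t_peaks[1:]
    elif method == "r_size":  # R-peak amplitude: modulated by chest movement
        values, t_values = ecg[peaks], t_peaks
    else:
        raise ValueError(f"unknown method: {method}")
    if len(t_values) < 2:
        raise ValueError("need more R peaks to build a breathing signal")
    t_uniform = np.arange(t_values[0], t_values[-1], 1.0 / out_fs)
    return t_uniform, np.interp(t_uniform, t_values, values)
```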

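In step S332, the processor 110 may determine, according to the breathing signal, whether the long-term breathing state of the target 20 is normal; if it is, the process proceeds to step S333. The processor 110 may judge the long-term breathing state of the target 20 to be normal if the breathing amplitude or period of the target 20 is within the normal range of its population group (for example, the same age or the same sex).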

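Specifically, the processor 110 may extract breathing parameters from the breathing signal, such as breathing start time points, breathing end time points, or the average breathing cycle. FIG. 6 is a schematic diagram of a breathing signal 600 according to an embodiment of the present disclosure. The processor 110 may obtain N breathing cycles from the breathing signal 600 and compute the average breathing cycle from them, where N may be any positive integer; in this embodiment, N is 2. The processor 110 may obtain a breathing start time point 61 and a breathing stop time point 62 from the breathing signal 600 and compute the breathing cycle 610 as the difference between the stop time point 62 and the start time point 61. Likewise, the processor 110 may obtain a breathing start time point 63 and a breathing stop time point 64 and compute the breathing cycle 620 as the difference between the stop time point 64 and the start time point 63. The processor 110 may average the breathing cycles 610 and 620 to produce the average breathing cycle (in seconds).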
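A hedged sketch of the breathing-parameter extraction: here a cycle is assumed to start where the signal rises through its mean value and to end at the next such upward crossing. The disclosure does not define how the start and stop time points 61 to 64 are located, so this crossing rule and the function names are illustrative.

```python
import numpy as np


def breathing_cycles(signal, fs: float):
    """(start_s, stop_s) pairs, assuming a cycle runs between successive upward mean crossings."""
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()
    ups = np.nonzero((x[:-1] < 0) & (x[1:] >= 0))[0] + 1
    t = ups / fs
    return list(zip(t[:-1], t[1:]))


def average_period(cycles, n=None) -> float:
    """Average of (stop - start) over the first n cycles (all cycles if n is None)."""
    chosen = cycles if n is None else cycles[:n]
    return sum(stop - start for start, stop in chosen) / len(chosen)
```

With N = 2, as in the embodiment, `average_period(cycles, n=2)` averages the first two cycle lengths, mirroring the averaging of cycles 610 and 620.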

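In addition, the processor 110 may obtain the displacement of the coordinates of the marker 50 through the transceiver 130 and determine time-domain parameters according to the displacement. Specifically, the processor 110 may determine the displacement of the coordinates of the marker 50 according to the ECG signal corresponding to the breathing signal 600, and determine the time-domain parameters from that displacement. In an embodiment, the processor 110 may first low-pass filter the breathing signal and then determine the displacement of the coordinates of the marker 50 from the filtered breathing signal.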
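The optional low-pass step could be as simple as a moving average applied to the breathing signal or to a coordinate trace before displacements are measured. The moving-average filter, its window length, and measuring displacement relative to the first filtered sample are assumptions for illustration; the disclosure does not name a filter.

```python
import numpy as np


def moving_average(x, window: int = 5) -> np.ndarray:
    """Simple FIR low-pass filter; 'valid' mode avoids zero-padding artefacts at the edges."""
    kernel = np.ones(window) / window
    return np.convolve(np.asarray(x, dtype=float), kernel, mode="valid")


def filtered_displacement(trace, window: int = 5) -> np.ndarray:
    """Displacement of a smoothed trace relative to its first smoothed sample."""
    smooth = moving_average(trace, window)
    return smooth - smooth[0]
```

Note that "valid" mode shortens the output by `window - 1` samples, which keeps edge samples from being biased toward zero.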

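The time-domain parameters may be associated with sub-markers of the marker 50. FIG. 7 is a schematic diagram of the marker 50 according to an embodiment of the present disclosure. The marker 50 may include one or more sub-markers; in this embodiment, it includes sub-markers 51, 52, 53, and 54.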

In an embodiment, the time-domain parameters may be functions of time and may include the displacement of each sub-marker along each coordinate axis. For example, the time-domain parameters may include twelve time-domain features: the displacements of the coordinates of sub-markers 51, 52, 53, and 54 (the coordinates of a sub-marker are also called its "sub-coordinates") along the X, Y, and Z axes.

In an embodiment, the time-domain parameters may include, for each sub-marker, the sum of its displacements along the coordinate axes: the sum of the X-, Y-, and Z-axis displacements of the coordinates of sub-marker 51, and likewise for sub-markers 52, 53, and 54.

In an embodiment, the time-domain parameters may include a sum of the displacements of multiple sub-markers along different coordinate axes, for example the sum of the four per-sub-marker sums described above.
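The time-domain parameters described above can be assembled from the sub-marker coordinate traces. This sketch assumes each sub-marker is given as an array of shape (T, 3) in X, Y, Z order and takes per-axis displacement as the absolute change from the first frame; the disclosure says only "displacement", so the sign convention and the feature names are assumptions.

```python
import numpy as np


def time_domain_features(submarkers: dict) -> dict:
    """submarkers maps a sub-marker id (e.g. 51..54) to a (T, 3) array of X, Y, Z coordinates."""
    features, per_marker_sums = {}, []
    for mid, coords in submarkers.items():
        c = np.asarray(coords, dtype=float)
        disp = np.abs(c - c[0])                          # per-axis displacement from first frame
        for axis, name in enumerate("xyz"):
            features[f"{mid}_{name}"] = disp[:, axis]
        features[f"{mid}_sum"] = disp.sum(axis=1)        # X + Y + Z for this sub-marker
        per_marker_sums.append(features[f"{mid}_sum"])
    features["total"] = np.sum(per_marker_sums, axis=0)  # sum of the per-sub-marker sums
    return features
```

With the four sub-markers 51 to 54 this yields 12 per-axis features, 4 per-sub-marker sums, and 1 total, each a time series of length T.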

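In addition, the processor 110 may perform a short-time Fourier transform (STFT) on the breathing signal to generate frequency-domain parameters. For example, the processor 110 may perform an STFT with a resolution of 128 on the breathing signal 600 to produce frequency-domain parameters containing 128 frequency features.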
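A minimal NumPy sketch of producing 128 frequency features per frame. Reading "resolution 128" as 128 magnitude bins from a 256-point real FFT (dropping the Nyquist bin), along with the Hann window and the hop of 64 samples, are assumptions; the disclosure gives only the resolution.

```python
import numpy as np


def stft_features(x, n_bins: int = 128, hop: int = 64) -> np.ndarray:
    """Magnitude STFT with a Hann window; returns an array of shape (n_frames, n_bins)."""
    n_fft = 2 * n_bins                    # rfft of n_fft samples gives n_bins + 1 bins
    x = np.asarray(x, dtype=float)
    if len(x) < n_fft:
        raise ValueError("signal is shorter than one STFT frame")
    window = np.hanning(n_fft)
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack([x[i * hop:i * hop + n_fft] * window for i in range(n_frames)])
    spectrum = np.abs(np.fft.rfft(frames, axis=1))
    return spectrum[:, :n_bins]           # keep n_bins bins, drop the Nyquist bin
```

At a sampling rate of 25.6 Hz the bin spacing is 25.6 / 256 = 0.1 Hz, so a 0.3 Hz breathing rhythm (18 breaths per minute) peaks in bin 3.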

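After obtaining the breathing parameters, the time-domain parameters, and the frequency-domain parameters, the processor 110 may determine, based on the machine learning model 123, whether the long-term breathing state of the target 20 is normal. Specifically, the processor 110 may input breathing parameters, time-domain parameters, and frequency-domain parameters corresponding to multiple time points into the machine learning model 123 to produce one output per time point, each indicating whether the current breathing state is normal or abnormal; these current-state outputs accumulate into the long-term breathing state. The processor 110 may determine from these outputs whether the long-term breathing state of the target 20 is normal. The machine learning model 123 is, for example, a recurrent neural network (RNN) model. In an embodiment, the machine learning model 123 may be a supervised machine learning model that the processor 110 trains on collected historical breathing parameters, time-domain parameters, and frequency-domain parameters. The trained machine learning model 123 can then determine from the breathing parameters, time-domain parameters, and frequency-domain parameters whether the breathing state of the target 20 is normal.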
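The disclosure names an RNN but gives no architecture, so the sketch below shows only the data flow: a single-layer Elman RNN emits a per-time-step probability that the current state is normal, and those per-step decisions are accumulated into a long-term verdict. The class and function names, layer sizes, random (untrained) weights, the 0.5 decision threshold, and the 90% rule are all hypothetical.

```python
import numpy as np


class ElmanRNN:
    """Untrained single-layer RNN; in practice the weights would come from supervised training."""

    def __init__(self, n_in: int, n_hidden: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.W_x = rng.normal(0.0, 0.1, (n_hidden, n_in))
        self.W_h = rng.normal(0.0, 0.1, (n_hidden, n_hidden))
        self.b = np.zeros(n_hidden)
        self.w_out = rng.normal(0.0, 0.1, n_hidden)
        self.b_out = 0.0

    def step_probs(self, xs) -> np.ndarray:
        """xs: (T, n_in) feature vectors -> (T,) probabilities that each step is normal."""
        h = np.zeros_like(self.b)
        probs = []
        for x in np.asarray(xs, dtype=float):
            h = np.tanh(self.W_x @ x + self.W_h @ h + self.b)
            probs.append(1.0 / (1.0 + np.exp(-(self.w_out @ h + self.b_out))))
        return np.array(probs)


def long_term_normal(step_probs, min_fraction: float = 0.9) -> bool:
    """Accumulate per-step current-state decisions into a long-term decision."""
    normal = np.asarray(step_probs) >= 0.5
    return bool(normal.mean() >= min_fraction)
```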

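In step S333, the processor 110 estimates, according to the machine learning model 122 and the breathing signal, whether the future breathing state of the target 20 is normal; if it is, the process proceeds to step S334.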

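Specifically, the processor 110 may estimate the future breathing state of the target 20 (for example, the breathing state at time t+1, t+2, or t+3) based on the machine learning model 122 and according to the time-domain or frequency-domain parameters associated with the breathing signal 600 (for example, those described in step S332). The processor 110 may input these parameters into the machine learning model 122, which outputs data indicating whether the future breathing state is normal or abnormal. The machine learning model 122 is, for example, an RNN model. In an embodiment, the machine learning model 122 may be a supervised machine learning model that the processor 110 trains on collected historical time-domain and frequency-domain parameters. The trained machine learning model 122 can then estimate from the time-domain and frequency-domain parameters whether the future breathing state of the target 20 is normal.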
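Training a future-state predictor as a supervised model requires pairs of past features and a future label. A hedged sketch of building those pairs, where the window length `window` and the horizon `horizon` (for example 1, 2, or 3 steps for times t+1 to t+3) are hypothetical parameters and labels are assumed to be encoded as 1 for normal and 0 for abnormal:

```python
import numpy as np


def future_state_pairs(features, labels, window: int, horizon: int):
    """Build X of shape (M, window, F) and y of shape (M,), where X[i] holds
    features[t - window + 1 .. t] and y[i] = labels[t + horizon]."""
    f = np.asarray(features, dtype=float)
    lab = np.asarray(labels)
    xs, ys = [], []
    for t in range(window - 1, len(f) - horizon):
        xs.append(f[t - window + 1:t + 1])
        ys.append(lab[t + horizon])
    return np.array(xs), np.array(ys)
```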

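In step S334, the processor 110 may determine a respiratory pause period of the target 20 according to the breathing signal. Specifically, the processor 110 may generate a feature signal from the time-domain parameters corresponding to the breathing signal 600 (for example, those described in step S332) and, in response to the feature signal being below a threshold during a particular time period, set that period as a respiratory pause period. In an embodiment, the feature signal may be a feature of the displacements of the sub-markers of the marker 50 along the coordinate axes.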

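FIG. 8 is a schematic diagram of a feature signal 700 according to an embodiment of the present disclosure. Assume that period 710 corresponds to the breathing cycle 610 and period 720 corresponds to the breathing cycle 620. In response to the feature signal 700 being below a threshold 800 during period 810 or period 820, the processor 110 may set period 810 or period 820 as a respiratory pause period of the target 20. Performing the needle insertion during a respiratory pause period can substantially reduce the risk of the procedure.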
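The threshold rule above amounts to finding contiguous runs where the feature signal stays below the threshold. A minimal sketch, assuming a uniformly sampled feature signal and an optional, hypothetical minimum duration to discard very short dips:

```python
import numpy as np


def pause_periods(feature, fs: float, threshold: float, min_duration: float = 0.0):
    """Return (start_s, end_s) intervals where feature < threshold; end is exclusive."""
    below = np.asarray(feature) < threshold
    padded = np.concatenate(([False], below, [False]))
    edges = np.diff(padded.astype(int))
    starts = np.nonzero(edges == 1)[0]     # first sample of each below-threshold run
    ends = np.nonzero(edges == -1)[0]      # one past the last sample of each run
    return [(s / fs, e / fs) for s, e in zip(starts, ends)
            if (e - s) / fs >= min_duration]
```

Padding with `False` on both sides ensures that runs touching the start or end of the recording are still closed off.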

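In step S335, the processor 110 may determine the suggested needle insertion time period according to the respiratory pause period. For example, the processor 110 may select at least one of periods 810 and 820 as the suggested needle insertion time period.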

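In an embodiment, the processor 110 may determine the suggested needle insertion time period according to the current breathing state of the target 20. Specifically, the processor 110 may obtain, through the transceiver 130, a breathing signal corresponding to the respiratory pause period (the "second breathing signal" or "pause signal"). For example, the processor 110 may receive, through the transceiver 130, an ECG signal of the target 20 corresponding to the respiratory pause period and convert it into the pause signal using the R-size method or the RR method.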

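Next, the processor 110 may generate time-domain and frequency-domain parameters from the pause signal, for example in a manner similar to step S332. The processor 110 may input these parameters into the machine learning model 123, which outputs data indicating whether the current breathing state of the target 20 is normal or abnormal. In response to the current breathing state being normal, the processor 110 may set the respiratory pause period corresponding to the pause signal as the suggested needle insertion time period.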
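Step S335 and the current-state check can be combined into one filter that keeps only the pause periods the current-state classifier accepts. In this sketch the classifier is passed in as a callable; the name `current_state_normal` and the longest-first ordering are hypothetical choices, not details from the disclosure.

```python
from typing import Callable, List, Tuple

Interval = Tuple[float, float]  # (start_s, end_s)


def suggested_periods(pauses: List[Interval],
                      current_state_normal: Callable[[Interval], bool]) -> List[Interval]:
    """Keep pause periods whose current breathing state is judged normal, longest first."""
    accepted = [p for p in pauses if current_state_normal(p)]
    return sorted(accepted, key=lambda p: p[1] - p[0], reverse=True)
```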

In step S336, the processor 110 may output the suggested needle insertion time period through the transceiver 130.

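In an embodiment, to avoid injuring the target 20, the processor 110 may instruct the robotic arm 30 through the transceiver 130 to release the clamp 31 outside the suggested needle insertion time period, so that the needle 40 can move with the breathing motion of the target 20.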
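The clamp rule above reduces to a time-based switch. A minimal sketch, assuming suggested periods are half-open (start, end) intervals in seconds; the "HOLD"/"RELEASE" command strings are hypothetical, since the disclosure does not specify the robotic arm's command interface.

```python
from typing import Sequence, Tuple


def clamp_command(t: float, windows: Sequence[Tuple[float, float]]) -> str:
    """Hold the needle only inside suggested insertion windows; release it otherwise."""
    inside = any(start <= t < end for start, end in windows)
    return "HOLD" if inside else "RELEASE"
```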

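In an embodiment, the processor 110 may determine a needle movement time and a needle movement distance for the needle 40 according to the suggested needle insertion time period and output them through the transceiver 130. For example, the processor 110 may set the needle movement time (or the needle movement distance) to be proportional to the length of the suggested needle insertion time period.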

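The processor 110 may execute the control correction procedure (step S340) to ensure that the needle insertion proceeds smoothly. FIG. 9 is a flowchart of step S340 according to an embodiment of the present disclosure; step S340 may include steps S341 to S343. In step S341, the processor 110 may obtain a CT image of the target 20 during the needle insertion; the CT image may include the target 20, the needle 40, the marker 50, and the target object 60. In step S342, the processor 110 may determine from the CT image whether the current path of the needle 40 matches the suggested needle insertion path 15. In step S343, the processor 110 may adjust the robotic arm 30 according to the current path of the needle 40: if the current path matches the suggested path 15, the processor 110 may keep the original configuration of the robotic arm 30; otherwise, the processor 110 may update the suggested needle insertion path according to the current path of the needle 40 and output the updated suggested path through the transceiver 130.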
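The comparison in step S342 needs some tolerance. A minimal sketch, assuming each path is represented by an entry point and a target point in CT coordinates (mm), and that "matches" means both the angular deviation and the target-point offset fall below hypothetical tolerances:

```python
import math


def _unit(p, q):
    """Unit vector pointing from point p to point q."""
    d = [b - a for a, b in zip(p, q)]
    n = math.sqrt(sum(x * x for x in d))
    return [x / n for x in d]


def path_matches(current, suggested, max_angle_deg: float = 2.0,
                 max_offset_mm: float = 2.0) -> bool:
    """current / suggested: (entry_point, target_point) tuples of 3-D coordinates."""
    u, v = _unit(*current), _unit(*suggested)
    cos = max(-1.0, min(1.0, sum(a * b for a, b in zip(u, v))))
    angle = math.degrees(math.acos(cos))               # direction mismatch
    offset = math.dist(current[1], suggested[1])       # end-point mismatch
    return angle <= max_angle_deg and offset <= max_offset_mm
```

Checking both terms catches a needle that is parallel to the plan but shifted, which an angle-only test would miss.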

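FIG. 10 is a flowchart of a computer-assisted needle insertion method according to an embodiment of the present disclosure. The method is adapted to control a needle and may be implemented by the computer-assisted needle insertion system 100 shown in FIG. 1. In step S111, a first machine learning model and a second machine learning model are obtained. In step S112, a computed tomography image and a needle insertion path are obtained, a suggested needle insertion path is generated according to the first machine learning model, the computed tomography image, and the needle insertion path, and the needle is guided toward a needle entry point on the surface of the target, the entry point lying on the suggested needle insertion path. In step S113, a breathing signal of the target is obtained, and whether the future breathing state of the target is normal is estimated according to the second machine learning model and the breathing signal. In step S114, in response to determining that the future breathing state is normal, a suggested needle insertion time period is output according to the breathing signal.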

In summary, before the needle insertion is performed, the present disclosure can position the needle using deep learning so that it approaches the ideal entry point on the target surface, allowing the operator to insert the needle accurately into the target object from that entry point. In addition, the present disclosure can determine with machine learning whether the breathing state of the target is normal, so that the operator avoids performing the insertion while breathing is abnormal and the procedure is not disrupted. Furthermore, the present disclosure can identify respiratory pause periods from the target's breathing signal and suggest that the operator perform the insertion during those periods, minimizing the effect of breathing on the procedure. Outside the respiratory pause periods, the computer-assisted needle insertion system can have the robotic arm release the clamp holding the needle, reducing injury to the subject's body from the needle.

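- 10: needle insertion path
- 100: computer-assisted needle insertion system
- 110: processor
- 120: storage medium
- 121, 122, 123: machine learning models
- 130: transceiver
- 15: suggested needle insertion path
- 20: target
- 21: surface
- 30: robotic arm
- 31: clamp
- 40: needle
- 41: needle tip
- 50: marker
- 51, 52, 53, 54: sub-markers
- 60: target object
- 600: breathing signal
- 61, 63: breathing start time points
- 610, 620: breathing cycles
- 62, 64: breathing stop time points
- 700: feature signal
- 710, 720, 810, 820: time periods
- 800: threshold
- S111, S112, S113, S114, S310, S320, S321, S322, S323, S324, S330, S331, S332, S333, S334, S335, S336, S340, S341, S342, S343: steps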

- FIG. 1 is a schematic diagram of a computer-assisted needle insertion system according to an embodiment of the present disclosure.
- FIG. 2 is a schematic diagram of needle insertion into a target according to an embodiment of the present disclosure.
- FIG. 3 is a flowchart of a computer-assisted needle insertion method according to an embodiment of the present disclosure.
- FIG. 4 is a flowchart of step S320 according to an embodiment of the present disclosure.
- FIG. 5 is a flowchart of step S330 according to an embodiment of the present disclosure.
- FIG. 6 is a schematic diagram of a breathing signal according to an embodiment of the present disclosure.
- FIG. 7 is a schematic diagram of a marker according to an embodiment of the present disclosure.
- FIG. 8 is a schematic diagram of a feature signal according to an embodiment of the present disclosure.
- FIG. 9 is a flowchart of step S340 according to an embodiment of the present disclosure.
- FIG. 10 is a flowchart of a computer-assisted needle insertion method according to an embodiment of the present disclosure.

S111, S112, S113, S114: steps

Claims (11)

Priority Applications (2)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| US17/534,418 (US12171460B2) | 2020-12-29 | 2021-11-23 | Computer-assisted needle insertion system and computer-assisted needle insertion method |
| US18/942,801 (US20250064478A1) | 2020-12-29 | 2024-11-11 | Computer-assisted needle insertion method |

Applications Claiming Priority (2)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| US202063131765P | 2020-12-29 | 2020-12-29 | |
| US63/131,765 | 2020-12-29 | | |

Publications (2)

| Publication Number | Publication Date |
|---|---|
| TW202226031A (en) | 2022-07-01 |
| TWI792592B (en) | 2023-02-11 |

Family ID: 83436741

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |
|---|---|---|---|
| TW110136416A (TWI792592B) | Computer-assisted needle insertion system and computer-assisted needle insertion method | 2020-12-29 | 2021-09-30 |

Country Status (1)

| Country | Link |
|---|---|
| TW (1) | TWI792592B (en) |

Citations (5)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| US20190117317A1 (en)* | 2016-04-12 | 2019-04-25 | Canon U.S.A., Inc. | Organ motion compensation |
| US20190223958A1 (en)* | 2018-01-23 | 2019-07-25 | Inneroptic Technology, Inc. | Medical image guidance |
| TW201941218A (en)* | 2018-01-08 | 2019-10-16 | 美商普吉尼製藥公司 | Systems and methods for rapid neural network-based image segmentation and radiopharmaceutical uptake determination |
| CN110364238A (en)* | 2018-04-11 | 2019-10-22 | 西门子医疗有限公司 | Machine learning-based contrast agent management |
| CN111565632A (en)* | 2017-10-13 | 2020-08-21 | 奥特美医疗有限责任公司 | Systems and microtubule conductivity for characterizing, diagnosing, and treating a patient's health condition, and methods of using the same |

- 2021-09-30: TW application TW110136416A (patent TWI792592B), status: active

Also Published As

| Publication number | Publication date |
|---|---|
| TW202226031A (en) | 2022-07-01 |

Similar Documents

| Publication | Title |
|---|---|
| JP4836122B2 (en) | Surgery support apparatus, method and program |
| JP6813592B2 (en) | Organ movement compensation |
| JP6843639B2 (en) | Ultrasonic diagnostic device and ultrasonic diagnostic support device |
| CN104055520B (en) | Human organ motion monitoring method and operation guiding system |
| JP6441051B2 (en) | Dynamic filtering of mapping points using acquired images |
| JP2020520691A (en) | Biopsy devices and systems |
| JP6475324B2 (en) | Optical tracking system and coordinate system matching method of optical tracking system |
| US20110160569A1 (en) | System and method for real-time surface and volume mapping of anatomical structures |
| JP2007083038A5 (en) | |
| JP2019528899A (en) | Visualization of image objects related to instruments in extracorporeal images |
| US20250064478A1 (en) | Computer-assisted needle insertion method |
| CN114073581B (en) | Bronchus electromagnetic navigation system |
| JP2022095582A (en) | Probe cavity motion modeling |
| CN114748141A (en) | Puncture needle three-dimensional pose real-time reconstruction method and device based on X-ray image |
| JP2016534811A (en) | Support device for supporting alignment of imaging device with respect to position and shape determination device |
| CN111403017A (en) | Medical assistance device, system, and method for determining a deformation of an object |
| US20230186471A1 (en) | Providing a specification |
| JP2019063518A (en) | Estimation of ablation size and visual representation |
| JP2007537816A (en) | Medical imaging system for mapping the structure of an object |
| US20240099776A1 (en) | Systems and methods for integrating intraoperative image data with minimally invasive medical techniques |
| TWI792592B (en) | Computer-assisted needle insertion system and computer-assisted needle insertion method |
| US7039226B2 (en) | Method and apparatus for modeling momentary conditions of medical objects dependent on at least one time-dependent body function |
| JP6881945B2 (en) | Volume measurement map update |
| WO2024222402A1 (en) | Catheter robot and registration method thereof |
| JP2023513383A (en) | Medical imaging systems, devices, and methods for visualizing deployment of internal therapeutic devices |