CN116295342A - A Multi-Sensing State Estimator for Aircraft Surveying - Google Patents

A Multi-Sensing State Estimator for Aircraft Surveying

- Publication number

- CN116295342A (application number CN202310243900.8)

- Authority

- CN

- China

- Prior art keywords

- unmanned aerial

- aerial vehicle

- imu

- state estimator

- optimization

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Pending

Classifications

- G—PHYSICS

- G01—MEASURING; TESTING

- G01C—MEASURING DISTANCES, LEVELS OR BEARINGS; SURVEYING; NAVIGATION; GYROSCOPIC INSTRUMENTS; PHOTOGRAMMETRY OR VIDEOGRAMMETRY

- G01C21/00—Navigation; Navigational instruments not provided for in groups G01C1/00 - G01C19/00

- G01C21/005—Navigation; Navigational instruments not provided for in groups G01C1/00 - G01C19/00 with correlation of navigation data from several sources, e.g. map or contour matching

- G—PHYSICS

- G01—MEASURING; TESTING

- G01C—MEASURING DISTANCES, LEVELS OR BEARINGS; SURVEYING; NAVIGATION; GYROSCOPIC INSTRUMENTS; PHOTOGRAMMETRY OR VIDEOGRAMMETRY

- G01C21/00—Navigation; Navigational instruments not provided for in groups G01C1/00 - G01C19/00

- G01C21/20—Instruments for performing navigational calculations

- G01C21/206—Instruments for performing navigational calculations specially adapted for indoor navigation

- Y—GENERAL TAGGING OF NEW TECHNOLOGICAL DEVELOPMENTS; GENERAL TAGGING OF CROSS-SECTIONAL TECHNOLOGIES SPANNING OVER SEVERAL SECTIONS OF THE IPC; TECHNICAL SUBJECTS COVERED BY FORMER USPC CROSS-REFERENCE ART COLLECTIONS [XRACs] AND DIGESTS

- Y02—TECHNOLOGIES OR APPLICATIONS FOR MITIGATION OR ADAPTATION AGAINST CLIMATE CHANGE

- Y02T—CLIMATE CHANGE MITIGATION TECHNOLOGIES RELATED TO TRANSPORTATION

- Y02T10/00—Road transport of goods or passengers

- Y02T10/10—Internal combustion engine [ICE] based vehicles

- Y02T10/40—Engine management systems

Landscapes

- Engineering & Computer Science (AREA)

- Radar, Positioning & Navigation (AREA)

- Remote Sensing (AREA)

- Automation & Control Theory (AREA)

- Physics & Mathematics (AREA)

- General Physics & Mathematics (AREA)

- Control Of Position, Course, Altitude, Or Attitude Of Moving Bodies (AREA)

Description

Translated from Chinese

Technical field

The invention relates to the technical field of flight-control sensing, and in particular to a multi-sensing state estimator for aircraft surveying.

Background

UAV positioning methods can be divided by application into indoor and outdoor methods. GPS is an effective positioning method in open, long-range outdoor scenarios, but it provides only a coarse estimate over a large area.

State estimation is a multi-sensor data fusion method that fuses two or more sources of imprecise information to improve the accuracy of a measurement system.

Traditional indoor positioning algorithms fall into two main classes: odometry methods and inertial navigation methods. Inertial navigation uses an accelerometer and a gyroscope for positioning; because integration errors accumulate, its positioning accuracy is generally poor. Odometry methods are further subdivided, according to the sensors used, into optical flow, visual odometry, and visual-inertial odometry. Optical flow is widely used for indoor UAV positioning because its principle is simple and it is easy to implement.

Positioning based on visual odometry (VO) is a relatively new and actively studied technique. VO collects image data from a camera rigidly mounted on the UAV and estimates pose from image features and motion constraints, without producing accumulated error. However, when the visual sensor sampling rate and the UAV's speed are relatively high, the number of feature points decreases and the estimation accuracy drops.

Summary of the invention

The purpose of the invention is to provide a multi-sensing state estimator for aircraft surveying that solves the problems raised in the background above.

To achieve this purpose, the invention provides the following technical solution: a multi-sensing state estimator for aircraft surveying. The solution establishes a detailed mathematical model of the quadrotor UAV; gives a model description of the optimization-based multi-sensor state estimator; addresses UAV exploration of unknown environments; implements tightly coupled estimation of image and IMU data together with visual image tracking; and performs inertial sensor pre-integration. After these steps the system enters initialization, which comprises three stages: computing the relative rotation between the camera and the IMU, camera initialization, and alignment of the IMU with the visual information.

Loop closure detection runs at the same time. A local path planning method ensures that the UAV can fly among obstacles. Once a single UAV has local path planning capability, cooperative area search by multiple UAVs can be achieved with a multi-UAV swarm SLAM target allocation strategy.

Preferably, a motion model of the quadrotor UAV is established. From force and moment analysis of the airframe, the simplified equations of motion of the airframe are obtained:

Preferably, a model description is given of the optimization-based multi-sensor state estimator. This estimator provides real-time pose estimation for a UAV in complex environments and removes the need, found in traditional UAV SLAM, to carry a large number of sensors. For exploration of unknown environments and map building by a quadrotor UAV swarm, an online UAV motion planning algorithm suited to the multi-sensor state estimator is studied. An improved A* algorithm based on dynamics-based path search and a B-spline trajectory optimization method are proposed for the online motion decision-making and planning of a single UAV. A UAV swarm SLAM control strategy is also designed to plan the target points and waypoints of UAVs in the swarm, which closes the loop of the method framework.

Preferably, UAV exploration of unknown environments is addressed by focusing on three problems: UAV state estimation, path planning, and motion control.

Preferably, tightly coupled estimation of image and IMU data is implemented. Camera motion is obtained from depth images, and the pre-integrated trajectory of the carrier is obtained from IMU data; an optimization process then tightly couples the trajectory data computed from the images and from the IMU. A least-squares optimization minimizes the difference between the visual measurements and the IMU estimates, called the "residual", which achieves the tight coupling of image and IMU data.

Preferably, visual image tracking is implemented. The pixel coordinates and depths of multiple sets of feature points are used to recover the state of each image frame. The state comprises the camera's spatial position (Position, P), velocity (Velocity, V), and rotation expressed as a quaternion (Quaternion, Q). Tracking has three main processing steps: sparse optical flow (KLT) tracking, SFM 3D motion reconstruction, and selection of sliding-window keyframes.

Preferably, inertial sensor pre-integration is performed and the visual and inertial observations are coupled: the visual observations are solved for and their residuals are computed. The Jacobian matrix of the residuals gives the descent direction in the optimization, and the covariance matrix gives the weights of the corresponding observations. In particular, because continuous-time integration makes the later optimization difficult, a pre-integration stage is introduced; the problem is addressed by deriving the pre-integration of the inertial sensor measurements over continuous time in the world coordinate frame.

Preferably, after the above steps, the system enters the initialization stage. A tightly coupled visual-inertial system must recover and calibrate its system parameters through initialization. The recovered parameters include the camera scale, gravity, velocity, and the IMU measurement error (bias). Because visual 3D motion reconstruction (SFM) performs well during initialization, initialization relies mainly on SFM. Aligning the IMU pre-integration results with the SFM results allows the IMU measurement error to be initialized further. Initialization comprises three stages: computing the relative rotation between the camera and the IMU, camera initialization, and alignment of the IMU with the visual information.

Preferably, loop closure detection is performed. When a new keyframe is generated, the FAST feature detector finds new feature points, which differ from the feature points found during KLT optical flow tracking. Descriptors are then extracted with the BRIEF method and matched against historical descriptors, and the DBoW bag-of-words dictionary library stores and retrieves the descriptor information. If the feature points corresponding to descriptors in the current keyframe match historical feature points stored in the bag of words, the system has found a loop closure point. Once a loop closure point is found, the earliest keyframe of the loop is identified and its pose is held fixed. Because loop closure detection is always slower than keyframe generation, some keyframes are usually dropped by frame skipping so that detection does not fall behind, which preserves detection efficiency. After loop closure detection completes, the multi-sensor state estimator uses fast relocalization to return the loop frame information to the back-end joint optimization and update the optimization data. The UAV must also be kept from colliding with obstacles. A local path planning method uses the map already built and the obstacle information updated by the sensors in real time to generate one or more local paths online, so that the UAV can fly among obstacles. A B-spline optimization method refines the trajectory produced by the dynamics-based search, improving the smoothness of the path and increasing clearance where the path passes too close to obstacles.
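As a rough illustration of why a B-spline yields a smoother path than the raw search output, the sketch below evaluates a uniform cubic B-spline over a list of control points. It only evaluates the curve; it does not perform the optimization of control points for smoothness and obstacle clearance that the text describes. The function names and the sampling parameter are hypothetical.

```python
def bspline_point(p0, p1, p2, p3, u):
    """Evaluate one uniform cubic B-spline segment at u in [0, 1)."""
    b0 = (1 - u) ** 3 / 6
    b1 = (3 * u ** 3 - 6 * u ** 2 + 4) / 6
    b2 = (-3 * u ** 3 + 3 * u ** 2 + 3 * u + 1) / 6
    b3 = u ** 3 / 6
    # The four basis weights sum to 1, so the point stays inside the
    # convex hull of the four control points.
    return tuple(b0 * a + b1 * b + b2 * c + b3 * d
                 for a, b, c, d in zip(p0, p1, p2, p3))


def smooth_path(control_points, samples_per_segment=10):
    """Sample the spline defined by consecutive groups of four control points."""
    path = []
    for i in range(len(control_points) - 3):
        segment = control_points[i:i + 4]
        for k in range(samples_per_segment):
            path.append(bspline_point(*segment, k / samples_per_segment))
    return path
```

Because each sampled point is a convex combination of nearby control points, moving a control point away from an obstacle moves only the local part of the curve, which is one reason this representation suits local replanning.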

Preferably, cooperative area search by multiple UAVs is achieved with a multi-UAV swarm SLAM target allocation strategy, and the DARP algorithm is used to partition the area. DARP stands for Divide Areas Algorithm for Optimal Multi-Robot Coverage Path Planning. DARP divides the area according to the initial positions of the UAVs so that each region is connected and the regions have approximately equal area. Once the exploration area has been divided, each UAV executes the configured search strategy within its region.
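The idea of dividing a grid according to the UAVs' initial positions can be sketched as below. This is not DARP itself: it is a plain nearest-start-cell assignment, which is only a distance-based starting point. DARP additionally iterates to equalize region areas and enforce connectivity. The function name and grid representation are assumptions made for illustration.

```python
def partition_grid(rows, cols, robots):
    """Assign every grid cell to the robot whose start cell is nearest.

    robots is a list of (row, col) start cells. Returns a dict mapping
    robot index to the list of cells assigned to it.
    """
    regions = {i: [] for i in range(len(robots))}
    for r in range(rows):
        for c in range(cols):
            nearest = min(
                range(len(robots)),
                key=lambda i: (r - robots[i][0]) ** 2 + (c - robots[i][1]) ** 2,
            )
            regions[nearest].append((r, c))
    return regions
```

For two UAVs starting in opposite corners of the top row of a 4 x 4 grid, this simple rule already yields two equal, connected halves; for less symmetric start positions the regions would be unequal, which is the case the iterative algorithm is meant to correct.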

Compared with the prior art, the beneficial effects of the invention are as follows:

An optimization-based sensor fusion framework combines the advantages of tight and loose coupling and exploits the short-term stability of a low-cost inertial measurement unit (IMU) within the image sampling interval. This raises the effective sampling rate of the vision system and allows stable pose tracking even when the UAV accelerates or turns sharply. Combined with UAV swarm control algorithms and strategies, a simultaneous localization and mapping method for UAV swarms based on this fusion framework is studied in depth, yielding a multi-sensing state estimator with high accuracy and good real-time performance.

Description of drawings

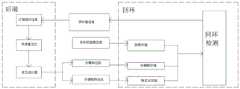

Figure 1 is a structural modeling block diagram of unknown-environment exploration;

Figure 2 shows the position control block diagram and the PID block diagrams for horizontal position, height, and attitude control;

Figure 3 is a structural diagram of the visual image tracking system;

Figure 4 is a schematic diagram of the sliding window;

Figure 5 is a schematic diagram of the fast relocalization process;

Figure 6 is a schematic diagram of random search and parallel search;

Figure 7 is a schematic diagram of the tight coupling of images and IMU data;

Figure 8 is a schematic diagram of pre-integration.

Detailed description

The technical solutions in the embodiments of the invention are described clearly and completely below with reference to the accompanying drawings. The described embodiments are only some, not all, of the embodiments of the invention. All other embodiments obtained by persons of ordinary skill in the art from these embodiments without creative effort fall within the scope of protection of the invention.

Referring to Figures 1-8, the invention provides a technical solution: a multi-sensing state estimator for aircraft surveying that provides UAV positioning and environment mapping in multiple scenarios. It is applied in a real UAV swarm SLAM system and, combined with UAV control algorithms and strategies, enables the swarm to fly autonomously and build maps in unknown environments. An optimization-based sensor fusion framework combines the advantages of tight and loose coupling and exploits the short-term stability of a low-cost inertial measurement unit (IMU) within the image sampling interval. This raises the effective sampling rate of the vision system and allows stable pose tracking even when the UAV accelerates or turns sharply. Combined with UAV swarm control algorithms and strategies, a simultaneous localization and mapping method for UAV swarms based on this fusion framework is studied in depth, yielding a UAV SLAM system with high accuracy and good real-time performance. The system is mounted on a QAV250 quadrotor airframe to form an enclosed-area survey aircraft that meets the need for high-precision positioning indoors, where GPS is unsuitable, and that can be deployed at scale. The research plan for the multi-sensor state estimation system is as follows:

First, a detailed mathematical model of the quadrotor UAV is established. From force and moment analysis of the airframe, the simplified equations of motion of the airframe are obtained:

Next, a model description is given of the optimization-based multi-sensor state estimator. This estimator provides real-time pose estimation for a UAV in complex environments and removes the need, found in traditional UAV SLAM, to carry a large number of sensors. Finally, for exploration of unknown environments and map building by a quadrotor UAV swarm, an online UAV motion planning algorithm suited to the multi-sensor state estimator is studied. An improved A* algorithm based on dynamics-based path search and a B-spline trajectory optimization method are proposed for the online motion decision-making and planning of a single UAV. A UAV swarm SLAM control strategy is also designed to plan the target points and waypoints of UAVs in the swarm, which closes the loop of the method framework.

In particular, for a UAV to explore an unknown environment, three problems must be solved: state estimation, path planning, and motion control. In practice these three problems are assigned to three separate processors, which reduces the computational load and real-time pressure on the system. Their roles and relationships are shown in Figure 1.

The flight control system of a small quadrotor UAV generally uses a single-chip microcontroller as the computing unit of the flight controller. It is equipped with strapdown inertial sensors, together with height and position sensors, which provide the feedback data needed for quadrotor flight. A quadrotor UAV is a nonlinear, underactuated plant. The attitude and position controllers of the UAV are designed with the classic proportional-integral-derivative (PID) control algorithm. From top to bottom, Figure 2 shows the pose control block diagram, the horizontal position control PID structure, the height control PID structure, and the attitude control PID structure.
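The PID loops mentioned above can be illustrated with a minimal textbook controller. This is a sketch under stated assumptions, not the controller structure of Figure 2: the gains, the integral clamp, and the class and parameter names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    """Textbook PID controller with a clamped integral term."""
    kp: float
    ki: float
    kd: float
    integral_limit: float = 1.0  # hypothetical anti-windup bound
    _integral: float = 0.0
    _prev_error: Optional[float] = None

    def update(self, setpoint: float, measurement: float, dt: float) -> float:
        error = setpoint - measurement
        # Accumulate the integral and clamp it to limit windup.
        self._integral += error * dt
        self._integral = max(-self.integral_limit,
                             min(self.integral_limit, self._integral))
        # No derivative action on the first call, when no history exists.
        if self._prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self._prev_error) / dt
        self._prev_error = error
        return self.kp * error + self.ki * self._integral + self.kd * derivative
```

In a cascaded arrangement, the output of an outer loop (for example height) would serve as the setpoint of an inner loop (for example vertical velocity or attitude), with one such controller per loop.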

The control allocator converts the desired thrust and moments into desired motor speeds. When a rotor UAV hovers, the thrust of a single propeller can be expressed by a formula with a constant coefficient c_T, which can be measured experimentally. Likewise, the reaction torque that a single propeller exerts on the fuselage can be expressed by a formula with a constant coefficient c_M, which can also be measured experimentally. The control allocation of the X-configuration quadrotor and the control effectiveness model of the multirotor are as follows:

c_T, d, and c_M are unknown parameters, which can be compensated by the proportional gains in the controller. Let the outputs in the throttle, pitch, roll, and yaw channels be σ1, σ2, σ3, and σ4 respectively; then set:
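A control allocation of this kind can be sketched as a sign table that mixes the four channel outputs σ1 (throttle), σ2 (pitch), σ3 (roll), and σ4 (yaw) into four motor commands. The motor numbering, spin directions, sign conventions, and the clamp to [0, 1] below are assumptions chosen for illustration; they are not taken from the source, whose allocation formula is not available here.

```python
# Assumed layout (hypothetical numbering): motor 1 front-right, 2 rear-left,
# 3 front-left, 4 rear-right; motors 1 and 2 spin counter-clockwise,
# motors 3 and 4 spin clockwise.
# Assumed signs: positive pitch raises the nose, positive roll lowers the
# right side, positive yaw turns the nose clockwise seen from above.
MIX = (
    # throttle, pitch, roll, yaw
    (1.0, +1.0, -1.0, +1.0),  # motor 1
    (1.0, -1.0, +1.0, +1.0),  # motor 2
    (1.0, +1.0, +1.0, -1.0),  # motor 3
    (1.0, -1.0, -1.0, -1.0),  # motor 4
)


def allocate(sigma1, sigma2, sigma3, sigma4):
    """Mix the four channel outputs into four normalized motor commands."""
    channels = (sigma1, sigma2, sigma3, sigma4)
    commands = []
    for row in MIX:
        raw = sum(k * s for k, s in zip(row, channels))
        commands.append(min(1.0, max(0.0, raw)))  # clamp to [0, 1]
    return commands
```

With this table, a pure roll command raises the two left motors and lowers the two right motors by the same amount, so total thrust is unchanged as long as no command saturates.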

State estimation is a multi-sensor data fusion method that fuses two or more sources of imprecise information to improve the accuracy of a measurement system. The system structure of the optimization-based multi-sensor state estimator has four main parts: visual feature recognition and tracking, IMU pre-integration, least-squares optimization of the residuals, and loop closure detection.

For the state estimator, the invention makes the following main innovations: (1) an RGB-D camera is introduced as the image sensor, which solves the problem that a traditional monocular VIO system cannot recover the scale of visual features; (2) the concept of a "vision-IMU" residual is proposed and the optimization objective function is redesigned, which reduces system complexity; (3) the optimization variables are redesigned, which reduces the time required for optimization; (4) the state estimator is implemented in software and its effectiveness is verified on a physical system.

The design principle of the optimization-based multi-sensor state estimator is as follows. Camera motion is obtained from depth images, and the pre-integrated trajectory of the carrier is obtained from IMU data; an optimization process then tightly couples the trajectory data computed from the images and from the IMU. A least-squares optimization minimizes the difference between the visual measurements and the IMU estimates, called the "residual", which achieves the tight coupling of image and IMU data. Figure 7 is a schematic diagram of the tight coupling of images and IMU data.

Visual image tracking is the image preprocessing stage in the front-end data preprocessing of the optimization-based multi-sensor state estimator. The pixel coordinates and depths of multiple sets of feature points are used to recover the state of each image frame. The state comprises the camera's spatial position (Position, P), velocity (Velocity, V), and rotation expressed as a quaternion (Quaternion, Q). The structure of the visual image tracking part is shown in Figure 3 and has three main processing steps: sparse optical flow (KLT) tracking, SFM 3D motion reconstruction, and selection of sliding-window keyframes.

The KLT sparse optical flow method tracks, in the current frame, the positions of the feature points extracted from the previous frame. To obtain the camera's position in space, the camera motion is reconstructed with SFM. When feature points are detected, a non-maximum suppression radius is set to keep them from clustering too densely; this radius is the minimum allowed distance between any two feature points. Once the feature points of the current image have been obtained, KLT optical flow tracking locates them in the next frame and assigns them the same feature IDs. The system then selects keyframes and discards unneeded frames, so that images are published at a controlled rate and the computational load on the back-end optimization is reduced. A sliding window stores and updates the keyframes currently being processed; the default window size is 10 keyframes. The keyframes in the sliding-window method are shown in Figure 4.
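Two of the ideas in this paragraph, the minimum spacing between feature points and the fixed-size keyframe window, can be sketched in a few lines. This is an illustrative simplification: the greedy spacing rule stands in for non-maximum suppression on detector scores, and dropping the oldest keyframe is only one possible window update policy. All names are hypothetical; only the window size of 10 comes from the text.

```python
import math
from collections import deque

WINDOW_SIZE = 10  # default keyframe window size stated in the text


def select_features(candidates, min_dist):
    """Greedily keep the strongest candidates that are at least min_dist apart.

    candidates is a list of (score, x, y) tuples in pixel coordinates.
    """
    kept = []
    for score, x, y in sorted(candidates, reverse=True):
        if all(math.hypot(x - kx, y - ky) >= min_dist for _, kx, ky in kept):
            kept.append((score, x, y))
    return kept


def make_window():
    """Create a keyframe window that discards the oldest entry when full."""
    return deque(maxlen=WINDOW_SIZE)
```

A usage example: after `window = make_window()`, appending twelve keyframe IDs leaves only the ten most recent in the window.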

Inertial sensor pre-integration is the most important step in the front-end processing of the optimization-based multi-sensor state estimator. The sampling rate of the inertial sensor is usually much higher than that of the visual sensor, and the IMU measures the acceleration and angular velocity at each instant; integrating these gives the displacement and rotation between two image frames as measured by the IMU. In the invention, visual and inertial observations are coupled: the visual observations are solved for and their residuals are computed. The Jacobian matrix of the residuals gives the descent direction in the optimization, and the covariance matrix gives the weights of the corresponding observations.

First, the integration of the inertial sensor measurements over continuous time in the world coordinate frame is derived, with the following formula:

Because the IMU data obtained by sampling is discrete, the above formula must be discretized using midpoint integration:
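The midpoint discretization can be sketched as one propagation step between two consecutive IMU samples. This is a minimal sketch under assumptions that are not stated in the source: quaternions are (w, x, y, z) and rotate body-frame vectors into the world frame, the world z axis points up, the accelerometer reads (0, 0, 9.81) at rest, and noise is ignored. All function and parameter names are hypothetical.

```python
import math

GRAVITY = (0.0, 0.0, 9.81)  # assumed gravity term subtracted in the world frame


def quat_mul(a, b):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    w1, x1, y1, z1 = a
    w2, x2, y2, z2 = b
    return (
        w1 * w2 - x1 * x2 - y1 * y2 - z1 * z2,
        w1 * x2 + x1 * w2 + y1 * z2 - z1 * y2,
        w1 * y2 - x1 * z2 + y1 * w2 + z1 * x2,
        w1 * z2 + x1 * y2 - y1 * x2 + z1 * w2,
    )


def rotate(q, v):
    """Rotate body-frame vector v into the world frame with unit quaternion q."""
    w, x, y, z = q
    _, rx, ry, rz = quat_mul(quat_mul(q, (0.0, *v)), (w, -x, -y, -z))
    return (rx, ry, rz)


def world_acc(q, acc, bias_a):
    """Bias-corrected acceleration in the world frame with gravity removed."""
    a = rotate(q, tuple(m - b for m, b in zip(acc, bias_a)))
    return tuple(ai - gi for ai, gi in zip(a, GRAVITY))


def midpoint_step(p, v, q, imu0, imu1, dt,
                  bias_a=(0.0, 0.0, 0.0), bias_g=(0.0, 0.0, 0.0)):
    """Propagate (p, v, q) from sample imu0 to imu1; each sample is (acc, gyro)."""
    (acc0, gyr0), (acc1, gyr1) = imu0, imu1
    # Mean angular rate over the interval, with the gyroscope bias removed.
    w_mid = tuple(0.5 * (g0 + g1) - b for g0, g1, b in zip(gyr0, gyr1, bias_g))
    # Small-angle quaternion increment, followed by renormalization.
    q_new = quat_mul(q, (1.0, *(0.5 * w * dt for w in w_mid)))
    norm = math.sqrt(sum(c * c for c in q_new))
    q_new = tuple(c / norm for c in q_new)
    # Average the world-frame acceleration at both ends of the interval.
    a0 = world_acc(q, acc0, bias_a)
    a1 = world_acc(q_new, acc1, bias_a)
    a_mid = tuple(0.5 * (x + y) for x, y in zip(a0, a1))
    p_new = tuple(pi + vi * dt + 0.5 * ai * dt * dt
                  for pi, vi, ai in zip(p, v, a_mid))
    v_new = tuple(vi + ai * dt for vi, ai in zip(v, a_mid))
    return p_new, v_new, q_new
```

Averaging the two endpoint samples, rather than using only the first one, reduces the discretization error of the integration when the motion changes within the sampling interval.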

Because continuous-time integration, as described above, makes the later optimization difficult, a pre-integration stage is introduced. The problem is addressed by deriving the pre-integration of the inertial sensor measurements over continuous time in the world coordinate frame. A schematic diagram of pre-integration is shown in Figure 8.
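For orientation, the pre-integration terms in the widely used visual-inertial formulation are given below. That the invention uses exactly this form is an assumption, since its own formulas are not available here. The symbols are: b_k, the body frame at image frame k; \hat{a}_t and \hat{\omega}_t, the raw accelerometer and gyroscope measurements; b_a and b_w, their biases; R^{b_k}_t, the rotation from the body frame at time t to b_k; and \Omega(\cdot), the quaternion rate matrix.

```latex
\begin{aligned}
\alpha^{b_k}_{b_{k+1}} &= \iint_{t \in [t_k,\, t_{k+1}]} R^{b_k}_{t}\,\bigl(\hat{a}_t - b_{a_t}\bigr)\, dt^2 \\
\beta^{b_k}_{b_{k+1}}  &= \int_{t \in [t_k,\, t_{k+1}]} R^{b_k}_{t}\,\bigl(\hat{a}_t - b_{a_t}\bigr)\, dt \\
\gamma^{b_k}_{b_{k+1}} &= \int_{t \in [t_k,\, t_{k+1}]} \tfrac{1}{2}\,\Omega\bigl(\hat{\omega}_t - b_{w_t}\bigr)\,\gamma^{b_k}_{t}\, dt
\end{aligned}
```

These terms depend only on the IMU measurements and biases between the two frames, not on the world-frame pose or velocity at frame k. The optimizer can therefore change the frame states without re-integrating the raw IMU data, which is the difficulty that pre-integration removes.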

After front-end visual image tracking and front-end inertial sensor pre-integration are complete, the system enters the initialization stage, which is the most important stage in the operation of the invention. A tightly coupled visual-inertial system must recover and calibrate its system parameters through initialization. The recovered parameters include the camera scale, gravity, velocity, and the IMU measurement error (bias). Because visual 3D motion reconstruction (SFM) performs well during initialization, initialization relies mainly on SFM. Aligning the IMU pre-integration results with the SFM results allows the IMU measurement error to be initialized further. Initialization comprises three stages: computing the relative rotation between the camera and the IMU, camera initialization, and alignment of the IMU with the visual information.

The multi-sensor state estimator uses visual and inertial components as tightly coupled data sources, and the sensors are mismatched in both space and time, so calibrating the rotation between the camera and the IMU is very important. A calibration error of only 1-2° is usually enough to make the system's accuracy very poor. The weights are obtained by calculation:

For N measurements, an over-determined system with coefficient matrix Q_N is obtained. Here threshold is a threshold value, generally taken as 5, and the solution is the right singular vector of Q_N corresponding to its smallest singular value. The relative rotation can thus be obtained by solving the weighted equations. The termination condition of the solution, that is, the condition under which calibration is complete, also needs attention. When there is enough rotational motion, the system can estimate the relative rotation well; Q_N then has an accurate solution and its null space has dimension 1. In practice, however, the motion may be degenerate about some axes during calibration, for example uniform motion. The null space of Q_N then has dimension greater than 1. The test is whether the second-smallest singular value of Q_N exceeds a threshold: if it does, the null space has dimension 1; otherwise its dimension is greater than 1. A dimension greater than 1 means that the relative rotation estimated during initialization is not accurate enough or that there is too much degenerate motion, and the system cannot complete initialization.
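The weighting and the termination test described above can be sketched as two small functions. The weight is assumed to follow the common outlier-rejection form, 1 below the threshold and threshold divided by residual above it; the source formula is not available, so this form is an assumption. The singular values are taken as precomputed input, and the default singular-value threshold is a hypothetical placeholder.

```python
def rotation_weight(residual, threshold=5.0):
    """Down-weight measurements whose residual exceeds the threshold.

    Assumed form: weight 1 for small residuals, threshold / residual otherwise.
    """
    if residual < threshold:
        return 1.0
    return threshold / residual


def calibration_converged(singular_values, min_second_smallest=0.25):
    """Check that Q_N has a one-dimensional null space.

    singular_values holds the singular values of Q_N, computed elsewhere.
    The null space has dimension 1 only if the second-smallest singular
    value is clearly above zero, here above min_second_smallest.
    """
    ordered = sorted(singular_values)
    return ordered[1] > min_second_smallest
```

If the check fails, the rotation estimate should not be trusted yet, and more frames with sufficient rotational motion are needed before initialization can continue.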

The alignment of vision and IMU mainly addresses three problems: correcting the gyroscope bias; initializing the velocity, the gravity vector g, and the metric scale; and refining the magnitude of the gravity vector g. The previous paragraph computed the extrinsic rotation between the camera and the IMU from the relative rotation of consecutive images. Next, the relative rotation of each image frame computed by SFM is used to calculate the gyroscope bias. Using the IMU measurement model and the rotation matrices, the following least-squares problem is finally solved:

Back-end optimization is the core of the optimization-based multi-sensor state estimator. Its central idea is to minimize a cost function composed of the marginalized prior information, the IMU measurement residuals, and the visual observation residuals:

In the cost function, the two residual terms are, in order, the IMU measurement residual and the "vision-IMU" observation residual, with the size of each residual expressed as a Mahalanobis distance. During optimization, the cost function is linearized by Gauss-Newton iteration. In the back end, the IMU part is optimized using the IMU residual and its Jacobian matrix. When the Ceres library is used to solve the nonlinear optimization, the Jacobian matrices drive the Gauss-Newton iterations on the cost function toward the optimal solution.
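To make the structure of the cost concrete, here is a minimal sketch of how a prior term and Mahalanobis-weighted residuals add up. It assumes diagonal covariances for simplicity (the actual covariances are generally full matrices), and the function names are hypothetical rather than taken from the patent or from Ceres.

```python
def mahalanobis_sq(residual, variances):
    """Squared Mahalanobis norm r^T P^{-1} r for a diagonal covariance P."""
    if len(residual) != len(variances):
        raise ValueError("residual and variances must have the same length")
    return sum(r * r / v for r, v in zip(residual, variances))


def total_cost(prior_sq, imu_terms, visual_terms):
    """Sum the prior term and all IMU and visual residual terms.

    prior_sq: squared norm of the marginalization prior residual.
    imu_terms, visual_terms: lists of (residual, variances) pairs.
    """
    cost = prior_sq
    for residual, variances in imu_terms:
        cost += mahalanobis_sq(residual, variances)
    for residual, variances in visual_terms:
        cost += mahalanobis_sq(residual, variances)
    return cost
```

A nonlinear least-squares solver repeatedly linearizes each residual around the current state and updates the state to reduce this sum.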

During loop closure detection, when a new keyframe is generated, the FAST feature detector is used to find new feature points, which are distinct from the feature points found by KLT optical flow tracking. Descriptors are then extracted with the BRIEF method and matched against historical descriptors, with the DBoW bag-of-words dictionary library used to store and retrieve descriptor information. If the feature points corresponding to descriptors in the current keyframe match historical feature points stored in the bag of words, the system has found a loop closure point. When a loop closure point is found, the earliest keyframe in which the loop appears must be identified and its pose fixed. Because loop closure detection is always slower than keyframe generation, a frame-skipping method is usually used to discard some keyframes so that detection does not fall behind keyframe generation, which preserves detection efficiency. After loop closure detection is complete, the multi-sensor state estimator uses fast relocalization to return the loop frame information to the back-end joint optimization and update the optimization data, as illustrated in Figure 5. The current loop frame is denoted Frame_cur, and the keyframe that forms a loop with Frame_cur is denoted Frame_old. The pose of Frame_old, its keyframe index, the matched point pairs, the keyframe index of Frame_cur, and the timestamp are recorded and marked as a loop closure.
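Matching BRIEF descriptors comes down to comparing binary strings by Hamming distance. The sketch below shows that comparison with a brute-force search; it is not how DBoW retrieves candidates (DBoW uses a vocabulary tree), and the function names and the `max_distance` default are assumptions for illustration.

```python
def hamming(a, b):
    """Number of differing bits between two descriptors stored as ints."""
    return bin(a ^ b).count("1")


def match_descriptors(query, history, max_distance=40):
    """For each query descriptor, return the index of the closest history
    descriptor, or None if nothing is within max_distance bits."""
    matches = []
    for q in query:
        best_index, best_distance = None, max_distance + 1
        for i, h in enumerate(history):
            d = hamming(q, h)
            if d < best_distance:
                best_index, best_distance = i, d
        matches.append(best_index)
    return matches
```

A keyframe with enough matched descriptors against one historical keyframe would then be treated as a loop closure candidate.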

Through reprojection, the change from the Frame_cur pose to the Frame_old pose, that is, the relative pose between the current frame and the loop frame, can be computed. KLT optical flow tracking yields the matched point pairs between the current frame and the loop frame. The loop closure detection process packs the loop frame information and sends it to the joint optimization process. The state estimator treats the Frame_cur pose as the Frame_old pose, replaces the coordinates of the feature points matched in Frame_cur with the coordinates of the corresponding points in Frame_old, and reruns the optimization.

During autonomous operation, a UAV must not collide with obstacles. A local path planning method lets the UAV use the map already generated and the obstacle information updated by its sensors in real time to generate one or more local paths online, so that it can fly among obstacles. This places several requirements on the local path planning algorithm. First, it must be an online algorithm that supports real-time operation, with paths that change in real time as obstacles are updated. Second, to keep flight smooth, the planned path must satisfy the UAV's dynamic constraints. Finally, the planned path must be a locally optimal or suboptimal solution. To meet these requirements, the present invention adopts a kinodynamic path search method based on B-spline trajectory optimization, so that each UAV can plan paths in real time within a local range.

The path produced by kinodynamic path search alone may not be ideal. Because the search ignores distance information in free space, the resulting path usually passes close to obstacles. To address this, a B-spline optimization method is applied to the trajectory produced by the kinodynamic search, improving its smoothness and increasing the clearance between the path and obstacles.
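The trajectory being optimized is represented by B-spline control points. The following sketch only evaluates a uniform cubic B-spline from its control points; it does not perform the optimization, which would adjust the control points under smoothness and obstacle-clearance costs. The choice of a uniform cubic spline and the function names are assumptions.

```python
def cubic_bspline_point(p0, p1, p2, p3, u):
    """Evaluate one uniform cubic B-spline segment at u in [0, 1]."""
    b0 = (1 - u) ** 3 / 6.0
    b1 = (3 * u ** 3 - 6 * u ** 2 + 4) / 6.0
    b2 = (-3 * u ** 3 + 3 * u ** 2 + 3 * u + 1) / 6.0
    b3 = u ** 3 / 6.0
    return tuple(
        b0 * a + b1 * b + b2 * c + b3 * d
        for a, b, c, d in zip(p0, p1, p2, p3)
    )


def sample_bspline(control_points, samples_per_segment=10):
    """Sample the whole curve; each run of 4 control points is one segment."""
    if len(control_points) < 4:
        raise ValueError("a cubic B-spline needs at least 4 control points")
    path = []
    for i in range(len(control_points) - 3):
        segment = control_points[i:i + 4]
        for k in range(samples_per_segment):
            path.append(cubic_bspline_point(*segment, k / samples_per_segment))
    # Close the curve with the end of the last segment.
    path.append(cubic_bspline_point(*control_points[-4:], 1.0))
    return path
```

Because each curve point is a convex combination of four neighboring control points, moving the control points away from obstacles moves the curve away as well, which is what makes this representation convenient for clearance optimization.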

Once a single UAV is capable of local path planning, the multi-UAV swarm SLAM target allocation strategy can be used to achieve cooperative area search with multiple UAVs. Unlike point-to-point path planning for a single UAV, the goal of cooperative path planning is for multiple UAVs to jointly cover and scan an area. Cooperative search means designing a control scheme for a mission area of a given size so that the UAVs can cover the whole mission area of a known environment quickly and efficiently at minimum cost, or search the mission area to discover high-value targets in an unknown environment and reduce environmental uncertainty. Cooperative search by multiple UAVs in a single area can be divided functionally into two parts: first, allocating a search region to each UAV, that is, dividing a simple, simply connected area into multiple regions; second, the search strategy of each UAV within its own region. To divide a simply connected area into multiple regions, the present invention uses the DARP (Divide Areas Algorithm for Optimal Multi-Robot Coverage Path Planning) algorithm. DARP partitions the area according to the initial positions of the UAVs and ensures that the regions are approximately equal in area and each connected.
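As a rough illustration of partitioning by initial position, the sketch below assigns each grid cell to the nearest UAV start cell. This is a plain nearest-UAV (Voronoi-style) partition, not DARP itself: DARP starts from a distance-based assignment like this and then iteratively rebalances it until the regions are nearly equal in area and connected. The grid representation and function names are assumptions.

```python
def partition_grid(rows, cols, uav_cells):
    """Assign every grid cell to the closest UAV start cell.

    uav_cells: list of (row, col) start cells, one per UAV.
    Ties go to the UAV with the lower index.
    """
    assignment = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            dists = [(r - ur) ** 2 + (c - uc) ** 2 for ur, uc in uav_cells]
            assignment[r][c] = dists.index(min(dists))
    return assignment


def region_sizes(assignment, n_uavs):
    """Count how many cells each UAV owns."""
    sizes = [0] * n_uavs
    for row in assignment:
        for owner in row:
            sizes[owner] += 1
    return sizes
```

On a 4x4 grid with UAVs starting in opposite corners, this naive split gives one UAV 10 cells and the other 6, which is the kind of imbalance the equal-area requirement is meant to remove.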

Once the exploration area has been divided among the UAVs, each UAV executes the configured search strategy in its region. Common UAV search strategies include random search, parallel search ("Z"-shaped or zigzag), grid search, and inward spiral search. In random search, the UAV flies through the search area at a constant track angle until it reaches the boundary, turns back into the search area at its minimum turning radius, continues at that track angle, and repeats, as shown in Figure 6(a). In parallel search, the UAV sweeps the search area along vertical or horizontal lines. Because of UAV performance constraints, turning is less efficient than straight flight in terms of energy, distance, and time, so most current research is based on parallel-line search, as shown in Figure 6(b).
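A parallel (zigzag) search over a rectangular region can be sketched as a list of waypoints at the ends of each sweep line. The rectangular region, the horizontal sweep direction, and the function name are assumptions made for illustration; turning radius is ignored.

```python
def parallel_search_waypoints(x_min, x_max, y_min, y_max, spacing):
    """Generate zigzag waypoints covering a rectangle with horizontal sweeps."""
    if spacing <= 0:
        raise ValueError("spacing must be positive")
    waypoints = []
    y = y_min
    left_to_right = True
    while y <= y_max + 1e-9:
        if left_to_right:
            waypoints += [(x_min, y), (x_max, y)]
        else:
            waypoints += [(x_max, y), (x_min, y)]
        # Reverse direction so the next sweep starts where this one ended.
        left_to_right = not left_to_right
        y += spacing
    return waypoints
```

For example, `parallel_search_waypoints(0, 10, 0, 4, 2)` yields three sweep lines at y = 0, 2, and 4, with each sweep starting on the side where the previous one ended. The spacing would normally be chosen from the sensor footprint so that adjacent sweeps overlap slightly.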

In use, the system can be illustrated with a QAV250 micro quadrotor UAV carrying the multi-sensor system and a microcomputer, together with a simulation computer that has an Intel i7-10700K processor, an Nvidia GTX2060 graphics card, and 32 GB of RAM. The sensors are the Intel RealSense D435i and the Intel RealSense T265: the D435i collects depth and RGB-D images as well as inertial information, and the T265 performs position tracking. Using datasets from EuRoC, flight control and data processing are implemented in C++ under Ubuntu. Open-source third-party libraries are also called to support computation, such as the Eigen matrix library, the Ceres nonlinear optimization library, the PCL point cloud library, and the OpenCV image processing library.

To obtain the position of the camera in space, the SFM method is used to reconstruct the camera motion. This requires marking feature points in the images and obtaining their positions in different images. The present invention uses the feature point detection method (GoodFeatures_To_Track) of the OpenCV computer vision library to find the most distinctive feature points in the first image frame captured by the system. In a VIO system the number of feature points usually ranges from 150 to 500, and each feature point has its own feature ID.

Because the optimization-based multi-sensor state estimator uses the same sensor data to estimate pose and depth simultaneously, visual tracking and SLAM map point cloud creation are designed to run synchronously, which avoids time delay in the depth data. For a feature point that does not yet have a depth, if it has been observed in two or more earlier keyframes, its coordinates are computed by singular value decomposition so that its reprojection error is minimized over every frame in which it was observed, as the back-end optimization requires. During point cloud updates, however, the coordinates of a feature point should use the depth measured by the sensor. In addition, when SLAM uses an RGB-D camera rather than a point cloud camera, the following steps are needed to keep the data reliable and the map unbiased:

1) Trust the depth camera: for each feature point, the position at which it is first observed is taken as the accurate value and can be added directly as a map point.

2) Check for depth camera error: for each feature point, its three-dimensional coordinates in the world frame must not differ too much between the two consecutive frames in which it is observed. If they differ too much, the observation record from its first frame is deleted. If they differ only slightly, the average of the two three-dimensional coordinates is taken as the point's position and added as a map point.

3) Filter the map points: a point must be observed in several consecutive frames, and its three-dimensional world-frame coordinates across those frames must differ only slightly; it is then considered an accurate feature point and added as a map point.
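Steps 2 and 3 above can be sketched as two small checks on world-frame coordinates. The text does not say how large a difference counts as "too much" or how many frames "several" means, so `max_gap` and `min_frames` are hypothetical parameters, as are the function names.

```python
import math


def fuse_observations(first_xyz, second_xyz, max_gap=0.1):
    """Step 2: average two consecutive observations if they agree.

    Returns None when the positions differ by more than max_gap, signalling
    that the first-frame observation should be discarded.
    """
    if math.dist(first_xyz, second_xyz) > max_gap:
        return None
    return tuple((a + b) / 2.0 for a, b in zip(first_xyz, second_xyz))


def is_stable_map_point(observations, min_frames=3, max_gap=0.1):
    """Step 3: accept a point seen in enough consecutive frames whose
    positions all stay within max_gap of the first observation."""
    if len(observations) < min_frames:
        return False
    reference = observations[0]
    return all(math.dist(reference, p) <= max_gap for p in observations[1:])
```

In practice the tolerance would likely scale with depth, since depth camera noise grows with distance; a fixed value is used here only to keep the sketch short.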

For loop closure detection, the FAST feature detector and the BRIEF descriptor are used because both have good real-time performance. This combination lowers the system's processor and memory requirements and so meets the real-time requirement, while the DBoW bag-of-words dictionary library is used to store and retrieve descriptor information.

For local path planning, the UAV uses the optimization-based multi-sensor state estimator to estimate its pose in space in real time, and uses the depth information from the RGB-D sensor together with its own position to compute point cloud (PointCloud) information and update the map. Because the kinodynamic search ignores distance information in free space, the resulting path usually passes close to obstacles; to address this, a B-spline optimization method is applied to the trajectory produced by the kinodynamic search. For indoor scheduling of multiple UAVs, the present invention adopts a UAV scheduling algorithm based on the DARP area division method, which enables search scheduling of a UAV swarm in indoor multiply connected environments such as rooms and corridors.

It should be noted that, in this document, relational terms such as "first" and "second" are used only to distinguish one entity or operation from another and do not necessarily require or imply any actual relationship or order between those entities or operations. Furthermore, the terms "comprise", "include", and any variants thereof are intended to cover non-exclusive inclusion, so that a process, method, article, or device comprising a series of elements includes not only those elements but also other elements not expressly listed, or elements inherent to such a process, method, article, or device.

Although embodiments of the present invention have been shown and described, those of ordinary skill in the art will understand that various changes, modifications, substitutions, and variations can be made to these embodiments without departing from the principle and spirit of the invention. The scope of the invention is defined by the appended claims and their equivalents.

Claims (10)

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202310243900.8A, CN116295342A (en) | 2023-03-15 | 2023-03-15 | A Multi-Sensing State Estimator for Aircraft Surveying |

Publications (1)

| Publication Number | Publication Date |
|---|---|
| CN116295342A (en) | 2023-06-23 |

Family ID: 86837447

Family Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202310243900.8A (Pending), CN116295342A (en) | 2023-03-15 | 2023-03-15 | A Multi-Sensing State Estimator for Aircraft Surveying |

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN116295342A (en) |

Patent Citations (2)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN114485649A (en)* | 2022-02-09 | 2022-05-13 | 北京自动化控制设备研究所 | Unmanned aerial vehicle-oriented inertial, visual and height information fusion navigation method |
| CN115406447A (en)* | 2022-10-31 | 2022-11-29 | 南京理工大学 | Autonomous positioning method of quad-rotor unmanned aerial vehicle based on visual inertia in rejection environment |

Non-Patent Citations (1)

| Title |
|---|
| 孙艺东 (Sun Yidong): "基于优化多传感器状态估计器的无人机集群SLAM系统研究" [Research on a UAV swarm SLAM system based on an optimized multi-sensor state estimator], pp. 1-65, retrieved from the Internet <URL:https://www.doc88.com/p-50259287747624.html>* |

Cited By (2)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN117830879A (en)* | 2024-01-02 | 2024-04-05 | 广东工业大学 | Indoor-oriented distributed unmanned aerial vehicle cluster positioning and mapping method |
| CN117830879B (en)* | 2024-01-02 | 2024-06-14 | 广东工业大学 | Indoor-oriented distributed unmanned aerial vehicle cluster positioning and mapping method |

Legal Events

| Date | Code | Title | Description |
|---|---|---|---|
| | PB01 | Publication | |
| | SE01 | Entry into force of request for substantive examination | |