CN116226375A - Training method and device for classification model suitable for text review - Google Patents

Training method and device for classification model suitable for text review

- Publication number

- CN116226375A (application CN202310019334.2A)

- Authority

- CN

- China

- Prior art keywords

- text

- text sample

- enhanced

- sample

- prediction


- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Pending

Classifications

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F16/00—Information retrieval; Database structures therefor; File system structures therefor

- G06F16/30—Information retrieval; Database structures therefor; File system structures therefor of unstructured textual data

- G06F16/35—Clustering; Classification

- G06F16/353—Clustering; Classification into predefined classes

Landscapes

- Engineering & Computer Science (AREA)

- Theoretical Computer Science (AREA)

- Data Mining & Analysis (AREA)

- Databases & Information Systems (AREA)

- Physics & Mathematics (AREA)

- General Engineering & Computer Science (AREA)

- General Physics & Mathematics (AREA)

- Information Retrieval, Db Structures And Fs Structures Therefor (AREA)

- Machine Translation (AREA)

Abstract

Description

Technical Field

The present disclosure relates to the field of artificial intelligence, and in particular to deep learning and computer technology.

Background

In the related art, text review is an automated, intelligent system based on natural language processing (NLP). It judges whether a piece of text complies with the content policies of Internet, media, and similar platforms. Common text review scenarios include:

- user signatures and nicknames;
- comments and messages;
- instant-messaging text;
- user posts;
- media news;
- product information;
- live-video bullet comments (danmaku);
- image-text content.

Text review covers many review types. The massive amount of user data generated on the Internet every day makes the review workload heavy. A classification model can use computer and NLP technology to detect and identify violating text automatically. It can either lead manual review or assist it, which greatly reduces reviewers' workload.

Improving the accuracy and generalization ability of classification models for text review has therefore become an important research direction.

Summary

The present disclosure provides a method and a device for training a classification model suitable for text review.

According to one aspect of the present disclosure, a method for training a classification model suitable for text review is provided. The method includes:

for any one of a plurality of text review types, obtaining the i-th round text sample set corresponding to that text review type and the first classification model obtained after the j-th round of training, where i and j are positive integers;

performing different types of prediction on the text samples in the i-th round text sample set based on a pre-trained language model, to obtain enhanced (augmented) text samples of different prediction types;

obtaining labels and confidence levels of the enhanced text samples based on the first classification model;

filtering the enhanced text samples according to their prediction types, labels, and confidence levels, and updating the i-th round text sample set with the filtered enhanced text samples to obtain the (i+1)-th round text sample set; and

training the first classification model on the (i+1)-th round text sample set to obtain the second classification model after the (j+1)-th round of training, then continuing to obtain the next round's text sample set to train the second classification model, until training ends and a target classification model is generated.

The present disclosure avoids introducing noise during model training and increases the diversity of the training data. This extends the boundary of model training, fully exploits the generalization ability of the classification model, and helps improve its accuracy.

According to another aspect of the present disclosure, a device for training a classification model suitable for text review is provided, including:

a first obtaining module, configured to, for any one of a plurality of text review types, obtain the i-th round text sample set corresponding to that text review type and the first classification model obtained after the j-th round of training, where i and j are positive integers;

a second obtaining module, configured to perform different types of prediction on the text samples in the i-th round text sample set based on a pre-trained language model, to obtain enhanced text samples of different prediction types;

a third obtaining module, configured to obtain labels and confidence levels of the enhanced text samples based on the first classification model;

an update module, configured to filter the enhanced text samples according to their prediction types, labels, and confidence levels, and to update the i-th round text sample set with the filtered enhanced text samples to obtain the (i+1)-th round text sample set; and

a training module, configured to train the first classification model on the (i+1)-th round text sample set to obtain the second classification model after the (j+1)-th round of training, and to continue obtaining the next round's text sample set to train the second classification model, until training ends and a target classification model is generated.

According to another aspect of the present disclosure, an electronic device is provided, including at least one processor and

a memory communicatively connected to the at least one processor, wherein

the memory stores instructions executable by the at least one processor. When executed by the at least one processor, the instructions enable it to perform the method for training a classification model suitable for text review according to the embodiments of the first aspect of the present disclosure.

According to another aspect of the present disclosure, a non-transitory computer-readable storage medium storing computer instructions is provided. The computer instructions cause a computer to perform the method for training a classification model suitable for text review according to the embodiments of the first aspect of the present disclosure.

According to another aspect of the present disclosure, a computer program product is provided, including a computer program. When executed by a processor, the computer program implements the method for training a classification model suitable for text review according to the embodiments of the first aspect of the present disclosure.

It should be understood that the content of this section is not intended to identify key or important features of the embodiments of the present disclosure, nor to limit the scope of the present disclosure. Other features of the present disclosure will become readily understood from the following description.

Brief Description of the Drawings

The accompanying drawings are provided for a better understanding of the present solution and do not limit the present disclosure. In the drawings:

FIG. 1 is a flowchart of a method for training a classification model suitable for text review according to an embodiment of the present disclosure;

FIG. 2 is a flowchart of a method for training a classification model suitable for text review according to an embodiment of the present disclosure;

FIG. 3 is a schematic diagram of a method for training a classification model suitable for text review according to an embodiment of the present disclosure;

FIG. 4 is a flowchart of a method for training a classification model suitable for text review according to an embodiment of the present disclosure;

FIG. 5 is a schematic diagram of a method for training a classification model suitable for text review according to an embodiment of the present disclosure;

FIG. 6 is a structural diagram of a device for training a classification model suitable for text review according to an embodiment of the present disclosure;

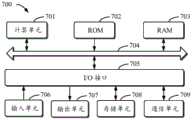

FIG. 7 is a block diagram of an electronic device for implementing the method for training a classification model suitable for text review according to an embodiment of the present disclosure.

Detailed Description

Exemplary embodiments of the present disclosure are described below with reference to the accompanying drawings. The description includes various details of the embodiments to aid understanding; these details should be regarded as merely exemplary. Those of ordinary skill in the art will therefore recognize that various changes and modifications can be made to the embodiments described herein without departing from the scope and spirit of the present disclosure. Likewise, descriptions of well-known functions and structures are omitted below for clarity and conciseness.

The embodiments of the present disclosure relate to artificial intelligence fields such as deep learning and computer technology.

Artificial intelligence (AI) is a new technical science. It studies and develops theories, methods, technologies, and application systems for simulating, extending, and expanding human intelligence.

Deep learning (DL) is a new research direction in the field of machine learning (ML). It was introduced to bring machine learning closer to its original goal, AI. Deep learning learns the intrinsic patterns and hierarchical representations of sample data. The information obtained during learning greatly helps in interpreting data such as text, images, and sound. Its ultimate goal is to give machines human-like analytical learning ability, so that they can recognize data such as text, images, and sound.

Computer technology uses a computer's fast and accurate computation, logical judgment, and simulation capabilities to perform quantitative calculation and analysis of a system. It provides means and tools for solving complex system problems.

A text review system usually includes a sensitive-word dictionary matching module and a classification model built on deep learning. The classification model must be trained on high-quality, manually labeled data. The quantity and quality of that data strongly affect the performance of the target classification model.

In practice, labeling a large amount of data consumes considerable human resources. Moreover, text samples that match a given text review type make up only a very small fraction of naturally occurring data, so they are difficult to collect. The present disclosure can obtain text samples that match a text review type without additional manual labeling. At the same time, it increases data diversity and improves the generalization ability of the model.

The method and device for training a classification model suitable for text review according to the present disclosure are described below with reference to the accompanying drawings.

FIG. 1 is a flowchart of a method for training a classification model suitable for text review according to an embodiment of the present disclosure. As shown in FIG. 1, the method includes the following steps:

S101: For any one of a plurality of text review types, obtain the i-th round text sample set corresponding to that text review type and the first classification model obtained after the j-th round of training, where i and j are positive integers.

In some implementations, the text review types include a negative-information type, a sensitive-information type, and the like. Each text review type corresponds to its own classification model.

Optionally, the classification model in the embodiments of the present disclosure may be a binary classification model. For example, it may be obtained by fine-tuning a pre-trained model (the ERNIE 2.0 model). For any one of the text review types, the classification model corresponding to that type can then judge whether a text to be processed matches that text review type.

Optionally, the initial text sample set includes multiple text samples, all of which are labeled; that is, every text sample has a label.

For example, if the text review type is advertising promotion, each text sample in the i-th round text sample set carries label 0 or 1. A sample labeled 0 contains advertising promotion, and a sample labeled 1 does not.

S102: Based on a pre-trained language model, perform different types of prediction on the text samples in the i-th round text sample set to obtain enhanced text samples of different prediction types.

Optionally, in the embodiments of the present disclosure, the prediction types may include mask prediction and continuation prediction.

It should be noted that, in the embodiments of the present disclosure, the pre-trained language model may be any pre-trained language model with generation capability, such as ERNIE 3.0, T5, or GPT-3.

In some implementations, different prediction types correspond to different data preprocessing procedures. The text samples in the i-th round text sample set are preprocessed according to the prediction type. They are then fed into the pre-trained language model, which generates enhanced text samples of that prediction type.
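The per-type routing described above can be sketched as follows. This is a minimal illustration under stated assumptions. `PredictionType`, `augment`, and the two callables are hypothetical names. The language model is abstracted behind `predict` rather than tied to ERNIE 3.0 or any specific library.

```python
from enum import Enum
from typing import Callable, Dict, List


class PredictionType(Enum):
    MASK = "mask"                  # fill in [mask] positions
    CONTINUATION = "continuation"  # continue a prompt with new text


def augment(
    samples: List[str],
    preprocessors: Dict[PredictionType, Callable[[List[str]], List[str]]],
    predict: Callable[[PredictionType, str], List[str]],
) -> Dict[PredictionType, List[str]]:
    """Run each prediction type's own preprocessing, then query the language model.

    Hypothetical hooks:
      preprocessors[t](samples) -> model inputs for type t (masked texts, prompts)
      predict(t, model_input)   -> generated texts for one model input
    """
    enhanced: Dict[PredictionType, List[str]] = {}
    for ptype, preprocess in preprocessors.items():
        outputs: List[str] = []
        for model_input in preprocess(samples):
            outputs.extend(predict(ptype, model_input))
        # Keep outputs grouped by prediction type: filtering (S104) depends on it.
        enhanced[ptype] = outputs
    return enhanced
```

Keeping the results keyed by prediction type is a design choice. It makes the later type-specific filtering a simple lookup.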

S103: Obtain labels and confidence levels of the enhanced text samples based on the first classification model.

The first classification model obtained after the j-th round of training performs binary classification on each enhanced text sample. This produces a label for the sample together with its confidence level.
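The source does not say how the confidence level is computed. One common choice is the softmax probability of the predicted class. The sketch below assumes that choice and an output order of [does not match, matches].

- It returns a boolean rather than the 0/1 encoding from the advertising example. This avoids committing to either label scheme.
- `label_and_confidence` is a hypothetical helper name.

```python
import math
from typing import Sequence, Tuple


def label_and_confidence(logits: Sequence[float]) -> Tuple[bool, float]:
    """Map two-class logits to (pseudo_label, confidence).

    Assumed class order: index 0 = does not match the review type,
    index 1 = matches it. Confidence is the softmax probability of the
    predicted class.
    """
    if len(logits) != 2:
        raise ValueError("expected logits for exactly two classes")
    top = max(logits)  # subtract the max before exp() for numerical stability
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    matches = probs[1] >= probs[0]  # ties are resolved as "matches"
    return matches, max(probs)
```

For example, `label_and_confidence([2.0, 0.0])` yields `(False, 0.88...)`: a fairly confident "does not match".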

Depending on the classification result, the label of an enhanced text sample indicates one of two things: the sample matches the text review type, or it does not.

It should be noted that the labels the first classification model constructs for the enhanced text samples are pseudo-labels.

S104: Filter the enhanced text samples according to their prediction types, labels, and confidence levels. Then update the i-th round text sample set with the filtered enhanced text samples to obtain the (i+1)-th round text sample set.

In some implementations, different prediction types correspond to different filtering strategies.

For example, suppose the prediction type of the enhanced text samples is mask prediction. The key to improving the classification model is then to learn where the keywords that determine the label are located. Based on the labels and confidence levels, several enhanced text samples that match the text review type are selected, along with several that do not. These are used to update the i-th round text sample set.

As another example, suppose the prediction type is continuation prediction. The goal is then to improve the model's recall and fully exploit the generalization ability of the classification model. Based on the labels, several enhanced text samples that match the text review type are selected to update the i-th round text sample set.
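The continuation-prediction rule above keeps only samples pseudo-labeled as matching. A minimal sketch follows.

- `Scored` and `filter_continuation` are hypothetical names.
- The optional `max_keep` cap on "several" samples is an assumption, not something the source specifies.

```python
from typing import List, NamedTuple, Optional


class Scored(NamedTuple):
    text: str
    matches: bool      # pseudo-label from the first classification model
    confidence: float  # confidence of that pseudo-label


def filter_continuation(samples: List[Scored], max_keep: Optional[int] = None) -> List[Scored]:
    """Keep continuation samples pseudo-labeled as matching the review type.

    If max_keep (a hypothetical cap) is set, only the most confident ones are kept.
    """
    kept = [s for s in samples if s.matches]
    kept.sort(key=lambda s: s.confidence, reverse=True)
    return kept if max_keep is None else kept[:max_keep]
```

Dropping non-matching continuations reflects the stated goal of improving recall on the review type. Generated text that drifts away from the type is simply discarded.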

It should be noted that the text samples in the (i+1)-th round text sample set are labeled. The labels of the newly added samples are the pseudo-labels produced by the classification model.
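The source does not detail how the update is performed beyond adding pseudo-labeled samples. Below is a minimal sketch under these assumptions:

- the sample set is a mapping from text to label (True = matches);
- new samples are appended;
- an existing (human) label is kept when an enhanced text duplicates an existing sample.

`update_sample_set` is a hypothetical name.

```python
from typing import Dict, Iterable, Tuple


def update_sample_set(
    current: Dict[str, bool],
    selected: Iterable[Tuple[str, bool]],
) -> Dict[str, bool]:
    """Build the (i+1)-th round sample set from the i-th round set.

    `selected` holds (text, pseudo_label) pairs for the filtered enhanced samples.
    """
    next_round = dict(current)  # copy: leave the i-th round set untouched
    for text, pseudo_label in selected:
        # setdefault keeps an existing label when the text is already present
        next_round.setdefault(text, pseudo_label)
    return next_round
```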

S105: Train the first classification model on the (i+1)-th round text sample set to obtain the second classification model after the (j+1)-th round of training. Then continue to obtain the next round's text sample set to train the second classification model, until training ends and the target classification model is generated.

Optionally, the first classification model is trained on the (i+1)-th round text sample set. Its parameters are updated by backpropagating the loss function, which yields the second classification model after the (j+1)-th round of training.

The next round's text sample set is then obtained to train the second classification model. The above steps are repeated until a preset training end condition is met. At that point, training of the classification model is complete and the target classification model is generated.

Optionally, the end condition may be reaching a preset number of training iterations, or the loss value converging to a preset loss threshold. The embodiments of the present disclosure do not limit this.
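The round-by-round loop of S101 to S105, with the two example end conditions, can be sketched as below for a single text review type. Each type has its own model. The following are assumptions for illustration:

- the hook names `augment_and_filter` and `train_one_round`;
- reading "converges to the threshold" as "loss at or below the threshold".

```python
from typing import Any, Callable, List, Tuple

Sample = Tuple[str, bool]  # (text, label); True = matches the review type


def self_train(
    samples: List[Sample],
    model: Any,
    augment_and_filter: Callable[[List[Sample], Any], List[Sample]],
    train_one_round: Callable[[Any, List[Sample]], Tuple[Any, float]],
    max_rounds: int = 10,
    loss_threshold: float = 1e-3,
) -> Any:
    """Iterate augment -> pseudo-label/filter -> retrain for one review type.

    augment_and_filter (hypothetical) covers S102-S104 and returns the next set;
    train_one_round (hypothetical) covers S105 and returns (new_model, loss).
    """
    for _ in range(max_rounds):  # end condition 1: preset number of rounds
        samples = augment_and_filter(samples, model)   # i-th set -> (i+1)-th set
        model, loss = train_one_round(model, samples)  # j-th model -> (j+1)-th model
        if loss <= loss_threshold:                     # end condition 2: loss threshold
            break
    return model  # the target classification model
```

The current model is passed into `augment_and_filter` because each round's pseudo-labels come from the most recently trained classifier.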

In the embodiments of the present disclosure, the method proceeds as follows:

- Different types of prediction are performed on the text samples in the i-th round text sample set based on a pre-trained language model, yielding enhanced text samples of different prediction types.
- Labels and confidence levels of the enhanced text samples are obtained based on the first classification model.
- The enhanced text samples are filtered according to their prediction types, labels, and confidence levels, and used to update the i-th round text sample set into the (i+1)-th round text sample set.

This avoids introducing noise during model training and increases the diversity of the training data. It thereby extends the boundary of model training, fully exploits the generalization ability of the classification model, and helps improve its accuracy.

FIG. 2 is a flowchart of a method for training a classification model suitable for text review according to an embodiment of the present disclosure. As shown in FIG. 2, the method includes the following steps:

S201: For any one of a plurality of text review types, obtain the i-th round text sample set corresponding to that text review type and the first classification model obtained after the j-th round of training, where i and j are positive integers.

For step S201, refer to the foregoing embodiments; details are not repeated here.

S202: Mask the text samples. Then perform mask prediction on the masked text samples based on the pre-trained language model, to generate enhanced text samples whose prediction type is mask prediction.

A text sample is masked according to a preset masking ratio to generate first text samples, each containing one or more masks. The first text samples are fed into the pre-trained language model, which predicts the content of the one or more masks. This generates enhanced text samples whose prediction type is mask prediction.

Optionally, the masking ratio may be 15%. In other implementations it may take other values, which the embodiments of the present disclosure do not limit.

Masking a text sample according to the preset ratio can produce multiple first text samples. For example, suppose the text sample is “我是一名员工” (“I am an employee”). The first text samples obtained after masking may include:

- “我是一名[mask]” (“I am a [mask]”);
- “[mask]是一名员工” (“[mask] am an employee”);
- “我[mask]一名员工” (“I [mask] an employee”);
- “我是一[mask]员工” (with the measure word 名 masked);
- and so on.

The pre-trained language model then predicts the mask in the first text sample “I am a [mask]”. The resulting enhanced text samples may be “I am a teacher”, “I am a student”, “I am an employee”, “I am a staff member”, and so on.
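The masking and filling steps above can be sketched as follows. The sketch makes these assumptions:

- Tokenization is whitespace-based for readability. Chinese text would instead be split into characters and joined without spaces.
- `make_masked_variants`, `fill_masks`, and the `predict(tokens, position)` callable are hypothetical. They stand in for the pre-trained language model, not for any specific library API.
- The r-th enhanced sample uses the r-th ranked candidate at every masked position, which is a simplification.

```python
import random
from typing import Callable, List

MASK = "[mask]"


def make_masked_variants(tokens: List[str], ratio: float = 0.15,
                         n_variants: int = 4, seed: int = 0) -> List[List[str]]:
    """Build "first text samples" by masking about `ratio` of the tokens.

    Each variant masks a fresh random set of positions; at least one token is
    always masked so short texts still yield a prediction target.
    """
    if not tokens:
        return []
    rng = random.Random(seed)
    k = max(1, round(ratio * len(tokens)))
    variants = []
    for _ in range(n_variants):
        positions = set(rng.sample(range(len(tokens)), k))
        variants.append([MASK if i in positions else t for i, t in enumerate(tokens)])
    return variants


def fill_masks(masked: List[str], predict: Callable[[List[str], int], List[str]],
               top_k: int = 3) -> List[str]:
    """Turn one masked sample into up to `top_k` enhanced samples."""
    positions = [i for i, t in enumerate(masked) if t == MASK]
    if not positions:
        return []
    candidates = {i: predict(masked, i) for i in positions}  # ranked per mask
    enhanced = []
    for r in range(top_k):
        if any(r >= len(candidates[i]) for i in positions):
            break  # ran out of candidates for some mask
        filled = [candidates[i][r] if i in candidates else t for i, t in enumerate(masked)]
        enhanced.append(" ".join(filled))
    return enhanced
```

For example, with a stub predictor that proposes `["teacher", "student"]`, `fill_masks(["I", "am", "a", "[mask]"], stub)` returns `["I am a teacher", "I am a student"]`.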

S203: Rewrite the text samples based on preset prompts. Then perform continuation prediction on the rewritten text samples based on the pre-trained language model, to generate enhanced text samples whose prediction type is continuation prediction.

This step aims to improve the model's recall and fully exploit the generalization ability of the classification model. It proceeds as follows:

1. Obtain the second text samples, that is, the text samples labeled as matching the text review type.
2. Rewrite the second text samples based on preset prompts to obtain third text samples.
3. Splice any two third text samples to obtain a fourth text sample.
4. Let the pre-trained language model continue the fourth text sample, generating enhanced text samples whose prediction type is continuation prediction.

As shown in FIG. 3, multiple different prompt templates are designed first. Second text samples are randomly sampled and rewritten with the preset prompt templates to obtain third text samples. The third text samples are then spliced according to the defined prompt; usable prompt templates are shown in FIG. 3.

The core idea is to construct a prompt that lists two existing second text samples matching the text review type. Given this prompt, the pre-trained language model is expected to continue by generating a third text sample that matches the text review type. That output is an enhanced text sample whose prediction type is continuation prediction.
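The actual templates appear only in FIG. 3 and are not reproduced in this text. The `HEADER` and `ITEM` strings below are therefore hypothetical placeholders, and `build_continuation_prompts` is an illustrative name. The sketch shows the list-style splicing idea:

- rewrite each matching sample as a numbered item;
- join two randomly chosen items;
- leave an open third item for the language model to continue.

```python
import random
from typing import List, Sequence

# Hypothetical templates; the real ones are in FIG. 3.
HEADER = "Below are examples of {review_type} text:"
ITEM = "{n}. {text}"


def build_continuation_prompts(matching: Sequence[str], review_type: str,
                               n_prompts: int, seed: int = 0) -> List[str]:
    """Splice two randomly chosen matching ("second") samples into one prompt.

    Each sample is rewritten as a numbered list item (a "third" sample); two
    items are joined under a header with an open item "3." left for the
    language model to continue (the spliced result is the "fourth" sample).
    """
    if len(matching) < 2:
        raise ValueError("need at least two matching samples")
    rng = random.Random(seed)
    prompts = []
    for _ in range(n_prompts):
        a, b = rng.sample(list(matching), 2)  # two distinct samples
        items = [ITEM.format(n=1, text=a), ITEM.format(n=2, text=b)]
        prompts.append("\n".join([HEADER.format(review_type=review_type), *items, "3."]))
    return prompts
```

Each prompt would then be sent to a generative model. Only the text produced after "3." would be kept as the candidate enhanced sample and pseudo-labeled in the next step.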

Take ERNIE 3.0 as an example of the pre-trained language model. ERNIE 3.0 consists of two parts, an understanding network and a generation network, so it can perform understanding and generation tasks simultaneously during pre-training. Here the generation network is used to continue the fourth text sample, generating enhanced text samples whose prediction type is continuation prediction.

S204: Obtain labels and confidence levels of the enhanced text samples based on the first classification model.

S205: Filter the enhanced text samples according to their prediction types, labels, and confidence levels. Then update the i-th round text sample set with the filtered enhanced text samples to obtain the (i+1)-th round text sample set.

S206: Train the first classification model on the (i+1)-th round text sample set to obtain the second classification model after the (j+1)-th round of training. Then continue to obtain the next round's text sample set to train the second classification model, until training ends and the target classification model is generated.

For steps S204 to S206, refer to the foregoing embodiments; details are not repeated here.

In the embodiments of the present disclosure, two kinds of enhanced text samples are generated:

- The text samples are masked, and the pre-trained language model completes the masked samples by mask prediction. This yields enhanced text samples whose prediction type is mask prediction.
- The text samples are rewritten based on preset prompts and continued by the pre-trained language model. This yields enhanced text samples whose prediction type is continuation prediction.

This avoids introducing noise during model training and increases the diversity of the training data. It thereby extends the boundary of model training, fully exploits the generalization ability of the classification model, and helps improve its accuracy.

图4是根据本公开一个实施例的适用于文本审核的分类模型的训练方法的流程图,如图4所示,该方法包括以下步骤:FIG. 4 is a flowchart of a training method for a classification model suitable for text review according to an embodiment of the present disclosure. As shown in FIG. 4 , the method includes the following steps:

S401,针对多个文本审核类型中的任一文本审核类型,获取文本审核类型对应的第i轮文本样本集和第j轮训练后的第一分类模型,i、j为正整数。S401. For any text review type among multiple text review types, obtain the i-th round of text sample set corresponding to the text review type and the first classification model after the j-th round of training, where i and j are positive integers.

S402,基于预训练语言模型对第i轮文本样本集中的文本样本进行不同类型的预测,以获取不同预测类型的增强文本样本。S402. Based on the pre-trained language model, perform different types of predictions on the text samples in the i-th round of text samples, so as to obtain enhanced text samples of different prediction types.

关于步骤S401~步骤S402的介绍可以参见上述实施例中的内容,此处不再赘述。For the introduction of step S401 to step S402, reference may be made to the content in the foregoing embodiments, and details are not repeated here.

S403,基于第一分类模型获取增强文本样本的标签和置信度。S403. Acquire labels and confidence levels of the enhanced text samples based on the first classification model.

可选地,本公开实施例中的第一分类模型可以是二分类模型。基于第一分类模型对增强文本样本进行分类处理,判断增强文本样本是否符合当前文本审核类型,从而获取增强文本样本的标签和置信度。Optionally, the first classification model in this embodiment of the present disclosure may be a binary classification model. The enhanced text sample is classified based on the first classification model, and whether the enhanced text sample conforms to the current text review type is judged, so as to obtain the label and confidence of the enhanced text sample.

In some implementations, the label of an enhanced text sample indicates that the sample conforms to the text review type; in other implementations, the label indicates that the sample does not conform to the text review type.

S404. In response to the prediction type of the enhanced text samples being mask prediction, filter the enhanced text samples based on their labels and confidence levels to generate first enhanced text samples.

In some implementations, the M enhanced text samples with the highest confidence among those labeled as conforming to the text review type are obtained as third enhanced text samples, and the N enhanced text samples with the highest confidence among those labeled as not conforming to the text review type are obtained as fourth enhanced text samples; the third and fourth enhanced text samples are determined as the first enhanced text samples. M and N are positive integers.

For example, the single enhanced text sample with the highest confidence among those labeled as conforming to the text review type, together with the single enhanced text sample with the highest confidence among those labeled as not conforming, may be taken as the first enhanced text samples (M = N = 1).
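A minimal sketch of the S404 filter, assuming it is applied to the candidates generated from one source text so that the kept conforming and non-conforming variants form the near-identical pairs described in the note that follows. `Scored` and `select_mask_augmented` are hypothetical names; calling it with `m = n = 1` reproduces the example given above.

```python
import heapq
from typing import List, NamedTuple


class Scored(NamedTuple):
    text: str
    conforms: bool     # label from the first classification model
    confidence: float  # confidence of that label


def select_mask_augmented(candidates: List[Scored], m: int, n: int) -> List[Scored]:
    """Keep the top-m conforming and top-n non-conforming candidates by confidence."""
    positives = [s for s in candidates if s.conforms]
    negatives = [s for s in candidates if not s.conforms]
    third = heapq.nlargest(m, positives, key=lambda s: s.confidence)   # "third" samples
    fourth = heapq.nlargest(n, negatives, key=lambda s: s.confidence)  # "fourth" samples
    return third + fourth  # together: the "first enhanced text samples"
```

`heapq.nlargest` avoids fully sorting large candidate lists and returns its results in descending order of confidence; if fewer than `m` or `n` candidates carry a label, all of them are kept.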

It should be noted that the purpose of this data augmentation process is for the model to learn why a text sample conforms to the text review type and where the keywords are located. For example, if the masked part of one text sample is modified to produce two enhanced texts, one conforming to the text review type and the other not, the two texts are very similar; feeding both into the classification model helps it learn why changing only the masked part flips the label. This data augmentation process can therefore improve the accuracy of the classification model.

S405. In response to the prediction type of the enhanced text samples being continuation prediction, filter the enhanced text samples based on their labels to generate second enhanced text samples.

In some implementations, the enhanced text samples may contain duplicates. To reduce computation and speed up training, the enhanced text samples may be merged to remove duplicates, yielding fifth enhanced text samples; the fifth enhanced text samples labeled as conforming to the text review type are determined as the second enhanced text samples.
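The S405 filter reduces to de-duplication followed by a label filter. The sketch below assumes exact string matching as the merging criterion (the disclosure does not say how duplicates are detected) and uses the hypothetical name `select_continuation_augmented`.

```python
from typing import Iterable, List, Tuple

# (text, conforms) pairs; `conforms` is the label from the first classifier.
Labeled = Tuple[str, bool]


def select_continuation_augmented(candidates: Iterable[Labeled]) -> List[Labeled]:
    """Drop exact-duplicate texts, then keep only samples labeled as conforming."""
    seen = set()
    fifth: List[Labeled] = []  # de-duplicated "fifth enhanced text samples"
    for text, conforms in candidates:
        if text in seen:
            continue  # keep only the first occurrence of each text
        seen.add(text)
        fifth.append((text, conforms))
    return [(text, conforms) for text, conforms in fifth if conforms]
```

Keeping only conforming (violating) samples matches the recall-oriented goal stated in the following note; a fuzzier merge, such as near-duplicate detection, could be swapped in without changing the interface.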

It should be noted that the purpose of this data augmentation process is to generate more violating samples (text samples labeled as conforming to the text review type) and to filter out samples predicted as non-violating (text samples labeled as not conforming to the text review type), with the aim of improving the recall of the model.

S406. Add the first enhanced text samples and the second enhanced text samples to the i-th round text sample set to obtain the (i+1)-th round text sample set.

S407. Train the first classification model on the (i+1)-th round text sample set to obtain the second classification model after the (j+1)-th round of training, and continue obtaining the next round's text sample set to train the second classification model, until training ends and the target classification model is generated.
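The round structure of S401 to S407 can be sketched as a loop in which the model from the previous round labels the next round's augmented data. This is an illustration under assumptions: a fixed number of rounds stands in for "until training ends", i and j advance together, and `augment`, `label_and_filter`, and `train` are hypothetical callables standing in for the pre-trained language model, the classifier-based filtering of S403 to S405, and the training step.

```python
from typing import Callable, List, Tuple, TypeVar

Model = TypeVar("Model")
Sample = Tuple[str, bool]  # (text, label)


def iterative_training(
    initial_set: List[Sample],
    model: Model,
    augment: Callable[[List[Sample]], List[str]],
    label_and_filter: Callable[[Model, List[str]], List[Sample]],
    train: Callable[[Model, List[Sample]], Model],
    rounds: int,
) -> Tuple[Model, List[Sample]]:
    """Alternate augmentation, self-labeling, and retraining for a fixed number of rounds."""
    sample_set = list(initial_set)
    for _ in range(rounds):
        candidates = augment(sample_set)                # S402: PLM-based augmentation
        selected = label_and_filter(model, candidates)  # S403-S405: score and filter
        sample_set = sample_set + selected              # S406: round i -> round i+1
        model = train(model, sample_set)                # S407: round j -> round j+1
    return model, sample_set
```

In a real pipeline the stopping rule might instead check validation metrics between rounds; the loop body would stay the same.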

For steps S406 to S407, reference may be made to the foregoing embodiments; details are not repeated here.

In this embodiment of the present disclosure, the labels and confidence levels of the enhanced text samples are obtained based on the first classification model. When the prediction type of the enhanced text samples is mask prediction, they are filtered based on their labels and confidence levels to generate first enhanced text samples; when the prediction type is continuation prediction, they are filtered based on their labels to generate second enhanced text samples. The present disclosure uses the classification model from the previous round of training to score the enhanced samples and generate their labels, which can avoid introducing noise during model training. Because the pre-trained language model has been trained on massive data during pre-training, it can generate semantically rich data; using it for data augmentation can increase the diversity of the training data, thereby extending the boundary of the classification model and fully exploiting its generalization ability.

FIG. 5 is a flowchart of a training method for a classification model suitable for text review according to an embodiment of the present disclosure. As shown in FIG. 5, in this embodiment, for any text review type among multiple text review types, the i-th round text sample set corresponding to the text review type and the first classification model after the j-th round of training are obtained. The text samples are masked, and mask prediction is performed on the masked text samples based on the pre-trained language model to generate enhanced text samples whose prediction type is mask prediction; the text samples are also rewritten based on preset prompt words, and continuation prediction is performed on the rewritten text samples based on the pre-trained language model to generate enhanced text samples whose prediction type is continuation prediction. The labels and confidence levels of the enhanced text samples are obtained based on the first classification model. When the prediction type is mask prediction, the enhanced text samples are filtered based on their labels and confidence levels to generate first enhanced text samples; when the prediction type is continuation prediction, they are filtered based on their labels to generate second enhanced text samples. The first and second enhanced text samples are added to the i-th round text sample set to obtain the (i+1)-th round text sample set. The first classification model is trained on the (i+1)-th round text sample set to obtain the second classification model after the (j+1)-th round of training, and the next round's text sample set continues to be obtained to train the second classification model until training ends and the target classification model is generated.

The present disclosure uses the classification model from the previous round of training to score the enhanced samples and generate their labels, which can avoid introducing noise during model training. Because the pre-trained language model has been trained on massive data during pre-training, it can generate semantically rich data; using it for data augmentation can increase the diversity of the training data, thereby extending the boundary of the classification model and fully exploiting its generalization ability.

FIG. 6 is a structural diagram of a training device for a classification model suitable for text review according to an embodiment of the present disclosure. As shown in FIG. 6, the training device 600 for a classification model suitable for text review includes:

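a first acquisition module 610, configured to, for any text review type among multiple text review types, obtain the i-th round text sample set corresponding to the text review type and the first classification model after the j-th round of training, where i and j are positive integers;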

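a second acquisition module 620, configured to perform different types of prediction on the text samples in the i-th round text sample set based on the pre-trained language model, so as to obtain enhanced text samples of different prediction types;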

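a third acquisition module 630, configured to obtain the labels and confidence levels of the enhanced text samples based on the first classification model;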

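an update module 640, configured to filter the enhanced text samples according to their prediction types, labels, and confidence levels, and to update the i-th round text sample set with the filtered enhanced text samples to obtain the (i+1)-th round text sample set; and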

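a training module 650, configured to train the first classification model on the (i+1)-th round text sample set to obtain the second classification model after the (j+1)-th round of training, and to continue obtaining the next round's text sample set to train the second classification model until training ends and the target classification model is generated.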

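In some implementations, the second acquisition module 620 is further configured to: mask the text samples and perform mask prediction on the masked text samples based on the pre-trained language model to generate enhanced text samples whose prediction type is mask prediction; and/or rewrite the text samples based on preset prompt words and perform continuation prediction on the rewritten text samples based on the pre-trained language model to generate enhanced text samples whose prediction type is continuation prediction.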

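In some implementations, the second acquisition module 620 is further configured to: mask a text sample according to a preset coverage ratio to generate a first text sample containing one or more masks; and input the first text sample into the pre-trained language model, which predicts the one or more masks in the first text sample to generate enhanced text samples.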
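A sketch of the masking and mask-prediction step, assuming the text has already been split into a token list (for Chinese text, each character could be one token), a BERT-style `[MASK]` token, and random choice of positions at the preset coverage ratio; the disclosure does not say how positions are chosen. `fill_masks` is a hypothetical callable standing in for the pre-trained language model; in practice it might wrap a masked language model, for example a Hugging Face `transformers` fill-mask pipeline.

```python
import random
from typing import Callable, List, Sequence, Tuple

MASK = "[MASK]"  # BERT-style mask token (assumed)


def mask_tokens(
    tokens: Sequence[str], ratio: float, rng: random.Random
) -> Tuple[List[str], List[int]]:
    """Replace about `ratio` of the tokens (at least one) with MASK at random positions."""
    if not tokens or not 0.0 < ratio <= 1.0:
        raise ValueError("need a non-empty token list and 0 < ratio <= 1")
    k = max(1, round(len(tokens) * ratio))  # number of positions to cover
    positions = sorted(rng.sample(range(len(tokens)), k))
    masked = list(tokens)
    for pos in positions:
        masked[pos] = MASK
    return masked, positions


def mask_predict_augment(
    tokens: Sequence[str],
    ratio: float,
    fill_masks: Callable[[List[str]], List[str]],
    num_variants: int,
    seed: int = 0,
) -> List[List[str]]:
    """Generate `num_variants` enhanced samples via mask-then-predict.

    `fill_masks` receives a token list containing MASK entries and returns
    the list with every MASK replaced by a predicted token.
    """
    rng = random.Random(seed)
    variants = []
    for _ in range(num_variants):
        masked, _ = mask_tokens(tokens, ratio, rng)  # the "first text sample"
        variants.append(fill_masks(masked))
    return variants
```

A seeded `random.Random` keeps augmentation reproducible across training rounds; each variant masks a fresh set of positions, so one source text can yield several candidates for the S404 filter.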

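In some implementations, the second acquisition module 620 is further configured to: obtain, from the text samples, second text samples labeled as conforming to the text review type; rewrite the second text samples based on preset prompt words to obtain third text samples; concatenate any two third text samples to obtain a fourth text sample; and perform continuation on the fourth text sample based on the pre-trained language model to generate enhanced text samples.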
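The continuation branch can be sketched as prompt rewriting, pairwise splicing, and generation. The prompt template (for example `"Violating example: {text}"`), the newline used for splicing, the optional `max_pairs` cap, and the `generate` callable standing in for the pre-trained language model are all hypothetical choices for illustration; the disclosure does not specify them.

```python
from itertools import combinations
from typing import Callable, List, Optional, Sequence, Tuple


def continuation_augment(
    samples: Sequence[Tuple[str, bool]],
    prompt_template: str,
    generate: Callable[[str], str],
    max_pairs: Optional[int] = None,
) -> List[str]:
    """Prompt-rewrite conforming samples, splice pairs, and let a generator continue them.

    `samples` are (text, conforms) pairs; `prompt_template` must contain a
    `{text}` placeholder; `generate` returns only the newly generated text.
    """
    second = [text for text, conforms in samples if conforms]       # second text samples
    third = [prompt_template.format(text=text) for text in second]  # prompt-rewritten
    fourth = ["\n".join(pair) for pair in combinations(third, 2)]   # spliced pairs
    if max_pairs is not None:
        fourth = fourth[:max_pairs]  # cap the quadratic number of pairs
    return [generate(prompt) for prompt in fourth]
```

Splicing two rewritten violating examples gives the language model a few-shot style context, so its continuation tends to resemble them; the resulting texts are then relabeled by the classifier and filtered as in S405.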

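In some implementations, the update module 640 is further configured to: in response to the prediction type of the enhanced text samples being mask prediction, filter the enhanced text samples based on their labels and confidence levels to generate first enhanced text samples; in response to the prediction type being continuation prediction, filter the enhanced text samples based on their labels to generate second enhanced text samples; and add the first and second enhanced text samples to the i-th round text sample set to obtain the (i+1)-th round text sample set.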

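In some implementations, the labels include conforming to the text review type and not conforming to the text review type, and the update module 640 is further configured to: obtain, as third enhanced text samples, the M enhanced text samples with the highest confidence among those labeled as conforming to the text review type, and, as fourth enhanced text samples, the N enhanced text samples with the highest confidence among those labeled as not conforming; and determine the third and fourth enhanced text samples as the first enhanced text samples.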

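In some implementations, the update module 640 is further configured to: merge the enhanced text samples to remove duplicates, obtaining fifth enhanced text samples; and determine the fifth enhanced text samples labeled as conforming to the text review type as the second enhanced text samples.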

The present disclosure can avoid introducing noise during model training and increase the diversity of the training data, thereby extending the boundary of model training, fully exploiting the generalization ability of the classification model, and helping to improve its accuracy.

According to embodiments of the present disclosure, the present disclosure further provides an electronic device, a readable storage medium, and a computer program product.

FIG. 7 is a block diagram of an electronic device for implementing the training method for a classification model suitable for text review according to an embodiment of the present disclosure. The electronic device is intended to represent various forms of digital computers, such as laptops, desktops, workstations, personal digital assistants, servers, blade servers, mainframes, and other suitable computers. The electronic device may also represent various forms of mobile devices, such as personal digital processors, cellular phones, smartphones, wearable devices, and other similar computing devices. The components shown herein, their connections and relationships, and their functions are examples only and are not intended to limit the implementations of the present disclosure described and/or claimed herein.

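As shown in FIG. 7, the device 700 includes a computing unit 701, which can perform various appropriate actions and processes according to a computer program stored in a read-only memory (ROM) 702 or a computer program loaded from a storage unit 708 into a random access memory (RAM) 703. The RAM 703 may also store various programs and data required for the operation of the device 700. The computing unit 701, the ROM 702, and the RAM 703 are connected to one another via a bus 704. An input/output (I/O) interface 705 is also connected to the bus 704.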

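Multiple components in the device 700 are connected to the I/O interface 705, including: an input unit 706, such as a keyboard or mouse; an output unit 707, such as various types of displays and speakers; a storage unit 708, such as a magnetic disk or optical disc; and a communication unit 709, such as a network card, modem, or wireless communication transceiver. The communication unit 709 allows the device 700 to exchange information/data with other devices over a computer network such as the Internet and/or various telecommunication networks.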

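The computing unit 701 may be any of various general-purpose and/or special-purpose processing components with processing and computing capabilities. Some examples of the computing unit 701 include, but are not limited to, a central processing unit (CPU), a graphics processing unit (GPU), various dedicated artificial intelligence (AI) computing chips, various computing units that run machine learning model algorithms, a digital signal processor (DSP), and any appropriate processor, controller, or microcontroller. The computing unit 701 performs the methods and processes described above, such as the training method for a classification model suitable for text review. For example, in some embodiments, the training method may be implemented as a computer software program tangibly embodied in a machine-readable medium, such as the storage unit 708. In some embodiments, part or all of the computer program may be loaded and/or installed onto the device 700 via the ROM 702 and/or the communication unit 709. When the computer program is loaded into the RAM 703 and executed by the computing unit 701, one or more steps of the training method described above may be performed. Alternatively, in other embodiments, the computing unit 701 may be configured to perform the training method for a classification model suitable for text review in any other appropriate manner (for example, by means of firmware).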

Various implementations of the systems and techniques described above may be realized in digital electronic circuitry, integrated circuit systems, field-programmable gate arrays (FPGAs), application-specific integrated circuits (ASICs), application-specific standard products (ASSPs), systems on chip (SOCs), complex programmable logic devices (CPLDs), computer hardware, firmware, software, and/or combinations thereof. These various implementations may include implementation in one or more computer programs that are executable and/or interpretable on a programmable system including at least one programmable processor, which may be a special-purpose or general-purpose programmable processor that can receive data and instructions from a storage system, at least one input device, and at least one output device, and transmit data and instructions to the storage system, the at least one input device, and the at least one output device.

Program code for implementing the methods of the present disclosure may be written in any combination of one or more programming languages. The program code may be provided to a processor or controller of a general-purpose computer, special-purpose computer, or other programmable data processing apparatus, such that the program code, when executed by the processor or controller, causes the functions/operations specified in the flowcharts and/or block diagrams to be implemented. The program code may execute entirely on the machine, partly on the machine, as a stand-alone software package partly on the machine and partly on a remote machine, or entirely on the remote machine or server.

In the context of the present disclosure, a machine-readable medium may be a tangible medium that can contain or store a program for use by, or in connection with, an instruction execution system, apparatus, or device. The machine-readable medium may be a machine-readable signal medium or a machine-readable storage medium. A machine-readable medium may include, but is not limited to, electronic, magnetic, optical, electromagnetic, infrared, or semiconductor systems, apparatuses, or devices, or any suitable combination of the foregoing. More specific examples of machine-readable storage media would include an electrical connection based on one or more wires, a portable computer disk, a hard disk, random access memory (RAM), read-only memory (ROM), erasable programmable read-only memory (EPROM or flash memory), optical fiber, portable compact disc read-only memory (CD-ROM), an optical storage device, a magnetic storage device, or any suitable combination of the foregoing.

To provide interaction with a user, the systems and techniques described herein may be implemented on a computer having a display device (e.g., a CRT (cathode ray tube) or LCD (liquid crystal display) monitor) for displaying information to the user, and a keyboard and pointing device (e.g., a mouse or trackball) through which the user can provide input to the computer. Other kinds of devices may also be used to provide interaction with the user; for example, feedback provided to the user may be any form of sensory feedback (e.g., visual, auditory, or tactile feedback), and input from the user may be received in any form, including acoustic, speech, or tactile input.

The systems and techniques described herein may be implemented in a computing system that includes back-end components (e.g., a data server), a computing system that includes middleware components (e.g., an application server), a computing system that includes front-end components (e.g., a user computer having a graphical user interface or web browser through which the user can interact with implementations of the systems and techniques described herein), or a computing system that includes any combination of such back-end, middleware, or front-end components. The components of the system may be interconnected by any form or medium of digital data communication (e.g., a communication network). Examples of communication networks include local area networks (LANs), wide area networks (WANs), and the Internet.

A computer system may include clients and servers. Clients and servers are generally remote from each other and typically interact through a communication network. The client-server relationship arises from computer programs running on the respective computers and having a client-server relationship with each other. The server may be a cloud server, a server of a distributed system, or a server combined with a blockchain.

It should be understood that steps may be reordered, added, or deleted using the various forms of flow shown above. For example, the steps described in the present disclosure may be performed in parallel, sequentially, or in a different order, as long as the desired result of the technical solution disclosed herein can be achieved; no limitation is imposed herein.

The specific embodiments described above do not limit the protection scope of the present disclosure. Those skilled in the art should understand that various modifications, combinations, sub-combinations, and substitutions may be made depending on design requirements and other factors. Any modification, equivalent replacement, improvement, and the like made within the spirit and principles of the present disclosure shall fall within the protection scope of the present disclosure.

Claims (17)

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202310019334.2A (CN116226375A) | 2023-01-06 | 2023-01-06 | Training method and device for classification model suitable for text review |

Applications Claiming Priority (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202310019334.2A (CN116226375A) | 2023-01-06 | 2023-01-06 | Training method and device for classification model suitable for text review |

Publications (1)

| Publication Number | Publication Date |
|---|---|
| CN116226375A | 2023-06-06 |

Family ID: 86577873

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |
|---|---|---|---|
| CN202310019334.2A (CN116226375A), pending | Training method and device for classification model suitable for text review | 2023-01-06 | 2023-01-06 |

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN116226375A |

Cited By (1)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| WO2025098098A1* | 2023-11-08 | 2025-05-15 | 阿里巴巴(中国)有限公司 (Alibaba (China) Co., Ltd.) | Sample processing methods, system and electronic device |

- 2023-01-06: application CN202310019334.2A filed in China, published as CN116226375A (status: pending)

Similar Documents

| Publication | Title |
|---|---|
| US12314677B2 | Method for pre-training model, device, and storage medium |
| CN112560912B | Classification model training methods, devices, electronic equipment and storage media |
| US12373735B2 | Method for pre-training language model |
| CN110597994A | Event element identification method and device |
| CN113722493A | Data processing method, device, storage medium and program product for text classification |
| CN113688232B | Method and device for classifying bid-inviting text, storage medium and terminal |
| CN114218951B | Entity recognition model training method, entity recognition method and device |
| CN113836925A | Training method, device, electronic device and storage medium for pre-trained language model |
| US20220391598A1 | Text checking method based on knowledge graph, electronic device, and medium |
| CN114881129A | Model training method and device, electronic equipment and storage medium |
| CN114970540A | Method and device for training text audit model |
| US20220198358A1 | Method for generating user interest profile, electronic device and storage medium |
| CN112559885A | Method and device for determining training model of map interest point and electronic equipment |
| CN117371428B | Text processing method and device based on large language model |
| CN114417974A | Model training method, information processing method, device, electronic device and medium |
| CN114896986A | Method and device for enhancing training data of semantic recognition model |
| CN114817476A | Language model training method and device, electronic equipment and storage medium |
| CN116932762A | Small sample financial text classification method, system, medium and equipment |
| US20230139642A1 | Method and apparatus for extracting skill label |
| CN117573817A | Model training method, correlation determining method, device, equipment and storage medium |
| CN113807390A | Model training method and device, electronic equipment and storage medium |
| CN114492370B | Web page recognition method, device, electronic device and medium |
| US20220367052A1 | Neural networks with feedforward spatial transformation units |
| CN113641724B | Knowledge tag mining method and device, electronic equipment and storage medium |
| CN116226375A | Training method and device for classification model suitable for text review |

Legal Events

| Code | Title |
|---|---|
| PB01 | Publication |
| SE01 | Entry into force of request for substantive examination |