CN116091533A - Laser radar target demonstration and extraction method in Qt development environment - Google Patents


- Publication number

- CN116091533A (application CN202310002862.7A)

- Authority

- CN

- China

- Prior art keywords

- target

- data

- point cloud

- frame

- blist


- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Granted

Classifications

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T7/00—Image analysis

- G06T7/20—Analysis of motion

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T2207/00—Indexing scheme for image analysis or image enhancement

- G06T2207/10—Image acquisition modality

- G06T2207/10028—Range image; Depth image; 3D point clouds

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T2207/00—Indexing scheme for image analysis or image enhancement

- G06T2207/10—Image acquisition modality

- G06T2207/10032—Satellite or aerial image; Remote sensing

- G06T2207/10044—Radar image

- Y—GENERAL TAGGING OF NEW TECHNOLOGICAL DEVELOPMENTS; GENERAL TAGGING OF CROSS-SECTIONAL TECHNOLOGIES SPANNING OVER SEVERAL SECTIONS OF THE IPC; TECHNICAL SUBJECTS COVERED BY FORMER USPC CROSS-REFERENCE ART COLLECTIONS [XRACs] AND DIGESTS

- Y02—TECHNOLOGIES OR APPLICATIONS FOR MITIGATION OR ADAPTATION AGAINST CLIMATE CHANGE

- Y02A—TECHNOLOGIES FOR ADAPTATION TO CLIMATE CHANGE

- Y02A90/00—Technologies having an indirect contribution to adaptation to climate change

- Y02A90/10—Information and communication technologies [ICT] supporting adaptation to climate change, e.g. for weather forecasting or climate simulation

Landscapes

- Engineering & Computer Science (AREA)

- Multimedia (AREA)

- Computer Vision & Pattern Recognition (AREA)

- Physics & Mathematics (AREA)

- General Physics & Mathematics (AREA)

- Theoretical Computer Science (AREA)

- Optical Radar Systems And Details Thereof (AREA)

- Radar Systems Or Details Thereof (AREA)

Description

Technical Field

The invention relates to the field of computer vision, and in particular to a lidar target demonstration and extraction method in the Qt development environment.

Background Art

Qt is a complete cross-platform C++ framework for developing graphical user interface applications, with a broad development base and a good encapsulation mechanism. Its highly modular design, simplified memory management, and rich API give users a development environment that is highly portable, easy to use, and fast.

Lidar offers good directivity and high measurement accuracy. Using active detection, it can generate real-time, high-resolution 3D point clouds of the surrounding environment and is unaffected by ambient natural light.

How to combine the strengths of the two, so that point cloud data can be presented and targets recognized more intuitively and smoothly, has therefore become a new problem. Current approaches to combining Qt with lidar have the following problems:

First, a lidar can publish point cloud data through ROS nodes. Traditionally, obtaining ROS node data in Qt requires installing the ROS Qt Creator plug-in, configuring environment variables, creating a workspace, modifying CMakeLists.txt, and so on. These steps are numerous, error-prone, and hard to understand.

Second, the most direct way to draw 3D point cloud images in Qt is to use the built-in Data Visualization module. However, this module has high CPU usage, which makes the point cloud display stutter, and it cannot represent reflectance intensity information in pseudo-color.

Third, current lidar target extraction methods are mainly either voxel-based or based on the raw point cloud. Most voxel-based methods require abstraction through a 3D convolutional neural network. The computation is relatively complex, which hinders frame-level target extraction and tracking.

Summary of the Invention

To overcome the shortcomings of the above technologies, the present invention provides a lidar target demonstration and extraction method in the Qt development environment.

To solve the above technical problems, the present invention adopts the following technical solution: a lidar target demonstration and extraction method in the Qt development environment, comprising the following steps:

S1. Use Qt to subscribe to lidar point cloud data in ROS;

S2. Use the OpenGL module in Qt to dynamically display colored 3D point cloud data;

S3. Use the "voxel connection method" to extract multiple targets from single-frame data;

S4. Track multiple targets through inter-frame correlation analysis.

Further, step S1 specifically includes:

S11. Install Qt and ROS Melodic on the Ubuntu desktop operating system;

S12. Add the dynamic link libraries that ROS depends on, and their paths, to the Qt project file;

S13. Create a subscription node in Qt for subscribing to lidar point cloud data in ROS;

S14. After the subscription node is created, start the lidar publishing node, and obtain the formatted data published by the lidar by implementing the static callback function of the subscription node.

Further, step S2 specifically includes:

S21. Point cloud data format conversion;

S22. Transferring the data out of the callback;

S23. Use OpenCV to map the single-frame point cloud reflectance grayscale data to color data;

S24. Use OpenGL to render the point cloud data;

S25. Dynamic update;

S26. Graphic transformation.

Further, the single-frame data in step S3 refers to the data obtained in one scanning period of the lidar, and step S3 specifically includes:

S31. Establish voxels;

S32. Obtain background data;

S33. Identify targets;

S34. Confirm targets.

Further, step S4 specifically includes:

S41. From the bright-cell array of each target in the current frame, record the center point position of each target;

S42. Obtain the bright-cell array of each target in the next frame and record the center point position of each target. Perform correlation analysis on the bright-cell arrays of the targets in the two consecutive frames and, by exhaustive traversal, find for each target in the previous frame the next-frame array with the greatest correlation;

S43. Calculate the spatial distance the same target moves between the two frames to obtain its speed;

S44. Set the next frame as the current frame. When a new frame arrives, iterate steps S41, S42, and S43, so that the speed of each target is updated once per lidar scanning period.
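Step S43 does not spell out the speed formula. The following is a sketch only. It assumes the speed is the displacement of the target center point between two consecutive frames divided by the lidar scanning period T, where c_k denotes the center point of the target in frame k:

```latex
v_k = \frac{\lVert \mathbf{c}_{k+1} - \mathbf{c}_{k} \rVert}{T}
    = \frac{\sqrt{(x_{k+1}-x_k)^2 + (y_{k+1}-y_k)^2 + (z_{k+1}-z_k)^2}}{T}
```

For instance, a hypothetical center displacement of 0.15 m over a scanning period of T = 0.1 s gives v_k = 0.15 / 0.1 = 1.5 m/s.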

Further, the format conversion in step S21 refers to converting the point cloud data type with a function provided by the ROS library;

The data in step S22 refers to the point cloud data in the static callback function of step S1;

The single-frame point cloud reflectance grayscale data in step S23 refers to the data obtained in one scanning period of the lidar;

In step S24, any point p in the point cloud data should contain position information (p_x, p_y, p_z) and color information (p_R, p_G, p_B). All of this information for a single-frame point cloud is written into the vertex buffer object QOpenGLBuffer *VBO. The vertex shader and fragment shader are then written in GLSL to compute and display the position and color of each point;

S25. Set the display duration t_P of a single-frame point cloud on screen. If the interface receives the point cloud at time t_1, the frame is displayed during [t_1, t_1 + t_P]. After t_1 + t_P the frame data is replaced by newer data, which achieves dynamic display and releases memory promptly;

S26. Combine the camera, view-angle, and rotation transformations in OpenGL and override the mouse event handlers in Qt. Dragging with the mouse then rotates the image and the mouse wheel zooms it, so point clouds of millions of points are displayed smoothly.
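Step S26 names the ingredients (camera, view angle, rotation, mouse events) but not the state those handlers update. Below is a minimal sketch assuming an orbit camera around the point-cloud origin. The struct, its parameters (degPerPixel, factorPerNotch) and the clamping limits are hypothetical. In Qt, mouseMoveEvent would call drag() with the pixel delta between move events. wheelEvent would pass QWheelEvent::angleDelta().y(), which Qt reports in eighths of a degree (120 per notch on a typical mouse).

```cpp
#include <cassert>
#include <cmath>

// Hypothetical orbit-camera state driven by Qt mouse events (names are illustrative).
struct OrbitCamera {
    float yawDeg = 0.0f;     // rotation about the vertical (Y) axis, degrees
    float pitchDeg = 0.0f;   // elevation above the XZ plane, degrees
    float distance = 10.0f;  // distance from the eye to the origin, meters

    static float clampf(float v, float lo, float hi) {
        return v < lo ? lo : (v > hi ? hi : v);
    }

    // Mouse drag: dx, dy are pixel deltas between two successive move events.
    void drag(float dx, float dy, float degPerPixel = 0.3f) {
        yawDeg += dx * degPerPixel;
        // Keep pitch away from +/-90 degrees so the "up" vector never becomes degenerate.
        pitchDeg = clampf(pitchDeg + dy * degPerPixel, -89.0f, 89.0f);
    }

    // Mouse wheel: angleDeltaY in eighths of a degree (120 per notch), as Qt reports it.
    void wheel(int angleDeltaY, float factorPerNotch = 0.9f) {
        assert(factorPerNotch > 0.0f && factorPerNotch < 1.0f);
        const float notches = angleDeltaY / 120.0f;
        // A positive delta (scrolling up) shrinks the distance, i.e. zooms in.
        distance = clampf(distance * std::pow(factorPerNotch, notches), 0.5f, 500.0f);
    }

    // Eye position on a sphere around the origin, e.g. as input to a lookAt() view matrix.
    void eye(float& x, float& y, float& z) const {
        const float kDegToRad = 3.14159265f / 180.0f;
        const float yaw = yawDeg * kDegToRad;
        const float pitch = pitchDeg * kDegToRad;
        x = distance * std::cos(pitch) * std::sin(yaw);
        y = distance * std::sin(pitch);
        z = distance * std::cos(pitch) * std::cos(yaw);
    }
};
```

The eye position could then feed a view matrix such as QMatrix4x4::lookAt(eye, center, up) before the VBO is drawn. Clamping the pitch avoids the flip that occurs when the view direction becomes parallel to the up vector.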

Further, the data transfer in step S22 proceeds as follows. A signal-slot connection is set up in the static callback function to pass the data to an ordinary (non-static) slot function of the same class. That slot function emits a signal connected to the external Designer UI class object. The data of the static function thereby reaches the external class object through signals and slots.

Further, using OpenCV in step S23 to map single-frame point cloud reflectance grayscale data to color data includes the following steps:

S231. Install OpenCV on the Ubuntu desktop operating system;

S232. Add the dynamic link libraries that OpenCV depends on to the Qt project file.

Further, step S31 is specifically as follows. Set the background sampling time t_s = 5 s; during [0, t_s] only the background point cloud is present. First obtain the maximum absolute coordinate values of the background point cloud along the X, Y, and Z axes, denoted x_m, y_m, z_m (in meters). A cuboid enclosing the entire current point cloud can then be established in the spatial Cartesian coordinate system, with ranges [-x_m, x_m], [-y_m, y_m], [-z_m, z_m]. Cubic voxels with an edge length of 0.1 m are then established, dividing the point cloud space into (20·x_m)·(20·y_m)·(20·z_m) voxels;
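As a worked example of the voxel count, take hypothetical extents x_m = 10 m, y_m = 10 m, z_m = 3 m. Each axis spans 2x_m and is divided into 0.1 m cells, so it contains 2x_m / 0.1 = 20x_m cells:

```latex
N_{\text{voxel}} = (20 x_m)(20 y_m)(20 z_m) = 200 \times 200 \times 60 = 2.4 \times 10^{6}
```

Because the count grows with the cube of the inverse cell size, halving the cell edge to 0.05 m would multiply it by 8.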

Step S32 is specifically as follows. Count the number N_s of scan points falling into a given voxel within t_s, and select the maximum reflectance r_max and the minimum reflectance r_min among these N_s points. The background reflectance interval of that voxel is then [r_min, r_max]. The reflectance intervals of all voxels in the enclosing cuboid are recorded in the same way and can be stored in computer memory as voxel attributes;

The condition for identifying a target in step S33 is as follows. After background acquisition is complete, a moving target that appears is illuminated by the laser and produces echoes. When single-frame echo data satisfies either of the following conditions, it is judged to be a target:

(1) The position p_i(x_i, y_i, z_i) does not belong to any voxel. In this case, the enclosing cuboid should be expanded according to the target position coordinates so that it fully contains the target point cloud;

(2) The position p_i(x_i, y_i, z_i) of the target point belongs to a voxel, but its reflectance r_i is not within the background reflectance interval of that voxel;

Step S34 is specifically as follows. The point cloud information identified from the background may represent multiple targets, so it must be segmented effectively. Segmentation is based on whether the voxels containing target points are connected to one another, and multiple targets are extracted with the "voxel connection method".

Further, the specific steps of extracting multiple targets with the "voxel connection method" are:

S341. In the enclosing cuboid, mark every voxel containing target points as a "bright cell". Store the center coordinates of each bright cell in a QVector3D variable and add it to the object blist of type QList<QVector3D>. As the candidate pool, blist is the sequence of bright cells containing all target points;

S342. Select any point m0(x0, y0, z0) in blist; it is the center of voxel M0. Six voxels share a face with M0, and each of the 12 edges of M0 is shared by exactly one further voxel that does not share a face with M0. Therefore 18 voxels adjoin M0; denote these adjacent voxels M0i (i = 0, 1, 2, ..., 17);

S343. From the relative position (u_i, v_i, w_i) of M0i with respect to M0, calculate the center coordinates of each adjacent voxel, m0i(x0 + u_i, y0 + v_i, z0 + w_i);

S344. Search blist for m0i. If it is present, store it in blist_0, the center point array of target 0, whose data type is also QList<QVector3D>. To prevent repeated searches, m0i must be deleted from blist; in other words, m0i is moved from the candidate pool blist to the target pool blist_0;

S345. For the first element m01 of blist_0, find its 18 adjacent voxels and obtain their center coordinates, m01i(x01 + u_i, y01 + v_i, z01 + w_i) (i = 0, 1, 2, ..., 17). If one of them is present in blist, store it in blist_0 and delete it from blist. Every element of blist_0 is traversed in this way. Because blist_0 keeps growing during the traversal, bright cells belonging to the current target are continually added;

S346. When the traversal ends, that is, when blist_0 no longer grows, the layer-by-layer bright cell selection centered on voxel M0 is complete, and blist_0 contains all bright cells of target 0;

S347. Check the number of elements in blist. If it is 0, only one target exists, and all the bright cells originally in blist belong to it. If it is greater than 0, further targets exist. In that case, following steps S342 to S347, extract the targets blist_1, blist_2, ..., blist_N from blist layer by layer until the candidate pool blist is empty, which means all targets have been extracted.

The invention discloses a lidar target demonstration and extraction method in the Qt development environment. The method subscribes in ROS to the messages published by the lidar sensor to obtain 3D point cloud data, and draws and renders a 3D colored point cloud model with OpenGL. It then uses the "voxel connection method" to segment and extract multiple targets in a single frame. Target tracking and real-time speed measurement are achieved by comparing the correlation of target voxels between frames. The steps of the method are relatively simple. It avoids the built-in data visualization module and optimizes the whole computation, which favors frame-level target extraction and tracking.

Description of Drawings

Fig. 1 is the overall flowchart of the present invention.

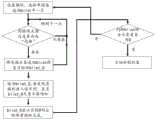

Fig. 2 is the flowchart of single-frame target extraction in the present invention.

Fig. 3 is the flowchart of target segmentation by the "voxel connection method" in the present invention.

Detailed Description of the Embodiments

The present invention is described in further detail below with reference to the drawings and specific embodiments.

As shown in Fig. 1, the lidar target demonstration and extraction method in the Qt development environment of the present invention is implemented as follows:

S1. Use Qt to subscribe to lidar point cloud data in ROS;

S2. Use the OpenGL module in Qt to dynamically display colored 3D point cloud data;

S3. Use the "voxel connection method" to extract multiple targets from single-frame data;

S4. Track multiple targets through inter-frame correlation analysis.

Step S1 specifically includes:

S11. Install Qt 5.9.9 and ROS Melodic on Ubuntu 18.04;

S12. Add the following ROS dependency libraries and their paths to the Qt project file:

```
INCLUDEPATH += /opt/ros/melodic/include
DEPENDPATH += /opt/ros/melodic/lib

LIBS += -L$$DEPENDPATH -lrosbag \
        -lroscpp \
        -lroslib \
        -lroslz4 \
        -lrostime \
        -lroscpp_serialization \
        -lrospack \
        -lcpp_common \
        -lrosbag_storage \
        -lrosconsole \
        -lxmlrpcpp \
        -lrosconsole_backend_interface \
        -lrosconsole_log4cxx
```

S13. Create a subscription node class QNodeSub in Qt to subscribe to lidar data in ROS; the class inherits from the Qt thread class QThread. The class implementation includes the header #include <ros/ros.h>, creates a handle ros::NodeHandle node, and defines the variable ros::Subscriber chatter_subscriber = node.subscribe("/livox/lidar", 1000, QNodeSub::chatterCallback). This creates the subscriber node object chatter_subscriber;

S14. After the subscription node is created, start the lidar publisher node. The sensor_msgs::PointCloud2 data published by the lidar sensor is obtained by implementing the static callback function void QNodeSub::chatterCallback(const sensor_msgs::PointCloud2 &msg) of the subscription node.

Step S2 specifically includes:

S21. Point cloud data format conversion;

S22. Transferring the data out of the callback;

S23. Use OpenCV to map the single-frame point cloud reflectance grayscale data to color data;

S24. Use OpenGL to render the point cloud data;

S25. Dynamic update;

S26. Graphic transformation.

The format conversion in step S21 refers to using the ROS library function sensor_msgs::convertPointCloud2ToPointCloud to convert sensor_msgs::PointCloud2 point cloud data into sensor_msgs::PointCloud data.

The data in step S22 refers to the point cloud data in the static callback function of step S14, namely the variable h of type PointCloud;

The transfer in step S22 proceeds as follows. A signal-slot connection is set up in the callback function to pass h to an ordinary slot function of the same class. That slot function emits a signal, with h as its parameter, that is connected to the external Designer UI class object. The data of the static function thereby reaches the external class object through signals and slots.

Step S23 uses OpenCV as follows:

S231. Install OpenCV 4.5.4 on Ubuntu 18.04;

S232. Add the dynamic link libraries that OpenCV depends on to the Qt project file:

```
INCLUDEPATH += /usr/local/include \
               /usr/local/include/opencv4 \
               /usr/local/include/opencv4/opencv2

LIBS += /usr/local/lib/libopencv_calib3d.so.4.5.4 \
        /usr/local/lib/libopencv_core.so.4.5.4 \
        /usr/local/lib/libopencv_highgui.so.4.5.4 \
        /usr/local/lib/libopencv_imgcodecs.so.4.5.4 \
        /usr/local/lib/libopencv_imgproc.so.4.5.4 \
        /usr/local/lib/libopencv_dnn.so.4.5.4
```

Mapping the single-frame point cloud reflectance grayscale data to color data in step S23 proceeds as follows. First create an image container (cv::Mat) object mapt of type CV_8UC1 whose matrix size is 1 × N, where N is the number of points in a single frame: cv::Mat mapt = cv::Mat::zeros(1, N, CV_8UC1). Then write the reflectance grayscale data from the single-frame PointCloud array h into mapt.

Define a cv::Mat object mapc and call cv::applyColorMap(mapt, mapc, cv::COLORMAP_JET) to map the grayscale image mapt to the JET pseudo-color image mapc. Because OpenCV stores pixels in BGR order, the R, G, and B values of the i-th pixel of mapc are mapc.at<cv::Vec3b>(0, i)[2], mapc.at<cv::Vec3b>(0, i)[1], and mapc.at<cv::Vec3b>(0, i)[0], respectively.
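To show what the JET pseudo-color mapping does without depending on OpenCV, here is a standard-library sketch. It uses a common piecewise-linear approximation of JET: low reflectance maps to blue, middle values to cyan, green and yellow, and high values to red. This is a swapped-in approximation, not OpenCV's COLORMAP_JET lookup table, so exact colors differ slightly. The names Rgb, jetApprox and colorizeFrame are hypothetical. Unlike cv::Vec3b, the struct here stores R first.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

struct Rgb { std::uint8_t r, g, b; };

// Approximates a JET-style colormap with three clamped ramps (blue -> cyan -> yellow -> red).
// NOTE: an illustration only, not OpenCV's COLORMAP_JET table.
Rgb jetApprox(std::uint8_t gray) {
    const double t = gray / 255.0;  // normalize reflectance to [0, 1]
    auto ramp = [t](double peak) {
        double v = 1.5 - std::fabs(4.0 * t - peak);  // trapezoid centered at t = peak / 4
        v = v < 0.0 ? 0.0 : (v > 1.0 ? 1.0 : v);     // clamp to [0, 1]
        return static_cast<std::uint8_t>(std::lround(v * 255.0));
    };
    return Rgb{ramp(3.0), ramp(2.0), ramp(1.0)};  // red peaks high, blue peaks low
}

// Colors one frame: one reflectance value per point in, one RGB triple per point out.
std::vector<Rgb> colorizeFrame(const std::vector<std::uint8_t>& reflectance) {
    std::vector<Rgb> colors;
    colors.reserve(reflectance.size());
    for (std::uint8_t r : reflectance) colors.push_back(jetApprox(r));
    assert(colors.size() == reflectance.size());
    return colors;
}
```

Reflectance 0 maps to dark blue (0, 0, 128) and 255 to dark red (128, 0, 0), matching the overall shape of JET.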

Rendering the point cloud data in step S24 works as follows. Any point p in the point cloud should contain position information (p_x, p_y, p_z) and color information (p_R, p_G, p_B). If a single-frame point cloud has N points, the array representing it has dimensions N × 6. This array is written into the vertex buffer object QOpenGLBuffer *VBO. The vertex shader and fragment shader are then written in GLSL to compute and display the position and color of each point.
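A minimal sketch of building the N × 6 array described above, assuming an interleaved layout [x, y, z, r, g, b] per point with 8-bit colors scaled to [0, 1] for a GLSL vec3 input. The layout and the names PointXYZ, ColorRGB, packInterleaved, kStrideBytes and kColorOffsetBytes are illustrative assumptions; the source only fixes the N × 6 shape.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct PointXYZ { float x, y, z; };         // position in meters
struct ColorRGB { std::uint8_t r, g, b; };  // 8-bit pseudo-color

// Builds the N x 6 vertex array [x, y, z, r, g, b] for one frame, colors scaled to [0, 1].
std::vector<float> packInterleaved(const std::vector<PointXYZ>& pts,
                                   const std::vector<ColorRGB>& cols) {
    assert(pts.size() == cols.size());  // one color per point
    std::vector<float> out;
    out.reserve(pts.size() * 6);
    for (std::size_t i = 0; i < pts.size(); ++i) {
        out.push_back(pts[i].x);
        out.push_back(pts[i].y);
        out.push_back(pts[i].z);
        out.push_back(cols[i].r / 255.0f);
        out.push_back(cols[i].g / 255.0f);
        out.push_back(cols[i].b / 255.0f);
    }
    return out;
}

// Attribute layout of the interleaved buffer, in bytes.
constexpr int kStrideBytes = 6 * sizeof(float);       // one whole vertex
constexpr int kColorOffsetBytes = 3 * sizeof(float);  // color follows position
```

With Qt, the array could be uploaded with VBO->allocate(data.data(), int(data.size() * sizeof(float))). The two vertex attributes could then be declared with QOpenGLShaderProgram::setAttributeBuffer(location, GL_FLOAT, offset, 3, kStrideBytes), using offset 0 for position and kColorOffsetBytes for color.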

Step S25 specifically includes setting the display duration t_P of a single-frame point cloud on screen. If the interface receives the point cloud at time t_1, the frame is displayed during [t_1, t_1 + t_P]. After t_1 + t_P the frame data is replaced by newer data, which achieves dynamic display and releases memory promptly.
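A minimal sketch of the display window in step S25. It assumes frames arrive in time order and that expired frames are pruned before each repaint (in Qt, for example, from a QTimer slot that then calls update()). TimedFrame and FrameWindow are hypothetical names. If t_P is longer than the scanning period, several consecutive frames overlap on screen. If t_P equals one period, each frame simply replaces the previous one.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <utility>
#include <vector>

// One received frame: its arrival time t1 and its packed vertex data.
struct TimedFrame {
    double receivedAt;            // t1, seconds
    std::vector<float> vertices;  // N x 6 interleaved array
};

// Keeps every frame on screen during [t1, t1 + tP] and releases it afterwards.
// Assumes frames are pushed in arrival order, so the oldest frame is at the front.
class FrameWindow {
public:
    explicit FrameWindow(double displaySeconds) : tP_(displaySeconds) {
        assert(displaySeconds > 0.0);
    }

    void push(double t1, std::vector<float> vertices) {
        assert(frames_.empty() || t1 >= frames_.back().receivedAt);
        frames_.push_back({t1, std::move(vertices)});
    }

    // Call before each repaint: frames whose window has ended are removed and their memory freed.
    void prune(double now) {
        while (!frames_.empty() && now > frames_.front().receivedAt + tP_)
            frames_.pop_front();
    }

    std::size_t visibleFrames() const { return frames_.size(); }
    const std::deque<TimedFrame>& frames() const { return frames_; }

private:
    double tP_;
    std::deque<TimedFrame> frames_;
};
```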

The single-frame data in step S3 refers to the data obtained in one scanning period of the lidar.

The single-frame target extraction flowchart in Fig. 2 works as follows. A loop traverses all points in one frame of point cloud data and judges whether each point belongs to the background. If it does, the loop moves to the next point; if it does not, the voxel containing the point is placed in the target candidate pool. All targets in the frame are then segmented with the "voxel connection method". Step S3 specifically includes:

S31. Establish voxels;

S32. Obtain background data;

S33. Identify targets;

S34. Confirm targets.

Step S31 is specifically as follows. Set the background sampling time t_s = 5 s; during [0, t_s] only the background point cloud is present. First obtain the maximum absolute coordinate values of the background point cloud along the X, Y, and Z axes, rounded up if they are not integers, and denote them x_m, y_m, z_m (in meters). A cuboid enclosing the entire current point cloud can then be established in the spatial Cartesian coordinate system, with ranges [-x_m, x_m], [-y_m, y_m], [-z_m, z_m]. Cubic voxels with an edge length of 0.1 m (the precision is adjustable) are then established, dividing the point cloud space into (20·x_m)·(20·y_m)·(20·z_m) voxels.

Step S32 is specifically as follows. Count the number N_s of scan points falling into a given voxel within t_s, and select the maximum reflectance r_max and the minimum reflectance r_min among these N_s points. The background reflectance interval of that voxel is then [r_min, r_max]. The reflectance intervals of all voxels in the enclosing cuboid are recorded in the same way and can be stored in computer memory as voxel attributes.

The condition for identifying a target in step S33 is as follows. After background acquisition is complete, a moving target that appears is illuminated by the laser and produces echoes. When single-frame echo data satisfies either of the following conditions, it is judged to be a target.

(1) The position p_i(x_i, y_i, z_i) does not belong to any voxel. In this case, the enclosing cuboid should be expanded according to the target position coordinates so that it fully contains the target point cloud;

(2) The position p_i(x_i, y_i, z_i) of the target point belongs to a voxel, but its reflectance r_i is not within the background reflectance interval of that voxel.
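Steps S31 to S33 can be sketched as follows. This is a minimal standard-library version under several assumptions:

- Voxels are addressed by a linear integer index.
- Background intervals are stored sparsely in a hash map rather than as an attribute of every voxel.
- A voxel that received no background return is treated as having an empty interval, so any point falling in it counts as a target.
- The cuboid expansion required by condition (1) is not implemented; such points are only flagged.

LidarPoint, VoxelGrid, BackgroundModel and their members are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <unordered_map>

struct LidarPoint { float x, y, z, r; };  // position (m) and reflectance

// Enclosing cuboid [-xm, xm] x [-ym, ym] x [-zm, zm] split into cubes of edge 'cell' (m).
struct VoxelGrid {
    double xm, ym, zm, cell;

    long cellsAlong(double halfExtent) const { return std::lround(2.0 * halfExtent / cell); }
    long nx() const { return cellsAlong(xm); }  // 20 * xm when cell = 0.1 m
    long ny() const { return cellsAlong(ym); }
    long nz() const { return cellsAlong(zm); }

    // Linear voxel index of p; returns false if p lies outside the cuboid.
    bool index(const LidarPoint& p, long& key) const {
        if (std::fabs(p.x) > xm || std::fabs(p.y) > ym || std::fabs(p.z) > zm) return false;
        auto axis = [this](double v, double half, long n) {
            long i = static_cast<long>(std::floor((v + half) / cell));
            return std::min(i, n - 1);  // a point exactly on +half goes into the last cell
        };
        key = (axis(p.x, xm, nx()) * ny() + axis(p.y, ym, ny())) * nz() + axis(p.z, zm, nz());
        return true;
    }
};

struct Interval { float rmin, rmax; };

// Per-voxel background reflectance intervals (S32) and the target test (S33).
class BackgroundModel {
public:
    explicit BackgroundModel(const VoxelGrid& grid) : grid_(grid) {}

    // Called for every point received during the sampling time [0, ts].
    void addBackgroundPoint(const LidarPoint& p) {
        long key = 0;
        if (!grid_.index(p, key)) return;
        auto it = intervals_.find(key);
        if (it == intervals_.end()) {
            intervals_.emplace(key, Interval{p.r, p.r});
        } else {
            it->second.rmin = std::min(it->second.rmin, p.r);
            it->second.rmax = std::max(it->second.rmax, p.r);
        }
    }

    // True if p meets condition (1) or (2) of S33.
    bool isTargetPoint(const LidarPoint& p) const {
        long key = 0;
        if (!grid_.index(p, key)) return true;                  // (1) outside every voxel
        auto it = intervals_.find(key);
        if (it == intervals_.end()) return true;                // empty background interval
        return p.r < it->second.rmin || p.r > it->second.rmax;  // (2) outside [rmin, rmax]
    }

private:
    VoxelGrid grid_;
    std::unordered_map<long, Interval> intervals_;  // sparse: only voxels that saw background
};
```

The sparse map keeps memory proportional to the occupied voxels. This can matter because the full grid grows with the cube of the inverse cell size.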

Step S34 is specifically as follows. The point cloud information identified from the background may represent multiple targets, so it must be segmented effectively; segmentation is based on whether the voxels containing target points are connected. Multiple targets are extracted with the "voxel connection method" below, following the target segmentation flowchart in Fig. 3. First, a loop selects a point in the single-frame candidate pool blist and checks whether a bright cell adjoins it. If not, the loop moves to the next point. If so, the bright cell is moved from the candidate pool blist to the target pool blist_0. blist_0 is then traversed, and every bright cell found is added to it, until blist_0 stops growing; at that point the voxels of target 0 have been extracted. Finally, the loop checks whether blist is empty. If not, it returns to the start; if so, target segmentation ends.

具体步骤为:The specific steps are:

S341、对于外包长方体,将所有包含目标点云的体素记为“亮格”,以QVector3D类型的变量保存各亮格的中心点坐标,计入到QList<QVector3D>类型的对象blist中;作为备选池,blist即表示目标全部点云所在的亮格序列;S341. For the outsourcing cuboid, record all voxels containing the target point cloud as "bright grid", save the center point coordinates of each bright grid with a variable of QVector3D type, and count them into the object blist of QList<QVector3D> type; as Alternative pool, blist is the bright grid sequence where all point clouds of the target are located;

S342、选取blist中任意一点m0(x0,y0,z0),它是体素M0的中心;与体素M0共面的体素数为6,与M0每1条棱共棱的立方体数为1,因此与M0交接的其他体素数量为18,记各相邻的体素为M0i(i=0,1,2,…17);S342. Select any point m0 (x0 , y0 , z0 ) in the blist, which is the center of the voxel M0 ; the number of voxels coplanar with the voxel M0 is 6, and each edge of the voxel M0 shares The number of cubes on an edge is 1, so the number of other voxels connected with M0 is 18, and each adjacent voxel is recorded as M0i (i=0, 1, 2,...17);

S343、根据M0i与M0的相对位置关系(ui,vi,wi),计算各相邻体素的中心坐标m0i(x0+ui,y0+vi,z0+wi);S343. Calculate the center coordinatesm 0i(x0 +ui , y0+v i,z0 + wi );

S344、在blist中寻找m0i,若存在,则存入目标0的中心点数组blist_0中,数据类型仍为QList<QVector3D>;为防止重复查找,需将m0i从blist中删除;换言之,将m0i从备选池blist移入目标池blist_0中;S344. Search for m0i in blist, if it exists, store it in the center point array blist_0 of target 0, and the data type is still QList<QVector3D>; in order to prevent repeated searching, m0i needs to be deleted from blist; in other words, m0i is moved from the candidate pool blist to the target pool blist_0;

S345. For the first element m01 of blist_0, find its 18 adjacent voxels and compute their centers m01i(x01 + ui, y01 + vi, z01 + wi) (i = 0, 1, 2, …, 17). Each m01i that is found in blist is appended to blist_0 and deleted from blist. Every element of blist_0 is traversed in the same way, and blist_0 keeps growing during the traversal, so bright cells belonging to the current target continue to be added.

S346. When the traversal ends, that is, when blist_0 no longer grows, the layer-by-layer selection of bright cells starting from voxel M0 is complete, and blist_0 contains all the bright cells of target 0.

S347. Check the number of elements remaining in blist. If it is 0, there is only one target, and its bright cells are exactly those in blist_0. If it is greater than 0, further targets remain; steps S342 to S347 are then repeated on blist to extract targets blist_1, blist_2, …, blist_N layer by layer, until the candidate pool blist is empty, which indicates that all targets have been extracted.
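Steps S342 to S347 amount to connected-component labelling under 18-connectivity, and can be sketched as the loop below. It reuses the integer-index representation assumed earlier. One point the text leaves open is a selected cell with no bright neighbor ("go to the next point"); if such cells stayed in blist the loop could never empty it, so this sketch assumes an isolated bright cell is discarded as noise (treating it as a one-cell target would be an equally plausible reading). The seed m0 itself is not added directly: it enters the target pool when one of its neighbors is traversed, as in S345.

```python
from itertools import product

VoxelIndex = tuple[int, int, int]

# 18-neighborhood: face neighbors (one non-zero offset) and edge neighbors (two).
OFFSETS_18 = [o for o in product((-1, 0, 1), repeat=3) if sum(map(abs, o)) in (1, 2)]


def segment_targets(blist: set[VoxelIndex]) -> list[list[VoxelIndex]]:
    """Split the candidate pool into connected targets blist_0, blist_1, ..., blist_N."""
    pool = set(blist)  # candidate pool, copied so the caller's set is not modified
    targets: list[list[VoxelIndex]] = []
    while pool:                      # S347: repeat until the candidate pool is empty
        m0 = next(iter(pool))        # S342: select any point in the pool
        target: list[VoxelIndex] = []  # target pool blist_k
        # S343-S344: move every bright neighbor of m0 into the target pool.
        for u, v, w in OFFSETS_18:
            m = (m0[0] + u, m0[1] + v, m0[2] + w)
            if m in pool:
                pool.remove(m)
                target.append(m)
        if not target:
            pool.remove(m0)          # assumption: isolated bright cell dropped as noise
            continue
        # S345-S346: traverse the target pool while it keeps growing.
        i = 0
        while i < len(target):
            c = target[i]
            for u, v, w in OFFSETS_18:
                m = (c[0] + u, c[1] + v, c[2] + w)
                if m in pool:
                    pool.remove(m)
                    target.append(m)
            i += 1
        targets.append(target)
    return targets
```

Two voxels that touch only at a corner, such as (0, 0, 0) and (1, 1, 1), are not connected under this rule, whereas (5, 5, 5) and (6, 6, 5), which share an edge, are.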

Step S4 specifically comprises:

S41. From the bright-cell array of each target in the current frame, record the center-point position Targeti of each target.

S42. Obtain the bright-cell arrays of the targets in the next frame and record each target's center-point position Targetj. Correlation analysis is then performed between the bright-cell arrays of the two consecutive frames: by exhaustive traversal, the next-frame array most strongly correlated with a given target of the previous frame is found, and the two arrays are taken to belong to the same target, which achieves target tracking. Specifically, the bright-cell sequence blist_0i of target 0 in the previous frame is taken as the reference and compared with each target's bright-cell sequence in the next frame. Because the frame interval is very short (0.1 s), the next-frame sequence that shares the most elements with blist_0i is identified as the same target. The inter-frame correlation analysis is completed in the same way for every target in the previous frame.
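The overlap-based matching of S42 can be sketched as below, taking "number of shared bright cells" as the correlation measure, as the text describes. Returning `None` when a previous target shares no cell with any next-frame target is an assumption, as is the plain per-target maximum: the text does not say how to resolve two previous targets claiming the same next-frame target, and this sketch does not try to.

```python
def associate(prev_targets, next_targets):
    """For each previous-frame target, return the index of the next-frame target
    that shares the most bright cells with it, or None if none shares any."""
    next_sets = [set(t) for t in next_targets]
    matches = []
    for prev in prev_targets:
        prev_set = set(prev)
        best, best_overlap = None, 0
        for j, nxt in enumerate(next_sets):
            overlap = len(prev_set & nxt)  # repeated elements between the two sequences
            if overlap > best_overlap:
                best, best_overlap = j, overlap
        matches.append(best)
    return matches
```

The approach relies on the short 0.1 s frame interval: a target moves less than a voxel or two between scans, so its bright cells in consecutive frames overlap heavily.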

S43. Compute the spatial distance between the center points Targeti and Targetj of the same target in the two frames; dividing this distance by the frame interval gives the target's speed.
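S41 and S43 can be sketched as follows. The section does not say how a target's center point is derived from its bright cells, so taking the mean of the bright-cell centers is an assumption here. The speed is the Euclidean distance between Targeti and Targetj divided by the frame interval, 0.1 s per S42, giving m/s if coordinates are in meters. The names `center`, `speed` and `FRAME_INTERVAL_S` are hypothetical.

```python
import math

FRAME_INTERVAL_S = 0.1  # frame interval quoted in S42


def center(cells):
    """Target center point, assumed to be the mean of its bright-cell centers."""
    n = len(cells)
    return tuple(sum(c[k] for c in cells) / n for k in range(3))


def speed(target_i, target_j, dt=FRAME_INTERVAL_S):
    """Speed = distance between the two center points / frame interval."""
    return math.dist(target_i, target_j) / dt
```

For instance, a center that moves 0.5 m between consecutive frames corresponds to about 5 m/s.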

S44. Make the next frame the current frame. When the following frame arrives, repeat steps S41, S42 and S43 to complete the iteration, so that each target's speed is updated once per lidar scan period.

In summary, the lidar target demonstration and extraction method in the Qt development environment comprises: creating a ROS subscriber node in Qt to obtain point cloud data; dynamically displaying a colored point cloud with Qt's OpenGL module; building a voxel model and obtaining the background reflectivity interval; identifying the voxels occupied by targets in a single frame; segmenting targets with the "voxel connection method"; and tracking targets through inter-frame correlation. The method subscribes in ROS to the messages published by the lidar sensor to obtain three-dimensional point cloud data, draws and renders a three-dimensional colored point cloud model with OpenGL, uses the "voxel connection method" to segment and extract multiple targets in a single frame, and tracks targets and measures their speed in real time by comparing the correlation of target voxels between frames.

The embodiments above do not limit the present invention, and the invention is not restricted to the examples given. Changes, modifications, additions or substitutions made by those skilled in the art within the scope of the technical solution of the present invention also fall within its scope of protection.

Claims (10)

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202310002862.7A (CN116091533B) | 2023-01-03 | 2023-01-03 | Laser radar target demonstration and extraction method in Qt development environment |

Applications Claiming Priority (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202310002862.7A (CN116091533B) | 2023-01-03 | 2023-01-03 | Laser radar target demonstration and extraction method in Qt development environment |

Publications (2)

| Publication Number | Publication Date |
|---|---|
| CN116091533A | 2023-05-09 |
| CN116091533B | 2024-05-31 |

Family ID: 86205760

Family Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202310002862.7A (Active, CN116091533B) | 2023-01-03 | 2023-01-03 | Laser radar target demonstration and extraction method in Qt development environment |

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN116091533B (en) |

Citations (15)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| US20130222369A1 (en)* | 2012-02-23 | 2013-08-29 | Charles D. Huston | System and Method for Creating an Environment and for Sharing a Location Based Experience in an Environment |
| WO2019023892A1 (en)* | 2017-07-31 | 2019-02-07 | SZ DJI Technology Co., Ltd. | Correction of motion-based inaccuracy in point clouds |
| CN110210389A (en)* | 2019-05-31 | 2019-09-06 | 东南大学 | A kind of multi-targets recognition tracking towards road traffic scene |
| CN110264468A (en)* | 2019-08-14 | 2019-09-20 | 长沙智能驾驶研究院有限公司 | Point cloud data mark, parted pattern determination, object detection method and relevant device |
| US20200043182A1 (en)* | 2018-07-31 | 2020-02-06 | Intel Corporation | Point cloud viewpoint and scalable compression/decompression |
| CN110853037A (en)* | 2019-09-26 | 2020-02-28 | 西安交通大学 | A lightweight color point cloud segmentation method based on spherical projection |
| US20200074230A1 (en)* | 2018-09-04 | 2020-03-05 | Luminar Technologies, Inc. | Automatically generating training data for a lidar using simulated vehicles in virtual space |
| CN111476822A (en)* | 2020-04-08 | 2020-07-31 | 浙江大学 | Laser radar target detection and motion tracking method based on scene flow |
| CN111781608A (en)* | 2020-07-03 | 2020-10-16 | 浙江光珀智能科技有限公司 | Moving target detection method and system based on FMCW laser radar |
| CN113075683A (en)* | 2021-03-05 | 2021-07-06 | 上海交通大学 | Environment three-dimensional reconstruction method, device and system |
| CN114419152A (en)* | 2022-01-14 | 2022-04-29 | 中国农业大学 | A method and system for target detection and tracking based on multi-dimensional point cloud features |
| CN114746872A (en)* | 2020-04-28 | 2022-07-12 | 辉达公司 | Model predictive control techniques for autonomous systems |
| CN114862901A (en)* | 2022-04-26 | 2022-08-05 | 青岛慧拓智能机器有限公司 | Road-end multi-source sensor fusion target sensing method and system for surface mine |
| CN115032614A (en)* | 2022-05-19 | 2022-09-09 | 北京航空航天大学 | Bayesian optimization-based solid-state laser radar and camera self-calibration method |
| CN115330923A (en)* | 2022-08-10 | 2022-11-11 | 小米汽车科技有限公司 | Point cloud data rendering method and device, vehicle, readable storage medium and chip |



Non-Patent Citations (5)

| Title |
|---|
| ARASH KIANI: "Point Cloud Registration of Tracked Objects and Real-time Visualization of LiDAR Data on Web and Web VR", 《MASTER'S THESIS IN INFORMATICS》, 15 May 2020 (2020-05-15), pages 1 - 56* |
| 吴开阳: "基于激光雷达传感器的三维多目标检测与跟踪技术研究", 《中国优秀硕士学位论文全文数据库 信息科技辑》, no. 2022, 15 June 2022 (2022-06-15), pages 136 - 366* |
| 吴阳勇 等: "Qt与MATLAB混合编程设计雷达信号验证软件", 《电子测量技术》, vol. 43, no. 22, 23 November 2020 (2020-11-23), pages 13 - 18* |
| 石泽亮: "移动机器人视觉伺服操作臂控制方法研究", 《中国优秀硕士学位论文全文数据库信息科技辑》, no. 2022, 15 November 2022 (2022-11-15), pages 140 - 111* |
| 赵次郎: "基于激光视觉数据融合的三维场景重构与监控", 《中国优秀硕士学位论文全文数据库 信息科技辑》, no. 2015, 15 July 2015 (2015-07-15), pages 138 - 1060* |

Also Published As

| Publication number | Publication date |
|---|---|
| CN116091533B (en) | 2024-05-31 |


Legal Events

| Date | Code | Title | Description |
|---|---|---|---|
|  | PB01 | Publication |  |
|  | PB01 | Publication |  |
|  | SE01 | Entry into force of request for substantive examination |  |
|  | SE01 | Entry into force of request for substantive examination |  |
|  | GR01 | Patent grant |  |
|  | GR01 | Patent grant |  |