CN115590504A - Motion evaluation method and device, electronic equipment and storage medium - Google Patents


- Publication number

- CN115590504A (application CN202211224136.1A)

- Authority

- CN

- China

- Prior art keywords

- image frame

- action

- actions

- motion

- test object

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Granted

Classifications

- A—HUMAN NECESSITIES

- A61—MEDICAL OR VETERINARY SCIENCE; HYGIENE

- A61B—DIAGNOSIS; SURGERY; IDENTIFICATION

- A61B5/00—Measuring for diagnostic purposes; Identification of persons

- A61B5/103—Measuring devices for testing the shape, pattern, colour, size or movement of the body or parts thereof, for diagnostic purposes

- A61B5/11—Measuring movement of the entire body or parts thereof, e.g. head or hand tremor or mobility of a limb

- A61B5/1116—Determining posture transitions

- A—HUMAN NECESSITIES

- A61—MEDICAL OR VETERINARY SCIENCE; HYGIENE

- A61B—DIAGNOSIS; SURGERY; IDENTIFICATION

- A61B5/00—Measuring for diagnostic purposes; Identification of persons

- A61B5/103—Measuring devices for testing the shape, pattern, colour, size or movement of the body or parts thereof, for diagnostic purposes

- A61B5/11—Measuring movement of the entire body or parts thereof, e.g. head or hand tremor or mobility of a limb

- A61B5/1118—Determining activity level

- A—HUMAN NECESSITIES

- A61—MEDICAL OR VETERINARY SCIENCE; HYGIENE

- A61B—DIAGNOSIS; SURGERY; IDENTIFICATION

- A61B2503/00—Evaluating a particular growth phase or type of persons or animals

- A61B2503/10—Athletes

- A—HUMAN NECESSITIES

- A61—MEDICAL OR VETERINARY SCIENCE; HYGIENE

- A61B—DIAGNOSIS; SURGERY; IDENTIFICATION

- A61B2503/00—Evaluating a particular growth phase or type of persons or animals

- A61B2503/12—Healthy persons not otherwise provided for, e.g. subjects of a marketing survey

- A—HUMAN NECESSITIES

- A61—MEDICAL OR VETERINARY SCIENCE; HYGIENE

- A61B—DIAGNOSIS; SURGERY; IDENTIFICATION

- A61B2505/00—Evaluating, monitoring or diagnosing in the context of a particular type of medical care

- A61B2505/09—Rehabilitation or training

Landscapes

- Health & Medical Sciences (AREA)

- Life Sciences & Earth Sciences (AREA)

- Engineering & Computer Science (AREA)

- Medical Informatics (AREA)

- Physics & Mathematics (AREA)

- Dentistry (AREA)

- Biophysics (AREA)

- Pathology (AREA)

- Physiology (AREA)

- Biomedical Technology (AREA)

- Heart & Thoracic Surgery (AREA)

- Oral & Maxillofacial Surgery (AREA)

- Molecular Biology (AREA)

- Surgery (AREA)

- Animal Behavior & Ethology (AREA)

- General Health & Medical Sciences (AREA)

- Public Health (AREA)

- Veterinary Medicine (AREA)

- Image Analysis (AREA)

Abstract

Description

Translated from Chinese

Technical field

The present application relates to the technical field of computer vision, and in particular to a motion evaluation method and device, electronic equipment, and a storage medium.

Background

People attach increasing importance to physical health, and public awareness of fitness keeps growing. In daily exercise, the most common exercises include sit-ups and push-ups. Exercise is usually evaluated by counting repetitions, which gives the person exercising a score and helps motivate them.

In the prior art, a human counter can tally repetitions by watching the person exercise, but manual counting is highly subjective and error-prone, which reduces evaluation accuracy. Alternatively, infrared receiving equipment can be installed in the test area to implement photoelectric counting, but photoelectric counting is easily disturbed by obstructions and cannot judge whether a movement was performed correctly, which also reduces evaluation accuracy.

Therefore, how to improve the accuracy of motion evaluation is a technical problem that those skilled in the art urgently need to solve.

Summary of the invention

In view of the defects and deficiencies of the prior art described above, the present application proposes a motion evaluation method and device, electronic equipment, and a storage medium that can improve the accuracy of motion evaluation.

A first aspect of the present application provides a motion evaluation method, including:

classifying the action attributes of the image frames of a motion video of a test subject, and determining the action attribute corresponding to each image frame, where the action attribute corresponding to an image frame represents the posture of the test subject shown in that image frame;

determining, according to the action attribute corresponding to each image frame, whether each set of actions performed by the test subject is compliant, to obtain a compliance evaluation result for each set of actions; and

determining the motion evaluation result for the test subject according to the compliance evaluation result for each set of actions.

A second aspect of the present application provides a motion evaluation device, including:

an action attribute classification module, configured to classify the action attributes of the image frames of a motion video of a test subject and determine the action attribute corresponding to each image frame, where the action attribute corresponding to an image frame represents the posture of the test subject shown in that image frame;

an action compliance analysis module, configured to determine, according to the action attribute corresponding to each image frame, whether each set of actions performed by the test subject is compliant, to obtain a compliance evaluation result for each set of actions; and

a motion evaluation module, configured to determine the motion evaluation result for the test subject according to the compliance evaluation result for each set of actions.

A third aspect of the present application provides an electronic device, including a memory and a processor,

where the memory is connected to the processor and is configured to store a program; and

the processor is configured to implement the above motion evaluation method by running the program in the memory.

A fourth aspect of the present application provides a storage medium on which a computer program is stored. When the computer program is executed by a processor, the above motion evaluation method is implemented.

The motion evaluation method proposed by the present application classifies the action attributes of the image frames of a motion video of a test subject and determines the action attribute corresponding to each image frame, where the action attribute represents the posture of the test subject shown in that frame. According to these action attributes, it determines whether each set of actions performed by the test subject is compliant and obtains a compliance evaluation result for each set. From the compliance evaluation results it then determines the motion evaluation result for the test subject. With this technical solution, the image frames captured during a test are analyzed to determine whether the test subject's actions are compliant, and actions are counted according to the compliance evaluation results. This is more accurate than manual evaluation. It also evaluates not only the number of actions but also their form, which improves the accuracy of both motion evaluation and action evaluation.

附图说明Description of drawings

为了更清楚地说明本申请实施例或现有技术中的技术方案,下面将对实施例或现有技术描述中所需要使用的附图作简单地介绍,显而易见地,下面描述中的附图仅仅是本申请的实施例,对于本领域普通技术人员来讲,在不付出创造性劳动的前提下,还可以根据提供的附图获得其他的附图。In order to more clearly illustrate the technical solutions in the embodiments of the present application or the prior art, the following will briefly introduce the drawings that need to be used in the description of the embodiments or the prior art. Obviously, the accompanying drawings in the following description are only It is an embodiment of the present application, and those skilled in the art can also obtain other drawings according to the provided drawings without creative work.

图1是本申请实施例提供的一种运动评测方法的流程示意图;FIG. 1 is a schematic flow chart of a motion evaluation method provided in an embodiment of the present application;

图2是本申请实施例提供的获取各图像帧中测试对象的骨骼点坐标的处理流程示意图;Fig. 2 is a schematic diagram of the processing flow for obtaining the coordinates of the skeletal points of the test object in each image frame provided by the embodiment of the present application;

图3是本申请实施例提供的对比候选对象与骨骼点常模的处理流程示意图;Fig. 3 is a schematic diagram of the processing flow for comparing candidate objects and skeletal point norms provided by the embodiment of the present application;

图4是本申请实施例提供的确定各图像帧对应的动作属性的处理流程示意图;FIG. 4 is a schematic diagram of the processing flow for determining the action attribute corresponding to each image frame provided by the embodiment of the present application;

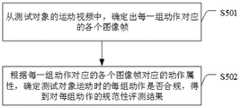

图5是本申请实施例提供的确定每组动作的规范性评测结果的处理流程示意图;Fig. 5 is a schematic diagram of the processing flow for determining the normative evaluation results of each group of actions provided by the embodiment of the present application;

图6是本申请实施例提供的确定每一组动作对应的各个图像帧的处理流程示意图;FIG. 6 is a schematic diagram of the processing flow for determining each image frame corresponding to each group of actions provided by the embodiment of the present application;

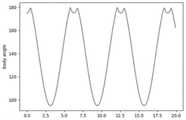

图7是本申请实施例提供的修正前的身体弯曲角度变化曲线图;Fig. 7 is a curve diagram of body bending angle change before correction provided by the embodiment of the present application;

图8是本申请实施例提供的身体未躺平时身体弯曲角度示意图;Fig. 8 is a schematic diagram of the bending angle of the body when the body is not lying down provided by the embodiment of the present application;

图9是本申请实施例提供的身体躺平时身体弯曲角度示意图;Fig. 9 is a schematic diagram of the bending angle of the body when the body is lying down provided by the embodiment of the present application;

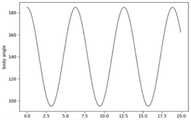

图10是本申请实施例提供的修正后的身体弯曲角度变化曲线图;Fig. 10 is a curve diagram of the body bending angle after correction provided by the embodiment of the present application;

图11是本申请实施例提供的运动状态机的工作流程示意图;FIG. 11 is a schematic diagram of the workflow of the motion state machine provided by the embodiment of the present application;

图12是本申请实施例提供的一种运动评测装置的结构示意图;Fig. 12 is a schematic structural diagram of a motion evaluation device provided by an embodiment of the present application;

图13是本申请实施例提供的一种电子设备的结构示意图。FIG. 13 is a schematic structural diagram of an electronic device provided by an embodiment of the present application.

Detailed description

The technical solutions of the embodiments of the present application apply to motion evaluation scenarios. With these solutions, actions are counted according to their compliance evaluation results, which is more accurate than manual evaluation. The solutions evaluate not only the number of actions but also their form, which improves the accuracy of both motion evaluation and action evaluation.

In daily exercise, evaluating a person's exercise can motivate them and verify their results. For common exercises with simple movements, such as sit-ups and push-ups, the prior art usually relies on manual counting, photoelectric counting, or terminal IMU counting.

In manual counting, a human counter watches whether the tester's actions during the exercise are standard. If every action in the set corresponding to one motion cycle is standard, the counter adds one to the count. Manual counting is time-consuming and labor-intensive, highly subjective, and easy to cheat, and minor violations are hard to notice, so both the accuracy and the efficiency of the evaluation are low.

Photoelectric counting uses sensors such as infrared sensors. Infrared transmitters and receivers are installed on both sides of the test area, the on/off state of each receiver indicates whether its beam is blocked, and repetitions are counted from the cycle of on/off states. For example, in photoelectric counting of sit-ups, when the tester lies flat, the tester's body blocks the lower infrared sensor, and the interruption of the lower beam indicates the lying state. When the tester sits up, the body blocks the upper infrared sensor, and the interruption of the upper beam indicates the sitting state. When the receivers on both sides receive their infrared signals, the tester is judged to be in an intermediate state. The cycle of on/off states determines the period of each set of sit-up actions, which allows the sit-ups to be counted. However, infrared sensing is easily disturbed by obstructions, which affects the count. It can also only count the motion cycles it senses and cannot judge whether each set of actions is compliant, so the accuracy of the evaluation is low.

In terminal IMU counting, the tester wears an IMU (inertial measurement unit) device, such as an accelerometer or a gyroscope, which records sensor data such as the tester's acceleration (accelerometer) and/or angular velocity (gyroscope). The periodically varying data is divided into periods to obtain the motion cycles, which are then counted. This method also cannot check whether actions are compliant, so the accuracy of the evaluation is low.

In view of the above deficiencies of the prior art and the low accuracy of existing motion evaluation, the inventors of the present application, after research and experiments, propose a motion evaluation method. The method evaluates the compliance of the test subject's actions and counts actions according to the compliance evaluation results. It therefore evaluates both the number of actions and their form, which improves the accuracy of motion evaluation.

The technical solutions in the embodiments of the present application are described clearly and completely below with reference to the drawings. The described embodiments are only some, not all, of the embodiments of the present application. All other embodiments obtained by those of ordinary skill in the art based on these embodiments without creative effort fall within the scope of protection of the present application.

An embodiment of the present application provides a motion evaluation method. As shown in FIG. 1, the method includes:

S101. Classify the action attributes of the image frames of a motion video of the test subject, and determine the action attribute corresponding to each image frame.

Specifically, a video of the test subject is captured during the evaluation to obtain the subject's motion video, and image frames are extracted from that video. Classifying the action attributes of each extracted image frame yields the posture of the test subject shown in that frame, that is, the action attribute corresponding to the frame. In this embodiment, either every image frame of the motion video is extracted and classified, or an extraction interval is set. For example, with an extraction interval of 50 ms, one image frame is extracted from the motion video every 50 ms. In this embodiment, an RGB camera is preferably used to capture the motion video of the test subject.
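The two extraction options described above (every frame, or one frame per fixed interval) can be sketched as an index computation. This is a minimal sketch, not the patent's implementation: the function name `sample_frame_indices` and its parameters are hypothetical, and it assumes a constant frame rate.

```python
from typing import List, Optional


def sample_frame_indices(
    total_frames: int, fps: float, interval_ms: Optional[float] = None
) -> List[int]:
    """Return the indices of the frames to extract from a video.

    With interval_ms=None every frame is used; otherwise the frame nearest
    to each multiple of interval_ms is used (assumes a constant frame rate).
    """
    if total_frames <= 0 or fps <= 0:
        return []
    if interval_ms is None:
        return list(range(total_frames))
    if interval_ms <= 0:
        raise ValueError("interval_ms must be positive")

    indices: List[int] = []
    k = 0
    while True:
        idx = round(k * interval_ms / 1000.0 * fps)
        if idx >= total_frames:
            break
        # Skip duplicates when the interval is shorter than one frame period.
        if not indices or idx != indices[-1]:
            indices.append(idx)
        k += 1
    return indices
```

For a 40 fps video, an interval of 50 ms corresponds to every second frame. The returned indices could then be passed to whatever video decoder is in use.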

In this embodiment, the action attribute corresponding to an image frame represents the posture of the test subject shown in that frame. For example, in a motion video captured during a sit-up evaluation, the action attribute may include at least one of: a knee-touch state, a knee-bend state, a head-holding state, a lying-flat state, and the orientation of the test subject. In a motion video captured during a push-up evaluation, the action attribute may include at least one of: an elbow-bend state, a straight-arm state, a straight-back state, and the orientation of the test subject.

In this embodiment, the test subject is first identified in the image frames of the motion video, and several target points are selected on the test subject. The posture of the test subject can be derived from the positions of the target points, which allows the subject's action attributes to be analyzed. The target points are mainly joints or body parts whose positions change noticeably as the test subject performs the actions of the exercise. The change of the target points across the motion video indicates the change of action, and the positions of the target points in an image frame indicate the test subject's current posture.

Further, this step specifically includes:

First, perform human skeleton point detection on each image frame of the test subject's motion video to obtain the skeleton point coordinates of the test subject in each image frame.

In this embodiment, human skeleton keypoint detection is applied to the image frames of the motion video to locate the human skeleton points and determine their coordinates, which gives the skeleton point coordinates of the test subject in each image frame. The detected skeleton points may include any of: top of the head, tip of the nose, left ear, right ear, chin, neck, left shoulder, right shoulder, left elbow, right elbow, left wrist, right wrist, left metacarpophalangeal joint, right metacarpophalangeal joint, left fingertip, right fingertip, left hip, right hip, left knee, right knee, left ankle, right ankle, left toe tip, right toe tip, left heel, and right heel.

Second, classify the action attributes of the test subject in each image frame according to the subject's skeleton point coordinates in that frame, and determine the action attribute corresponding to each image frame.

Once the skeleton point coordinates of the test subject in each image frame are available, analyzing them determines the posture of the test subject, which classifies the action attribute and gives the action attribute corresponding to each image frame. For example, for sit-ups, the analysis can determine at least one of: whether the test subject touches the knees, whether the knees are bent, whether the head is held, whether the subject is lying flat, and whether the subject faces left or right.

For sit-ups, the skeleton point coordinates of the left and right elbows and the left and right knees can be used to analyze the knee-touch state; those of the left and right hips, knees, and ankles to analyze the knee-bend state; those of the top of the head, tip of the nose, left and right ears, chin, neck, and the left and right shoulders, elbows, wrists, metacarpophalangeal joints, and fingertips to analyze the head-holding state; those of the left and right shoulders, hips, ankles, toe tips, and heels to analyze the lying-flat state; and those of the top of the head and the left and right ankles, toe tips, and heels to analyze the orientation.
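As a rough illustration of how an attribute could be derived from skeleton point coordinates, the sketch below records which skeleton points feed each sit-up attribute (following the grouping above) and tests knee flexion through the hip-knee-ankle angle. The key names, the `joint_angle` and `is_knee_bent` helpers, and the 120° threshold are assumptions for illustration; the text does not state how each attribute is computed.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def _pair(name: str) -> List[str]:
    """Left and right variants of a skeleton point name (hypothetical naming)."""
    return [f"left_{name}", f"right_{name}"]


# Skeleton points used per sit-up attribute, as grouped in the description.
ATTRIBUTE_KEYPOINTS: Dict[str, List[str]] = {
    "knee_touch": _pair("elbow") + _pair("knee"),
    "knee_bend": _pair("hip") + _pair("knee") + _pair("ankle"),
    "head_hold": ["head_top", "nose_tip", "chin", "neck"] + _pair("ear")
    + _pair("shoulder") + _pair("elbow") + _pair("wrist")
    + _pair("metacarpophalangeal") + _pair("fingertip"),
    "lying_flat": _pair("shoulder") + _pair("hip") + _pair("ankle")
    + _pair("toe_tip") + _pair("heel"),
    "orientation": ["head_top"] + _pair("ankle") + _pair("toe_tip") + _pair("heel"),
}


def joint_angle(a: Point, b: Point, c: Point) -> float:
    """Angle in degrees at vertex b formed by the segments b-a and b-c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1, n2 = math.hypot(*v1), math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        raise ValueError("coincident points: angle is undefined")
    cos = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))


def is_knee_bent(skeleton: Dict[str, Point], side: str = "left",
                 max_angle_deg: float = 120.0) -> bool:
    """Assumed rule: the knee counts as bent if the hip-knee-ankle angle
    is at most max_angle_deg (the threshold is an illustrative choice)."""
    angle = joint_angle(skeleton[f"{side}_hip"], skeleton[f"{side}_knee"],
                        skeleton[f"{side}_ankle"])
    return angle <= max_angle_deg
```

A real classifier would likely combine both body sides and handle missing or low-confidence keypoints; this sketch ignores both concerns.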

S102. According to the action attribute corresponding to each image frame, determine whether each set of actions performed by the test subject is compliant, and obtain a compliance evaluation result for each set of actions.

Specifically, in this embodiment, the actions of the test subject are analyzed for periodicity according to the subject's skeleton point coordinates in each image frame, where each period corresponds to one set of actions. This identifies the image frames that belong to each set of actions in the motion video. Each set is then judged for compliance according to the action attributes of its image frames, which gives the compliance evaluation result for each set of actions.

In sit-ups, one period runs from lying flat to sitting upright and back to lying flat. Checking the compliance of a sit-up requires detecting whether the knees are touched between lying flat and sitting upright, whether the head is held between lying flat and touching the knees, whether the knees are bent between lying flat and touching the knees, and whether the subject is lying flat when the set begins. The compliance evaluation result for each set records the violations in that set. For example, if the knee-touch state in the action attributes of every image frame between lying flat and sitting upright indicates that the knees were not touched, the compliance evaluation result for that set includes a no-knee-touch violation. Depending on the analysis of the action attributes of the image frames in each set, the compliance evaluation result may also include a knees-not-bent violation, a not-lying-flat violation, a head-not-held violation, and so on.
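The sit-up checks above can be sketched as a function over the per-frame attributes of one set. This is an illustrative sketch under stated assumptions: the attribute keys and violation names are hypothetical, the input is assumed to hold the frames from lying flat to sitting upright, and a head-holding or knee-bend violation is assumed to be raised when any frame up to the first knee touch lacks the attribute (the text does not specify this rule).

```python
from typing import Dict, List

Frame = Dict[str, bool]  # per-frame action attributes (hypothetical keys)


def evaluate_set(frames: List[Frame]) -> List[str]:
    """Return the violations found in one set of sit-up actions.

    frames: attributes of the frames from lying flat to sitting upright.
    An empty list means the set is compliant.
    """
    if not frames:
        raise ValueError("a set must contain at least one frame")

    violations: List[str] = []

    # The set must start from the lying-flat posture.
    if not frames[0]["lying_flat"]:
        violations.append("not_lying_flat")

    # The knees must be touched in at least one frame of the rising phase.
    touch_index = next(
        (i for i, f in enumerate(frames) if f["knee_touch"]), None
    )
    if touch_index is None:
        violations.append("no_knee_touch")
        span = frames  # no touch: check the whole rising phase
    else:
        span = frames[: touch_index + 1]

    # Assumed rule: the attribute must hold in every frame up to the touch.
    if not all(f["head_held"] for f in span):
        violations.append("head_not_held")
    if not all(f["knee_bent"] for f in span):
        violations.append("knee_not_bent")
    return violations
```

Returning a list of violations rather than a single boolean keeps the per-set record of what went wrong, which the later evaluation step reports alongside the count.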

S103. According to the compliance evaluation result for each set of actions, determine the motion evaluation result for the test subject.

Specifically, in this embodiment, the test subject's actions are counted according to the compliance evaluation result for each set of actions in the motion video, and the motion evaluation result for the test subject is determined from the count and the compliance evaluation results.

Further, this step specifically includes: first, treating each set of actions whose compliance evaluation result contains no violation as a compliant action; second, recording the number of compliant actions in the test subject's motion video, and taking that number together with the compliance evaluation result for each set of actions as the test subject's motion evaluation result.

Motion evaluation includes timed evaluation and limit-count evaluation. Timed evaluation counts the actions performed within a fixed duration, whereas limit-count evaluation records the maximum number of actions the test subject can perform. In other words, timed evaluation stops counting when the timer stops, and limit-count evaluation stops counting when the test subject stops exercising. Timed evaluation only needs to judge and count the actions within the timed range of the motion video, whereas limit-count evaluation needs to judge and count all actions in the motion video.
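The counting step and the two evaluation modes can be sketched as follows. This is a minimal sketch: the `SetResult` record, the `evaluate_motion` function, and the rule that a set counts in timed mode only if it ends within the time limit are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class SetResult:
    """Compliance evaluation result for one set of actions (hypothetical)."""
    end_time_s: float                      # time at which the set finished
    violations: List[str] = field(default_factory=list)


def evaluate_motion(
    sets: List[SetResult], time_limit_s: Optional[float] = None
) -> Dict[str, object]:
    """Count compliant sets and return them with the per-set results.

    time_limit_s=None models limit-count evaluation (all sets are judged);
    a number models timed evaluation (only sets finished in time are judged).
    """
    considered = [
        s for s in sets
        if time_limit_s is None or s.end_time_s <= time_limit_s
    ]
    compliant_count = sum(1 for s in considered if not s.violations)
    return {"compliant_count": compliant_count, "set_results": considered}
```

With the same list of sets, calling `evaluate_motion(sets, time_limit_s=60.0)` ignores any set that finishes after 60 s, while `evaluate_motion(sets)` judges every set in the video.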

通过上述介绍可见,本申请实施例提出的运动评测方法,对测试对象运动视频的图像帧进行动作属性分类,确定各图像帧对应的动作属性;图像帧对应的动作属性表示图像帧中所呈现的测试对象的姿态;根据各图像帧对应的动作属性,确定测试对象运动时的每组动作是否合规,得到对每组动作的规范性评测结果;根据对每组动作的规范性评测结果,确定对应测试对象的运动评测结果。采用本实施例的技术方案,可以对测试时采集的图像帧进行动作分析,确定测试对象的动作是否合规,并根据动作的规范性评测结果进行动作计数,相比于人工评测更加准确,而且不仅进行了数量评测,还实现了动作规范评测,提高了运动评测以及动作评测的准确度。It can be seen from the above introduction that the motion evaluation method proposed in the embodiment of the present application classifies the motion attributes of the image frames of the motion video of the test object, and determines the motion attributes corresponding to each image frame; The posture of the test object; according to the action attributes corresponding to each image frame, determine whether each group of actions of the test object is in compliance, and obtain the normative evaluation results for each group of actions; according to the normative evaluation results for each group of actions, determine Corresponding to the test object's motion evaluation results. By adopting the technical solution of this embodiment, it is possible to analyze the motion of the image frames collected during the test, determine whether the motion of the test object is compliant, and count the motions according to the standardized evaluation results of the motions, which is more accurate than manual evaluation, and Not only the quantity evaluation is carried out, but also the action specification evaluation is realized, which improves the accuracy of the motion evaluation and action evaluation.

作为一种可选的实施方式,参见图2所示,本申请另一实施例公开了,上述步骤S101中,对测试对象的运动视频中的各图像帧进行人体骨骼点检测,获取各图像帧中测试对象的骨骼点坐标,包括如下步骤:As an optional implementation manner, as shown in FIG. 2, another embodiment of the present application discloses that in the above step S101, human skeleton point detection is performed on each image frame in the motion video of the test object, and each image frame is obtained. The bone point coordinates of the test object, including the following steps:

S201、对测试对象的运动视频中的各图像帧进行人体骨骼点检测,得到各图像帧中所有候选对象的骨骼点坐标。S201. Perform human skeleton point detection on each image frame in the motion video of the test object, and obtain skeleton point coordinates of all candidate objects in each image frame.

具体的,由于运动视频在进行拍摄时可能会将除测试对象以外的其他人员拍摄进去,因此,在对存在测试对象以外的其他人的图像帧进行人体骨骼点检测时,可能会获取到测试对象的骨骼点坐标以及其他人的骨骼点坐标,无法判断出哪个是测试对象,从而也无法确定测试对象的骨骼点坐标。因此,本实施例可以将图像帧中的所有对象均作为候选对象,然后通过人体骨骼点检测方式,获取图像帧中各候选对象的骨骼点坐标。Specifically, since the motion video may capture people other than the test object when shooting, the test object may be obtained when the human skeleton point detection is performed on the image frame of other people other than the test object. The bone point coordinates of the user and other people's bone point coordinates, it is impossible to determine which is the test object, so it is also impossible to determine the bone point coordinates of the test object. Therefore, in this embodiment, all the objects in the image frame can be used as candidate objects, and then the skeleton point coordinates of each candidate object in the image frame can be acquired by means of human skeleton point detection.

S202. Obtain the skeleton point coordinates of the test object in each image frame by comparing the skeleton point coordinates of each candidate object in each image frame with the skeleton point coordinates of the predetermined skeleton point norm corresponding to that image frame.

Specifically, this embodiment predetermines the skeleton point norm corresponding to each image frame. A candidate object is regarded as the test object only if its similarity to the skeleton point norm is high. Therefore, the skeleton point coordinates of each candidate object in an image frame are compared with those of the skeleton point norm corresponding to that image frame to determine the similarity between each candidate object and the norm, and the candidate object with the highest similarity is taken as the test object, which yields the test object's skeleton point coordinates.

The skeleton point coordinates of the skeleton point norm corresponding to each image frame are determined by a sliding average over the skeleton point coordinates of the norm image frames in the motion video, where a norm image frame is an image frame preceding the image frame in question in which the posture of the test object is the same as that of the skeleton point norm. In this embodiment, the skeleton point norm includes at least one of a lying-flat norm, a sitting-upright norm and an intermediate norm. The sitting-upright norm corresponding to an image frame is the sliding average of the test object's skeleton points over the preceding image frames in which the test object touches the knees and the body bending angle is smallest. The lying-flat norm corresponding to an image frame is the sliding average of the test object's skeleton points over the preceding image frames in which the test object lies flat and the body bending angle is largest. The intermediate norm corresponding to an image frame is the sliding average of the test object's skeleton points over the preceding image frames in which the body bending angle of the test object is between 100° and 120°.

If, in the image frame immediately preceding the current image frame, the similarity between the test object's skeleton points and the skeleton point norm is low (that is, the posture of the test object differs from that of the norm), the skeleton point coordinates of the norm corresponding to the current image frame are the same as those of the norm corresponding to the preceding image frame. If that similarity is high (that is, the posture of the test object is the same as that of the norm), the skeleton point coordinates of the norm corresponding to the current image frame are computed by the following sliding average:

comm_pose1 = α * comm_pose0 + (1 - α) * pose

Here, comm_pose1 denotes the skeleton point coordinates of the skeleton point norm corresponding to the current image frame; pose denotes the skeleton point coordinates in the image frame immediately preceding the current image frame; comm_pose0 denotes the skeleton point coordinates of the skeleton point norm corresponding to that preceding image frame; and α denotes the sliding coefficient.
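
The update rule above can be sketched in a few lines of Python. This is a minimal illustration, not the patented implementation: the function name `update_norm`, the `matches_norm` flag and the default α = 0.9 are assumptions introduced here; the source does not state a value for the sliding coefficient.

```python
from typing import List, Tuple

Pose = List[Tuple[float, float]]  # one (x, y) pair per skeleton point


def update_norm(comm_pose0: Pose, pose: Pose, matches_norm: bool,
                alpha: float = 0.9) -> Pose:
    """Return comm_pose1, the norm for the current frame.

    comm_pose0   -- norm associated with the previous frame
    pose         -- skeleton points observed in the previous frame
    matches_norm -- True if the previous frame's posture matched this norm
    alpha        -- sliding coefficient (0.9 is an assumed value)
    """
    if not matches_norm:
        # Low similarity: the norm is carried forward unchanged.
        return list(comm_pose0)
    # High similarity: comm_pose1 = alpha * comm_pose0 + (1 - alpha) * pose
    return [
        (alpha * x0 + (1 - alpha) * x, alpha * y0 + (1 - alpha) * y)
        for (x0, y0), (x, y) in zip(comm_pose0, pose)
    ]
```

A larger α makes the norm change more slowly, so a single noisy detection has less influence on it.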

By setting skeleton point norms for three states and comparing all candidate objects in an image frame against these norms, this embodiment identifies the test object among the candidate objects and thus tracks the test object across image frames. This improves the accuracy of the test object's skeleton point coordinates and, in turn, the accuracy of the motion evaluation.

As an optional implementation, referring to FIG. 3, another embodiment of the present application discloses that step S202 above, obtaining the skeleton point coordinates of the test object in each image frame by comparing the skeleton point coordinates of each candidate object in each image frame with the skeleton point coordinates of the predetermined skeleton point norm corresponding to that image frame, includes the following steps:

S301. Using the skeleton point coordinates of each candidate object in each image frame and the skeleton point coordinates of the skeleton point norm corresponding to that image frame, calculate the similarity between each candidate object and the corresponding skeleton point norm.

Specifically, this embodiment may use the skeleton point coordinates of each candidate object in an image frame and the skeleton point coordinates of the skeleton point norm corresponding to that image frame to calculate the similarity between each candidate object and the norm. If the skeleton point norm corresponding to the image frame covers three states, the similarity between the candidate object and the norm of each state must be calculated.

Further, this step specifically includes:

First, normalize both the skeleton point coordinates of each candidate object in each image frame and the skeleton point coordinates of the skeleton point norm corresponding to each image frame, obtaining the normalized skeleton point coordinates of each candidate object and of the corresponding norm.

This embodiment normalizes the skeleton point coordinates of each candidate object in an image frame and those of the corresponding skeleton point norm. Normalization prevents the viewing angle of the video capture device from affecting the captured images and thereby the accuracy of the skeleton point coordinates detected from the image frames.

In this embodiment, the skeleton points are preferably normalized using the thigh length and the hip center point between the left hip and the right hip. The normalization formulas are as follows:

norm_pose = (pose - hip_center) / leg_len * facter

hip_center = (hip_left + hip_right) / 2

knee_center = (knee_left + knee_right) / 2

leg_len = Euclidean(hip_center, knee_center)

Here, each quantity refers to the candidate object or skeleton point norm to which the original skeleton point coordinates belong. norm_pose denotes the normalized skeleton point coordinates; pose denotes the original skeleton point coordinates; hip_center denotes the coordinates of the hip center point; leg_len denotes the distance between the hip center point and the knee center point (that is, the thigh length); facter denotes the scale factor; hip_left and hip_right denote the coordinates of the left and right hip skeleton points; knee_center denotes the coordinates of the knee center point; and knee_left and knee_right denote the coordinates of the left and right knee skeleton points.
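
As a rough illustration of the four formulas above, the following Python sketch normalizes one pose. Storing the pose as a dictionary keyed by skeleton point name, the helper `midpoint` and the default scale factor of 1.0 are assumptions made for this example; the source fixes neither a data layout nor a value for facter.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float]


def midpoint(a: Point, b: Point) -> Point:
    """Midpoint of two 2D points."""
    return ((a[0] + b[0]) / 2, (a[1] + b[1]) / 2)


def normalize_pose(pose: Dict[str, Point], facter: float = 1.0) -> Dict[str, Point]:
    """Translate to the hip center and scale by the thigh length."""
    hip_center = midpoint(pose["hip_left"], pose["hip_right"])
    knee_center = midpoint(pose["knee_left"], pose["knee_right"])
    # Euclidean distance between hip center and knee center (thigh length).
    leg_len = math.hypot(hip_center[0] - knee_center[0],
                         hip_center[1] - knee_center[1])
    if leg_len == 0:
        raise ValueError("hip and knee centers coincide; cannot normalize")
    return {
        name: ((x - hip_center[0]) / leg_len * facter,
               (y - hip_center[1]) / leg_len * facter)
        for name, (x, y) in pose.items()
    }
```

After this step the hip center sits at the origin and the thigh has length facter, so poses captured at different distances from the camera become comparable.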

In addition, this embodiment may normalize the skeleton point coordinates using other skeleton points, for example the upper-body length and the skeleton point on the neck. For sit-ups, normalizing with the thigh length and the hip center point between the left and right hips gives higher accuracy.

Second, combine all normalized skeleton point coordinates of each candidate object in each image frame into that candidate object's skeleton point vector, and combine all normalized skeleton point coordinates of the skeleton point norm corresponding to each image frame into that norm's skeleton point vector.

After the skeleton point coordinates of each candidate object in an image frame and those of the corresponding skeleton point norm have been normalized, the normalized skeleton point coordinate sequence of each candidate object and of the corresponding norm is determined, and each sequence is then flattened into a skeleton point vector. If a candidate object or skeleton point norm has 30 skeleton point coordinates, the corresponding coordinate sequence has dimension 30×2 and the flattened skeleton point vector has dimension 60×1. For example, if the normalized skeleton point coordinate sequence is ((x_0, y_0), (x_1, y_1), ..., (x_{k-1}, y_{k-1})), the skeleton point vector obtained by flattening it is (x_0, y_0, x_1, y_1, ..., x_{k-1}, y_{k-1}), where k is the number of skeleton point coordinates of the candidate object or skeleton point norm.

Third, calculate the vector similarity between the skeleton point vector of each candidate object in each image frame and the skeleton point vector of the corresponding skeleton point norm, and use it as the similarity between that candidate object and the corresponding norm.

This embodiment may use the Pearson similarity to calculate the vector similarity between the skeleton point vector of a candidate object and the skeleton point vector of a skeleton point norm, and use this vector similarity as the similarity between the candidate object and the norm. The Pearson similarity of two skeleton point vectors m and n is calculated as follows:

$$r = \frac{\sum_{i=1}^{h} (m_i - \bar{m})(n_i - \bar{n})}{\sqrt{\sum_{i=1}^{h} (m_i - \bar{m})^2}\,\sqrt{\sum_{i=1}^{h} (n_i - \bar{n})^2}}$$

Here, $r$ denotes the vector similarity between the skeleton point vectors m and n, $h$ denotes the number of elements in a skeleton point vector, $m_i$ denotes the i-th element of m, $n_i$ denotes the i-th element of n, $\bar{m}$ denotes the mean of the elements of m, and $\bar{n}$ denotes the mean of the elements of n.
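
The flattening and similarity steps can be sketched as follows using only the Python standard library. The function names `flatten` and `pearson` are chosen for this example, and returning 0.0 for a constant vector (where the denominator is zero) is an assumed convention that the source does not address.

```python
import math
from typing import List, Sequence, Tuple


def flatten(points: Sequence[Tuple[float, float]]) -> List[float]:
    """Turn ((x0, y0), (x1, y1), ...) into (x0, y0, x1, y1, ...)."""
    return [c for p in points for c in p]


def pearson(m: Sequence[float], n: Sequence[float]) -> float:
    """Pearson similarity r between two equal-length vectors."""
    if len(m) != len(n) or len(m) == 0:
        raise ValueError("vectors must be non-empty and of equal length")
    h = len(m)
    m_bar = sum(m) / h
    n_bar = sum(n) / h
    cov = sum((a - m_bar) * (b - n_bar) for a, b in zip(m, n))
    var_m = sum((a - m_bar) ** 2 for a in m)
    var_n = sum((b - n_bar) ** 2 for b in n)
    denom = math.sqrt(var_m) * math.sqrt(var_n)
    if denom == 0:
        return 0.0  # assumed convention for a constant vector
    return cov / denom
```

Because the Pearson similarity subtracts the mean and divides by the spread of each vector, it is insensitive to a uniform shift or scaling of the flattened coordinates.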

S302. In each image frame, take the skeleton point coordinates of the candidate object with the highest similarity to the corresponding skeleton point norm as the skeleton point coordinates of the test object in that image frame.

Specifically, the similarities between each candidate object in the image frame and each skeleton point norm obtained in the above step are compared, and the candidate object with the largest similarity value is taken as the test object in that image frame, which yields the test object's skeleton point coordinates in each image frame. In the comparison, the similarities between one candidate object and each of the skeleton point norms are compared first, and the largest of them is taken as that candidate object's target similarity. The target similarity of every candidate object in the image frame is determined in this way; the target similarities of all candidate objects are then compared, and the candidate object with the largest target similarity is taken as the test object.

The test object is determined by the following formula:

$$id = \underset{i \in \{1, \dots, k\}}{\arg\max}\; \max\left(r_i^{\text{sit}},\; r_i^{\text{lie}},\; r_i^{\text{mid}}\right)$$

Here, $id$ denotes the identifier of the test object; $r_i^{\text{sit}}$ denotes the vector similarity between the normalized skeleton point coordinate vector of the i-th candidate object in the image frame and that of the sitting-upright norm corresponding to the image frame; $r_i^{\text{lie}}$ denotes the vector similarity between the normalized skeleton point coordinate vector of the i-th candidate object and that of the lying-flat norm corresponding to the image frame; $r_i^{\text{mid}}$ denotes the vector similarity between the normalized skeleton point coordinate vector of the i-th candidate object and that of the intermediate norm corresponding to the image frame; and $k$ denotes the number of candidate objects in the image frame.
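
The two-stage comparison described above (largest similarity per candidate, then largest across candidates) might look like this in Python. The function name and the dictionary keys `"sit"`, `"lie"` and `"mid"` are illustrative assumptions, and candidates are indexed from 0 here rather than from 1.

```python
from typing import Dict, List


def select_test_object(similarities: List[Dict[str, float]]) -> int:
    """Return the index of the candidate chosen as the test object.

    similarities[i] maps a norm name (for example "sit", "lie", "mid")
    to the similarity between candidate i and that norm.
    """
    if not similarities:
        raise ValueError("no candidate objects in this frame")
    # Target similarity of each candidate: its best match over all norms.
    target = [max(s.values()) for s in similarities]
    # The test object is the candidate with the largest target similarity.
    return max(range(len(target)), key=target.__getitem__)
```

For instance, with three candidates whose best similarities are 0.5, 0.9 and 0.8, the function returns index 1.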

As an optional implementation, referring to FIG. 4, another embodiment of the present application discloses that, in step S101 above, classifying the action attributes of the test object in each image frame according to the test object's skeleton point coordinates in each image frame and determining the action attribute corresponding to each image frame includes the following steps:

S401. Normalize all skeleton point coordinates of the test object in each image frame to obtain the normalized skeleton point coordinates corresponding to each image frame.

Specifically, this embodiment normalizes the skeleton point coordinates of the test object in each image frame to obtain the test object's normalized skeleton point coordinates. Normalization prevents the viewing angle of the video capture device from affecting the captured images and thereby the accuracy of the skeleton point coordinates detected from the image frames.

In this embodiment, the skeleton points are preferably normalized using the thigh length and the hip center point between the left hip and the right hip. The normalization is calculated in the same way as in the embodiment above and is not described again here. This embodiment may also normalize the skeleton point coordinates using other skeleton points, for example the upper-body length and the skeleton point on the neck. For sit-ups, normalizing with the thigh length and the hip center point between the left and right hips gives higher accuracy.

S402. Combine all normalized skeleton point coordinates corresponding to each image frame into the skeleton point vector corresponding to that image frame.

Specifically, after the skeleton point coordinates of the test object in an image frame have been normalized, the normalized skeleton point coordinate sequence of the test object is determined and then flattened to obtain the test object's skeleton point vector for that image frame. The flattening is performed in the same way as in the embodiment above and is not described again here.

S403. Classify the action attributes of the skeleton point vector corresponding to each image frame to determine the action attribute corresponding to each image frame.

Specifically, after the skeleton point vector of the test object in each image frame has been determined, this embodiment may classify the action attributes of the test object according to that vector and thus determine the action attribute corresponding to each image frame. The action attribute classification model may be trained in advance on sample skeleton point vectors. The action attribute corresponding to an image frame includes at least one attribute element, and the value of each attribute element indicates whether the test object in the image frame exhibits the posture corresponding to that attribute element.

For example, the action attribute may include at least one of the following attribute elements: knee-touch state, lying-flat state, knee-bend state, head-holding state and facing state. This embodiment may use 1 and 0 to represent yes and no. A knee-touch state of 1 means the test object in the image frame is touching the knees, and 0 means it is not. A lying-flat state of 1 means the test object is lying flat, and 0 means it is not. A knee-bend state of 1 means the test object's knees are bent, and 0 means they are not. A head-holding state of 1 means the test object is holding the head, and 0 means it is not. A facing state of 1 means the test object is facing right, and 0 means it is facing left.

In this embodiment, the pre-trained action attribute classification model includes a multilayer perceptron network and a classification neural network. The skeleton point vector of the test object in an image frame is input to the multilayer perceptron network, which analyzes the action attributes of the skeleton point vector and outputs an action attribute vector containing the value corresponding to each action attribute element. If the test object in the image frame has 30 skeleton point coordinates and the action attribute contains 5 attribute elements, the skeleton point vector is 60-dimensional; the input layer of the multilayer perceptron network is then 60-dimensional, the intermediate layer 64-dimensional and the output layer 5-dimensional, and the action attribute vector output by the network is 5-dimensional. The classification neural network applies a sigmoid operation to the action attribute vector output by the multilayer perceptron network, normalizing each element to a value between 0 and 1. Each value has a corresponding threshold: if the value is greater than the threshold, 1 is output; if it is less than or equal to the threshold, 0 is output. This yields five values, each indicating whether the test object exhibits the posture corresponding to that value.
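
To make the 60 → 64 → 5 structure concrete, here is a minimal forward-pass sketch in plain Python. It is not the trained model from the source: the weights are supplied by the caller, the ReLU activation on the intermediate layer is an assumption (the source does not name one), and the attribute names in `ATTRIBUTES` are hypothetical labels for the five attribute elements.

```python
import math
from typing import Dict, List, Sequence

# Hypothetical names for the five attribute elements, in the order listed above.
ATTRIBUTES = ["touch_knee", "lie_flat", "bend_knee", "hold_head", "facing_right"]


def linear(x: Sequence[float], weights: Sequence[Sequence[float]],
           bias: Sequence[float]) -> List[float]:
    """Fully connected layer: one weight row and one bias per output unit."""
    return [sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def classify(vector: Sequence[float],
             w1: Sequence[Sequence[float]], b1: Sequence[float],
             w2: Sequence[Sequence[float]], b2: Sequence[float],
             thresholds: Sequence[float]) -> Dict[str, int]:
    """Map a 60-dim skeleton point vector to five 0/1 attribute values."""
    hidden = [max(0.0, h) for h in linear(vector, w1, b1)]  # ReLU (assumed)
    logits = linear(hidden, w2, b2)                         # 5-dim output
    # Sigmoid squashes each value into (0, 1); 1 only if strictly above threshold.
    return {name: 1 if sigmoid(z) > t else 0
            for name, z, t in zip(ATTRIBUTES, logits, thresholds)}
```

Using one threshold per attribute, as the description states, lets each attribute trade off false positives against false negatives independently.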

As an optional implementation, referring to FIG. 5, another embodiment of the present application discloses that step S102 above, determining whether each group of actions performed by the test object is compliant according to the action attributes corresponding to the image frames and obtaining a normative evaluation result for each group of actions, includes the following steps:

S501. From the motion video of the test object, determine the image frames corresponding to each group of actions.

Specifically, evaluating the motion of the test object requires both a normative evaluation and a count of all the actions performed during the exercise. This embodiment therefore first determines the image frames corresponding to each group of actions in the test object's motion video.

This embodiment may detect the action state of the test object in the image frames of the motion video and, from the periodic change of the action states across image frames, determine the cycle of each group of actions; the image frames within the cycle of a group of actions are then taken as the image frames corresponding to that group. For example, in sit-ups, going from lying flat to sitting upright and then from sitting upright back to lying flat is the cycle of one group of actions.

S502. According to the action attributes of the image frames corresponding to each group of actions, determine whether each group of actions performed by the test object is compliant, and obtain a normative evaluation result for each group of actions.

Specifically, this embodiment performs a compliance analysis on each group of actions using the action attributes of all image frames contained in that group, which yields the normative evaluation result of each group. The cycle of each group of actions can be divided into a first half cycle and a second half cycle, and the image frames in the first half cycle have a different action state from those in the second half cycle. This embodiment may divide the cycle of a group of actions according to the action state of each image frame in the group: the image frames of the first action state are taken as the image frames of the first half cycle, and the image frames of the second action state as the image frames of the second half cycle. For example, in the evaluation of sit-ups, the image frames of a group of actions between the lying-down inflection point and the sitting-up inflection point all have the sitting-up state and are the image frames of the first half cycle; the image frames between the sitting-up inflection point and the lying-down inflection point all have the lying-down state and are the image frames of the second half cycle. The lying-down inflection point is the image frame, after the image frame in which the test object's body bending angle is largest in a group of actions, in which the test object shows a tendency to sit up; the sitting-up inflection point is the image frame, after the image frame in which the test object's body bending angle is smallest, in which the test object shows a tendency to lie down. In the evaluation of sit-ups, the first compliance analysis of a group of actions analyzes the image frames of the second half cycle of the preceding group of actions, and the second compliance analysis of a group of actions analyzes the image frames of the first half cycle of that group.
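
One way to picture the division into groups and half cycles is the sketch below. It assumes that each frame has already been labeled with an action state, using the hypothetical labels `"sit_up"` and `"lie_down"`; how those labels and the inflection points are actually obtained is not covered here, and an incomplete trailing cycle is simply ignored.

```python
from itertools import groupby
from typing import List, Tuple


def split_groups(states: List[str]) -> List[Tuple[List[int], List[int]]]:
    """Split per-frame action states into groups of actions.

    Returns one (first_half_frames, second_half_frames) pair per group,
    where each half is a list of frame indices.
    """
    # Collapse consecutive frames with the same state into runs.
    runs = [(state, [i for i, _ in items])
            for state, items in groupby(enumerate(states), key=lambda p: p[1])]
    groups = []
    i = 0
    while i + 1 < len(runs):
        # A group is a sitting-up run immediately followed by a lying-down run.
        if runs[i][0] == "sit_up" and runs[i + 1][0] == "lie_down":
            groups.append((runs[i][1], runs[i + 1][1]))
            i += 2
        else:
            i += 1
    return groups
```

For the state sequence sit_up, sit_up, lie_down, lie_down, sit_up, lie_down, the function returns two groups: frames (0, 1 | 2, 3) and frames (4 | 5).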

Further, for two adjacent groups of actions, where the second group of actions is the group immediately following the first group, the compliance analysis of the second group proceeds as follows:

According to the first compliance analysis result corresponding to the first group of actions and the action attributes of the image frames of the first action state in the second group of actions, determine whether the second group of actions is compliant and obtain the normative evaluation result of the second group of actions.

The image frames corresponding to a group of actions include the image frames of the first action state and the image frames of the second action state that immediately follows it; in sit-ups, these are the image frames of the sitting-up state and the image frames of the lying-down state. This embodiment performs a first compliance analysis on the action attributes of the image frames of the second action state in the first group of actions to determine the first compliance analysis result corresponding to the first group. It performs a second compliance analysis on the action attributes of the image frames of the first action state in the second group of actions to determine the second compliance analysis result corresponding to the second group. The first compliance analysis result corresponding to the first group and the second compliance analysis result corresponding to the second group together form the normative evaluation result of the second group of actions. The first compliance analysis result obtained from the image frames of the second action state in the second group is, in turn, part of the normative evaluation result of the third group of actions, which immediately follows the second group.

In a sit-up exercise, performing the second compliance analysis on the action attributes of each image frame of the first action state in the second group of actions to obtain the second compliance analysis result corresponding to the second group of actions specifically includes the following. If the knee-touch state of every image frame of the first action state (i.e., the sitting-up state) in the second group of actions is "knee not touched", the test object did not touch the knees while performing the second group of actions, and it is determined that the second compliance analysis result corresponding to the second group of actions includes a no-knee-touch violation. If, among the image frames of the first action state (i.e., the sitting-up state) in the second group of actions, there is an image frame whose knee-touch state is "knee touched", the knee-bend state and head-hold state of the image frames to be analyzed in the second group of actions are analyzed, where the image frames to be analyzed are, among the image frames of the first action state, the image frames whose knee-touch state is "knee touched" and the image frames preceding them. If the image frames to be analyzed in the second group of actions include an image frame whose knee-bend state is "knee not bent", the test object failed to keep the knees bent before touching the knees while performing the second group of actions, and it is determined that the second compliance analysis result corresponding to the second group of actions includes a knee-not-bent violation. If the image frames to be analyzed in the second group of actions include an image frame whose head-hold state is "head not held", the test object failed to hold the head before touching the knees while performing the second group of actions, and it is determined that the second compliance analysis result corresponding to the second group of actions includes a head-not-held violation.
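The second compliance analysis described above can be sketched as follows. This is a minimal illustration, not the implementation of the embodiment: the `FrameAttrs` class, its field names, and the violation labels are hypothetical, and the sketch assumes that "the frames to be analyzed" means all sitting-up frames up to and including the last frame whose knee-touch state is "knee touched".

```python
from dataclasses import dataclass
from typing import List


@dataclass
class FrameAttrs:
    """Per-frame action attributes (hypothetical field names)."""
    knee_touched: bool
    knee_bent: bool
    head_held: bool


def second_compliance_analysis(sit_up_frames: List[FrameAttrs]) -> List[str]:
    """Return violation labels for the sitting-up frames of one group of actions."""
    touch_indices = [i for i, f in enumerate(sit_up_frames) if f.knee_touched]
    if not touch_indices:
        # No frame shows a knee touch: the whole repetition fails on that rule.
        return ["no_knee_touch"]

    # Assumption: analyze the knee-touch frames and every frame before them.
    to_analyze = sit_up_frames[: touch_indices[-1] + 1]

    violations = []
    if any(not f.knee_bent for f in to_analyze):
        violations.append("knee_not_bent")
    if any(not f.head_held for f in to_analyze):
        violations.append("head_not_held")
    return violations
```

In this sketch, frames after the last knee touch are ignored, so releasing the head on the way back down does not produce a violation.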

Determining the first compliance analysis result corresponding to the first group of actions by performing the first compliance analysis on the action attributes of each image frame of the second action state in the first group of actions specifically includes: if the lie-flat state of every image frame of the second action state (i.e., the lying-flat state) in the first group of actions is "not lying flat", it is determined that the first compliance analysis result corresponding to the first group of actions includes a not-lying-flat violation. This indicates that the test object had not lain flat when starting the second group of actions, and therefore the second group of actions incurs a not-lying-flat violation.
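As a rough sketch of how the first compliance analysis could feed the evaluation of the next group of actions, the snippet below checks the lying-flat frames of the preceding group and merges the outcome with a second compliance analysis result. The function names, the boolean-list input, and the violation labels are assumptions made for illustration only.

```python
from typing import List


def first_compliance_analysis(lying_flat_flags: List[bool]) -> List[str]:
    """Check the lying-state frames of one group of actions.

    lying_flat_flags[i] is True when frame i has the lie-flat state
    "lying flat" (hypothetical representation of the attribute).
    """
    if not any(lying_flat_flags):
        # Every frame of the lying state is "not lying flat".
        return ["not_lying_flat"]
    return []


def evaluate_group(prev_group_lying_flags: List[bool],
                   second_result: List[str]) -> List[str]:
    """Normative evaluation of a group: the first-analysis result of the
    preceding group plus the second-analysis result of the group itself."""
    return first_compliance_analysis(prev_group_lying_flags) + list(second_result)
```

For example, `evaluate_group([False, False], ["no_knee_touch"])` attributes both a not-lying-flat violation and a no-knee-touch violation to the same group.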

As an optional implementation, referring to FIG. 6, another embodiment of the present application discloses that step S501 above, namely determining from the motion video of the test object the image frames corresponding to each group of actions, includes the following steps:

S601. Analyze the action state corresponding to each image frame according to the skeleton point coordinates of the test object in each image frame of the motion video.

Specifically, in this embodiment, the body bending angle of the test object in each image frame may be calculated from the skeleton point coordinates of the test object in that image frame of the motion video. The current motion speed of the test object in each image frame is then calculated from the body bending angles, and the action state corresponding to each image frame is analyzed according to the body bending angle and motion speed of the test object in that frame.

Further, this step specifically includes:

First, according to the skeleton point coordinates of the test object in the image frame, determine whether the image frame meets a preset state switching condition.

In this embodiment, the preset state switching condition is the condition for switching the action state corresponding to an image frame to an action state different from that of the preceding image frame. When a group of actions includes two action states, the image frames corresponding to the group of actions include the image frames corresponding to the first action state and the image frames corresponding to the second action state that immediately follows the first action state. If the action state corresponding to the image frame preceding the current image frame is the first action state, the state switching condition is the condition for switching to the second action state, and it is determined whether the current image frame meets that condition. If the current image frame has no preceding image frame (i.e., it is the first image frame), or the action state corresponding to the preceding image frame is the second action state or no action state, the state switching condition is the condition for switching to the first action state, and it is determined whether the current image frame meets that condition.

Specifically, to determine whether an image frame meets the state switching condition, the body bending angle of the test object in the current image frame is first calculated from the skeleton point coordinates of the test object in the current image frame, and the body bending angle of the test object in the preceding image frame is calculated from the skeleton point coordinates of the test object in the preceding image frame. The motion speed (i.e., the angular velocity) of the test object in the current image frame is then calculated from the difference between the body bending angles in the two image frames and the difference between the capture times of the two image frames. The body bending angle of the test object in an image frame may be taken as the angle between the hip-to-ankle vector and the hip-to-shoulder vector.
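The angle and angular-velocity computation described above might look like the following sketch. The function names and the 2D tuple representation of skeleton points are assumptions; the sketch also assumes that the motion speed is the magnitude of the angle change per unit time and that the hip does not coincide with the ankle or the shoulder.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def body_angle(hip: Point, ankle: Point, shoulder: Point) -> float:
    """Angle in degrees, within [0, 180], between the hip-to-ankle vector
    and the hip-to-shoulder vector."""
    ax, ay = ankle[0] - hip[0], ankle[1] - hip[1]
    bx, by = shoulder[0] - hip[0], shoulder[1] - hip[1]
    cos_a = (ax * bx + ay * by) / (math.hypot(ax, ay) * math.hypot(bx, by))
    # Clamp to guard against floating-point values slightly outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_a))))


def angular_speed(prev_angle: float, cur_angle: float,
                  prev_time: float, cur_time: float) -> float:
    """Magnitude of the angle change per unit time between two frames
    (degrees per second when times are given in seconds)."""
    return abs(cur_angle - prev_angle) / (cur_time - prev_time)
```

With a 30 fps video, `cur_time - prev_time` would be about 0.033 s, so a change of 1° between consecutive frames corresponds to roughly 30°/s.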

After the motion speed of the test object in the current image frame is determined, this embodiment compares the motion speed with a preset speed threshold. If the motion speed of the test object in the current image frame is not less than the preset speed threshold, it is determined that the current image frame does not meet the state switching condition. If the motion speed is less than the preset speed threshold, the test object may meet the state switching condition; that is, the current image frame may be a state switching inflection point and is treated as a candidate inflection point. In that case, whether the test object in the current image frame shows an action state switching tendency is determined according to the action state corresponding to the preceding image frame and the body bending angle of the test object in the current image frame. If the test object shows an action state switching tendency, the current image frame, as a candidate inflection point, is a state switching inflection point and meets the state switching condition. If the test object does not show an action state switching tendency, the current image frame is not a state switching inflection point and does not meet the state switching condition. For example, if the test object in the current image frame, as a candidate inflection point, tends to switch to the second action state (the lying-flat state), the current image frame is a lying-flat inflection point and meets the condition for switching to the second action state. If the test object tends to switch to the first action state (the sitting-up state), the current image frame is a sitting-up inflection point and meets the condition for switching to the first action state.

Determining whether the test object in the current image frame shows an action state switching tendency, according to the action state corresponding to the preceding image frame and the body bending angle of the test object in the current image frame, is specifically as follows. If the action state corresponding to the preceding image frame is the first action state (i.e., the sitting-up state), the minimum body bending angle of the test object is determined among all image frames near the preceding image frame that are in the first action state, and the angle difference between the body bending angle of the test object in the current image frame and that minimum body bending angle is calculated. If the angle difference is greater than a preset threshold, the test object in the current image frame tends to switch to the second action state (i.e., tends to lie down). If the action state corresponding to the preceding image frame is the second action state (i.e., the lying-flat state), the maximum body bending angle of the test object is determined among all image frames near the preceding image frame that are in the second action state, and the angle difference between the body bending angle of the test object in the current image frame and that maximum body bending angle is calculated. If the angle difference is greater than the preset threshold, the test object in the current image frame tends to switch to the first action state (i.e., tends to sit up).
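The tendency check can be illustrated with the short sketch below. The state labels, the function name, and the way "nearby" frames are passed in as a list of angles are hypothetical. The sign of each difference (current angle minus the minimum when sitting up, maximum minus current angle when lying flat) is an assumption about how the angle difference is taken.

```python
from typing import Sequence

SITTING_UP = "sitting_up"   # first action state
LYING_FLAT = "lying_flat"   # second action state


def has_switch_tendency(prev_state: str, cur_angle: float,
                        nearby_angles: Sequence[float],
                        angle_threshold: float) -> bool:
    """Decide whether the current frame shows a tendency to leave prev_state.

    nearby_angles holds the body bending angles of the frames near the
    preceding frame that share its action state.
    """
    if prev_state == SITTING_UP:
        # The body has opened up well beyond its most-folded pose: lying down.
        return cur_angle - min(nearby_angles) > angle_threshold
    if prev_state == LYING_FLAT:
        # The body has folded well below its most-open pose: sitting up.
        return max(nearby_angles) - cur_angle > angle_threshold
    return False
```

Comparing against the extreme angle of the current state, rather than against the immediately preceding frame, makes the check less sensitive to small frame-to-frame jitter in the skeleton points.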

As shown in FIG. 8, the right hip skeleton point of the test object in the image frame is O, the right ankle skeleton point is A, and the right shoulder skeleton point is B. The body bending angle of the test object can be calculated directly as the angle α between the hip-to-ankle vector $\vec{OA}$ and the hip-to-shoulder vector $\vec{OB}$, using the formula:

$$\alpha = \arccos\left(\frac{\vec{OA}\cdot\vec{OB}}{|\vec{OA}|\,|\vec{OB}|}\right)$$

Because the angle α calculated with the law of cosines lies in the range [0°, 180°], when the test object is in the posture shown in FIG. 9, the calculated angle α is the angle measured clockwise between the hip-to-ankle vector $\vec{OA}$ and the hip-to-shoulder vector $\vec{OB}$, whereas the actual body bending angle of the test object should be the angle measured counterclockwise between them, i.e., 360° − α. In that case the curve of the body bending angle of the test object over the motion video exhibits the abrupt angle jumps shown in FIG. 7, which reduces the accuracy of determining the action state corresponding to each image frame. Therefore, this embodiment determines the body bending angle of the test object according to the orientation state in the action attributes corresponding to the image frame and the cross product (denoted cross) of vectors $\vec{OA}$ and $\vec{OB}$. That is, when the orientation state corresponding to the image frame indicates facing right and cross < 0 (the two vectors are in clockwise order), or when the orientation state indicates facing left and cross > 0 (the two vectors are in counterclockwise order), the body bending angle is 360° − α. The curve of the body bending angle of the test object over the motion video is then corrected to the curve shown in FIG. 10.
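A hedged sketch of the orientation-based correction follows. It assumes that cross is the z-component of the 2D cross product taken in the order hip-to-ankle, then hip-to-shoulder, evaluated in whatever coordinate system the skeleton points are given in; the source does not state the operand order or the axis convention, and the function name and the `"left"`/`"right"` labels are illustrative.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def corrected_body_angle(hip: Point, ankle: Point, shoulder: Point,
                         facing: str) -> float:
    """Body bending angle in degrees, extended to [0, 360) using the
    orientation state ("left" or "right") and the sign of the cross product."""
    ax, ay = ankle[0] - hip[0], ankle[1] - hip[1]        # vector OA
    bx, by = shoulder[0] - hip[0], shoulder[1] - hip[1]  # vector OB

    cos_a = (ax * bx + ay * by) / (math.hypot(ax, ay) * math.hypot(bx, by))
    alpha = math.degrees(math.acos(max(-1.0, min(1.0, cos_a))))

    cross = ax * by - ay * bx  # assumed order: OA x OB
    if (facing == "right" and cross < 0) or (facing == "left" and cross > 0):
        return 360.0 - alpha
    return alpha
```

If the skeleton points come from image coordinates with the y-axis pointing down, the sign of `cross` is flipped relative to the usual mathematical convention, so the two sign tests may need to be swapped for a given pose estimator.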

Second, if the image frame meets the state switching condition, determine that the action state corresponding to the image frame is the action state corresponding to the state switching condition.

The action state corresponding to the state switching condition is the action state obtained after the action state corresponding to the image frame is switched according to that condition. For example, if the image frame meets the condition for switching to the first action state, the action state corresponding to the image frame is determined to be the first action state; if the image frame meets the condition for switching to the second action state, the action state corresponding to the image frame is determined to be the second action state.

Third, if the image frame does not meet the state switching condition, determine that the action state corresponding to the image frame is the action state corresponding to the preceding image frame.
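Taken together, the three steps above amount to a small two-state machine over the frame sequence, which could be sketched as follows. The `meets_condition` callback stands in for the speed and tendency checks and is hypothetical, as are the state labels; `None` is used here to represent "no action state".

```python
from typing import Callable, List, Optional

FIRST = "first"    # e.g. sitting-up state
SECOND = "second"  # e.g. lying-flat state


def label_frames(n_frames: int,
                 meets_condition: Callable[[int, str], bool]
                 ) -> List[Optional[str]]:
    """Assign an action state to every frame index in order.

    meets_condition(i, target) should return True when frame i meets the
    condition for switching to the target state.
    """
    states: List[Optional[str]] = []
    prev: Optional[str] = None  # no preceding frame yet
    for i in range(n_frames):
        # From the first state the only switch is to the second state;
        # otherwise (second state or no state) the switch is to the first.
        target = SECOND if prev == FIRST else FIRST
        if meets_condition(i, target):
            prev = target
        # If the condition is not met, the frame inherits the previous state.
        states.append(prev)
    return states
```

Consecutive runs of `FIRST` followed by `SECOND` in the returned list then delimit the image frames of each group of actions.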