CN115114982A - A distributed model training method and related device for deep learning in edge computing - Google Patents

A distributed model training method and related device for deep learning in edge computingDownload PDFInfo

- Publication number

- CN115114982A CN115114982ACN202210752982.4ACN202210752982ACN115114982ACN 115114982 ACN115114982 ACN 115114982ACN 202210752982 ACN202210752982 ACN 202210752982ACN 115114982 ACN115114982 ACN 115114982A

- Authority

- CN

- China

- Prior art keywords

- training

- model

- data

- distributed

- parameters

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Granted

Links

Images

Landscapes

- Management, Administration, Business Operations System, And Electronic Commerce (AREA)

Abstract

Description

Translated fromChinese技术领域technical field

本发明涉及计算机技术领域,特别是一种边缘计算中面向深度学习的分布式模型训练方法、装置、计算机设备及和存储介质。The invention relates to the field of computer technology, in particular to a deep learning-oriented distributed model training method, device, computer equipment and storage medium in edge computing.

背景技术Background technique

在工业场景中,面对高维度、强实时、大流量的设备和传感器数据,往往需要更加复杂的深度神经网络进行特征提取,但由于复杂网络参数较多,其训练过程会非常耗时;此外,如此大量的数据,如果都集中在云端进行处理,对云端计算资源、数据存储能力以及网络带宽等将是一个严峻的考验。In industrial scenarios, in the face of high-dimensional, strong real-time, and large-traffic equipment and sensor data, more complex deep neural networks are often required for feature extraction. However, due to the large number of complex network parameters, the training process will be very time-consuming; in addition, , If such a large amount of data is concentrated in the cloud for processing, it will be a severe test for cloud computing resources, data storage capabilities and network bandwidth.

在深度学习领域,提高模型精度的常用方式有两种:其一是通过对模型结构进行优化,但随着神经网络结构设计的不断成熟,网络结构的优化越发困难;其二是增大模型或数据规模,但又会导致模型训练时间的增加。随着信息技术的发展,分布式训练的思想被应用于解决大规模数据集的训练领域,当现有分布式训练存在使用范围窄,模型参数多等技术问题,不能被广泛应用于工业边缘管控领域。In the field of deep learning, there are two common ways to improve the accuracy of the model: one is to optimize the model structure, but with the continuous maturity of the neural network structure design, the optimization of the network structure becomes more and more difficult; the other is to increase the model or Data size, but it will lead to an increase in model training time. With the development of information technology, the idea of distributed training has been applied to solve the training field of large-scale data sets. When the existing distributed training has technical problems such as narrow application scope and many model parameters, it cannot be widely used in industrial edge management and control. field.

发明内容SUMMARY OF THE INVENTION

本发明的一个目的就是提供一种边缘计算中面向深度学习的分布式模型训练方法。An object of the present invention is to provide a deep learning-oriented distributed model training method in edge computing.

本发明的目的是通过这样的技术方案实现的,具体步骤如下:The purpose of the present invention is to realize through such technical scheme, and concrete steps are as follows:

1)数据采集:从待分类数据的历史数据库中采集数据,构建初始数据集;1) Data collection: collect data from the historical database of the data to be classified, and construct an initial data set;

2)数据分类:所述初始数据集中的包括训练集S、测试集Q,并将训练集S的数据平均分为N个训练子集Si,Si=(S1、S2、…、SN);2) Data classification: the initial data set includes a training set S and a test set Q, and the data of the training set S is evenly divided into N training subsets Si , Si =(S1 , S2 , . . . , SN );

3)构建模型:采用一维卷积构建N个基于ResNet网络的训练模型,并初始化模型超参数;3) Model building: use one-dimensional convolution to build N training models based on the ResNet network, and initialize the model hyperparameters;

4)模型训练:将N个训练子集Si数据分别输入N个基于ResNet网络的训练模型进行基于数据并行的分布式训练;4) Model training: the N training subsets Si data are respectively input into N training models based on the ResNet network for distributed training based on data parallelism;

5)参数输出:当基于ResNet网络的训练模型的分布式训练次数达到预设阈值时,输出模型参数,得到训练结果。5) Parameter output: When the distributed training times of the training model based on the ResNet network reaches a preset threshold, the model parameters are output to obtain the training result.

进一步,所述ResNet网络包括依次连接的卷积输入层、四个残差块、池化层和全连接层,并在全连接层前设置了Dropout。Further, the ResNet network includes a convolutional input layer, four residual blocks, a pooling layer and a fully connected layer connected in sequence, and a dropout is set before the fully connected layer.

进一步,所述基于ResNet网络的训练模型的初始参数包括训练规模、epoch、Dropout概率、Loss函数、Adam优化器参数。Further, the initial parameters of the training model based on the ResNet network include training scale, epoch, Dropout probability, Loss function, and Adam optimizer parameters.

进一步,步骤4)中模型训练的具体步骤为:Further, the concrete steps of model training in step 4) are:

4-1)将N个训练子集Si数据分别输入N个基于ResNet网络的训练模型进行一次单独训练;4-1) N training subsets Si data are respectively input into N training models based on ResNet network for a single training;

4-2)将训练后的模型的参数进行融合:4-2) Fusion of the parameters of the trained model:

式中,ω是模型参数,表示执行完n个样本数据后计算得到的真实梯度,n表示总样本数量,mk表示第k个模型所含样本数量,表示第k个模型计算的梯度信息;where ω is the model parameter, Represents the true gradient calculated after executing n sample data, n represents the total number of samples, mk represents the number of samples contained in the kth model, Indicates the gradient information calculated by the kth model;

4-3)将融合后的参数再分配到N个基于ResNet网络的训练模型中,进行训练;4-3) redistribute the fused parameters to N training models based on the ResNet network for training;

4-4)若当前训练次数P<Pmax,则重复步骤4-2)-步骤4-3),若当前训练次数P≥Pmax,则转至步骤5),其中Pmax为预设的最大迭代次数。4-4) If the current training times P<Pmax , repeat steps 4-2)-step 4-3), if the current training times P ≥ Pmax , then go to step 5), where Pmax is a preset The maximum number of iterations.

本发明的另一个目的就是提供一种边缘计算中基于深度学习的分布式模型训练装置。Another object of the present invention is to provide a distributed model training device based on deep learning in edge computing.

本发明的目的是通过这样的技术方案实现的,包括:The object of the present invention is achieved through such technical solutions, including:

数据采集模块:从待分类数据的历史数据库中采集数据,构建初始数据集;Data collection module: collect data from the historical database of data to be classified, and construct an initial data set;

数据分类模块:所述初始数据集中的包括训练集S、测试集Q,用于并将训练集S的数据平均分为N个训练子集Si,Si=(S1、S2、…、SN);Data classification module: the initial data set includes a training set S and a test set Q, and is used to divide the data of the training set S into N training subsets Si , Si =(S1 , S2 , … , SN );

构建模型模块:用于采用一维卷积构建N个基于ResNet网络的训练模型,并初始化模型超参数;Model building module: used to build N training models based on ResNet network using one-dimensional convolution, and initialize model hyperparameters;

模型训练模块:用于将N个训练子集Si数据分别输入N个基于ResNet网络的训练模型进行基于数据并行的分布式训练;Model training module: used to input N training subsets Si data into N training models based on ResNet network for distributed training based on data parallelism;

参数输出模块:用于在基于ResNet网络的训练模型的分布式训练次数达到预设阈值时,输出模型参数,得到训练结果。Parameter output module: used to output the model parameters to obtain the training result when the distributed training times of the training model based on the ResNet network reaches the preset threshold.

本发明还提供一种计算机设备,包括存储器、处理器及存储在存储器上并可在处理器上运行的计算机程序,其特征在于,所述处理器执行所述程序时实现上述一种边缘计算中面向深度学习的分布式模型训练方法。The present invention also provides a computer device, comprising a memory, a processor, and a computer program stored in the memory and running on the processor, characterized in that, when the processor executes the program, the above-mentioned edge computing is implemented. A distributed model training method for deep learning.

本发明还提供一种计算机可读存储介质,其上存储有计算机程序,其特征在于,该程序被处理器执行时实现上述一种边缘计算中面向深度学习的分布式模型训练方法。The present invention also provides a computer-readable storage medium on which a computer program is stored, characterized in that, when the program is executed by a processor, the above-mentioned deep learning-oriented distributed model training method in edge computing is implemented.

由于采用了上述技术方案,本发明具有如下的优点:Owing to adopting the above-mentioned technical scheme, the present invention has the following advantages:

本申请将数据集分解为多个数据子集,并将多个数据子集分别输入多个ResNet网络中进行训练,每训练完成一个epoch会向管理节点同步梯度信息,梯度信息经管理节点融合后,重新回传到多个ResNet网络中进行参数更新,本申请的基于数据并行的分布式模型训练方法可以在相同模型精度下,明显提高模型训练效率。In this application, the data set is decomposed into multiple data subsets, and the multiple data subsets are respectively input into multiple ResNet networks for training. After each training epoch is completed, the gradient information will be synchronized to the management node. After the gradient information is fused by the management node , and back to multiple ResNet networks for parameter update. The data parallel-based distributed model training method of the present application can significantly improve the model training efficiency under the same model accuracy.

本发明的其他优点、目标和特征在某种程度上将在随后的说明书中进行阐述,并且在某种程度上,基于对下文的考察研究对本领域技术人员而言将是显而易见的,或者可以从本发明的实践中得到教导。本发明的目标和其他优点可以通过下面的说明书和权利要求书来实现和获得。Other advantages, objects, and features of the present invention will be set forth in the description that follows, and will be apparent to those skilled in the art based on a study of the following, to the extent that is taught in the practice of the present invention. The objectives and other advantages of the present invention may be realized and attained by the following description and claims.

附图说明Description of drawings

本发明的附图说明如下。The accompanying drawings of the present invention are described below.

图1为本发明的方法流程图。FIG. 1 is a flow chart of the method of the present invention.

图2为本发明ResNet网络的结构示意图。FIG. 2 is a schematic structural diagram of the ResNet network of the present invention.

图3为本发明实施1中数据集样本样例图。FIG. 3 is a sample diagram of a dataset sample in

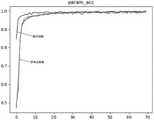

图4为本发明实施1中模型训练精度曲线图。FIG. 4 is a graph of model training accuracy in

图5为本发明实施1中模型训练损失值曲线图。FIG. 5 is a graph showing the loss value of model training in

图6为本发明实施1中模型测试分类的混淆矩阵图。FIG. 6 is a confusion matrix diagram of model testing classification in

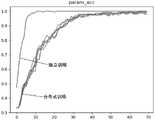

图7为本发明实施2中模型训练精度曲线图。FIG. 7 is a graph showing the accuracy of model training in

图8为本发明实施2中模型训练损失值曲线图。FIG. 8 is a graph showing the loss value of model training in

图9为本发明实施2中模型测试分类的混淆矩阵图。FIG. 9 is a confusion matrix diagram of model testing classification in

图10为本发明实施3中模型训练精度曲线图。FIG. 10 is a graph showing the accuracy of model training in

图11为本发明实施3中模型训练损失值曲线图。FIG. 11 is a graph showing the loss value of model training in

图12为本发明实施3中模型测试分类的混淆矩阵图。FIG. 12 is a confusion matrix diagram of model testing classification in

具体实施方式Detailed ways

下面结合附图和实施例对本发明作进一步说明。The present invention will be further described below with reference to the accompanying drawings and embodiments.

实施例1:Example 1:

一种手写数字体数据集分类中面向深度学习的分布式模型训练方法、装置、计算机设备及和存储介质。A distributed model training method, device, computer equipment and storage medium for deep learning in the classification of handwritten digit datasets.

一种手写数字体数据集分类中面向深度学习的分布式模型训练方法,具体步骤如下:A distributed model training method for deep learning in the classification of handwritten digit datasets, the specific steps are as follows:

1)数据采集:从美国国家标准与技术研究所(Mixed National Institute ofStandards and Technology database,MNIST)开源的手写数字体数据库中采集数据,构建初始数据集,如图3所示,所述MNIST手写数字数据库中的单个数据为包含0~9共10个数字的手写笔记图片,图片大小为28*28,数据库共计有60000个训练样本以及10000个测试样本。1) Data collection: collect data from the open source handwritten digit body database of the National Institute of Standards and Technology (Mixed National Institute of Standards and Technology database, MNIST) to construct an initial data set, as shown in Figure 3, the MNIST handwritten digits The single data in the database is a handwritten note picture containing a total of 10 numbers from 0 to 9, and the picture size is 28*28. The database has a total of 60,000 training samples and 10,000 test samples.

2)数据分类:从MNIST手写数字数据库的训练样本中提取15000训练样本构成训练集S,从测试样本中提取3000测试样本构成测试集Q,并将15000训练样本平均分A、B、C三个训练集,并将全部采集的15000训练样本作为对照集D。2) Data classification: 15,000 training samples are extracted from the training samples of the MNIST handwritten digit database to form a training set S, 3,000 test samples are extracted from the test samples to form a test set Q, and the 15,000 training samples are equally divided into three A, B, and C The training set, and all the 15,000 training samples collected are used as the control set D.

表1数据集样本划分表Table 1 Data set sample division table

3)构建模型:如图2所示,采用一维卷积构建A、B、C、D四个基于ResNet网络的训练模型,并初始化模型参数;3) Building the model: As shown in Figure 2, four training models A, B, C, and D based on the ResNet network are constructed by one-dimensional convolution, and the model parameters are initialized;

所述ResNet网络包括依次连接的卷积输入层、四个残差块、池化层和全连接层,并在全连接层前设置了Dropout,所述ResNet网络的模型参数如表2所示。The ResNet network includes a convolutional input layer, four residual blocks, a pooling layer, and a fully connected layer connected in sequence, and a dropout is set before the fully connected layer. The model parameters of the ResNet network are shown in Table 2.

表2ResNet网络模型的参数Table 2 Parameters of the ResNet network model

所述基于ResNet网络的训练模型的初始参数包括训练规模、epoch、Dropout概率、Loss函数、Adam优化器参数,其如表3所示。The initial parameters of the training model based on the ResNet network include training scale, epoch, Dropout probability, Loss function, Adam optimizer parameters, which are shown in Table 3.

表3ResNet网络模型的超参数Table 3 Hyperparameters of the ResNet network model

4)模型训练:将训练集A、B、C的数据分别输入A、B、C三个训练模型进行基于数据并行的分布式训练,将训练集D输入训练模型D中进行独立训练;4) Model training: input the data of training sets A, B, and C into three training models A, B, and C respectively for distributed training based on data parallelism, and input training set D into training model D for independent training;

训练模型A、B、C分别进行1次独立训练和6次基于参数融合的训练,其模型参数融合为:The training models A, B, and C are trained for 1 independent training and 6 trainings based on parameter fusion respectively. The model parameters are fused as follows:

式中,ω是模型参数,表示执行完n个样本数据后计算得到的真实梯度,n表示总样本数量,mk表示第k个模型所含样本数量,表示第k个模型计算的梯度信息;where ω is the model parameter, Represents the true gradient calculated after executing n sample data, n represents the total number of samples, mk represents the number of samples contained in the kth model, Indicates the gradient information calculated by the kth model;

A、B、C训练模型每次训练10个epoch,总计迭代次数70次,对模型D独立训练70次;模型训练的精度曲线与损失值曲线分别如图4、5所示。Models A, B, and C are trained for 10 epochs each time, with a total of 70 iterations, and model D is independently trained 70 times; the accuracy and loss curves of model training are shown in Figures 4 and 5, respectively.

在本发明实例中,基于数据并行的分布式训练包括两类节点,分别是管理节点与计算节点,二者分别对应边缘管控平台分布式离线计算服务中的汇聚节点与基础计算节点;管理节点主要负责参数同步,数据集会被拆分为多个独立子集存储在平台分布式对象存储系统中,在训练开始时会加载到各计算节点;训练开始时,各计算节点会使用管理节点统一传递的参数进行初始化,随后利用本地数据进行训练;每训练完成一个epoch会向管理节点同步梯度信息,梯度信息经管理节点融合后,会重新回传到各节点进行参数更新,参数更新完后便进行下一轮的训练。In the example of the present invention, the distributed training based on data parallelism includes two types of nodes, namely management nodes and computing nodes, which correspond to the aggregation nodes and basic computing nodes in the distributed offline computing service of the edge management and control platform respectively; the management nodes mainly Responsible for parameter synchronization, the data set will be divided into multiple independent subsets and stored in the platform distributed object storage system, and will be loaded to each computing node at the beginning of training; at the beginning of training, each computing node will use the management node to uniformly transmit the data. The parameters are initialized, and then the local data is used for training; each epoch after training is completed, the gradient information will be synchronized with the management node. After the gradient information is fused by the management node, it will be sent back to each node for parameter update. After the parameter update, the next step will be performed. one round of training.

5)参数输出:当基于ResNet网络的训练模型的分布式训练次数达到1次独立训练和6次基于参数融合的训练时,输出模型参数,得到训练结果;5) Parameter output: When the distributed training times of the training model based on the ResNet network reaches 1 independent training and 6 training based on parameter fusion, the model parameters are output to obtain the training results;

6)模型测试:将测试集Q数据分别输入A、B、C、D训练模型中进行测试,各模型的测试准确率及耗时信息如表4所示,各模型测试分类的混淆矩阵如图6所示。6) Model testing: Input the test set Q data into the training models A, B, C, and D for testing. The testing accuracy and time-consuming information of each model are shown in Table 4, and the confusion matrix of each model testing classification is shown in the figure. 6 shown.

表4模型的测试结果及耗时信息Table 4. Test results and time-consuming information of the model

由以上实验结果可以看出,各模型较好完成了对于输入样本的分类任务,各类别分类准确率均在90%以上。基于测试集测试结果及准确率对比曲线数据可知,与传统训练方法相比,并行的分布式模型训练方法得到的模型性能基本一致,平均测试准确率为96.189%,且相较于传统单节点训练方式,测试准确率仅差距0.478%。两种方式得到的模型性能无明显差异,但基于分布式训练方案,模型训练时间缩短到了单节点训练用时的50.546%,可见基于数据并行的分布式模型训练方法可以在相同模型精度下,明显提高模型训练效率。It can be seen from the above experimental results that each model has successfully completed the classification task for the input samples, and the classification accuracy of each category is above 90%. Based on the test results of the test set and the accuracy rate comparison curve data, it can be seen that compared with the traditional training method, the model performance obtained by the parallel distributed model training method is basically the same, and the average test accuracy rate is 96.189%, and compared with the traditional single-node training method. method, the test accuracy gap is only 0.478%. There is no significant difference in model performance between the two methods, but based on the distributed training scheme, the model training time is shortened to 50.546% of the single-node training time. It can be seen that the distributed model training method based on data parallelism can significantly improve the accuracy of the same model. Model training efficiency.

本实施例还提供一种手写数字体数据集分类中基于深度学习的分布式模型训练装置,包括:This embodiment also provides a distributed model training device based on deep learning in the classification of handwritten digit datasets, including:

1)数据采集模块:用于从MNIST手写数字数据库中采集数据,构建初始数据集;1) Data acquisition module: used to collect data from the MNIST handwritten digit database to construct an initial data set;

2)数据分类模块:所述初始数据集中的包括训练集S、测试集Q,用于将训练集S的数据平均分为A、B、C三个训练子集;2) data classification module: the initial data set includes training set S and test set Q, for dividing the data of training set S into three training subsets of A, B, and C on average;

3)构建模型模块:用于采用一维卷积构建A、B、C、D四个基于ResNet网络的训练模型,并初始化模型参数;3) Building a model module: It is used to construct four ResNet-based training models of A, B, C, and D by using one-dimensional convolution, and initialize the model parameters;

4)模型训练模块:用于将A、B、C三个训练子集的数据分别输入A、B、C训练模型进行基于数据并行的分布式训练,将整个训练集S作为数据集D输入模型D中进行独立训练;4) Model training module: It is used to input the data of the three training subsets A, B, and C into the A, B, and C training models for distributed training based on data parallelism, and use the entire training set S as the dataset D input model Independent training in D;

5)参数输出模块:用于在基于ResNet网络的训练模型的分布式训练次数达到预设阈值时,输出模型参数,得到训练结果。5) Parameter output module: when the distributed training times of the training model based on the ResNet network reaches a preset threshold, output the model parameters to obtain the training result.

6)模型测试模块:用于将测试集Q数据分别输入A、B、C、D训练模型中进行测试,并记录各模型的测试准确率及耗时信息。6) Model testing module: used to input the test set Q data into the A, B, C, and D training models for testing, and record the testing accuracy and time-consuming information of each model.

本实施例还提供一种计算机设备,包括存储器、处理器及存储在存储器上并可在处理器上运行的计算机程序,其特征在于,所述处理器执行所述程序时实现上述一种手写数字体数据集分类中面向深度学习的分布式模型训练方法。This embodiment also provides a computer device, including a memory, a processor, and a computer program stored in the memory and running on the processor, wherein the processor implements the above-mentioned handwritten data when executing the program. A distributed model training method for deep learning in font dataset classification.

本实施例还提供一种计算机可读存储介质,其上存储有计算机程序,其特征在于,该程序被处理器执行时实现上述一种手写数字体数据集分类中面向深度学习的分布式模型训练方法。This embodiment also provides a computer-readable storage medium on which a computer program is stored, characterized in that, when the program is executed by a processor, the above-mentioned distributed model training for deep learning in the classification of handwritten digit datasets is implemented method.

实施例2:Example 2:

一种CWRU轴承数据集故障分类中面向深度学习的分布式模型训练方法、装置、计算机设备及和存储介质。A distributed model training method, device, computer equipment and storage medium oriented to deep learning in fault classification of CWRU bearing data set.

一种CWRU轴承数据集故障分类中面向深度学习的分布式模型训练方法,具体步骤如下:A distributed model training method for deep learning in fault classification of CWRU bearing dataset, the specific steps are as follows:

1)数据采集:从凯斯西储大学(CWRU)的轴承数据库中采集数据,构建初始数据集;1) Data collection: collect data from the bearing database of Case Western Reserve University (CWRU) to construct an initial data set;

所述CWRU轴承数据库包含了四种工况(0hp,1hp,2hp,3hp),每种工况下的转速依次为1797rpm,1772rpm,1750rpm以及1730rpm;每种工况下又包含了四种轴承状态,分别是正常状态、外环故障状态、内环故障状态以及滚动体故障状态,对于每种故障状态又包含了3种不同故障程度,分别是0.1778mm、0.3556mm、0.7112mm三种类别;本实施例将采用CWRU轴承数据集中采样率在48KHz频率下,负载为3hp时10类轴承状态中的驱动端加速度数据;如表5所示。The CWRU bearing database includes four working conditions (0hp, 1hp, 2hp, 3hp), and the rotation speed under each working condition is 1797rpm, 1772rpm, 1750rpm and 1730rpm in turn; each working condition also includes four bearing states , which are the normal state, the outer ring fault state, the inner ring fault state and the rolling element fault state. For each fault state, there are 3 different fault degrees, which are 0.1778mm, 0.3556mm, and 0.7112mm three categories; this The embodiment will use the acceleration data of the driving end in 10 types of bearing states when the sampling rate of the CWRU bearing data set is at a frequency of 48KHz and the load is 3hp; as shown in Table 5.

表5数据库样本信息表Table 5 Database sample information table

2)数据分类:从CWRU轴承数据库中提取的10类轴承状态中随机选取共24000个数据,平均分配到A、B、C三个训练集,并将全部采集的24000训练样本作为对照集D,从CWRU轴承数据库中提取3000测试样本构成测试集Q;2) Data classification: Randomly select a total of 24,000 data from the 10 types of bearing states extracted from the CWRU bearing database, and evenly distribute them to three training sets A, B, and C, and take all the 24,000 training samples collected as the control set D, Extract 3000 test samples from the CWRU bearing database to form the test set Q;

3)构建模型:如图2所示,采用一维卷积构建A、B、C、D四个基于ResNet网络的训练模型,并初始化模型参数;3) Building the model: As shown in Figure 2, four training models A, B, C, and D based on the ResNet network are constructed by one-dimensional convolution, and the model parameters are initialized;

所述ResNet网络包括依次连接的卷积输入层、四个残差块、池化层和全连接层,并在全连接层前设置Dropout,所述ResNet网络的参数如表6所示。The ResNet network includes a convolutional input layer, four residual blocks, a pooling layer, and a fully connected layer connected in sequence, and a dropout is set before the fully connected layer. The parameters of the ResNet network are shown in Table 6.

表6ResNet网络模型的参数Table 6 Parameters of the ResNet network model

所述基于ResNet网络的训练模型的初始参数包括训练规模、epoch、Dropout概率、Loss函数、Adam优化器参数,其如表7所示。The initial parameters of the training model based on the ResNet network include training scale, epoch, Dropout probability, Loss function, Adam optimizer parameters, which are shown in Table 7.

表7ResNet网络模型的超参数Table 7 Hyperparameters of the ResNet network model

4)模型训练:将训练集A、B、C的数据分别输入A、B、C三个训练模型进行基于数据并行的分布式训练,将训练集D输入训练模型D中进行独立训练;4) Model training: input the data of training sets A, B, and C into three training models A, B, and C respectively for distributed training based on data parallelism, and input training set D into training model D for independent training;

训练模型A、B、C分别进行了1次独立训练和6次基于参数融合的训练,其模型参数融合为:The training models A, B, and C have performed 1 independent training and 6 training based on parameter fusion respectively. The model parameters are fused as follows:

式中,ω是模型参数,表示执行完n个样本数据后计算得到的真实梯度,n表示总样本数量,mk表示第k个模型所含样本数量,表示第k个模型计算的梯度信息;where ω is the model parameter, Represents the true gradient calculated after executing n sample data, n represents the total number of samples, mk represents the number of samples contained in the kth model, Indicates the gradient information calculated by the kth model;

A、B、C三个训练模型每次训练10个epoch,总计迭代次数70次,对模型D独立训练70次;模型训练的精度曲线与损失值曲线分别如图7、8所示。The three training models A, B, and C are trained for 10 epochs each time, with a total of 70 iterations, and model D is independently trained 70 times; the accuracy curve and loss value curve of model training are shown in Figures 7 and 8, respectively.

在本发明实例中,基于数据并行的分布式训练包括两类节点,分别是管理节点与计算节点,二者分别对应边缘管控平台分布式离线计算服务中的汇聚节点与基础计算节点;管理节点主要负责参数同步,数据集会被拆分为多个独立子集存储在平台分布式对象存储系统中,在训练开始时会加载到各计算节点;训练开始时,各计算节点会使用管理节点统一传递的参数进行初始化,随后利用本地数据进行训练;每训练完成一个epoch会向管理节点同步梯度信息,梯度信息经管理节点融合后,会重新回传到各节点进行参数更新,参数更新完后便进行下一轮的训练。In the example of the present invention, the distributed training based on data parallelism includes two types of nodes, namely management nodes and computing nodes, which correspond to the aggregation nodes and basic computing nodes in the distributed offline computing service of the edge management and control platform respectively; the management nodes mainly Responsible for parameter synchronization, the data set will be divided into multiple independent subsets and stored in the platform distributed object storage system, and will be loaded to each computing node at the beginning of training; at the beginning of training, each computing node will use the management node to uniformly transmit the data. The parameters are initialized, and then the local data is used for training; each epoch after training is completed, the gradient information will be synchronized with the management node. After the gradient information is fused by the management node, it will be sent back to each node for parameter update. After the parameter update, the next step will be performed. one round of training.

5)参数输出:当基于ResNet网络的训练模型的分布式训练次数达到1次独立训练和6次基于参数融合的训练时,输出模型参数,得到训练结果;5) Parameter output: When the distributed training times of the training model based on the ResNet network reaches 1 independent training and 6 training based on parameter fusion, the model parameters are output to obtain the training results;

6)模型测试:将测试集Q数据分别输入A、B、C、D四个训练模型中进行测试,各模型的测试准确率及耗时信息如表8所示,各模型测试分类的混淆矩阵如图9所示。6) Model testing: Input the test set Q data into the four training models A, B, C, and D for testing. The testing accuracy and time-consuming information of each model are shown in Table 8. The confusion matrix of each model testing classification is shown in Table 8. As shown in Figure 9.

表8模型的测试结果及耗时信息Table 8 Test results and time-consuming information of the model

本实施例还提供一种CWRU轴承数据集故障分类中基于深度学习的分布式模型训练装置,包括:This embodiment also provides a distributed model training device based on deep learning in fault classification of CWRU bearing datasets, including:

1)数据采集模块:用于CWRU轴承数据库中采集数据,构建初始数据集;1) Data acquisition module: used to collect data in the CWRU bearing database and construct an initial data set;

2)数据分类模块:数据分类模块:所述初始数据集中的包括训练集S、测试集Q,用于将训练集S的数据平均分为A、B、C三个训练子集;2) data classification module: data classification module: the initial data set includes a training set S and a test set Q, and is used to divide the data of the training set S into three training subsets of A, B, and C on average;

3)构建模型模块:用于采用一维卷积构建A、B、C、D四个基于ResNet网络的训练模型,并初始化模型参数;3) Building a model module: It is used to construct four ResNet-based training models of A, B, C, and D by using one-dimensional convolution, and initialize the model parameters;

4)模型训练模块:用于将A、B、C三个训练子集的数据分别输入A、B、C三个训练模型进行基于数据并行的分布式训练,将整个训练集S作为数据集D输入模型D中进行独立训练;4) Model training module: used to input the data of the three training subsets A, B, and C into the three training models A, B, and C respectively for distributed training based on data parallelism, and use the entire training set S as the data set D Input into model D for independent training;

5)参数输出模块:用于在基于ResNet网络的训练模型的分布式训练次数达到预设阈值时,输出模型参数,得到训练结果。5) Parameter output module: when the distributed training times of the training model based on the ResNet network reaches a preset threshold, output the model parameters to obtain the training result.

6)模型测试模块:用于将测试集Q数据分别输入A、B、C、D四个训练模型中进行测试,并记录各模型的测试准确率及耗时信息。6) Model testing module: used to input the test set Q data into the four training models A, B, C, and D for testing, and record the testing accuracy and time-consuming information of each model.

本实施例还提供一种计算机设备,包括存储器、处理器及存储在存储器上并可在处理器上运行的计算机程序,其特征在于,所述处理器执行所述程序时实现上述一种CWRU轴承数据集故障分类中面向深度学习的分布式模型训练方法。This embodiment also provides a computer device, including a memory, a processor, and a computer program stored in the memory and running on the processor, wherein the processor implements the above-mentioned CWRU bearing when executing the program A distributed model training method for deep learning in dataset fault classification.

本实施例还提供一种计算机可读存储介质,其上存储有计算机程序,其特征在于,该程序被处理器执行时实现上述一种CWRU轴承数据集故障分类中面向深度学习的分布式模型训练方法。This embodiment also provides a computer-readable storage medium on which a computer program is stored, characterized in that, when the program is executed by the processor, the above-mentioned distributed model training for deep learning in fault classification of the CWRU bearing dataset is implemented method.

实施例3:Example 3:

一种MFPT轴承数据集故障分类中面向深度学习的分布式模型训练方法、装置、计算机设备及和存储介质。A distributed model training method, device, computer equipment and storage medium for deep learning in MFPT bearing data set fault classification.

一种MFPT轴承数据集故障分类中面向深度学习的分布式模型训练方法,具体步骤如下:A distributed model training method for deep learning in MFPT bearing data set fault classification, the specific steps are as follows:

1)数据采集:从美国机械故障预防技术学会(MFPT)轴承数据库中采集数据,构建初始数据集;1) Data collection: collect data from the bearing database of the American Society for Mechanical Failure Prevention Technology (MFPT) to construct an initial data set;

所述MFPT轴承数据集包含四类数据:第一类是三种正常情况,工况环境为负载270磅、转速25Hz、采样速率97.656sps,持续6秒;第二类是三种外环故障情况,对应工况与正常情况一致;第三类是7种工况下的外环故障,7种工况主要指负载的变化,负载依次是:25磅、50磅、100磅、150磅、200磅、250磅、300磅,其他参数一致(转速25Hz、采样速率48828sps,持续3秒);最后一类是7种工况下的内环故障,7种工况同样指负载的变化,负载依次是:0磅、50磅、100磅、150磅、200磅、250磅、300磅,其他参数一致(转速25Hz、采样速率48828sps,持续3秒);如表9所示。The MFPT bearing data set contains four types of data: the first type is three normal conditions, the working environment is a load of 270 pounds, a speed of 25Hz, a sampling rate of 97.656sps, lasting 6 seconds; the second type is three outer ring fault conditions , the corresponding working conditions are consistent with the normal conditions; the third category is the outer ring fault under 7 working conditions. The 7 working conditions mainly refer to the change of the load. The loads are: 25 pounds, 50 pounds, 100 pounds, 150 pounds, 200 pounds. lbs, 250 lbs, 300 lbs, other parameters are the same (rotation speed 25Hz, sampling rate 48828sps, lasting 3 seconds); the last category is the inner ring fault under 7 working conditions, the 7 working conditions also refer to the change of the load, and the load is in turn Yes: 0 lbs, 50 lbs, 100 lbs, 150 lbs, 200 lbs, 250 lbs, 300 lbs, other parameters are the same (rotation speed 25Hz, sampling rate 48828sps, lasting 3 seconds); as shown in Table 9.

表9数据库样本信息表Table 9 Database sample information table

2)数据分类:从MFPT轴承数据库中提取3类轴承状态中随机选取2160个数据,平均分配A、B、C、D、E五个训练集,并将全部采集的2160训练样本作为对照集F,从CWRU轴承数据库中提取1000个测试样本构成测试集Q;2) Data classification: 2160 data are randomly selected from 3 types of bearing states from the MFPT bearing database, and five training sets of A, B, C, D, and E are evenly distributed, and all the 2160 training samples collected are used as the control set F. , 1000 test samples are extracted from the CWRU bearing database to form the test set Q;

3)构建模型:如图2所示,采用一维卷积构建A、B、C、D、E、F六个基于ResNet网络的训练模型,并初始化模型参数;3) Model building: As shown in Figure 2, six training models A, B, C, D, E, and F based on the ResNet network are constructed by one-dimensional convolution, and the model parameters are initialized;

所述ResNet网络包括依次连接的卷积输入层、四个残差块、池化层和全连接层,并在全连接层前设置了Dropout,所述ResNet网络的参数表如表10所示。The ResNet network includes a convolutional input layer, four residual blocks, a pooling layer, and a fully connected layer connected in sequence, and a dropout is set before the fully connected layer. The parameter table of the ResNet network is shown in Table 10.

表10ResNet网络模型的参数Table 10 Parameters of the ResNet network model

所述基于ResNet网络的训练模型的初始参数包括训练规模、epoch、Dropout概率、Loss函数、Adam优化器参数,其如表11所示。The initial parameters of the training model based on the ResNet network include training scale, epoch, Dropout probability, Loss function, Adam optimizer parameters, which are shown in Table 11.

表11ResNet网络模型的超参数Table 11 Hyperparameters of the ResNet network model

4)模型训练:将训练集A、B、C、D、E的数据分别输入A、B、C、D、E五个训练模型进行基于数据并行的分布式训练,将训练集F输入训练模型F中进行独立训练;4) Model training: Input the data of the training set A, B, C, D, and E into the five training models A, B, C, D, and E respectively for distributed training based on data parallelism, and input the training set F into the training model Independent training in F;

训练模型A、B、C、D、E分别进行了1次独立训练和6次基于参数融合的训练,其模型参数融合为:The training models A, B, C, D, and E have performed 1 independent training and 6 training based on parameter fusion respectively. The model parameters are fused as follows:

式中,ω是模型参数,表示执行完n个样本数据后计算得到的真实梯度,n表示总样本数量,mk表示第k个模型所含样本数量,表示第k个模型计算的梯度信息;where ω is the model parameter, Represents the true gradient calculated after executing n sample data, n represents the total number of samples, mk represents the number of samples contained in the kth model, Indicates the gradient information calculated by the kth model;

A、B、C、D、E训练模型每次训练10个epoch,总计迭代次数70次,对模型F独立训练70次;模型训练的精度曲线与损失值曲线分别如图10、11所示。The A, B, C, D, and E training models are trained for 10 epochs each time, with a total of 70 iterations, and the model F is independently trained 70 times; the accuracy and loss curves of model training are shown in Figures 10 and 11, respectively.

在本发明实例中,基于数据并行的分布式训练包括两类节点,分别是管理节点与计算节点,二者分别对应边缘管控平台分布式离线计算服务中的汇聚节点与基础计算节点;管理节点主要负责参数同步,数据集会被拆分为多个独立子集存储在平台分布式对象存储系统中,在训练开始时会加载到各计算节点;训练开始时,各计算节点会使用管理节点统一传递的参数进行初始化,随后利用本地数据进行训练;每训练完成一个epoch会向管理节点同步梯度信息,梯度信息经管理节点融合后,会重新回传到各节点进行参数更新,参数更新完后便进行下一轮的训练。In the example of the present invention, the distributed training based on data parallelism includes two types of nodes, namely management nodes and computing nodes, which correspond to the aggregation nodes and basic computing nodes in the distributed offline computing service of the edge management and control platform respectively; the management nodes mainly Responsible for parameter synchronization, the data set will be divided into multiple independent subsets and stored in the platform distributed object storage system, and will be loaded to each computing node at the beginning of training; at the beginning of training, each computing node will use the management node to uniformly transmit the data. The parameters are initialized, and then the local data is used for training; each epoch after training is completed, the gradient information will be synchronized with the management node. After the gradient information is fused by the management node, it will be sent back to each node for parameter update. After the parameter update, the next step will be performed. one round of training.

5)参数输出:当基于ResNet网络的训练模型的分布式训练次数达到1次独立训练和6次基于参数融合的训练时,输出模型参数,得到训练结果;5) Parameter output: When the distributed training times of the training model based on the ResNet network reaches 1 independent training and 6 training based on parameter fusion, the model parameters are output to obtain the training results;

6)模型测试:将测试集Q数据分别输入A、B、C、D、E、F六个训练模型中进行测试,各模型的测试准确率及耗时信息如表12所示,各模型测试分类的混淆矩阵如图12所示。6) Model test: Input the test set Q data into the six training models A, B, C, D, E, and F for testing. The test accuracy and time-consuming information of each model are shown in Table 12. Each model is tested. The confusion matrix for classification is shown in Figure 12.

表12模型的测试结果及耗时信息Table 12 Test results and time-consuming information of the model

本实施例还提供一种MFPT轴承数据集故障分类中基于深度学习的分布式模型训练装置,包括:The present embodiment also provides a distributed model training device based on deep learning in MFPT bearing data set fault classification, including:

1)数据采集模块:用于从MFPT轴承数据库中采集数据,构建初始数据集;1) Data acquisition module: used to collect data from the MFPT bearing database and construct an initial data set;

2)数据分类模块:数据分类模块:所述初始数据集中的包括训练集S、测试集Q,用于将训练集S的数据平均分为A、B、C、E、F五个训练子集;2) Data classification module: data classification module: the initial data set includes training set S and test set Q, and is used to divide the data of training set S into five training subsets A, B, C, E, and F on average. ;

3)构建模型模块:用于采用一维卷积构建A、B、C、D、E、F六个基于ResNet网络的训练模型,并初始化模型参数;3) Building a model module: It is used to construct six training models based on ResNet network A, B, C, D, E, and F by using one-dimensional convolution, and initialize the model parameters;

4)模型训练模块:用于将A、B、C、D、E五个训练子集的数据分别输入A、B、C、D、E五个训练模型进行基于数据并行的分布式训练,将整个训练集S作为数据集F输入模型F中进行独立训练;4) Model training module: It is used to input the data of the five training subsets A, B, C, D, and E into the five training models of A, B, C, D, and E for distributed training based on data parallelism. The entire training set S is input into the model F as the data set F for independent training;

5)参数输出模块:用于在基于ResNet网络的训练模型的分布式训练次数达到预设阈值时,输出模型参数,得到训练结果。5) Parameter output module: when the distributed training times of the training model based on the ResNet network reaches a preset threshold, output the model parameters to obtain the training result.

6)模型测试模块:用于将测试集Q数据分别输入A、B、C、D、E、F训练模型中进行测试,并记录各模型的测试准确率及耗时信息。6) Model testing module: used to input the test set Q data into the A, B, C, D, E, F training models for testing, and record the testing accuracy and time-consuming information of each model.

本实施例还提供一种计算机设备,包括存储器、处理器及存储在存储器上并可在处理器上运行的计算机程序,其特征在于,所述处理器执行所述程序时实现上述一种MFPT轴承数据集故障分类中面向深度学习的分布式模型训练方法。This embodiment also provides a computer device, including a memory, a processor, and a computer program stored in the memory and running on the processor, characterized in that, when the processor executes the program, the above-mentioned MFPT bearing is implemented A distributed model training method for deep learning in dataset fault classification.

本实施例还提供一种计算机可读存储介质,其上存储有计算机程序,其特征在于,该程序被处理器执行时实现上述一种MFPT轴承数据集故障分类中面向深度学习的分布式模型训练方法。This embodiment also provides a computer-readable storage medium on which a computer program is stored, characterized in that, when the program is executed by a processor, the above-mentioned distributed model training for deep learning in fault classification of MFPT bearing datasets is implemented method.

本领域内的技术人员应明白,本申请的实施例可提供为方法、系统、或计算机程序产品。因此,本申请可采用完全硬件实施例、完全软件实施例、或结合软件和硬件方面的实施例的形式。而且,本申请可采用在一个或多个其中包含有计算机可用程序代码的计算机可用存储介质(包括但不限于磁盘存储器、CD-ROM、光学存储器等)上实施的计算机程序产品的形式。As will be appreciated by those skilled in the art, the embodiments of the present application may be provided as a method, a system, or a computer program product. Accordingly, the present application may take the form of an entirely hardware embodiment, an entirely software embodiment, or an embodiment combining software and hardware aspects. Furthermore, the present application may take the form of a computer program product embodied on one or more computer-usable storage media (including, but not limited to, disk storage, CD-ROM, optical storage, etc.) having computer-usable program code embodied therein.

本申请是参照根据本申请实施例的方法、设备(系统)、和计算机程序产品的流程图和/或方框图来描述的。应理解可由计算机程序指令实现流程图和/或方框图中的每一流程和/或方框、以及流程图和/或方框图中的流程和/或方框的结合。可提供这些计算机程序指令到通用计算机、专用计算机、嵌入式处理机或其他可编程数据处理设备的处理器以产生一个机器,使得通过计算机或其他可编程数据处理设备的处理器执行的指令产生用于实现在流程图一个流程或多个流程和/或方框图一个方框或多个方框中指定的功能的装置。The present application is described with reference to flowchart illustrations and/or block diagrams of methods, apparatus (systems), and computer program products according to embodiments of the present application. It will be understood that each flow and/or block in the flowchart illustrations and/or block diagrams, and combinations of flows and/or blocks in the flowchart illustrations and/or block diagrams, can be implemented by computer program instructions. These computer program instructions may be provided to the processor of a general purpose computer, special purpose computer, embedded processor or other programmable data processing device to produce a machine such that the instructions executed by the processor of the computer or other programmable data processing device produce Means for implementing the functions specified in a flow or flow of a flowchart and/or a block or blocks of a block diagram.

这些计算机程序指令也可存储在能引导计算机或其他可编程数据处理设备以特定方式工作的计算机可读存储器中,使得存储在该计算机可读存储器中的指令产生包括指令装置的制造品,该指令装置实现在流程图一个流程或多个流程和/或方框图一个方框或多个方框中指定的功能。These computer program instructions may also be stored in a computer-readable memory capable of directing a computer or other programmable data processing apparatus to function in a particular manner, such that the instructions stored in the computer-readable memory result in an article of manufacture comprising instruction means, the instructions The apparatus implements the functions specified in the flow or flow of the flowcharts and/or the block or blocks of the block diagrams.

这些计算机程序指令也可装载到计算机或其他可编程数据处理设备上,使得在计算机或其他可编程设备上执行一系列操作步骤以产生计算机实现的处理,从而在计算机或其他可编程设备上执行的指令提供用于实现在流程图一个流程或多个流程和/或方框图一个方框或多个方框中指定的功能的步骤。These computer program instructions can also be loaded on a computer or other programmable data processing device to cause a series of operational steps to be performed on the computer or other programmable device to produce a computer-implemented process such that The instructions provide steps for implementing the functions specified in the flow or blocks of the flowcharts and/or the block or blocks of the block diagrams.

最后应当说明的是:以上实施例仅用以说明本发明的技术方案而非对其限制,尽管参照上述实施例对本发明进行了详细的说明,所属领域的普通技术人员应当理解:依然可以对本发明的具体实施方式进行修改或者等同替换,而未脱离本发明精神和范围的任何修改或者等同替换,其均应涵盖在本发明的权利要求保护范围之内。Finally, it should be noted that the above embodiments are only used to illustrate the technical solutions of the present invention rather than to limit them. Although the present invention has been described in detail with reference to the above embodiments, those of ordinary skill in the art should understand that: the present invention can still be Modifications or equivalent replacements are made to the specific embodiments of the present invention, and any modifications or equivalent replacements that do not depart from the spirit and scope of the present invention shall be included within the protection scope of the claims of the present invention.

Claims (7)

Translated fromChinesePriority Applications (1)

| Application Number | Priority Date | Filing Date | Title |

|---|---|---|---|

| CN202210752982.4ACN115114982B (en) | 2022-06-28 | 2022-06-28 | A distributed model training method and related device for deep learning in edge computing |

Applications Claiming Priority (1)

| Application Number | Priority Date | Filing Date | Title |

|---|---|---|---|

| CN202210752982.4ACN115114982B (en) | 2022-06-28 | 2022-06-28 | A distributed model training method and related device for deep learning in edge computing |

Publications (2)

| Publication Number | Publication Date |

|---|---|

| CN115114982Atrue CN115114982A (en) | 2022-09-27 |

| CN115114982B CN115114982B (en) | 2025-06-03 |

Family

ID=83330963

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |

|---|---|---|---|

| CN202210752982.4AActiveCN115114982B (en) | 2022-06-28 | 2022-06-28 | A distributed model training method and related device for deep learning in edge computing |

Country Status (1)

| Country | Link |

|---|---|

| CN (1) | CN115114982B (en) |

Cited By (3)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| CN118537682A (en)* | 2024-07-24 | 2024-08-23 | 青岛云世纪信息科技有限公司 | Deep learning-based automatic mechanical arm training method and system and electronic equipment |

| CN119474222A (en)* | 2025-01-15 | 2025-02-18 | 南京信息工程大学 | A hierarchical and distributed edge data aggregation and reporting method and device |

| CN119830044A (en)* | 2025-03-17 | 2025-04-15 | 北京中科金财科技股份有限公司 | Artificial intelligence model training data set construction method and system |

Citations (13)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| CN109376615A (en)* | 2018-09-29 | 2019-02-22 | 苏州科达科技股份有限公司 | For promoting the method, apparatus and storage medium of deep learning neural network forecast performance |

| WO2020052169A1 (en)* | 2018-09-12 | 2020-03-19 | 深圳云天励飞技术有限公司 | Clothing attribute recognition detection method and apparatus |

| US20200097841A1 (en)* | 2018-09-21 | 2020-03-26 | Renovo Motors, Inc. | Systems and methods for processing vehicle data |

| CN111626407A (en)* | 2020-05-22 | 2020-09-04 | 中国科学院空天信息创新研究院 | Rapid reconstruction method and system for deep neural network model |

| CN111881958A (en)* | 2020-07-17 | 2020-11-03 | 上海东普信息科技有限公司 | License plate classification recognition method, device, equipment and storage medium |

| CN112183718A (en)* | 2020-08-31 | 2021-01-05 | 华为技术有限公司 | A deep learning training method and device for computing equipment |

| CN112528548A (en)* | 2020-11-27 | 2021-03-19 | 东莞市汇林包装有限公司 | Self-adaptive depth coupling convolution self-coding multi-mode data fusion method |

| US20210357256A1 (en)* | 2020-05-14 | 2021-11-18 | Hewlett Packard Enterprise Development Lp | Systems and methods of resource configuration optimization for machine learning workloads |

| CN113706550A (en)* | 2021-03-09 | 2021-11-26 | 腾讯科技(深圳)有限公司 | Image scene recognition and model training method and device and computer equipment |

| CN113792783A (en)* | 2021-09-13 | 2021-12-14 | 陕西师范大学 | A deep learning-based automatic recognition method and system for the face and face stage |

| CN114445909A (en)* | 2021-12-24 | 2022-05-06 | 深圳市大数据研究院 | Method, device, storage medium and device for training an automatic recognition model of cue words |

| CN114548356A (en)* | 2020-11-27 | 2022-05-27 | 华为技术有限公司 | Machine learning method, device and system |

| CN115114000A (en)* | 2022-06-28 | 2022-09-27 | 重庆大学 | Method and device for realizing multitask parallel computation in edge computation |

- 2022

- 2022-06-28CNCN202210752982.4Apatent/CN115114982B/enactiveActive

Patent Citations (13)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| WO2020052169A1 (en)* | 2018-09-12 | 2020-03-19 | 深圳云天励飞技术有限公司 | Clothing attribute recognition detection method and apparatus |

| US20200097841A1 (en)* | 2018-09-21 | 2020-03-26 | Renovo Motors, Inc. | Systems and methods for processing vehicle data |

| CN109376615A (en)* | 2018-09-29 | 2019-02-22 | 苏州科达科技股份有限公司 | For promoting the method, apparatus and storage medium of deep learning neural network forecast performance |

| US20210357256A1 (en)* | 2020-05-14 | 2021-11-18 | Hewlett Packard Enterprise Development Lp | Systems and methods of resource configuration optimization for machine learning workloads |

| CN111626407A (en)* | 2020-05-22 | 2020-09-04 | 中国科学院空天信息创新研究院 | Rapid reconstruction method and system for deep neural network model |

| CN111881958A (en)* | 2020-07-17 | 2020-11-03 | 上海东普信息科技有限公司 | License plate classification recognition method, device, equipment and storage medium |

| CN112183718A (en)* | 2020-08-31 | 2021-01-05 | 华为技术有限公司 | A deep learning training method and device for computing equipment |

| CN112528548A (en)* | 2020-11-27 | 2021-03-19 | 东莞市汇林包装有限公司 | Self-adaptive depth coupling convolution self-coding multi-mode data fusion method |

| CN114548356A (en)* | 2020-11-27 | 2022-05-27 | 华为技术有限公司 | Machine learning method, device and system |

| CN113706550A (en)* | 2021-03-09 | 2021-11-26 | 腾讯科技(深圳)有限公司 | Image scene recognition and model training method and device and computer equipment |

| CN113792783A (en)* | 2021-09-13 | 2021-12-14 | 陕西师范大学 | A deep learning-based automatic recognition method and system for the face and face stage |

| CN114445909A (en)* | 2021-12-24 | 2022-05-06 | 深圳市大数据研究院 | Method, device, storage medium and device for training an automatic recognition model of cue words |

| CN115114000A (en)* | 2022-06-28 | 2022-09-27 | 重庆大学 | Method and device for realizing multitask parallel computation in edge computation |

Non-Patent Citations (3)

| Title |

|---|

| HAGERTY, JR: "Deep Learning and Handcrafted Method Fusion: Higher Diagnostic Accuracy for Melanoma Dermoscopy Images", IEEE JOURNAL OF BIOMEDICAL AND HEALTH INFORMATICS, vol. 23, no. 4, 1 July 2019 (2019-07-01), pages 1385 - 1391, XP011733352, DOI: 10.1109/JBHI.2019.2891049* |

| 李维刚;谌竟成;范丽霞;谢璐;: "基于卷积神经网络的钢铁材料微观组织自动辨识", 钢铁研究学报, no. 01, 15 January 2020 (2020-01-15), pages 36 - 46* |

| 高敏: "面向边缘计算的分布式机器学习算法研究", 中国优秀硕士学位论文全文数据库 (信息科技辑), no. 1, 1 August 2020 (2020-08-01), pages 140 - 235* |

Cited By (4)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| CN118537682A (en)* | 2024-07-24 | 2024-08-23 | 青岛云世纪信息科技有限公司 | Deep learning-based automatic mechanical arm training method and system and electronic equipment |

| CN119474222A (en)* | 2025-01-15 | 2025-02-18 | 南京信息工程大学 | A hierarchical and distributed edge data aggregation and reporting method and device |

| CN119830044A (en)* | 2025-03-17 | 2025-04-15 | 北京中科金财科技股份有限公司 | Artificial intelligence model training data set construction method and system |

| CN119830044B (en)* | 2025-03-17 | 2025-06-10 | 北京中科金财科技股份有限公司 | Artificial intelligence model training data set construction method and system |

Also Published As

| Publication number | Publication date |

|---|---|

| CN115114982B (en) | 2025-06-03 |

Similar Documents

| Publication | Publication Date | Title |

|---|---|---|

| CN115114982A (en) | A distributed model training method and related device for deep learning in edge computing | |

| CN113609770B (en) | Rolling bearing RUL prediction method based on piecewise linear fitting HI and LSTM | |

| CN116502175A (en) | Method, device and storage medium for diagnosing fault of graph neural network | |

| CN109816032A (en) | Zero sample classification method and apparatus of unbiased mapping based on production confrontation network | |

| CN112241240B (en) | Method, apparatus and computer program product for parallel transmission of data | |

| JP2023534696A (en) | Anomaly detection in network topology | |

| CN114820279B (en) | Distributed deep learning method and device based on multiple GPUs and electronic equipment | |

| CN110705029A (en) | A flow field prediction method for oscillating flapping-wing energy harvesting system based on transfer learning | |

| EP3701403A1 (en) | Accelerated simulation setup process using prior knowledge extraction for problem matching | |

| CN116599857B (en) | Digital twin application system suitable for multiple scenes of Internet of things | |

| CN112990222A (en) | Image boundary knowledge migration-based guided semantic segmentation method | |

| CN110907177A (en) | Bearing fault diagnosis method based on layered extreme learning machine | |

| CN116975634B (en) | Micro-service extraction method based on program static attribute and graph neural network | |

| CN107944488A (en) | Long time series data processing method based on stratification depth network | |

| CN109894495B (en) | A method and system for anomaly detection of extruder based on energy consumption data and Bayesian network | |

| CN116007937A (en) | Intelligent fault diagnosis method and device for mechanical equipment transmission part | |

| CN112949711A (en) | Neural network model reusable training method and device for software-defined satellite | |

| CN119150870B (en) | An entity extraction method for constructing knowledge graph for mechanical fault diagnosis | |

| US20140324861A1 (en) | Block Partitioning For Efficient Record Processing In Parallel Computing Environment | |

| US20220138554A1 (en) | Systems and methods utilizing machine learning techniques for training neural networks to generate distributions | |

| CN118656273A (en) | Hard disk failure prediction and data migration method for low-quality data sets | |

| CN116910617B (en) | MCDLSTM-CNN-based chemical production process fault diagnosis method and system | |

| Luo et al. | Autosmart: An efficient and automatic machine learning framework for temporal relational data | |

| She et al. | A meta-transfer learning prediction method with few-shot data for the remaining useful life of rolling bearing | |

| CN111460321A (en) | Method and device for overlapping community search based on Node2Vec |

Legal Events

| Date | Code | Title | Description |

|---|---|---|---|

| PB01 | Publication | ||

| PB01 | Publication | ||

| SE01 | Entry into force of request for substantive examination | ||

| SE01 | Entry into force of request for substantive examination | ||

| GR01 | Patent grant | ||

| GR01 | Patent grant |