CN114973188A - Driving scene classification coding method and system thereof - Google Patents

- Publication number

- CN114973188A (application number CN202210473739.9)

- Authority

- CN

- China

- Prior art keywords

- scene

- classification

- coding

- interaction

- information

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Pending

Classifications

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V20/00—Scenes; Scene-specific elements

- G06V20/50—Context or environment of the image

- G06V20/56—Context or environment of the image exterior to a vehicle by using sensors mounted on the vehicle

- G06V20/58—Recognition of moving objects or obstacles, e.g. vehicles or pedestrians; Recognition of traffic objects, e.g. traffic signs, traffic lights or roads

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V10/00—Arrangements for image or video recognition or understanding

- G06V10/70—Arrangements for image or video recognition or understanding using pattern recognition or machine learning

- G06V10/764—Arrangements for image or video recognition or understanding using pattern recognition or machine learning using classification, e.g. of video objects

Landscapes

- Engineering & Computer Science (AREA)

- Theoretical Computer Science (AREA)

- Physics & Mathematics (AREA)

- General Physics & Mathematics (AREA)

- Multimedia (AREA)

- Artificial Intelligence (AREA)

- Health & Medical Sciences (AREA)

- Computer Vision & Pattern Recognition (AREA)

- Computing Systems (AREA)

- Databases & Information Systems (AREA)

- Evolutionary Computation (AREA)

- General Health & Medical Sciences (AREA)

- Medical Informatics (AREA)

- Software Systems (AREA)

- Traffic Control Systems (AREA)

Description

Technical field

The invention relates to the technical field of vehicle testing and simulation, and in particular to a driving scene classification and coding method and a system thereof.

Background

Testing is an important part of autonomous driving research and development and an important support for the development of autonomous driving technology. As high-level automated and connected systems for intelligent connected vehicles are steadily industrialized, the dependence on testing keeps deepening. Scenes are a very important part of an autonomous driving test system: the diversity, coverage, and typicality of the test scenes affect the accuracy of the test results, and thereby the assurance of autonomous driving safety and quality.

A scene is the combination of a driving occasion and a driving scenario. It is strongly shaped by the driving environment: factors such as roads, traffic, weather, and lighting together make up the scene concept. A scene is a comprehensive reflection of the environment and the driving behavior within a certain range of time and space; it describes external states such as roads, traffic facilities, meteorological conditions, and traffic participants, as well as the driving task and state of the ego vehicle.

At present, a large number of complex and varied scenes are used in developing and evaluating technologies for autonomous vehicles, and naturalistic driving data also contains many scenes. Tests of vehicle driving behavior, of functional safety, and of the safety of the intended functionality of autonomous driving often have to be analyzed in specific scenes. However, driving scenes are currently used in an incomplete and unsystematic way, so they cannot be exploited efficiently, and it is difficult to ensure the efficiency of developing and evaluating vehicle-related technologies.

Summary of the invention

The purpose of the present invention is to overcome the above defects of the prior art by providing a driving scene classification and coding method that classifies and encodes driving scenes from data collected by a vehicle, so that driving scenes can be used efficiently and the efficiency of developing and evaluating vehicle-related technologies is improved.

The purpose of the present invention can be achieved by the following technical solution: a driving scene classification and coding method, comprising the following steps:

S1. Acquire autonomous driving environment and scenario data;

S2. Based on the acquired data, identify the scene component elements and the scenario interaction information;

S3. Classify and encode the scene component elements and the scenario interaction information separately;

encode any other supplementary information of the scene accordingly;

S4. Combine and output the scene component element classification code, the scenario interaction information classification code, and the supplementary information code as the driving scene classification and coding result.
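Steps S2 to S4 can be pictured as a small pipeline that produces three code segments and joins them. The following is only a minimal sketch under stated assumptions: the names `DrivingSceneCode` and `classify_and_encode`, the callables passed in, and the `-` separator are hypothetical and do not come from the patent text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DrivingSceneCode:
    """Result of step S4: three code segments kept side by side (hypothetical type)."""
    component_segment: str            # from classifying scene component elements (S3)
    interaction_segment: str          # from classifying scenario interaction information (S3)
    supplementary_segment: str = ""   # optional supplementary information code

    def as_string(self, sep: str = "-") -> str:
        """Join the non-empty segments; the separator is an illustrative choice."""
        parts = [self.component_segment, self.interaction_segment, self.supplementary_segment]
        return sep.join(p for p in parts if p)


def classify_and_encode(record, identify, encode_components, encode_interactions, encode_supplement):
    """Run S2 to S4 on one record obtained in S1; all callables are placeholders."""
    components, interactions, extra = identify(record)   # S2: identify both kinds of content
    return DrivingSceneCode(                             # S3 (encode) and S4 (combine)
        encode_components(components),
        encode_interactions(interactions),
        encode_supplement(extra),
    )
```

Keeping the three segments as separate fields until output mirrors the idea that each segment is produced independently and only combined in the last step.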

Further, the scene component elements comprise dynamic and static elements distinguished by semantic features, specifically the scene area, the scene road features, the ego vehicle, the composition of traffic participants, and the weather.

Further, the scenario interaction information comprises interaction elements distinguished by the acting subject, specifically the traffic flow, the goal and state of the ego vehicle, and the interaction relationship between the ego vehicle and other traffic participants.

Further, step S3 specifically comprises the following steps:

S31. For the identified scene component elements, select the corresponding key variables according to the dynamic and static elements they contain, and perform multi-level classification;

encode the scene component element classification result according to the multi-level classification result of the dynamic and static elements and the preset coding data;

S32. For the identified scenario interaction information, select the corresponding key variables according to the interaction elements it contains, and perform classification and parameter grading;

encode the scenario interaction information classification result according to the interaction element classification result, the parameter grading result, and the preset coding data;

S33. Encode the supplementary information according to the other supplementary information of the scene, in combination with the preset coding data.

Further, step S31 specifically selects discrete key variables for multi-level classification, and the multi-level classification result of the dynamic and static elements comprises subclasses and the corresponding second-level subclasses.

Further, step S32 specifically selects discrete key variables for interaction element classification and continuous key variables for parameter grading.

A driving scene classification and coding system comprises a scene component element identification and classification module, a scenario interaction information identification and classification module, and a flexible code editing module. The scene component element identification and classification module and the scenario interaction information identification and classification module are connected to a first hierarchical coding module and a second hierarchical coding module respectively. Both hierarchical coding modules are connected to a type library and an attribute library, and the type library and the attribute library are each connected to an editing submodule. The scene component element identification and classification module is used to identify the dynamic and static scene component elements from the acquired autonomous driving environment and scenario data and to classify them at multiple levels;

the scenario interaction information identification and classification module is used to identify the scenario interaction elements from the acquired autonomous driving environment and scenario data and to perform interaction element classification and parameter grading;

the first hierarchical coding module is used to encode the multi-level classification result of the dynamic and static elements and to output the scene component element code segment;

the second hierarchical coding module is used to encode the interaction element classification and the parameter grading and to output the scenario interaction information code segment;

the flexible code editing module is used to encode the other supplementary information of the scene and to output the supplementary information code segment;

the type library and the attribute library are used to store the preset coding data;

the editing submodule is used to edit new coding data.

Further, the scenario interaction information identification and classification module comprises a parameter identification unit and a scenario interaction information classifier. The parameter identification unit is used to identify, from the acquired autonomous driving environment and scenario data, the relative position of each traffic participant and the specific parameters of its actions and state, which serve as the input of the scenario interaction information classifier. The output of the classifier is the interaction relationship between the ego vehicle and the other traffic participants, expressed as graded levels of the characteristic variables of that relationship.

Further, the scenario interaction information classifier is provided with a relative position judgment unit and an interaction relationship judgment unit connected in sequence. The relative position judgment unit is used to determine and output the relative position between the ego vehicle and the other traffic participants, and the interaction relationship judgment unit is used to determine and output the characteristic variables of the interaction relationship.

Further, in both the first hierarchical coding module and the second hierarchical coding module, the first layer is a chain structure and the second layer is a tree structure. The chain structure keeps every code segment and sub-code segment independent of the others, which simplifies encoding and identification; the tree structure increases the amount of information each code position can express.

Compared with the prior art, the present invention identifies the scene component elements and selects key variables according to the dynamic and static elements they contain in order to classify and encode them; it identifies the scenario interaction information in the scene and classifies and encodes it according to traffic conditions and interaction relationships; in addition, an attribute library can be established for the supplementary information involved, which is then encoded as well. This achieves the classification and coding of driving scenes from vehicle-collected data, allows driving scenes to be used efficiently, and substantially improves the utilization of vehicle-collected data.

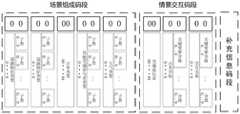

The present invention separately encodes the classification results of the scene component elements, the classification results of the scenario interaction elements, and the supplementary information, yielding a driving scene classification and coding result that contains a scene component element code segment, a scenario interaction information code segment, and a supplementary information code segment. The first two are rigid code segments: they express the key information of the scene and make classification, filtering, and storage convenient. The supplementary information code segment is a flexible code segment: it provides ample freedom, is extensible, and can express richer information. This ensures the reliability of the driving scene classification and coding result of the present invention.

Description of drawings

Fig. 1 is a schematic flow chart of the method of the present invention;

Fig. 2 is a schematic diagram of the scene classification process;

Fig. 3 is a structural diagram of the scene component elements in the embodiment;

Fig. 4 is a structural diagram of the scenario interaction information in the embodiment;

Fig. 5 is a schematic structural diagram of the scenario interaction information classifier;

Fig. 6 is a schematic diagram of the coding process;

Fig. 7 illustrates the structure of the scene classification code;

Fig. 8 illustrates the structure of the supplementary information code segment;

Fig. 9 is a schematic diagram of a video clip of naturalistic driving data in the embodiment.

Detailed description

The present invention is described in detail below with reference to the accompanying drawings and a specific embodiment.

Embodiment

As shown in Fig. 1, a driving scene classification and coding method comprises the following steps:

S1. Acquire autonomous driving environment and scenario data;

S2. Based on the acquired data, identify the scene component elements and the scenario interaction information;

S3. Classify and encode the scene component elements and the scenario interaction information separately;

encode any other supplementary information of the scene accordingly;

S4. Combine and output the scene component element classification code, the scenario interaction information classification code, and the supplementary information code as the driving scene classification and coding result.

In practice, the method starts from the raw data collected by the vehicle and classifies and encodes the scene expressed by each data segment according to the dynamic and static elements of the specific scene and the scenario interaction information:

Step 1: preprocess and label the scene data, and determine the data sources from which the dynamic and static scene elements and the scenario interaction information are obtained, for example by taking the scene information and the corresponding parameter values used for classification from existing driving data slices. Note that in practice the data sources of scene information are diverse;

Step 2: identify the scene component elements and select key variables for classification according to the dynamic and static elements they contain. These elements are distinguished by their semantic feature attributes and classified at multiple levels;

Step 3: identify the scenario interaction information in the scene and classify it according to traffic conditions and interaction relationships. These elements are distinguished by the acting subject, and interaction scenarios are classified according to the action parameters and their relationships; that is, classification is based on the interaction relationships between the traffic participants in the scene and the ego vehicle, and these relationships are in turn distinguished by their kinematic parameters.

The scene component elements comprise dynamic and static scene factors distinguished by semantic features, such as the scene area, the scene road features, the composition of traffic participants, and the weather. These elements capture the main components of the scene. The classification of each scene component element has subclasses and second-level subclasses, so the element can be classified concretely. For example, the scene area can be divided into highway, city, expressway, factory area, and so on, and highway is further divided into main road, on-ramp, off-ramp, and so on. The key variables are the categorical variables of the scene component elements and are discrete;

The scenario interaction information comprises scenario interaction elements distinguished by the acting subject, classified and graded according to the interaction relationship, such as the goal and state of the ego vehicle, the interaction relationship with other traffic participants together with the level of its characteristic variables, and the traffic flow state. These elements capture the main interaction content of the scene. The scenario interaction information has subclasses: the goal and state of the ego vehicle can be divided into lane keeping, lane change, left turn at an intersection, and so on; the interaction relationship can be divided into lead vehicle ahead, cut-in from an adjacent lane, cut-out ahead, lane-change overtaking, and so on. Different subclasses place different parameter requirements on the second-level classification (grading); a specific combination of key variables is used for the classification, and these key variables may be continuous.
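The two-level classification of a scene component element can be illustrated with a small lookup structure. This is a sketch only: the class names follow the examples in the text, but the dictionary `SCENE_AREA`, the function `classify_scene_area`, and the numbering (1-based, with 0 meaning that no second-level subclass was given) are assumptions made for illustration.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical two-level type library for the "scene area" element.
# The order of the entries, and hence their numbering, is assumed.
SCENE_AREA: Dict[str, List[str]] = {
    "highway": ["main road", "on-ramp", "off-ramp"],
    "city": [],
    "expressway": [],
    "factory area": [],
}


def classify_scene_area(area: str, sub: Optional[str] = None) -> Tuple[int, int]:
    """Return 1-based (first-level, second-level) indices; 0 means no second level given."""
    first = list(SCENE_AREA).index(area) + 1   # raises ValueError for an unknown area
    second = 0 if sub is None else SCENE_AREA[area].index(sub) + 1
    return first, second
```

For instance, `classify_scene_area("highway", "on-ramp")` yields the pair `(1, 2)` under this assumed numbering.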

After the scene classification above is complete, the classification result is encoded:

The scene code consists of the scene component element code segment, the scenario interaction information code segment, and the supplementary information code segment. The first two segments are rigid and must be backed by a corresponding type library or attribute library. The supplementary information code segment is flexible, and its type library and attribute library can be user-defined.

The scene is encoded as follows. For the scene component elements, a multi-level code mirrors the classification: one level for the first-level class and one for its second-level subclass. For the scenario interaction information, one code level expresses the class, and a further code level expresses the grade of the specific parameter or attribute.

In addition, code levels and specific subclasses can be added or removed to suit the scene at hand; that is, the type library and attribute library of the coding system can be adjusted freely, so the scheme is extensible both in the number of code levels and in the multi-level subclasses.

The flexible code segment carrying supplementary information can reuse the type libraries of the first two segments to supplement their descriptions, or a new library can be created to encode the supplementary information, for example the scene creation time, information about multiple traffic participants, scene trigger conditions, or other data added for a specific study.

In practice, the scheme can build on the coding conventions of enterprise resource management systems such as ERP, apply them to the specific context of scene coding, and design rigid and flexible code segments as required, which makes it a fully extensible, flexible coding scheme. In this coding method a computer can automatically identify elements from the classification library and encode them; the coding result is unambiguous and complete, and the supplementary information code segment remains fully extensible. The specific scheme is as follows:

It should first be noted that coding and classification are applied together: part of the code is compiled while classification is carried out. The first (outer) layer of the coding system is a chain structure and the second layer is a tree structure. The chain structure keeps every code segment and sub-code segment independent of the others, which simplifies encoding and identification; the tree structure increases the amount of information each code position can express.

Step A, corresponding to step 2 of the scene classification process above: design the scene component element code segment. It has five sub-code segments, corresponding to the scene area, the scene road features, the ego vehicle, the other traffic participants, and the weather. The sub-code segments are independent of one another.

Step B, corresponding to step 3 of the scene classification process above: design the scenario interaction information code segment. It has three sub-code segments, corresponding to the traffic flow information, the goal and state of the ego vehicle, and the interaction relationship with its parameter grading.

Step C, independent of the scene classification method: design the flexible code segment for the other supplementary information of the scene.

Regarding coding step A: each of the five sub-code segments in this segment has a two-digit code. The two digits form a tree structure and use decimal notation, so in theory each sub-code segment can hold 100 subclasses. The first digit gives the first-level subclass of the scene component element classification, and the second digit gives its second-level subclass.
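As an illustration of step A, the five two-digit sub-code segments can be concatenated in a fixed order. The sketch below assumes that order and the field names in `SUBSEGMENT_ORDER`; both are hypothetical, as is the function name `encode_component_segment`.

```python
from typing import Dict, Tuple

# Assumed order of the five sub-code segments; the names are illustrative.
SUBSEGMENT_ORDER = ("scene_area", "road_feature", "ego_vehicle", "other_participants", "weather")


def encode_component_segment(classes: Dict[str, Tuple[int, int]]) -> str:
    """Build the 10-digit segment from (first-level, second-level) digits per element."""
    digits = []
    for name in SUBSEGMENT_ORDER:
        first, second = classes[name]
        if not (0 <= first <= 9 and 0 <= second <= 9):
            raise ValueError(f"{name}: each level must fit in one decimal digit")
        digits.append(f"{first}{second}")   # two digits per sub-code segment
    return "".join(digits)
```

Because every sub-code segment has a fixed width, changing the class of one element only changes its own two digits, which is the independence the chain structure is meant to provide.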

Regarding coding step B: each of the three sub-code segments in this segment has a two-digit code. The two digits form a tree structure and use decimal notation, so in theory each sub-code segment can hold 100 subclasses. The first digit gives the first-level subclass of the interaction scenario classification, and the second digit gives its second-level subclass.
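With five two-digit sub-code segments from step A and three from step B, the rigid part of a scene code can be read back by position alone. The following sketch assumes that the two rigid segments are simply concatenated into 16 digits and uses hypothetical field names; neither detail is specified in the text.

```python
from typing import Dict, Tuple

# Assumed field order: five scene component elements, then three interaction elements.
COMPONENT_FIELDS = ("scene_area", "road_feature", "ego_vehicle", "other_participants", "weather")
INTERACTION_FIELDS = ("traffic_flow", "ego_goal_and_state", "interaction_relationship")


def decode_rigid_code(code: str) -> Dict[str, Tuple[int, int]]:
    """Split a 16-digit rigid code into (first-level, second-level) pairs per field."""
    fields = COMPONENT_FIELDS + INTERACTION_FIELDS
    if len(code) != 2 * len(fields) or any(c not in "0123456789" for c in code):
        raise ValueError("expected exactly 16 decimal digits")
    return {name: (int(code[2 * i]), int(code[2 * i + 1])) for i, name in enumerate(fields)}
```

Filtering stored scenes by one element, for example all scenes with a given scene area, then reduces to comparing two characters at a known offset.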

If the second-level subclass of the interaction scenario classification in coding step B is a continuous variable, the parameter space is used to grade the parameter, into at most 10 levels.
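Grading a continuous variable into at most 10 levels amounts to binning it against ordered boundaries. A minimal sketch, assuming the boundaries are supplied by the user (the text does not give any values) and that a value equal to a boundary falls into the higher level:

```python
from bisect import bisect_right
from typing import Sequence


def grade(value: float, boundaries: Sequence[float]) -> int:
    """Map a continuous value to a level 0..len(boundaries), i.e. at most 10 levels."""
    if len(boundaries) > 9:
        raise ValueError("9 boundaries give 10 levels, the most one decimal digit can hold")
    if list(boundaries) != sorted(boundaries):
        raise ValueError("boundaries must be in ascending order")
    # bisect_right counts how many boundaries are <= value.
    return bisect_right(boundaries, value)
```

With the purely illustrative boundaries `[1.0, 2.0, 3.0]`, the values 0.5, 1.0, 2.5, and 10.0 fall into levels 0, 1, 2, and 3 respectively.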

Regarding coding step C: the supplementary information code segment may be empty. Where necessary, sub-code segments can be added to it, and their number is not limited. Every sub-code segment has three digits: the first gives the type of the sub-code segment, the second gives its first-level classification code, and the third gives its second-level classification or grading code.
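The flexible segment can be sketched as a variable-length sequence of three-digit sub-code segments. The function names below are hypothetical, and the digit values in the usage are arbitrary.

```python
from typing import Iterable, List, Tuple


def encode_supplementary(items: Iterable[Tuple[int, int, int]]) -> str:
    """Encode (type, first-level, second-level or grade) triples; no items gives ''."""
    out = []
    for triple in items:
        kind, first, second = triple
        if any(not (0 <= d <= 9) for d in (kind, first, second)):
            raise ValueError("each position must be a single decimal digit")
        out.append(f"{kind}{first}{second}")
    return "".join(out)


def decode_supplementary(segment: str) -> List[Tuple[int, ...]]:
    """Split the segment back into three-digit sub-code segments."""
    if len(segment) % 3 != 0:
        raise ValueError("segment length must be a multiple of 3")
    return [tuple(int(c) for c in segment[i:i + 3]) for i in range(0, len(segment), 3)]
```

An empty input produces an empty segment, which matches the statement that this segment may be left empty.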

In addition, each type of sub-code segment in a rigid code segment has a predefined classification library or attribute library, which can also be extended and adjusted during classification and coding. A class or attribute already in the library is encoded directly; one that is not in the library can optionally be added to the library and then encoded.
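The "encode directly, or optionally add and then encode" behavior can be sketched as a small library class. `TypeLibrary`, its methods, and the `add_if_missing` flag are hypothetical names; the limit of 10 entries assumes one decimal digit per level, as described for the rigid code segments.

```python
from typing import Dict, Iterable


class TypeLibrary:
    """Hypothetical library mapping class names to one-digit codes."""

    def __init__(self, entries: Iterable[str] = ()):
        self._codes: Dict[str, int] = {}
        for entry in entries:
            self.add(entry)

    def add(self, name: str) -> int:
        """Add a class if it is new and return its code."""
        if name in self._codes:
            return self._codes[name]
        if len(self._codes) >= 10:
            raise ValueError("one decimal digit holds at most 10 entries")
        self._codes[name] = len(self._codes)   # next free digit
        return self._codes[name]

    def encode(self, name: str, add_if_missing: bool = False) -> int:
        """Encode a known class directly; optionally add an unknown one first."""
        if name not in self._codes:
            if not add_if_missing:
                raise KeyError(name)
            return self.add(name)
        return self._codes[name]
```

Making the addition opt-in keeps the rigid segments stable by default while still allowing the library to grow during classification.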

For the supplementary information code segment, information that is not in any existing type library or attribute library can be added according to what needs to be expressed, and a corresponding library is generated for encoding it.

Note that in this coding method the rigid code segments express the key information of the scene and make classification, filtering, and storage convenient, while the flexible code segment provides ample freedom, making the code extensible and able to express richer information.

This technical solution also provides a driving scene classification and coding system comprising a scene component element identification and classification module, a scenario interaction information identification and classification module, and a flexible code editing module. The scene component element identification and classification module and the scenario interaction information identification and classification module are connected to a first hierarchical coding module and a second hierarchical coding module respectively. Both hierarchical coding modules are connected to a type library and an attribute library, and the type library and the attribute library are each connected to an editing submodule.

The scene component element identification and classification module is used to identify the dynamic and static scene component elements from the acquired autonomous driving environment and scenario data and to classify them at multiple levels;

the scenario interaction information identification and classification module is used to identify the scenario interaction elements from the acquired autonomous driving environment and scenario data and to perform interaction element classification and parameter grading;

the first hierarchical coding module is used to encode the multi-level classification result of the dynamic and static elements and to output the scene component element code segment;

the second hierarchical coding module is used to encode the interaction element classification and the parameter grading and to output the scenario interaction information code segment;

the flexible code editing module is used to encode the other supplementary information of the scene and to output the supplementary information code segment;

the type library and the attribute library are used to store the preset coding data;

the editing submodule is used to edit new coding data.

在情景交互信息识别分类模块中,包含有情景交互信息分类器,当进行情景交互分类时,该分类器以自车或其他交通参与者的动作、相对位置以及运动状态的连续型关键变量等作为输入,以自车和其他交通参与者的交互关系作为输出,并使用该种交互关系的特征变量分级表达。In the contextual interaction information recognition and classification module, there is a contextual interaction information classifier. When performing contextual interaction classification, the classifier takes the actions, relative positions and continuous key variables of the movement state of the vehicle or other traffic participants as the The input takes the interaction between the vehicle and other traffic participants as the output, and uses the characteristic variables of this interaction to express hierarchically.

即:交互情景信息分类器的输入为各交通参与者的相对位置以及动作、状态的具体参数信息,这些信息由对应的参数识别模块从对应的数据源识别得到,如自车车速范围,自车航向角与车道线切线夹角等;表现为连续型。That is: the input of the interactive scene information classifier is the relative position of each traffic participant and the specific parameter information of the action and state, which is identified by the corresponding parameter identification module from the corresponding data source, such as the speed range of the own vehicle, the own vehicle The included angle between the heading angle and the tangent to the lane line, etc.; the performance is continuous.

Within the classifier, the relative position judgment module and the interaction relationship judgment module are the main modules that perform the classification: they determine the interaction relationship from the key variables and yield the interaction relationship classification result. The characteristic variable of that interaction relationship is then computed with the characteristic variable calculation function, and parameter grading is performed on it.

In summary, this technical solution systematically classifies and encodes scenes based on collected environmental data and scenario interaction data of driving scenes. The solution may be implemented in computer software and/or hardware and can generally be integrated into an electronic device to form a driving scene classifier whose results are encoded and stored.

The scene classification and coding method is based on existing vehicle driving data from any source. The driving data contain scene components, such as road and weather information, and traffic participant goals and parameters, such as the ego vehicle's goal and speed. Possible sources include, but are not limited to: on-board vision sensors (monocular or multi-camera), millimeter-wave radar, and lidar, which can provide both specific scene component information (traffic participant type, road type, weather conditions, etc.) and scenario interaction information (traffic participant motion parameters, traffic flow density, etc.); and on-board GPS, IMU, and similar sensors, which provide the motion state parameters of the ego vehicle or other traffic participants that make up the scenario interaction information. High-definition maps and roadside units can also supply relevant scene component or scenario interaction information.

The above applies to real driving. For simulated scenes, the simulator can export vehicle kinematic parameters through a data interface, and many current simulators can also export video or simulated data from the simulated vehicle's on-board sensors (cameras, radar, etc.). In addition, scene components are an important part of the simulation environment and are therefore relatively easy to obtain. Simulated scene data are thus sufficient for the scene classification method and coding system to be applied.

For a group of data slices or segments that correspond to the same scene but carry different information, the slices must be aligned by timestamp to ensure that the scene data are valid. Data slices for different driving scenes may differ in length, but all data slices for a given scene must cover the same time span. Differences in time span between scenes do not affect the applicability of the driving scene classification method.
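The timestamp alignment described above can be sketched as follows. This is a minimal illustration, not the patent's implementation: the `DataSlice` type, the source labels, and the choice to trim every slice to the shared window are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataSlice:
    source: str     # hypothetical label, e.g. "camera", "gps", "imu"
    t_start: float  # slice start time in seconds
    t_end: float    # slice end time in seconds


def common_window(slices):
    """Return the (start, end) interval covered by every slice, or None."""
    start = max(s.t_start for s in slices)
    end = min(s.t_end for s in slices)
    return (start, end) if start < end else None


def align(slices):
    """Trim all slices of one scene to their shared time span."""
    window = common_window(slices)
    if window is None:
        raise ValueError("slices do not overlap; the scene data are not valid")
    return [DataSlice(s.source, window[0], window[1]) for s in slices]


scene = [
    DataSlice("camera", 0.0, 10.0),
    DataSlice("gps", 1.0, 12.0),
    DataSlice("imu", 0.5, 9.0),
]
aligned = align(scene)
```

After `align`, every slice of the scene spans the same interval, while a different scene is free to have a different span.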

Figure 2 is a flowchart of the scene classification method in this embodiment. Given valid driving data segments, the scene components and scenario interaction elements are identified first.

Referring to the scene component structure diagram in Figure 3, the classification of the key variables of each element of the scene is identified for the road area, road features, ego vehicle, other traffic participants, and weather. For example, elements can be identified from video segments captured by on-board vision sensors, or environment composition information can be obtained from sensors such as on-board radar. This method does not restrict the specific element identification technique. Manual screening is feasible as the most basic approach, but to improve classification efficiency, machine learning is now commonly used to identify the relevant elements from video or image data. Such methods usually require manually labeled data for training, but they are easy to integrate into a scene classifier running on an electronic device.

Using a suitable classification method, classification results can be obtained from data that contain the scene components, such as video, images, or radar point clouds. Every scene component has subclasses; for all scene components other than the ego vehicle and temporary road conditions, the second-level subclass must also be identified so that the scene component classification is complete.

Referring to the scenario interaction element structure diagram in Figure 4, the classification of the key variables of each element is identified for the traffic flow, the ego vehicle's goal and state, and the interaction relationship with its graded characteristic variable. For example, the ego vehicle's action sequence can be identified from on-board GPS or IMU data to determine the ego vehicle's goal; the action sequences of other traffic participants can be obtained from on-board vision sensors or radar to determine their action types; and information about the surrounding traffic flow can be obtained from video or radar point cloud data. If a roadside unit can provide traffic flow information for the road section, the identification steps based on video and similar data can be reduced. Through these steps, the key variable values of the scenario interaction information are obtained directly or indirectly from on-board video or radar data.

Continuous variables such as the ego vehicle state and the interaction relationships with other traffic participants additionally require their specific data values for grading. The state or action parameters of a traffic participant differ according to its goal or action type; the required key variables can be determined from Table 1. The specific values of the key variables can be obtained from the on-board GPS or inertial navigation system of the ego vehicle or of the other vehicle. If other traffic participants have no such sensors, the values can be obtained from video data or radar point clouds using machine learning, as in scene component identification. Such methods are already quite accurate, and this approach is likewise easy to use in a scene classifier running on an electronic device.

Table 1. Example traffic participant states and key variables

Referring to the scenario interaction relationship classifier in Figure 5, the positions, actions, and other parameters of the ego vehicle and other traffic participants are passed through the relative position judgment module and the interaction relationship judgment module to obtain the categorical variable of the interaction relationship. Each interaction relationship also has a defined characteristic variable, which is computed by feeding the identified parameters into the characteristic variable calculation function; the grade of the characteristic variable for that interaction relationship is then obtained. This completes the application of the scene classification method and yields a specific scene classification result.
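The classifier pipeline just described (relative position judgment, interaction relationship judgment, characteristic variable calculation, grading) could be sketched roughly as below. The rule set, the relationship names, the parameter keys, and the grade boundaries are all hypothetical; the patent does not specify them.

```python
from bisect import bisect_right


def relative_position(ego_lane, other_lane):
    """Relative position judgment from lane indices (assumed encoding)."""
    gap = abs(ego_lane - other_lane)
    if gap == 0:
        return "same_lane"
    return "adjacent_lane" if gap == 1 else "distant_lane"


def interaction_relationship(position, ego_goal, other_action):
    """Interaction relationship judgment using illustrative rules only."""
    if (position, ego_goal, other_action) == ("adjacent_lane", "overtake", "straight"):
        return "straight_overtaking"
    if (position, ego_goal, other_action) == ("same_lane", "straight", "straight"):
        return "following"
    return "no_interaction"


# Characteristic variable calculation function per relationship (assumed).
FEATURE_FUNCTIONS = {
    "straight_overtaking": lambda p: p["lateral_gap_m"],
    "following": lambda p: p["longitudinal_gap_m"],
}

# Upper bounds of grades 1..4; anything above is grade 5 (assumed values).
GRADE_BOUNDS = {
    "straight_overtaking": [0.5, 1.0, 1.5, 2.0],
    "following": [10.0, 20.0, 40.0, 80.0],
}


def classify(ego_lane, other_lane, ego_goal, other_action, params):
    """Return (interaction relationship, grade of its characteristic variable)."""
    position = relative_position(ego_lane, other_lane)
    relation = interaction_relationship(position, ego_goal, other_action)
    if relation not in FEATURE_FUNCTIONS:
        return relation, None
    value = FEATURE_FUNCTIONS[relation](params)
    grade = bisect_right(GRADE_BOUNDS[relation], value) + 1
    return relation, grade


result = classify(1, 2, "overtake", "straight", {"lateral_gap_m": 1.3})
```

Keeping the characteristic variable function and the grade boundaries in per-relationship tables mirrors the idea that each interaction relationship defines its own characteristic variable.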

Figure 6 is a schematic diagram of the coding process for the scene classification result. Encoding the scene while it is being classified makes it convenient to store, manage, filter, and retrieve scenes systematically.

Coding operates on the driving environment and scenario data, that is, on the result of the scene classification above. As in the classification steps, the scene components and scenario interactions are encoded at multiple levels. Scene coding additionally involves a stage in which the classification library or attribute library is compiled in advance, and a step in which the supplementary information code is compiled.

Scene components are divided into road area, road features, ego vehicle, other traffic participants, and weather, which correspond to sub-code segments 1 to 5 of the coding system; each sub-code segment has two digits. Referring to Figure 7, the ego vehicle segment is a single-level code representing the ego vehicle type, assigned by reference to the corresponding coding table. All other segments are two-level codes: the first level is the subclass of the scene component, and the second level is the second-level subclass of that subclass, so this part of the code has a tree structure. Table 2 is an example of the two-level coding of scene components and lists the codes of some of them; given the scene classification result, the scene components are encoded by looking them up in the specified classification code table. Note that this table is only an example illustrating the coding system. It is an intuitive representation of the classification library or attribute library, not a restriction on the actual coding; in use, the libraries can be compiled and extended as needed.

Table 2. Example classification codes for scene components

Scenario interaction elements are divided into ego vehicle goal and state, interaction relationship, and traffic flow state, which correspond to sub-code segments 6 to 8 of the coding system. Each category code has two digits. Referring to Figure 7, the traffic flow state segment is a single-level code representing the traffic flow state type, assigned by reference to the corresponding coding table. All other segments are two-level codes: the first level is the subclass of the scenario interaction element, and the second level is the grade obtained by grading the specific parameter value of that subclass. Table 3 is an example of the two-level coding of scenario interaction elements and lists the codes of some of them; given the classification result for the scenario interaction elements from the scene classification step, they are encoded by looking them up in the specified classification code table. As above, this table is only an example illustrating the coding system. It is an intuitive representation of the classification library or attribute library, not a restriction on the coding system; in use, the libraries can be compiled and extended as needed.

Table 3. Example classification codes for scenario interaction elements

Referring to Figure 3, classification libraries or attribute libraries are created and modified both before and during the coding operation. During preparation, the user chooses whether to use the default library settings, and can build some of the libraries from existing information to enable automated processing during coding. If a category or attribute that is not contained in the existing classification or attribute library is encountered during coding, the library can be extended; the user can also configure this extension to happen automatically.
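One way to picture a library that is preset before coding and optionally extended during coding is the sketch below. The `TypeLibrary` class, its `auto_extend` flag, and the rule of assigning the next free integer code are assumptions for illustration; the patent leaves the library format open.

```python
class TypeLibrary:
    """Maps category labels to integer codes, with optional auto-extension."""

    def __init__(self, preset=None, auto_extend=False):
        self._codes = dict(preset or {})  # preset entries compiled before coding
        self.auto_extend = auto_extend    # user setting: extend without prompting

    def code_for(self, label):
        """Return the code for a label, extending the library if permitted."""
        if label in self._codes:
            return self._codes[label]
        if not self.auto_extend:
            raise KeyError(f"{label!r} is not in the library; extend it first")
        new_code = max(self._codes.values(), default=0) + 1
        self._codes[label] = new_code
        return new_code


road_types = TypeLibrary({"highway": 1, "urban_road": 2}, auto_extend=True)
known = road_types.code_for("urban_road")
added = road_types.code_for("rural_road")  # not preset, so it is appended
```

With `auto_extend=False`, an unknown label raises `KeyError` instead, which corresponds to the case where the user decides on each extension manually.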

After the scene composition element code segment and the scenario interaction information code segment have been compiled, the supplementary information code segment is compiled. It may contain any number of sub-code segments, each with three digits, as shown in Figure 8. The first digit of a sub-code segment indicates the segment type, which may supplement the scene composition or scenario interaction information or may be any other user-defined type. This digit has a corresponding classification library, which is user-defined; when compiling supplementary information, the user selects a type from it and then compiles the remaining digits. The second and third digits represent the subclass and the second-level subclass of that type, respectively; for some supplementary information types, the second-level subclass may instead be an attribute library formed by grading a continuous variable.

This embodiment uses naturalistic driving data collected in a ride comfort study as an example to illustrate a specific application of the technical solution.

First, the source and characteristics of the data. The data were collected while the vehicle was driving on urban roads or highways, using an on-board camera (with an associated processor that automatically identifies traffic participants, road type, lane lines, action parameters of other traffic participants, and similar information), on-board GPS, and an on-board IMU. The data include the ego vehicle's speed, acceleration, trajectory, and attitude, as well as video covering a forward horizontal field of view of about 120 degrees.

Based on the data types and the basic information needed for scene classification: road type, road features, weather, other traffic participants, traffic flow, and the actions and states of other traffic participants are obtained from the video; the ego vehicle type is known from the test records; and the ego vehicle's goal and state are obtained from the acceleration, speed, trajectory, and other information provided by the ego vehicle's GPS and IMU.

For classifying the scene components and compiling the corresponding code segment, the main data source is the on-board video.

Road type: the experiment covers two clearly defined road types, highway and urban road, and the second-level subclass of the road type is easy to determine from the video. In the video screenshot shown in Figure 9, the road type is urban road and the subclass is signalized intersection. Ego vehicle type: family sedan. Road features: straight road, with the second-level subclass being the rightmost lane of three lanes. Weather: sunny, with the second-level subclass being moderate lighting. Other traffic participants: the main participant with an interaction relationship is a truck.

To encode this segment, the relevant type libraries must first be compiled from the experiment information; the type libraries can also be defined and modified during classification and identification. Because the coding result depends on the user's type library settings, the following assignments are assumed. Road type: urban road is class 2 and intersection is subclass 3. Road features: straight road is class 2 and the rightmost lane of three lanes is subclass 6. Ego vehicle type: class 1. Weather: sunny is class 3 and moderate lighting is subclass 2. Other traffic participants: vehicle is class 1 and truck is subclass 2. The resulting code for this segment is 2326013212.
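The concatenation in this worked example can be reproduced with a short sketch. The function names are hypothetical, and the segment order follows the worked example (road type, road features, ego vehicle, weather, other traffic participants); the class and subclass numbers are the assumed library assignments stated above.

```python
def two_level(cls, subcls):
    """Two-level sub-code segment: one digit for the class, one for the subclass."""
    for digit in (cls, subcls):
        if not 0 <= digit <= 9:
            raise ValueError("each level must fit in a single digit")
    return f"{cls}{subcls}"


def single_level(cls):
    """Single-level sub-code segment, zero-padded to two digits."""
    if not 0 <= cls <= 99:
        raise ValueError("a single-level code must fit in two digits")
    return f"{cls:02d}"


def encode_scene_components(road_type, road_feature, ego_type, weather, other):
    """Concatenate the five scene component sub-code segments."""
    return (
        two_level(*road_type)
        + two_level(*road_feature)
        + single_level(ego_type)
        + two_level(*weather)
        + two_level(*other)
    )


code = encode_scene_components(
    road_type=(2, 3),     # urban road, intersection
    road_feature=(2, 6),  # straight road, rightmost of three lanes
    ego_type=1,           # ego vehicle type, class 1
    weather=(3, 2),       # sunny, moderate lighting
    other=(1, 2),         # vehicle, truck
)
```

Here `code` equals `"2326013212"`, matching the result given in the text.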

For classifying the scenario interaction information, the main data sources for the ego vehicle are the speed, acceleration, trajectory, and other information from the on-board GPS and IMU; the information on traffic flow and other traffic participants comes from the on-board video.

Traffic flow: the video shows that the traffic flow state is normal. Ego vehicle goal and state: the video shows that the ego vehicle's goal is straight-line overtaking, whose key variable is the straight-line speed; using the timestamps, the GPS data give an average ego vehicle speed of 62 km/h for the segment. Interaction relationship: applying the interaction relationship classifier, the other traffic participant is a truck located in the second lane from the right and the ego vehicle is located in the second lane from the right, so the positional relationship is adjacent-lane driving; the truck's action is going straight, which combined with the ego vehicle's goal gives the classification result straight-line overtaking. Looking this result up in the characteristic variable type library shows that the characteristic variable is the lateral distance between the vehicles, which is also the parameter involved; the parameter identification module gives a lateral distance of 1.3 m.

Once the scenario interaction information has been classified, the segment can be encoded. Again, the result depends on the classification library and attribute library settings. Note that the key variables of the ego vehicle and other traffic participants must be graded before encoding. Traffic flow: the normal traffic flow state is assumed to be 01. Ego vehicle goal and state: straight-line overtaking is assumed to be subclass 6, and the key variable, an ego vehicle speed of 62 km/h, falls in grade 4. Interaction relationship: straight-line overtaking is class 4, and the lateral distance falls in grade 3. The resulting code for this segment is 016443.
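The grading and concatenation for this segment can be sketched as follows. The text gives only the resulting grades, not the bin boundaries, so the equal-width bins used here (20 km/h for speed, 0.5 m for lateral distance) are assumptions chosen to reproduce those grades; the function names are likewise hypothetical.

```python
def grade_by_width(value, width, lower=0.0):
    """Grade 1 covers [lower, lower + width), grade 2 the next bin, and so on."""
    if value < lower:
        raise ValueError("value lies below the graded range")
    return int((value - lower) // width) + 1


def encode_interaction(traffic_flow, ego_goal, ego_grade, relation, relation_grade):
    """Concatenate the three scenario interaction sub-code segments."""
    return (
        f"{traffic_flow:02d}"          # single-level code, two digits
        + f"{ego_goal}{ego_grade}"     # subclass plus grade of its key variable
        + f"{relation}{relation_grade}"
    )


speed_grade = grade_by_width(62.0, width=20.0)  # assumed 20 km/h bins
gap_grade = grade_by_width(1.3, width=0.5)      # assumed 0.5 m bins

segment = encode_interaction(
    traffic_flow=1,  # normal traffic flow state
    ego_goal=6,      # straight-line overtaking (ego goal subclass)
    ego_grade=speed_grade,
    relation=4,      # straight-line overtaking (interaction class)
    relation_grade=gap_grade,
)
```

Under these assumed bins, `segment` equals `"016443"`, matching the result given in the text.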

This completes the classification of the scene.

For the coding part, a supplementary information code segment can still be compiled as needed. In this experiment, for example, the subject's expression of subjective comfort is one of the important results and must be retrieved during data processing and analysis, so it can be written into the supplementary information code segment. A sub-code segment for the expression of subjective comfort is defined with three digits, as described above. The first digit represents the sub-code segment type: subjective comfort expression is added to the sub-code segment type library, assumed here to be type 4, so this digit is 4. The second and third digits represent the duration and the peak magnitude of the subjective comfort expression, respectively; both are continuous and are therefore expressed as graded variables. Duration is graded from 1 s to 15 s in steps of 1.5 s, so an expression lasting 6 s is grade 4. Peak magnitude is divided into 9 grades (10%-20%, 20%-30%, and so on up to 90%-100%), so a peak of 35% is grade 3. The supplementary code segment is therefore 443, and its meaning is unambiguous.
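The two gradings in this example can be written out as a short sketch. The function names are hypothetical, and so is the clamp to grade 9: the stated duration range (1 s to 15 s in 1.5 s steps) spans slightly more than nine bins, and the text does not say how the top of the range is handled.

```python
import math


def duration_grade(seconds):
    """Grade a duration in 1.5 s steps starting at 1 s (grade 1 = 1.0 to 2.5 s)."""
    if not 1.0 <= seconds <= 15.0:
        raise ValueError("duration outside the graded range of 1 s to 15 s")
    # Clamp so that the grade always fits in one digit (assumption).
    return min(math.floor((seconds - 1.0) / 1.5) + 1, 9)


def peak_grade(percent):
    """Grade a peak magnitude in 10-point bands (grade 1 = 10% to 20%)."""
    if not 10.0 <= percent <= 100.0:
        raise ValueError("peak outside the graded range of 10% to 100%")
    # Exactly 100% is placed in the top band, grade 9 (assumption).
    return min(math.floor(percent / 10.0), 9)


def supplementary_segment(type_code, seconds, percent):
    """Three-digit sub-code segment: type, duration grade, peak grade."""
    return f"{type_code}{duration_grade(seconds)}{peak_grade(percent)}"


segment = supplementary_segment(4, seconds=6.0, percent=35.0)
```

For the values in the text, `segment` equals `"443"`.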

Note that, like the intelligent algorithm built into the camera, many mature machine learning algorithms can already obtain the type, action, speed, and other parameters of other traffic participants from video data; road type, weather conditions, and the like can likewise be identified automatically after training on relevant data. In addition, the data structure of GPS or IMU output is fixed, so programs for automated data processing are easy to write. When building the scene classification and coding system, automatic identification and calculation algorithms and systems can therefore be fully used to automate the work, minimizing manual involvement and improving classification efficiency.

Claims (10)

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202210473739.9A, CN114973188A (en) | 2022-04-29 | 2022-04-29 | Driving scene classification coding method and system thereof |


Publications (1)

| Publication Number | Publication Date |
|---|---|
| CN114973188A (en) | 2022-08-30 |

Family

ID=82978876

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |
|---|---|---|---|
| CN202210473739.9A (Pending), CN114973188A (en) | Driving scene classification coding method and system thereof | 2022-04-29 | 2022-04-29 |

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN114973188A (en) |



Legal Events

| Date | Code | Title | Description |
|---|---|---|---|
| | PB01 | Publication | |
| | SE01 | Entry into force of request for substantive examination | |