CN114968076A - Method, apparatus, medium, and program product for storage management - Google Patents

Method, apparatus, medium, and program product for storage management

- Publication number

- CN114968076A (application CN202110615806.1A)

- Authority

- CN

- China

- Prior art keywords

- data

- information

- access

- cache

- manager

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Pending

Classifications

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F12/00—Accessing, addressing or allocating within memory systems or architectures

- G06F12/02—Addressing or allocation; Relocation

- G06F12/08—Addressing or allocation; Relocation in hierarchically structured memory systems, e.g. virtual memory systems

- G06F12/0802—Addressing of a memory level in which the access to the desired data or data block requires associative addressing means, e.g. caches

- G06F12/0862—Addressing of a memory level in which the access to the desired data or data block requires associative addressing means, e.g. caches with prefetch

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F3/00—Input arrangements for transferring data to be processed into a form capable of being handled by the computer; Output arrangements for transferring data from processing unit to output unit, e.g. interface arrangements

- G06F3/06—Digital input from, or digital output to, record carriers, e.g. RAID, emulated record carriers or networked record carriers

- G06F3/0601—Interfaces specially adapted for storage systems

- G06F3/0602—Interfaces specially adapted for storage systems specifically adapted to achieve a particular effect

- G06F3/061—Improving I/O performance

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F12/00—Accessing, addressing or allocating within memory systems or architectures

- G06F12/02—Addressing or allocation; Relocation

- G06F12/08—Addressing or allocation; Relocation in hierarchically structured memory systems, e.g. virtual memory systems

- G06F12/0802—Addressing of a memory level in which the access to the desired data or data block requires associative addressing means, e.g. caches

- G06F12/0866—Addressing of a memory level in which the access to the desired data or data block requires associative addressing means, e.g. caches for peripheral storage systems, e.g. disk cache

- G06F12/0868—Data transfer between cache memory and other subsystems, e.g. storage devices or host systems

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F3/00—Input arrangements for transferring data to be processed into a form capable of being handled by the computer; Output arrangements for transferring data from processing unit to output unit, e.g. interface arrangements

- G06F3/06—Digital input from, or digital output to, record carriers, e.g. RAID, emulated record carriers or networked record carriers

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F3/00—Input arrangements for transferring data to be processed into a form capable of being handled by the computer; Output arrangements for transferring data from processing unit to output unit, e.g. interface arrangements

- G06F3/06—Digital input from, or digital output to, record carriers, e.g. RAID, emulated record carriers or networked record carriers

- G06F3/0601—Interfaces specially adapted for storage systems

- G06F3/0628—Interfaces specially adapted for storage systems making use of a particular technique

- G06F3/0655—Vertical data movement, i.e. input-output transfer; data movement between one or more hosts and one or more storage devices

- G06F3/0656—Data buffering arrangements

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F2212/00—Indexing scheme relating to accessing, addressing or allocation within memory systems or architectures

- G06F2212/60—Details of cache memory

- G06F2212/6024—History based prefetching

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06N—COMPUTING ARRANGEMENTS BASED ON SPECIFIC COMPUTATIONAL MODELS

- G06N20/00—Machine learning

Landscapes

- Engineering & Computer Science (AREA)

- Theoretical Computer Science (AREA)

- Physics & Mathematics (AREA)

- General Engineering & Computer Science (AREA)

- General Physics & Mathematics (AREA)

- Human Computer Interaction (AREA)

- Memory System Of A Hierarchy Structure (AREA)

Abstract

Description

Technical field

Embodiments of the present disclosure generally relate to the field of storage management and, more particularly, to a method, apparatus, medium, and program product for storage management.

Background

With the development of information technology, a variety of storage devices have emerged. In general, a storage device cannot offer both large capacity and high input/output (I/O) or access performance. Specifically, bandwidth trades off against latency and capacity: the higher a storage device's bandwidth, the lower its latency and the smaller its capacity. For this reason, a small-capacity, high-speed storage device can be used to cache data fetched from a large-capacity, low-speed storage device, improving the access performance of the overall system. However, current caching schemes are inefficient, complex, and difficult to update.

Summary of the invention

In general, embodiments of the present disclosure provide a storage management scheme that improves storage performance, access efficiency, and user experience.

In a first aspect of the present disclosure, a storage management method is provided. The method includes: a manager in an application layer obtaining access information, the access information relating to an access request received by an operating system from the application layer; the manager determining, based on the access information, data information of potentially accessed data of the application layer; and the manager making the data information available to a driver in the operating system, so that the driver loads the potentially accessed data into a cache based on the data information. For example, the potentially accessed data may be associated with the data targeted by the access request, because it is predicted from that data. In this way, the manager can use the access information to predict data information about data that is likely to be accessed, and the driver can prefetch that data based on the data information, improving storage performance, access efficiency, and user experience.
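The three manager-side steps of the first aspect can be sketched as follows. This is a minimal illustration rather than the disclosed implementation: the `AccessInfo`, `DataInfo`, and `Manager` names, the `publish` callback, and the `next_block` placeholder predictor are all assumptions introduced here.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class AccessInfo:
    """One access request as reported by the driver (hypothetical layout)."""
    address: int
    size: int


@dataclass(frozen=True)
class DataInfo:
    """Data the application layer is predicted to access next."""
    address: int
    size: int


class Manager:
    """Application-layer manager: obtain access info, predict, publish."""

    def __init__(self, predict: Callable[[AccessInfo], DataInfo],
                 publish: Callable[[DataInfo], None]) -> None:
        self._predict = predict
        self._publish = publish

    def handle(self, info: AccessInfo) -> DataInfo:
        data_info = self._predict(info)  # determine the data information
        self._publish(data_info)         # make it available to the driver
        return data_info


def next_block(info: AccessInfo) -> DataInfo:
    """Placeholder predictor: assume the adjacent block is accessed next."""
    return DataInfo(address=info.address + info.size, size=info.size)


# Stand-in for "making the data information available": append to a list.
published: List[DataInfo] = []
manager = Manager(predict=next_block, publish=published.append)
manager.handle(AccessInfo(address=4096, size=512))
```

Passing the predictor in as a callable mirrors the idea that the prediction logic lives in the application layer and can be swapped without touching the driver.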

In some embodiments, obtaining the access information includes: in response to receiving a notification about the access information from the driver, obtaining the access information from a first buffer in memory, the first buffer being maintained by the driver for storing the access information. In this way, the manager can conveniently obtain the access information from a driver-maintained buffer on the basis of the driver's notification.

In some embodiments, determining the data information includes: applying the access information to a trained predictor to obtain the data information, the predictor having been trained on training access information and on training data information associated with the training access information. The predictor is a model that, given current access information, predicts information about the data likely to be accessed next. The manager in the application layer may have a trained predictor deployed on it, or may obtain one, and uses the predictor to determine the data information. During training, historical access information may serve as the training access information, and historical data information associated with it may serve as the training data information. As an example, the historical data information may be information associated with the access operations performed after the access operation corresponding to the historical access information, such as the address and size of the data accessed by those later operations. The predictor may be implemented with any suitable prediction technique, such as random forests, least-squares polynomial fitting, univariate linear regression, multiple linear regression, nonlinear methods, Markov chain prediction, data mining, or neural networks. In this way, the data information can be determined accurately and efficiently using the trained predictor.
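As one concrete instance of the techniques listed above, a first-order Markov chain predictor can be trained on a history of accessed addresses. The sketch below is illustrative only: the `MarkovPredictor` class and its methods are hypothetical names, and it predicts only the next address, whereas the data information described above may also include a size.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional, Sequence


class MarkovPredictor:
    """First-order Markov chain over accessed addresses."""

    def __init__(self) -> None:
        # transitions[a][b] counts how often address b followed address a.
        self.transitions: Dict[int, Counter] = defaultdict(Counter)

    def train(self, history: Sequence[int]) -> None:
        """Learn from historical accesses: each access is paired with the next."""
        for current, following in zip(history, history[1:]):
            self.transitions[current][following] += 1

    def predict(self, current: int) -> Optional[int]:
        """Return the most frequent successor of `current`, or None if unseen."""
        followers = self.transitions.get(current)
        if not followers:
            return None
        return followers.most_common(1)[0][0]


predictor = MarkovPredictor()
predictor.train([0, 8, 16, 0, 8, 16, 0, 8, 24])
```

Here the historical sequence plays the role of the training access information, and each address's successor plays the role of the associated training data information.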

In some embodiments, determining the data information includes: determining the data information based on the access information and on cache reclamation information obtained from memory. For example, the manager may use the cache reclamation information to determine how the cache is being used, and thereby decide whether to enable or disable prefetching, or which prefetch policy to apply when prefetching is enabled; it then determines the data information from the access information according to the chosen prefetch policy. In this way, the manager can accurately predict the data information of the potentially accessed data by combining the cache reclamation information with the access information.

In some embodiments, the cache reclamation information includes at least one of: information associated with the used portion of the cache when the cache is reclaimed; information associated with the unused portion of the cache when the cache is reclaimed; and information associated with overwritten data in the cache when the cache is reclaimed. In this way, the manager can accurately predict the data information of the potentially accessed data while taking into account various information related to cache reclamation.
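One way a manager might turn cache reclamation information into a prefetch decision is sketched below. The text does not specify how the decision is made, so everything here is an assumption: the `ReclaimInfo` fields, the reading of "used" as bytes that were accessed before being reclaimed, the threshold values, and the three policy names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReclaimInfo:
    """Hypothetical summary of one cache reclamation pass."""
    used_bytes: int         # reclaimed data that had been accessed
    unused_bytes: int       # reclaimed data that was never accessed
    overwritten_bytes: int  # reclaimed data that had been rewritten (not used below)


def choose_prefetch_policy(info: ReclaimInfo,
                           disable_below: float = 0.25,
                           aggressive_above: float = 0.75) -> str:
    """Pick a policy from the share of reclaimed data that was actually used."""
    reclaimed = info.used_bytes + info.unused_bytes
    if reclaimed == 0:
        return "conservative"  # no evidence yet, so stay cautious
    used_ratio = info.used_bytes / reclaimed
    if used_ratio < disable_below:
        return "disabled"      # most cached data was wasted
    if used_ratio > aggressive_above:
        return "aggressive"    # cached data is paying off
    return "conservative"
```

A low used share suggests that prefetched data is being evicted before it is read, which is the kind of signal that could justify disabling prefetching.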

In some embodiments, the method further includes: after determining the data information, the manager sending a notification about the data information to the driver, so that the driver obtains the data information, based on the notification, from a second buffer in memory, the second buffer being maintained by the driver for storing the data information. In this way, the driver can conveniently obtain the data information from another buffer that it maintains, and load the potentially accessed data into the cache based on it.

In some embodiments, the access information includes at least one of: the time at which the access request was initiated; the address of the data targeted by the access request; the size of the data targeted by the access request; the number of access requests that hit, a hit being an access request whose target data has already been loaded into the cache; and the proportion of access requests that hit. In this way, the manager can accurately predict the data information of the potentially accessed data while taking into account various information related to the access requests.
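The hit count and hit proportion listed above can be derived from per-request records. The following is a small sketch under assumed names: `AccessRecord` and `hit_statistics` are hypothetical, and cache contents are modeled simply as a set of addresses.

```python
from dataclasses import dataclass
from typing import Iterable, Set, Tuple


@dataclass(frozen=True)
class AccessRecord:
    """Per-request fields named in the text: time, address, size."""
    timestamp: float
    address: int
    size: int


def hit_statistics(records: Iterable[AccessRecord],
                   cached_addresses: Set[int]) -> Tuple[int, float]:
    """Return (number of hits, proportion of hits) for the given requests."""
    records = list(records)
    hits = sum(1 for record in records if record.address in cached_addresses)
    ratio = hits / len(records) if records else 0.0
    return hits, ratio


sample = [
    AccessRecord(0.0, 0, 512),
    AccessRecord(0.1, 512, 512),
    AccessRecord(0.2, 1024, 512),
    AccessRecord(0.3, 0, 512),
]
stats = hit_statistics(sample, cached_addresses={0})
```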

In some embodiments, the data information includes at least one of: the address of the potentially accessed data, and the size of the potentially accessed data. In this way, the driver can conveniently fetch the potentially accessed data.

In some embodiments, the potentially accessed data is stored in a storage device, and determining the data information includes: determining the data information in response to determining that the number of access requests from the application layer is below the maximum throughput of the storage device. In this way, prefetching can be disabled or enabled according to load, for better performance.
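The load condition above amounts to a guard in front of the prediction step. In this sketch, `determine_data_info`, `next_extent`, and the idea of comparing a per-interval request count against a per-interval throughput limit are assumptions; the text does not state the units or the measurement window.

```python
from typing import Callable, Optional, Tuple

Extent = Tuple[int, int]  # (address, size)


def determine_data_info(last_access: Extent,
                        request_count: int,
                        max_throughput: int,
                        predict: Callable[[Extent], Extent]) -> Optional[Extent]:
    """Predict only while the storage device still has headroom."""
    if request_count >= max_throughput:
        return None  # device saturated: skip prefetching for now
    return predict(last_access)


def next_extent(access: Extent) -> Extent:
    """Placeholder predictor: the extent that directly follows the last one."""
    address, size = access
    return (address + size, size)


light_load = determine_data_info((0, 4096), 200, 1000, next_extent)
heavy_load = determine_data_info((0, 4096), 1000, 1000, next_extent)
```

Skipping prediction under heavy load keeps prefetch traffic from competing with the application's own requests for the device's bandwidth.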

In a second aspect of the present disclosure, a storage management method is provided, including: a driver in an operating system determining, in response to the operating system receiving an access request from an application layer, access information related to the access request; the driver obtaining data information of potentially accessed data of the application layer, the data information having been determined by a manager in the application layer based on the access information; and the driver loading the potentially accessed data into a cache based on the data information. In this way, the driver can prefetch data that is likely to be accessed, based on the data information determined by the manager, improving storage performance, access efficiency, and user experience.

In some embodiments, the method further includes: after determining the access information, the driver sending a notification about the access information to the manager, so that the manager obtains the access information, based on the notification, from a first buffer in memory, the first buffer being maintained by the driver for storing the access information. In this way, the manager can conveniently obtain the access information from a driver-maintained buffer on the basis of the driver's notification.

In some embodiments, obtaining the data information includes: in response to receiving a notification about the data information from the manager, obtaining the data information from a second buffer in memory, the second buffer being maintained by the driver for storing the data information. In this way, the driver can conveniently obtain the data information from another buffer that it maintains, and load the potentially accessed data into the cache based on it.
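The exchange through the two driver-maintained buffers can be modeled in a single process as below. This is a toy sketch, not kernel code: the `Driver` class, its method names, the use of `deque` objects for the buffers, plain callbacks for notifications, and the next-extent prediction are all assumptions made for illustration.

```python
from collections import deque
from typing import Callable, Deque, Dict, Tuple

Extent = Tuple[int, int]  # (address, size)


class Driver:
    """Toy driver that owns both buffers and a cache keyed by address."""

    def __init__(self, backing: Dict[int, bytes]) -> None:
        self.backing = backing                       # stands in for the storage device
        self.cache: Dict[int, bytes] = {}
        self.access_buffer: Deque[Extent] = deque()  # first buffer: access information
        self.data_buffer: Deque[Extent] = deque()    # second buffer: data information
        self.notify_manager: Callable[[], None] = lambda: None

    def on_access_request(self, address: int, size: int) -> None:
        """Record access information, then notify the manager."""
        self.access_buffer.append((address, size))
        self.notify_manager()

    def on_data_info_notification(self) -> None:
        """Drain the second buffer and load the named data into the cache."""
        while self.data_buffer:
            address, _size = self.data_buffer.popleft()
            if address in self.backing:
                self.cache[address] = self.backing[address]


def make_manager(driver: Driver) -> Callable[[], None]:
    """Manager callback: read the first buffer, predict, fill the second buffer."""
    def on_notification() -> None:
        address, size = driver.access_buffer.popleft()
        driver.data_buffer.append((address + size, size))  # placeholder prediction
        driver.on_data_info_notification()                 # notify the driver
    return on_notification


driver = Driver(backing={0: b"a", 512: b"b", 1024: b"c"})
driver.notify_manager = make_manager(driver)
driver.on_access_request(0, 512)
```

After the request for address 0, the extent at address 512 is already in the cache, so a subsequent sequential read would hit.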

In some embodiments, the data information is determined by the manager based on the access information and on cache reclamation information obtained from memory. For example, the manager may use the cache reclamation information to determine how the cache is being used, and thereby decide whether to enable or disable prefetching, or which prefetch policy to apply when prefetching is enabled; it then determines the data information from the access information according to the chosen prefetch policy. In this way, the manager can accurately predict the data information of the potentially accessed data by combining the cache reclamation information with the access information.

In some embodiments, the cache reclamation information includes at least one of: information associated with the used portion of the cache when the cache is reclaimed; information associated with the unused portion of the cache when the cache is reclaimed; and information associated with overwritten data in the cache when the cache is reclaimed. In this way, the manager can accurately predict the data information of the potentially accessed data while taking into account various information related to cache reclamation.

In some embodiments, the access information includes at least one of: the time at which the access request was initiated; the address of the data targeted by the access request; the size of the data targeted by the access request; the number of access requests that hit, a hit being an access request whose target data has already been loaded into the cache; and the proportion of access requests that hit. In this way, the manager can accurately predict the data information of the potentially accessed data while taking into account various information related to the access requests.

In some embodiments, the data information includes at least one of: the address of the potentially accessed data, and the size of the potentially accessed data. In this way, the driver can conveniently fetch the potentially accessed data.

In some embodiments, the potentially accessed data is stored in a storage device, and the data information is determined by the manager in response to determining that the number of access requests from the application layer is below the maximum throughput of the storage device. In this way, prefetching can be disabled or enabled according to load, for better performance.

In a third aspect of the present disclosure, an electronic device is provided. The electronic device includes at least one processing unit and at least one memory. The at least one memory is coupled to the at least one processing unit and stores instructions for execution by the at least one processing unit. The instructions, when executed by the at least one processing unit, cause the device to implement the method of the first aspect.

In a fourth aspect of the present disclosure, an electronic device is provided. The electronic device includes at least one processing unit and at least one memory. The at least one memory is coupled to the at least one processing unit and stores instructions for execution by the at least one processing unit. The instructions, when executed by the at least one processing unit, cause the device to implement the method of the second aspect.

In a fifth aspect of the present disclosure, a computer-readable storage medium is provided. A computer program is stored on the computer-readable storage medium, and the computer program, when executed by a processor, implements the method of the first aspect.

In a sixth aspect of the present disclosure, a computer-readable storage medium is provided. A computer program is stored on the computer-readable storage medium, and the computer program, when executed by a processor, implements the method of the second aspect.

In a seventh aspect of the present disclosure, a computer program product is provided. The computer program product includes instructions that, when executed by a processor, cause an electronic device to perform the method according to the first aspect.

In an eighth aspect of the present disclosure, a computer program product is provided. The computer program product includes instructions that, when executed by a processor, cause an electronic device to perform the method according to the first aspect.

It should be understood that the content described in this Summary is not intended to identify key or essential features of the embodiments of the present disclosure, nor is it intended to limit the scope of the present disclosure. Other features of the present disclosure will become readily understood from the following description.

附图说明Description of drawings

结合附图并参考以下详细说明,本公开各实施例的上述和其他特征、优点及方面将变得更加明显。在附图中,相同或相似的附图标记表示相同或相似的元素,其中:The above and other features, advantages and aspects of various embodiments of the present disclosure will become more apparent when taken in conjunction with the accompanying drawings and with reference to the following detailed description. In the drawings, the same or similar reference numbers refer to the same or similar elements, wherein:

图1示出了示例三级存储结构的示意图;Figure 1 shows a schematic diagram of an example tertiary storage structure;

图2示出了示例传统存储管理环境的示意图;Figure 2 shows a schematic diagram of an example conventional storage management environment;

图3示出了本公开的实施例能够实现于其中的示例存储管理环境的示意图;3 shows a schematic diagram of an example storage management environment in which embodiments of the present disclosure can be implemented;

图4示出了根据本公开的一些实施例的存储管理过程的示意图;4 shows a schematic diagram of a storage management process according to some embodiments of the present disclosure;

图5示出了示例传统缓存过程的泳道图;Figure 5 shows a swimlane diagram of an example conventional caching process;

图6示出了根据本公开的一些实施例的具有预取的示例缓存过程的泳道图;6 illustrates a swimlane diagram of an example caching process with prefetching in accordance with some embodiments of the present disclosure;

图7示出了根据本公开的一些实施例的在管理器处执行的存储管理过程的流程图;7 illustrates a flow diagram of a storage management process performed at a manager in accordance with some embodiments of the present disclosure;

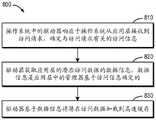

图8示出了根据本公开的一些实施例的在驱动器处执行的存储管理过程的流程图;以及Figure 8 illustrates a flow diagram of a storage management process performed at a drive in accordance with some embodiments of the present disclosure; and

图9示出了适合实现本公开的实施例的示例设备的框图。9 illustrates a block diagram of an example device suitable for implementing embodiments of the present disclosure.

Detailed description

The principles of the present disclosure will now be described with reference to some example embodiments. It should be understood that these embodiments are described for illustrative purposes only, to help those skilled in the art understand and implement the present disclosure, and do not imply any limitation on its scope. The disclosure described herein can be implemented in various ways other than those described below.

In the following description and claims, unless otherwise defined, all technical and scientific terms used herein have the same meaning as commonly understood by one of ordinary skill in the art to which this disclosure belongs.

As used herein, the singular forms "a", "an", and "the" are intended to include the plural forms as well, unless the context clearly indicates otherwise. The term "including" and its variants are to be read as open-ended terms meaning "including but not limited to". The term "based on" is to be read as "based at least in part on". The terms "one embodiment" and "an embodiment" are to be read as "at least one embodiment". The term "another embodiment" is to be read as "at least one other embodiment". The terms "first", "second", and so on may refer to different or to the same objects. Other definitions, explicit and implicit, may be included below.

As described above, with the development of information technology, a variety of storage devices have emerged. In general, a storage device cannot offer both large capacity and high I/O or access performance. Specifically, bandwidth trades off against latency and capacity: the higher a storage device's bandwidth, the lower its latency, but the smaller its capacity. For this reason, a small-capacity, high-speed storage device can be used to cache data fetched from a large-capacity, low-speed storage device, improving the access performance of the overall system.

Using a small-capacity, high-speed storage device to cache data fetched from a large-capacity, low-speed storage device can be regarded as a hierarchical storage structure. Typically, the hierarchical storage structure is a three-level storage structure. FIG. 1 shows a schematic diagram of an example three-level storage structure 100.

As shown in FIG. 1, the registers 112 and the level-1 to level-3 caches 114-118 within the central processing unit (CPU) 110 are at the first level of the three-level storage structure 100; they have high bandwidth, low latency, and small capacity.

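The dynamic random access memory (DRAM) 120 is at the second level of the three-level storage structure 100. Compared with the first-level registers 112 and level-1 to level-3 caches 114-118, the DRAM 120 has lower bandwidth, higher latency, and larger capacity. For example, the DRAM 120 may have a latency of 50-100 ns. In addition, the storage class memory (SCM) 130 may be regarded as lying between the second and third levels of the three-level storage structure 100. Like the DRAM 120, the SCM 130 has lower bandwidth, higher latency, and larger capacity than the first-level registers 112 and level-1 to level-3 caches 114-118. For example, the SCM 130 may have a latency of 120 ns.

The network memory 140 and the network storage device 150 are at the third level of the three-level storage structure 100. Compared with the second-level DRAM 120 and SCM 130, the network memory 140 and the network storage device 150 have lower bandwidth, higher latency, and larger capacity. For example, they may have a latency of 1-1000 μs. In addition, the solid-state drive (SSD) 160 and the hard disk drive (HDD) 170 are also at the third level of the three-level storage structure 100. Compared with the second-level DRAM 120 and SCM 130, the SSD 160 and the HDD 170 have lower bandwidth, higher latency, and larger capacity. For example, the SSD 160 may have a latency of 100 μs, and the HDD 170 a latency of 1 ms.

It can be seen that, moving from the registers 112 and level-1 to level-3 caches 114-118 within the CPU 110, to the DRAM 120 and SCM 130, and then to the network memory 140, the network storage device 150, and the peripheral SSD and HDD, the closer a level of the three-level storage structure is to the CPU, the higher its bandwidth, the lower its latency, and the smaller its capacity.

Whereas an arithmetic instruction inside the CPU needs only a few clock cycles, the latency of an external storage device ranges from a few hundred clock cycles (for example, the DRAM 120) to several million clock cycles (for example, the HDD 170). However, a storage device cannot offer both large capacity and high access performance. Using a small-capacity, high-speed storage device as a cache can therefore significantly improve the access performance of the overall system. Conventionally, schemes have been proposed that host a cache and a low-speed back-end storage device together and present a unified virtual block device access interface to upper-layer applications, in order to accelerate access.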
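The cycle counts quoted above follow directly from the example latencies once a clock rate is fixed. The short calculation below assumes a 3 GHz CPU clock, which is not stated in the text, and uses the upper DRAM figure of 100 ns; the dictionary and function names are illustrative.

```python
CLOCK_GHZ = 3  # assumed CPU clock; 1 GHz corresponds to one cycle per nanosecond

# Example latencies from the description, converted to nanoseconds.
LATENCY_NS = {
    "DRAM 120": 100,
    "SCM 130": 120,
    "SSD 160": 100_000,    # 100 microseconds
    "HDD 170": 1_000_000,  # 1 millisecond
}


def latency_in_cycles(latency_ns: int, clock_ghz: int = CLOCK_GHZ) -> int:
    """Convert a latency in nanoseconds to CPU clock cycles."""
    return latency_ns * clock_ghz


CYCLES = {name: latency_in_cycles(ns) for name, ns in LATENCY_NS.items()}
```

Under this assumption the DRAM costs 300 cycles per access and the HDD 3,000,000, matching the "few hundred" to "several million" range given above.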

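Prefetching, for its part, can significantly reduce latency and improve access performance by loading data ahead of time. Today, everything from operating systems to large applications implements its own prefetching scheme, from its own perspective, to improve access performance. These conventional prefetching schemes have various problems, however. For example, they cannot properly handle the way prefetching by different applications interferes with one another. Nor can they dynamically adjust the prefetch policy according to system load.

To aid understanding of the embodiments of the present disclosure, a conventional storage management environment is first described with reference to FIG. 2.

FIG. 2 shows a schematic diagram of an example conventional storage management environment 200. As shown in FIG. 2, an application 210 in the application layer can perform read and write operations by calling an access interface of the operating system. A read or write operation may follow the example process below. For example, a virtual file system (VFS) 220 within the operating system may resolve an access made through the access interface into an access at a corresponding logical address of a storage device block device 240, and call a driver 230 to read or write the data at that logical address. The storage device block device 240 may then call a storage device driver 260 to read or write the data at the physical address of a storage device 270 that corresponds to the logical address.

In contrast to regular access operations, in a system that employs virtual block devices (for example, the storage device block device 240 and a cache block device 250), the driver 230 hosts the back-end storage device 270 and a cache 280, and presents a unified virtual block device interface to the upper layers. Specifically, the storage device 270 is mapped to the virtual storage device block device 240, and the cache 280 is mapped to the virtual cache block device 250. The driver 230 can thus host the storage device 270 and the cache 280 through the storage device block device 240 and the cache block device 250, and call the storage device driver 260 and a cache driver 265 through those block devices to access the storage device 270 and the cache 280. In general, the storage device 270 is a large-capacity, low-cost storage disk (for example, an HDD). All data is ultimately stored in the storage device 270. The cache 280 is a high-speed, small-capacity storage disk (for example, an NVMe SSD). The cache 280 is used to cache data read from the storage device 270 for use by applications.

When the application 210 accesses data, the driver 230 obtains the access request from the application 210. Typically, for a read access request from the application 210, the driver 230 checks whether the data targeted by the request is already stored in the cache 280 that it hosts. If so (a "hit"), the driver 230 reads the data directly from the cache 280 and returns it to the application 210. If the cache 280 does not contain the data (a "miss"), the driver 230 forwards the read access request to the storage device 270; the data is read from the storage device 270 and then returned to the application 210. In addition, the driver 230 may determine, according to system or user configuration, whether to store the data just read in the cache 280.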
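The hit and miss handling described above can be summarized in a few lines. This is a simplified sketch: the cache 280 and the storage device 270 are modeled as dictionaries keyed by address, and the `read` function and its `cache_on_miss` flag (standing in for the system or user configuration) are names chosen here.

```python
from typing import Dict, Tuple


def read(address: int,
         cache: Dict[int, bytes],
         storage: Dict[int, bytes],
         cache_on_miss: bool = True) -> Tuple[bytes, bool]:
    """Serve a read request; return (data, whether it was a cache hit)."""
    if address in cache:
        return cache[address], True   # hit: serve from the fast cache
    data = storage[address]           # miss: forward to the storage device
    if cache_on_miss:
        cache[address] = data         # keep a copy for later reads
    return data, False


storage = {7: b"x"}
cache: Dict[int, bytes] = {}
first = read(7, cache, storage)
second = read(7, cache, storage)
```

The first read of an address is always a miss, which is exactly the limitation that prefetching is meant to address.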

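It can be seen that, when the application 210 reads the same data repeatedly and that data has been loaded into the cache 280, the system can take full advantage of the high-speed access of the cache 280 and read the data quickly from it, significantly improving system performance.

However, this conventional scheme relies on the application 210 reading the same data repeatedly in order to improve system performance, so it can improve performance only for the second and subsequent accesses to the same data. In a large number of cases, the application 210 accesses a given piece of data only once. Alternatively, when the amount of data is so large that the cache 280 cannot hold it, then even if the same data is accessed multiple times, the driver 230 has to read it from the low-speed storage device 270 because the cache 280 cannot store it, and system performance does not improve.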
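Both failure cases can be reproduced with a small cache simulation. The sketch assumes a least-recently-used (LRU) eviction policy, which the text does not specify, and counts hits for a sequence of block numbers; `count_hits` is a name introduced here.

```python
from collections import OrderedDict
from typing import Iterable


def count_hits(accesses: Iterable[int], capacity: int) -> int:
    """Count cache hits for a demand-filled cache with LRU eviction."""
    cache: "OrderedDict[int, None]" = OrderedDict()
    hits = 0
    for block in accesses:
        if block in cache:
            hits += 1
            cache.move_to_end(block)       # mark as most recently used
        else:
            cache[block] = None            # load on miss
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict the least recently used block
    return hits


repeated = count_hits([0, 1, 2, 0, 1, 2], capacity=3)         # data fits, reused
read_once = count_hits(range(6), capacity=3)                  # each block read once
too_large = count_hits([0, 1, 2, 3, 0, 1, 2, 3], capacity=3)  # data exceeds cache
```

With three cache slots, the repeated three-block pattern hits on its second pass, while the read-once scan and the four-block loop never hit at all.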

为此,传统上提出了一些预取方案来提前加载数据,从而降低延迟并提高性能。然而,传统方案具有各种缺陷。例如,传统上,各种应用都从其自己的视角来实现其各自的预取方案,但是这些预取方案无法良好解决各个应用之间的预取的互相影响。此外,传统方案也无法根据系统负载实时调整预取策略。另外,在传统方案中,预取方案被实现在操作系统内核中。然而,操作系统内核对稳定性和安全性要求严苛,同时还需要对所有应用210的访问请求做出快速响应。在这种情况下,将预取方案实现在操作系统内核中将严重影响系统的稳定性、安全性和响应速度。可见,传统方案缺乏应对各种应用的完善且全面的预取方案,无法依赖数据预取来显著提高大量应用场景中的系统性能。To this end, some prefetching schemes have traditionally been proposed to load data in advance, thereby reducing latency and improving performance. However, the conventional scheme has various drawbacks. For example, traditionally, various applications implement their own prefetching schemes from their own perspectives, but these prefetching schemes cannot well resolve the mutual influence of prefetching among various applications. In addition, traditional solutions cannot adjust the prefetching strategy in real time according to the system load. Additionally, in conventional schemes, the prefetching scheme is implemented in the operating system kernel. However, the operating system kernel has strict requirements on stability and security, and also needs to respond quickly to the access requests of all

In contrast to the conventional techniques described above, embodiments of the present disclosure provide a scheme for storage management. In general, according to various embodiments described herein, a driver in the operating system may determine access information related to an access request in response to the operating system receiving the access request from the application layer. A manager in the application layer can obtain this access information. Based on the access information, the manager can determine data information of data that the application layer may access in the future (hereinafter also referred to as "potentially accessed data of the application layer") and make the data information available to the driver in the operating system. Based on the data information, the driver can then load the data that the application layer may access in the future into the cache.

In this way, the manager can determine the data information of the potentially accessed data based on the access information, and the driver can prefetch the potentially accessed data based on the data information determined by the manager, thereby improving storage performance, access efficiency, and user experience. This realizes a storage access acceleration scheme in which a driver in the operating system is combined with an intelligent manager in the application layer. Compared with the conventional schemes discussed above, the present scheme can construct an intelligent prefetching policy from the access characteristics of the access requests that upper-layer applications issue to the underlying storage device, and uses the high-speed access characteristics of the cache to achieve intelligent prefetching with better system performance.

Specifically, to address the drawback that conventional schemes can improve performance only for the second and subsequent accesses to the same data, the present scheme can predict, in the manager, the data that is likely to be accessed based on the pattern of the data that has already been accessed, and prefetch that data into the cache. As a result, the data that is likely to be accessed is already cached when it is accessed for the first time, which overcomes the conventional reliance on repeated access.

In addition, for the case where the amount of data is too large for the cache to hold, in some embodiments of the present disclosure the manager may determine the usage of the cache based on cache reclamation information, and thereby determine whether to enable or disable prefetching, or which prefetching policy to adopt when prefetching is enabled. The data information is then determined from the access information using the selected prefetching policy. For example, when the cache load is light, an aggressive prefetching policy may be adopted to prefetch more potentially accessed data; when the cache load is heavy, a conservative prefetching policy may be adopted to prefetch less potentially accessed data. In this way, prefetching adapts to the capacity of the cache provided by the system and avoids exceeding what the cache can hold.

In addition, compared with each application implementing its own prefetching operations, the present scheme uses a dedicated manager to implement a general prefetching scheme suitable for various applications, and therefore avoids mutual interference between the prefetching operations of individual applications. Specifically, in conventional schemes the applications are independent of one another but are deployed on the same hardware environment. Each application implements its own prefetching policy without unified coordination, which may conflict with the access characteristics of the shared underlying hardware. In the present scheme, by contrast, the access information of the applications is collected in the driver in the operating system, so what is collected is the global access situation of all applications in the application layer. The manager can therefore implement a better prefetching policy based on the global access situation.

Further, to address the fact that conventional schemes cannot adjust the prefetching policy in real time according to system load, the present scheme can adopt different prefetching policies for different global load conditions. For example, for a given storage device, if the total number of access requests from all applications reaches the maximum throughput of that storage device, prefetching can be intelligently disabled. Conversely, if the total number of access requests has not reached the maximum throughput of the storage device, the system can tolerate the storage resources wasted by prefetch misses, so prefetching can be enabled, or a more aggressive prediction policy can be adopted to prefetch more potentially accessed data and achieve better performance. Various example embodiments of the present disclosure are described below with reference to the accompanying drawings.
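The load-dependent choice of policy described above can be sketched as a small decision function. This is only an illustration under stated assumptions: the function name, the three policy labels, and the `light_load_fraction` cut-off between "aggressive" and "conservative" are hypothetical; the source only states that prefetching may be disabled at maximum throughput and made more aggressive below it.

```python
def select_prefetch_policy(request_rate, max_throughput, light_load_fraction=0.5):
    """Pick a prefetch policy for one storage device from its current load.

    request_rate and max_throughput use the same unit (e.g. requests per second).
    light_load_fraction is an assumed tuning knob, not a value from the source.
    """
    if max_throughput <= 0:
        raise ValueError("max_throughput must be positive")
    if request_rate >= max_throughput:
        return "disabled"        # device saturated: no bandwidth to spare for prefetching
    if request_rate <= light_load_fraction * max_throughput:
        return "aggressive"      # plenty of headroom: wasted prefetches are tolerable
    return "conservative"        # some headroom: prefetch, but less


saturated = select_prefetch_policy(1000, 1000)
light = select_prefetch_policy(100, 1000)
moderate = select_prefetch_policy(800, 1000)
```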

FIG. 3 shows a schematic diagram of an example storage management environment 300 in which embodiments of the present disclosure can be implemented.

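Some components of storage management environment 300 may be implemented as in conventional storage management environment 200. For example, the access interface through which application 210 performs read and write operations is the same as that of conventional storage management environment 200. In the following, embodiments of the present disclosure are described further with reference to FIG. 3, with particular attention to the improvements over conventional storage management environment 200.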

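Storage management environment 300 includes manager 310 implemented in the application layer, and an improved driver 320 in the operating system (more specifically, in the kernel-mode operating system). The application layer is the layer in which applications run, and it may be in user mode. In operating system design, user mode refers to an unprivileged execution state. The kernel of the operating system prohibits code in this state from performing potentially dangerous operations, such as writing system configuration files, killing other users' processes, or rebooting the system. Kernel mode is the mode in which the operating system kernel runs; code running in this mode has unrestricted access to system storage and external devices. Driver 320 may be used to manage storage device 270 and cache 280 and may be, for example, a virtual block device driver in the operating system. Driver 320 does not correspond one-to-one to the underlying block devices (e.g., storage device block device 240, cache block device 250, etc.) but acts as a proxy for them. Driver 320 can virtualize a block device, and an application's access to that block device is actually forwarded by driver 320 to the underlying block device for completion. Manager 310, which may alternatively be called a prefetcher, can be used to predict the potentially accessed data that is about to be accessed, in order to improve system performance.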

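Specifically, in some embodiments, manager 310 may be an application that executes a prefetching algorithm in the background. Manager 310 can identify the access characteristics of applications by calling an access interface of driver 320, and make prefetching decisions accordingly. Specifically, driver 320 may store access information related to access requests in memory 330. Manager 310 can then obtain the access information from memory 330 and determine the data information of the potentially accessed data based on the access information. Manager 310 may then store the data information in memory 330, so that driver 320 can obtain the data information from memory 330 and load the potentially accessed data into cache 280 based on the data information.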

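In some embodiments, manager 310 can also be enabled and disabled. For example, a user or administrator of the system may choose to enable or disable manager 310. Alternatively, manager 310 may be enabled or disabled according to system load. For example, manager 310 is enabled, and thus determines the data information, when the number of access requests is below a threshold number, and is disabled when the number of access requests exceeds the threshold number. In addition, the prefetching algorithm used by manager 310 can be adjusted dynamically according to system load. For example, when the system load is light, manager 310 may adopt a more aggressive prediction policy to prefetch more potentially accessed data and achieve better performance. Conversely, when the system load is heavy, manager 310 may adopt a more conservative prediction policy to prefetch less potentially accessed data and save storage resources.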

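In this intelligent prefetching framework, manager 310 runs as a background service and there is no interface between application 210 and manager 310, so application 210 and manager 310 are unaware of each other's existence. The intelligent prefetching framework can therefore be used in any application scenario, and application 210 needs no adaptive modification. In addition, because manager 310 is implemented in the application layer rather than in the operating system kernel, the stability requirements on manager 310 are relatively low, and manager 310 can be updated conveniently and easily without adaptive modifications to the kernel that would affect kernel stability. Moreover, even if a prefetch by manager 310 fails, the normal caching function continues to work, so normal operation of the system is not affected. Further, because manager 310 can be adjusted dynamically based on system load, it can work efficiently under various storage access loads without affecting system robustness.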

FIG. 4 shows a schematic diagram of a storage management process 400 according to some embodiments of the present disclosure.

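Driver 320 in the operating system may determine access information related to an access request in response to the operating system receiving the access request from the application layer. The rationale is that every access to storage device 270 by any application in the application layer, which sits above the operating system, goes through the interface provided by driver 320, so driver 320 can collect global access information for storage device 270.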

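Specifically, an access request for data issued by application 210 is converted, after processing by the operating system kernel, into an access request to storage device 270 where the data is stored. As described above with reference to FIG. 2, application 210 in the application layer can perform read and write operations by calling the access interface of the operating system. For example, virtual file system 220 in the operating system can resolve an access through the access interface into an access at the corresponding logical address of storage device block device 240, and call driver 320 to read or write the data at that logical address. Further, storage device block device 240 can call storage device driver 260 to read or write the data at the physical address of storage device 270 that corresponds to the logical address.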

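In this case, when application 210 accesses data stored in storage device block device 240, driver 320 obtains, through the operating system kernel, access information 410 associated with the access request from the application. In some embodiments, the access request may include a read access request and/or a write access request. For example, access information 410 may include: the time at which the access request was initiated; the address of the data to be accessed by the access request; the size of the data to be accessed by the access request; the number of access requests that hit, a hit being an access request whose requested data has already been loaded into the cache; and the proportion of access requests that hit.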
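One way to picture access information 410 is as a per-request record plus aggregate hit statistics. The sketch below is illustrative only: the record layout, field names, and the `summarize` helper are assumptions introduced for the example, not structures defined in the disclosure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRecord:
    """One access request as seen by the driver (hypothetical layout)."""
    timestamp_ms: float   # time at which the request was initiated
    address: int          # address of the requested data
    size: int             # size of the requested data, in bytes
    is_read: bool         # read request (True) or write request (False)
    cache_hit: bool       # True if the data was already in the cache


def summarize(records):
    """Return (number of hits, hit ratio) over a batch of records."""
    hits = sum(1 for r in records if r.cache_hit)
    ratio = hits / len(records) if records else 0.0
    return hits, ratio


records = [
    AccessRecord(0.0, 0x10000, 64 * 1024, True, False),
    AccessRecord(5.0, 0x20000, 64 * 1024, True, False),
    AccessRecord(9.0, 0x10000, 64 * 1024, True, True),
    AccessRecord(12.0, 0x20000, 64 * 1024, True, True),
]
hit_count, hit_ratio = summarize(records)
```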

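In addition, to provide more comprehensive information and improve the accuracy of the prefetching decisions of manager 310, driver 320 may obtain, besides access information 410, cache reclamation information associated with cache 280. In some embodiments, the manager may determine the usage of the cache based on the cache reclamation information, and thereby determine whether to enable or disable prefetching, or which prefetching policy to adopt when prefetching is enabled, and then determine data information 420 from access information 410 using the selected prefetching policy. For example, if the cache reclamation information indicates that the size of the used portion of cache 280 is below a threshold size, the load on cache 280 is light, so prefetching can be enabled or a more aggressive prefetching policy can be adopted, and that policy is used to determine data information 420 based on access information 410.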

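In some embodiments, the cache reclamation information may include information associated with the used portion of cache 280 at the time cache 280 is reclaimed, for example the address, size, or proportion of the used portion. The cache reclamation information may also include information associated with the unused portion of cache 280 at the time cache 280 is reclaimed, for example the address, size, or proportion of the unused portion. Further, the cache reclamation information may include information associated with overwritten data in cache 280 at the time cache 280 is reclaimed, for example the address, size, or proportion of the dirty data stored in cache 280.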
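As a rough sketch of how cache reclamation information might feed the enable/disable decision, the example below models only the size components and compares the used fraction against a threshold. The class, its field names, and the `used_threshold` default are hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CacheReclaimInfo:
    """Snapshot taken when the cache is reclaimed (hypothetical layout, sizes in bytes)."""
    used_bytes: int     # size of the used portion
    unused_bytes: int   # size of the unused portion
    dirty_bytes: int    # size of overwritten (dirty) data within the used portion

    @property
    def used_ratio(self):
        total = self.used_bytes + self.unused_bytes
        return self.used_bytes / total if total else 0.0


def prefetch_allowed(info, used_threshold=0.8):
    """Allow prefetching only while the used fraction is below an assumed threshold."""
    return info.used_ratio < used_threshold


light = CacheReclaimInfo(used_bytes=256, unused_bytes=768, dirty_bytes=64)
heavy = CacheReclaimInfo(used_bytes=900, unused_bytes=100, dirty_bytes=0)
```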

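In addition, in some embodiments, driver 320 may store access information 410 in a first buffer in memory 330, so that manager 310 can obtain access information 410 from the first buffer. Driver 320 may also store the cache reclamation information in the first buffer in memory 330. Alternatively, driver 320 may send access information 410 and/or the cache reclamation information directly to manager 310. Memory 330 is also commonly referred to as "internal memory" or "main memory". Because access information 410 and/or the cache reclamation information is stored in memory 330, it can be accessed quickly and used to determine data information 420 efficiently. It should be understood that although the memory is described herein as "internal memory" or "main memory", it may also be implemented with any other suitable high-speed storage device.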

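Specifically, the first buffer may be a storage area allocated in memory 330, maintained by driver 320, and organized and managed as a dynamic data structure. In some embodiments, the dynamic data structure may be a ring buffer, but embodiments of the present disclosure are not limited thereto; the dynamic data structure may be any suitable structure, such as a linear buffer. When the operating system kernel calls the interface of driver 320, the interface writes access information 410 and/or the cache reclamation information into the first buffer for manager 310 to obtain. However, embodiments of the present disclosure are not limited thereto; for example, driver 320 may also send access information 410 and/or the cache reclamation information directly to manager 310.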
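A ring buffer of the kind mentioned above can be sketched in a few lines. This is a minimal single-threaded illustration; the overwrite-oldest-when-full behaviour is an assumption chosen so that the writer never blocks, and the disclosure does not specify what happens when the buffer is full.

```python
class RingBuffer:
    """Fixed-capacity FIFO whose writer never blocks (assumed policy: overwrite oldest)."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._slots = [None] * capacity
        self._head = 0      # index of the oldest stored item
        self._count = 0     # number of stored items

    def put(self, item):
        tail = (self._head + self._count) % len(self._slots)
        self._slots[tail] = item
        if self._count == len(self._slots):
            # Buffer was full: the oldest item was just overwritten, so advance the head.
            self._head = (self._head + 1) % len(self._slots)
        else:
            self._count += 1

    def get(self):
        """Remove and return the oldest item, or None if the buffer is empty."""
        if self._count == 0:
            return None
        item = self._slots[self._head]
        self._slots[self._head] = None
        self._head = (self._head + 1) % len(self._slots)
        self._count -= 1
        return item

    def __len__(self):
        return self._count


buf = RingBuffer(3)
for record in (1, 2, 3, 4):     # the fourth write overwrites the oldest record
    buf.put(record)
drained = [buf.get(), buf.get(), buf.get(), buf.get()]
```

A real driver-side buffer shared with a user-space reader would also need synchronization between the two sides, which this sketch omits.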

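Further, in some embodiments, driver 320 may also send manager 310 a notification about access information 410 and/or the cache reclamation information, so that manager 310 can obtain access information 410 and/or the cache reclamation information. For example, driver 320 may send a notification to manager 310 when it stores access information 410 and/or the cache reclamation information in the first buffer. More specifically, driver 320 may send the notification to manager 310 synchronously with storing access information 410 and/or the cache reclamation information; this may be regarded as a synchronous notification. With a synchronous notification, after writing access information 410, driver 320 must wait for manager 310 to read access information 410 before it can return. In this case, the write operation of driver 320 and the read operation of manager 310 are coupled and not independent. Alternatively, the storing of access information 410 and/or the cache reclamation information and the sending of the notification may be asynchronous; this may be regarded as an asynchronous notification. With an asynchronous notification, manager 310 has not yet read access information 410 when driver 320 finishes writing it. More specifically, driver 320 may write access information 410 into the first buffer and return as soon as the write completes. A thread of manager 310 can then call a system interface to read access information 410 from the first buffer. In this case, the process by which driver 320 writes access information 410 and the process by which manager 310 reads it are independent of each other. In addition, in some embodiments, the notification may be sent when a predetermined condition is triggered. For example, driver 320 may send a notification to manager 310 when it detects that the amount of access information 410 and/or cache reclamation information in the first buffer exceeds a threshold amount or exceeds a predetermined percentage of the size of the first buffer. It should be understood that such a notification may be either synchronous or asynchronous; embodiments of the present disclosure are not limited in this respect.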
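The condition-triggered notification described above can be illustrated with a buffer that invokes a callback once its fill level exceeds a percentage of its capacity. The class name, the callback signature, and the choice to drop writes when full (rather than block) are assumptions for the sketch.

```python
class NotifyingBuffer:
    """Buffer that notifies a listener when its fill level crosses a threshold."""

    def __init__(self, capacity, notify, threshold_fraction=0.5):
        self.capacity = capacity
        self.notify = notify                              # hypothetical callback: notify(fill_level)
        self.threshold = threshold_fraction * capacity    # assumed "predetermined percentage"
        self.items = []

    def write(self, item):
        """Store an item without blocking; return False if the buffer is full."""
        if len(self.items) >= self.capacity:
            return False
        self.items.append(item)
        if len(self.items) > self.threshold:
            self.notify(len(self.items))
        return True

    def drain(self):
        """Hand all buffered items to the reader and empty the buffer."""
        items, self.items = self.items, []
        return items


notifications = []
buffer = NotifyingBuffer(capacity=4, notify=notifications.append)
for record in ("a", "b", "c"):
    buffer.write(record)        # only the third write exceeds 50% of capacity
received = buffer.drain()
```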

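Manager 310 can thus obtain access information 410. As described above, in some embodiments driver 320 may store access information 410 and/or the cache reclamation information in the first buffer. To this end, driver 320 may provide manager 310 with an access interface to the first buffer. Manager 310 can access the first buffer through this access interface and thereby obtain access information 410 and/or the cache reclamation information from the first buffer. However, embodiments of the present disclosure are not limited thereto; for example, manager 310 may also receive access information 410 and/or the cache reclamation information directly from driver 320.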

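Further, as described above, in some embodiments driver 320 may also send manager 310 a notification about access information 410 and/or the cache reclamation information. In this case, if manager 310 receives the notification from driver 320, manager 310 can obtain access information 410 and/or the cache reclamation information. For example, manager 310 may obtain access information 410 from the first buffer in response to receiving the notification. Alternatively, manager 310 may monitor the first buffer and actively obtain access information 410 and/or the cache reclamation information when it detects that such information has been placed in the first buffer, that the amount of such information exceeds a threshold amount, or that the amount of such information exceeds a predetermined percentage of the size of the first buffer.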

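Manager 310 determines data information 420 of the potentially accessed data of the application layer based on access information 410 and/or the cache reclamation information. The potentially accessed data is associated with the data to be accessed by the access request; for example, the potentially accessed data is data that is likely to be accessed next after the data targeted by the access request has been accessed. As an example, data information 420 may include the address of the potentially accessed data (such as an address in storage device 270 or storage device block device 240) and the size of the potentially accessed data. However, embodiments of the present disclosure are not limited thereto; for example, data information 420 may instead include a start address and an offset of the potentially accessed data in storage device 270 or storage device block device 240, from which the address of the potentially accessed data in storage device 270 or storage device block device 240 can be determined. The potentially accessed data can thus be obtained using data information 420.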

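Specifically, manager 310 can analyze the patterns in access information 410 and make a prefetching decision, thereby obtaining data information 420 for use in obtaining the potentially accessed data. In some embodiments, to determine data information 420, manager 310 may apply access information 410 to a trained predictor to obtain data information 420. The predictor is trained based on training access information and training data information associated with the training access information. For example, historical access information may be used to train the predictor, in which case the historical access information may also be called training access information. Historical data information associated with the historical access information may also be used to train the predictor, in which case the historical data information may also be called training data information. The historical data information may be, for example, information associated with the access operations performed after the access operations corresponding to the historical access information, such as the address and size of the data accessed by those subsequent access operations. The predictor is a model trained on the training access information and the training data information, and is used to predict the data information of subsequent potentially accessed data based on the current access information. Manager 310 may have a trained predictor deployed on it or may obtain one, and uses the predictor to determine the data information. The predictor may be implemented with any suitable prediction technique, such as random forests, least-squares polynomial fitting, simple linear regression, multiple linear regression, nonlinear methods, Markov chain prediction, data mining, or neural networks.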

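As a specific example, manager 310 may predict the location and size of the potentially accessed data based on the address, size, and so on of the data targeted by the access requests, as indicated in access information 410. For example, manager 310 may learn that the application read 64 KB of data at address 0x10000 at time 0 and read 64 KB of data at address 0x20000 at time +5; the manager can then make a prefetching decision before time +10. The prefetching decision indicates that 64 KB of data at address 0x30000 is to be prefetched. Data information 420 can thus indicate that the address of the potentially accessed data is 0x30000 and that its size is 64 KB.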
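The worked example above can be reproduced with a simple constant-stride predictor. Note that this is a deliberately simple stand-in chosen for illustration, not one of the trained predictors the disclosure lists; the function name and the tuple layout `(time, address, size)` are assumptions.

```python
def predict_next(accesses):
    """Predict (address, size) of the next access from the last two accesses.

    accesses is a list of (time, address, size) tuples, oldest first.
    Returns None when no constant-stride pattern is visible.
    """
    if len(accesses) < 2:
        return None
    (_, prev_addr, prev_size), (_, last_addr, last_size) = accesses[-2], accesses[-1]
    stride = last_addr - prev_addr
    if stride == 0 or prev_size != last_size:
        return None                      # repeated address or changing size: no prediction
    return last_addr + stride, last_size


history = [
    (0, 0x10000, 64 * 1024),   # time 0: 64 KB at 0x10000
    (5, 0x20000, 64 * 1024),   # time +5: 64 KB at 0x20000
]
prediction = predict_next(history)
```

With this history the stride is 0x10000, so the sketch proposes prefetching 64 KB at 0x30000, matching the example in the text.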

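In some embodiments, manager 310 may store data information 420 in a second buffer in memory 330, so that driver 320 can obtain data information 420 from the second buffer. Like the first buffer, the second buffer may be a storage area allocated in memory 330, maintained by driver 320, and organized and managed as a dynamic data structure. In some embodiments, the dynamic data structure may be a ring buffer, but embodiments of the present disclosure are not limited thereto; the dynamic data structure may be any suitable structure, such as a linear buffer. Manager 310 may store data information 420 in the second buffer through an interface provided by driver 320, thereby providing it to driver 320. However, embodiments of the present disclosure are not limited thereto; for example, manager 310 may also send data information 420 directly to driver 320. It should be understood that although the second buffer is described herein as residing in the same memory 330 as the first buffer, the two buffers may reside in different memories.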

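Further, in some embodiments, manager 310 may also send driver 320 a notification about data information 420, so that driver 320 obtains data information 420. For example, manager 310 may send a notification to driver 320 when it stores data information 420 in the second buffer. Like the notification sent by driver 320 to manager 310, this notification may be synchronous or asynchronous, and may also be sent when a predetermined condition is triggered; embodiments of the present disclosure are not limited in this respect.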

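In some embodiments, asynchronous notification may be used for access information 410 and synchronous notification may be used for data information 420, to improve system robustness and performance. The rationale is that driver 320 resides in the underlying operating system while manager 310 resides in the application layer, so the stability and security of driver 320 must be ensured. Specifically, if the writing of access information 410 by driver 320 is an asynchronous operation, driver 320 can continue to write access information 410 even if manager 310 crashes. When the writing of data information 420 by manager 310 is a synchronous operation, the synchronous operation simply does not occur if manager 310 crashes, so the stability of driver 320 is not affected. Further, if driver 320 crashes, the synchronous operation of manager 310 fails, which is consistent with the control logic: if driver 320 cannot work properly, manager 310 cannot work properly either.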

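Driver 320 can thus obtain data information 420. As described above, in some embodiments manager 310 may store data information 420 in the second buffer. To this end, driver 320 may provide manager 310 with an access interface to the second buffer. Manager 310 can access the second buffer through this access interface, so that driver 320 can obtain data information 420 from the second buffer. However, embodiments of the present disclosure are not limited thereto; for example, driver 320 may also receive data information 420 directly from manager 310.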

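Further, as described above, in some embodiments manager 310 may also send driver 320 a notification about data information 420. In this case, if driver 320 receives the notification from manager 310, driver 320 can obtain data information 420. For example, driver 320 may obtain data information 420 from the second buffer in response to receiving the notification. Alternatively, driver 320 may monitor the second buffer and actively obtain data information 420 when it detects that data information 420 has been placed in the second buffer, that the amount of data information 420 exceeds a threshold amount, or that the amount of data information 420 exceeds a predetermined percentage of the size of the second buffer.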

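Driver 320 can thus load the potentially accessed data into cache 280 based on data information 420. In some embodiments, driver 320 may obtain the potentially accessed data from storage device 270 based on data information 420 and store it in cache 280. Specifically, after obtaining data information 420, driver 320 can read data of the specified size at the address indicated by data information 420 from back-end storage device 270 and write it into cache 280, thereby completing the prefetch flow. For example, driver 320 may read the data at the address indicated by data information 420 in storage device block device 240. To do so, driver 320 calls storage device block device 240, which in turn calls storage device driver 260 to read the data at the physical address in storage device 270 that corresponds to the address indicated by data information 420. Driver 320 can then write the data it has read into cache block device 250, which caches the data logically, and cache block device 250 in turn calls cache driver 265 to write the data into cache 280, which actually caches the data.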
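The prefetch execution step can be sketched as copying the indicated address range from storage into the cache block by block. The dictionary-backed storage and cache, the 4 KB `block_size`, and the assumption that the address is block-aligned are simplifications introduced for the example; the layering through block devices and device drivers is not modelled.

```python
def prefetch(data_info, storage, cache, block_size=4096):
    """Load the range described by data_info = (address, size) into the cache.

    storage and cache map block-aligned addresses to block contents.
    Returns the number of blocks newly loaded.
    """
    address, size = data_info
    loaded = 0
    for block_addr in range(address, address + size, block_size):
        if block_addr in cache:          # already cached: nothing to do
            continue
        if block_addr not in storage:    # nothing stored at this address: skip it
            continue
        cache[block_addr] = storage[block_addr]
        loaded += 1
    return loaded


storage = {addr: b"blk" for addr in range(0x30000, 0x30000 + 4 * 4096, 4096)}
cache = {}
first_run = prefetch((0x30000, 3 * 4096), storage, cache)
second_run = prefetch((0x30000, 3 * 4096), storage, cache)   # everything is already cached
```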

In this way, because the potentially accessed data has already been prefetched into cache 280, it can be read quickly and directly from cache 280 when the application actually accesses it, which significantly improves system performance.

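In addition, because the first and second buffers are allocated and maintained by driver 320 and implemented in memory 330, access operations on both buffers can be asynchronous (that is, non-blocking) memory operations. Specifically, driver 320 adds interfaces for accessing these two buffers and exposes them to manager 310 in the application layer. Manager 310 accesses the corresponding buffer through such an interface, obtains access information 410 associated with the access requests of application 210 to storage device 270 with very low latency, quickly makes a prefetching decision based on the access information, and provides data information 420 for obtaining the potentially accessed data, so that the prefetch is finally completed. Because the latency is very low, the prefetch can usually be completed before application 210 actually accesses the potentially accessed data. In this way, the channels used to transfer access information 410 and data information 420 can both be implemented as non-blocking buffers, meaning that the transfer of access information 410 and data information 420 does not cause driver 320 or manager 310 to hang. Consequently, when a channel behaves abnormally, for example when reading or writing data in the channel fails, normal operation of the system is not affected.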

In addition, by implementing the prefetch decision logic in the manager and implementing access information collection and prefetch execution in the driver, the present scheme separates these pieces of logic from one another and avoids the various potential drawbacks of implementing the prefetch decision logic in the kernel. For example, a complex neural network algorithm that consumes a large amount of resources cannot be implemented in a resource-constrained kernel, but can be implemented in the application layer, which provides more resources. As another example, complex prefetch decision logic is more powerful and may therefore be more error-prone, in which case implementing it in the kernel would make the kernel unstable. As yet another example, when the prefetch decision logic is implemented in the kernel, a malicious third party might intrude into the system through that logic and compromise kernel security. Prefetch decision logic implemented in the kernel has to take resources, security, and stability into account and can only be kept lean and stable at the expense of accuracy and efficiency. By contrast, a manager implemented in the application layer can implement more complex, efficient, and accurate prefetch decision logic, so that it can effectively perceive current and historically accumulated access information, analyze the complex access characteristics of applications, and make accurate prefetching decisions.

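Further, because manager 310 in the application layer is completely decoupled from driver 320 in the kernel, a failure or fault of manager 310 does not affect normal access operations at all, so that system robustness significantly exceeds that of conventional kernel-native prefetching schemes. In addition, because application 210 is unaware of the operation of manager 310, manager 310 can be upgraded dynamically without updating application 210, which gives the scheme broader applicability.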

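The storage management environment with manager 310 according to some embodiments of the present disclosure has been described in detail above with reference to FIG. 3, and the storage management process with prefetching according to some embodiments of the present disclosure has been described in detail with reference to FIG. 4. In the following, a conventional caching process and a caching process with prefetching are described with reference to FIGS. 5 and 6, to clearly present the differences between the present scheme and conventional schemes and the advantages of the present scheme.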

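FIG. 5 shows a swim lane diagram of an example conventional caching process 500. As shown in FIG. 5, application 210 reads (510) data A. After a delay of 5 ms, storage device 270 returns (515) data A, and data A is cached in cache 280. Application 210 then reads (520) data B. After a delay of 5 ms, storage device 270 returns (525) data B, and data B is cached in cache 280. Application 210 then reads (530) data C. After a delay of 5 ms, storage device 270 returns (535) data C, and data C is cached in cache 280. Further, application 210 reads (540) data D. After a delay of 5 ms, storage device 270 returns (545) data D, and data D is cached in cache 280.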

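During subsequent reads, because data A-D has already been cached in cache 280, application 210 reads data A-D directly from high-speed cache 280 without having to read it from low-speed storage device 270. For example, application 210 reads (550) data A, and after a delay of less than 100 μs, cache 280 returns (555) data A. Application 210 then reads (560) data B, and after a delay of less than 100 μs, cache 280 returns (565) data B.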

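It can be seen that this conventional scheme relies on application 210 repeatedly reading the same data in order to improve system performance, so it can improve performance only for the second and subsequent accesses to the same data. In many cases, however, application 210 accesses the same data only once. Alternatively, when the amount of data is so large that cache 280 cannot hold it, driver 230 still has to read the data from low-speed storage device 270 even if the same data is accessed multiple times, and system performance cannot be improved.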

FIG. 6 shows a swim lane diagram of an example caching process 600 with prefetching according to some embodiments of the present disclosure.

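As shown in FIG. 6, application 210 reads (610) data A. After a delay of 5 ms, storage device 270 returns (615) data A, and data A is cached in cache 280. Application 210 then reads (620) data B. After a delay of 5 ms, storage device 270 returns (630) data B, and data B is cached in cache 280.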

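Manager 310 can make a prefetching decision to prefetch data C and D according to the application's data access pattern (which in this case is sequential). When the application subsequently accesses data C and D, it can read them directly from cache 280, which reduces the latency significantly, from 5 ms to under 100 μs. Specifically, manager 310 determines to prefetch (625) data C and D, and storage device 270 returns (635) data C and returns (645) data D. Application 210 then reads (640) data C; because data C has already been prefetched into cache 280, cache 280 returns (650) data C after a delay of less than 100 μs. Application 210 then reads (655) data D; because data D has already been prefetched into cache 280, cache 280 returns (660) data D after a delay of less than 100 μs.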
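The benefit on the first pass over data A-D can be checked with simple arithmetic using the latencies quoted for FIGS. 5 and 6. The sketch below treats 100 μs as an upper bound for a cache read and ignores any overlap between requests; the function name and constants are introduced only for this illustration.

```python
STORAGE_LATENCY_US = 5000   # 5 ms per read served by the storage device
CACHE_LATENCY_US = 100      # upper bound: cache reads take "less than 100 μs"


def first_pass_latency_us(num_items, num_prefetched):
    """Total first-access latency when num_prefetched items are already in the cache."""
    misses = num_items - num_prefetched
    return misses * STORAGE_LATENCY_US + num_prefetched * CACHE_LATENCY_US


conventional_us = first_pass_latency_us(4, 0)    # FIG. 5: A, B, C, D all read from storage
with_prefetch_us = first_pass_latency_us(4, 2)   # FIG. 6: C and D prefetched into the cache
```

Under these assumptions the first pass takes 20 ms in the conventional process and at most 10.2 ms with C and D prefetched.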

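Further, during subsequent reads, because data A-D has already been cached in cache 280, application 210 reads data A-D directly from high-speed cache 280 without having to read it from low-speed storage device 270. For example, application 210 reads (665) data A, and after a delay of less than 100 μs, cache 280 returns (670) data A. Application 210 then reads (675) data B, and after a delay of less than 100 μs, cache 280 returns (680) data B.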

In this way, compared with the conventional caching process, the intelligent prefetching scheme of the present disclosure can make effective use of the cache even in application scenarios where a large amount of data is accessed only once, thereby significantly improving system performance.

The storage management process performed at the manager and the storage management process performed at the driver are described in detail below with reference to FIG. 7 and FIG. 8, respectively.

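FIG. 7 shows a flowchart of a storage management process 700 performed at the manager according to some embodiments of the present disclosure. For example, method 700 may be performed by manager 310 shown in FIG. 3. It should be understood that method 700 may also include additional steps not shown and/or may omit steps that are shown; the scope of the present disclosure is not limited in this respect.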

At 710, the manager in the application layer obtains access information, the access information being related to an access request received by the operating system from the application layer. At 720, the manager determines data information of potentially accessed data of the application layer based on the access information. At 730, the manager makes the data information available to the driver in the operating system, so that the driver in the operating system loads the potentially accessed data into the cache based on the data information. For example, the manager may store the data information in a memory or a predetermined storage area accessible to the driver in the operating system, so that the data information is accessible to the driver, that is, in an available state. In some implementations, the manager may notify the driver to obtain the data information from the memory or the predetermined storage area. In other implementations, the manager may not send a notification to the driver; instead, the driver autonomously reads the data information from the memory or the predetermined storage area when needed.
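Steps 710 to 730 can be sketched as a single pass of a manager loop. The example is a simplified model: `collections.deque` objects stand in for the buffers shared with the driver, the access records are reduced to `(address, size)` pairs, and `next_sequential` is a hypothetical predictor used only to make the sketch runnable.

```python
from collections import deque


def manager_step(access_buffer, data_buffer, predictor):
    """Run steps 710-730 once; return how many data-information entries were published."""
    # 710: obtain the access information currently available.
    accesses = []
    while access_buffer:
        accesses.append(access_buffer.popleft())
    if not accesses:
        return 0
    # 720: determine data information for the potentially accessed data.
    data_info = predictor(accesses)
    if data_info is None:
        return 0
    # 730: make the data information available to the driver.
    data_buffer.append(data_info)
    return 1


def next_sequential(accesses):
    """Hypothetical predictor: assume the next access directly follows the last one."""
    address, size = accesses[-1]
    return address + size, size


access_buffer = deque([(0, 4096), (4096, 4096)])
data_buffer = deque()
published = manager_step(access_buffer, data_buffer, next_sequential)
idle = manager_step(access_buffer, data_buffer, next_sequential)   # nothing new to process
```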

In this way, the manager can predict, based on the access information, the data information of potentially accessed data that is likely to be accessed, and the driver can prefetch the potentially accessed data based on the data information, thereby improving storage performance, access efficiency, and user experience.

In some embodiments, obtaining the access information includes: in response to receiving a notification about the access information from the driver, obtaining the access information from a first buffer in a memory, the first buffer being maintained by the driver for storing the access information. In this way, the manager can conveniently obtain the access information from the buffer maintained by the driver based on the notification from the driver.

In some embodiments, determining the data information includes: applying the access information to a trained predictor to obtain the data information, the predictor being trained based on training access information and training data information associated with the training access information. In this way, the data information can be determined accurately and efficiently using the trained predictor.

In some embodiments, determining the data information includes: determining the data information based on the access information and cache reclamation information obtained from the memory. In this way, the manager can accurately predict the data information of the potentially accessed data by combining the cache reclamation information with the access information.

In some embodiments, the cache reclamation information includes at least one of: information associated with the used portion of the cache when the cache is reclaimed, information associated with the unused portion of the cache when the cache is reclaimed, and information associated with overwritten data in the cache when the cache is reclaimed. In this way, the manager can accurately predict the data information of the potentially accessed data while taking into account various information related to cache reclamation.

In some embodiments, the method further includes: after determining the data information, the manager sends a notification about the data information to the driver, for the driver to obtain the data information from a second buffer in the memory based on the notification, the second buffer being maintained by the driver for storing the data information. In this way, the driver can conveniently obtain the data information from another buffer that it maintains, and load the potentially accessed data into the cache based on the data information.

In some embodiments, the access information includes at least one of: the time at which the access request was initiated; the address of the data to be accessed by the access request; the size of the data to be accessed by the access request; the number of access requests that hit, a hit being an access request whose requested data has already been loaded into the cache; and the proportion of access requests that hit. In this way, the manager can accurately predict the data information of the potentially accessed data while taking into account various information related to the access requests.

In some embodiments, the data information includes at least one of: the address of the potentially accessed data, and the size of the potentially accessed data. In this way, the driver can conveniently obtain the potentially accessed data.

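FIG. 8 shows a flowchart of a storage management process 800 performed at driver 320 according to some embodiments of the present disclosure. For example, method 800 may be performed by driver 320 shown in FIG. 3. It should be understood that method 800 may also include additional steps not shown and/or may omit steps that are shown; the scope of the present disclosure is not limited in this respect.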

At 810, the driver in the operating system determines access information related to an access request in response to the operating system receiving the access request from the application layer. At 820, the driver obtains data information of potentially accessed data of the application layer, the data information being determined by the manager in the application layer based on the access information. At 830, the driver loads the potentially accessed data into the cache based on the data information. In this way, the driver can prefetch the potentially accessed data that is likely to be accessed based on the data information determined by the manager, thereby improving storage performance, access efficiency, and user experience.

In some embodiments, the method further includes: after determining the access information, the driver sends a notification about the access information to the manager, for the manager to obtain the access information from a first buffer in a memory based on the notification, the first buffer being maintained by the driver for storing the access information. In this way, the manager can conveniently obtain the access information from the buffer maintained by the driver based on the notification from the driver.

In some embodiments, obtaining the data information includes: in response to receiving a notification about the data information from the manager, obtaining the data information from a second buffer in the memory, the second buffer being maintained by the driver for storing the data information. In this way, the driver can conveniently obtain the data information from another buffer that it maintains, and load the potentially accessed data into the cache based on the data information.

In some embodiments, the data information is determined by the manager based on the access information and cache reclamation information obtained from the memory. In this way, the manager can accurately predict the data information of the potentially accessed data by combining the cache reclamation information with the access information.

In some embodiments, the cache reclamation information includes at least one of: information associated with the used portion of the cache when the cache is reclaimed, information associated with the unused portion of the cache when the cache is reclaimed, and information associated with overwritten data in the cache when the cache is reclaimed. In this way, the manager can accurately predict the data information of the potentially accessed data while taking into account various information related to cache reclamation.

In some embodiments, the access information includes at least one of: the time at which the access request was initiated; the address of the data to be accessed by the access request; the size of the data to be accessed by the access request; the number of access requests that hit, a hit being an access request whose requested data has already been loaded into the cache; and the proportion of access requests that hit. In this way, the manager can accurately predict the data information of the potentially accessed data while taking into account various information related to the access requests.

In some embodiments, the data information includes at least one of: the address of the potentially accessed data, and the size of the potentially accessed data. In this way, the driver can conveniently obtain the potentially accessed data.

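FIG. 9 shows a block diagram of an example device 900 suitable for implementing embodiments of the present disclosure. For example, manager 310 and driver 320 shown in FIG. 3 may be implemented by device 900. As shown in FIG. 9, device 900 includes a central processing unit (CPU) 901, which can perform various appropriate actions and processing according to computer program instructions stored in read-only memory (ROM) 902 or loaded from storage unit 908 into random access memory (RAM) 903. RAM 903 can also store various programs and data required for the operation of device 900. CPU 901, ROM 902, and RAM 903 are connected to one another via bus 904. Input/output (I/O) interface 905 is also connected to bus 904.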

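Multiple components of device 900 are connected to I/O interface 905, including: input unit 906, such as a keyboard or a mouse; output unit 907, such as various types of displays and speakers; storage unit 908, such as a magnetic disk or an optical disc; and communication unit 909, such as a network card, a modem, or a wireless communication transceiver. Communication unit 909 allows device 900 to exchange information/data with other devices over a computer network such as the Internet and/or various telecommunication networks.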

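The various processes and processing described above, such as method 700 or 800, may be performed by processing unit 901. For example, in some embodiments, method 700 or 800 may be implemented as a computer software program that is tangibly embodied in a machine-readable medium, such as storage unit 908. In some embodiments, part or all of the computer program may be loaded and/or installed onto device 900 via ROM 902 and/or communication unit 909. When the computer program is loaded into RAM 903 and executed by CPU 901, one or more actions of method 700 or 800 described above may be performed.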

The present disclosure may be a method, an apparatus, a system, and/or a computer program product. The computer program product may include a computer-readable storage medium carrying computer-readable program instructions for performing various aspects of the present disclosure.

The computer-readable storage medium may be a tangible device that can hold and store instructions for use by an instruction execution device. The computer-readable storage medium may be, for example, but is not limited to, an electrical storage device, a magnetic storage device, an optical storage device, an electromagnetic storage device, a semiconductor storage device, or any suitable combination of the foregoing. More specific examples (a non-exhaustive list) of computer-readable storage media include: a portable computer disk, a hard disk, random access memory (RAM), read-only memory (ROM), erasable programmable read-only memory (EPROM or flash memory), static random access memory (SRAM), a portable compact disc read-only memory (CD-ROM), a digital versatile disc (DVD), a memory stick, a floppy disk, a mechanically encoded device such as a punch card or a raised structure in a groove having instructions stored thereon, and any suitable combination of the foregoing. A computer-readable storage medium, as used herein, is not to be construed as a transient signal per se, such as a radio wave or other freely propagating electromagnetic wave, an electromagnetic wave propagating through a waveguide or other transmission medium (e.g., a light pulse through a fiber-optic cable), or an electrical signal transmitted through a wire.

The computer-readable program instructions described herein may be downloaded from a computer-readable storage medium to respective computing/processing devices, or to an external computer or external storage device over a network such as the Internet, a local area network, a wide area network, and/or a wireless network. The network may include copper transmission cables, fiber-optic transmission, wireless transmission, routers, firewalls, switches, gateway computers, and/or edge servers. A network adapter card or network interface in each computing/processing device receives the computer-readable program instructions from the network and forwards them for storage in a computer-readable storage medium within that computing/processing device.

Computer program instructions for carrying out operations of the present disclosure may be assembly instructions, instruction set architecture (ISA) instructions, machine instructions, machine-dependent instructions, microcode, firmware instructions, state-setting data, or source code or object code written in any combination of one or more programming languages, including object-oriented programming languages such as Smalltalk, C++, and Java, and conventional procedural programming languages such as the "C" language or similar programming languages. The computer-readable program instructions may execute entirely on the user's computer, partly on the user's computer, as a stand-alone software package, partly on the user's computer and partly on a remote computer, or entirely on a remote computer or server. Where a remote computer is involved, the remote computer may be connected to the user's computer through any kind of network, including a local area network (LAN) or a wide area network (WAN), or may be connected to an external computer (e.g., through the Internet using an Internet service provider). In some embodiments, an electronic circuit, such as a programmable logic circuit, a field-programmable gate array (FPGA), or a programmable logic array (PLA), is personalized by utilizing state information of the computer-readable program instructions, and the electronic circuit can execute the computer-readable program instructions to implement various aspects of the present disclosure.

Aspects of the present disclosure are described herein with reference to flowcharts and/or block diagrams of methods, apparatuses (systems), and computer program products according to embodiments of the present disclosure. It should be understood that each block of the flowcharts and/or block diagrams, and combinations of blocks in the flowcharts and/or block diagrams, can be implemented by computer-readable program instructions.

These computer-readable program instructions may be provided to a processing unit of a general-purpose computer, a special-purpose computer, or another programmable data processing apparatus to produce a machine, such that the instructions, when executed by the processing unit of the computer or other programmable data processing apparatus, create means for implementing the functions/acts specified in one or more blocks of the flowcharts and/or block diagrams. These computer-readable program instructions may also be stored in a computer-readable storage medium, where they cause a computer, a programmable data processing apparatus, and/or other devices to operate in a particular manner, such that the computer-readable medium storing the instructions comprises an article of manufacture including instructions that implement aspects of the functions/acts specified in one or more blocks of the flowcharts and/or block diagrams.

The computer-readable program instructions may also be loaded onto a computer, another programmable data processing apparatus, or another device, causing a series of operational steps to be performed on the computer, other programmable data processing apparatus, or other device to produce a computer-implemented process, such that the instructions executing on the computer, other programmable data processing apparatus, or other device implement the functions/acts specified in one or more blocks of the flowcharts and/or block diagrams.

The flowcharts and block diagrams in the figures illustrate the architecture, functionality, and operation of possible implementations of systems, methods, and computer program products according to various embodiments of the present disclosure. In this regard, each block in a flowchart or block diagram may represent a module, a program segment, or a portion of instructions, which comprises one or more executable instructions for implementing the specified logical function(s). In some alternative implementations, the functions noted in the blocks may occur in an order different from that noted in the figures. For example, two consecutive blocks may in fact be executed substantially in parallel, or they may sometimes be executed in the reverse order, depending on the functionality involved. It should also be noted that each block of the block diagrams and/or flowcharts, and combinations of blocks in the block diagrams and/or flowcharts, can be implemented by a dedicated hardware-based system that performs the specified functions or acts, or by a combination of dedicated hardware and computer instructions.

Various embodiments of the present disclosure have been described above. The foregoing description is exemplary, not exhaustive, and is not limited to the disclosed embodiments.

Claims (23)

Translated from Chinese

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| PCT/CN2021/104238, WO2022179032A1 (en) | 2021-02-25 | 2021-07-02 | Method for storage management, and device, medium, and program product |

Applications Claiming Priority (2)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202110208064 | 2021-02-25 | | |
| CN2021102080640 | 2021-02-25 | | |

Publications (1)

| Publication Number | Publication Date |
|---|---|
| CN114968076A (en) | 2022-08-30 |

Family

ID=82973404

Family Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202110615806.1A (Pending), CN114968076A (en) | 2021-02-25 | 2021-06-02 | Method, apparatus, medium, and program product for storage management |

Country Status (2)

| Country | Link |
|---|---|
| CN (1) | CN114968076A (en) |
| WO (1) | WO2022179032A1 (en) |

Family Cites Families (7)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN106681990B (en)* | 2015-11-05 | 2019-10-25 | 华中科技大学 | A prefetching method for cached data in a mobile cloud storage environment |
| US10303608B2 (en)* | 2017-08-22 | 2019-05-28 | Qualcomm Incorporated | Intelligent data prefetching using address delta prediction |
| DE112017008158T5 (en)* | 2017-11-22 | 2020-09-10 | Intel Corporation | File pre-fetch scheduling for cache to reduce latencies |
| CN111104054B (en)* | 2018-10-29 | 2023-10-27 | 伊姆西Ip控股有限责任公司 | Method, apparatus and computer program product for managing input/output operations |
| CN111124955B (en)* | 2018-10-31 | 2023-09-08 | 珠海格力电器股份有限公司 | Cache control method and equipment and computer storage medium |
| CN112256599B (en)* | 2019-07-22 | 2024-11-15 | 华为技术有限公司 | A data pre-fetching method, device and storage device |
| CN111143243B (en)* | 2019-12-19 | 2023-06-27 | 上海交通大学 | A cache prefetching method and system based on NVM hybrid memory |

- 2021

- 2021-06-02: CN application CN202110615806.1A, published as CN114968076A (en), status: Pending

- 2021-07-02: WO application PCT/CN2021/104238, published as WO2022179032A1 (en), status: Ceased

Also Published As

| Publication number | Publication date |
|---|---|
| WO2022179032A1 (en) | 2022-09-01 |

Similar Documents

| Publication | Publication Date | Title |
|---|---|---|
| US10831375B2 (en) | | Hierarchical pre-fetch pipelining in a hybrid memory server |
| US8423715B2 (en) | | Memory management among levels of cache in a memory hierarchy |
| US8694584B2 (en) | | Speculative and coordinated data access in a hybrid memory server |
| CN113906384A (en) | | Memory as a service in an Artificial Neural Network (ANN) application |
| US10372622B2 (en) | | Software controlled cache line replacement within a data property dependent cache segment of a cache using a cache segmentation enablement bit and cache segment selection bits |
| WO2022179032A1 (en) | | Method for storage management, and device, medium, and program product |
| US9552293B1 (en) | | Emulating eviction data paths for invalidated instruction cache |
| US9286238B1 (en) | | System, apparatus, and method of cache management |

Legal Events

| Date | Code | Title | Description |
|---|---|---|---|
| | PB01 | Publication | |
| | SE01 | Entry into force of request for substantive examination | |