CN114967752A - Unmanned aerial vehicle autonomous landing method and system based on vision - Google Patents

- Publication number

- CN114967752A (application number CN202210719200.7A)

- Authority

- CN

- China

- Prior art keywords

- action

- control

- apron

- uav

- altitude

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Pending

Classifications

- G—PHYSICS

- G05—CONTROLLING; REGULATING

- G05D—SYSTEMS FOR CONTROLLING OR REGULATING NON-ELECTRIC VARIABLES

- G05D1/00—Control of position, course, altitude or attitude of land, water, air or space vehicles, e.g. using automatic pilots

- G05D1/10—Simultaneous control of position or course in three dimensions

- G05D1/101—Simultaneous control of position or course in three dimensions specially adapted for aircraft

Landscapes

- Engineering & Computer Science (AREA)

- Aviation & Aerospace Engineering (AREA)

- Radar, Positioning & Navigation (AREA)

- Remote Sensing (AREA)

- Physics & Mathematics (AREA)

- General Physics & Mathematics (AREA)

- Automation & Control Theory (AREA)

- Control Of Position, Course, Altitude, Or Attitude Of Moving Bodies (AREA)

Description

Technical field

The invention relates to the technical field of unmanned aerial vehicle (UAV) navigation, and in particular to a vision-based autonomous landing method and system for a UAV.

Background

An unmanned aerial vehicle (UAV), commonly called a drone, is operated by a wireless remote control device together with its own onboard program controller. As technology develops, UAVs are used ever more widely. Compared with manned aircraft, UAVs are better suited to missions in dangerous and harsh environments. Owing to their small size, low cost, good cost-effectiveness, long range and lack of an onboard pilot, they are now widely used in both military and civilian fields. In the military field they provide combat support such as tactical reconnaissance, electronic jamming and target identification. In the civilian field, UAVs are expected to become mainstream in agricultural and forestry plant protection, power-line inspection, logistics and delivery, forest fire prevention, traffic law enforcement, high-altitude safety, and search and rescue in hazardous environments.

At present, autonomous landing navigation technologies for UAVs, in China and abroad, mainly comprise inertial navigation systems (INS), GPS navigation, integrated INS/GPS navigation systems and visual navigation systems. An INS does not rely on any external information, is simple to implement and is stable, but its main problem is sensitivity to initial values: the navigation error grows over time, so the estimated pose parameters carry large errors and cannot meet the accuracy required for landing. GPS navigation is widely used and depends on radio signal propagation. It is fast, efficient, accurate and available in all weather, but satellite signals are easily jammed or lost, which makes positioning inaccurate. An integrated INS/GPS system compensates for the shortcomings of each individual system, yet it remains affected by GPS problems such as satellite outages. These systems are currently not accurate enough to meet UAV landing requirements.

Compared with traditional inertial navigation, visual navigation accumulates little error; compared with GPS navigation, it is less affected by the surrounding environment, time of day and weather. A vision sensor uses visible light: external light reflected by objects enters the camera and forms an image, so it is not subject to electromagnetic interference. Vision sensors save time and cost, are lightweight and have a simple structure. Their most distinctive feature compared with other sensors is that they allow targets to be recognized intelligently, whereas other sensors only provide raw sensing data. In addition, visual navigation is covert and fully autonomous, and the onboard camera resists interference, all of which improve system performance. Vision-based navigation has therefore been a research focus in recent years. Landing is the phase in which UAV crashes occur most frequently, so reliable and accurate landing technology is an important trend in UAV development, and its core is achieving accurate, stable and real-time navigation.

With the development of computer vision, machine-vision research has increased and algorithms built on deep learning frameworks have been applied to visual landing, yet landing in complex environments and on dynamic platforms remains a technical challenge, and landing-marker detection and tracking under complex conditions need further study. At present there is little research on autonomous UAV landing on moving targets, a task that requires the UAV to recognize the target, track the moving target and predict its behavior state.

Summary of the invention

In view of the problems in the prior art, the object of the invention is to provide a vision-based autonomous landing method for a UAV. It proposes a new algorithm framework and training scheme based on deep reinforcement learning in which several networks share the burden of the fitting task, that is, several control networks jointly control the navigation of the UAV. The aim is to solve the problem of landing a UAV on a dynamic platform with high robustness and good portability.

The invention is realized through the following technical solution:

A vision-based autonomous landing method for a UAV, comprising the following steps:

Step 1. The UAV acquires an image of the dynamic apron and preprocesses it.

Step 2. A trained deep learning network extracts the motion features, distance features and contour scale change features of the dynamic apron from the preprocessed image, and outputs a direction control action, an altitude control action and a speed control action for the UAV.

Step 3. A direction control command and an altitude control command are determined from the direction control action, altitude control action and speed control action.

Step 4. The UAV is controlled to land on the dynamic apron according to the direction control command and the altitude control command.

Preferably, in step 1 the acquired image is copied three times and each copy is preprocessed separately; the preprocessing consists of dimensionality reduction, compression and stacking of the images.

Preferably, the deep learning network in step 2 comprises a direction control network, an altitude control network and a region prediction network.

The direction control network extracts the motion features of the apron from the preprocessed image sequence and outputs a direction control action according to those features.

The altitude control network extracts the distance features of the apron from the preprocessed depth image sequence and outputs a UAV altitude control action according to those features.

The region prediction network extracts the contour scale change features of the apron from the preprocessed image sequence and outputs a UAV speed control action according to those features.

Preferably, the direction control command and the altitude control command are determined in step 3 as follows:

An action selection strategy selects the optimal action from each of the direction control actions, altitude control actions and speed control actions, and the direction control command and altitude control command are determined from the optimal actions and the initial speed of the UAV.

Preferably, the direction control command is determined from the optimal direction control action and the optimal speed control action, combined with the initial speed of the UAV.

Preferably, the altitude control command is determined from the optimal altitude control action and the optimal speed control action.

Preferably, the direction control command is expressed as:

$$a_{uav1} = a_i \cdot a_0 \cdot b_i$$

where $a_0$ is the initial speed of the UAV, comprising the linear velocity $v$ and the angular velocity $w$; $a_i$ is the optimal direction control action; and $b_i$ is the optimal speed control action.

Preferably, the altitude control command is expressed as:

$$a_{uav2} = A_i \cdot b_i$$

where $A_i$ is the optimal altitude control action and $b_i$ is the optimal speed control action.

A system implementing the vision-based UAV autonomous landing method, comprising:

an image preprocessing module, which acquires images of the dynamic apron from the UAV and preprocesses them;

a feature extraction module, which uses the trained deep learning network to extract the motion features, distance features and contour scale change features of the dynamic apron from the preprocessed images, and outputs the direction control action, altitude control action and speed control action of the UAV;

a control command output module, which determines the direction control command and altitude control command of the UAV from the direction control action, altitude control action and speed control action;

a control module, which controls the UAV to land on the dynamic apron according to the direction control command and the altitude control command.

Compared with the prior art, the invention has the following beneficial technical effects:

The invention discloses a vision-based autonomous landing method for a UAV that achieves autonomous landing on a dynamic apron in an unknown environment. Using feature extraction and state estimation on the UAV's visual sequence, the method extracts features such as the center position, direction of motion and speed of the dynamic apron from the processed visual information, estimates the relative pose between the UAV and the apron, and predicts the apron's spatio-temporal state and motion behavior. From these perception results it directly generates control commands for the UAV's direction of motion, climb or descent, and flight speed, so that the dynamic-apron landing task can be carried out in an unknown, GPS-denied environment. The method combines cooperative control by multiple control networks based on deep reinforcement learning with prediction of the target's spatio-temporal state, so that the UAV completes the landing task autonomously, without human intervention, which greatly improves its autonomy and intelligence.

Description of the drawings

FIG. 1 is an overall system block diagram of the UAV autonomous landing method of the invention.

FIG. 2 is a block diagram of the network structure proposed by the invention based on deep reinforcement learning.

FIG. 3 is a flow chart of the UAV autonomous landing method of the invention.

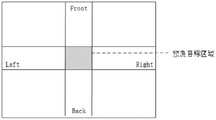

FIG. 4 is a schematic diagram of the landing-area prediction display of the invention.

FIG. 5 is a block diagram of the training protocol algorithm of the invention.

Detailed description

The invention is described in further detail below with reference to the accompanying drawings; the description explains the invention and does not limit it.

Referring to FIGS. 1-5, a vision-based autonomous landing method for a UAV comprises the following steps:

Step 1. The onboard camera of the UAV acquires an image of the apron, and the acquired image is preprocessed.

Specifically, the acquired image is a first-person-view image from the UAV, and preprocessing consists of dimensionality reduction, compression and stacking. The acquired image is copied three times and each copy is preprocessed separately; the three preprocessed copies serve as input data for the direction control network, altitude control network and region prediction network described below.

Input data for the direction control network: the acquired RGB image is reduced to a grayscale (GRAY) image, the grayscale image is compressed from 640*480 to 110*110, and several consecutive compressed frames are stacked to form the input of the direction control network.

Input data for the altitude control network: the depth image is converted into pseudo-lidar data, and the RGB image undergoes the dimensionality reduction, compression and stacking described above; the result serves as the input of the altitude control network.

Input data for the region prediction network: the acquired RGB image is reduced to a grayscale image, the grayscale image is compressed from 640*480 to 96*96, and several consecutive compressed frames are stacked to form the input of the region prediction network.
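The grayscale conversion, compression and stacking described above can be sketched as follows. This is only an illustration under stated assumptions: the BT.601 luma weights, nearest-neighbour resizing, the four-frame stack and the names `rgb_to_gray`, `resize_nearest` and `FrameStacker` are not taken from the patent, which does not say how each operation is implemented.

```python
from collections import deque


def rgb_to_gray(img):
    """Convert an RGB image (rows of (r, g, b) tuples) to grayscale floats."""
    # Assumption: ITU-R BT.601 luma weights; the source names no conversion.
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in img]


def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize of a 2-D image given as a list of rows."""
    in_h, in_w = len(img), len(img[0])
    return [
        [img[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


class FrameStacker:
    """Keeps the most recent n_frames preprocessed frames for one network."""

    def __init__(self, size, n_frames=4):
        self.size = size  # e.g. 110 for the direction network, 96 for region prediction
        self.frames = deque(maxlen=n_frames)

    def push(self, rgb_img):
        small = resize_nearest(rgb_to_gray(rgb_img), self.size, self.size)
        if not self.frames:
            # Assumption: at the start, the first frame is repeated to fill the stack.
            self.frames.extend([small] * self.frames.maxlen)
        else:
            self.frames.append(small)
        return list(self.frames)
```

One stacker per network (with its own target size) would mirror the three separately preprocessed copies of each camera frame.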

Step 2. Build and train the deep learning network, which comprises a direction control network (D-NET) based on GRAY image sequences, an altitude control network (H-NET) based on GRAY-D image sequences, and a region prediction network (F-NET) based on GRAY image sequences.

The preprocessed images are fed to the three control networks, which output the UAV's direction of motion $a^1_t$, altitude $a^2_t$ and change in speed $a^3_t$ respectively. After the UAV executes the actions, it receives new state information and reward information.

The three control networks have the same structure; the direction control network (D-NET) is used as the example below.

D-NET contains three convolutional layers. Four consecutive 55*55 frames are stacked as the input of D-NET, and the three convolutional layers, activated by ReLU functions, generate a feature representation from the processed image input. Specifically, the first convolutional layer uses 4 kernels of size 3*3 with stride 2; the second uses 8 kernels of size 3*3 with stride 2; and the third uses 16 kernels of size 3*3 with stride 2. Optimized separately for each control network, the convolutional layers produce feature representations targeted at that network's control requirement. In each branch, the first fully connected layer is activated by a ReLU function, the second by a linear function, and the third by a Softmax function.
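To make the layer sizes concrete, the short sketch below traces the feature-map shape through the three convolutional layers. It assumes no padding and floor rounding, neither of which the patent specifies, and the function names are illustrative only.

```python
def conv_out(size, kernel=3, stride=2, padding=0):
    """Spatial size after one convolution, using floor rounding."""
    return (size + 2 * padding - kernel) // stride + 1


def feature_shapes(in_size=55, channels=(4, 8, 16)):
    """Return (channels, height, width) after each of the three conv layers."""
    shapes = []
    size = in_size
    for c in channels:
        size = conv_out(size)  # 3*3 kernel, stride 2, as stated in the text
        shapes.append((c, size, size))
    return shapes


shapes = feature_shapes()
# Number of values passed to the first fully connected layer.
flat_features = shapes[-1][0] * shapes[-1][1] * shapes[-1][2]
```

Under these assumptions a 55*55 input shrinks to 27*27, then 13*13, then 6*6, so the last layer yields 16 * 6 * 6 = 576 features; a different padding choice would change these numbers.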

During training, each step follows the training protocol shown in FIG. 5. First, the direction control network, altitude control network and speed control network are initialized. The three control networks then each acquire images and take the preprocessed images as state information ($s_1$, $s_2$, $s_3$); from this state information each network outputs a value function, and the best action is selected and executed. At every step the UAV checks whether a termination condition has been reached. If so, the loop stops; otherwise the reward is updated and the state transitions to a new state, which is fed to the networks to compute and select a new action. The tuple <state, action, reward, new state> is stored in experience replay pools 1, 2 and 3.
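The three experience replay pools can be sketched as small fixed-capacity buffers, one per control network. The class name `ReplayBuffer`, the capacity and the uniform random sampling are assumptions made for illustration; the patent only states that the tuples are stored and later sampled.

```python
import random
from collections import deque, namedtuple

# One stored experience: <state, action, reward, new state>.
Transition = namedtuple("Transition", ["state", "action", "reward", "next_state"])


class ReplayBuffer:
    """Fixed-capacity pool that discards the oldest transition when full."""

    def __init__(self, capacity=10000, seed=None):
        self.memory = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def store(self, state, action, reward, next_state):
        self.memory.append(Transition(state, action, reward, next_state))

    def sample(self, batch_size):
        # Assumption: uniform sampling without replacement.
        k = min(batch_size, len(self.memory))
        return self.rng.sample(list(self.memory), k)

    def __len__(self):
        return len(self.memory)


# Pools 1, 2 and 3, one for each control network.
pools = {name: ReplayBuffer(capacity=1000, seed=0)
         for name in ("direction", "altitude", "speed")}
```

Keeping the pools separate lets each network train on its own state encoding and reward signal.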

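A tuple $\langle s^1_t, a^1_t, r^1_t, s^1_{t+1} \rangle$ is sampled from experience replay pool 1, where $s^1_t$ is the state encoding obtained by the direction control network at time $t$, $a^1_t$ is the action selected by the UAV, $r^1_t$ is the evaluation of the selected action, and $s^1_{t+1}$ is the state at the next time step. Likewise, $\langle s^2_t, a^2_t, r^2_t, s^2_{t+1} \rangle$ is sampled from pool 2 and $\langle s^3_t, a^3_t, r^3_t, s^3_{t+1} \rangle$ from pool 3. In each iteration, the direction control network first takes $s^1_t$ and computes the state value function $Q(s^1_t; \omega_1)$, where $\omega_1$ denotes the direction control network parameters; the altitude control network takes $s^2_t$ and computes $Q(s^2_t; \omega_2)$; and the region prediction network takes $s^3_t$ and computes $Q(s^3_t; \omega_3)$. The executed actions $a^1_t$, $a^2_t$ and $a^3_t$ are then used to select the state-action values $Q(s^1_t, a^1_t; \omega_1)$, $Q(s^2_t, a^2_t; \omega_2)$ and $Q(s^3_t, a^3_t; \omega_3)$. The next states $s^1_{t+1}$, $s^2_{t+1}$ and $s^3_{t+1}$ are used to compute the value functions $Q(s^1_{t+1}; \omega_1)$, $Q(s^2_{t+1}; \omega_2)$ and $Q(s^3_{t+1}; \omega_3)$, and the action with the largest Q value is selected in each, giving $Q(s^1_{t+1}, a^1_{t+1}; \omega_1)$, $Q(s^2_{t+1}, a^2_{t+1}; \omega_2)$ and $Q(s^3_{t+1}, a^3_{t+1}; \omega_3)$. Finally, the target state-action value $y_1$ at time $t$ is computed from the discount factor $\gamma$ and the reward $r^1_t$.

Based on this target, the loss function of the direction control network is:

$$L_1(\omega_1) = E\left[\left(y_1 - Q(s^1_t, a^1_t; \omega_1)\right)^2\right]$$

The target state-action value $y_2$ at time $t$ is computed in the same way from the discount factor $\gamma$ and the reward $r^2_t$.

Based on this target, the loss function of the altitude control network is:

$$L_2(\omega_2) = E\left[\left(y_2 - Q(s^2_t, a^2_t; \omega_2)\right)^2\right]$$

The target state-action value $y_3$ at time $t$ is computed in the same way from the discount factor $\gamma$ and the reward $r^3_t$.

Based on this target, the loss function of the region prediction network is:

$$L_3(\omega_3) = E\left[\left(y_3 - Q(s^3_t, a^3_t; \omega_3)\right)^2\right]$$

The parameters of the control networks are adjusted with the Adam optimizer.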
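The target and loss computation described above can be illustrated numerically. The sketch assumes the standard one-step Q-learning target, reward plus discounted maximum next-state Q value, and treats terminal transitions as having no future value; the exact target expression is not reproduced in this text, so both points are assumptions, as are the function names.

```python
def td_target(reward, next_q_values, gamma=0.99, terminal=False):
    """Assumed one-step target: y = r + gamma * max_a Q(s_{t+1}, a)."""
    if terminal:
        # Assumption: no bootstrapping once the episode has ended.
        return reward
    return reward + gamma * max(next_q_values)


def mse_loss(targets, q_taken):
    """Batch estimate of E[(y - Q(s_t, a_t))^2] over sampled transitions."""
    return sum((y - q) ** 2 for y, q in zip(targets, q_taken)) / len(targets)


# Example for one network: two sampled transitions.
y_a = td_target(1.0, [0.5, 2.0, 1.0], gamma=0.9)      # 1.0 + 0.9 * 2.0
y_b = td_target(1.0, [0.3, 0.1], gamma=0.9, terminal=True)
loss = mse_loss([y_a, y_b], [2.0, 1.0])
```

The same two functions would be applied independently to each of the three networks, each with its own sampled batch and parameters.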

Step 3. The trained deep learning network extracts the motion features, distance features and contour scale change features of the dynamic apron from the preprocessed images.

The direction control network (D-NET) extracts the motion features $s^1_t$ of the apron from the preprocessed image sequence and outputs the direction control action $a_{dir}$ according to those features.

The motion features of the apron include its contour features, shape features and position change features.

The altitude control network (H-NET) extracts the distance features $s^2_t$ of the apron from the preprocessed depth image sequence and outputs the UAV altitude control action $a_{height}$ according to those features.

The distance features of the apron include the height of the UAV above the ground and the distance between the UAV and the apron.

The region prediction network (F-NET) extracts the contour scale change features $s^3_t$ of the apron from the preprocessed image sequence and outputs the UAV speed control action $a_{fore}$ according to those features.

The contour scale change features of the apron include the contour features of the apron and the amount of contour scale change.

Step 4. The landing direction control command and altitude control command of the UAV are determined from the extracted motion features, distance features and contour scale change features of the dynamic apron.

Specifically, as shown in FIG. 2, the direction control network, altitude control network and region prediction network operate simultaneously.

The direction control network outputs a state-action value function whose corresponding direction control actions $a_{dir}$ are $a_1, a_2, a_3, a_4, a_5, a_6, a_7, a_8, a_9$.

Here $a_1$ to $a_9$ denote, respectively, forward, backward, left, right, upper left, upper right, lower left, lower right, and keeping the current direction. The direction control actions are:

$$a_{dir} = [a_1\ a_2\ a_3\ a_4\ a_5\ a_6\ a_7\ a_8\ a_9]$$

The altitude control actions $a_{height}$ corresponding to the state-action value function output by the altitude control network are $A_1$ and $A_2$, denoting upward and downward respectively:

$$a_{height} = [A_1\ A_2]$$

The speed control actions corresponding to the state-action value function output by the region prediction network are $b_1$, $b_2$ and $b_3$, denoting an increase in speed, unchanged speed and a decrease in speed respectively:

$$a_{fore} = [b_1\ b_2\ b_3]$$

An action selection strategy then selects the optimal actions $a_i$, $A_i$ and $b_i$ from the direction control actions, altitude control actions and speed control actions, according to the action evaluations estimated by the state-action value functions.
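The patent does not name the action selection strategy. A common choice in deep Q-learning is epsilon-greedy selection, sketched here purely as an assumption; the function name and the epsilon value are illustrative.

```python
import random


def select_action(q_values, epsilon=0.1, rng=random):
    """Epsilon-greedy: explore with probability epsilon, otherwise take argmax Q."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # random exploratory action
    # Greedy action: index of the largest estimated action value.
    return max(range(len(q_values)), key=lambda i: q_values[i])


# One independent choice per network output (Q values here are made up).
i_dir = select_action([0.1, 0.7, 0.2, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0], epsilon=0.0)
i_alt = select_action([0.4, 0.6], epsilon=0.0)
i_spd = select_action([0.2, 0.5, 0.3], epsilon=0.0)
```

With `epsilon=0.0` the choice is purely greedy, which corresponds to picking the optimal actions $a_i$, $A_i$ and $b_i$ from the three value estimates.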

The direction control command $a_{uav1}$ of the UAV is determined from the optimal actions and the initial speed of the UAV:

$$a_{uav1} = a_i \cdot a_0 \cdot b_i$$

where $a_0$ is the initial speed of the UAV, comprising the linear velocity $v$ and the angular velocity $w$.

The altitude control command $a_{uav2}$ is determined from the optimal altitude control action and the optimal speed control action:

$$a_{uav2} = A_i \cdot b_i$$
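A worked example may help show how the two products combine the selected actions. The numerical encodings below are hypothetical: the patent gives no values for the direction vectors, the speed factors or the climb signs, and only the linear part $v$ of $a_0$ is used here.

```python
# Hypothetical encodings (x: forward, y: left); none of these values are from the source.
DIRECTION_VECTORS = {
    "a1": (1.0, 0.0), "a2": (-1.0, 0.0), "a3": (0.0, 1.0), "a4": (0.0, -1.0),
    "a5": (1.0, 1.0), "a6": (1.0, -1.0), "a7": (-1.0, 1.0), "a8": (-1.0, -1.0),
}
SPEED_FACTORS = {"b1": 1.2, "b2": 1.0, "b3": 0.8}  # increase, unchanged, decrease
CLIMB_SIGNS = {"A1": 1.0, "A2": -1.0}              # upward, downward


def direction_command(a_i, b_i, v0, previous=(0.0, 0.0)):
    """a_uav1 = a_i * a0 * b_i, applied per axis to the initial speed v0."""
    if a_i == "a9":
        # Assumption: "keep the current direction" reuses the previous command.
        return previous
    dx, dy = DIRECTION_VECTORS[a_i]
    scale = v0 * SPEED_FACTORS[b_i]
    return (dx * scale, dy * scale)


def altitude_command(A_i, b_i):
    """a_uav2 = A_i * b_i: signed vertical command scaled by the speed action."""
    return CLIMB_SIGNS[A_i] * SPEED_FACTORS[b_i]
```

For instance, `direction_command("a1", "b1", 2.0)` gives a forward command of about 2.4, and `altitude_command("A2", "b3")` gives a descent command of -0.8 under these made-up factors.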

Step 5. The UAV is controlled to land on the dynamic apron according to the direction control command and the altitude control command.

Based on the D-NET processing results, apron behavior recognition and prediction of the dynamic apron's spatio-temporal state are completed, and the UAV's direction control command is output from the prediction. Based on the H-NET processing results, identification of and search for the apron are completed, and the altitude control command is output from the detection result. Based on the F-NET processing results, the apron's motion state is predicted, and the speed control command is output from the prediction. The UAV thereby completes the dynamic-apron landing task. The procedure is shown in FIG. 3, and the landing-area position predicted by F-NET can be displayed in real time, as shown in FIG. 4.

The invention also provides a vision-based UAV autonomous landing system, comprising an image preprocessing module, a feature extraction module, a control command output module and a control module.

The image preprocessing module acquires images of the dynamic apron from the UAV and preprocesses them.

The feature extraction module uses the trained deep learning network to extract the motion features, distance features and contour scale change features of the dynamic apron from the preprocessed images, and outputs the direction control action, altitude control action and speed control action of the UAV.

The control command output module determines the direction control command and altitude control command of the UAV from the direction control action, altitude control action and speed control action.

The control module controls the UAV to land on the dynamic apron according to the direction control command and the altitude control command.

The above only illustrates the technical idea of the invention and does not limit its scope of protection. Any modification made to the technical solution in accordance with the technical idea proposed by the invention falls within the scope of protection of the claims.

Claims (9)

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202210719200.7A / CN114967752A (en) | 2022-06-23 | 2022-06-23 | Unmanned aerial vehicle autonomous landing method and system based on vision |

Applications Claiming Priority (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202210719200.7A / CN114967752A (en) | 2022-06-23 | 2022-06-23 | Unmanned aerial vehicle autonomous landing method and system based on vision |

Publications (1)

| Publication Number | Publication Date |
|---|---|
| CN114967752A | 2022-08-30 |

Family

ID=82965863

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |
|---|---|---|---|
| CN202210719200.7A (Pending) / CN114967752A (en) | Unmanned aerial vehicle autonomous landing method and system based on vision | 2022-06-23 | 2022-06-23 |

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN114967752A (en) |

Cited By (3)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN119205906A (en)* | 2024-08-29 | 2024-12-27 | 南通大学 | A method for predicting the dynamic position of an offshore parking platform based on Bayesian deep learning |
| CN119918398A (en)* | 2024-12-30 | 2025-05-02 | 上海天御星空航天科技有限公司 | Spacecraft behavior prediction method and system |
| CN120428578A (en)* | 2025-07-08 | 2025-08-05 | 深圳市创达电子有限公司 | UAV landing pad control method |

Citations (5)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN103587708A (en)* | 2013-11-14 | 2014-02-19 | 上海大学 | Method for field fixed-point zero-dead-zone autonomous soft landing of a subminiature unmanned rotor aircraft |
| CN107065924A (en)* | 2017-03-15 | 2017-08-18 | 普宙飞行器科技(深圳)有限公司 | Vehicle-mounted UAV landing system, UAV capable of vehicle-mounted landing, and landing method |
| CN107544550A (en)* | 2016-06-24 | 2018-01-05 | 西安电子科技大学 | A vision-guided autonomous landing method for UAVs |
| US11036240B1 (en)* | 2018-06-18 | 2021-06-15 | Amazon Technologies, Inc. | Safe landing of aerial vehicles upon loss of navigation |
| KR20220068606A (en)* | 2020-11-19 | 2022-05-26 | 한서대학교 산학협력단 | Automatic landing algorithm of drone considering partial images |

Non-Patent Citations (3)

| Title |

|---|

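| Yue Pengyu (岳鹏宇): "Research on Target-Driven Autonomous Navigation of Mobile Robots", China Master's Theses Full-text Database (Information Science and Technology), no. 01, 15 January 2022 (2022-01-15), pages 31 - 39* |

| Zhang Ge (张舸); Yi Guoxing (伊国兴); Gao Xiang (高翔): "Autonomous Landing System for Rotor UAVs Based on Visual Navigation", Transducer and Microsystem Technologies (传感器与微系统), no. 09, 20 September 2018 (2018-09-20)* |

| Gao Jiayu (高嘉瑜); Wu Yunyun (武云云): "UAV Vision-Based Autonomous Landing Simulation System", Modern Navigation (现代导航), no. 02, 15 April 2017 (2017-04-15)* |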

Similar Documents

| Publication | Publication Date | Title |

|---|---|---|

| CN112378397B (en) | Unmanned aerial vehicle target tracking method and device and unmanned aerial vehicle | |

| CN112379681B (en) | Unmanned aerial vehicle obstacle avoidance flight method and device and unmanned aerial vehicle | |

| Cesetti et al. | A vision-based guidance system for UAV navigation and safe landing using natural landmarks | |

| CN114967752A (en) | Unmanned aerial vehicle autonomous landing method and system based on vision | |

| Kong et al. | Autonomous landing of an UAV with a ground-based actuated infrared stereo vision system | |

| CN112596071B (en) | Unmanned aerial vehicle autonomous positioning method and device and unmanned aerial vehicle | |

| CN112789672B (en) | Control and navigation system, gesture optimization, mapping and positioning techniques | |

| Roelofsen et al. | Reciprocal collision avoidance for quadrotors using on-board visual detection | |

| KR102313115B1 (en) | Autonomous flying drone using artificial intelligence neural network | |

| CN112380933B (en) | Unmanned aerial vehicle target recognition method and device and unmanned aerial vehicle | |

| CN112747736A (en) | Indoor unmanned aerial vehicle path planning method based on vision | |

| US20100305857A1 (en) | Method and System for Visual Collision Detection and Estimation | |

| Chen et al. | A review of autonomous obstacle avoidance technology for multi-rotor UAVs | |

| CN106155082A (en) | A bionic intelligent UAV obstacle avoidance method based on optical flow | |

| CN118534893A (en) | A path planning method based on air-ground collaborative system | |

| CN116719037A (en) | An environment sensing method and system for intelligent lawn mowing robots | |

| CN118897572A (en) | An AI-based drone obstacle avoidance method | |

| CN117270565A (en) | Airborne autonomous sensing and flight system based on vision | |

| Li et al. | UAV obstacle avoidance by human-in-the-loop reinforcement in arbitrary 3D environment | |

| CN110968112B (en) | Unmanned aerial vehicle autonomous landing method based on monocular vision | |

| TWI809727B (en) | Method for searching a path by using a three-dimensional reconstructed map | |

| CN115903880A (en) | Unmanned aerial vehicle autonomous image navigation and obstacle avoidance method based on improved reinforcement learning | |

| CN118795460A (en) | Unmanned aerial vehicle track tracking method based on perception radar | |

| Qi et al. | Detection and tracking of a moving target for UAV based on machine vision | |

| CN117311385A (en) | Unmanned aerial vehicle protection system and method based on multidimensional detection data |

Legal Events

| Date | Code | Title | Description |

|---|---|---|---|

| PB01 | Publication | ||

| SE01 | Entry into force of request for substantive examination | ||
