CN114630096A - Method, device and equipment for densifying point cloud of TOF camera and readable storage medium - Google Patents


- Publication number

- CN114630096A (application number CN202210242970.7)

- Authority

- CN

- China

- Prior art keywords

- angle

- pixel

- new

- tof camera

- dimensional coordinates

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Granted


Classifications

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N13/00—Stereoscopic video systems; Multi-view video systems; Details thereof

- H04N13/10—Processing, recording or transmission of stereoscopic or multi-view image signals

- H04N13/106—Processing image signals

- H04N13/128—Adjusting depth or disparity

- G—PHYSICS

- G01—MEASURING; TESTING

- G01S—RADIO DIRECTION-FINDING; RADIO NAVIGATION; DETERMINING DISTANCE OR VELOCITY BY USE OF RADIO WAVES; LOCATING OR PRESENCE-DETECTING BY USE OF THE REFLECTION OR RERADIATION OF RADIO WAVES; ANALOGOUS ARRANGEMENTS USING OTHER WAVES

- G01S17/00—Systems using the reflection or reradiation of electromagnetic waves other than radio waves, e.g. lidar systems

- G01S17/88—Lidar systems specially adapted for specific applications

- G01S17/89—Lidar systems specially adapted for specific applications for mapping or imaging

- G01S17/894—3D imaging with simultaneous measurement of time-of-flight at a 2D array of receiver pixels, e.g. time-of-flight cameras or flash lidar

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N13/00—Stereoscopic video systems; Multi-view video systems; Details thereof

- H04N13/10—Processing, recording or transmission of stereoscopic or multi-view image signals

- H04N13/106—Processing image signals

- H04N13/139—Format conversion, e.g. of frame-rate or size

Landscapes

- Engineering & Computer Science (AREA)

- Multimedia (AREA)

- Signal Processing (AREA)

- Physics & Mathematics (AREA)

- Computer Networks & Wireless Communication (AREA)

- Electromagnetism (AREA)

- General Physics & Mathematics (AREA)

- Radar, Positioning & Navigation (AREA)

- Remote Sensing (AREA)

- Processing Or Creating Images (AREA)

- Image Processing (AREA)

Description (translated from Chinese)

Technical field

The present application relates to the technical field of image processing and artificial intelligence, and in particular to a method, apparatus, device, and readable storage medium for densifying the point cloud of a TOF camera.

Background

In the prior art, a dense result is obtained by upsampling the original sparse point cloud (random mean density interpolation, local plane sampling, or triangulation-based cubic interpolation). This is feasible when the 3D sensor itself has sufficient resolution. However, when there are only a few hundred points and the point spacing is relatively large, this approach increases the depth error and the resulting point cloud looks very unnatural.

Summary of the invention

The purpose of the embodiments of the present application is to propose a method, apparatus, device, and readable storage medium for densifying the point cloud of a TOF camera, so as to solve the technical problem that the upsampling methods used in the prior art produce an unnatural point cloud and large depth errors when the point spacing is large.

To solve the above technical problem, an embodiment of the present application provides a method for densifying the point cloud of a TOF camera, which adopts the following technical solution. The method includes the following steps:

acquiring the shooting data of the TOF camera at each angle, and acquiring the angle value, measured by the angle limiter, at which the TOF camera shoots at each angle, wherein the shooting data includes depth data and resolution data;

calculating the original three-dimensional coordinates at each angle according to the built-in parameters of the camera, the depth data, and the angle values, and setting an original position among the angles;

calculating the intrinsic spatial angular resolution of each pixel at the original position according to the built-in parameters of the camera and the resolution data;

calculating, from the angular resolution, the angle of each pixel in each row relative to the position of the camera principal point in that row;

calculating, from the angle of each pixel and the original three-dimensional coordinates, the new three-dimensional coordinates of the rotated TOF camera in the coordinate system of the original position;

fusing the new three-dimensional coordinates for the angle of each pixel and applying smoothing and filtering;

outputting the processed new three-dimensional coordinates as new point cloud data.

To solve the above technical problem, an embodiment of the present application further provides an apparatus for densifying the point cloud of a TOF camera, the apparatus comprising:

an acquisition module, configured to acquire the shooting data of the TOF camera at each angle and to acquire the angle value, measured by the angle limiter, at which the TOF camera shoots at each angle, wherein the shooting data includes depth data and resolution data;

an original three-dimensional coordinate calculation module, configured to calculate the original three-dimensional coordinates at each angle according to the built-in parameters of the camera, the depth data, and the angle values, and to set an original position among the angles;

an angular resolution calculation module, configured to calculate the intrinsic spatial angular resolution of each pixel at the original position according to the built-in parameters of the camera and the resolution data;

an angle calculation module, configured to calculate, from the angular resolution, the angle of each pixel in each row relative to the position of the camera principal point in that row;

a new three-dimensional coordinate calculation module, configured to calculate, from the angle of each pixel and the original three-dimensional coordinates, the new three-dimensional coordinates of the rotated TOF camera in the coordinate system of the original position;

a fusion module, configured to fuse the new three-dimensional coordinates for the angle of each pixel and apply smoothing and filtering;

a generation module, configured to output the processed new three-dimensional coordinates as new point cloud data.

To solve the above technical problem, an embodiment of the present application further provides a computer device, which adopts the following technical solution: the computer device includes a memory and a processor, the memory stores computer-readable instructions, and the processor implements the steps of the above method for densifying the point cloud of a TOF camera when executing the computer-readable instructions.

To solve the above technical problem, an embodiment of the present application further provides a computer-readable storage medium, which adopts the following technical solution: the computer-readable storage medium stores computer-readable instructions which, when executed by a processor, implement the steps of the above method for densifying the point cloud of a TOF camera.

Compared with the prior art, the present application acquires the shooting data of the TOF camera at each angle and the angle value, measured by the angle limiter, at which the TOF camera shoots at each angle; calculates the original three-dimensional coordinates at each angle according to the built-in parameters of the camera, the depth data, and the angle values; calculates the intrinsic spatial angular resolution of each pixel at the original position according to the built-in parameters of the camera and the resolution data; calculates, from the angular resolution, the angle of each pixel in each row relative to the position of the camera principal point in that row; calculates, from the angle of each pixel and the original three-dimensional coordinates, the new three-dimensional coordinates of the rotated TOF camera in the coordinate system of the original position; fuses the new three-dimensional coordinates for the angle of each pixel and applies smoothing and filtering; and outputs the processed new three-dimensional coordinates as new point cloud data. By recalculating new three-dimensional coordinates from the depth data at each angle, the sparse point cloud is densified, adverse effects such as depth misalignment and false points in the new point cloud are avoided, and depth data with relatively high accuracy and fidelity is obtained.

附图说明Description of drawings

为了更清楚地说明本申请中的方案,下面将对本申请实施例描述中所需要使用的附图做一个简单介绍,显而易见地,下面描述中的附图是本申请的一些实施例,对于本领域普通技术人员来讲,在不付出创造性劳动的前提下,还可以根据这些附图获得其他的附图。In order to illustrate the solutions in the present application more clearly, the following will briefly introduce the accompanying drawings used in the description of the embodiments of the present application. For those of ordinary skill, other drawings can also be obtained from these drawings without any creative effort.

图1是本申请可以应用于其中的示例性系统架构图;FIG. 1 is an exemplary system architecture diagram to which the present application can be applied;

图2是TOF相机点云的稠密化方法的一个实施例的流程图;Fig. 2 is the flow chart of one embodiment of the densification method of TOF camera point cloud;

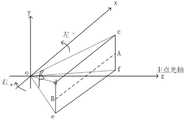

图3是角分辨率的计算坐标图;Fig. 3 is the calculation coordinate diagram of angular resolution;

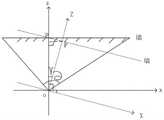

图4是新的三维坐标的计算坐标图;Fig. 4 is the calculated coordinate diagram of the new three-dimensional coordinate;

图5是原始点云与新的点云的俯视图;Figure 5 is a top view of the original point cloud and the new point cloud;

图6是原始点云与新的点云的正视图;Figure 6 is a front view of the original point cloud and the new point cloud;

图7是TOF相机点云的稠密化装置的一个实施例的结构示意图;7 is a schematic structural diagram of an embodiment of a device for densifying a point cloud of a TOF camera;

图8是根据本申请的计算机设备的一个实施例的结构示意图。FIG. 8 is a schematic structural diagram of an embodiment of a computer device according to the present application.

Detailed description

Unless otherwise defined, all technical and scientific terms used herein have the same meaning as commonly understood by a person skilled in the technical field of the present application. The terms used in the specification are only for describing specific embodiments and are not intended to limit the application. The terms "comprising" and "having" and any variations thereof in the specification, the claims, and the above description of the drawings are intended to cover non-exclusive inclusion. The terms "first", "second", and the like in the specification, the claims, or the above drawings are used to distinguish different objects, not to describe a particular order.

Reference herein to an "embodiment" means that a particular feature, structure, or characteristic described in connection with the embodiment can be included in at least one embodiment of the present application. The appearances of the phrase in various places in the specification do not necessarily all refer to the same embodiment, nor to separate or alternative embodiments that are mutually exclusive of other embodiments. Those skilled in the art understand, explicitly and implicitly, that the embodiments described herein may be combined with other embodiments.

To help those skilled in the art better understand the solutions of the present application, the technical solutions in the embodiments are described clearly and completely below with reference to the drawings.

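As shown in FIG. 1, the system architecture 100 may include terminal devices 101, 102, and 103, a network 104, and a server 105. The network 104 is the medium that provides communication links between the terminal devices 101, 102, 103 and the server 105. The network 104 may include various connection types, such as wired links, wireless communication links, or fiber-optic cables.

A user may use the terminal devices 101, 102, 103 to interact with the server 105 through the network 104 to receive or send messages. Various communication client applications may be installed on the terminal devices 101, 102, 103, such as web browser applications, shopping applications, search applications, instant messaging tools, email clients, and social platform software.

The terminal devices 101, 102, 103 may be various electronic devices that have a display screen and support web browsing, including but not limited to smartphones, tablet computers, e-book readers, MP3 (Moving Picture Experts Group Audio Layer III) players, MP4 (Moving Picture Experts Group Audio Layer IV) players, laptop computers, and desktop computers.

The server 105 may be a server that provides various services, for example a background server that supports the pages displayed on the terminal devices 101, 102, 103.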

It should be noted that the method for densifying the point cloud of a TOF camera provided by the embodiments of the present application is generally executed by the server, and accordingly the apparatus for densifying the point cloud of a TOF camera is generally arranged in the server.

It should be understood that the numbers of terminal devices, networks, and servers in FIG. 1 are merely illustrative. There may be any number of terminal devices, networks, and servers, as required by the implementation.

With continued reference to FIGS. 2 to 6, a flowchart of an embodiment of the method for densifying the point cloud of a TOF camera according to the present application is shown. The method includes the following steps:

Step S201: acquire the shooting data of the TOF camera at each angle, and acquire the angle value, measured by the angle limiter, at which the TOF camera shoots at each angle, wherein the shooting data includes depth data and resolution data.

It should be noted that the TOF camera is a tofv2.0 depth camera. To reduce the influence of ambient light, the TOF module uses a 940 nm VCSEL as its light source, paired with a lens that has an anti-reflection coating for 940 nm. Because 940 nm light makes up only a small proportion of ambient light or sunlight, the shooting data output by the TOF camera resists interference well. A servo capable of measuring its rotation angle is placed under the TOF camera, and the rotation angle of the servo is controlled by adjusting a pulse-width signal. An angle limiter is installed inside the servo, at its center, to measure the rotation angle of the servo, and each rotation is kept within a range of 1°. Because this technical solution considers only the angle change of the x-axis (yaw angle) and not of the y-axis (pitch angle), the approximate optical center of the TOF depth camera must coincide with the center of the servo to minimize the yaw error. The TOF camera and the servo can then be regarded as a front-end 3D module. The cost of the device is relatively low. The device collects the data sequence captured while rotating through small angles and transmits it to a computer, which completes the densification of the new point cloud.

The angle values are set as follows. The original position is set to 0°; rotation to the left of the original position is counted as negatively increasing, and rotation to the right of the original position as positively increasing. One back-and-forth sweep of the TOF camera is defined as a small-angle combination, in which the rotating camera passes through the original position, the negative increments, and the positive increments. The servo is driven through the small-angle combination according to the angle sequence, and for each angle the shooting data of the TOF camera and the angle value measured by the angle limiter are acquired.

The original position is the 0° position. While the angle limiter rotates, the y-axis direction remains unchanged. In the x-axis direction, a leftward rotation of the servo and TOF camera is counted as negatively increasing, so a rotation of 1° to the left is -1°; a rightward rotation is counted as positively increasing, so a rotation of 1° to the right is 1°. As shown in FIG. 3, the resulting order of the angles can be (0°, 1°, 2°, 3°, 4°, -1°, -2°, -3°, -4°), (4°, 3°, 2°, 1°, 0°, -1°, -2°, -3°, -4°), or (0°, -1°, -2°, -3°, -4°, 1°, 2°, 3°, 4°).
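As an illustration only, the first of the angle orderings listed above can be generated programmatically. The function name `sweep_order` and its parameters `max_angle` and `step` are hypothetical and do not come from the patent; this is a minimal sketch that assumes whole-degree steps.

```python
def sweep_order(max_angle=4, step=1):
    """Return one back-and-forth sweep in degrees: 0, +step..+max, then -step..-max."""
    positive = list(range(step, max_angle + 1, step))
    negative = [-angle for angle in positive]
    return [0] + positive + negative


print(sweep_order())  # [0, 1, 2, 3, 4, -1, -2, -3, -4]
```

The other two orderings can be obtained by rearranging the same `positive` and `negative` lists.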

Step S202: calculate the original three-dimensional coordinates at each angle according to the built-in parameters of the camera, the depth data, and the angle values, and set an original position among the angles.

The original position is the 0° position, which makes it convenient to obtain the corresponding data relative to the original position later. The original three-dimensional coordinates are X, the x-direction value in the TOF camera coordinate system; Y, the y-direction value in the TOF camera coordinate system; and Z, the z-direction value in the TOF camera coordinate system. They are used in the subsequent calculation of the angular resolution.
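The patent text does not spell out how X, Y, and Z are obtained from the depth data at this step. One common way, assumed here, is the standard pinhole camera model with focal lengths `fx`, `fy` and principal point `(u0, v0)`. The function name `backproject` is hypothetical, and the sketch below should not be read as the patent's exact procedure.

```python
def backproject(u, v, z, fx, fy, u0, v0):
    """Back-project pixel (u, v) with depth z into camera coordinates (X, Y, Z).

    Assumes an undistorted pinhole model: u = fx * X / Z + u0, v = fy * Y / Z + v0.
    """
    x = (u - u0) * z / fx
    y = (v - v0) * z / fy
    return (x, y, z)


# A pixel 20 columns right of and 10 rows below the principal point, at 2 m depth.
point = backproject(120, 90, 2.0, fx=100.0, fy=100.0, u0=100.0, v0=80.0)
```

A pixel at the principal point maps to a point on the optical axis, that is, X = Y = 0.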

Step S203: calculate the intrinsic spatial angular resolution of each pixel at the original position according to the built-in parameters of the camera and the resolution data.

As shown in FIG. 3, the intrinsic spatial angular resolution of each pixel at the original position is calculated from the built-in parameters of the camera and the resolution data by a formula in which Xc, Yc, Zc are the three-dimensional coordinates of the point at pixel coordinate c(u, v), fx and fy are the focal lengths of the TOF camera in the x and y directions respectively, and U0, V0 are the principal point coordinates of the TOF camera. The resulting angular resolution is the spatial angular resolution at each pixel coordinate of the pixel coordinate system.

Step S204: calculate, from the angular resolution, the angle of each pixel in each row relative to the position of the camera principal point in that row.

This step includes: obtaining, from the angular resolution, the angle of each pixel at the original position relative to the position of the principal point (from the camera intrinsics) in that row; assigning the sign of each angle according to the positive and negative directions, with the principal point position as the dividing line; and, after one back-and-forth small-angle combination, calculating the angle of each pixel in the row relative to the original position, starting from the original position, and applying the same calculation to every other row according to the negative and positive increments.
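The exact angular-resolution formula is not reproduced in the text, so the following is only a sketch under an assumption: with an undistorted pinhole model, the signed horizontal angle of column `u` relative to the principal point column `u0` is `atan((u - u0) / fx)`. Pixels left of the principal point get negative angles and pixels to the right get positive angles, matching the sign convention described above. The names `pixel_yaw_angle` and `row_angles` are hypothetical.

```python
import math


def pixel_yaw_angle(u, fx, u0):
    """Signed horizontal angle (radians) of pixel column u relative to the principal point."""
    return math.atan2(u - u0, fx)


def row_angles(width, fx, u0):
    """Angles for every column of one row; every row shares the same column angles."""
    return [pixel_yaw_angle(u, fx, u0) for u in range(width)]


angles = row_angles(width=5, fx=2.0, u0=2.0)  # columns 0..4, principal point at column 2
```

Because only the yaw direction is considered, the same list of column angles can be reused for each row of the image.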

Step S205: calculate, from the angle of each pixel and the original three-dimensional coordinates, the new three-dimensional coordinates of the rotated TOF camera in the coordinate system of the original position.

This step includes:

As shown in FIG. 4, let the depth data be z and convert it to a distance, distance = z / cos(theta). A rotation by a given angle in the 0° (original position) reference coordinate system is converted to radians, rad. Then the new x = distance * sin(theta + rad), the new y = y (unchanged), and the new z = distance * cos(theta + rad).
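The conversion above can be sketched as follows. This is an illustrative reading, assuming that the same per-pixel horizontal angle theta is used in both the new x and the new z, that theta is measured from the optical axis in the x-z plane, and that z is positive. The function name `rotate_to_reference` is hypothetical.

```python
import math


def rotate_to_reference(x, y, z, angle_deg):
    """Express a point seen by the camera rotated by angle_deg in the 0-degree frame."""
    theta = math.atan2(x, z)        # horizontal angle of the point in the rotated camera
    distance = z / math.cos(theta)  # range of the point in the x-z plane
    rad = math.radians(angle_deg)   # servo angle converted to radians
    new_x = distance * math.sin(theta + rad)
    new_z = distance * math.cos(theta + rad)
    return (new_x, y, new_z)        # y is unchanged because only yaw is considered


# A point 2 m straight ahead of a camera that has been turned 90 degrees to the right.
moved = rotate_to_reference(0.0, 0.5, 2.0, 90.0)
```

With a rotation of 0° the function returns the input point, which is a quick sanity check on the sign conventions.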

Step S206: fuse the new three-dimensional coordinates for the angle of each pixel and apply smoothing and filtering.

Before this step, the method further includes: checking whether the new three-dimensional coordinates for the angle of each pixel have corresponding depth data; when the new three-dimensional coordinates have depth data, fusing them and applying smoothing and filtering; and when the new three-dimensional coordinates have no depth data, deleting them. Filtering the new three-dimensional coordinates in this way prevents coordinates without depth data from affecting the densification of the new point cloud.
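A minimal sketch of this pre-fusion check is given below. How a missing depth value is encoded depends on the camera driver; the sketch assumes it appears as `None`, NaN, or a non-positive number, and the function name `drop_points_without_depth` is hypothetical.

```python
import math


def drop_points_without_depth(points):
    """Keep only (x, y, z) points whose depth z is a finite positive number."""
    return [
        p for p in points
        if p[2] is not None and math.isfinite(p[2]) and p[2] > 0
    ]


candidates = [(0.0, 0.0, 1.0), (1.0, 1.0, 0.0), (2.0, 2.0, float("nan")), (3.0, 3.0, None)]
valid = drop_points_without_depth(candidates)
```

Only the first candidate survives, since the others have zero, NaN, or missing depth.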

The step of fusing the new three-dimensional coordinates for the angle of each pixel and applying smoothing and filtering includes: fusing the new three-dimensional coordinates for the angle of each pixel; resampling and filtering the fused coordinates; smoothing the filtered coordinates and calculating normals; and displaying the normals on the smoothed new three-dimensional coordinates to form the new point cloud.

Point cloud smoothing is achieved by resampling, and the resampling is implemented with the moving least squares (MLS) method. The MLS fitting function is built from a coefficient vector a(x) and a basis function p(x), where a(x) is not a constant but a function of the coordinate x. On a local subdomain U of the fitting region, the fitting function is expressed as:

$$f(x) = \sum_{i=1}^{m} p_i(x)\,a_i(x) = p^T(x)\,a(x) \tag{1}$$

where $a(x) = [a_1(x), a_2(x), \dots, a_m(x)]^T$ is the vector of coefficients to be determined, which is a function of the coordinate x, and $p(x) = [p_1(x), p_2(x), \dots, p_m(x)]^T$ is called the basis function. The basis function is a complete polynomial of a given order, and m is the number of terms in the basis [7]. The commonly used quadratic basis is $[1, u, v, u^2, v^2, uv]^T$, so the fitting function in equation (1) is usually written as:

$$f(x) = a_0(x) + a_1(x)u + a_2(x)v + a_3(x)u^2 + a_4(x)v^2 + a_5(x)uv$$

The coefficients are obtained by minimizing the weighted sum of squared fitting errors at the nodes, where $w(x - x_i)$ is the weight function of node $x_i$.

The weight function is the core of the moving least squares method. The weight function $w(x - x_i)$ should have compact support: it is non-zero inside a subdomain around x and zero everywhere outside it. This subdomain is called the support domain of the weight function (that is, the influence domain of x), and its radius is denoted s. A commonly used weight function is the cubic spline weight function, in which $h_i$ is the size of the support domain of the weight function of the i-th node and β is an introduced influence coefficient.
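To make the role of the weight function concrete, the sketch below uses the cubic spline weight in the form commonly found in the moving least squares literature and applies it to a deliberately reduced case: a one-dimensional fit with the linear basis p = [1, x] instead of the quadratic basis in (u, v). The normalization r = |x - x_i| / (β·h), the use of one h for all nodes, and the names `cubic_spline_weight` and `mls_fit_1d` are assumptions made for illustration, not details confirmed by the patent.

```python
def cubic_spline_weight(distance, support_radius):
    """Compactly supported cubic spline weight; zero outside the support radius."""
    r = abs(distance) / support_radius
    if r <= 0.5:
        return 2.0 / 3.0 - 4.0 * r**2 + 4.0 * r**3
    if r <= 1.0:
        return 4.0 / 3.0 - 4.0 * r + 4.0 * r**2 - (4.0 / 3.0) * r**3
    return 0.0


def mls_fit_1d(x, nodes, values, h, beta=1.0):
    """Evaluate a moving-least-squares fit at x using the linear basis p = [1, x]."""
    radius = beta * h
    s0 = s1 = s2 = t0 = t1 = 0.0
    for xi, yi in zip(nodes, values):
        w = cubic_spline_weight(x - xi, radius)
        s0 += w            # sum of weights
        s1 += w * xi       # weighted first moment
        s2 += w * xi * xi  # weighted second moment
        t0 += w * yi
        t1 += w * xi * yi
    # Solve the 2x2 weighted normal equations for a(x) = [a0, a1].
    det = s0 * s2 - s1 * s1
    if abs(det) < 1e-12:
        raise ValueError("too few nodes inside the support domain")
    a0 = (s2 * t0 - s1 * t1) / det
    a1 = (s0 * t1 - s1 * t0) / det
    return a0 + a1 * x


nodes = [0.0, 0.5, 1.0, 1.5, 2.0]
values = [2.0 * n + 1.0 for n in nodes]  # samples of the line y = 2x + 1
smoothed = mls_fit_1d(0.75, nodes, values, h=1.0)
```

Because the coefficients are re-solved at every evaluation point with weights centered on that point, the fit follows local structure; with a linear basis it reproduces linear data exactly, which makes a convenient check.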

There are generally two ways to calculate point cloud normals: 1. use a surface reconstruction method to obtain the surface corresponding to the sampled points from the point cloud data, and then compute the normals from the surface model; 2. infer the surface normals directly from the point cloud dataset by approximation, estimating the surface normal at each point in the point cloud. Specifically, the surface normal at a point is estimated from the neighborhood of surrounding points (also called the k-neighborhood) by analyzing the eigenvectors and eigenvalues of the covariance matrix computed from the point's nearest neighbors.
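The second approach can be sketched with the standard library alone. The normal is taken as the eigenvector of the smallest eigenvalue of the neighborhood covariance matrix. To avoid depending on a linear algebra package, the sketch finds it by power iteration on (trace·I − C), whose dominant eigenvector is that same vector; in practice a library's symmetric eigen-solver would normally be used instead. The function name `estimate_normal` and the iteration count are hypothetical choices, and the sign of the returned normal is arbitrary.

```python
import math


def estimate_normal(neighbors, iterations=200):
    """Estimate the unit surface normal of a k-neighborhood of (x, y, z) points."""
    n = len(neighbors)
    centroid = [sum(p[i] for p in neighbors) / n for i in range(3)]
    # 3x3 covariance matrix of the neighborhood around its centroid.
    cov = [[sum((p[i] - centroid[i]) * (p[j] - centroid[j]) for p in neighbors) / n
            for j in range(3)] for i in range(3)]
    # The smallest-eigenvalue eigenvector of cov is the dominant one of trace*I - cov.
    trace = cov[0][0] + cov[1][1] + cov[2][2]
    shifted = [[(trace if i == j else 0.0) - cov[i][j] for j in range(3)]
               for i in range(3)]

    def multiply(m, v):
        return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]

    best, best_score = None, -1.0
    for axis in range(3):  # start from each axis so one start is never orthogonal
        v = [1.0 if i == axis else 0.0 for i in range(3)]
        for _ in range(iterations):
            w = multiply(shifted, v)
            norm = math.sqrt(sum(c * c for c in w))
            if norm == 0.0:
                break
            v = [c / norm for c in w]
        score = sum(a * b for a, b in zip(v, multiply(shifted, v)))  # Rayleigh quotient
        if score > best_score:
            best, best_score = v, score
    return best


flat_patch = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (1, 1, 0), (0.5, 0.2, 0)]
normal = estimate_normal(flat_patch)  # close to (0, 0, 1) up to sign
```

For a patch lying in the plane z = 0 the estimate points along the z-axis; orienting normals consistently (for example, toward the camera) is a separate step not shown here.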

步骤S207:将处理后的新的三维坐标作为新的点云数据并输出。Step S207: The processed new three-dimensional coordinates are used as new point cloud data and output.

如图5-图6所示,(a)原始单帧稀疏点云俯视效果、(b)稀疏点云追加后俯视效果、(c)原始单帧稀疏点云正视效果、(d)稀疏点云追加后正视效果,(a)(c)中可以看出原始点云非常稀疏,只有五百多个点,且由于相机硬件特点,中间含两条比较大的无效的空白;经过角度限位器的左右依次转动1°-4°后,即(b)(c)可以看出处理后新的点云效果稠密化明显,且保留了相机原有特性,通过上述方案中TOF进行深度数据采集以及通过角度限位器装置获得水平方向转动角度,垂直方向不变,通过得到每个角度下的深度数据重新计算原始位置的三维坐标系的三维数据,以此达到稀疏点云稠密化结果。As shown in Figure 5-Figure 6, (a) the top-down effect of the original single-frame sparse point cloud, (b) the top-down effect after the sparse point cloud is added, (c) the front-view effect of the original single-frame sparse point cloud, (d) the sparse point cloud After the addition, it can be seen in (a) and (c) that the original point cloud is very sparse, with only more than 500 points, and due to the hardware characteristics of the camera, there are two relatively large invalid blanks in the middle; after the angle limiter After turning 1°-4° left and right, that is (b) (c), it can be seen that the new point cloud after processing is obviously dense and retains the original characteristics of the camera. The TOF in the above scheme is used for depth data collection and The rotation angle in the horizontal direction is obtained through the angle limiter device, and the vertical direction remains unchanged. The three-dimensional data of the three-dimensional coordinate system of the original position is recalculated by obtaining the depth data at each angle, so as to achieve the result of sparse point cloud densification.

In this embodiment, the shooting data of the TOF camera at each angle is acquired, together with the angle value measured by the angle limiter for each shot. The original three-dimensional coordinates at each angle are calculated from the camera's intrinsic parameters, the depth data, and the angle value. The intrinsic spatial angular resolution of each pixel at the original position is calculated from the camera's intrinsic parameters and the resolution data, and from that angular resolution the angle of each pixel in each row relative to the position of the camera's principal point in that row is calculated. From each pixel's angle and the original three-dimensional coordinates, the new three-dimensional coordinates of the rotated TOF camera in the coordinate system of the original position are calculated. The new three-dimensional coordinates for each pixel's angle are fused and smooth-filtered, and the result is output as the new point cloud data. Recalculating new three-dimensional coordinates from the depth data at each angle densifies the sparse point cloud, avoids adverse effects such as depth misalignment and spurious points in the new point cloud, and yields depth data with relatively high accuracy and fidelity.

It should be emphasized that, to further ensure the privacy and security of the point cloud data, the data may also be stored in a node of a blockchain.

The blockchain referred to in this application is a new application mode of computer technologies such as distributed data storage, point-to-point transmission, consensus mechanisms, and encryption algorithms. A blockchain is essentially a decentralized database: a chain of data blocks linked to one another by cryptographic methods. Each data block contains a batch of network transaction information, which is used to verify the validity of that information (anti-counterfeiting) and to generate the next block. A blockchain may include an underlying blockchain platform, a platform product service layer, and an application service layer.
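The idea of blocks linked by cryptographic methods can be illustrated with a toy hash chain. This is only a sketch of the linking principle using SHA-256 from the Python standard library; the names `make_block` and `verify_chain` are hypothetical, and a real blockchain adds consensus, networking, and signatures that are not shown.

```python
import hashlib
import json

def make_block(payload, prev_hash):
    """Build a block whose hash covers both its payload and the previous block's hash."""
    body = json.dumps({"payload": payload, "prev_hash": prev_hash}, sort_keys=True)
    digest = hashlib.sha256(body.encode("utf-8")).hexdigest()
    return {"payload": payload, "prev_hash": prev_hash, "hash": digest}

def verify_chain(chain):
    """Return True if every block's hash is valid and links to its predecessor."""
    for i, block in enumerate(chain):
        expected = make_block(block["payload"], block["prev_hash"])["hash"]
        if block["hash"] != expected:
            return False
        if i > 0 and block["prev_hash"] != chain[i - 1]["hash"]:
            return False
    return True
```

Changing the payload of any stored block invalidates its hash, so tampering with stored point cloud data would be detectable.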

The embodiments of the present application may acquire and process the relevant data based on artificial intelligence technology. Artificial intelligence (AI) is the theory, methods, technology, and application systems that use a digital computer, or a machine controlled by a digital computer, to simulate, extend, and expand human intelligence, perceive the environment, acquire knowledge, and use that knowledge to obtain the best results.

Basic artificial intelligence technologies generally include sensors, dedicated AI chips, cloud computing, distributed storage, big data processing, operation/interaction systems, and mechatronics. Artificial intelligence software technologies mainly include computer vision, robotics, biometrics, speech processing, natural language processing, and machine learning/deep learning.

Those of ordinary skill in the art will understand that all or part of the processes in the methods of the above embodiments can be carried out by computer-readable instructions that direct the relevant hardware. The computer-readable instructions can be stored in a computer-readable storage medium, and when the program is executed it may include the processes of the method embodiments described above. The storage medium may be a non-volatile storage medium such as a magnetic disk, an optical disk, or a read-only memory (ROM), or it may be a random access memory (RAM) or the like.

It should be understood that although the steps in the flowcharts of the accompanying drawings are shown in the sequence indicated by the arrows, they are not necessarily executed in that sequence. Unless explicitly stated herein, there is no strict ordering constraint on these steps, and they may be executed in other orders. Moreover, at least some of the steps in the flowcharts may include multiple sub-steps or stages. These sub-steps or stages are not necessarily completed at the same moment but may be executed at different times, and they are not necessarily executed one after another but may be executed in turn or alternately with other steps, or with at least some of the sub-steps or stages of other steps.

With further reference to FIG. 7, as an implementation of the method shown in FIGS. 2-6, the present application provides an embodiment of a device for densifying a TOF camera point cloud. This device embodiment corresponds to the method embodiment shown in FIGS. 2-6, and the device can be applied in various electronic devices.

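As shown in FIG. 7, the device 300 for densifying a TOF camera point cloud in this embodiment includes an acquisition module 301, an original three-dimensional coordinate calculation module 302, an angular resolution calculation module 303, an angle calculation module 304, a new three-dimensional coordinate calculation module 305, a fusion module 306, and a generation module 307, where:

The acquisition module 301 is configured to acquire the shooting data of the TOF camera at each angle and the angle value measured by the angle limiter for each shot, where the shooting data includes depth data and resolution data;

The original three-dimensional coordinate calculation module 302 is configured to calculate the original three-dimensional coordinates at each angle from the camera's intrinsic parameters, the depth data, and the angle value, and to set one of the angles as the original position;

The angular resolution calculation module 303 is configured to calculate the intrinsic spatial angular resolution of each pixel at the original position from the camera's intrinsic parameters and the resolution data;

The angle calculation module 304 is configured to calculate, from the angular resolution, the angle of each pixel in each row relative to the position of the camera's principal point in that row;

The new three-dimensional coordinate calculation module 305 is configured to calculate, from each pixel's angle and the original three-dimensional coordinates, the new three-dimensional coordinates of the rotated TOF camera in the coordinate system of the original position;

The fusion module 306 is configured to fuse the new three-dimensional coordinates for each pixel's angle and apply smoothing and filtering;

The generation module 307 is configured to output the processed new three-dimensional coordinates as the new point cloud data.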

In some optional implementations of this embodiment, the acquisition module 301 includes:

An angle setting sub-module, which sets the original position to 0°, treats rotation to the left of the original position as a negative increment, and treats rotation to the right of the original position as a positive increment;

A setting sub-module, which defines one reciprocation of the TOF camera as a small-angle combination, where one reciprocation means that the rotating TOF camera passes through the original position, the negative increments, and the positive increments;

An angle value acquisition sub-module, which drives the servo to perform the small-angle combined rotation according to the angle sequence and acquires, at each angle, the TOF camera's shooting data and the corresponding angle value measured by the angle limiter.
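One possible angle sequence for a single reciprocation can be sketched as below. The exact path (sweep left, return through 0°, sweep right, return to 0°), the 1° step, and the 4° limit are assumptions for illustration; the function name `reciprocating_angles` is hypothetical.

```python
def reciprocating_angles(max_angle_deg=4, step_deg=1):
    """Return one back-and-forth sweep of servo angles in degrees.

    Left of the original position is negative, right is positive.
    """
    left = [-a for a in range(step_deg, max_angle_deg + 1, step_deg)]
    right = [a for a in range(step_deg, max_angle_deg + 1, step_deg)]
    # 0 -> leftmost -> back toward 0 -> 0 -> rightmost -> back toward 0 -> 0
    return [0] + left + left[-2::-1] + [0] + right + right[-2::-1] + [0]
```

A capture loop would step the servo through this list and, at each stop, store the depth frame together with the angle reported by the angle limiter rather than the commanded angle.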

In some optional implementations of this embodiment, the angle calculation module 304 includes:

An angle difference sub-module, which uses the angular resolution to obtain, at the original position, the angle of each pixel relative to the position in its row of the principal point given by the camera intrinsics;

A resolution difference sub-module, which assigns each angle a sign according to the positive and negative directions, with the principal point position as the dividing line;

An angle calculation sub-module, which, after one small-angle combination has been completed, starts from the original position and uses the angular resolution to calculate the angle of each pixel in each row relative to the position in that row of the principal point given by the camera intrinsics, assigning the sign of each angle according to the positive and negative directions, with the principal point position as the dividing line.
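The signed per-pixel angle within one row can be sketched as follows, assuming for simplicity a uniform angular resolution per pixel. The function name `pixel_angles_deg` and its parameters are hypothetical; `u0` stands for the principal point column.

```python
def pixel_angles_deg(width, u0, angular_res_deg):
    """Signed horizontal angle of each column relative to the principal point column u0.

    Columns left of the principal point get negative angles and columns to
    the right get positive angles, matching the left/right sign convention.
    """
    return [(u - u0) * angular_res_deg for u in range(width)]
```

For a five-pixel row with the principal point at column 2 and 0.5° per pixel, the angles run from -1.0° to 1.0°.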

In some optional implementations of this embodiment, the calculation formula of the angular resolution calculation module 303 is:

where Xc, Yc, and Zc are the three-dimensional coordinates of the point at pixel coordinate c(u, v), fx and fy are the focal lengths of the TOF camera in the x and y directions respectively, and U0 and V0 are the principal point coordinates of the TOF camera. The resulting angular resolution is the spatial angular resolution at each pixel coordinate of the pixel coordinate system.
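For reference, the symbols defined above are those of the standard pinhole camera model. Under that model (an assumption here, not necessarily the formula used by module 303), a pixel back-projects to camera coordinates as shown below. The horizontal ray angle $\theta_u$ and the per-pixel angular step $\Delta\theta_u$ are names introduced only for this illustration, with $f_x$ and $f_y$ expressed in pixels.

```latex
X_c = \frac{(u - U_0)\, Z_c}{f_x}, \qquad
Y_c = \frac{(v - V_0)\, Z_c}{f_y}, \qquad
\theta_u = \arctan\!\left(\frac{u - U_0}{f_x}\right), \qquad
\Delta\theta_u = \theta_{u+1} - \theta_u
```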

In some optional implementations of this embodiment, the new three-dimensional coordinate calculation module 305 includes:

A new three-dimensional coordinate calculation sub-module, which takes the depth data as z and converts it to a distance, distance = z/cos(theta). With the rotation angle, angle, measured in the 0° (original position) reference coordinate system and converted to radians, rad, the new x = distance*sin(alpha+rad), the new y = y (unchanged), and the new z = distance*cos(theta+rad).
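The conversion above can be sketched as a small function. This sketch assumes that `alpha` in the x formula denotes the same per-pixel angle as `theta`, and that this angle is supplied in degrees; the function name `new_coordinates` and its parameter names are hypothetical.

```python
import math

def new_coordinates(z, y, theta_deg, angle_deg):
    """Re-express a point seen by the rotated camera in the original (0 degree) frame.

    z         -- measured depth along the rotated camera's optical axis
    y         -- vertical coordinate, unchanged by a horizontal rotation
    theta_deg -- pixel's horizontal angle relative to the principal point
    angle_deg -- camera rotation measured by the angle limiter
    """
    theta = math.radians(theta_deg)
    rad = math.radians(angle_deg)
    # Depth along the optical axis -> range along the pixel's ray (horizontal plane).
    distance = z / math.cos(theta)
    new_x = distance * math.sin(theta + rad)
    new_z = distance * math.cos(theta + rad)
    return new_x, y, new_z
```

With no rotation the function reduces to the ordinary relation x = z*tan(theta), which is a quick sanity check on the sign convention.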

In some optional implementations of this embodiment, the device 300 for densifying a TOF camera point cloud further includes:

A query module, which checks whether the new three-dimensional coordinates for each pixel's angle have corresponding depth data;

When the new three-dimensional coordinates have depth data, the new three-dimensional coordinates for each pixel's angle are fused and then smoothed and filtered;

When the new three-dimensional coordinates have no depth data, those coordinates are deleted.
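The query-and-delete step can be sketched as a simple filter. How a missing depth is encoded is an assumption here (None, NaN, or a non-positive value); the function name `drop_points_without_depth` is hypothetical.

```python
import math

def drop_points_without_depth(points):
    """Keep only (x, y, z) points whose depth z is a finite, positive number."""
    return [
        p for p in points
        if p[2] is not None and math.isfinite(p[2]) and p[2] > 0
    ]
```

Only the points that pass this filter would go on to fusion and smoothing.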

In some optional implementations of this embodiment, the fusion module 306 includes:

A fusion sub-module, which fuses the new three-dimensional coordinates for each pixel's angle;

A filtering sub-module, which resamples and filters the fused new three-dimensional coordinates;

A smoothing sub-module, which smooths the filtered new three-dimensional coordinates and calculates the normals;

A forming sub-module, which displays the normals on the smoothed new three-dimensional coordinates to form the new point cloud.
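The fusion and resampling sub-modules can be illustrated with a minimal sketch. Voxel-grid averaging is used here as a stand-in resampling technique; the application does not name a specific method, so this choice, the function name `fuse_and_resample`, and the `voxel_size` parameter are all assumptions.

```python
import math
from collections import defaultdict

def fuse_and_resample(clouds, voxel_size=0.01):
    """Merge per-angle point clouds and average the points that share a voxel."""
    # Fusion: concatenate the clouds, which are already in the original frame.
    merged = [p for cloud in clouds for p in cloud]
    # Resampling: bucket points by the voxel they fall into.
    buckets = defaultdict(list)
    for x, y, z in merged:
        key = (math.floor(x / voxel_size),
               math.floor(y / voxel_size),
               math.floor(z / voxel_size))
        buckets[key].append((x, y, z))
    # Replace each bucket with its centroid.
    return [tuple(sum(c) / len(pts) for c in zip(*pts)) for pts in buckets.values()]
```

Averaging within a voxel also damps small depth noise between overlapping views, so it acts as a mild smoothing step as well.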

To solve the above technical problem, an embodiment of the present application further provides a computer device. Refer to FIG. 8, which is a block diagram of the basic structure of the computer device of this embodiment.

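The computer device 4 includes a memory 41, a processor 42, and a network interface 43 that are communicatively connected to one another through a system bus. Note that FIG. 8 shows only the computer device 4 with components 41-43; it should be understood that not all of the illustrated components are required, and more or fewer components may be implemented instead. Those skilled in the art will understand that the computer device here is a device that can automatically perform numerical calculation and/or information processing according to preset or stored instructions, and that its hardware includes, but is not limited to, microprocessors, application-specific integrated circuits (ASIC), field-programmable gate arrays (FPGA), digital signal processors (DSP), embedded devices, and the like.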

The computer device may be a computing device such as a desktop computer, a notebook computer, a palmtop computer, or a cloud server. The computer device can interact with the user through a keyboard, a mouse, a remote control, a touch pad, a voice control device, or the like.

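The memory 41 includes at least one type of readable storage medium, including flash memory, a hard disk, a multimedia card, card-type memory (for example, SD or DX memory), random access memory (RAM), static random access memory (SRAM), read-only memory (ROM), electrically erasable programmable read-only memory (EEPROM), programmable read-only memory (PROM), magnetic memory, a magnetic disk, an optical disk, and the like. In some embodiments, the memory 41 may be an internal storage unit of the computer device 4, such as the hard disk or main memory of the computer device 4. In other embodiments, the memory 41 may be an external storage device of the computer device 4, such as a plug-in hard disk, a Smart Media Card (SMC), a Secure Digital (SD) card, or a flash card fitted to the computer device 4. The memory 41 may also include both the internal storage unit of the computer device 4 and its external storage device. In this embodiment, the memory 41 is generally used to store the operating system and the various application software installed on the computer device 4, such as the computer-readable instructions of the method for densifying a TOF camera point cloud. The memory 41 may also be used to temporarily store various data that has been output or is to be output.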

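In some embodiments, the processor 42 may be a central processing unit (CPU), a controller, a microcontroller, a microprocessor, or another data processing chip. The processor 42 is generally used to control the overall operation of the computer device 4. In this embodiment, the processor 42 is configured to run the computer-readable instructions stored in the memory 41 or to process data, for example to run the computer-readable instructions of the method for densifying a TOF camera point cloud.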

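The network interface 43 may include a wireless network interface or a wired network interface, and is generally used to establish a communication connection between the computer device 4 and other electronic devices.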

The present application also provides another embodiment, namely a computer-readable storage medium. The computer-readable storage medium stores computer-readable instructions that can be executed by at least one processor, so that the at least one processor performs the steps of the method for densifying a TOF camera point cloud described above.

From the description of the above embodiments, those skilled in the art can clearly understand that the methods of the above embodiments can be implemented by software plus the necessary general-purpose hardware platform, and of course also by hardware, although in many cases the former is the better implementation. On this understanding, the technical solution of the present application, in essence or in the part that contributes to the prior art, can be embodied as a software product. The computer software product is stored in a storage medium (such as ROM/RAM, a magnetic disk, or an optical disk) and includes instructions that cause a terminal device (which may be a mobile phone, a computer, a server, an air conditioner, a network device, or the like) to execute the methods described in the embodiments of the present application.

Obviously, the embodiments described above are only some of the embodiments of the present application, not all of them. The accompanying drawings show preferred embodiments of the present application but do not limit its patent scope. The present application can be implemented in many different forms; these embodiments are provided so that the disclosure of the present application is understood more thoroughly and completely. Although the present application has been described in detail with reference to the foregoing embodiments, those skilled in the art can still modify the technical solutions described in the foregoing specific embodiments or make equivalent replacements for some of their technical features. Any equivalent structure made using the contents of the description and drawings of the present application, and applied directly or indirectly in other related technical fields, likewise falls within the scope of patent protection of the present application.

Claims (10)

Applications Claiming Priority (2)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN2022100111047 | 2022-01-05 | | |
| CN202210011104 | 2022-01-05 | | |

Publications (2)

| Publication Number | Publication Date |
|---|---|
| CN114630096A | 2022-06-14 |
| CN114630096B | 2023-10-27 |

Family

ID=81901915

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |
|---|---|---|---|
| CN202210242970.7A (Active; CN114630096B) | Method, device and equipment for densification of TOF camera point cloud and readable storage medium | 2022-01-05 | 2022-03-11 |

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN114630096B |

Also Published As

| Publication number | Publication date |
|---|---|
| CN114630096B | 2023-10-27 |

Legal Events

| Date | Code | Title | Description |
|---|---|---|---|
| | PB01 | Publication | |
| | SE01 | Entry into force of request for substantive examination | |
| | GR01 | Patent grant | |