CN114612743A - Deep learning model training method, target object identification method and device - Google Patents

Deep learning model training method, target object identification method and deviceDownload PDFInfo

- Publication number

- CN114612743A CN114612743ACN202210234795.7ACN202210234795ACN114612743ACN 114612743 ACN114612743 ACN 114612743ACN 202210234795 ACN202210234795 ACN 202210234795ACN 114612743 ACN114612743 ACN 114612743A

- Authority

- CN

- China

- Prior art keywords

- feature map

- target object

- enhanced

- module

- image

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Pending

Links

Images

Classifications

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F18/00—Pattern recognition

- G06F18/20—Analysing

- G06F18/21—Design or setup of recognition systems or techniques; Extraction of features in feature space; Blind source separation

- G06F18/214—Generating training patterns; Bootstrap methods, e.g. bagging or boosting

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F18/00—Pattern recognition

- G06F18/20—Analysing

- G06F18/24—Classification techniques

- G06F18/241—Classification techniques relating to the classification model, e.g. parametric or non-parametric approaches

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F18/00—Pattern recognition

- G06F18/20—Analysing

- G06F18/25—Fusion techniques

- G06F18/253—Fusion techniques of extracted features

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06N—COMPUTING ARRANGEMENTS BASED ON SPECIFIC COMPUTATIONAL MODELS

- G06N3/00—Computing arrangements based on biological models

- G06N3/02—Neural networks

- G06N3/04—Architecture, e.g. interconnection topology

- G06N3/045—Combinations of networks

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06N—COMPUTING ARRANGEMENTS BASED ON SPECIFIC COMPUTATIONAL MODELS

- G06N3/00—Computing arrangements based on biological models

- G06N3/02—Neural networks

- G06N3/08—Learning methods

Landscapes

- Engineering & Computer Science (AREA)

- Theoretical Computer Science (AREA)

- Data Mining & Analysis (AREA)

- Physics & Mathematics (AREA)

- Life Sciences & Earth Sciences (AREA)

- Artificial Intelligence (AREA)

- General Physics & Mathematics (AREA)

- General Engineering & Computer Science (AREA)

- Evolutionary Computation (AREA)

- Bioinformatics & Computational Biology (AREA)

- Evolutionary Biology (AREA)

- Computer Vision & Pattern Recognition (AREA)

- Bioinformatics & Cheminformatics (AREA)

- Computational Linguistics (AREA)

- Biomedical Technology (AREA)

- Biophysics (AREA)

- Health & Medical Sciences (AREA)

- General Health & Medical Sciences (AREA)

- Molecular Biology (AREA)

- Computing Systems (AREA)

- Mathematical Physics (AREA)

- Software Systems (AREA)

- Image Analysis (AREA)

Abstract

Description

Translated fromChinese技术领域technical field

本公开涉及人工智能技术领域,尤其涉及深度学习、图像识别和计算机视觉技术领域。更具体地,本公开提供了一种深度学习模型的训练方法和装置、一种目标对象识别方法装置、电子设备、存储介质及计算机程序产品。The present disclosure relates to the technical field of artificial intelligence, and in particular, to the technical fields of deep learning, image recognition and computer vision. More specifically, the present disclosure provides a deep learning model training method and apparatus, a target object recognition method and apparatus, an electronic device, a storage medium, and a computer program product.

背景技术Background technique

在人脸伪造检测领域,传统方法可以采用二分类网络模型结构来进行人脸伪造检测。然而,在上述网络模型的训练过程中,需要引入大量的网络训练参数,增加了计算量,或者忽略了训练数据与模型之间的实际适配性问题,导致检测准确性低下。In the field of face forgery detection, traditional methods can use two-class network model structure for face forgery detection. However, in the training process of the above network model, a large number of network training parameters need to be introduced, which increases the amount of calculation, or ignores the actual adaptation problem between the training data and the model, resulting in low detection accuracy.

发明内容SUMMARY OF THE INVENTION

本公开提供了一种深度学习模型的训练方法和装置、一种目标对象识别方法、装置、电子设备、存储介质及计算机程序产品。The present disclosure provides a deep learning model training method and apparatus, a target object recognition method, apparatus, electronic device, storage medium and computer program product.

根据本公开的一方面,提供了一种深度学习模型的训练方法,包括:According to an aspect of the present disclosure, a training method for a deep learning model is provided, including:

根据样本图像的初始向量特征图,得到融合特征图,所述样本图像包括目标对象以及所述目标对象的标签;Obtain a fusion feature map according to the initial vector feature map of the sample image, where the sample image includes a target object and a label of the target object;

根据所述融合特征图和所述标签,得到目标对象的第一分类差异值;According to the fusion feature map and the label, the first classification difference value of the target object is obtained;

根据所述第一分类差异值和所述初始向量特征图,确定与所述样本图像相对应的增强图像;以及determining an enhanced image corresponding to the sample image according to the first classification difference value and the initial vector feature map; and

利用所述增强图像训练所述深度学习模型。The deep learning model is trained using the augmented image.

根据本公开的另一方面,提供了一种目标对象识别方法,包括:According to another aspect of the present disclosure, a target object recognition method is provided, comprising:

将待识别图像输入深度学习模型,得到所述待识别图像中目标对象的识别结果,其中,所述深度学习模型是利用根据以上所述的方法训练的。The image to be recognized is input into a deep learning model, and the recognition result of the target object in the image to be recognized is obtained, wherein the deep learning model is trained using the method described above.

根据本公开的另一方面,提供了一种深度学习模型的训练装置,包括:According to another aspect of the present disclosure, a training device for a deep learning model is provided, including:

融合模块,用于根据样本图像的初始向量特征图,得到融合特征图,所述样本图像包括目标对象以及所述目标对象的标签;a fusion module, configured to obtain a fusion feature map according to an initial vector feature map of a sample image, where the sample image includes a target object and a label of the target object;

计算模块,用于根据所述融合特征图和所述标签,得到目标对象的第一分类差异值;a computing module for obtaining the first classification difference value of the target object according to the fusion feature map and the label;

增强模块,用于根据所述第一分类差异值和所述初始向量特征图,确定与所述样本图像相对应的增强图像;以及an enhancement module for determining an enhanced image corresponding to the sample image according to the first classification difference value and the initial vector feature map; and

训练模块,用于利用所述增强图像训练所述深度学习模型。A training module for training the deep learning model using the enhanced image.

根据第四方面,提供了一种目标对象识别装置,包括:According to a fourth aspect, a target object identification device is provided, comprising:

识别模块,用于将待识别图像输入深度学习模型,得到所述待识别图像中目标对象的识别结果,其中,所述深度学习模型是利用以上任一项所述的深度学习模型的训练装置训练的。The recognition module is used to input the image to be recognized into the deep learning model, and obtain the recognition result of the target object in the to-be-recognized image, wherein the deep learning model is trained using the training device of the deep learning model described in any of the above of.

根据本公开的另一方面,提供了一种电子设备,包括:According to another aspect of the present disclosure, there is provided an electronic device, comprising:

至少一个处理器;以及at least one processor; and

与所述至少一个处理器通信连接的存储器;其中,a memory communicatively coupled to the at least one processor; wherein,

所述存储器存储有可被所述至少一个处理器执行的指令,所述指令被所述至少一个处理器执行,以使所述至少一个处理器能够执行实现如上所述的方法。The memory stores instructions executable by the at least one processor, the instructions being executed by the at least one processor to enable the at least one processor to perform a method as described above.

根据本公开的另一方面,提供了一种存储有计算机指令的非瞬时计算机可读存储介质,其中,所述计算机指令用于使所述计算机执行实现如上所述的方法。According to another aspect of the present disclosure, there is provided a non-transitory computer-readable storage medium storing computer instructions for causing the computer to execute the method as described above.

根据本公开的另一方面,提供了一种计算机程序产品,包括计算机程序,所述计算机程序在被处理器执行时实现如上所述的方法。According to another aspect of the present disclosure, there is provided a computer program product comprising a computer program which, when executed by a processor, implements the method as described above.

应当理解,本部分所描述的内容并非旨在标识本公开的实施例的关键或重要特征,也不用于限制本公开的范围。本公开的其它特征将通过以下的说明书而变得容易理解。It should be understood that what is described in this section is not intended to identify key or critical features of embodiments of the disclosure, nor is it intended to limit the scope of the disclosure. Other features of the present disclosure will become readily understood from the following description.

附图说明Description of drawings

附图用于更好地理解本方案,不构成对本公开的限定。其中:The accompanying drawings are used for better understanding of the present solution, and do not constitute a limitation to the present disclosure. in:

图1是根据本公开的实施例的可以应用于深度学习模型的训练方法、目标对象识别方法及装置的示例性系统架构;1 is an exemplary system architecture of a training method, a target object recognition method, and an apparatus that can be applied to a deep learning model according to an embodiment of the present disclosure;

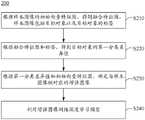

图2是根据本公开的实施例的深度学习模型的训练方法的流程图;2 is a flowchart of a training method of a deep learning model according to an embodiment of the present disclosure;

图3是根据本公开的实施例的获取融合特征图的方法的流程图;3 is a flowchart of a method for obtaining a fusion feature map according to an embodiment of the present disclosure;

图4是根据本公开的实施例的确定增强图像的方法的流程图;4 is a flowchart of a method of determining an enhanced image according to an embodiment of the present disclosure;

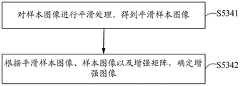

图5A是根据本公开的另一实施例的确定增强图像的方法的流程图;5A is a flowchart of a method of determining an enhanced image according to another embodiment of the present disclosure;

图5B是根据本公开的另一实施例的确定增强图像的方法的示意图;5B is a schematic diagram of a method of determining an enhanced image according to another embodiment of the present disclosure;

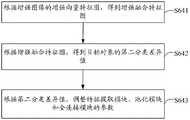

图6是根据本公开的实施例的训练深度学习模型的方法的流程图;6 is a flowchart of a method of training a deep learning model according to an embodiment of the present disclosure;

图7A是根据本公开的实施例的确定目标对象的第二分类差异值的方法的流程图;7A is a flowchart of a method of determining a second classification difference value of a target object according to an embodiment of the present disclosure;

图7B是根据本公开的另一实施例的确定目标对象的第二分类差异值的方法的流程图;7B is a flowchart of a method for determining a second classification difference value of a target object according to another embodiment of the present disclosure;

图8A至8C是根据本公开的实施例的深度学习模型的训练方法的示意图;8A to 8C are schematic diagrams of a training method of a deep learning model according to an embodiment of the present disclosure;

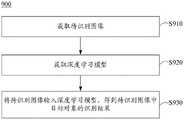

图9是根据本公开的实施例的目标对象识别方法的流程图;9 is a flowchart of a target object recognition method according to an embodiment of the present disclosure;

图10是根据本公开的实施例的深度学习模型的训练装置的框图;10 is a block diagram of a training apparatus for a deep learning model according to an embodiment of the present disclosure;

图11是根据本公开的实施例的目标对象识别装置的框图;11 is a block diagram of a target object recognition apparatus according to an embodiment of the present disclosure;

图12是根据本公开的实施例的深度学习模型的训练方法以及目标对象识别方法的电子设备的框图。12 is a block diagram of an electronic device for a training method of a deep learning model and a target object recognition method according to an embodiment of the present disclosure.

具体实施方式Detailed ways

以下结合附图对本公开的示范性实施例做出说明,其中包括本公开实施例的各种细节以助于理解,应当将它们认为仅仅是示范性的。因此,本领域普通技术人员应当认识到,可以对这里描述的实施例做出各种改变和修改,而不会背离本公开的范围和精神。同样,为了清楚和简明,以下的描述中省略了对公知功能和结构的描述。Exemplary embodiments of the present disclosure are described below with reference to the accompanying drawings, which include various details of the embodiments of the present disclosure to facilitate understanding and should be considered as exemplary only. Accordingly, those of ordinary skill in the art will recognize that various changes and modifications of the embodiments described herein can be made without departing from the scope and spirit of the present disclosure. Also, descriptions of well-known functions and constructions are omitted from the following description for clarity and conciseness.

图1是根据本公开的实施例的可以应用于深度学习模型的训练方法、目标对象识别方法及装置的示例性系统架构。FIG. 1 is an exemplary system architecture of a training method, a target object recognition method, and an apparatus that can be applied to a deep learning model according to an embodiment of the present disclosure.

需要注意的是,图1所示仅为可以应用本公开实施例的系统架构的示例,以帮助本领域技术人员理解本公开的技术内容,但并不意味着本公开实施例不可以用于其他设备、系统、环境或场景。例如,在另一实施例中,可以应用深度学习模型的训练方法、目标对象识别方法及装置的示例性系统架构可以包括终端设备,但终端设备可以无需与服务器进行交互,即可实现本公开实施例提供的深度学习模型的训练方法、目标对象识别方法及装置。It should be noted that FIG. 1 is only an example of a system architecture to which the embodiments of the present disclosure can be applied, so as to help those skilled in the art to understand the technical content of the present disclosure, but it does not mean that the embodiments of the present disclosure cannot be used for other A device, system, environment or scene. For example, in another embodiment, an exemplary system architecture to which a deep learning model training method, a target object recognition method and apparatus may be applied may include a terminal device, but the terminal device may implement the present disclosure without interacting with a server The training method of the deep learning model, the target object recognition method and the device provided by the example.

如图1所示,根据该实施例的系统架构100可以包括终端设备101、102、103,网络104和服务器105。网络104用以在终端设备101、102、103和服务器105之间提供通信链路的介质。网络104可以包括各种连接类型,例如有线和/或无线通信链路等。As shown in FIG. 1 , the

用户可以使用终端设备101、102、103通过网络104与服务器105交互,以接收或发送消息等。终端设备101、102、103上可以安装有各种通讯客户端应用。例如,知识阅读类应用、网页浏览器应用、搜索类应用、即时通信工具、邮箱客户端或社交平台软件等(仅为示例)。The user can use the

终端设备101、102、103可以是具有显示屏并且支持网页浏览的各种电子设备,包括但不限于智能手机、平板电脑、膝上型便携计算机和台式计算机等。The

服务器105可以是提供各种服务的各种类型的服务器。例如,例如,服务器105可以是云服务器,又称为云计算服务器或云主机,是云计算服务体系中的一项主机产品,以解决了传统物理主机与VPS服务(Virtual Private Server,虚拟专用服务器)中,存在的管理难度大,业务扩展性弱的缺陷。服务器105也可以为分布式系统的服务器,或者是结合了区块链的服务器。

需要说明的是,本公开实施例所提供的深度学习模型的训练方法一般可以由终端设备101、102、或103执行。相应地,本公开实施例所提供的深度学习模型的训练装置也可以设置于终端设备101、102、或103中。It should be noted that the training method of the deep learning model provided by the embodiment of the present disclosure may generally be performed by the

备选地,本公开实施例所提供的深度学习模型的训练方法一般也可以由服务器105执行。相应地,本公开实施例所提供的深度学习模型的训练装置一般可以设置于服务器105中。本公开实施例所提供的深度学习模型的训练方法也可以由不同于服务器105且能够与终端设备101、102、103和/或服务器105通信的服务器或服务器集群执行。相应地,本公开实施例所提供的深度学习模型的训练装置也可以设置于不同于服务器105且能够与终端设备101、102、103和/或服务器105通信的服务器或服务器集群中。Alternatively, the training method of the deep learning model provided by the embodiment of the present disclosure may also be generally executed by the

需要说明的是,本公开实施例所提供的目标对象识别方法一般可以由服务器105执行。相应地,本公开实施例所提供的目标对象识别装置一般可以设置于服务器105中。本公开实施例所提供的目标对象识别方法也可以由不同于服务器105且能够与终端设备101、102、103和/或服务器105通信的服务器或服务器集群执行。相应地,本公开实施例所提供的目标对象识别装置也可以设置于不同于服务器105且能够与终端设备101、102、103和/或服务器105通信的服务器或服务器集群中。It should be noted that, the target object identification method provided by the embodiment of the present disclosure may generally be executed by the

备选地,本公开实施例所提供的目标对象识别方法一般也可以由终端设备101、102、或103执行。相应地,本公开实施例所提供的目标对象识别装置也可以设置于终端设备101、102、或103中。Alternatively, the target object identification method provided by the embodiment of the present disclosure may also be generally executed by the

应该理解,图1中的终端设备、网络和服务器的数目仅仅是示意性的。根据实现需要,可以具有任意数目的终端设备、网络和服务器。It should be understood that the numbers of terminal devices, networks and servers in FIG. 1 are merely illustrative. There can be any number of terminal devices, networks and servers according to implementation needs.

应注意,以下方法中各个操作的序号仅作为该操作的表示以便描述,而不应被看作表示该各个操作的执行顺序。除非明确指出,否则该方法不需要完全按照所示顺序来执行。It should be noted that the sequence numbers of the respective operations in the following methods are only used as representations of the operations for the convenience of description, and should not be regarded as representing the execution order of the respective operations. The methods need not be performed in the exact order shown unless explicitly stated.

图2是根据本公开实施例的深度学习模型的训练方法的流程图。FIG. 2 is a flowchart of a training method of a deep learning model according to an embodiment of the present disclosure.

如图2所示,深度学习模型的训练方法200包括操作S210~S240。深度学习模型包括特征提取模块、池化模块和全连接模块。该模型可以与分类网络相结合,以用于对样本图像中目标对象进行识别。As shown in FIG. 2, the

在操作S210,根据样本图像的初始向量特征图,得到融合特征图,样本图像包括目标对象以及目标对象的标签。In operation S210, a fusion feature map is obtained according to the initial vector feature map of the sample image, where the sample image includes a target object and a label of the target object.

样本图像可以是通过相机采集而得到的视频流中的任意一帧或多帧图像,或者可以采用其他方式获取,本公开不以此为限。The sample image may be any one frame or multiple frames of images in the video stream acquired by the camera, or may be acquired in other manners, which the present disclosure is not limited to.

可以理解,样本图像包括不同的对象及其对应的特征,可以利用深度学习模型来预测分析样本图像中目标对象的特征的真伪程度,也即获取针对目标对象的识别结果。例如,目标对象可以是指样本图像中的面部特征,而针对目标对象的识别结果例如可以是指利用深度学习模型来预测分析样本图像中的面部特征的真伪,从而得到关于面部特征的识别结果,即该面部特征是真实面部或者伪造面部。在本公开实施例中,对于样本图像中的目标对象可以根据实际选择。It can be understood that the sample image includes different objects and their corresponding features, and a deep learning model can be used to predict and analyze the authenticity of the features of the target object in the sample image, that is, to obtain the recognition result for the target object. For example, the target object may refer to the facial features in the sample image, and the recognition result for the target object may refer to, for example, using a deep learning model to predict and analyze the authenticity of the facial features in the sample image, so as to obtain the recognition results about the facial features , that is, the facial feature is a real face or a fake face. In the embodiment of the present disclosure, the target object in the sample image can be selected according to the actual situation.

在本公开实施例中,目标对象的标签与目标对象一一对应,为目标对象添加的标签用于表征样本图像中该目标对象的真伪程度,为了便于表征,可以采用标签值来表示样本图像中目标对象的真伪程度,例如,若目标对象为真,标签值可以取1,若目标对象是伪造的,则标签值可以取0。例如,在目标对象为面部的示例中,当目标对象为假脸时,标签值可以取0;当目标对象为真脸时,标签值可以取1。In the embodiment of the present disclosure, the label of the target object corresponds to the target object one-to-one, and the label added to the target object is used to represent the authenticity degree of the target object in the sample image. In order to facilitate the representation, the label value can be used to represent the sample image. The degree of authenticity of the target object, for example, if the target object is true, the tag value can be 1, and if the target object is fake, the tag value can be 0. For example, in the example in which the target object is a face, when the target object is a fake face, the label value may be 0; when the target object is a real face, the label value may be 1.

在本公开实施例中,样本图像中目标对象的标签可以用于计算目标对象的分类差异值。而添加有标签的样本图像可以用于训练深度学习模型,以使深度学习模型获得辨别真伪的能力。In the embodiment of the present disclosure, the label of the target object in the sample image may be used to calculate the classification difference value of the target object. The labeled sample images can be used to train a deep learning model, so that the deep learning model can obtain the ability to distinguish between true and false.

所述初始向量特征图例如可以是将样本图像输入特征提取模块而得到的,将该初始向量特征图输入池化模块可以得到融合特征图。For example, the initial vector feature map may be obtained by inputting the sample image into the feature extraction module, and the initial vector feature map may be input into the pooling module to obtain the fusion feature map.

在操作S220,根据融合特征图和标签,得到目标对象的第一分类差异值。In operation S220, the first classification difference value of the target object is obtained according to the fusion feature map and the label.

例如,可以将融合特征图输入到全连接模块中,并将全连接模块的输出输入到分类网络中进行预测,得到针对目标对象的初始分类结果值。根据该初始分类结果值以及目标对象的标签对应的标签值,可以得到目标对象的第一分类差异值。在一个示例中,上述分类网络例如可以是EfficientNet-B4分类网络,或者可以采用其他分类网络,本公开对此不做限定。For example, the fusion feature map can be input into the fully connected module, and the output of the fully connected module can be input into the classification network for prediction, and the initial classification result value for the target object can be obtained. According to the initial classification result value and the label value corresponding to the label of the target object, the first classification difference value of the target object can be obtained. In an example, the above classification network may be, for example, an EfficientNet-B4 classification network, or other classification networks may be used, which is not limited in the present disclosure.

在本公开实施例中,可以采用如下公式(1)计算样本图像中目标对象的第一分类差异值:In the embodiment of the present disclosure, the following formula (1) can be used to calculate the first classification difference value of the target object in the sample image:

在公式(1)中,表示第i个样本图像中目标对象的第一分类差异值,yi表示第i个样本图像中目标对象的标签对应的标签值,表示第i个样本图像中目标对象的初始分类结果值。In formula (1), represents the first classification difference value of the target object in the ith sample image, yi represents the label value corresponding to the label of the target object in the ith sample image, Represents the initial classification result value of the target object in the ith sample image.

在操作S230,根据第一分类差异值和初始向量特征图,确定与样本图像相对应的增强图像。In operation S230, an enhanced image corresponding to the sample image is determined according to the first classification difference value and the initial vector feature map.

依据第一分类差异值和上述初始向量特征图,可以确定与样本图像相对应的增强图像,该增强图像可以用于训练深度学习模型。According to the first classification difference value and the above-mentioned initial vector feature map, an enhanced image corresponding to the sample image can be determined, and the enhanced image can be used to train a deep learning model.

在操作S240,利用增强图像训练深度学习模型。In operation S240, a deep learning model is trained using the augmented image.

在本公开实施例中,用于训练深度学习模型的训练样本可以包含多个批次的样本图像,每个批次包括多个样本图像。针对一个批次中包含的多个样本图像,可以依据操作S210至操作S230的方法分别获取与多个样本图像相对应的多个增强图像,并利用这些增强图像来训练深度学习模型,直至模型收敛,从而使得深度学习模型能够辨别图像中的真伪特征。In an embodiment of the present disclosure, a training sample for training a deep learning model may include multiple batches of sample images, and each batch includes multiple sample images. For a plurality of sample images included in a batch, a plurality of enhanced images corresponding to the plurality of sample images may be obtained respectively according to the methods of operations S210 to S230, and these enhanced images are used to train a deep learning model until the model converges , so that the deep learning model can distinguish the true and false features in the image.

在本公开实施例的方案中,通过利用目标对象的第一分类差异值和初始向量特征来确定增强图像,并利用增强图像训练深度学习模型,在此过程中,无需引入大量的网络训练参数,降低了模型训练过程的计算量,而且利用增强图像来训练深度学习模型,使得模型学习更加鲁棒的伪造特征,提高了模型识别准确率。In the solution of the embodiment of the present disclosure, the enhanced image is determined by using the first classification difference value and the initial vector feature of the target object, and the deep learning model is trained by using the enhanced image. In this process, there is no need to introduce a large number of network training parameters, The calculation amount of the model training process is reduced, and the enhanced image is used to train the deep learning model, which enables the model to learn more robust forgery features and improves the model recognition accuracy.

图3是根据本公开的实施例的获取融合特征图的方法的流程图,以下将参考图3对融合特征图的获取方法进行示例性说明。FIG. 3 is a flowchart of a method for acquiring a fusion feature map according to an embodiment of the present disclosure, and the method for acquiring a fusion feature map will be exemplarily described below with reference to FIG. 3 .

如图3所示,获取融合特征图的方法包括操作S311~S313。As shown in FIG. 3 , the method for obtaining a fusion feature map includes operations S311 to S313 .

在操作S311,对初始向量特征图执行池化操作,得到第一池化特征图。In operation S311, a pooling operation is performed on the initial vector feature map to obtain a first pooled feature map.

这里所谓的池化操作例如可以采用平均池化操作、最大池化操作或者其他池化操作,具体不做限定。The so-called pooling operation here can be, for example, an average pooling operation, a maximum pooling operation, or other pooling operations, which is not specifically limited.

以平均池化操作为例,例如,对一个大小为H×W×C的初始向量特征图F执行平均池化操作,可以得到第一池化特征图。该第一池化特征图可以表示如下:Taking the average pooling operation as an example, for example, by performing the average pooling operation on an initial vector feature map F of size H×W×C, the first pooled feature map can be obtained. The first pooled feature map can be represented as follows:

在公式(2)中,E1k表示第一池化特征图,Fi,j,k表示第一池化特征图对应的特征向量,0≤i≤H-1,0≤j≤W-1,0≤k≤C-1。In formula (2), E1k represents the first pooling feature map, Fi, j, k represent the feature vector corresponding to the first pooling feature map, 0≤i≤H-1, 0≤j≤W-1 , 0≤k≤C-1.

由上式可知,对大小为H×W×C的初始向量特征图F执行平均池化操作,相当于将初始向量特征图F分解为H×W个一维向量(记为Gi,j),Gi,j∈RC。即对于每个0≤i≤H-1,0≤j≤W-1,0≤k≤C-1,有:It can be seen from the above formula that performing the average pooling operation on the initial vector feature map F of size H×W×C is equivalent to decomposing the initial vector feature map F into H×W one-dimensional vectors (denoted as Gi, j ) , Gi,j ∈ RC . That is, for each 0≤i≤H-1, 0≤j≤W-1, 0≤k≤C-1, there are:

(Gi,j)k=Fi,j,k (3)(Gi,j )k =Fi,j,k (3)

公式(3)中,(Gi,j)k表示i*j个k,Fi,j,k实质上可以理解为长、宽、高分别为i、j、k的立方体。In formula (3), (Gi,j )k represents i*j k, Fi, j, k can be understood as a cube whose length, width and height are i, j, and k respectively.

在操作S312,利用第一权重对第一池化特征图进行处理,得到加权池化特征图。In operation S312, the first pooled feature map is processed with the first weight to obtain a weighted pooled feature map.

在本公开实施例中,第一权重(记为U)是利用上一批次的样本图像训练后得到的全连接模块的权重,第一权重指示了目标对象的特征的分布概率。第一权重越大,表明目标对象的特征的重要程度越高。在本公开实施例中,第一权重U的长度为k。In the embodiment of the present disclosure, the first weight (denoted as U) is the weight of the fully connected module obtained after training with the sample images of the previous batch, and the first weight indicates the distribution probability of the feature of the target object. The larger the first weight, the higher the importance of the feature of the target object. In this embodiment of the present disclosure, the length of the first weight U is k.

根据本公开实施例,利用第一权重对第一池化特征图进行处理,是指利用第一权重U对(Gi,j)k进行加权处理,并基于加权处理后的向量得到加权池化特征图。According to the embodiment of the present disclosure, using the first weight to process the first pooling feature map refers to using the first weight U to perform weighting processing on (Gi, j )k, and obtaining weighted pooling based on the weighted vector feature map.

利用第一权重U对(Gi,j)k进行加权处理,可以得到一个加权得分:Using the first weight U to weight (Gi, j )k, a weighted score can be obtained:

公式(4)中,pi,j表示加权得分,对于每个0≤i≤H-1和0≤j≤W-1,<·>表示向量内积。In formula (4), pi,j represents the weighted score, and for each of 0≤i≤H-1 and 0≤j≤W-1, <·> denotes the vector inner product.

在本公开实施例中,pi,j可以看作目标对象的伪造特征分布的概率,∑i,jpi,j=1。In the embodiment of the present disclosure, pi,j can be regarded as the probability of forging feature distribution of the target object, ∑i,j pi,j =1.

在本公开实施例中,加权池化特征图可以表示为:In this embodiment of the present disclosure, the weighted pooling feature map can be expressed as:

公式(5)中,β表示超参数。In formula (5), β represents a hyperparameter.

在本公开实施例中,β可以根据实际情况设定,具体不做限定。In the embodiment of the present disclosure, β may be set according to actual conditions, which is not specifically limited.

在操作S313,根据第一池化特征图和加权池化特征图,得到融合特征图。In operation S313, a fusion feature map is obtained according to the first pooled feature map and the weighted pooled feature map.

具体地,可以将第一池化特征图和加权池化特征图进行融合,得到融合特征图。融合特征图可以表示为:Specifically, the first pooled feature map and the weighted pooled feature map may be fused to obtain a fused feature map. The fusion feature map can be expressed as:

基于上述Gi,j与Fi,j,k的关系,可以将融合特征图表示为:Based on the above relationship between Gi, j and Fi, j, k , the fusion feature map can be expressed as:

在本公开实施例中,利用第一权重对第一池化特征图进行处理,并将第一池化特征图和加权池化特征图进行融合,得到融合特征图,相对于单纯采用第一池化特征图的方法,本公开的方法能够增强池化特征图中潜在的伪造特征,从而使得模型更加关注潜在的伪造特征,减少无关特征或者弱相关特征对后续确定增强图像的干扰,进而提高模型的训练准确度。In the embodiment of the present disclosure, the first pooled feature map is processed by using the first weight, and the first pooled feature map and the weighted pooled feature map are fused to obtain a fused feature map. Compared with simply using the first pooled feature map The method of transforming the feature map, the method of the present disclosure can enhance the potential forged features in the pooled feature map, so that the model pays more attention to the potential forged features, reduces the interference of irrelevant features or weakly related features on the subsequent determination of enhanced images, and improves the model. training accuracy.

图4是根据本公开的实施例的确定增强图像的方法的流程图。以下将参考图4对确定增强图像的方法的示例性实现方式进行说明。4 is a flowchart of a method of determining an enhanced image according to an embodiment of the present disclosure. An exemplary implementation of the method of determining an enhanced image will be described below with reference to FIG. 4 .

如图4所示,确定增强图像的方法包括操作S431~S434。As shown in FIG. 4 , the method of determining an enhanced image includes operations S431 ˜ S434 .

在操作S431,根据初始向量图和第一权重,确定注意力特征图。In operation S431, an attention feature map is determined according to the initial vector map and the first weight.

在本公开实施例中,注意力特征图(Attention Map,AM)用于表示模型对于局部区域的感兴趣程度。In the embodiment of the present disclosure, an attention map (Attention Map, AM) is used to represent the degree of interest of the model to the local area.

根据本公开的实施例,可以根据初始向量图和第一权重来确定注意力特征图,其满足:According to an embodiment of the present disclosure, the attention feature map can be determined according to the initial vector map and the first weight, which satisfies:

公式(8)中,AMi,j表示注意力特征图。In formula (8), AMi,j represents the attention feature map.

在本公开实施例中,注意力特征图AMi,j是大小为H×W的矩阵,其与样本图像的大小相同。由于注意力特征图表示模型对于局部区域的感兴趣程度,因而矩阵中元素的值越大,表示注意力特征图中敏感区域越大,模型对于注意力特征图中敏感区域的感兴趣程度越高,可以将注意力特征图的最敏感区域作为感兴趣区域(Regions of Interests,ROI),以用于确定增强图像。In an embodiment of the present disclosure, the attention feature map AMi,j is a matrix of size H×W, which is the same size as the sample image. Since the attention feature map represents the model's interest in the local area, the larger the value of the element in the matrix, the larger the sensitive area in the attention feature map, and the higher the model's interest in the sensitive area in the attention feature map. , the most sensitive region of the attention feature map can be taken as the region of interest (Regions of Interests, ROI) for determining the enhanced image.

在操作S432,确定注意力特征图中,数值较大的预定数目个元素。In operation S432, a predetermined number of elements with larger numerical values in the attention feature map are determined.

根据本公开的实施例,可以根据选择阈值确定预定数目,换言之,可以根据选择阈值选取注意力特征图的最敏感区域作为感兴趣区域。According to an embodiment of the present disclosure, the predetermined number may be determined according to the selection threshold, in other words, the most sensitive region of the attention feature map may be selected as the region of interest according to the selection threshold.

根据本公开的实施例,选择阈值可以根据一个批次中的N个样本图像的N个第一分类差异值的平均第一分类差异值来确定。According to an embodiment of the present disclosure, the selection threshold may be determined according to an average first classification difference value of N first classification difference values of N sample images in a batch.

具体地,选择阈值可以采用如下公式计算得到:Specifically, the selection threshold can be calculated by the following formula:

公式(9)中,η表示选择阈值,N表示一个批次中样本图像的数量,表示第一分类差异值,公式(10)中,α为超参,例如α=0.1。In formula (9), n represents the selection threshold, N represents the number of sample images in a batch, Represents the first classification difference value. In formula (10), α is a hyperparameter, for example, α=0.1.

在本公开实施例中,对于一个批次中的N个样本图像的N个第一分类差异值可以采用上述第一分类差异值计算公式得到,这里不再赘述。In the embodiment of the present disclosure, the N first classification difference values of the N sample images in a batch can be obtained by using the above-mentioned first classification difference value calculation formula, which will not be repeated here.

由上述公式可以知晓,当第一分类差异值较大时,此时模型尚未学习到有效的伪造特征,相应地,选择阈值较小,反之亦然。根据选择阈值选取注意力特征图的最敏感区域作为感兴趣区域,这样可以自适应地控制感兴趣区域的大小(即增强矩阵的大小),从而控制样本图像增强的程度。It can be known from the above formula that when the difference value of the first classification is large, the model has not learned effective forgery features at this time, and accordingly, the selection threshold is small, and vice versa. According to the selection threshold, the most sensitive region of the attention feature map is selected as the region of interest, which can adaptively control the size of the region of interest (that is, the size of the enhancement matrix), thereby controlling the degree of sample image enhancement.

在本公开实施例中,根据选择阈值确定预定数目例如可以是指根据选择阈值来确定注意力特征图(例如大小为H×W的矩阵)中需要增强的元素的比例。例如,可以将注意力特征图中的H×W个元素按照数值大小排序,根据选择阈值来选择排序在前的20%(仅为示例)的元素来确定增强矩阵。In the embodiment of the present disclosure, determining the predetermined number according to the selection threshold may, for example, refer to determining the proportion of elements to be enhanced in the attention feature map (eg, a matrix of size H×W) according to the selection threshold. For example, the H×W elements in the attention feature map can be sorted numerically, and the top 20% (example only) elements are selected according to the selection threshold to determine the enhancement matrix.

在操作S433,根据预定数目个元素,得到增强矩阵。In operation S433, an enhancement matrix is obtained according to a predetermined number of elements.

由于预定数目个元素的数量可能小于样本图像的大小,为了便于后续计算,在根据预定数据个元素得到增强图像的过程中,可以在相关位置补零,以使增强矩阵的大小与样本图像的大小一致。Since the number of the predetermined number of elements may be smaller than the size of the sample image, in order to facilitate the subsequent calculation, in the process of obtaining the enhanced image according to the predetermined data elements, zeros can be filled in the relevant positions, so that the size of the enhancement matrix is the same as the size of the sample image. Consistent.

在操作S434,根据增强矩阵和样本图像,确定增强图像。In operation S434, an enhanced image is determined according to the enhancement matrix and the sample image.

根据增强矩阵和样本图像可以确定增强图像,该增强图像可以用于训练训练深度学习模型。The augmented image can be determined from the augmented matrix and the sample image, and the augmented image can be used to train a deep learning model.

以下参考图5A和图5B对确定增强图像的方法进行说明。The method of determining the enhanced image will be described below with reference to FIGS. 5A and 5B .

图5A是根据本公开的另一实施例的确定增强图像的方法的流程。5A is a flowchart of a method of determining an enhanced image according to another embodiment of the present disclosure.

如图5A所示,确定增强图像的方法包括操作S5341~S5342。As shown in FIG. 5A , the method of determining an enhanced image includes operations S5341˜S5342.

在操作S5341,对样本图像进行平滑处理,得到平滑样本图像。In operation S5341, smoothing is performed on the sample image to obtain a smoothed sample image.

在本公开实施例中,对样本图像进行平滑处理,可以采用任意一种或多种图像平滑处理方法,例如高斯平滑,或者可以采用其他图像平滑处理方法,在此不做限制。In this embodiment of the present disclosure, any one or more image smoothing methods may be used to smooth the sample image, such as Gaussian smoothing, or other image smoothing methods may be used, which is not limited herein.

在操作S5342,根据平滑样本图像、样本图像以及增强矩阵,确定增强图像。In operation S5342, an enhanced image is determined according to the smoothed sample image, the sample image, and the enhancement matrix.

在本公开实施例中,在确定增强图像之前,可以根据增强矩阵确定除感兴趣区域之外的其他区域所对应的互补矩阵,该互补矩阵表征的区域已经去除潜在的伪造区域。根据互补矩阵、平滑样本图像、样本图像以及增强矩阵,可以确定增强图像。In the embodiment of the present disclosure, before determining the enhanced image, a complementary matrix corresponding to other regions other than the region of interest may be determined according to the enhanced matrix, and the region represented by the complementary matrix has removed potential forgery regions. From the complementary matrix, the smoothed sample image, the sample image, and the enhancement matrix, the enhanced image can be determined.

在本公开实施例中,可以采用如下公式确定增强图像:In this embodiment of the present disclosure, the following formula can be used to determine the enhanced image:

公式(11)中,表示增强图像,T表示增强矩阵,Blur(xi)表示平滑样本图像,xi表示样本图像,⊙表示哈达马乘积。In formula (11), represents the enhanced image, T represents the enhancement matrix, Blur(xi ) represents the smoothed sample image,xi represents the sample image, and ⊙ represents the Hadamard product.

基于上述公式,可以进一步准确确定增强图像,从而提高模型训练的准确性。Based on the above formula, the enhanced image can be further accurately determined, thereby improving the accuracy of model training.

图5B是根据本公开的另一实施例的确定增强图像的方法的示意图。5B is a schematic diagram of a method of determining an enhanced image according to another embodiment of the present disclosure.

如图5B所示,在目标对象为面部的示例中,基于以上描述的方法确定了增强矩阵T,该增强矩阵T表征了样本图像R中最敏感的伪造区域,依据增强矩阵T可以确定互补矩阵T’。As shown in Fig. 5B, in the example where the target object is a face, an enhancement matrix T is determined based on the method described above, the enhancement matrix T represents the most sensitive forgery area in the sample image R, and the complementary matrix can be determined according to the enhancement matrix T T'.

对样本图像R进行平滑处理,得到平滑样本图像M,根据上述增强图像计算公式,分别计算增强矩阵与平滑样本图像M之间的哈达马乘积以及样本图像R与互补矩阵T’之间的哈达马乘积,并将两个哈达马乘积结果相加,从而得到增强图像D。该增强图像D中保留了样本图像R的大部分语义信息,而且增强图像D对于样本图像R中潜在的伪造区域(虚线部分示出)进行了特征增强,这样,后续在利用增强图像D训练模型时,可以使模型学习到更稳定、准确的特征。Perform smoothing on the sample image R to obtain a smoothed sample image M, and calculate the Hadamard product between the enhanced matrix and the smoothed sample image M and the Hadamard product between the sample image R and the complementary matrix T' according to the above enhanced image calculation formula. product, and add the two Hadamard product results to obtain the enhanced image D. The enhanced image D retains most of the semantic information of the sample image R, and the enhanced image D enhances the potential forgery area (shown by the dotted line) in the sample image R. In this way, the enhanced image D is used to train the model later. , the model can learn more stable and accurate features.

图6是根据本公开的实施例的训练深度学习模型的方法的流程图。以下将参考图6对训练深度学习模型的方法的示例性实现方式进行说明。6 is a flowchart of a method of training a deep learning model according to an embodiment of the present disclosure. An exemplary implementation of the method of training a deep learning model will be described below with reference to FIG. 6 .

如图6所示,训练深度学习模型的方法包括操作S641~S643。该深度学习模型包括特征提取模块、池化模块和全连接模块。As shown in FIG. 6 , the method for training a deep learning model includes operations S641 to S643. The deep learning model includes a feature extraction module, a pooling module and a fully connected module.

在操作S641,根据增强图像的增强向量特征图,得到增强融合特征图。In operation S641, an enhanced fusion feature map is obtained according to the enhanced vector feature map of the enhanced image.

所述增强向量特征图例如可以是将增强图像输入特征提取模块而得到的,将该增强向量特征图输入池化模块可以得到增强融合特征图。For example, the enhanced vector feature map may be obtained by inputting the enhanced image into the feature extraction module, and the enhanced vector feature map may be input into the pooling module to obtain the enhanced fusion feature map.

在本公开实施例中,获取增强向量特征图、增强融合特征图分别与获取初始向量特征图、融合特征图的方式类似,这里不再赘述。In the embodiment of the present disclosure, obtaining the enhanced vector feature map and the enhanced fusion feature map are respectively similar to the manners of obtaining the initial vector feature map and the fusion feature map, which will not be repeated here.

在操作S642,根据增强融合特征图,得到目标对象的第二分类差异值。In operation S642, a second classification difference value of the target object is obtained according to the enhanced fusion feature map.

例如,可以将增强融合特征图输入到全连接模块中,并将全连接模块的输出输入到分类网络中,得到针对目标对象的增强分类结果值,依据该增强分类结果值可以确定目标对象的第二分类差异值。For example, the enhanced fusion feature map can be input into the fully connected module, and the output of the fully connected module can be input into the classification network to obtain the enhanced classification result value for the target object. Dichotomous difference value.

在操作S643,根据第二分类差异值,调整特征提取模块、池化模块和全连接模块的参数。In operation S643, the parameters of the feature extraction module, the pooling module and the fully connected module are adjusted according to the second classification difference value.

在本公开实施例中,基于增强图像来确定目标对象的第二分类差异值,并根据第二分类差异值调整深度学习模型的各模块的参数,由于增强图像对于样本图像中潜在的伪造区域进行了特征增强,这样在利用增强图像训练模型时,可以使模型学习到更稳定、准确的特征,从而提高了模型识别准确率。In the embodiment of the present disclosure, the second classification difference value of the target object is determined based on the enhanced image, and the parameters of each module of the deep learning model are adjusted according to the second classification difference value. Feature enhancement is implemented, so that when the model is trained with enhanced images, the model can learn more stable and accurate features, thereby improving the accuracy of model recognition.

下面将参考图7A和图7B来说明上述操作S642的示例实现方式。An example implementation of the above operation S642 will be described below with reference to FIGS. 7A and 7B .

图7A是根据本公开的实施例的确定目标对象的第二分类差异值的方法的流程图。7A is a flowchart of a method of determining a second classification difference value of a target object according to an embodiment of the present disclosure.

如图7A所示,确定目标对象的第二分类差异值的方法包括操作S7421~S7422。As shown in FIG. 7A , the method of determining the second classification difference value of the target object includes operations S7421˜S7422.

在操作S7421,根据增强融合特征图,确定目标对象的增强分类结果值。In operation S7421, the enhanced classification result value of the target object is determined according to the enhanced fusion feature map.

例如,可以将增强融合特征图输入到全连接模块中,并将全连接模块的输出输入到分类网络中,得到目标对象的增强分类结果值。For example, the enhanced fusion feature map can be input into the fully connected module, and the output of the fully connected module can be input into the classification network to obtain the enhanced classification result value of the target object.

在操作S7422,根据初始分类结果值和增强分类结果值,确定目标对象的第二分类差异值。In operation S7422, a second classification difference value of the target object is determined according to the initial classification result value and the enhanced classification result value.

前面已经介绍,将融合特征图输入到全连接模块中,并将全连接模块的输出输入到分类网络中,可以得到针对目标对象的初始分类结果值。As mentioned above, the fusion feature map is input into the fully connected module, and the output of the fully connected module is input into the classification network, and the initial classification result value for the target object can be obtained.

根据本公开的实施例,根据初始分类结果值和增强分类结果值之间的相对熵,可以确定目标对象的第二分类差异值。According to an embodiment of the present disclosure, according to the relative entropy between the initial classification result value and the enhanced classification result value, the second classification difference value of the target object may be determined.

具体地,初始分类结果值和增强分类结果值之间的相对熵可以采用如下公式(12)计算:Specifically, the relative entropy between the initial classification result value and the enhanced classification result value can be calculated using the following formula (12):

公式(12)中,LPC表示初始分类结果值和增强分类结果值之间的相对熵,N为一个批次中样本图像的数量,表示初始分类结果值,表示增强分类结果值。In formula (12), LPC represents the relative entropy between the initial classification result value and the enhanced classification result value, N is the number of sample images in a batch, represents the initial classification result value, Represents the enhanced classification result value.

在本公开实施例中,可以将初始分类结果值和增强分类结果值之间的相对熵作为目标对象的第二分类差异值,以用于调整深度学习模型的各模块的参数。In the embodiment of the present disclosure, the relative entropy between the initial classification result value and the enhanced classification result value may be used as the second classification difference value of the target object to adjust the parameters of each module of the deep learning model.

图7B是本公开另一实施例的确定目标对象的第二分类差异值的方法的流程图。7B is a flowchart of a method for determining a second classification difference value of a target object according to another embodiment of the present disclosure.

如图7B所示,确定目标对象的第二分类差异值的方法包括操作S7423~S7424。As shown in FIG. 7B , the method of determining the second classification difference value of the target object includes operations S7423˜S7424.

在操作S7423,根据增强融合特征图,确定目标对象的增强分类结果值。In operation S7423, the enhanced classification result value of the target object is determined according to the enhanced fusion feature map.

在本操作中,确定目标对象的增强分类结果值与以上描述的方法相同或类似,在此不做赘述。In this operation, determining the enhanced classification result value of the target object is the same as or similar to the method described above, and will not be repeated here.

在操作S7424,根据标签和增强分类结果值,确定目标对象的第二分类差异值。In operation S7424, a second classification difference value of the target object is determined according to the label and the enhanced classification result value.

根据增强分类结果值以及目标对象的标签对应的标签值,可以得到目标对象的第二分类差异值。According to the enhanced classification result value and the label value corresponding to the label of the target object, the second classification difference value of the target object can be obtained.

在本公开实施例中,可以采用如下公式(13)计算样本图像中目标对象的第二分类差异值:In the embodiment of the present disclosure, the following formula (13) can be used to calculate the second classification difference value of the target object in the sample image:

公式(13)中,表示第j个样本图像中目标对象的第二分类差异值,yi表示第i个样本图像中目标对象的标签对应的标签值,表示第i个样本图像中目标对象的增强分类结果值。In formula (13), represents the second classification difference value of the target object in the jth sample image, yi represents the label value corresponding to the label of the target object in the ith sample image, Represents the enhanced classification result value of the target object in the ith sample image.

基于上述公式,可以准确确定目标对象的第二分类差异值,从而提高模型训练的准确性。Based on the above formula, the second classification difference value of the target object can be accurately determined, thereby improving the accuracy of model training.

图8A、图8B和图8C是根据本公开的实施例的深度学习模型的训练方法的示意图,以下将参考图8A至图8C对本公开的方案进行说明。8A , 8B and 8C are schematic diagrams of a training method of a deep learning model according to an embodiment of the present disclosure, and the solution of the present disclosure will be described below with reference to FIGS. 8A to 8C .

如图8A至8C所示,在本公开实施例中,深度学习模型800包括特征提取模块810(810′)、池化模块820(820′)、全连接模块830(830′)和分类器840(840′)。根据本公开实施例的深度学习模型的训练方法可以完成对特征提取模块810、池化模块820、全连接模块830和分类器840的训练,直至各模块收敛。As shown in FIGS. 8A to 8C , in an embodiment of the present disclosure, the

如图8A所示,使用特征提取模块810对包含目标对象以及目标对象的标签的样本图像R执行特征提取操作,得到样本图像R的初始向量特征图Fr。使用池化模块820对初始向量特征图Fr执行池化操作,得到第一池化特征图,利用第一权重U对第一池化特征图进行处理,得到加权池化特征图,以及将第一池化特征图和加权池化特征图进行融合,得到融合特征图Er。将融合特征图Er输入全连接模块830,并将全连接模块830输出的结果输入分类器840中,得到目标对象的初始分类结果值Cr。依据初始分类结果值Cr以及目标对象的标签对应的标签值来计算850得到第一分类差异值Lr。依据第一分类差异值Lr、第一权重U以及以上得到的初始向量特征图Fr可以确定860增强图像D。该增强图像D能够增强池化特征图中潜在的伪造特征,从而使得模型更加关注潜在的伪造特征,有利于提高模型的训练准确度。As shown in FIG. 8A , the

如图8B所示,确定增强图像D之后,可以使用特征提取模块810′对增强图像D执行特征提取操作,得到增强融合特征图Fd。将增强融合特征图Fd和第一权重U输入池化模块820′,得到增强融合特征图Ed,获取增强融合特征图Ed的方式与获取融合特征图Er的方式类似,此处不再详细说明。将增强融合特征图Ed输入全连接模块830′,并将全连接模块830′输出的结果输入分类器840′中,得到目标对象的增强分类结果值Cd。根据增强分类结果值Cd和目标对象的标签可以依据公式(13)计算870得到第二分类差异值Ld,计算得到的第二分类差异值Ld可以用于调整特征提取模块810、池化模块820、全连接模块830和分类器840的参数。As shown in FIG. 8B , after the enhanced image D is determined, the

图8C示出了另一实施例的确定目标对象的第二分类差异值的方法的示例图。FIG. 8C shows an example diagram of a method for determining a second classification difference value of a target object according to another embodiment.

如图8C所示,得到目标对象的增强分类结果值Cd之后,可以根据增强分类结果值Cd和初始分类结果值Cr并依据公式(12)来计算870′得到初始分类结果值Cr和增强分类结果值Cd之间的相对熵。在本公开实施例中,可以将上述相对熵作为第二分类差异值Ld′,以用于调整特征提取模块810、池化模块820、全连接模块830和分类器840的参数。As shown in FIG. 8C , after obtaining the enhanced classification result value Cd of the target object, the initial classification result value Cr and the enhanced classification result can be obtained by calculating 870 ′ according to the enhanced classification result value Cd and the initial classification result value Cr and according to formula (12). Relative entropy between values Cd. In the embodiment of the present disclosure, the above relative entropy may be used as the second classification difference value Ld′ to adjust the parameters of the

在本公开实施例的方案中,通过利用目标对象的第一分类差异值和初始向量特征来确定增强图像,并利用增强图像训练深度学习模型,在此过程中,无需引入大量的网络训练参数,降低了模型训练过程的计算量,而且利用增强图像来训练深度学习模型,使得模型学习更加鲁棒的伪造特征,提高了模型识别准确率。In the solution of the embodiment of the present disclosure, the enhanced image is determined by using the first classification difference value of the target object and the initial vector feature, and the enhanced image is used to train the deep learning model. In this process, there is no need to introduce a large number of network training parameters, The calculation amount of the model training process is reduced, and the enhanced image is used to train the deep learning model, which enables the model to learn more robust forgery features and improves the model recognition accuracy.

根据本公开的实施例,可以利用多个批次的样本图像来训练上述深度学习模型,直至模型收敛。利用每个样本图像训练模型的过程与以上描述的过程相同或类似,在此不再赘述。经训练的深度学习模型可以用于目标对象的识别,下面将参考图9对目标对象识别方法进行说明。According to an embodiment of the present disclosure, the above-mentioned deep learning model can be trained by using multiple batches of sample images until the model converges. The process of using each sample image to train the model is the same as or similar to the process described above, and will not be repeated here. The trained deep learning model can be used for target object recognition, and the target object recognition method will be described below with reference to FIG. 9 .

图9是根据本公开的实施例的目标对象识别方法的流程图。FIG. 9 is a flowchart of a target object recognition method according to an embodiment of the present disclosure.

如图9所示,目标对象识别方法900包括操作S910~S930。As shown in FIG. 9, the target

在操作S910,获取待识别图像。In operation S910, an image to be recognized is acquired.

待识别图像可以是通过相机采集而得到的视频流中的任意一帧或多帧图像,或者可以采用其他方式获取,本公开不以此为限。The image to be recognized may be any one frame or multiple frames of images in a video stream acquired by a camera, or may be acquired in other manners, and the present disclosure is not limited thereto.

根据本公开的实施例,待识别图像包括一个或多个目标对象,所谓的目标对象例如可以包括面部,或者是其他对象,这里不做限定。According to an embodiment of the present disclosure, the image to be recognized includes one or more target objects, and the so-called target objects may include, for example, a face or other objects, which are not limited here.

在操作S920,获取深度学习模型。In operation S920, a deep learning model is obtained.

根据本公开的实施例,这里所谓的深度学习模型是基于上述实施例中任一项所述的深度学习模型的训练方法训练得到的。According to an embodiment of the present disclosure, the so-called deep learning model here is obtained by training based on the training method of the deep learning model described in any one of the foregoing embodiments.

在操作S930,将待识别图像输入深度学习模型,得到待识别图像中目标对象的识别结果。In operation S930, the image to be recognized is input into the deep learning model, and a recognition result of the target object in the image to be recognized is obtained.

将上述待识别图像输入深度学习模型中,可以得到待识别图像中目标对象的识别结果。在目标对象为面部的示例中,这里所谓的识别结果可以包括真实面部和伪造面部。By inputting the above image to be recognized into the deep learning model, the recognition result of the target object in the image to be recognized can be obtained. In the example in which the target object is a face, the so-called recognition result here may include a real face and a fake face.

在本公开实施例的方案中,通过利用以上方式训练得到的深度学习模型来识别待识别图像,可以使得模型学习更加鲁棒的伪造特征,从而提高模型识别准确率。In the solution of the embodiment of the present disclosure, by using the deep learning model trained in the above manner to recognize the image to be recognized, the model can learn more robust forgery features, thereby improving the model recognition accuracy.

图10是根据本公开的实施例的深度学习模型的训练装置的框图。10 is a block diagram of a training apparatus of a deep learning model according to an embodiment of the present disclosure.

如图10所示,深度学习模型的训练装置1000包括融合模块1010、计算模块1020、增强模块1030和训练模块1040。As shown in FIG. 10 , the

融合模块1010用于根据样本图像的初始向量特征图,得到融合特征图,样本图像包括目标对象以及目标对象的标签。The

计算模块1020用于根据融合特征图和标签,得到目标对象的第一分类差异值。The

增强模块1030用于根据第一分类差异值和初始向量特征图,确定与样本图像相对应的增强图像。以及The

训练模块1040用于利用增强图像训练深度学习模型。The

根据本公开的实施例,融合模块1010包括池化子模块、加权子模块和第一融合子模块。According to an embodiment of the present disclosure, the

池化子模块用于对初始向量特征图执行池化操作,得到第一池化特征图。The pooling sub-module is used to perform a pooling operation on the initial vector feature map to obtain the first pooled feature map.

加权子模块用于利用第一权重对第一池化特征图进行处理,得到加权池化特征图。以及The weighting sub-module is used to process the first pooled feature map with the first weight to obtain a weighted pooled feature map. as well as

第一融合子模块用于根据第一池化特征图和加权池化特征图,得到融合特征图。The first fusion sub-module is used to obtain a fusion feature map according to the first pooled feature map and the weighted pooled feature map.

根据本公开的实施例,上述第一权重指示了目标对象的特征的分布概率。According to an embodiment of the present disclosure, the above-mentioned first weight indicates the distribution probability of the feature of the target object.

根据本公开的实施例,增强模块1030包括注意力子模块、选择子模块、生成子模块和增强子模块。According to an embodiment of the present disclosure, the

注意力子模块用于根据初始向量图和第一权重,确定注意力特征图。The attention sub-module is used to determine the attention feature map according to the initial vector map and the first weight.

选择子模块用于确定注意力特征图中,数值较大的预定数目个元素。The selection sub-module is used to determine a predetermined number of elements with larger values in the attention feature map.

生成子模块用于根据预定数目个元素,得到增强矩阵。以及The generating sub-module is used to obtain an enhancement matrix according to a predetermined number of elements. as well as

增强子模块用于根据增强矩阵和样本图像,确定增强图像。The enhancement sub-module is used to determine the enhanced image according to the enhancement matrix and the sample image.

根据本公开的实施例,选择子模块包括选择单元,选择单元用于根据选择阈值,确定预定数目。According to an embodiment of the present disclosure, the selection sub-module includes a selection unit configured to determine the predetermined number according to the selection threshold.

根据本公开的实施例,样本图像包括N个样本图像,N为大于1的整数。上述装置1000还包括第一确定模块和第二确定模块。According to an embodiment of the present disclosure, the sample images include N sample images, where N is an integer greater than 1. The above-mentioned

第一确定模块用于确定N个样本图像的N个第一分类差异值的平均第一分类差异值。以及The first determination module is configured to determine the average first classification difference value of N first classification difference values of the N sample images. as well as

第二确定模块用于根据平均第一分类差异值,确定选择阈值。The second determining module is configured to determine the selection threshold according to the average first classification difference value.

根据本公开的实施例,增强子模块包括平滑单元和增强单元。According to an embodiment of the present disclosure, the enhancement sub-module includes a smoothing unit and an enhancement unit.

平滑单元用于对样本图像进行平滑处理,得到平滑样本图像。以及增强单元用于根据平滑样本图像、样本图像以及增强矩阵,确定增强图像。The smoothing unit is used for smoothing the sample image to obtain a smoothed sample image. and an enhancement unit for determining an enhanced image according to the smoothed sample image, the sample image and the enhancement matrix.

根据本公开的实施例,上述深度学习模型包括特征提取模块、池化模块和全连接模块,训练模块1040包括第二融合子模块、计算子模块和调整子模块。According to an embodiment of the present disclosure, the above-mentioned deep learning model includes a feature extraction module, a pooling module, and a fully connected module, and the

第二融合子模块用于根据增强图像的增强向量特征图,得到增强融合特征图。The second fusion sub-module is used to obtain the enhanced fusion feature map according to the enhanced vector feature map of the enhanced image.

计算子模块用于根据增强融合特征图和标签,得到目标对象的第二分类差异值。以及The calculation sub-module is used to obtain the second classification difference value of the target object according to the enhanced fusion feature map and the label. as well as

调整子模块用于根据第二分类差异值,调整特征提取模块、池化模块和全连接模块的参数。The adjustment sub-module is used to adjust the parameters of the feature extraction module, the pooling module and the fully connected module according to the difference value of the second classification.

根据本公开的实施例,计算子模块包括第一计算单元和第二计算单元。According to an embodiment of the present disclosure, the calculation submodule includes a first calculation unit and a second calculation unit.

第一计算单元用于根据增强融合特征图,确定目标对象的增强分类结果值。The first computing unit is configured to determine the enhanced classification result value of the target object according to the enhanced fusion feature map.

第二计算单元用于根据初始分类结果值和增强分类结果值,确定目标对象的第二分类差异值,初始分类结果值是基于融合特征图得到的。The second calculation unit is configured to determine the second classification difference value of the target object according to the initial classification result value and the enhanced classification result value, and the initial classification result value is obtained based on the fusion feature map.

根据本公开的实施例,第二计算单元包括计算子单元,计算子单元用于根据初始分类结果值和增强分类结果值之间的相对熵,确定目标对象的第二分类差异值。According to an embodiment of the present disclosure, the second calculation unit includes a calculation subunit for determining the second classification difference value of the target object according to the relative entropy between the initial classification result value and the enhanced classification result value.

根据本公开实施例,计算子模块包括第三计算单元和第四计算单元。According to an embodiment of the present disclosure, the calculation submodule includes a third calculation unit and a fourth calculation unit.

第三计算单元用于根据增强融合特征图,确定目标对象的增强分类结果值。以及The third computing unit is configured to determine the enhanced classification result value of the target object according to the enhanced fusion feature map. as well as

第四计算单元用于根据标签和增强分类结果值,确定目标对象的第二分类差异值。The fourth calculation unit is configured to determine the second classification difference value of the target object according to the label and the enhanced classification result value.

图11是根据本公开的实施例的目标对象识别装置的框图。FIG. 11 is a block diagram of a target object recognition apparatus according to an embodiment of the present disclosure.

如图11所示,目标对象识别装置1100包括第一获取模块1110、第二获取模块1120和识别模块1130。As shown in FIG. 11 , the target

第一获取模块1110用于获取待识别图像。The

第二获取模块1120用于获取深度学习模型,该深度学习模型是基于上述实施例中任一项的深度学习模型的训练装置训练得到的。The

识别模块1130用于将待识别图像输入深度学习模型,得到待识别图像中目标对象的识别结果。The

根据本公开实施例,目标对象包括面部,识别结果包括真实面部和伪造面部。According to an embodiment of the present disclosure, the target object includes a face, and the recognition result includes a real face and a fake face.

需要说明的是,装置部分实施例中各模块/单元/子单元等的实施方式、解决的技术问题、实现的功能、以及达到的技术效果分别与方法部分实施例中各对应的步骤的实施方式、解决的技术问题、实现的功能、以及达到的技术效果相同或类似,在此不再赘述。It should be noted that the implementations of each module/unit/subunit, etc., the technical problems solved, the functions realized, and the technical effects achieved in some embodiments of the apparatus are respectively the implementations of the corresponding steps in the embodiments of the method part. , the technical problem solved, the function realized, and the technical effect achieved are the same or similar, and will not be repeated here.

本公开的技术方案中,所涉及的用户个人信息的收集、存储、使用、加工、传输、提供和公开等处理,均符合相关法律法规的规定,且不违背公序良俗。In the technical solutions of the present disclosure, the collection, storage, use, processing, transmission, provision, and disclosure of the user's personal information involved are all in compliance with relevant laws and regulations, and do not violate public order and good customs.

根据本公开的实施例,本公开还提供了一种电子设备、一种可读存储介质和一种计算机程序产品。According to embodiments of the present disclosure, the present disclosure also provides an electronic device, a readable storage medium, and a computer program product.

图12示出了可以用来实施本公开的实施例的示例电子设备1200的示意性框图。电子设备旨在表示各种形式的数字计算机,诸如,膝上型计算机、台式计算机、工作台、个人数字助理、服务器、刀片式服务器、大型计算机、和其它适合的计算机。电子设备还可以表示各种形式的移动装置,诸如,个人数字处理、蜂窝电话、智能电话、可穿戴设备和其它类似的计算装置。本文所示的部件、它们的连接和关系、以及它们的功能仅仅作为示例,并且不意在限制本文中描述的和/或者要求的本公开的实现。12 shows a schematic block diagram of an example

如图12所示,设备1200包括计算单元1201,其可以根据存储在只读存储器(ROM)1202中的计算机程序或者从存储单元1208加载到随机访问存储器(RAM)1203中的计算机程序,来执行各种适当的动作和处理。在RAM 1203中,还可存储设备1200操作所需的各种程序和数据。计算单元1201、ROM 1202以及RAM 1203通过总线1204彼此相连。输入/输出(I/O)接口1205也连接至总线1204。As shown in FIG. 12 , the

设备1200中的多个部件连接至I/O接口1205,包括:输入单元1206,例如键盘、鼠标等;输出单元1207,例如各种类型的显示器、扬声器等;存储单元1208,例如磁盘、光盘等;以及通信单元1209,例如网卡、调制解调器、无线通信收发机等。通信单元1209允许设备1200通过诸如因特网的计算机网络和/或各种电信网络与其他设备交换信息/数据。Various components in the

计算单元1201可以是各种具有处理和计算能力的通用和/或专用处理组件。计算单元1201的一些示例包括但不限于中央处理单元(CPU)、图形处理单元(GPU)、各种专用的人工智能(AI)计算芯片、各种运行机器学习模型算法的计算单元、数字信号处理器(DSP)、以及任何适当的处理器、控制器、微控制器等。计算单元1201执行上文所描述的各个方法和处理,例如深度学习模型的训练方法以及目标对象识别方法。例如,在一些实施例中,深度学习模型的训练方法以及目标对象识别方法可被实现为计算机软件程序,其被有形地包含于机器可读介质,例如存储单元1208。在一些实施例中,计算机程序的部分或者全部可以经由ROM 1202和/或通信单元1209而被载入和/或安装到设备1200上。当计算机程序加载到RAM 1203并由计算单元1201执行时,可以执行上文描述的深度学习模型的训练方法以及目标对象识别方法的一个或多个步骤。备选地,在其他实施例中,计算单元1201可以通过其他任何适当的方式(例如,借助于固件)而被配置为执行深度学习模型的训练方法以及目标对象识别方法。

本文中以上描述的系统和技术的各种实施方式可以在数字电子电路系统、集成电路系统、场可编程门阵列(FPGA)、专用集成电路(ASIC)、专用标准产品(ASSP)、芯片上系统的系统(SOC)、复杂可编程逻辑设备(CPLD)、计算机硬件、固件、软件、和/或它们的组合中实现。这些各种实施方式可以包括:实施在一个或者多个计算机程序中,该一个或者多个计算机程序可在包括至少一个可编程处理器的可编程系统上执行和/或解释,该可编程处理器可以是专用或者通用可编程处理器,可以从存储系统、至少一个输入装置、和至少一个输出装置接收数据和指令,并且将数据和指令传输至该存储系统、该至少一个输入装置、和该至少一个输出装置。Various implementations of the systems and techniques described herein above may be implemented in digital electronic circuitry, integrated circuit systems, field programmable gate arrays (FPGAs), application specific integrated circuits (ASICs), application specific standard products (ASSPs), systems on chips system (SOC), complex programmable logic device (CPLD), computer hardware, firmware, software, and/or combinations thereof. These various embodiments may include being implemented in one or more computer programs executable and/or interpretable on a programmable system including at least one programmable processor that The processor, which may be a special purpose or general-purpose programmable processor, may receive data and instructions from a storage system, at least one input device, and at least one output device, and transmit data and instructions to the storage system, the at least one input device, and the at least one output device an output device.

用于实施本公开的方法的程序代码可以采用一个或多个编程语言的任何组合来编写。这些程序代码可以提供给通用计算机、专用计算机或其他可编程数据处理装置的处理器或控制器,使得程序代码当由处理器或控制器执行时使流程图和/或框图中所规定的功能/操作被实施。程序代码可以完全在机器上执行、部分地在机器上执行,作为独立软件包部分地在机器上执行且部分地在远程机器上执行或完全在远程机器或服务器上执行。Program code for implementing the methods of the present disclosure may be written in any combination of one or more programming languages. These program codes may be provided to a processor or controller of a general purpose computer, special purpose computer or other programmable data processing apparatus, such that the program code, when executed by the processor or controller, performs the functions/functions specified in the flowcharts and/or block diagrams. Action is implemented. The program code may execute entirely on the machine, partly on the machine, partly on the machine and partly on a remote machine as a stand-alone software package or entirely on the remote machine or server.

在本公开的上下文中,机器可读介质可以是有形的介质,其可以包含或存储以供指令执行系统、装置或设备使用或与指令执行系统、装置或设备结合地使用的程序。机器可读介质可以是机器可读信号介质或机器可读储存介质。机器可读介质可以包括但不限于电子的、磁性的、光学的、电磁的、红外的、或半导体系统、装置或设备,或者上述内容的任何合适组合。机器可读存储介质的更具体示例会包括基于一个或多个线的电气连接、便携式计算机盘、硬盘、随机存取存储器(RAM)、只读存储器(ROM)、可擦除可编程只读存储器(EPROM或快闪存储器)、光纤、便捷式紧凑盘只读存储器(CD-ROM)、光学储存设备、磁储存设备、或上述内容的任何合适组合。In the context of the present disclosure, a machine-readable medium may be a tangible medium that may contain or store a program for use by or in connection with the instruction execution system, apparatus or device. The machine-readable medium may be a machine-readable signal medium or a machine-readable storage medium. Machine-readable media may include, but are not limited to, electronic, magnetic, optical, electromagnetic, infrared, or semiconductor systems, devices, or devices, or any suitable combination of the foregoing. More specific examples of machine-readable storage media would include one or more wire-based electrical connections, portable computer disks, hard disks, random access memory (RAM), read only memory (ROM), erasable programmable read only memory (EPROM or flash memory), fiber optics, compact disk read only memory (CD-ROM), optical storage, magnetic storage, or any suitable combination of the foregoing.

为了提供与用户的交互,可以在计算机上实施此处描述的系统和技术,该计算机具有:用于向用户显示信息的显示装置(例如,CRT(阴极射线管)或者LCD(液晶显示器)监视器);以及键盘和指向装置(例如,鼠标或者轨迹球),用户可以通过该键盘和该指向装置来将输入提供给计算机。其它种类的装置还可以用于提供与用户的交互;例如,提供给用户的反馈可以是任何形式的传感反馈(例如,视觉反馈、听觉反馈、或者触觉反馈);并且可以用任何形式(包括声输入、语音输入或者、触觉输入)来接收来自用户的输入。To provide interaction with a user, the systems and techniques described herein may be implemented on a computer having a display device (eg, a CRT (cathode ray tube) or LCD (liquid crystal display) monitor) for displaying information to the user ); and a keyboard and pointing device (eg, a mouse or trackball) through which a user can provide input to the computer. Other kinds of devices can also be used to provide interaction with the user; for example, the feedback provided to the user can be any form of sensory feedback (eg, visual feedback, auditory feedback, or tactile feedback); and can be in any form (including acoustic input, voice input, or tactile input) to receive input from the user.

可以将此处描述的系统和技术实施在包括后台部件的计算系统(例如,作为数据服务器)、或者包括中间件部件的计算系统(例如,应用服务器)、或者包括前端部件的计算系统(例如,具有图形用户界面或者网络浏览器的用户计算机,用户可以通过该图形用户界面或者该网络浏览器来与此处描述的系统和技术的实施方式交互)、或者包括这种后台部件、中间件部件、或者前端部件的任何组合的计算系统中。可以通过任何形式或者介质的数字数据通信(例如,通信网络)来将系统的部件相互连接。通信网络的示例包括:局域网(LAN)、广域网(WAN)和互联网。The systems and techniques described herein may be implemented on a computing system that includes back-end components (eg, as a data server), or a computing system that includes middleware components (eg, an application server), or a computing system that includes front-end components (eg, a user's computer having a graphical user interface or web browser through which a user may interact with implementations of the systems and techniques described herein), or including such backend components, middleware components, Or any combination of front-end components in a computing system. The components of the system may be interconnected by any form or medium of digital data communication (eg, a communication network). Examples of communication networks include: Local Area Networks (LANs), Wide Area Networks (WANs), and the Internet.

计算机系统可以包括客户端和服务器。客户端和服务器一般远离彼此并且通常通过通信网络进行交互。通过在相应的计算机上运行并且彼此具有客户端-服务器关系的计算机程序来产生客户端和服务器的关系。A computer system can include clients and servers. Clients and servers are generally remote from each other and usually interact through a communication network. The relationship of client and server arises by computer programs running on the respective computers and having a client-server relationship to each other.

应该理解,可以使用上面所示的各种形式的流程,重新排序、增加或删除步骤。例如,本发公开中记载的各步骤可以并行地执行也可以顺序地执行也可以不同的次序执行,只要能够实现本公开公开的技术方案所期望的结果,本文在此不进行限制。It should be understood that steps may be reordered, added or deleted using the various forms of flow shown above. For example, the steps described in the present disclosure can be executed in parallel, sequentially, or in different orders. As long as the desired results of the technical solutions disclosed in the present disclosure can be achieved, there is no limitation herein.

上述具体实施方式,并不构成对本公开保护范围的限制。本领域技术人员应该明白的是,根据设计要求和其他因素,可以进行各种修改、组合、子组合和替代。任何在本公开的精神和原则之内所作的修改、等同替换和改进等,均应包含在本公开保护范围之内。The above-mentioned specific embodiments do not constitute a limitation on the protection scope of the present disclosure. It should be understood by those skilled in the art that various modifications, combinations, sub-combinations and substitutions may occur depending on design requirements and other factors. Any modifications, equivalent replacements, and improvements made within the spirit and principles of the present disclosure should be included within the protection scope of the present disclosure.

Claims (29)

Translated fromChinesePriority Applications (1)

| Application Number | Priority Date | Filing Date | Title |

|---|---|---|---|

| CN202210234795.7ACN114612743A (en) | 2022-03-10 | 2022-03-10 | Deep learning model training method, target object identification method and device |

Applications Claiming Priority (1)

| Application Number | Priority Date | Filing Date | Title |

|---|---|---|---|

| CN202210234795.7ACN114612743A (en) | 2022-03-10 | 2022-03-10 | Deep learning model training method, target object identification method and device |

Publications (1)

| Publication Number | Publication Date |

|---|---|

| CN114612743Atrue CN114612743A (en) | 2022-06-10 |

Family

ID=81862803

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |

|---|---|---|---|

| CN202210234795.7APendingCN114612743A (en) | 2022-03-10 | 2022-03-10 | Deep learning model training method, target object identification method and device |

Country Status (1)

| Country | Link |

|---|---|

| CN (1) | CN114612743A (en) |

Cited By (5)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| CN115471717A (en)* | 2022-09-20 | 2022-12-13 | 北京百度网讯科技有限公司 | Model semi-supervised training and classification method and device, equipment, medium and product |

| CN115482395A (en)* | 2022-09-30 | 2022-12-16 | 北京百度网讯科技有限公司 | Model training method, image classification method, device, electronic equipment and medium |

| CN116012666A (en)* | 2022-12-20 | 2023-04-25 | 百度时代网络技术(北京)有限公司 | Image generation, model training and information reconstruction methods and devices and electronic equipment |

| CN116071628A (en)* | 2023-02-06 | 2023-05-05 | 北京百度网讯科技有限公司 | Image processing method, device, electronic equipment and storage medium |

| CN116416440A (en)* | 2023-01-13 | 2023-07-11 | 北京百度网讯科技有限公司 | Target recognition method, model training method, device, medium and electronic equipment |

Citations (8)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| WO2020102988A1 (en)* | 2018-11-20 | 2020-05-28 | 西安电子科技大学 | Feature fusion and dense connection based infrared plane target detection method |

| CN112801164A (en)* | 2021-01-22 | 2021-05-14 | 北京百度网讯科技有限公司 | Training method, device and equipment of target detection model and storage medium |

| CN113139543A (en)* | 2021-04-28 | 2021-07-20 | 北京百度网讯科技有限公司 | Training method of target object detection model, target object detection method and device |

| CN113537249A (en)* | 2021-08-17 | 2021-10-22 | 浙江大华技术股份有限公司 | Image determination method, device, storage medium and electronic device |

| CN113657181A (en)* | 2021-07-23 | 2021-11-16 | 西北工业大学 | A Rotating Target Detection Method in SAR Image Based on Smooth Label Coding and Feature Enhancement |

| US20210365741A1 (en)* | 2019-05-08 | 2021-11-25 | Tencent Technology (Shenzhen) Company Limited | Image classification method, computer-readable storage medium, and computer device |

| CN113705425A (en)* | 2021-08-25 | 2021-11-26 | 北京百度网讯科技有限公司 | Training method of living body detection model, and method, device and equipment for living body detection |

| CN113902625A (en)* | 2021-08-19 | 2022-01-07 | 深圳市朗驰欣创科技股份有限公司 | Infrared image enhancement method based on deep learning |

- 2022

- 2022-03-10CNCN202210234795.7Apatent/CN114612743A/enactivePending

Patent Citations (8)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| WO2020102988A1 (en)* | 2018-11-20 | 2020-05-28 | 西安电子科技大学 | Feature fusion and dense connection based infrared plane target detection method |

| US20210365741A1 (en)* | 2019-05-08 | 2021-11-25 | Tencent Technology (Shenzhen) Company Limited | Image classification method, computer-readable storage medium, and computer device |

| CN112801164A (en)* | 2021-01-22 | 2021-05-14 | 北京百度网讯科技有限公司 | Training method, device and equipment of target detection model and storage medium |

| CN113139543A (en)* | 2021-04-28 | 2021-07-20 | 北京百度网讯科技有限公司 | Training method of target object detection model, target object detection method and device |

| CN113657181A (en)* | 2021-07-23 | 2021-11-16 | 西北工业大学 | A Rotating Target Detection Method in SAR Image Based on Smooth Label Coding and Feature Enhancement |

| CN113537249A (en)* | 2021-08-17 | 2021-10-22 | 浙江大华技术股份有限公司 | Image determination method, device, storage medium and electronic device |

| CN113902625A (en)* | 2021-08-19 | 2022-01-07 | 深圳市朗驰欣创科技股份有限公司 | Infrared image enhancement method based on deep learning |

| CN113705425A (en)* | 2021-08-25 | 2021-11-26 | 北京百度网讯科技有限公司 | Training method of living body detection model, and method, device and equipment for living body detection |

Non-Patent Citations (1)

| Title |

|---|

| 何志祥;胡俊伟;: "基于深度学习的无人机目标识别算法研究", 滨州学院学报, no. 02, 15 April 2019 (2019-04-15), pages 19 - 25* |

Cited By (10)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| CN115471717A (en)* | 2022-09-20 | 2022-12-13 | 北京百度网讯科技有限公司 | Model semi-supervised training and classification method and device, equipment, medium and product |

| CN115471717B (en)* | 2022-09-20 | 2023-06-20 | 北京百度网讯科技有限公司 | Semi-supervised training and classifying method device, equipment, medium and product of model |

| CN115482395A (en)* | 2022-09-30 | 2022-12-16 | 北京百度网讯科技有限公司 | Model training method, image classification method, device, electronic equipment and medium |

| CN115482395B (en)* | 2022-09-30 | 2024-02-20 | 北京百度网讯科技有限公司 | Model training method, image classification device, electronic equipment and medium |

| CN116012666A (en)* | 2022-12-20 | 2023-04-25 | 百度时代网络技术(北京)有限公司 | Image generation, model training and information reconstruction methods and devices and electronic equipment |

| CN116012666B (en)* | 2022-12-20 | 2023-10-27 | 百度时代网络技术(北京)有限公司 | Image generation, model training and information reconstruction methods and devices and electronic equipment |

| CN116416440A (en)* | 2023-01-13 | 2023-07-11 | 北京百度网讯科技有限公司 | Target recognition method, model training method, device, medium and electronic equipment |

| CN116416440B (en)* | 2023-01-13 | 2024-02-06 | 北京百度网讯科技有限公司 | Target recognition methods, model training methods, devices, media and electronic equipment |

| CN116071628A (en)* | 2023-02-06 | 2023-05-05 | 北京百度网讯科技有限公司 | Image processing method, device, electronic equipment and storage medium |

| CN116071628B (en)* | 2023-02-06 | 2024-04-05 | 北京百度网讯科技有限公司 | Image processing method, device, electronic device and storage medium |

Similar Documents

| Publication | Publication Date | Title |

|---|---|---|

| CN113379627B (en) | Training method of image enhancement model and method for enhancing image | |

| CN113627439B (en) | Text structuring processing method, processing device, electronic equipment and storage medium | |

| CN114612743A (en) | Deep learning model training method, target object identification method and device | |

| CN112966742A (en) | Model training method, target detection method and device and electronic equipment | |

| CN113971751A (en) | Training feature extraction model, and method and device for detecting similar images | |

| CN112561879B (en) | Ambiguity evaluation model training method, image ambiguity evaluation method and image ambiguity evaluation device | |

| CN114093006A (en) | Training method, device and equipment of living human face detection model and storage medium | |

| CN115358392B (en) | Training method of deep learning network, text detection method and device | |

| CN114882321A (en) | Deep learning model training method, target object detection method and device | |

| CN114898266B (en) | Training methods, image processing methods, devices, electronic equipment and storage media | |

| CN114494784A (en) | Training methods, image processing methods and object recognition methods of deep learning models | |

| CN113643260A (en) | Method, apparatus, apparatus, medium and product for detecting image quality | |

| CN113869253A (en) | Liveness detection method, training method, device, electronic device and medium | |

| CN114494747A (en) | Model training method, image processing method, device, electronic device and medium | |

| CN114429633A (en) | Text recognition method, model training method, device, electronic equipment and medium | |

| CN116311298B (en) | Information generation method, information processing method, device, electronic device, and medium | |

| CN115116111A (en) | Anti-disturbance face live detection model training method, device and electronic equipment | |

| CN113989568A (en) | Target detection method, training method, device, electronic device and storage medium | |

| CN114387651A (en) | A face recognition method, device, equipment and storage medium | |

| CN114495229A (en) | Image recognition processing method and device, equipment, medium and product | |

| CN114417029A (en) | Model training method, device, electronic device and storage medium | |

| CN115205555B (en) | Method for determining similar images, training method, information determining method and equipment | |

| CN114648814A (en) | Face liveness detection method and model training method, device, equipment and medium | |

| CN114926322A (en) | Image generation method and device, electronic equipment and storage medium | |

| CN114724144A (en) | Text recognition method, model training method, device, equipment and medium |

Legal Events

| Date | Code | Title | Description |

|---|---|---|---|

| PB01 | Publication | ||

| PB01 | Publication | ||

| SE01 | Entry into force of request for substantive examination | ||

| SE01 | Entry into force of request for substantive examination | ||

| WD01 | Invention patent application deemed withdrawn after publication | Application publication date:20220610 | |

| WD01 | Invention patent application deemed withdrawn after publication |