CN114511766A - Image identification method based on deep learning and related device - Google Patents


- Publication number

- CN114511766A (application CN202210092535.0A)

- Authority

- CN

- China

- Prior art keywords

- feature map

- convolution

- feature

- convolution kernel

- convolution operation


- Legal status

- Granted

Classifications

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F18/00—Pattern recognition

- G06F18/20—Analysing

- G06F18/25—Fusion techniques

- G06F18/253—Fusion techniques of extracted features

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F18/00—Pattern recognition

- G06F18/20—Analysing

- G06F18/21—Design or setup of recognition systems or techniques; Extraction of features in feature space; Blind source separation

- G06F18/214—Generating training patterns; Bootstrap methods, e.g. bagging or boosting

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F18/00—Pattern recognition

- G06F18/20—Analysing

- G06F18/24—Classification techniques

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06N—COMPUTING ARRANGEMENTS BASED ON SPECIFIC COMPUTATIONAL MODELS

- G06N3/00—Computing arrangements based on biological models

- G06N3/02—Neural networks

- G06N3/04—Architecture, e.g. interconnection topology

- G06N3/045—Combinations of networks

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06N—COMPUTING ARRANGEMENTS BASED ON SPECIFIC COMPUTATIONAL MODELS

- G06N3/00—Computing arrangements based on biological models

- G06N3/02—Neural networks

- G06N3/04—Architecture, e.g. interconnection topology

- G06N3/048—Activation functions

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06N—COMPUTING ARRANGEMENTS BASED ON SPECIFIC COMPUTATIONAL MODELS

- G06N3/00—Computing arrangements based on biological models

- G06N3/02—Neural networks

- G06N3/08—Learning methods

Landscapes

- Engineering & Computer Science (AREA)

- Theoretical Computer Science (AREA)

- Physics & Mathematics (AREA)

- Data Mining & Analysis (AREA)

- Life Sciences & Earth Sciences (AREA)

- Artificial Intelligence (AREA)

- General Physics & Mathematics (AREA)

- General Engineering & Computer Science (AREA)

- Evolutionary Computation (AREA)

- Bioinformatics & Computational Biology (AREA)

- Computational Linguistics (AREA)

- Computer Vision & Pattern Recognition (AREA)

- Bioinformatics & Cheminformatics (AREA)

- Health & Medical Sciences (AREA)

- Biomedical Technology (AREA)

- Biophysics (AREA)

- Evolutionary Biology (AREA)

- General Health & Medical Sciences (AREA)

- Molecular Biology (AREA)

- Computing Systems (AREA)

- Mathematical Physics (AREA)

- Software Systems (AREA)

- Image Analysis (AREA)

Abstract

Description

Technical Field

The present invention relates to the field of image processing technology, and in particular to a deep-learning-based image recognition method and related apparatus.

Background

Image recognition is mostly performed with deep-learning image classification algorithms, which extract the features of the image to be recognized using pooling layers or multiple convolutional layers. To improve classification accuracy, related techniques commonly apply downsampling to enlarge the receptive field and thereby increase the semantic information in the feature maps. However, enlarging the receptive field in this way causes detailed information in the feature maps to be lost.

To address this problem, feature pyramids are now widely used: features of the image to be recognized are extracted under different receptive fields by multiple convolution kernels, and the features from the different receptive fields are then fused. Although this approach can restore some of the detail information in the feature maps, it greatly increases the number of parameters of the neural network model, which slows the network's convergence.

Summary of the Invention

Embodiments of the present application provide a deep-learning-based image recognition method and related apparatus that perform multiple rounds of convolution on the feature maps to be processed using dilation factors of different values, so that feature recognition results obtained under different receptive fields are fused. This reduces the number of parameters of the neural network model and speeds up its convergence.
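To see how the dilation factor alone changes the receptive field, the sketch below computes the span covered by stacked stride-1 dilated convolutions. It assumes a 3 × 3 kernel, stride 1, and a hypothetical sequence of first dilation factors 2, 4, 6, 8; the application does not fix these values, and the function names are illustrative.

```python
from typing import List


def effective_kernel(k: int, d: int) -> int:
    """Span (in pixels) covered by one k x k kernel with dilation d."""
    return k + (k - 1) * (d - 1)


def stacked_receptive_field(k: int, dilations: List[int]) -> int:
    """Receptive field after stacking stride-1 k x k convolutions, one per round.

    Each round widens the field by (effective kernel - 1); 1 x 1 kernels add nothing.
    """
    rf = 1
    for d in dilations:
        rf += effective_kernel(k, d) - 1
    return rf


if __name__ == "__main__":
    rounds = [2, 4, 6, 8]  # hypothetical first dilation factors, one per round
    for n in range(1, len(rounds) + 1):
        print(f"after round {n}: receptive field {stacked_receptive_field(3, rounds[:n])}")
```

Under these assumptions the rounds reach receptive fields of 5, 13, 25 and 41 pixels, while each 3 × 3 kernel still holds only 9 weights per channel.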

In a first aspect, an embodiment of the present application provides a deep-learning-based image recognition method, the method comprising:

performing feature extraction on an image to be recognized to obtain a plurality of feature maps to be processed that represent different dimensions of the image, wherein all the feature maps to be processed have the same size;

performing multiple rounds of a first convolution operation on each feature map to be processed based on preset first dilation factors, and determining, from the feature recognition results obtained in each round, a first total feature map corresponding to each feature map to be processed, wherein each round of the first convolution operation uses a different first dilation factor, and first dilation factors of different values correspond to different receptive fields of the feature recognition results;

determining, by a decoder, the preset category to which the first total feature map belongs, and taking the preset category as the recognition result of the image to be recognized; wherein the first convolution operation proceeds as follows:

based on the first dilation factor for the current round, convolving the input with a first convolution kernel to obtain a first sub-feature map corresponding to the input, and convolving the first sub-feature map with a second convolution kernel to obtain the feature recognition result of the input under the receptive field corresponding to that first dilation factor; wherein the input of the first round is the feature maps to be processed, the input of every subsequent round is the feature recognition result of the previous round, and the first and second convolution kernels differ in size.
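Read together with the per-picture and summation steps described for the two kernels later in this section, one round of the first convolution operation resembles a depthwise-separable dilated convolution: a k × k first kernel applied to each map separately at the round's dilation factor, followed by a second kernel that adds values across maps at each pixel. The pure-Python sketch below follows that reading and favors clarity over speed. The 1 × 1 size of the second kernel, zero padding that preserves the map size, and all function names are assumptions, not details given by the application.

```python
from typing import List, Sequence, Tuple

Map = List[List[float]]  # one H x W feature map


def dilated_per_map_conv(maps: Sequence[Map], kernels: Sequence[Map], d: int) -> List[Map]:
    """First kernel: filter each map with its own k x k kernel at dilation d.

    Uses cross-correlation (as deep-learning frameworks do), stride 1 and zero
    padding of d * (k - 1) // 2 so each output keeps the input's H x W (odd k assumed).
    """
    out = []
    for m, ker in zip(maps, kernels):
        h, w, k = len(m), len(m[0]), len(ker)
        pad = d * (k - 1) // 2
        res = [[0.0] * w for _ in range(h)]
        for y in range(h):
            for x in range(w):
                total = 0.0
                for i in range(k):
                    for j in range(k):
                        yy, xx = y + i * d - pad, x + j * d - pad
                        if 0 <= yy < h and 0 <= xx < w:
                            total += ker[i][j] * m[yy][xx]
                res[y][x] = total
        out.append(res)
    return out


def pointwise_conv(maps: Sequence[Map], weights: Sequence[Sequence[float]]) -> List[Map]:
    """Second kernel (assumed 1 x 1): each output map adds up the input maps'
    values at the same pixel position, weighted by one row of `weights`."""
    h, w = len(maps[0]), len(maps[0][0])
    return [
        [[sum(wc * m[y][x] for wc, m in zip(row, maps)) for x in range(w)] for y in range(h)]
        for row in weights
    ]


Round = Tuple[Sequence[Map], Sequence[Sequence[float]], int]  # (per-map kernels, 1x1 weights, dilation)


def first_conv_rounds(maps: Sequence[Map], rounds: Sequence[Round]) -> List[Map]:
    """Apply the rounds in order; each round's result is the next round's input."""
    current = list(maps)
    for per_map_kernels, pw_weights, dilation in rounds:
        current = pointwise_conv(dilated_per_map_conv(current, per_map_kernels, dilation), pw_weights)
    return current


if __name__ == "__main__":
    impulse = [[1.0 if (y, x) == (3, 3) else 0.0 for x in range(7)] for y in range(7)]
    ones = [[1.0] * 3 for _ in range(3)]
    out = first_conv_rounds([impulse], [([ones], [[1.0], [2.0]], 2)])
    print([(y, x) for y in range(7) for x in range(7) if out[0][y][x]])
```

With dilation 2, a single impulse spreads to a 3 × 3 grid of pixels spaced two apart, which shows how the dilated kernel samples a wider area without extra weights.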

In this embodiment, feature extraction is performed on the image to be recognized to obtain a plurality of feature maps to be processed. Multiple rounds of the first convolution operation are applied to each feature map based on the preset first dilation factors, and the first total feature map for each feature map is determined from the feature recognition results of each round. The decoder then determines the preset category of the first total feature map, which gives the recognition result of the image. Because each round uses a different first dilation factor, and different dilation factors correspond to different receptive fields, the feature recognition result of each round lies in a different receptive field. Since the result of each round is the input of the next, the last round yields a first total feature map that fuses the features obtained under the different receptive fields. This process substantially reduces the number of parameters of the neural network model and speeds up its convergence.
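The parameter saving claimed here can be sanity-checked with simple counting. The sketch below is an illustration under assumptions, not the application's own figures: it takes the depthwise-separable reading of the first and second kernels (a k × k kernel per input map plus 1 × 1 kernels across maps), ignores biases, and uses 512 maps purely as an example width. Dilation adds no weights at all; it only spreads the existing ones.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Full k x k convolution: each of c_out kernels spans all c_in maps."""
    return k * k * c_in * c_out


def separable_conv_params(k: int, c_in: int, c_out: int) -> int:
    """k x k kernel per input map (first kernel) + c_out kernels of 1 x 1 x c_in (second kernel)."""
    return k * k * c_in + c_in * c_out


if __name__ == "__main__":
    c = 512  # example channel count, not taken from the application
    # A 3 x 3 kernel at dilation 8 spans 17 x 17 pixels with the same 9 weights per channel pair.
    print("standard 17x17:", standard_conv_params(17, c, c))
    print("standard 3x3:  ", standard_conv_params(3, c, c))
    print("separable 3x3: ", separable_conv_params(3, c, c))
```

With these example sizes the separable block needs 266,752 weights, versus 2,359,296 for a standard 3 × 3 convolution and 75,759,616 for a dense 17 × 17 kernel covering the same span as a 3 × 3 kernel at dilation 8.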

In some possible embodiments, the first and second convolution kernels have the same preset dimension, and after obtaining the plurality of feature maps to be processed that represent different dimensions of the image, the method further comprises:

before performing the multiple rounds of the first convolution operation, performing dimension processing on each feature map to be processed so that its dimension equals the preset dimension.
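One common way to perform this kind of dimension processing is a 1 × 1 convolution that maps the C extracted feature maps onto the preset number P of maps while leaving height and width unchanged. The application does not say which operation it uses, so the sketch below, including its name and weight layout, is an assumption.

```python
from typing import List, Sequence

Map = List[List[float]]


def project_to_preset_dim(maps: Sequence[Map], weights: Sequence[Sequence[float]]) -> List[Map]:
    """1 x 1 convolution used as a channel projection.

    len(weights) is the preset dimension P; each row holds one weight per input map,
    and output map p is the weighted sum of all input maps at each pixel.
    """
    if any(len(row) != len(maps) for row in weights):
        raise ValueError("each weight row needs exactly one entry per input map")
    h, w = len(maps[0]), len(maps[0][0])
    return [
        [[sum(wt * m[y][x] for wt, m in zip(row, maps)) for x in range(w)] for y in range(h)]
        for row in weights
    ]


if __name__ == "__main__":
    maps = [[[float(c)] * 2 for _ in range(2)] for c in (1, 2, 3)]  # C = 3 maps, each 2 x 2
    projected = project_to_preset_dim(maps, [[1.0, 0.0, 0.0], [1.0, 1.0, 1.0]])  # P = 2
    print(len(projected), projected[1])
```

Because only the number of maps changes, the projected maps can be passed straight into the first round of the first convolution operation.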

In this embodiment, the first and second convolution kernels share the same preset dimension, so each feature map to be processed is brought to that dimension before the rounds of the first convolution operation. The sub-feature map produced by the first convolution kernel can then be fed directly to the second convolution kernel, which increases processing speed.

In some possible embodiments, the number of second convolution kernels is greater than the number of first convolution kernels, and convolving the input with the first convolution kernel comprises:

convolving each picture in the input separately to obtain a first sub-feature map for each picture, wherein a picture is either a feature map to be processed or a feature recognition result;

and convolving the first sub-feature map with the second convolution kernel comprises:

convolving each picture in the input and, during the convolution, adding the pixel values at the same position across the pictures, to obtain a feature extraction result corresponding to all the pictures in the input.

In this embodiment, there are more second convolution kernels than first convolution kernels, so convolving the first sub-feature maps output by the first kernels with the second kernels yields multiple feature recognition results for the first sub-feature maps in different dimensions, enriching the detail information of the image.

In some possible embodiments, determining, by the decoder, the preset category to which the first total feature map belongs comprises:

controlling a regression head to perform a second convolution operation on the first total feature map based on a second dilation factor, to determine the region of interest corresponding to the first total feature map; and

controlling a classification head to perform a third convolution operation on the first total feature map based on the second dilation factor, to determine a classification detection result for the first total feature map, wherein the second dilation factor differs in value from the first dilation factor;

if the classification detection result indicates that the first total feature map is a recognizable image, performing category recognition on the region of interest of the first total feature map and determining, from the recognition result, the preset category to which the first total feature map belongs; otherwise, outputting a prompt indicating that the image cannot be recognized.
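The branching in this step can be summarized in a few lines. In the sketch below, the score threshold, the (x1, y1, x2, y2) box format, the function name, and the assumption that `class_scores` come from classifying the region of interest are all hypothetical; the application only states that a recognizable map is classified and an unrecognizable one triggers a prompt.

```python
from typing import Optional, Sequence, Tuple

Box = Tuple[float, float, float, float]  # hypothetical (x1, y1, x2, y2) region of interest


def decide(detection_score: float, roi: Box, class_scores: Sequence[float],
           labels: Sequence[str], threshold: float = 0.5) -> Optional[Tuple[str, Box]]:
    """Return (preset category, ROI) if the map is judged recognizable, else None.

    detection_score: classification-detection output of the classification head.
    roi: region of interest from the regression head.
    class_scores: scores from classifying the ROI, one per preset category.
    """
    if detection_score < threshold:
        return None  # caller outputs the "image cannot be recognized" prompt
    best = max(range(len(labels)), key=lambda i: class_scores[i])
    return labels[best], roi


if __name__ == "__main__":
    result = decide(0.9, (4.0, 4.0, 20.0, 18.0), [0.1, 0.7, 0.2], ["cat", "dog", "bird"])
    print(result if result is not None else "image cannot be recognized")
```

Returning `None` rather than a message string keeps the prompt text in the caller, so the same decision function can serve a UI or a batch job.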

In this embodiment, the regression head determines the region of interest of the first total feature map and the classification head determines its classification detection result. When the classification detection result indicates that the first total feature map is a recognizable image, category recognition is performed on the region of interest to determine the preset category to which it belongs. The regression head and classification head run in parallel, which increases processing speed.

In some possible embodiments, controlling the regression head to perform the second convolution operation on the first total feature map based on the second dilation factor, to determine the corresponding region of interest, comprises:

based on the second dilation factor, convolving the first total feature map with a third convolution kernel to obtain a second sub-feature map under the receptive field corresponding to the second dilation factor, wherein the third convolution kernel has the same size as the first convolution kernel;

convolving the second sub-feature map with a fourth convolution kernel to obtain a second total feature map corresponding to the second sub-feature map, and convolving the second total feature map with a fifth convolution kernel to obtain the region of interest; wherein the fourth convolution kernel has the same size as the second convolution kernel, the number of fourth convolution kernels is greater than the number of third convolution kernels, and the fifth convolution kernel has the same size as the first convolution kernel.

In this embodiment, the regression head convolves the first total feature map with the third convolution kernel based on the second dilation factor to obtain the second sub-feature map under the corresponding receptive field, then convolves that map with the fourth convolution kernel to obtain a second total feature map representing the fused features of the second sub-feature map, and finally uses the fifth convolution kernel, which is capable of identifying regions of interest, to determine the region of interest from the second total feature map. This improves the accuracy of region-of-interest selection.

In some possible embodiments, controlling the classification head to perform the third convolution operation on the first total feature map based on the second dilation factor, to determine its classification detection result, comprises:

based on the second dilation factor, convolving the first total feature map with a sixth convolution kernel to obtain a third sub-feature map under the receptive field corresponding to the second dilation factor, wherein the sixth convolution kernel has the same size as the first convolution kernel;

convolving the third sub-feature map with a seventh convolution kernel to obtain a third total feature map corresponding to the third sub-feature map, and convolving the third total feature map with an eighth convolution kernel to obtain the classification detection result; wherein the sixth and eighth convolution kernels both have the same size as the first convolution kernel, the seventh convolution kernel has the same size as the second convolution kernel, and the number of seventh convolution kernels is greater than the number of sixth convolution kernels.

In this embodiment, the classification head convolves the first total feature map with the sixth convolution kernel based on the second dilation factor to obtain the third sub-feature map under the corresponding receptive field, then convolves it with the seventh convolution kernel to obtain a third total feature map representing the fused features of the third sub-feature map, and finally uses the eighth convolution kernel, which performs classification detection, to select the third total feature maps that the neural network model can recognize. This improves recognition accuracy.
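The regression and classification heads share the same three-step shape and differ only in their output. The bookkeeping sketch below lists each step and counts its weights under stated assumptions: the third/sixth kernels are 3 × 3 per-map dilated kernels, the fourth/seventh are 1 × 1 kernels across maps, the fifth/eighth are ordinary 3 × 3 convolutions, and the widths (512 input maps, 4 box values or 80 class scores per location, second dilation factor 3) are illustrative only. Counting individual 2-D kernels, this reading also gives the 1 × 1 step more kernels (512 × 512) than the per-map step (512), which is consistent with the stated kernel counts.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ConvStep:
    name: str
    k: int          # kernel height/width
    dilation: int
    c_in: int
    c_out: int
    per_map: bool = False  # True: one k x k kernel per input map, no mixing across maps

    def params(self) -> int:
        # Biases ignored. Per-map kernels see one map each; the others span all c_in maps.
        return self.k * self.k * (1 if self.per_map else self.c_in) * self.c_out


def head(prefix: str, c: int, dilation: int, out_channels: int) -> List[ConvStep]:
    """Hypothetical layout of one decoder head (e.g. third/fourth/fifth kernels)."""
    return [
        ConvStep(f"{prefix} dilated 3x3 per map", 3, dilation, c, c, per_map=True),
        ConvStep(f"{prefix} 1x1 across maps", 1, 1, c, c),
        ConvStep(f"{prefix} output 3x3", 3, 1, c, out_channels),
    ]


if __name__ == "__main__":
    second_dilation = 3  # hypothetical second dilation factor
    for prefix, out in (("regression", 4), ("classification", 80)):
        steps = head(prefix, 512, second_dilation, out)
        for s in steps:
            print(f"{s.name:<36} dilation={s.dilation} params={s.params()}")
        print(f"{prefix} head total: {sum(s.params() for s in steps)}")
```

Under these assumptions the regression head holds 285,184 weights and the classification head 635,392, most of them in the 1 × 1 step and the output convolution rather than in the dilated kernel.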

In some possible embodiments, the classification head and the regression head of the decoder each include at least one dilated convolution block.

By performing multiple rounds of convolution on the feature maps to be processed with dilation factors of different values, the present application fuses feature recognition results under different receptive fields without the multi-scale feature fusion of a feature pyramid, and so avoids the feature loss caused by unequal feature weights during fusion. As a result, the decoder needs only one regression head and one classification head to ensure the recognition accuracy of the classification result.

In a second aspect, an embodiment of the present application provides a deep-learning-based image recognition apparatus, the apparatus comprising:

a feature extraction module configured to perform feature extraction on an image to be recognized to obtain a plurality of feature maps to be processed that represent different dimensions of the image, wherein all the feature maps to be processed have the same size;

a feature fusion module configured to perform multiple rounds of a first convolution operation on each feature map to be processed based on preset first dilation factors, and to determine, from the feature recognition results of each round, a first total feature map corresponding to each feature map to be processed, wherein each round uses a different first dilation factor, and first dilation factors of different values correspond to different receptive fields of the feature recognition results;

an image recognition module configured to determine, by a decoder, the preset category to which the first total feature map belongs, and to take the preset category as the recognition result of the image to be recognized; wherein the first convolution operation proceeds as follows:

based on the first dilation factor for the current round, convolving the input with a first convolution kernel to obtain a first sub-feature map corresponding to the input, and convolving the first sub-feature map with a second convolution kernel to obtain the feature recognition result of the input under the receptive field corresponding to that first dilation factor; wherein the input of the first round is the feature maps to be processed, the input of every subsequent round is the feature recognition result of the previous round, and the first and second convolution kernels differ in size.

In some possible embodiments, the first and second convolution kernels have the same preset dimension, and after obtaining the plurality of feature maps to be processed that represent different dimensions of the image, the feature extraction module is further configured to:

before performing the multiple rounds of the first convolution operation, perform dimension processing on each feature map to be processed so that its dimension equals the preset dimension.

In some possible embodiments, the number of second convolution kernels is greater than the number of first convolution kernels, and to convolve the input with the first convolution kernel, the feature fusion module is configured to:

convolve each picture in the input separately to obtain a first sub-feature map for each picture, wherein a picture is either a feature map to be processed or a feature recognition result;

and to convolve the first sub-feature map with the second convolution kernel, the feature fusion module is configured to:

convolve each picture in the input and, during the convolution, add the pixel values at the same position across the pictures, to obtain a feature extraction result corresponding to all the pictures in the input.

In some possible embodiments, to determine, by the decoder, the preset category to which the first total feature map belongs, the image recognition module is configured to:

control a regression head to perform a second convolution operation on the first total feature map based on a second dilation factor, to determine the region of interest corresponding to the first total feature map; and

control a classification head to perform a third convolution operation on the first total feature map based on the second dilation factor, to determine a classification detection result for the first total feature map, wherein the second dilation factor differs in value from the first dilation factor;

if the classification detection result indicates that the first total feature map is a recognizable image, perform category recognition on the region of interest of the first total feature map and determine, from the recognition result, the preset category to which the first total feature map belongs.

In some possible embodiments, to control the regression head to perform the second convolution operation on the first total feature map based on the second dilation factor and determine the corresponding region of interest, the image recognition module is configured to:

based on the second dilation factor, convolve the first total feature map with a third convolution kernel to obtain a second sub-feature map under the receptive field corresponding to the second dilation factor, wherein the third convolution kernel has the same size as the first convolution kernel;

convolve the second sub-feature map with a fourth convolution kernel to obtain a second total feature map corresponding to the second sub-feature map, and convolve the second total feature map with a fifth convolution kernel to obtain the region of interest; wherein the third and fifth convolution kernels both have the same size as the first convolution kernel, the fourth convolution kernel has the same size as the second convolution kernel, and the number of fourth convolution kernels is greater than the number of third convolution kernels.

In some possible embodiments, to control the classification head to perform the third convolution operation on the first total feature map based on the second dilation factor and determine its classification detection result, the image recognition module is configured to:

based on the second dilation factor, convolve the first total feature map with a sixth convolution kernel to obtain a third sub-feature map under the receptive field corresponding to the second dilation factor, wherein the sixth convolution kernel has the same size as the first convolution kernel;

convolve the third sub-feature map with a seventh convolution kernel to obtain a third total feature map corresponding to the third sub-feature map, and convolve the third total feature map with an eighth convolution kernel to obtain the classification detection result; wherein the sixth and eighth convolution kernels both have the same size as the first convolution kernel, the seventh convolution kernel has the same size as the second convolution kernel, and the number of seventh convolution kernels is greater than the number of sixth convolution kernels.

In some possible embodiments, the classification head and the regression head of the decoder each include at least one dilated convolution block.

In a third aspect, an embodiment of the present application further provides an electronic device, comprising:

a processor; and

a memory for storing instructions executable by the processor;

wherein the processor is configured to execute the instructions to implement any of the methods provided in the first aspect of the present application.

In a fourth aspect, an embodiment of the present application further provides a computer-readable storage medium; when the instructions in the medium are executed by a processor of an electronic device, the electronic device is enabled to perform any of the methods provided in the first aspect of the present application.

In a fifth aspect, an embodiment of the present application provides a computer program product comprising a computer program which, when executed by a processor, implements any of the methods provided in the first aspect of the present application.

Other features and advantages of the present application will be set forth in the description that follows, and in part will be apparent from the description or may be learned by practicing the application. The objectives and other advantages of the application may be realized and attained by the structures particularly pointed out in the written description, the claims, and the drawings.

Description of Drawings

To illustrate the technical solutions of the embodiments more clearly, the drawings used in the embodiments are briefly introduced below. Obviously, the drawings described below show only some embodiments of the present application; a person of ordinary skill in the art may derive other drawings from them without creative effort.

FIG. 1 is a schematic diagram of a feature pyramid network (FPN) according to an embodiment of the present application;

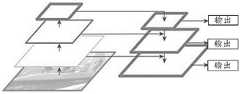

FIG. 2 is a schematic diagram of the Yolof network structure according to an embodiment of the present application;

FIG. 3a is an overall flowchart of the deep learning-based image recognition method according to an embodiment of the present application;

FIG. 3b is a schematic diagram of the neural network structure of the present application according to an embodiment of the present application;

FIG. 4 is a structural diagram of a deep learning-based image recognition apparatus 400 according to an embodiment of the present application;

FIG. 5 is a schematic diagram of an electronic device according to an embodiment of the present application.

Detailed Description

The technical solutions in the embodiments of the present application are described clearly and in detail below with reference to the accompanying drawings. In the description of the embodiments, unless otherwise specified, "/" means "or"; for example, A/B may mean A or B. The term "and/or" merely describes an association between associated objects and indicates that three relationships may exist: for example, A and/or B may mean that A exists alone, that both A and B exist, or that B exists alone. In addition, in the description of the embodiments, "plurality" means two or more.

In the description of the embodiments of the present application, unless otherwise specified, the term "plurality" means two or more, and other quantifiers are to be understood similarly. It should be understood that the preferred embodiments described herein are only used to illustrate and explain the present application and are not intended to limit it; where no conflict arises, the embodiments of the present application and the features in the embodiments may be combined with each other.

To further illustrate the technical solutions provided by the embodiments of the present application, a detailed description is given below with reference to the accompanying drawings and specific implementations. Although the embodiments provide the method steps shown in the following embodiments or drawings, the method may include more or fewer steps based on routine practice or without creative effort. For steps that have no logically necessary causal relationship, the execution order is not limited to the order provided in the embodiments. In actual processing, or when executed by a control device, the method may be performed sequentially or in parallel according to the order shown in the embodiments or drawings.

As mentioned above, to improve classification accuracy, the related art often uses downsampling to enlarge the receptive field and thereby enrich the semantic information of the feature image. However, enlarging the receptive field in this way causes the loss of detailed information in the feature image.

The related art mostly adopts a feature pyramid: multiple convolution kernels extract features of the image to be recognized under different receptive fields, and the features from the different receptive fields are then fused. Specifically, FIG. 1 shows the network structure of the feature pyramid network (FPN). As shown in FIG. 1, the FPN contains a bottom-up convolution pathway (left side of FIG. 1) and a top-down convolution pathway (right side of FIG. 1). High-level features carry more semantic information, whereas low-level features carry less semantic information but more positional information. By fusing each left-side feature map with its adjacent feature map through top-down lateral connections, FPN combines low-resolution, semantically strong high-level features with high-resolution, semantically weak low-level features, so that features at all scales carry rich semantic information and the detail of the feature images is improved. Concretely, for each pair of adjacent feature levels, the higher-level feature is upsampled by a factor of 2 (that is, starting from the pixels of the original image, a suitable interpolation algorithm inserts new elements between pixels, doubling the image size). The lower-level feature passes through a 1×1 convolution that changes its number of channels, and the upsampled result and the 1×1 convolution result are then added element-wise.
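The merge step described above can be sketched in plain Python. This is a minimal illustration, not the FPN reference implementation: feature maps are nested lists shaped [channels][height][width], nearest-neighbour repetition stands in for the unspecified interpolation algorithm, and the 1×1 weights are made-up example values.

```python
def upsample2x_nearest(fmap):
    """Double H and W by repeating each pixel (nearest-neighbour interpolation)."""
    out = []
    for channel in fmap:
        rows = []
        for row in channel:
            wide = [v for v in row for _ in range(2)]  # repeat each column
            rows.append(wide)
            rows.append(list(wide))                     # repeat each row
        out.append(rows)
    return out


def conv1x1(fmap, weights):
    """1x1 convolution: weights[o][i] scales input channel i into output channel o."""
    h, w = len(fmap[0]), len(fmap[0][0])
    return [
        [[sum(weights[o][i] * fmap[i][y][x] for i in range(len(fmap)))
          for x in range(w)]
         for y in range(h)]
        for o in range(len(weights))
    ]


def fpn_merge(high, low, lateral_weights):
    """Upsample the coarse high-level map, project the fine low-level map, add element-wise."""
    up = upsample2x_nearest(high)
    lateral = conv1x1(low, lateral_weights)
    return [
        [[u + l for u, l in zip(urow, lrow)] for urow, lrow in zip(uch, lch)]
        for uch, lch in zip(up, lateral)
    ]


# High level: 1 channel, 1x1. Low level: 2 channels, 2x2. The 1x1 conv maps 2 -> 1 channel.
high = [[[1.0]]]
low = [[[1.0, 2.0], [3.0, 4.0]],
       [[0.0, 0.0], [1.0, 1.0]]]
merged = fpn_merge(high, low, lateral_weights=[[1.0, 1.0]])
print(merged)  # [[[2.0, 3.0], [5.0, 6.0]]]
```

The 1×1 convolution is what makes the element-wise addition shape-compatible: it brings the low-level map to the same channel count as the upsampled high-level map.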

The advantage of this approach is that it extracts features at every image scale, producing multi-scale feature images that all carry strong semantic information. Its drawback is that the model has many parameters and occupies a large amount of memory.

To solve the above problems, the inventive concept of the present application is as follows. Feature extraction is performed on the image to be recognized to obtain multiple feature maps to be processed corresponding to that image. Multiple rounds of first convolution operations are performed on each feature map to be processed based on preset first dilation factors, and the first total feature map corresponding to each feature map to be processed is determined from the feature recognition results of the rounds. A decoder determines the preset category of the first total feature map, which gives the recognition result of the image to be recognized. Because each round of the first convolution operation uses a different first dilation factor, and different first dilation factor values correspond to different receptive fields, the feature recognition result of each round lies in a different receptive field. Moreover, the feature recognition result of each round serves as the input to the next round, so the last round yields a first total feature map that fuses the feature results of different receptive fields. This process greatly reduces the number of parameters of the neural network model and speeds up model convergence.

To facilitate understanding of the technical solution provided by the present application, the Yolof neural network is first briefly described, as shown in FIG. 2:

FIG. 2 shows the network structure of the Yolof neural network, which consists of a feature extraction backbone (Backbone), an encoder (Encoder), and a decoder (Decoder). The Backbone performs feature extraction on the image to be recognized to obtain its feature image.

In this network structure, the Backbone output comes in two versions: one is a feature image downsampled by a factor of 32 relative to the input image with 2048 channels, shown as C5 in FIG. 2; the other is a feature image downsampled by a factor of 16 relative to the input image with 2048 channels, shown as DC5 in FIG. 2.

The feature image is then fed into the Encoder; this network structure uses a dilated encoder (Dilated Encoder) as the Encoder. The Dilated Encoder takes the Backbone output as input, first applies a 1x1 convolution and a 3x3 convolution to reduce the number of feature channels to 512, and then extracts features with 4 consecutive residual blocks. In each residual block, a 1x1 convolution first reduces the number of channels to 1/4 of the original, a 3x3 dilated (atrous) convolution then enlarges the receptive field, and a final 1x1 convolution expands the number of channels by a factor of 4. The dilation factors of the dilated convolutions in the 4 residual blocks are 2, 4, 6, and 8, respectively. In this way, after 4 rounds of dilated convolution (one round per dilation factor), the fused features of the feature image under different receptive fields are obtained.
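To see why dilation factors 2, 4, 6, and 8 yield features under different receptive fields, the sketch below computes the receptive field (in feature-map cells) after each stacked 3x3 dilated convolution, using the standard span formula for a dilated kernel. It assumes stride 1 throughout, counts only the 3x3 layers (1x1 convolutions do not enlarge the receptive field), and reports the largest path, ignoring the residual shortcuts that also carry smaller receptive fields forward.

```python
def dilated_span(k, d):
    """Width covered by a k-tap kernel with dilation d: k + (k - 1) * (d - 1)."""
    return k + (k - 1) * (d - 1)


def stacked_receptive_field(dilations, k=3):
    """Receptive field, in feature-map cells, after each stride-1 dilated k x k layer."""
    rf = 1
    history = []
    for d in dilations:
        rf += dilated_span(k, d) - 1  # each layer widens the field by (span - 1)
        history.append(rf)
    return history


print(stacked_receptive_field([2, 4, 6, 8]))  # [5, 13, 25, 41]
```

Because each residual block adds its output to its input, the final encoder output mixes contributions from all of these receptive fields rather than only the largest one.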

Finally, the fused features are fed into the Decoder. In the Yolof Decoder, the regression head (Conv-BN-ReLU) contains 4 dilated convolution blocks and the classification head (Conv-BN-ReLU) contains 2 dilated convolution blocks; the number of dilated convolution blocks equals the number of dilated convolution operations to be performed. Each anchor in the regression head has an objectness prediction (that is, a region of interest), and the final classification score is obtained by multiplying the output of the classification branch by the objectness prediction. In other words, the regression head determines the regions of interest in the fused features, and the classification head classifies those regions of interest to obtain the category of the input image.
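The multiplication of the classification output by the objectness prediction can be sketched as follows. The text does not say what form the two outputs take; this sketch assumes both are logits mapped to probabilities with a sigmoid, and also shows an algebraically equivalent logit-space form, c + o - log(1 + e^c + e^o), whose sigmoid equals the product of the two sigmoids.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def score_product(cls_logit, obj_logit):
    """Final score as class probability times objectness probability."""
    return sigmoid(cls_logit) * sigmoid(obj_logit)


def score_logit(cls_logit, obj_logit):
    """Logit whose sigmoid equals score_product.

    Fine for moderate logits; very large values would need a stable log-sum-exp.
    """
    return cls_logit + obj_logit - math.log1p(math.exp(cls_logit) + math.exp(obj_logit))


c, o = 2.0, -0.5
print(score_product(c, o))
print(math.isclose(sigmoid(score_logit(c, o)), score_product(c, o)))  # True
```

A low objectness value therefore suppresses the class score of an anchor even when its class logit is high.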

The feature fusion in the Yolof encoder uses only a single level of features for detection and does not need the multi-level feature detection of the FPN shown in FIG. 1, so its memory footprint is much lower than that of FPN. Building on this, the present application adopts the Yolof network structure and uses convolution kernels of different sizes to perform multiple rounds of convolution on the feature maps to be processed, obtaining a first total feature map that fuses the feature results of different receptive fields. Compared with Yolof, the number of parameters required for this computation is greatly reduced, which improves the model convergence rate.

A deep learning-based image recognition method provided by the embodiments of the present application is described in detail below with reference to the accompanying drawings. As shown in FIG. 3a, the method includes the following steps:

Step 301: Perform feature extraction on the image to be recognized to obtain multiple feature maps to be processed that represent different dimensions of the image to be recognized, where all feature maps to be processed have the same size.

In the embodiments of the present application, RegNetX-400MF is used as the basis of the feature extraction network, which contains 4 modules. The feature map output by each module is half the size of the feature map output by the previous module, and downsampling is achieved by increasing the convolution stride. The modules are built from cascades of [1, 2, 7, 12] blocks respectively, forming a residual-like network structure. Each convolutional layer is followed by a batch normalization layer (Batch Normalization) and a rectified linear unit layer (ReLU): the Batch Normalization layer normalizes the output, and the ReLU layer adds nonlinearity to the output of the preceding layer. The feature dimension of RegNetX-400MF is 384. That is, feature extraction on N images to be recognized yields a feature tensor of shape (N, 384, H, W), where H and W are the height and width of the feature map; in other words, N 384-dimensional feature maps to be processed, each of size H×W.
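As a rough check of the shapes involved, the sketch below tracks the spatial size through four stride-2 modules and builds the resulting (N, 384, H, W) shape. It assumes each downsampling convolution uses a 3×3 kernel with padding 1 (so each module computes ceil(n / 2)); the 400×400 input to the first module is a hypothetical example. Neither detail is specified above.

```python
import math


def stage_output_sizes(h, w, num_stages=4):
    """Spatial size after each stride-2 module.

    With kernel 3, stride 2, padding 1: out = floor((n + 2 - 3) / 2) + 1 = ceil(n / 2).
    """
    sizes = []
    for _ in range(num_stages):
        h, w = math.ceil(h / 2), math.ceil(w / 2)
        sizes.append((h, w))
    return sizes


def backbone_output_shape(n, h, w, channels=384, num_stages=4):
    """Shape (N, C, H, W) of the last module's output."""
    final_h, final_w = stage_output_sizes(h, w, num_stages)[-1]
    return (n, channels, final_h, final_w)


print(stage_output_sizes(400, 400))        # [(200, 200), (100, 100), (50, 50), (25, 25)]
print(backbone_output_shape(8, 400, 400))  # (8, 384, 25, 25)
```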

Step 302: Perform multiple rounds of first convolution operations on each feature map to be processed based on preset first dilation factors, and determine the first total feature map corresponding to each feature map to be processed from the feature recognition results of the rounds; each round of the first convolution operation uses a different first dilation factor, and different first dilation factor values correspond to different receptive fields of the feature recognition results.

Then determine, by a decoder, the preset category to which the first total feature map belongs, and use that preset category as the recognition result of the image to be recognized. The first convolution operation proceeds as follows:

Based on the first dilation factor of the current round, a first convolution kernel is applied to the input to obtain a first sub-feature map of the input; a second convolution kernel is then applied to the first sub-feature map to obtain the feature recognition result of the input under the receptive field corresponding to that first dilation factor. The input to the first round is the feature maps to be processed, and the input to every later round is the feature recognition result of the previous round. The first convolution kernel and the second convolution kernel have different sizes.
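One reading of the first convolution operation, consistent with the parameter counts given later (128x3x3 for the first kernel and 128x128x1x1 for the second), is a dilated depthwise 3×3 convolution (one kernel per channel) followed by a 1×1 pointwise convolution that sums across channels. The pure-Python sketch below assumes that reading, zero padding equal to the dilation so that H and W are preserved, and no bias, normalization, or activation; it is illustrative only.

```python
def dilated_conv3x3_depthwise(fmap, kernels, dilation):
    """First kernel: one 3x3 filter per channel, taps spaced `dilation` apart.

    fmap: [C][H][W]; kernels: [C][3][3]. Out-of-range taps read zero padding.
    """
    out = []
    for ch, k in zip(fmap, kernels):
        h, w = len(ch), len(ch[0])
        res = [[0.0] * w for _ in range(h)]
        for y in range(h):
            for x in range(w):
                acc = 0.0
                for i in range(3):
                    for j in range(3):
                        yy = y + (i - 1) * dilation
                        xx = x + (j - 1) * dilation
                        if 0 <= yy < h and 0 <= xx < w:
                            acc += k[i][j] * ch[yy][xx]
                res[y][x] = acc
        out.append(res)
    return out


def pointwise_conv(fmap, weights):
    """Second kernel: 1x1 conv; output channel o = sum_i weights[o][i] * fmap[i]."""
    h, w = len(fmap[0]), len(fmap[0][0])
    return [
        [[sum(weights[o][i] * fmap[i][y][x] for i in range(len(fmap)))
          for x in range(w)]
         for y in range(h)]
        for o in range(len(weights))
    ]


def first_convolution_round(fmap, dw_kernels, pw_weights, dilation):
    """One round: dilated 3x3 per channel, then 1x1 across channels."""
    sub_feature_map = dilated_conv3x3_depthwise(fmap, dw_kernels, dilation)
    return pointwise_conv(sub_feature_map, pw_weights)


# A single-channel 5x5 impulse shows where a dilation-2 kernel samples from.
impulse = [[[1.0 if (y, x) == (2, 2) else 0.0 for x in range(5)] for y in range(5)]]
ones = [[[1.0] * 3 for _ in range(3)]]
out = first_convolution_round(impulse, ones, pw_weights=[[2.0]], dilation=2)
for row in out[0]:
    print(row)
```

The nonzero outputs land on rows and columns 0, 2, and 4, which is the two-cell spacing introduced by a dilation factor of 2; the spatial size stays 5×5.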

In some possible embodiments, because the dimension of the image to be convolved must match the dimension of the convolution kernel, the first and second convolution kernels can be given the same preset dimension. The sub-feature map produced by applying the first convolution kernel to the feature map to be processed can then be convolved directly with the second convolution kernel, which increases processing speed.

Further, before the multiple rounds of first convolution operations, the embodiments of the present application perform dimension processing on each feature map to be processed so that its dimension equals the preset dimension.

Specifically, the feature image extracted by the Yolof feature extraction backbone has shape (N, 512, 25, 25), that is, N 512-dimensional feature images of size 25×25. Because the present application reuses the Yolof dilated convolution architecture here, the input must be a feature map to be processed of shape (N, 512, 25, 25). Therefore, a 1x1 convolution (512, 1, 1) is first used to raise the dimension of the (N, 384, H, W) feature map obtained in step 301 and adjust its size, turning it into a feature map to be processed of shape (N, 512, 25, 25); a standard 3x3 convolution is then applied to this (N, 512, 25, 25) feature map to refine its contextual semantics.
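The dimension processing above relies on two standard facts of convolution arithmetic: a 1×1 convolution changes only the channel count, and a 3×3 convolution with stride 1 and padding 1 keeps the spatial size. The helpers below check both and count the weights of the 384→512 projection and the 512→512 refinement. Bias terms are ignored, the padding values are assumptions, and because the text does not say how the size is adjusted to 25×25, the sketch simply assumes a 25×25 input.

```python
def conv_out_size(n, k, stride=1, padding=0, dilation=1):
    """Output length along one spatial axis (standard convolution arithmetic)."""
    return (n + 2 * padding - dilation * (k - 1) - 1) // stride + 1


def conv_weight_count(c_in, c_out, k):
    """Weights of a dense k x k convolution, bias excluded."""
    return c_in * c_out * k * k


# 1x1 projection 384 -> 512 leaves H and W unchanged.
assert conv_out_size(25, k=1) == 25
# 3x3 refinement with padding 1 leaves H and W unchanged.
assert conv_out_size(25, k=3, padding=1) == 25

print(conv_weight_count(384, 512, 1))  # 196608
print(conv_weight_count(512, 512, 3))  # 2359296
```

The same formula with padding equal to the dilation (for example padding 2 with dilation 2) also returns 25, which is why the later dilated rounds can keep the 25×25 size.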

To obtain the fused features of the feature maps to be processed under different receptive fields, the embodiments of the present application set first dilation factors with several values, each value corresponding to one round of the first convolution operation. Multiple rounds of the first convolution operation on the feature maps to be processed therefore yield a first total feature map that represents the fusion of their features under different receptive fields.

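In the embodiments of the present application, there are more second convolution kernels than first convolution kernels. When performing the first convolution operation of step 302, the first convolution kernel is applied to each picture in the input to obtain the first sub-feature map of that picture, where a picture is either a feature map to be processed or a feature recognition result. The second convolution kernel is then applied to every picture in the first sub-feature map, and during this convolution the pixel values at the same position across the pictures are summed, giving the feature extraction result for all pictures in the input.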

Specifically, the first dilation factors of the present application take the values 2, 4, 6, and 8, so four rounds of the first convolution operation are performed on the feature map to be processed. In the first round, the (N, 512, 25, 25) feature map to be processed obtained above is first reduced by a 1x1 convolution to the required (N, 128, 25, 25); then, with a first dilation factor of 2, a 128-dimensional first convolution kernel of size 3×3 is applied to this feature map, producing a first sub-feature map of shape (N, 128, 25, 25). Taking a single feature map to be processed as an example, the convolution with the first convolution kernel yields its first sub-feature maps in 128 different dimensions (that is, 128 first sub-feature maps).

Next, 128 second convolution kernels, each 128-dimensional and of size 1x1, are applied to the (N, 128, 25, 25) first sub-feature map, and during the convolution the pixel values at the same position across different images are summed, yielding N×128 feature recognition results, i.e. a feature recognition result of shape (N, 128, 25, 25). This completes the first round of the first convolution operation: through the first and second convolution kernels, it produces, for each first sub-feature map across the 128 dimensions, the fused features under the receptive field corresponding to a first dilation factor of 2.

Further, the above convolution with the first and second convolution kernels is repeated with first dilation factors of 4, 6, and 8, respectively. Unlike the first round, every later round takes the feature recognition result of the previous round as its input: the second round takes feature recognition result 1 from the first round and produces feature recognition result 2; the third round takes feature recognition result 2 and produces feature recognition result 3; and the fourth round takes feature recognition result 3 and produces feature recognition result 4. Because the feature recognition result of each round has a different receptive field, feature recognition result 4 from the fourth round fuses the feature recognition results of different receptive fields, and is the first total feature map.
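The chaining of rounds can be illustrated with a simplified one-dimensional sketch: each round's output is fed into the next round, and an impulse input shows how far the influence of a single position spreads. It assumes all-ones 3-tap kernels and zero padding, and omits the 1×1 second kernel because a 1×1 convolution does not change spatial support; it is an illustration, not the application's implementation.

```python
def dilated_conv1d(signal, dilation, kernel=(1.0, 1.0, 1.0)):
    """Stride-1, zero-padded 1D convolution with a 3-tap dilated kernel."""
    n = len(signal)
    out = []
    for x in range(n):
        acc = 0.0
        for j, kj in enumerate(kernel):
            xx = x + (j - 1) * dilation
            if 0 <= xx < n:
                acc += kj * signal[xx]
        out.append(acc)
    return out


def chained_rounds(signal, dilations=(2, 4, 6, 8)):
    """Run the rounds in sequence; record the span between outermost nonzero values."""
    spans = []
    for d in dilations:
        signal = dilated_conv1d(signal, d)  # previous result is this round's input
        nonzero = [i for i, v in enumerate(signal) if v != 0.0]
        spans.append(nonzero[-1] - nonzero[0] + 1)
    return signal, spans


impulse = [0.0] * 61
impulse[30] = 1.0
_, spans = chained_rounds(impulse)
print(spans)  # [5, 13, 25, 41]
```

The growing spans match the receptive field of each successive round, which is the sense in which the last round's output combines information gathered at several receptive fields.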

In the Yolof encoder, each round convolves the (N, 128, 25, 25) feature image with 128 kernels, each 128-dimensional and of size 3×3, using that round's dilation factor, which requires 128x3x3x128 = 147456 parameters per round. In the process above, each round of the first convolution operation of the present application requires only 128x3x3 + 128x128x1x1 = 17536 parameters. Compared with Yolof, this reduces the parameters of each round by a factor of about 8.4, which greatly improves the processing speed of the neural network.
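The per-round parameter counts above can be reproduced directly. The sketch assumes, as the figures imply, that bias terms are excluded and that the first kernel holds one 3×3 filter per channel.

```python
def dense_conv_params(c_in, c_out, k):
    """Weights of a standard k x k convolution (bias excluded)."""
    return c_in * c_out * k * k


def first_round_params(channels, k):
    """First kernel: one k x k filter per channel; second kernel: channels x channels 1x1."""
    return channels * k * k + channels * channels


yolof_round = dense_conv_params(128, 128, 3)
proposed_round = first_round_params(128, 3)
print(yolof_round, proposed_round)             # 147456 17536
print(round(yolof_round / proposed_round, 2))  # 8.41
print(4 * yolof_round, 4 * proposed_round)     # 589824 70144
```

Most of the remaining cost sits in the 1×1 second kernel (16384 of the 17536 weights), so the saving comes almost entirely from replacing the dense 3×3 kernel with a per-channel one.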

In the embodiments of the present application, when the decoder determines the preset category to which the first total feature map belongs, the regression head is controlled to perform a second convolution operation on the first total feature map based on a second dilation factor to determine the region of interest of the first total feature map, and the classification head is controlled to perform a third convolution operation on the first total feature map based on the second dilation factor to determine the classification detection result of the first total feature map.

In implementation, for the regression head: based on the second dilation factor, a third convolution kernel is applied to the first total feature map to obtain a second sub-feature map under the receptive field corresponding to the second dilation factor; a fourth convolution kernel is applied to the second sub-feature map to obtain the corresponding second total feature map; and a fifth convolution kernel is applied to the second total feature map to obtain the region of interest.

Specifically, the (N, 128, 25, 25) first total feature map is taken as the input. Based on a second dilation factor of 1, a 128-dimensional third convolution kernel of size 3×3 is applied to it, giving N×128 second sub-feature maps, i.e. a second sub-feature map of shape (N, 128, 25, 25). This second sub-feature map is convolved with 128 fourth convolution kernels, each 128-dimensional and of size 1×1, giving the second total feature map under the receptive field corresponding to the second dilation factor, whose dimension is then raised to 512. The (N, 512, 25, 25) second total feature map is passed through a 3x3 fifth convolution kernel with weight shape ((4+1)*9, 512, 3, 3), giving an output of shape (N, (4+1)*9, 25, 25). Of this output, the (N, 1*9, 25, 25) part is passed to the classification head for the residual connection, and the remaining (N, 4*9, 25, 25) part outputs the regions of interest. Here "4" denotes the position values of a region of interest, "9" denotes the number of regions of interest, and "1" indicates whether an object is present in the feature map.
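The split of the (4+1)*9 output channels at one spatial position can be sketched as below. The text does not fix the channel order, so the layout used here (9 objectness channels first, then 4 values per anchor) is a hypothetical choice for illustration.

```python
NUM_ANCHORS = 9  # the "9" above
BOX_VALUES = 4   # the "4" above


def split_regression_output(channels):
    """Split the (4 + 1) * 9 output values at one position into objectness and boxes.

    Hypothetical layout: 9 objectness channels first, then 4 box values per anchor.
    """
    if len(channels) != (BOX_VALUES + 1) * NUM_ANCHORS:
        raise ValueError("expected (4 + 1) * 9 = 45 channels")
    objectness = channels[:NUM_ANCHORS]   # the (N, 1*9, 25, 25) part
    boxes = channels[NUM_ANCHORS:]        # the (N, 4*9, 25, 25) part
    per_anchor = [boxes[BOX_VALUES * a:BOX_VALUES * (a + 1)] for a in range(NUM_ANCHORS)]
    return objectness, per_anchor


objectness, boxes = split_regression_output(list(range(45)))
print(objectness)          # [0, 1, 2, 3, 4, 5, 6, 7, 8]
print(boxes[0], boxes[8])  # [9, 10, 11, 12] [41, 42, 43, 44]
```

Applied at every one of the 25×25 positions, this turns the (N, 45, 25, 25) tensor into 9 objectness maps and 9 groups of 4 box maps.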

For the classification head: based on the second dilation factor, a sixth convolution kernel is applied to the first total feature map to obtain a third sub-feature map under the receptive field corresponding to the second dilation factor; a seventh convolution kernel is applied to the third sub-feature map to obtain the corresponding third total feature map; and an eighth convolution kernel is applied to the third total feature map to obtain the classification detection result.

Specifically, the (N, 128, 25, 25) first total feature map is taken as the input. Based on a second dilation factor of 1, a 128-dimensional sixth convolution kernel of size 3×3 is applied to it, giving N×128 third sub-feature maps, i.e. a third sub-feature map of shape (N, 128, 25, 25). This third sub-feature map is convolved with 128 seventh convolution kernels, each 128-dimensional and of size 1×1, giving the third total feature map under the receptive field corresponding to the second dilation factor, whose dimension is then raised to 512. The (N, 512, 25, 25) third total feature map is passed through a 3x3 eighth convolution kernel with weight shape (9*34, 512, 3, 3), giving an output of shape (N, 9*34, 25, 25). The (N, 1*9, 25, 25) output of the regression head is then expanded to (N, 1*9*34, 25, 25) and added to this (N, 9*34, 25, 25) output through a residual connection, and the 9 most likely classes are obtained after the convolution operation. Note that the experimental data of the present application were trained on mahjong tiles, and there are 9 recognition possibilities, corresponding to the "9" above, that is, 9 kinds of image content that the neural network can recognize. Mahjong tiles have 34 classes, corresponding to the "34" above, which are the categories the neural network can recognize (for example, "One of Characters", "Two of Dots", and "East Wind"). This process amounts to first performing a preliminary classification of the image content of the third total feature map, screening out the third total feature maps that the neural network model can recognize (the 9 possibilities above), and then, using the region-of-interest locations from the regression head, classifying the regions of interest of the screened third total feature maps to determine the preset category to which the third total feature map belongs (that is, which of the 34 mahjong tile classes it is). This preset category is the final recognition result of the image to be recognized.
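The expansion of the (N, 1*9) objectness output to (N, 1*9*34) and its residual addition to the classification output can be sketched for one spatial position. This assumes the residual connection is a plain element-wise addition of logits and that the 9*34 class channels are laid out anchor by anchor; both are hypothetical readings, since the text does not spell them out.

```python
NUM_ANCHORS = 9   # the "9" above
NUM_CLASSES = 34  # the "34" mahjong tile classes above


def combine_with_objectness(cls_logits, obj_logits):
    """Add each anchor's objectness value to all 34 of its class logits.

    cls_logits: 9 * 34 values, anchor-major; obj_logits: 9 values.
    Repeating each objectness value 34 times mirrors the 1*9 -> 1*9*34 expansion.
    """
    expanded = [obj_logits[a] for a in range(NUM_ANCHORS) for _ in range(NUM_CLASSES)]
    return [c + o for c, o in zip(cls_logits, expanded)]


def best_class_per_anchor(scores):
    """Index of the highest-scoring class for each anchor."""
    return [
        max(range(NUM_CLASSES), key=lambda k: scores[a * NUM_CLASSES + k])
        for a in range(NUM_ANCHORS)
    ]


cls_logits = [0.0] * (NUM_ANCHORS * NUM_CLASSES)
cls_logits[0 * NUM_CLASSES + 5] = 3.0   # anchor 0 favours class 5
cls_logits[1 * NUM_CLASSES + 20] = 1.0  # anchor 1 favours class 20
obj_logits = [0.5] * NUM_ANCHORS
scores = combine_with_objectness(cls_logits, obj_logits)
print(best_class_per_anchor(scores)[:2])  # [5, 20]
```

Adding the same objectness value to all 34 logits of an anchor shifts that anchor's scores uniformly, so it changes how confident the anchor is overall without changing which class it prefers.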

It should also be noted that the third, fourth, and fifth convolution kernels are used to screen out the second total feature maps that the neural network can recognize, and the sixth, seventh, and eighth convolution kernels are used to determine the location of the region of interest. Although the third and fourth convolution kernels have the same dimensions and sizes as the first and second convolution kernels, as do the sixth and seventh, the internal configuration parameters that determine each kernel's specific function differ; these parameters can be set according to actual needs, which the present application does not limit.

In the decoder proposed by Yolof, the classification head first applies one complete dilated convolution with a dilation factor of 1 to the (N, 512, 25, 25) feature map from the Encoder and then repeats it once, for a total of 2 complete dilated convolutions with a dilation factor of 1. It then classifies the resulting feature map with a standard 3x3 convolution and adds the result, through a residual connection, to the (N, (4+1)*9, 25, 25) feature map obtained by the regression head, giving the categories on the (N, 9, 25, 25) feature map. The regression head (Regression) applies one complete dilated convolution with a dilation factor of 1 to the (N, 512, 25, 25) feature map from the Encoder and then repeats it 3 more times, for a total of 4 complete dilated convolutions with a dilation factor of 1, followed by a standard 3x3 convolution for feature regression that gives an (N, (4+1)*9, 25, 25) feature map. Of this, the (N, 1*9, 25, 25) part is passed to the classification head for the residual connection, and the remaining (N, 4*9, 25, 25) part outputs the regions of interest.

It should be noted here that, for any given dilation factor, performing convolution operations with different convolution kernels corresponds, in the neural network structure, to one complete dilated convolution operation performed by one dilated convolution block. Taking the regression head of this application as an example, the whole process of performing convolution operations with the third, fourth and fifth convolution kernels based on the second dilation factor is equivalent to the operation of one dilated convolution block. Accordingly, the YOLOF classification head mentioned above must complete 2 dilated convolution operations with a dilation factor of 1 and its regression head must complete 4, which means the YOLOF classification head contains 2 dilated convolution blocks and its regression head contains 4. In the process of this application described above, the classification head and the regression head each perform one dilated convolution operation based on the second dilation factor, so each head needs only one dilated convolution block.
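One way to see why a single dilated block can replace several standard blocks is to compare receptive fields. For stride-1 convolutions, a k×k kernel with dilation d enlarges the receptive field by d·(k−1) feature-map cells. The sketch below assumes the block consists of a 3×3 kernel, a 1×1 kernel and (hypothetically) a 1×1 fifth kernel, and uses a hypothetical second dilation factor of 4; neither value is specified in the text.

```python
def receptive_field(layers):
    """Receptive field, in feature-map cells, of a stack of stride-1
    convolutions. Each layer is (kernel_size, dilation); a layer adds
    dilation * (kernel_size - 1) cells to the receptive field."""
    rf = 1
    for kernel_size, dilation in layers:
        rf += dilation * (kernel_size - 1)
    return rf


# YOLOF-style heads: stacked 3x3 blocks with dilation 1.
yolof_cls = receptive_field([(3, 1)] * 2)
yolof_reg = receptive_field([(3, 1)] * 4)

# One block under a hypothetical second dilation factor of 4:
# 3x3 dilated kernel, then two 1x1 kernels (1x1 adds nothing).
single_block = receptive_field([(3, 4), (1, 4), (1, 1)])
```

Under these assumptions the single block covers the same 9×9 cell window as YOLOF's four-block regression head, with fewer layers.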

The network structure of this application is shown in Figure 3b. First, the image to be recognized is input into RegNetX for feature extraction. The extracted feature maps to be processed then pass through a modified version of the YOLOF encoder: the original operation, which performs 4 dilated convolutions with 3×3 convolution kernels, is replaced by 4 first convolution operations based on E1 to E4 (i.e., the 4 values of the first dilation factor), each using a first convolution kernel of size 3×3 and a second convolution kernel of size 1×1, which yields the first total feature map. Finally, the first total feature map is input into both the regression head and the classification head of the decoder. The regression head determines the region of interest to be recognized, and the classification head classifies the image content in the region of interest to obtain the category of the image to be recognized.
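The spatial sizes along this pipeline follow from the standard convolution output-size formula. The sketch assumes an 800×800 input, a stride-2 stem in front of the four RegNetX stages, and hypothetical dilation values E1 to E4 = (2, 4, 6, 8); none of these values are given in the text. It shows how the backbone reaches a 25×25 map, and that padding each 3×3 kernel by its dilation keeps every encoder round at that size.

```python
def conv_out_size(size, kernel, stride=1, padding=0, dilation=1):
    """Output size of a convolution along one spatial axis."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


def backbone_sizes(size, num_stages=4):
    """Sizes after an assumed stride-2 stem and each stride-2 stage."""
    sizes = [conv_out_size(size, 3, stride=2, padding=1)]  # stem (assumed)
    for _ in range(num_stages):
        sizes.append(conv_out_size(sizes[-1], 3, stride=2, padding=1))
    return sizes


def encoder_size(size, dilations=(2, 4, 6, 8)):
    """Apply one round per dilation factor: 3x3 dilated kernel with
    padding = dilation, then a 1x1 kernel. Both preserve the size."""
    for d in dilations:
        size = conv_out_size(size, 3, padding=d, dilation=d)  # first kernel
        size = conv_out_size(size, 1)                          # second kernel
    return size
```

For example, `backbone_sizes(800)` gives `[400, 200, 100, 50, 25]`, and `encoder_size(25)` returns 25.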

Mahjong tiles are used below as the dataset to verify the feasibility of the technical solution of this application. First, a mahjong tile dataset is obtained, annotated and cleaned, and divided into a training set and a test set. Feature extraction yields the feature maps to be processed for the target dataset; strided stacked dilated convolutions then perform further feature extraction and fusion on these maps to improve small-object detection, and object detection is performed on a feature map at the original image size. Specifically, the dataset and its corresponding sample labels are randomly shuffled and split into a training set and a test set in an 8:2 ratio.
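The shuffle-and-split step can be sketched as follows. Shuffling (sample, label) pairs together keeps every label attached to its image; the fixed seed is an assumption added for reproducibility.

```python
import random


def shuffle_split(samples, labels, train_parts=8, test_parts=2, seed=0):
    """Shuffle samples with their labels and split train:test = 8:2."""
    if len(samples) != len(labels):
        raise ValueError("samples and labels must have the same length")
    pairs = list(zip(samples, labels))
    random.Random(seed).shuffle(pairs)  # shuffle pairs, not lists separately
    n_train = len(pairs) * train_parts // (train_parts + test_parts)
    return pairs[:n_train], pairs[n_train:]
```

With 100 labelled images this yields 80 training pairs and 20 test pairs.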

The training set and its sample labels are used as input data to train the neural network, with a performance evaluation in every training epoch; once the evaluation metrics converge, a neural network model capable of detecting mahjong tiles is obtained. The test set is then run through the model to obtain detection results. Specifically, RegNetX-400MF is selected as the basis of the feature extraction network. It contains 4 modules; the feature map output by each module is half the size of the feature map output by the previous module, and this downsampling is achieved by increasing the convolution stride. Each module is a cascade of a different number of convolution blocks, namely [1, 2, 7, 12], forming a residual-like network structure. Every convolutional layer is followed by a Batch Normalization layer and a ReLU activation layer: the Batch Normalization layer normalizes the output, and the ReLU layer adds nonlinearity to the output of the preceding layer. Feature extraction yields a feature map at the original image size; strided stacked dilated convolutions then perform feature extraction and fusion on this map to improve dense-object detection, finally producing a feature map of the same size as the input feature map. Next, two groups of convolution modules perform object classification and position prediction respectively, the predictions are mapped back onto the original image, and non-maximum suppression outputs accurate mahjong tile detection results. The network input size is not fixed during training: the largest image size in each batch is used as that batch's input size, and smaller images are padded up to it. Training stops once the performance evaluation metrics converge, yielding the mahjong tile detection model. This model is then used to extract features from the test set, predictions are made on the feature map at the original image size, and non-maximum suppression finally yields precise object categories and position coordinates. The mahjong tile detector is trained with the MMDetection object detection toolbox.
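Non-maximum suppression is named above but not specified. A minimal greedy version is sketched below. It is a common textbook form, not necessarily the exact MMDetection routine (which typically applies NMS separately per class), and the 0.5 IoU threshold is an assumption.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: visit boxes from highest to lowest score and keep a box
    only if it does not overlap any already-kept box by more than the
    threshold. Returns the kept indices, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep
```

For two heavily overlapping tiles at (0, 0, 10, 10) and (1, 1, 11, 11) plus a separate tile at (20, 20, 30, 30), scored 0.9, 0.8 and 0.7, only indices 0 and 2 survive.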

Experiments show that with only one dilated convolution block in each of the classification head and the regression head of the decoder, this application achieves recognition accuracy close to that of YOLOF.

Based on the same inventive concept, an embodiment of this application further provides a deep-learning-based image recognition apparatus 400, shown in Figure 4, which includes:

a feature extraction module 401, configured to perform feature extraction on the image to be recognized to obtain a plurality of feature maps to be processed that represent different dimensions of the image, where all the feature maps to be processed have the same size;

a feature fusion module 402, configured to perform multiple rounds of the first convolution operation on each feature map to be processed based on preset first dilation factors, so as to determine, from the feature recognition results obtained in each round, the first total feature map corresponding to each feature map to be processed, where each round of the first convolution operation uses a different first dilation factor, and first dilation factors of different values give the feature recognition results different receptive fields;

an image recognition module 403, configured to determine, through a decoder, the preset category to which the first total feature map belongs, and to use that preset category as the recognition result of the image to be recognized, where the first convolution operation proceeds as follows:

Based on the first dilation factor for the current round, a first convolution kernel performs a convolution on the input to obtain the first sub-feature map corresponding to the input; a second convolution kernel then performs a convolution on the first sub-feature map to obtain the feature recognition result of the input under the receptive field corresponding to that first dilation factor. The input to the first round of the first convolution operation is the feature maps to be processed, and the input to every later round is the feature recognition result of the previous round. The first convolution kernel and the second convolution kernel have different sizes.
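The round structure can be sketched for a single-channel map in pure Python. The sketch assumes a 3×3 first kernel applied as a zero-padded ("same") dilated convolution and treats the 1×1 second kernel as a single scalar weight, which is what a 1×1 kernel reduces to with one channel; the kernel values and dilation factors are hypothetical. Like deep-learning frameworks, it computes cross-correlation.

```python
def dilated_conv2d(image, kernel, dilation=1):
    """'Same'-padded dilated convolution of one H x W map with one k x k
    kernel (k odd). Taps are spaced `dilation` cells apart, and positions
    outside the map count as zero, so the output stays H x W."""
    h, w = len(image), len(image[0])
    k = len(kernel)
    r = k // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for i in range(k):
                for j in range(k):
                    yy = y + (i - r) * dilation
                    xx = x + (j - r) * dilation
                    if 0 <= yy < h and 0 <= xx < w:
                        acc += kernel[i][j] * image[yy][xx]
            out[y][x] = acc
    return out


def first_convolution_rounds(feature_map, dilations, kernel3x3, weight1x1):
    """One round per first dilation factor: first kernel (3x3, dilated)
    gives the first sub-feature map, then the second kernel (1x1, here a
    scalar weight) gives the round's result, which feeds the next round."""
    x = feature_map
    for d in dilations:
        sub = dilated_conv2d(x, kernel3x3, dilation=d)
        x = [[weight1x1 * v for v in row] for row in sub]
    return x
```

Convolving a single-pixel impulse with an all-ones kernel at dilation 2 lights up a 3×3 grid of cells spaced two apart, which makes the widened receptive field visible.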

In some possible embodiments, the first convolution kernel and the second convolution kernel have the same preset dimension, and after obtaining the plurality of feature maps to be processed that represent different dimensions of the image to be recognized, the feature extraction module 401 is further configured to:

before the multiple rounds of the first convolution operation are performed on the feature maps to be processed, perform dimension processing on them so that their dimension matches the preset dimension.

In some possible embodiments, the number of second convolution kernels is greater than the number of first convolution kernels, and, to perform the convolution operation on the input with the first convolution kernel, the feature fusion module 402 is configured to:

perform a convolution operation on each picture in the input to obtain the first sub-feature map corresponding to that picture, where a picture is either a feature map to be processed or a feature recognition result;

and, to perform the convolution operation on the first sub-feature map with the second convolution kernel, the feature fusion module 402 is configured to:

perform a convolution operation on each picture in the input and, during the convolution, add the pixel values at the same position across the pictures to obtain the feature extraction result corresponding to all the pictures in the input.
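Read together, these two steps (each picture convolved on its own, then values at the same position added across pictures) resemble a depthwise-separable convolution. Under that interpretation, which is an assumption rather than something the text states, the second step is a 1×1 convolution across the C input maps, sketched below with hypothetical weights.

```python
def pointwise_conv(channels, weights):
    """1x1 convolution across C input maps of equal size H x W.
    For each output o: out[o][y][x] = sum_c weights[o][c] * channels[c][y][x],
    i.e. each map is scaled by its 1x1 weight and the values at the same
    position are added across maps."""
    height, width = len(channels[0]), len(channels[0][0])
    outputs = []
    for w_o in weights:
        if len(w_o) != len(channels):
            raise ValueError("each weight row needs one entry per input map")
        out = [[sum(w_c * ch[y][x] for w_c, ch in zip(w_o, channels))
                for x in range(width)]
               for y in range(height)]
        outputs.append(out)
    return outputs
```

With two 2×2 maps and weight rows `[1, 1]` and `[1, -1]`, the first output is the element-wise sum of the maps and the second is their element-wise difference.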

In some possible embodiments, to determine through the decoder the preset category to which the first total feature map belongs, the image recognition module 403 is configured to:

control the regression head to perform a second convolution operation on the first total feature map based on a second dilation factor, to determine the region of interest corresponding to the first total feature map; and

control the classification head to perform a third convolution operation on the first total feature map based on the second dilation factor, to determine a classification detection result for the first total feature map, where the second dilation factor differs in value from the first dilation factor;

if the classification detection result indicates that the first total feature map is a recognizable image, perform category recognition on the region of interest of the first total feature map and determine, from the recognition result, the preset category to which the first total feature map belongs.
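One possible reading of this decision step is sketched below. It treats "recognizable" as the best class score at a location reaching a threshold and takes the highest-scoring class as the preset category for that region of interest. The 0.5 threshold and the function name are hypothetical.

```python
def decode_detections(class_scores, boxes, score_threshold=0.5):
    """class_scores: per-location lists of per-class probabilities.
    boxes: matching regions of interest as (x1, y1, x2, y2).
    Keeps a location only if its best class score reaches the threshold
    and returns (box, class_index, score) triples."""
    detections = []
    for scores, box in zip(class_scores, boxes):
        best_score = max(scores)
        if best_score >= score_threshold:  # "recognizable" under this reading
            detections.append((box, scores.index(best_score), best_score))
    return detections
```

In practice the surviving detections would then go through non-maximum suppression before being reported.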

In some possible embodiments, to control the regression head to perform the second convolution operation on the first total feature map based on the second dilation factor and determine the region of interest corresponding to the first total feature map, the image recognition module 403 is configured to: