CN114398979A - Ultrasonic image thyroid nodule classification method based on feature decoupling - Google Patents

Ultrasonic image thyroid nodule classification method based on feature decoupling

- Publication number

- CN114398979A (application CN202210037158.0A)

- Authority

- CN

- China

- Prior art keywords

- thyroid

- feature

- decoupling

- tad

- information

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Pending

Classifications

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F18/00—Pattern recognition

- G06F18/20—Analysing

- G06F18/24—Classification techniques

- G06F18/241—Classification techniques relating to the classification model, e.g. parametric or non-parametric approaches

- G06F18/2415—Classification techniques relating to the classification model, e.g. parametric or non-parametric approaches based on parametric or probabilistic models, e.g. based on likelihood ratio or false acceptance rate versus a false rejection rate

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06N—COMPUTING ARRANGEMENTS BASED ON SPECIFIC COMPUTATIONAL MODELS

- G06N3/00—Computing arrangements based on biological models

- G06N3/02—Neural networks

- G06N3/04—Architecture, e.g. interconnection topology

- G06N3/047—Probabilistic or stochastic networks

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06N—COMPUTING ARRANGEMENTS BASED ON SPECIFIC COMPUTATIONAL MODELS

- G06N3/00—Computing arrangements based on biological models

- G06N3/02—Neural networks

- G06N3/04—Architecture, e.g. interconnection topology

- G06N3/048—Activation functions

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06N—COMPUTING ARRANGEMENTS BASED ON SPECIFIC COMPUTATIONAL MODELS

- G06N3/00—Computing arrangements based on biological models

- G06N3/02—Neural networks

- G06N3/08—Learning methods

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T7/00—Image analysis

- G06T7/0002—Inspection of images, e.g. flaw detection

- G06T7/0012—Biomedical image inspection

- G—PHYSICS

- G16—INFORMATION AND COMMUNICATION TECHNOLOGY [ICT] SPECIALLY ADAPTED FOR SPECIFIC APPLICATION FIELDS

- G16H—HEALTHCARE INFORMATICS, i.e. INFORMATION AND COMMUNICATION TECHNOLOGY [ICT] SPECIALLY ADAPTED FOR THE HANDLING OR PROCESSING OF MEDICAL OR HEALTHCARE DATA

- G16H50/00—ICT specially adapted for medical diagnosis, medical simulation or medical data mining; ICT specially adapted for detecting, monitoring or modelling epidemics or pandemics

- G16H50/20—ICT specially adapted for medical diagnosis, medical simulation or medical data mining; ICT specially adapted for detecting, monitoring or modelling epidemics or pandemics for computer-aided diagnosis, e.g. based on medical expert systems

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T2207/00—Indexing scheme for image analysis or image enhancement

- G06T2207/10—Image acquisition modality

- G06T2207/10132—Ultrasound image

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T2207/00—Indexing scheme for image analysis or image enhancement

- G06T2207/20—Special algorithmic details

- G06T2207/20081—Training; Learning

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T2207/00—Indexing scheme for image analysis or image enhancement

- G06T2207/20—Special algorithmic details

- G06T2207/20084—Artificial neural networks [ANN]

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T2207/00—Indexing scheme for image analysis or image enhancement

- G06T2207/30—Subject of image; Context of image processing

- G06T2207/30004—Biomedical image processing

- G06T2207/30096—Tumor; Lesion

Landscapes

- Engineering & Computer Science (AREA)

- Physics & Mathematics (AREA)

- Theoretical Computer Science (AREA)

- Health & Medical Sciences (AREA)

- Data Mining & Analysis (AREA)

- Biomedical Technology (AREA)

- General Physics & Mathematics (AREA)

- General Health & Medical Sciences (AREA)

- General Engineering & Computer Science (AREA)

- Life Sciences & Earth Sciences (AREA)

- Artificial Intelligence (AREA)

- Evolutionary Computation (AREA)

- Mathematical Physics (AREA)

- Computing Systems (AREA)

- Molecular Biology (AREA)

- Computational Linguistics (AREA)

- Biophysics (AREA)

- Software Systems (AREA)

- Medical Informatics (AREA)

- Probability & Statistics with Applications (AREA)

- Public Health (AREA)

- Computer Vision & Pattern Recognition (AREA)

- Databases & Information Systems (AREA)

- Evolutionary Biology (AREA)

- Bioinformatics & Computational Biology (AREA)

- Pathology (AREA)

- Bioinformatics & Cheminformatics (AREA)

- Epidemiology (AREA)

- Primary Health Care (AREA)

- Nuclear Medicine, Radiotherapy & Molecular Imaging (AREA)

- Radiology & Medical Imaging (AREA)

- Quality & Reliability (AREA)

- Ultrasonic Diagnosis Equipment (AREA)

- Image Analysis (AREA)

- Image Processing (AREA)

Abstract

Description

Translated from Chinese

Technical field

The invention belongs to the technical field of image processing, and in particular relates to a technique for classifying thyroid nodules in ultrasound images based on feature decoupling.

Background

Thyroid nodules are a common nodular lesion. According to an epidemiological survey of thyroid diseases by the Chinese Medical Association, the prevalence of thyroid nodules is as high as 18.6%, of which malignant nodules (thyroid cancer) account for 5%-15%. For patients with thyroid nodules to receive the correct treatment, it is crucial to distinguish benign from malignant nodules accurately. Two methods are commonly used for this: non-invasive thyroid ultrasound imaging and invasive fine-needle aspiration biopsy (FNAB). FNAB is the gold standard for nodule diagnosis, but using it for large-scale screening is traumatic for patients and wastes considerable expense. In contrast, ultrasound imaging is fast, low-cost, and radiation-free, and it obtains high-resolution images without damaging the patient's superficial organs. It is suitable for thyroid examinations at all ages and is one of the most commonly used examination methods.

In 2009, Horvath et al. proposed the Thyroid Imaging Reporting and Data System (TI-RADS). TI-RADS aims to provide more standardized guidance for evaluating thyroid nodules and to avoid unnecessary invasive examinations. Based on thyroid ultrasound images, it uses features such as shape, orientation, margin, calcification, and echogenicity as ultrasound descriptors to grade the malignancy risk of thyroid nodules. However, several obstacles still limit the diagnostic effectiveness of TI-RADS. First, the medical field's understanding of the characteristics of benign and malignant nodules is incomplete, and doctors' interpretation and description of nodules in clinical practice remain controversial. Second, a sonographer's judgment is subjective and relies heavily on experience, and the uneven distribution of medical resources greatly increases the difficulty of large-scale screening in remote or under-resourced areas. Intelligent classification of thyroid nodules based on ultrasound images is therefore a key topic.

In recent years, radiomics-based analysis of medical big data has become a research hotspot. It quantitatively analyzes features of medical image data to obtain disease characteristics that the naked eye cannot recognize or that are difficult to quantify. Radiomics has also been applied to benign/malignant classification of thyroid nodules, where the approaches fall into two main categories: traditional methods and deep learning methods.

Traditional methods use hand-crafted feature extraction combined with feature selection and a classifier for diagnosis. However, they rely on good contour delineation to keep feature extraction stable, which significantly increases labor costs, and the subjectivity of doctors' contour annotations easily biases the features and harms the generalization of the classification model.

Deep learning methods are based on deep neural networks (DNNs), which learn feature extraction adaptively in a data-driven manner and make predictions. A DNN can be regarded as an end-to-end mapping from images to class labels that performs feature extraction, feature selection, and classification. Thyroid ultrasound images are highly complex (they contain the trachea, arteries, muscles, and other tissues), and nodules differ markedly in shape and size, all of which makes an ordinary DNN harder to train. The present invention summarizes the features (that is, the imaging manifestations) on which human doctors rely during diagnosis and divides them into two categories: local features (internal echo intensity, texture, boundary definition, aspect ratio, etc.) and global features (thyroid location, surrounding echo, relative size, etc.). Making effective use of both categories helps build a better-performing DNN model.

Although DNNs have powerful feature extraction capabilities, extracting local and global features from medical images remains challenging. He et al. fed doctor-delineated thyroid nodule images to a neural network to extract local features and used a larger region around the nodule for global features, achieving good nodule classification performance. Xie et al. built three types of input for a lung nodule model (overall appearance, voxel value heterogeneity, and shape heterogeneity) to extract diverse features. Although these studies tried different ways of extracting local and global features, they are limited to changing the network input to obtain features under different fields of view.

Summary of the invention

To solve the above technical problems, the present invention proposes a method for classifying thyroid nodules in ultrasound images based on feature decoupling. The imaging manifestations relied on during diagnosis are summarized into two components, local features and global features, and thyroid nodules are classified by decoupling local and global features in the feature space in a more suitable way.

The technical solution adopted by the present invention is: a method for classifying thyroid nodules in ultrasound images based on feature decoupling, in which a local/global feature decoupling network is established and thyroid nodules in thyroid ultrasound images are classified by that network;

The local/global feature decoupling network comprises two paths. The first path outputs the classification result of the thyroid nodule; it uses a ResNet-18 model pre-trained on ImageNet as its backbone and comprises four TAD modules and four residual modules arranged alternately. Each TAD module decouples the feature map into tissue information and anatomical information; the tissue information and anatomical information are fused by concatenation to obtain the imaging manifestation, which is input to the residual module for feature extraction;

The second path outputs the segmentation result of the thyroid nodule; it comprises four decoders and takes the output of the fourth residual module of the first path as the input of the first decoder, and the four decoders are also connected to the four TAD modules through skip connections.

The tissue information is information containing local feature cues, obtained specifically as follows:

image features are extracted from the thyroid ultrasound image processed in step S2 to obtain a feature map;

all pixels of the feature map are unrolled row by row to obtain two pixel sets, key and query, of equal length and with paired positions;

whitened self-attention is computed on the two pixel sets key and query to capture long-range dependencies among the features and to generate attention maps of the various tissues in the thyroid image;

the attention maps are screened with the gating unit $V_g = \sigma(g_j)$ to obtain tissue information focused on the nodule.

The anatomical information is information containing global feature cues, obtained specifically as follows: a weight matrix $W_m$ is set that is not shared with the weight matrix of the key, and the pixels of the feature map are weighted by $W_m$ to obtain the anatomical information.

The TAD module decouples the feature map into tissue information and anatomical information. (The calculation formula is not reproduced in this text.)

In the formula, $x$ denotes the input feature, $y$ denotes the output feature of the TAD module, and $i$ and $j$ denote feature position indices; $\omega_G(x_i, x_j)$ denotes a function measuring the embedding similarity of $x_i$ and $x_j$, used together with the softmax function; $\rho(\cdot)$ denotes the ReLU activation function and $\sigma(\cdot)$ the sigmoid activation function; $q_i = W_q x_i$, $k_j = W_k x_j$, $v_j = W_v x_j$, $g_j = W_g x_j$, $m_j = W_m x_j$, where $W_q$, $W_k$, $W_v$, $W_g$, $W_m$ are weight matrices to be learned.

When the local/global feature decoupling network is trained, a loss is computed between the network output and the ground truth, and the network parameters are optimized according to the gradient of the loss function. The loss function comprises two parts, a classification loss and a segmentation loss. (The calculation formula is not reproduced in this text.)

The classification part is a binary cross-entropy loss and the segmentation part is an intersection-over-union (IoU) loss; $y$ denotes the class label and $\hat{y}$ the predicted class; $Y$ denotes the ground-truth segmentation label and $\hat{Y}$ the predicted segmentation result; $N$ is the training batch size and $\lambda$ is a hyperparameter.

The thyroid ultrasound image is acquired as follows: a probe is slid continuously over the patient's thyroid region to scan it, and the image showing the largest section of the lesion is saved to a database.

The method further comprises marking the contour of the thyroid nodule on the thyroid ultrasound image.

The method further comprises applying a level set algorithm to the thyroid ultrasound image marked with the nodule contour to adaptively extract a rough binary segmentation template of the nodule.

Beneficial effects of the present invention: the invention proposes a new way of expressing local and global features. The method adaptively decouples local and global features in the feature space, has a larger field of view than existing methods, and obtains more effective and stable features. In benign/malignant classification of thyroid nodules in ultrasound images it achieved diagnostic performance exceeding that of doctors. Specifically, the invention has the following advantages:

A feature decoupling module is proposed that establishes information exchange between the "What" and "Where" paths, completing a multi-task learning framework for classification and segmentation; through the mutually reinforcing learning of the two paths, better robustness and generalization than existing methods are obtained.

Description of the drawings

FIG. 1 is a schematic diagram of thyroid ultrasound image data and the binary nodule segmentation template in the present invention.

FIG. 2 is a structural diagram of the local/global feature decoupling network (LoGo-Net) in the present invention.

FIG. 3 is a structural diagram of the tissue-anatomy decoupling module in the present invention.

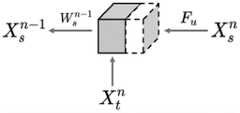

FIG. 4 is a structural diagram of the decoder of the "Where" path in the present invention.

FIG. 5 is a schematic diagram of the saliency map output in the present invention.

Detailed description

The technical solutions in the embodiments of the present invention are described clearly and in detail below with reference to the drawings. The described embodiments are only some of the embodiments of the present invention, not all of them. All other embodiments obtained by those of ordinary skill in the art on the basis of these embodiments without creative effort fall within the scope of protection of the present invention.

The method for classifying thyroid nodules in ultrasound images based on feature decoupling provided by the present invention, and its application, comprise the following steps:

Step S1: thyroid ultrasound image acquisition and annotation.

During the ultrasound examination, the sonographer slides a high-frequency linear probe continuously over the patient's thyroid region to scan it and saves the image showing the largest section of the lesion to a database. The ultrasound equipment adjusts the dynamic range, gain, imaging depth, and frequency as imaging requires in order to obtain a clear thyroid ultrasound image. Typically, the dynamic range is set to 40-85 dB, the gain to 50-70 dB, the imaging depth to about 2.5-4 cm, and the frequency to 6-12 MHz.

The contour of the thyroid nodule is indicated by a doctor on each ultrasound image. Specifically, the doctor marks a small number of points near the nodule contour, and the invention uses a level set algorithm to adaptively extract a rough binary segmentation template of the nodule, as shown in FIG. 1. Whether a nodule is benign or malignant is determined jointly from the patient's ultrasound report and pathology record, and each image is given a binary label of 0 or 1. When an image contains several nodules, it is labeled malignant if any of them is malignant.
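The image-level labeling rule for multiple nodules can be illustrated with a minimal sketch. The function name and the encoding 1 = malignant, 0 = benign are assumptions; the text only states that a binary 0/1 label is used.

```python
def image_label(nodule_labels):
    """Return the image-level label from per-nodule labels.

    Assumed encoding (not stated in the text): 1 = malignant, 0 = benign.
    An image is malignant as soon as one of its nodules is malignant.
    """
    if not nodule_labels:
        raise ValueError("an annotated image needs at least one nodule")
    return int(any(label == 1 for label in nodule_labels))
```

For example, an image with nodule labels `[0, 0, 1]` would be labeled 1 under this encoding, and `[0, 0]` would be labeled 0.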

Step S2: image preprocessing.

The ultrasound image is gray-level normalized and resized to a resolution of 224×224. The binary segmentation template is resized to a resolution of 56×56. The invention uses data augmentation to address the imbalance between positive and negative samples: the negative samples, which are fewer, are mirror-flipped so that their number matches that of the positive samples.
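The preprocessing in step S2 can be sketched as follows with NumPy. This is only an illustration: min-max scaling as the normalization, nearest-neighbour resampling, and all function names are assumptions, since the text does not specify them.

```python
import numpy as np

def normalize_gray(img):
    """Scale gray levels to [0, 1] (min-max normalization, an assumed choice)."""
    img = img.astype(np.float32)
    span = img.max() - img.min()
    return (img - img.min()) / span if span > 0 else np.zeros_like(img)

def resize_nearest(img, size):
    """Nearest-neighbour resize to (size, size); a stand-in for a real resampler."""
    rows = np.arange(size) * img.shape[0] // size
    cols = np.arange(size) * img.shape[1] // size
    return img[rows][:, cols]

def preprocess(image, mask):
    """Image to 224x224 normalized gray levels, segmentation template to 56x56."""
    return resize_nearest(normalize_gray(image), 224), resize_nearest(mask, 56)

def balance_by_flipping(minority, target_count):
    """Append horizontally mirrored copies of the minority class.

    Flipping can at most double the class, so target_count is only reached
    when it does not exceed 2 * len(minority).
    """
    flipped = [np.fliplr(x) for x in minority]
    return (list(minority) + flipped)[:target_count]
```

When a segmentation template accompanies a flipped image, it would need the same flip so that image and template stay aligned.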

Step S3: establishment of the local/global feature decoupling network (LoGo-Net).

Inspired by domain knowledge of ultrasound diagnosis and by the mechanism of visual cognition, the network imitates the dual-pathway structure and processing mode of the human visual system and builds a model on a multi-task learning framework, as shown in FIG. 2. LoGo-Net contains two paths, "What" and "Where", whose functions reinforce each other and are optimized simultaneously; they perform the classification and segmentation tasks respectively. In addition, LoGo-Net contains a tissue-anatomy decoupling (TAD) module designed on a self-attention mechanism. It decouples features into tissue information, which carries local feature cues, and anatomical information, which carries global feature cues, and serves as the carrier of information that connects the "What" and "Where" paths.

Step S31: establishment of the tissue-anatomy decoupling (TAD) module.

The TAD module is intended to separate, from the feature map, tissue information containing local feature cues and anatomical information containing global feature cues. As shown in FIG. 3, for the tissue information, whitened self-attention is computed on two pixel sets (key and query) to capture long-range dependencies among the features and to generate attention maps of the various tissues in the thyroid image. The gating unit $V_g = \sigma(g_j)$ is then used to screen the attention maps, so that the model focuses selectively on the nodule and other relevant tissues and suppresses tissue information irrelevant to classification. For the anatomical information, a new weight matrix $W_m$ is set that is not shared with the weight matrix of the key, which makes the optimization of tissue information and anatomical information independent of each other.

The feature map here refers to the image features extracted from the thyroid ultrasound image processed in step S2; it is a collection of features such as contours, edges, and textures.

The pixel sets in this step are all pixels of the feature map unrolled row by row. Key and query are two pixel sets of equal length with paired positions; they differ in that each is obtained by weighting with a different weight matrix.

The specific calculation of step S31 is as follows. (The formula is not reproduced in this text.)

In the formula, $x$ denotes the input feature, $y$ denotes the output feature of the TAD module, and $i$ and $j$ denote feature position indices, so $\omega_G(x_i, x_j)$ denotes a function measuring the embedding similarity of $x_i$ and $x_j$. When $\omega_G(x_i, x_j)$ is instantiated as an embedded Gaussian function, it is equivalent to a softmax function along the $x_j$ dimension. $\rho(\cdot)$ denotes the ReLU activation function and $\sigma(\cdot)$ the sigmoid activation function. $q_i = W_q x_i$, $k_j = W_k x_j$, $v_j = W_v x_j$, $g_j = W_g x_j$, $m_j = W_m x_j$, where $W_q$, $W_k$, $W_v$, $W_g$, $W_m$ are weight matrices to be learned.
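Because the formula itself is not available in this text, the following NumPy sketch shows only one possible instantiation that is consistent with the symbol definitions above. Everything beyond those definitions is an assumption: that whitening means subtracting the mean over positions from query and key, that the gate multiplies the values, that the anatomical term is a softmax of $m_j$ over positions applied to $v_j$, and where the ReLU is applied. It should not be read as the patented formula.

```python
import numpy as np

def softmax(z, axis):
    z = z - z.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def tad_sketch(x, Wq, Wk, Wv, Wg, Wm):
    """x: (N, C) feature map flattened row by row. Returns (tissue, anatomy, fused)."""
    q, k, v = x @ Wq, x @ Wk, x @ Wv         # each (N, D)
    g, m = x @ Wg, x @ Wm                    # gate logits (N, D), unary logits (N, 1)
    # Assumed whitening: remove the mean over positions before the pairwise product.
    q_w = q - q.mean(axis=0, keepdims=True)
    k_w = k - k.mean(axis=0, keepdims=True)
    attn = softmax(q_w @ k_w.T, axis=1)      # (N, N), softmax over j for each i
    tissue = attn @ (sigmoid(g) * v)         # gated values, local cues, (N, D)
    unary = softmax(m, axis=0)               # (N, 1), softmax over j
    # The anatomical term does not depend on i, so the same vector is used at every position.
    anatomy = np.repeat(unary.T @ v, x.shape[0], axis=0)
    # Fusion by concatenation; applying ReLU here is an assumed placement.
    fused = np.maximum(np.concatenate([tissue, anatomy], axis=1), 0.0)
    return tissue, anatomy, fused
```

In this sketch the key uses `Wk` while the anatomical branch uses its own `Wm`, which mirrors the statement that the two weight matrices are not shared.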

Step S32: establishment of the "What" path.

The "What" path performs benign/malignant classification of thyroid nodules. It uses ResNet-18 pre-trained on ImageNet as the backbone of the classification model. The fully connected layer of the original ResNet has 1000 neurons; in this network it is replaced by one with 2 neurons to perform benign/malignant classification. As shown in FIG. 2, the embodiment contains four TAD modules and four residual modules. Before feature extraction by each residual module, a TAD module first decouples the feature map into tissue information and anatomical information. The tissue information is sent to the "Where" path for information exchange, which constrains it to carry the position information of the nodule. The tissue information and anatomical information are fused by concatenation to obtain the imaging manifestation, which is sent to the subsequent residual module so that the model can extract richer features covering both local and global cues. The feature map here is the image features extracted from the thyroid ultrasound image processed in step S2.

Step S33: establishment of the "Where" path.

The "Where" path performs the segmentation of thyroid nodules. As shown in FIG. 3, the "Where" path in the embodiment contains four decoders. The decoder unit is shown in FIG. 4. Its calculation formula (not reproduced in this text) combines high-level features, low-level features, and tissue information, where $F_u(\cdot)$ is the bilinear-interpolation upsampling function, $[\cdot]$ denotes feature concatenation, and $W_s$ is a two-layer convolution operation. The "Where" path takes the output of the fourth residual module in step S32 as the initial input of the decoders and, through skip connections, successively integrates the tissue information output by the four TAD modules, thereby recovering more accurate edge details and finally producing a complete nodule segmentation prediction.
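A decoder unit built from the three named operations (bilinear upsampling $F_u$, concatenation $[\cdot]$, and a two-layer convolution $W_s$) might look like the NumPy sketch below. The exact formula is not available here, so the sketch rests on assumptions: the upsampling factor is 2 with aligned corners, the skip input stands for whatever features arrive over the skip connection, and the two convolutions are 1×1 with a ReLU in between. The function and parameter names are hypothetical.

```python
import numpy as np

def upsample_bilinear_2x(f):
    """Bilinear 2x upsampling of a (C, H, W) array with aligned corners (assumed convention)."""
    c, h, w = f.shape
    ys = np.linspace(0, h - 1, 2 * h)
    xs = np.linspace(0, w - 1, 2 * w)
    y0 = np.floor(ys).astype(int)
    y1 = np.minimum(y0 + 1, h - 1)
    x0 = np.floor(xs).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    wy = (ys - y0)[None, :, None]            # vertical interpolation weights
    wx = (xs - x0)[None, None, :]            # horizontal interpolation weights
    top = f[:, y0][:, :, x0] * (1 - wx) + f[:, y0][:, :, x1] * wx
    bot = f[:, y1][:, :, x0] * (1 - wx) + f[:, y1][:, :, x1] * wx
    return top * (1 - wy) + bot * wy

def decoder_unit(high, skip, W1, W2):
    """Upsample `high`, concatenate with `skip` on the channel axis, apply two 1x1 convolutions."""
    z = np.concatenate([upsample_bilinear_2x(high), skip], axis=0)  # (C_h + C_s, 2H, 2W)
    z = np.maximum(np.einsum("oc,chw->ohw", W1, z), 0.0)            # first conv + ReLU
    return np.einsum("oc,chw->ohw", W2, z)                          # second conv
```

Chaining four such units, each fed by the previous output and one TAD skip input, would follow the structure described for the "Where" path.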

Step S4: definition of the loss function and experimental settings.

When the model is trained, a loss is computed between the model output and the ground truth, and the model parameters are optimized according to the gradient of the loss function. The loss function comprises two parts, a classification loss and a segmentation loss. (The calculation formula is not reproduced in this text.)

The classification part is a binary cross-entropy loss and the segmentation part is an intersection-over-union (IoU) loss; $y$ denotes the class label and $\hat{y}$ the class predicted by the model. $Y = \{Y_1, Y_2, \ldots, Y_N\}$ denotes the ground-truth segmentation labels and $\hat{Y}$ the segmentation results predicted by the model. $N$ is the training batch size and $\lambda$ is a hyperparameter, set to 0.5 in this embodiment.
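The two loss terms can be sketched with NumPy as below. The way they are combined is an assumption: the sketch uses classification loss plus $\lambda$ times segmentation loss, which is a common multi-task form but is not confirmed by this text, and the soft (differentiable) IoU formulation is likewise an assumed choice.

```python
import numpy as np

def bce_loss(y, y_hat, eps=1e-7):
    """Binary cross-entropy averaged over the batch; y, y_hat: (N,) with y_hat in [0, 1]."""
    y_hat = np.clip(y_hat, eps, 1 - eps)     # avoid log(0)
    return float(np.mean(-(y * np.log(y_hat) + (1 - y) * np.log(1 - y_hat))))

def iou_loss(Y, Y_hat, eps=1e-7):
    """Soft IoU loss averaged over the batch; Y, Y_hat: (N, H, W) with values in [0, 1]."""
    inter = (Y * Y_hat).sum(axis=(1, 2))
    union = (Y + Y_hat - Y * Y_hat).sum(axis=(1, 2))
    return float(np.mean(1.0 - (inter + eps) / (union + eps)))

def total_loss(y, y_hat, Y, Y_hat, lam=0.5):
    """Assumed combination: classification loss + lam * segmentation loss."""
    return bce_loss(y, y_hat) + lam * iou_loss(Y, Y_hat)
```

With `lam=0.5` as in the embodiment, a perfect classification and a perfect segmentation both drive the total toward zero, while a completely disjoint mask contributes about 0.5.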

Results on the dataset are obtained with 5-fold cross-validation, that is, the data are split into training and test sets at a ratio of 4:1 and cross-validated. During training, the loss function is optimized with stochastic gradient descent, with momentum set to 0.9 and weight decay set to 1e-5. The initial learning rate is set to 0.001 and is multiplied by a factor of 0.95 after each training iteration. A warm-up is applied before the training process starts: the model is first trained with a smaller learning rate, and once it is relatively stable, training continues with the preset learning rate, which yields a better model.
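The 4:1 splitting and the learning-rate schedule can be sketched in plain Python. The warm-up length, its linear shape, and the absence of shuffling are assumptions made for illustration; the text only states that a smaller learning rate is used first.

```python
def five_fold_splits(n_samples, k=5):
    """Yield (train_indices, test_indices); each fold serves as the test set once."""
    indices = list(range(n_samples))         # shuffle beforehand if the order is not random
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test = folds[i]
        train = [j for f in folds[:i] + folds[i + 1:] for j in f]
        yield train, test

def learning_rate(step, base_lr=0.001, decay=0.95, warmup_steps=5):
    """Linear warm-up to base_lr (assumed), then multiply by `decay` after each step."""
    if step < warmup_steps:
        return base_lr * (step + 1) / warmup_steps
    return base_lr * decay ** (step - warmup_steps)
```

With 100 samples, each of the five splits holds 80 training and 20 test indices, matching the 4:1 ratio.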

Step S5: joint diagnosis by artificial intelligence (AI) and a doctor.

For the model trained in S4, inputting an image yields the predicted probabilities of benign and malignant. The gradient with respect to the input image is computed by back-propagating the classification loss function; taking its absolute value and normalizing gives a saliency map, which reveals the discriminative regions on which the model bases its diagnosis. During actual clinical image reading, the invention provides the doctor with the AI model's predicted probability and saliency map at the same time, and the doctor makes his or her own judgment in combination with medical knowledge. This improves doctors' diagnosis of difficult cases, effectively reduces missed diagnoses, and has the potential to be extended to early cancer screening programs in remote or under-resourced areas.
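The saliency computation (gradient of the classification loss with respect to the input, absolute value, normalization) can be illustrated on a toy model whose gradient is known in closed form. The single-layer logistic model below is only a stand-in for the trained network, and min-max scaling is an assumed normalization; with a real network the gradient would come from automatic differentiation.

```python
import numpy as np

def saliency_map(image, w, b, label):
    """Toy stand-in for the trained network: p = sigmoid(sum(w * image) + b).

    For binary cross-entropy on this model, d(loss)/d(image) = (p - label) * w.
    """
    p = 1.0 / (1.0 + np.exp(-(np.sum(w * image) + b)))
    grad = (p - label) * w                   # gradient of the loss w.r.t. each pixel
    sal = np.abs(grad)                       # absolute value
    span = sal.max() - sal.min()
    sal = (sal - sal.min()) / span if span > 0 else np.zeros_like(sal)  # scale to [0, 1]
    return p, sal
```

Pixels with a large weight in the toy model receive the highest saliency, which is the same reading a doctor would apply to the map produced by the full network.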

Those of ordinary skill in the art will appreciate that the embodiments described here are intended to help the reader understand the principles of the present invention, and that the scope of protection of the invention is not limited to these specific statements and embodiments. Various modifications and variations of the present invention are possible for those skilled in the art. Any modification, equivalent replacement, or improvement made within the spirit and principles of the present invention shall fall within the scope of the claims of the present invention.

Claims (9)

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202210037158.0A, CN114398979A (en) | 2022-01-13 | 2022-01-13 | Ultrasonic image thyroid nodule classification method based on feature decoupling |

Publications (1)

| Publication Number | Publication Date |
|---|---|
| CN114398979A (en) | 2022-04-26 |

Family

ID=81230824

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |
|---|---|---|---|
| CN202210037158.0A (Pending), CN114398979A (en) | Ultrasonic image thyroid nodule classification method based on feature decoupling | 2022-01-13 | 2022-01-13 |

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN114398979A (en) |

Citations (10)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| WO2019232346A1 (en)* | 2018-05-31 | 2019-12-05 | Mayo Foundation For Medical Education And Research | Systems and media for automatically diagnosing thyroid nodules |
| CN110706793A (en)* | 2019-09-25 | 2020-01-17 | 天津大学 | Attention mechanism-based thyroid nodule semi-supervised segmentation method |
| CN111243042A (en)* | 2020-02-28 | 2020-06-05 | 浙江德尚韵兴医疗科技有限公司 | Ultrasonic thyroid nodule benign and malignant characteristic visualization method based on deep learning |
| CN111898560A (en)* | 2020-08-03 | 2020-11-06 | 华南理工大学 | A classification and regression feature decoupling method in target detection |
| CN113159051A (en)* | 2021-04-27 | 2021-07-23 | 长春理工大学 | Remote sensing image lightweight semantic segmentation method based on edge decoupling |
| CN113177554A (en)* | 2021-05-19 | 2021-07-27 | 中山大学 | Thyroid nodule identification and segmentation method, system, storage medium and equipment |
| CN113378933A (en)* | 2021-06-11 | 2021-09-10 | 合肥合滨智能机器人有限公司 | Thyroid ultrasound image classification and segmentation network, training method, device and medium |
| CN113539477A (en)* | 2021-06-24 | 2021-10-22 | 杭州深睿博联科技有限公司 | Decoupling mechanism-based lesion benign and malignant prediction method and device |
| CN113804766A (en)* | 2021-09-15 | 2021-12-17 | 大连理工大学 | A Multi-parameter Ultrasonic Characterization Method of Heterogeneous Material Structure Homogeneity Based on SVR |
| CN113870289A (en)* | 2021-09-22 | 2021-12-31 | 浙江大学 | A method and device for decoupling, dividing and conquering facial nerve segmentation |

Non-Patent Citations (1)

| Title |
|---|
| SHI-XUAN ZHAO et al.: "A Local and Global Feature Disentangled Network: Toward Classification of Benign-Malignant Thyroid Nodules From Ultrasound Image"* |

Cited By (4)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN115035030A (en)* | 2022-05-07 | 2022-09-09 | 北京大学深圳医院 | Image recognition method, apparatus, computer device, and computer-readable storage medium |
| CN114663861A (en)* | 2022-05-17 | 2022-06-24 | 山东交通学院 | Vehicle re-identification method based on dimension decoupling and non-local relation |
| CN117611806A (en)* | 2024-01-24 | 2024-02-27 | 北京航空航天大学 | A positive prediction system for prostate cancer surgical margins based on imaging and clinical features |
| CN117611806B (en)* | 2024-01-24 | 2024-04-12 | 北京航空航天大学 | A positive prediction system for surgical margins of prostate cancer based on imaging and clinical features |

Similar Documents

| Publication | Publication Date | Title |
|---|---|---|
| CN109584254B (en) | | Heart left ventricle segmentation method based on deep full convolution neural network |
| US11101033B2 (en) | | Medical image aided diagnosis method and system combining image recognition and report editing |
| Meng et al. | | Liver fibrosis classification based on transfer learning and FCNet for ultrasound images |
| Nurmaini et al. | | Accurate detection of septal defects with fetal ultrasonography images using deep learning-based multiclass instance segmentation |
| CN108257135A (en) | | The assistant diagnosis system of medical image features is understood based on deep learning method |
| CN111047594A (en) | | Tumor MRI weak supervised learning analysis modeling method and model thereof |
| CN106296699A (en) | | Cerebral tumor dividing method based on deep neural network and multi-modal MRI image |
| CN110232383A (en) | | A kind of lesion image recognition methods and lesion image identifying system based on deep learning model |
| CN114398979A (en) | | Ultrasonic image thyroid nodule classification method based on feature decoupling |
| CN112529894A (en) | | Thyroid nodule diagnosis method based on deep learning network |
| CN111767952B (en) | | Interpretable lung nodule benign and malignant classification method |
| CN110335231A (en) | | A method for assisted screening of chronic kidney disease with ultrasound imaging based on texture features and depth features |
| CN106780453A (en) | | A kind of method realized based on depth trust network to brain tumor segmentation |
| CN109948671B (en) | | Image classification method, device, storage medium and endoscopic imaging equipment |
| CN111462082A (en) | | Focus picture recognition device, method and equipment and readable storage medium |
| CN115472258A (en) | | Method for generating MRI (magnetic resonance imaging) image and predicting curative effect after breast cancer neoadjuvant chemotherapy |
| CN113902738A (en) | | A cardiac MRI segmentation method and system |
| Yang et al. | | Unsupervised domain adaptation for cross-device OCT lesion detection via learning adaptive features |
| CN117633558A (en) | | Multi-excitation fusion zero-sample lesion detection method based on visual language model |
| CN116704305A (en) | | Multi-modal and multi-section classification method for echocardiography based on deep learning algorithm |
| Zhang et al. | | SEG-LUS: A novel ultrasound segmentation method for liver and its accessory structures based on muti-head self-attention |
| Wen et al. | | A-PSPNet: A novel segmentation method of renal ultrasound image |
| Subramanian et al. | | Design and evaluation of a deep learning aided approach for kidney stone detection in CT scan images |
| Dong et al. | | Deep supervision adversarial learning network for retinal vessel segmentation |
| Chincholkar et al. | | Deep learning techniques in liver segmentation: evaluating U-Net, Attention U-Net, ResNet50, and ResUNet models |

Legal Events

| Date | Code | Title | Description |
|---|---|---|---|
| | PB01 | Publication | |
| | SE01 | Entry into force of request for substantive examination | |
| | RJ01 | Rejection of invention patent application after publication | Application publication date: 20220426 |