CN114357152A - Information processing method, apparatus, computer-readable storage medium, and computer device - Google Patents

Information processing method, apparatus, computer-readable storage medium, and computer device

- Publication number

- CN114357152A, CN202111031124.2A

- Authority

- CN

- China

- Prior art keywords

- target sample

- classification model

- category

- probability distribution

- target

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Granted

Landscapes

- Information Retrieval, Db Structures And Fs Structures Therefor (AREA)

Abstract

Description

Technical Field

The present application relates to the field of Internet technologies, and in particular to an information processing method, an apparatus, a computer-readable storage medium, and a computer device.

Background

Natural language processing (NLP) is an important direction in computer science and artificial intelligence. It studies the theories and methods that enable effective communication between humans and computers in natural language. As computer technology and artificial intelligence develop, the requirements placed on natural language processing keep rising, and so do the performance requirements placed on models. To obtain a model that performs well and is small in size, the model can be trained by knowledge distillation (KD).

However, in the prior art, knowledge distillation for model compression trains slowly on large-scale corpora, which makes compressing and deploying a model costly. Information processing during model training is inefficient, which in turn makes model training inefficient.

Summary of the Invention

Embodiments of the present application provide an information processing method, an apparatus, a computer-readable storage medium, and a computer device, which can improve the efficiency of information processing during model training and thereby improve the efficiency of model training.

An embodiment of the present application provides an information processing method, including:

obtaining target samples;

classifying the target samples with a preset classification model to obtain a first class probability distribution corresponding to each target sample;

calculating a difficulty coefficient of each target sample according to the first class probability distribution, and filtering the target samples based on the difficulty coefficient to obtain filtered target samples, where the difficulty coefficient represents the degree of uncertainty of each class probability that the preset classification model outputs for the target sample;

classifying the filtered target samples with a trained deep classification model to obtain a second class probability distribution corresponding to each filtered target sample, where the network depth of the trained deep classification model is greater than the network depth of the preset classification model;

calculating the difference between the second class probability distribution and the first class probability distribution, and training the preset classification model to convergence based on the difference to obtain a trained classification model, where the trained classification model is used to classify information to be processed.
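The five steps above can be sketched as a single training step. This is a minimal illustration under stated assumptions, not the implementation of the application: the student (the preset classification model) is a hypothetical linear softmax classifier, the teacher (the trained deep classification model) is passed in as an arbitrary function `teacher_fn`, entropy is used as the difficulty coefficient, and `distill_step`, `keep_ratio`, and `lr` are names introduced here for illustration.

```python
import numpy as np

def softmax(logits):
    # Numerically stable row-wise softmax.
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

def kl_divergence(p, q, eps=1e-12):
    # Mean over samples of KL(p || q).
    return float(np.mean(np.sum(p * (np.log(p + eps) - np.log(q + eps)), axis=1)))

def distill_step(student_w, teacher_fn, samples, keep_ratio=0.5, lr=0.1):
    # Step 2: the student produces the first class probability distribution.
    student_probs = softmax(samples @ student_w)
    # Step 3: difficulty coefficient (here: entropy), then keep the hardest samples.
    difficulty = -np.sum(student_probs * np.log(student_probs + 1e-12), axis=1)
    n_keep = max(1, int(len(samples) * keep_ratio))
    keep = np.argsort(-difficulty)[:n_keep]
    # Step 4: the teacher is run only on the filtered samples.
    teacher_probs = teacher_fn(samples[keep])
    # Step 5: difference between the two distributions and one gradient step.
    loss = kl_divergence(teacher_probs, student_probs[keep])
    # Gradient of mean KL(teacher || student) for a linear softmax student.
    grad = samples[keep].T @ (student_probs[keep] - teacher_probs) / n_keep
    return student_w - lr * grad, loss, keep
```

The point of the design is visible in step 4: the expensive teacher is evaluated on `n_keep` samples instead of the full batch.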

Correspondingly, an embodiment of the present application provides an information processing apparatus, including:

an acquisition unit, configured to obtain target samples;

a first classification unit, configured to classify the target samples with a preset classification model to obtain a first class probability distribution corresponding to each target sample;

a filtering unit, configured to calculate a difficulty coefficient of each target sample according to the first class probability distribution, and to filter the target samples based on the difficulty coefficient to obtain filtered target samples, where the difficulty coefficient represents the degree of uncertainty of each class probability that the preset classification model outputs for the target sample;

a second classification unit, configured to classify the filtered target samples with a trained deep classification model to obtain a second class probability distribution corresponding to each filtered target sample, where the network depth of the trained deep classification model is greater than the network depth of the preset classification model;

a calculation unit, configured to calculate the difference between the second class probability distribution and the first class probability distribution, and to train the preset classification model to convergence based on the difference to obtain a trained classification model, where the trained classification model is used to classify information to be processed.

In one embodiment, the filtering unit includes:

a first sorting subunit, configured to sort the target samples in descending order of their difficulty coefficients to obtain a first sorting result;

a filtering subunit, configured to select, according to the first sorting result, the target samples that satisfy a preset condition to obtain the filtered target samples.
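The sort-then-select behaviour of these two subunits can be sketched as follows. The text does not say what the preset condition is, so this sketch assumes it is "keep the top fraction of the ranking"; `select_hardest` and `keep_ratio` are hypothetical names.

```python
def select_hardest(samples, difficulty, keep_ratio=0.5):
    """Return the samples with the largest difficulty coefficients.

    Assumption: the preset condition is a fixed fraction of the ranking.
    """
    if len(samples) != len(difficulty):
        raise ValueError("samples and difficulty must have the same length")
    # First sorting result: sample indices in descending order of difficulty.
    ranked = sorted(range(len(samples)), key=lambda i: difficulty[i], reverse=True)
    n_keep = max(1, int(len(samples) * keep_ratio))
    return [samples[i] for i in ranked[:n_keep]]
```

A threshold on the difficulty coefficient would be an equally plausible preset condition; only the final slice would change.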

In one embodiment, the filtering unit includes:

a first obtaining subunit, configured to obtain, according to the first class probability distribution, the class probability of each class for each target sample;

a second sorting subunit, configured to sort the class probabilities of each target sample in descending order to obtain a second sorting result;

a first determining subunit, configured to determine, according to the second sorting result, the two highest class probabilities of each target sample;

a second determining subunit, configured to determine the classification margin of each target sample from the difference between the two highest class probabilities;

a third determining subunit, configured to determine the difficulty coefficient of each target sample based on the classification margin.
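A minimal sketch of a margin-based difficulty coefficient follows. The text only says the coefficient is determined "based on" the margin; mapping it as `1 - margin`, so that a small margin means a hard sample, is an assumption made here, and `margin_difficulty` is a hypothetical name.

```python
import numpy as np

def margin_difficulty(probs):
    """Difficulty from the gap between the two highest class probabilities.

    probs: array of shape (num_samples, num_classes), num_classes >= 2.
    Assumption: difficulty = 1 - margin, so a smaller margin is harder.
    """
    probs = np.asarray(probs, dtype=float)
    # np.sort is ascending, so the last two columns hold the two largest values.
    top_two = np.sort(probs, axis=1)[:, -2:]
    margin = top_two[:, 1] - top_two[:, 0]
    return 1.0 - margin
```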

In one embodiment, the filtering unit includes:

a second obtaining subunit, configured to obtain, according to the first class probability distribution, the class probability of each class for each target sample;

a third sorting subunit, configured to sort the class probabilities of each target sample in descending order to obtain a third sorting result;

a fourth determining subunit, configured to determine, according to the third sorting result, the highest class probability of each target sample;

a first calculation subunit, configured to calculate a confusion score of each target sample from the highest class probability;

a fifth determining subunit, configured to determine the difficulty coefficient of each target sample based on the confusion score.
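The confusion score is not given as a formula in this part of the text. One common choice that depends only on the highest class probability is the least-confidence score, `1 - max(p)`; the sketch below assumes that definition, and `confusion_score` is a hypothetical name.

```python
import numpy as np

def confusion_score(probs):
    """Least-confidence score: one minus the highest class probability.

    Assumption: this stands in for the confusion score, whose exact
    formula is not stated in the surrounding text.
    """
    probs = np.asarray(probs, dtype=float)
    return 1.0 - probs.max(axis=1)
```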

In one embodiment, the filtering unit includes:

a second calculation subunit, configured to calculate the entropy of each target sample according to the first class probability distribution;

a sixth determining subunit, configured to determine the difficulty coefficient of each target sample based on the entropy.
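An entropy-based difficulty coefficient can be sketched as below, using the Shannon entropy of the student's class probabilities directly as the coefficient. Using the entropy unchanged, and the small constant `eps` that guards against `log(0)`, are choices made for this sketch.

```python
import numpy as np

def entropy_difficulty(probs, eps=1e-12):
    """Shannon entropy (in nats) of each row of class probabilities.

    A uniform distribution gives the maximum, log(num_classes);
    a one-hot distribution gives approximately zero.
    """
    probs = np.asarray(probs, dtype=float)
    return -np.sum(probs * np.log(probs + eps), axis=1)
```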

In one embodiment, the calculation unit includes:

a relative entropy calculation subunit, configured to calculate the relative entropy between the second class probability distribution and the first class probability distribution;

a difference determining subunit, configured to determine the difference between the second class probability distribution and the first class probability distribution according to the relative entropy.
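The relative entropy used as the difference can be sketched as follows. Relative entropy is asymmetric, and the text does not fix the argument order; the sketch assumes the usual distillation convention in which the teacher's (second) distribution is the reference and the student's (first) distribution is the approximation.

```python
import numpy as np

def kl_divergence(teacher_probs, student_probs, eps=1e-12):
    """Per-sample KL(teacher || student) in nats.

    Assumption: the teacher distribution is the reference distribution.
    """
    p = np.asarray(teacher_probs, dtype=float)
    q = np.asarray(student_probs, dtype=float)
    return np.sum(p * (np.log(p + eps) - np.log(q + eps)), axis=1)
```

Averaging the returned values over a batch gives a scalar loss that is zero only when the student reproduces the teacher's distribution on every sample.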

In one embodiment, the information processing apparatus further includes:

a model obtaining unit, configured to obtain the preset classification model and the trained deep classification model;

a network depth obtaining unit, configured to obtain, when the preset classification model and the trained deep classification model have the same model architecture, a first number of network layers of the preset classification model and a second number of network layers of the trained deep classification model, where the first number is smaller than the second number;

an initialization parameter determining unit, configured to use the model parameters of the first layers of the trained deep classification model, as many layers as the first number, as the initialization model parameters of the preset classification model.
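The initialization described above can be sketched as copying the teacher's leading layers into the student. Representing each layer as a dictionary of parameters is an assumption made purely for illustration, as is the name `init_student_from_teacher`.

```python
import copy

def init_student_from_teacher(teacher_layers, num_student_layers):
    """Copy the parameters of the teacher's first layers into a new student.

    teacher_layers: list with one parameter dict per layer (illustrative format).
    num_student_layers: the first number of network layers; it must be
    smaller than the number of teacher layers.
    """
    if not 0 < num_student_layers < len(teacher_layers):
        raise ValueError("student depth must be positive and smaller than teacher depth")
    # Deep copy so that later student updates never modify the teacher.
    return copy.deepcopy(teacher_layers[:num_student_layers])
```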

In one embodiment, the acquisition unit includes:

an initial sample obtaining subunit, configured to obtain an initial sample data set, where the initial sample data set includes at least one initial sample;

a word segmentation subunit, configured to perform word segmentation on the initial sample to obtain an initial sample word set;

a feature extraction subunit, configured to perform feature extraction on the initial sample word set to obtain an initial sample word vector set;

a data augmentation subunit, configured to compute the synonyms of each word in the initial sample word vector set, and to augment the initial sample according to the synonyms to obtain the target samples.

In addition, an embodiment of the present application further provides a computer-readable storage medium that stores a plurality of instructions, where the instructions are suitable for being loaded by a processor to perform the steps of any information processing method provided by the embodiments of the present application.

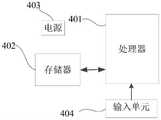

In addition, an embodiment of the present application further provides a computer device, including a processor and a memory, where the memory stores an application program and the processor is configured to run the application program in the memory to implement the information processing method provided by the embodiments of the present application.

An embodiment of the present application further provides a computer program product or computer program that includes computer instructions stored in a computer-readable storage medium. A processor of a computer device reads the computer instructions from the computer-readable storage medium and executes them, so that the computer device performs the steps of the information processing method provided by the embodiments of the present application.

In the embodiments of the present application, target samples are obtained; the target samples are classified with a preset classification model to obtain a first class probability distribution corresponding to each target sample; a difficulty coefficient of each target sample is calculated according to the first class probability distribution, and the target samples are filtered based on the difficulty coefficient to obtain filtered target samples; the filtered target samples are classified with a trained deep classification model to obtain a second class probability distribution corresponding to each filtered target sample; the difference between the second class probability distribution and the first class probability distribution is calculated, and the preset classification model is trained to convergence based on the difference to obtain a trained classification model, which is used to classify information to be processed. In this way, the difficulty coefficient of each target sample is calculated from the first class probability distribution that the preset classification model outputs for it, the target samples are filtered according to the difficulty coefficient, and the preset classification model is then trained on the filtered target samples together with the trained deep classification model. Compared with the prior art, selecting training samples according to the difficulty coefficient of each target sample reduces the number of training samples, which reduces the amount of computation and shortens the training time. This improves the efficiency of information processing during model training and thereby improves the efficiency of model training.

Description of the Drawings

To describe the technical solutions in the embodiments of the present application more clearly, the drawings used in the description of the embodiments are briefly introduced below. The drawings described below are only some embodiments of the present application; those skilled in the art can obtain other drawings from them without creative effort.

FIG. 1 is a schematic diagram of an implementation scenario of an information processing method provided by an embodiment of the present application;

FIG. 2 is a schematic flowchart of an information processing method provided by an embodiment of the present application;

FIG. 3 is a schematic diagram of target sample filtering in an information processing method provided by an embodiment of the present application;

FIG. 4 is a schematic diagram of model training in an information processing method provided by an embodiment of the present application;

FIG. 5 is another schematic flowchart of an information processing method provided by an embodiment of the present application;

FIG. 6 is a schematic structural diagram of an information processing apparatus provided by an embodiment of the present application;

FIG. 7 is a schematic structural diagram of a computer device provided by an embodiment of the present application.

Detailed Description

The technical solutions in the embodiments of the present application are described clearly and completely below with reference to the drawings in the embodiments. The described embodiments are only some, not all, of the embodiments of the present application. All other embodiments that those skilled in the art obtain from the embodiments in the present application without creative effort fall within the protection scope of the present application.

Embodiments of the present application provide an information processing method, an apparatus, a computer-readable storage medium, and a computer device. The information processing apparatus may be integrated in a computer device, and the computer device may be a server, a terminal, or another device.

The server may be an independent physical server, a server cluster or distributed system composed of multiple physical servers, or a cloud server that provides basic cloud computing services such as cloud services, cloud databases, cloud computing, cloud functions, cloud storage, network services, cloud communication, middleware services, domain name services, security services, content delivery network (CDN) services, and big data and artificial intelligence platforms. The terminal may be, but is not limited to, a device capable of information processing such as a smartphone, a tablet computer, a notebook computer, a desktop computer, a smart speaker, or a smart watch. The terminal and the server may be connected directly or indirectly through wired or wireless communication, which is not limited in this application.

To better explain the embodiments of the present application, the following terms are defined for reference:

Knowledge distillation (KD): a method for model compression that uses the output of a teacher model to guide the learning of a smaller student model, so as to transfer knowledge.

Teacher model: the model from which knowledge is distilled. It is generally more complex, with larger model capacity and better performance.

Student model: the model that results from compression. It generally has smaller capacity and a simpler structure.

Relative entropy: also known as Kullback-Leibler divergence or information divergence, an asymmetric measure of the difference between two probability distributions. In information theory, relative entropy equals the cross-entropy of the two distributions minus the information entropy (Shannon entropy) of the first. Relative entropy serves as the loss function of some optimization algorithms, such as the expectation-maximization (EM) algorithm. In that case one of the two distributions is the true distribution and the other is the theoretical (fitted) distribution, and the relative entropy represents the information lost when the theoretical distribution is used to fit the true distribution.
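For reference, the definition above can be written out for two discrete distributions $P$ (the true distribution) and $Q$ (the fitted distribution) over the same set of classes. This is the standard textbook form rather than a formula quoted from the application:

$$
D_{\mathrm{KL}}(P \parallel Q) = \sum_{i} P(i)\,\log\frac{P(i)}{Q(i)} = H(P, Q) - H(P),
$$

where $H(P, Q) = -\sum_{i} P(i)\log Q(i)$ is the cross-entropy and $H(P) = -\sum_{i} P(i)\log P(i)$ is the Shannon entropy of $P$. The quantity is non-negative, equals zero only when $P = Q$, and in general $D_{\mathrm{KL}}(P \parallel Q) \neq D_{\mathrm{KL}}(Q \parallel P)$, which is why it is called an asymmetric measure.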

Referring to FIG. 1, and taking the case where the information processing apparatus is integrated in a computer device as an example, FIG. 1 is a schematic diagram of an implementation scenario of the information processing method provided by an embodiment of the present application. The computer device may obtain target samples; classify the target samples with a preset classification model to obtain a first class probability distribution corresponding to each target sample; calculate a difficulty coefficient of each target sample according to the first class probability distribution, and filter the target samples based on the difficulty coefficient to obtain filtered target samples; classify the filtered target samples with a trained deep classification model to obtain a second class probability distribution corresponding to each filtered target sample; and calculate the difference between the second class probability distribution and the first class probability distribution, and train the preset classification model to convergence based on the difference to obtain a trained classification model, which is used to classify information to be processed. Here, information processing can be understood as the processing applied to training samples and other information while building a well-performing model, with the aim of improving the efficiency of model training.

It should be noted that the schematic diagram of the implementation scenario shown in FIG. 1 is only an example. The implementation scenario described in the embodiments of the present application is intended to explain the technical solutions of the embodiments more clearly and does not limit them. Those of ordinary skill in the art will recognize that, as information processing evolves and new business scenarios emerge, the technical solutions provided in this application apply equally to similar technical problems.

Detailed descriptions follow. It should be noted that the order in which the following embodiments are described does not imply any order of preference among them.

This embodiment is described from the perspective of the information processing apparatus. The apparatus may be integrated in a computer device, and the computer device may be a server, which is not limited in this application.

Referring to FIG. 2, FIG. 2 is a schematic flowchart of the information processing method provided by an embodiment of the present application. The information processing method includes:

In step 101, target samples are obtained.

Natural language processing is an important direction in computer science and artificial intelligence. It studies the theories and methods that enable effective communication between humans and computers in natural language. As computer technology and artificial intelligence develop, the requirements placed on natural language processing keep rising, and so do the performance requirements placed on models. Trained models are generally large. Such models take a long time to run and require a large amount of computation, which makes them costly to deploy and prevents them from being widely used on all kinds of devices, for example devices with limited resources.

To obtain a model that performs well and is small, model compression can be applied to a trained model that performs well but is large. The resulting smaller model can then be used more widely on devices with limited memory and other resources, such as mobile phones, robots, and other mobile devices.

The model can be trained by knowledge distillation (KD). In the prior art, a large, well-performing teacher model is usually trained on the training set, and the training set is then augmented, for example to about 10 times its original size, so that knowledge distillation can be performed on the augmented training set. However, this approach trains the model on a large augmented training set, so training is slow. Moreover, once the student model has gradually learned the characteristics of some input samples, it still has to learn those samples repeatedly, and the computationally expensive teacher model has to be run on them repeatedly. This makes training redundant, adds unnecessary computation to model compression, and raises the cost of compressing and deploying the model. Existing training methods therefore process information inefficiently during model training, which makes model training inefficient.

To solve the above problems, the present application provides an information processing method that takes into account how the performance of the student model changes during knowledge distillation. A sample difficulty metric is determined and used to select the samples that are hard for the student model to predict. The metric measures how difficult it is for the student model to predict a given sample, that is, how uncertain the student model is about the result it outputs for that sample. The teacher model can then be compressed based on these selectively filtered samples to obtain the trained student model. This prevents the student model from repeatedly learning samples it has already mastered and reduces unnecessary computation in model compression, which improves the efficiency of information processing during model training and thereby the efficiency of model training. The information processing method provided by the present application is described in detail below.

First, target samples can be obtained, where the target samples may be at least one training sample used for model training.

In one embodiment, the target samples can be obtained by augmenting an initial training data set, so as to improve the performance of the student model obtained by knowledge distillation. Specifically, for any word in each sample of the obtained initial training data set, synonyms can be substituted using either contextual word vectors from a pre-trained model or static word vectors such as GloVe, so that each sample yields D augmented samples. The augmented samples and the samples of the initial training data set together form the augmented data set, that is, the multiple target samples. D may be any value greater than 1 and can be set according to the needs of the specific service, for example to a constant such as 10, 15, or 20.

Specifically, an initial sample data set that includes at least one initial sample can be obtained; word segmentation is performed on the initial sample to obtain an initial sample word set; feature extraction is performed on the initial sample word set to obtain an initial sample word vector set; the synonyms of each word in the initial sample word vector set are computed, and the initial sample is augmented according to the synonyms to obtain the target samples.

其中,可以通过文本预处理对获取到的初始样本进行分词处理,其中,不同语种或者不同领域的初始样本可以有不同的分词处理方法,例如,对于英文,可以通过单词之间的空格进行分词,对于中文,可以利用分词工具进行处理,对于专业领域的初始样本,可以按照不同专业领域的样本特点设计分词算法,从而实现分词处理等等,得到初始样本进行分词处理后的初始样本词集合。例如,对于初始样本“我吃了三明治”,可以通过分词处理得到“我,吃,了,三明治”,等等。以此对每一初始样本进行分词处理,可以得到初始样本词集合,进而可以通过近义词预测模型对该初始样本词集合进行特征提取,得到初始样本词向量集合,计算该初始样本词向量中每一词的近义词,根据该近义词对该初始样本进行数据扩充,得到目标样本。Among them, word segmentation processing can be performed on the obtained initial samples through text preprocessing, wherein initial samples in different languages or different fields can have different word segmentation processing methods. For example, for English, word segmentation can be performed by spaces between words, For Chinese, word segmentation tools can be used for processing. For initial samples in professional fields, word segmentation algorithms can be designed according to the characteristics of samples in different professional fields, so as to realize word segmentation processing, etc., and obtain the initial sample word set after word segmentation processing of initial samples. For example, for the initial sample "I ate a sandwich", the word segmentation can be used to get "I, eat, have, sandwich", and so on. In this way, word segmentation is performed on each initial sample, and an initial sample word set can be obtained, and then feature extraction can be performed on the initial sample word set through a synonym prediction model to obtain an initial sample word vector set, and each word vector in the initial sample word vector can be calculated. The synonym of the word, and the initial sample is expanded according to the synonym to obtain the target sample.

Part-of-speech analysis may be performed on the words in the initial sample word set so that words that do not need synonym expansion are filtered out. Such words may be prepositions, articles, and words of similar parts of speech. For example, in the word set "你, 午饭, 吃, 的, 什么" ("you", "lunch", "eat", particle, "what"), the words "你", "的" and "什么" are treated as words of this kind. Replacing them does not increase the diversity of the data and may even destroy the original semantics of the sample, so they may be filtered out. The synonyms of each word remaining in the filtered initial sample word set are then computed to augment the initial sample and obtain the target samples.
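The segmentation, filtering and synonym replacement described above can be sketched as follows. This is only a minimal illustration under stated assumptions: `SKIP_WORDS` and `SYNONYMS` are hypothetical hand-written tables standing in for the part-of-speech analysis and the synonym prediction model, and whitespace segmentation is used because the text suggests it for English.

```python
# Hypothetical toy resources (assumptions, not part of the described method):
# a real system would use a part-of-speech tagger and a synonym prediction model.
SKIP_WORDS = {"i", "a", "the", "of", "you", "what"}
SYNONYMS = {"ate": ["consumed", "devoured"], "sandwich": ["sub"]}


def segment(sample):
    """Whitespace segmentation, as suggested in the text for English samples."""
    return sample.lower().split()


def augment(sample):
    """Return new samples, each with exactly one word replaced by a synonym."""
    words = segment(sample)
    augmented = []
    for i, word in enumerate(words):
        if word in SKIP_WORDS:
            # Filtered words are never replaced.
            continue
        for synonym in SYNONYMS.get(word, []):
            augmented.append(" ".join(words[:i] + [synonym] + words[i + 1:]))
    return augmented


target_samples = augment("I ate a sandwich")
```

Replacing one word at a time keeps each augmented sample close to the original; other replacement policies would fit the description equally well.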

Training sample data such as the initial samples may be acquired from a memory connected to the information processing apparatus or from another data storage terminal. It may also be acquired from the memory of a physical terminal or from a virtual storage space such as a data set or a corpus. In some embodiments, the training sample data may be acquired from a single storage location or from multiple storage locations; for example, the training sample data may be stored on a blockchain, and the information processing apparatus acquires it from the blockchain. The information processing apparatus may acquire the training sample data in one batch within a time period in response to a training sample data acquisition instruction, or may acquire it continuously according to some data acquisition logic.

In step 102, a preset classification model is used to classify the target samples, obtaining a first category probability distribution corresponding to each target sample.

The preset classification model may be the model to be trained, with a relatively simple structure; for example, it may be the student model in a knowledge distillation architecture. To screen the target samples in a targeted way and thereby improve the efficiency of model compression training, the target samples may first be input into the preset classification model. The output of the preset classification model is used to determine how difficult each target sample is for the student model to predict, and the target samples are screened according to that difficulty. Specifically, the preset classification model may classify the target samples to obtain the first category probability distribution corresponding to each target sample. Optionally, the preset classification model may extract features from the target sample, and a classifier may then classify the extracted features to obtain the first category probability distribution; the classifier may be a multilayer perceptron (MLP).

The first category probability distribution may be the probability distribution formed by the probabilities, as predicted by the preset classification model, that a given target sample belongs to each category. For example, suppose the preset classification model predicts whether the sentiment polarity of a target sample is positive or negative, and it predicts a probability of 0.7 for positive and 0.3 for negative; the first category probability distribution may then be (positive 0.7, negative 0.3). As another example, suppose the preset classification model predicts which category of news a target sample belongs to, and it predicts a probability of 0.3 for category A news, 0.6 for category B news, and 0.1 for category C news; the first category probability distribution may then be (category A news 0.3, category B news 0.6, category C news 0.1). As another example, suppose the preset classification model predicts whether target sample 1 and target sample 2 have the same semantics, and it predicts a probability of 0.9 that they are the same and 0.1 that they are different; the first category probability distribution may then be (same 0.9, different 0.1). As a further example, suppose the preset classification model predicts whether target sample A entails target sample B, and it predicts a probability of 0.2 that A entails B and 0.8 that A does not entail B; the first category probability distribution may then be (entailment 0.2, no entailment 0.8), and so on.
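As a small illustration of how a classifier output can become such a category probability distribution, the sketch below normalises raw scores with a softmax. The use of softmax, the function names and the example scores are assumptions made for illustration; the text only states that a classifier such as an MLP produces the distribution.

```python
import math


def softmax(logits):
    """Turn raw classifier scores into probabilities that sum to 1."""
    highest = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(value - highest) for value in logits]
    total = sum(exps)
    return [value / total for value in exps]


def category_distribution(logits, labels):
    """Pair each category label with its predicted probability."""
    return dict(zip(labels, softmax(logits)))


# Hypothetical scores for the three news categories used in the text.
distribution = category_distribution([0.2, 0.9, -0.9], ["A", "B", "C"])
```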

Optionally, before the preset classification model is used to classify the target samples, the preset classification model may be initialised. Specifically, the preset classification model and a trained deep classification model may be obtained. The trained deep classification model is an already trained, high-performance model whose structure is more complex and whose capacity is larger than that of the preset classification model; for example, it may be the teacher model in a knowledge distillation architecture. When the preset classification model and the trained deep classification model share the same model architecture, training can be accelerated as follows: obtain the first number of network depth levels of the preset classification model and the second number of network depth levels of the trained deep classification model, where the first number is smaller than the second, and use the model parameters of the leading levels of the trained deep classification model, as many levels as the first number, as the initial model parameters of the preset classification model. For example, suppose the network depth of the preset classification model is $L_s$ (the first number of network depth levels is $L_s$), the network depth of the trained deep classification model is $L_t$ (the second number of network depth levels is $L_t$), and $L_s \ll L_t$; the parameters of the first $L_s$ layers of the trained deep classification model may then be used as the parameters of the preset classification model.

Optionally, when the model architecture of the preset classification model differs from that of the trained deep classification model, the preset classification model may be initialised randomly.
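The two initialisation cases can be sketched as below. This is an illustration only: layers are represented as plain lists of floats, and the function name, the `layer_size` parameter and the uniform range used for random initialisation are all assumptions not taken from the text.

```python
import copy
import random


def init_student(teacher_layers, num_student_layers, same_architecture,
                 layer_size=4, seed=0):
    """Initialise the student (preset classification model) layer parameters.

    teacher_layers: per-layer parameter lists of the trained deep model.
    """
    if same_architecture:
        if num_student_layers >= len(teacher_layers):
            raise ValueError("the student must be shallower than the teacher")
        # Copy the first L_s teacher layers so later updates to the student
        # do not modify the teacher.
        return copy.deepcopy(teacher_layers[:num_student_layers])
    # Different architectures: fall back to random initialisation
    # (the range below is an arbitrary illustrative choice).
    rng = random.Random(seed)
    return [[rng.uniform(-0.1, 0.1) for _ in range(layer_size)]
            for _ in range(num_student_layers)]


teacher = [[float(i)] * 4 for i in range(12)]      # hypothetical 12-layer teacher
student = init_student(teacher, 3, same_architecture=True)
```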

In step 103, the difficulty coefficient of each target sample is calculated from the first category probability distribution, and the target samples are screened based on the difficulty coefficient to obtain the screened target samples.

The difficulty coefficient may be an index that measures the difficulty of a sample: it characterises how uncertain the preset classification model is about the probability it outputs for each category of the target sample. The more uncertain the output of the preset classification model for a target sample, the more difficult that target sample is and the larger its difficulty coefficient. The difficulty coefficient may be a score, a ratio, or a parameter in some other form; no limitation is imposed here.

Referring to FIG. 3, which is a schematic diagram of target sample screening in an information processing method provided by an embodiment of the present application: after the target samples in the target sample data set are input into the preset classification model to obtain the first category probability distribution, the difficulty coefficient of each target sample may be calculated from the first category probability distribution, and the target samples may be screened based on the difficulty coefficient to obtain the screened target samples.

In one embodiment, a classification margin may be calculated for each target sample from the first category probability distribution, and the difficulty coefficient of the target sample may be calculated from that margin. Specifically, the category probability of each category is obtained for each target sample from the first category probability distribution; the category probabilities of each target sample are sorted in descending order to obtain a second sorting result; the two top-ranked category probabilities of each target sample are determined from the second sorting result; the classification margin of each target sample is determined from the difference between these two probabilities; and the difficulty coefficient of each target sample is determined based on the classification margin. The difficulty coefficient may equal the classification margin or may be derived from it by a transformation such as weighting; no limitation is imposed here. For ease of explanation, take the case where the difficulty coefficient equals the classification margin. Denoting the difficulty coefficient, and hence the classification margin, by $u_x$, it may be calculated as

$$u_x = P(y_1^* \mid x) - P(y_2^* \mid x)$$

where $x$ may be any target sample, $y_1^*$ is the category that the preset classification model considers target sample $x$ most likely to belong to, $P(y_1^* \mid x)$ is the probability of that category, $y_2^*$ is the category that the preset classification model considers target sample $x$ second most likely to belong to, and $P(y_2^* \mid x)$ is the probability of that category. The smaller the probability difference $u_x$ between the most likely and the second most likely category, the more the preset classification model tends to give an ambiguous prediction, which means the target sample is more difficult for the preset classification model. The difficulty coefficient of the target sample can therefore be determined from the classification margin. For example, suppose the preset classification model predicts which category of news target sample $x$ belongs to, and it predicts a probability of 0.3 for category A news, 0.6 for category B news, and 0.1 for category C news. The first category probability distribution is then (category A news 0.3, category B news 0.6, category C news 0.1). Sorting the category probabilities in descending order gives the second sorting result 0.6, 0.3, 0.1. From this result, the category that target sample $x$ most likely belongs to is category B news, with $P(B \mid x) = 0.6$, and the second most likely category is category A news, with $P(A \mid x) = 0.3$. The classification margin is therefore $u_x = 0.6 - 0.3 = 0.3$, that is, the difficulty coefficient is 0.3.
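The margin computation in this embodiment amounts to a sort followed by a subtraction. A minimal sketch, assuming the distribution is given as a plain list of probabilities with at least two categories (the function name is illustrative):

```python
def classification_margin(probabilities):
    """Difference between the two largest category probabilities."""
    if len(probabilities) < 2:
        raise ValueError("at least two categories are required")
    top_two = sorted(probabilities, reverse=True)[:2]
    return top_two[0] - top_two[1]


# The news example from the text: (A 0.3, B 0.6, C 0.1).
u_x = classification_margin([0.3, 0.6, 0.1])
```

As the text notes, a smaller margin indicates a more ambiguous prediction, so any screening built on this value has to account for that direction.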

In one embodiment, a confusion score may be calculated for each target sample from the first category probability distribution, and the difficulty coefficient of the target sample may be calculated from that confusion score. Specifically, the category probability of each category is obtained for each target sample from the first category probability distribution; the category probabilities of each target sample are sorted in descending order to obtain a third sorting result; the top-ranked category probability of each target sample is determined from the third sorting result; the confusion score of each target sample is calculated from that probability; and the difficulty coefficient of each target sample is determined based on the confusion score. The difficulty coefficient may equal the confusion score or may be derived from it by a transformation such as weighting; no limitation is imposed here. For ease of explanation, take the case where the difficulty coefficient equals the confusion score. The difficulty coefficient $u_x$ may then be calculated as

$$u_x = 1 - P(y^* \mid x), \qquad y^* = \arg\max_{y} P(y \mid x)$$

where $\arg\max_{y} P(y \mid x)$ denotes the value of $y$ at which $P(y \mid x)$ attains its maximum, so that $y^*$ is the category with the largest probability in the first category probability distribution of a target sample and $P(y^* \mid x)$ is that largest category probability; the confusion score is thus $1 - P(y^* \mid x)$. The larger the confusion score, the less confident the preset classification model is in its prediction of the category of the target sample, which indicates that the target sample is more difficult for the preset classification model.

For example, suppose the preset classification model predicts which category of news a target sample belongs to, and for target sample C it predicts a probability of 0.3 for category A news, 0.6 for category B news, and 0.1 for category C news. The first category probability distribution is then (category A news 0.3, category B news 0.6, category C news 0.1). Sorting the category probabilities of target sample C in descending order gives the third sorting result 0.6, 0.3, 0.1, so the top-ranked category probability of target sample C is 0.6. The confusion score computed from this probability is 1 - 0.6 = 0.4, that is, the difficulty coefficient is $u_x = 0.4$. Suppose further that for target sample D the preset classification model predicts a probability of 0.1 for category A news, 0.2 for category B news, and 0.7 for category C news. The first category probability distribution is then (category A news 0.1, category B news 0.2, category C news 0.7). Sorting in descending order gives the third sorting result 0.7, 0.2, 0.1, so the top-ranked category probability of target sample D is 0.7, and its confusion score is 1 - 0.7 = 0.3, that is, the difficulty coefficient is $u_x = 0.3$. Comparing the two, target sample C has the larger confusion score and therefore the larger difficulty coefficient, which indicates that target sample C is more difficult for the preset classification model.
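The comparison of target samples C and D can be reproduced with a few lines. This is a minimal sketch; the function name is illustrative and the distributions are the ones given in the example above.

```python
def confusion_score(probabilities):
    """One minus the largest category probability."""
    return 1.0 - max(probabilities)


score_c = confusion_score([0.3, 0.6, 0.1])  # target sample C
score_d = confusion_score([0.1, 0.2, 0.7])  # target sample D
harder = "C" if score_c > score_d else "D"
```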

In one embodiment, an entropy value may be calculated for each target sample from the first category probability distribution, and the difficulty coefficient of the target sample may be calculated from that entropy value. Specifically, the entropy of the target sample is calculated from the first category probability distribution, and the difficulty coefficient of each target sample is determined based on the entropy. Entropy is the expected amount of information and represents the degree of uncertainty. The difficulty coefficient of each target sample may equal its entropy or may be derived from it by a transformation such as weighting; no limitation is imposed here. For ease of explanation, take the case where the difficulty coefficient equals the entropy. The difficulty coefficient $u_x$ may then be calculated as

$$u_x = -\sum_{y} P(y \mid x) \log P(y \mid x)$$

where $P(y \mid x)$ is the probability, predicted by the preset classification model, that target sample $x$ belongs to category $y$, $\log P(y \mid x)$ is the logarithm of $P(y \mid x)$, and $\sum$ denotes summation over all categories $y$. The entropy reflects how uncertain the preset classification model is when predicting the target sample: the larger the entropy, the higher the uncertainty, and the more difficult the target sample is for the current preset classification model. For example, if the entropy of target sample 1 is 5 (difficulty coefficient 5) and the entropy of target sample 2 is 7 (difficulty coefficient 7), the difficulty coefficient of target sample 2 is larger than that of target sample 1, so target sample 2 is more difficult for the preset classification model than target sample 1.
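A short sketch of the entropy-based difficulty coefficient follows. The natural logarithm is an assumption (the text does not specify the base), and zero probabilities are skipped under the usual convention that 0 · log 0 = 0.

```python
import math


def entropy(probabilities):
    """Shannon entropy of a discrete distribution, natural logarithm assumed."""
    return -sum(p * math.log(p) for p in probabilities if p > 0.0)


# The two news distributions used in the earlier examples.
entropy_c = entropy([0.3, 0.6, 0.1])
entropy_d = entropy([0.1, 0.2, 0.7])
```

A uniform distribution gives the largest entropy for a fixed number of categories, and a one-hot distribution gives zero, which matches the reading of entropy as uncertainty.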

Because the trained deep classification model has a relatively complex structure and many parameters, its execution time and computational cost are far greater than those of the preset classification model. To improve the efficiency of model training, the target samples may therefore be screened selectively for knowledge distillation: according to the difficulty coefficients of the target samples, the trained deep classification model is run only on the more difficult target samples to obtain the corresponding outputs, and these outputs are then used to guide the training of the preset classification model. At the same time, because difficult samples generalise better, the preset classification model can achieve good performance by learning from the difficult samples obtained through screening. In one embodiment, the step of screening the target samples based on the difficulty coefficient to obtain the screened target samples may include:

sorting the target samples in descending order of their difficulty coefficients to obtain a first sorting result;

selecting, according to the first sorting result, the target samples that satisfy a preset condition to obtain the screened target samples.

That is, the target samples may be sorted in descending order of their difficulty coefficients to obtain the first sorting result, and the target samples satisfying the preset condition are selected from the first sorting result to obtain the screened target samples. The screened target samples may be determined in several ways. For example, when the target samples are sorted in descending order of difficulty coefficient, the preset condition may be that a sample ranks ahead of a preset rank in the first sorting result, so the target samples satisfying the preset condition are those ranked ahead of the preset rank. The preset rank may be determined according to actual training needs. For example, when training requires 50,000 samples, the 50,000 top-ranked target samples may be taken from the first sorting result. As another example, suppose the total number of target samples in the target sample training set is N; the selected samples may be those ranked within the first pN positions of the first sorting result, where p is a value between 0 and 1 that may be set according to the actual situation, for example a constant between 0.1 and 0.5. If N is 10,000 and p is 0.2, then pN = 10,000 × 0.2 = 2,000, so the preset rank is 2,000 and the target samples satisfying the preset condition are those ranked within the top 2,000.

The samples may also be sorted in ascending order. When the target samples are sorted in ascending order of difficulty coefficient, the preset condition may be that a sample's rank in the first sorting result is lower than the preset rank; that is, the target samples satisfying the preset condition are those ranked lower than the preset rank.

In one embodiment, the target samples satisfying the preset condition may be those whose difficulty coefficient is greater than a preset threshold. The preset threshold is a critical value: when the difficulty coefficient of a target sample exceeds it, that target sample is taken as a screened target sample and used in the subsequent training of the preset classification model. The specific value of the preset threshold may be determined according to the actual application; no limitation is imposed here.
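The rank-based and threshold-based screening rules described above can be sketched as follows. Function names and the example values are illustrative, and truncating pN to an integer is an assumption for the case where pN is not a whole number.

```python
def select_top_fraction(difficulties, p):
    """Indices of the p*N samples with the largest difficulty coefficients."""
    k = int(p * len(difficulties))
    # Descending sort of sample indices by difficulty (the first sorting result).
    order = sorted(range(len(difficulties)),
                   key=lambda i: difficulties[i], reverse=True)
    return order[:k]


def select_above_threshold(difficulties, threshold):
    """Indices of the samples whose difficulty coefficient exceeds the threshold."""
    return [i for i, d in enumerate(difficulties) if d > threshold]


difficulties = [0.4, 0.3, 0.9, 0.1]          # hypothetical difficulty coefficients
by_rank = select_top_fraction(difficulties, p=0.5)
by_threshold = select_above_threshold(difficulties, threshold=0.35)
```

The rank-based rule fixes how many samples reach the trained deep classification model, while the threshold rule lets that number vary with the data.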

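In step 104, the trained deep classification model is used to classify the screened target samples, obtaining a second category probability distribution corresponding to the screened target samples.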

The network depth of the trained deep classification model may be greater than that of the preset classification model.

Specifically, the trained deep classification model may extract features from the screened target samples, and the extracted features may then be classified to obtain the second category probability distribution corresponding to the screened target samples.

The second category probability distribution may be the probability distribution formed by the probabilities, as predicted by the trained deep classification model, that a given target sample belongs to each category. For example, suppose the trained deep classification model predicts whether the sentiment polarity of a target sample is positive or negative, and it predicts a probability of 0.7 for positive and 0.3 for negative; the second category probability distribution may then be (positive 0.7, negative 0.3). As another example, suppose the trained deep classification model predicts which category of news a target sample belongs to, and it predicts a probability of 0.3 for category A news, 0.6 for category B news, and 0.1 for category C news; the second category probability distribution may then be (category A news 0.3, category B news 0.6, category C news 0.1). As another example, suppose the trained deep classification model predicts whether target sample 1 and target sample 2 have the same semantics, and it predicts a probability of 0.9 that they are the same and 0.1 that they are different; the second category probability distribution may then be (same 0.9, different 0.1). As a further example, suppose the trained deep classification model predicts whether target sample A entails target sample B, and it predicts a probability of 0.2 that A entails B and 0.8 that A does not entail B; the second category probability distribution may then be (entailment 0.2, no entailment 0.8), and so on.

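In step 105, the difference between the second category probability distribution and the first category probability distribution is calculated, and the preset classification model is trained to convergence based on the difference, obtaining the trained classification model.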

Specifically, referring to FIG. 4, which is a schematic diagram of model training in an information processing method provided by an embodiment of the present application: the difference between the second category probability distribution and the first category probability distribution may be calculated with a loss function, and the model parameters of the preset classification model are updated according to the difference. While the preset classification model does not satisfy the convergence condition, the target samples continue to be screened dynamically; the screened target samples are input into the trained deep classification model, the difference is calculated from the outputs, and the model parameters of the preset classification model are trained iteratively according to the difference. When the preset classification model satisfies the convergence condition, the trained classification model is obtained.
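The iterative procedure of steps 102 to 105 can be sketched end to end on a toy problem. Everything concrete here is an assumption made for illustration: both models are linear softmax classifiers, the difficulty coefficient is the confusion score, the loss is the relative entropy D(teacher ‖ student), the student starts from zeros, and a fixed number of steps stands in for the convergence condition.

```python
import math


def softmax(logits):
    highest = max(logits)
    exps = [math.exp(v - highest) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(weights, x):
    """Linear classifier: one weight row per category, followed by softmax."""
    return softmax([sum(w * v for w, v in zip(row, x)) for row in weights])


def kl(p, q, eps=1e-12):
    """Relative entropy D(p || q) between two discrete distributions."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def mean_kl(teacher, student, samples):
    return sum(kl(predict(teacher, x), predict(student, x))
               for x in samples) / len(samples)


def distill(samples, teacher, student, p=0.5, lr=0.5, steps=200):
    k = max(1, int(p * len(samples)))
    for _ in range(steps):
        # Steps 102-103: student predictions, confusion score, keep the k hardest.
        preds = [predict(student, x) for x in samples]
        hard = sorted(range(len(samples)),
                      key=lambda i: 1.0 - max(preds[i]), reverse=True)[:k]
        # Steps 104-105: query the teacher only on the hard samples, then take
        # one gradient step on the mean D(teacher || student) over them.
        for i in hard:
            x, target = samples[i], predict(teacher, samples[i])
            for c, row in enumerate(student):
                grad = preds[i][c] - target[c]  # gradient w.r.t. the logit of c
                for j in range(len(row)):
                    row[j] -= lr * grad * x[j] / k
    return student


# Hypothetical toy data: 2-D inputs, 3 categories.
samples = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0],
           [-1.0, 0.5], [0.5, -1.0], [-1.0, -1.0]]
teacher = [[2.0, -1.0], [-1.0, 2.0], [0.0, 0.0]]
student = [[0.0, 0.0] for _ in range(3)]
loss_before = mean_kl(teacher, student, samples)
distill(samples, teacher, student)
loss_after = mean_kl(teacher, student, samples)
```

Because the hard subset is recomputed from the current student in every step, the teacher is evaluated on only a fraction p of the samples per iteration, which is the cost saving the screening is meant to provide.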

The post-training classification model can be used to classify information to be processed. For example, it can be used to predict whether the sentiment polarity of the information to be processed is positive or negative. For another example, it can be used to predict which category the information to be processed belongs to; the information to be processed can be a piece of news information such as a news text, and the post-training classification model can predict which category of news it belongs to, such as sports news, financial news, entertainment news, and so on. For another example, it can be used to judge whether the semantics of information to be processed 1 and information to be processed 2 are the same, for instance whether the semantics of text a are the same as those of text b. For yet another example, it can be used to predict whether the semantics of information to be processed c contain the semantics of information to be processed d, and for other natural language understanding and semantic analysis tasks.

In one embodiment, the relative entropy between the second category probability distribution and the first category probability distribution of the target sample can be calculated, and the difference between the second category probability distribution and the first category probability distribution can be determined according to the relative entropy. The relative entropy between the two distributions measures how much they differ. The first category probability distribution calculated by the preset classification model can be taken as the true distribution, and the second category probability distribution calculated by the post-training deep classification model can be taken as the theoretical (fitted) distribution; the relative entropy then represents the information loss incurred when the theoretical distribution is used to fit the true distribution, and the difference between the preset classification model and the post-training deep classification model can be obtained from it. The specific calculation formula of the relative entropy can be

$$D_{KL}(P \,\|\, Q) = \sum_{x \in X} P(x) \log \frac{P(x)}{Q(x)}$$

where X can be the screened target samples, $x \in X$ indicates that x can be a target sample in X, P(x) denotes the first category probability distribution, and Q(x) denotes the second category probability distribution. It should be noted that the relative entropy can be calculated in several different ways, that is, its specific calculation formula can take several forms, which are not limited here.
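As a worked illustration of the relative entropy, the sketch below evaluates it for one sample with two categories. It assumes the common form in which each term is P times the logarithm of P over Q, with P the first (preset model) distribution and Q the second (post-training deep model) distribution; the function name, the `eps` guard and the example numbers for P are illustrative assumptions.

```python
import math

def relative_entropy(p, q, eps=1e-12):
    """KL(P || Q) for two discrete distributions over the same categories."""
    if len(p) != len(q):
        raise ValueError("distributions must have the same number of categories")
    total = 0.0
    for p_i, q_i in zip(p, q):
        if p_i > 0.0:  # terms with P(x) = 0 contribute nothing
            # eps guards against division by zero when Q(x) = 0 (assumption)
            total += p_i * math.log(p_i / max(q_i, eps))
    return total

first_distribution = [0.5, 0.5]   # hypothetical output of the preset model
second_distribution = [0.2, 0.8]  # e.g. (entails 0.2, does not entail 0.8)
loss = relative_entropy(first_distribution, second_distribution)
```

The value is zero when the two distributions coincide and grows as they diverge, and it is not symmetric in P and Q, which is one reason several formula variants exist.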

It can be seen from the above that the embodiment of the present application obtains target samples; classifies the target samples with a preset classification model to obtain the first category probability distribution corresponding to each target sample; calculates the difficulty coefficient of each target sample according to the first category probability distribution, and screens the target samples based on the difficulty coefficient to obtain screened target samples; classifies the screened target samples with the post-training deep classification model to obtain the second category probability distribution corresponding to the screened target samples; and calculates the difference between the second category probability distribution and the first category probability distribution, and converges the preset classification model based on the difference to obtain a post-training classification model, which is used to classify the information to be processed. In this way, the difficulty coefficient of each target sample is calculated from the first category probability distribution that the preset classification model produces for that sample, the target samples are screened according to the difficulty coefficient, and the preset classification model is then trained based on the screened target samples and the post-training classification model. Compared with the prior art, screening the training samples in a targeted manner according to each target sample's difficulty coefficient reduces the number of training samples, which reduces the amount of computation during training and shortens the training time, thereby improving information processing efficiency during model training and, in turn, the efficiency of model training.

The method described in the above embodiments is further detailed below by way of example.

In this embodiment, the description takes the case where the information processing apparatus is integrated in a computer device as an example. The information processing method is described with the server as the executing entity, the preset classification model as the student model, and the post-training deep classification model as the teacher model.

To better describe the embodiments of the present application, please refer to FIG. 5, which is another schematic flowchart of the information processing method provided by an embodiment of the present application. The specific process is as follows:

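In step 201, the server obtains an initial sample data set, performs word segmentation on the initial samples to obtain an initial sample word set, performs feature extraction on the initial sample word set to obtain an initial sample word vector set, calculates the synonyms of each word in the initial sample word vectors, and expands the initial samples according to the synonyms to obtain the target samples.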

To enhance the performance of the student model obtained by knowledge distillation, the target samples can be obtained by performing data augmentation on the initial training data set. Specifically, the server can obtain an initial sample data set that includes at least one initial sample, perform word segmentation on the initial sample to obtain an initial sample word set, perform feature extraction on the initial sample word set to obtain an initial sample word vector set, calculate the synonyms of each word in the initial sample word vectors, and expand the initial sample according to the synonyms to obtain the target samples.

The server can segment the obtained initial samples through text preprocessing. Initial samples in different languages or from different fields can use different word segmentation methods. For example, English can be segmented at the spaces between words; Chinese can be processed with a word segmentation tool; and for initial samples from a specialized field, a segmentation algorithm can be designed around the characteristics of samples in that field. The result is the initial sample word set. For example, for the initial sample "我吃了三明治" ("I ate a sandwich"), word segmentation yields "我, 吃, 了, 三明治", and so on. Segmenting every initial sample in this way gives the initial sample word set. A synonym prediction model can then perform feature extraction on the initial sample word set to obtain the initial sample word vector set, the synonyms of each word in the initial sample word vectors are calculated, and the initial samples are expanded according to the synonyms to obtain the target samples.
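One way to picture the synonym calculation is a nearest-neighbour search over word vectors. The sketch below is an illustration only: it assumes cosine similarity as the closeness measure, which the text does not specify, and the three-dimensional vectors are made-up toy values rather than the output of any real synonym prediction model.

```python
import math

def cosine(u, v):
    """Cosine similarity of two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def nearest_synonym(word, vectors):
    """Return the vocabulary word whose vector is closest to that of `word`."""
    if word not in vectors:
        return None
    candidates = [w for w in vectors if w != word]
    if not candidates:
        return None
    return max(candidates, key=lambda w: cosine(vectors[word], vectors[w]))

# Toy word vectors (hypothetical values, for illustration only).
vectors = {
    "sandwich": [0.9, 0.1, 0.0],
    "burger": [0.8, 0.2, 0.1],
    "ate": [0.0, 0.9, 0.3],
    "consumed": [0.1, 0.8, 0.4],
}

# English can be segmented at spaces; Chinese would need a segmentation tool.
tokens = "I ate a sandwich".split()
synonyms = {t: nearest_synonym(t, vectors) for t in tokens if t in vectors}
```

Words missing from the vector table ("I" and "a" here) are simply skipped, so they are never replaced.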

The server can perform part-of-speech analysis on the words in the initial sample word set and filter out the words that do not need synonym expansion, such as prepositions, articles and similar words. For example, in the word set "你, 午饭, 吃, 的, 什么" ("what did you eat for lunch"), the words "你" (you), "的" (a particle) and "什么" (what) belong to such parts of speech. Replacing these words does not increase the diversity of the data and may even destroy the original semantics of the sample, so words with these parts of speech can be filtered out first. The synonyms of each word in the filtered initial sample word set are then calculated to expand the initial samples and obtain the target samples.
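The filtering and expansion described above can be sketched as follows. This is a minimal illustration under assumptions: the part-of-speech tags are written by hand rather than produced by a tagger, the tag names and the `SKIP_POS` set are hypothetical choices, and the synonym table is a toy dictionary.

```python
# Assumed set of tags whose words are left untouched (hypothetical choice).
SKIP_POS = {"PRON", "ADP", "DET", "PART"}

def augment(tokens, pos_tags, synonyms):
    """Return one new token list per replaceable word that has a synonym."""
    augmented = []
    for i, (tok, tag) in enumerate(zip(tokens, pos_tags)):
        if tag in SKIP_POS or tok not in synonyms:
            continue  # filtered out: replacing it would not add useful diversity
        augmented.append(tokens[:i] + [synonyms[tok]] + tokens[i + 1:])
    return augmented

tokens = ["I", "ate", "a", "sandwich"]
pos_tags = ["PRON", "VERB", "DET", "NOUN"]  # hand-written tags for the example
synonyms = {"ate": "consumed", "sandwich": "burger", "I": "me"}
new_samples = augment(tokens, pos_tags, synonyms)
```

Even though the toy table offers a replacement for "I", the pronoun is skipped because of its tag, so only the verb and the noun produce new samples.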

Training sample data such as the initial samples can be obtained from a memory connected to the information processing apparatus or from other data storage terminals. It can also be obtained from the memory of a physical terminal or from a virtual storage space such as a data set or corpus. In some embodiments, the training sample data can be obtained from one storage location or from multiple storage locations; for example, the training sample data can be stored on a blockchain, from which the information processing apparatus obtains it. The information processing apparatus can obtain the training sample data in a batch within a time period in response to a training sample data acquisition instruction, or can obtain it continuously according to some data acquisition logic.

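In step 202, the server obtains the preset classification model and the post-training deep classification model. When the model architecture of the preset classification model is the same as that of the post-training deep classification model, the server obtains the first number of network depth levels of the preset classification model and the second number of network depth levels of the post-training deep classification model, and determines the model parameters of the leading levels of the post-training deep classification model, as many levels as the first number of network depth levels, as the initialization model parameters of the preset classification model.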
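The initialization in step 202 can be pictured with models represented as lists of per-level parameter dictionaries. This is a sketch under that assumed representation; the function name, the 12-level and 4-level depths, and the parameter values are hypothetical.

```python
import copy

def init_student_from_teacher(teacher_layers, student_depth):
    """Copy the first `student_depth` levels of the teacher as the student's init."""
    if student_depth > len(teacher_layers):
        raise ValueError("student cannot be deeper than the teacher")
    # Deep copy so that later student updates do not modify the teacher.
    return copy.deepcopy(teacher_layers[:student_depth])

# Hypothetical teacher with 12 levels; each level holds toy parameters.
teacher_layers = [{"weight": [float(i)], "bias": [0.0]} for i in range(12)]
student_layers = init_student_from_teacher(teacher_layers, student_depth=4)
```

Copying rather than sharing the parameters keeps the teacher fixed while the student is trained.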