CN114332893A - Table structure identification method and device, computer equipment and storage medium - Google Patents

Table structure identification method and device, computer equipment and storage mediumDownload PDFInfo

- Publication number

- CN114332893A CN114332893ACN202111020622.7ACN202111020622ACN114332893ACN 114332893 ACN114332893 ACN 114332893ACN 202111020622 ACN202111020622 ACN 202111020622ACN 114332893 ACN114332893 ACN 114332893A

- Authority

- CN

- China

- Prior art keywords

- features

- text

- feature

- area

- image

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Granted

Links

Images

Landscapes

- Image Analysis (AREA)

Abstract

Description

Translated fromChinese技术领域technical field

本申请涉及人工智能技术领域,特别是涉及一种表格结构识别方法、装置、计算机设备和存储介质。The present application relates to the technical field of artificial intelligence, and in particular, to a table structure identification method, apparatus, computer equipment and storage medium.

背景技术Background technique

随着人工智能技术的发展,以及对于数据信息的提取、整理以及更新的效率和准确度要求日益提升,表格作为结构化数据的存储形式,具有规范性的特点,更便于用户对于表格内存储的数据进行查询、提取或更新录入。但目前通常采用将表格转换成PDF的格式后再进行发表,导致无法直接对表格内的数据进行提取或对表格进行更新,因此出现了针对PDF形式的表格的结构、内容识别技术。With the development of artificial intelligence technology and the increasing efficiency and accuracy requirements for the extraction, sorting and updating of data information, tables, as a storage form of structured data, have normative characteristics, which are more convenient for users to store data in tables. Data for query, extraction or update entry. However, at present, the table is usually converted into a PDF format and then published, which makes it impossible to directly extract the data in the table or update the table. Therefore, the structure and content recognition technology for tables in PDF format has emerged.

传统上的表格识别方法,多采用先针对PDF文件进行文本检测,得到图像中的文本区域,可包括图像中涉及的不同文本区域,然后利用图神经网络预测出每两个文本区域之间的关系,根据每两个文本区域间的关系确定相应文本区域是需要合并还是不需要合并,最后对预测出的邻接矩阵做后处理,重现图像中的表格结构,进而识别表格中的内容的方式。Traditional table recognition methods mostly use text detection on PDF files to obtain text areas in the image, which can include different text areas involved in the image, and then use a graph neural network to predict the relationship between each two text areas. , according to the relationship between each two text regions, determine whether the corresponding text regions need to be merged or not, and finally do post-processing on the predicted adjacency matrix to reproduce the table structure in the image, and then identify the method of the content in the table.

但传统的表格识别方法,无法直接解决表格中存在空白字段的场景,并且预测的邻接矩阵仅能代表文本区域是否合并,仅考虑了领域结点的特征,无法覆盖整体的待识别表格,还需要额外的文本检测网络去定位图像中的文本位置,再进一步组织成行列信息。因此传统的表格识别方法无法对待识别表格进行整体、全局的识别,还需额外设置相应的文本检测网络,容易出现识别内容失误的问题,导致表格识别效率仍然较为低下。However, the traditional table recognition method cannot directly solve the situation where there are blank fields in the table, and the predicted adjacency matrix can only represent whether the text areas are merged. It only considers the characteristics of the field nodes and cannot cover the overall table to be recognized. An additional text detection network is used to locate text positions in the image, which are further organized into row and column information. Therefore, the traditional table recognition method cannot perform overall and global recognition of the table to be recognized, and a corresponding text detection network needs to be additionally set up, which is prone to the problem of errors in the recognition content, resulting in a still low table recognition efficiency.

发明内容SUMMARY OF THE INVENTION

基于此,有必要针对上述技术问题,提供一种能够对PDF表格进行整体、全面的识别,以提高表格识别准确度和识别效率的表格结构识别方法、装置、计算机设备和存储介质。Based on this, it is necessary to provide a form structure identification method, device, computer equipment and storage medium that can perform overall and comprehensive identification of PDF forms to improve the accuracy and efficiency of form identification.

一种表格结构识别方法,其特征在于,所述方法包括:A table structure identification method, characterized in that the method comprises:

获取目标表格图像区域,识别所述目标表格图像区域中的文本区域;Obtain the target form image area, and identify the text area in the target form image area;

确定各所述文本区域的图像特征和坐标特征,并分别将所述图像特征、坐标特征进行融合,得到与各所述文本区域对应的文本区域元素融合特征;Determine the image features and coordinate features of each of the text regions, and fuse the image features and coordinate features respectively to obtain text region element fusion features corresponding to each of the text regions;

根据所述文本区域元素融合特征,确定所述目标表格图像区域中各结点的邻接特征;According to the element fusion feature of the text area, determine the adjacency feature of each node in the target table image area;

将任意两个结点的邻接特征进行特征拼接,并对拼接得到的邻接矩阵进行分类预测,生成与该两个结点对应的文本区域的行列关系预测结果;The adjacency features of any two nodes are feature spliced, and the adjacency matrix obtained by the splicing is classified and predicted, and the row-column relationship prediction result of the text area corresponding to the two nodes is generated;

基于各所述文本区域的行列关系预测结果,确定与所述目标表格图像区域对应的表格结构。Based on the row-column relationship prediction result of each of the text regions, a table structure corresponding to the target table image region is determined.

在一个实施例中,所述基于所述局部特征和所述全局特征进行特征聚合,生成所述目标表格图像区域中各结点的邻接特征,包括:In one embodiment, the performing feature aggregation based on the local features and the global features to generate adjacency features of each node in the target table image region includes:

获取与门机制对应的各个门参数;Get each gate parameter corresponding to the gate mechanism;

基于预设激活函数、各所述门参数,对所述局部特征和所述全局特征进行特征聚合,得到与所述目标表格图像区域中各结点的邻接特征。Based on a preset activation function and each of the gate parameters, feature aggregation is performed on the local feature and the global feature to obtain adjacency features with each node in the target table image area.

一种表格结构识别装置,其特征在于,所述装置包括:A table structure identification device, characterized in that the device comprises:

文本区域识别模块,用于获取目标表格图像区域,识别所述目标表格图像区域中的文本区域;A text area identification module, used to obtain the target form image area, and identify the text area in the target form image area;

文本区域元素融合特征生成模块,用于确定各所述文本区域的图像特征和坐标特征,并分别将所述图像特征、坐标特征进行融合,得到与各所述文本区域对应的文本区域元素融合特征;The text region element fusion feature generation module is used to determine the image features and coordinate features of each of the text regions, and respectively fuse the image features and coordinate features to obtain the text region element fusion features corresponding to each of the text regions. ;

邻接特征生成模块,用于根据所述文本区域元素融合特征,确定所述目标表格图像区域中各结点的邻接特征;an adjacency feature generation module, configured to determine the adjacency feature of each node in the target table image area according to the element fusion feature of the text area;

行列关系预测结果生成模块,用于将任意两个结点的邻接特征进行特征拼接,并对拼接得到的邻接矩阵进行分类预测,生成与该两个结点对应的文本区域的行列关系预测结果;The row-column relationship prediction result generation module is used to perform feature splicing on the adjacency features of any two nodes, classify and predict the adjacency matrix obtained by the splicing, and generate the row-column relationship prediction result of the text area corresponding to the two nodes;

表格结构确定模块,用于基于各所述文本区域的行列关系预测结果,确定与所述目标表格图像区域对应的表格结构。A table structure determination module, configured to determine a table structure corresponding to the target table image region based on the row-column relationship prediction result of each of the text regions.

一种计算机设备,包括存储器和处理器,所述存储器存储有计算机程序,所述处理器执行所述计算机程序时实现以下步骤:A computer device includes a memory and a processor, the memory stores a computer program, and the processor implements the following steps when executing the computer program:

获取目标表格图像区域,识别所述目标表格图像区域中的文本区域;Obtain the target form image area, and identify the text area in the target form image area;

确定各所述文本区域的图像特征和坐标特征,并分别将所述图像特征、坐标特征进行融合,得到与各所述文本区域对应的文本区域元素融合特征;Determine the image features and coordinate features of each of the text regions, and fuse the image features and coordinate features respectively to obtain text region element fusion features corresponding to each of the text regions;

根据所述文本区域元素融合特征,确定所述目标表格图像区域中各结点的邻接特征;According to the element fusion feature of the text area, determine the adjacency feature of each node in the target table image area;

将任意两个结点的邻接特征进行特征拼接,并对拼接得到的邻接矩阵进行分类预测,生成与该两个结点对应的文本区域的行列关系预测结果;The adjacency features of any two nodes are feature spliced, and the adjacency matrix obtained by the splicing is classified and predicted, and the row-column relationship prediction result of the text area corresponding to the two nodes is generated;

基于各所述文本区域的行列关系预测结果,确定与所述目标表格图像区域对应的表格结构。Based on the row-column relationship prediction result of each of the text regions, a table structure corresponding to the target table image region is determined.

一种计算机可读存储介质,其上存储有计算机程序,所述计算机程序被处理器执行时实现以下步骤:A computer-readable storage medium on which a computer program is stored, and when the computer program is executed by a processor, the following steps are implemented:

获取目标表格图像区域,识别所述目标表格图像区域中的文本区域;Obtain the target form image area, and identify the text area in the target form image area;

确定各所述文本区域的图像特征和坐标特征,并分别将所述图像特征、坐标特征进行融合,得到与各所述文本区域对应的文本区域元素融合特征;Determine the image features and coordinate features of each of the text regions, and fuse the image features and coordinate features respectively to obtain text region element fusion features corresponding to each of the text regions;

根据所述文本区域元素融合特征,确定所述目标表格图像区域中各结点的邻接特征;According to the element fusion feature of the text area, determine the adjacency feature of each node in the target table image area;

将任意两个结点的邻接特征进行特征拼接,并对拼接得到的邻接矩阵进行分类预测,生成与该两个结点对应的文本区域的行列关系预测结果;The adjacency features of any two nodes are feature spliced, and the adjacency matrix obtained by the splicing is classified and predicted, and the row-column relationship prediction result of the text area corresponding to the two nodes is generated;

基于各所述文本区域的行列关系预测结果,确定与所述目标表格图像区域对应的表格结构。Based on the row-column relationship prediction result of each of the text regions, a table structure corresponding to the target table image region is determined.

上述表格结构识别方法、装置、计算机设备和存储介质中,通过获取目标表格图像区域,识别目标表格图像区域中的文本区,并确定各文本区域的图像特征和坐标特征,分别将图像特征、坐标特征进行融合,得到与各文本区域对应的文本区域元素融合特征,可通过对目标表格图像区域内的不同文本区域进行图像特征、坐标特征的融合,以达到对目标表格图像区域的整体识别,而不是针对单个文本区域的局部识别。进而根据文本区域元素融合特征,确定目标表格图像区域中各结点的邻接特征,并将任意两个结点的邻接特征进行特征拼接,通过对拼接得到的邻接矩阵进行分类预测,生成与该两个结点对应的文本区域的行列关系预测结果,进而基于各文本区域的行列关系预测结果,确定与目标表格图像区域对应的表格结构。实现了根据对各文本区域的行列关系的预测结果,即可确定得到相应的表格结构,而无需利用额外的文本检测网络进一步进行识别,可减少不必要繁琐操作,进而提高了表格识别准确度和识别效率。In the above-mentioned table structure identification method, device, computer equipment and storage medium, by acquiring the target table image area, identify the text area in the target table image area, and determine the image feature and coordinate feature of each text area, respectively The features are fused to obtain the text region element fusion features corresponding to each text region. The image features and coordinate features of different text regions in the target table image region can be fused to achieve the overall recognition of the target table image region. Not local recognition for a single text area. Then, according to the element fusion features of the text area, the adjacency features of each node in the target table image area are determined, and the adjacency features of any two nodes are feature spliced, and the adjacency matrix obtained by splicing is classified and predicted to generate a The row-column relationship prediction result of the text region corresponding to each node is further determined based on the row-column relationship prediction result of each text region, and the table structure corresponding to the target table image region is determined. It is realized that according to the prediction results of the row-column relationship of each text area, the corresponding table structure can be determined without using an additional text detection network for further identification, which can reduce unnecessary and cumbersome operations, thereby improving the accuracy of table recognition. identification efficiency.

附图说明Description of drawings

图1为一个实施例中表格结构识别方法的应用环境图;1 is an application environment diagram of a table structure identification method in one embodiment;

图2为一个实施例中表格结构识别方法的流程示意图;2 is a schematic flowchart of a table structure identification method in one embodiment;

图3为一个实施例中表格结构识别方法的目标表格图像区域示意图;3 is a schematic diagram of a target table image area of a table structure identification method in one embodiment;

图4为一个实施例中表格结构识别方法的文本区域检测结果示意图;4 is a schematic diagram of a text region detection result of a table structure identification method in one embodiment;

图5为一个实施例表格结构识别方法的行关系预测结果示意图;5 is a schematic diagram of a row relationship prediction result of a table structure identification method according to an embodiment;

图6为一个实施例中表格结构识别方法的列关系预测结果示意图;6 is a schematic diagram of a column relationship prediction result of a table structure identification method in one embodiment;

图7为一个实施例中得到与各文本区域对应的文本区域元素融合特征的流程示意图;FIG. 7 is a schematic flowchart of obtaining the text region element fusion feature corresponding to each text region in one embodiment;

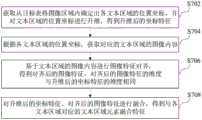

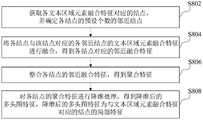

图8为一个实施例获取各文本区域元素融合特征对应的结点的局部特征的流程示意图;8 is a schematic flowchart of obtaining local features of nodes corresponding to fusion features of each text region element according to an embodiment;

图9为一个实施例中表格结构识别方法的整体流程示意图;9 is a schematic diagram of the overall flow of a table structure identification method in one embodiment;

图10为一个实施例中用于生成邻接特征的FLAG网络结构示意图;10 is a schematic diagram of a FLAG network structure for generating adjacent features in one embodiment;

图11为一个实施例中表格结构识别装置的结构框图;11 is a structural block diagram of an apparatus for identifying a table structure in one embodiment;

图12为一个实施例中计算机设备的内部结构图。Figure 12 is a diagram of the internal structure of a computer device in one embodiment.

具体实施方式Detailed ways

为了使本申请的目的、技术方案及优点更加清楚明白,以下结合附图及实施例,对本申请进行进一步详细说明。应当理解,此处描述的具体实施例仅仅用以解释本申请,并不用于限定本申请。In order to make the purpose, technical solutions and advantages of the present application more clearly understood, the present application will be described in further detail below with reference to the accompanying drawings and embodiments. It should be understood that the specific embodiments described herein are only used to explain the present application, but not to limit the present application.

本申请提供的表格结构识别方法,涉及了人工智能技术,其中,人工智能(Artificial Intelligence,AI)是利用数字计算机或者数字计算机控制的机器模拟、延伸和扩展人的智能,感知环境、获取知识并使用知识获得最佳结果的理论、方法、技术及应用系统。换句话说,人工智能是计算机科学的一个综合技术,它企图了解智能的实质,并生产出一种新的能以人类智能相似的方式做出反应的智能机器。人工智能也就是研究各种智能机器的设计原理与实现方法,使机器具有感知、推理与决策的功能。其中,人工智能技术是一门综合学科,涉及领域广泛,既有硬件层面的技术也有软件层面的技术。人工智能基础技术一般包括如传感器、专用人工智能芯片、云计算、分布式存储、大数据处理技术、操作/交互系统、机电一体化等技术。人工智能软件技术主要包括计算机视觉技术、语音处理技术、自然语言处理技术以及机器学习/深度学习等几大方向。The table structure identification method provided by this application involves artificial intelligence technology, wherein artificial intelligence (Artificial Intelligence, AI) is the use of digital computers or machines controlled by digital computers to simulate, extend and expand human intelligence, perceive the environment, acquire knowledge and Theories, methods, techniques and applied systems for using knowledge to achieve optimal results. In other words, artificial intelligence is a comprehensive technique of computer science that attempts to understand the essence of intelligence and produce a new kind of intelligent machine that can respond in a similar way to human intelligence. Artificial intelligence is to study the design principles and implementation methods of various intelligent machines, so that the machines have the functions of perception, reasoning and decision-making. Among them, artificial intelligence technology is a comprehensive discipline, involving a wide range of fields, both hardware-level technology and software-level technology. The basic technologies of artificial intelligence generally include technologies such as sensors, special artificial intelligence chips, cloud computing, distributed storage, big data processing technology, operation/interaction systems, and mechatronics. Artificial intelligence software technology mainly includes computer vision technology, speech processing technology, natural language processing technology, and machine learning/deep learning.

其中,人工智能软件技术中的机器学习(Machine Learning,ML)是一门多领域交叉学科,涉及概率论、统计学、逼近论、凸分析、算法复杂度理论等多门学科。专门研究计算机怎样模拟或实现人类的学习行为,以获取新的知识或技能,重新组织已有的知识结构使之不断改善自身的性能。机器学习是人工智能的核心,是使计算机具有智能的根本途径,其应用遍及人工智能的各个领域。机器学习和深度学习通常包括人工神经网络、置信网络、强化学习、迁移学习、归纳学习、式教学习等技术。Among them, machine learning (ML) in artificial intelligence software technology is a multi-domain interdisciplinary subject involving probability theory, statistics, approximation theory, convex analysis, algorithm complexity theory and other disciplines. It specializes in how computers simulate or realize human learning behaviors to acquire new knowledge or skills, and to reorganize existing knowledge structures to continuously improve their performance. Machine learning is the core of artificial intelligence and the fundamental way to make computers intelligent, and its applications are in all fields of artificial intelligence. Machine learning and deep learning usually include artificial neural networks, belief networks, reinforcement learning, transfer learning, inductive learning, teaching learning and other technologies.

而随着人工智能技术研究和进步,人工智能技术在多个领域展开研究和应用,例如常见的智能家居、智能穿戴设备、虚拟助理、智能音箱、智能营销、无人驾驶、自动驾驶、无人机、机器人、智能医疗、智能客服以及智能课堂等,相信随着技术的发展,人工智能技术将在更多的领域得到应用,并发挥越来越重要的价值。With the research and progress of artificial intelligence technology, artificial intelligence technology has been researched and applied in many fields, such as common smart homes, smart wearable devices, virtual assistants, smart speakers, smart marketing, unmanned driving, autonomous driving, unmanned driving It is believed that with the development of technology, artificial intelligence technology will be applied in more fields and play an increasingly important value.

本申请实施例提供的表格结构识别方法,可以应用于如图1所示的应用环境中。其中,终端102通过网络与服务器104进行通信。其中,服务器104通过获取目标表格图像区域,识别目标表格图像区域中的文本区域,并确定各文本区域的图像特征和坐标特征,分别将图像特征、坐标特征进行融合,得到与各文本区域对应的文本区域元素融合特征。进而服务器104根据文本区域元素融合特征,确定目标表格图像区域中各结点的邻接特征,通过将任意两个结点的邻接特征进行特征拼接,并对拼接得到的邻接矩阵进行分类预测,生成与该两个结点对应的文本区域的行列关系预测结果。进而基于各文本区域的行列关系预测结果,确定与目标表格图像区域对应的表格结构,将相应的表格结构反馈至终端102。其中,终端102可以但不限于是各种个人计算机、笔记本电脑、智能手机、平板电脑和便携式可穿戴设备,服务器104可以用独立的服务器或者是多个服务器组成的服务器集群来实现。The table structure identification method provided by the embodiment of the present application can be applied to the application environment shown in FIG. 1 . The terminal 102 communicates with the

在一个实施例中,如图2所示,提供了一种表格结构识别方法,以该方法应用于图1中的服务器为例进行说明,包括以下步骤:In one embodiment, as shown in FIG. 2 , a method for identifying a table structure is provided, which is described by taking the method applied to the server in FIG. 1 as an example, including the following steps:

步骤S202,获取目标表格图像区域,识别目标表格图像区域中的文本区域。Step S202, acquiring the target form image area, and identifying the text area in the target form image area.

具体地,通过对待识别图像进行目标检测,获取与待识别图像对应的目标表格图像区域,进而对目标表格图像区域进行进一步识别,识别得出目标表格图像区域中的文本区域。Specifically, the target form image area corresponding to the to-be-recognized image is acquired by performing target detection on the to-be-recognized image, and then the target form image area is further identified to identify the text area in the target form image area.

在一个实施例中,如图3所示,提供了一种表格结构识别方法的目标表格图像区域,通过对待识别图像进行目标检测,得到如图3所示的目标表格图像区域,并进一步对目标表格图像区域进行识别,可识别得到如图4所示的表格结构识别方法的文本区域检测结果。In one embodiment, as shown in FIG. 3 , a target table image area of a table structure recognition method is provided, and the target table image area shown in FIG. 3 is obtained by performing target detection on the image to be recognized, and further The table image area is recognized, and the text area detection result of the table structure recognition method as shown in FIG. 4 can be recognized.

具体地,通过采用Mask-RCNN网络对目标表格区域进行目标检测,确定目标表格区域内的文本区域。其中,文本区域可由如图4所示的文本区域检测结果表示,即在目标表格区域内,具有如图4所示的和各文本框对应的文本区域,进而通过Mask-RCNN网络对目标表格区域进行目标检测,可确定不同文本框的位置。Specifically, by using the Mask-RCNN network to perform target detection on the target table region, the text region in the target table region is determined. Among them, the text area can be represented by the text area detection results shown in Figure 4, that is, in the target table area, there are text areas corresponding to each text box as shown in Figure 4, and then the target table area is detected by the Mask-RCNN network. Object detection is performed to determine the position of different text boxes.

进一步地,Mask-RCNN网络表示兼容通用目标检测以及分割任务的网络,包含检测分支和分割分支。在本实施例中,由于仅需要对文本区域进行识别,则仅采用Mask-RCNN网络的检测分支对目标表格区域进行检测。Further, the Mask-RCNN network represents a network compatible with general object detection and segmentation tasks, including a detection branch and a segmentation branch. In this embodiment, since only the text region needs to be recognized, only the detection branch of the Mask-RCNN network is used to detect the target table region.

其中,Mask-RCNN网络的骨干网络为带有FPN的Res50网络,其中,FPN表示特征金字塔网络(Feature Pyramid Network),可利用多尺度的方式提升目标检测效果的神经网络,Res50网络表示层数为50的深度残差网络(Deep Residual Network),属于卷积神经网络的基础网络类型。其中,可通过Res50网络得到图片不同阶段的特征图,进而根据不同阶段的特征图建立特征金字塔,即得到具有FPN的Res50网络。Among them, the backbone network of the Mask-RCNN network is a Res50 network with FPN, where FPN represents a feature pyramid network (Feature Pyramid Network), a neural network that can improve the effect of target detection in a multi-scale way, and the Res50 network represents the number of layers. 50 deep residual network (Deep Residual Network), which belongs to the basic network type of convolutional neural network. Among them, the feature maps of different stages of the picture can be obtained through the Res50 network, and then a feature pyramid is established according to the feature maps of different stages, that is, the Res50 network with FPN is obtained.

在一个实施例中,由于通过FPN(特征金字塔网络)的Res50网络(深度残差网络)进行目标检测后得到的RPN(区域生成网络)预测结果中,仍存在较多冗余的文本区域,进而采用NMS算法对所有的文本区域进行过滤,以滤除多余的文本区域,进而降低计算复杂度。其中,NMS算法(Non-Maximum Suppression)表示为非极大值抑制算法,用于对目标检测领域中对局部极大值的搜索,可对不满足极大值要求的数据取值起到过滤作用。In one embodiment, because there are still many redundant text regions in the RPN (region generation network) prediction result obtained after the target detection is performed by the Res50 network (deep residual network) of the FPN (feature pyramid network), and further The NMS algorithm is used to filter all text regions to filter out redundant text regions, thereby reducing the computational complexity. Among them, the NMS algorithm (Non-Maximum Suppression) is expressed as a non-maximum suppression algorithm, which is used to search for local maxima in the field of target detection, and can filter the data values that do not meet the maximum value requirements. .

步骤S204,确定各文本区域的图像特征和坐标特征,并分别将图像特征、坐标特征进行融合,得到与各文本区域对应的文本区域元素融合特征。Step S204: Determine the image feature and coordinate feature of each text region, and fuse the image feature and coordinate feature respectively to obtain the text region element fusion feature corresponding to each text region.

具体地,通过获取从目标表格图像区域内确定出的各文本区域的位置坐标,并对各文本区域的位置坐标进行升维,可得到升维后的坐标特征。进一步可根据各文本区域的位置坐标获取对应的文本区域的图像内容,进而基于文本区域的图像内容进行图像特征对齐,得到对齐后的图像特征。其中,对齐后的图像特征的维度与升维后的坐标特征的维度相同。Specifically, by acquiring the position coordinates of each text area determined from the image area of the target form, and increasing the dimension of the position coordinates of each text area, the coordinate features after the dimension increase can be obtained. Further, the image content of the corresponding text area can be obtained according to the position coordinates of each text area, and then image features are aligned based on the image content of the text area to obtain aligned image features. The dimension of the aligned image feature is the same as the dimension of the coordinate feature after the dimension increase.

进一步地,通过对升维后的坐标特征、对齐后的图像特征进行融合,得到与各文本区域对应的文本区域元素融合特征。Further, by fusing the coordinate features after dimension enhancement and the image features after alignment, the text region element fusion features corresponding to each text region are obtained.

步骤S206,根据文本区域元素融合特征,确定目标表格图像区域中各结点的邻接特征。Step S206, according to the element fusion feature of the text region, determine the adjacency feature of each node in the image region of the target table.

具体地,通过获取与各文本区域元素融合特征对应的结点的局部特征和全局特征,进而基于局部特征和全局特征进行特征聚合,得到目标表格图像区域中各结点的邻接特征。Specifically, by acquiring local features and global features of nodes corresponding to the element fusion features of each text area, and then performing feature aggregation based on the local features and global features, the adjacency features of each node in the target table image region are obtained.

其中,通过采用K-邻近算法(k-Nearest Neighbor algorithm),分别确定出各文本区域元素融合特征对应的结点的K个邻近结点,并将K个邻近结点和该结点的文本区域元素融合特征进行融合,以得到各结点的邻近融合特征,通过将各结点的邻近融合特征进行整合,以得到对应的聚合特征。进一步采用FCN网络(Full Connected Network,即全连接神经网络)对各结点的聚合特征进行降维处理,得到降维后的多头图特征。其中,降维后的多头图特征即为与文本区域元素融合特征对应的结点的局部特征。Among them, by using the K-Nearest Neighbor algorithm, K adjacent nodes of the nodes corresponding to the fusion features of each text area element are determined respectively, and the K adjacent nodes and the text area of the node are determined. The element fusion features are fused to obtain the adjacent fusion features of each node, and the corresponding aggregation features are obtained by integrating the adjacent fusion features of each node. Further, the FCN network (Full Connected Network, namely fully connected neural network) is used to reduce the dimensionality of the aggregated features of each node, and the multi-head graph features after dimensionality reduction are obtained. Among them, the multi-head graph feature after dimensionality reduction is the local feature of the node corresponding to the element fusion feature of the text area.

同样地,根据多头注意力机制,对与各结点对应的文本区域元素融合特征进行上下文特征聚合,得到与文本区域元素融合特征对应的结点的全局特征。Similarly, according to the multi-head attention mechanism, the context feature aggregation is performed on the text region element fusion features corresponding to each node, and the global features of the nodes corresponding to the text region element fusion features are obtained.

进一步地,通过获取预设激活函数以及与门机制对应的各个门参数,进而基于预设激活函数、各门参数,对局部特征和全局特征进行特征聚合,得到与目标表格图像区域中各结点的邻接特征。Further, by obtaining the preset activation function and each gate parameter corresponding to the gate mechanism, and then based on the preset activation function and each gate parameter, feature aggregation is performed on the local feature and the global feature, and each node in the image area of the target table is obtained. adjacency features.

在一个实施例中,采用以下公式(1)对局部特征以及全局特征进行聚合,得到与目标表格图像区域中各结点的邻接特征:In one embodiment, the following formula (1) is used to aggregate local features and global features to obtain adjacency features with each node in the target table image area:

Fagg=Sigmoid(gatei)*Fglobal+(1-Sigmoid(gatei))*Flocal,i∈[1,2,..,N]; (1)Fagg =Sigmoid(gatei )*Fglobal +(1-Sigmoid(gatei ))*Flocal ,i∈[1,2,..,N]; (1)

其中,Fagg表示聚合后的邻接特征、Fglobal表示全局特征、Flocal表示局部特征,Sigmoid为预设激活函数,gatei表示第i个head上的门参数,head表示多头注意力机制(Multi-head attention)中的不同注意力点,head的数量和门机制的gate数量相同。Among them, Fagg represents the aggregated adjacency feature, Fglobal represents the global feature, Flocal represents the local feature, Sigmoid is the preset activation function, gatei represents the gate parameter on the ith head, and head represents the multi-head attention mechanism (Multi - different attention points in head attention), the number of heads is the same as the number of gates of the gate mechanism.

步骤S208,将任意两个结点的邻接特征进行特征拼接,并对拼接得到的邻接矩阵进行分类预测,生成与该两个结点对应的文本区域的行列关系预测结果。In step S208, feature splicing is performed on the adjacency features of any two nodes, and the adjacency matrix obtained by splicing is classified and predicted to generate a row-column relationship prediction result of the text regions corresponding to the two nodes.

具体地,通过将任意两个结点的邻接特征进行特征拼接,得到拼接后的邻接矩阵,进而根据全连接神经网络对拼接后的邻接矩阵,进行二分类预测,得到相应的文本区域的行列关系预测结果。Specifically, by splicing the adjacency features of any two nodes, the spliced adjacency matrix is obtained, and then the spliced adjacency matrix is subjected to two-class prediction according to the fully connected neural network, and the row-column relationship of the corresponding text area is obtained. forecast result.

其中,二分类预测包括行关系预测和列关系预测,具体来说,根据全连接网络对拼接后的邻接矩阵进行二分类预测,确定拼接的邻接矩阵对应的两个文本区域是否属于表格中的同一行,或者判断拼接的邻接矩阵对应的两个文本区域是否属于表格中的同一列,进而得到相应的文本区域的行列关系预测结果。Among them, the two-class prediction includes row relationship prediction and column relationship prediction. Specifically, the two-class prediction is performed on the spliced adjacency matrix according to the fully connected network to determine whether the two text areas corresponding to the spliced adjacency matrix belong to the same table in the table. row, or determine whether the two text regions corresponding to the spliced adjacency matrix belong to the same column in the table, and then obtain the row-column relationship prediction result of the corresponding text region.

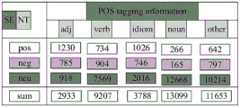

在一个实施例中,如图5和图6所示,分别提供了表格结构识别方法的行关系预测结果示意图,以及表格结构识别方法的列关系预测结果示意图。参照图5可知,行关系预测结果可确定出表格中每一行对应的文本区域,在图5中采用不同深浅程度的灰度对不同行的文本区域进行表示,在第一行包括的文本区域为“SE”、“POS tagging information”,第二行包括的文本区域为“NT”、“adj”、“verb”、“idiom”、“noun”、“other”,第三行包括的文本区域为“pos”、“1230”、“734”、“1026”、“266”、“642”,第四行包括的文本区域为“neg”、“785”、“904”、“746”、“165”、“797”,第五行包括的文本区域为“neu”、“918”、“7569”、“2016”、“12668”、“10214”,第六行包括的文本区域为“sum”、“2933”、“9207”、“3788”、“13099”、“11653”。In one embodiment, as shown in FIG. 5 and FIG. 6 , a schematic diagram of a row relationship prediction result of the table structure identification method and a schematic diagram of a column relationship prediction result of the table structure identification method are respectively provided. Referring to Figure 5, it can be seen that the row relationship prediction result can determine the text area corresponding to each row in the table. In Figure 5, different shades of gray are used to represent the text areas of different rows. The text area included in the first row is: "SE", "POS tagging information", the text area included in the second line is "NT", "adj", "verb", "idiom", "noun", "other", and the text area included in the third line is "pos", "1230", "734", "1026", "266", "642", the fourth line includes the text area "neg", "785", "904", "746", "165" ", "797", the text area included in the fifth line is "neu", "918", "7569", "2016", "12668", "10214", and the text area included in the sixth line is "sum", " 2933", "9207", "3788", "13099", "11653".

进一步,参照图6可知,列关系预测结果可确定出表格中每一列对应的文本区域,在图6中采用不同深浅程度的灰度对不同列的文本区域进行表示,其中,第一列包括的文本区域为“SENT”、“pos”、“neg”、“neu”、“sum”,第二列包括的文本区域为“POS”、“adj”、“1230”、“785”、“918”、“2933”,第三列包括的文本区域为“tagg”、“verb”、“734”、“904”、“7569”、“9207”,第四列包括的文本区域为“ing in”、“idiom”、“1026”、“746”、“2016”、“3788”,第五列包括的文本区域为“form”、“noun”、“266”、“165”、“12668”、“13099”,第六列包括的文本区域为“ation”、“other”、“642”、“797”、“10214”、“11653”。Further, referring to FIG. 6, it can be seen that the column relationship prediction result can determine the text area corresponding to each column in the table. In FIG. 6, different shades of gray are used to represent the text areas of different columns. The first column includes The text areas are "SENT", "pos", "neg", "neu", "sum", and the text areas included in the second column are "POS", "adj", "1230", "785", "918" , "2933", the text areas included in the third column are "tagg", "verb", "734", "904", "7569", "9207", and the text areas included in the fourth column are "ing in", "idiom", "1026", "746", "2016", "3788", the fifth column includes the text areas "form", "noun", "266", "165", "12668", "13099" ”, the text area included in the sixth column is "ation", "other", "642", "797", "10214", "11653".

步骤S210,基于各文本区域的行列关系预测结果,确定与目标表格图像区域对应的表格结构。Step S210: Determine the table structure corresponding to the target table image region based on the row-column relationship prediction result of each text region.

具体地,根据对每两个文本区域的行列关系的预测结果,可进一步确定出具体哪些文本区域属于同一行,哪些文本区域属于同一列,对不同文本区域的行列关系以及位置坐标进行进一步分析和排列,可确定与目标表格图像区域对应的表格结构。Specifically, according to the prediction result of the row-column relationship of each two text regions, it can be further determined which text regions belong to the same row and which text regions belong to the same column, and further analyze and analyze the row-column relationship and position coordinates of different text regions. Arrangement can determine the table structure corresponding to the target table image area.

在一个实施例中,为了客观评价表格识别结果的精度,构造了用来衡量表格结构识别的精度的数据集,如表1所示。其中,表格结构识别的召回率指在测试集存在的表格中,正确预测的邻接关系所占的比例,表格结构识别的准确率指在预测的结果中,正确预测的邻接关系所占的比例。In one embodiment, in order to objectively evaluate the accuracy of table recognition results, a data set for measuring the accuracy of table structure recognition is constructed, as shown in Table 1. Among them, the recall rate of table structure recognition refers to the proportion of correctly predicted adjacency relationships in the tables existing in the test set, and the accuracy rate of table structure recognition refers to the proportion of correctly predicted adjacency relationships in the predicted results.

表1表格结构识别指标Table 1 Table structure identification indicators

上述表格结构识别方法中,通过获取目标表格图像区域,识别目标表格图像区域中的文本区,并确定各文本区域的图像特征和坐标特征,分别将图像特征、坐标特征进行融合,得到与各文本区域对应的文本区域元素融合特征,可通过对目标表格图像区域内的不同文本区域进行图像特征、坐标特征的融合,以达到对目标表格图像区域的整体识别,而不是针对单个文本区域的局部识别。进而根据文本区域元素融合特征,确定目标表格图像区域中各结点的邻接特征,并将任意两个结点的邻接特征进行特征拼接,通过对拼接得到的邻接矩阵进行分类预测,生成与该两个结点对应的文本区域的行列关系预测结果,进而基于各文本区域的行列关系预测结果,确定与目标表格图像区域对应的表格结构。实现了根据对各文本区域的行列关系的预测结果,即可确定得到相应的表格结构,而无需利用额外的文本检测网络进一步进行识别,可减少不必要繁琐操作,进而提高了表格识别准确度和识别效率。In the above table structure recognition method, by acquiring the target table image area, identifying the text area in the target table image area, and determining the image feature and coordinate feature of each text area, respectively merging the image feature and the coordinate feature to obtain and each text area. The text area element fusion feature corresponding to the area can be used to fuse the image features and coordinate features of different text areas in the target form image area to achieve the overall recognition of the target form image area, rather than the local recognition of a single text area. . Then, according to the element fusion features of the text area, the adjacency features of each node in the target table image area are determined, and the adjacency features of any two nodes are feature spliced, and the adjacency matrix obtained by splicing is classified and predicted to generate a The row-column relationship prediction result of the text region corresponding to each node is further determined based on the row-column relationship prediction result of each text region, and the table structure corresponding to the target table image region is determined. It is realized that according to the prediction results of the row-column relationship of each text area, the corresponding table structure can be determined without using an additional text detection network for further identification, which can reduce unnecessary and cumbersome operations, thereby improving the accuracy of table recognition. identification efficiency.

在一个实施例中,如图7所示,得到与各文本区域对应的文本区域元素融合特征的步骤,即确定各文本区域的图像特征和坐标特征,并分别将图像特征、坐标特征进行融合,得到与各文本区域对应的文本区域元素融合特征的步骤,具体包括:In one embodiment, as shown in FIG. 7 , the step of obtaining the text region element fusion features corresponding to each text region is to determine the image features and coordinate features of each text region, and fuse the image features and coordinate features respectively, The steps of obtaining the text area element fusion features corresponding to each text area include:

步骤S702,获取从目标表格图像区域内确定出各文本区域的位置坐标,并对文本区域的位置坐标进行升维,得到升维后的坐标特征。In step S702, the position coordinates of each text area determined from the target table image area are acquired, and the dimension of the position coordinates of the text area is increased to obtain the coordinate features after the dimension increase.

具体地,通过从目标表格图像区域确定出各文本区域,并获取各文本区域的位置坐标,进而采用FCN网络(全连接网络)分别对各文本区域的位置坐标进行升维,得到升维后的坐标特征。Specifically, each text area is determined from the target table image area, and the position coordinates of each text area are obtained, and then the FCN network (full connection network) is used to upgrade the position coordinates of each text area respectively, and the upgraded dimension is obtained. Coordinate feature.

其中,各文本区域的位置坐标为4维,可以是(x,y,w,h)的四维坐标,为用于后续和图像特征进行融合,进而采用FCN网络将四维的坐标特征升维至和图像特征的维度一致。Among them, the position coordinates of each text area are 4-dimensional, which can be the four-dimensional coordinates of (x, y, w, h), which are used for subsequent fusion with image features, and then the FCN network is used to upgrade the four-dimensional coordinate features to sum. The dimensions of the image features are consistent.

在一个实施例中,在获取从目标表格图像区域内确定出各文本区域的位置坐标,并对文本区域的位置坐标进行升维,得到升维后的坐标特征之前,还包括:In one embodiment, before obtaining the position coordinates of each text area determined from the target table image area, and performing dimension upgrade on the position coordinates of the text area, before obtaining the coordinate features after the dimension upgrade, the method further includes:

计算目标表格图像区域内的各文本区域与预设标注文本区域的交并比;筛选出交并比大于预设交并比阈值的文本区域。Calculate the intersection ratio between each text area in the image area of the target table and the preset marked text area; screen out the text areas whose intersection ratio is greater than the preset intersection ratio threshold.

具体地,通过获取预设标注文本区域,并计算目标表格图像区域内各文本区域和预设标注文本区域的交并比,并获取预设交并比阈值,筛选出交并比大于预设交并比阈值的文本区域。Specifically, by obtaining the preset marked text area, and calculating the intersection ratio between each text area in the target table image area and the preset marked text area, and obtaining the preset intersection ratio threshold, the intersection ratio is filtered out and the intersection ratio is greater than the preset intersection ratio. And than the thresholded text area.

其中,预设标注文本区域为预先已进行标注的文本区域,还携带有相应已标注文本区域的行列关系,即已经标注的文本区域具体和哪些文本区域属于同一行,或者属于同一列。在本实施例中,预设交并比阈值可以为0.7至0.9中的不同取值,优选地,预设交并比阈值可以取0.8。The preset marked text area is a pre-marked text area, and also carries the row-column relationship of the corresponding marked text area, that is, the marked text area and which text areas belong to the same row or the same column. In this embodiment, the preset intersection ratio threshold may be a different value from 0.7 to 0.9, and preferably, the preset intersection ratio threshold may be 0.8.

步骤S704,根据各文本区域的位置坐标,获取对应的文本区域的图像内容。Step S704: Acquire the image content of the corresponding text area according to the position coordinates of each text area.

具体地,根据文本区域的位置坐标,确定文本区域在目标表格图像区域内的具体位置,进而获取对应具体位置上的图像内容,确定为与该文本区域对应的图像内容。Specifically, according to the position coordinates of the text area, the specific position of the text area in the target table image area is determined, and then the image content corresponding to the specific position is obtained and determined as the image content corresponding to the text area.

步骤S706,基于文本区域的图像内容进行图像特征对齐,得到对齐后的图像特征,对齐后的图像特征的维度与升维后的坐标特征的维度相同。In step S706, image feature alignment is performed based on the image content of the text area to obtain aligned image features, and the dimensions of the aligned image features are the same as the dimensions of the dimension-raised coordinate features.

具体地,采用Roi Align算法(即使用双线性插值固定不同大小感兴趣区域特征输出的算法),对文本区域的图像内容进行图像特征对齐。其中,文本区域的图像特征,可在采用Mask-RCNN网络对目标表格区域进行目标检测,确定目标表格区域内的文本区域时,进一步根据FPN网络(Feature Pyramid Network,即特征金字塔网络)对文本区域内的图像内容进行识别得到。Specifically, the Roi Align algorithm (that is, an algorithm that uses bilinear interpolation to fix the feature output of regions of interest of different sizes) is used to align the image features of the image content of the text region. Among them, the image features of the text area can be detected by using the Mask-RCNN network on the target table area, and the text area in the target table area can be determined. The image content inside is identified.

其中,采用Roi Align算法对文本区域的图像内容进行图像特征对齐时,得到的对齐后的图像特征为128维。而由于各文本区域的位置坐标为4维,可以是(x,y,w,h)的四维坐标,为用于后续和图像特征进行融合,进而采用FCN网络(全连接网络)将四维的坐标特征升维至和图像特征的维度一致,即通过FCN网络(全连接网络)将四维的坐标特征升维至128维,以和对齐后的图像特征的维度一致。Among them, when the Roi Align algorithm is used to align the image features of the image content of the text area, the obtained aligned image features are 128 dimensions. Since the position coordinates of each text area are 4-dimensional, it can be the four-dimensional coordinates of (x, y, w, h) for subsequent fusion with image features, and then the FCN network (full connection network) is used to convert the four-dimensional coordinates. The dimension of the feature is increased to be consistent with the dimension of the image feature, that is, the four-dimensional coordinate feature is increased to 128 dimensions through the FCN network (full connection network), so as to be consistent with the dimension of the aligned image feature.

步骤S708,对升维后的坐标特征、对齐后的图像特征进行融合,得到与各文本区域对应的文本区域元素融合特征。Step S708 , fuse the coordinate features after dimension enhancement and the image features after alignment to obtain text region element fusion features corresponding to each text region.

具体地,通过采用按点诸位相加的方式,将每个文本区域对应的升维后的坐标特征、对齐后的图像特征进行融合,以得到与各文本区域对应的文本区域元素融合特征。Specifically, by adopting the point-by-point addition method, the dimension-raised coordinate features and aligned image features corresponding to each text region are fused to obtain the text region element fusion features corresponding to each text region.

本实施例中,通过获取从目标表格图像区域内确定出各文本区域的位置坐标,并对文本区域的位置坐标进行升维,得到升维后的坐标特征。而根据各文本区域的位置坐标,获取对应的文本区域的图像内容,并基于文本区域的图像内容进行图像特征对齐,得到对齐后的图像特征,对齐后的图像特征的维度与升维后的坐标特征的维度相同。通过对升维后的坐标特征、对齐后的图像特征进行融合,得到与各文本区域对应的文本区域元素融合特征,能够达到对目标表格图像区域内的所有文本区域的整体识别,而不是针对单个文本区域的局部识别,进而提高对目标表格图像区域的表格识别准确度。In this embodiment, the position coordinates of each text region determined from the target table image region are obtained, and the position coordinates of the text region are dimensioned to obtain the dimension-upgraded coordinate features. According to the position coordinates of each text area, the image content of the corresponding text area is obtained, and the image features are aligned based on the image content of the text area to obtain the aligned image features. The dimensions of the aligned image features and the coordinates after the dimension increase The dimensions of the features are the same. By fusing the dimension-upgraded coordinate features and the aligned image features, the text region element fusion features corresponding to each text region can be obtained, which can achieve the overall recognition of all text regions in the target table image region, rather than for a single text region. Local recognition of the text area, thereby improving the table recognition accuracy of the target table image area.

在一个实施例中,如图8所示,获取各文本区域元素融合特征对应的结点的局部特征的步骤,具体包括:In one embodiment, as shown in FIG. 8 , the step of acquiring the local features of the nodes corresponding to the fusion features of each text area element specifically includes:

步骤S802,获取各文本区域元素融合特征对应的结点,并确定各结点的预设个数的邻近结点。Step S802, acquiring nodes corresponding to the fusion feature of each text area element, and determining a preset number of adjacent nodes of each node.

具体地,通过获取各文本区域元素融合特征对应的结点,并采用K-邻近算法(k-Nearest Neighbor algorithm),分别确定出各文本区域元素融合特征对应的结点的K个邻近结点。其中,K-邻近算法用于确定出与当前结点最邻近的K个邻近结点。Specifically, by acquiring the nodes corresponding to the fusion features of each text area element, and using a K-Nearest Neighbor algorithm, K adjacent nodes of the nodes corresponding to the fusion features of each text area element are determined respectively. Among them, the K-neighbor algorithm is used to determine the K nearest neighbor nodes to the current node.

步骤S804,将各结点与该结点对应的各邻近结点的文本区域元素融合特征进行融合,得到各结点对应的邻近融合特征。Step S804, fuse each node and the text area element fusion features of each adjacent node corresponding to the node to obtain adjacent fusion features corresponding to each node.

具体地,通过将K个邻近结点的文本区域元素特征,和该结点的文本区域元素融合特征进行特征融合,以得到各结点的邻近融合特征。Specifically, the feature fusion is performed by combining the text area element features of the K adjacent nodes with the text area element fusion features of the node, so as to obtain the adjacent fusion features of each node.

其中,由于每个结点的文本区域元素特征为128维,通过将K个邻近结点的文本区域元素特征,和该结点的文本区域元素融合特征进行特征融合后,得到的结点的邻近融合特征则提升至128K维。Among them, since the text area element feature of each node is 128-dimensional, after the feature fusion of the text area element feature of K adjacent nodes and the text area element fusion feature of the node is performed, the adjacent nodes are obtained. The fusion feature is increased to 128K dimensions.

步骤S806,整合各结点的邻近融合特征,得到聚合特征。Step S806, integrating adjacent fusion features of each node to obtain aggregated features.

具体地,通过采用FCN网络(全连接网络)整合结点的邻近融合特征,得到相应的聚合特征。其中,由于结点的邻近融合特征为128K维,通过采用FCN网络,将每个结点的128K维的邻近融合特征聚合至128维的聚合特征。Specifically, by using the FCN network (full connection network) to integrate the adjacent fusion features of the nodes, the corresponding aggregated features are obtained. Among them, since the adjacent fusion features of the nodes are 128K dimensions, by using the FCN network, the 128K-dimensional adjacent fusion features of each node are aggregated into 128-dimensional aggregated features.

步骤S808,对各结点的聚合特征进行降维处理,得到降维后的多头图特征,降维后的多头图特征为与文本区域元素融合特征对应的结点的局部特征。Step S808, performing dimension reduction processing on the aggregated features of each node to obtain a multi-head graph feature after dimension reduction, and the multi-head graph feature after dimensionality reduction is a local feature of a node corresponding to a text area element fusion feature.

具体地,通过采用预设个平行的FCN网络,对结点的聚合特征进行降维处理,得到降维后的多头图特征。其中,降维后的多头图特征即为与文本区域元素融合特征对应的结点的局部特征。Specifically, by using a preset parallel FCN network, dimensionality reduction processing is performed on the aggregated features of the nodes, and the multi-head graph features after dimensionality reduction are obtained. Among them, the multi-head graph feature after dimensionality reduction is the local feature of the node corresponding to the element fusion feature of the text area.

进一步地,在本实施例中,可以是采用8个平行的FCN网络,对结点的聚合特征进行降维处理,将128维的聚合特征转换成8个16维的多头图特征。其中,采用平行的FCN网络进行降维处理时,每个平行的FCN网络进行的降维处理过程是独立的,互不影响。Further, in this embodiment, 8 parallel FCN networks may be used to perform dimensionality reduction processing on the aggregated features of nodes, and convert the 128-dimensional aggregated features into 8 16-dimensional multi-head graph features. Among them, when parallel FCN networks are used for dimensionality reduction processing, the dimensionality reduction processing process performed by each parallel FCN network is independent and does not affect each other.

本实施例中,通过获取各文本区域元素融合特征对应的结点,并确定各结点的预设个数的邻近结点,进而将各结点与该结点对应的各邻近结点的文本区域元素融合特征进行融合,得到各结点对应的邻近融合特征。通过整合各结点的邻近融合特征,得到聚合特征,并对各结点的聚合特征进行降维处理,得到降维后的多头图特征,得到的降维后的多头图特征即为与文本区域元素融合特征对应的结点的局部特征。实现了对文本区域元素融合特征的进一步整合以及降维处理,得到降维后的多头图特征,便于和后续通过多头注意力机制对文本区域元素融合特征进行上下文特征聚合得到的全局特征,进行进一步融合,以达到对目标表格图像区域的整体识别,而不是针对单个文本区域的局部识别,提升表格识别准确度。In this embodiment, by acquiring the nodes corresponding to the fusion features of the elements of each text area, and determining a preset number of adjacent nodes of each node, the text of each node and each adjacent node corresponding to the node is further compared. The regional element fusion features are fused, and the adjacent fusion features corresponding to each node are obtained. By integrating the adjacent fusion features of each node, the aggregated features are obtained, and the dimensionality reduction of the aggregated features of each node is performed to obtain the multi-head graph features after dimensionality reduction. The obtained multi-head graph features after dimensionality reduction are the same as the text area The local feature of the node corresponding to the element fusion feature. It realizes further integration and dimensionality reduction of text area element fusion features, and obtains multi-head image features after dimensionality reduction, which is convenient and subsequent to the global features obtained by contextual feature aggregation of text area element fusion features through multi-head attention mechanism. Fusion to achieve the overall recognition of the target table image area, rather than the local recognition of a single text area, to improve the accuracy of table recognition.

在一个实施例中,如图9所示,提供了一种表格结构识别方法的整体流程,具体包括P1文本检测部分、P2特征聚合部分以及P3邻接关系预测部分,其中:In one embodiment, as shown in FIG. 9, an overall process of a table structure identification method is provided, which specifically includes a P1 text detection part, a P2 feature aggregation part, and a P3 adjacency relationship prediction part, wherein:

1、P1文本区域识别部分,具体包括:1. P1 text area recognition part, including:

1)采用Mask-RCNN网络对目标表格区域进行目标检测,得到相应的RPN网络(区域生成网络)识别结果,并根据RPN网络识别结果确定目标表格区域内的文本区域。其中,Mask-RCNN网络的骨干网络为带有FPN的Res50网络。1) The Mask-RCNN network is used to detect the target table area, and the corresponding RPN network (region generation network) recognition result is obtained, and the text area in the target table area is determined according to the RPN network recognition result. Among them, the backbone network of Mask-RCNN network is Res50 network with FPN.

2)利用NMS算法(非极大值抑制算法)对识别得到的文本区域进行过滤,得到过滤后的文本区域。其中,文本区域的检测结果如图4所示,在目标表格区域内,具有如图4所示的和各文本框对应的文本区域。2) Use the NMS algorithm (non-maximum suppression algorithm) to filter the recognized text area to obtain the filtered text area. The detection result of the text area is shown in FIG. 4 , and in the target table area, there is a text area corresponding to each text box as shown in FIG. 4 .

2、P2特征聚合部分,具体包括:2. P2 feature aggregation part, including:

1)确定各文本区域的图像特征和坐标特征,并分别将图像特征、坐标特征进行融合,得到与各文本区域对应的文本区域元素融合特征。1) Determine the image features and coordinate features of each text region, and fuse the image features and coordinate features respectively to obtain the text region element fusion features corresponding to each text region.

在一个实施例中,确定各文本区域的图像特征和坐标特征,并分别将图像特征、坐标特征进行融合,得到与各文本区域对应的文本区域元素融合特征,包括:In one embodiment, image features and coordinate features of each text region are determined, and the image features and coordinate features are fused respectively to obtain text region element fusion features corresponding to each text region, including:

获取从目标表格图像区域内确定出各文本区域的位置坐标,并对文本区域的位置坐标进行升维,得到升维后的坐标特征;根据各文本区域的位置坐标,获取对应的文本区域的图像内容;基于文本区域的图像内容进行图像特征对齐,得到对齐后的图像特征,对齐后的图像特征的维度与升维后的坐标特征的维度相同;对升维后的坐标特征、对齐后的图像特征进行融合,得到与各文本区域对应的文本区域元素融合特征。Obtain the position coordinates of each text area determined from the image area of the target table, and upgrade the position coordinates of the text area to obtain the coordinate features after the upgrade; obtain the image of the corresponding text area according to the position coordinates of each text area content; image features are aligned based on the image content of the text area to obtain the aligned image features, the dimensions of the aligned image features are the same as the dimensions of the coordinate features after the dimension increase; The features are fused to obtain the text region element fusion features corresponding to each text region.

具体地,通过从目标表格图像区域确定出各文本区域,并获取各文本区域的位置坐标,进而采用FCN网络(全连接网络)分别对各文本区域的位置坐标进行升维,得到升维后的坐标特征。根据文本区域的位置坐标,确定文本区域在目标表格图像区域内的具体位置,进而获取对应具体位置上的图像内容,确定为与该文本区域对应的图像内容,并采用RoiAlign算法(即使用双线性插值固定不同大小感兴趣区域特征输出的算法),对文本区域的图像内容进行图像特征对齐。通过采用按点诸位相加的方式,将每个文本区域对应的升维后的坐标特征、对齐后的图像特征进行融合,以得到与各文本区域对应的文本区域元素融合特征。Specifically, each text area is determined from the target table image area, and the position coordinates of each text area are obtained, and then the FCN network (full connection network) is used to upgrade the position coordinates of each text area respectively, and the upgraded dimension is obtained. Coordinate feature. According to the position coordinates of the text area, determine the specific position of the text area in the image area of the target table, and then obtain the image content corresponding to the specific position, determine the image content corresponding to the text area, and use the RoiAlign algorithm (that is, using double-line Algorithm to fix the feature output of regions of interest with different sizes), and perform image feature alignment on the image content of the text area. By adopting the method of adding each bit by point, the coordinate features after dimension enhancement and the aligned image features corresponding to each text region are fused to obtain the text region element fusion features corresponding to each text region.

2)根据文本区域元素融合特征,确定目标表格图像区域中各结点的邻接特征。2) According to the element fusion feature of the text area, determine the adjacency feature of each node in the target table image area.

具体地,根据文本区域元素融合特征,确定目标表格图像区域中各结点的邻接特征,包括:Specifically, according to the element fusion feature of the text region, the adjacency features of each node in the target table image region are determined, including:

获取各文本区域元素融合特征对应的结点的局部特征和全局特征;基于局部特征和全局特征进行特征聚合,得到目标表格图像区域中各结点的邻接特征。Obtain the local features and global features of the nodes corresponding to the element fusion features of each text area; perform feature aggregation based on the local features and global features to obtain the adjacency features of each node in the target table image area.

在一个实施例中,获取各文本区域元素融合特征对应的结点的局部特征,包括:In one embodiment, obtaining the local features of the nodes corresponding to the fusion features of each text area element includes:

获取各文本区域元素融合特征对应的结点,并确定各结点的预设个数的邻近结点;将各结点与该结点对应的各邻近结点的文本区域元素融合特征进行融合,得到各结点对应的邻近融合特征;整合各结点的邻近融合特征,得到聚合特征;对各结点的聚合特征进行降维处理,得到降维后的多头图特征,降维后的多头图特征为与文本区域元素融合特征对应的结点的局部特征。Obtain the nodes corresponding to the fusion feature of each text area element, and determine a preset number of adjacent nodes of each node; fuse each node with the text area element fusion feature of each adjacent node corresponding to the node, Obtain the adjacent fusion features corresponding to each node; integrate the adjacent fusion features of each node to obtain aggregated features; perform dimensionality reduction on the aggregated features of each node to obtain the multi-head graph features after dimensionality reduction, and the multi-headed graph after dimensionality reduction. The feature is the local feature of the node corresponding to the element fusion feature of the text area.

具体地,采用K-邻近算法(k-Nearest Neighbor algorithm),分别确定出各文本区域元素融合特征对应的结点的K个邻近结点,将K个邻近结点的文本区域元素特征,和该结点的文本区域元素融合特征进行特征融合,以得到各结点的邻近融合特征,采用FCN网络(全连接网络)整合结点的邻近融合特征,得到相应的聚合特征。进一步采用预设个平行的FCN网络,对结点的聚合特征进行降维处理,得到降维后的多头图特征。Specifically, the K-Nearest Neighbor algorithm is used to determine K adjacent nodes of the nodes corresponding to the fusion features of each text area element respectively, and the text area element features of the K adjacent nodes are combined with the The text area element fusion features of the nodes are used for feature fusion to obtain the adjacent fusion features of each node, and the FCN network (full connection network) is used to integrate the adjacent fusion features of the nodes to obtain the corresponding aggregated features. Further, a preset parallel FCN network is used to reduce the dimension of the aggregated features of the nodes to obtain the multi-head graph features after dimension reduction.

在一个实施例中,获取各文本区域元素融合特征对应的结点的全局特征,包括:In one embodiment, acquiring the global features of the nodes corresponding to the fusion features of each text area element includes:

根据多头注意力机制,对与各结点对应的文本区域元素融合特征进行上下文特征聚合,得到与文本区域元素融合特征对应的结点的全局特征。According to the multi-head attention mechanism, the context feature aggregation is performed on the text region element fusion features corresponding to each node, and the global features of the nodes corresponding to the text region element fusion features are obtained.

其中,通过采用transformer模型(基于自注意力机制的语言模型)的编码器对文本区域元素融合特征进行表征,其中transformer模型的隐层大小为128,文本区域元素融合特征的维度为128维。其中,设置的多头注意力机制(Multi-head attention)对应的head数可以为8,根据多头注意力机制,对与各结点对应的文本区域元素融合特征进行上下文特征聚合时,得到的与文本区域元素融合特征对应的结点的全局特征的维度为16维,而采用8个平行的FCN网络,对结点的128维聚合特征进行降维处理,得到降维后的多头图特征的维度同样为16维。Among them, the text area element fusion feature is represented by the encoder using the transformer model (language model based on self-attention mechanism), where the hidden layer size of the transformer model is 128, and the dimension of the text area element fusion feature is 128 dimensions. Among them, the number of heads corresponding to the set multi-head attention mechanism (Multi-head attention) can be 8. According to the multi-head attention mechanism, when the context feature aggregation is performed on the text region element fusion features corresponding to each node, the obtained text is the same as the text. The dimension of the global feature of the node corresponding to the regional element fusion feature is 16 dimensions, and 8 parallel FCN networks are used to reduce the dimension of the 128-dimensional aggregated feature of the node, and the dimension of the multi-head graph feature after dimensionality reduction is the same. for 16 dimensions.

在一个实施例中,基于局部特征和全局特征进行特征聚合,得到目标表格图像区域中各结点的邻接特征,包括:In one embodiment, feature aggregation is performed based on local features and global features to obtain adjacent features of each node in the target table image area, including:

获取与门机制对应的各个门参数;基于预设激活函数、各门参数,对局部特征和全局特征进行特征聚合,得到与目标表格图像区域中各结点的邻接特征。Obtain each gate parameter corresponding to the gate mechanism; based on the preset activation function and each gate parameter, perform feature aggregation on local features and global features to obtain adjacency features with each node in the target table image area.

在一个实施例中,如图10所示,提供了一种用于生成邻接特征的FLAG网络结构示意图,其中,FLAG网络结构表示融入图特征的自注意机制的结构。参照图10可知,FLAG网络结构设置有用于确定出局部特征的GNN分支(Graph Neural Networks,即图神经网络),包括GNN1、……、GNNN,用于确定出全局特征的self-attention(自注意力机制)分支,包括self-attention head1、……、self-attention headn,用于控制不同类型的特征融合的gate机制(门机制),gate机制对应的门参数包括gate1、……、gaten,以及用于提升模型的表征能力的FFN(Feed-Forward Network,前馈神经网络)。其中,GNN分支数量、self-attention分支数量以及gate机制数量一致。In one embodiment, as shown in FIG. 10 , a schematic diagram of a FLAG network structure for generating adjacency features is provided, wherein the FLAG network structure represents a structure of a self-attention mechanism incorporating graph features. Referring to FIG. 10 , the FLAG network structure is provided with GNN branches (Graph Neural Networks, ie graph neural networks) for determining local features, including GNN1 , ..., GNNN , which are used to determine the self-attention ( Self-attention mechanism) branch, including self-attention head1 ,..., self-attention headn , used to control the gate mechanism (gate mechanism) of different types of feature fusion, the gate parameters corresponding to the gate mechanism include gate1 ,... ..., gaten , and FFN (Feed-Forward Network) for improving the representational ability of the model. Among them, the number of GNN branches, the number of self-attention branches and the number of gate mechanisms are the same.

具体地,self-attention(自注意力机制)分支中是采用transformer模型(基于自注意力机制的语言模型)的编码器对文本区域元素融合特征进行表征,参照图10可知,transformer模型使用的注意力函数包括:Q(请求(query))、K(主键(key))、V(数值(value)),其中,query向量(Q)用于负责查找目标特征和其他特征的相关度,key向量(K)用于为query向量(Q)做匹配,得到相关度评分,value向量(V)用于对评分进行加权求和。Specifically, the self-attention (self-attention mechanism) branch uses the encoder of the transformer model (language model based on the self-attention mechanism) to characterize the fusion features of text area elements. Referring to Figure 10, it can be seen that the attention used by the transformer model The force function includes: Q (request (query)), K (primary key (key)), V (value (value)), where the query vector (Q) is used to find the correlation between the target feature and other features, and the key vector (K) is used to match the query vector (Q) to get the relevance score, and the value vector (V) is used to weight the scores.

进一步地,由于self-attention(自注意力机制)分支是多头注意力机制,则相应设置有多个head,包括head1、head2、……、headn,transformer模型使用的注意力函数针对每个head分别对应设置,包括K1、Q1、V1,K2、Q2、V2,……KN、QN、VN。Further, since the self-attention (self-attention mechanism) branch is a multi-head attention mechanism, multiple heads are set accordingly, including head1 , head2 , ..., headn , and the attention function used by the transformer model is for each head. Each head is set correspondingly, including K1 , Q1 , V1 , K2 , Q2 , V2 , ... KN , QN , VN .

具体来说,通过获取预设激活函数,以及gate机制对应的门参数,包括,gate1、……、gaten,并基于预设激活函数、各门参数,对各结点的局部特征和全局特征进行特征聚合,得到与目标表格图像区域中各结点的邻接特征。其中,采用FFN(Feed-ForwardNetwork,前馈神经网络)对进行特征聚合得到的邻接特征进一步识别和分析,提升模型的表征能力。Specifically, by obtaining the preset activation function and the gate parameters corresponding to the gate mechanism, including gate1 , ..., gaten , and based on the preset activation function and each gate parameter, the local characteristics and global characteristics of each node are analyzed. The features are aggregated to obtain the adjacent features of each node in the target table image area. Among them, FFN (Feed-Forward Network, feed-forward neural network) is used to further identify and analyze the adjacent features obtained by feature aggregation, so as to improve the representation ability of the model.

在本实施例中,设置有四层FLAG网络结构,将每层FLAG网络结构输出的邻接特征进一步进行特征融合,最终确定得到标表格图像区域中各结点的邻接特征。In this embodiment, a four-layer FLAG network structure is provided, and the adjacent features output by each layer of the FLAG network structure are further subjected to feature fusion, and finally the adjacent features of each node in the marked table image area are determined to be obtained.

3、P3邻接关系预测部分,具体包括:3. P3 adjacency prediction part, including:

(1)将任意两个结点的邻接特征进行特征拼接,并对拼接得到的邻接矩阵进行分类预测,生成与该两个结点对应的文本区域的行列关系预测结果。(1) Feature splicing is performed on the adjacency features of any two nodes, and the adjacency matrix obtained by splicing is classified and predicted, and the row-column relationship prediction result of the text area corresponding to the two nodes is generated.

在一个实施例中,将任意两个结点的邻接特征进行特征拼接,并对拼接得到的邻接矩阵进行分类预测,生成与该两个结点对应的文本区域的行列关系预测结果,包括:In one embodiment, feature splicing is performed on the adjacency features of any two nodes, and the adjacency matrix obtained by the splicing is classified and predicted, and the row-column relationship prediction result of the text area corresponding to the two nodes is generated, including:

将任意两个结点的邻接特征进行特征拼接,得到拼接后的邻接矩阵;根据全连接神经网络对拼接后的邻接矩阵,进行二分类预测,得到相应的文本区域的行列关系预测结果;其中,二分类预测包括行关系预测和列关系预测。Perform feature splicing on the adjacency features of any two nodes to obtain a spliced adjacency matrix; perform two-class prediction on the spliced adjacency matrix according to the fully connected neural network, and obtain the row-column relationship prediction result of the corresponding text area; among them, Two-category prediction includes row relationship prediction and column relationship prediction.

具体地,根据全连接网络对拼接后的邻接矩阵进行二分类预测,确定拼接的邻接矩阵对应的两个文本区域是否属于表格中的同一行,或者判断拼接的邻接矩阵对应的两个文本区域是否属于表格中的同一列,进而得到相应的文本区域的行列关系预测结果。Specifically, perform binary classification prediction on the spliced adjacency matrix according to the fully connected network to determine whether the two text regions corresponding to the spliced adjacency matrix belong to the same row in the table, or determine whether the two text regions corresponding to the spliced adjacency matrix belong to the same row. belong to the same column in the table, and then obtain the row-column relationship prediction result of the corresponding text area.

进一步的,得到的各文本区域的行列关系预测结果,可参照图5所示的表格结构识别方法的行关系预测结果,以及图6所示的表格结构识别方法的列关系预测结果。Further, for the obtained row-column relationship prediction results of each text region, reference may be made to the row relationship prediction results of the table structure identification method shown in FIG. 5 and the column relationship prediction results of the table structure identification method shown in FIG. 6 .

(2)基于各文本区域的行列关系预测结果,确定与目标表格图像区域对应的表格结构。(2) Determine the table structure corresponding to the target table image region based on the row-column relationship prediction result of each text region.

具体地,根据对每两个文本区域的行列关系的预测结果,可进一步确定出具体哪些文本区域属于同一行,哪些文本区域属于同一列,对不同文本区域的行列关系以及位置坐标进行进一步分析和排列,可确定与目标表格图像区域对应的表格结构。Specifically, according to the prediction result of the row-column relationship of each two text regions, it can be further determined which text regions belong to the same row and which text regions belong to the same column, and further analyze and analyze the row-column relationship and position coordinates of different text regions. Arrangement can determine the table structure corresponding to the target table image area.

上述表格结构识别方法中,通过获取目标表格图像区域,识别目标表格图像区域中的文本区,并确定各文本区域的图像特征和坐标特征,分别将图像特征、坐标特征进行融合,得到与各文本区域对应的文本区域元素融合特征,可通过对目标表格图像区域内的不同文本区域进行图像特征、坐标特征的融合,以达到对目标表格图像区域的整体识别,而不是针对单个文本区域的局部识别。进而根据文本区域元素融合特征,确定目标表格图像区域中各结点的邻接特征,并将任意两个结点的邻接特征进行特征拼接,通过对拼接得到的邻接矩阵进行分类预测,生成与该两个结点对应的文本区域的行列关系预测结果,进而基于各文本区域的行列关系预测结果,确定与目标表格图像区域对应的表格结构。实现了根据对各文本区域的行列关系的预测结果,即可确定得到相应的表格结构,而无需利用额外的文本检测网络进一步进行识别,可减少不必要繁琐操作,进而提高了表格识别准确度和识别效率。In the above table structure recognition method, by acquiring the target table image area, identifying the text area in the target table image area, and determining the image feature and coordinate feature of each text area, respectively merging the image feature and the coordinate feature to obtain and each text area. The text area element fusion feature corresponding to the area can be used to fuse the image features and coordinate features of different text areas in the target form image area to achieve the overall recognition of the target form image area, rather than the local recognition of a single text area. . Then, according to the element fusion features of the text area, the adjacency features of each node in the target table image area are determined, and the adjacency features of any two nodes are feature spliced, and the adjacency matrix obtained by splicing is classified and predicted to generate a The row-column relationship prediction result of the text region corresponding to each node is further determined based on the row-column relationship prediction result of each text region, and the table structure corresponding to the target table image region is determined. It is realized that according to the prediction results of the row-column relationship of each text area, the corresponding table structure can be determined without using an additional text detection network for further identification, which can reduce unnecessary and cumbersome operations, thereby improving the accuracy of table recognition. identification efficiency.

应该理解的是,虽然上述实施例涉及的各流程图中的各个步骤按照箭头的指示依次显示,但是这些步骤并不是必然按照箭头指示的顺序依次执行。除非本文中有明确的说明,这些步骤的执行并没有严格的顺序限制,这些步骤可以以其它的顺序执行。而且,上述实施例涉及的各流程图中的至少一部分步骤可以包括多个步骤或者多个阶段,这些步骤或者阶段并不必然是在同一时刻执行完成,而是可以在不同的时刻执行,这些步骤或者阶段的执行顺序也不必然是依次进行,而是可以与其它步骤或者其它步骤中的步骤或者阶段的至少一部分轮流或者交替地执行。It should be understood that, although the steps in the flowcharts involved in the above embodiments are sequentially displayed according to the arrows, these steps are not necessarily executed in the order indicated by the arrows. Unless explicitly stated herein, the execution of these steps is not strictly limited to the order, and these steps may be performed in other orders. Moreover, at least a part of the steps in the flowcharts involved in the above embodiments may include multiple steps or multiple stages. These steps or stages are not necessarily executed at the same time, but may be executed at different times. Alternatively, the order of execution of the stages is not necessarily sequential, but may be performed alternately or alternately with other steps or at least a portion of the steps or stages in the other steps.

在一个实施例中,如图11所示,提供了一种表格结构识别装置,该装置可以采用软件模块或硬件模块,或者是二者的结合成为计算机设备的一部分,该装置具体包括:文本区域识别模块1102、文本区域元素融合特征生成模块1104、邻接特征生成模块1106、行列关系预测结果生成模块1108以及表格结构确定模块1110,其中:In one embodiment, as shown in FIG. 11 , a table structure identification device is provided. The device can use a software module or a hardware module, or a combination of the two to become a part of a computer device. The device specifically includes: a text area The

文本区域识别模块1102,用于获取目标表格图像区域,识别目标表格图像区域中的文本区域。The text

文本区域元素融合特征生成模块1104,用于确定各文本区域的图像特征和坐标特征,并分别将图像特征、坐标特征进行融合,得到与各文本区域对应的文本区域元素融合特征。The text region element fusion

邻接特征生成模块1106,用于根据文本区域元素融合特征,确定目标表格图像区域中各结点的邻接特征。The adjacency

行列关系预测结果生成模块1108,用于将任意两个结点的邻接特征进行特征拼接,并对拼接得到的邻接矩阵进行分类预测,生成与该两个结点对应的文本区域的行列关系预测结果。The row-column relationship prediction

表格结构确定模块1110,用于基于各文本区域的行列关系预测结果,确定与目标表格图像区域对应的表格结构。The table

上述表格结构识别装置中,通过获取目标表格图像区域,识别目标表格图像区域中的文本区,并确定各文本区域的图像特征和坐标特征,分别将图像特征、坐标特征进行融合,得到与各文本区域对应的文本区域元素融合特征,可通过对目标表格图像区域内的不同文本区域进行图像特征、坐标特征的融合,以达到对目标表格图像区域的整体识别,而不是针对单个文本区域的局部识别。进而根据文本区域元素融合特征,确定目标表格图像区域中各结点的邻接特征,并将任意两个结点的邻接特征进行特征拼接,通过对拼接得到的邻接矩阵进行分类预测,生成与该两个结点对应的文本区域的行列关系预测结果,进而基于各文本区域的行列关系预测结果,确定与目标表格图像区域对应的表格结构。实现了根据对各文本区域的行列关系的预测结果,即可确定得到相应的表格结构,而无需利用额外的文本检测网络进一步进行识别,可减少不必要繁琐操作,进而提高了表格识别准确度和识别效率。In the above-mentioned table structure recognition device, by acquiring the target table image area, identifying the text area in the target table image area, and determining the image feature and coordinate feature of each text area, respectively, the image feature and the coordinate feature are fused to obtain and each text area. The text area element fusion feature corresponding to the area can be used to fuse the image features and coordinate features of different text areas in the target form image area to achieve the overall recognition of the target form image area, rather than the local recognition of a single text area. . Then, according to the element fusion features of the text area, the adjacency features of each node in the target table image area are determined, and the adjacency features of any two nodes are feature spliced, and the adjacency matrix obtained by splicing is classified and predicted to generate a The row-column relationship prediction result of the text region corresponding to each node is further determined based on the row-column relationship prediction result of each text region, and the table structure corresponding to the target table image region is determined. It is realized that according to the prediction results of the row-column relationship of each text area, the corresponding table structure can be determined without using an additional text detection network for further identification, which can reduce unnecessary and cumbersome operations, thereby improving the accuracy of table recognition. identification efficiency.

在一个实施例中,文本区域元素融合特征生成模块还用于:In one embodiment, the text area element fusion feature generation module is further used for:

获取从目标表格图像区域内确定出各文本区域的位置坐标,并对文本区域的位置坐标进行升维,得到升维后的坐标特征;根据各文本区域的位置坐标,获取对应的文本区域的图像内容;基于文本区域的图像内容进行图像特征对齐,得到对齐后的图像特征,对齐后的图像特征的维度与升维后的坐标特征的维度相同;对升维后的坐标特征、对齐后的图像特征进行融合,得到与各文本区域对应的文本区域元素融合特征。Obtain the position coordinates of each text area determined from the image area of the target table, and upgrade the position coordinates of the text area to obtain the coordinate features after the upgrade; obtain the image of the corresponding text area according to the position coordinates of each text area content; image features are aligned based on the image content of the text area to obtain the aligned image features, the dimensions of the aligned image features are the same as the dimensions of the coordinate features after the dimension increase; The features are fused to obtain the text region element fusion features corresponding to each text region.

上述文本区域元素融合特征生成模块,实现了对升维后的坐标特征、对齐后的图像特征进行融合,得到与各文本区域对应的文本区域元素融合特征,能够达到对目标表格图像区域内的所有文本区域的整体识别,而不是针对单个文本区域的局部识别,进而提高对目标表格图像区域的表格识别准确度。The above-mentioned text area element fusion feature generation module realizes the fusion of the coordinate features after the dimension increase and the aligned image features, and obtains the text area element fusion features corresponding to each text area, which can achieve the target table image area. The overall recognition of the text area, rather than the local recognition of a single text area, improves the table recognition accuracy for the target table image area.

在一个实施例中,文本区域元素融合特征生成模块还包括:In one embodiment, the text area element fusion feature generation module further includes:

交并比计算单元,用于计算目标表格图像区域内的各文本区域与预设标注文本区域的交并比;an intersection ratio calculation unit, used for calculating the intersection ratio between each text area in the target table image area and the preset marked text area;

文本区域筛选模块,用于筛选出交并比大于预设交并比阈值的文本区域。The text area filtering module is used to filter out the text area whose intersection ratio is greater than the preset intersection ratio threshold.

在一个实施例中,邻接特征生成模块还用于:In one embodiment, the adjacency feature generation module is further used to:

获取各文本区域元素融合特征对应的结点的局部特征和全局特征;基于局部特征和全局特征进行特征聚合,得到目标表格图像区域中各结点的邻接特征。Obtain the local features and global features of the nodes corresponding to the element fusion features of each text area; perform feature aggregation based on the local features and global features to obtain the adjacency features of each node in the target table image area.

在一个实施例中,邻接特征生成模块还包括:In one embodiment, the adjacency feature generation module further includes:

邻近结点获取模块,用于获取各文本区域元素融合特征对应的结点,并确定各结点的预设个数的邻近结点;The adjacent node acquisition module is used to obtain the nodes corresponding to the fusion features of each text area element, and to determine the adjacent nodes of the preset number of each node;

邻近融合特征生成模块,用于将各结点与该结点对应的各邻近结点的文本区域元素融合特征进行融合,得到各结点对应的邻近融合特征;The adjacent fusion feature generation module is used to fuse each node and the text area element fusion features of each adjacent node corresponding to the node to obtain the adjacent fusion feature corresponding to each node;

聚合特征生成模块,用于整合各结点的邻近融合特征,得到聚合特征;The aggregated feature generation module is used to integrate the adjacent fusion features of each node to obtain aggregated features;

局部特征生成模块,用于对各结点的聚合特征进行降维处理,得到降维后的多头图特征,降维后的多头图特征为与文本区域元素融合特征对应的结点的局部特征。The local feature generation module is used to perform dimension reduction processing on the aggregated features of each node, and obtain the multi-head graph features after dimensionality reduction.

上述邻接特征生成模块,实现了对文本区域元素融合特征的进一步整合以及降维处理,得到降维后的多头图特征,便于和后续通过多头注意力机制对文本区域元素融合特征进行上下文特征聚合得到的全局特征,进行进一步融合,以达到对目标表格图像区域的整体识别,而不是针对单个文本区域的局部识别,提升表格识别准确度。The above-mentioned adjacent feature generation module realizes the further integration and dimensionality reduction processing of the text region element fusion features, and obtains the multi-head graph features after dimensionality reduction, which is convenient and subsequent to the text region element fusion features through the multi-head attention mechanism. The global features of the target form are further fused to achieve the overall recognition of the target form image area, rather than the local recognition of a single text area, to improve the accuracy of form recognition.

在一个实施例中,邻接特征生成模块还包括全局特征生成模块,用于:In one embodiment, the adjacent feature generation module further includes a global feature generation module for:

根据多头注意力机制,对与各结点对应的文本区域元素融合特征进行上下文特征聚合,得到与文本区域元素融合特征对应的结点的全局特征。According to the multi-head attention mechanism, the context feature aggregation is performed on the text region element fusion features corresponding to each node, and the global features of the nodes corresponding to the text region element fusion features are obtained.

在一个实施例中,行列关系预测结果生成模块还用于:In one embodiment, the row-column relationship prediction result generation module is further configured to:

将任意两个结点的邻接特征进行特征拼接,得到拼接后的邻接矩阵;根据全连接神经网络对拼接后的邻接矩阵,进行二分类预测,得到相应的文本区域的行列关系预测结果;其中,二分类预测包括行关系预测和列关系预测。Perform feature splicing on the adjacency features of any two nodes to obtain a spliced adjacency matrix; perform two-class prediction on the spliced adjacency matrix according to the fully connected neural network, and obtain the row-column relationship prediction result of the corresponding text area; among them, Two-category prediction includes row relationship prediction and column relationship prediction.

关于表格结构识别装置的具体限定可以参见上文中对于表格结构识别方法的限定,在此不再赘述。上述表格结构识别装置中的各个模块可全部或部分通过软件、硬件及其组合来实现。上述各模块可以硬件形式内嵌于或独立于计算机设备中的处理器中,也可以以软件形式存储于计算机设备中的存储器中,以便于处理器调用执行以上各个模块对应的操作。For specific limitations on the table structure identification device, reference may be made to the above limitations on the table structure identification method, which will not be repeated here. Each module in the above-mentioned table structure identification device may be implemented in whole or in part by software, hardware and combinations thereof. The above modules can be embedded in or independent of the processor in the computer device in the form of hardware, or stored in the memory in the computer device in the form of software, so that the processor can call and execute the operations corresponding to the above modules.

在一个实施例中,提供了一种计算机设备,该计算机设备可以是服务器,其内部结构图可以如图12所示。该计算机设备包括通过系统总线连接的处理器、存储器和网络接口。其中,该计算机设备的处理器用于提供计算和控制能力。该计算机设备的存储器包括非易失性存储介质、内存储器。该非易失性存储介质存储有操作系统、计算机程序和数据库。该内存储器为非易失性存储介质中的操作系统和计算机程序的运行提供环境。该计算机设备的数据库用于存储文本区域、图像特征、坐标特征、文本区域元素融合特征、邻接特征以及行列关系预测结果等数据。该计算机设备的网络接口用于与外部的终端通过网络连接通信。该计算机程序被处理器执行时以实现一种表格结构识别方法。In one embodiment, a computer device is provided, and the computer device may be a server, and its internal structure diagram may be as shown in FIG. 12 . The computer device includes a processor, memory, and a network interface connected by a system bus. Among them, the processor of the computer device is used to provide computing and control capabilities. The memory of the computer device includes a non-volatile storage medium, an internal memory. The nonvolatile storage medium stores an operating system, a computer program, and a database. The internal memory provides an environment for the execution of the operating system and computer programs in the non-volatile storage medium. The database of the computer device is used to store data such as text area, image feature, coordinate feature, text area element fusion feature, adjacency feature, and row-column relationship prediction result. The network interface of the computer device is used to communicate with an external terminal through a network connection. The computer program, when executed by the processor, implements a table structure identification method.

本领域技术人员可以理解,图12中示出的结构,仅仅是与本申请方案相关的部分结构的框图,并不构成对本申请方案所应用于其上的计算机设备的限定,具体的计算机设备可以包括比图中所示更多或更少的部件,或者组合某些部件,或者具有不同的部件布置。Those skilled in the art can understand that the structure shown in FIG. 12 is only a block diagram of a partial structure related to the solution of the present application, and does not constitute a limitation on the computer equipment to which the solution of the present application is applied. Include more or fewer components than shown in the figures, or combine certain components, or have a different arrangement of components.

在一个实施例中,还提供了一种计算机设备,包括存储器和处理器,存储器中存储有计算机程序,该处理器执行计算机程序时实现上述各方法实施例中的步骤。In one embodiment, a computer device is also provided, including a memory and a processor, where a computer program is stored in the memory, and the processor implements the steps in the foregoing method embodiments when the processor executes the computer program.