CN114328800A - Text processing method, apparatus, electronic device, and computer-readable storage medium - Google Patents

Text processing method, apparatus, electronic device, and computer-readable storage mediumDownload PDFInfo

- Publication number

- CN114328800A CN114328800ACN202111351720.9ACN202111351720ACN114328800ACN 114328800 ACN114328800 ACN 114328800ACN 202111351720 ACN202111351720 ACN 202111351720ACN 114328800 ACN114328800 ACN 114328800A

- Authority

- CN

- China

- Prior art keywords

- text

- sample

- word

- keyword

- features

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Granted

Links

- 238000003672processing methodMethods0.000titleclaimsabstractdescription36

- 238000012545processingMethods0.000claimsabstractdescription189

- 238000000605extractionMethods0.000claimsabstractdescription48

- 230000011218segmentationEffects0.000claimsabstractdescription42

- 238000012549trainingMethods0.000claimsdescription82

- 238000002372labellingMethods0.000claimsdescription10

- 230000004927fusionEffects0.000claimsdescription8

- 238000004590computer programMethods0.000claimsdescription7

- 238000012216screeningMethods0.000claimsdescription4

- 238000001914filtrationMethods0.000claimsdescription3

- 239000013598vectorSubstances0.000description35

- 238000000034methodMethods0.000description29

- 230000006870functionEffects0.000description18

- 238000010586diagramMethods0.000description7

- 241000393496ElectraSpecies0.000description5

- 238000004891communicationMethods0.000description4

- 238000013528artificial neural networkMethods0.000description3

- 238000007726management methodMethods0.000description3

- 238000009825accumulationMethods0.000description2

- 238000005516engineering processMethods0.000description2

- 230000003287optical effectEffects0.000description2

- 238000007781pre-processingMethods0.000description2

- 230000001133accelerationEffects0.000description1

- 238000013473artificial intelligenceMethods0.000description1

- 230000009286beneficial effectEffects0.000description1

- 238000004364calculation methodMethods0.000description1

- 238000010276constructionMethods0.000description1

- 238000001514detection methodMethods0.000description1

- 238000011161developmentMethods0.000description1

- 230000018109developmental processEffects0.000description1

- 238000007599dischargingMethods0.000description1

- 230000000694effectsEffects0.000description1

- 230000010354integrationEffects0.000description1

- 238000012544monitoring processMethods0.000description1

- 238000003058natural language processingMethods0.000description1

- 238000010606normalizationMethods0.000description1

- 238000007500overflow downdraw methodMethods0.000description1

- 230000000644propagated effectEffects0.000description1

- 238000011160researchMethods0.000description1

- 239000007787solidSubstances0.000description1

- 238000001228spectrumMethods0.000description1

Images

Classifications

- Y—GENERAL TAGGING OF NEW TECHNOLOGICAL DEVELOPMENTS; GENERAL TAGGING OF CROSS-SECTIONAL TECHNOLOGIES SPANNING OVER SEVERAL SECTIONS OF THE IPC; TECHNICAL SUBJECTS COVERED BY FORMER USPC CROSS-REFERENCE ART COLLECTIONS [XRACs] AND DIGESTS

- Y02—TECHNOLOGIES OR APPLICATIONS FOR MITIGATION OR ADAPTATION AGAINST CLIMATE CHANGE

- Y02D—CLIMATE CHANGE MITIGATION TECHNOLOGIES IN INFORMATION AND COMMUNICATION TECHNOLOGIES [ICT], I.E. INFORMATION AND COMMUNICATION TECHNOLOGIES AIMING AT THE REDUCTION OF THEIR OWN ENERGY USE

- Y02D10/00—Energy efficient computing, e.g. low power processors, power management or thermal management

Landscapes

- Information Retrieval, Db Structures And Fs Structures Therefor (AREA)

Abstract

Description

Translated fromChinese技术领域technical field

本发明涉及通信技术领域,具体涉及一种文本处理方法、装置、电子设备和计算机可读存储介质。The present invention relates to the field of communication technologies, and in particular, to a text processing method, an apparatus, an electronic device and a computer-readable storage medium.

背景技术Background technique

近年来,随着互联网技术的飞速发展,网络上出现了海量的文本,就需要在这些海量的文本中检索出需要的目标文本。在检索这些文本的过程中,往往需要对文本进行处理,从而实现文本的在线检索。现有的文本处理方法往往通过Bert模型(双塔模型)提取文本的文本特征,根据文本特征,在海量的文本中检索出目标文本。In recent years, with the rapid development of Internet technology, massive texts have appeared on the Internet, and it is necessary to retrieve the desired target texts from these massive texts. In the process of retrieving these texts, it is often necessary to process the texts so as to realize online retrieval of the texts. The existing text processing methods often extract the text features of the text through the Bert model (two-tower model), and retrieve the target text from the massive text according to the text features.

在对现有技术的研究和实践过程中,本发明的发明人发现通过Bert模型提取的文本特征往往会存在一些信息噪声,使得提取的文本特征的准确性不足,因此,导致文本处理的准确性不足。During the research and practice of the prior art, the inventors of the present invention found that the text features extracted by the Bert model often have some information noise, which makes the accuracy of the extracted text features insufficient, thus resulting in the accuracy of text processing. insufficient.

发明内容SUMMARY OF THE INVENTION

本发明实施例提供一种文本处理方法、装置、电子设备和计算机可读存储介质,可以提高文本处理的准确性。Embodiments of the present invention provide a text processing method, apparatus, electronic device, and computer-readable storage medium, which can improve the accuracy of text processing.

一种文本处理方法,包括:A text processing method comprising:

获取文本词样本和文本样本对,所述文本词样本包括标注关键词类别的文本词,所述文本样本对包括标注语义匹配关系的文本对;acquiring a text word sample and a text sample pair, the text word sample includes a text word marked with a keyword category, and the text sample pair includes a text pair marked with a semantic matching relationship;

采用预设文本处理模型对所述文本样本对中的文本样本进行分词,并对分词后的目标文本词和文本词样本进行特征提取,得到所述目标文本词的文本词特征和所述文本词样本的文本词样本特征;A preset text processing model is used to segment the text samples in the text sample pair, and feature extraction is performed on the segmented target text words and text word samples to obtain the text word features of the target text words and the text words The text word sample features of the sample;

基于所述文本词特征和文本词样本特征,对所述目标文本词和文本词样本进行关键词类别识别,得到所述目标文本词的第一关键词类别和所述文本词样本的第二关键词类别;Based on the feature of the text word and the feature of the text word sample, perform keyword category recognition on the target text word and the text word sample to obtain the first keyword category of the target text word and the second keyword of the text word sample word category;

根据所述第一关键词类别,对所述文本词特征进行加权,以得到所述文本样本对中每一文本样本的文本特征,并计算所述文本特征之间的特征距离;Weighting the text word features according to the first keyword category to obtain text features of each text sample in the text sample pair, and calculating a feature distance between the text features;

基于所述第二关键词类别、标注关键词类别、特征距离和标注语义匹配关系对预设文本处理模型进行收敛,得到训练后文本处理模型,并采用所述训练后文本处理模型检索目标文本。The preset text processing model is converged based on the second keyword category, label keyword category, feature distance and label semantic matching relationship to obtain a post-training text processing model, and the post-training text processing model is used to retrieve the target text.

相应的,本发明实施例提供一种文本处理装置,包括:Correspondingly, an embodiment of the present invention provides a text processing apparatus, including:

获取单元,用于获取文本词样本和文本样本对,所述文本词样本包括标注关键词类别的文本词,所述文本样本对包括标注语义匹配关系的文本对;an obtaining unit, configured to obtain a text word sample and a text sample pair, the text word sample includes a text word marked with a keyword category, and the text sample pair includes a text pair marked with a semantic matching relationship;

分词单元,用于采用预设文本处理模型对所述文本样本对中的文本样本进行分词,并对分词后的目标文本词和文本词样本进行特征提取,得到所述目标文本词的文本词特征和所述文本词样本的文本词样本特征;A word segmentation unit, used for segmenting the text samples in the text sample pair by using a preset text processing model, and performing feature extraction on the target text words and text word samples after word segmentation, so as to obtain the text word features of the target text words and the text word sample feature of the text word sample;

识别单元,用于基于所述文本词特征和文本词样本特征,对所述目标文本词和文本词样本进行关键词类别识别,得到所述目标文本词的第一关键词类别和所述文本词样本的第二关键词类别;An identification unit, configured to perform keyword category recognition on the target text word and text word sample based on the text word feature and the text word sample feature, to obtain the first keyword category of the target text word and the text word the second keyword category of the sample;

加权单元,用于根据所述第一关键词类别,对所述文本词特征进行加权,以得到所述文本样本对中每一文本样本的文本特征,并计算文本特征之间的特征距离;a weighting unit, configured to weight the text word features according to the first keyword category, to obtain the text features of each text sample in the text sample pair, and calculate the feature distance between the text features;

检索单元,用于基于所述第二关键词类别、标注关键词类别、特征距离和标注语义匹配关系对预设文本处理模型进行收敛,得到训练后文本处理模型,并采用所述训练后文本处理模型检索目标文本。A retrieval unit, configured to converge a preset text processing model based on the second keyword category, labeling keyword category, feature distance, and labeling semantic matching relationship, obtain a post-training text processing model, and use the post-training text processing The model retrieves the target text.

可选的,在一些实施例中,所述加权单元,具体可以用于根据所述第一关键词类别,确定所述文本词特征的文本权重;基于所述文本权重,对所述文本词特征进行加权,并将加权后文本词特征进行融合,得到所述文本样本对中每一文本样本的文本特征。Optionally, in some embodiments, the weighting unit may be specifically configured to determine the text weight of the text word feature according to the first keyword category; Weighting is performed, and the weighted text word features are fused to obtain text features of each text sample in the text sample pair.

可选的,在一些实施例中,所述加权单元,具体可以用于在所述第一关键词类别中识别出每一关键词类别的类别概率,得到第一类别概率;在所述第一类别概率中筛选出至少一个预设关键关键词类别的类别概率,得到基础类别概率;将所述基础类别概率进行融合,得到所述文本词特征的文本权重。Optionally, in some embodiments, the weighting unit may be specifically configured to identify the category probability of each keyword category in the first keyword category, and obtain the first category probability; The category probability of at least one preset key keyword category is screened out from the category probability to obtain the basic category probability; the basic category probability is fused to obtain the text weight of the text word feature.

可选的,在一些实施例中,所述加权单元,具体可以用于将所述加权后文本词特征进行融合,得到融合后文本特征;在所述融合后文本特征中提取出所述查询文本样本对应的查询文本特征和所述目标文本样本对应的至少一个字段文本特征;将所述字段文本特征进行融合,得到目标字段文本特征,并将所述目标字段文本特征和查询文本特征作为所述文本样本对中每一文本样本的文本特征。Optionally, in some embodiments, the weighting unit may be specifically configured to fuse the weighted text word features to obtain fused text features; and extract the query text from the fused text features. The query text feature corresponding to the sample and at least one field text feature corresponding to the target text sample; the field text feature is fused to obtain the target field text feature, and the target field text feature and the query text feature are used as the Text features for each text sample in a text sample pair.

可选的,在一些实施例中,所述加权单元,具体可以用于对所述字段文本特征进行关联特征提取,得到所述字段文本特征的关联特征;基于所述关联特征,确定所述字段文本特征的关联权重,所述关联权重用于指示字段文本特征之间的关联关系;根据所述关联权重,对所述字段文本特征进行加权,并将加权后的字段文本特征进行融合,得到目标字段文本特征。Optionally, in some embodiments, the weighting unit may be specifically configured to perform associated feature extraction on the field text feature to obtain the associated feature of the field text feature; based on the associated feature, determine the field The association weight of the text feature, the association weight is used to indicate the association relationship between the field text features; according to the association weight, the field text features are weighted, and the weighted field text features are fused to obtain the target Field text feature.

可选的,在一些实施例中,所述检索单元,具体可以用于基于所述第二关键词类别和标注关键词类别,确定所述文本词样本的关键词损失信息;根据所述标注语义匹配关系和特征距离,确定所述文本样本对的文本损失信息;基于所述关键词损失信息和文本损失信息,对所述预设文本处理模型进行收敛,得到训练后文本处理模型。Optionally, in some embodiments, the retrieval unit may be specifically configured to determine the keyword loss information of the text word sample based on the second keyword category and the labeled keyword category; The matching relationship and feature distance are used to determine the text loss information of the text sample pair; based on the keyword loss information and the text loss information, the preset text processing model is converged to obtain a post-training text processing model.

可选的,在一些实施例中,所述检索单元,具体可以用于在所述第二关键词类别中识别出每一关键词类别的类别概率,得到第二类别概率;在所述第二类别概率中筛选出所述标注关键词类别对应的类别概率,得到目标类别概率;将目标类别概率与标注关键词类别进行融合,并计算融合后关键词类别的均值,得到所述文本词样本的关键词损失信息。Optionally, in some embodiments, the retrieval unit may be specifically configured to identify the category probability of each keyword category in the second keyword category, and obtain the second category probability; The category probability corresponding to the labeled keyword category is screened out from the category probability, and the target category probability is obtained; the target category probability is fused with the labeled keyword category, and the average value of the fused keyword category is calculated to obtain the text word sample. Keyword loss information.

可选的,在一些实施例中,所述检索单元,具体可以用于根据所述标注语义匹配关系,确定所述文本样本对的匹配参数;当所述匹配参数为预设匹配参数,且所述特征距离小于预设距离阈值时,将所述匹配参数与特征距离进行融合,得到所述文本样本对的文本损失信息。Optionally, in some embodiments, the retrieval unit may be specifically configured to determine the matching parameter of the text sample pair according to the annotation semantic matching relationship; when the matching parameter is a preset matching parameter, and the When the feature distance is less than a preset distance threshold, the matching parameter and the feature distance are fused to obtain text loss information of the text sample pair.

可选的,在一些实施例中,所述检索单元,具体可以用于计算所述特征距离与所述预设距离阈值的距离差值;计算所述匹配参数与预设参数阈值的参数差值,并将所述距离差值与参数差值进行融合;将融合后差值、匹配参数和特征距离进行融合,得到所述文本样本的文本损失信息。Optionally, in some embodiments, the retrieval unit may be specifically configured to calculate the distance difference between the feature distance and the preset distance threshold; calculate the parameter difference between the matching parameter and the preset parameter threshold. , and fuse the distance difference with the parameter difference; fuse the fused difference, the matching parameter and the feature distance to obtain the text loss information of the text sample.

可选的,在一些实施例中,所述检索单元,具体可以用于获取损失权重,并基于所述损失权重,分别对所述关键词损失信息和文本损失信息进行加权;将加权后关键词损失信息和加权后文本损失信息进行融合,得到目标损失信息;采用所述加权后关键词损失信息对所述关键词识别网络进行收敛,得到训练后关键词识别网络;采用目标损失信息对所述特征提取网络进行收敛,得到训练后特征提取网络,并将所述训练后关键词识别网络和训练后特征提取网络作为训练后文本处理模型。Optionally, in some embodiments, the retrieval unit may be specifically configured to obtain a loss weight, and based on the loss weight, weight the keyword loss information and the text loss information respectively; The loss information and the weighted text loss information are fused to obtain target loss information; the weighted keyword loss information is used to converge the keyword recognition network to obtain a trained keyword recognition network; The feature extraction network converges to obtain a post-training feature extraction network, and the post-training keyword recognition network and the post-training feature extraction network are used as a post-training text processing model.

可选的,在一些实施例中,所述识别单元,具体可以用于采用所述关键词识别网络分别对文本词特征和文本词样本特征进行归一化处理;根据归一化后的文本词特征,映射出所述目标文本词属于每一关键词类别的类别概率,得到所述目标文本词的第一关键词类别;基于归一化后的文本词样本特征,映射出所述文本词样本属于每一关键词类别的类别概率,得到所述文本词样本的第二关键词类别。Optionally, in some embodiments, the identification unit may be specifically configured to use the keyword identification network to respectively normalize the text word features and the text word sample features; feature, map the category probability that the target text word belongs to each keyword category, and obtain the first keyword category of the target text word; based on the normalized text word sample features, map the text word sample The class probability belonging to each keyword category is obtained, and the second keyword category of the text word sample is obtained.

可选的,在一些实施例中,所述检索单元,具体可以用于获取候选文本集合,并采用所述训练后文本处理模型对所述候选文本集合中的每一候选文本进行特征提取,得到候选文本特征集合;根据所述候选文本特征集合中的候选文本特征,构建所述候选文本特征集合对应的索引信息;当接收到查询文本时,根据所述索引信息和查询文本,在所述候选文本集合中筛选出至少一个候选文本作为目标文本。Optionally, in some embodiments, the retrieval unit may be specifically configured to obtain a candidate text set, and use the post-training text processing model to perform feature extraction on each candidate text in the candidate text set, to obtain: A candidate text feature set; according to the candidate text features in the candidate text feature set, construct index information corresponding to the candidate text feature set; when receiving query text, according to the index information and query text, in the candidate text feature set At least one candidate text is screened out from the text set as the target text.

可选的,在一些实施例中,所述检索单元,具体可以用于采用所述训练后文本处理模型对所述查询文本进行特征提取,得到所述查询文本的查询文本特征;基于所述索引信息,在所述候选文本特征集合中检索出所述查询文本特征对应的至少一个候选文本特征,得到目标候选文本特征;在所述候选文本集合中筛选出所述目标候选文本特征对应的候选文本,得到所述查询文本对应的目标文本。Optionally, in some embodiments, the retrieval unit may be specifically configured to use the post-training text processing model to perform feature extraction on the query text to obtain query text features of the query text; based on the index information, retrieve at least one candidate text feature corresponding to the query text feature in the candidate text feature set, and obtain the target candidate text feature; screen out the candidate text corresponding to the target candidate text feature in the candidate text set to obtain the target text corresponding to the query text.

可选的,在一些实施例中,所述获取单元,具体可以用于获取文本样本集合,并在所述文本样本集合中筛选出至少一个文本样本和所述文本样本对应的语义文本样本,所述语义文本样本为与所述文本样本存在语义关系的文本样本;采用所述预设文本处理模型对所述文本样本进行分词,并在分词后的文本词中标注关键词类别,得到文本词样本;根据所述文本样本与语义文本样本之间的语义关系,在所述文本样本与语义文本样本组成的文本对中标注语义匹配关系,得到文本样本对。Optionally, in some embodiments, the acquiring unit may be specifically configured to acquire a text sample set, and filter out at least one text sample and a semantic text sample corresponding to the text sample from the text sample set, where Describe the semantic text sample as a text sample that has a semantic relationship with the text sample; use the preset text processing model to segment the text sample, and mark the keyword category in the segmented text words to obtain text word samples ; According to the semantic relationship between the text sample and the semantic text sample, mark the semantic matching relationship in the text pair composed of the text sample and the semantic text sample, and obtain the text sample pair.

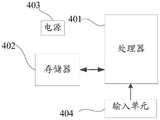

此外,本发明实施例还提供一种电子设备,包括处理器和存储器,所述存储器存储有应用程序,所述处理器用于运行所述存储器内的应用程序实现本发明实施例提供的文本处理方法。In addition, an embodiment of the present invention further provides an electronic device, including a processor and a memory, the memory stores an application program, and the processor is configured to run the application program in the memory to implement the text processing method provided by the embodiment of the present invention .

此外,本发明实施例还提供一种计算机可读存储介质,所述计算机可读存储介质存储有多条指令,所述指令适于处理器进行加载,以执行本发明实施例所提供的任一种文本处理方法中的步骤。In addition, an embodiment of the present invention further provides a computer-readable storage medium, where the computer-readable storage medium stores a plurality of instructions, and the instructions are adapted to be loaded by a processor to execute any one of the instructions provided by the embodiments of the present invention. steps in a text processing method.

本发明实施例在获取文本词样本和文本样本对后,采用预设文本处理模型对文本样本对中的文本样本进行分词,并对分词后的目标文本词和文本词样本进行特征提取,得到目标文本词的文本词特征和文本词样本的文本词样本特征,然后,基于文本词特征和文本词样本特征,对目标文本词和文本词样本进行关键词类别识别,得到目标文本词的第一关键词类别和文本词样本的第二关键词类别,然后,根据第一关键词类别,对文本词特征进行加权,以得到文本样本对中每一文本样本的文本特征,并计算文本特征之间的特征距离,然后,基于第二关键词类别、标注关键词类别、特征距离和标注语义匹配关系对预设文本处理模型进行收敛,得到训练后文本处理模型,并采用训练后文本处理模型检索目标文本;由于该方案通过多任务框架,将关键词类别识别任务和语义匹配任务同时进行训练,并通过识别出第一关键词类别,对文本词特征进行加权,显式增强了文本处理模型在语义匹配任务中对词权重识别能力,从而有效降低信息噪声,因此,可以提升文本处理的准确性。In this embodiment of the present invention, after acquiring text word samples and text sample pairs, a preset text processing model is used to segment the text samples in the text sample pairs, and feature extraction is performed on the segmented target text words and text word samples to obtain the target The text word feature of the text word and the text word sample feature of the text word sample, and then, based on the text word feature and the text word sample feature, the keyword category recognition is performed on the target text word and the text word sample, and the first keyword of the target text word is obtained. The word category and the second keyword category of the text word samples, and then, according to the first keyword category, the text word features are weighted to obtain the text features of each text sample in the text sample pair, and calculate the difference between the text features. feature distance, and then, based on the second keyword category, label keyword category, feature distance and label semantic matching relationship, the preset text processing model is converged to obtain a post-training text processing model, and the post-training text processing model is used to retrieve the target text ; Because the scheme uses the multi-task framework to train the keyword category recognition task and the semantic matching task at the same time, and by identifying the first keyword category, the text word features are weighted, which obviously enhances the text processing model in semantic matching. The ability to recognize word weights in the task can effectively reduce information noise, thus improving the accuracy of text processing.

附图说明Description of drawings

为了更清楚地说明本发明实施例中的技术方案,下面将对实施例描述中所需要使用的附图作简单地介绍,显而易见地,下面描述中的附图仅仅是本发明的一些实施例,对于本领域技术人员来讲,在不付出创造性劳动的前提下,还可以根据这些附图获得其他的附图。In order to illustrate the technical solutions in the embodiments of the present invention more clearly, the following briefly introduces the accompanying drawings used in the description of the embodiments. Obviously, the accompanying drawings in the following description are only some embodiments of the present invention. For those skilled in the art, other drawings can also be obtained from these drawings without creative effort.

图1是本发明实施例提供的文本处理方法的场景示意图;Fig. 1 is a scene schematic diagram of a text processing method provided by an embodiment of the present invention;

图2是本发明实施例提供的文本处理方法的流程示意图;2 is a schematic flowchart of a text processing method provided by an embodiment of the present invention;

图3是本发明实施例提供的文本检索的检索示意图;Fig. 3 is the retrieval schematic diagram of the text retrieval provided by the embodiment of the present invention;

图4是本发明实施例提供的文本处理流程的核心框架的示意图;4 is a schematic diagram of a core framework of a text processing flow provided by an embodiment of the present invention;

图5是本发明实施例提供的文本处理流程中的多任务学习框架图;5 is a multi-task learning framework diagram in a text processing flow provided by an embodiment of the present invention;

图6是本发明实施例提供的关键词识别任务的流程示意图;6 is a schematic flowchart of a keyword identification task provided by an embodiment of the present invention;

图7是本发明实施例提供的语义匹配任务的流程示意图;7 is a schematic flowchart of a semantic matching task provided by an embodiment of the present invention;

图8是本发明实施例提供的文本处理方法的另一流程示意图;8 is another schematic flowchart of a text processing method provided by an embodiment of the present invention;

图9是本发明实施例提供的文本处理装置的结构示意图;9 is a schematic structural diagram of a text processing apparatus provided by an embodiment of the present invention;

图10是本发明实施例提供的电子设备的结构示意图。FIG. 10 is a schematic structural diagram of an electronic device provided by an embodiment of the present invention.

具体实施方式Detailed ways

下面将结合本发明实施例中的附图,对本发明实施例中的技术方案进行清楚、完整地描述,显然,所描述的实施例仅仅是本发明一部分实施例,而不是全部的实施例。基于本发明中的实施例,本领域技术人员在没有作出创造性劳动前提下所获得的所有其他实施例,都属于本发明保护的范围。The technical solutions in the embodiments of the present invention will be clearly and completely described below with reference to the accompanying drawings in the embodiments of the present invention. Obviously, the described embodiments are only a part of the embodiments of the present invention, but not all of the embodiments. Based on the embodiments of the present invention, all other embodiments obtained by those skilled in the art without creative efforts shall fall within the protection scope of the present invention.

本发明实施例提供一种文本处理方法、装置、电子设备和计算机可读存储介质。其中,该文本处理装置可以集成在电子设备中,该电子设备可以是服务器,也可以是终端等设备。Embodiments of the present invention provide a text processing method, an apparatus, an electronic device, and a computer-readable storage medium. Wherein, the text processing apparatus may be integrated in an electronic device, and the electronic device may be a server or a terminal or other device.

其中,服务器可以是独立的物理服务器,也可以是多个物理服务器构成的服务器集群或者分布式系统,还可以是提供云服务、云数据库、云计算、云函数、云存储、网络服务、云通信、中间件服务、域名服务、安全服务、网络加速服务(Content Delivery Network,CDN)、以及大数据和人工智能平台等基础云计算服务的云服务器。终端可以是智能手机、平板电脑、笔记本电脑、台式计算机、智能音箱、智能手表等,但并不局限于此。终端以及服务器可以通过有线或无线通信方式进行直接或间接地连接,本申请在此不做限制。The server may be an independent physical server, or a server cluster or distributed system composed of multiple physical servers, or may provide cloud services, cloud databases, cloud computing, cloud functions, cloud storage, network services, and cloud communications. , middleware services, domain name services, security services, network acceleration services (Content Delivery Network, CDN), and cloud servers for basic cloud computing services such as big data and artificial intelligence platforms. The terminal may be a smart phone, a tablet computer, a notebook computer, a desktop computer, a smart speaker, a smart watch, etc., but is not limited thereto. The terminal and the server may be directly or indirectly connected through wired or wireless communication, which is not limited in this application.

例如,参见图1,以文本处理装置集成在电子设备中为例,电子设备在获取文本词样本和文本样本对后,采用预设文本处理模型对文本样本对中的文本样本进行分词,并对分词后的目标文本词和文本词样本进行特征提取,得到目标文本词的文本词特征和文本词样本的文本词样本特征,然后,基于文本词特征和文本词样本特征,对目标文本词和文本词样本进行关键词类别识别,得到目标文本词的第一关键词类别和文本词样本的第二关键词类别,然后,根据第一关键词类别,对文本词特征进行加权,以得到文本样本对中每一文本样本的文本特征,并计算文本特征之间的特征距离,然后,基于第二关键词类别、标注关键词类别、特征距离和标注语义匹配关系对预设文本处理模型进行收敛,得到训练后文本处理模型,并采用训练后文本处理模型检索目标文本,进而提升文本处理的准确性。For example, referring to FIG. 1 , taking the integration of a text processing device into an electronic device as an example, after acquiring a text word sample and a text sample pair, the electronic device uses a preset text processing model to segment the text samples in the text sample pair, and performs word segmentation on the text samples in the text sample pair. Feature extraction is performed on the target text words and text word samples after word segmentation, and the text word features of the target text words and the text word sample features of the text word samples are obtained. Then, based on the text word features and the text word sample features, the target text words and text The word sample is subjected to keyword category recognition to obtain the first keyword category of the target text word and the second keyword category of the text word sample, and then, according to the first keyword category, the text word features are weighted to obtain the text sample pair. the text features of each text sample in the The post-training text processing model is trained, and the post-training text processing model is used to retrieve the target text, thereby improving the accuracy of text processing.

以下分别进行详细说明。需要说明的是,以下实施例的描述顺序不作为对实施例优选顺序的限定。Each of them will be described in detail below. It should be noted that the description order of the following embodiments is not intended to limit the preferred order of the embodiments.

本实施例将从文本处理装置的角度进行描述,该文本处理装置具体可以集成在电子设备中,该电子设备可以是服务器,也可以是终端等设备;其中,该终端可以包括平板电脑、笔记本电脑、以及个人计算机(PC,Personal Computer)、可穿戴设备、虚拟现实设备或其他可以进行文本处理的智能设备等设备。This embodiment will be described from the perspective of a text processing apparatus. The text processing apparatus may be specifically integrated into an electronic device, and the electronic device may be a server or a terminal or other equipment; the terminal may include a tablet computer, a notebook computer, etc. , and devices such as a personal computer (PC, Personal Computer), a wearable device, a virtual reality device, or other smart devices that can perform text processing.

一种文本处理方法,包括:A text processing method comprising:

获取文本词样本和文本样本对,该文本词样本包括标注关键词类别的文本词,该文本样本对包括标注语义匹配关系的文本对,采用预设文本处理模型对文本样本对中的文本样本进行分词,并对分词后的目标文本词和文本词样本进行特征提取,得到目标文本词的文本词特征和文本词样本的文本词样本特征,基于文本词特征和文本词样本特征,对目标文本词和文本词样本进行关键词类别识别,得到目标文本词的第一关键词类别和文本词样本的第二关键词类别,根据第一关键词类别,对文本词特征进行加权,以得到文本样本对中每一文本样本的文本特征,并计算文本特征之间的特征距离,基于第二关键词类别、标注关键词类别、特征距离和标注语义匹配关系对预设文本处理模型进行收敛,得到训练后文本处理模型,并采用训练后文本处理模型检索目标文本。Obtain text word samples and text sample pairs, the text word samples include text words marked with keyword categories, and the text sample pairs include text pairs marked with semantic matching relationships, and the text samples in the text sample pairs are processed by using a preset text processing model. Word segmentation, and feature extraction of the target text words and text word samples after word segmentation, to obtain the text word features of the target text words and the text word sample features of the text word samples. Perform keyword category identification with text word samples to obtain the first keyword category of the target text word and the second keyword category of the text word sample, and weight the text word features according to the first keyword category to obtain the text sample pair. The text features of each text sample in the text sample, and the feature distance between the text features is calculated, and the preset text processing model is converged based on the second keyword category, the label keyword category, the feature distance and the label semantic matching relationship, and the post-training model is obtained. text processing model and retrieve the target text using the trained text processing model.

如图2所示,该文本处理方法的具体流程如下:As shown in Figure 2, the specific flow of the text processing method is as follows:

101、获取文本词样本和文本样本对。101. Acquire a text word sample and a text sample pair.

其中,文本词样本包括标注关键词类别的文本词,所谓关键词类别用于指示该文本词属于哪一个类别的关键词,关键词类别可以有多种,比如,可以分为三类,非关键词、一般关键词和重要关键词,这三类关键词可以分别用不同的标识进行表示,比如,非关键词可以用0表示,一般关键词可以用1表示,重要关键词可以用2表示,或者也可以用其他标识进行表示,但是不同关键词类别的标识往往不同。比如,文本序列为“保姆月嫂预约,定制筛选专业靠谱”为例,则对应的标注关键词类别结果就可以为“保/2,姆/2,月/2,嫂/2,预/1,约/1,定/0,制/0,筛/0,选/0,专/0,业/0,靠/0,谱/0”。Among them, the text word samples include text words marked with keyword categories. The so-called keyword categories are used to indicate which category of keywords the text words belong to. There can be many keyword categories. For example, they can be divided into three categories: non-keyword categories Words, general keywords and important keywords. These three types of keywords can be represented by different identifiers. For example, non-keywords can be represented by 0, general keywords can be represented by 1, and important keywords can be represented by 2. Or it can also be represented by other identifiers, but the identifiers of different keyword categories are often different. For example, if the text sequence is "Nanny Yuesao Appointment, Customized Screening Professional Reliability" as an example, then the corresponding marked keyword category results can be "bao/2, mu/2, month/2, sister-in-law/2, pre/ 1, about/1, fixed/0, system/0, sieve/0, selection/0, specialization/0, industry/0, reliance/0, spectrum/0”.

其中,文本样本对包括标注语义匹配关系的文本对,所谓语义匹配关系可以为文本对中的两段文本之间的语义相关性,若相关性高则认为两段文本匹配。很多自然语言处理任务都可转为语义匹配问题,例如网页搜索可抽象为用户的查询文本(query)与网页内容的相关性匹配问题,自动问答可抽象为问题与候选答案的满足度匹配问题,文本去重可抽象为文本与文本之间的相似度匹配问题。The text sample pair includes a text pair marked with a semantic matching relationship. The so-called semantic matching relationship may be the semantic correlation between two texts in the text pair. If the correlation is high, the two texts are considered to match. Many natural language processing tasks can be transformed into semantic matching problems. For example, web search can be abstracted as the correlation matching between the user's query text (query) and web content, and automatic question answering can be abstracted as the satisfaction matching between questions and candidate answers. Text deduplication can be abstracted as the similarity matching problem between texts.

其中,获取文本词样本和文本样本对的方式可以有多种,具体可以如下:There are various ways to obtain text word samples and text sample pairs, and the details can be as follows:

例如,可以获取文本样本集合,并在文本样本集合中筛选出至少一个文本样本和文本样本对应的语义文本样本,采用预设文本处理模型对文本样本进行分词,并在分词后的文本词中标注关键词类别,得到文本词样本,根据文本样本与语义文本样本之间的语义关系,在文本样本与语义文本样本组成的文本对中标注语义匹配关系,得到文本样本对。For example, a set of text samples can be obtained, at least one text sample and a semantic text sample corresponding to the text sample can be screened out from the set of text samples, a preset text processing model is used to segment the text samples, and the segmented text words are marked in the text. The keyword category is obtained, and text word samples are obtained. According to the semantic relationship between the text samples and the semantic text samples, the semantic matching relationship is marked in the text pairs composed of the text samples and the semantic text samples, and the text sample pairs are obtained.

其中,语义文本样本为与文本样本存在语义关系的文本样本,该语义关系为语义匹配和语义不匹配,筛选出文本样本对应的语义文本样本的方式可以有多种,比如,可以通过检索系统在文本样本集合中筛选出与文本样本语义匹配的文本样本作为语义文本样本,此时筛选出语义文本样本与文本样本之间的语义关系就可以为语义匹配,此时的文本样本对就可以为正文本样本对,还可以随机抽取离线库中的文本样本作为文本样本对应的语义文本样本,此时,抽取的语义文本样本与文本样本之间的语义关系就可以为语义不匹配,此时的文本样本对就可以为负文本样本对。Among them, the semantic text sample is a text sample that has a semantic relationship with the text sample, and the semantic relationship is semantic matching and semantic mismatch. There are many ways to filter out the semantic text samples corresponding to the text samples. The text samples that match the semantics of the text samples are screened out from the text sample set as the semantic text samples. At this time, the semantic relationship between the semantic text samples and the text samples can be selected as semantic matching, and the text sample pair at this time can be the main text. For this sample pair, the text samples in the offline library can also be randomly selected as the semantic text samples corresponding to the text samples. At this time, the semantic relationship between the extracted semantic text samples and the text samples can be semantic mismatch. At this time, the text The sample pair can then be a negative text sample pair.

其中,对文本样本进行分词的方式可以有多种,比如,可以采用预设文本处理模型中的Bert网络中的tokenization分词方法,将每一文本样本切分为单个token(令牌),这里的token指的是中文的单字、英文的单词或词根等。在分词后对文本词标注关键词类别的方式也可以有多种,比如,可以直接识别出文本词的关键词类别,然后,根据识别结果,在文本词上标注关键词类别,或者,还可以将切分出的文本词发送至标注服务器,接收标注服务器返回的文本词的关键词类别,并在文本词上标注对应的关键词类别,从而得到文本词样本。Among them, there are many ways to segment the text samples. For example, the tokenization tokenization method in the Bert network in the preset text processing model can be used to divide each text sample into a single token (token), where the Tokens refer to Chinese words, English words or root words, etc. There can also be various ways to mark the keyword category for the text word after word segmentation. For example, the keyword category of the text word can be directly identified, and then, according to the recognition result, the keyword category can be marked on the text word, or, you can also The segmented text words are sent to the tagging server, the keyword categories of the text words returned by the tagging server are received, and the corresponding keyword categories are tagged on the text words, thereby obtaining text word samples.

其中,在文本样本与语义文本样本组成的文本对中标注语义匹配关系的方式可以有多种,比如,可以根据文本样本与语义文本样本之间的语义关系,确定文本样本与语义文本样本的语义匹配关系,将文本样本与对应的语义文本样本组成文本对,并在该文本对中标注语义匹配关系,从而得到文本样本对。文本样本对可以包括正文本样本对和负文本样本对,正文本样本对中的文本样本与语义文本样本匹配,负文本样本对中的文本样本与语义文本样本不匹配,另外,当文本样本为查询文本样本时,对应的语义文本样本就可以为目标文本样本。There are various ways to mark the semantic matching relationship in the text pair composed of the text sample and the semantic text sample. For example, the semantic relationship between the text sample and the semantic text sample can be determined according to the semantic relationship between the text sample and the semantic text sample. Matching relationship, a text sample and a corresponding semantic text sample are formed into a text pair, and a semantic matching relationship is marked in the text pair, thereby obtaining a text sample pair. Text sample pairs can include positive text sample pairs and negative text sample pairs, the text samples in the positive text sample pair match the semantic text samples, and the text samples in the negative text sample pair do not match the semantic text samples. When querying a text sample, the corresponding semantic text sample can be the target text sample.

102、采用预设文本处理模型对文本样本对中的文本样本进行分词,并对分词后的目标文本词和文本词样本进行特征提取,得到目标文本词的文本特征和文本词样本的文本词样本特征。102. Use a preset text processing model to segment the text samples in the text sample pair, and perform feature extraction on the segmented target text words and text word samples to obtain text features of the target text words and text word samples of the text word samples. feature.

其中,采用预设文本处理模型对文本样本对中的文本样本进行分词的方式可以有多种,具体可以如下:Among them, there may be various ways to segment the text samples in the text sample pair by using the preset text processing model, and the details may be as follows:

例如,可以采用预设文本处理模型中的Bert网络中的tokenization分词方法,将每一文本样本切分为单个token,该token可以为中文的单字、英文的单词或词根等,从而得到文本样本对中每一文本样本分词后的目标文本词,或者,可以直接将文本样本对中的每一文本样本进行字符切分,切分为中文的单字、英文的单词或词根,从而得到文本样本对中每一文本样本分词后的目标文本词。For example, the tokenization word segmentation method in the Bert network in the preset text processing model can be used to divide each text sample into a single token, which can be a Chinese word, an English word or a root, etc., so as to obtain a pair of text samples. The target text word after word segmentation of each text sample in the text sample, or, each text sample in the text sample pair can be directly characterized and divided into Chinese words, English words or root words, so as to obtain the text sample pair. The target text word after tokenization of each text sample.

其中,在文本样本对将文本样本作为查询文本样本,对应的语义文本样本就可以为目标文本样本,在目标文本样本中可以先将拆分为多个字段,再针对每一个字段进行分词,从而得到每一字段分词后的目标文本词。Among them, in the text sample pair, the text sample is used as the query text sample, and the corresponding semantic text sample can be the target text sample. Get the target text word after tokenization of each field.

在对文本样本对中的文本样本进行分词后,便可以对分词后的目标文本词和文本词样本进行特征提取,特征提取的方式可以有多种,比如,可以采用预设文本处理模型中的Bert网络或者XLNet、ELECTRA等模型分别对目标文本词和文本词样本进行特征提取,从而得到每个目标文本词的向量表示(q1,q2,q3)和每个文本词样本的向量表示(t1,t2,t3),将目标文本词的向量表示作为目标文本词的文本词特征,将文本词样本的向量表示作为文本词样本的文本词样本特征。After word segmentation is performed on the text samples in the text sample pair, feature extraction can be performed on the segmented target text words and text word samples. There are various ways of feature extraction. Bert network or XLNet, ELECTRA and other models respectively perform feature extraction on the target text words and text word samples, so as to obtain the vector representation (q1 , q2 , q3 ) of each target text word and the vector representation of each text word sample. (t1 , t2 , t3 ), the vector representation of the target text word is used as the text word feature of the target text word, and the vector representation of the text word sample is used as the text word sample feature of the text word sample.

其中,在对目标文本词和文本词样本进行特征提取时,可以共享Bert网络的网络参数。Among them, the network parameters of Bert network can be shared when feature extraction is performed on target text words and text word samples.

103、基于文本词特征和文本词样本特征,对目标文本词和文本词样本进行关键词类别识别,得到目标文本词的第一关键词类别和文本词样本的第二关键词类别。103. Perform keyword category recognition on the target text word and the text word sample based on the text word feature and the text word sample feature, to obtain a first keyword category of the target text word and a second keyword category of the text word sample.

例如,可以采用预设文本处理模型中的关键词识别网络分别对文本词特征和文本词样本特征进行归一化处理,根据归一化后的文本词特征,映射出目标文本词属于每一关键词类别的类别概率,得到目标文本词的第一关键词类别,基于归一化后的文本词样本特征,映射出文本词样本属于每一关键词类别的类别概率,得到文本词样本的第二关键词类别。For example, the keyword recognition network in the preset text processing model can be used to normalize the text word features and the text word sample features respectively, and map the target text words belonging to each key word according to the normalized text word features. The category probability of the word category, the first keyword category of the target text word is obtained, and based on the normalized text word sample features, the category probability of the text word sample belonging to each keyword category is mapped, and the second keyword category of the text word sample is obtained. Keyword category.

其中,对文本词特征和文本词样本特征进行归一化处理的方式可以有多种,比如,可以采用全连接神经网络(Fully-Connected layer,FC)分别对文本词特征和文本词样本特征进行归一化处理。Among them, there are many ways to normalize the text word features and the text word sample features. For example, a fully-connected neural network (Fully-Connected layer, FC) can be used to perform the normalization processing on the text word features and the text word sample features respectively. Normalized processing.

在对文本词特征归一化处理之后,便可以基于归一化后的文本词特征计算出目标文本词的第一关键词类别,计算第一关键词类别的方式可以有多种,比如,通过Softmax函数计算每个目标文本词属于每个关键词类别的类别概率,譬如,以关键词类别为三类为例,则计算出类别概率就可以分为其中,为第i个目标文本词属于第0类关键词的概率,为第i个目标文本词属于第1类关键词的概率,为第i个目标文本词属于第2类关键词的概率,将作为目标文本词的第一关键词类别。After the text word features are normalized, the first keyword category of the target text word can be calculated based on the normalized text word features. There are many ways to calculate the first keyword category, for example, by The Softmax function calculates the category probability that each target text word belongs to each keyword category. For example, taking the keyword category as an example, the calculated category probability can be divided into in, is the probability that the i-th target text word belongs to the 0th category of keywords, is the probability that the i-th target text word belongs to the first category of keywords, is the probability that the i-th target text word belongs to the second category of keywords, set The first keyword category as the target text word.

在对文本词样本特征归一化处理之后,便可以基于归一化后的文本词样本特征计算出文本词样本的第二关键词类别,计算第二关键词类别的方式可以有多种,比如,通过Softmax函数计算每个文本词样本属于每个关键词类别的类别概率,譬如,以关键词类别为三类为例,则计算出的类别概率就可以为第i个文本词样本属于第0类的概率是属于第1类的概率是属于第2类的概率是将和作为文本词样本的第二关键词类别。After the features of the text word samples are normalized, the second keyword category of the text word samples can be calculated based on the normalized features of the text word samples. There are many ways to calculate the second keyword category, such as , the category probability of each text word sample belonging to each keyword category is calculated by the Softmax function. For example, taking the keyword category as an example, the calculated category probability can be that the ith text word sample belongs to the 0th category. The class probability is The probability of belonging to class 1 is The probability of belonging to class 2 is Will and A second keyword category that is a sample of text words.

其中,可以发现对目标文本词和文本词样本进行关键词类别识别的为预设文本处理模型中的关键词识别网络,该关键词识别网络的网络结构可以为FC-Softmax网络,且在识别文本词和文本词样本的关键词类别过程中,共享该FC-Softmax网络以及该网络的网络参数。Among them, it can be found that the keyword recognition network in the preset text processing model is used for keyword category recognition of the target text words and text word samples. The network structure of the keyword recognition network can be the FC-Softmax network, and the recognition In the process of keyword classification of words and text word samples, the FC-Softmax network and the network parameters of the network are shared.

104、根据第一关键词类别,对文本词特征进行加权,以得到文本样本对中每一文本样本的文本特征,并计算文本特征之间的特征距离。104. Weight the text word features according to the first keyword category to obtain the text features of each text sample in the text sample pair, and calculate the feature distance between the text features.

例如,可以根据第一关键词类别,确定文本词特征的文本权重,基于文本权重,对文本词特征进行加权,并将加权后文本词特征进行融合,得到文本样本对中每一文本样本的文本特征,计算文本特征之间的特征距离,具体可以如下:For example, the text weight of the text word feature may be determined according to the first keyword category, the text word feature may be weighted based on the text weight, and the weighted text word features may be fused to obtain the text of each text sample in the text sample pair. feature to calculate the feature distance between text features, which can be as follows:

S1、根据第一关键词类别,确定文本词特征的文本权重。S1. Determine the text weight of the text word feature according to the first keyword category.

其中,文本权重用于指示文本词特征对应的目标文本词在文本样本对中的每一目标文本词中的重要程度。基于该文本权重,从而可以更加准确的表征出文本样本对中每一文本样本的文本特征。The text weight is used to indicate the importance of the target text word corresponding to the text word feature in each target text word in the text sample pair. Based on the text weight, the text feature of each text sample in the text sample pair can be more accurately represented.

其中,根据第一关键词类别,确定文本词特征的文本权重的方式可以有多种,具体可以如下:Wherein, according to the first keyword category, there may be various ways to determine the text weight of the text word feature, which may be specifically as follows:

例如,在第一关键词类别中识别出每一关键词类别的类别概率,得到第一类别概率,在第一类别概率中筛选出至少一个预设关键词类别的类别概率,得到基础类别概率,将基础类别概率进行融合,得到文本词特征的文本权重。For example, the category probability of each keyword category is identified in the first keyword category, the first category probability is obtained, the category probability of at least one preset keyword category is screened out from the first category probability, and the basic category probability is obtained, The basic category probabilities are fused to obtain the text weights of the text word features.

其中,第一类别概率就可以为在第一类别概率中筛选出至少一个预设关键词类别的类别概率的方式可以有多种,比如,可以在第一类别概率中筛选出关键词类别为一般关键词和重要关键词的类别概率,从而得到基础类别概率,以第0类为非关键词、第1类为一般关键词和第2类为重要关键词为例,则就可以在筛选出作为基础类别概率。Among them, the first category probability can be There may be various ways to screen out the category probability of at least one preset keyword category from the first category probability. For example, the category probability of the keyword categories being general keywords and important keywords may be screened out from the first category probability. , so as to obtain the basic category probability. Taking the 0th category as a non-keyword, the 1st category as a general keyword, and the second category as an important keyword, you can Filter out as the base class probability.

在筛选出基础类别概率之后,便可以将基础类别概率进行融合,从而得到文本词特征的文本权重,融合的方式可以有多种,比如,获取每一基础类别概率的融合参数,将融合参数分别与对应的基础类别概率进行融合,然后,将融合后基础类别概率相加,得到目标基础类别概率,然后,计算目标基础类别概率的均值,从而该文本词特征的文本权重,具体可以如公式(1)所示:After the basic category probabilities are filtered out, the basic category probabilities can be fused to obtain the text weights of the text word features. There can be various fusion methods. For example, the fusion parameters of each basic category probability are obtained, and the fusion parameters are divided into It is fused with the corresponding basic category probability, and then, the basic category probability after fusion is added to obtain the target basic category probability, and then the mean value of the target basic category probability is calculated, so that the text weight of the text word feature can be specified as the formula ( 1) shown:

其中,wqi为第i个文本词特征的文本权重,和分别为基础类别概率。Among them, wqi is the text weight of the i-th text word feature, and are the base class probabilities, respectively.

S2、基于文本权重,对文本词特征进行加权,并将加权后文本词特征进行融合,得到文本样本对中每一文本样本的文本特征。S2. Based on the text weight, weight the text word features, and fuse the weighted text word features to obtain the text features of each text sample in the text sample pair.

其中,文本样本对包括查询文本样本和目标文本样本,查询文本样本可以为文本样本,目标文本样本就可以为文本样本对应的语义文本样本。The text sample pair includes a query text sample and a target text sample, the query text sample can be a text sample, and the target text sample can be a semantic text sample corresponding to the text sample.

其中,基于文本权重,对文本词特征进行加权,就可以得到加权后文本词特征,将加权后文本词特征进行融合,融合的方式可以有多种,具体可以如下;Among them, based on the text weight, the text word features are weighted, and the weighted text word features can be obtained, and the weighted text word features can be fused.

例如,基于文本权重,对文本词特征进行加权,得到加权后文本词特征,将加权后文本词特征进行融合,得到融合后文本特征(vecq=∑iwqi*qi)。在融合后文本特征中提取出查询文本样本对应的查询文本特征和目标文本样本对应的至少一个字段文本特征,将字段文本特征进行融合,得到目标字段文本特征,并将目标字段文本特征和查询文本特征作为文本样本对中每一文本样本的文本特征。For example, based on the text weight, the text word features are weighted to obtain the weighted text word features, and the weighted text word features are fused to obtain the fused text features (vecq =∑i wqi *qi) . Extract the query text feature corresponding to the query text sample and at least one field text feature corresponding to the target text sample from the fused text features, fuse the field text features to obtain the target field text feature, and combine the target field text feature with the query text The feature is used as the text feature of each text sample in the text sample pair.

其中,在融合后文本特征中提取出查询文本样本对应的查询文本特征和目标文本样本对应的至少一个字段文本特征的方式可以有多种,比如,可以在融合后文本特征中筛选出属于查询文本样本的文本特征,从而就可以得到查询文本样本对应的查询文本特征,在融合后文本特征中筛选出属于目标文本样本的每一个字段的文本特征,就可以得到至少一个字段文本特征。There are various ways to extract the query text feature corresponding to the query text sample and at least one field text feature corresponding to the target text sample from the fused text features. The text features of the samples can be obtained, so that the query text features corresponding to the query text samples can be obtained, and the text features of each field belonging to the target text samples can be screened out from the fused text features, and at least one field text feature can be obtained.

在筛选出字段文本特征之后,便可以将字段文本特征进行融合,从而得到目标字段文本特征,将字段文本特征进行融合的方式可以有多种,比如,对字段文本特征进行关联特征提取,得到字段文本特征的关联特征,基于关联特征,确定字段文本特征的关联权重,该关联权重用于指示字段文本特征之间的关联关系,根据关联权重,对字段文本特征进行加权,并将加权后的字段文本特征进行融合,得到目标字段文本特征,这里的目标字段文本特征就可以理解为目标文本样本对应的文本特征。After filtering out the field text features, the field text features can be fused to obtain the target field text features. There are many ways to fuse the field text features. The association feature of the text feature, based on the association feature, determine the association weight of the field text feature, the association weight is used to indicate the association relationship between the field text features, according to the association weight, the field text feature is weighted, and the weighted field The text features are fused to obtain the target field text features, where the target field text features can be understood as the text features corresponding to the target text samples.

其中,可以发现对字段文本特征进行融合的过程中重点关注了字段文本特征之间的关联权重,确定该关联权重的方式可以有多种,比如,可以采用注意力网络(Attention网络)提取出字段文本特征的关联特征,然后,基于该关联特征,计算每一字段文本特征的关联权重。Among them, it can be found that the process of fusing the field text features focuses on the correlation weight between the field text features. There are various ways to determine the correlation weight. For example, the attention network (Attention network) can be used to extract the field. The associated feature of the text feature, and then, based on the associated feature, the associated weight of the text feature of each field is calculated.

S3、计算文本特征之间的特征距离。S3. Calculate feature distances between text features.

其中,特征距离用于指示文本特征对应的文本样本之间的语义匹配关系,该特征距离的类型可以有多种,比如,可以包括欧式距离或者余弦距离等多种距离形式。The feature distance is used to indicate the semantic matching relationship between the text samples corresponding to the text feature, and the feature distance may be of various types, for example, may include various distance forms such as Euclidean distance or cosine distance.

其中,计算文本特征之间的特征距离的方式可以有多种,具体可以如下:Among them, there can be various ways to calculate the feature distance between text features, and the details can be as follows:

例如,可以直接计算文本特征之间的余弦距离,从而得到文本特征之间的特征距离,或者,还可以计算文本特征之间的欧式距离,从而得到文本特征之间的特征距离。For example, the cosine distance between the text features can be directly calculated to obtain the feature distance between the text features, or the Euclidean distance between the text features can also be calculated to obtain the feature distance between the text features.

105、基于第二关键词类别、标注关键词类别、特征距离和标注语义匹配关系对预设文本处理模型进行收敛,得到训练后文本处理模型,并采用训练后文本处理模型检索目标文本。105. Convergence the preset text processing model based on the second keyword category, the labeled keyword category, the feature distance, and the labeled semantic matching relationship to obtain a post-training text processing model, and use the post-training text processing model to retrieve the target text.

例如,可以基于第二关键词类别和标注关键词类别,确定文本词样本的关键词损失信息,根据标注语义匹配关系和特征距离,确定文本样本对的文本损失信息,基于关键词损失信息和文本损失信息,对预设文本处理模型进行收敛,得到训练后文本处理模型,采用训练后文本处理模型检索目标文本,具体可以如下:For example, the keyword loss information of the text word samples can be determined based on the second keyword category and the labeled keyword category, the text loss information of the text sample pair can be determined according to the labeled semantic matching relationship and the feature distance, and based on the keyword loss information and the text Loss information, converge the preset text processing model, obtain the post-training text processing model, and use the post-training text processing model to retrieve the target text, as follows:

C1、基于第二关键词类别和标注关键词类别,确定文本词样本的关键词损失信息。C1. Determine the keyword loss information of the text word sample based on the second keyword category and the labeled keyword category.

其中,关键词损失信息可以为预设文本处理模型在关键词类别识别任务中产生的损失信息。The keyword loss information may be loss information generated in the keyword category recognition task by a preset text processing model.

其中,基于第二关键词类别和标注关键词类别,确定文本词样本的关键词损失信息的方式可以有多种,具体可以如下:Wherein, based on the second keyword category and the marked keyword category, there may be various ways to determine the keyword loss information of the text word sample, which may be specifically as follows:

例如,在第二关键词类别中识别出每一关键词类别的类别概率,得到第二类别概率,在第二类别概率中筛选出与标注关键词类别对应的类别概率,得到目标类别概率,将目标类别概率与标注关键词类别进行融合,并计算融合后关键词类别的均值,得到文本词样本的关键词损失信息。For example, the category probability of each keyword category is identified in the second keyword category, the second category probability is obtained, the category probability corresponding to the marked keyword category is screened out from the second category probability, and the target category probability is obtained. The target category probability is fused with the labeled keyword category, and the mean value of the fused keyword category is calculated to obtain the keyword loss information of the text word sample.

其中,以关键词类别为三类为例,则在第二关键词类别中识别出第二类别概率可以为和在第二类别概率中筛选出目标类别概率的方式可以有多种,比如,当第i个文本词样本的标注关键词类别为第1类时,就可以在和筛选出为目标类别概率。Wherein, taking the keyword category as an example of three categories, the probability of identifying the second category in the second keyword category may be: and There are many ways to screen out the target category probability from the second category probability. For example, when the labeled keyword category of the i-th text word sample is the first category, you can and Filter out is the target class probability.

在筛选出目标类别概率之后,便可以将目标类别概率与标注关键词类别进行融合,融合的方式可以有多种,比如,可以根据标注关键词类别,确定该文本词样本的关键词类别参数,当第i个文本词样本属于第c类关键词时,该关键词参数就可以为1,反正该关键词参数就可以为0。对目标类别概率进行预处理之后,将预处理后的目标类别概率与关键词参数相乘,得到第i个文本词样本的基础关键词损失信息,然后,将文本词样本的基础关键词损失信息进行累加,并计算累加后关键词损失信息的均值,从而得到文本词样本的关键词损失信息,具体可以如公式(2)所示:After the target category probability is filtered out, the target category probability can be fused with the labeled keyword category. There are various ways of fusion. For example, the keyword category parameter of the text word sample can be determined according to the labeled keyword category. When the i-th text word sample belongs to the c-th keyword, the keyword parameter can be 1, and the keyword parameter can be 0 anyway. After preprocessing the target category probability, multiply the preprocessed target category probability with the keyword parameters to obtain the basic keyword loss information of the i-th text word sample, and then calculate the basic keyword loss information of the text word sample. Accumulate, and calculate the average value of the keyword loss information after the accumulation, so as to obtain the keyword loss information of the text word sample, which can be shown in formula (2) specifically:

其中,Losskeyword为文本词样本的关键词损失信息,标注关键词类别(c类),为目标类别概率(属于C类的类别概率)。Among them, Losskeyword is the keyword loss information of the text word sample, Label the keyword category (category c), is the target class probability (the class probability belonging to class C).

C2、根据标注语义匹配关系和特征距离,确定文本样本对的文本损失信息。C2. Determine the text loss information of the text sample pair according to the annotation semantic matching relationship and the feature distance.

其中,文本损失信息可以为预设文本处理模型在语义匹配任务中产生的损失信息。所谓语义匹配任务可以理解为了计算文本样本对中的文本样本之间的语义匹配关系。The text loss information may be loss information generated by a preset text processing model in the semantic matching task. The so-called semantic matching task can be understood to calculate the semantic matching relationship between text samples in a text sample pair.

其中,根据标注语义匹配关系和特征距离,确定文本样本对的文本损失信息的方式可以有多种,具体可以如下:Among them, according to the annotation semantic matching relationship and feature distance, there can be various ways to determine the text loss information of the text sample pair, and the details can be as follows:

例如,根据标注语义匹配关系,确定文本样本对的匹配参数,当匹配参数为预设匹配参数,且特征距离小于预设距离阈值时,将匹配参数与特征距离进行融合,得到文本样本对的文本损失信息。For example, according to the annotation semantic matching relationship, the matching parameters of the text sample pair are determined. When the matching parameters are preset matching parameters and the feature distance is less than the preset distance threshold, the matching parameters and the feature distance are fused to obtain the text of the text sample pair. loss information.

其中,匹配参数用于指示文本样本对中文本样本之间的语义匹配关系的参数,比如,当文本样本对中的文本样本的语义匹配关系为匹配时,则对应的匹配参数可以就为1,当文本样本对中的文本样本的语义匹配关系为不匹配时,则对应的匹配参数就可以为0,当然,匹配参数也可以为其他参数值,需要说明的是语义匹配关系不同,对应的匹配参数也不相同。以匹配参数为0或1为例,当匹配参数为0,且特征距离大于预设距离阈值时,文本样本对的文本损失信息就可以为0,当匹配参数为1,且特征距离小于预设距离阈值时,文本样本对才存在文本损失信息。因此,文本样本对存在文本损失信息的条件为匹配参数为预设匹配参数,且特征距离小于预设距离阈值。The matching parameter is used to indicate the semantic matching relationship between the text samples in the text sample pair. For example, when the semantic matching relationship between the text samples in the text sample pair is matching, the corresponding matching parameter can be 1. When the semantic matching relationship of the text samples in the text sample pair is mismatched, the corresponding matching parameter can be 0. Of course, the matching parameter can also be other parameter values. It should be noted that the semantic matching relationship is different, the corresponding matching The parameters are also different. Taking the matching parameter as 0 or 1 as an example, when the matching parameter is 0 and the feature distance is greater than the preset distance threshold, the text loss information of the text sample pair can be 0, and when the matching parameter is 1 and the feature distance is less than the preset distance When the distance threshold is set, the text sample pair has text loss information. Therefore, the condition for the existence of text loss information for text samples is that the matching parameters are preset matching parameters, and the feature distance is less than the preset distance threshold.

在匹配参数为预设匹配参数,且特征距离小于预设距离阈值的条件下,将匹配参数与特征距离进行融合的方式可以有多种,比如,计算特征距离与预设距离阈值的距离差值,计算匹配参数与预设参数阈值的参数差值,并将距离差值与参数差值进行融合,将融合后差值、匹配参数和特征距离进行融合,得到文本样本对的文本损失信息,具体可以如公式(3)所示:Under the condition that the matching parameter is the preset matching parameter and the feature distance is smaller than the preset distance threshold, there can be various ways to fuse the matching parameter and the feature distance, for example, calculating the distance difference between the feature distance and the preset distance threshold , calculate the parameter difference between the matching parameter and the preset parameter threshold, fuse the distance difference with the parameter difference, and fuse the fusion difference, matching parameters and feature distance to obtain the text loss information of the text sample pair. It can be shown as formula (3):

其中,Lossmatch为文本样本对的文本损失信息,N为文本样本对中文本样本的数量,y为匹配参数,d为特征距离,margin为超参数,用于指示预设距离阈值。计算文本损失信息的损失函数为对比损失函数,该对比损失函数的作用是着重学习相关样本的参数,忽略大于margin的不相关样本,对欠召回问题有较好效果,并且方便在线检索模块采用余弦距离计算相似性。Among them, Lossmatch is the text loss information of the text sample pair, N is the number of text samples in the text sample pair, y is the matching parameter, d is the feature distance, and margin is the hyperparameter used to indicate the preset distance threshold. The loss function for calculating the text loss information is the contrast loss function. The function of the contrast loss function is to focus on learning the parameters of the relevant samples, ignoring the irrelevant samples larger than the margin. Distance calculates similarity.

C3、基于关键词损失信息和文本损失信息,对预设文本处理模型进行收敛,得到训练后文本处理模型。C3. Based on the keyword loss information and the text loss information, the preset text processing model is converged to obtain a post-training text processing model.

其中,预设文本处理模型包括特征提取网络和关键词识别网络。The preset text processing model includes a feature extraction network and a keyword recognition network.

其中,对预设文本处理模型进行收敛的方式可以有多种,具体可以如下:Among them, there may be various ways to converge the preset text processing model, and the details may be as follows:

例如,获取损失权重,并基于损失权重,分别对关键词损失信息和文本损失信息进行加权,将加权后关键词损失信息和加权后文本损失信息进行融合,得到目标损失信息,采用加权后关键词损失信息对关键词识别网络进行收敛,得到训练后关键词识别网络,采用目标损失信息对特征提取网络进行收敛,得到训练后特征提取网络,并将训练后关键词识别网络和训练后特征提取网络作为训练后文本处理模型。For example, the loss weight is obtained, and based on the loss weight, the keyword loss information and the text loss information are weighted respectively, and the weighted keyword loss information and the weighted text loss information are fused to obtain the target loss information, and the weighted keyword The loss information converges the keyword recognition network, and the post-training keyword recognition network is obtained. The target loss information is used to converge the feature extraction network, and the post-training feature extraction network is obtained, and the post-training keyword recognition network and the post-training feature extraction network are combined. as a post-training text processing model.

其中,将加权后关键词损失信息和加权后文本损失信息进行融合的方式可以有多种,比如,可以直接将加权后关键词损失信息和加权后文本损失信息相加,从而就可以得到预设文本处理模型对应的目标损失信息,具体可以如公式(4)所示:There are various ways to fuse the weighted keyword loss information and the weighted text loss information. For example, the weighted keyword loss information and the weighted text loss information can be directly added to obtain a preset The target loss information corresponding to the text processing model can be specifically shown in formula (4):

Losstotal=α*Lossmatch+β*Losskeyword(4)Losstotal =α*Lossmatch +β*Losskeyword (4)

其中,Losstotal为目标损失信息,α和β分别为文本损失信息和关键词损失信息的损失权重,Lossmatch为文本损失信息,Losskeyword为关键词损失信息。Among them, Losstotal is the target loss information, α and β are the loss weights of text loss information and keyword loss information, respectively, Lossmatch is text loss information, and Losskeyword is keyword loss information.

在得到目标损失信息之后,便可以通过反向传播,对预设文本处理模型的网络参数进行更新,然后,多次迭代直至收敛,在收敛的过程中,需要说明的是,对于预设文本处理模型中的关键词识别网络来说,只需要采用加权后关键词损失信息对关键词识别网络的网络参数进行更新,无需加权后文本损失信息对关键词识别网络的网络参数进行更新。在预设文本处理模型中除了关键词识别网络以外的特征提取网络来说,就可以采用目标损失信息对特征提取网络的网络参数进行更新。对关键词识别网络和特征提取网络进行多次迭代训练直至收敛,从而得到训练后文本处理模型。需要说明的是关键词识别任务是为了增强语义匹配模型的降噪能力,是一个辅助任务,损失值的权重相对较低。After the target loss information is obtained, the network parameters of the preset text processing model can be updated through backpropagation, and then iterate for many times until convergence. In the process of convergence, it should be noted that for the preset text processing model For the keyword recognition network in the model, only the weighted keyword loss information needs to be used to update the network parameters of the keyword recognition network, and the weighted text loss information is not needed to update the network parameters of the keyword recognition network. For feature extraction networks other than the keyword recognition network in the preset text processing model, target loss information can be used to update the network parameters of the feature extraction network. The keyword recognition network and feature extraction network are iteratively trained for many times until they converge to obtain a post-training text processing model. It should be noted that the keyword recognition task is an auxiliary task to enhance the noise reduction capability of the semantic matching model, and the weight of the loss value is relatively low.

C4、采用训练后文本处理模型检索目标文本。C4. Use the post-training text processing model to retrieve the target text.

其中,目标文本为通过查询文本查询出的与查询文本存在语义匹配关系的文本。The target text is the text queried by the query text that has a semantic matching relationship with the query text.

其中,采用训练后文本处理模型检索目标文本的方式可以有多种,具体可以如下:Among them, there are many ways to retrieve the target text by using the post-training text processing model, and the details can be as follows:

例如,可以获取候选文本集合,并采用训练后文本处理模型对候选文本集合中的每一候选文本进行特征提取,到候选文本特征集合,根据候选文本特征集合中的候选文本特征,构建候选文本特征集合对应的索引信息,当接收到查询文本时,根据索引信息和查询文本,在候选文本集合中筛选出至少一个候选文本。For example, a set of candidate texts can be obtained, and a post-trained text processing model can be used to perform feature extraction on each candidate text in the candidate text set, and then to the candidate text feature set, the candidate text features can be constructed according to the candidate text features in the candidate text feature set. The index information corresponding to the set, when the query text is received, at least one candidate text is screened out from the candidate text set according to the index information and the query text.

其中,对候选文本集合进行处理主要可以通过离线处理的方式,通过训练后文本处理模型在离线提前计算所有候选文本的文本特征,从而得到候选文本集合对应的候选文本特征集合,采用索引构建工具构建候选文本特征集合的索引库,提供给在线检索系统进行检索。索引构建工具的类型可以有多种,比如,可以包括Faiss或nmslib等索引工具。Among them, the processing of the candidate text set can mainly be done through offline processing. The post-training text processing model calculates the text features of all candidate texts in advance offline, so as to obtain the candidate text feature set corresponding to the candidate text set, which is constructed by using an index construction tool. The index library of candidate text feature sets is provided to the online retrieval system for retrieval. There can be many types of index building tools, for example, index tools such as Faiss or nmslib can be included.

在构建候选文本特征集合对应的索引信息之后,便可以进行在线检索,在线检索的过程主要包括将训练后文本处理模型部署到在线模块,当用户输入查询文本时,可以根据索引信息和查询文本,在候选文本集合中筛选出至少一个候选文本作为目标文本,筛选的方式可以有多种,比如,可以采用训练后文本处理模型对查询文本进行特征提取,得到查询文本的查询文本特征,基于索引信息,在候选文本特征集合中检索出查询文本特征对应的至少一个候选文本特征,得到目标候选文本特征,在候选文本集合中筛选出目标候选文本特征对应的候选文本,得到查询文本对应的目标文本。After the index information corresponding to the candidate text feature set is constructed, online retrieval can be performed. The online retrieval process mainly includes deploying the post-training text processing model to the online module. Screen out at least one candidate text from the candidate text set as the target text. There are various screening methods. For example, a post-training text processing model can be used to extract features from the query text to obtain the query text features of the query text. Based on the index information , retrieve at least one candidate text feature corresponding to the query text feature in the candidate text feature set, obtain the target candidate text feature, filter the candidate text corresponding to the target candidate text feature in the candidate text set, and obtain the target text corresponding to the query text.

其中,基于索引信息,在候选文本特征集合中检索出查询文本特征对应的至少一个候选文本特征的方式可以有多种,比如,可以通过索引信息,计算查询文本特征与候选文本特征之间的特征相似度,然后,基于特征相似度,在候选文本特征集合中检索出相似度最高的Top K个候选文本特征,从而得到目标候选文本特征。Wherein, based on the index information, there may be various ways to retrieve at least one candidate text feature corresponding to the query text feature in the candidate text feature set. For example, the feature between the query text feature and the candidate text feature may be calculated through the index information. Then, based on the feature similarity, the Top K candidate text features with the highest similarity are retrieved from the candidate text feature set, so as to obtain the target candidate text features.

其中,需要说明的是,在得到训练后文本处理模型之后,需要在离线预测候选文本的候选文本特征,并构建候选文本特征对应的索引信息,然后,在线检索查询文本对应的候选文本,从而得到查询文本对应的至少一个目标文本。将检索到的目标文本及其相关特征通过下游模块返回至客户端进行显示,用户在客户端进行文本检索到返回检索的目标文本的过程可以如图3所示。用户可以通过应用平台的搜索控件输入需要搜索的业务或服务的文本信息,客户端将用户输入的查询文本(query)发送至服务器,服务器在候选文本集合中检索出与query相关的至少一个目标文本(doc),并将检索出的doc信息返回至客户端,客户端对返回的doc信息进行展示。Among them, it should be noted that after obtaining the post-training text processing model, it is necessary to predict the candidate text features of the candidate text offline, and construct the index information corresponding to the candidate text features, and then retrieve the candidate text corresponding to the query text online, so as to obtain At least one target text corresponding to the query text. The retrieved target text and its related features are returned to the client through the downstream module for display, and the process of the user performing text retrieval on the client to return the retrieved target text can be shown in FIG. 3 . The user can input the text information of the business or service to be searched through the search control of the application platform, the client sends the query text (query) input by the user to the server, and the server retrieves at least one target text related to the query from the candidate text set. (doc), and returns the retrieved doc information to the client, and the client displays the returned doc information.

其中,文本处理的整个核心框架可以如图4所示,主要分为三个阶段,多任务学习阶段、离线向量库生成阶段和在线向量检索阶段。Among them, the entire core framework of text processing can be shown in Figure 4, which is mainly divided into three stages, the multi-task learning stage, the offline vector library generation stage and the online vector retrieval stage.

多任务学习阶段主要用于对预设文本处理模型进行训练,在对预设文本处理模型进行训练的过程中,采用关键词识别任务和语义匹配任务对预设文本处理模型进行训练,两个任务会共享重要模块进行并行训练,构成多任务学习框架,如图5所示。The multi-task learning stage is mainly used to train the preset text processing model. In the process of training the preset text processing model, the keyword recognition task and the semantic matching task are used to train the preset text processing model. Two tasks are used to train the preset text processing model. Important modules are shared for parallel training, forming a multi-task learning framework, as shown in Figure 5.

在关键词识别任务过程中,对文本样本切分成token,并通过bert模型输入切分后的每一个token的文本特征,这里的文本特征可以为文本向量(t1,t2,t3),然后,通过FC-Softmax(关键词识别网络)计算出每个token属于每个关键词类别的类别概率,然后,通过CE loss(关键词损失函数)计算出关键词损失信息,具体可以如图6所示。In the process of keyword recognition task, the text sample is divided into tokens, and the text features of each token after segmentation are input through the bert model. The text features here can be text vectors (t1 , t2 , t3 ), Then, the category probability of each token belonging to each keyword category is calculated through FC-Softmax (keyword recognition network), and then the keyword loss information is calculated through CE loss (keyword loss function), as shown in Figure 6. shown.

其中,文本向量指的是将一段不定长的文本通过某种方式转换成一个定长的数值型向量。向量可分为两种形式:一种是高维稀疏向量,通常是将词表的长度作为向量的长度,每一维表示一个单词,只有在文本单词所对应的维度才有非零值,大部分维度都为零;另一种是低维稠密向量,可将文本输入到神经网络等模型中,通过训练输出向量表示,向量的每一维基本是非零值,没有明确的物理含义,但效果通常比高维稀疏向量更好。Among them, the text vector refers to converting a piece of text of indeterminate length into a numerical vector of fixed length in some way. The vector can be divided into two forms: one is a high-dimensional sparse vector, usually the length of the vocabulary is used as the length of the vector, each dimension represents a word, and only the dimension corresponding to the text word has a non-zero value. Some of the dimensions are zero; the other is a low-dimensional dense vector, which can input text into models such as neural networks, and is represented by a training output vector. Each dimension of the vector is basically a non-zero value. There is no clear physical meaning, but the effect Usually better than high-dimensional sparse vectors.

在语义匹配任务过程中,在文本样本集合中随机抽取若干query,通过检索系统检索出语义相似的doc作为正例(标签为1),同时随机抽取离线库中的doc作为负例(标签为0),将query、doc以及标签构成二元组数据作为文本样本对。所谓二元组数据为在文本匹配的场景下,一个二元组数据包括两个文本以及一个标签(0或1来表示)。假设两个文本为A和B,若两者匹配,则二元组数据为(A,B,1);若不匹配,则二元组数据为(A,B,0)。通过文本样本对训练双塔模型,这里的双塔模型可以理解为分别采用关键词识别网络和特征提取网络生成query和doc的文本向量,从而得到文本样本对中每一文本样本的文本特征。然后,通过对比损失函数计算query的文本向量和doc的文本向量的文本损失信息,具体可以如图7所示。In the process of semantic matching task, a number of queries are randomly selected from the text sample set, and the doc with similar semantics is retrieved through the retrieval system as a positive example (the label is 1), and the doc in the offline library is randomly selected as a negative example (the label is 0). ), the query, doc, and label constitute binary data as a pair of text samples. The so-called two-tuple data means that in the scenario of text matching, one two-tuple data includes two texts and one label (represented by 0 or 1). Assuming that the two texts are A and B, if they match, the binary data is (A, B, 1); if they do not match, the binary data is (A, B, 0). The two-tower model is trained by the pair of text samples. The two-tower model here can be understood as using the keyword recognition network and the feature extraction network to generate the text vectors of query and doc respectively, so as to obtain the text features of each text sample in the text sample pair. Then, the text loss information of the text vector of the query and the text vector of the doc is calculated by comparing the loss function, as shown in Figure 7.

在多任务学习训练过程中,将两个任务同时进行前向传播(共享bert模型和FC-Softmax网络),计算关键词损失信息和文本损失信息,然后,加权求和得到整体的损失信息,基于整体的损失信息对bert模型进行收敛,基于加权后关键词损失信息对FC-Softmax网络进行收敛,从而得到训练后文本处理模型。另外,还可以在对预设文本处理模型进行训练的过程中添加其他辅助任务,来提供预设文本处理模型在语义匹配场景下的精度和泛化能力。In the multi-task learning and training process, the two tasks are simultaneously forward propagated (the bert model and the FC-Softmax network are shared), the keyword loss information and text loss information are calculated, and then the weighted summation is used to obtain the overall loss information, based on The overall loss information converges the bert model, and the FC-Softmax network is converged based on the weighted keyword loss information to obtain a post-training text processing model. In addition, other auxiliary tasks can be added in the process of training the preset text processing model to provide the accuracy and generalization ability of the preset text processing model in the semantic matching scenario.

其中,需要说明的是本方案采用bert模型对文本样本进行切分,切分的粒度为token,从而无需进行中文分词,避免了分词工具带来的精度误差,另外,采用bert模型能够不足到文本顺序信息,特征抽取能量更好,抽取的文本特征的精度更加准确,另外,在关键词识别任务中设计了三分类的关键词识别任务,通过融合预测的关键词类别的类别概率,可以有效得到每个token的文本权重,显著提高了预设文本处理模型的关键词识别能力,进而提高了query和doc的语义相关性计算精度,可有效缓解欠召回和排序逆序问题。Among them, it should be noted that this solution uses the bert model to segment the text samples, and the granularity of segmentation is token, so there is no need to perform Chinese word segmentation, and the accuracy error caused by the word segmentation tool is avoided. In addition, the use of the bert model can be insufficient to text. Sequential information, the feature extraction energy is better, and the accuracy of the extracted text features is more accurate. In addition, a three-category keyword recognition task is designed in the keyword recognition task. The text weight of each token significantly improves the keyword recognition ability of the preset text processing model, thereby improving the calculation accuracy of the semantic correlation of query and doc, which can effectively alleviate the problems of under-recall and reverse sorting.

由以上可知,本申请实施例在获取文本词样本和文本样本对后,采用预设文本处理模型对文本样本对中的文本样本进行分词,并对分词后的目标文本词和文本词样本进行特征提取,得到目标文本词的文本词特征和文本词样本的文本词样本特征,然后,基于文本词特征和文本词样本特征,对目标文本词和文本词样本进行关键词类别识别,得到目标文本词的第一关键词类别和文本词样本的第二关键词类别,然后,根据第一关键词类别,对文本词特征进行加权,以得到文本样本对中每一文本样本的文本特征,并计算文本特征之间的特征距离,然后,基于第二关键词类别、标注关键词类别、特征距离和标注语义匹配关系对预设文本处理模型进行收敛,得到训练后文本处理模型,并采用训练后文本处理模型检索目标文本;由于该方案通过多任务框架,将关键词类别识别任务和语义匹配任务同时进行训练,并通过识别出第一关键词类别,对文本词特征进行加权,显式增强了文本处理模型在语义匹配任务中对词权重识别能力,从而有效降低信息噪声,因此,可以提升文本处理的准确性。It can be seen from the above that, after acquiring the text word samples and the text sample pairs in the embodiment of the present application, a preset text processing model is used to segment the text samples in the text sample pairs, and the target text words and the text word samples after word segmentation are characterized. Extract and obtain the text word features of the target text words and the text word sample features of the text word samples, and then, based on the text word features and the text word sample features, perform keyword category recognition on the target text words and text word samples, and obtain the target text words. Then, according to the first keyword category, weight the text word features to obtain the text features of each text sample in the text sample pair, and calculate the text feature distance between features, and then, based on the second keyword category, label keyword category, feature distance and label semantic matching relationship, the preset text processing model is converged to obtain a post-training text processing model, and the post-training text processing model is adopted. The model retrieves the target text; because this scheme trains the keyword category recognition task and the semantic matching task at the same time through the multi-task framework, and by identifying the first keyword category, the text word features are weighted, which significantly enhances text processing. The model has the ability to recognize word weights in semantic matching tasks, thereby effectively reducing information noise, thus improving the accuracy of text processing.

根据上面实施例所描述的方法,以下将举例作进一步详细说明。According to the methods described in the above embodiments, the following examples will be used for further detailed description.

在本实施例中,将以该文本处理装置具体集成在电子设备,电子设备为服务器,关键词类别包括三类:非关键词(用0表示)、一般关键词(用1表示)和重要关键词(用2表示)为例进行说明。In this embodiment, the text processing apparatus is specifically integrated into an electronic device, and the electronic device is used as a server. The keyword categories include three categories: non-keywords (represented by 0), general keywords (represented by 1), and important keywords The word (represented by 2) is used as an example to illustrate.

如图8所示,一种文本处理方法,具体流程如下:As shown in Figure 8, a text processing method, the specific process is as follows:

201、服务器获取文本词样本和文本样本对。201. The server obtains a text word sample and a text sample pair.