CN114328485A - Improved BiLSTM-CRF Named Entity Recognition Method for Electronic Medical Records - Google Patents

Improved BiLSTM-CRF Named Entity Recognition Method for Electronic Medical Records

- Publication number

- CN114328485A (application CN202111587368.9A)

- Authority

- CN

- China

- Prior art keywords

- electronic medical

- data

- medical record

- crf

- bilstm

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Pending

Landscapes

- Medical Treatment And Welfare Office Work (AREA)

Abstract

Description

Translated from Chinese

Technical Field

The invention belongs to the field of medical natural language processing. Taking the establishment of structured electronic medical records as its basis, it builds a named entity model and improves the traditional BiLSTM-CRF deep learning model. Specifically, it is an improved BiLSTM-CRF named entity recognition method for electronic medical records.

Background

Electronic medical records are now widely used in clinical diagnosis. In recent years, with the rapid development of machine learning and deep learning, structured electronic medical records have attracted broad attention. Because doctors' descriptions of conditions are hard to unify, structured medical terminology cannot be established. Specifically, for the same disease, different doctors differ in phrasing, in their use of traditional or simplified Chinese, and in the capitalization of English letters, which makes it difficult to form a normalized standard in the medical field.

Structured electronic medical records fall into two kinds: pre-structured and post-structured. With a pre-structured electronic medical record, the doctor no longer writes the record by hand in the traditional way but directly selects the patient's symptoms, the required drug treatment, and so on in a computer. A post-structured electronic medical record concerns how to build a complete system in which every medical term conforms to standard medical norms. This requires named entity recognition from natural language processing: only when medical terms are correctly distinguished and classified can doctors realize the scenario described for pre-structured electronic medical records.

Experiments with today's more advanced tokenizers show that they cannot correctly segment specialized medical terms. The main reason is that a tokenizer's training data set is not built specifically for medical terms. Although a tokenizer such as jieba lets users extend its dictionary themselves, this approach is too inefficient given the huge volume of medical terminology and requires a great deal of manual labor.

Summary of the Invention

To improve the accuracy of medical named entity recognition, the invention proposes a named entity recognition model that extends the BiLSTM-CRF model. The data set consists of 1000 manually labeled electronic medical records. Characters and labels are passed together through a multi-head attention layer, a BiLSTM layer extracts features, and a CRF layer predicts the final labels. This ultimately classifies the entities and allows specialized medical terms to be recognized well.

The technical solution adopted by the invention to achieve the above purpose is:

An improved BiLSTM-CRF named entity recognition method for electronic medical records, comprising the following steps:

acquiring manually labeled electronic medical record data and cleaning it;

marking the cleaned data with category labels;

performing Embedding processing on the characters and on the category labels in the data separately, and combining the processed characters and category labels;

inputting the combined data into a multi-head attention mechanism for training;

passing the training result through a BiLSTM model for feature extraction, and obtaining the probability value of each character on each category label as the extraction result;

inputting the extraction result into a CRF model to obtain the label corresponding to each character in the electronic medical record data as the recognition result.

The data cleaning of the electronic medical record data is specifically:

Spaces, leading and trailing punctuation marks, and erroneous symbols in the electronic medical record data are deleted; the entities at the manually marked start and end positions are checked for labeling errors; and all punctuation in the electronic medical record data is converted to English punctuation.

The cleaned data is marked with category labels using the BIO scheme: the first character of an entity is marked B, the subsequent characters of the entity are marked I, and characters that belong to no category are marked O.

The Embedding processing of the characters in the data is specifically:

A dictionary is built from the characters that appear in all current electronic medical record data. Each dictionary entry is a character and its corresponding number, and each character is replaced with its number in the dictionary.

The Embedding processing of the category labels in the data is specifically:

A dictionary is built from the labels that appear in all current electronic medical record data. Each dictionary entry is a category label and its corresponding number, and each category label is replaced with its number in the dictionary.

An improved BiLSTM-CRF named entity recognition system for electronic medical records, comprising:

a data acquisition module, used to acquire manually labeled electronic medical record data and clean it;

a data labeling module, used to mark the cleaned data with category labels;

an Embedding processing module, used to perform Embedding processing on the characters and on the category labels in the data separately, and to combine the processed characters and category labels;

a multi-head attention mechanism module, used to input the combined data into the multi-head attention mechanism for training;

a feature extraction module, used to pass the training result through the BiLSTM model for feature extraction and obtain the probability value of each character on each category label as the extraction result;

a CRF recognition module, used to input the extraction result into the CRF model to obtain the recognition result.

An improved BiLSTM-CRF named entity recognition system for electronic medical records, comprising a memory and a processor; the memory is used to store a computer program; the processor is used to implement the improved BiLSTM-CRF named entity recognition method for electronic medical records when executing the computer program.

A computer-readable storage medium on which a computer program is stored; when the computer program is executed by a processor, the improved BiLSTM-CRF named entity recognition method for electronic medical records is implemented.

The invention has the following beneficial effects and advantages:

1. The invention helps address the inaccurate segmentation of specialized medical terms by tokenizers;

2. Good word segmentation and medical term recognition promote the realization of structured electronic medical records;

3. Accurate capture of medical terms helps doctors write standardized electronic medical records.

Description of the Drawings

Figure 1 is the flow chart of the invention;

Figure 2 is the model structure diagram of the invention;

Figure 3 is a diagram of the computation process of the multi-head attention layer;

Figure 4 is a diagram of the expected model results.

Detailed Description

The invention is described in further detail below with reference to the drawings and the embodiment.

To address the problems in current medical named entity recognition, the WT-MHA-BiLSTM-CRF model proposed here performs entity recognition in the following main steps:

Step 1: Clean the 1000 manually labeled electronic medical records originally collected;

Step 2: Mark each character with its category label;

Step 3: Perform Embedding processing on characters and labels together, and combine the processed data;

Step 4: Train in the multi-head attention mechanism;

Step 5: Use the training result as the input of the BiLSTM model, which performs feature extraction;

Step 6: Feed the extraction result into the CRF model, which automatically learns the constraints in the text and predicts the classification labels;

Step 7: Compute the loss and obtain the model output.

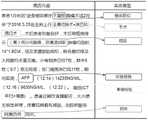

Raw data inevitably contains errors, and training on it directly would make the model too noisy and degrade the training result. The raw electronic medical records are therefore cleaned: a Python program deletes spaces, leading and trailing punctuation marks, and erroneous symbols from the raw text, checks whether the entities at the manually marked start and end positions are mislabeled, and converts all punctuation in the raw text to English punctuation. The cleaned data is split into training, validation, and test sets in a 6:2:2 ratio. The experiment involves six label types: disease, body part, surgery, drug, laboratory test, and imaging test, with 4212, 8426, 1492, 1931, 1195, and 972 instances respectively.
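The cleaning and splitting described above could be sketched as follows. This is only an illustration under stated assumptions: the punctuation mapping, the set of edge punctuation, the shuffling seed, and the function names `clean_text` and `split_dataset` are hypothetical and do not come from the patent. Note that deleting characters shifts character offsets, so manually marked start and end positions would need to be re-checked after cleaning.

```python
import random

# Assumed mapping from full-width (Chinese) punctuation to English punctuation.
PUNCT_MAP = str.maketrans({
    "，": ",", "。": ".", "；": ";", "：": ":", "？": "?", "！": "!",
    "（": "(", "）": ")", "“": '"', "”": '"', "、": ",",
})
# Assumed set of punctuation stripped from the start and end of a text.
EDGE_PUNCT = ",.;:?!\"'()"


def clean_text(text: str) -> str:
    """Convert punctuation to English, drop whitespace, strip edge punctuation."""
    text = text.translate(PUNCT_MAP)
    text = "".join(ch for ch in text if not ch.isspace())
    return text.strip(EDGE_PUNCT)


def split_dataset(records, seed=0):
    """Shuffle the records and split them 6:2:2 into train/validation/test."""
    records = list(records)
    random.Random(seed).shuffle(records)
    n_train = len(records) * 6 // 10
    n_val = len(records) * 2 // 10
    return (
        records[:n_train],
        records[n_train:n_train + n_val],
        records[n_train + n_val:],
    )


train, val, test = split_dataset(range(1000))
print(len(train), len(val), len(test))
```

With 1000 records, integer arithmetic keeps the split sizes exact, which avoids rounding surprises from floating-point ratios.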

The cleaned data is first marked with category labels and given Embedding processing, and then enters the multi-head attention layer. The attention mechanism makes the network attend more to the relevant information in the input during training and abbreviate irrelevant information, which improves training accuracy.

The invention does not treat characters alone as the content of model training; it also considers the role that labels play in named entity recognition. Both characters and labels are therefore given Embedding processing before model training.

For character Embedding, the steps are:

1) Build a dictionary from the characters that appear in all current texts. Each dictionary entry is a character and its corresponding number.

2) Replace each character with its number in the dictionary. The text after Embedding is therefore a large matrix whose entries are the numbers of the characters at the corresponding positions.

For label Embedding, the steps are:

1) Build a dictionary from the labels that appear in all current medical records. Each dictionary entry is a label and its corresponding number.

2) Replace each label with its number in the dictionary. The labels after Embedding are therefore a large matrix whose entries are the numbers of the labels at the corresponding positions.
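The dictionary construction and number substitution described above might look like the following minimal sketch. The `<PAD>` and `<UNK>` entries and the function names `build_index` and `encode` are assumptions added for illustration; the patent only specifies a dictionary of items and their numbers.

```python
def build_index(sequences, specials=()):
    """Assign each distinct item an integer id in order of first appearance."""
    index = {tok: i for i, tok in enumerate(specials)}
    for seq in sequences:
        for item in seq:
            index.setdefault(item, len(index))
    return index


def encode(seq, index, unk=None):
    """Replace each item with its id; unseen items map to `unk` if given."""
    if unk is None:
        return [index[item] for item in seq]
    return [index.get(item, index[unk]) for item in seq]


texts = ["胃癌", "肺炎"]
labels = [["B-DISEASE", "I-DISEASE"], ["B-DISEASE", "I-DISEASE"]]

# <PAD> and <UNK> are hypothetical helper entries, not part of the source.
char2id = build_index(texts, specials=("<PAD>", "<UNK>"))
label2id = build_index(labels)

print(encode("胃癌", char2id, unk="<UNK>"))
print(encode(labels[0], label2id))
```

In a neural model these ids would normally index rows of a trainable embedding table (128 dimensions in the embodiment) rather than be used as features directly.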

After characters and labels have both been embedded, the two are combined and fed into the multi-head attention (MHA) layer. MHA is essentially several Self-Attention computations: following the computation principle of Self-Attention, MHA raises the original single layer to h layers (h is a hyperparameter, set to 8 in the model).

The output of the MHA layer is the input of the BiLSTM layer. The BiLSTM model extracts features and computes the probability of the current character for each category. Compared with a plain recurrent neural network, it handles vanishing and exploding gradients well, and the bidirectional LSTM lets the model see information in both the preceding and the following text, so it understands context better.

The output of the BiLSTM layer enters the CRF layer. The CRF model learns the constraints in the text on its own, and these constraints make the training result more reasonable. The CRF evaluates the prediction scores, is trained by maximum likelihood, and is decoded with the Viterbi algorithm to find the optimal path. To demonstrate feasibility, the WT-MHA-BiLSTM-CRF model was compared with three other models and achieved comparatively good entity recognition results.

Embodiment

Step 1: Clean the 1000 manually labeled electronic medical records originally collected. The manually labeled data set includes the content of each medical record, the start and end positions of the entities it contains, and the category label of each entity.

Step 2: Mark each character with its category label. The model marks the text using the BIO scheme: the first character of an entity is marked B, the subsequent characters are marked I, and characters that belong to no category are marked O. For example, the word 胃癌 (stomach cancer) is a disease, so its label is DISEASE. At the character level, 胃 is the first character and is marked B-DISEASE, and 癌 is a subsequent character and is marked I-DISEASE. The data set is finally annotated as each character paired with its label.
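The conversion from annotated entity positions to per-character BIO labels can be sketched as below. The span format `(start, end, label)` with an exclusive end offset is an assumption; the patent states that start and end positions are recorded but not which convention they follow.

```python
def spans_to_bio(text, spans):
    """Turn (start, end, label) spans into (character, BIO tag) pairs.

    `end` is assumed to be exclusive; adjust if the annotation is inclusive.
    """
    tags = ["O"] * len(text)
    for start, end, label in spans:
        if not 0 <= start < end <= len(text):
            raise ValueError(f"invalid span: {(start, end, label)}")
        tags[start] = f"B-{label}"
        for i in range(start + 1, end):
            tags[i] = f"I-{label}"
    return list(zip(text, tags))


for char, tag in spans_to_bio("患胃癌", [(1, 3, "DISEASE")]):
    print(char, tag)
```

The range check also serves as a basic version of the start and end position validation mentioned in the cleaning step.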

Step 3: Perform Embedding processing on characters and labels together, and combine the processed data. A deep learning model cannot be trained on raw text; the text must first be converted into numbers that a computer can process, so both text and labels need Embedding processing. For character Embedding, the steps are:

1) Build a dictionary from the characters that appear in all current texts. Each dictionary entry is a character and its corresponding number.

2) Replace each character with its number in the dictionary. The text after Embedding is therefore a large matrix whose entries are the numbers of the characters at the corresponding positions.

For label Embedding, the steps are:

1) Build a dictionary from the labels that appear in all current medical records. Each dictionary entry is a label and its corresponding number.

Step 4: Train in the multi-head attention mechanism. As shown in Figure 3, Multi-Head Attention is essentially several Self-Attention computations. The attention mechanism makes the network attend more to the relevant information in the input during training and abbreviate irrelevant information, which improves training accuracy. Self-Attention creates three new vectors for each input word vector: Query, Key, and Value. They are obtained by multiplying the word vector by three matrices Q, K, and V respectively, and these three matrices are parameters to be learned. Following the computation principle of Self-Attention, MHA raises the original single layer to h layers (h is a hyperparameter, set to 8 in the model).
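To make the computation concrete, here is a minimal pure-Python sketch of multi-head self-attention using the standard scaled dot-product formulation. It is not the patent's implementation: random matrices stand in for the learned Q, K, and V parameters, the usual output projection is omitted, and all function names are hypothetical.

```python
import math
import random


def matmul(a, b):
    """Multiply an n x m matrix by an m x p matrix (lists of lists)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(q, k):
    """Row-wise softmax of Q K^T / sqrt(d_k)."""
    scale = math.sqrt(len(q[0]))
    return [
        softmax([sum(a * b for a, b in zip(qi, kj)) / scale for kj in k])
        for qi in q
    ]


def multi_head_self_attention(x, num_heads, rng):
    """Run `num_heads` self-attention heads and concatenate their outputs."""
    d_model = len(x[0])
    assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
    d_k = d_model // num_heads

    def rand_matrix(rows, cols):
        # Stand-in for learned projection weights (assumption for this sketch).
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

    heads = []
    for _ in range(num_heads):
        w_q, w_k, w_v = (rand_matrix(d_model, d_k) for _ in range(3))
        q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
        heads.append(matmul(attention_weights(q, k), v))
    # Concatenate the heads along the feature axis for each position.
    return [[val for head in heads for val in head[t]] for t in range(len(x))]


rng = random.Random(0)
x = [[rng.uniform(-1, 1) for _ in range(16)] for _ in range(5)]  # 5 positions
out = multi_head_self_attention(x, num_heads=8, rng=rng)
print(len(out), len(out[0]))
```

Each head works on a lower-dimensional projection (here 16 / 8 = 2 dimensions), so the concatenated output keeps the input width.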

Step 5: The training result is the input of the BiLSTM model, which performs feature extraction. BiLSTM is a bidirectional LSTM. With reference to the BiLSTM layer in Figure 2, the advantage of the bidirectional model is that it sees information in both the preceding text and the following text, so contextual information is captured well. It uses gating to address the long-term dependency problem of recurrent neural networks and to alleviate their vanishing-gradient and exploding-gradient problems. The network parameters are defined as follows: the number of samples per training batch, batch_size, is 64; the learning rate lr is 1e-4; the number of training epochs is 30; the BiLSTM model has 1 network layer; the word vector dimension is 128; the hidden vector dimension is 128; the optimizer is Adam; and the dropout rate is 0.5. The final output of the BiLSTM model is the probability value of each character on each category label.
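The hyperparameters listed above can be collected in one configuration object, sketched here. The class and field names are hypothetical; only the values come from the text. The step count assumes one training sample per medical record, which the patent does not state (records may well be split into sentences).

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Hyperparameters stated in the embodiment; field names are illustrative."""
    batch_size: int = 64
    learning_rate: float = 1e-4
    epochs: int = 30
    lstm_layers: int = 1
    embedding_dim: int = 128
    hidden_dim: int = 128
    dropout: float = 0.5
    optimizer: str = "Adam"
    num_heads: int = 8  # h in the multi-head attention layer


def steps_per_epoch(num_train_samples: int, cfg: TrainConfig) -> int:
    """Number of optimizer steps needed to see every training sample once."""
    return math.ceil(num_train_samples / cfg.batch_size)


cfg = TrainConfig()
# Assumption: 600 training samples, i.e. 60% of 1000 records, one per record.
print(steps_per_epoch(600, cfg), steps_per_epoch(600, cfg) * cfg.epochs)
```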

Step 6: The extraction result enters the CRF model, which automatically learns the constraints in the text and predicts the classification labels. The CRF model learns such constraints on its own; for example, in named entity recognition, a B- label does not appear after an I- label, and an I- label does not appear after an O label. These constraints make the training result more reasonable. The CRF model obtains an evaluation score from its own scoring formula and is trained by maximum likelihood; in a deep learning framework, the loss function can be optimized by differentiation or gradient descent. Decoding uses the Viterbi algorithm to find the optimal path, that is, the best label for each character.
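Viterbi decoding under label constraints can be illustrated with the sketch below. It is a simplification, not the patent's CRF: a trained CRF learns a full transition score matrix, whereas here a hard-coded rule (an I- label may only follow a B- or I- label of the same type) stands in for learned transitions, and the emission scores are made-up numbers.

```python
NEG_INF = float("-inf")


def allowed(prev, curr):
    """Hard BIO rule used in place of learned CRF transition scores."""
    if curr.startswith("I-"):
        return prev in ("B-" + curr[2:], "I-" + curr[2:])
    return True


def viterbi(emissions, tags):
    """Return the highest-scoring tag path that respects `allowed`.

    emissions: one dict per character, mapping tag -> score.
    """
    # A sequence may not start with an I- tag.
    score = {t: NEG_INF if t.startswith("I-") else emissions[0][t] for t in tags}
    backpointers = []
    for em in emissions[1:]:
        new_score, pointers = {}, {}
        for curr in tags:
            best_prev, best = None, NEG_INF
            for prev in tags:
                if allowed(prev, curr) and score[prev] > best:
                    best_prev, best = prev, score[prev]
            new_score[curr] = NEG_INF if best_prev is None else best + em[curr]
            pointers[curr] = best_prev
        score = new_score
        backpointers.append(pointers)
    path = [max(score, key=score.get)]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    return path[::-1]


tags = ["O", "B-DISEASE", "I-DISEASE"]
# Made-up scores for the three characters of "患肺炎".
emissions = [
    {"O": 2.0, "B-DISEASE": 0.1, "I-DISEASE": 0.5},
    {"O": 0.3, "B-DISEASE": 1.0, "I-DISEASE": 1.2},
    {"O": 0.2, "B-DISEASE": 0.1, "I-DISEASE": 2.0},
]
print(viterbi(emissions, tags))
```

Picking the highest-scoring tag per character independently would give O, I-DISEASE, I-DISEASE here, which is an invalid sequence; the constrained search instead selects a path in which the entity starts with B-DISEASE.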

Step 7: Compute the loss and obtain the model output. The training result is evaluated by precision, recall, and F1 score. The F1 score combines precision and recall (it is their harmonic mean); the higher the value, the better the model has trained.
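The three metrics can be computed as in the following sketch, which assumes entity-level evaluation in which a predicted entity counts as correct only if its span and label both match a gold entity. The patent does not state whether evaluation is done per entity or per character, and the sample entities are invented for illustration.

```python
def precision_recall_f1(gold, predicted):
    """Compute precision, recall, and F1 over sets of (start, end, label)."""
    gold, predicted = set(gold), set(predicted)
    true_positives = len(gold & predicted)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Invented example: three gold entities, two predictions, one exact match.
gold = [(0, 2, "DISEASE"), (5, 7, "DRUG"), (9, 11, "BODY")]
pred = [(0, 2, "DISEASE"), (5, 7, "BODY")]
p, r, f1 = precision_recall_f1(gold, pred)
print(round(p, 3), round(r, 3), round(f1, 3))
```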

With reference to the model flow chart in Figure 1, the process can be summarized as follows:

(1) Clean the 1000 electronic medical records collected in the raw data set, deleting data that does not meet the standard.

(2) Mark every character of the cleaned data in BIO format.

(3) Perform Embedding processing on characters and labels together, and combine the processed results.

(4) Pass the data through the multi-head attention layer, so that the model pays more attention to useful information in subsequent training.

(5) Pass the data into the BiLSTM model, which extracts feature data.

(6) Use the output of the BiLSTM model as the input of the CRF model, which predicts the result.

(7) Output the predicted result values.

The result value is the label corresponding to each character. For example, the word 肺炎 (pneumonia) is recognized in the model output as: 肺 B-DISEASE; 炎 I-DISEASE.

It follows that the word 肺炎 is of the disease (DISEASE) type.
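Reading entities back out of the per-character labels, as in the 肺炎 example, can be sketched as follows. The function name and the output tuple layout are hypothetical, and stray I- labels that do not continue an open entity are simply ignored in this sketch.

```python
def bio_to_entities(chars, tags):
    """Group BIO tags into (text, label, start, end) tuples; end is exclusive."""
    entities = []
    start, label = None, None
    # A trailing "O" sentinel closes an entity that runs to the end of the text.
    for i, tag in enumerate(list(tags) + ["O"]):
        continues = tag.startswith("I-") and label == tag[2:]
        if start is not None and not continues:
            entities.append(("".join(chars[start:i]), label, start, i))
            start, label = None, None
        if tag.startswith("B-"):
            start, label = i, tag[2:]
    return entities


print(bio_to_entities("患肺炎", ["O", "B-DISEASE", "I-DISEASE"]))
```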

The training result that the model ultimately aims for is shown in Figure 4: for a given electronic medical record, the corresponding entities and their label categories can be found accurately. At present, because there is no normalized terminology standard, structured electronic medical records are difficult to write in a standardized way and cannot meet the standard that pre-structured electronic medical records require. Entity recognition on medical text will therefore help standardize the language doctors use when making a diagnosis and structure the content of electronic medical records, which will promote patients' follow-up treatment and the development of medicine.

Claims (8)

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202111587368.9A, CN114328485A (en) | 2021-12-23 | 2021-12-23 | Improved BiLSTM-CRF Named Entity Recognition Method for Electronic Medical Records |

Applications Claiming Priority (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202111587368.9A, CN114328485A (en) | 2021-12-23 | 2021-12-23 | Improved BiLSTM-CRF Named Entity Recognition Method for Electronic Medical Records |

Publications (1)

| Publication Number | Publication Date |
|---|---|
| CN114328485A (en) | 2022-04-12 |

Family

ID=81054961

Family Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202111587368.9A (Pending), CN114328485A (en) | 2021-12-23 | 2021-12-23 | Improved BiLSTM-CRF Named Entity Recognition Method for Electronic Medical Records |

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN114328485A (en) |

Cited By (1)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN118350371A (en)* | 2024-06-12 | 2024-07-16 | 北京星河智源科技有限公司 | A method and system for extracting token pairs from patent texts |

Citations (9)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN109471895A (en)* | 2018-10-29 | 2019-03-15 | 清华大学 | Method and system for phenotype extraction and phenotype name normalization from electronic medical records |
| CN109871538A (en)* | 2019-02-18 | 2019-06-11 | 华南理工大学 | A Named Entity Recognition Method for Chinese Electronic Medical Records |
| CN111243699A (en)* | 2020-01-14 | 2020-06-05 | 中南大学 | Chinese electronic medical record entity extraction method based on word information fusion |
| CN111581474A (en)* | 2020-04-02 | 2020-08-25 | 昆明理工大学 | Evaluation object extraction method of case-related microblog comments based on multi-head attention system |
| CN111930936A (en)* | 2020-06-28 | 2020-11-13 | 山东师范大学 | A kind of platform message text mining method and system |
| CN112015891A (en)* | 2020-07-17 | 2020-12-01 | 山东师范大学 | Method and system for message classification of online political platform based on deep neural network |
| CN112883732A (en)* | 2020-11-26 | 2021-06-01 | 中国电子科技网络信息安全有限公司 | Method and device for identifying Chinese fine-grained named entities based on associative memory network |
| CN113297379A (en)* | 2021-05-25 | 2021-08-24 | 善诊(上海)信息技术有限公司 | Text data multi-label classification method and device |
| CN113743119A (en)* | 2021-08-04 | 2021-12-03 | 中国人民解放军战略支援部队航天工程大学 | Chinese named entity recognition module, method and device and electronic equipment |

Non-Patent Citations (3)

| Title |
|---|
| GUOYIN WANG: "Joint Embedding of Words and Labels for Text Classification", ARXIV, 10 May 2018 (2018-05-10)* |
| MINQIAN LIU et al.: "Co-attention network with label embedding for text classification", NEUROCOMPUTING, 4 November 2021 (2021-11-04)* |
| 李易: "深度学习 算法入门与Keras编程实践", 30 April 2021, 北京:机械工业出版社, pages: 142 - 144* |

Similar Documents

| Publication | Publication Date | Title |
|---|---|---|
| CN111079377B (en) | | Method for recognizing named entities of Chinese medical texts |
| CN106897559B (en) | | A kind of symptom and sign class entity recognition method and device towards multi-data source |
| CN111444721A (en) | | Chinese text key information extraction method based on pre-training language model |
| WO2021139424A1 (en) | | Text content quality evaluation method, apparatus and device, and storage medium |
| CN109871538A (en) | | A Named Entity Recognition Method for Chinese Electronic Medical Records |
| CN106682397A (en) | | Knowledge-based electronic medical record quality control method |
| CN110688855A (en) | | Chinese medical entity identification method and system based on machine learning |
| CN112800766A (en) | | Chinese medical entity identification and labeling method and system based on active learning |
| CN106844351B (en) | | A multi-data source-oriented medical institution organization entity identification method and device |
| WO2023029502A1 (en) | | Method and apparatus for constructing user portrait on the basis of inquiry session, device, and medium |
| CN111259111B (en) | | Medical record-based decision-making assisting method and device, electronic equipment and storage medium |
| CN112154509A (en) | | Machine learning model with evolving domain-specific dictionary features for text annotation |
| CN110335653A (en) | | Non-standard medical record parsing method based on openEHR medical record format |
| CN112749277B (en) | | Medical data processing method, device and storage medium |
| CN116737924B (en) | | Medical text data processing method and device |
| CN114420233A (en) | | A method for extracting structured information from Chinese electronic medical records |
| CN115545021A (en) | | Clinical term identification method and device based on deep learning |
| CN113674866B (en) | | Pre-training method for medical text |
| CN101615182A (en) | | TCM symptom information storage system and TCM symptom information storage method |
| CN116595994A (en) | | Contradictory information prediction method, device, equipment and medium based on prompt learning |
| CN114328485A (en) | | Improved BiLSTM-CRF Named Entity Recognition Method for Electronic Medical Records |
| CN112735543B (en) | | Medical data processing method, device and storage medium |
| CN113111660A (en) | | Data processing method, device, equipment and storage medium |
| US20250285718A1 (en) | | Systems and methods for automatic medical report generation |
| CN118797002A (en) | | A clinical pathway image recognition method and system based on large model |

Legal Events

| Date | Code | Title | Description |
|---|---|---|---|
| | PB01 | Publication | |
| | SE01 | Entry into force of request for substantive examination | |