CN113809780B - Micro-grid optimal scheduling method based on improved Q learning punishment selection - Google Patents

Micro-grid optimal scheduling method based on improved Q learning punishment selectionDownload PDFInfo

- Publication number

- CN113809780B CN113809780BCN202111115317.6ACN202111115317ACN113809780BCN 113809780 BCN113809780 BCN 113809780BCN 202111115317 ACN202111115317 ACN 202111115317ACN 113809780 BCN113809780 BCN 113809780B

- Authority

- CN

- China

- Prior art keywords

- cost

- wind

- power

- grid

- microgrid

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Active

Links

Images

Classifications

- H—ELECTRICITY

- H02—GENERATION; CONVERSION OR DISTRIBUTION OF ELECTRIC POWER

- H02J—CIRCUIT ARRANGEMENTS OR SYSTEMS FOR SUPPLYING OR DISTRIBUTING ELECTRIC POWER; SYSTEMS FOR STORING ELECTRIC ENERGY

- H02J3/00—Circuit arrangements for AC mains or AC distribution networks

- H02J3/38—Arrangements for parallely feeding a single network by two or more generators, converters or transformers

- H02J3/46—Controlling of the sharing of output between the generators, converters, or transformers

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06Q—INFORMATION AND COMMUNICATION TECHNOLOGY [ICT] SPECIALLY ADAPTED FOR ADMINISTRATIVE, COMMERCIAL, FINANCIAL, MANAGERIAL OR SUPERVISORY PURPOSES; SYSTEMS OR METHODS SPECIALLY ADAPTED FOR ADMINISTRATIVE, COMMERCIAL, FINANCIAL, MANAGERIAL OR SUPERVISORY PURPOSES, NOT OTHERWISE PROVIDED FOR

- G06Q10/00—Administration; Management

- G06Q10/06—Resources, workflows, human or project management; Enterprise or organisation planning; Enterprise or organisation modelling

- G06Q10/063—Operations research, analysis or management

- G06Q10/0631—Resource planning, allocation, distributing or scheduling for enterprises or organisations

- G06Q10/06312—Adjustment or analysis of established resource schedule, e.g. resource or task levelling, or dynamic rescheduling

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06Q—INFORMATION AND COMMUNICATION TECHNOLOGY [ICT] SPECIALLY ADAPTED FOR ADMINISTRATIVE, COMMERCIAL, FINANCIAL, MANAGERIAL OR SUPERVISORY PURPOSES; SYSTEMS OR METHODS SPECIALLY ADAPTED FOR ADMINISTRATIVE, COMMERCIAL, FINANCIAL, MANAGERIAL OR SUPERVISORY PURPOSES, NOT OTHERWISE PROVIDED FOR

- G06Q10/00—Administration; Management

- G06Q10/06—Resources, workflows, human or project management; Enterprise or organisation planning; Enterprise or organisation modelling

- G06Q10/063—Operations research, analysis or management

- G06Q10/0631—Resource planning, allocation, distributing or scheduling for enterprises or organisations

- G06Q10/06315—Needs-based resource requirements planning or analysis

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06Q—INFORMATION AND COMMUNICATION TECHNOLOGY [ICT] SPECIALLY ADAPTED FOR ADMINISTRATIVE, COMMERCIAL, FINANCIAL, MANAGERIAL OR SUPERVISORY PURPOSES; SYSTEMS OR METHODS SPECIALLY ADAPTED FOR ADMINISTRATIVE, COMMERCIAL, FINANCIAL, MANAGERIAL OR SUPERVISORY PURPOSES, NOT OTHERWISE PROVIDED FOR

- G06Q50/00—Information and communication technology [ICT] specially adapted for implementation of business processes of specific business sectors, e.g. utilities or tourism

- G06Q50/06—Energy or water supply

- H—ELECTRICITY

- H02—GENERATION; CONVERSION OR DISTRIBUTION OF ELECTRIC POWER

- H02J—CIRCUIT ARRANGEMENTS OR SYSTEMS FOR SUPPLYING OR DISTRIBUTING ELECTRIC POWER; SYSTEMS FOR STORING ELECTRIC ENERGY

- H02J3/00—Circuit arrangements for AC mains or AC distribution networks

- H02J3/28—Arrangements for balancing of the load in a network by storage of energy

- H02J3/32—Arrangements for balancing of the load in a network by storage of energy using batteries with converting means

- H—ELECTRICITY

- H02—GENERATION; CONVERSION OR DISTRIBUTION OF ELECTRIC POWER

- H02J—CIRCUIT ARRANGEMENTS OR SYSTEMS FOR SUPPLYING OR DISTRIBUTING ELECTRIC POWER; SYSTEMS FOR STORING ELECTRIC ENERGY

- H02J2203/00—Indexing scheme relating to details of circuit arrangements for AC mains or AC distribution networks

- H02J2203/20—Simulating, e g planning, reliability check, modelling or computer assisted design [CAD]

- H—ELECTRICITY

- H02—GENERATION; CONVERSION OR DISTRIBUTION OF ELECTRIC POWER

- H02J—CIRCUIT ARRANGEMENTS OR SYSTEMS FOR SUPPLYING OR DISTRIBUTING ELECTRIC POWER; SYSTEMS FOR STORING ELECTRIC ENERGY

- H02J2300/00—Systems for supplying or distributing electric power characterised by decentralized, dispersed, or local generation

- H02J2300/20—The dispersed energy generation being of renewable origin

- H02J2300/22—The renewable source being solar energy

- H02J2300/24—The renewable source being solar energy of photovoltaic origin

- H—ELECTRICITY

- H02—GENERATION; CONVERSION OR DISTRIBUTION OF ELECTRIC POWER

- H02J—CIRCUIT ARRANGEMENTS OR SYSTEMS FOR SUPPLYING OR DISTRIBUTING ELECTRIC POWER; SYSTEMS FOR STORING ELECTRIC ENERGY

- H02J2300/00—Systems for supplying or distributing electric power characterised by decentralized, dispersed, or local generation

- H02J2300/20—The dispersed energy generation being of renewable origin

- H02J2300/28—The renewable source being wind energy

- H—ELECTRICITY

- H02—GENERATION; CONVERSION OR DISTRIBUTION OF ELECTRIC POWER

- H02J—CIRCUIT ARRANGEMENTS OR SYSTEMS FOR SUPPLYING OR DISTRIBUTING ELECTRIC POWER; SYSTEMS FOR STORING ELECTRIC ENERGY

- H02J2300/00—Systems for supplying or distributing electric power characterised by decentralized, dispersed, or local generation

- H02J2300/40—Systems for supplying or distributing electric power characterised by decentralized, dispersed, or local generation wherein a plurality of decentralised, dispersed or local energy generation technologies are operated simultaneously

- Y—GENERAL TAGGING OF NEW TECHNOLOGICAL DEVELOPMENTS; GENERAL TAGGING OF CROSS-SECTIONAL TECHNOLOGIES SPANNING OVER SEVERAL SECTIONS OF THE IPC; TECHNICAL SUBJECTS COVERED BY FORMER USPC CROSS-REFERENCE ART COLLECTIONS [XRACs] AND DIGESTS

- Y02—TECHNOLOGIES OR APPLICATIONS FOR MITIGATION OR ADAPTATION AGAINST CLIMATE CHANGE

- Y02E—REDUCTION OF GREENHOUSE GAS [GHG] EMISSIONS, RELATED TO ENERGY GENERATION, TRANSMISSION OR DISTRIBUTION

- Y02E10/00—Energy generation through renewable energy sources

- Y02E10/50—Photovoltaic [PV] energy

- Y02E10/56—Power conversion systems, e.g. maximum power point trackers

- Y—GENERAL TAGGING OF NEW TECHNOLOGICAL DEVELOPMENTS; GENERAL TAGGING OF CROSS-SECTIONAL TECHNOLOGIES SPANNING OVER SEVERAL SECTIONS OF THE IPC; TECHNICAL SUBJECTS COVERED BY FORMER USPC CROSS-REFERENCE ART COLLECTIONS [XRACs] AND DIGESTS

- Y02—TECHNOLOGIES OR APPLICATIONS FOR MITIGATION OR ADAPTATION AGAINST CLIMATE CHANGE

- Y02E—REDUCTION OF GREENHOUSE GAS [GHG] EMISSIONS, RELATED TO ENERGY GENERATION, TRANSMISSION OR DISTRIBUTION

- Y02E40/00—Technologies for an efficient electrical power generation, transmission or distribution

- Y02E40/70—Smart grids as climate change mitigation technology in the energy generation sector

- Y—GENERAL TAGGING OF NEW TECHNOLOGICAL DEVELOPMENTS; GENERAL TAGGING OF CROSS-SECTIONAL TECHNOLOGIES SPANNING OVER SEVERAL SECTIONS OF THE IPC; TECHNICAL SUBJECTS COVERED BY FORMER USPC CROSS-REFERENCE ART COLLECTIONS [XRACs] AND DIGESTS

- Y02—TECHNOLOGIES OR APPLICATIONS FOR MITIGATION OR ADAPTATION AGAINST CLIMATE CHANGE

- Y02E—REDUCTION OF GREENHOUSE GAS [GHG] EMISSIONS, RELATED TO ENERGY GENERATION, TRANSMISSION OR DISTRIBUTION

- Y02E70/00—Other energy conversion or management systems reducing GHG emissions

- Y02E70/30—Systems combining energy storage with energy generation of non-fossil origin

- Y—GENERAL TAGGING OF NEW TECHNOLOGICAL DEVELOPMENTS; GENERAL TAGGING OF CROSS-SECTIONAL TECHNOLOGIES SPANNING OVER SEVERAL SECTIONS OF THE IPC; TECHNICAL SUBJECTS COVERED BY FORMER USPC CROSS-REFERENCE ART COLLECTIONS [XRACs] AND DIGESTS

- Y04—INFORMATION OR COMMUNICATION TECHNOLOGIES HAVING AN IMPACT ON OTHER TECHNOLOGY AREAS

- Y04S—SYSTEMS INTEGRATING TECHNOLOGIES RELATED TO POWER NETWORK OPERATION, COMMUNICATION OR INFORMATION TECHNOLOGIES FOR IMPROVING THE ELECTRICAL POWER GENERATION, TRANSMISSION, DISTRIBUTION, MANAGEMENT OR USAGE, i.e. SMART GRIDS

- Y04S10/00—Systems supporting electrical power generation, transmission or distribution

- Y04S10/50—Systems or methods supporting the power network operation or management, involving a certain degree of interaction with the load-side end user applications

Landscapes

- Business, Economics & Management (AREA)

- Human Resources & Organizations (AREA)

- Engineering & Computer Science (AREA)

- Economics (AREA)

- Strategic Management (AREA)

- Entrepreneurship & Innovation (AREA)

- Theoretical Computer Science (AREA)

- General Physics & Mathematics (AREA)

- General Business, Economics & Management (AREA)

- Physics & Mathematics (AREA)

- Marketing (AREA)

- Tourism & Hospitality (AREA)

- Quality & Reliability (AREA)

- Operations Research (AREA)

- Game Theory and Decision Science (AREA)

- Educational Administration (AREA)

- Development Economics (AREA)

- Power Engineering (AREA)

- Health & Medical Sciences (AREA)

- Public Health (AREA)

- Water Supply & Treatment (AREA)

- General Health & Medical Sciences (AREA)

- Primary Health Care (AREA)

- Feedback Control In General (AREA)

Abstract

Description

Translated fromChinese技术领域technical field

本发明涉及微电网经济调度方法,尤其是涉及一种基于改进Q学习惩罚选择的微电网优化调度方法。The invention relates to a micro-grid economic scheduling method, in particular to a micro-grid optimal scheduling method based on improved Q-learning penalty selection.

背景技术Background technique

伴随着能源结构的不断调整,由多类能源设备组成且分散广泛的微电网系统依靠其独立输发配电、快速调度、可再生能源占比大及孤岛运行等优点得到了广泛应用。微电网系统可以提升偏远地区的供电质量,也可以有效地防止因自然灾害造成的电力供应中断等问题。With the continuous adjustment of the energy structure, the widely dispersed microgrid system composed of various types of energy equipment has been widely used due to its advantages of independent power transmission and distribution, fast dispatch, large proportion of renewable energy, and island operation. The microgrid system can improve the quality of power supply in remote areas, and can also effectively prevent power supply interruptions caused by natural disasters.

随着国家政策对新能源产业的不断扶持,风光并网规模不断增大。但由于风电、光伏出力的波动性与不确定性,其大规模接入微电网造成了系统内部功率不平衡及电能质量降低等问题。如何在保证微电网系统内部稳定安全运行的同时提升新能源发电占比是目前亟需解决的问题。With the continuous support of national policies for the new energy industry, the scale of grid-connected wind and solar continues to increase. However, due to the volatility and uncertainty of wind power and photovoltaic output, their large-scale access to microgrids has caused problems such as internal power imbalances and power quality degradation in the system. How to increase the proportion of new energy power generation while ensuring the stable and safe operation of the microgrid system is an urgent problem to be solved.

微电网内部包含传统机组、新能源发电机组、储能机组以及多类负荷需求,传统调度问题所考虑的单一机组发电成本问题已经无法满足微电网系统所追求的快速、经济、环保与安全调度的需求。因此对微电网系统多目标综合调度、各类机组运行新工况及多类机组与负荷需求的优化协调的具有重要意义。The microgrid contains traditional units, new energy generators, energy storage units, and various types of load demands. The cost of generating electricity for a single unit considered in the traditional dispatching problem has been unable to meet the requirements of fast, economical, environmentally friendly and safe dispatching pursued by the microgrid system. need. Therefore, it is of great significance for the multi-objective comprehensive scheduling of the microgrid system, the new operating conditions of various types of units, and the optimization and coordination of multi-type units and load demand.

发明内容Contents of the invention

本发明要解决的技术问题是提供一种基于改进Q学习惩罚选择的微电网优化调度方法,在常规机组、风光机组与储能机组协调运行的微电网传统调度方法中引入奖惩阶梯型弃风弃光惩罚回报函数,并通过由多元宇宙优化算法改进的Q学习算法将微电网调度问题进行状态与动作描述,以满足惩罚回报函数最优的基础上实现总体调度成本最低,降低可再生能源的弃用率,减少微电网与大电网能量交互的波动性,解决传统优化方法响应慢、不收敛的问题,提升微电网运行的稳定性与经济性。The technical problem to be solved by the present invention is to provide an optimal scheduling method for micro-grids based on improved Q-learning penalty selection, and introduce rewards and punishments into the traditional micro-grid scheduling method for the coordinated operation of conventional units, wind turbines and energy storage units. The light penalty reward function, and through the Q learning algorithm improved by the multiverse optimization algorithm, describe the state and action of the microgrid scheduling problem, so as to achieve the lowest overall scheduling cost and reduce the waste of renewable energy on the basis of the optimal penalty reward function. It can reduce the fluctuation of energy interaction between microgrid and large grid, solve the problems of slow response and non-convergence of traditional optimization methods, and improve the stability and economy of microgrid operation.

为了解决现有技术存在的问题,本发明采用的技术方案如下:In order to solve the problems existing in the prior art, the technical scheme adopted in the present invention is as follows:

一种基于改进Q学习惩罚选择的微电网优化调度方法,包括如下步骤:A microgrid optimal scheduling method based on improved Q-learning penalty selection, comprising the following steps:

步骤1:以微电网内部常规机组运行成本、环境效益成本、大电网功率交互成本构建目标函数;Step 1: Construct the objective function based on the operating cost of conventional units in the microgrid, the cost of environmental benefits, and the power interaction cost of the large power grid;

步骤2:建立微电网运行的约束条件;Step 2: Establish constraints on microgrid operation;

步骤3:构造以最高弃风弃光成本与风光完全消纳成本为最高与最低阈值的惩罚回报函数;Step 3: Construct a penalty-return function with the highest cost of abandoning wind and solar energy and the cost of fully absorbing wind and solar energy as the highest and lowest thresholds;

步骤4:采用多元宇宙优化算法改进传统Q学习算法;Step 4: Improve the traditional Q-learning algorithm by using the multiverse optimization algorithm;

优化后的改进Q学习算法的状态-动作函数表示如下:The optimized state-action function of the improved Q-learning algorithm is expressed as follows:

式中:Fs作为传统Q学习的状态特征;为经多元宇宙优化算法优化后的动作特征;/>分别为状态特征与动作特征的初始值;Emvo-p为MVO-Q策略下的期望值;T为迭代次数;/>YT分别为迭代下的奖赏值与折扣系数;In the formula: Fs is used as the state feature of traditional Q-learning; It is the action feature optimized by the multiverse optimization algorithm; /> are the initial values of state features and action features; Emvo-p is the expected value under the MVO-Q strategy; T is the number of iterations; /> YT are the reward value and discount coefficient under iteration respectively;

步骤5:将步骤1所得目标函数进行马尔科夫决策描述处理,并以改进的Q学习算法对所得状态与动作描述进行规划求解。Step 5: The objective function obtained in

其中,所述步骤1包括如下步骤:Wherein, said

步骤1.1:在风光高比例并网情况下,将常规机组分为常规运行与在低负荷的运行状态,微电网内部常规发电成本表示如下:Step 1.1: In the case of a high proportion of wind and wind connected to the grid, the conventional units are divided into normal operation and low-load operation. The internal conventional power generation cost of the microgrid is expressed as follows:

式中:a、b、c为常规机组正常运行状态下的成本因子;Pi为第i台常规机组出力;g、h、l、p为低负荷运行状态下的成本因子;kPi,max为第i台常规机组的正常运行状态与低功率运行状态的临界功率;In the formula: a, b, c are the cost factors under the normal operation state of the conventional unit; Pi is the output of the i-th conventional unit; g, h, l, p are the cost factors under the low load operation state; kPi,max is the critical power of the i-th conventional unit in normal operation state and low power operation state;

步骤1.2:风光不确定出力情况下,常规机组的启停成本表示如下:Step 1.2: In the case of uncertain wind and solar output, the start-stop cost of conventional units is expressed as follows:

式中:Fon-off为常规机组启停成本;C为机组的启停次数;K(ti,r)为第i机组第r次启动的成本;ti,r为第i机组在C次启动前的连续停运时间;C(ti,r)为机组冷态启动是相关辅助系统的操作成本;tcold-hot为机组冷态启动与热态启动的停运临界时间;In the formula: Fon-off is the start-stop cost of the conventional unit; C is the number of start-stop times of the unit; K(ti,r ) is the cost of the r-th start-up of the i-th unit; ti,r is the cost of the i-th unit at C The continuous outage time before the second start; C(ti,r ) is the operating cost of the related auxiliary system for the cold start of the unit; tcold-hot is the critical outage time for the cold start and hot start of the unit;

步骤1.3:常规机组发电排放污染物主要含有氮氧化物、硫氧化物以及二氧化碳等,其治理成本表示如下:Step 1.3: Pollutants emitted by conventional units for power generation mainly include nitrogen oxides, sulfur oxides, and carbon dioxide, etc., and the treatment costs are expressed as follows:

Em(Pi)=(αi,m+βi,mPi+γi,mPi2)+ζi,mexp(δi,mPi)Em (Pi )=(αi,m +βi,m Pi +γi,m Pi2 )+ζi,m exp(δi,m Pi )

式中:Fg为常规机组污染治理成本;M为排放污染物的种类;Em(Pi)为第i台机组污染物的排放量;ηm为第m类污染物的治理成本系数;In the formula: Fg is the pollution control cost of conventional units; M is the type of pollutants discharged; Em (Pi ) is the pollutant emission of the i-th unit; ηm is the treatment cost coefficient of the m-th type of pollutants;

αi,m、βi,m、γi,m、ζi,m、δi,m为第i台机组排放的第m种污染物的排放系数;αi,m , βi,m , γi,m , ζi,m , δi,m are the emission coefficients of the mth pollutant emitted by the i unit;

步骤1.4:微电网与大电网的功率交换成本表示如下:Step 1.4: The cost of power exchange between the microgrid and the large grid is expressed as follows:

式中:λp为微电网售购电状态,售电取值为1,购电取值为-1;Psu/sh为微电网内部的功率盈余与缺额;为大电网的售购电价格;In the formula: λp is the status of electricity sales and purchases in the microgrid, the value of electricity sales is 1, and the value of electricity purchase is -1; Psu/sh is the power surplus and shortage inside the microgrid; It is the price of electricity sold and purchased by the large power grid;

步骤1.5:以微电网内部常规机组运行成本、环境效益成本、主电网功率交换成本构建目标函数表示如下:Step 1.5: The objective function is constructed based on the operating cost of conventional units in the microgrid, the cost of environmental benefits, and the cost of power exchange in the main grid as follows:

minF=Fcf+Fon-off+Fg+Fgrid。minF=Fcf +Fon-off +Fg +Fgrid .

式中:F为微电网系统运行的目标函数值;Fcf、Fon-off、Fg、Fgrid分别为常规机组运行成本、启停成本、污染治理成本以及微电网与大电网功率交互成本。In the formula: F is the objective function value of micro-grid system operation; Fcf , Fon-off , Fg , and Fgrid are the operating cost of conventional units, start-up and shutdown costs, pollution control costs, and power interaction costs between micro-grid and large power grid, respectively .

其中,所述步骤2包括如下步骤:Wherein, said

步骤2.1:功率平衡约束表示如下:Step 2.1: The power balance constraints are expressed as follows:

式中:分别表示t时段常规机组、风电与光伏输出功率;/>为t时段蓄电池的储释功率;Ptgrid为与大电网交互功率;PtL为t时段的总负荷功率;T为微电网运行总时段,取24h;In the formula: Respectively represent the output power of conventional units, wind power and photovoltaic power during the t period; /> is the storage and release power of the battery in the t period; Ptgrid is the power interacting with the large grid; PtL is the total load power in the t period; T is the total operation period of the microgrid, which is 24h;

步骤2.2:蓄电池储释状态约束表示如下:Step 2.2: The battery storage and release state constraints are expressed as follows:

SOCmin≤SOC(t)≤SOCmaxSOCmin ≤ SOC(t) ≤ SOCmax

式中:SOC(t)为蓄电池t时刻荷电状态;SOCmin与SOCmax分别代表蓄电池的最大与最小荷电状态;In the formula: SOC(t) is the state of charge of the battery at time t; SOCmin and SOCmax represent the maximum and minimum state of charge of the battery, respectively;

步骤2.3:对于常规机组而言,其累计的启停时间应该大于最小连续启停时间,其约束表示如下:Step 2.3: For conventional units, the cumulative start-stop time should be greater than the minimum continuous start-stop time, and its constraints are expressed as follows:

式中:为机组最小的连续停止时间;/>为机组最小的连续启动时间。In the formula: is the minimum continuous stop time of the unit; /> It is the minimum continuous start time of the unit.

其中,所述步骤3包括如下步骤:Wherein, said

步骤3.1:规定微电网内部弃风弃光量的最低与最高额度,划分风光完全消纳量至弃风弃光量最高额度的增长区间χn,区间表示如下:Step 3.1: Define the minimum and maximum amount of wind and solar curtailment within the microgrid, and divide the growth interval χn from the complete consumption of wind and solar energy to the maximum amount of wind and solar curtailment. The interval is expressed as follows:

式中:分别为系统内部规定的弃风弃光量的最高与最低额度;n为所划分的区间个数;λ为规定额度增长量的增长步长;In the formula: Respectively, the maximum and minimum amount of wind and light curtailment stipulated in the system; n is the number of divided intervals; λ is the growth step of the specified amount of growth;

步骤3.2:根据系统对于弃风弃光量所规定的额度区间,将其进行线性化处理获得奖惩阶梯型弃风弃光惩罚回报函数,函数表示如下:Step 3.2: According to the quota range stipulated by the system for the amount of curtailment of wind and solar, linearize it to obtain a ladder-type reward function for curtailing wind and solar. The function is expressed as follows:

式中:dab弃风弃光惩罚回报函数值;Pab,wp为系统的弃风弃光量;c为弃风弃光惩罚系数;k为惩罚系数的区间增长步长。In the formula: dab is the reward function value of wind and solar curtailment penalty; Pab,wp is the amount of wind and solar curtailment of the system; c is the penalty coefficient of wind and solar curtailment; k is the interval growth step of the penalty coefficient.

其中,所述步骤5包括如下步骤:Wherein, said

步骤5.1:步骤1所述目标函数包含机组运行成本、环境效益成本、主电网功率交换成本,将系统内各主体在迭代过程T中的状态描述表示为:Step 5.1: The objective function described in

Fs=[Fcf,Fon-off,Em(Pi),Fg,Fgrid,F]Fs =[Fcf ,Fon-off ,Em (Pi ),Fg ,Fgrid ,F]

步骤5.2:步骤2所述约束条件包含常规机组输出功率、风电与光伏输出功率、蓄电池的储释功率、大电网交互功率、总负荷功率,同时兼顾弃风弃光量奖惩原则,将其进行离散化处理为N个动作所得到的系统内各主体在迭代过程T中的动作描述,表示为:Step 5.2: The constraints described in

步骤5.3:多元宇宙算法改进的Q学习算法求解目标函数的最优值步骤如下:Step 5.3: The multiverse algorithm improved Q-learning algorithm to find the optimal value of the objective function. The steps are as follows:

5.31)规定微电网内部弃风弃光量的最低与最高额度,划分弃风弃光惩罚区间,初始化多元宇宙算法各项参数,其中宇宙个体数N,维数n,最大迭代次数MAX,初始虫洞位置Xij;5.31) Define the minimum and maximum amount of wind and light curtailment within the microgrid, divide the wind and light curtailment penalty interval, and initialize the parameters of the multiverse algorithm, including the number of universe individuals N, the dimension n, the maximum number of iterations MAX, and the initial wormhole position Xij ;

5.32)随机选定Q学习算法的初始状态5.32) Randomly select the initial state of the Q-learning algorithm

5.33)多元宇宙算法优化Q学习贪婪策略的初始动作5.33) The multiverse algorithm optimizes the initial action of the Q-learning greedy strategy

5.34)基于贪婪策略输出初始状态为的初始动作,进行初始寻优准备;5.34) The initial state based on the greedy strategy output is The initial action for initial optimization preparation;

5.35)依据优化后的初始动作进行目标函数最优值minF的求解;5.35) Solve the optimal value minF of the objective function according to the optimized initial action;

5.36)判断是否满足误差精度;5.36) Judging whether the error accuracy is satisfied;

5.37)若满足误差精度,选定动作并计算多元宇宙算法的最优值更新与虫洞距离,同时进行下一次迭代,最优值更新公式如下:5.37) If the error accuracy is met, select the action And calculate the optimal value update and wormhole distance of the multiverse algorithm, and proceed to the next iteration at the same time, the optimal value update formula is as follows:

式中:Xj为最优宇宙个体所在位置;p1/p2/p3∈[0,1],为随机数;ε为宇宙膨胀率;uj,lj为x的上下限;η为虫洞在所有个体中占比,由迭代次数l与最大迭代次数L规定,表示如下:In the formula: Xj is the position of the optimal individual in the universe; p1 /p2 /p3 ∈[0,1] is a random number; ε is the expansion rate of the universe; uj , lj are the upper and lower limits of x; η is the proportion of wormholes in all individuals, specified by the number of iterations l and the maximum number of iterations L, expressed as follows:

多元宇宙算法寻优机制为黑洞与摆动遵循轮盘赌机制进行选择、个体通过膨胀与自变向当前最优宇宙移动,移动过程中最优移动距离与迭代精度p有关,表示如下:The optimization mechanism of the multiverse algorithm is that the black hole and the swing follow the roulette mechanism to select, and the individual moves to the current optimal universe through expansion and self-change. The optimal moving distance during the moving process is related to the iteration precision p, which is expressed as follows:

5.38)若不满足误差精度,则抛弃本次迭代动作重新进行动作选择并返回步骤5.35);5.38) If the error accuracy is not satisfied, discard this iterative action and re-select the action and return to step 5.35);

5.39)判断是否目标函数值是否为全局最优值,如果不是,则返回步骤5.38);5.39) judge whether the objective function value is the global optimal value, if not, then return to step 5.38);

5.40)若为全局最优值,则输出最终状态与动作;5.40) If it is the global optimal value, output the final state and action;

5.41)计算最终结果。5.41) Calculate the final result.

进一步地,所述步骤3.2将奖惩阶梯型弃风弃光惩罚回报函数作为改进Q学习方法中的动作值。Further, in the step 3.2, the reward-punishment ladder-type penalty return function for abandoning wind and light is used as the action value in the improved Q-learning method.

进一步地,所述步骤4采用多元宇宙优化算法改进传统Q学习算法中的状态特征对应目标函数的最优值。Further, in

进一步地,所述步骤4采用多元宇宙优化算法改进传统Q学习算法的改进方法具体为:Further, the improvement method of

使用多元宇宙算法对Q学习的多级贪婪动作进行优化,降低寻优中冗余动作的发生,进而降低本次迭代结果Qmvo-q的误差精度γT;在不满足本次迭代误差精度的情况下进行下一次状态-动作策略,采用多元宇宙算法进行下一次的优化处理,优化公式表示如下:Use the multiverse algorithm to optimize the multi-level greedy action of Q learning, reduce the occurrence of redundant actions in optimization, and then reduce the error accuracy γT of this iteration result Qmvo-q ; if the error accuracy of this iteration is not satisfied In this case, the next state-action strategy is carried out, and the multiverse algorithm is used for the next optimization process. The optimization formula is expressed as follows:

本发明所具有的优点和有益效果是:The advantages and beneficial effects that the present invention has are:

本发明方法兼顾风光消纳、环境效益与经济效益,考虑微电网内部常规机组、风光机组、储能机组、大电网交互过程以及污染物治理为目标函数建立数学模型,并引入了一种奖惩阶梯型弃风弃光惩罚回报函数对风光发电并网进一步规划。同时提出了一种多元宇宙算法改进的Q学习算法,将传统Q学习的状态与动作参数对应与微电网调度的目标函数、约束条件与弃风弃光奖惩,在满足系统稳定供电的同时实现环境效益最大与风光的完全消纳。本发明所提出改进的Q学习算法采用规划机制寻优,避免了传统算法在寻优过程中产生的最优值局部收敛问题,并考虑弃风弃光惩罚回报的选择机制,解决了微电网调度模型中的多目标优化问题。The method of the invention takes into account the consumption of wind and rain, environmental benefits and economic benefits, and considers the conventional unit, wind and wind unit, energy storage unit, large power grid interaction process and pollutant treatment in the microgrid as the objective function to establish a mathematical model, and introduces a reward and punishment ladder The penalty reward function for abandoning wind and solar power is further planned for grid-connected wind and solar power generation. At the same time, a Q-learning algorithm improved by the multiverse algorithm is proposed, which corresponds the state and action parameters of traditional Q-learning to the objective function, constraint conditions and rewards and punishments of curtailment of wind and light in microgrid scheduling, and realizes environmental protection while satisfying stable power supply of the system. Maximum benefits and complete consumption of scenery. The improved Q-learning algorithm proposed by the present invention adopts the planning mechanism to optimize, which avoids the problem of local convergence of the optimal value generated by the traditional algorithm in the optimization process, and considers the selection mechanism of the penalty return for abandoning wind and light, and solves the problem of microgrid scheduling. Multi-objective optimization problems in the model.

本发明方法降低了微电网运行调度中可再生能源的弃用率,减少了微电网与大电网能量交互的波动性,解决了传统优化方法响应慢、不收敛的问题,提升了微电网运行的稳定性与经济性。The method of the invention reduces the abandonment rate of renewable energy in the operation and scheduling of the micro-grid, reduces the fluctuation of energy interaction between the micro-grid and the large power grid, solves the problem of slow response and non-convergence of the traditional optimization method, and improves the efficiency of the operation of the micro-grid. stability and economy.

附图说明Description of drawings

下面结合附图和实施例,对本发明作进一步详细描述:Below in conjunction with accompanying drawing and embodiment, the present invention is described in further detail:

图1为多元宇宙优化算法改进的Q学习算法优化流程图;Fig. 1 is a Q-learning algorithm optimization flow chart improved by the multiverse optimization algorithm;

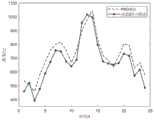

图2为仿真图风光消纳量曲线;Figure 2 is the curve of the wind and light consumption in the simulation graph;

图3为仿真图综合成本曲线;Fig. 3 is the integrated cost curve of the simulation diagram;

图4为本发明一种基于改进Q学习惩罚选择的微电网优化调度方法流程图。Fig. 4 is a flowchart of a microgrid optimal scheduling method based on improved Q-learning penalty selection in the present invention.

具体实施方式Detailed ways

下面结合附图和实施例,对本发明的具体实施方式作进一步详细描述。以下实施例用于说明本发明,但不用来限制本发明的范围。The specific implementation manners of the present invention will be further described in detail below in conjunction with the accompanying drawings and embodiments. The following examples are used to illustrate the present invention, but are not intended to limit the scope of the present invention.

如图4所示,本发明一种基于改进Q学习惩罚选择的微电网优化调度方法,包括以下步骤:As shown in Figure 4, a microgrid optimal scheduling method based on improved Q-learning penalty selection in the present invention includes the following steps:

步骤1:以微电网内部常规机组运行成本、环境效益成本、主电网功率交换成本构建目标函数;Step 1: Construct the objective function based on the operating cost of conventional units in the microgrid, the cost of environmental benefits, and the cost of power exchange in the main grid;

步骤1.1:在风光高比例并网情况下,将常规机组分为常规运行与在低负荷的运行状态,即微电网内部常规发电成本表示如下:Step 1.1: In the case of a high proportion of wind and wind connected to the grid, the conventional unit is divided into normal operation and low-load operation, that is, the internal conventional power generation cost of the microgrid is expressed as follows:

式中:Fcf为常规机组运行成本;a、b、c为常规机组正常运行状态下的成本因子;Pi为第i台常规机组出力;g、h、l、p为低负荷运行状态下的成本因子;kPi,max为第i台常规机组的正常运行状态与低功率运行状态的临界功率。In the formula:Fcf is the operating cost of conventional units; a, b, c are the cost factors of conventional units in normal operation; Pi is the output of the i-th conventional unit; g, h, l, p are The cost factor of ; kPi,max is the critical power of the i-th conventional unit in normal operation state and low power operation state.

步骤1.2:风光不确定处理情况下,常规机组的启停成本表示如下:Step 1.2: In the case of uncertain scenery, the start-stop cost of conventional units is expressed as follows:

式中:Fon-off为常规机组启停成本;C为机组的启停次数;K(ti,r)为第i机组第r次启动的成本;ti,r为第i机组在C次启动前的连续停运时间;C(ti,r)为机组冷态启动是相关辅助系统的操作成本;tcold-hot为机组冷态启动与热态启动的停运临界时间。In the formula: Fon-off is the start-stop cost of the conventional unit; C is the number of start-stop times of the unit; K(ti,r ) is the cost of the r-th start-up of the i-th unit; ti,r is the cost of the i-th unit at C C(ti,r ) is the operating cost of the relevant auxiliary system for the cold start of the unit; tcold-hot is the critical outage time for the cold start and hot start of the unit.

步骤1.3:常规机组发电排放污染物主要含有氮氧化物、硫氧化物以及二氧化碳等,其治理成本表示如下:Step 1.3: Pollutants emitted by conventional units for power generation mainly include nitrogen oxides, sulfur oxides, and carbon dioxide, etc., and the treatment costs are expressed as follows:

Em(Pi)=(αi,m+βi,mPi+γi,mPi2)+ζi,mexp(δi,mPi)Em (Pi )=(αi,m +βi,m Pi +γi,m Pi2 )+ζi,m exp(δi,m Pi )

式中:Fg为常规机组污染治理成本;M为排放污染物的种类;Em(Pi)为第i台机组污染物的排放量;ηm为第m类污染物的治理成本系数;αi,m、βi,m、γi,m、ζi,m、δi,m为第i台机组排放的第m种污染物的排放系数;In the formula: Fg is the pollution control cost of conventional units; M is the type of pollutants discharged; Em (Pi ) is the pollutant emission of the i-th unit; ηm is the treatment cost coefficient of the m-th type of pollutants; αi,m , βi,m , γi,m , ζi,m , δi,m are the emission coefficients of the mth pollutant emitted by the i unit;

步骤1.4:微电网与大电网的功率交换成本表示如下:Step 1.4: The cost of power exchange between the microgrid and the large grid is expressed as follows:

式中:Fgrid为微电网与大电网功率交互成本;λp为微电网售购电状态,售电取值为1,购电取值为-1;Psu/sh为微电网内部的功率盈余与缺额;为大电网的售购电价格。In the formula: Fgrid is the power interaction cost between the microgrid and the large grid; λp is the state of the microgrid’s electricity sales and purchases, the value of electricity sales is 1, and the value of electricity purchase is -1; Psu/sh is the internal power of the microgrid surpluses and deficits; It is the price of electricity sold and purchased by the large power grid.

步骤1.5:以微电网内部常规机组运行成本、环境效益成本、主电网功率交换成本构建目标函数表示如下:Step 1.5: The objective function is constructed based on the operating cost of conventional units in the microgrid, the cost of environmental benefits, and the cost of power exchange in the main grid as follows:

minF=Fcf+Fon-off+Fg+FgridminF=Fcf +Fon-off +Fg +Fgrid

式中:F为微电网系统运行的目标函数值;Fcf、Fon-off、Fg、Fgrid分别为常规机组运行成本、启停成本、污染治理成本以及微电网与大电网功率交互成本。In the formula: F is the objective function value of micro-grid system operation; Fcf , Fon-off , Fg , and Fgrid are the operating cost of conventional units, start-up and shutdown costs, pollution control costs, and power interaction costs between micro-grid and large power grid, respectively .

步骤2:建立微电网运行的约束条件;Step 2: Establish constraints on microgrid operation;

步骤2.1:功率平衡约束表示如下:Step 2.1: The power balance constraints are expressed as follows:

式中:分别表示t时段常规机组、风电与光伏输出功率;/>为t时段蓄电池的储释功率;Ptgrid为与大电网交互功率;PtL为t时段的总负荷功率;T为微电网运行总时段,取24h。In the formula: Respectively represent the output power of conventional units, wind power and photovoltaic power during the t period; /> is the storage and release power of the battery during the t period; Ptgrid is the interactive power with the large grid; PtL is the total load power during the t period; T is the total operation period of the microgrid, which is 24h.

步骤2.2:蓄电池储释状态约束表示如下:Step 2.2: The battery storage and release state constraints are expressed as follows:

SOCmin≤SOC(t)≤SOCmaxSOCmin ≤ SOC(t) ≤ SOCmax

式中:SOC(t)为蓄电池t时刻荷电状态;SOCmin与SOCmax分别代表蓄电池的最大与最小荷电状态。In the formula: SOC(t) is the state of charge of the battery at time t; SOCmin and SOCmax represent the maximum and minimum state of charge of the battery, respectively.

步骤2.3:对于常规机组而言,其累计的启停时间应该大于最小连续启停时间,其约束表示如下:Step 2.3: For conventional units, the cumulative start-stop time should be greater than the minimum continuous start-stop time, and its constraints are expressed as follows:

式中:为机组最小的连续停止时间;/>为机组最小的连续启动时间。In the formula: is the minimum continuous stop time of the unit; /> It is the minimum continuous start time of the unit.

步骤3:构造以最高弃风弃光成本与风光完全消纳成本为最高与最低阈值的惩罚回报函数;Step 3: Construct a penalty-return function with the highest cost of abandoning wind and solar energy and the cost of fully absorbing wind and solar energy as the highest and lowest thresholds;

步骤3.1:规定微电网内部弃风弃光量的最低与最高额度,划分风光完全消纳量至弃风弃光量最高额度的增长区间χn,区间表示如下:Step 3.1: Define the minimum and maximum amount of wind and solar curtailment within the microgrid, and divide the growth interval χn from the complete consumption of wind and solar energy to the maximum amount of wind and solar curtailment. The interval is expressed as follows:

式中:分别为系统内部规定的弃风弃光量的最高与最低额度;n为所划分的区间个数;λ为规定额度增长量的增长步长。In the formula: Respectively, the maximum and minimum amount of wind and light curtailment stipulated in the system; n is the number of divided intervals; λ is the growth step of the specified amount of growth.

步骤3.2:根据系统对于弃风弃光量所规定的额度区间,将其进行线性化处理获得奖惩阶梯型弃风弃光惩罚回报函数,函数表示如下:Step 3.2: According to the quota range stipulated by the system for the amount of curtailment of wind and solar, linearize it to obtain a ladder-type reward function for curtailing wind and solar. The function is expressed as follows:

式中:dab弃风弃光惩罚回报函数值;Pab,wp为系统的弃风弃光量;c为弃风弃光惩罚系数;k为惩罚系数的区间增长步长。In the formula: dab is the reward function value of wind and solar curtailment penalty; Pab,wp is the amount of wind and solar curtailment of the system; c is the penalty coefficient of wind and solar curtailment; k is the interval growth step of the penalty coefficient.

步骤3.2中将奖惩阶梯型弃风弃光惩罚回报函数作为改进Q学习方法中的动作值。In step 3.2, the reward-punishment ladder-type penalty return function for abandoning wind and light is used as the action value in the improved Q-learning method.

步骤4:采用多元宇宙优化算法改进传统Q学习算法;Step 4: Improve the traditional Q-learning algorithm by using the multiverse optimization algorithm;

多元宇宙优化算法作为启发式搜索算法,将宇宙作为问题可行解,通过黑洞、白洞与虫洞的相互作用进行循环迭代,即将传统Q学习算法在非监督状态下的最优选择进行迭代优化从而得到强化后的目标解。优化后的改进Q学习算法的状态-动作函数表示如下:As a heuristic search algorithm, the multiverse optimization algorithm takes the universe as a feasible solution to the problem, and performs cyclic iterations through the interaction of black holes, white holes and wormholes, and iteratively optimizes the optimal choice of the traditional Q-learning algorithm in an unsupervised state. Get the enhanced target solution. The optimized state-action function of the improved Q-learning algorithm is expressed as follows:

式中:Fs作为传统Q学习的状态特征,对应微电网系统运行的目标函数F;为经多元宇宙优化算法优化后的动作特征,对应奖惩阶梯型弃风弃光惩罚回报函数值dab;分别为状态特征与动作特征的初始值;Emvo-p为MVO-Q策略下的期望值;T为迭代次数;/>YT分别为迭代下的奖赏值与折扣系数。In the formula: Fs is the state feature of traditional Q-learning, which corresponds to the objective function F of microgrid system operation; is the action feature optimized by the multiverse optimization algorithm, and corresponds to the value of reward and punishment reward function dab for stepwise abandonment of wind and light; are the initial values of state features and action features; Emvo-p is the expected value under the MVO-Q strategy; T is the number of iterations; /> YT are the reward value and discount coefficient under the iteration respectively.

使用多元宇宙算法对Q学习的多级贪婪动作进行优化,降低寻优中冗余动作的发生,进而降低本次迭代结果Qmvo-q的误差精度γT(初始误差精度为γT0)。在不满足本次迭代误差精度的情况下进行下一次状态-动作策略,采用多元宇宙算法进行下一次的优化处理,优化公式表示如下:Using the multiverse algorithm to optimize the multi-level greedy action of Q learning, reduce the occurrence of redundant actions in optimization, and then reduce the error accuracy γT of the iterative result Qmvo-q (the initial error accuracy is γT0 ). When the error accuracy of this iteration is not satisfied, the next state-action strategy is carried out, and the multiverse algorithm is used for the next optimization process. The optimization formula is expressed as follows:

式中:为T-1时刻的动作特征与状态特征;/>为T时刻的状态特征;为T-1时刻下的奖赏值In the formula: is the action feature and state feature at T-1 time; /> is the state characteristic at time T; is the reward value at time T-1

将多元宇宙优化算法改进传统Q学习算法中状态特征对应目标函数的最优值。The multiverse optimization algorithm is improved to the optimal value of the objective function corresponding to the state characteristics in the traditional Q-learning algorithm.

步骤5:将步骤1所得目标函数进行马尔科夫决策描述处理,并以改进的Q学习算法对所得状态与动作描述进行规划求解。Step 5: The objective function obtained in

步骤5.1:步骤1所述目标函数包含机组运行成本、环境效益成本、主电网功率交换成本,故将系统内各主体在迭代过程T中的状态描述表示为:Step 5.1: The objective function described in

Fs=[Fcf,Fon-off,Em(Pi),Fg,Fgrid,F]Fs =[Fcf ,Fon-off ,Em (Pi ),Fg ,Fgrid ,F]

步骤5.2:步骤2所述约束条件包含常规机组输出功率、风电与光伏输出功率、蓄电池的储释功率、大电网交互功率、总负荷功率,同时兼顾弃风弃光量奖惩原则,将其进行离散化处理为N个动作所得到的系统内各主体在迭代过程T中的动作描述,表示为:Step 5.2: The constraints described in

步骤5.3:如图1所示,多元宇宙算法改进的Q学习算法求解目标函数的最优值步骤如下:Step 5.3: As shown in Figure 1, the steps to solve the optimal value of the objective function by the improved Q-learning algorithm of the multiverse algorithm are as follows:

5.31)规定微电网内部弃风弃光量的最低与最高额度,划分弃风弃光惩罚区间,初始化多元宇宙算法各项参数,其中宇宙个体数N,维数n,最大迭代次数MAX,初始虫洞位置Xij;5.31) Define the minimum and maximum amount of wind and light curtailment within the microgrid, divide the wind and light curtailment penalty interval, and initialize the parameters of the multiverse algorithm, including the number of universe individuals N, the dimension n, the maximum number of iterations MAX, and the initial wormhole position Xij ;

5.32)随机选定Q学习算法的初始状态5.32) Randomly select the initial state of the Q-learning algorithm

5.33)多元宇宙算法优化Q学习贪婪策略的初始动作5.33) The multiverse algorithm optimizes the initial action of the Q-learning greedy strategy

5.34)基于贪婪策略输出初始状态为的初始动作,进行初始寻优准备;5.34) The initial state based on the greedy strategy output is The initial action for initial optimization preparation;

5.35)依据优化后的初始动作进行目标函数最优值minF的求解;5.35) Solve the optimal value minF of the objective function according to the optimized initial action;

5.36)判断是否满足误差精度;5.36) Judging whether the error accuracy is satisfied;

5.37)若满足误差精度,则选定动作并计算多元宇宙算法的最优值更新与虫洞距离,同时进行下一次迭代,最优值更新公式如下:5.37) If the error accuracy is met, select the action And calculate the optimal value update and wormhole distance of the multiverse algorithm, and proceed to the next iteration at the same time, the optimal value update formula is as follows:

式中:Xj为最优宇宙个体所在位置;p1/p2/p3∈[0,1],为随机数;ε为宇宙膨胀率;uj,lj为x的上下限;η为虫洞在所有个体中占比,由迭代次数l与最大迭代次数L规定,表示如下:In the formula: Xj is the position of the optimal individual in the universe; p1 /p2 /p3 ∈[0,1] is a random number; ε is the expansion rate of the universe; uj , lj are the upper and lower limits of x; η is the proportion of wormholes in all individuals, specified by the number of iterations l and the maximum number of iterations L, expressed as follows:

多元宇宙算法寻优机制为黑洞与摆动遵循轮盘赌机制进行选择、个体通过膨胀与自变向当前最优宇宙移动,移动过程中最优移动距离与迭代精度p有关,表示如下:The optimization mechanism of the multiverse algorithm is that the black hole and the swing follow the roulette mechanism to select, and the individual moves to the current optimal universe through expansion and self-change. The optimal moving distance during the moving process is related to the iteration precision p, which is expressed as follows:

5.38)若不满足误差精度,则抛弃本次迭代动作重新进行动作选择并返回步骤5.35);5.38) If the error accuracy is not satisfied, discard this iterative action and re-select the action and return to step 5.35);

5.39)判断是否目标函数值是否为全局最优值,如果不是则返回步骤5.38)。5.39) Judging whether the objective function value is the global optimal value, if not, return to step 5.38).

5.40)若为全局最优值则输出最终状态与动作;5.40) If it is the global optimal value, output the final state and action;

5.41)计算最终结果。5.41) Calculate the final result.

采用常规微电网内部的经典电负荷需求进行实验仿真,实验参数设置如下:The experimental simulation is carried out using the classic electric load demand inside the conventional microgrid, and the experimental parameters are set as follows:

本发明方法针对包含风电场、光伏发电厂、燃气轮机机组、储能机组的典型微电网进行优化调度,且假设存在微电网与大电网的功率交互,并采用传统粒子群算法与上述改进Q学习算法对目标函数进行优化求解,得到满足风光最大消纳量的系统综合调度计划。如图2、3所示,经过仿真实验对比分析,运用本发明的方法进行微电网调度风光消纳总量提升了33.18%,综合成本降低了6.51%。因此,本发明在微电网的调度规划过程中可以极大的提高风光消纳比例,在满足环境效益的同时达到经济效益的最大化。The method of the present invention optimizes scheduling for a typical micro-grid including wind farms, photovoltaic power plants, gas turbine units, and energy storage units, and assumes that there is power interaction between the micro-grid and the large power grid, and adopts the traditional particle swarm algorithm and the above-mentioned improved Q learning algorithm The objective function is optimized and solved, and the system comprehensive scheduling plan that satisfies the maximum consumption of wind and solar energy is obtained. As shown in Figures 2 and 3, after comparison and analysis of simulation experiments, the total amount of wind and solar consumption for microgrid scheduling by using the method of the present invention has increased by 33.18%, and the overall cost has been reduced by 6.51%. Therefore, the present invention can greatly increase the proportion of wind and solar consumption in the scheduling and planning process of the microgrid, and achieve maximum economic benefits while satisfying environmental benefits.

最后应说明的是,以上实施例仅用以说明本发明的技术方案,而非对其限制;尽管参照前述实施例对本发明进行了详细的说明,本领域的普通技术人员应当理解:其依然可以对前述实施例所记载的技术方案进行修改,或者对其中部分或者全部技术特征进行等同替换;而这些修改或者替换,并不使相应技术方案的本质脱离本发明权利要求所限定的范围。Finally, it should be noted that the above embodiments are only used to illustrate the technical solutions of the present invention, rather than limit them; although the present invention has been described in detail with reference to the foregoing embodiments, those of ordinary skill in the art should understand that: it can still be Modifications are made to the technical solutions described in the foregoing embodiments, or equivalent replacements are made to some or all of the technical features; these modifications or replacements do not make the essence of the corresponding technical solutions depart from the scope defined by the claims of the present invention.

Claims (8)

Translated fromChinesePriority Applications (1)

| Application Number | Priority Date | Filing Date | Title |

|---|---|---|---|

| CN202111115317.6ACN113809780B (en) | 2021-09-23 | 2021-09-23 | Micro-grid optimal scheduling method based on improved Q learning punishment selection |

Applications Claiming Priority (1)

| Application Number | Priority Date | Filing Date | Title |

|---|---|---|---|

| CN202111115317.6ACN113809780B (en) | 2021-09-23 | 2021-09-23 | Micro-grid optimal scheduling method based on improved Q learning punishment selection |

Publications (2)

| Publication Number | Publication Date |

|---|---|

| CN113809780A CN113809780A (en) | 2021-12-17 |

| CN113809780Btrue CN113809780B (en) | 2023-06-30 |

Family

ID=78940309

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |

|---|---|---|---|

| CN202111115317.6AActiveCN113809780B (en) | 2021-09-23 | 2021-09-23 | Micro-grid optimal scheduling method based on improved Q learning punishment selection |

Country Status (1)

| Country | Link |

|---|---|

| CN (1) | CN113809780B (en) |

Families Citing this family (3)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| CN114418198A (en)* | 2021-12-30 | 2022-04-29 | 国网辽宁省电力有限公司电力科学研究院 | A piecewise functional calculation method for the penalty cost of abandoning new energy |

| CN114862048B (en)* | 2022-05-30 | 2024-09-17 | 哈尔滨理工大学 | Permanent magnet synchronous motor optimization method based on improved multi-element universe optimization algorithm |

| CN117439190B (en)* | 2023-10-26 | 2024-06-11 | 华中科技大学 | A method, device, equipment and storage medium for dispatching water, fire and wind systems |

Citations (4)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| CN108964042A (en)* | 2018-07-24 | 2018-12-07 | 合肥工业大学 | Regional power grid operating point method for optimizing scheduling based on depth Q network |

| CN109347149A (en)* | 2018-09-20 | 2019-02-15 | 国网河南省电力公司电力科学研究院 | Microgrid energy storage scheduling method and device based on deep Q-value network reinforcement learning |

| JP6667785B1 (en)* | 2019-01-09 | 2020-03-18 | 裕樹 有光 | A program for learning by associating a three-dimensional model with a depth image |

| CN112084680A (en)* | 2020-09-02 | 2020-12-15 | 沈阳工程学院 | An energy internet optimization strategy method based on DQN algorithm |

Family Cites Families (2)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| WO2019157257A1 (en)* | 2018-02-08 | 2019-08-15 | Cognizant Technology Solutions U.S. Corporation | System and method for pseudo-task augmentation in deep multitask learning |

| CN109934423B (en)* | 2019-04-25 | 2020-04-21 | 山东大学 | Power prediction method and system of photovoltaic power station based on grid-connected inverter operation data |

- 2021

- 2021-09-23CNCN202111115317.6Apatent/CN113809780B/enactiveActive

Patent Citations (4)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| CN108964042A (en)* | 2018-07-24 | 2018-12-07 | 合肥工业大学 | Regional power grid operating point method for optimizing scheduling based on depth Q network |

| CN109347149A (en)* | 2018-09-20 | 2019-02-15 | 国网河南省电力公司电力科学研究院 | Microgrid energy storage scheduling method and device based on deep Q-value network reinforcement learning |

| JP6667785B1 (en)* | 2019-01-09 | 2020-03-18 | 裕樹 有光 | A program for learning by associating a three-dimensional model with a depth image |

| CN112084680A (en)* | 2020-09-02 | 2020-12-15 | 沈阳工程学院 | An energy internet optimization strategy method based on DQN algorithm |

Non-Patent Citations (2)

| Title |

|---|

| 基于最优潮流的含多微网的主动配电网双层优化调度;叶亮;吕智林;王蒙;杨啸;;电力系统保护与控制(第18期);全文* |

| 基于纵横交叉算法优化BP神经网络的风机齿轮箱故障诊断方法;马留洋;孟安波;葛佳菲;;广东工业大学学报(第02期);全文* |

Also Published As

| Publication number | Publication date |

|---|---|

| CN113809780A (en) | 2021-12-17 |

Similar Documents

| Publication | Publication Date | Title |

|---|---|---|

| CN112036611B (en) | A Grid Optimal Planning Method Considering Risks | |

| CN113809780B (en) | Micro-grid optimal scheduling method based on improved Q learning punishment selection | |

| CN110659830A (en) | Multi-energy microgrid planning method for integrated energy system | |

| CN117833285A (en) | A microgrid energy storage optimization scheduling method based on deep reinforcement learning | |

| Umeozor et al. | Operational scheduling of microgrids via parametric programming | |

| Zhang et al. | Day-ahead optimal scheduling of a standalone solar-wind-gas based integrated energy system with and without considering thermal inertia and user comfort | |

| CN110854932A (en) | Multi-time scale optimization scheduling method and system for AC/DC power distribution network | |

| CN114676991A (en) | Optimal scheduling method for multi-energy complementary systems based on uncertainty on both sides of source and load | |

| CN114865631B (en) | Optimal distribution robust economic scheduling method for source-load cooperative carbon reduction integrated energy system | |

| Xu et al. | Optimization based on tabu search algorithm for optimal sizing of hybrid PV/energy storage system: Effects of tabu search parameters | |

| CN115375344A (en) | A Two-Stage Robust Optimal Low-Carbon Economic Scheduling Method for Microgrid Considering Ladder Carbon Trading Mechanism | |

| CN105305423A (en) | Method for determining optimal error boundary considering intermittent energy uncertainty | |

| CN111293718A (en) | AC/DC hybrid microgrid partition two-layer optimized operation method based on scene analysis | |

| CN115423282A (en) | Electricity-hydrogen-storage integrated energy network multi-objective optimization scheduling model based on reward and punishment stepped carbon transaction | |

| CN111585279A (en) | Microgrid optimization scheduling method based on new energy consumption | |

| CN116468215A (en) | Comprehensive energy system scheduling method and device considering uncertainty of source load | |

| Saha | Adaptive model-based receding horizon control of interconnected renewable-based power micro-grids for effective control and optimal power exchanges | |

| Luo et al. | Two‐stage robust optimal scheduling of wind power‐photovoltaic‐thermal power‐pumped storage combined system | |

| CN118336692A (en) | Wind-light-fire-storage multifunctional complementary day-ahead robust optimal scheduling method | |

| CN117252043A (en) | Multi-objective optimal dispatching method and device for regional multi-energy complementary energy systems | |

| CN118822160A (en) | A collaborative planning method for source-grid-storage-direct current in Shagohuang new energy base | |

| Zhao et al. | Research on Multiobjective Optimal Operation Strategy for Wind‐Photovoltaic‐Hydro Complementary Power System | |

| Li et al. | Distributed Robust Optimal Dispatch for the Microgrid Considering Output Correlation between Wind and Photovoltaic. | |

| CN117744894B (en) | An Active Learning Agent Optimization Method for Integrated Energy Systems | |

| CN119128660A (en) | A comprehensive energy system operation decision-making method, product, medium and equipment |

Legal Events

| Date | Code | Title | Description |

|---|---|---|---|

| PB01 | Publication | ||

| PB01 | Publication | ||

| SE01 | Entry into force of request for substantive examination | ||

| SE01 | Entry into force of request for substantive examination | ||

| GR01 | Patent grant | ||

| GR01 | Patent grant |