CN113204787B - Block chain-based federated learning privacy protection method, system, device and medium - Google Patents

- Publication number

- CN113204787B

- Authority

- CN

- China

- Prior art keywords

- model

- node

- encryption

- block

- aggregation

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Active

Classifications

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F21/00—Security arrangements for protecting computers, components thereof, programs or data against unauthorised activity

- G06F21/60—Protecting data

- G06F21/62—Protecting access to data via a platform, e.g. using keys or access control rules

- G06F21/6218—Protecting access to data via a platform, e.g. using keys or access control rules to a system of files or objects, e.g. local or distributed file system or database

- G06F21/6245—Protecting personal data, e.g. for financial or medical purposes

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F16/00—Information retrieval; Database structures therefor; File system structures therefor

- G06F16/20—Information retrieval; Database structures therefor; File system structures therefor of structured data, e.g. relational data

- G06F16/27—Replication, distribution or synchronisation of data between databases or within a distributed database system; Distributed database system architectures therefor

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F21/00—Security arrangements for protecting computers, components thereof, programs or data against unauthorised activity

- G06F21/60—Protecting data

- G06F21/602—Providing cryptographic facilities or services

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F21/00—Security arrangements for protecting computers, components thereof, programs or data against unauthorised activity

- G06F21/60—Protecting data

- G06F21/64—Protecting data integrity, e.g. using checksums, certificates or signatures

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06N—COMPUTING ARRANGEMENTS BASED ON SPECIFIC COMPUTATIONAL MODELS

- G06N20/00—Machine learning

- Y—GENERAL TAGGING OF NEW TECHNOLOGICAL DEVELOPMENTS; GENERAL TAGGING OF CROSS-SECTIONAL TECHNOLOGIES SPANNING OVER SEVERAL SECTIONS OF THE IPC; TECHNICAL SUBJECTS COVERED BY FORMER USPC CROSS-REFERENCE ART COLLECTIONS [XRACs] AND DIGESTS

- Y04—INFORMATION OR COMMUNICATION TECHNOLOGIES HAVING AN IMPACT ON OTHER TECHNOLOGY AREAS

- Y04S—SYSTEMS INTEGRATING TECHNOLOGIES RELATED TO POWER NETWORK OPERATION, COMMUNICATION OR INFORMATION TECHNOLOGIES FOR IMPROVING THE ELECTRICAL POWER GENERATION, TRANSMISSION, DISTRIBUTION, MANAGEMENT OR USAGE, i.e. SMART GRIDS

- Y04S40/00—Systems for electrical power generation, transmission, distribution or end-user application management characterised by the use of communication or information technologies, or communication or information technology specific aspects supporting them

- Y04S40/20—Information technology specific aspects, e.g. CAD, simulation, modelling, system security

Abstract

Description

Translated from Chinese

Technical field

The present invention relates to the technical field of federated learning, and in particular to a blockchain-based federated learning privacy protection method, system, computer device and storage medium.

Background

Federated learning is a machine learning architecture that decomposes centralized machine learning into distributed machine learning. It distributes machine learning tasks to terminal device nodes for training and then aggregates the gradient results produced by all terminal device nodes to obtain the final training result. This helps users break down data silos and carry out broad and in-depth machine learning research while meeting the requirements of user privacy protection, data security and government regulations. Although federated learning has been widely applied in digital image processing, natural language processing, text and speech processing and other fields, traditional federated learning relies entirely on a central server to compute and update the model. If the central server is attacked, the whole training process can no longer proceed normally. This problem has long troubled users and has been studied by many researchers.

Existing solutions include peer-to-peer (P2P) federated learning methods and blockchain-based federated learning methods. A P2P method removes the dependence on a central server whose failure would halt training, but it increases the communication load among the participants (terminal device nodes). Researchers therefore proposed blockchain-based federated learning, which reduces the communication load among participants and ensures their credibility and traceability, but still has shortcomings: 1) it only guarantees that data on the blockchain cannot be tampered with and does not protect the privacy of the data content on the blockchain during federated learning; 2) it neglects the protection of client weights during training, so an attacker can indirectly infer, with some probability, the source of the training data from the results of model analysis; 3) the computational cost of model aggregation is high for the server.

Therefore, there is an urgent need for a federated learning method that, while retaining the advantages of existing blockchain-based federated learning, adds protection for the privacy of client attribute sources and of model data content, reduces the computational cost of the service provider, and improves federated learning efficiency and model service quality.

Summary of the invention

The purpose of the present invention is to provide a blockchain-based federated learning privacy protection method that overcomes the problems of existing blockchain-based federated learning, namely that it only ensures that data on the blockchain is not tampered with but cannot protect the privacy of data content on the blockchain during federated learning, that it neglects the protection of client weights during training, and that model aggregation is computationally expensive for the server, while also improving federated learning efficiency and model service quality.

To achieve the above purpose and address the above technical problems, a blockchain-based federated learning privacy protection method, system, computer device and storage medium are provided.

In a first aspect, an embodiment of the present invention provides a blockchain-based federated learning privacy protection method, the method comprising the following steps:

a trusted third party generates in advance, according to the weight vector of each node, a master public key, a master private key, a decryption key and an encryption key for each node, and sends the master public key, the decryption key and the encryption key to the corresponding nodes; the encryption key is private to each node;

a master node creates an initial block, writes an initial model into the initial block and publishes it; the initial model is trained on a public data set;

each node downloads the initial model, trains it to obtain a local model, encrypts the local model with the encryption key to obtain an encrypted model, and uploads the encrypted model to the blockchain;

in response to the encrypted models of all nodes having been uploaded to the blockchain, the nodes compete for the right to generate an aggregation block, and the node that obtains this right aggregates the encrypted models of all nodes into a global model according to the master public key and the decryption key and uploads the global model to the blockchain;

the master node downloads the global model and judges whether the global model is an ideal model; if it is, the iteration stops; otherwise, the next round of training begins.

Further, the step in which the trusted third party generates in advance, according to the weight vector of each node, the master public key, the master private key, the decryption key and the encryption key of each node comprises:

each node sends its preset weight vector to the trusted third party;

the trusted third party generates a weight matrix from the weight vectors of the nodes, and generates the master public key and the master private key according to the weight matrix;

the trusted third party generates the decryption key and the encryption keys according to the weight matrix and the master private key.

Further, the step in which each node downloads the initial model, trains it to obtain a local model, encrypts the local model with the encryption key to obtain an encrypted model, and uploads it to the blockchain comprises:

each node downloads the initial block and obtains the initial model;

the initial model is trained on local data to obtain the local model;

the encryption algorithm of multi-input functional encryption is run on the local model with the encryption key to obtain the encrypted model;

the node competes for the right to generate an encrypted model block according to the consensus mechanism of the blockchain, writes the encrypted model, an aggregation code and the iteration count into the encrypted model block, and uploads the encrypted model block to the blockchain; the aggregation code is set to a first preset value.
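The fields written into an encrypted model block can be sketched as a small record. This is only an illustration: the text names the three fields (encrypted model, aggregation code, iteration count) but not their encoding, so the class name `ModelBlock`, the `node_id` field, and the concrete values `0` and `1` for the first and second preset values are assumptions.

```python
from dataclasses import dataclass

# Hypothetical encodings: the source only speaks of a "first preset value"
# and a "second preset value" for the aggregation code.
AGG_CODE_ENCRYPTED = 0   # block carries one node's encrypted local model
AGG_CODE_AGGREGATED = 1  # block carries an aggregated global model


@dataclass(frozen=True)
class ModelBlock:
    """Illustrative block record; real blocks also carry hashes, timestamps, etc."""
    node_id: int           # assumed identifier of the uploading node
    aggregation_code: int  # first or second preset value
    iteration: int         # training round the payload belongs to
    payload: bytes         # serialized encrypted model (or global model)


def make_encrypted_model_block(node_id: int, iteration: int,
                               encrypted_model: bytes) -> ModelBlock:
    """Build the block a node uploads after encrypting its local model."""
    return ModelBlock(node_id, AGG_CODE_ENCRYPTED, iteration, encrypted_model)
```

Marking the record as frozen mirrors the idea that a block is not modified once it has been written.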

Further, the step in which, in response to the encrypted models of all nodes having been uploaded to the blockchain, the nodes compete for the right to generate an aggregation block and the node that obtains this right aggregates the encrypted models of all nodes into a global model according to the master public key and the decryption key and uploads it to the blockchain comprises:

the nodes compete for the right to generate a model aggregation block according to the consensus mechanism of the blockchain, and the node that obtains this right downloads the encrypted model blocks of the other nodes and obtains their encrypted models;

the decryption algorithm of multi-input functional encryption is run on the encrypted models of all nodes with the master public key and the decryption key to obtain the global model, and an on-chain request is sent to the blockchain so that the blockchain packs the global model into a block and broadcasts it for consensus verification;

in response to the on-chain request, a full node of the blockchain determines the blockchain storage address of the global model, generates the model aggregation block, and broadcasts the model aggregation block to the other full nodes of the blockchain for consensus verification; the model aggregation block records the global model and the blockchain storage address;

in response to successful consensus verification, the full node saves the global model, the aggregation code and the iteration count to the blockchain storage address, and broadcasts the model aggregation block to all nodes of the blockchain for synchronization; the aggregation code is set to a second preset value.

Further, the step in which the node that obtains the right to generate the model aggregation block downloads the encrypted model blocks of the other nodes and obtains their encrypted models comprises:

judging whether the encrypted model blocks meet the aggregation requirement; the aggregation requirement is that every aggregation code equals the first preset value and every iteration count equals the current iteration count;

if the encrypted model blocks meet the aggregation requirement, obtaining the encrypted models corresponding to the encrypted model blocks.
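The aggregation requirement can be sketched as a simple all-or-nothing filter. The names `EncryptedModelBlock` and `collect_encrypted_models` and the value `0` for the first preset value are assumptions for illustration. The sketch also assumes that a single non-conforming block blocks aggregation for the round, which is one reading of the requirement that all codes and all iteration counts match.

```python
from typing import List, NamedTuple, Optional, Sequence

FIRST_PRESET_VALUE = 0  # hypothetical encoding of the "first preset value"


class EncryptedModelBlock(NamedTuple):
    aggregation_code: int
    iteration: int
    encrypted_model: bytes


def collect_encrypted_models(blocks: Sequence[EncryptedModelBlock],
                             current_iteration: int) -> Optional[List[bytes]]:
    """Return the encrypted models if every block meets the aggregation
    requirement, otherwise None."""
    for block in blocks:
        if block.aggregation_code != FIRST_PRESET_VALUE:
            return None  # not an encrypted local model block
        if block.iteration != current_iteration:
            return None  # belongs to a different training round
    return [block.encrypted_model for block in blocks]
```

Checking the iteration count keeps a stale block from an earlier round out of the current aggregation.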

Further, the step in which the master node downloads the global model and judges whether it is an ideal model, stopping the iteration if it is and otherwise entering the next round of training, comprises:

the master node downloads the model aggregation block corresponding to the current round and judges whether its aggregation code equals the second preset value; if it does, the master node obtains the global model;

the accuracy of the global model is tested on the public data set, and whether the global model is an ideal model is judged by whether the accuracy has converged;

if the global model is not an ideal model, a continue-training broadcast message is sent; otherwise, a stop-training broadcast message is sent;

in response to the continue-training broadcast message, each node downloads the global model and starts the next round of training.
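The master node's decision can be sketched as follows. The text only says that the model is ideal when its accuracy has converged and does not give a criterion, so the sliding-window test, the `window` and `tol` parameters, and the message strings are all assumptions.

```python
from typing import Sequence


def has_converged(accuracies: Sequence[float], window: int = 3,
                  tol: float = 1e-3) -> bool:
    """Assumed criterion: accuracy varied by less than `tol` over the
    last `window` round-to-round transitions."""
    if len(accuracies) < window + 1:
        return False  # not enough rounds to judge yet
    recent = accuracies[-(window + 1):]
    return max(recent) - min(recent) < tol


def next_broadcast(accuracies: Sequence[float]) -> str:
    """Pick the broadcast message the master node sends after testing the
    global model on the public data set (message names are hypothetical)."""
    return "STOP_TRAINING" if has_converged(accuracies) else "CONTINUE_TRAINING"
```

For example, `next_broadcast([0.5, 0.7, 0.8])` asks the nodes to continue because too few rounds have been observed, while a history whose last four accuracies differ by less than `tol` yields the stop message.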

Further, the step in which each node downloads the global model in response to the continue-training broadcast message comprises:

each node downloads the model aggregation block and judges whether the aggregation code equals the second preset value;

if the aggregation code equals the second preset value, judging whether the iteration count equals the current iteration count;

if the iteration count equals the current iteration count, obtaining the global model.

In a second aspect, an embodiment of the present invention provides a blockchain-based federated learning privacy protection system, the system comprising:

a weight encryption module, configured to have a trusted third party generate in advance, according to the weight vector of each node, a master public key, a master private key, a decryption key and an encryption key for each node, and send the master public key, the decryption key and the encryption key to the corresponding nodes; the encryption key is private to each node;

an initial modeling module, configured to have the master node create an initial block, write an initial model into the initial block and publish it; the initial model is trained on a public data set;

a local training module, configured to have each node download the initial model, train it to obtain a local model, encrypt the local model with the encryption key to obtain an encrypted model, and upload the encrypted model to the blockchain;

a model aggregation module, configured to have the nodes, in response to the encrypted models of all nodes having been uploaded to the blockchain, compete for the right to generate an aggregation block, and to have the node that obtains this right aggregate the encrypted models of all nodes into a global model according to the master public key and the decryption key and upload the global model to the blockchain;

a model testing module, configured to have the master node download the global model and judge whether the global model is an ideal model, stopping the iteration if it is and otherwise entering the next round of training.

In a third aspect, an embodiment of the present invention further provides a computer device comprising a memory, a processor, and a computer program stored in the memory and executable on the processor, wherein the processor implements the steps of the above method when executing the computer program.

In a fourth aspect, an embodiment of the present invention further provides a computer-readable storage medium storing a computer program that, when executed by a processor, implements the steps of the above method.

The present application provides a blockchain-based federated learning privacy protection method, system, computer device and storage medium. In this method, a trusted third party performs weight encryption according to the weight vector preset by each node to generate the master public and private keys, the decryption key and the encryption key of each node, and sends them to the corresponding nodes. After the master node creates the initial block, writes the initial model into it and publishes it, each node downloads the initial model for training, encrypts the result with its encryption key to obtain an encrypted model, and uploads it to the blockchain after competing under the blockchain's proof-of-work mechanism. Once the encrypted models of all nodes have been uploaded, the nodes compete under the blockchain's consensus mechanism for the right to generate the model aggregation block. The node that obtains this right downloads the encrypted models of the other nodes, aggregates the encrypted models of all nodes into a global model according to the master public key and the decryption key, and uploads the global model to the blockchain. The master node then downloads the global model and judges whether it is an ideal model in order to decide whether to continue iterating. Compared with the prior art, this method overcomes the problem that the existing Flchain ignores weight protection when fusing client gradients, and thereby protects the privacy of client attribute sources and of model data content. It also delegates the model aggregation operation to the participants, which reduces the computational cost of the service provider. In addition, deploying federated learning on the blockchain encourages participants to take an active part, which improves federated learning efficiency and safeguards model service quality.

Description of the drawings

FIG. 1 is a schematic diagram of an application scenario of the blockchain-based federated learning privacy protection method in an embodiment of the present invention;

FIG. 2 is a schematic diagram of the framework of the blockchain-based federated learning privacy protection method in an embodiment of the present invention;

FIG. 3 is a schematic diagram of the framework of the P2P federated learning model in the prior art;

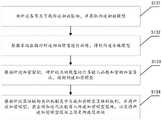

FIG. 4 is a schematic flowchart of the blockchain-based federated learning privacy protection method in an embodiment of the present invention;

FIG. 5 is a schematic flowchart of step S11 in FIG. 4, in which the trusted third party generates the master public and private keys, the decryption key and the encryption key of each node based on the weight vectors of the nodes;

FIG. 6 is a schematic diagram of the block structure of the blockchain designed in an embodiment of the present invention, in which an aggregation code and an iteration count are added to the block header;

FIG. 7 is a schematic flowchart of step S13 in FIG. 4, in which each node downloads the initial model, trains it, obtains an encrypted model and uploads it to the blockchain;

FIG. 8 is a schematic flowchart of step S14 in FIG. 4, in which the nodes compete to generate the model aggregation block containing the global model and upload it to the blockchain;

FIG. 9 is a schematic flowchart of step S15 in FIG. 4, in which the master node downloads the global model and judges whether it is an ideal model;

FIG. 10 is a schematic structural diagram of the blockchain-based federated learning privacy protection system in an embodiment of the present invention;

FIG. 11 is an internal structure diagram of the computer device in an embodiment of the present invention.

Detailed description

To make the purpose, technical solutions and beneficial effects of the present application clearer, the present invention is described in further detail below with reference to the drawings and embodiments. The embodiments described below are only some of the embodiments of the present invention; they serve to illustrate the invention and do not limit its scope. All other embodiments obtained by a person of ordinary skill in the art on the basis of these embodiments without creative effort fall within the scope of protection of the present invention.

The blockchain-based federated learning privacy protection method provided by the present invention can be applied to the terminals or servers shown in FIG. 1. The terminal may be, but is not limited to, a personal computer, notebook computer, smartphone, tablet computer or portable wearable device, and the server may be implemented as a standalone server or as a cluster of servers. In the framework of the method shown in FIG. 2, the server can be deployed as the master node of the blockchain and multiple terminal devices can be deployed as participating nodes as needed. The master node creates the initial block and writes the initial model into it. Each participating terminal device downloads the initial model, trains it on local data to obtain a local model, encrypts the local model with its encryption key, and uploads the encrypted model to the blockchain. After all terminal devices have uploaded their encrypted models, all nodes compete for the right to generate the aggregated global model, and the winning node aggregates the global model and uploads it to the blockchain. The master node downloads the global model and computes its accuracy to decide whether to run another round of training. It stops iterating once the accuracy of the global model has converged and uses the global model at that point as the ideal federated learning model for the intended application. This framework relieves the communication load among participants in the P2P federated learning model shown in FIG. 3. In addition, while relying on the properties of the blockchain to ensure the credibility and traceability of the participants, it protects the privacy of client attribute sources and model data content, reduces the computational cost of the service provider, and improves federated learning efficiency and model service quality.

A blockchain comprises multiple blockchain terminal devices, referred to as nodes, and blockchains are divided into public chains, consortium chains and private chains according to how their nodes are admitted. To make real use of the decentralization of the blockchain, the present invention is designed for a consortium chain scenario. The nodes of the blockchain are of two types, full nodes and light nodes. Full nodes ensure the security and accuracy of the data on the blockchain through consensus verification of the data packed into blocks. Light nodes do not take part in consensus verification and are only responsible for synchronizing the verified data. Each light node must connect to a full node in order to synchronize the current state of the blockchain and take part in the operation and management of the whole blockchain.

In one embodiment, as shown in FIG. 4, a blockchain-based federated learning privacy protection method is provided, comprising the following steps:

S11. A trusted third party generates in advance, according to the weight vector of each node, a master public key, a master private key, a decryption key and an encryption key for each node, and sends the master public key, the decryption key and the encryption key to the corresponding nodes; the encryption key is private to each node;

The trusted third party can be chosen according to the actual situation; for example, a certificate authority (CA) may be selected. The weights of the participating nodes are normally used only when the encrypted models produced by the nodes are later aggregated into a global model that meets the needs of the master node. To protect the source of the training data, this embodiment uses multi-input functional encryption to protect the weights of the participating nodes, which the prior art ignores: the trusted third party generates the corresponding master public and private keys, decryption key and per-node encryption keys from the weight vectors set by the nodes. As shown in FIG. 5, step S11, in which the trusted third party generates in advance the master public key, the master private key, the decryption key and the encryption key of each node according to the weight vector of each node, comprises:

S111. Each node sends its preset weight vector to the trusted third party;

Here, the weight vector y_i (i = 1, …, n) is an n-dimensional column vector whose i-th element is the weight of the i-th node, with a value in the range (0, 1), and whose other elements are all 0. The value of the i-th element of y_i can be set according to the needs of the application. For example, each node determines it from factors such as its actual contribution or the amount of local data it uses in training and sends it to the trusted third party, which manages and uses it. This avoids exposing the node weights directly to the model aggregation node, which would create a risk of leaking the data source.

S112. The trusted third party generates a weight matrix from the weight vectors of the nodes, and generates the master public key and the master private key according to the weight matrix;

The weight matrix is the n×n matrix y = (y_1, …, y_n) that the trusted third party obtains by combining the weight vectors y_i, i = 1, …, n, sent by the nodes, directly in order of node index. After obtaining the weight matrix, the trusted third party randomly generates the master public key mpk and the master private key msk based on an algorithm capable of functional encryption, for example with a pseudo-random number generator (PRNG) such as the linear congruential method, BBS, ANSI X9.17, or RC4.
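The construction of the weight vectors and the weight matrix described in S111 and S112 can be sketched as follows. This is a minimal illustration only: the function names `weight_vector` and `weight_matrix` and the example weights are assumptions, node indices are 0-based here, and no key material is generated.

```python
from typing import List


def weight_vector(index: int, weight: float, n: int) -> List[float]:
    """Return y_i: an n-dimensional vector holding `weight` at position
    `index` (0-based in this sketch) and 0 everywhere else."""
    if not 0 <= index < n:
        raise ValueError("index must identify one of the n nodes")
    if not 0.0 < weight < 1.0:
        raise ValueError("weight must lie in the open interval (0, 1)")
    vec = [0.0] * n
    vec[index] = weight
    return vec


def weight_matrix(vectors: List[List[float]]) -> List[List[float]]:
    """Combine the column vectors y_1..y_n, in node order, into an n x n matrix.

    Column j of the result is y_j, so entry [row][col] is vectors[col][row].
    """
    n = len(vectors)
    if any(len(v) != n for v in vectors):
        raise ValueError("expected n vectors of dimension n")
    return [[vectors[col][row] for col in range(n)] for row in range(n)]


# Hypothetical weights chosen by three nodes.
weights = [0.2, 0.3, 0.5]
y = weight_matrix([weight_vector(i, w, len(weights)) for i, w in enumerate(weights)])
```

Because each y_i is non-zero only at position i, the resulting matrix is diagonal, with the node weights on the diagonal.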

S113. The trusted third party generates the decryption key and the encryption keys according to the weight matrix and the master private key.

The decryption key skf is used later to aggregate the models trained by the nodes. It is produced by a key generation algorithm that takes the weight matrix y and the master private key as input and is computed through vector and matrix multiplication and linear combination. The specific key generation algorithm may be chosen according to actual requirements and is not limited here; for example, the algorithm shown in Table 1 may be used. The encryption key msk_i, i = 1, …, n, of each node is a component of the master private key msk and can be obtained by running the key generation algorithm of multi-input functional encryption. After generating the encryption keys msk_i and the decryption key skf used for model aggregation, the trusted third party sends the master public key mpk, the decryption key skf, and the encryption key msk_i to the corresponding nodes. Because the master node never learns the weights of the participating nodes, this avoids the risk that information such as training models and data leaks on the master-node side, and gives the privacy of federated learning a deeper level of protection.

Table 1. Multi-input functional encryption: example key generation algorithm

S12. The master node creates an initial block, writes an initial model into the initial block, and publishes it; the initial model is obtained by training on a public data set;

The master node may be designated from among the nodes that have joined the blockchain according to actual training requirements. It trains the initial model on the public data set, creates and publishes the initial block that holds the initial model used in the first round of training so that the participating nodes can download it, and subsequently tests the accuracy of the global model produced by each round of training against the public data, in order to obtain an ideal model that can be put to use.

This embodiment does not limit the type of the initial model. It is applicable to federated learning of all kinds of machine learning and deep learning models, such as linear regression, neural networks, convolutional neural networks, decision trees, support vector machines, and Bayesian classifiers; the specific initial model is chosen according to application requirements as the model that each participating node is to train locally. It should be noted that federated learning in practice needs many rounds to produce an ideal model that meets service requirements. The initial model here is only the model that the master node trains on the public data set for the first round; strictly speaking, it is not a global model aggregated according to the preset rule. The model used in every later round is the global model that was aggregated in the previous round according to the weight vectors of the participating nodes and has already been published on the blockchain.

The creation of the initial block, and the writing of the initial model into it and its publication on the blockchain, use the corresponding existing blockchain methods and are not described again here. It should be noted that the initial block keeps the conventional block header structure, whereas the encrypted model blocks and model aggregation blocks packaged later in the present invention use the newly designed block structure shown in FIG. 6. In that structure a block comprises a block header and a block body. The block header adds two fields to the conventional header: an aggregation code, AggregationFlag, which takes either a first preset value or a second preset value, and an iteration count, IntegerNum. The block body stores the encrypted model or the global model. When the aggregation code in the block header is the first preset value, the block packages a local encrypted model trained by a node; when it is the second preset value, the block packages an aggregated global model. For example, an aggregation code of 0 indicates that the block contains a local encrypted model, and an aggregation code of 1 indicates that it contains a global model. The iteration count indicates the training round to which the encrypted model or global model in the block belongs. Recording the round ensures that the correct model is downloaded in later rounds, which in turn improves the efficiency of federated learning.
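The block layout just described can be sketched as a small data structure. This is an illustration under stated assumptions, not the format of FIG. 6: the only conventional header field shown is a hypothetical `prev_hash`, the field names `aggregation_flag` and `integer_num` stand in for AggregationFlag and IntegerNum, and the values 0 and 1 are the example preset values from the text.

```python
import hashlib
from dataclasses import dataclass

LOCAL_ENCRYPTED_MODEL = 0  # first preset value (example value from the text)
GLOBAL_MODEL = 1           # second preset value (example value from the text)


@dataclass(frozen=True)
class BlockHeader:
    prev_hash: str         # stands in for the conventional header fields
    aggregation_flag: int  # AggregationFlag: which kind of model the body holds
    integer_num: int       # IntegerNum: training round the model belongs to

    def __post_init__(self) -> None:
        if self.aggregation_flag not in (LOCAL_ENCRYPTED_MODEL, GLOBAL_MODEL):
            raise ValueError("aggregation_flag must be one of the two preset values")
        if self.integer_num < 1:
            raise ValueError("integer_num counts rounds starting at 1")


@dataclass(frozen=True)
class ModelBlock:
    header: BlockHeader
    body: bytes  # serialized encrypted model or global model

    def block_hash(self) -> str:
        """SHA-256 over the header fields and the body (illustrative only)."""
        h = hashlib.sha256()
        h.update(self.header.prev_hash.encode())
        h.update(bytes([self.header.aggregation_flag]))
        h.update(self.header.integer_num.to_bytes(8, "big"))
        h.update(self.body)
        return h.hexdigest()


def describe(block: ModelBlock) -> str:
    kind = ("local encrypted model"
            if block.header.aggregation_flag == LOCAL_ENCRYPTED_MODEL
            else "global model")
    return f"round {block.header.integer_num}: {kind}"


block = ModelBlock(BlockHeader("00" * 32, LOCAL_ENCRYPTED_MODEL, 3), b"ciphertext")
```

Keeping the two new fields in the header rather than the body lets a node decide whether a block is relevant to the current round before it parses the model payload.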

S13. Each node downloads the initial model, trains it to obtain a local model, encrypts the local model with its encryption key to obtain an encrypted model, and uploads the encrypted model to the blockchain;

Local data refers to the private data of each node participating in training. Each node trains the initial model it has obtained for one round on its own local data to obtain the corresponding local model, encrypts that model with multi-input functional encryption, and uploads the resulting encrypted model to the blockchain. As shown in FIG. 7, step S13, in which each node downloads the initial model, trains it to obtain a local model, encrypts the local model with its encryption key to obtain an encrypted model, and uploads the encrypted model to the blockchain, includes:

S131. Each node downloads the initial block and obtains the initial model;

As noted above, the header of the initial block is not modified; that is, it contains no aggregation code or iteration count. Each node only needs to download the corresponding block according to the synchronized information and read the initial model stored in the block body.

S132. The initial model is trained on local data to obtain the local model;

The training method depends on the type of the initial model; the model is simply trained with the method appropriate to its type, which is not described in detail here.

S133. The encryption algorithm of multi-input functional encryption is run on the local model with the encryption key to obtain the encrypted model;

Multi-input functional encryption is an encryption scheme in which several users holding encryption keys take part, and a user holding the decryption key can obtain the value of a function of the secret data without learning anything else about the plaintexts. The encryption key is the one generated as described above by the trusted third party, using the functional encryption algorithm and the weight vectors of the nodes. Each node encrypts its trained local model locally with its encryption key and then uploads the encrypted model to the blockchain for publication, which protects the confidentiality of the model when the block is published.

S134. The node competes, under the consensus mechanism of the blockchain, for the right to generate an encrypted model block, writes the encrypted model, the aggregation code, and the iteration count into the encrypted model block, and uploads the encrypted model block to the blockchain; the aggregation code is the first preset value.

The consensus mechanism may be chosen according to application requirements; for example, any one of proof of work (PoW), proof of stake (PoS), delegated proof of stake (DPoS), or a verification pool (Pool) may be used to govern how the participating nodes compete for the right to generate an encrypted model block. Proof of work is a consensus mechanism commonly used in blockchains. It is a proof of the work a node has performed, and it is the requirement that must be satisfied when a new piece of transaction information (that is, a new block) is generated for addition to the blockchain. Blockchain nodes compete for the bookkeeping right by computing a numerical solution to a random hash; the ability to find a correct solution, and so win the right to generate a block, reflects a node's computing power. Proof of work has the advantage of full decentralization: in a blockchain that uses it as the consensus mechanism, nodes may join and leave freely. In this embodiment, the participating nodes preferably use proof of work to compete for the right to generate an encrypted model block and upload it to the blockchain. This encourages participant nodes to take an active part in federated learning and, while safeguarding the quality of the model service, further improves the efficiency of federated learning.
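As a rough illustration of how nodes might compete under proof of work, the sketch below searches for a nonce whose SHA-256 digest over the header bytes starts with a given number of zero hex digits. The hash-prefix puzzle, the `difficulty` parameter, and the header string are assumptions chosen for illustration; the text does not specify the puzzle used.

```python
import hashlib


def digest(header: bytes, nonce: int) -> str:
    """SHA-256 of the header bytes followed by an 8-byte big-endian nonce."""
    return hashlib.sha256(header + nonce.to_bytes(8, "big")).hexdigest()


def mine(header: bytes, difficulty: int) -> int:
    """Return the smallest nonce whose digest starts with `difficulty` zero hex digits."""
    prefix = "0" * difficulty
    nonce = 0
    while not digest(header, nonce).startswith(prefix):
        nonce += 1
    return nonce


def verify(header: bytes, nonce: int, difficulty: int) -> bool:
    """Any other node can check a claimed solution with a single hash."""
    return digest(header, nonce).startswith("0" * difficulty)


# Hypothetical header bytes for a node's round-1 encrypted model block.
header = b"round-1|node-2|encrypted-model"
nonce = mine(header, difficulty=2)
```

Finding the nonce takes many hash evaluations on average, while checking it takes one, which is what lets the other nodes cheaply accept or reject the winner's block.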

After a node wins the right to generate an encrypted model block, it generates the block, writes the encrypted model, the aggregation code, the iteration count, and related information into it, and uploads it. The encrypted model is written into the block body, and the aggregation code, set to the first preset value, and the iteration count, recording the current round, are written into their respective positions in the block header. Only the block fields relevant to the present invention are described here; the writing of the other information in the block follows the prior art and is not described again.

S14. In response to the encrypted models of all nodes having been uploaded to the blockchain, the nodes compete for the right to generate an aggregation block, and the node that wins this right aggregates the encrypted models of the nodes into a global model according to the master public key and the decryption key, and uploads the global model to the blockchain;

The global model is aggregated with the decryption algorithm of multi-input functional encryption: the master public key mpk, the decryption key skf, and the encrypted models of the nodes are taken as input, and the global model (the federated average model) is computed as output. In existing federated learning, the aggregation of the global model is handled by the server (the master node), which increases the computing cost of the service provider. To address this, this embodiment makes full use of the decentralized nature of the blockchain: aggregation is not assigned to a fixed master node; instead, all participating nodes compete for the right to aggregate the models and to package the global model and upload it to the blockchain. As shown in FIG. 8, step S14, in which, in response to the encrypted models of all nodes having been uploaded to the blockchain, the nodes compete for the right to generate an aggregation block and the winning node aggregates the encrypted models into a global model according to the master public key and the decryption key and uploads it to the blockchain, includes:
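The function value that decryption is described as yielding can be illustrated in the clear. The sketch below computes a weighted combination of model parameter vectors; it performs no encryption at all and only shows the result an aggregating node would expect to recover. The flat-list model representation, the name `weighted_aggregate`, and the assumption that the aggregate is the plain weighted sum of the models are illustrative choices, not details given in the text.

```python
from typing import List, Sequence


def weighted_aggregate(models: Sequence[Sequence[float]],
                       weights: Sequence[float]) -> List[float]:
    """Return sum_i weights[i] * models[i], computed parameter by parameter.

    In the described scheme the aggregating node would obtain this value from
    the ciphertexts by decrypting with mpk and skf, without ever seeing the
    individual models or weights. Here everything is plaintext.
    """
    if not models or len(models) != len(weights):
        raise ValueError("need one weight per model")
    dim = len(models[0])
    if any(len(m) != dim for m in models):
        raise ValueError("all models must have the same number of parameters")
    return [sum(w * m[k] for w, m in zip(weights, models)) for k in range(dim)]


# Two hypothetical local models with two parameters each.
local_models = [[1.0, 2.0], [3.0, 4.0]]
node_weights = [0.25, 0.75]
global_model = weighted_aggregate(local_models, node_weights)
```

With these inputs the aggregate is 0.25·[1, 2] + 0.75·[3, 4]; whether the weights are normalized to sum to 1 is left to the application.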

S141. The nodes compete, under the consensus mechanism of the blockchain, for the right to generate a model aggregation block, and the node that wins this right downloads the encrypted model blocks of the other nodes and obtains the encrypted models of the other nodes;

As above, the consensus mechanism may be chosen according to application requirements; any one of proof of work, proof of stake, delegated proof of stake, or a verification pool may be used to govern how the participating nodes compete for the right to generate a model aggregation block. It only needs to be consistent with the mechanism used for uploading the encrypted models, and the specific method is not described again here.

The node that wins the right to generate the model aggregation block under the above competition mechanism downloads the encrypted model blocks of the other nodes according to the synchronized block information and checks whether each block meets the aggregation requirements. That is, it obtains the encrypted model from an encrypted model block only after confirming that the aggregation code in the block is the first preset value and that the iteration count equals the current round. This ensures that the encrypted models used for the current round of aggregation are the correct ones, which improves training efficiency and guarantees the accuracy of the aggregated global model.
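The eligibility check on downloaded blocks can be sketched as a simple filter. This is a minimal sketch under stated assumptions: the `Block` tuple, its `node_id` field, and the decision to raise an error when an expected node has no eligible block are all hypothetical, and the value 0 is the example first preset value from the text.

```python
from typing import Dict, Iterable, NamedTuple, Set

LOCAL_ENCRYPTED_MODEL = 0  # first preset value (example value from the text)


class Block(NamedTuple):
    node_id: str           # hypothetical identifier of the uploading node
    aggregation_flag: int  # AggregationFlag from the block header
    integer_num: int       # IntegerNum from the block header
    body: bytes            # encrypted model or global model


def collect_encrypted_models(blocks: Iterable[Block],
                             current_round: int,
                             expected_nodes: Set[str]) -> Dict[str, bytes]:
    """Keep only local encrypted models that belong to the current round."""
    selected: Dict[str, bytes] = {}
    for block in blocks:
        if block.aggregation_flag != LOCAL_ENCRYPTED_MODEL:
            continue  # a global model block, not an input to aggregation
        if block.integer_num != current_round:
            continue  # a model from an earlier round
        selected[block.node_id] = block.body
    missing = set(expected_nodes) - set(selected)
    if missing:
        raise LookupError(f"no eligible block from nodes: {sorted(missing)}")
    return selected


chain = [
    Block("A", 0, 1, b"old-ctA"),   # earlier round, ignored
    Block("M", 1, 1, b"global-1"),  # global model block, ignored
    Block("A", 0, 2, b"ctA"),
    Block("B", 0, 2, b"ctB"),
]
inputs = collect_encrypted_models(chain, current_round=2, expected_nodes={"A", "B"})
```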

S142. According to the master public key and the decryption key, the decryption algorithm of multi-input functional encryption is run on the encrypted models of the nodes to obtain the global model, and an on-chain request is sent to the blockchain so that the blockchain packages the global model into a block and broadcasts it for consensus verification.

The master public key and the decryption key are generated with the multi-input functional encryption algorithm described above. Obtaining the global model from the decryption key and the encrypted models of the nodes is an inherent property of a functional encryption scheme: once decryption completes, the aggregated federated average model is available directly, which is not described in further detail here. After the node that has won the right to generate the model aggregation block aggregates the encrypted models of the nodes into the global model with the master public key and the decryption key, it actively calls the on-chain interface exposed by the blockchain and sends an on-chain request to the full nodes of the blockchain. The request carries the global model, which ensures that the global model can be shared on the blockchain so that the master node can later judge its correctness and decide whether training needs to continue.

S143. In response to the on-chain request, a full node of the blockchain determines the blockchain storage address of the global model, generates the model aggregation block, and broadcasts the model aggregation block to the other full nodes of the blockchain for consensus verification;

After receiving the on-chain request, the full node first determines the blockchain storage address for the global model, generates a model aggregation block that records the global model and its blockchain storage address, and then broadcasts the model aggregation block to the other full nodes of the blockchain for consensus verification.

S144. In response to the consensus verification succeeding, the full node saves the global model, the aggregation code, and the iteration count to the blockchain storage address, and broadcasts the model aggregation block to the nodes of the blockchain for synchronization.

After consensus verification succeeds, the model aggregation block is broadcast to all nodes of the blockchain (all full nodes and light nodes) for synchronization. The full node saves the global model to the blockchain storage address determined above and uses that address as the address of the model aggregation block.

In this embodiment, the operation of aggregating the encrypted models of the participating nodes into the global model is delegated to the participating nodes through the consensus mechanism. This preserves the service quality and learning efficiency of federated learning while reducing the computing cost of the service provider.

S15. The master node downloads the global model and determines whether it is an ideal model; if it is, iteration stops, and otherwise the next round of training begins;

The global model obtained by the above training is not necessarily an ideal model that meets the application requirements. To guarantee the accuracy and service quality of the federated learning model, after each round of training the master node tests the accuracy of the current global model and uses whether the accuracy has converged to decide whether to notify the participating nodes to continue iterative training, until an ideal model is obtained. As shown in FIG. 9, step S15, in which the master node downloads the global model and determines whether it is an ideal model, stopping iteration if it is and otherwise entering the next round of training, includes:

S151. The master node downloads the model aggregation block corresponding to the current round and determines whether the aggregation code of the model aggregation block is the second preset value; if it is, the master node obtains the global model;

The model aggregation block corresponding to the current round is, in principle, the most recently generated block on the whole blockchain. The master node downloads it according to the synchronization information it has obtained and then checks whether the aggregation code in the block header is the second preset value, as an aid to judging whether the downloaded model aggregation block is the correct one. Only after the correctness of the model aggregation block is confirmed does it obtain the global model stored in the block body.

S152. The accuracy of the global model is tested on the public data set, and whether the global model is an ideal model is determined according to whether the accuracy has converged;

The specific criterion for an ideal model may be set according to application requirements. In this embodiment, the global model obtained in the current iteration is tested for accuracy on the public data set stored on the master node, and the current accuracy is compared with the earlier results. If the difference in accuracy stays within a preset threshold (for example, 1%) for several consecutive comparisons (for example, three), the accuracy is judged to have converged, and the global model with the highest accuracy is taken as the final ideal model; otherwise, iterative training continues. It should be noted that the method of determining the ideal model and the criterion for deciding whether the accuracy of the global model has converged are given in this embodiment only as examples and may be adjusted as required in practice.

S153. If the global model is not an ideal model, a continue-training broadcast message is sent; otherwise, a stop-training broadcast message is sent;

Both the continue-training broadcast message and the stop-training broadcast message are sent after the master node has completed the accuracy test of the global model and determined whether the current global model is an ideal model. Depending on that result, the master node sends the test result (such as the accuracy) and a training instruction stating whether a further round of training is needed, in the form of a broadcast message, to all nodes of the blockchain. The participating nodes use the training instruction from the master node to decide whether to download the global model and continue local training. It should be noted that the training instruction in the broadcast message may be encoded as required; for example, a training instruction of 1 may indicate that training is to continue and 0 that training is to stop. Any arrangement in which the communication mechanism between the master node and the participating nodes is agreed in advance, and in which the master node controls whether the participating nodes continue their training tasks, falls within the scope of protection of the present invention.
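One possible encoding of the broadcast message is sketched below, using the example instruction values 1 (continue) and 0 (stop) from the text. The JSON layout, the field names `round`, `accuracy`, and `instruction`, and the helper names are assumptions for illustration; the text only requires that the master node and the participating nodes agree on the mechanism in advance.

```python
import json
from typing import Optional

CONTINUE_TRAINING = 1  # example instruction value from the text
STOP_TRAINING = 0      # example instruction value from the text


def make_broadcast(round_num: int, accuracy: float, is_ideal: bool) -> str:
    """Master-node side: report the test result and the training instruction."""
    instruction = STOP_TRAINING if is_ideal else CONTINUE_TRAINING
    return json.dumps({"round": round_num,
                       "accuracy": accuracy,
                       "instruction": instruction})


def next_round(message: str) -> Optional[int]:
    """Participant side: return the round to train next, or None to stop."""
    msg = json.loads(message)
    if msg["instruction"] == CONTINUE_TRAINING:
        return msg["round"] + 1
    if msg["instruction"] == STOP_TRAINING:
        return None
    raise ValueError("unknown training instruction")


keep_going = next_round(make_broadcast(4, 0.81, is_ideal=False))
finished = next_round(make_broadcast(5, 0.812, is_ideal=True))
```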

S154. In response to the continue-training broadcast message, each node downloads the global model and starts the next round of training.

The continue-training broadcast message is the message broadcast by the master node when, after testing the accuracy of the current global model, it finds that the accuracy convergence requirement is not met. When a participating node receives the broadcast message and recognizes that the training instruction is to continue training, it downloads the latest global model and starts a new round of iterative training. It should be noted that a later download of the training model differs from the first download. On the first download, a node downloads the initial block created by the master node to obtain the initial model. On any later download, it downloads the most recently published model aggregation block and obtains the latest global model only after verifying that the block is the correct one. The corresponding steps for downloading the global model are: each node downloads the model aggregation block and determines whether the aggregation code is the second preset value; if the aggregation code is the second preset value, the node determines whether the iteration count equals the current iteration count; if the iteration count equals the current iteration count, the node obtains the global model.

The embodiments of the present application provide a framework for a blockchain-based federated learning privacy protection method that exploits the desirable properties of blockchains (such as decentralization, tamper resistance, and traceability). In this technical solution, a trusted third party generates in advance the master public key, the master private key, the encryption keys, and the decryption key according to the weight vectors of the nodes; the master node creates an initial block and publishes its trained initial model on the blockchain; each node downloads the model, trains it on local data, and uploads the resulting encrypted model to the blockchain; the participating nodes aggregate the encrypted models into a global model through the consensus mechanism and upload it to the blockchain; and the master node evaluates the accuracy of the global model to control whether the participating nodes continue training until an ideal model is obtained. This reduces the computing cost of the service provider in federated learning, safeguards the service quality of the federated learning model, and improves the efficiency of federated learning. In addition, by introducing a function-hiding multi-input functional encryption algorithm, it protects the weights and data content of each participant (client or node) and so avoids the risk of privacy leakage.

It should be noted that although the steps in the above flowcharts are shown in the order indicated by the arrows, they are not necessarily performed in that order. Unless explicitly stated herein, the steps are not subject to a strict order and may be performed in other orders.

In one embodiment, as shown in FIG. 10, a blockchain-based federated learning privacy protection system is provided, the system including:

a weight encryption module 1, configured so that a trusted third party generates in advance, according to the weight vector of each node, the master public key, the master private key, the decryption key, and the encryption key of each node, and sends the master public key, the decryption key, and the encryption key to the corresponding nodes; the encryption key is private to each node;

an initial modeling module 2, configured to have the master node create an initial block, write an initial model into the initial block, and publish it; the initial model is trained on a public dataset;

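a local training module 3, configured to have each node download the initial model, train it to obtain a local model, encrypt the local model with the encryption key to obtain an encrypted model, and upload the encrypted model to the blockchain;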

a model aggregation module 4, configured to, in response to the encrypted models of all nodes having been uploaded to the blockchain, have the nodes compete for the right to generate an aggregation block, and have the node that obtains that right aggregate the encrypted models of the nodes into a global model according to the master public key and the decryption key and upload the global model to the blockchain;

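a model testing module 5, configured to have the master node download the global model and judge whether it is an ideal model; if it is, iteration stops; otherwise, the next round of training begins.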
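The ideal-model judgment made by the master node can be illustrated with a minimal sketch. The description states only that the judgment depends on whether the accuracy has converged; the specific rule used here (the last few round-to-round accuracy changes all falling below a tolerance), the parameters `tolerance` and `patience`, the function names, and the message strings are assumptions introduced for illustration and are not specified by the embodiments.

```python
from typing import Sequence


def is_ideal_model(accuracy_history: Sequence[float],
                   tolerance: float = 1e-3,
                   patience: int = 3) -> bool:
    """Assumed convergence rule: the last `patience` round-to-round
    accuracy changes are all smaller than `tolerance`."""
    if len(accuracy_history) < patience + 1:
        return False  # too few rounds to judge convergence
    recent = accuracy_history[-(patience + 1):]
    return all(abs(b - a) < tolerance for a, b in zip(recent, recent[1:]))


def next_broadcast(accuracy_history: Sequence[float]) -> str:
    """Choose the broadcast message the master node would send
    (message names are hypothetical)."""
    if is_ideal_model(accuracy_history):
        return "STOP_TRAINING"
    return "CONTINUE_TRAINING"
```

In such a sketch the master node would append the accuracy measured on the public dataset after every round and call `next_broadcast` on the accumulated history.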

For the specific limitations of the blockchain-based federated learning privacy protection system, reference may be made to the limitations of the blockchain-based federated learning privacy protection method above, which are not repeated here. Each module of the system may be implemented in whole or in part by software, hardware, or a combination thereof. The modules may be embedded in, or independent of, a processor of a computer device in hardware form, or stored in a memory of the computer device in software form, so that the processor can invoke them and execute the operations corresponding to each module.

It should be noted that the blockchain-based federated learning privacy protection method and system of the embodiments of the present invention can be applied to power data computation scenarios such as regional electricity consumption forecasting and power supply planning across several power companies, so that the power supply is allocated reasonably. A specific application, in which the method or system is used to perform secure federated learning of an electricity consumption prediction model among the power companies, proceeds as follows. Each participating power company sends its preset weight vector to a trusted third party, which includes a certificate authority (CA). The trusted third party generates the corresponding weight matrix and, by applying functional encryption to the power companies' weights, the master public/private keys, the decryption key, and the encryption key of each node; it then sends the master public key, the decryption key, and each power company's encryption key to the corresponding power company. The power department creates an initial block, trains an initial model on a public dataset, writes the initial model into the initial block, and publishes it. Each power company downloads the initial block, trains a local model from the initial model recorded in that block and its local electricity consumption data, and encrypts the local model with its own encryption key by running the encryption algorithm of multi-input functional encryption. With the resulting encrypted model, it competes through the proof-of-work (PoW) mechanism of the blockchain for the right to generate a new block (an encrypted model block), writes into that block the encrypted model, the aggregation code AggregationFlag marking the content as an encrypted model, and the iteration number IntegerNum recording the current iteration round, and uploads the block to the chain. Once all power companies have uploaded new blocks containing encrypted models (encrypted model blocks whose aggregation code equals the first preset value), the power companies compete through the consensus mechanism of the blockchain for the right to generate the model aggregation block. The power company that obtains this right downloads all encrypted model blocks containing the other power companies' encrypted models (aggregation code equal to the first preset value, iteration number equal to the current iteration round), runs the decryption algorithm of multi-input functional encryption with the master public key and the decryption key to obtain the global model (the federated average model), writes into the model aggregation block the global model, the aggregation code marking the content as a global model, and the iteration number recording the current iteration round, and uploads the block to the chain. The power department downloads the model aggregation block containing the global model (aggregation code equal to the second preset value, iteration number equal to the current iteration round), measures the accuracy of the global model on the public dataset, and judges from whether the accuracy has converged whether the model is an ideal model. If it is not, the power department broadcasts a continue-training message; otherwise, it broadcasts a stop-training message. On receiving the continue-training message, all power companies download the latest global model and start the next round of training; on receiving the stop-training message, they stop training. After broadcasting the stop-training message, the power department can offer the global model obtained from the current training (the federated average model) as the final electricity consumption prediction model, use it to analyze annual electricity consumption in each region, and on that basis reasonably plan each power company's monthly supply of power resources and its allocation across regions.
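The block contents described in this application scenario can be sketched as a small data structure. The aggregation code AggregationFlag and the iteration number IntegerNum are taken from the description; the concrete flag values 0 and 1 (standing in for the first and second preset values), the `uploader` field, and the helper function names are assumptions added for illustration only.

```python
from dataclasses import dataclass
from typing import List, Set

ENCRYPTED_MODEL_FLAG = 0  # assumed "first preset value" of AggregationFlag
GLOBAL_MODEL_FLAG = 1     # assumed "second preset value" of AggregationFlag


@dataclass(frozen=True)
class ModelBlock:
    uploader: str          # hypothetical identifier of the uploading node
    payload: bytes         # serialized encrypted model or global model
    aggregation_flag: int  # AggregationFlag: marks the kind of model stored
    iteration_num: int     # IntegerNum: current iteration round


def encrypted_blocks_for_round(chain: List[ModelBlock],
                               round_num: int) -> List[ModelBlock]:
    """Select the encrypted model blocks written in the given round."""
    return [b for b in chain
            if b.aggregation_flag == ENCRYPTED_MODEL_FLAG
            and b.iteration_num == round_num]


def all_uploaded(chain: List[ModelBlock],
                 participants: Set[str],
                 round_num: int) -> bool:
    """True once every participant has an encrypted model block on the
    chain for this round, i.e. aggregation may begin."""
    uploaders = {b.uploader for b in encrypted_blocks_for_round(chain, round_num)}
    return participants <= uploaders
```

Filtering on both fields mirrors the selection rule in the text: the aggregating node reads only blocks whose aggregation code marks an encrypted model and whose iteration number matches the current round.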

FIG. 11 shows the internal structure of a computer device in one embodiment; the computer device may specifically be a terminal or a server. As shown in FIG. 11, the computer device includes a processor, a memory, a network interface, a display, and an input device connected through a system bus. The processor provides computing and control capabilities. The memory includes a non-volatile storage medium and an internal memory. The non-volatile storage medium stores an operating system and a computer program. The internal memory provides an environment for running the operating system and the computer program stored in the non-volatile storage medium. The network interface is used to communicate with external terminals over a network connection. When executed by the processor, the computer program implements a blockchain-based federated learning privacy protection method. The display of the computer device may be a liquid crystal display or an electronic ink display. The input device may be a touch layer covering the display; a button, trackball, or touchpad provided on the housing of the computer device; or an external keyboard, touchpad, or mouse.

Those of ordinary skill in the art will understand that the structure shown in FIG. 11 is only a block diagram of the part of the structure relevant to the solution of this application and does not limit the computer devices to which the solution is applied. A specific computer device may include more or fewer components than shown in the figure, may combine certain components, or may have a different arrangement of components.

In one embodiment, a computer device is provided, including a memory, a processor, and a computer program stored in the memory and executable on the processor; the processor implements the steps of the above method when executing the computer program.

In one embodiment, a computer-readable storage medium is provided, on which a computer program is stored; the computer program implements the steps of the above method when executed by a processor.

In summary, the embodiments of the present invention provide a blockchain-based federated learning privacy protection method, system, computer device, and storage medium. In the method, a trusted third party performs weight encryption according to the weight vector preset by each node to generate the master public/private keys, the decryption key, and the encryption key of each node, and sends them to the corresponding nodes. After the master node creates an initial block, writes the initial model into it, and publishes it, each node downloads the initial model, trains it, and encrypts the result with its encryption key to obtain an encrypted model, which it uploads to the blockchain after competing under the proof-of-work mechanism of the blockchain. Once the encrypted models of all nodes have been uploaded, the nodes compete under the consensus mechanism of the blockchain for the right to generate the model aggregation block. The node that obtains this right downloads the encrypted models of the other nodes, aggregates the encrypted models of the nodes into a global model according to the master public key and the decryption key, and uploads the global model to the blockchain. The master node then downloads the global model and judges whether it is an ideal model in order to decide whether to continue iterating. Compared with the prior art, this method overcomes the problem that the existing Flchain ignores weight protection in client gradient fusion, thereby protecting both the privacy of the source of client attributes and the privacy of the model data content. It also delegates the model aggregation operation to the participants, which reduces the computational cost of the service provider. Finally, deploying federated learning on the blockchain encourages participants to take an active part in federated learning, which improves the efficiency of federated learning and safeguards the service quality of the model.
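To make the role of the node weights in aggregation concrete, the following is a plaintext stand-in for the aggregation result. It performs no encryption: in the described method the aggregating node obtains the global model by running the decryption algorithm of multi-input functional encryption over the encrypted models, and neither the weights nor the individual local models are exposed to it. The normalization by the sum of the weights, the list-of-floats model representation, and the function name are assumptions made for this sketch.

```python
from typing import List, Sequence


def weighted_aggregate(local_models: Sequence[Sequence[float]],
                       weights: Sequence[float]) -> List[float]:
    """Plaintext weighted average of parameter vectors (illustration only;
    the described method computes the aggregate under functional encryption)."""
    if not local_models or len(local_models) != len(weights):
        raise ValueError("need one weight per local model")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    dim = len(local_models[0])
    if any(len(m) != dim for m in local_models):
        raise ValueError("all local models must have the same length")
    # Each global parameter is the weight-normalized sum over all nodes.
    return [sum(w * m[i] for w, m in zip(weights, local_models)) / total
            for i in range(dim)]
```

For example, two nodes with weights 1 and 3 and local parameters `[1.0, 2.0]` and `[3.0, 4.0]` yield the global parameters `[2.5, 3.5]`.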

The embodiments in this specification are described in a progressive manner; for parts that are identical or similar among the embodiments, the embodiments may be referred to one another, and each embodiment focuses on its differences from the others. In particular, because the system embodiments are basically similar to the method embodiments, they are described relatively briefly, and the relevant parts of the method embodiments may be consulted. It should be noted that the technical features of the above embodiments may be combined arbitrarily. For brevity, not every possible combination of these technical features is described; however, as long as a combination contains no contradiction, it should be regarded as falling within the scope of this specification.

The above embodiments represent only several preferred implementations of this application. Their description is relatively specific and detailed, but it should not be construed as limiting the scope of the invention patent. It should be pointed out that those of ordinary skill in the art may make several improvements and substitutions without departing from the technical principles of the present invention, and these improvements and substitutions shall also be regarded as falling within the protection scope of this application. Therefore, the protection scope of this patent shall be subject to the protection scope of the claims.

Claims (8)

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202110493191.XA, CN113204787B (en) | 2021-05-06 | 2021-05-06 | Block chain-based federated learning privacy protection method, system, device and medium |

Applications Claiming Priority (1)

| Application Number | Priority Date | Filing Date | Title |