CN112689303A - Edge cloud cooperative resource joint allocation method, system and application - Google Patents

- Publication number

- CN112689303A (application number CN202011584281.1A)

- Authority

- CN

- China

- Prior art keywords

- edge

- computing

- cloud

- task

- user

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Granted

Landscapes

- Mobile Radio Communication Systems (AREA)

Abstract

Description

Translated from Chinese

Technical Field

The present invention belongs to the technical field of wireless communication, and in particular relates to a method, system and application for the joint allocation of edge-cloud collaborative resources.

Background

With the continuing development of big data and Internet of Things technologies, more and more intelligent applications have spread through the wireless communication field, and users can obtain a rich range of intelligent services over wireless networks. Against this background, cloud computing provides the technical support for intelligent applications and services: the big data flowing through a wireless network can be analyzed by cloud computing servers to optimize system decisions, giving users services with higher quality of service and quality of experience. However, as communication and cloud computing technologies have advanced, users are no longer satisfied with the service quality that existing technology delivers. Edge computing emerged to further reduce the end-to-end delay users experience when obtaining a service, or to further reduce the energy consumed in obtaining it. Edge computing brings cloud computing closer to users by deploying small servers at the access points near them, providing "close-range", "high-rate" and "highly reliable" computing services.

Because edge computing has far fewer resources at its disposal than cloud computing, an edge computing server cannot meet the demand when users request certain large-scale computing services. To ensure both that users obtain computing services at "close range" and that they obtain sufficient computing resources, the concept of edge-cloud collaboration emerged. In "Collaborative Cloud and Edge Computing for Latency Minimization", published in IEEE Transactions on Vehicular Technology (vol. 68, no. 5, May 2019), Jinke Ren et al. studied cooperation between cloud computing and edge computing, allowing part of a mobile device's task to be processed jointly at edge nodes and the cloud server, thereby improving edge-cloud efficiency under limited communication and computing capability. In "Computation Offloading and Resource Allocation For Cloud Assisted Mobile Edge Computing in Vehicular Networks", published in IEEE Transactions on Vehicular Technology (vol. 68, no. 8, August 2019), Junhui Zhao et al. proposed an edge-cloud collaboration scheme specifically for vehicular networks, which guarantees the delay constraint of vehicle computation offloading by jointly optimizing the offloading decision and the computing resource allocation.

However, the above schemes do not take into account that user service types differ in a real network environment and that different service types place different requirements on the delay and energy consumption of transmission and computation. In addition, when a user equipment offloads a computing task, the transmission is easily overheard by other nearby user equipment; making full use of these devices to forward computing tasks cooperatively can further improve task reachability at the edge computing server.

From the above analysis, the problems and shortcomings of the prior art are as follows. The prior art does not consider that user service types differ in a real network environment and that different service types place different requirements on the delay and energy consumption of transmission and computation. Moreover, although an offloading transmission is easily overheard by other nearby user equipment, the prior art makes no use of these nearby devices.

The difficulty of solving the above problems is as follows. Weight coefficients can characterize well the delay and energy-consumption requirements that different user services place on transmission and computation, but how to use these weights, through reasonable resource allocation and task partitioning, to balance delay against energy consumption is one of the difficulties of the present invention. In addition, exploiting nearby user equipment through cooperative communication improves the efficiency of offloading to the edge server on the one hand, but on the other hand makes the problem harder to solve.

The significance of solving the above problems is as follows. Based on the delay and energy-consumption requirements that a user's task places on transmission and computation, a reasonable optimization scheme can be formulated to determine the key performance parameters: the user's local computation amount, uploaded task amount, local transmission power, local computation delay, cooperative transmission power, edge computation amount, edge computation delay, cloud computation amount and cloud computation delay. This ensures a balance between delay and energy consumption.

Summary of the Invention

In view of the problems in the prior art, the present invention provides a method, system and application for the joint allocation of edge-cloud collaborative resources.

The present invention is implemented as follows. In the edge-cloud collaborative resource joint allocation method, the offloading user first determines delay and energy-consumption weights based on its own service type. The offloading user then selects an assisting device according to the link state and, according to the weights, determines the local computation amount, the uploaded task amount, the local transmission power and the local computation delay; to avoid inter-user interference, users within the coverage of adjacent edge servers use frequency-division multiple access. The user first transmits the uploaded task to the selected assisting device and then, with that device's assistance, offloads it to the edge server by cooperative communication, which ensures that the edge server receives the offloaded task correctly; the cooperative transmission powers used in this phase must be determined at this point. According to the user's weights for computation delay and computation energy consumption, the edge server determines the edge computation amount, the edge computation delay, the cloud computation amount and the cloud computation delay, and further offloads the cloud portion of the task to the cloud server, completing the offloading process.

Further, the edge-cloud collaborative resource joint allocation method specifically comprises:

(1) The offloading user determines delay and energy-consumption weights based on its own service type. Four weight indices denote user u's weights for communication energy consumption, communication delay, computation energy consumption and computation delay, respectively [weight symbols and their constraints: formula images not reproduced]. The larger a weight, the more sensitive the user's offloaded task is to the corresponding metric;

(2) The offloading user selects an assisting device according to the link state and, according to the weights, determines the local computation amount, the uploaded task amount, the local transmission power and the local computation delay:

(2.1) Suppose the set of candidate assisting devices of user u is U_u, and candidate device u' is chosen as its assisting device according to the selection rule [formula image not reproduced], where g_{u,u'} and the two remaining gain terms denote, respectively, the power gain from user u to candidate device u', the power gain from user u to its associated edge server n_u, and the power gain from candidate device u' to edge server n_u;

(2.2) User u optimizes the local computation amount a_u, the uploaded task amount 1 - a_u, the local transmission power p_{u,1} and the local computation delay according to the weights set in (1). The optimization problem (OP1) can be modeled as: [Formula OP1: image not reproduced]

where I_u is user u's total task size, B_u the offloading bandwidth, c the number of CPU cycles required per bit of the task, and ξ_User the computation energy coefficient of the user equipment. The optimization variables p_{u,1} and the local computation delay are subject to upper and lower bounds; a value that exceeds a bound is set to that bound;

(2.3) Offloading user u determines the variables a_u, p_{u,1} and the local computation delay by alternating iteration;

(3) Offloading user u first sends the 1 - a_u portion of the task to the selected assisting device u' at transmission power p_{u,1}, and then, with the assistance of that device, offloads the 1 - a_u portion to edge server n_u by cooperative communication. In this phase the cooperative transmission powers of offloading user u and assisting device u' are p_{u,2} and p_{u'} respectively, obtained by solving the following optimization problem (OP3): [Formula OP3: image not reproduced]

The optimization variables p_{u,2} and p_{u'} are subject to upper and lower bounds; a value that exceeds a bound is set to that bound. The first two terms of the OP3 objective are non-convex functions of p_{u,2} and p_{u'} respectively, while the last two terms are convex functions of p_{u,2} and p_{u'} respectively. Successive convex approximation is therefore applied to the first two terms, and p_{u,2} and p_{u'} are obtained from the following optimization problem (OP4): [Formula OP4: image not reproduced]

where the index i denotes the iteration number; the first derivative of the function h(p_{u,2}, p_{u'}) with respect to p_{u,2} is evaluated at the value of p_{u,2} obtained in the i-th iteration, and its first derivative with respect to p_{u'} is evaluated at the value of p_{u'} obtained in the i-th iteration. OP4 is a convex optimization problem in p_{u,2} and p_{u'}, so the solutions for p_{u,2} and p_{u'} are found by searching for the roots of H'(p_{u,2}) = 0 and H'(p_{u'}) = 0. Following the idea of successive convex approximation, p_{u,2} and p_{u'} are updated repeatedly until the differences between two consecutive iterates of p_{u,2} and of p_{u'} are each smaller than a given precision, at which point the algorithm is considered to have converged. The converged p_{u,2} and p_{u'} are the final cooperative transmission powers of offloading user u and assisting device u' in (3);

(4) According to offloading user u's weights for computation delay and computation energy consumption, edge server n_u determines the edge computation amount b_u, the edge computation delay, the cloud computation amount 1 - b_u and the cloud computation delay, and further offloads the cloud portion 1 - b_u to the cloud server, completing the offloading process:

(4.1) Edge server n_u optimizes the edge computation amount b_u, the edge computation delay, the cloud computation amount 1 - b_u and the cloud computation delay according to the weights set in (1). The optimization problem can be modeled as: [formula image not reproduced]

where ξ_MEC and ξ_Cloud denote the computation energy coefficients of the edge server and the cloud server. The edge computation delay and the cloud computation delay are subject to upper and lower bounds; a value that exceeds a bound is set to that bound;

(4.2) Edge server n_u determines the variables b_u, the edge computation delay and the cloud computation delay by alternating iteration;

(5) Based on the optimization results, offloading user u, edge server n_u and the cloud server process, at their respective computation rates [rate expressions not reproduced], the a_u portion, the b_u(1 - a_u) portion and the (1 - b_u)(1 - a_u) portion of user u's task. When computation finishes, offloading user u collects the partial results and produces the final result of the offloaded task.
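The three-way partition described in steps (2), (4) and (5) can be illustrated with a minimal Python sketch. It assumes the ratios a_u and b_u have already been produced by the optimizations above; the names `TaskSplit` and `split_task` are hypothetical and do not come from the patent.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskSplit:
    local_bits: float   # a_u * I_u, processed by the offloading user
    edge_bits: float    # b_u * (1 - a_u) * I_u, processed by edge server n_u
    cloud_bits: float   # (1 - b_u) * (1 - a_u) * I_u, forwarded to the cloud server


def split_task(total_bits: float, a_u: float, b_u: float) -> TaskSplit:
    """Partition a task of total_bits bits using the ratios a_u and b_u."""
    if not (0.0 <= a_u <= 1.0 and 0.0 <= b_u <= 1.0):
        raise ValueError("a_u and b_u must lie in [0, 1]")
    uploaded = (1.0 - a_u) * total_bits          # portion sent to the edge server
    return TaskSplit(
        local_bits=a_u * total_bits,
        edge_bits=b_u * uploaded,
        cloud_bits=(1.0 - b_u) * uploaded,
    )


# Illustrative values only: an 8 Mbit task, a quarter kept locally,
# the uploaded remainder divided evenly between edge and cloud.
split = split_task(total_bits=8e6, a_u=0.25, b_u=0.5)
```

The three portions always sum to the total task size, which is what lets the offloading user reassemble the final result in step (5).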

Further, step (2.3) proceeds as follows:

(2.3a) For each offloading user u, initialize a set of values of p_{u,1} and the local computation delay;

(2.3b) With p_{u,1} and the local computation delay fixed, optimize the variable a_u. The problem reduces to a convex optimization problem in a_u, whose solution a_u* follows from convex optimization theory: [formula image not reproduced]

If the resulting a_u* is greater than 1, it is set to 1;

(2.3c) With the resulting a_u fixed, optimize the local computation delay. The problem reduces to a convex optimization problem in the local computation delay, whose solution follows from convex optimization theory: [formula image not reproduced]

(2.3d) With the resulting a_u fixed, optimize the variable p_{u,1}. The first term of the objective is a non-convex function of p_{u,1} and the second term is a convex function of p_{u,1}, so successive convex approximation is applied to the first term and p_{u,1} is obtained from the following optimization problem (OP2): [Formula OP2: image not reproduced]

where the index i denotes the iteration number, and the first derivative of the function f(p_{u,1}) with respect to p_{u,1} is evaluated at the value of p_{u,1} obtained in the i-th iteration. OP2 is a convex optimization problem in p_{u,1}, so its solution is found by searching for the root of F'(p_{u,1}) = 0. Following the idea of successive convex approximation, p_{u,1} is updated repeatedly until the difference between two consecutive iterates is smaller than a given precision, at which point the algorithm is considered to have converged; the latest p_{u,1} is the solution for p_{u,1} in OP1;

(2.3e) Using the local computation delay and p_{u,1} obtained in steps (2.3c) and (2.3d), update a_u; then, using the new a_u, refresh the local computation delay and p_{u,1}. Repeat until the algorithm converges. The converged a_u, p_{u,1} and local computation delay are the final solution for the local computation amount, the local transmission power and the local computation delay in step (2.2).
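The successive convex approximation in step (2.3d) can be sketched in Python as follows. Since OP1 and OP2 are not reproduced in this text, the sketch rests on a loudly stated assumption: the part of the cost that depends on the transmission power p is taken to be proportional to (w_energy * p + w_delay) / log2(1 + gamma * p), that is, a weighted sum of transmission energy and transmission delay over a Shannon-rate link. The function name and all parameters are hypothetical. Under this assumption the energy term p / log2(1 + gamma * p) is non-convex and is replaced by its first-order expansion at the previous iterate, while the convex delay term is kept unchanged.

```python
import math


def sca_transmit_power(w_energy, w_delay, gain_over_noise, p_min, p_max,
                       tol=1e-6, max_iter=100):
    """Update p with a first-order surrogate until successive iterates differ by < tol.

    ASSUMED cost (not the patent's OP1): (w_energy * p + w_delay) / log2(1 + gamma * p).
    """
    if not 0.0 < p_min < p_max:
        raise ValueError("require 0 < p_min < p_max")

    def log_rate(p):            # log2(1 + gamma * p): rate per unit bandwidth
        return math.log2(1.0 + gain_over_noise * p)

    def d_log_rate(p):          # derivative of log_rate with respect to p
        return gain_over_noise / ((1.0 + gain_over_noise * p) * math.log(2.0))

    def d_energy_term(p):       # derivative of p / log_rate(p), the non-convex term
        return (log_rate(p) - p * d_log_rate(p)) / log_rate(p) ** 2

    def d_delay_term(p):        # derivative of 1 / log_rate(p), the convex term
        return -d_log_rate(p) / log_rate(p) ** 2

    p = 0.5 * (p_min + p_max)   # initial point
    for _ in range(max_iter):
        # The linearized energy term contributes a constant slope to the surrogate.
        slope = w_energy * d_energy_term(p)

        def d_surrogate(q):
            return slope + w_delay * d_delay_term(q)

        if d_surrogate(p_min) >= 0.0:        # surrogate increasing: clip to lower bound
            p_next = p_min
        elif d_surrogate(p_max) <= 0.0:      # surrogate decreasing: clip to upper bound
            p_next = p_max
        else:                                # bisection for the root of the derivative
            lo, hi = p_min, p_max
            for _ in range(100):
                mid = 0.5 * (lo + hi)
                if d_surrogate(mid) < 0.0:
                    lo = mid
                else:
                    hi = mid
            p_next = 0.5 * (lo + hi)
        if abs(p_next - p) < tol:            # consecutive iterates agree: converged
            return p_next
        p = p_next
    return p


# Illustrative values only.
p_star = sca_transmit_power(w_energy=0.5, w_delay=0.5, gain_over_noise=10.0,
                            p_min=0.01, p_max=1.0)
```

Bisection is used for the root search because the derivative of the surrogate is monotonically increasing under the assumed model; any bounded one-dimensional root finder would serve equally well.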

Further, step (4.2) proceeds as follows:

(4.2a) For edge server n_u, initialize a set of values of the edge computation delay and the cloud computation delay;

(4.2b) With the edge computation delay and the cloud computation delay fixed, optimize the variable b_u. The problem reduces to a convex optimization problem in b_u, whose solution follows from convex optimization theory: [formula image not reproduced]

(4.2c) With the resulting b_u fixed, optimize the edge computation delay and the cloud computation delay. The problem reduces to a convex optimization problem in these two delays, whose solutions follow from convex optimization theory: [formula images not reproduced]

(4.2d) Using the edge computation delay and the cloud computation delay obtained in step (4.2c), update b_u; then, using the new b_u, refresh the two delays. Repeat until the algorithm converges. The converged b_u, edge computation delay and cloud computation delay are the final solution for the edge computation amount, the edge computation delay and the cloud computation delay in step (4.1).
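Steps (4.2a) to (4.2d), like steps (2.3a) to (2.3e), follow a block-coordinate pattern: each variable is updated in closed form with the others held fixed, values that leave their bounds are set to the bound, and the loop stops once nothing moves. The Python sketch below shows only that pattern. The closed-form updates of the patent are not reproduced in this text, so the objective used here is a toy quadratic chosen purely for illustration, and the names `clip` and `alternate` are hypothetical.

```python
from typing import Callable, Dict, Tuple

Update = Callable[[Dict[str, float]], float]


def clip(value: float, lower: float, upper: float) -> float:
    """Set a value that exceeds a bound to that bound."""
    return max(lower, min(upper, value))


def alternate(init: Dict[str, float],
              updates: Dict[str, Update],
              bounds: Dict[str, Tuple[float, float]],
              tol: float = 1e-9,
              max_rounds: int = 1000) -> Dict[str, float]:
    """Cycle through per-variable updates until no variable moves by more than tol."""
    x = dict(init)
    for _ in range(max_rounds):
        largest_move = 0.0
        for name, update in updates.items():
            new_value = clip(update(x), *bounds[name])
            largest_move = max(largest_move, abs(new_value - x[name]))
            x[name] = new_value
        if largest_move < tol:
            break
    return x


# Toy objective (NOT from the patent): f(b, t) = (b - 1)^2 + (t - 2)^2 + b * t.
# Setting each partial derivative to zero gives the closed-form coordinate updates.
updates = {
    "b": lambda x: 1.0 - x["t"] / 2.0,   # df/db = 2(b - 1) + t = 0
    "t": lambda x: 2.0 - x["b"] / 2.0,   # df/dt = 2(t - 2) + b = 0
}
solution = alternate({"b": 0.5, "t": 1.0}, updates,
                     {"b": (0.0, 1.0), "t": (0.0, 5.0)})
```

For this toy problem the iterates approach b = 0 and t = 2, the minimizer of the quadratic on the given box.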

Another object of the present invention is to provide a computer device comprising a memory and a processor, the memory storing a computer program which, when executed by the processor, causes the processor to perform the following steps: the offloading user first determines delay and energy-consumption weights based on its own service type; the offloading user selects an assisting device according to the link state and, according to the weights, determines the local computation amount, the uploaded task amount, the local transmission power and the local computation delay, where, to avoid inter-user interference, users within the coverage of adjacent edge servers use frequency-division multiple access; the user first transmits the uploaded task to the selected assisting device and then, with that device's assistance, offloads it to the edge server by cooperative communication, which ensures that the edge server receives the offloaded task correctly, the cooperative transmission powers used in this phase being determined at this point; according to the user's weights for computation delay and computation energy consumption, the edge server determines the edge computation amount, the edge computation delay, the cloud computation amount and the cloud computation delay, and further offloads the cloud portion of the task to the cloud server, completing the offloading process.

Another object of the present invention is to provide a computer-readable storage medium storing a computer program which, when executed by a processor, causes the processor to perform the following steps: the offloading user first determines delay and energy-consumption weights based on its own service type; the offloading user selects an assisting device according to the link state and, according to the weights, determines the local computation amount, the uploaded task amount, the local transmission power and the local computation delay, where, to avoid inter-user interference, users within the coverage of adjacent edge servers use frequency-division multiple access; the user first transmits the uploaded task to the selected assisting device and then, with that device's assistance, offloads it to the edge server by cooperative communication, which ensures that the edge server receives the offloaded task correctly, the cooperative transmission powers used in this phase being determined at this point; according to the user's weights for computation delay and computation energy consumption, the edge server determines the edge computation amount, the edge computation delay, the cloud computation amount and the cloud computation delay, and further offloads the cloud portion of the task to the cloud server, completing the offloading process.

Another object of the present invention is to provide an information data processing terminal for implementing the edge-cloud collaborative resource joint allocation method.

Another object of the present invention is to provide an edge computing server for implementing the edge-cloud collaborative resource joint allocation method.

Another object of the present invention is to provide a cloud computing server for implementing the edge-cloud collaborative resource joint allocation method.

Another object of the present invention is to provide a wireless communication system for implementing the edge-cloud collaborative resource joint allocation method.

Taking all the above technical solutions together, the advantages and positive effects of the present invention are as follows. By having users assist the offloading of tasks to the edge and cloud, the invention improves the reachability of offloaded tasks; at the same time, based on the offloading user's weights for delay and energy consumption, it minimizes the weighted sum of delay and energy consumption in both communication and computation. The invention adopts edge-cloud collaboration and provides computation offloading services to users with the assistance of user equipment. In the edge-cloud collaboration setting, it selects a suitable assisting device for each user that needs to offload, optimizes the offloaded task amounts and the communication resources, guarantees the delay and energy-consumption requirements of different services, and achieves efficient offloading of user computing tasks to the edge cloud or even the cloud.

The present invention specifically describes the application of edge-cloud collaboration to multi-user computation offloading in wireless communication scenarios. By jointly allocating computing and communication resources (including assisting device selection, task split ratios, transmission power and computation delay), it guarantees the delay and energy-consumption requirements of different offloaded services in both communication and computation, and achieves efficient offloading of user computing tasks to the edge cloud or even the cloud.

The present invention proposes a user-assisted method for the joint allocation of edge-cloud collaborative resources. The method specifically describes the application of edge-cloud collaboration to multi-user computation offloading in wireless communication scenarios and, by jointly allocating computing and communication resources (including assisting device selection, task split ratios, transmission power and computation delay), guarantees the delay and energy-consumption requirements of different offloaded services in both communication and computation, achieving efficient offloading of user computing tasks to the edge cloud or even the cloud.

The proposed user-assisted method takes into account that user service types differ in a real network environment and that different service types place different requirements on the delay and energy consumption of transmission and computation. In addition, when a user equipment offloads a computing task, the transmission is easily overheard by other nearby user equipment; making full use of these devices to forward computing tasks cooperatively can further improve task reachability at the edge computing server.

The present invention adopts edge-cloud collaboration and provides computation offloading services to users with the assistance of user equipment. In the edge-cloud collaboration setting, it selects a suitable assisting device for each user that needs to offload, optimizes the offloaded task amounts and the communication resources, guarantees the delay and energy-consumption requirements of different services, and achieves efficient offloading of user computing tasks to the edge cloud or even the cloud.

Description of Drawings

To explain the technical solutions of the embodiments of the present application more clearly, the drawings used in the embodiments are briefly introduced below. The drawings described below are evidently only some embodiments of the present application; those of ordinary skill in the art can derive other drawings from them without creative effort.

FIG. 1 is a flowchart of the edge-cloud collaborative resource joint allocation method provided by an embodiment of the present invention.

FIG. 2 is a schematic structural diagram of the edge-cloud collaborative resource joint allocation system provided by an embodiment of the present invention.

In FIG. 2: 1, delay and energy-consumption weight determination module; 2, parameter determination module; 3, cooperative communication transmission module; 4, offloading processing module; 5, computation result output module.

FIG. 3 is a diagram of the user-equipment-assisted edge-cloud collaboration model used in an embodiment of the present invention.

FIG. 4 is an overall implementation flowchart of the edge-cloud collaborative resource joint allocation method provided by an embodiment of the present invention.

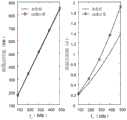

FIG. 5 is an example plot of performance under different energy-consumption and delay weights, provided by an embodiment of the present invention.

FIG. 6 is a performance comparison of different techniques, provided by an embodiment of the present invention.

Detailed Description

To make the objectives, technical solutions and advantages of the present invention clearer, the invention is described in further detail below with reference to embodiments. It should be understood that the specific embodiments described here serve only to explain the invention and do not limit it.

In view of the problems in the prior art, the present invention provides a method, system and application for the joint allocation of edge-cloud collaborative resources. The edge-cloud collaboration addressed by the invention covers two aspects, resource collaboration and service management collaboration. 1. Resource collaboration refers specifically to the collaboration of computing resources: when the user's local resources and the edge resources are insufficient, resources of the cloud center can be called on as a supplement to meet the resource needs of user-side applications. The resources the cloud center can provide include, but are not limited to, bare-metal machines, virtual machines and containers. 2. Service management collaboration uses the cloud server to enhance the quality of service of network services and provides customers with the relevant network services according to their energy-consumption and delay requirements. The invention is described in detail below with reference to the drawings.

As shown in FIG. 1, the edge-cloud collaborative resource joint allocation method provided by the present invention comprises the following steps:

S101: The offloading user first determines delay and energy-consumption weights based on its own service type;

S102: The offloading user selects an assisting device according to the link state and, according to the weights, determines the local computation amount, the uploaded task amount, the local transmission power and the local computation delay;

S103: The user first transmits the uploaded task to the selected assisting device and then, with that device's assistance, offloads it to the edge server by cooperative communication, which ensures that the edge server receives the offloaded task correctly; the cooperative transmission powers used in this phase must be determined at this point;

S104: According to the user's weights for computation delay and computation energy consumption, the edge server determines the edge computation amount, the edge computation delay, the cloud computation amount and the cloud computation delay, and further offloads the cloud portion of the task to the cloud server, completing the offloading process;

S105: Based on the optimization results, the offloading user, the edge server and the cloud server each process their share of the partitioned task at their respective computation rates; when computation finishes, the offloading user collects the partial results and produces the final result.

Those of ordinary skill in the art may also implement the method for joint allocation of edge-cloud collaborative resources provided by the present invention using other steps; the method shown in FIG. 1 is merely one specific embodiment.

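As shown in FIG. 2, the system for joint allocation of edge-cloud collaborative resources provided by the present invention includes:

A delay and energy-consumption weight determination module 1, by which the offloading user determines its delay and energy-consumption weights based on its own service type;

A parameter determination module 2, by which the offloading user selects an assisting device according to the link state and, according to the weights, determines the local computation amount, the upload task amount, the local transmission power, and the local computation delay;

A cooperative communication transmission module 3, by which the user transmits the upload task to the selected assisting device and, with the help of the assisting device, offloads the upload task to the edge server by cooperative communication;

An offloading processing module 4, by which the edge server determines the edge computing task amount, the edge computing delay, the cloud computing task amount, and the cloud computing delay according to the user's weight indicators for computing delay and computing energy consumption, and further offloads the cloud computing task to the cloud server, completing the offloading process;

A computation result output module 5, by which the offloading user, the edge server, and the cloud server each process their share of the divided offloading task at their own computing rate based on the optimization results; when computation finishes, the offloading user aggregates the partial results and generates the final result.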

The technical solutions of the present invention are further described below with reference to the accompanying drawings.

FIG. 3 shows the user-equipment-assisted edge-cloud collaboration model used by the present invention, in which the base station integrates the cloud server and the access point at the network edge integrates the edge server. The offloading user completes task offloading in three steps. First, it forwards the determined offloaded portion of the task to the selected assisting user equipment. Then, using cooperative communication, the assisting user equipment forwards the offloaded portion to the edge server; to ensure the decoding success rate at the edge server, the offloading user equipment simultaneously sends the offloaded portion to the edge server using frequency division multiple access. Finally, the edge server further splits the offloaded task and passes part of it to the cloud server. Because the edge server and the cloud server are usually connected by wire, the communication resources on this link can be assumed sufficient, and only computing energy consumption and computing delay are considered.

As shown in FIG. 4, the specific implementation steps of the present invention are as follows:

Step 1: The offloading user determines its delay and energy-consumption weights based on its own service type. User $u$ has four weight indicators, one each for communication energy consumption, communication delay, computing energy consumption, and computing delay. The larger a weight, the more sensitive the user's offloading task is to the corresponding indicator.
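To make the role of the weights concrete, the following is a minimal sketch that assumes the four indicators are combined as a weighted sum. The class `OffloadWeights`, the function `weighted_cost`, the non-negativity check, and all numbers are hypothetical illustrations and do not come from the patent text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OffloadWeights:
    """Hypothetical container for the four weights of one offloading user."""
    comm_energy: float
    comm_delay: float
    comp_energy: float
    comp_delay: float

    def __post_init__(self):
        values = (self.comm_energy, self.comm_delay,
                  self.comp_energy, self.comp_delay)
        # Assumption: weights are non-negative.
        if any(v < 0 for v in values):
            raise ValueError("weights must be non-negative")


def weighted_cost(w, comm_energy, comm_delay, comp_energy, comp_delay):
    """Assumed scalar cost: weighted sum of the four measured indicators."""
    return (w.comm_energy * comm_energy + w.comm_delay * comm_delay
            + w.comp_energy * comp_energy + w.comp_delay * comp_delay)


# Two made-up candidate plans: (comm energy, comm delay, comp energy, comp delay).
fast_plan = (2.0, 0.5, 2.0, 0.5)     # low delay, high energy
frugal_plan = (0.5, 2.0, 0.5, 2.0)   # low energy, high delay

delay_sensitive = OffloadWeights(0.1, 0.9, 0.1, 0.9)
energy_sensitive = OffloadWeights(0.9, 0.1, 0.9, 0.1)
```

Under these made-up numbers a delay-sensitive user scores `fast_plan` lower (better), while an energy-sensitive user scores `frugal_plan` lower, which is the sensitivity behavior Step 1 describes.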

Step 2: The offloading user selects an assisting device according to the link state and, according to the weights, determines the local computation amount, the upload task amount, the local transmission power, and the local computation delay:

2.1) Assume that the set of candidate assisting devices of user $u$ is $U_u$, and let candidate device $u'$ serve as its assisting device. The selection depends on three link gains: the power gain $g_{u,u'}$ from user $u$ to candidate device $u'$, the power gain from user $u$ to its associated edge server $n_u$, and the power gain from candidate device $u'$ to the edge server $n_u$ associated with user $u$.
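One way to picture link-state-based selection is the sketch below. It assumes a max-min rule over the two relay hops (user to device, device to edge server), which is a common relay-selection heuristic and not necessarily the criterion used in the patent. The function name `select_assisting_device` and the gain values are hypothetical.

```python
def select_assisting_device(gain_user_to_device, gain_device_to_edge):
    """Pick the candidate whose weaker hop is strongest (assumed max-min rule).

    Both arguments map a candidate device id to a power gain.
    Returns None when there are no candidates.
    """
    best_device, best_score = None, float("-inf")
    for device, g_first_hop in gain_user_to_device.items():
        # The relay path is only as good as its weaker hop.
        score = min(g_first_hop, gain_device_to_edge[device])
        if score > best_score:
            best_device, best_score = device, score
    return best_device


# Made-up gains for three candidates in U_u.
g_u_to_dev = {"dev_a": 0.9, "dev_b": 0.6, "dev_c": 0.3}
g_dev_to_edge = {"dev_a": 0.2, "dev_b": 0.5, "dev_c": 0.8}
chosen = select_assisting_device(g_u_to_dev, g_dev_to_edge)
```

With these numbers the bottleneck gains are 0.2, 0.5, and 0.3, so `dev_b` is chosen even though `dev_a` has the best first hop.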

2.2) User $u$ can optimize the local computation amount $a_u$, the upload task amount $1-a_u$, the local transmission power $p_{u,1}$, and the local computation delay according to the weights set in Step 1. The optimization problem, referred to below as OP1, can be modeled as:

Here $I_u$ is the total task amount of user $u$ (unit: bits), $B_u$ is the offloading bandwidth (unit: Hz), $c$ is the number of CPU cycles required to compute each bit of the task (unit: CPU/bit), the local computation delay is measured in seconds, and $\xi_{\mathrm{User}}$ is the computing energy coefficient of the user equipment (which can be expressed as CPU utilization multiplied by an energy-efficiency coefficient). In addition, the optimization variables $p_{u,1}$ and the local computation delay are subject to upper and lower bounds; a value beyond a bound is set directly to that bound.

2.3) Offloading user $u$ determines the variables $a_u$, $p_{u,1}$, and the local computation delay by alternating iteration:

2.3.1) For each offloading user $u$, initialize a set of values for $p_{u,1}$ and the local computation delay;

2.3.2) Optimize the variable $a_u$ based on the current $p_{u,1}$ and local computation delay. The optimization problem then reduces to a convex optimization problem in $a_u$, and convex optimization theory gives the solution of $a_u$ as:

Here the result is clipped to the interval $[0, 1]$: a value $x$ greater than 1 is set to 1, and a value $x$ less than 0 is set to 0.

2.3.3) Optimize the local computation delay based on the obtained $a_u$. The optimization problem then reduces to a convex optimization problem in the local computation delay, and convex optimization theory gives its solution as:

2.3.4) Optimize the variable $p_{u,1}$ based on the obtained $a_u$. The first term of the objective function is a non-convex function of $p_{u,1}$ and the second term is a concave function of $p_{u,1}$, so successive convex approximation must be applied to the first term. The solution of $p_{u,1}$ is then obtained from the following optimization problem (OP2):

Here the first derivative of the function $f(p_{u,1})$ with respect to $p_{u,1}$ is evaluated at the value of $p_{u,1}$ obtained in the $i$-th iteration. Optimization problem OP2 is a convex optimization problem in $p_{u,1}$, and its solution is found by searching for the root of $F'(p_{u,1})=0$. Following the idea of successive convex approximation, $p_{u,1}$ is updated repeatedly until the difference between two consecutive iterations falls below a given precision, at which point the algorithm is considered converged; the most recent value of $p_{u,1}$ is the solution of $p_{u,1}$ in optimization problem OP1.

2.3.5) Update $a_u$ based on the local computation delay and $p_{u,1}$ obtained in steps 2.3.3) and 2.3.4), then refresh the local computation delay and $p_{u,1}$ based on the new $a_u$. Repeat until the algorithm converges. The values of $a_u$, $p_{u,1}$, and the local computation delay at convergence are the final solutions for the local computation amount, the local transmission power, and the local computation delay in step 2.2).
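The power update in step 2.3.4) can be sketched on a toy problem as follows. The patent's objective is not reproduced here; this sketch assumes an energy term `p / log2(1 + g*p)` and a delay term `1 / log2(1 + g*p)`, linearizes the energy term at the current iterate, keeps the delay term as is, and finds the root of the surrogate's derivative by bisection. The function names, the gain `g`, and all numeric values are hypothetical.

```python
import math


def rate(p, g):
    """Toy achievable rate log2(1 + g*p); requires p > 0."""
    return math.log2(1.0 + g * p)


def d_rate(p, g):
    """Derivative of the toy rate with respect to p."""
    return g / ((1.0 + g * p) * math.log(2.0))


def d_energy(p, g):
    """Derivative of the toy energy term p / rate(p)."""
    r = rate(p, g)
    return (r - p * d_rate(p, g)) / r ** 2


def d_delay(p, g):
    """Derivative of the toy delay term 1 / rate(p), which is convex in p."""
    return -d_rate(p, g) / rate(p, g) ** 2


def sca_power(w_energy, w_delay, p_min, p_max, p0, g=1.0, tol=1e-7, max_iter=100):
    """Successive convex approximation on the toy objective (p_min must be > 0)."""
    p = min(max(p0, p_min), p_max)
    for _ in range(max_iter):
        # Linearize the energy term at the current iterate: only its slope matters.
        slope = w_energy * d_energy(p, g)

        def surrogate_grad(q):
            # Derivative of the convex surrogate; increasing in q.
            return slope + w_delay * d_delay(q, g)

        if surrogate_grad(p_min) >= 0.0:
            p_new = p_min              # minimizer sits on the lower bound
        elif surrogate_grad(p_max) <= 0.0:
            p_new = p_max              # minimizer sits on the upper bound
        else:
            lo, hi = p_min, p_max      # bisection for the root of the derivative
            for _ in range(80):
                mid = 0.5 * (lo + hi)
                if surrogate_grad(mid) < 0.0:
                    lo = mid
                else:
                    hi = mid
            p_new = 0.5 * (lo + hi)
        if abs(p_new - p) < tol:       # consecutive iterates agree: converged
            return p_new
        p = p_new
    return p


p_star = sca_power(0.5, 0.5, p_min=0.1, p_max=10.0, p0=1.0)
```

For this toy objective with equal weights and `g = 1`, the stationary point satisfies `ln(1 + p) = 1`, so the iteration should settle near `e - 1`; tightening `p_max` below that value makes the result stick to the bound, mirroring the boundary rule in step 2.2).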

Step 3: Offloading user $u$ first sends the $1-a_u$ portion of the offloading task to the selected assisting device $u'$ at transmission power $p_{u,1}$; then, with the help of the assisting device, it offloads the $1-a_u$ portion to edge server $n_u$ by cooperative communication. The cooperative transmission powers of offloading user $u$ and assisting device $u'$ are $p_{u,2}$ and $p_{u'}$, respectively, and their values are obtained from the following optimization problem (OP3):

The optimization variables $p_{u,2}$ and $p_{u'}$ are subject to upper and lower bounds; a value beyond a bound is set directly to that bound. The first two terms of the objective function of OP3 are non-convex functions of $p_{u,2}$ and $p_{u'}$, respectively, and the last two terms are concave functions of $p_{u,2}$ and $p_{u'}$, respectively, so successive convex approximation must be applied to the first two terms. The solutions of $p_{u,2}$ and $p_{u'}$ are then obtained from the following optimization problem (OP4):

Here the first derivatives of the function $h(p_{u,2}, p_{u'})$ with respect to $p_{u,2}$ and $p_{u'}$ are evaluated at the values of $p_{u,2}$ and $p_{u'}$ obtained in the $i$-th iteration. Optimization problem OP4 is a convex optimization problem in $p_{u,2}$ and $p_{u'}$, and its solution is found by searching for the roots of $H'(p_{u,2})=0$ and $H'(p_{u'})=0$. Following the idea of successive convex approximation, $p_{u,2}$ and $p_{u'}$ are updated repeatedly until the differences between two consecutive iterations each fall below a given precision, at which point the algorithm is considered converged. The values obtained at convergence are the final cooperative transmission powers $p_{u,2}$ and $p_{u'}$ of offloading user $u$ and assisting device $u'$ in Step 3.

Step 4: Edge server $n_u$ determines the edge computing task amount $b_u$, the edge computing delay, the cloud computing task amount $1-b_u$, and the cloud computing delay according to offloading user $u$'s weight indicators for computing delay and computing energy consumption, and further offloads the cloud computing task $1-b_u$ to the cloud server, completing the offloading process:

4.1) Edge server $n_u$ optimizes the edge computing task amount $b_u$, the edge computing delay, the cloud computing task amount $1-b_u$, and the cloud computing delay according to the weights set in Step 1. The optimization problem can be modeled as:

Here $\xi_{\mathrm{MEC}}$ and $\xi_{\mathrm{Cloud}}$ denote the computing energy coefficients of the edge server and the cloud server (a computing energy coefficient can be expressed as CPU utilization multiplied by an energy-efficiency coefficient). The optimization variables, namely the edge computing delay and the cloud computing delay, are subject to upper and lower bounds; a value beyond a bound is set directly to that bound.

4.2) Edge server $n_u$ determines the variables $b_u$, the edge computing delay, and the cloud computing delay by alternating iteration:

4.2.1) For edge server $n_u$, initialize a set of values for the edge computing delay and the cloud computing delay;

4.2.2) Optimize the variable $b_u$ based on the current edge computing delay and cloud computing delay. The optimization problem then reduces to a convex optimization problem in $b_u$, and convex optimization theory gives the solution of $b_u$ as:

Here the result is clipped to the interval $[0, 1]$: a value $x$ greater than 1 is set to 1, and a value $x$ less than 0 is set to 0.

4.2.3) Optimize the edge computing delay and the cloud computing delay based on the obtained $b_u$. The optimization problem then reduces to a convex optimization problem in these two delays, and convex optimization theory gives their respective solutions as:

4.2.4) Update $b_u$ based on the edge computing delay and cloud computing delay obtained in step 4.2.3), then refresh the two delays based on the new $b_u$. Repeat until the algorithm converges. The values of $b_u$, the edge computing delay, and the cloud computing delay at convergence are the final solutions for the edge computing task amount, the edge computing delay, and the cloud computing delay in step 4.1).
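The alternating pattern of steps 4.2.1) to 4.2.4) can be illustrated with a small sketch. The patent's closed-form updates are not reproduced here, so the example uses a stand-in convex objective $J(b,t) = (b-t)^2 + 0.5\,(t-2)^2$ with $b \in [0,1]$ and $t \in [0.5, 3]$, chosen only because each block update has a closed form that is then clipped to its bounds. The names `alternating_minimize`, `update_b`, and `update_t` are hypothetical.

```python
def clip(x, lo, hi):
    """Set x to the nearest bound when it falls outside [lo, hi]."""
    return min(max(x, lo), hi)


def alternating_minimize(update_b, update_t, t0, tol=1e-9, max_iter=1000):
    """Alternate two closed-form block updates until both stop changing."""
    b, t = update_b(t0), t0
    for _ in range(max_iter):
        t_new = update_t(b)        # refresh t with b held fixed
        b_new = update_b(t_new)    # refresh b with the new t held fixed
        if abs(b_new - b) < tol and abs(t_new - t) < tol:
            return b_new, t_new
        b, t = b_new, t_new
    return b, t


def update_b(t):
    # For fixed t, (b - t)^2 is minimized at b = t; clip to [0, 1].
    return clip(t, 0.0, 1.0)


def update_t(b):
    # For fixed b, setting dJ/dt = 2(t - b) + (t - 2) = 0 gives t = (2b + 2) / 3.
    return clip((2.0 * b + 2.0) / 3.0, 0.5, 3.0)


b_star, t_star = alternating_minimize(update_b, update_t, t0=0.5)
```

In this stand-in problem the unconstrained update for `b` exceeds 1, so the clipped value `b = 1` is kept while `t` settles at 4/3, which shows how the clipping rule of step 4.2.2) interacts with the loop of step 4.2.4).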

Step 5: Based on the optimization results, offloading user $u$, edge server $n_u$, and the cloud server process, each at its own computing rate, the $a_u$ portion, the $b_u(1-a_u)$ portion, and the $(1-b_u)(1-a_u)$ portion of user $u$'s task, respectively. When computation finishes, offloading user $u$ aggregates the partial results and generates the final result of the offloading task.
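The three-way split in Step 5 follows directly from the fractions $a_u$ and $b_u$. The sketch below computes the share handled at each tier; the function name `split_task` and the sample numbers are hypothetical.

```python
def split_task(total_bits, a_u, b_u):
    """Return the (local, edge, cloud) workload in bits.

    a_u is the fraction computed locally; b_u is the fraction of the
    offloaded remainder that the edge server keeps.
    """
    if not (0.0 <= a_u <= 1.0 and 0.0 <= b_u <= 1.0):
        raise ValueError("a_u and b_u must lie in [0, 1]")
    local = a_u * total_bits
    edge = b_u * (1.0 - a_u) * total_bits
    cloud = (1.0 - b_u) * (1.0 - a_u) * total_bits
    return local, edge, cloud


local_bits, edge_bits, cloud_bits = split_task(1000.0, a_u=0.25, b_u=0.5)
```

The three shares always sum to the total task amount, so no part of the task is dropped or processed twice.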

The technical effects of the present invention are described in detail below with reference to the accompanying drawings.

FIG. 5 compares the present invention with a conventional strategy. The horizontal axis is the total task amount of the offloading user; (a) compares user offloading delay under different energy-consumption weights, and (b) compares user offloading energy consumption under different energy-consumption weights. The dot-marked and circle-marked solid lines correspond to two different energy-consumption weights, each paired with its corresponding delay weight. The example assumes normalized spectrum resources and an energy coefficient of 1 for every unit. The figure shows that, as the total task amount increases, the user's total offloading delay and total energy consumption inevitably rise. However, the energy-consumption and delay weight factors can be adjusted to the type of offloading task, that is, to its performance requirements: when the energy-consumption weight is larger, some offloading delay is sacrificed in exchange for lower offloading energy consumption, and vice versa. The delay and energy-consumption weights therefore express the offloading task's requirements on delay and energy consumption, and setting them sensibly achieves a performance trade-off between offloading delay and offloading energy consumption.

FIG. 6 compares the performance of the present invention with another technique; (a) compares user offloading delay under the different techniques, and (b) compares user offloading energy consumption under the different techniques. The baseline scheme serves users from the edge computing server alone and does not consider collaboration with cloud-center resources or service management. The results show that edge collaboration, together with a sensible division of computing resources and task-splitting ratios, can further improve system performance in terms of both latency and reliability.

It should be noted that embodiments of the present invention can be implemented in hardware, in software, or in a combination of the two. The hardware portion can be implemented with dedicated logic; the software portion can be stored in memory and executed by a suitable instruction execution system, such as a microprocessor or specially designed hardware. Those of ordinary skill in the art will understand that the devices and methods described above can be implemented using computer-executable instructions and/or processor control code, with such code provided, for example, on a carrier medium such as a magnetic disk, CD, or DVD-ROM, in programmable memory such as read-only memory (firmware), or on a data carrier such as an optical or electronic signal carrier. The device of the present invention and its modules can be implemented by hardware circuits such as very-large-scale integrated circuits or gate arrays, semiconductors such as logic chips and transistors, or programmable hardware devices such as field-programmable gate arrays and programmable logic devices; by software executed by various types of processors; or by a combination of such hardware circuits and software, for example firmware.

The above describes only specific embodiments of the present invention, and the scope of protection of the invention is not limited to them. Any modification, equivalent replacement, or improvement made by a person skilled in the art within the technical scope disclosed by the invention, and within its spirit and principles, shall fall within the scope of protection of the invention.

Claims (10)

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202011584281.1A / CN112689303B (en) | 2020-12-28 | 2020-12-28 | Edge cloud cooperative resource joint allocation method, system and application |


Publications (2)

| Publication Number | Publication Date |
|---|---|
| CN112689303A (en) | 2021-04-20 |
| CN112689303B (en) | 2022-07-22 |

Family

ID=75453989

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |
|---|---|---|---|
| CN202011584281.1A (Active) / CN112689303B (en) | Edge cloud cooperative resource joint allocation method, system and application | 2020-12-28 | 2020-12-28 |

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN112689303B (en) |


Citations (11)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| CN106900011A (en)* | 2017-02-28 | 2017-06-27 | 重庆邮电大学 | Task discharging method between a kind of cellular basestation based on MEC |

| CN107241767A (en)* | 2017-06-14 | 2017-10-10 | 广东工业大学 | The method and device that a kind of mobile collaboration is calculated |

| WO2017196249A1 (en)* | 2016-05-13 | 2017-11-16 | Telefonaktiebolaget Lm Ericsson (Publ) | Network architecture, methods, and devices for a wireless communications network |

| US20180220432A1 (en)* | 2017-02-01 | 2018-08-02 | Deutsche Telekom Ag | Network resources brokering system and brokering entity for accessing a network resource with preferential treatment |

| CN108804227A (en)* | 2018-05-23 | 2018-11-13 | 大连理工大学 | The method of the unloading of computation-intensive task and best resource configuration based on mobile cloud computing |

| CN109474681A (en)* | 2018-11-05 | 2019-03-15 | 安徽大学 | Resource allocation method, system and server system for mobile edge computing server |

| CN109814951A (en)* | 2019-01-22 | 2019-05-28 | 南京邮电大学 | A joint optimization method for task offloading and resource allocation in mobile edge computing networks |

| CN111464983A (en)* | 2020-03-10 | 2020-07-28 | 深圳大学 | A computing and communication cooperation method and system in a passive edge computing network |

| CN111586762A (en)* | 2020-04-29 | 2020-08-25 | 重庆邮电大学 | Task unloading and resource allocation joint optimization method based on edge cooperation |

| CN111585916A (en)* | 2019-12-26 | 2020-08-25 | 国网辽宁省电力有限公司电力科学研究院 | LTE electric power wireless private network task unloading and resource allocation method based on cloud edge cooperation |

| CN111913723A (en)* | 2020-06-15 | 2020-11-10 | 合肥工业大学 | Cloud-edge-end cooperative unloading method and system based on assembly line |


Non-Patent Citations (2)

| Title |

|---|

| MENGQI YANG, JIAN CHEN, LONG YANG,LU LV ,BINGTAO HE, AND BOYANG: ""Design and Performance Analysis of Cooperative NOMA With Coordinated Direct and Relay Transmission"", 《IEEE ACCESS》* |

| 杨龙,陈健: ""一种基于非正交多址接入技术的协作多播传输方法"", 《科技成果登记表》* |

Cited By (19)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| CN113191654A (en)* | 2021-05-12 | 2021-07-30 | 余绍祥 | Distributed resource scheduling system and method based on multi-objective optimization |

| CN113660625A (en)* | 2021-07-06 | 2021-11-16 | 山东师范大学 | Method and system for resource coordination between multi-access edge computing nodes and CDN nodes |

| CN113660625B (en)* | 2021-07-06 | 2024-02-02 | 山东师范大学 | Resource cooperation method and system for multi-access edge computing node and CDN node |

| CN113419867A (en)* | 2021-08-23 | 2021-09-21 | 浙大城市学院 | Energy-saving service supply method in edge-oriented cloud collaborative computing environment |

| CN113419867B (en)* | 2021-08-23 | 2022-01-18 | 浙大城市学院 | Energy-saving service supply method in edge-oriented cloud collaborative computing environment |

| CN113784373B (en)* | 2021-08-24 | 2022-11-25 | 苏州大学 | Joint optimization method and system for time delay and spectrum occupancy in cloud-edge collaborative network |

| CN113784373A (en)* | 2021-08-24 | 2021-12-10 | 苏州大学 | Combined optimization method and system for time delay and frequency spectrum occupation in cloud edge cooperative network |

| CN113923223A (en)* | 2021-11-15 | 2022-01-11 | 安徽大学 | User allocation method with low time cost in edge environment |

| CN113923223B (en)* | 2021-11-15 | 2024-02-06 | 安徽大学 | User allocation method with low time cost in edge environment |

| CN114448991B (en)* | 2021-12-28 | 2022-10-21 | 西安电子科技大学 | A method, system, medium, device and terminal for selecting a multi-edge server |

| CN114448991A (en)* | 2021-12-28 | 2022-05-06 | 西安电子科技大学 | Multi-edge server selection method, system, medium, device and terminal |

| CN114844900B (en)* | 2022-05-05 | 2022-12-13 | 中南大学 | A Collaborative Method for Edge-Cloud Resources Based on Uncertain Demand |

| CN114844900A (en)* | 2022-05-05 | 2022-08-02 | 中南大学 | Edge cloud resource cooperation method based on uncertain demand |

| CN115695231A (en)* | 2022-10-31 | 2023-02-03 | 中国联合网络通信集团有限公司 | Method, device and storage medium for determining network energy efficiency |

| CN116506877A (en)* | 2023-06-26 | 2023-07-28 | 北京航空航天大学 | A Distributed Collaborative Computing Method for Mobile Crowd Sensing |

| CN116506877B (en)* | 2023-06-26 | 2023-09-26 | 北京航空航天大学 | A distributed collaborative computing method for mobile crowd sensing |

| CN117193873A (en)* | 2023-09-05 | 2023-12-08 | 北京科技大学 | A computing offloading method and device suitable for industrial control systems |

| CN117193873B (en)* | 2023-09-05 | 2024-06-25 | 北京科技大学 | A method and device for offloading calculations in industrial control systems |

| CN118260067A (en)* | 2024-02-18 | 2024-06-28 | 北京中电飞华通信有限公司 | Computing resource distribution method based on simulated annealing and related equipment |

Also Published As

| Publication number | Publication date |
|---|---|
| CN112689303B (en) | 2022-07-22 |

Similar Documents

| Publication | Publication Date | Title |

|---|---|---|

| CN112689303B (en) | Edge cloud cooperative resource joint allocation method, system and application | |

| Cui et al. | A novel offloading scheduling method for mobile application in mobile edge computing | |

| CN111586762B (en) | Task unloading and resource allocation joint optimization method based on edge cooperation | |

| CN111586696B (en) | Resource allocation and unloading decision method based on multi-agent architecture reinforcement learning | |

| CN110351754B (en) | Industrial Internet machine equipment user data calculation unloading decision method based on Q-learning | |

| US11831708B2 (en) | Distributed computation offloading method based on computation-network collaboration in stochastic network | |

| CN112492626A (en) | Method for unloading computing task of mobile user | |

| CN114189892A (en) | Cloud-edge collaborative Internet of things system resource allocation method based on block chain and collective reinforcement learning | |

| CN107995660A (en) | Joint Task Scheduling and Resource Allocation Method Supporting D2D-Edge Server Offloading | |

| CN112105062B (en) | Mobile edge computing network energy consumption minimization strategy method under time-sensitive condition | |

| CN111885147A (en) | Dynamic resource pricing method in edge calculation | |

| CN107295109A (en) | Task unloading and power distribution joint decision method in self-organizing network cloud computing | |

| CN105656999B (en) | A kind of cooperation task immigration method of energy optimization in mobile cloud computing environment | |

| CN109714382B (en) | Multi-user multi-task migration decision method of unbalanced edge cloud MEC system | |

| CN112822707B (en) | A method for task offloading and resource allocation in MEC with limited computing resources | |

| CN111949409B (en) | A method and system for offloading computing tasks in power wireless heterogeneous networks | |

| CN111163143B (en) | Low-delay task unloading method for mobile edge calculation | |

| CN114265631A (en) | A method and device for intelligent offloading of mobile edge computing based on federated meta-learning | |

| CN118113484B (en) | Resource scheduling method, system, storage medium and vehicle | |

| CN112988347A (en) | Edge computing unloading method and system for reducing system energy consumption and cost sum | |

| CN114449490A (en) | Multi-task joint computing unloading and resource allocation method based on D2D communication | |

| CN113747450A (en) | Service deployment method and device in mobile network and electronic equipment | |

| CN114980160B (en) | A UAV-assisted terahertz communication network joint optimization method and device | |

| Liu et al. | Joint optimization of task offloading and computing resource allocation in MEC-D2D network | |

| Yang et al. | Deep reinforcement learning-based low-latency task offloading for mobile-edge computing networks |

Legal Events

| Date | Code | Title | Description |
|---|---|---|---|
| | PB01 | Publication | |
| | SE01 | Entry into force of request for substantive examination | |
| | GR01 | Patent grant | |