CN112509006A - Sub-map recovery fusion method and device - Google Patents


- Publication number

- CN112509006A

- Authority

- CN

- China

- Prior art keywords

- map

- image

- feature

- current

- feature point

- Legal status

- Pending

Classifications

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T7/00—Image analysis

- G06T7/20—Analysis of motion

- G06T7/246—Analysis of motion using feature-based methods, e.g. the tracking of corners or segments

- G06T7/248—Analysis of motion using feature-based methods, e.g. the tracking of corners or segments involving reference images or patches

- G—PHYSICS

- G01—MEASURING; TESTING

- G01C—MEASURING DISTANCES, LEVELS OR BEARINGS; SURVEYING; NAVIGATION; GYROSCOPIC INSTRUMENTS; PHOTOGRAMMETRY OR VIDEOGRAMMETRY

- G01C21/00—Navigation; Navigational instruments not provided for in groups G01C1/00 - G01C19/00

- G01C21/10—Navigation; Navigational instruments not provided for in groups G01C1/00 - G01C19/00 by using measurements of speed or acceleration

- G01C21/12—Navigation; Navigational instruments not provided for in groups G01C1/00 - G01C19/00 by using measurements of speed or acceleration executed aboard the object being navigated; Dead reckoning

- G01C21/16—Navigation; Navigational instruments not provided for in groups G01C1/00 - G01C19/00 by using measurements of speed or acceleration executed aboard the object being navigated; Dead reckoning by integrating acceleration or speed, i.e. inertial navigation

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F16/00—Information retrieval; Database structures therefor; File system structures therefor

- G06F16/20—Information retrieval; Database structures therefor; File system structures therefor of structured data, e.g. relational data

- G06F16/29—Geographical information databases

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T2207/00—Indexing scheme for image analysis or image enhancement

- G06T2207/10—Image acquisition modality

- G06T2207/10016—Video; Image sequence

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T2207/00—Indexing scheme for image analysis or image enhancement

- G06T2207/30—Subject of image; Context of image processing

- G06T2207/30244—Camera pose

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T2207/00—Indexing scheme for image analysis or image enhancement

- G06T2207/30—Subject of image; Context of image processing

- G06T2207/30248—Vehicle exterior or interior

- G06T2207/30252—Vehicle exterior; Vicinity of vehicle

Landscapes

- Engineering & Computer Science (AREA)

- Remote Sensing (AREA)

- Theoretical Computer Science (AREA)

- Radar, Positioning & Navigation (AREA)

- Physics & Mathematics (AREA)

- General Physics & Mathematics (AREA)

- Databases & Information Systems (AREA)

- Automation & Control Theory (AREA)

- Computer Vision & Pattern Recognition (AREA)

- Multimedia (AREA)

- Data Mining & Analysis (AREA)

- General Engineering & Computer Science (AREA)

- Image Analysis (AREA)

Abstract

Description

Technical Field

The present invention relates to the technical field of visual simultaneous localization and mapping, and more particularly to a sub-map recovery and fusion method and device.

Background Art

SLAM (simultaneous localization and mapping) refers to a mobile robot using its sensors to build a model of an unknown environment while determining its own position within it. Visual-inertial SLAM algorithms have become a research hotspot.

Current mainstream visual-inertial fusion frameworks are tightly coupled SLAM algorithms based on nonlinear optimization or filtering. Although these are more robust, tracking can still fail in practice because of complex environments and motion.

How to resume tracking and mapping after a SLAM system loses tracking is therefore an urgent problem in this field.

Summary of the Invention

In view of this, and to solve the above problem, the present invention provides a sub-map recovery and fusion method and device. The technical solutions are as follows:

A sub-map recovery and fusion method, comprising:

if a system tracking failure is detected, establishing a current sub-map, wherein the camera pose of the earliest image frame of the current sub-map is calculated by an inertial measurement unit (IMU);

acquiring the previous sub-map, namely the sub-map whose creation time is closest to that of the current sub-map, and performing feature matching between the N earliest-acquired images of the current sub-map (first images) and the M latest-acquired images of the previous sub-map (second images);

if the feature matching between the first images and the second images succeeds, solving a first camera pose transformation between the current sub-map and the previous sub-map based on at least one first feature point pair obtained by the feature matching; and

fusing the current sub-map and the previous sub-map based on the first camera pose transformation.
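The four claimed steps can be summarized as a control-flow sketch. This is a minimal Python illustration under stated assumptions: the `Keyframe` and `SubMap` types, the callables `match_features`, `solve_pose` and `fuse`, and the defaults n=3 and m=3 are hypothetical placeholders, not names or values taken from the patent or from ORB SLAM2. Only the selection logic is concrete: the previous sub-map is the one created most recently before the current one, and matching pairs the N earliest keyframes of the current sub-map with the M latest keyframes of the previous one.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Sequence, Tuple

@dataclass
class Keyframe:
    kf_id: int
    stamp: float  # acquisition time of the keyframe

@dataclass
class SubMap:
    created_at: float  # creation time of the sub-map
    keyframes: List[Keyframe] = field(default_factory=list)

Pairs = List[Tuple[int, int]]  # hypothetical: matched feature indices

def previous_submap(submaps: Sequence[SubMap], current: SubMap) -> Optional[SubMap]:
    """The sub-map created most recently before `current` (step S20)."""
    older = [s for s in submaps if s is not current and s.created_at < current.created_at]
    return max(older, key=lambda s: s.created_at) if older else None

def recover_and_fuse(
    submaps: Sequence[SubMap],
    current: SubMap,
    match_features: Callable[[List[Keyframe], List[Keyframe]], Pairs],
    solve_pose: Callable[[Pairs], object],
    fuse: Callable[[SubMap, SubMap, object], None],
    n: int = 3,
    m: int = 3,
) -> bool:
    """Steps S20-S40; S10 (creating `current` with an IMU-seeded first pose) is assumed done."""
    prev = previous_submap(submaps, current)
    if prev is None:
        return False
    cur_kfs = sorted(current.keyframes, key=lambda k: k.stamp)
    prev_kfs = sorted(prev.keyframes, key=lambda k: k.stamp)
    first_images = cur_kfs[:n]                            # N earliest of the current sub-map
    second_images = prev_kfs[max(0, len(prev_kfs) - m):]  # M latest of the previous sub-map
    pairs = match_features(first_images, second_images)   # S20
    if not pairs:
        return False  # matching failed; a fallback strategy would run here
    transform = solve_pose(pairs)                         # S30
    fuse(current, prev, transform)                        # S40
    return True
```

Passing the matcher, solver and fusion step as callables keeps the sketch focused on which keyframes are compared and in what order.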

Preferably, performing feature matching between the N earliest-acquired first images of the current sub-map and the M latest-acquired second images of the previous sub-map comprises:

for each first feature point in the first images, querying an image dictionary database to determine, among the second feature points of the second images, first candidate feature points whose bag-of-words vector is the same as that of the first feature point; and

selecting, from the first candidate feature points, the first target feature point with the highest descriptor similarity, the first target feature point and the first feature point forming a first feature point pair.

Preferably, the method further comprises:

if the feature matching between the first images and the second images fails, performing feature matching between the most recently acquired image of the current sub-map (the third image) and each image of the previous sub-map (the fourth images);

if the feature matching between the third image and the fourth images succeeds, obtaining a second camera pose transformation between the current sub-map and the previous sub-map by performing a sim(3) transformation on at least one second feature point pair obtained by the feature matching; and

fusing the current sub-map and the previous sub-map based on the second camera pose transformation.
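The sim(3) transformation referred to above is a similarity transformation (rotation R, translation t and scale s) between two sets of corresponding 3D points. The patent does not name a solver. A standard closed-form choice is Horn's unit-quaternion method (1987), which ORB SLAM2's `Sim3Solver` class also builds on. The pure-Python sketch below estimates s, R and t from three or more correspondences. It makes two simplifications to stay dependency-free: the dominant eigenvector is found by shifted power iteration, and the scale is computed as a least-squares ratio. The function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = List[List[float]]

def centroid(pts: Sequence[Vec3]) -> Vec3:
    n = len(pts)
    return tuple(sum(p[i] for p in pts) / n for i in range(3))

def quat_to_rot(w: float, x: float, y: float, z: float) -> Mat3:
    """Rotation matrix of the unit quaternion (w, x, y, z)."""
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]

def apply_rot(R: Mat3, v: Vec3) -> Vec3:
    return tuple(sum(R[r][c] * v[c] for c in range(3)) for r in range(3))

def horn_sim3(src: Sequence[Vec3], dst: Sequence[Vec3], iters: int = 2000):
    """Estimate (s, R, t) with dst[i] ~= s * R * src[i] + t; needs >= 3 non-collinear points."""
    cs, cd = centroid(src), centroid(dst)
    a = [tuple(p[i] - cs[i] for i in range(3)) for p in src]  # centred source points
    b = [tuple(q[i] - cd[i] for i in range(3)) for q in dst]  # centred target points
    # Cross-covariance: S[j][k] = sum over correspondences of a_j * b_k.
    (sxx, sxy, sxz), (syx, syy, syz), (szx, szy, szz) = [
        [sum(p[j] * q[k] for p, q in zip(a, b)) for k in range(3)] for j in range(3)
    ]
    # Horn's symmetric 4x4 matrix; its top eigenvector is the optimal rotation quaternion.
    N = [
        [sxx + syy + szz, syz - szy, szx - sxz, sxy - syx],
        [syz - szy, sxx - syy - szz, sxy + syx, szx + sxz],
        [szx - sxz, sxy + syx, -sxx + syy - szz, syz + szy],
        [sxy - syx, szx + sxz, syz + szy, -sxx - syy + szz],
    ]
    shift = max(sum(abs(v) for v in row) for row in N)  # Gershgorin bound: N + shift*I is PSD
    q = [1.0, 0.3, 0.2, 0.1]  # arbitrary start vector for power iteration
    for _ in range(iters):
        q = [sum(N[r][c] * q[c] for c in range(4)) + shift * q[r] for r in range(4)]
        norm = math.sqrt(sum(v * v for v in q))
        q = [v / norm for v in q]
    R = quat_to_rot(*q)
    ra = [apply_rot(R, p) for p in a]
    # Least-squares scale for the fixed rotation.
    s = sum(sum(x * y for x, y in zip(u, v)) for u, v in zip(b, ra)) / sum(
        sum(x * x for x in p) for p in a)
    rc = apply_rot(R, cs)
    t = tuple(cd[i] - s * rc[i] for i in range(3))
    return s, R, t
```

Three point pairs are the minimum for a unique solution, which is why the later steps draw three pairs per hypothesis.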

Preferably, performing feature matching between the most recently acquired third image of the current sub-map and each fourth image of the previous sub-map comprises:

for each third feature point in the third image, querying the image dictionary database to determine, among the fourth feature points of the fourth images, second candidate feature points whose bag-of-words vector is the same as that of the third feature point;

selecting, from the fourth images that contain second candidate feature points, multiple candidate images containing the largest numbers of second candidate feature points;

for each third feature point in the third image, querying the image dictionary database to determine, among the fourth feature points of the candidate images, third candidate feature points whose bag-of-words vector is the same as that of the third feature point; and

selecting, from the third candidate feature points, the second target feature point with the highest descriptor similarity, the second target feature point and the third feature point forming a second feature point pair.
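The two-stage candidate screening above can be sketched as follows. The sketch assumes each feature is a hypothetical (BoW word id, binary descriptor) tuple, and measures descriptor similarity by Hamming distance (smaller distance means higher similarity), as is usual for binary ORB descriptors. Stage 1 counts, for each keyframe of the previous sub-map, the features that share a BoW word with the third image, and keeps the `top_k` highest-scoring keyframes. `top_k` is a hypothetical parameter, since the text only says "multiple" images. Stage 2 re-matches against those keyframes only and returns the pairs grouped by keyframe.

```python
from collections import Counter
from typing import Dict, Hashable, List, Sequence, Tuple

Feature = Tuple[int, int]  # hypothetical layout: (BoW word id, binary descriptor as int)

def hamming(a: int, b: int) -> int:
    """Hamming distance between binary descriptors (smaller = more similar)."""
    return bin(a ^ b).count("1")

def match_third_image(
    query: Sequence[Feature],
    keyframes: Dict[Hashable, Sequence[Feature]],
    top_k: int = 3,
) -> Dict[Hashable, List[Tuple[int, int]]]:
    """Return {candidate keyframe: [(query feature idx, keyframe feature idx), ...]}."""
    query_words = {w for w, _ in query}
    # Stage 1: per keyframe, count features that share a BoW word with the third image.
    votes = Counter({kf: sum(1 for w, _ in feats if w in query_words)
                     for kf, feats in keyframes.items()})
    candidates = [kf for kf, n in votes.most_common(top_k) if n > 0]
    # Stage 2: within each candidate image, keep the closest same-word descriptor.
    pairs: Dict[Hashable, List[Tuple[int, int]]] = {}
    for kf in candidates:
        group = []
        for qi, (qw, qd) in enumerate(query):
            same_word = [(hamming(qd, d), fi) for fi, (w, d) in enumerate(keyframes[kf]) if w == qw]
            if same_word:
                group.append((qi, min(same_word)[1]))
        pairs[kf] = group
    return pairs
```

Returning the pairs already grouped by candidate keyframe matches the grouping that the subsequent sim(3) step relies on.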

Preferably, obtaining the second camera pose transformation between the current sub-map and the previous sub-map by performing a sim(3) transformation on at least one second feature point pair obtained by the feature matching comprises:

dividing the second feature point pairs into feature point pair groups according to the candidate image in which the second target feature point of each pair is located;

determining a current feature point pair group to be processed, randomly selecting three second feature point pairs from that group, and performing a sim(3) transformation on the three selected pairs to obtain a candidate camera pose transformation between the third image and the candidate image corresponding to the current group;

performing a reprojection operation on the second feature point pairs in the current group based on the candidate camera pose transformation, to determine which second feature point pairs in the group are inliers;

if the number of inlier second feature point pairs in the current group is greater than or equal to a preset threshold, taking the candidate camera pose transformation as the second camera pose transformation between the current sub-map and the previous sub-map; and

if the number of inlier second feature point pairs in the current group is less than the preset threshold, returning to the step of determining a current feature point pair group to be processed, until all feature point pair groups have been traversed.
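The group-wise hypothesize-and-verify loop above is essentially RANSAC run per candidate image. The sketch below keeps the sim(3) solver and the reprojection error as caller-supplied functions (`solve` and `residual` are hypothetical names; a closed-form sim(3) solver would be one choice for `solve`). The claim reads as one random three-pair draw per group, which is the default `iterations_per_group=1`; a practical implementation would likely draw several hypotheses per group. `min_inliers` plays the role of the preset threshold and `err_thresh` that of a reprojection-error bound. The text leaves the values of both open.

```python
import random
from collections import defaultdict
from typing import Any, Callable, Hashable, List, Optional, Sequence, Tuple

# Hypothetical pair layout: (candidate_image_id, feature_in_third_image, feature_in_candidate_image)
Pair = Tuple[Hashable, Any, Any]

def grouped_ransac(
    pairs: Sequence[Pair],
    solve: Callable[[List[Pair]], Any],
    residual: Callable[[Any, Pair], float],
    min_inliers: int,
    err_thresh: float,
    iterations_per_group: int = 1,
    rng: Optional[random.Random] = None,
) -> Optional[Tuple[Hashable, Any, List[Pair]]]:
    rng = rng or random.Random(0)
    # Group the second feature point pairs by the candidate image of their target point.
    groups = defaultdict(list)
    for p in pairs:
        groups[p[0]].append(p)
    # Visit the groups in turn until one yields enough inliers.
    for image_id, group in groups.items():
        if len(group) < 3:
            continue  # a sim(3) hypothesis needs three pairs
        for _ in range(iterations_per_group):
            model = solve(rng.sample(group, 3))  # candidate pose transformation
            inliers = [p for p in group if residual(model, p) <= err_thresh]  # reprojection check
            if len(inliers) >= min_inliers:
                return image_id, model, inliers
    return None  # every group traversed without success
```

Keeping the model-specific parts pluggable makes the control flow of the claim (group, sample three, verify, move to next group) easy to see and to test.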

Preferably, the method further comprises:

if the number of inlier second feature point pairs in the current group is greater than or equal to the preset threshold, determining the outlier second feature point pairs in the current group based on the result of the reprojection operation and deleting them; and

for the current group after outlier deletion, optimizing its inlier second feature point pairs based on the second camera pose transformation, and adjusting the second camera pose transformation according to the optimization result.

A sub-map recovery and fusion device, comprising:

a map establishment module, configured to establish a current sub-map if a system tracking failure is detected, wherein the camera pose of the earliest image frame of the current sub-map is calculated by an inertial measurement unit (IMU);

a first feature matching module, configured to acquire the previous sub-map, namely the sub-map whose creation time is closest to that of the current sub-map, and to perform feature matching between the N earliest-acquired first images of the current sub-map and the M latest-acquired second images of the previous sub-map;

a first pose calculation module, configured to, if the feature matching between the first images and the second images succeeds, solve a first camera pose transformation between the current sub-map and the previous sub-map based on at least one first feature point pair obtained by the feature matching; and

a first map fusion module, configured to fuse the current sub-map and the previous sub-map based on the first camera pose transformation.

Preferably, the device further comprises:

a second feature matching module, configured to, if the feature matching between the first images and the second images fails, perform feature matching between the most recently acquired third image of the current sub-map and each fourth image of the previous sub-map;

a second pose calculation module, configured to, if the feature matching between the third image and the fourth images succeeds, obtain a second camera pose transformation between the current sub-map and the previous sub-map by performing a sim(3) transformation on at least one second feature point pair obtained by the feature matching; and

a second map fusion module, configured to fuse the current sub-map and the previous sub-map based on the second camera pose transformation.

Preferably, the second feature matching module is specifically configured to:

for each third feature point in the third image, query the image dictionary database to determine, among the fourth feature points of the fourth images, second candidate feature points whose bag-of-words vector is the same as that of the third feature point; select, from the fourth images that contain second candidate feature points, multiple candidate images containing the largest numbers of second candidate feature points; for each third feature point in the third image, query the image dictionary database to determine, among the fourth feature points of the candidate images, third candidate feature points whose bag-of-words vector is the same as that of the third feature point; and select, from the third candidate feature points, the second target feature point with the highest descriptor similarity, the second target feature point and the third feature point forming a second feature point pair.

Preferably, the second pose calculation module is specifically configured to:

divide the second feature point pairs into feature point pair groups according to the candidate image in which the second target feature point of each pair is located; determine a current feature point pair group to be processed, randomly select three second feature point pairs from it, and perform a sim(3) transformation on the three selected pairs to obtain a candidate camera pose transformation between the third image and the candidate image corresponding to the current group; perform a reprojection operation on the second feature point pairs in the current group based on the candidate camera pose transformation, to determine which of them are inliers; if the number of inlier second feature point pairs in the current group is greater than or equal to a preset threshold, take the candidate camera pose transformation as the second camera pose transformation between the current sub-map and the previous sub-map; and if the number of inlier second feature point pairs in the current group is less than the preset threshold, return to the step of determining a current feature point pair group to be processed, until all feature point pair groups have been traversed.

The present invention provides a sub-map recovery and fusion method and device. After tracking fails, the IMU temporarily calculates the camera pose, and this IMU-derived pose serves as the initial value for establishing a sub-map. While the sub-map is being built, it is checked for matches against the preceding map and, once matched, fused and adjusted. Even when the relocalization requirements cannot be met after tracking fails, the invention can continue tracking and mapping. The method depends little on the scene: as long as initialization succeeds, the map information from before relocalization is preserved.

Description of Drawings

To explain the technical solutions in the embodiments of the present invention or in the prior art more clearly, the drawings needed to describe the embodiments or the prior art are briefly introduced below. The drawings described below are merely embodiments of the present invention; a person of ordinary skill in the art may derive other drawings from them without creative effort.

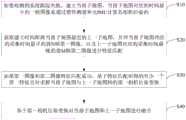

FIG. 1 is a flowchart of a sub-map recovery and fusion method according to an embodiment of the present invention;

FIG. 2 is another flowchart of the sub-map recovery and fusion method according to an embodiment of the present invention;

FIG. 3 is a schematic structural diagram of a sub-map recovery and fusion device according to an embodiment of the present invention.

Detailed Description of the Embodiments

The technical solutions in the embodiments of the present invention are described below clearly and completely with reference to the accompanying drawings. The described embodiments are only some, not all, of the embodiments of the present invention. All other embodiments obtained by a person of ordinary skill in the art from these embodiments without creative effort fall within the protection scope of the present invention.

To make the above objects, features and advantages of the present invention easier to understand, the invention is described in further detail below with reference to the accompanying drawings and specific embodiments.

SLAM technology is used in many fields, such as autonomous driving, augmented and virtual reality, mobile robotics and drone navigation. Real-world motion, however, is often complex. When the camera moves too fast, the images become motion-blurred, and visual SLAM tracking also fails under very bright or very dark lighting or in scenes with few texture features. A visual sensor alone therefore cannot meet practical needs. Inertial sensors, by contrast, give good state estimates during short periods of fast motion and clearly complement cameras, and almost all current mobile devices carry both a camera and an inertial sensor. Visual-inertial SLAM algorithms have therefore become a research hotspot.

Current mainstream visual-inertial fusion frameworks are tightly coupled SLAM algorithms based on nonlinear optimization or filtering. Although both the filtering and the optimization approaches make the system more robust, tracking can still fail in practice because of complex environments and motion.

The most widely adopted remedy for tracking failure is currently relocalization. ORB SLAM2, for example, is a SLAM system built on ORB feature points, ORB being a rotation-invariant feature. ORB SLAM2 uses a bag-of-words (BoW) model: it computes a BoW vector for every image frame and describes each image by combining the bag of words with the feature points. When visual tracking fails, ORB SLAM2 matches the BoW vector of the current frame against all entries in the image database to find similar image frames.

This approach recovers the current camera pose, and thus relocalizes successfully, only when the image captured by the camera closely resembles some keyframe in the existing map. In practice, after tracking fails, the device must return to a previously tracked scene before relocalization succeeds and tracking and mapping can resume. The scheme is therefore unsuitable for applications that must keep moving forward, such as driverless cars and intelligent drones.

To address this problem, the present invention proposes a sub-map fusion algorithm based on ORB SLAM2. The algorithm is built on an ORB SLAM2 framework tightly coupled with an IMU (inertial measurement unit). After tracking fails, the system can continue tracking and mapping even if the relocalization requirements cannot be met. The method depends little on the scene: as long as initialization succeeds, the map information from before relocalization is preserved.

An embodiment of the present invention provides a sub-map recovery and fusion method. As shown in the flowchart of FIG. 1, the method comprises the following steps:

S10: if a system tracking failure is detected, establish a current sub-map, wherein the camera pose of the earliest image frame of the current sub-map is calculated by the inertial measurement unit (IMU).

In this embodiment, after ORB SLAM2 loses tracking, IMU pre-integration is used to calculate the camera pose temporarily, and this pose serves as the basis for mapping.

The IMU pre-integration process, and the use of pre-integration to calculate the camera pose temporarily, are described below.

The IMU pre-integration is calculated using the following formulas (1), (2) and (3):

Here, i and j are indices of the IMU data; k is the index of the image frame; ΔR_ij, Δv_ij and Δp_ij are the IMU pre-integration terms, namely the pre-integrated rotation, velocity and displacement. The remaining terms are, respectively, the angular velocity measured by the gyroscope and the acceleration measured by the accelerometer, the angular-velocity and acceleration biases, and the discrete-time noise of the angular velocity and the acceleration; Δt is the time interval between IMU samples.
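Formulas (1) to (3) did not survive extraction. The symbol definitions above match the standard on-manifold IMU pre-integration of Forster et al. With the noise terms dropped, that standard form reads ΔR_ij = ∏_k Exp((ω̃_k − b_g)Δt), Δv_ij = Σ_k ΔR_ik (ã_k − b_a)Δt and Δp_ij = Σ_k [Δv_ik Δt + ½ ΔR_ik (ã_k − b_a)Δt²], where k runs over the IMU samples between i and j. The pure-Python sketch below implements that standard form as an illustration only, not as the patent's exact formulation. The noise terms, which only affect the covariance, are omitted, and the function names and argument layout are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = List[List[float]]

def matmul(A: Mat3, B: Mat3) -> Mat3:
    return [[sum(A[r][k] * B[k][c] for k in range(3)) for c in range(3)] for r in range(3)]

def matvec(A: Mat3, v: Vec3) -> Vec3:
    return tuple(sum(A[r][k] * v[k] for k in range(3)) for r in range(3))

def so3_exp(w: Vec3) -> Mat3:
    """Rodrigues' formula: rotation matrix of the rotation vector w (radians)."""
    theta = math.sqrt(sum(c * c for c in w))
    K = [[0.0, -w[2], w[1]], [w[2], 0.0, -w[0]], [-w[1], w[0], 0.0]]
    if theta < 1e-12:
        A, B = 1.0, 0.5  # small-angle limits of sin(t)/t and (1 - cos t)/t^2
    else:
        A, B = math.sin(theta) / theta, (1.0 - math.cos(theta)) / theta ** 2
    K2 = matmul(K, K)
    return [[(1.0 if r == c else 0.0) + A * K[r][c] + B * K2[r][c] for c in range(3)]
            for r in range(3)]

def preintegrate(gyro: Sequence[Vec3], accel: Sequence[Vec3], bg: Vec3, ba: Vec3, dt: float):
    """Noise-free pre-integration (dR, dv, dp) over the IMU samples between keyframes i and j."""
    dR: Mat3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    dv: Vec3 = (0.0, 0.0, 0.0)
    dp: Vec3 = (0.0, 0.0, 0.0)
    for w, a in zip(gyro, accel):
        acc = matvec(dR, tuple(a[c] - ba[c] for c in range(3)))  # bias-corrected, in frame i
        dp = tuple(dp[c] + dv[c] * dt + 0.5 * acc[c] * dt * dt for c in range(3))
        dv = tuple(dv[c] + acc[c] * dt for c in range(3))
        dR = matmul(dR, so3_exp(tuple((w[c] - bg[c]) * dt for c in range(3))))
    return dR, dv, dp
```

The update order matters: the displacement uses the velocity and rotation from before the current sample, which is what makes the sums in the standard form use Δv_ik and ΔR_ik.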

The camera pose is calculated using the following formulas (4), (5) and (6):

Here, W denotes the world coordinate system (the camera coordinate system of the first frame) and B the body (carrier) coordinate system. The remaining terms are, respectively: the rotation and translation components of the pose from the body coordinate system to the world coordinate system; the velocity of the body in the world coordinate system at time i+1; the position of the body in the world coordinate system at time i+1; the Jacobians of the pre-integrated velocity with respect to the gyroscope and accelerometer biases; and the Jacobians of the pre-integrated displacement with respect to the gyroscope and accelerometer biases.
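Formulas (4) to (6) are likewise missing. The listed symbols correspond to the IMU state-propagation step used in visual-inertial ORB-SLAM (Mur-Artal and Tardós). With the bias-Jacobian terms set to zero, that step reduces to R_WB(i+1) = R_WB(i) ΔR_{i,i+1}, v(i+1) = v(i) + g_W Δt + R_WB(i) Δv_{i,i+1} and p(i+1) = p(i) + v(i) Δt + ½ g_W Δt² + R_WB(i) Δp_{i,i+1}. The sketch below implements only that simplified case. The gravity vector, the zero bias-Jacobian terms and the camera-IMU extrinsic used to turn the body pose into a camera pose are assumptions for illustration, not details taken from the patent.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = List[List[float]]

def matmul(A: Mat3, B: Mat3) -> Mat3:
    return [[sum(A[r][k] * B[k][c] for k in range(3)) for c in range(3)] for r in range(3)]

def matvec(A: Mat3, v: Vec3) -> Vec3:
    return tuple(sum(A[r][k] * v[k] for k in range(3)) for r in range(3))

def vadd(*vs: Vec3) -> Vec3:
    return tuple(sum(c) for c in zip(*vs))

def vscale(s: float, v: Vec3) -> Vec3:
    return tuple(s * c for c in v)

def propagate(R_wb: Mat3, v_wb: Vec3, p_wb: Vec3, dR: Mat3, dv: Vec3, dp: Vec3,
              dt: float, g_w: Vec3 = (0.0, 0.0, -9.81)):
    """Body state at i+1 from the state at i and the pre-integrated (dR, dv, dp) over dt.
    The bias-Jacobian correction terms are omitted (assumed zero)."""
    R_next = matmul(R_wb, dR)
    v_next = vadd(v_wb, vscale(dt, g_w), matvec(R_wb, dv))
    p_next = vadd(p_wb, vscale(dt, v_wb), vscale(0.5 * dt * dt, g_w), matvec(R_wb, dp))
    return R_next, v_next, p_next

def camera_pose(R_wb: Mat3, p_wb: Vec3, R_bc: Mat3, p_bc: Vec3):
    """Camera pose in W from the body pose and a (hypothetical) body-to-camera extrinsic T_BC."""
    return matmul(R_wb, R_bc), vadd(p_wb, matvec(R_wb, p_bc))
```

Gravity appears explicitly because the accelerometer measures specific force; a stationary IMU integrates to Δv = −g_W Δt, so the two terms cancel and the propagated state stays put.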

The ORB SLAM2 system creates multiple maps during operation, each of which is called a sub-map. Because ORB SLAM2 itself tracks visually, after tracking fails this embodiment temporarily calculates the camera pose with the IMU and at the same time establishes a new sub-map, namely the current sub-map; visual tracking then continues with ORB SLAM2.

S20: acquire the previous sub-map, namely the sub-map whose creation time is closest to that of the current sub-map, and perform feature matching between the N earliest-acquired first images of the current sub-map and the M latest-acquired second images of the previous sub-map.

In this embodiment, sub-maps are matched quickly using the first N frames of the current sub-map and the last M frames of the previous sub-map; N and M may take any values relative to each other. This allows the map to be matched and the pose recovered soon after tracking fails.

Once the system has initialized successfully, feature points are matched between the first N frames of the current sub-map and the last M frames of the previous sub-map; if they match, the sub-maps can be fused.

Note that every image in the embodiments of the present invention is a keyframe. ORB SLAM2 selects keyframes according to criteria such as the time interval and the number of matched points between frames, which avoids redundant information and reduces memory usage.

In a specific implementation, the feature matching of step S20 between the N earliest-acquired first images of the current sub-map and the M latest-acquired second images of the previous sub-map may be performed as follows:

For each first feature point in the first images, query the image dictionary database to determine, among the second feature points of the second images, first candidate feature points whose bag-of-words vector is the same as that of the first feature point; then select, from the first candidate feature points, the first target feature point with the highest descriptor similarity, which forms a first feature point pair with the first feature point.

In this embodiment, each image contains multiple feature points, each with a corresponding bag-of-words vector, and the bag-of-words vectors of all feature points in each image are recorded in the image dictionary database. Based on these vectors, the function SearchByBow() matches feature points between the first N frames of the current sub-map and the last M frames of the previous sub-map, yielding a series of matched feature point pairs. The basic principle is to look up possible matches in the image dictionary database using the bag-of-words vector of the first feature point, then compute the descriptor similarity to each candidate and keep the best match.
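The matching principle above can be sketched for one image pair and then applied to all N × M pairs. This is an illustration under assumptions. Features are hypothetical (BoW word id, descriptor) tuples, where equal word ids stand in for "same bag-of-words vector". Descriptor similarity is the Hamming distance of binary ORB descriptors. The `max_dist` bound and the ratio test are not steps stated in this text; they are additions modelled on ORB SLAM2's own SearchByBoW, which applies a distance threshold and a nearest-neighbour ratio test. Passing a very large `max_dist` and `ratio=1.0` disables both.

```python
from typing import List, Sequence, Tuple

Feature = Tuple[int, int]  # hypothetical layout: (BoW word id, binary ORB descriptor as int)

def hamming(a: int, b: int) -> int:
    """Hamming distance of binary descriptors: smaller means more similar."""
    return bin(a ^ b).count("1")

def search_by_bow(img1: Sequence[Feature], img2: Sequence[Feature],
                  max_dist: int = 50, ratio: float = 0.75) -> List[Tuple[int, int]]:
    """Match img1's features to img2's, comparing only features that share a BoW word."""
    by_word = {}
    for j, (w, d) in enumerate(img2):
        by_word.setdefault(w, []).append((j, d))  # index img2 by word to avoid brute force
    pairs = []
    for i, (w, d) in enumerate(img1):
        cands = sorted((hamming(d, d2), j) for j, d2 in by_word.get(w, []))
        if not cands or cands[0][0] > max_dist:
            continue  # no same-word candidate, or the best one is too dissimilar
        if len(cands) > 1 and cands[0][0] > ratio * cands[1][0]:
            continue  # ambiguous: the runner-up is almost as close as the best match
        pairs.append((i, cands[0][1]))
    return pairs

def match_submap_ends(first_images, second_images, **kw):
    """Run the matcher on all N x M image pairs between the current and previous sub-maps."""
    return [((a, b), search_by_bow(f, s, **kw))
            for a, f in enumerate(first_images) for b, s in enumerate(second_images)]
```

Indexing the second image by word is what makes BoW-guided matching cheaper than brute force: each query descriptor is compared only with the few descriptors that fell into the same vocabulary word.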

S30: if the feature matching between the first images and the second images succeeds, solve the first camera pose transformation between the current sub-map and the previous sub-map based on at least one first feature point pair obtained by the feature matching.

In this embodiment of the present invention, based on the at least one first feature point pair obtained in step S20, the camera pose is optimized by the function PoseOptimization(), which yields the pose transformation between the image in the current submap and the matched image in the previous submap; this pose transformation is then taken as the camera pose transformation between the current submap and the previous submap.

S40: fuse the current submap and the previous submap based on the first camera pose transformation.

In this embodiment of the present invention, the camera poses of all images in the current submap are transferred into the previous submap, and the adjusted camera poses are calculated using the following formula (7):

where i is the index of an image in the current submap, w2 is the world coordinate system of the current submap, w1 is the world coordinate system of the matched map, cur denotes the current image, and matched denotes the matched image in the previous submap. The notation with subscripts a and b denotes the SE(3) transformation from coordinate system b to coordinate system a, comprising both a rotation and a translation.

The coordinates of all map points in the current submap are likewise transferred into the previous submap; the coordinate transformation of 3D points between the two maps is expressed by the following formula (8):

where the transformation applied is the SE(3) transformation from the w2 coordinate system to the w1 coordinate system.
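Both formulas can be read as compositions of SE(3) transformations. The Python sketch below shows one consistent reading of the fusion step, under the assumption (not stated in the text) that the w2-to-w1 transformation is obtained by chaining the matched image's pose in w1, the first camera pose transformation from the current image to the matched image, and the inverse of the current image's pose in w2. Poses are stored as (R, t) pairs, with T_ab mapping coordinates from frame b to frame a as defined above; all function names are hypothetical.

```python
def mat_mul(A, B):
    """3x3 matrix product."""
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def mat_vec(A, v):
    """3x3 matrix times a 3-vector."""
    return [sum(A[i][k] * v[k] for k in range(3)) for i in range(3)]

def compose(T_ab, T_bc):
    """T_ac = T_ab * T_bc for SE(3) transforms stored as (R, t)."""
    R_ab, t_ab = T_ab
    R_bc, t_bc = T_bc
    return mat_mul(R_ab, R_bc), [x + y for x, y in zip(mat_vec(R_ab, t_bc), t_ab)]

def inverse(T_ab):
    """T_ba = T_ab^-1 = (R^T, -R^T t)."""
    R, t = T_ab
    Rt = [list(col) for col in zip(*R)]
    return Rt, [-x for x in mat_vec(Rt, t)]

def apply(T_ab, p_b):
    """Map a 3D point from frame b to frame a."""
    R, t = T_ab
    return [x + y for x, y in zip(mat_vec(R, p_b), t)]

def fuse_submap(T_w1_matched, T_matched_cur, T_w2_cur, poses_w2, points_w2):
    """Move camera poses (T_w2_ci) and map points (in w2) into the w1 frame.

    Assumed reading: T_w1_w2 = T_w1_matched * T_matched_cur * T_cur_w2.
    """
    T_w1_w2 = compose(compose(T_w1_matched, T_matched_cur), inverse(T_w2_cur))
    poses_w1 = [compose(T_w1_w2, T_w2_ci) for T_w2_ci in poses_w2]  # cf. formula (7)
    points_w1 = [apply(T_w1_w2, p) for p in points_w2]              # cf. formula (8)
    return T_w1_w2, poses_w1, points_w1
```

Applying the fused transform to the current image's own pose reproduces the pose implied by the matched image, T_w1_matched * T_matched_cur, which is a quick sanity check on the composition order.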

In some other embodiments, to improve map matching accuracy, the embodiments of the present invention may also use long-running submap matching based on the sim(3) transformation, which allows global optimization of the map and improves the accuracy of the system. On the basis of the submap recovery and fusion method shown in Figure 1, the following steps may also be used; the method flowchart is shown in Figure 2:

S50: if feature matching between the first images and the second images fails, perform feature matching between the third image, i.e., the single frame with the latest acquisition time in the current submap, and every frame of fourth image in the previous submap.

In this embodiment of the present invention, submap matching is performed globally between the last frame of the current submap and all frames of the previous submap, which allows the map to be matched accurately and the pose to be recovered after system tracking fails. The matching may likewise combine bag-of-words vectors with descriptors: according to the bag-of-words vectors of the feature points in the third image, possible matching points are looked up in the image dictionary database, and the descriptor similarity to each candidate is then computed to obtain the best matching point.

In a specific implementation, the feature matching in step S50, "performing feature matching between the third image with the latest acquisition time in the current submap and each frame of fourth image in the previous submap", may be carried out as follows:

For each third feature point in the third image, the image dictionary database is queried to determine, among the fourth feature points of the fourth images, the second candidate feature points whose bag-of-words vector is the same as that of the third feature point. From the fourth images that contain second candidate feature points, the several candidate images containing the largest numbers of second candidate feature points are selected. For each third feature point in the third image, the image dictionary database is then queried again to determine, among the fourth feature points of the candidate images, the third candidate feature points whose bag-of-words vector is the same as that of the third feature point. From the third candidate feature points, the second target feature point with the greatest descriptor similarity is selected, and the second target feature point and the third feature point form a second feature point pair.
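The candidate-image screening step can be sketched as follows. This is an illustrative Python sketch, not the ORB-SLAM2 DetectLoopCandidates() implementation: each fourth image is reduced to the list of vocabulary word ids of its feature points, an image's score is the number of its feature points whose word also occurs in the third image (its second candidate feature points), and images scoring at least `keep_ratio` times the best score are kept. The `keep_ratio` default of 0.8 and the function name are assumptions.

```python
from collections import Counter

def select_candidate_images(query_words, images, keep_ratio=0.8):
    """Rank fourth images by how many of their feature points share a word with the third image.

    query_words: word ids of the third image's feature points.
    images: dict mapping image id -> list of word ids, one per feature point.
    Returns the ids of the kept candidate images, best first.
    """
    query = set(query_words)
    counts = Counter()
    for image_id, feature_words in images.items():
        # Second candidate feature points: features whose word also occurs in the query.
        n = sum(1 for w in feature_words if w in query)
        if n:
            counts[image_id] = n
    if not counts:
        return []
    best = max(counts.values())
    # Keep every image whose count is close enough to the best one.
    return [img for img, n in counts.most_common() if n >= keep_ratio * best]
```

A relative threshold rather than a fixed top-k keeps several frames when many views of the same place exist, while still discarding images that share only a few words by chance.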

In this embodiment of the present invention, according to the bag-of-words vectors of the third feature points in the third image, the DetectLoopCandidates() interface of the ORB-SLAM2 system is called to query the image dictionary database and obtain the matching fourth images in the previous submap; these matching fourth images are then filtered with a screening strategy to obtain several well-matched candidate images.

Next, the function SearchByBoW() finds ORB feature matches between each candidate image and the third image. The principle is again to look up possible matching points in the image dictionary database according to the bag-of-words vector of the third feature point, and then compute the descriptor similarity of the candidates to obtain the best matching point. Feature matching against the third image is performed for every candidate image.

S60: if feature matching between the third image and the fourth images succeeds, obtain the second camera pose transformation between the current submap and the previous submap by computing a sim(3) transformation from at least one second feature point pair obtained by the feature matching.

A monocular SLAM system has 7 degrees of freedom: 3 for translation, 3 for rotation, and 1 scale factor. In this embodiment of the present invention, for the at least one second feature point pair obtained in step S50, the function ComputeSim3() is called to calculate the translation and rotation between the third image and the matched image.

In a specific implementation, step S60, "obtaining the second camera pose transformation between the current submap and the previous submap by computing a sim(3) transformation from at least one second feature point pair obtained by the feature matching", may be carried out as follows:

The second feature point pairs are divided into feature point pair groups according to the candidate image containing the second target feature point of each pair. A current feature point pair group to be processed is determined; three second feature point pairs are randomly selected from the current group, and a sim(3) transformation is computed from them to obtain a candidate camera pose transformation between the third image and the candidate image corresponding to the current group. Based on the candidate camera pose transformation, the second feature point pairs in the current group are reprojected to determine which of them are inliers. If the number of inlier second feature point pairs in the current group is greater than or equal to a preset threshold, the candidate camera pose transformation is taken as the second camera pose transformation between the current submap and the previous submap; if it is less than the preset threshold, the process returns to the step of determining the current feature point pair group to be processed, and ends once all feature point pair groups have been traversed.

In this embodiment of the present invention, second feature point pairs whose second target feature points lie in the same candidate image are placed in the same feature point pair group, and processing is carried out group by group.

For the current feature point pair group to be processed, three second feature point pairs are randomly selected, and the camera pose transformation between the two images, i.e., the candidate camera pose transformation, is obtained through the sim(3) transformation. Then all feature points of one image that belong to second feature point pairs are projected into the other image through the candidate camera pose transformation, once from the third image into the fourth image and once from the fourth image into the third image. After both projections, the reprojection error of each second feature point pair between the two images is calculated: if the reprojection error is greater than or equal to the preset error threshold, the corresponding second feature point pair is an outlier; otherwise, it is an inlier.
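The bidirectional reprojection check can be illustrated with the Python sketch below. It assumes a pinhole camera with intrinsics (fx, fy, cx, cy) shared by both images, a candidate sim(3) transformation given as (s, R, t) that maps points from the third image's camera frame to the fourth image's camera frame as x' = sRx + t, and each second feature point pair carrying the 3D point and observed pixel in both images. The squared-pixel error threshold, the rule that a pair is an outlier when the error in either direction reaches it, and all names are assumptions.

```python
def sim3_apply(s, R, t, p):
    """x' = s * R p + t."""
    return [s * sum(R[i][k] * p[k] for k in range(3)) + t[i] for i in range(3)]

def sim3_inverse(s, R, t):
    """Inverse of x' = sRx + t is x = (1/s) R^T x' - (1/s) R^T t."""
    Rt = [list(col) for col in zip(*R)]
    inv_s = 1.0 / s
    t_inv = [-inv_s * sum(Rt[i][k] * t[k] for k in range(3)) for i in range(3)]
    return inv_s, Rt, t_inv

def project(p, K):
    """Pinhole projection; None for points behind the camera."""
    fx, fy, cx, cy = K
    if p[2] <= 0:
        return None
    return (fx * p[0] / p[2] + cx, fy * p[1] / p[2] + cy)

def sq_err(uv_pred, uv_obs):
    if uv_pred is None:
        return float("inf")
    return (uv_pred[0] - uv_obs[0]) ** 2 + (uv_pred[1] - uv_obs[1]) ** 2

def classify_pairs(S34, pairs, K, err_thresh):
    """Split pairs (X3, uv3, X4, uv4) into inliers and outliers for hypothesis S34."""
    S43 = sim3_inverse(*S34)
    inliers, outliers = [], []
    for pair in pairs:
        X3, uv3, X4, uv4 = pair
        e_34 = sq_err(project(sim3_apply(*S34, X3), K), uv4)  # third -> fourth
        e_43 = sq_err(project(sim3_apply(*S43, X4), K), uv3)  # fourth -> third
        if max(e_34, e_43) >= err_thresh:
            outliers.append(pair)
        else:
            inliers.append(pair)
    return inliers, outliers
```

Checking both directions guards against a hypothesis that fits well in one image only because the scale factor shrinks the error there.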

Finally, if the number of inliers is greater than or equal to the preset threshold, the candidate camera pose transformation computed in this round is valid; otherwise, another feature point pair group is selected for processing.

Alternatively, for the current feature point pair group, if the candidate camera pose transformation from one computation is invalid, three second feature point pairs in the group may be reselected; only when the candidate camera pose transformations computed from several such selections are all invalid is another feature point pair group selected for processing.
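The group-by-group hypothesis testing described above, including the optional retries within a group, can be sketched as a RANSAC-style loop. In this Python sketch the minimal three-pair sim(3) solver and the inlier test are passed in as functions and are not implemented (the text calls ComputeSim3() for the former); `iters_per_group`, `min_inliers`, `seed`, and all names are assumptions.

```python
import random

def ransac_over_groups(groups, solve_from_three, find_inliers, min_inliers,
                       iters_per_group=5, seed=0):
    """Try sim(3) hypotheses group by group (one group per candidate image).

    groups: dict candidate_image_id -> list of second feature point pairs.
    solve_from_three(sample) -> model, or None if the sample is degenerate.
    find_inliers(model, pairs) -> list of pairs consistent with the model.
    Returns (image_id, model, inliers) for the first accepted hypothesis, else None.
    """
    rng = random.Random(seed)
    for image_id, pairs in groups.items():
        if len(pairs) < 3:
            continue  # a minimal sample needs three pairs
        for _ in range(iters_per_group):  # optional retries within one group
            model = solve_from_three(rng.sample(pairs, 3))
            if model is None:
                continue
            inliers = find_inliers(model, pairs)
            if len(inliers) >= min_inliers:
                return image_id, model, inliers
    return None  # all groups traversed without an accepted hypothesis
```

To exercise only the control flow, a toy model can stand in for sim(3): pairs (a, b) related by a constant offset, a solver that returns the offset when all three sampled differences agree, and an inlier test that keeps pairs with that difference. A group of inconsistent pairs is then skipped, and the first consistent group with enough pairs is accepted.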

On this basis, to obtain a more accurate translation and rotation, the embodiments of the present invention may further adopt the following steps:

If the number of inlier second feature point pairs in the current feature point pair group is greater than or equal to the preset threshold, the outlier second feature point pairs in the current group are determined from the result of the reprojection operation and deleted. For the current group with its outliers removed, the inlier second feature point pairs in the group are optimized based on the second camera pose transformation, and the second camera pose transformation is adjusted according to the optimization result.

In this embodiment of the present invention, based on the reprojection errors obtained for the second feature point pairs, the second feature point pairs whose reprojection error is greater than or equal to the preset error threshold are treated as outliers and removed. Once an initial translation and rotation have been obtained from the sim(3) transformation, the function SearchBySim3() can also be called to find more matched second feature point pairs; the reprojection errors are then used to formulate a sim(3) optimization problem, which is solved by the function Optimizer::OptimizeSim3() to obtain a more accurate translation and rotation. This yields the pose transformation between the third image of the current submap and the matched fourth image of the previous submap, which is then taken as the camera pose transformation between the current submap and the previous submap; it is a sim(3) transformation matrix.

S70: fuse the current submap and the previous submap based on the second camera pose transformation.

In this embodiment of the present invention, for the process of map fusion based on the second camera pose transformation, refer to the description of map fusion based on the first camera pose transformation in step S40; it is not repeated here.

Finally, in this embodiment of the present invention, the function RunGlobalBundleAdjustment() may also be called once to perform a global optimization of the map.

With the submap recovery and fusion method provided by the embodiments of the present invention, tracking and mapping can continue after system tracking fails, even when the relocalization requirements cannot be met. The method depends only weakly on the scene: as long as initialization succeeds, the map information built before relocalization is preserved.

Based on the submap recovery and fusion method provided in the above embodiments, an embodiment of the present invention further provides an apparatus for executing the method. The structure of the apparatus is shown in Figure 3 and includes:

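a map building module 10, configured to establish a current submap if a system tracking failure is detected, where the camera pose of the earliest image frame of the current submap is calculated by the inertial measurement unit (IMU);

a first feature matching module 20, configured to obtain the previous submap, i.e., the submap whose creation time is closest to that of the current submap, and to perform feature matching between the N first images with the earliest acquisition times in the current submap and the M second images with the latest acquisition times in the previous submap;

a first pose calculation module 30, configured to, if feature matching between the first images and the second images succeeds, solve the first camera pose transformation between the current submap and the previous submap based on at least one first feature point pair obtained by the feature matching;

a first map fusion module 40, configured to fuse the current submap and the previous submap based on the first camera pose transformation.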

Optionally, the first feature matching module 20 is specifically configured to:

for each first feature point in the first images, query the image dictionary database to determine, among the second feature points of the second images, the first candidate feature points whose bag-of-words vector is the same as that of the first feature point; and select, from the first candidate feature points, the first target feature point with the greatest descriptor similarity, the first target feature point and the first feature point forming a first feature point pair.

Optionally, the above apparatus further includes:

a second feature matching module, configured to, if feature matching between the first images and the second images fails, perform feature matching between the third image with the latest acquisition time in the current submap and each frame of fourth image in the previous submap;

a second pose calculation module, configured to, if feature matching between the third image and the fourth images succeeds, obtain the second camera pose transformation between the current submap and the previous submap by computing a sim(3) transformation from at least one second feature point pair obtained by the feature matching;

a second map fusion module, configured to fuse the current submap and the previous submap based on the second camera pose transformation.

Optionally, the second feature matching module is specifically configured to:

for each third feature point in the third image, query the image dictionary database to determine, among the fourth feature points of the fourth images, the second candidate feature points whose bag-of-words vector is the same as that of the third feature point; select, from the fourth images that contain second candidate feature points, the several candidate images containing the largest numbers of second candidate feature points; for each third feature point in the third image, query the image dictionary database again to determine, among the fourth feature points of the candidate images, the third candidate feature points whose bag-of-words vector is the same as that of the third feature point; and select, from the third candidate feature points, the second target feature point with the greatest descriptor similarity, the second target feature point and the third feature point forming a second feature point pair.

Optionally, the second pose calculation module is specifically configured to:

divide the second feature point pairs into feature point pair groups according to the candidate image containing the second target feature point of each pair; determine a current feature point pair group to be processed, randomly select three second feature point pairs from the current group, and compute a sim(3) transformation from them to obtain a candidate camera pose transformation between the third image and the candidate image corresponding to the current group; reproject the second feature point pairs in the current group based on the candidate camera pose transformation to determine which of them are inliers; if the number of inlier second feature point pairs in the current group is greater than or equal to a preset threshold, take the candidate camera pose transformation as the second camera pose transformation between the current submap and the previous submap; and if it is less than the preset threshold, return to the step of determining the current feature point pair group to be processed, ending once all feature point pair groups have been traversed.

Optionally, the second pose calculation module is further configured to:

if the number of inlier second feature point pairs in the current feature point pair group is greater than or equal to the preset threshold, determine the outlier second feature point pairs in the current group from the result of the reprojection operation and delete them; and, for the current group with its outliers removed, optimize the inlier second feature point pairs in the group based on the second camera pose transformation and adjust the second camera pose transformation according to the optimization result.

With the submap recovery and fusion apparatus provided by the embodiments of the present invention, tracking and mapping can continue after system tracking fails, even when the relocalization requirements cannot be met. The approach depends only weakly on the scene: as long as initialization succeeds, the map information built before relocalization is preserved.

The submap recovery and fusion method and apparatus provided by the present invention have been described in detail above. Specific examples are used herein to explain the principles and implementations of the present invention; the descriptions of the above embodiments are only intended to help understand the method of the present invention and its core idea. Meanwhile, a person of ordinary skill in the art may make changes to the specific implementations and the scope of application according to the idea of the present invention. In summary, the content of this specification should not be construed as limiting the present invention.

It should be noted that the embodiments in this specification are described progressively; each embodiment focuses on its differences from the others, and the same or similar parts of the embodiments may be cross-referenced. Since the apparatus disclosed in the embodiments corresponds to the disclosed method, its description is relatively brief; for relevant details, refer to the description of the method.

It should also be noted that, herein, relational terms such as first and second are used only to distinguish one entity or operation from another, and do not necessarily require or imply any actual relationship or order between these entities or operations. Moreover, the terms "comprise", "include", and any variants thereof are intended to cover a non-exclusive inclusion, so that a process, method, article, or device that includes a list of elements includes not only those elements but also other elements not expressly listed, or further includes elements inherent to such a process, method, article, or device. Without further limitation, an element defined by the phrase "including a..." does not exclude the presence of additional identical elements in the process, method, article, or device that includes the element.

The above description of the disclosed embodiments enables a person skilled in the art to implement or use the present invention. Various modifications to these embodiments will be apparent to those skilled in the art, and the general principles defined herein may be implemented in other embodiments without departing from the spirit or scope of the present invention. Therefore, the present invention is not to be limited to the embodiments shown herein, but is to be accorded the widest scope consistent with the principles and novel features disclosed herein.

Claims (10)

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202011457290.4A (CN112509006A) | 2020-12-11 | 2020-12-11 | Sub-map recovery fusion method and device |


Publications (1)

| Publication Number | Publication Date |
|---|---|
| CN112509006A | 2021-03-16 |

Family ID: 74973665

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |
|---|---|---|---|
| CN202011457290.4A (Pending) | Sub-map recovery fusion method and device | 2020-12-11 | 2020-12-11 |

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN112509006A (en) |


Citations (11)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| US20180053056A1 (en)* | 2016-08-22 | 2018-02-22 | Magic Leap, Inc. | Augmented reality display device with deep learning sensors |
| CN108665540A (en)* | 2018-03-16 | 2018-10-16 | 浙江工业大学 | Robot localization based on binocular vision feature and IMU information and map structuring system |
| CN109166149A (en)* | 2018-08-13 | 2019-01-08 | 武汉大学 | A kind of positioning and three-dimensional wire-frame method for reconstructing and system of fusion binocular camera and IMU |
| CN109307508A (en)* | 2018-08-29 | 2019-02-05 | 中国科学院合肥物质科学研究院 | A Panoramic Inertial Navigation SLAM Method Based on Multiple Keyframes |
| CN109465832A (en)* | 2018-12-18 | 2019-03-15 | 哈尔滨工业大学(深圳) | High-precision vision and IMU tight fusion positioning method and system |
| CN109509230A (en)* | 2018-11-13 | 2019-03-22 | 武汉大学 | A kind of SLAM method applied to more camera lens combined type panorama cameras |
| CN110533587A (en)* | 2019-07-03 | 2019-12-03 | 浙江工业大学 | A kind of SLAM method of view-based access control model prior information and map recovery |
| CN110866497A (en)* | 2019-11-14 | 2020-03-06 | 合肥工业大学 | Robot positioning and image building method and device based on dotted line feature fusion |
| CN110967009A (en)* | 2019-11-27 | 2020-04-07 | 云南电网有限责任公司电力科学研究院 | Navigation positioning and map construction method and device for transformer substation inspection robot |
| US20200109954A1 (en)* | 2017-06-30 | 2020-04-09 | SZ DJI Technology Co., Ltd. | Map generation systems and methods |
| CN111583136A (en)* | 2020-04-25 | 2020-08-25 | 华南理工大学 | Method for simultaneously positioning and establishing image of autonomous mobile platform in rescue scene |


Non-Patent Citations (6)

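| Title |
|---|
| CHENG YUAN et al.: "A Novel Fault-Tolerant Navigation and Positioning Method with Stereo-Camera/Micro Electro Mechanical Systems Inertial Measurement Unit (MEMS-IMU) in Hostile Environment", Micromachines, vol. 9, no. 12, 27 November 2018 (2018-11-27), pages 1 - 19* |
| MARY B. ALATISE et al.: "Pose Estimation of a Mobile Robot Based on Fusion of IMU Data and Vision Data Using an Extended Kalman Filter", Sensors, vol. 17, no. 10, 21 September 2017 (2017-09-21), pages 1 - 22* |
| 向良华: "Research and Implementation of a Vision and Inertial Navigation Fusion Positioning Algorithm in Outdoor Environments", China Master's Theses Full-text Database, Information Science and Technology, no. 2019, 15 January 2019 (2019-01-15), pages 140 - 1526* |
| 张玉龙: "Keyframe-Based Visual-Inertial SLAM Algorithm", China Master's Theses Full-text Database, Information Science and Technology, no. 2019, 15 April 2019 (2019-04-15), pages 140 - 293* |
| 许峰: "Research on Multi-Sensor Integrated Navigation Algorithms Based on Monocular Vision", China Master's Theses Full-text Database, Information Science and Technology, no. 2019, 15 February 2019 (2019-02-15), pages 140 - 664* |
| 陈常: "Research on Localization and Mapping Technology for Inspection Robots Based on Vision and Inertial Navigation Fusion", China Master's Theses Full-text Database, Information Science and Technology, no. 2019, 15 September 2019 (2019-09-15), pages 138 - 651* |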

Cited By (2)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN113238557A (en)* | 2021-05-17 | 2021-08-10 | 珠海市一微半导体有限公司 | Mapping abnormity identification and recovery method, chip and mobile robot |
| CN113238557B (en)* | 2021-05-17 | 2024-05-07 | 珠海一微半导体股份有限公司 | Method for identifying and recovering abnormal drawing, computer readable storage medium and mobile robot |

Similar Documents

| Publication | Publication Date | Title |
|---|---|---|
| CN107967457B (en) |  | A method and system for location recognition and relative positioning that adapts to changes in visual features |
| CN110490900B (en) |  | Binocular vision localization method and system in dynamic environment |
| US11313684B2 (en) |  | Collaborative navigation and mapping |
| US20190204084A1 (en) |  | Binocular vision localization method, device and system |
| CN109186606B (en) |  | Robot composition and navigation method based on SLAM and image information |
| Geneva et al. |  | An efficient schmidt-ekf for 3D visual-inertial SLAM |
| CN107735797B (en) |  | Method for determining motion between a first coordinate system and a second coordinate system |
| WO2021035669A1 (en) |  | Pose prediction method, map construction method, movable platform, and storage medium |
| CN111127524A (en) |  | A method, system and device for trajectory tracking and three-dimensional reconstruction |
| CN107193279A (en) |  | Robot localization and map structuring system based on monocular vision and IMU information |
| WO2021043213A1 (en) |  | Calibration method, device, aerial photography device, and storage medium |
| Li et al. |  | Review of vision-based Simultaneous Localization and Mapping |
| CN106940186A (en) |  | A kind of robot autonomous localization and air navigation aid and system |
| CN106595659A (en) |  | Map merging method of unmanned aerial vehicle visual SLAM under city complex environment |
| CN110595466A (en) |  | Lightweight implementation method of inertial-assisted visual odometry based on deep learning |
| WO2020063878A1 (en) |  | Data processing method and apparatus |
| CN112731503B (en) |  | Pose estimation method and system based on front end tight coupling |
| CN112767482B (en) |  | Indoor and outdoor positioning method and system with multi-sensor fusion |
| CN116989772B (en) |  | An air-ground multi-modal multi-agent collaborative positioning and mapping method |
| CN111932616A (en) |  | Binocular vision inertial odometer method for accelerating by utilizing parallel computing |
| CN113570716A (en) |  | Cloud 3D map construction method, system and device |
| CN114485640A (en) |  | Monocular visual-inertial synchronous positioning and mapping method and system based on point and line features |
| CN114596382A (en) |  | A binocular vision SLAM method and system based on panoramic camera |
| WO2024164812A1 (en) |  | Multi-sensor fusion-based slam method and device, and medium |
| CN118225096A (en) |  | Multi-sensor SLAM method based on dynamic feature point elimination and loop detection |

Legal Events

| Date | Code | Title | Description |
|---|---|---|---|
|  | PB01 | Publication |  |
|  | SE01 | Entry into force of request for substantive examination |  |
|  | AD01 | Patent right deemed abandoned | Effective date of abandoning: 20241122 |