CN112199702A - Privacy protection method, storage medium and system based on federal learning - Google Patents


- Publication number

- CN112199702A (application number CN202011109363.0A)

- Authority

- CN

- China

- Prior art keywords

- model

- ciphertext

- global

- parameter

- parameters


- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Granted


Classifications

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F21/00—Security arrangements for protecting computers, components thereof, programs or data against unauthorised activity

- G06F21/60—Protecting data

- G06F21/602—Providing cryptographic facilities or services

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06N—COMPUTING ARRANGEMENTS BASED ON SPECIFIC COMPUTATIONAL MODELS

- G06N3/00—Computing arrangements based on biological models

- G06N3/02—Neural networks

- G06N3/04—Architecture, e.g. interconnection topology

- G06N3/045—Combinations of networks

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06N—COMPUTING ARRANGEMENTS BASED ON SPECIFIC COMPUTATIONAL MODELS

- G06N3/00—Computing arrangements based on biological models

- G06N3/02—Neural networks

- G06N3/08—Learning methods

- G06N3/084—Backpropagation, e.g. using gradient descent

Landscapes

- Engineering & Computer Science (AREA)

- Theoretical Computer Science (AREA)

- Physics & Mathematics (AREA)

- General Health & Medical Sciences (AREA)

- Health & Medical Sciences (AREA)

- Software Systems (AREA)

- General Physics & Mathematics (AREA)

- General Engineering & Computer Science (AREA)

- Biomedical Technology (AREA)

- Mathematical Physics (AREA)

- Data Mining & Analysis (AREA)

- Molecular Biology (AREA)

- Computing Systems (AREA)

- Computational Linguistics (AREA)

- Biophysics (AREA)

- Evolutionary Computation (AREA)

- Artificial Intelligence (AREA)

- Life Sciences & Earth Sciences (AREA)

- Bioethics (AREA)

- Computer Hardware Design (AREA)

- Computer Security & Cryptography (AREA)

- Information Retrieval, Db Structures And Fs Structures Therefor (AREA)

Description

Technical field

The invention relates to the field of data protection, and in particular to a privacy protection method, storage medium and system based on federated learning.

Background

With the widespread application and development of big-data mining and deep learning, privacy leaks and data-abuse incidents have become increasingly frequent, and attention to data privacy and security has become a worldwide trend. In distributed machine learning in particular, participants are reluctant to contribute their local training data because of privacy concerns, which creates "data silos". To address data privacy protection, overcome the practical difficulty of data silos, and meet the pressing need for joint use of data, the concept of federated learning and industrial-grade solutions for it have been proposed. Federated learning is essentially a distributed machine learning framework in which participants do not exchange raw data: each participant trains the model locally and uploads updated model parameters or gradients, so that multiple participants can jointly build a machine learning model while their privacy is protected.

Because federated learning does not require participants to upload local training data, it protects privacy to a certain extent. However, current research shows that attackers may still exploit the real gradients or updated model parameters uploaded by each participant to reconstruct the original training data or to mount membership-inference and attribute-inference attacks. Almost all current research on privacy protection in federated learning focuses on preventing the central server from extracting participants' private information from model updates, and does not consider malicious participants. A malicious participant, or a participant compromised by an attacker, still receives the real global model updates; it can therefore combine these real parameters with its own local training data to infer other training data or the training datasets of other participants. As Kairouz et al. point out, preventing malicious participants from obtaining the real model updates during iteration, and the final model parameters, is another problem that federated learning must address. In essence, the solution is to have participants train locally on an encrypted or perturbed global model. Three mainstream privacy-preserving techniques, namely differential privacy, homomorphic encryption and secure multi-party computation, are widely used in privacy-preserving machine learning. However, each of them sacrifices either model accuracy or training efficiency, so privacy-preserving model training remains a major challenge.

The existing technology therefore still needs to be improved and developed.

Summary of the invention

In view of the shortcomings of the prior art, the technical problem to be solved by the present invention is to provide a privacy protection method, storage medium and system based on federated learning, so as to solve the problem that private data are not effectively protected by existing approaches.

To solve the above technical problem, the present invention adopts the following technical solution:

A privacy protection method based on federated learning, comprising the steps of:

encrypting a global model with a parameter encryption algorithm to obtain a ciphertext model;

training on the ciphertext model with local data to obtain ciphertext gradient information and noise terms;

decrypting the ciphertext gradient information and the noise terms to obtain parameter gradients, updating the global model with the parameter gradients, and repeating the above steps until the model converges or a specified number of iterations is reached, to obtain model parameters;

encrypting the model parameters to obtain encrypted model parameters, and updating the global model with the encrypted model parameters to obtain an encrypted global model;

performing local training on the encrypted global model, thereby achieving privacy protection.

In the federated-learning-based privacy protection method, the step of encrypting the global model with a parameter encryption algorithm to obtain the ciphertext model comprises:

when the global model is an $L$-layer multilayer perceptron (MLP), encrypting the plaintext model parameters $W^{(l)}$ of the MLP with the random matrices $R^{(l)}$ and $R_a$ to obtain the ciphertext model parameters $\hat{W}^{(l)}$ [formula omitted], where $\circ$ denotes the Hadamard (element-wise) product;

the random matrix $R^{(l)}$ is constructed from the multiplicative noise vector $r^{(l)}$ according to the following rule [formula omitted],

where the indices satisfy $i \in [1, n_l]$ and $j \in [1, n_{l-1}]$;

the random matrix $R_a$ is composed of the random number $\gamma$ and the additive noise vector $r_a$ [formula omitted], where the indices satisfy $i \in [1, n_L]$ and $j \in [1, n_{L-1}]$;

replacing the plaintext model parameters of the MLP with the ciphertext model parameters to obtain the ciphertext model.
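Because the formulas for $R^{(l)}$ and $R_a$ are not reproduced above, the NumPy sketch below assumes one construction consistent with the surviving text (positive multiplicative noise vectors, a Hadamard-product mask, and an additive term built from $\gamma$ and $r_a$): $R^{(l)}_{ij} = r^{(l)}_i / r^{(l-1)}_j$ for hidden layers with $r^{(0)} = \mathbf{1}$, $R^{(L)}_{ij} = 1/r^{(L-1)}_j$, and $(R_a)_{ij} = \gamma\, r_{a,i}$. It also assumes bias-free layers and a linear output layer. Under these assumptions, the positive homogeneity of ReLU makes every masked hidden activation a rescaled copy of the true one, and the ciphertext output becomes $\hat{y} = y + \alpha r$ with $r = \gamma r_a$ and $\alpha$ the sum of the masked last hidden activation. The function names are illustrative, not taken from the patent.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def forward(weights, x):
    """Bias-free MLP: ReLU on hidden layers, linear output layer (assumed)."""
    h = x
    for W in weights[:-1]:
        h = relu(W @ h)
    return weights[-1] @ h, h  # (output, last hidden activation)


def encrypt_mlp(weights, gamma, r_a, rng):
    """Hypothetical mask: W_hat = R ∘ W for hidden layers, R ∘ W + R_a for the output layer."""
    # r^(0) = 1 leaves the input unmasked; hidden-layer noise must be positive for ReLU.
    rs = [np.ones(weights[0].shape[1])]
    for W in weights[:-1]:
        rs.append(rng.uniform(0.5, 2.0, size=W.shape[0]))
    # R^(l)_ij = r^(l)_i / r^(l-1)_j for every hidden layer
    enc = [np.outer(rs[l + 1], 1.0 / rs[l]) * W for l, W in enumerate(weights[:-1])]
    W_L = weights[-1]
    R_L = np.broadcast_to(1.0 / rs[-1], W_L.shape)        # R^(L)_ij = 1 / r^(L-1)_j
    R_a = gamma * np.outer(r_a, np.ones(W_L.shape[1]))    # (R_a)_ij = gamma * r_a,i
    enc.append(R_L * W_L + R_a)
    return enc, rs


rng = np.random.default_rng(0)
sizes = [4, 8, 6, 3]                       # n_0 (input), n_1, n_2, n_L
weights = [rng.normal(size=(sizes[i + 1], sizes[i])) for i in range(len(sizes) - 1)]
gamma, r_a = 3.7, rng.uniform(0.5, 2.0, size=sizes[-1])
enc, rs = encrypt_mlp(weights, gamma, r_a, rng)

x = rng.normal(size=sizes[0])
y, h = forward(weights, x)                 # plaintext model (server side)
y_hat, h_hat = forward(enc, x)             # ciphertext model (client side)
alpha = h_hat.sum()                        # pseudo-output statistic
```

Since the server keeps $\gamma$ and the vectors $r^{(l)}$ secret, it can undo the masks exactly, which is the property the description relies on to preserve accuracy.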

In the method, the step of encrypting the global model with a parameter encryption algorithm to obtain the ciphertext model comprises:

when the global model is an $L$-layer convolutional neural network (CNN), encrypting the plaintext model parameters of the CNN with random tensors $R^{(l)}$ and random matrices $R^{(L)}$ and $R_a$ to obtain the corresponding ciphertext model parameters [formula omitted]; for $1 \le l \le L-1$, the parameter $W^{(l)}$ is a convolution-kernel tensor, and the random tensor $R^{(l)}$ is composed of multiplicative noise vectors and satisfies [formula omitted],

where $r^{(l,\mathrm{in})} = (r^{(m)})_{m \in P(l)}$ is the concatenation of the vectors $r^{(m)}$ for all $m \in P(l)$, and $P(l)$ denotes the set of indices of all network layers connected to the $l$-th convolutional layer;

the random matrix $R^{(L)}$ is constructed from the multiplicative noise vector $r^{(L-1)}$ and satisfies [formula omitted];

the random matrix $R_a$ is composed of the additive noise vector $r_a$ and the random number $\gamma$ and satisfies [formula omitted];

replacing the plaintext model parameters of the CNN with the ciphertext model parameters to obtain the ciphertext model.
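A similar sketch for the convolutional case, again under assumptions the text does not confirm: bias-free "valid" convolutions with stride 1, a plain chain of layers (so $P(l) = \{l-1\}$ and $r^{(l,\mathrm{in})} = r^{(l-1)}$), a kernel mask whose $(o, c)$ slice is scaled by $r^{(l)}_o / r^{(l-1)}_c$, and a fully connected output layer whose mask repeats $1/r^{(L-1)}$ over every flattened position of a channel. It checks that masked convolution-plus-ReLU outputs are channel-wise rescaled true outputs and that the final output again equals $y + \alpha r$ with $r = \gamma r_a$. `conv2d` and `mask_kernel` are hypothetical helpers; `sliding_window_view` needs NumPy 1.20 or later.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def relu(z):
    return np.maximum(z, 0.0)


def conv2d(x, kernel):
    """Valid, stride-1 cross-correlation. x: (c_in, H, W); kernel: (c_out, c_in, k, k)."""
    k = kernel.shape[-1]
    patches = sliding_window_view(x, (k, k), axis=(1, 2))  # (c_in, H-k+1, W-k+1, k, k)
    return np.einsum("chwij,ocij->ohw", patches, kernel)


def mask_kernel(kernel, r_out, r_in):
    """Hypothetical R^(l): slice (o, c, :, :) scaled by r_out[o] / r_in[c]."""
    return kernel * (r_out[:, None, None, None] / r_in[None, :, None, None])


rng = np.random.default_rng(1)
x = rng.normal(size=(2, 6, 6))                           # input with 2 channels
K1, K2 = rng.normal(size=(3, 2, 3, 3)), rng.normal(size=(4, 3, 3, 3))
r0, r1, r2 = np.ones(2), rng.uniform(0.5, 2.0, 3), rng.uniform(0.5, 2.0, 4)

h1 = relu(conv2d(x, K1))
h2 = relu(conv2d(h1, K2))                                 # true features, shape (4, 2, 2)
h1_hat = relu(conv2d(x, mask_kernel(K1, r1, r0)))
h2_hat = relu(conv2d(h1_hat, mask_kernel(K2, r2, r1)))    # masked features

# Output layer on Flatten(h2): n_{L-1} = c * h * w = 4 * 2 * 2 = 16
W_fc = rng.normal(size=(3, h2.size))
gamma, r_a = 2.0, rng.uniform(0.5, 2.0, 3)
r_flat = np.repeat(r2, h2[0].size)                        # channel mask per flattened entry
W_fc_hat = W_fc / r_flat[None, :] + gamma * r_a[:, None]  # R^(L) ∘ W + R_a (assumed)

y = W_fc @ h2.ravel()
y_hat = W_fc_hat @ h2_hat.ravel()
alpha = h2_hat.sum()                                      # pseudo-output statistic
```

`np.repeat` matches the row-major order of `ravel()`, which is why each channel's mask lines up with its flattened block.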

In the method, the step of training on the ciphertext model with local data to obtain ciphertext gradient information and noise terms comprises:

computing the output of the ciphertext model [formula omitted]; the output of the ciphertext model and the output of the corresponding plaintext model satisfy the relation [formula omitted],

where $r = \gamma r_a$;

for a sample of arbitrary dimension, using the mean squared error between the prediction of the ciphertext model and the true value as the loss function [formula omitted],

where $n_L$ denotes the dimension of the model output layer, which is also the dimension of the sample label;

the noisy gradient of the loss function with respect to the ciphertext parameters and the corresponding true gradient satisfy the relation [formula omitted], with the auxiliary terms defined in the omitted formulas;

the $k$-th participant computes the ciphertext gradient information on all samples of its mini-batches and, using the additive noise vector $r_a$, computes the noise terms.
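The gradient relation is not reproduced either. As a hedged illustration for the output layer only, assume it is masked as $\hat{W}^{(L)} = W^{(L)} \circ R^{(L)} + R_a$ with $R^{(L)}_{ij} = 1/r^{(L-1)}_j$ and $(R_a)_{ij} = \gamma\, r_{a,i}$, that the client sees the masked activation $\hat{h} = r^{(L-1)} \circ h$, and that the loss is $\frac{1}{n_L}\lVert\hat{y} - t\rVert^2$ (label $t$) summed over the mini-batch. The client's gradient then differs from the true one by $\gamma$ times a term the client can compute from $r_a$, $\alpha$ and $\hat{h}$ without knowing $\gamma$; the server subtracts it and rescales column $j$ by $1/r^{(L-1)}_j$. This is one self-consistent construction, not the patent's formula, and hidden layers are not covered.

```python
import numpy as np

rng = np.random.default_rng(2)
batch, n_hidden, n_out = 6, 5, 3

# Server-side secrets and true parameters
W = rng.normal(size=(n_out, n_hidden))          # true output-layer weights W^(L)
r_prev = rng.uniform(0.5, 2.0, size=n_hidden)   # r^(L-1), kept by the server
gamma = 2.5                                      # kept by the server
r_a = rng.uniform(0.5, 2.0, size=n_out)          # sent to the client (assumed)
W_hat = W / r_prev[None, :] + gamma * r_a[:, None]

# Client side: masked activations of its mini-batch and its labels.
# H is drawn directly instead of running a forward pass, to keep the sketch short.
H = np.abs(rng.normal(size=(batch, n_hidden)))   # true last hidden activations (ReLU >= 0)
H_hat = H * r_prev                               # what the client actually computes
T = rng.normal(size=(batch, n_out))              # labels

Y_hat = H_hat @ W_hat.T
alpha = H_hat.sum(axis=1)                                 # pseudo-output statistic per sample
grad_hat = (2.0 / n_out) * (Y_hat - T).T @ H_hat          # dL_hat / dW_hat
noise = (2.0 / n_out) * np.outer(r_a, alpha @ H_hat)      # needs r_a but not gamma

# Server: remove the additive-noise contribution, then undo the column masks
grad = (grad_hat - gamma * noise) / r_prev[None, :]

true_grad = (2.0 / n_out) * (H @ W.T - T).T @ H           # gradient of the true loss
```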

In the method, the step of training on the ciphertext model with local data to obtain ciphertext gradient information and noise terms comprises:

the output of each convolutional layer of the ciphertext model and the corresponding true convolutional-layer output satisfy [formula omitted]; the output of the fully connected layer of the ciphertext model and the corresponding true output satisfy [formula omitted], where the quantity defined there is a pseudo-output statistic, the function $\mathrm{Flatten}(\cdot)$ flattens a multidimensional tensor into a one-dimensional vector of dimension $n_{L-1} = c_{L-1} h_{L-1} w_{L-1}$, and $r = \gamma r_a$ is the combined noise vector;

for a sample of arbitrary dimension, using the mean squared error between the prediction of the ciphertext model and the true value as the loss function [formula omitted],

where $n_L$ denotes the dimension of the model output layer, which is also the dimension of the sample label;

the noisy gradient of the loss function with respect to the ciphertext parameters and the corresponding true gradient satisfy the relation [formula omitted], with the auxiliary terms defined in the omitted formulas; the $k$-th participant computes the ciphertext gradient information on all samples of its mini-batches and, using the additive noise vector $r_a$, computes the noise terms.

In the method, the step of decrypting the ciphertext gradient information and the noise terms to obtain parameter gradients, and updating the global model with the parameter gradients until the model converges or a specified number of iterations is reached to obtain the model parameters, comprises:

for the global model $W_t$ of round $t$, recovering the parameter gradient $\nabla F_k(W_t)$ obtained during the local training of the $k$-th participant according to the following formula [formula omitted], where $F_k(\cdot)$ denotes the loss function of the $k$-th participant;

updating the global model with the parameter gradients until the model converges or the specified number of iterations is reached, the model parameters of round $t+1$ being

$W_{t+1} = W_t - \eta \sum_k \frac{N_k}{N} \nabla F_k(W_t)$,

where $\eta$ denotes the learning rate and $N_k/N$ is the fraction of the total data held by the $k$-th participant.
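The update rule is a data-size-weighted gradient step. A minimal NumPy sketch, assuming the server has already decrypted each participant's gradient $\nabla F_k(W_t)$ and the update takes the weighted form $W_{t+1} = W_t - \eta \sum_k (N_k/N) \nabla F_k(W_t)$; the function name `fedavg_step` is illustrative.

```python
import numpy as np


def fedavg_step(W_t, grads, sizes, lr):
    """W_{t+1} = W_t - lr * sum_k (N_k / N) * grad_k, with N = sum_k N_k."""
    N = sum(sizes)
    weighted = sum((n_k / N) * g for g, n_k in zip(grads, sizes))
    return W_t - lr * weighted


W_t = np.array([1.0, 2.0])
grads = [np.array([1.0, 0.0]), np.array([0.0, 1.0])]   # decrypted gradients of two clients
W_next = fedavg_step(W_t, grads, sizes=[3, 1], lr=0.5)
print(W_next)  # [0.625 1.875]
```

The client holding three times as much data contributes three quarters of the step, which is the role of the $N_k/N$ factor.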

In the method, the step of encrypting the model parameters to obtain encrypted model parameters, and updating the global model with them to obtain the encrypted global model, comprises:

encrypting the model parameters according to the formula [formula omitted] to obtain the encrypted model parameters;

updating the global model with the encrypted model parameters to obtain the encrypted global model.
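The final encryption formula is not reproduced, so the text does not show how participants obtain real predictions from the encrypted global model. One construction under which that claim would hold, offered purely as an assumption: reuse positive multiplicative masks but omit the additive term $R_a$. For a bias-free ReLU MLP the masks then cancel layer by layer, so the distributed weights differ from the true ones while the predictions are unchanged. Whether this matches the patent's formula cannot be determined from the text; `mask_final_model` is a hypothetical name.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def predict(weights, x):
    """Bias-free MLP: ReLU on hidden layers, linear output layer (assumed)."""
    h = x
    for W in weights[:-1]:
        h = relu(W @ h)
    return weights[-1] @ h


def mask_final_model(weights, rng):
    """Hypothetical final encryption: multiplicative masks only (no additive R_a)."""
    r_prev = np.ones(weights[0].shape[1])           # input stays unmasked
    out = []
    for W in weights[:-1]:
        r = rng.uniform(0.5, 2.0, size=W.shape[0])  # positive, so ReLU commutes with it
        out.append(np.outer(r, 1.0 / r_prev) * W)
        r_prev = r
    out.append(weights[-1] / r_prev[None, :])       # last layer cancels the hidden mask
    return out


rng = np.random.default_rng(4)
sizes = [5, 7, 4, 2]
weights = [rng.normal(size=(sizes[i + 1], sizes[i])) for i in range(len(sizes) - 1)]
final = mask_final_model(weights, rng)
X = rng.normal(size=(10, sizes[0]))
plain = np.array([predict(weights, x) for x in X])
masked = np.array([predict(final, x) for x in X])
```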

A computer-readable storage medium storing one or more programs, the one or more programs being executable by one or more processors to implement the steps of the federated-learning-based privacy protection method of the present invention.

A privacy protection system based on federated learning, comprising a server and a client, wherein the server is configured to encrypt a global model with a parameter encryption algorithm to obtain a ciphertext model;

the client is configured to train on the ciphertext model with local data to obtain ciphertext gradient information and noise terms;

the server is further configured to decrypt the ciphertext gradient information and the noise terms to obtain parameter gradients, update the global model with the parameter gradients, and repeat the above steps until the model converges or a specified number of iterations is reached, to obtain model parameters; and to encrypt the model parameters to obtain encrypted model parameters and update the global model with them to obtain an encrypted global model;

the client is further configured to perform local training on the encrypted global model, thereby achieving privacy protection.

Beneficial effects: compared with the prior art, the federated-learning-based privacy protection method of the present invention encrypts the global model of federated learning with a privacy protection algorithm to obtain an encrypted global model, and lets participants perform local training on that encrypted global model. The method effectively prevents semi-trusted federated learning participants from obtaining the real parameters of the global model and the outputs of the intermediate model, while ensuring that all participants can use the final trained encrypted model to obtain real prediction results.

Description of drawings

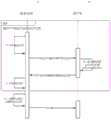

FIG. 1 is a flowchart of a preferred embodiment of the federated-learning-based privacy protection method of the present invention.

FIG. 2 is a schematic diagram of the federated-learning-based privacy protection system of the present invention.

Detailed description of embodiments

The present invention provides a privacy protection method, storage medium and system based on federated learning. To make the objectives, technical solutions and effects of the invention clearer, the invention is described in further detail below with reference to the drawings and embodiments. The specific embodiments described here only explain the invention and do not limit it.

Those skilled in the art will understand that, unless expressly stated otherwise, the singular forms "a", "an", "said" and "the" used herein may also include the plural forms. It should be further understood that the word "comprising" used in this specification indicates the presence of the stated features, integers, steps, operations, elements and/or components, but does not exclude the presence or addition of one or more other features, integers, steps, operations, elements, components and/or groups thereof. When an element is said to be "connected" or "coupled" to another element, it may be directly connected or coupled to the other element, or intervening elements may be present. In addition, "connected" or "coupled" as used herein may include a wireless connection or wireless coupling. The term "and/or" as used herein includes all or any of, and all combinations of, one or more of the associated listed items.

Those skilled in the art will understand that, unless otherwise defined, all terms used herein (including technical and scientific terms) have the same meaning as commonly understood by a person of ordinary skill in the art to which the present invention belongs. It should also be understood that terms such as those defined in general dictionaries should be interpreted as having meanings consistent with their meanings in the context of the prior art and, unless specifically defined as herein, are not to be interpreted in an idealized or overly formal sense.

The invention is further described below through embodiments, with reference to the drawings.

Federated learning is an emerging foundational artificial intelligence technology, first proposed by Google in 2016 and originally intended to let Android phone users update models locally. Its design goal is to carry out efficient machine learning among multiple parties or computing nodes while safeguarding information security during big-data exchange, protecting the privacy of terminal and personal data, and ensuring legal compliance. The machine learning algorithms usable in federated learning are not limited to neural networks and include other important algorithms such as random forests. The system architecture of federated learning is introduced below using a scenario with two data owners (enterprises A and B); the architecture extends to scenarios with more data owners. Suppose A and B want to jointly train a machine learning model, and their business systems each hold data about their own users. In addition, B holds the label data that the model must predict. For data privacy and security reasons, A and B cannot exchange data directly, but they can build the model with a federated learning system. The federated learning system architecture consists of three parts:

Part 1: encrypted sample alignment. Because the user groups of the two enterprises do not fully overlap, the system uses encryption-based sample alignment to identify the users the two parties share, without A or B disclosing their data and without exposing the users that do not overlap, so that the features of these shared users can be modeled jointly.

Part 2: encrypted model training. Once the shared user group is identified, its data can be used to train the machine learning model. To keep the data confidential during training, a third-party collaborator C performs encrypted training. Taking a linear regression model as an example, training proceeds in four steps:

Step 1: collaborator C distributes a public key to A and B, which use it to encrypt the data exchanged during training.

Step 2: A and B exchange, in encrypted form, the intermediate results needed to compute the gradients.

Step 3: A and B each compute on the encrypted gradient values, while B also computes the loss from its label data; both send their results to C, which aggregates them into the total gradient and decrypts it.

Step 4: C returns the decrypted gradients to A and B, and each updates the parameters of its own model.

These steps are iterated until the loss function converges, which completes training. Throughout sample alignment and model training, the data of A and B stay local, and the interactions during training do not leak data privacy, so the two parties can train a model cooperatively with the help of federated learning.

Part 3: incentives. A major feature of federated learning is that it answers why different institutions should join the federation for joint modeling: once the model is built, its effect shows in practical applications and is recorded in a permanent data-recording mechanism (such as a blockchain). The institutions receive feedback on these effects through the federation mechanism, which motivates more institutions to join the data federation. Together, the three parts address both the privacy protection and the effectiveness of joint modeling across institutions, and use a consensus mechanism to reward institutions that contribute more data.
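The four-step protocol of Part 2 can be simulated with an additively homomorphic scheme. This sketch illustrates the background scheme, not the invention. It uses textbook Paillier encryption with tiny hard-coded primes (insecure, illustration only), fixed-point encoding of reals, and vertically partitioned linear regression with loss $\frac{1}{2m}\sum_i d_i^2$: A holds features `X_A`, B holds features `X_B` and the labels. Simplifications are assumptions: the random masks real protocols add before sending values to C are omitted (so C sees raw gradients), B's loss computation is omitted, and all names are hypothetical. Requires Python 3.9+ for `math.lcm`.

```python
import math
import random

import numpy as np

SCALE = 1000  # fixed-point scale for encoding reals as integers (assumed)


def keygen(p=999_983, q=1_000_003):
    """Textbook Paillier with g = n + 1; tiny primes, NOT secure."""
    n = p * q
    lam = math.lcm(p - 1, q - 1)
    return n, (lam, pow(lam, -1, n))  # public key n, private key (lambda, mu)


def encrypt(n, m, rng):
    r = rng.randrange(1, n)
    while math.gcd(r, n) != 1:
        r = rng.randrange(1, n)
    return (1 + (m % n) * n) * pow(r, n, n * n) % (n * n)


def add(n, c1, c2):  # Enc(a) * Enc(b) = Enc(a + b)
    return c1 * c2 % (n * n)


def mul(n, c, k):  # Enc(a) ** k = Enc(k * a)
    return pow(c, k % n, n * n)


def decrypt(n, sk, c):
    lam, mu = sk
    m = (pow(c, lam, n * n) - 1) // n * mu % n
    return m - n if m > n // 2 else m  # map back to a signed integer


def encode(v):
    return int(round(float(v) * SCALE))


np_rng, rng = np.random.default_rng(0), random.Random(0)
m = 8
X_A = np_rng.normal(size=(m, 2))                # party A's features
X_B = np_rng.normal(size=(m, 3))                # party B's features
y = X_A @ [1.0, -2.0] + X_B @ [0.5, 0.0, 1.5]   # labels, held by B
n, sk = keygen()                                # step 1: C sends public key n to A and B


def encrypted_gradients(theta_A, theta_B):
    # step 2: A sends [[u_A]]; B adds its own part to get encrypted residuals [[d]]
    enc_uA = [encrypt(n, encode(u), rng) for u in X_A @ theta_A]
    enc_d = [add(n, c, encrypt(n, encode(v), rng))
             for c, v in zip(enc_uA, X_B @ theta_B - y)]

    # step 3: each party computes [[sum_i d_i * x_ij]] without decrypting
    def grad_sum(X):
        out = []
        for j in range(X.shape[1]):
            acc = encrypt(n, 0, rng)
            for c, x in zip(enc_d, X[:, j]):
                acc = add(n, acc, mul(n, c, encode(x)))
            out.append(acc)
        return out

    # C decrypts; step 4 returns the plaintext gradients to A and B
    def dec(cs):
        return np.array([decrypt(n, sk, c) for c in cs]) / SCALE**2 / m

    return dec(grad_sum(X_A)), dec(grad_sum(X_B))


theta_A, theta_B = np.zeros(2), np.zeros(3)
g_A0, g_B0 = encrypted_gradients(theta_A, theta_B)
for _ in range(50):
    g_A, g_B = encrypted_gradients(theta_A, theta_B)
    theta_A, theta_B = theta_A - 0.1 * g_A, theta_B - 0.1 * g_B
```

Each party only ever handles ciphertexts of the other's intermediate values; the fixed-point rounding introduces an error on the order of `1 / SCALE`.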

Federated learning does not require participants to upload local training data, so it improves privacy protection to a certain extent. However, current research shows that attackers may still exploit the real gradients or updated model parameters uploaded by each participant to reconstruct the original training data or to mount membership-inference and attribute-inference attacks. Almost all current research on privacy protection in federated learning focuses on preventing the central server from extracting participants' private information from model updates, and does not consider malicious participants. A malicious participant, or a participant compromised by an attacker, still receives the real global model updates and can combine these real parameters with its own local training data to infer other training data or the training datasets of other participants. Preventing malicious participants from obtaining the real model updates during iteration, and the final model parameters, is therefore an urgent problem in federated learning.

To solve the problems of the prior art, the present invention provides a privacy protection method based on federated learning which, as shown in FIG. 1, comprises the steps of:

S10: encrypting the global model with a parameter encryption algorithm to obtain a ciphertext model;

S20: training on the ciphertext model with local data to obtain ciphertext gradient information and noise terms;

S30: decrypting the ciphertext gradient information and the noise terms to obtain parameter gradients, and updating the global model with the parameter gradients;

S40: repeating steps S10 to S30 until the model converges or a specified number of iterations is reached, to obtain the model parameters;

S50: encrypting the model parameters to obtain encrypted model parameters, and updating the global model with the encrypted model parameters to obtain an encrypted global model;

S60: performing local training on the encrypted global model, thereby achieving privacy protection.
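Steps S10 to S40 form one training loop. The sketch below runs that loop end to end in the simplest case an assumed construction allows: a single linear layer ($L = 1$), where the input is not masked, so only an additive mask $\hat{W} = W + \gamma\, r_a \mathbf{1}^{\top}$ applies and the pseudo-output statistic of a sample is the sum of its features. Fresh $\gamma$ and $r_a$ are drawn every round, each client returns a ciphertext gradient plus a noise term computed with $r_a$, and the server subtracts $\gamma$ times that term before a data-size-weighted update. The mask form, the synthetic data and all names are assumptions for illustration; S50 and S60 are not shown.

```python
import numpy as np

rng = np.random.default_rng(3)
n_in, n_out = 4, 2
W_true = rng.normal(size=(n_out, n_in))

# Hypothetical clients: (features, labels) with different data sizes N_k
clients = []
for N_k in (30, 50, 20):
    X = rng.normal(size=(N_k, n_in))
    clients.append((X, X @ W_true.T))


def client_update(W_hat, r_a, X, Y):
    """S20: MSE gradient on the ciphertext model plus the r_a noise term."""
    Y_hat = X @ W_hat.T                      # ciphertext outputs, shape (N_k, n_out)
    alpha = X.sum(axis=1)                    # pseudo-output statistic per sample
    scale = 2.0 / (n_out * len(X))           # loss = mean over samples of ||.||^2 / n_out
    grad_hat = scale * (Y_hat - Y).T @ X
    noise = scale * np.outer(r_a, alpha @ X)
    return grad_hat, noise


W = np.zeros((n_out, n_in))
N = sum(len(X) for X, _ in clients)
lr = 0.1
for t in range(500):
    gamma = rng.uniform(1.0, 5.0)                       # S10: fresh server secret per round
    r_a = rng.uniform(0.5, 2.0, size=n_out)
    W_hat = W + gamma * np.outer(r_a, np.ones(n_in))    # additive mask only when L = 1
    update = np.zeros_like(W)
    for X, Y in clients:
        grad_hat, noise = client_update(W_hat, r_a, X, Y)
        grad = grad_hat - gamma * noise                  # S30: server removes the noise
        update += (len(X) / N) * grad
    W = W - lr * update                                  # S40: repeat until convergence
```

Because the noise is removed exactly each round, the loop converges to the same weights as plain federated gradient descent on the unmasked model.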

Specifically, differential privacy preserves model efficiency, but the random noise it introduces cannot be removed, which can significantly degrade model accuracy. There is also a trade-off between accuracy and privacy: the higher the privacy level, the larger the random noise that must be added, and the less accurate the model. To address this, the present embodiment draws on the idea of differential privacy and proposes an efficient privacy protection method. In each iteration of training, before distributing the global model parameters, the server selects additive and multiplicative random numbers satisfying certain conditions as a private key and, following specific design requirements, multiplies the global model by the private key or adds the private key to it, producing the encrypted global model that is distributed to each participant. Participants then train on the encrypted global model with their own local data. The method lets the server remove the effect of the random numbers exactly and recover the true global model, so model accuracy is preserved.

This embodiment encrypts the global model with an efficient privacy protection method, so that all participants in federated learning can train only on the encrypted global model and cannot obtain the real model parameters, which keeps the global model private. In other words, the method effectively prevents semi-trusted participants from obtaining the real parameters of the global model and the outputs of the intermediate model, while ensuring that all participants can use the final trained encrypted model to obtain real prediction results.

在一些具体的实施方式中,本发明提供的隐私保护方法可适用于在医院(医疗图像数据)、银行(信用卡交易记录)等数据隐私较为敏感的场景,联合各机构在不泄露数据隐私的前提下共同训练出全局模型,以达到预期的目的。以银行信用卡欺诈检测场景为例,各银行希望在不泄露数据隐私的同时训练全局模型,以获得针对单条信用卡交易信息,检测出其是否为欺诈交易的能力。各银行机构收到密文模型后,可利用本地信用卡交易记录数据以及人工标注的标签(交易是否为欺诈)在所述密文模型上进行训练,得到密文梯度信息和噪声项并发送给服务器端;服务器端对所述密文梯度信息和噪声项进行解密,得到参数梯度,采用所述参数梯度对所述全局模型进行更新;循环进行执行上述步骤,直至模型收敛或达到指定迭代次数,获得模型参数;对所述模型参数进行加密,得到加密模型参数,采用所述加密模型参数对所述全局模型进行更新,得到全局加密模型;服务器端将所述全局加密模型再分发至客户端(各银行机构),所述客户端可在所述加密全局模型上进行本地训练,实现隐私保护。In some specific embodiments, the privacy protection method provided by the present invention can be applied to scenarios where data privacy is more sensitive in hospitals (medical image data), banks (credit card transaction records), etc. The global model is jointly trained to achieve the expected purpose. Taking the scenario of bank credit card fraud detection as an example, each bank hopes to train a global model without revealing data privacy, so as to obtain the ability to detect whether a single credit card transaction information is a fraudulent transaction. After each banking institution receives the ciphertext model, it can use the local credit card transaction record data and manually marked labels (whether the transaction is fraudulent) to train on the ciphertext model, obtain the ciphertext gradient information and noise terms and send them to the server. The server side decrypts the ciphertext gradient information and the noise term, obtains the parameter gradient, and uses the parameter gradient to update the global model; performs the above steps in a loop until the model converges or reaches the specified number of iterations, and obtains model parameters; encrypt the model parameters to obtain encrypted model parameters, and use the encrypted model parameters to update the global model to obtain a global encrypted model; the server redistributes the global encrypted model to the client (each Banking institutions), the client can perform local training on the encrypted global model to achieve privacy protection.

In some embodiments, the privacy protection method of this embodiment applies to two types of deep learning models, multilayer perceptrons (MLP) and convolutional neural networks (CNN), and supports the ReLU activation function and the mean squared error (MSE) loss function. The method can also be applied to CNN variants with skip connections, such as the widely used ResNet and DenseNet.

Corresponding to the training process of horizontal federated learning, the present invention comprises four stages: "global model encryption", "local model training", "global model update" and "final model distribution". The first three stages are executed in order, in a loop, until the model converges or a specified number of rounds is reached; the fourth stage then concludes the process.
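The loop structure of the four stages can be sketched as a small driver. This is a hypothetical illustration only: the four callables stand in for the server and client operations described in this section, and their names and signatures are assumptions rather than anything defined by the source.

```python
from typing import Callable, List, Tuple


def run_training(encrypt_global: Callable[[], object],
                 local_train: Callable[[object], object],
                 update_global: Callable[[List[object]], bool],
                 distribute_final: Callable[[], object],
                 num_clients: int,
                 max_rounds: int) -> Tuple[object, int]:
    """Drive the four stages; returns (final encrypted model, rounds executed).

    All callables are hypothetical placeholders; update_global returns True
    once the server judges that the global model has converged.
    """
    rounds = 0
    for _ in range(max_rounds):
        rounds += 1
        cipher_model = encrypt_global()                  # stage 1: server encrypts
        uploads = [local_train(cipher_model)             # stage 2: each client trains
                   for _ in range(num_clients)]
        if update_global(uploads):                       # stage 3: server decrypts and updates
            break
    return distribute_final(), rounds                    # stage 4: final distribution
```

The loop stops on whichever comes first, convergence reported by stage 3 or the round limit, and only then runs stage 4 once.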

In some embodiments, the "global model encryption" stage is performed by the server of the federated learning framework. The server uses the parameter encryption algorithm to encrypt, or scramble, the global model (the "plaintext model") and then sends the encrypted model (the "ciphertext model") together with auxiliary random information to the clients. The global model may be a multilayer perceptron or a convolutional neural network; the parameter encryption algorithm is described below for each of the two model types.

In a specific embodiment, the global model is an $L$-layer multilayer perceptron composed of any number of fully connected layers, with ReLU as the activation function. Let the $l$-th layer have $n_l$ neurons and parameter matrix $W^{(l)} \in \mathbb{R}^{n_l \times n_{l-1}}$. The output of each layer can then be expressed as:

$$y^{(l)} = \mathrm{ReLU}\left(W^{(l)} y^{(l-1)}\right),\quad 1 \le l \le L-1; \qquad y^{(L)} = W^{(L)} y^{(L-1)}.$$

In particular, for $l = 0$, $y^{(0)}$ denotes the model input $x = (x_1, x_2, \dots, x_d)^T$, and $n_0 = d$ is the dimension of the input data.

For the $L$-layer multilayer perceptron, the server encrypts the plaintext model parameters $W^{(l)}$ with random matrices $R^{(l)}$ ($1 \le l \le L$) and $R_a$. The ciphertext model parameters $\hat{W}^{(l)}$ are computed as follows:

$$\hat{W}^{(l)} = R^{(l)} \circ W^{(l)},\quad 1 \le l \le L-1; \qquad \hat{W}^{(L)} = R^{(L)} \circ W^{(L)} + R_a,$$

where $\circ$ denotes the Hadamard (element-wise) product.

Specifically, the random matrix $R^{(l)}$ is built from multiplicative noise vectors $r^{(l)} \in \mathbb{R}^{n_l}$ with positive entries (taking $r^{(0)} = \mathbf{1}$, so the input is not scaled) according to the following rule:

$$R^{(l)}_{ij} = \frac{r^{(l)}_i}{r^{(l-1)}_j}\quad (1 \le l \le L-1), \qquad R^{(L)}_{ij} = \frac{1}{r^{(L-1)}_j},$$

where the subscripts satisfy $i \in [1, n_l]$ and $j \in [1, n_{l-1}]$. The random matrix $R_a$ is formed from a random number $\gamma$ and an additive noise vector $r_a \in \mathbb{R}^{n_L}$ as $(R_a)_{ij} = \gamma\, r_{a,i}$, where $i \in [1, n_L]$ and $j \in [1, n_{L-1}]$. Replacing the plaintext model parameters of the multilayer perceptron with the ciphertext model parameters yields the ciphertext model.

In this embodiment, the server first encrypts the model as described above and then sends the ciphertext model parameters $\hat{W}^{(l)}$ together with the additive noise vector $r_a$ to the federated learning participants.
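As a hedged illustration of the multilayer-perceptron encryption rule, the sketch below builds $R^{(l)}$ and $R_a$ from noise vectors and encrypts a small bias-free MLP stored as nested Python lists. It assumes $r^{(0)} = \mathbf{1}$ and draws the positive multiplicative noise and $\gamma$ from $[0.5, 2]$ and $r_a$ from $[-1, 1]$; these ranges, and the helper names `encrypt_mlp` and `decrypt_mlp`, are hypothetical and not specified by the source.

```python
import random


def encrypt_mlp(weights, rng):
    """Training-time encryption of a bias-free L-layer MLP (hypothetical helper).

    weights: [W1, ..., WL]; W_l is a list of n_l rows, each of length n_{l-1}.
    Returns (ciphertext weights, noise vectors r, gamma, r_a).
    """
    L = len(weights)
    n = [len(weights[0][0])] + [len(W) for W in weights]   # n[0] = d, n[l] = n_l
    # r[0] = all ones (input left unscaled); r[1..L-1] are positive noise vectors.
    r = [[1.0] * n[0]] + [[rng.uniform(0.5, 2.0) for _ in range(n[l])] for l in range(1, L)]
    gamma = rng.uniform(0.5, 2.0)                          # kept secret by the server
    r_a = [rng.uniform(-1.0, 1.0) for _ in range(n[L])]    # sent to the clients
    cipher = []
    for l in range(1, L):
        W = weights[l - 1]
        # R^(l)_ij = r^(l)_i / r^(l-1)_j
        cipher.append([[r[l][i] / r[l - 1][j] * W[i][j] for j in range(n[l - 1])]
                       for i in range(n[l])])
    W = weights[L - 1]
    # Output layer: R^(L)_ij = 1 / r^(L-1)_j plus additive (R_a)_ij = gamma * r_a[i].
    cipher.append([[W[i][j] / r[L - 1][j] + gamma * r_a[i] for j in range(n[L - 1])]
                   for i in range(n[L])])
    return cipher, r, gamma, r_a


def decrypt_mlp(cipher, r, gamma, r_a):
    """Server-side inverse: remove R_a, then undo R^(l) element-wise."""
    L = len(cipher)
    plain = []
    for l in range(1, L):
        C = cipher[l - 1]
        plain.append([[C[i][j] * r[l - 1][j] / r[l][i] for j in range(len(C[0]))]
                      for i in range(len(C))])
    C = cipher[L - 1]
    plain.append([[(C[i][j] - gamma * r_a[i]) * r[L - 1][j] for j in range(len(C[0]))]
                  for i in range(len(C))])
    return plain
```

Because only the server holds $r^{(l)}$ and $\gamma$, only it can invert the encryption as `decrypt_mlp` does; a participant receives the ciphertext weights and $r_a$ alone.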

In another specific embodiment, the global model is an $L$-layer convolutional neural network composed of any number of alternating convolutional and max-pooling layers and ending with a fully connected layer for a regression or classification task. The convolutional model uses ReLU as the activation function and allows "concatenation-type skip connections" between layers. A convolutional layer takes three-dimensional data as input, uses multiple three-dimensional convolution kernels as parameters, and outputs the resulting feature map. For three-dimensional input data $X \in \mathbb{R}^{c_l \times h_l \times w_l}$ with any number of channels $c_l$, height $h_l$ and width $w_l$, the three-dimensional convolution is defined as

$$Y = W \circledast X,$$

where $W \in \mathbb{R}^{c_{l+1} \times c_l \times f \times f}$ is a tensor of $c_{l+1}$ convolution kernels, each of size $c_l \times f \times f$, and $Y \in \mathbb{R}^{c_{l+1} \times h_{l+1} \times w_{l+1}}$ is the output feature map.

For any $L$-layer convolutional network of this kind, the server encrypts the plaintext parameters of the convolutional neural network with random tensors $R^{(l)}$ and random matrices $R^{(L)}$ and $R_a$, obtaining the ciphertext parameters $\hat{W}^{(l)} = R^{(l)} \circ W^{(l)}$ for $1 \le l \le L-1$ and $\hat{W}^{(L)} = R^{(L)} \circ W^{(L)} + R_a$. For $1 \le l \le L-1$, the parameter $W^{(l)}$ is a convolution kernel tensor, and the random tensor $R^{(l)}$ is built from the multiplicative noise vector $r^{(l)}$ and satisfies, for every entry of the $f \times f$ slice belonging to output channel $i$ and input channel $j$:

$$R^{(l)}_{i,j,:,:} = \frac{r^{(l)}_i}{r^{(l,\mathrm{in})}_j},$$

where $r^{(l,\mathrm{in})} = (r^{(m)})_{m \in P(l)}$ is the concatenation of the vectors $r^{(m)}$ for all $m \in P(l)$, and $P(l)$ is the set of indices of all network layers connected to the $l$-th convolutional layer. This modification adapts the scheme to network structures with concatenation-type skip connections;

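the random matrix $R^{(L)}$ is built from the multiplicative noise vector $r^{(L-1)}$ and satisfies $R^{(L)}_{ij} = 1 / r^{(L-1)}_{c(j)}$, where $c(j)$ denotes the channel to which the $j$-th element of the flattened input of the fully connected layer belongs;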

the random matrix $R_a$ is built from the additive noise vector $r_a$ and the random number $\gamma$ and satisfies $(R_a)_{ij} = \gamma\, r_{a,i}$. Replacing the plaintext model parameters of the convolutional neural network with the ciphertext model parameters yields the ciphertext model.

In this embodiment, the server first encrypts the model as described above and then sends the ciphertext model parameters $\hat{W}^{(l)}$ together with the additive noise vector $r_a$ to the federated learning participants (clients).
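To see why $r^{(l,\mathrm{in})}$ is formed by concatenation, consider a layer whose input is the concatenation of the outputs of the layers in $P(l)$. The sketch below is a hypothetical example with hand-picked numbers, and it reduces the layer to a $1 \times 1$ convolution written as a plain matrix for brevity. It shows that dividing each input column of the kernel by the matching entry of the concatenated noise cancels the noise carried by every incoming branch.

```python
def hadamard(a, b):
    """Element-wise product a ∘ b of two equal-length lists."""
    return [p * q for p, q in zip(a, b)]


def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


# Hypothetical setting: layer l receives the concatenated outputs of layers P(l) = [1, 3].
P_l = [1, 3]
y = {1: [0.4, 0.0, 1.2], 3: [2.0, 0.5]}   # plaintext per-channel outputs of those layers
r = {1: [1.5, 0.7, 1.1], 3: [0.9, 2.2]}   # server-side multiplicative noise r^(m)

plain_in = [v for m in P_l for v in y[m]]                  # concatenated plaintext input
noisy_in = [v for m in P_l for v in hadamard(y[m], r[m])]  # input seen in the ciphertext model
r_in = [v for m in P_l for v in r[m]]                      # r^(l,in), concatenated in the same order

# Layer l as a 1x1 convolution (a matrix) with 2 output channels.
W = [[0.2, -0.4, 0.1, 0.3, -0.2],
     [0.5, 0.1, -0.3, 0.0, 0.6]]
r_out = [1.8, 0.6]                                         # this layer's noise r^(l)
W_hat = [[r_out[o] / r_in[i] * W[o][i] for i in range(len(r_in))] for o in range(len(W))]

plain_out = matvec(W, plain_in)
noisy_out = matvec(W_hat, noisy_in)   # expected to equal r_out ∘ plain_out
```

The concatenation order of `r_in` must match the order in which the branch outputs are concatenated; any mismatch would leave residual noise in the layer output.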

In some embodiments, the second stage, "local model training", is performed by the clients. After receiving the ciphertext model and the additive noise vector, each participant trains on the ciphertext model with its own local data in two phases: forward propagation and backpropagation. Finally, it sends the locally computed ciphertext gradient information and the additional noise terms to the server.

Forward propagation stage:

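When the global model is an $L$-layer multilayer perceptron, a federated learning participant that has received the ciphertext model computes the noisy output of each layer, $\hat{y}^{(l)}$, by propagating its input forward through the ciphertext parameters $\hat{W}^{(l)}$ with the same layer operations as the plaintext model.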

The outputs of the ciphertext model and of the corresponding plaintext model satisfy the following relation:

$$\hat{y}^{(l)} = r^{(l)} \circ y^{(l)},\quad 1 \le l \le L-1; \qquad \hat{y}^{(L)} = y^{(L)} + \alpha\, r,$$

where $\alpha = \sum_{j=1}^{n_{L-1}} \hat{y}^{(L-1)}_j$ and $r = \gamma r_a$. A federated learning participant knows neither the random noise vectors $r^{(l)}$ nor the random number $\gamma$.
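The forward-propagation relation can be checked numerically on a two-layer network. The sketch below uses plain Python with bias-free layers, hypothetical variable names, and arbitrarily chosen noise values; it encrypts the weights with the multilayer-perceptron rule and compares what a participant computes with the plaintext outputs.

```python
import random


def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def forward(weights, x):
    """Bias-free MLP: ReLU on hidden layers, linear output. Returns [y^(0), ..., y^(L)]."""
    outs = [x]
    for l, W in enumerate(weights, start=1):
        z = matvec(W, outs[-1])
        outs.append(z if l == len(weights) else [max(0.0, a) for a in z])
    return outs


rng = random.Random(1)
d, n1, n2 = 3, 4, 2
W1 = [[rng.uniform(-1, 1) for _ in range(d)] for _ in range(n1)]
W2 = [[rng.uniform(-1, 1) for _ in range(n1)] for _ in range(n2)]
r1 = [rng.uniform(0.5, 2.0) for _ in range(n1)]   # server secret r^(1), positive
gamma, r_a = 1.7, [0.3, -0.8]                     # gamma secret; r_a sent to clients

# Ciphertext weights (r^(0) = 1, so R^(1)_ij = r1[i]; R^(2)_ij = 1 / r1[j]).
C1 = [[r1[i] * W1[i][j] for j in range(d)] for i in range(n1)]
C2 = [[W2[i][j] / r1[j] + gamma * r_a[i] for j in range(n1)] for i in range(n2)]

x = [0.2, -1.0, 0.5]
y = forward([W1, W2], x)        # plaintext outputs (unknown to the participant)
y_hat = forward([C1, C2], x)    # outputs the participant actually computes
alpha = sum(y_hat[1])           # pseudo-output statistic
r = [gamma * a for a in r_a]    # combined noise vector r = gamma * r_a
```

After running it, $\hat{y}^{(1)} = r^{(1)} \circ y^{(1)}$ and $\hat{y}^{(2)} = y^{(2)} + \alpha r$ hold up to floating-point rounding. The positivity of $r^{(1)}$ is what lets the noise pass through ReLU unchanged.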

When the global model is an $L$-layer convolutional neural network, then, as with the multilayer perceptron, the output of each convolutional layer of the ciphertext model and the corresponding real convolutional layer output satisfy, channel by channel:

$$\hat{y}^{(l)}_{i,:,:} = r^{(l)}_i\, y^{(l)}_{i,:,:},\quad 1 \le l \le L-1,$$

and the output of the fully connected layer of the ciphertext model and the corresponding real output satisfy:

$$\hat{y}^{(L)} = y^{(L)} + \alpha\, r,$$

where $\alpha = \sum_{j=1}^{n_{L-1}} \mathrm{Flatten}(\hat{y}^{(L-1)})_j$ is the pseudo-output statistic, the function $\mathrm{Flatten}(\cdot)$ unrolls a multi-dimensional tensor into a one-dimensional vector, and the flattened vector has dimension $n_{L-1} = c_{L-1} h_{L-1} w_{L-1}$. The parameter $r = \gamma r_a$ is the combined noise vector. As with the multilayer perceptron, the random noise vectors $r^{(l)}$ and the random number $\gamma$ are unknown to the federated learning participants, so the encryption mechanism for the convolutional model likewise guarantees the security of the global model.
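The channel-wise relation for convolutional layers relies on ReLU and max pooling both commuting with multiplication by a positive per-channel factor. The following pure-Python sketch assumes a valid, stride-1 convolution and $2 \times 2$ max pooling (neither choice is specified by the source), uses hypothetical helper names, and checks that the ciphertext kernels reproduce feature maps scaled by $r^{(l)}$.

```python
import random


def conv_valid(W, X):
    """Stride-1, no-padding convolution. W: [c_out][c_in][f][f], X: [c_in][h][w]."""
    c_out, c_in, f = len(W), len(X), len(W[0][0])
    h, w = len(X[0]), len(X[0][0])
    return [[[sum(W[o][i][p][q] * X[i][u + p][v + q]
                  for i in range(c_in) for p in range(f) for q in range(f))
              for v in range(w - f + 1)]
             for u in range(h - f + 1)]
            for o in range(c_out)]


def relu3d(Y):
    """Element-wise ReLU on a [c][h][w] nested list."""
    return [[[max(0.0, a) for a in row] for row in ch] for ch in Y]


def maxpool2(Y):
    """2x2 max pooling with stride 2 on each channel (even height/width assumed)."""
    return [[[max(ch[2 * u][2 * v], ch[2 * u][2 * v + 1],
                  ch[2 * u + 1][2 * v], ch[2 * u + 1][2 * v + 1])
              for v in range(len(ch[0]) // 2)]
             for u in range(len(ch) // 2)]
            for ch in Y]


rng = random.Random(2)
c_in, c_out, f, size = 2, 3, 2, 5
X = [[[rng.uniform(-1, 1) for _ in range(size)] for _ in range(size)] for _ in range(c_in)]
W = [[[[rng.uniform(-1, 1) for _ in range(f)] for _ in range(f)] for _ in range(c_in)]
     for _ in range(c_out)]
r_in = [rng.uniform(0.5, 2.0) for _ in range(c_in)]    # noise already on the input channels
r_out = [rng.uniform(0.5, 2.0) for _ in range(c_out)]  # this layer's secret noise r^(l)

# Noisy input a participant would get from the previous ciphertext layer.
X_hat = [[[r_in[i] * a for a in row] for row in X[i]] for i in range(c_in)]
# Ciphertext kernel: slice (o, i) scaled by r_out[o] / r_in[i].
W_hat = [[[[r_out[o] / r_in[i] * a for a in row] for row in W[o][i]] for i in range(c_in)]
         for o in range(c_out)]

Y = maxpool2(relu3d(conv_valid(W, X)))              # plaintext block output
Y_hat = maxpool2(relu3d(conv_valid(W_hat, X_hat)))  # ciphertext block output
```

Each channel `o` of `Y_hat` equals `r_out[o]` times the same channel of `Y`, which is the relation stated above for $1 \le l \le L-1$.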

Backpropagation stage:

This backpropagation procedure applies to both multilayer perceptrons and convolutional neural networks and uses the mean squared error (MSE) as the loss function. For a sample $(x, y)$ of any dimension, the mean squared error between the prediction $\hat{y}^{(L)}$ of the ciphertext model and the true value $y$ can be expressed as:

$$\hat{\mathcal{L}} = \frac{1}{n_L} \left\| \hat{y}^{(L)} - y \right\|_2^2 = \frac{1}{n_L} \left\| y^{(L)} + \alpha\, r - y \right\|_2^2,$$

where $n_L$ is the dimension of the model's output layer, which is also the dimension of the sample label, and $\alpha$ and $r$ are the pseudo-output statistic and the combined noise vector introduced in the forward propagation stage. Under the parameter encryption algorithm of the present invention, the noisy gradient of the loss with respect to each ciphertext parameter, $\partial \hat{\mathcal{L}} / \partial \hat{W}^{(l)}$, is related to the corresponding true gradient $\partial \mathcal{L} / \partial W^{(l)}$ through the encryption noise known only to the server and two auxiliary terms, $\sigma^{(l)}$ and $\beta^{(l)}$. Notably, $\sigma^{(l)}$ and $\beta^{(l)}$ are computed locally and independently by each participant.
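The exact expressions for $\sigma^{(l)}$ and $\beta^{(l)}$ are not reproduced here. As a hedged illustration only, the output layer can be worked through by hand under the notation assumed above: there the noisy gradient equals $(1/R^{(L)}) \circ \partial\mathcal{L}/\partial W^{(L)} + \gamma\, s$, with a client-computable term $s = \tfrac{2\alpha}{n_L}\, r_a\, (\hat{y}^{(L-1)})^T$. The name `s` is hypothetical, this term is derived here for illustration and is not claimed to be the patent's $\sigma^{(L)}$, and the hidden layers (where $\beta^{(l)}$ also appears) are not covered.

```python
import random

rng = random.Random(3)
n_prev, n_L = 3, 2
W = [[rng.uniform(-1, 1) for _ in range(n_prev)] for _ in range(n_L)]  # plaintext W^(L)
y_prev = [rng.uniform(0, 1) for _ in range(n_prev)]      # plaintext y^(L-1), post-ReLU
r_prev = [rng.uniform(0.5, 2.0) for _ in range(n_prev)]  # server secret r^(L-1)
gamma = 1.3                                              # server secret
r_a = [0.4, -0.6]                                        # additive noise known to the client
label = [0.5, -0.2]                                      # sample label y

y_hat_prev = [rp * v for rp, v in zip(r_prev, y_prev)]   # hidden output the client sees
W_hat = [[W[i][j] / r_prev[j] + gamma * r_a[i] for j in range(n_prev)] for i in range(n_L)]


def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def mse_weight_grad(out, target, inp):
    """Gradient of (1/n)||out - target||^2 w.r.t. a linear layer: (2/n)(out - target) inp^T."""
    n = len(out)
    return [[2.0 / n * (o - t) * x for x in inp] for o, t in zip(out, target)]


true_grad = mse_weight_grad(matvec(W, y_prev), label, y_prev)              # never seen by client
noisy_grad = mse_weight_grad(matvec(W_hat, y_hat_prev), label, y_hat_prev)  # client's gradient

# Client: hypothetical noise term s, built only from alpha, r_a and y_hat_prev.
alpha = sum(y_hat_prev)
s = [[2.0 / n_L * alpha * ra * x for x in y_hat_prev] for ra in r_a]

# Server: subtract gamma * s, then apply R^(L) (divide column j by r^(L-1)_j).
recovered = [[(noisy_grad[i][j] - gamma * s[i][j]) / r_prev[j] for j in range(n_prev)]
             for i in range(n_L)]
```

The server, knowing $\gamma$ and $r^{(L-1)}$, recovers the true output-layer gradient as $R^{(L)} \circ (\partial\hat{\mathcal{L}}/\partial\hat{W}^{(L)} - \gamma s)$, which is what `recovered` computes; the client cannot do this because it lacks both secrets.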

Specifically, the $k$-th participant computes the gradients $\partial \hat{\mathcal{L}} / \partial \hat{W}^{(l)}$ over all of its mini-batch samples, computes the noise terms $\sigma^{(l)}$ and $\beta^{(l)}$ using the additive noise vector $r_a$ provided by the server, and finally sends all three kinds of information, $\{\partial \hat{\mathcal{L}} / \partial \hat{W}^{(l)}, \sigma^{(l)}, \beta^{(l)}\}$, to the server together.

In some embodiments, the "global model update" stage is performed by the federated learning server. After receiving the ciphertext gradient information and noise terms from all participants, the server uses its selected private key to decrypt the real parameter gradients and then updates the global model with the aggregated gradients. Specifically, for the global model $W_t$ of round $t$, the server solves for the true gradient $\nabla F_k(W_t)$ obtained in the $k$-th participant's local training from that participant's noisy gradients and noise terms, where $F_k(\cdot)$ denotes the loss function of the $k$-th participant. After decrypting the true gradients, the server obtains the global model $W_{t+1}$ of round $t+1$:

$$W_{t+1} = W_t - \eta \sum_{k} \frac{N_k}{N} \nabla F_k(W_t),$$

where $\eta$ is the learning rate and $N_k / N$ is the proportion of the total data held by the $k$-th participant.
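The update above is a data-size-weighted gradient step. A minimal sketch on flattened parameter lists follows; the function name `aggregate` and the flat-list representation are assumptions for illustration, and the gradients passed in are taken to be the already-decrypted true gradients.

```python
def aggregate(W_t, grads, sizes, eta):
    """W_{t+1} = W_t - eta * sum_k (N_k / N) * grad_k, on flattened parameter lists.

    grads[k] is participant k's decrypted gradient; sizes[k] is its data count N_k.
    """
    N = sum(sizes)
    return [w - eta * sum(n_k / N * g[idx] for g, n_k in zip(grads, sizes))
            for idx, w in enumerate(W_t)]
```

For example, `aggregate([1.0, 2.0], [[1.0, 0.0], [3.0, 4.0]], [1, 3], 0.5)` returns `[-0.25, 0.5]`: the participant holding three quarters of the data contributes three quarters of the step.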

In some embodiments, the "final model distribution" stage is performed by the federated learning server. After the server and clients have alternately executed stages 1 to 3 until the model converges or the specified number of iterations is reached, the server obtains the final model parameters $\{W^{(l)}\}_{l=1}^{L}$. To protect these parameters while still allowing participants to obtain correct inference results, the server encrypts the global model again before distributing it. Unlike in the training stage, the server does not add the additive noise $R_a$ and uses only multiplicative noise, so that the output of the ciphertext model equals the real output, i.e. $\hat{y}^{(L)} = y^{(L)}$. Without loss of generality, the server encrypts the model parameters as:

$$\hat{W}^{(l)} = R^{(l)} \circ W^{(l)},\quad 1 \le l \le L.$$

For both multilayer perceptrons and convolutional neural networks, the parameters $W^{(l)}$ and the noise $R^{(l)}$ in the above formula have exactly the same form as in stage 1. Finally, the server distributes the encrypted global model to all participants, which perform local training on the encrypted global model while privacy is preserved.
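The final-distribution encryption can be checked the same way: with $R_a$ omitted, the noise cancels layer by layer and the ciphertext model returns exactly the plaintext output. The sketch assumes a bias-free MLP in plain Python, $r^{(0)} = \mathbf{1}$, positive noise drawn from $[0.5, 2]$, and hypothetical helper names.

```python
import random


def forward(weights, x):
    """Bias-free MLP: ReLU on hidden layers, linear output; returns y^(L)."""
    v = x
    for l, W in enumerate(weights, start=1):
        z = [sum(w * a for w, a in zip(row, v)) for row in W]
        v = z if l == len(weights) else [max(0.0, a) for a in z]
    return v


def encrypt_final(weights, rng):
    """Multiplicative-only encryption W_hat^(l) = R^(l) ∘ W^(l), with no R_a term.

    Uses R^(l)_ij = r^(l)_i / r^(l-1)_j, taking r^(0) = 1 (plain input) and
    r^(L) = 1, so that R^(L)_ij = 1 / r^(L-1)_j and the output is left unscaled.
    """
    L = len(weights)
    dims = [len(weights[0][0])] + [len(W) for W in weights]
    r = [[1.0] * dims[0]]
    r += [[rng.uniform(0.5, 2.0) for _ in range(dims[l])] for l in range(1, L)]
    r.append([1.0] * dims[L])
    return [[[r[l][i] / r[l - 1][j] * W[i][j] for j in range(dims[l - 1])]
             for i in range(dims[l])]
            for l, W in enumerate(weights, start=1)]
```

For a network `Ws` and input `x`, `forward(encrypt_final(Ws, rng), x)` matches `forward(Ws, x)` up to rounding, even though the individual encrypted weight matrices differ from the plaintext ones.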

In some embodiments, a computer-readable storage medium is also provided. The computer-readable storage medium stores one or more programs that can be executed by one or more processors to implement the steps of the federated learning-based privacy protection method of the present invention.

In some embodiments, a privacy protection system based on the federated learning method is also provided. As shown in FIG. 2, it includes a server 10 and a client 20. The server 10 is configured to encrypt the global model with the parameter encryption algorithm to obtain a ciphertext model;

the client 20 is configured to train on the ciphertext model with local data to obtain ciphertext gradient information and noise terms;

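the server 10 is further configured to decrypt the ciphertext gradient information and noise terms to obtain parameter gradients, update the global model with the parameter gradients, repeat the above steps until the model converges or a specified number of iterations is reached to obtain the model parameters, encrypt the model parameters to obtain encrypted model parameters, and update the global model with the encrypted model parameters to obtain a global encrypted model;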

the client 20 is further configured to perform local training on the encrypted global model, so that privacy is protected.

In summary, the present invention solves the problem of implementing nonlinear activation functions such as ReLU in the ciphertext domain, allowing clients to train multilayer perceptrons and convolutional neural networks, or to make local predictions, in the encrypted domain without knowing the real updates or parameters. It therefore effectively prevents semi-trusted federated learning participants from obtaining the real parameters of the global model and the outputs of the intermediate models, while ensuring that all participants can obtain real prediction results from the finally distributed encrypted model. While providing privacy protection, the server can remove the random noise to obtain the real global model parameters, and participants can use the encrypted model to obtain real predictions, so the accuracy of both the model and its predictions is preserved. The additional cost arises mainly in backpropagation: besides the gradients, each participant computes two additional noise terms and sends them to the server. Compared with plaintext model training, the additional computation and communication costs are bounded by roughly $2T$ and $2C$ respectively, where $T$ is the cost of backpropagation in plaintext training and $C$ is the size of the model parameters; this keeps the method efficient and usable in practice.

Finally, it should be noted that the above embodiments are only intended to illustrate the technical solutions of the present invention, not to limit them. Although the present invention has been described in detail with reference to the foregoing embodiments, those of ordinary skill in the art should understand that they can still modify the technical solutions described in the foregoing embodiments or make equivalent replacements of some of their technical features, and such modifications or replacements do not cause the essence of the corresponding technical solutions to depart from the spirit and scope of the technical solutions of the embodiments of the present invention.

Claims (9)

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202011109363.0A; CN112199702B (en) | 2020-10-16 | 2020-10-16 | Privacy protection method, storage medium and system based on federal learning |

Applications Claiming Priority (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202011109363.0A; CN112199702B (en) | 2020-10-16 | 2020-10-16 | Privacy protection method, storage medium and system based on federal learning |

Publications (2)

| Publication Number | Publication Date |
|---|---|
| CN112199702A | 2021-01-08 |
| CN112199702B | 2024-07-26 |

Family ID: 74009841

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |
|---|---|---|---|
| CN202011109363.0A (Active); CN112199702B (en) | Privacy protection method, storage medium and system based on federal learning | 2020-10-16 | 2020-10-16 |

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN112199702B (en) |


| WO2024139273A1 (en)* | 2022-12-26 | 2024-07-04 | 支付宝(杭州)信息技术有限公司 | Federated learning method and apparatus, readable storage medium, and electronic device |

| CN115865307A (en)* | 2023-02-27 | 2023-03-28 | 蓝象智联(杭州)科技有限公司 | Data point multiplication operation method for federal learning |

| CN116186780A (en)* | 2023-03-27 | 2023-05-30 | 华中科技大学 | A privacy protection method and system based on noise perturbation in a collaborative learning scenario |

| CN116366250B (en)* | 2023-06-02 | 2023-08-15 | 江苏微知量子科技有限公司 | Quantum federal learning method and system |

| CN116366250A (en)* | 2023-06-02 | 2023-06-30 | 江苏微知量子科技有限公司 | Quantum federal learning method and system |

| CN117675199A (en)* | 2023-12-21 | 2024-03-08 | 盐城集结号科技有限公司 | Network security defense system based on RPA |

| CN117675199B (en)* | 2023-12-21 | 2024-06-07 | 盐城集结号科技有限公司 | Network security defense system based on RPA |

| CN118468344A (en)* | 2024-07-10 | 2024-08-09 | 中电科大数据研究院有限公司 | Method and system for improving privacy security of federated learning |

Also Published As

| Publication number | Publication date |
|---|---|
| CN112199702B (en) | 2024-07-26 |

Similar Documents

| Publication | Publication Date | Title |
|---|---|---|
| CN112199702A (en) | | Privacy protection method, storage medium and system based on federal learning |
| CN112541593B (en) | | Method and device for joint training business model based on privacy protection |
| CN110399742B (en) | | A training and prediction method and device for a federated transfer learning model |
| US20220092216A1 (en) | | Privacy-preserving machine learning in the three-server model |
| US20210209247A1 (en) | | Privacy-preserving machine learning in the three-server model |
| WO2021197037A1 (en) | | Method and apparatus for jointly performing data processing by two parties |
| US12353591B2 (en) | | Secure aggregation of information using federated learning |
| US20160020904A1 (en) | | Method and system for privacy-preserving recommendation based on matrix factorization and ridge regression |
| CN112989368A (en) | | Method and device for processing private data by combining multiple parties |
| CN115549888A (en) | | Block chain and homomorphic encryption-based federated learning privacy protection method |
| CN115358387B (en) | | Method and device for joint updating model |
| CN114168988B (en) | | Federal learning model aggregation method and electronic device |
| CN113051586B (en) | | Federal modeling system and method, federal model prediction method, medium, and device |
| CN111291411A (en) | | Security video anomaly detection system and method based on convolutional neural network |
| CN116094686B (en) | | Homomorphic encryption method, homomorphic encryption system, homomorphic encryption equipment and homomorphic encryption terminal for quantum convolution calculation |
| CN101729554A (en) | | Construction method of division protocol based on cryptology in distributed computation |
| WO2024239593A1 (en) | | Hybrid federated logistic regression method based on homomorphic encryption |
| CN113792890A (en) | | Model training method based on federal learning and related equipment |
| US20230385446A1 (en) | | Privacy-preserving clustering methods and apparatuses |
| Weng et al. | | Practical privacy attacks on vertical federated learning |
| CN116561787A (en) | | Training method and device for visual image classification model and electronic equipment |
| Zhou et al. | | Toward scalable and privacy-preserving deep neural network via algorithmic-cryptographic co-design |
| Sun et al. | | FL-EASGD: Federated Learning Privacy Security Method Based on Homomorphic Encryption |
| CN115409512A (en) | | Abnormal information detection method, abnormal information detection device, computer equipment and storage medium |
| CN114742239A (en) | | Method and device for training financial insurance claims risk model based on federated learning |

Legal Events

| Date | Code | Title | Description |
|---|---|---|---|
| | PB01 | Publication | |
| | SE01 | Entry into force of request for substantive examination | |
| | GR01 | Patent grant | |