CN111915703A - Image generation method and device - Google Patents

Image generation method and device

- Publication number

- CN111915703A (application number CN201910390118.2A)

- Authority

- CN

- China

- Prior art keywords

- image

- semantic segmentation

- edge line

- determining

- sample

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Granted

Classifications

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T11/00—2D [Two Dimensional] image generation

- G06T11/40—Filling a planar surface by adding surface attributes, e.g. colour or texture

- G06T7/00—Image analysis

- G06T7/10—Segmentation; Edge detection

- G06T7/12—Edge-based segmentation

- G06T7/13—Edge detection

Landscapes

- Engineering & Computer Science (AREA)

- Physics & Mathematics (AREA)

- General Physics & Mathematics (AREA)

- Theoretical Computer Science (AREA)

- Computer Vision & Pattern Recognition (AREA)

- Image Analysis (AREA)

Abstract

Description

Technical Field

The present disclosure relates to the technical field of image processing, and in particular to an image generation method and apparatus.

Background

As new-generation machine learning algorithms, represented by deep learning, continue to mature, artificial intelligence is playing an increasingly important role in fields such as intelligent healthcare, autonomous driving, and intelligent education. AI-assisted painting is currently an active research topic in artificial intelligence.

In the prior art, AI-assisted painting systems fall into three categories. The first category helps users complete simple painting operations by executing rule-based instructions; because such rules cannot cover every possible situation, these systems can only output painted images in certain specific cases. The second category uses deep neural networks to imitate a painting style, for example converting an ordinary image into an image with a certain style through a style-transfer algorithm; such systems merely transfer image texture and cannot create new image content. The third category uses deep learning on a large amount of image data to learn its latent probability distribution, so that images not present in the training set can be generated; however, such systems can generally only generate images containing a single object and cannot generate images with rich and complete content.

There is therefore an urgent need for an image generation method that helps users generate high-quality, content-rich images in a target style.

Summary

In view of this, the present disclosure proposes an image generation method and apparatus that can quickly generate, from a semantic segmentation image input by a user, an image in a target style with rich and complete scene content.

According to a first aspect of the present disclosure, an image generation method is provided, including: receiving a first semantic segmentation image input by a user, the first semantic segmentation image including at least one class of target scene object; determining a first edge line image corresponding to the first semantic segmentation image, the first edge line image including edge information of each class of target scene object; and inputting the first semantic segmentation image and the first edge line image into a first conditional generative adversarial network model to generate an image in a target style corresponding to the first semantic segmentation image, where the first conditional generative adversarial network model is trained on a plurality of sample images in the target style.

In a possible implementation, the first conditional generative adversarial network model is trained on the plurality of sample images as follows: performing semantic segmentation on each sample image to determine a second semantic segmentation image of the sample image, the second semantic segmentation image including multiple classes of scene objects in the sample image; performing edge detection on each sample image to determine a second edge line image of the sample image, the second edge line image including edge information of each class of scene object in the sample image; and training the first conditional generative adversarial network model on the plurality of sample images together with the second semantic segmentation image and the second edge line image of each sample image.

In a possible implementation, determining the first edge line image corresponding to the first semantic segmentation image includes: determining the first edge line image according to the second semantic segmentation image and the second edge line image of each sample image.

In a possible implementation, determining the first edge line image according to the second semantic segmentation image and the second edge line image of each sample image includes: determining a first spatial structure feature of the first semantic segmentation image; performing dimensionality reduction on the first spatial structure feature to determine a first feature point corresponding to the first semantic segmentation image in a feature space model, where the reduced first spatial structure feature has the same dimension as the feature space model, and the feature space model includes a second feature point corresponding to the second semantic segmentation image of each sample image; determining, as a third semantic segmentation image, the second semantic segmentation image corresponding to a second feature point whose Euclidean distance to the first feature point in the feature space model is less than or equal to a threshold; and determining the first edge line image according to the second edge line image of the same sample image as the third semantic segmentation image.

In a possible implementation, the feature space model is built as follows: training an autoencoder model on the second semantic segmentation image of each sample image, the autoencoder model being used to extract spatial structure features of semantic segmentation images; determining, with the autoencoder model, a second spatial structure feature of the second semantic segmentation image of each sample image; and performing dimensionality reduction on the second spatial structure features of all sample images to obtain the feature space model.

In a possible implementation, determining the first spatial structure feature of the first semantic segmentation image includes: determining the first spatial structure feature with the autoencoder model.

In a possible implementation, performing dimensionality reduction on a spatial structure feature includes: reducing its dimensionality with the PCA (principal component analysis) algorithm.
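The feature space model described above can be sketched with NumPy as a PCA fit over the autoencoder features of all sample segmentation images. This is a minimal illustration under stated assumptions: random vectors stand in for the autoencoder's spatial structure features (the source does not specify the encoder architecture), PCA is computed via SVD, and the names `fit_pca` and `project` as well as the target dimension of 8 are hypothetical.

```python
import numpy as np

def fit_pca(features: np.ndarray, n_components: int):
    """Fit PCA on row-vector features (one row per sample image).

    Returns the feature mean and the top principal axes, so that new
    features can be projected into the same low-dimensional space.
    """
    mean = features.mean(axis=0)
    centered = features - mean
    # Rows of vt are principal axes, ordered by decreasing singular value.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return mean, vt[:n_components]

def project(features: np.ndarray, mean: np.ndarray, components: np.ndarray) -> np.ndarray:
    """Map features of shape (n, d) or (d,) to PCA coordinates."""
    return (features - mean) @ components.T

rng = np.random.default_rng(0)
# Hypothetical stand-in for the autoencoder's second spatial structure features.
sample_features = rng.normal(size=(100, 64))
mean, comps = fit_pca(sample_features, n_components=8)
feature_space = project(sample_features, mean, comps)       # second feature points
query_point = project(rng.normal(size=64), mean, comps)     # first feature point
```

The same `mean` and `comps` must be reused for the user's image, so that the first feature point lands in the same space as the stored second feature points.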

In a possible implementation, determining the first edge line image according to the second semantic segmentation image and the second edge line image of each sample image includes: inputting the first semantic segmentation image into a second conditional generative adversarial network model to determine the first edge line image, where the second conditional generative adversarial network model is trained on the second semantic segmentation image and the second edge line image of each sample image.

In a possible implementation, the target style is an anime style.

According to a second aspect of the present disclosure, an image generation apparatus is provided, including: a receiving module, configured to receive a first semantic segmentation image input by a user, the first semantic segmentation image including at least one class of target scene object; a first determining module, configured to determine a first edge line image corresponding to the first semantic segmentation image, the first edge line image including edge information of each class of target scene object; and a generating module, configured to input the first semantic segmentation image and the first edge line image into a first conditional generative adversarial network model to generate an image in a target style corresponding to the first semantic segmentation image, where the first conditional generative adversarial network model is trained on a plurality of sample images in the target style.

In a possible implementation, the apparatus further includes: a second determining module, configured to perform semantic segmentation on each sample image to determine a second semantic segmentation image of the sample image, the second semantic segmentation image including multiple classes of scene objects in the sample image; a third determining module, configured to perform edge detection on each sample image to determine a second edge line image of the sample image, the second edge line image including edge information of each class of scene object in the sample image; and a first model training module, configured to train the first conditional generative adversarial network model on the plurality of sample images together with the second semantic segmentation image and the second edge line image of each sample image.

In a possible implementation, the first determining module includes: a first determining submodule, configured to determine the first edge line image according to the second semantic segmentation image and the second edge line image of each sample image.

In a possible implementation, the first determining submodule includes: a first determining unit, configured to determine a first spatial structure feature of the first semantic segmentation image; a second determining unit, configured to perform dimensionality reduction on the first spatial structure feature to determine a first feature point corresponding to the first semantic segmentation image in a feature space model, where the reduced first spatial structure feature has the same dimension as the feature space model, and the feature space model includes a second feature point corresponding to the second semantic segmentation image of each sample image; a third determining unit, configured to determine, as a third semantic segmentation image, the second semantic segmentation image corresponding to a second feature point whose Euclidean distance to the first feature point in the feature space model is less than or equal to a threshold; and a fourth determining unit, configured to determine the first edge line image according to the second edge line image of the same sample image as the third semantic segmentation image.

In a possible implementation, the apparatus further includes: a second model training module, configured to train an autoencoder model on the second semantic segmentation image of each sample image, the autoencoder model being used to extract spatial structure features of semantic segmentation images; a fourth determining module, configured to determine, with the autoencoder model, a second spatial structure feature of the second semantic segmentation image of each sample image; and a fifth determining module, configured to perform dimensionality reduction on the second spatial structure features of all sample images to determine the feature space model.

In a possible implementation, the first determining unit is specifically configured to determine the first spatial structure feature with the autoencoder model.

In a possible implementation, the apparatus further includes a data processing module, configured to reduce the dimensionality of spatial structure features with the PCA algorithm.

In a possible implementation, the first determining submodule is specifically configured to input the first semantic segmentation image into a second conditional generative adversarial network model to determine the first edge line image, where the second conditional generative adversarial network model is trained on the second semantic segmentation image and the second edge line image of each sample image.

In a possible implementation, the target style is an anime style.

According to a third aspect of the present disclosure, an image generation apparatus is provided, including: a processor; and a memory for storing processor-executable instructions; where the processor is configured to perform the image generation method according to the first aspect.

According to a fourth aspect of the present disclosure, a non-volatile computer-readable storage medium is provided, storing computer program instructions that, when executed by a processor, implement the image generation method according to the first aspect.

A first semantic segmentation image that is input by a user and includes at least one class of target scene object is received; a first edge line image corresponding to the first semantic segmentation image, including edge information of each class of target scene object, is determined; and the first semantic segmentation image and the first edge line image are input into a first conditional generative adversarial network model, trained on a plurality of sample images in the target style, to generate an image in the target style corresponding to the first semantic segmentation image. In this way, an image in the target style with rich and complete scene content can be quickly generated from the semantic segmentation image input by the user.

Other features and aspects of the present disclosure will become apparent from the following detailed description of exemplary embodiments with reference to the accompanying drawings.

Brief Description of the Drawings

The accompanying drawings, which are incorporated in and constitute a part of the specification, illustrate exemplary embodiments, features, and aspects of the present disclosure and, together with the description, serve to explain its principles.

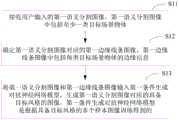

FIG. 1 is a schematic flowchart of an image generation method according to an embodiment of the present disclosure;

FIG. 2 is a schematic diagram of a first semantic segmentation image according to an embodiment of the present disclosure;

FIG. 3 is a schematic diagram of a first edge line image corresponding to the first semantic segmentation image shown in FIG. 2 according to an embodiment of the present disclosure;

FIG. 4 is a schematic diagram of an anime-style image corresponding to the first semantic segmentation image shown in FIG. 2 according to an embodiment of the present disclosure;

FIG. 5 is a schematic structural diagram of an image generation apparatus according to an embodiment of the present disclosure;

FIG. 6 is a schematic structural diagram of an electronic device according to an embodiment of the present disclosure.

Detailed Description

Various exemplary embodiments, features, and aspects of the present disclosure are described in detail below with reference to the accompanying drawings. The same reference numerals in the drawings denote elements with the same or similar functions. Although various aspects of the embodiments are shown in the drawings, the drawings are not necessarily drawn to scale unless otherwise indicated.

The word "exemplary" as used herein means "serving as an example, embodiment, or illustration." Any embodiment described herein as "exemplary" is not necessarily to be construed as preferred or advantageous over other embodiments.

In addition, numerous specific details are given in the following detailed description to better illustrate the present disclosure. Those skilled in the art will understand that the present disclosure can be practiced without certain of these details. In some instances, methods, means, components, and circuits well known to those skilled in the art are not described in detail, so as to highlight the gist of the present disclosure.

Painting is a free, open, expressive, and artistic activity, and AI-assisted painting has long been a research focus in artificial intelligence. As stated in the Background section, existing AI-assisted painting systems cannot generate images with rich and complete scene content.

The image generation method provided by the present disclosure can be applied to AI-assisted painting, so that a user only needs to input a semantic segmentation image to quickly obtain an image in a target style with rich and complete scene content. The method is described in detail below using the generation of anime-style images as an example. Those skilled in the art will understand that the anime style is only one example application scenario and does not limit the present disclosure; the method can also be applied to generating images in other styles.

FIG. 1 is a schematic flowchart of an image generation method according to an embodiment of the present disclosure. As shown in FIG. 1, the method may include:

Step S11: receive a first semantic segmentation image input by a user, the first semantic segmentation image including at least one class of target scene object.

Step S12: determine a first edge line image corresponding to the first semantic segmentation image, the first edge line image including edge information of each class of target scene object.

Step S13: input the first semantic segmentation image and the first edge line image into a first conditional generative adversarial network model to generate an image in the target style corresponding to the first semantic segmentation image, where the first conditional generative adversarial network model is trained on a plurality of sample images in the target style.
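Steps S11 to S13 can be read as a three-stage pipeline. The sketch below is a hypothetical illustration, not the disclosed implementation: `edge_fn` stands for whichever edge-line method is used in step S12, `generator` stands for the trained first conditional GAN, and both are replaced by toy functions (`label_boundaries`, `stub_generator`) so that the flow can run.

```python
from typing import Callable

import numpy as np

def generate_styled_image(
    seg_image: np.ndarray,
    edge_fn: Callable[[np.ndarray], np.ndarray],
    generator: Callable[[np.ndarray, np.ndarray], np.ndarray],
) -> np.ndarray:
    # S11: the user's first semantic segmentation image, as an H x W class-index map.
    if seg_image.ndim != 2:
        raise ValueError("expected an H x W class-index map")
    # S12: determine the first edge line image (e.g. by retrieval or a second cGAN).
    edge_image = edge_fn(seg_image)
    if edge_image.shape != seg_image.shape:
        raise ValueError("edge line image must match the segmentation size")
    # S13: the trained first cGAN maps (segmentation, edges) to a target-style image.
    return generator(seg_image, edge_image)

# --- Toy stand-ins so the pipeline runs; neither is the disclosed method. ---
def label_boundaries(seg: np.ndarray) -> np.ndarray:
    """Mark pixels whose right or lower neighbour has a different class (0/255)."""
    edges = np.zeros(seg.shape, dtype=np.uint8)
    edges[:, :-1] |= (seg[:, :-1] != seg[:, 1:]).astype(np.uint8)
    edges[:-1, :] |= (seg[:-1, :] != seg[1:, :]).astype(np.uint8)
    return edges * 255

def stub_generator(seg: np.ndarray, edges: np.ndarray) -> np.ndarray:
    """Placeholder for the trained generator: returns the edges as a grey RGB image."""
    return np.stack([edges] * 3, axis=-1)

seg = np.zeros((4, 4), dtype=np.int64)
seg[:, 2:] = 1  # left half is class 0, right half is class 1
styled = generate_styled_image(seg, label_boundaries, stub_generator)
```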

Before images in the target style are generated, the first conditional generative adversarial network model is trained on a plurality of sample images in the target style; the trained model can then be used to generate images in the target style.

In a possible implementation, the first conditional generative adversarial network model is trained on the plurality of sample images as follows: performing semantic segmentation on each sample image to determine a second semantic segmentation image of the sample image, the second semantic segmentation image including multiple classes of scene objects in the sample image; performing edge detection on each sample image to determine a second edge line image of the sample image, the second edge line image including edge information of each class of scene object in the sample image; and training the first conditional generative adversarial network model on the plurality of sample images together with the second semantic segmentation image and the second edge line image of each sample image.

In a possible implementation, the target style is an anime style.

The following describes in detail, as an example, how the first conditional generative adversarial network model is trained on a plurality of sample images in an anime style (the target style).

Step 1: obtain a plurality of sample images in the anime style (target style), for example by capturing frames from anime films and/or anime series.

In one example, the anime style can be further subdivided into different types, such as Japanese anime style, American animation style, and Chinese-style anime, and a plurality of sample images of a single type are obtained for model training.

In one example, the sample images are filtered and only landscape sample images are selected for model training.

Step 2: perform semantic segmentation on each sample image to determine its second semantic segmentation image. For example, the scene objects of each class in a sample image are annotated for semantic segmentation, with a different color representing each class of scene object, yielding the second semantic segmentation image.

Each color region in a semantic segmentation image corresponds to one class of scene object, so the image conveys the semantic information of the picture.

In one example, nine classes of scene objects can be defined: sky, mountain, tree, grass, building, river, road, rock, and other (scene objects not belonging to the preceding eight classes, such as people and vehicles).
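The nine-class scheme can be represented as a label list plus a color palette, with conversions between color segmentation images and class-index maps. The RGB values and function names below are assumptions chosen for illustration; the source only says that different colors represent different classes.

```python
import numpy as np

# The nine scene classes from the example above, indexed 0..8.
CLASSES = ["sky", "mountain", "tree", "grass", "building",
           "river", "road", "rock", "other"]

# Hypothetical RGB palette: the source does not specify which color marks which class.
PALETTE = np.array([
    [135, 206, 235],  # sky
    [139, 69, 19],    # mountain
    [34, 139, 34],    # tree
    [124, 252, 0],    # grass
    [128, 128, 128],  # building
    [0, 0, 255],      # river
    [255, 215, 0],    # road
    [105, 105, 105],  # rock
    [0, 0, 0],        # other
], dtype=np.uint8)

def labels_to_color(labels: np.ndarray) -> np.ndarray:
    """H x W class-index map -> H x W x 3 color segmentation image."""
    return PALETTE[labels]

def color_to_labels(color_img: np.ndarray) -> np.ndarray:
    """H x W x 3 color image -> H x W class indices; unknown colors become 'other'."""
    labels = np.full(color_img.shape[:2], CLASSES.index("other"), dtype=np.int64)
    for idx, rgb in enumerate(PALETTE):
        labels[np.all(color_img == rgb, axis=-1)] = idx
    return labels
```

Mapping unrecognized colors to "other" is a design choice for robustness against anti-aliased brush strokes in user input; a stricter system might reject such pixels instead.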

Step 3: perform edge detection on each sample image to determine its second edge line image.

In one example, the Canny edge detection algorithm is applied to each sample image to obtain its second edge line image.

Besides the Canny algorithm, other edge detection algorithms can also be used; the present disclosure does not specifically limit this.
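In OpenCV, Canny edge detection is available as `cv2.Canny(image, threshold1, threshold2)`; the thresholds used in the disclosure are not stated. Since other edge detectors are explicitly permitted, the NumPy sketch below shows a simpler alternative, a thresholded Sobel gradient magnitude. It is not Canny: it skips Gaussian smoothing, non-maximum suppression and hysteresis, so its edges are thicker. The function name and the threshold of 100 are arbitrary assumptions.

```python
import numpy as np

def sobel_edges(gray: np.ndarray, threshold: float = 100.0) -> np.ndarray:
    """Binary edge map (0/255) from the Sobel gradient magnitude of a grayscale image."""
    g = gray.astype(np.float64)
    p = np.pad(g, 1, mode="edge")  # replicate borders so the output keeps H x W
    # Horizontal Sobel: right column minus left column, weights 1-2-1.
    gx = (p[:-2, 2:] + 2 * p[1:-1, 2:] + p[2:, 2:]) - (p[:-2, :-2] + 2 * p[1:-1, :-2] + p[2:, :-2])
    # Vertical Sobel: bottom row minus top row, weights 1-2-1.
    gy = (p[2:, :-2] + 2 * p[2:, 1:-1] + p[2:, 2:]) - (p[:-2, :-2] + 2 * p[:-2, 1:-1] + p[:-2, 2:])
    magnitude = np.hypot(gx, gy)
    return np.where(magnitude > threshold, 255, 0).astype(np.uint8)
```

For a vertical step between columns 2 and 3 of an image, this marks both columns 2 and 3 as edges, illustrating the two-pixel-wide response that Canny's non-maximum suppression would thin to one pixel.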

An edge line image contains the edge information of each class of scene object and conveys the detail information of the picture.

Step 4: scale or crop each sample image together with its second semantic segmentation image and second edge line image.

Because different sample images may come from different multimedia sources (anime films or anime series), each sample image and its corresponding second semantic segmentation image and second edge line image are scaled or cropped so that all images used in subsequent model training have the same size, yielding an image dataset of uniform size.

If all the sample images, second semantic segmentation images, and second edge line images already have the same size, the scaling or cropping of Step 4 is unnecessary.
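Step 4 can be sketched as scaling the short side to a fixed size and then center-cropping, applied identically to all three images of a triplet so that their pixels stay aligned. Nearest-neighbour resampling is used here because interpolating a color-coded segmentation image would create colors that belong to no class. The function names and the 256-pixel default are hypothetical.

```python
import numpy as np

def center_crop(img: np.ndarray, size: int) -> np.ndarray:
    """Crop the central size x size window (works for H x W and H x W x C)."""
    h, w = img.shape[:2]
    if h < size or w < size:
        raise ValueError("image smaller than crop size")
    top, left = (h - size) // 2, (w - size) // 2
    return img[top:top + size, left:left + size]

def resize_nearest(img: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Nearest-neighbour resize; never invents new label values or colors."""
    h, w = img.shape[:2]
    rows = np.arange(out_h) * h // out_h
    cols = np.arange(out_w) * w // out_w
    return img[rows[:, None], cols[None, :]]

def align_triplet(sample, seg, edges, size=256):
    """Scale the short side to `size`, then center-crop all three images identically."""
    h, w = sample.shape[:2]
    scale = size / min(h, w)
    new_h, new_w = max(size, round(h * scale)), max(size, round(w * scale))
    return tuple(center_crop(resize_nearest(img, new_h, new_w), size)
                 for img in (sample, seg, edges))
```

For the RGB sample image alone, a smoother interpolation such as bilinear could be substituted, provided the output size and crop window stay the same as for the other two images.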

Step 5: for each sample image, use the data group (sample image, second semantic segmentation image of the sample image, second edge line image of the sample image) as training data, so that the model learns the mapping among the sample image, its second semantic segmentation image, and its second edge line image.

In one example, the first conditional generative adversarial network model includes a generator G and a discriminator D.

The training process is described below for an arbitrary data group (sample image y, second semantic segmentation image x1 of the sample image, second edge line image x2 of the sample image).

First, the second semantic segmentation image x1 and the second edge line image x2 of the sample image are input into the generator G.

Next, the generator G generates an anime-style (target-style) image y' from the second semantic segmentation image x1, the second edge line image x2, and a random vector z, i.e., G(x1, x2, z) → y'.
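The source states that G takes x1, x2 and z but not how they are combined. One common arrangement in conditional image-to-image GANs, assumed here, is to one-hot encode the class map, binarize the edge map, tile the noise vector over the image plane, and concatenate everything along the channel axis. `generator_input` and `NUM_CLASSES` are hypothetical names; the nine classes follow the example scheme in Step 2.

```python
import numpy as np

NUM_CLASSES = 9  # sky, mountain, tree, grass, building, river, road, rock, other

def generator_input(x1: np.ndarray, x2: np.ndarray, z: np.ndarray) -> np.ndarray:
    """Stack x1 (H x W class ids), x2 (H x W edge map, 0/255) and z (length-k noise)
    into a C x H x W array with C = NUM_CLASSES + 1 + k."""
    h, w = x1.shape
    # One channel per class: 1.0 where the pixel belongs to that class.
    one_hot = (np.arange(NUM_CLASSES)[:, None, None] == x1[None]).astype(np.float32)
    edges = (x2 > 0).astype(np.float32)[None]  # 1 x H x W, binarized
    # Tile each noise component over the whole image plane.
    noise = np.broadcast_to(z.astype(np.float32)[:, None, None], (z.shape[0], h, w))
    return np.concatenate([one_hot, edges, noise], axis=0)
```

One-hot encoding avoids implying an order among classes, which feeding raw class indices or RGB colors to the network would do.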

Then, the discriminator D judges whether the generated image y' can pass as the real sample image y, and feeds the result back to the generator G, which uses it to improve its generation ability.

Finally, the generator G and the discriminator D play repeated games against each other, i.e., the following objective function is minimized:

When the generation ability of the generator G reaches its optimum, training of the first conditional generative adversarial network model is complete.
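The objective function referred to above did not survive text extraction. Assuming the standard conditional GAN formulation, with the condition being the pair (x1, x2), it would read as follows; the generator G minimizes it while the discriminator D maximizes it. Pix2pix-style models often add a weighted L1 term λ·E‖y − G(x1, x2, z)‖₁, but whether this disclosure does so is not stated.

```latex
\mathcal{L}_{\mathrm{cGAN}}(G, D) =
  \mathbb{E}_{x_1, x_2, y}\left[\log D(x_1, x_2, y)\right]
  + \mathbb{E}_{x_1, x_2, z}\left[\log\left(1 - D\big(x_1, x_2, G(x_1, x_2, z)\big)\right)\right]

G^{*} = \arg\min_{G} \max_{D} \mathcal{L}_{\mathrm{cGAN}}(G, D)
```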

Once the AI-assisted painting system has trained the first conditional generative adversarial network model, a user who wants the system to generate an anime-style (target-style) image inputs, according to the user's creative needs, a first semantic segmentation image that includes at least one class of target scene object.

FIG. 2 is a schematic diagram of a first semantic segmentation image according to an embodiment of the present disclosure. As shown in FIG. 2, the first semantic segmentation image includes five classes of target scene objects: sky, mountain, tree, grass, and river. In the first semantic segmentation image, different classes of target scene objects may be represented by different colors.

In a possible implementation, determining the first edge line image corresponding to the first semantic segmentation image includes determining it according to the second semantic segmentation image and the second edge line image of each sample image.

The first edge line image can be determined from the second semantic segmentation image and the second edge line image of each sample image in at least the following two ways.

First way:

In a possible implementation, determining the first edge line image according to the second semantic segmentation image and the second edge line image of each sample image includes the following steps:
- determining a first spatial structure feature of the first semantic segmentation image;
- performing dimensionality reduction on the first spatial structure feature to determine the first feature point corresponding to the first semantic segmentation image in a feature space model. The dimension-reduced first spatial structure feature has the same dimension as the feature space model, and the feature space model contains the second feature points corresponding to the second semantic segmentation images of the sample images;
- determining, as third semantic segmentation images, the second semantic segmentation images whose second feature points lie within a threshold Euclidean distance (less than or equal to) of the first feature point;
- determining the first edge line image according to the second edge line images of the same sample images as the third semantic segmentation images.

Before the first edge line image is determined according to the second semantic segmentation image and the second edge line image of each sample image, a feature space model is established according to the second semantic segmentation image of each sample image.

In a possible implementation, the feature space model is established as follows:
- training an autoencoder model on the second semantic segmentation image of each sample image, where the autoencoder model is used to extract spatial structure features of semantic segmentation images;
- determining, with the autoencoder model, the second spatial structure feature of the second semantic segmentation image of each sample image;
- performing dimensionality reduction on these second spatial structure features to obtain the feature space model.

In a possible implementation, performing dimensionality reduction on spatial structure features includes using the principal component analysis (PCA) algorithm.

In summary, an autoencoder model is trained on the second semantic segmentation images of the sample images. The autoencoder model then extracts the second spatial structure feature of each sample image's second semantic segmentation image. PCA reduces the dimensionality of these features to construct the feature space model. This determines, for each sample image's second semantic segmentation image, its second feature point in the feature space model.
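Below is a minimal sketch of building the feature space model. The autoencoder is not implemented. The second spatial structure features are assumed to be plain float vectors it has already produced. PCA is done here by power iteration with deflation on the sample covariance matrix, as an illustration rather than any particular library's routine. The names `fit_feature_space` and `project` are hypothetical.

```python
import math
import random


def fit_feature_space(features, k, iters=500, seed=0):
    """Fit a k-dimensional PCA feature space to autoencoder feature vectors.

    Returns (mean, components); each component is a unit-length direction.
    """
    n, dim = len(features), len(features[0])
    mean = [sum(v[j] for v in features) / n for j in range(dim)]
    centered = [[x - m for x, m in zip(v, mean)] for v in features]
    cov = [[sum(v[i] * v[j] for v in centered) / (n - 1) for j in range(dim)]
           for i in range(dim)]
    rng = random.Random(seed)
    components = []
    for _ in range(k):
        vec = [rng.random() + 0.1 for _ in range(dim)]
        norm0 = math.sqrt(sum(x * x for x in vec))
        vec = [x / norm0 for x in vec]
        # Power iteration converges to the dominant eigenvector of cov.
        for _ in range(iters):
            nxt = [sum(cov[i][j] * vec[j] for j in range(dim)) for i in range(dim)]
            norm = math.sqrt(sum(x * x for x in nxt))
            if norm == 0.0:
                break
            vec = [x / norm for x in nxt]
        # Rayleigh quotient gives the eigenvalue for this direction.
        eig = sum(vec[i] * cov[i][j] * vec[j] for i in range(dim) for j in range(dim))
        components.append(vec)
        # Deflate so the next pass finds the next principal direction.
        cov = [[cov[i][j] - eig * vec[i] * vec[j] for j in range(dim)]
               for i in range(dim)]
    return mean, components


def project(feature, mean, components):
    """Map one feature vector to its k-dimensional point in the feature space."""
    centered = [x - m for x, m in zip(feature, mean)]
    return [sum(c * x for c, x in zip(comp, centered)) for comp in components]


sample_features = [[1.0, 2.0], [2.0, 4.0], [3.0, 6.0], [4.0, 8.0]]
mean, comps = fit_feature_space(sample_features, k=1)
second_points = [project(f, mean, comps) for f in sample_features]
```

The same `mean` and `components` must be reused to project the user's first spatial structure feature. This keeps the first feature point in the same dimension as the feature space model. In practice, a library routine such as scikit-learn's `PCA` (`fit`, then `transform`) would normally replace the hand-rolled power iteration.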

In a possible implementation, determining the first spatial structure feature of the first semantic segmentation image includes determining it with the autoencoder model.

For the first semantic segmentation image input by the user, the autoencoder model trained above on the sample images' second semantic segmentation images may be used to determine its first spatial structure feature.

After the first spatial structure feature is determined, PCA may be used to reduce its dimensionality. This determines the first feature point corresponding to the first semantic segmentation image in the feature space model. The dimension-reduced first spatial structure feature has the same dimension as the feature space model.

In one example, the Euclidean distance between feature points in the feature space model represents the difference in spatial structure between the corresponding semantic segmentation images.

In one example, the third semantic segmentation images are the second semantic segmentation images whose second feature points lie within a threshold Euclidean distance (less than or equal to) of the first feature point. The third semantic segmentation images are therefore highly similar in spatial structure to the first semantic segmentation image. The threshold may be set according to actual conditions, and the present disclosure does not limit its specific value.

For example, let the threshold be x, and let a be the first feature point of the first semantic segmentation image A. In the feature space model, the Euclidean distance from a to the second feature point of each sample image's second semantic segmentation image is computed. Suppose exactly three second feature points lie within distance x of a: b, c, and d. Then the corresponding second semantic segmentation images B, C, and D are all determined as third semantic segmentation images.
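The threshold rule in the example above can be sketched as follows. It assumes, hypothetically, that the second feature points are kept in a dict keyed by sample-image id. `math.dist` is the standard-library Euclidean distance.

```python
import math


def select_by_threshold(first_point, second_points, threshold):
    """Return ids of sample images whose second feature point lies within
    `threshold` (Euclidean distance, inclusive) of the first feature point."""
    return [
        image_id
        for image_id, point in second_points.items()
        if math.dist(first_point, point) <= threshold
    ]


points = {"B": [0.5, 0.0], "C": [0.0, 1.0], "D": [0.0, -1.0], "E": [3.0, 4.0]}
third_ids = select_by_threshold([0.0, 0.0], points, threshold=1.0)
```

As in the example with feature points b, c and d, three of the four candidates fall within the threshold. E, at distance 5, is excluded.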

In another example, the third semantic segmentation images are the second semantic segmentation images corresponding to a preset number of second feature points closest to the first feature point. The preset number may be set according to actual conditions and is not specifically limited in the present disclosure.

For example, let the preset number be 6. In the feature space model, the Euclidean distance from the first feature point a to the second feature point of each sample image's second semantic segmentation image is computed. The six second feature points closest to a are found: b, c, d, e, f, and h. The corresponding second semantic segmentation images B, C, D, E, F, and H are then all determined as third semantic segmentation images.
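The preset-number variant is a k-nearest-neighbour selection. The sketch below uses the same hypothetical dict of second feature points keyed by sample-image id. `heapq.nsmallest` returns the k closest entries in ascending distance order without sorting the whole collection.

```python
import heapq
import math


def select_nearest(first_point, second_points, k):
    """Return ids of the k sample images whose second feature points are
    closest (Euclidean distance) to the first feature point, nearest first."""
    nearest = heapq.nsmallest(
        k, second_points.items(), key=lambda item: math.dist(first_point, item[1])
    )
    return [image_id for image_id, _ in nearest]


points = {
    "b": [1.0, 0.0], "c": [0.0, 2.0], "d": [3.0, 0.0], "e": [0.0, 4.0],
    "f": [5.0, 0.0], "h": [0.0, 6.0], "i": [7.0, 0.0], "j": [0.0, 8.0],
}
third_ids = select_nearest([0.0, 0.0], points, k=6)
```

Unlike the threshold rule, this always returns exactly k candidates (or all of them if fewer exist), regardless of how far away they are.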

After the third semantic segmentation images are determined, an edge line image material library is built from the second edge line images of the same sample images as the third semantic segmentation images. The third semantic segmentation images are the most similar in spatial structure to the first semantic segmentation image. The first edge line image corresponding to the first semantic segmentation image can therefore be determined based on the second edge line images in this library.
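The link between a third semantic segmentation image and "its" second edge line image is simply that both come from the same sample image. The sketch below assumes, hypothetically, that both are indexed by a shared sample-image id. The text does not specify how the first edge line image is then derived from the library, so that step is not shown.

```python
def build_edge_material_library(third_ids, second_edge_images):
    """Collect the second edge line images of the sample images whose second
    semantic segmentation images were selected as third images.

    third_ids: ids of the selected sample images, e.g. ["B", "C", "D"].
    second_edge_images: mapping from sample-image id to its second edge line image.
    """
    missing = [image_id for image_id in third_ids if image_id not in second_edge_images]
    if missing:
        raise KeyError(f"no edge line image for sample images: {missing}")
    # Preserve the selection order (e.g. nearest first).
    return {image_id: second_edge_images[image_id] for image_id in third_ids}


edges = {"A": "edge_A", "B": "edge_B", "C": "edge_C", "D": "edge_D"}
library = build_edge_material_library(["B", "C", "D"], edges)
```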

Second way:

In a possible implementation, determining the first edge line image according to the second semantic segmentation image and the second edge line image of each sample image includes inputting the first semantic segmentation image into a second conditional generative adversarial neural network model to determine the first edge line image. The second model is trained on the second semantic segmentation image and the second edge line image of each sample image.

For each sample image, the data group "second semantic segmentation image of the sample image - second edge line image of the sample image" is used as training data. The model learns the mapping from second semantic segmentation images to second edge line images, yielding the second conditional generative adversarial neural network model.

Inputting the user's first semantic segmentation image into the second conditional generative adversarial neural network model then generates the corresponding first edge line image.

FIG. 3 is a schematic diagram of the first edge line image corresponding to the first semantic segmentation image shown in FIG. 2, according to an embodiment of the present disclosure.

After the first edge line image corresponding to the user's first semantic segmentation image is determined, the first semantic segmentation image and the first edge line image are input into the first conditional generative adversarial neural network model. This generates an image in the anime style (the target style) corresponding to the first semantic segmentation image.

FIG. 4 is a schematic diagram of an anime-style image corresponding to the first semantic segmentation image shown in FIG. 2, according to an embodiment of the present disclosure.

In this embodiment, a first semantic segmentation image that includes at least one class of target scene objects is received from the user. A first edge line image is then determined for it, containing the edge information of each class of target scene object. Both images are input into the first conditional generative adversarial neural network model, which has been trained on multiple sample images in the target style. The model generates an image in the target style corresponding to the first semantic segmentation image. In this way, a target-style image with rich and complete content can be generated quickly from the semantic segmentation image the user provides.
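The three method steps can be sketched as a small orchestration function. The edge-determination step and the first model are passed in as callables, so either way of determining the edge line image can be plugged in: retrieval from the feature space model, or the second conditional GAN. The function and parameter names are hypothetical, and the lambdas in the usage stand in for real models.

```python
from typing import Callable, TypeVar

Seg = TypeVar("Seg")    # first semantic segmentation image
Edge = TypeVar("Edge")  # first edge line image
Out = TypeVar("Out")    # generated target-style image


def generate_target_style_image(
    first_seg: Seg,
    determine_edge_image: Callable[[Seg], Edge],
    first_cgan: Callable[[Seg, Edge], Out],
) -> Out:
    """Receive the segmentation image, determine its edge line image,
    then feed both conditions to the first conditional GAN."""
    first_edge = determine_edge_image(first_seg)
    return first_cgan(first_seg, first_edge)


result = generate_target_style_image(
    "seg",
    determine_edge_image=lambda s: s + ":edges",
    first_cgan=lambda s, e: (s, e),
)
```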

FIG. 5 is a schematic structural diagram of an image generation apparatus according to an embodiment of the present disclosure. The apparatus 50 shown in FIG. 5 may be used to perform the steps of the method embodiment shown in FIG. 1. The apparatus 50 includes:

a receiving module 51, configured to receive a first semantic segmentation image input by a user, where the first semantic segmentation image includes at least one class of target scene objects;

a first determining module 52, configured to determine a first edge line image corresponding to the first semantic segmentation image, where the first edge line image includes edge information of each class of target scene object; and

a generating module 53, configured to input the first semantic segmentation image and the first edge line image into a first conditional generative adversarial neural network model to generate an image in a target style corresponding to the first semantic segmentation image, where the first model is trained on multiple sample images in the target style.

In a possible implementation, the apparatus 50 further includes:

a second determining module, configured to perform semantic segmentation on each sample image to determine a second semantic segmentation image of the sample image, where the second semantic segmentation image includes multiple classes of scene objects in the sample image;

a third determining module, configured to perform edge detection on each sample image to determine a second edge line image of the sample image, where the second edge line image includes edge information of each class of scene object in the sample image; and

a first model training module, configured to train the first conditional generative adversarial neural network model according to the multiple sample images and the second semantic segmentation image and second edge line image of each sample image.
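The third determining module performs edge detection but names no detector; a detector such as Canny (e.g. OpenCV's `cv2.Canny`) would be a typical choice. The dependency-free sketch below instead marks a pixel as an edge when the sum of its absolute forward differences exceeds a threshold. This gradient-threshold rule and the name `edge_line_image` are assumptions for illustration only.

```python
def edge_line_image(gray, threshold):
    """Binary edge map of an H x W grayscale image (nested lists).

    A pixel is marked 1 when |dx| + |dy| > threshold, where dx and dy are
    forward differences to the right and lower neighbours (0 at the border).
    """
    h, w = len(gray), len(gray[0])
    edges = [[0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            dx = gray[r][c + 1] - gray[r][c] if c + 1 < w else 0
            dy = gray[r + 1][c] - gray[r][c] if r + 1 < h else 0
            if abs(dx) + abs(dy) > threshold:
                edges[r][c] = 1
    return edges


# A vertical step from dark (0) to bright (255) between columns 1 and 2.
step = [[0, 0, 255, 255] for _ in range(3)]
edges = edge_line_image(step, threshold=50)
```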

In a possible implementation, the first determining module 52 includes:

a first determining submodule, configured to determine the first edge line image according to the second semantic segmentation image and the second edge line image of each sample image.

In a possible implementation, the first determining submodule includes:

a first determining unit, configured to determine a first spatial structure feature of the first semantic segmentation image;

a second determining unit, configured to perform dimensionality reduction on the first spatial structure feature to determine the first feature point corresponding to the first semantic segmentation image in a feature space model, where the dimension-reduced first spatial structure feature has the same dimension as the feature space model, and the feature space model contains the second feature points corresponding to the second semantic segmentation images of the sample images;

a third determining unit, configured to determine, as third semantic segmentation images, the second semantic segmentation images whose second feature points lie within a threshold Euclidean distance (less than or equal to) of the first feature point; and

a fourth determining unit, configured to determine the first edge line image according to the second edge line images of the same sample images as the third semantic segmentation images.

In a possible implementation, the apparatus 50 further includes:

a second model training module, configured to train an autoencoder model on the second semantic segmentation image of each sample image, where the autoencoder model is used to extract spatial structure features of semantic segmentation images;

a fourth determining module, configured to determine, with the autoencoder model, the second spatial structure feature of the second semantic segmentation image of each sample image; and

a fifth determining module, configured to perform dimensionality reduction on the second spatial structure feature of the second semantic segmentation image of each sample image to determine the feature space model.

In a possible implementation, the first determining unit is specifically configured to:

determine the first spatial structure feature with the autoencoder model.

In a possible implementation, the apparatus 50 further includes:

a data processing module, configured to reduce the dimensionality of spatial structure features using the PCA algorithm.

In a possible implementation, the first determining submodule is specifically configured to:

input the first semantic segmentation image into a second conditional generative adversarial neural network model to determine the first edge line image, where the second model is trained on the second semantic segmentation image and the second edge line image of each sample image.

In a possible implementation, the target style is an anime style.

The apparatus 50 provided by the present disclosure can implement each step of the method embodiment shown in FIG. 1 and achieve the same technical effects. To avoid repetition, details are not described here again.

FIG. 6 is a schematic structural diagram of an electronic device according to an embodiment of the present disclosure. As shown in FIG. 6, at the hardware level, the electronic device includes a processor and, optionally, an internal bus, a network interface, and a memory. The memory may include main memory, such as high-speed random-access memory (RAM). It may also include non-volatile memory, such as at least one disk storage device. The electronic device may also include hardware required by other services.

The processor, the network interface, and the memory may be interconnected through the internal bus. The internal bus may be an Industry Standard Architecture (ISA) bus, a Peripheral Component Interconnect (PCI) bus, an Extended Industry Standard Architecture (EISA) bus, or the like. The bus may be divided into an address bus, a data bus, a control bus, and so on. For ease of illustration, FIG. 6 shows only one bidirectional arrow, but this does not mean that there is only one bus or one type of bus.

The memory stores a program. Specifically, the program may include program code, and the program code includes computer operation instructions. The memory may include main memory and non-volatile memory, and it provides instructions and data to the processor.

The processor reads the corresponding computer program from the non-volatile memory into main memory and runs it, forming the image generation apparatus at the logical level. The processor executes the program stored in the memory and specifically performs the following steps:
- receiving a first semantic segmentation image input by a user, where the first semantic segmentation image includes at least one class of target scene objects;
- determining a first edge line image corresponding to the first semantic segmentation image, where the first edge line image includes edge information of each class of target scene object;
- inputting the first semantic segmentation image and the first edge line image into a first conditional generative adversarial neural network model to generate an image in a target style corresponding to the first semantic segmentation image. The first model is trained on multiple sample images in the target style.

In a possible implementation, the processor is specifically configured to:
- perform semantic segmentation on each sample image to determine a second semantic segmentation image of the sample image, where the second semantic segmentation image includes multiple classes of scene objects in the sample image;
- perform edge detection on each sample image to determine a second edge line image of the sample image, where the second edge line image includes edge information of each class of scene object in the sample image;
- train the first conditional generative adversarial neural network model according to the multiple sample images and the second semantic segmentation image and second edge line image of each sample image.

In a possible implementation, the processor is specifically configured to determine the first edge line image according to the second semantic segmentation image and the second edge line image of each sample image.

In a possible implementation, the processor is specifically configured to:
- determine a first spatial structure feature of the first semantic segmentation image;
- perform dimensionality reduction on the first spatial structure feature to determine the first feature point corresponding to the first semantic segmentation image in a feature space model. The dimension-reduced first spatial structure feature has the same dimension as the feature space model, and the feature space model contains the second feature points corresponding to the second semantic segmentation images of the sample images;
- determine, as third semantic segmentation images, the second semantic segmentation images whose second feature points lie within a threshold Euclidean distance (less than or equal to) of the first feature point;
- determine the first edge line image according to the second edge line images of the same sample images as the third semantic segmentation images.

In a possible implementation, the processor is specifically configured to:
- train an autoencoder model on the second semantic segmentation image of each sample image, where the autoencoder model is used to extract spatial structure features of semantic segmentation images;
- determine, with the autoencoder model, the second spatial structure feature of the second semantic segmentation image of each sample image;
- perform dimensionality reduction on these second spatial structure features to obtain the feature space model.

In a possible implementation, the processor is specifically configured to determine the first spatial structure feature with the autoencoder model.

In a possible implementation, the processor is specifically configured to reduce the dimensionality of spatial structure features using the PCA algorithm.

In a possible implementation, the processor is specifically configured to input the first semantic segmentation image into a second conditional generative adversarial neural network model to determine the first edge line image. The second model is trained on the second semantic segmentation image and the second edge line image of each sample image.

In a possible implementation, the target style is an anime style.

The processor may be an integrated circuit chip with signal processing capability. During implementation, each step of the above method may be completed by an integrated hardware logic circuit in the processor or by instructions in the form of software. The processor may be a general-purpose processor, such as a central processing unit (CPU) or a network processor (NP). It may also be a digital signal processor (DSP), an application-specific integrated circuit (ASIC), a field-programmable gate array (FPGA) or other programmable logic device, a discrete gate or transistor logic device, or a discrete hardware component. Such a processor can implement or execute the methods, steps, and logical block diagrams disclosed in the embodiments of this specification. A general-purpose processor may be a microprocessor or any conventional processor. The steps of the methods disclosed in the embodiments of this specification may be performed directly by a hardware decoding processor, or by a combination of hardware and software modules in a decoding processor. The software modules may reside in a mature storage medium in the art, such as random access memory, flash memory, read-only memory, programmable read-only memory, electrically erasable programmable memory, or registers. The storage medium is located in the memory. The processor reads the information in the memory and completes the steps of the above method in combination with its hardware.

The electronic device can perform the method of the method embodiment shown in FIG. 1 and implement the functions of that embodiment. Details are not repeated here in the embodiments of this specification.

An embodiment of this specification further provides a computer-readable storage medium storing one or more programs. The one or more programs include instructions. When executed by an electronic device that includes multiple application programs, these instructions cause the electronic device to perform the image generation method of the embodiment shown in FIG. 1, specifically the steps of that method embodiment.

本公开可以是系统、方法和/或计算机程序产品。计算机程序产品可以包括计算机可读存储介质,其上载有用于使处理器实现本公开的各个方面的计算机可读程序指令。The present disclosure may be a system, method and/or computer program product. The computer program product may include a computer-readable storage medium having computer-readable program instructions loaded thereon for causing a processor to implement various aspects of the present disclosure.

计算机可读存储介质可以是可以保持和存储由指令执行设备使用的指令的有形设备。计算机可读存储介质例如可以是――但不限于――电存储设备、磁存储设备、光存储设备、电磁存储设备、半导体存储设备或者上述的任意合适的组合。计算机可读存储介质的更具体的例子(非穷举的列表)包括:便携式计算机盘、硬盘、随机存取存储器(RAM)、只读存储器(ROM)、可擦式可编程只读存储器(EPROM或闪存)、静态随机存取存储器(SRAM)、便携式压缩盘只读存储器(CD-ROM)、数字多功能盘(DVD)、记忆棒、软盘、机械编码设备、例如其上存储有指令的打孔卡或凹槽内凸起结构、以及上述的任意合适的组合。这里所使用的计算机可读存储介质不被解释为瞬时信号本身,诸如无线电波或者其他自由传播的电磁波、通过波导或其他传输媒介传播的电磁波(例如,通过光纤电缆的光脉冲)、或者通过电线传输的电信号。A computer-readable storage medium may be a tangible device that can hold and store instructions for use by the instruction execution device. The computer-readable storage medium may be, for example, but not limited to, an electrical storage device, a magnetic storage device, an optical storage device, an electromagnetic storage device, a semiconductor storage device, or any suitable combination of the foregoing. More specific examples (non-exhaustive list) of computer readable storage media include: portable computer disks, hard disks, random access memory (RAM), read only memory (ROM), erasable programmable read only memory (EPROM) or flash memory), static random access memory (SRAM), portable compact disk read only memory (CD-ROM), digital versatile disk (DVD), memory sticks, floppy disks, mechanically coded devices, such as printers with instructions stored thereon Hole cards or raised structures in grooves, and any suitable combination of the above. Computer-readable storage media, as used herein, are not to be construed as transient signals per se, such as radio waves or other freely propagating electromagnetic waves, electromagnetic waves propagating through waveguides or other transmission media (eg, light pulses through fiber optic cables), or through electrical wires transmitted electrical signals.

The computer-readable program instructions described herein may be downloaded from a computer-readable storage medium to respective computing/processing devices, or to an external computer or external storage device via a network such as the Internet, a local area network, a wide area network, and/or a wireless network. The network may include copper transmission cables, optical fiber transmission, wireless transmission, routers, firewalls, switches, gateway computers, and/or edge servers. A network adapter card or network interface in each computing/processing device receives the computer-readable program instructions from the network and forwards them for storage in a computer-readable storage medium within that computing/processing device.

Computer program instructions for carrying out operations of the present disclosure may be assembly instructions, instruction set architecture (ISA) instructions, machine instructions, machine-dependent instructions, microcode, firmware instructions, or state-setting data. They may also be source code or object code written in any combination of one or more programming languages. These include object-oriented programming languages such as Smalltalk and C++, and conventional procedural programming languages such as the "C" language or similar languages. The computer-readable program instructions may execute entirely on the user's computer, partly on the user's computer, as a stand-alone software package, partly on the user's computer and partly on a remote computer, or entirely on the remote computer or server. Where a remote computer is involved, it may be connected to the user's computer through any type of network, including a local area network (LAN) or a wide area network (WAN). Alternatively, the connection may be made to an external computer (for example, through the Internet using an Internet service provider). In some embodiments, electronic circuitry such as programmable logic circuitry, a field-programmable gate array (FPGA), or a programmable logic array (PLA) is personalized using state information of the computer-readable program instructions. This electronic circuitry may then execute the computer-readable program instructions to implement aspects of the present disclosure.

Aspects of the present disclosure are described herein with reference to flowcharts and/or block diagrams of methods, apparatuses (systems), and computer program products according to embodiments of the present disclosure. It should be understood that each block of the flowcharts and/or block diagrams, and combinations of blocks in the flowcharts and/or block diagrams, can be implemented by computer-readable program instructions.

These computer-readable program instructions may be provided to a processor of a general-purpose computer, a special-purpose computer, or another programmable data processing apparatus to produce a machine. When executed by the processor of the computer or other programmable data processing apparatus, the instructions create means for implementing the functions/acts specified in one or more blocks of the flowcharts and/or block diagrams. These computer-readable program instructions may also be stored in a computer-readable storage medium and direct a computer, a programmable data processing apparatus, and/or other devices to function in a particular manner. In that case, the computer-readable medium storing the instructions comprises an article of manufacture that includes instructions implementing aspects of the functions/acts specified in one or more blocks of the flowcharts and/or block diagrams.

The computer-readable program instructions may also be loaded onto a computer, another programmable data processing apparatus, or another device. This causes a series of operational steps to be performed on the computer, apparatus, or device to produce a computer-implemented process, so that the instructions executing on it implement the functions/acts specified in one or more blocks of the flowcharts and/or block diagrams.

The flowcharts and block diagrams in the figures illustrate the architecture, functionality, and operation of possible implementations of systems, methods, and computer program products according to various embodiments of the present disclosure. In this regard, each block in the flowcharts or block diagrams may represent a module, a program segment, or a portion of instructions that comprises one or more executable instructions for implementing the specified logical function(s). In some alternative implementations, the functions noted in the blocks may occur out of the order noted in the figures. For example, two blocks shown in succession may in fact be executed substantially concurrently, or sometimes in the reverse order, depending on the functionality involved. It should also be noted that each block of the block diagrams and/or flowcharts, and combinations of such blocks, can be implemented by a dedicated hardware-based system that performs the specified functions or acts, or by a combination of dedicated hardware and computer instructions.

The embodiments of the present disclosure have been described above. The foregoing description is exemplary, not exhaustive, and is not limited to the disclosed embodiments. Many modifications and variations will be apparent to those of ordinary skill in the art without departing from the scope and spirit of the described embodiments. The terminology used herein was chosen to best explain the principles of the embodiments, their practical application, or their technical improvement over technologies in the marketplace, or to enable others of ordinary skill in the art to understand the embodiments disclosed herein.

Claims (20)

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN201910390118.2A (CN111915703B) | 2019-05-10 | 2019-05-10 | An image generation method and device |

Applications Claiming Priority (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN201910390118.2A (CN111915703B) | 2019-05-10 | 2019-05-10 | An image generation method and device |

Publications (2)

| Publication Number | Publication Date |
|---|---|
| CN111915703A (en) | 2020-11-10 |
| CN111915703B (en) | 2023-05-09 |

Family ID: 73242255

Family Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN201910390118.2A (Active; CN111915703B) | 2019-05-10 | 2019-05-10 | An image generation method and device |

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN111915703B (en) |

Cited By (2)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN114332267A (en)* | 2021-11-05 | 2022-04-12 | 腾讯科技(深圳)有限公司 | Generation method and device of ink-wash painting image, computer equipment and medium |
| CN115100312A (en)* | 2022-07-14 | 2022-09-23 | 猫小兜动漫影视(深圳)有限公司 | Method and device for animating image |

Citations (4)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| US20030095701A1 (en)* | 2001-11-19 | 2003-05-22 | Heung-Yeung Shum | Automatic sketch generation |
| CN102496180A (en)* | 2011-12-15 | 2012-06-13 | 李大锦 | Method for automatically generating wash landscape painting image |
| CN108229504A (en)* | 2018-01-29 | 2018-06-29 | 深圳市商汤科技有限公司 | Method for analyzing image and device |
| CN109712068A (en)* | 2018-12-21 | 2019-05-03 | 云南大学 | Image Style Transfer and analogy method for cucurbit pyrography |

- 2019-05-10: CN application CN201910390118.2A; patent CN111915703B; status: Active

Patent Citations (4)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| US20030095701A1 (en)* | 2001-11-19 | 2003-05-22 | Heung-Yeung Shum | Automatic sketch generation |
| CN102496180A (en)* | 2011-12-15 | 2012-06-13 | 李大锦 | Method for automatically generating wash landscape painting image |
| CN108229504A (en)* | 2018-01-29 | 2018-06-29 | 深圳市商汤科技有限公司 | Method for analyzing image and device |
| CN109712068A (en)* | 2018-12-21 | 2019-05-03 | 云南大学 | Image Style Transfer and analogy method for cucurbit pyrography |

Non-Patent Citations (5)

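| Title |
|---|
| LINGYUN SUN et al., "SmartPaint: a co-creative drawing system based on generative adversarial networks", Frontiers of Information Technology & Electronic Engineering* |
| M. ELAD et al., "Style Transfer Via Texture Synthesis", IEEE Transactions on Image Processing* |
| 卢盛荣 et al., "一种绘画效果生成算法" (A painting effect generation algorithm), 计算机工程与应用 (Computer Engineering and Applications)* |
| 王得丘, "人脸漫画自动生成算法的研究与应用" (Research and application of an automatic face caricature generation algorithm), 中国优秀硕士学位论文全文数据库 信息科技辑 (China Master's Theses Full-text Database, Information Science and Technology)* |
| 赵艳丹, "风格化数字图像的GPU加速生成和编辑" (GPU-accelerated generation and editing of stylized digital images), 中国博士学位论文全文数据库 信息科技辑 (China Doctoral Dissertations Full-text Database, Information Science and Technology)* |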

Cited By (3)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN114332267A (en)* | 2021-11-05 | 2022-04-12 | 腾讯科技(深圳)有限公司 | Generation method and device of ink-wash painting image, computer equipment and medium |
| CN115100312A (en)* | 2022-07-14 | 2022-09-23 | 猫小兜动漫影视(深圳)有限公司 | Method and device for animating image |
| CN115100312B (en)* | 2022-07-14 | 2023-08-22 | 猫小兜动漫影视(深圳)有限公司 | Image cartoon method and device |

Also Published As

| Publication number | Publication date |

|---|---|

| CN111915703B (en) | 2023-05-09 |

Similar Documents

| Publication | Title |
|---|---|
| TWI737006B (en) | Cross-modal information retrieval method, device and storage medium |
| US11200424B2 (en) | Space-time memory network for locating target object in video content |
| CN110443266B (en) | Object prediction method and device, electronic equipment and storage medium |
| CN111488873B (en) | Character level scene text detection method and device based on weak supervision learning |
| GB2574087A (en) | Compositing aware digital image search |
| CN109685121A (en) | Training method, image search method, the computer equipment of image encrypting algorithm |
| CN114429566B (en) | Image semantic understanding method, device, equipment and storage medium |
| Smith et al. | A method for animating children’s drawings of the human figure |
| CN114842411A (en) | Group behavior identification method based on complementary space-time information modeling |
| CN116982089A (en) | Method and system for image semantic enhancement |
| US10217224B2 (en) | Method and system for sharing-oriented personalized route planning via a customizable multimedia approach |
| WO2022227218A1 (en) | Drug name recognition method and apparatus, and computer device and storage medium |
| CN116311279A (en) | Sample image generation, model training and character recognition methods, equipment and media |
| CN113674374A (en) | Chinese text image generation method and device based on generation type countermeasure network |
| CN111915703B (en) | An image generation method and device |
| CN110969641A (en) | Image processing method and device |
| Wu et al. | Pre‐trained SAM as data augmentation for image segmentation |
| CN118035493B (en) | Image generation method, device, equipment, storage medium and program product |
| CN116304146B (en) | Image processing methods and related devices |
| CN118115624A (en) | Image layering generation system, method and device based on stable diffusion model |
| WO2024197829A1 (en) | Single-character detection method and apparatus, model training method and apparatus, and device and medium |
| CN114792406A (en) | Method, apparatus, and medium for generating a tag for user-generated content |
| CN113627124B (en) | A processing method, device and electronic device for font migration model |
| CN115761389A (en) | Image sample amplification method and device, electronic device and storage medium |
| CN115712696A (en) | Data processing method, device, equipment and computer readable storage medium |

Legal Events

| Date | Code | Title | Description |
|---|---|---|---|
| | PB01 | Publication | |
| | PB01 | Publication | |