CN111915591B - A High-Quality Image Extrapolation System Based on Spiral Generation Network - Google Patents

A High-Quality Image Extrapolation System Based on Spiral Generation NetworkDownload PDFInfo

- Publication number

- CN111915591B CN111915591BCN202010768731.6ACN202010768731ACN111915591BCN 111915591 BCN111915591 BCN 111915591BCN 202010768731 ACN202010768731 ACN 202010768731ACN 111915591 BCN111915591 BCN 111915591B

- Authority

- CN

- China

- Prior art keywords

- image

- spiral

- extrapolation

- slice

- generation network

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Active

Links

Images

Classifications

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T7/00—Image analysis

- G06T7/0002—Inspection of images, e.g. flaw detection

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T7/00—Image analysis

- G06T7/40—Analysis of texture

- G06T7/49—Analysis of texture based on structural texture description, e.g. using primitives or placement rules

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T7/00—Image analysis

- G06T7/90—Determination of colour characteristics

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T2207/00—Indexing scheme for image analysis or image enhancement

- G06T2207/10—Image acquisition modality

- G06T2207/10024—Color image

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T2207/00—Indexing scheme for image analysis or image enhancement

- G06T2207/20—Special algorithmic details

- G06T2207/20081—Training; Learning

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T2207/00—Indexing scheme for image analysis or image enhancement

- G06T2207/20—Special algorithmic details

- G06T2207/20084—Artificial neural networks [ANN]

Landscapes

- Engineering & Computer Science (AREA)

- Computer Vision & Pattern Recognition (AREA)

- Physics & Mathematics (AREA)

- General Physics & Mathematics (AREA)

- Theoretical Computer Science (AREA)

- Quality & Reliability (AREA)

- Image Processing (AREA)

Abstract

Translated fromChineseDescription

Translated fromChinese技术领域technical field

本发明涉及图像外推技术领域,尤其涉及一种基于螺旋生成网络的高质量图像外推系统。The invention relates to the technical field of image extrapolation, in particular to a high-quality image extrapolation system based on a spiral generating network.

背景技术Background technique

假设给定一张子图像(比如人脸的部分图像),当人们被要求绘制完整图像时该如何做?实际上,尽管周围区域无法看到,但人类可以根据先验知识初步想象整幅图像的样子,然后根据想象内容,沿图像周围由内向外逐步绘制出。在计算机视觉中,此类问题被称为图像外推。图像外推的目的是将输入子图像周围不可见区域填充内容,例如依据对象(如人脸、车)的部分图像将完整对象绘制出,或扩展场景图像的内容边界。图像外推问题主要具有两项挑战:Given a sub-image (say a partial image of a face), what should one do when asked to draw the full image? In fact, although the surrounding area cannot be seen, humans can initially imagine what the entire image looks like based on prior knowledge, and then gradually draw from the inside to the outside around the image according to the imagined content. In computer vision, such problems are called image extrapolation. The purpose of image extrapolation is to fill the invisible area around the input sub-image with content, for example, to draw the complete object according to the partial image of the object (such as face, car), or to expand the content boundary of the scene image. The image extrapolation problem has two main challenges:

1、外推图像须具有语义合理性,且外推部分需具有特定准确语义内容;1. The extrapolated image must be semantically reasonable, and the extrapolated part must have specific and accurate semantic content;

2、外推区域须与原始输入子图像在结构与纹理上保持一致。2. The extrapolated region must be consistent with the original input sub-image in structure and texture.

即使对人类来说,图像外推也是一项具有挑战的问题,在计算机视觉中,由于近年来对抗生成网络的快速发展,图像外推问题得以在此基础上得到初步解决。目前基于对抗生成网络的图像外推方法首先生成整个完整图像,再将原始输入子图像根据外推位置粘贴到完整图像上,这种方法会使得最终的结果在输入子图像区域与外推区域之间产生视觉上的不适感。另外,由于外推区域与输入子图像之间的存在普遍的长距离上下文关系,直接应用图像补全方法常常会导致产生语义不一致的模糊现象。Image extrapolation is a challenging problem even for humans, and in computer vision, due to the rapid development of adversarial generative networks in recent years, the image extrapolation problem has been preliminarily solved on this basis. The current image extrapolation method based on adversarial generative network first generates the entire complete image, and then pastes the original input sub-image onto the complete image according to the extrapolated position. This method will make the final result between the input sub-image area and the extrapolated area. produce visual discomfort. In addition, due to the prevalent long-range contextual relationship between the extrapolated region and the input sub-image, directly applying image completion methods often leads to semantically inconsistent blurring.

发明内容SUMMARY OF THE INVENTION

本发明提供一种基于螺旋生成网络的高质量图像外推系统,解决的技术问题在于:目前基于对抗生成网络的图像外推方法,还未实现使得外推图像具有语义合理性,且外推部分需具有特定准确语义内容,以及外推区域与原始输入子图像在结构与纹理上保持一致。The present invention provides a high-quality image extrapolation system based on a spiral generating network, and the technical problem to be solved is: the current image extrapolation method based on the confrontation generating network has not yet realized that the extrapolated image is semantically reasonable, and the extrapolation part It needs to have specific and accurate semantic content, and the structure and texture of the extrapolated region and the original input sub-image should be consistent.

为解决以上技术问题,本发明提供一种基于螺旋生成网络的高质量图像外推系统,螺旋生成网络包括假想图生成网络、切片生成网络、螺旋辨别器和外推辨别器;In order to solve the above technical problems, the present invention provides a high-quality image extrapolation system based on a spiral generation network, the spiral generation network includes an imaginary image generation network, a slice generation network, a spiral discriminator and an extrapolation discriminator;

所述假想图生成网络包括假想图生成器和假想图辨别器,所述假想图生成网络用于在所述假想图生成器、所述假想图辨别器于假想图目标损失函数的对抗下,生成输入子图像的假想图;The hypothetical graph generation network includes a hypothetical graph generator and a hypothetical graph discriminator, and the hypothetical graph generation network is used to generate a hypothetical graph under the confrontation of the hypothetical graph generator and the hypothetical graph discriminator with the hypothetical graph target loss function. An imaginary image of the input sub-image;

所述切片生成网络包括切片算子、切片生成器、外推算子;所述切片算子用于将所述假想图切出假想图切片以及将当前螺旋点的外推图像切出最近邻切片;所述切片生成器用于根据所述最近邻切片、所述假想图切片与所述输入子图像生成外推切片;所述外推算子用于将所述外推切片缝合回当前螺旋点的外推图像,得到下一螺旋点的外推图像,如此完成一次次的螺旋外推;The slice generation network includes a slice operator, a slice generator, and an extrapolation operator; the slice operator is used to cut the imaginary graph into an imaginary graph slice and cut the extrapolated image of the current spiral point into a nearest neighbor slice; The slice generator is used to generate an extrapolated slice according to the nearest neighbor slice, the imaginary image slice and the input sub-image; the extrapolation operator is used to stitch the extrapolated slice back to the extrapolation of the current helix point image to obtain the extrapolated image of the next spiral point, thus completing the spiral extrapolation again and again;

所述螺旋辨别器和所述外推辨别器用于在螺旋损失目标函数的对抗下,输出多次螺旋外推后的目标图像。The spiral discriminator and the extrapolation discriminator are used to output the target image after multiple spiral extrapolations under the confrontation of the spiral loss objective function.

进一步地,所述假想图目标损失函数由第一对抗损失函数、色相-颜色损失函数、感知损失函数线性组合而成;所述色相-颜色损失函数的表达式为:Further, the imaginary image target loss function is formed by a linear combination of a first confrontation loss function, a hue-color loss function, and a perceptual loss function; the expression of the hue-color loss function is:

其中,是真实图像Y的缩小图像,是所述假想图,h×w是真实图像Y的尺寸,ξ与γ均为常数,γ<1。in, is a reduced image of the real image Y, is the imaginary image, h×w is the size of the real image Y, ξ and γ are both constants, and γ<1.

进一步地,所述假想图目标损失函数的表达式为:Further, the expression of the imaginary graph target loss function is:

其中,分别表示所述第一对抗损失函数、所述感知损失函数,是用于平衡三类损失的权重;in, respectively represent the first adversarial loss function and the perceptual loss function, is for balance The weights of the three types of losses;

所述第一对抗损失函数的表达式为:The first adversarial loss function The expression is:

其中,GI、DI分别表示所述假想图生成器和所述假想图辨别器,表示输入子图像;Wherein, GI and DI represent the imaginary graph generator and the imaginary graph discriminator, respectively, represents the input sub-image;

所述感知损失函数的表达式为:The expression of the perceptual loss function is:

其中,Nu是第u个激活层中特征矩阵的元素数量,σu是预训练模型VGG-19中第u层激活特征矩阵。where Nu is the number of elements of the feature matrix in the u-th activation layer, and σu is the activation feature matrix of theu -th layer in the pretrained model VGG-19.

进一步地,所述假想图生成器采用CycleGAN的生成器结构,所述假想图辨别器采用Pix2Pix的辨别器结构。Further, the imaginary graph generator adopts the generator structure of CycleGAN, and the imaginary graph discriminator adopts the discriminator structure of Pix2Pix.

进一步地,将所述输入子图像叠加外推遮盖层M与均匀分布噪声Z后输入所述假想图生成网络中,得到所述假想图。Further, the input sub-image is superimposed with an extrapolation cover layer M and uniformly distributed noise Z, and then input into the imaginary image generation network to obtain the imaginary image.

进一步地,所述螺旋损失目标函数由第二对抗损失函数、L1损失函数、风格损失函数、所述色相-颜色损失函数线性组合而成;Further, the spiral loss objective function is formed by a linear combination of the second adversarial loss function, the L1 loss function, the style loss function, and the hue-color loss function;

所述螺旋损失目标函数的表达式为:The expression of the spiral loss objective function is:

其中,分别表示所述第二对抗损失函数、所述L1损失函数、所述风格损失函数、所述色相-颜色损失函数,λadv、λL1、λstyle、λhue是用于平衡这四类损失的权重;in, respectively represent the second adversarial loss function, the L1 loss function, the style loss function, and the hue-color loss function, λadv , λL1 , λstyle , λhue are used for balance The weights of these four types of losses;

所述第二对抗损失函数的表达式为:The expression of the second adversarial loss function is:

其中,F表示螺旋生成函数,DS表示所述螺旋辨别器,DE表示所述外推辨别器,表示螺旋生成的所述目标图像,表示的外推区域,E表示真实图像Y的外推区域,表示的外推区域,即表示与和Y等大的外推遮盖层。where F represents the spiral generating function, DS represents the spiral discriminator, DE represents the extrapolation discriminator, represents the target image generated by the spiral, express The extrapolation area of , E represents the extrapolation area of the real image Y, express the extrapolation area of means with and an extrapolated mask of size Y.

所述L1损失函数的表达式为:The expression of the L1 loss function is:

所述风格损失函数的表达式为:The expression of the style loss function is:

其中,表示从激活图σv中构造的Gv×GvGram矩阵。in, represents the Gv ×Gv Gram matrix constructed from the activation map σv .

进一步地,所述切片生成器包括编码器、自适应实例归一化模块、空间自适应归一化模块、解码器;将所述假想图切片输入至所述编码器,通过自适应实例归一化模块在其隐变量空间中融合所述输入子图像的风格,其后在所述解码器中通过空间自适应归一化模块引入所述最近邻切片的语义信息,生成最终的所述外推切片。Further, the slice generator includes an encoder, an adaptive instance normalization module, a spatial adaptive normalization module, and a decoder; the imaginary image slices are input to the encoder, and are normalized by adaptive instance normalization. The normalization module fuses the style of the input sub-image in its latent variable space, and then introduces the semantic information of the nearest neighbor slice through the spatial adaptive normalization module in the decoder to generate the final extrapolation slice.

本发明提供的一种基于螺旋生成网络的高质量图像外推系统,其有益效果在于:A high-quality image extrapolation system based on a spiral generating network provided by the present invention has the beneficial effects of:

1、提出假想图生成网络用以模仿人类的想象生成假想图,假想图用于指引切片生成网络以生成更多的图像细节,假想图目标损失函数可使得假想图的色彩与输入子图像更贴近;1. Propose an imaginary image generation network to imitate human imagination to generate an imaginary image. The imaginary image is used to guide the slice generation network to generate more image details. The imaginary image target loss function can make the color of the imaginary image closer to the input sub-image. ;

2、提出切片生成网络,采用切片算子、切片生成器、外推算子,在假想图的语义指引下,将输入子图像沿着上下左右四个方向逐步外推绘制图像,最后得到的目标图像具有更好的语音一致性与高质量细节,与原始的输入子图像在结构、纹理上可基本保持一致;2. Propose a slice generation network, using slice operator, slice generator, extrapolation operator, under the semantic guidance of the imaginary image, the input sub-image is gradually extrapolated along the four directions of up, down, left and right to draw the image, and finally the target image is obtained. It has better speech consistency and high-quality details, and can be basically consistent with the original input sub-image in structure and texture;

3、提出一种新颖的螺旋生成网络,首次将螺旋外推问题视为一个螺旋生长的过程,使输入子图像在假想图生成网络和切片生成网络的作用下沿着螺旋曲线方向在四周逐步生长,每次外推上下左右四个方向的某一个边,直至外推成完整图像,因为假想图生成网络、切片生成网络、螺旋辨别器、外推辨别器的网络结构和相应的目标损失函数设计,使得螺旋生成的外推区域较为真实,且与原始子图像在结构、纹理上可以保持一致,符合人类想象;3. A novel spiral generation network is proposed. For the first time, the spiral extrapolation problem is regarded as a spiral growth process, so that the input sub-image gradually grows around the spiral curve under the action of the imaginary image generation network and the slice generation network. , each time extrapolate one of the four directions of up, down, left, and right, until the extrapolation becomes a complete image, because the network structure of the imaginary graph generation network, slice generation network, spiral discriminator, extrapolation discriminator and the corresponding target loss function design , so that the extrapolated region generated by the spiral is more realistic, and can be consistent with the original sub-image in structure and texture, which is in line with human imagination;

4、面向各种图像外推情形与不同数据集,螺旋生成网络在图像外推问题中达到目前最佳指标与视觉效果。4. Facing various image extrapolation situations and different data sets, the spiral generation network achieves the current best indicators and visual effects in the image extrapolation problem.

附图说明Description of drawings

图1是本发明实施例提供的螺旋生成网络将输入子图像进行螺旋外推的示意图;Fig. 1 is a schematic diagram of spirally extrapolating an input sub-image by a spiral generation network provided by an embodiment of the present invention;

图2是本发明实施例提供的螺旋生成网络将输入子图像进行螺旋外推后的效果示例图;2 is an example diagram of the effect of spirally extrapolating an input sub-image by a spiral generation network provided by an embodiment of the present invention;

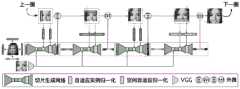

图3是本发明实施例提供的螺旋生成网络的结构示意图;3 is a schematic structural diagram of a spiral generation network provided by an embodiment of the present invention;

图4是本发明实施例提供的螺旋生成网络进行一圈螺旋外推时的切片生成网络的工作流程图;Fig. 4 is the work flow diagram of the slice generation network when the spiral generation network provided by the embodiment of the present invention performs a circle of spiral extrapolation;

图5是本发明实施例提供的不同情况下的定性评价结果;5 is a qualitative evaluation result under different circumstances provided by an embodiment of the present invention;

图6是本发明实施例提供的在CelebA-HQ数据集上的未知边界距离情况的结果示例图;6 is an example diagram of a result of an unknown boundary distance situation on the CelebA-HQ data set provided by an embodiment of the present invention;

图7是本发明实施例提供的螺旋必要性消融实验的定性评价结果示例图;7 is an example diagram of a qualitative evaluation result of a spiral necessity ablation experiment provided by an embodiment of the present invention;

图8是本发明实施例提供的三元切片生成网络输入消融实验的定性评级结果示例图;8 is an example diagram of a qualitative rating result of a ternary slice generation network input ablation experiment provided by an embodiment of the present invention;

图9是本发明实施例提供的切片生成网络不同切片大小的消融实验的定性评价结果示例图;9 is an example diagram of qualitative evaluation results of ablation experiments of different slice sizes of a slice generation network provided by an embodiment of the present invention;

图10是本发明实施例提供的假想图生成网络在Flowers和Stanford Cars数据集上不同损失的比较图。FIG. 10 is a comparison diagram of different losses of the imaginary graph generation network provided by the embodiment of the present invention on the Flowers and Stanford Cars datasets.

具体实施方式Detailed ways

下面结合附图具体阐明本发明的实施方式,实施例的给出仅仅是为了说明目的,并不能理解为对本发明的限定,包括附图仅供参考和说明使用,不构成对本发明专利保护范围的限制,因为在不脱离本发明精神和范围基础上,可以对本发明进行许多改变。The embodiments of the present invention will be explained in detail below in conjunction with the accompanying drawings. The examples are given only for the purpose of illustration and should not be construed as a limitation of the present invention. The accompanying drawings are only used for reference and description, and do not constitute a limitation on the protection scope of the patent of the present invention. limitation, since many changes may be made in the present invention without departing from the spirit and scope of the invention.

目前基于对抗生成网络的图像外推方法,还未实现使得外推图像具有语义合理性,且外推部分需具有特定准确语义内容,以及外推区域与原始输入子图像在结构与纹理上保持一致。为了解决这一技术问题,本实施例提出了一种基于螺旋生成网络的高质量图像外推系统,并对该螺旋生成网络的图像外推效果进行了实验验证,下面分别针对螺旋生成网络和实验部分进行介绍。At present, the image extrapolation method based on adversarial generative network has not yet been realized to make the extrapolated image semantically reasonable, and the extrapolated part must have specific and accurate semantic content, and the extrapolated area and the original input sub-image should be consistent in structure and texture . In order to solve this technical problem, this embodiment proposes a high-quality image extrapolation system based on a spiral generation network, and experimentally verifies the image extrapolation effect of the spiral generation network. section is introduced.

(一)螺旋生成网络(1) Spiral generation network

人类具有对图像之外不可见区域的想象能力,受此启发,针对高质量图像外推问题,本实施例提出一种新颖的螺旋生成网络,此网络将输入子图像沿着螺旋曲线在图像四周四个方向进行外推,最终达到目标尺寸。螺旋生成网络由两部分构成:假想图生成网络与切片生成网络。两个子网络将图像外推问题分解为两个独立的子任务:语义信息推测(通过假想图生成网络)与上下文细节生成(通过切片生成网络)。在这其中,切片生成网络的设计利用了生成外推内容与外推方向之间的相关性,并针对各个方向进行外推切片生成。Human beings have the ability to imagine invisible areas outside the image. Inspired by this, for the high-quality image extrapolation problem, this embodiment proposes a novel spiral generation network, which generates the input sub-image along the spiral curve around the image. Four directions are extrapolated, and the target size is finally reached. The spiral generation network consists of two parts: the imaginary graph generation network and the slice generation network. Two sub-networks decompose the image extrapolation problem into two independent subtasks: semantic information inference (via a hypothetical graph generation network) and contextual detail generation (via a slice generation network). Among them, the design of the slice generation network takes advantage of the correlation between the generated extrapolation content and the extrapolation direction, and extrapolates the slice generation for each direction.

如图1所示,本实施例将图像外推问题视为一个螺旋生长过程,输入子图像沿着螺旋曲线方向在四周逐步生长,每次外推一个边,直至外推成完整图像。本质上讲,螺旋生成网络是一种渐进式的部分到整体的图像生成方法,该网络将输入子图像沿着上下左右四个方向逐步外推绘制图像。以这种方式,本实施例将输入子图像周围的大块不可视区域分解成多个小块方向片,对每一个小片单独生成,这使得最终的外推图像具有更好的语音一致性与高质量细节,如图2所示。As shown in FIG. 1 , in this embodiment, the image extrapolation problem is regarded as a spiral growth process. The input sub-image gradually grows around along the spiral curve direction, and one edge is extrapolated at a time, until a complete image is extrapolated. Essentially, the spiral generative network is a progressive part-to-whole image generation method, in which the network gradually extrapolates the input sub-image along the four directions of up, down, left, and right to draw the image. In this way, this embodiment decomposes the large invisible area around the input sub-image into multiple small directional patches, and generates each patch separately, which makes the final extrapolated image have better speech consistency and consistency. High quality details, as shown in Figure 2.

如图3所示,本实施例将图像外推视为输入子图像的螺旋生长过程:输入子图像沿着螺旋曲线逐步外推至完整图像。其中,给定输入子图像外推距离m=(ml,mt,mr,mb),其中ml、mt、mr和mb分别指左侧、顶侧、右侧与底侧的外推距离。图像外推的目的是输出高质量外推图像(目标图像):其中h′=h+mt+mb,w′=w+ml+mr,X是的一张子图像。As shown in FIG. 3 , in this embodiment, the image extrapolation is regarded as a spiral growth process of the input sub-image: the input sub-image is gradually extrapolated to the complete image along the spiral curve. where, given the input sub-image The extrapolation distance m=(ml , mt , mr , mb ), wherein ml , mt , mr and mb refer to the extrapolated distances of left side, top side, right side and bottom side, respectively. The purpose of image extrapolation is to output a high-quality extrapolated image (target image): where h'=h+mt +mb , w'=w+ml +mr , and X is a sub-image of .

螺旋曲线上存在一系列点P={p1,p2,…,pN},输入子图像在这一系列点上进行某方向外推,直到N次外推后得到目标图像螺旋曲线上的每个点p用当前点在螺旋曲线上第几圈与外推方向两部分表示。方便起见,每个点p上的外推距离τ在本实施例中是相同的。There is a series of points P={p1 ,p2 ,...,pN } on the spiral curve, and the input sub-image is extrapolated in a certain direction on this series of points, until the target image is obtained after N extrapolations Each point p on the helical curve is represented by the number of turns of the current point on the helical curve and the extrapolation direction. For convenience, the extrapolated distance τ at each point p is the same in this embodiment.

在任一图像的螺旋外推过程中,根据给定的外推距离m与外推尺寸τ,可以计算得到螺旋曲线上的总点数N与螺旋总圈数T。本实施例用外推函数Gp(·)代表在点p处的外推过程,对第k个点pk,输入子图像通过函数外推为其中pk+1代表pk在螺旋曲线上的下个点。在到的外推过程中,输入子图像尺寸将会发生相应的增加。最终,输入子图像通过T圈螺旋曲线上N个点外推至目标图像此过程可以表达为:In the spiral extrapolation process of any image, according to the given extrapolation distance m and extrapolation size τ, the total number of points N on the spiral curve and the total number of spiral turns T can be calculated. In this embodiment, the extrapolation function Gp (·) is used to represent the extrapolation process at the point p, and for the kth point pk , the input sub-image by function extrapolated to where pk+1 represents the next point of pk on the helical curve. exist arrive During the extrapolation of , the input sub-image size will increase accordingly. Finally, the input sub-image Extrapolate to the target image by N points on the T-turn helical curve This process can be expressed as:

同时,图像尺寸h与w将分别变为h′与w′,外推距离将变为(0,0,0,0)。At the same time, the image sizes h and w will become h' and w' respectively, and the extrapolated distance will become (0,0,0,0).

值得注意的是,四个方向上的螺旋圈数并不必须等同,由于输入子图像不一定恰好位于目标图像的中心,使得在四个方向的外推可能在不同时刻停止。It is worth noting that the number of helical turns in the four directions is not necessarily equal, since the input sub-image is not necessarily exactly at the center of the target image, so the extrapolation in the four directions may stop at different times.

(1)假想图生成网络(1) Hypothetical graph generation network

本实施例提出假想图生成网络用于根据给定的输入子图像,“想象”一副粗略的完整图像。这张假想图将作为指引用于切片生成网络。这种策略是模仿人类对图像外推问题的想象能力而来,本实施例借鉴此思路用于计算机视觉解决图像外推问题。This embodiment proposes an imaginary image generation network for "imagining" a rough complete image based on a given input sub-image. This hypothetical diagram will be used as a guideline for the slice generation network. This strategy is derived from imitating human's ability to imagine the image extrapolation problem, and this embodiment draws on this idea for computer vision to solve the image extrapolation problem.

假想图生成网络本质上是一个带有编码器-解码器的条件对抗生成网络GI。给定一张输入子图像将其叠加外推遮盖层M与一个均匀分布噪声Z,输入网络中得到假想图在这其中,所有的输入、输出图像均缩放到相同的小尺寸(如128×128),得以充分利用对抗生成网络在低分辨率图像生成上的优势。The hypothetical graph generative network is essentially a conditional adversarial generative network GI with an encoder-decoder. Given an input subimage It superimposes the extrapolation cover layer M and a uniformly distributed noise Z, and enters the network to obtain an imaginary image In this, all input and output images are scaled to the same small size (such as 128×128), which can take full advantage of the adversarial generative network in low-resolution image generation.

在网络的目标函数中,除对抗损失函数外,本实施例还引入了其他损失函数用于得到更好的效果。特别地,本实施例在此网络中提出色相-颜色损失函数,以消除输出结果产生的亮斑及色调偏暗的现象。此外,实验后,发现色相-颜色损失可以同时帮助稳定网络训练过程。In the objective function of the network, in addition to the adversarial loss function, this embodiment also introduces other loss functions to obtain better results. In particular, the present embodiment proposes a hue-color loss function in this network to eliminate the phenomenon of bright spots and dark hues caused by the output result. Furthermore, after experiments, it is found that the hue-color loss can help stabilize the network training process at the same time.

色相-颜色损失函数。色相是颜色的基本元素,大部分人谈论各种颜色时,其表达的即是颜色的色相。因此,在图像外推过程中保持一致的色相将很有帮助。然而根据RGB色彩立方体的HSL/HSV表示,相同色相可能导致完全不用的颜色,这方面需加以限制。为了同时保持色相一致与色彩协调,本实施例将色相-颜色表达为:Hue-color loss function. Hue is the basic element of color. When most people talk about various colors, what they express is the hue of the color. Therefore, maintaining a consistent hue during image extrapolation would be helpful. However, according to the HSL/HSV representation of the RGB color cube, the same hue can lead to completely unused colors, and this is limited. In order to keep the hue consistent and color coordinated at the same time, this embodiment expresses the hue-color as:

其中,是真实图像Y的缩小图像,ξ是为了避免计算中出现0而添加的一个非常小的数字(一般≤0.01),γ<1用于增大差异。在实验中,本实施例按照经验将ξ设置为0.001,将γ设置为0.4。in, is a reduced image of the real image Y, ξ is a very small number (generally ≤ 0.01) added to avoid 0 in the calculation, and γ<1 is used to increase the difference. In the experiment, this embodiment empirically sets ξ to 0.001 and γ to 0.4.

与颜色损失与重建损失相比,本实施例的色相-颜色损失更关心真实的“色彩”,而忽略与灰色相关的部分,这对于外推任务中强调外推图像真实感以及输入子图像与外推区域保持一致性十分贴合。在本实施例的实践中,发现在缺少此损失的情况下,生成图像往往出现色调偏暗、易在色彩鲜丽的目标图像(如花朵)中产生亮斑的问题。而色相-颜色损失可以很好地解决这个问题。此外,本实施例发现此损失可以帮助稳定训练过程。Compared with the color loss and reconstruction loss, the hue-color loss of this embodiment is more concerned with the real "color" and ignores the gray-related part, which is important for the extrapolation task, which emphasizes the extrapolation image realism and the input sub-image and the input sub-image. The extrapolation area maintains consistency and fits very well. In the practice of this embodiment, it is found that in the absence of this loss, the generated image tends to have a dark tone, and it is easy to produce bright spots in a brightly colored target image (such as a flower). And the hue-color loss can solve this problem very well. Furthermore, this example finds that this loss can help stabilize the training process.

假想图目标损失函数。假想图目标损失函数由第一对抗损失函数、色相-颜色损失函数、感知损失函数线性组合而成,其表达式为:Hypothetical graph target loss function. The imaginary image target loss function is composed of a linear combination of the first adversarial loss function, the hue-color loss function, and the perceptual loss function, and its expression is:

其中,分别表示第一对抗损失函数、感知损失函数,是用于平衡三类损失的权重;in, respectively represent the first adversarial loss function and the perceptual loss function, is for balance The weights of the three types of losses;

在下文的实验中,将分别设置为0.1、10与1,在其他的实施例中,可设置为其他的权重值,比如0.2、10、1等。In the experiments below, the They are set to 0.1, 10, and 1, respectively. In other embodiments, they can be set to other weight values, such as 0.2, 10, and 1.

第一对抗损失函数。假想图生成网络的对抗损失表达为:The first adversarial loss function. The adversarial loss of the hypothetical graph generation network is expressed as:

其中,GI、DI分别表示假想图生成器和假想图辨别器,表示输入子图像。Among them, GI and DI represent the imaginary graph generator and imaginary graph discriminator, respectively, represents the input sub-image.

假想图生成器GI在训练中企图降低生成图像对假想图辨别器DI的损失,而假想图辨别器DI企图增加其值,二者之间构成的对抗训练使得假想图生成器GI可以生成更接近真实的图像。The imaginary image generator GI attempts to reduce the loss of the generated images to the imaginary image discriminator DI during training, while the imaginary image discriminator DI attempts to increase its value. The adversarial training between the two makes the imaginary image generator GI A more realistic image can be generated.

感知损失函数。本实施例使用感知损失用于惩罚假想图与真实图像之间的差异,其表达式为:Perceptual loss function. This example uses perceptual loss It is used to penalize the difference between the imaginary image and the real image, and its expression is:

其中,Nu是第u个激活层中特征矩阵的元素数量,σu是预训练模型VGG-19中第u层激活特征矩阵。where Nu is the number of elements of the feature matrix in the u-th activation layer, and σu is the activation feature matrix of theu -th layer in the pretrained model VGG-19.

(2)切片生成网络(2) Slice generation network

本实施例设计了一种新颖的切片生成网络,以实现在螺旋曲线上p点的外推函数功能Gp(·)功能。如图3所示,切片生成网络由切片算子ψp,切片生成器以及外推算子φp组成,除此之外,本实施例还加入了螺旋辨别器DS以及外推辨别器DE(二者未在图3中标识)。In this embodiment, a novel slice generation network is designed to realize the extrapolation function function Gp (·) of point p on the spiral curve. As shown in Fig. 3, the slice generation network consists of slice operatorψp , slice generator and extrapolation operator φp , in addition, this embodiment also adds a spiral discriminatorDS and an extrapolation discriminatorDE (these are not marked in FIG. 3 ).

对于螺旋曲线上第k个点pk,切片生成网络的外推函数将当前螺旋点的外推图像和假想图作为输入,输出外推图像假想图需放大至具有与真实图像相同的尺寸,其由前文假想图生成网络生成。For the kth point pk on the helical curve, the extrapolation function of the slice generation network extrapolates the image of the current helical point and imaginary As input, output extrapolated image imaginary figure need to be zoomed in to match the real image The same size, which is generated by the previous hypothetical graph generation network.

切片算子。切片算子ψp用于将当前螺旋点的图像切出目标切片,其切割后的片尺寸即为在该点的外推尺寸。对于pk处的切片生成网络,将有两个切片算子分别对当前螺旋点的外推图像与假想图切下最近邻切片与假想图切片即:和slice operator. The slice operatorψp is used to cut the image of the current helix point out of the target slice, and the slice size after cutting is the extrapolated size at the point. For the slice generation network at pk , there will be two slice operators for the extrapolated image of the current spiral point respectively with imaginary figure Cut the nearest neighbor slice Slice with imaginary graph which is: and

切片生成器。为了更好利用假想图切片输入子图像X及近邻切片的信息,本实施例设计了一种新的生成结构——“编码器—自适应实例归一化模块—空间自适应归一化模块—解码器”,采用这种结构的生成器将充分利用两个切片与输入子图像的信息,以保持外推图像的语义准确性与视觉一致性。如图4所示,在切片生成器中,将假想图切片输入至编码器,通过自适应实例归一化模块在其隐变量空间中融合输入子图像X的风格,其后在解码器中通过空间自适应归一化模块引入最近邻切片的语义信息,最终得到外推切片此过程可表达为:Slice generator. In order to make better use of imaginary graph slices Input sub-image X and neighbor slices information, this embodiment designs a new generation structure - "encoder - adaptive instance normalization module - spatial adaptive normalization module - decoder", the generator using this structure will make full use of The information of the two slices and the input sub-image to maintain the semantic accuracy and visual consistency of the extrapolated image. As shown in Figure 4, in the slice generator, slice the imaginary graph Input to the encoder, the style of the input sub-image X is fused in its latent variable space through the adaptive instance normalization module, and then the nearest neighbor slice is introduced in the decoder through the spatial adaptive normalization module The semantic information of , and finally get the extrapolated slice This process can be expressed as:

值得一提的是,中的切片近邻与下一个螺旋点的输入子图像的外推切片最近邻,假想图中的假想图切片则对应于中的外推切片的位置。以这种方式,本实施例在语义上组合了来自输入子图像与假想图中相应的切片语义信息,以进行高质量的外推切片生成。It is worth mentioning that, slice neighbors in the input subimage with the next spiral point extrapolated slice of nearest neighbor, imaginary graph Imaginary graph slices in corresponds to Extrapolated slices in s position. In this way, the present embodiment semantically combines the corresponding slice semantic information from the input sub-image and the hypothetical map for high-quality extrapolated slice generation.

此外,考虑到计算复杂度问题,切片生成网络没有独立的辨别器。Furthermore, considering the computational complexity issue, the slice generation network does not have an independent discriminator.

外推算子。外推算子的目的是将切片生成器的输出切片缝合回输入子图像完成一次外推操作:Extrapolation operator. The purpose of the extrapolation operator is to slice the output of the slice generator Stitch back to the input subimage Complete an extrapolation operation:

由以上各部分,本实施例在点pk完成一次外推工作。From the above parts, this embodiment completes an extrapolation work at the pointpk .

共享切片生成网络。螺旋生成网络上包括N个切片生成网络,用于每个螺旋曲线上的点进行图像外推,如图3所示为螺旋曲线上一圈中四个切片生成网络组合。当需要对输入子图像进行较长距离的外推工作时,整个螺旋生成网络将需要大量的切片生成网络,因此其参数量将非常庞大。针对这个问题,本实施例共享了所有的切片生成网络权重,也就是说,整个螺旋生成网络中仅有一个独立的切片生成网络。Shared slice generation network. The spiral generation network includes N slice generation networks, which are used for image extrapolation at the points on each spiral curve. Figure 3 shows the combination of four slice generation networks in a circle on the spiral curve. When the extrapolation of input sub-images needs to be performed over a long distance, the entire spiral generation network will require a large number of slice generation networks, so the amount of parameters will be very large. To address this problem, this embodiment shares all the slice generation network weights, that is, there is only one independent slice generation network in the entire spiral generation network.

(3)螺旋损失设计(3) Spiral loss design

螺旋损失目标函数。螺旋损失目标函数由第二对抗损失函数、L1损失函数、风格损失函数、色相-颜色损失函数线性组合而成。螺旋损失目标函数的表达式为:Spiral loss objective function. The spiral loss objective function is composed of the second adversarial loss function, the L1 loss function, the style loss function, and the hue-color loss function. The expression of the spiral loss objective function is:

其中,分别表示第二对抗损失函数、L1损失函数、风格损失函数、色相-颜色损失函数;λadv、λL1、λstyle、λhue是用于平衡这四类损失的权重,根据经验,设置的权重值分别为0.1、10、250、10,在其他的实施例中,可设置为其他的权重值,比如0.2、20、250、10等。in, respectively represent the second adversarial loss function, L1 loss function, style loss function, hue-color loss function; λadv , λL1 , λstyle , λhue are used for balance The weights of these four types of losses are set to 0.1, 10, 250, and 10 according to experience. In other embodiments, they can be set to other weights, such as 0.2, 20, 250, and 10.

第二对抗损失函数。本实施例设计了一个螺旋辨别器DS和一个外推辨别器DE,以分辨辨别整幅外推图像与外推区域第二对抗损失函数的表达式为:The second adversarial loss function. In this embodiment, a spiral discriminatorDS and an extrapolation discriminatorDE are designed to discriminate the entire extrapolated image with extrapolation area The expression of the second adversarial loss function is:

其中,F表示螺旋生成函数,DS表示螺旋辨别器,DE表示外推辨别器,表示螺旋生成的目标图像,表示的外推区域,E表示真实图像Y的外推区域,表示的外推区域,即表示与和Y等大的外推遮盖层。where F is the spiral generating function, DS is the spiral discriminator, DE is the extrapolation discriminator, represents the target image generated by the spiral, express The extrapolation area of , E represents the extrapolation area of the real image Y, express the extrapolation area of means with and an extrapolated mask of size Y.

F是整个螺旋生成函数,它在训练中企图最小化辨别器DS与DE的损失,而DS与DE则企图最大化F带来的损失,两者之间形成对抗。螺旋辨别器DS注重保持图像图像一致性,而外推辨别器DE则更注重外推区域的切片之间的连续性。F is the entire spiral generating function, which tries to minimize the loss of discriminators DS and DE during training, while DS andDEtry tomaximize the loss caused byF , forming a confrontation between the two. The spiral discriminator DS focuses on maintaining image image consistency, while the extrapolation discriminatorDEfocuses more on the continuity between slices of the extrapolated region.

L1损失函数。本实施例通过L1损失函数最小化外推图像与真实图像之间的重构差异,表达式为:L1 loss function. This embodiment uses the L1 loss function to minimize the reconstruction difference between the extrapolated image and the real image, and the expression is:

其中,表示从激活图σv中构造的Gv×GvGram矩阵。in, represents the Gv ×Gv Gram matrix constructed from the activation map σv .

(4)未知外推距离的情况(4) The case of unknown extrapolation distance

假定仅给予输入子图像和外推输出图像尺寸h′×w′×c,在外推距离m未知的情况下,无法得知X在中的具体位置。在这种情况下,前人的工作将束手无策。而在本实施例的方法中,由于先生成假想图的策略(网络输入去掉外推遮盖层),本实施例可以在放大后的假想图与输入子图像进行模板匹配,这样便可以得知输入子图像在外推图像中的大概位置。通过这种方法,本实施例实际上间接获得了各方向的外推距离。在本下文的实验中,本实施例采用归一化互相关模板匹配方法。Assume that only the input sub-image is given and the extrapolated output image size h′×w′×c, when the extrapolation distance m is unknown, it is impossible to know that X is in specific location in . In this case, the work of the predecessors will be at a loss. However, in the method of this embodiment, due to the strategy of first generating an imaginary image (the network input removes the extrapolation cover layer), this embodiment can perform template matching between the enlarged imaginary image and the input sub-image, so that the input sub-image can be known. The approximate location of the subimage in the extrapolated image. Through this method, the present embodiment actually indirectly obtains the extrapolation distances in all directions. In the experiments below, this embodiment adopts the normalized cross-correlation template matching method.

(5)实现细节(5) Implementation details

网络架构。对于假想图生成网络与切片生成器,本实施例采用类似于CycleGAN方法的编码器—解码器网络结构。不同的是在切片生成器中,本实施例将瓶颈网络部分原有的8个残差块替换为6个,此外本实施例在瓶颈残差块前引入自适应实例归一化模块,并在解码器的两个转置卷积层前引入两个空间自适应归一化模块,从而形成“编码器—自适应实例归一化模块—空间自适应归一化模块—解码器”结构。另外,对于假想图生成辨别器与外推辨别器,本实施例采用基于Pix2Pix方法的图像块辨别器结构;在MUSICAL方法的启发下,本实施例采用了与DenceNet类似结构的网络实现螺旋辨别器。Network Architecture. For the imaginary graph generation network and slice generator, this embodiment adopts an encoder-decoder network structure similar to the CycleGAN method. The difference is that in the slice generator, this embodiment replaces the original 8 residual blocks in the bottleneck network part with 6. In addition, this embodiment introduces an adaptive instance normalization module before the bottleneck residual block, and in the Two spatially adaptive normalization modules are introduced in front of the two transposed convolutional layers of the decoder, thus forming the "encoder-adaptive instance normalization module-spatially adaptive normalization module-decoder" structure. In addition, for the imaginary image generation discriminator and extrapolation discriminator, this embodiment adopts the image block discriminator structure based on the Pix2Pix method; inspired by the MUSICAL method, this embodiment uses a network similar to DenceNet to implement the spiral discriminator. .

训练细节。training details.

假想图生成网络需提前进行独立训练,其生成器与辨别器均使用Adam进行优化,学习率采用相同的参数:α=0.0002,β1=0.5及β2=0.9。本实施例使用相同的配置来训练切片生成网络、螺旋辨别器和外推辨别器。The hypothetical graph generation network needs to be independently trained in advance. Both the generator and the discriminator are optimized by Adam, and the learning rate adopts the same parameters: α=0.0002, β1 =0.5 and β2 =0.9. This embodiment uses the same configuration to train the slice generation network, the spiral discriminator, and the extrapolation discriminator.

(6)具体效果(6) Specific effects

本发明实施例提供的一种基于螺旋生成网络的高质量图像外推系统,其有益效果主要在于:A high-quality image extrapolation system based on a spiral generation network provided by the embodiment of the present invention has the following beneficial effects:

1、提出假想图生成网络用以模仿人类的想象生成假想图,假想图用于指引切片生成网络以生成更多的图像细节,假想图目标损失函数可使得假想图的色彩与输入子图像更贴近;1. Propose an imaginary image generation network to imitate human imagination to generate an imaginary image. The imaginary image is used to guide the slice generation network to generate more image details. The imaginary image target loss function can make the color of the imaginary image closer to the input sub-image. ;

2、提出切片生成网络,采用切片算子、切片生成器、外推算子,在假想图的语义指引下,将输入子图像沿着上下左右四个方向逐步外推绘制图像,得到的目标图像具有更好的语音一致性与高质量细节,与原始的输入子图像在结构、纹理上可基本保持一致;2. A slice generation network is proposed. Using slice operator, slice generator, and extrapolation operator, under the semantic guidance of the imaginary image, the input sub-image is gradually extrapolated and drawn along the four directions of up, down, left, and right, and the obtained target image has Better speech consistency and high-quality details, basically consistent with the original input sub-image in structure and texture;

3、提出一种新颖的螺旋生成网络,首次将螺旋外推问题视为一个螺旋生长的过程,使输入子图像在假想图生成网络和切片生成网络的作用下沿着螺旋曲线方向在四周逐步生长,每次外推上下左右四个方向的某一个边,直至外推成完整图像,因为假想图生成网络、切片生成网络、螺旋辨别器、外推辨别器的网络结构和相应的目标损失函数设计,使得螺旋生成的外推区域较为真实,且与原始子图像在结构、纹理上可以保持一致,符合人类想象;3. A novel spiral generation network is proposed. For the first time, the spiral extrapolation problem is regarded as a spiral growth process, so that the input sub-image gradually grows around the spiral curve under the action of the imaginary image generation network and the slice generation network. , each time extrapolate one of the four directions of up, down, left, and right, until the extrapolation becomes a complete image, because the network structure of the imaginary graph generation network, slice generation network, spiral discriminator, extrapolation discriminator and the corresponding target loss function design , so that the extrapolated region generated by the spiral is more realistic, and can be consistent with the original sub-image in structure and texture, which is in line with human imagination;

4、面向各种图像外推情形与不同数据集,螺旋生成网络在图像外推问题中达到目前最佳指标与视觉效果。4. Facing various image extrapolation situations and different data sets, the spiral generation network achieves the current best indicators and visual effects in the image extrapolation problem.

(二)实验(2) Experiment

为评估本实施例提出的图像外推方法的性能,本实施例分别在八个数据集上进行了实验:CelebA-HQ(人脸),Stanford Cars(车),CUB(鸟),Flowers(花),Paris StreetView(街景),Cityscapes(城市),Place365 Desert Road(公路)和Sky(天空),主要考虑对象(人脸、车、鸟和花)和场景(街景、城市、公路和天空)的情况。In order to evaluate the performance of the image extrapolation method proposed in this example, experiments were carried out on eight datasets in this example: CelebA-HQ (face), Stanford Cars (car), CUB (bird), Flowers (flower). ), Paris StreetView (street view), Cityscapes (city), Place365 Desert Road (road) and Sky (sky), which mainly consider objects (faces, cars, birds and flowers) and scenes (street view, city, road and sky) Happening.

对于Stanford Car和CUB数据集,本实施例使用给定的边界框对数据集中的对象进行裁剪,然后将图片大小调整到256×256,此外本实施例还丢弃了严重失真的对象,从而更适用于图像外推任务。本实施例在表1中列出了针对八个数据集的训练和测试集划分,其中,本实施例对Cityscapes和Place365 Desert Road数据集保留默认的官方划分,在其他数据集上随机选择样本。For the Stanford Car and CUB datasets, this example uses the given bounding box to crop the objects in the dataset, and then resize the image to 256×256. In addition, this example also discards severely distorted objects, making it more applicable for image extrapolation tasks. This embodiment lists the training and test set divisions for eight datasets in Table 1, wherein this embodiment retains the default official division for the Cityscapes and Place365 Desert Road datasets, and randomly selects samples from other datasets.

本实施例评估时考虑了三种不同情况的图像外推任务:(1)在CelebA-HQ,Stanford Cars,CUB和Flowers数据集上进行四边外推,从128×128到256×256;(2)在Cityscapes和Place365 Sky数据集上进行双向外推,从256×256到512×256;(3)在ParisStreetView和Place365 Desert Road进行单向外推,从256×256到512×256。本实施例将本实施例的方法与当前最先进的Boundless和SRN进行比较,其中Boundless比较单向外推情况,SRN三种情况都比较。此外,本实施例对CelebA-HQ数据集上未知外推距离的情况进行处理(即未知外推距离的情况),SRN无法处理这种情况。本实施例所有的模型都是在装有4个NVIDIA GeForce GTX 1080Ti GPU的计算机上,通过PyTorch v1.1实现。Three different image extrapolation tasks are considered in the evaluation of this example: (1) Four-sided extrapolation on CelebA-HQ, Stanford Cars, CUB and Flowers datasets, from 128×128 to 256×256; (2) ) on the Cityscapes and Place365 Sky datasets for bidirectional extrapolation from 256×256 to 512×256; (3) for unidirectional extrapolation on ParisStreetView and Place365 Desert Road from 256×256 to 512×256. In this embodiment, the method of this embodiment is compared with the current state-of-the-art Boundless and SRN, wherein Boundless compares the case of unilateral extrapolation, and the three cases of SRN are compared. In addition, this embodiment handles the case where the extrapolation distance is unknown on the CelebA-HQ dataset (ie, the case where the extrapolation distance is unknown), which cannot be handled by the SRN. All models in this example are implemented with PyTorch v1.1 on a computer with four NVIDIA GeForce GTX 1080Ti GPUs.

表1.八个数据集在训练和测试上的划分情况Table 1. The division of training and testing of eight datasets

(1)定量评价(1) Quantitative evaluation

根据Boundless和SRN,本实施例使用峰值信噪比(PSNR),结构相似性指标度量(SSIM)和弗雷谢起始距离(FID)作为评估语义一致性和视觉真实感的指标(PSNR和SSIM越高越好,FID越低越好)。表2中的结果验证了本实施例的螺旋生成网络在几乎所有情况下均优于Boundless和SRN。值得注意的是,本实施例假想图生成网络(作为一个cGAN)的性能要比最终的螺旋生成网络差,FID的分数表现极差,证明假想图生成网络的视觉效果不佳。According to Boundless and SRN, this embodiment uses Peak Signal-to-Noise Ratio (PSNR), Structural Similarity Indicator Metric (SSIM) and Fraser Onset Distance (FID) as metrics for evaluating semantic consistency and visual realism (PSNR and SSIM) The higher the better, the lower the FID the better). The results in Table 2 verify that the spiral generation network of this example outperforms Boundless and SRN in almost all cases. It is worth noting that the performance of the hypothetical graph generation network (as a cGAN) in this example is worse than the final spiral generation network, and the FID score performance is extremely poor, which proves that the visual effect of the hypothetical graph generation network is not good.

表2.不同情况下的定量评价结果Table 2. Quantitative evaluation results in different situations

为了比较外推输出结果的真实性,本实施例进行了A/B成对测试的用户研究。本实施例的设置类似于SRN。对于每个数据集,本实施例从相同的输入中随机选择40个成对结果,分别由螺旋生成网络与Boundless和螺旋生成网络与SRN推断得出。要求用户在无限时间内,从每对中选择更逼真的图像。每对至少由3个不同的用户来判断。表3中显示的结果,验证了本实施例的螺旋生成网络在所有可用数据集上的性能均优于Boundless和SRN。In order to compare the authenticity of the extrapolated output results, this embodiment conducts a user study of A/B pairwise testing. The setup of this embodiment is similar to SRN. For each dataset, this example randomly selects 40 pairwise results from the same input, inferred by Spiral Generating Network vs. Boundless and Spiral Generating Network vs. SRN, respectively. The user is asked to select a more realistic image from each pair for an unlimited time. Each pair is judged by at least 3 different users. The results shown in Table 3 verify that the spiral generation network of this example outperforms Boundless and SRN on all available datasets.

表3.结果显示Table 3. Results Display

(2)定性评价(2) Qualitative evaluation

本实施例在图5中显示了Boundless、SRN和本实施例的螺旋生成网络定性评价的结果,其中:(a)在CelebA-HQ、Stanford Cars、CUB和Flowers数据集上的四边外推结果;(b)在Cityscapes和Place365 Sky数据集上的双边外推结果;(c)在Paris StreetView andPlace365 Desert Road数据集上的单边外推结果。本实施例的方法从语义一致性和生动的细节来推断更合理的生成结果,避免了毫无意义的内容和混乱的背景。此外,图6(在CelebA-HQ数据集上的未知边界距离情况的结果)和表3(CelebA-HQ)表明本实施例的螺旋生成网络中未知边界距离的情况也可以很好地工作。This example shows the results of the qualitative evaluation of Boundless, SRN, and the spiral generation network of this example in Figure 5, where: (a) Four-sided extrapolation results on CelebA-HQ, Stanford Cars, CUB, and Flowers datasets; (b) Bilateral extrapolation results on Cityscapes and Place365 Sky datasets; (c) Unilateral extrapolation results on Paris StreetView and Place365 Desert Road datasets. The method of this embodiment infers more reasonable generated results from semantic consistency and vivid details, avoiding meaningless content and confusing backgrounds. Furthermore, Figure 6 (results for the unknown boundary distance case on the CelebA-HQ dataset) and Table 3 (CelebA-HQ) show that the spiral generation network of this embodiment also works well for the unknown boundary distance case.

(3)螺旋必要性(3) Spiral necessity

本实施例的螺旋架构对于诸如以下三个方面的图像外推任务是必不可少的,需要进行各种消融实验来验证每一个的必要性(结果如表4和图7所示)。图7展示了螺旋必要性消融实验的定性评价结果,其中:(a)A.一对一;(b)A.水平垂直;(c)A.垂直水平;(d)B.无最近邻切片;(e)B.有子图像切片;(f)C.同时;(g)C.水平垂直;(h)C.垂直水平;(i)螺旋生成网络,逆时针旋转;(j)螺旋生成网络,顺时针旋转。The spiral architecture of this embodiment is essential for image extrapolation tasks such as the following three aspects, and various ablation experiments are required to verify the necessity of each (results are shown in Table 4 and Fig. 7). Figure 7 shows the qualitative evaluation results of the helical necessity ablation experiment, where: (a) A. one-to-one; (b) A. horizontal and vertical; (c) A. vertical horizontal; (d) B. no nearest neighbor slices ; (e) B. There are sub-image slices; (f) C. Simultaneously; (g) C. Horizontal vertical; (h) C. Vertical horizontal; (i) Spiral generation network, rotating counterclockwise; (j) Spiral generation network, rotated clockwise.

A.逐圈外推:(1)一对一定向外推(A.一对一);(2)水平然后垂直方向外推(A.水平垂直);(3)垂直然后水平方向外推(A.垂直水平)。图7中的一个示例表明,破坏了切片在四个方向上的生长,将导致水平小切片和垂直大切片的生成器不协调,从而导致内容混乱的语义不一致。A. Circle-by-circle extrapolation: (1) One-to-one extrapolation (A. One-to-one); (2) Horizontal and then vertical extrapolation (A. Horizontal and vertical); (3) Vertical and then horizontal extrapolation ( A. Vertical horizontal). An example in Figure 7 shows that disrupting the growth of slices in four directions will lead to incongruent generators for small horizontal slices and large vertical slices, resulting in semantic inconsistencies with cluttered content.

B.相邻圈中方向性切片的依赖性:(1)没有最近邻切片输入(B.无最近邻切片);(2)用子图像切片替换最近邻切片(B.有子图像切片)。如图7d和图7e所示,在远离原始子图像区域的部分上显示模糊的细节和不真实的纹理。B. Dependency of directional slices in adjacent circles: (1) no nearest neighbor slice input (B. no nearest neighbor slice); (2) replace nearest neighbor slice with subimage slice (B. with subimage slice). As shown in Fig. 7d and Fig. 7e, blurred details and unrealistic textures are displayed on parts far from the original sub-image area.

C.相邻切片之间的相关性:(1)同时生成四个方向切片(C.同时);(2)生成水平然后垂直切片(C.水平垂直);(3)生成垂直然后水平切片(C.垂直水平)。图7f、7g和7h说明一圈螺旋中的一些切片受非连续切片生成的影响。虽然螺旋生成网络可以在一圈中生成逆时针或顺时针(默认)切片,但顺时针在定量(表4)和定性(图7)评价方面都表现的更好,这表明本实施例以螺旋方式进行图像推断是有效的。C. Correlation between adjacent slices: (1) Generate four directional slices simultaneously (C. Simultaneously); (2) Generate horizontal then vertical slices (C. Horizontal vertical); (3) Generate vertical then horizontal slices ( C. Vertical Horizontal). Figures 7f, 7g and 7h illustrate that some slices in a turn of the helix are affected by the generation of non-consecutive slices. Although the spiral generation network can generate counterclockwise or clockwise (default) slices in one turn, clockwise performs better in both quantitative (Table 4) and qualitative (Fig. 7) evaluations, indicating that this example uses spiral way to perform image inference is effective.

表4.螺旋必要性消融实验的定量评价结果Table 4. Quantitative evaluation results of helical necessity ablation experiments

(4)切片生成网络的分析(4) Analysis of slice generation network

三元切片生成网络的输入。本实施例的切片生成网络采用新的“编码器—自适应实例归一化模块—空间自适应归一化模块—解码器”结构设计,将假象切片编码(编码器)到潜在空间中,并将子图像中的样式信息(自适应实例归一化)与潜在编码融合,然后当合成的潜在编码解码回图像空间(解码器)时,与最近邻切片中的语义信息融合(空间自适应归一化模块),从而得到具有样式,语义和上下文一致性的外推切片。Triadic slices generate the input to the network. The slice generation network of this embodiment adopts a new structural design of "encoder-adaptive instance normalization module-spatial adaptive normalization module-decoder", which encodes false slices (encoder) into the latent space, and The style information in the sub-image (adaptive instance normalization) is fused with the latent encoding, and then when the synthesized latent encoding is decoded back into the image space (decoder), it is fused with the semantic information in the nearest neighbor slice (spatial adaptive normalization). normalized modules), resulting in extrapolated slices that are stylistically, semantically, and contextually consistent.

本实施例对三元切片生成网络输入:假象切片、子图像和最近邻切片进行了消融研究,从而验证它们和相应结构的效能。本实施例构造一个基准,它是一个以假象切片作为唯一输入的编码自编码网络,然后分别使用“编码器—自适应实例归一化模块—解码器”和“编码器—空间自适应归一化模块—解码器”添加子图像和最近邻切片进行比较。此外,本实施例还将假象切片和最近邻切片交换,以进行进一步分析。表5和图8显示了对三元切片生成网络输入的消融实验结果,验证了本实施例结构的优势。图8中:(a)基准;(b)添加子图像;(c)添加最近邻切片;(d)假象切片和最近邻切片交换;(e)螺旋生成网络。This example conducts ablation studies on ternary slice generation network inputs: artifact slices, sub-images, and nearest-neighbor slices to verify their performance and the corresponding structures. This example constructs a benchmark, which is an encoded autoencoder network with an artifact slice as the only input, and then uses the "encoder-adaptive instance normalization module-decoder" and "encoder-spatial adaptive normalization" respectively. "Module - Decoder" to add sub-images and nearest neighbor slices for comparison. In addition, this embodiment also swaps artifact slices and nearest neighbor slices for further analysis. Table 5 and Figure 8 show the results of ablation experiments on the input of the ternary slice generation network, verifying the advantages of the structure of this embodiment. In Fig. 8: (a) datum; (b) adding sub-image; (c) adding nearest neighbor slice; (d) swapping artifact slice and nearest neighbor slice; (e) spiral generative network.

从视觉上看,比起输入的子图像,子图像和生成切片之间的样式看起来更协调(图8a和8b);通过输入最近邻切片,生成的切片在语义上似乎也与子图像更加一致(图8a和8c);如果本实施例交换假象切片和最近邻切片,会出现语义不一致的失真内容(图8d);借助三元输入,螺旋生成网络的性能得到了改善(图8e)。Visually, the styles between the sub-images and the generated slices appear to be more coordinated than the input sub-images (Figures 8a and 8b); with the input nearest neighbor slices, the generated slices also appear to be semantically closer to the sub-images Consistent (Figures 8a and 8c); if this example swaps artifact slices and nearest-neighbor slices, semantically inconsistent distorted content (Figure 8d); with ternary inputs, the performance of the spiral generation network is improved (Figure 8e).

表5.三元切片生成网络输入消融实验的定量评级结果Table 5. Quantitative rating results of ternary slice generation network input ablation experiments

不同的切片大小。然后,本实施例研究切片大小τ的影响,并采用五种不同大小的τ={4,8,16,32,64}进行消融实验。表6和图9的结果表明,小尺寸的切片可能会导致纹理不清晰(图9a),大尺寸的切片可能会产生更明显的缝合块效应(图9d)。综合考虑有效性和效率,本实施例设置τ=32。Different slice sizes. Then, this example studies the effect of slice size τ, and conducts ablation experiments with five different sizes of τ={4, 8, 16, 32, 64}. The results in Table 6 and Fig. 9 indicate that small-sized slices may result in unclear texture (Fig. 9a), and large-sized slices may produce more pronounced stitch-block effects (Fig. 9d). Considering the effectiveness and efficiency comprehensively, this embodiment sets τ=32.

表6.切片生成网络不同切片大小的消融实验的定量评价结果Table 6. Quantitative evaluation results of ablation experiments with different slice sizes of the slice generation network

(5)色相-颜色损失的效能(5) Hue-color loss efficiency

本实施例最后分析了色相-颜色损失的效能。为方便起见,本实施例使用假想图生成网络在Flowers和Stanford Cars数据集上进行了实验,通过删除色相-颜色损失作为基准,并用颜色损失和L1损失进行比较,结果参考表7和图10。图10中:(a)和(b)分别展示Flowers和Stanford Cars数据集上的定性评价结果,从左到右分别是基线、颜色损失、L1损失、色相-颜色损失;(c)在逐步训练中,Flowers和Stanford Cars数据集上相应的总损失曲线。This example concludes with an analysis of the efficacy of hue-color loss. For convenience, this example uses an imaginary graph generation network to conduct experiments on the Flowers and Stanford Cars datasets, by removing the hue-color loss as a benchmark, and using the color loss and L1 loss for comparison, the results refer to Table 7 and Figure 10. In Figure 10: (a) and (b) show the qualitative evaluation results on the Flowers and Stanford Cars datasets, respectively, from left to right are the baseline, color loss, L1 loss, and hue-color loss; (c) Stepwise training , the corresponding total loss curves on the Flowers and Stanford Cars datasets.

表7中的结果表明,就PSNR、SSIM和FID而言,色相-颜色损失更为有效。图10a和10b展示了在具有不同损失的训练中循序渐进的推断,从中本实施例观察到基准中出现了暗色和亮色点,L1损失和颜色损失可能对其中一个问题有所缓和,而本实施例的色相-颜色损失很好地处理了这两个问题。图10c中相应的总损失曲线表明,通过使用色相-颜色损失,总损失在开始时会下降很快,因此模型可以识别正确的颜色从而稳定训练过程。The results in Table 7 show that the hue-color loss is more effective in terms of PSNR, SSIM and FID. Figures 10a and 10b show step-by-step inference in training with different losses, from which this example observes dark and light points appearing in the baseline, L1 loss and color loss may mitigate one of these issues, while this example The Hue-Color loss handles both of these issues well. The corresponding total loss curve in Figure 10c shows that by using the hue-color loss, the total loss drops quickly at the beginning, so the model can recognize the correct color and stabilize the training process.

表7.不同损失的假想图生成网络消融实验的定量评价结果Table 7. Quantitative evaluation results of network ablation experiments for hypothetical graph generation with different losses

(6)结论(6 Conclusion

实验中,针对不同的外推情形,本实施例面向多种对象、场景数据集进行测试,实验结果表明,本实施例提出的螺旋生成网络已达到目前最优异的图像外推质量。此外,本实施例还进行了一系列的消融对比试验,验证了螺旋生成网络细节设计的有效性。In the experiment, for different extrapolation situations, this embodiment is tested for a variety of objects and scene data sets. The experimental results show that the spiral generation network proposed in this embodiment has achieved the best image extrapolation quality at present. In addition, a series of ablation comparison experiments are also carried out in this embodiment to verify the effectiveness of the detailed design of the spiral generation network.

上述实施例为本发明较佳的实施方式,但本发明的实施方式并不受上述实施例的限制,其他的任何未背离本发明的精神实质与原理下所作的改变、修饰、替代、组合、简化,均应为等效的置换方式,都包含在本发明的保护范围之内。The above-mentioned embodiments are preferred embodiments of the present invention, but the embodiments of the present invention are not limited by the above-mentioned embodiments, and any other changes, modifications, substitutions, combinations, The simplification should be equivalent replacement manners, which are all included in the protection scope of the present invention.

Claims (4)

Translated fromChinesePriority Applications (1)

| Application Number | Priority Date | Filing Date | Title |

|---|---|---|---|

| CN202010768731.6ACN111915591B (en) | 2020-08-03 | 2020-08-03 | A High-Quality Image Extrapolation System Based on Spiral Generation Network |

Applications Claiming Priority (1)

| Application Number | Priority Date | Filing Date | Title |

|---|---|---|---|

| CN202010768731.6ACN111915591B (en) | 2020-08-03 | 2020-08-03 | A High-Quality Image Extrapolation System Based on Spiral Generation Network |

Publications (2)

| Publication Number | Publication Date |

|---|---|

| CN111915591A CN111915591A (en) | 2020-11-10 |

| CN111915591Btrue CN111915591B (en) | 2022-03-22 |

Family

ID=73287049

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |

|---|---|---|---|

| CN202010768731.6AActiveCN111915591B (en) | 2020-08-03 | 2020-08-03 | A High-Quality Image Extrapolation System Based on Spiral Generation Network |

Country Status (1)

| Country | Link |

|---|---|

| CN (1) | CN111915591B (en) |

Citations (4)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| CN102549334A (en)* | 2009-10-01 | 2012-07-04 | Opto设计股份有限公司 | Color correction method for illumination light, and light source module and lighting device using this color correction method |

| CN108122264A (en)* | 2016-11-28 | 2018-06-05 | 奥多比公司 | Sketch is promoted to be converted to drawing |

| CN109615582A (en)* | 2018-11-30 | 2019-04-12 | 北京工业大学 | A face image super-resolution reconstruction method based on attribute description generative adversarial network |

| CN111127346A (en)* | 2019-12-08 | 2020-05-08 | 复旦大学 | Multi-level image restoration method based on partial-to-integral attention mechanism |

Family Cites Families (7)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| US5515409A (en)* | 1994-12-22 | 1996-05-07 | General Electric Company | Helical interpolative algorithm for image reconstruction in a CT system |

| US7369712B2 (en)* | 2003-09-30 | 2008-05-06 | Fotonation Vision Limited | Automated statistical self-calibrating detection and removal of blemishes in digital images based on multiple occurrences of dust in images |

| CN101313594A (en)* | 2005-10-16 | 2008-11-26 | 米迪尔波得股份有限公司 | Apparatus, system, and method for increasing quality of digital image capture |

| CN109377448B (en)* | 2018-05-20 | 2021-05-07 | 北京工业大学 | Face image restoration method based on generation countermeasure network |

| CN110456355B (en)* | 2019-08-19 | 2021-12-24 | 河南大学 | Radar echo extrapolation method based on long-time and short-time memory and generation countermeasure network |

| CN110765878A (en)* | 2019-09-20 | 2020-02-07 | 苏州大圜科技有限公司 | Short-term rainfall prediction method |

| CN110568442B (en)* | 2019-10-15 | 2021-08-20 | 中国人民解放军国防科技大学 | A Radar Echo Extrapolation Method Based on Adversarial Extrapolation Neural Network |

- 2020

- 2020-08-03CNCN202010768731.6Apatent/CN111915591B/enactiveActive

Patent Citations (4)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| CN102549334A (en)* | 2009-10-01 | 2012-07-04 | Opto设计股份有限公司 | Color correction method for illumination light, and light source module and lighting device using this color correction method |

| CN108122264A (en)* | 2016-11-28 | 2018-06-05 | 奥多比公司 | Sketch is promoted to be converted to drawing |

| CN109615582A (en)* | 2018-11-30 | 2019-04-12 | 北京工业大学 | A face image super-resolution reconstruction method based on attribute description generative adversarial network |

| CN111127346A (en)* | 2019-12-08 | 2020-05-08 | 复旦大学 | Multi-level image restoration method based on partial-to-integral attention mechanism |

Also Published As

| Publication number | Publication date |

|---|---|

| CN111915591A (en) | 2020-11-10 |

Similar Documents

| Publication | Publication Date | Title |

|---|---|---|

| US10748324B2 (en) | Generating stylized-stroke images from source images utilizing style-transfer-neural networks with non-photorealistic-rendering | |

| CN109361934B (en) | Image processing method, device, device and storage medium | |

| CN104318570B (en) | A kind of self adaptation camouflage painting method for designing based on background | |

| DE102020002964A1 (en) | Using a neural network with a two-stream encoder architecture to generate composite digital images | |

| CN111047516A (en) | Image processing method, image processing device, computer equipment and storage medium | |

| CN109255831A (en) | The method that single-view face three-dimensional reconstruction and texture based on multi-task learning generate | |

| CN109829855A (en) | A kind of super resolution ratio reconstruction method based on fusion multi-level features figure | |

| DE102018129600A1 (en) | Method and system for the virtual fitting of glasses | |

| CN109447907A (en) | A kind of single image Enhancement Method based on full convolutional neural networks | |

| Guo et al. | Spiral generative network for image extrapolation | |

| CN116664782B (en) | A three-dimensional reconstruction method of neural radiation fields based on fused voxels | |

| CN113128517B (en) | Tone mapping image mixed visual feature extraction model establishment and quality evaluation method | |

| CN111709900A (en) | A High Dynamic Range Image Reconstruction Method Based on Global Feature Guidance | |

| CN117114984A (en) | Remote sensing image super-resolution reconstruction method based on generative adversarial network | |

| CN110910336A (en) | Three-dimensional high dynamic range imaging method based on full convolution neural network | |

| CN114926452A (en) | Remote sensing image fusion method based on NSST and beta divergence nonnegative matrix factorization | |

| CN111915591B (en) | A High-Quality Image Extrapolation System Based on Spiral Generation Network | |

| CN118628370B (en) | An image processing method and system for detailed marine land space planning | |

| Mugita et al. | Future landscape visualization by generating images using a diffusion model and instance segmentation | |

| CN118297815A (en) | Cross-view panoramic synthesis method based on diffusion model and attention mechanism | |

| CN114663315A (en) | Image bit enhancement method and device for generating countermeasure network based on semantic fusion | |

| CN117011181B (en) | Classification-guided unmanned aerial vehicle imaging dense fog removal method | |

| CN115705616A (en) | Realistic Image Style Transfer Method Based on Structural Consistency Statistical Mapping Framework | |

| CN118096620A (en) | An image harmonization method based on multi-view image feature fusion | |

| DE102023127131A1 (en) | ARTIFICIAL INTELLIGENCE TECHNIQUES FOR EXTRAPOLATION OF HDR PANORAMAS FROM LDR IMAGES WITH A SMALL FIELD OF VIEW (FOV) |

Legal Events

| Date | Code | Title | Description |

|---|---|---|---|

| PB01 | Publication | ||

| PB01 | Publication | ||

| SE01 | Entry into force of request for substantive examination | ||

| SE01 | Entry into force of request for substantive examination | ||

| GR01 | Patent grant | ||

| GR01 | Patent grant |