CN111860832A - A Federated Learning-Based Approach to Enhance the Defense Capability of Neural Networks - Google Patents


- Publication number

- CN111860832A

- Authority

- CN

- China

- Prior art keywords

- model

- neural network

- data

- federated learning

- training

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Pending


Classifications

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06N—COMPUTING ARRANGEMENTS BASED ON SPECIFIC COMPUTATIONAL MODELS

- G06N3/00—Computing arrangements based on biological models

- G06N3/02—Neural networks

- G06N3/08—Learning methods

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F21/00—Security arrangements for protecting computers, components thereof, programs or data against unauthorised activity

- G06F21/60—Protecting data

- G06F21/602—Providing cryptographic facilities or services

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F21/00—Security arrangements for protecting computers, components thereof, programs or data against unauthorised activity

- G06F21/60—Protecting data

- G06F21/62—Protecting access to data via a platform, e.g. using keys or access control rules

- G06F21/6218—Protecting access to data via a platform, e.g. using keys or access control rules to a system of files or objects, e.g. local or distributed file system or database

- G06F21/6245—Protecting personal data, e.g. for financial or medical purposes

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06N—COMPUTING ARRANGEMENTS BASED ON SPECIFIC COMPUTATIONAL MODELS

- G06N20/00—Machine learning

- G06N20/10—Machine learning using kernel methods, e.g. support vector machines [SVM]

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04L—TRANSMISSION OF DIGITAL INFORMATION, e.g. TELEGRAPHIC COMMUNICATION

- H04L63/00—Network architectures or network communication protocols for network security

- H04L63/04—Network architectures or network communication protocols for network security for providing a confidential data exchange among entities communicating through data packet networks

- H04L63/0428—Network architectures or network communication protocols for network security for providing a confidential data exchange among entities communicating through data packet networks wherein the data content is protected, e.g. by encrypting or encapsulating the payload

Landscapes

- Engineering & Computer Science (AREA)

- Theoretical Computer Science (AREA)

- Software Systems (AREA)

- General Engineering & Computer Science (AREA)

- Physics & Mathematics (AREA)

- Computer Security & Cryptography (AREA)

- General Health & Medical Sciences (AREA)

- General Physics & Mathematics (AREA)

- Health & Medical Sciences (AREA)

- Computer Hardware Design (AREA)

- Bioethics (AREA)

- Computing Systems (AREA)

- Artificial Intelligence (AREA)

- Mathematical Physics (AREA)

- Data Mining & Analysis (AREA)

- Evolutionary Computation (AREA)

- Medical Informatics (AREA)

- Signal Processing (AREA)

- Molecular Biology (AREA)

- Computational Linguistics (AREA)

- Biophysics (AREA)

- Biomedical Technology (AREA)

- Life Sciences & Earth Sciences (AREA)

- Computer Networks & Wireless Communication (AREA)

- Databases & Information Systems (AREA)

- Computer Vision & Pattern Recognition (AREA)

- Image Analysis (AREA)

Abstract

Description

Translated from Chinese

Technical Field

The invention relates to the technical field of artificial intelligence security, and in particular to a method for enhancing the defense capability of a neural network based on federated learning.

Background Art

Adversarial examples are inputs to which subtle perturbations, hard for humans to perceive, have been added, causing a machine learning model (such as a neural network) to produce an incorrect output with high confidence. The existence of adversarial examples shows that current machine learning models still have security problems, which limits the application and development of artificial intelligence (AI) in fields with high safety requirements (such as autonomous driving). The paper "Intriguing Properties of Neural Networks" (Christian Szegedy, Wojciech Zaremba, et al. Intriguing Properties of Neural Networks. In ICLR, 2014.) introduced the concept of adversarial examples and showed that they generalize across models with different architectures and models trained on different training sets. Since then, adversarial attacks and defenses in the image domain have become a very active research area.

To enhance the defense capability of a neural network, one approach is to improve the model architecture so that it has certain properties. For example, the paper "Fortified networks: Improving the robustness of deep networks by modeling the manifold of hidden representations" proposes fortified networks, which insert autoencoders at key positions in the model to denoise the hidden representations, reducing the effect of adversarial examples on the model and thereby enhancing its defense capability. Another approach is to train on as large a dataset as possible to enhance the security and robustness of the machine learning model. The most classic technique is to retrain with generated adversarial examples, i.e., to augment the original dataset with adversarial examples. This method, called adversarial learning (see, e.g., the paper "Learning with a strong adversary"), can effectively defend against adversarial-example attacks and enhance the defense capability of the neural network model.

The first approach has the drawback that neural network architectures are very complex, so modifying them is difficult. Moreover, the mechanism by which a neural network operates lacks interpretability: even a modified model remains uninterpretable and may still be vulnerable to attack. The second approach is comparatively easy to implement, since the model's defense capability is enhanced simply by learning from an expanded dataset.

However, the second approach faces the following difficulty. To expand the dataset, data can be obtained from more users and enterprises for training, but as public awareness of privacy protection grows, data collection becomes harder. The Cybersecurity Law of the People's Republic of China requires enterprises not to disclose or tamper with the personal information they collect and to fulfill data protection obligations when trading data with third parties. The E-Commerce Law of the People's Republic of China imposes stricter requirements on the protection of personal information in e-commerce, and the EU General Data Protection Regulation imposes the strictest requirements to date on the collection and use of user information. Traditional methods of collecting and using data therefore no longer apply, and big-data-driven artificial intelligence faces a data crisis: simply expanding the dataset and retraining the neural network model raises security risks such as data sharing and privacy leakage.

Summary of the Invention

The purpose of the present invention is to provide a method for enhancing the defense capability of a neural network based on federated learning, so as to solve the problems described in the background art above.

To achieve the above purpose, the present invention adopts the following technical solution: a method for enhancing the defense capability of a neural network based on federated learning, comprising the following steps:

S1. Use federated learning to keep data local and prevent leakage of private data: the parties collaborate in distributed model training, intermediate results are encrypted to protect data security, and the models of the multiple parties are aggregated and fused into a federated model.

S2. Construct adversarial examples and use algorithms to find them quickly.

Further, step S1 comprises the following sub-steps:

S101. Select a trusted server as a trusted third party; the terminals participating in model training download the shared initial model from the server.

S102. Each participant trains the downloaded shared model on its locally stored image data.

S103. Each participant encrypts the intermediate results of the model and uploads the encrypted intermediate results to the server via a security protocol.

S104. The server fuses the participants' intermediate results with a federated model fusion algorithm to obtain an optimized shared model.
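Sub-steps S101-S104 can be illustrated with a minimal plaintext simulation. This is a sketch under stated assumptions, not the patented implementation: the shared model is a linear regression model rather than a neural network such as inceptionV3, the three participants and their data are synthetic, the encryption of S103 is omitted, a fixed number of rounds stands in for the convergence test, and the fusion in S104 uses FedAvg-style weighting by sample count. The function names `local_train`, `fedavg` and `federated_training` are hypothetical.

```python
import numpy as np

def local_train(w, X, y, lr=0.1, epochs=5):
    """S102 (sketch): refine the downloaded model on one participant's private data.
    The model is plain linear regression trained by full-batch gradient descent on MSE."""
    w = w.copy()
    for _ in range(epochs):
        grad = 2.0 * X.T @ (X @ w - y) / len(y)  # gradient of the mean squared error
        w -= lr * grad
    return w

def fedavg(client_weights, client_sizes):
    """S104 (sketch): FedAvg-style fusion, weighting each local model by n_k / n."""
    n = sum(client_sizes)
    return sum((n_k / n) * w_k for w_k, n_k in zip(client_weights, client_sizes))

def federated_training(clients, dim, rounds=50):
    w_global = np.zeros(dim)                 # S101: shared initial model on the server
    for _ in range(rounds):                  # repeat S102-S104 (fixed round budget)
        local = [local_train(w_global, X, y) for X, y in clients]   # S102
        # S103 (encrypting the uploads) is omitted in this plaintext sketch
        w_global = fedavg(local, [len(y) for _, y in clients])       # S104
    return w_global

rng = np.random.default_rng(0)
true_w = np.array([2.0, -1.0, 0.5])
clients = []
for n_k in (30, 50, 80):                     # three participants of different sizes
    X = rng.normal(size=(n_k, 3))
    clients.append((X, X @ true_w))          # noise-free synthetic targets
w = federated_training(clients, dim=3)
print(np.round(w, 3))
```

Because every participant's raw data stays inside `local_train`, only model weights cross the participant/server boundary, which is the property that S103 then protects with encryption.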

Further, step S2 comprises the following sub-steps:

S201. Collect and organize data and pool it centrally to form a dataset.

S202. Images are stored in the computer in binary form, pixel by pixel.

S203. Solve an optimization problem to obtain an adversarial example.

S204. Use L-BFGS (the limited-memory BFGS quasi-Newton method) or the fast gradient sign method (FGSM) to quickly find adversarial examples for an image.

Further, in step S102, adversarial examples generated from the local images are input into the model being trained, to improve its defense against adversarial examples.
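Adversarial augmentation of the local training data in S102 could look roughly like the following sketch. The assumptions here are not from the patent. The local model is binary logistic regression on pixel vectors in [0, 1]. Adversarial copies are regenerated with FGSM against the current model at every epoch. Clean and adversarial samples are weighted equally. `local_adversarial_training` and its hyperparameters are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def input_gradient(w, b, X, y):
    """Gradient of the logistic loss w.r.t. each input row of X (labels y in {0, 1})."""
    p = sigmoid(X @ w + b)
    return (p - y)[:, None] * w[None, :]

def fgsm(w, b, X, y, eps):
    """One fast-gradient-sign step of size eps, clipped back to the pixel range [0, 1]."""
    return np.clip(X + eps * np.sign(input_gradient(w, b, X, y)), 0.0, 1.0)

def local_adversarial_training(w, b, X, y, eps=0.1, lr=0.5, epochs=200):
    """S102 with adversarial augmentation (sketch): every epoch, regenerate adversarial
    copies of the local images against the current model and train on both sets."""
    w, b = w.copy(), float(b)
    for _ in range(epochs):
        X_adv = fgsm(w, b, X, y, eps)
        X_all = np.vstack([X, X_adv])
        y_all = np.concatenate([y, y])
        err = sigmoid(X_all @ w + b) - y_all     # d(logistic loss)/d(logit)
        w -= lr * X_all.T @ err / len(y_all)
        b -= lr * err.mean()
    return w, b

# Synthetic "local images": 4-pixel inputs, class 0 dark and class 1 bright
rng = np.random.default_rng(1)
X = np.clip(np.vstack([rng.normal(0.3, 0.05, (50, 4)),
                       rng.normal(0.7, 0.05, (50, 4))]), 0.0, 1.0)
y = np.concatenate([np.zeros(50), np.ones(50)])
w, b = local_adversarial_training(np.zeros(4), 0.0, X, y)
X_adv = fgsm(w, b, X, y, eps=0.1)
print("accuracy on FGSM inputs:", np.mean((sigmoid(X_adv @ w + b) > 0.5) == y))
```

Regenerating the adversarial copies each epoch, rather than once up front, keeps them adversarial for the model as it changes. This is the usual design choice in adversarial training.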

Further, in step S103, the algorithm used to encrypt the intermediate results includes, but is not limited to, homomorphic encryption.

Further, in step S104, the fusion algorithm that yields a shared model better than the initial model includes, but is not limited to, the FedAvg algorithm.

Further, steps S102-S104 are repeated until the result converges and the target condition is met.

The present invention provides a method for enhancing the defense capability of a neural network based on federated learning, with the following beneficial effects:

The method combines federated learning with the training process of the neural network model. It resolves the dilemma that datasets cannot be shared because of privacy considerations and legal and regulatory restrictions, and it removes the burden of centralized data collection. At the same time, it makes the training set of the neural network model richer and more independent, overcoming the susceptibility to adversarial examples caused by an incomplete training set. The method thus improves the model's learning ability and its ability to resist adversarial-example attacks, reduces the effectiveness of such attacks, and enhances the defense capability and security of the neural network model.

Description of Drawings

Fig. 1 is a schematic diagram of the dilemma that image data cannot be shared in the prior art;

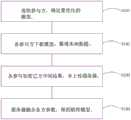

Fig. 2 is the overall flow chart of the present invention;

Fig. 3 is a flow chart of the method for enhancing the defense capability of a neural network according to the present invention;

Fig. 4 is a flow chart of adversarial example generation according to the present invention;

Fig. 5 is a schematic diagram of the method for enhancing the defense capability of a neural network according to the present invention.

Detailed Description of the Embodiments

Embodiment 1: Referring to Figs. 1-5, the present invention provides a method for enhancing the defense capability of a neural network based on federated learning, comprising the following steps:

Step 1: Use federated learning. This removes the burden of data collection, and keeping data local prevents private data from leaking. The parties collaborate in distributed model training and encrypt the intermediate results to protect data security. Finally, the models of the multiple parties are aggregated and fused into a better federated model, which increases the richness of the data participating in training and reduces the effectiveness of adversarial examples.

The specific implementation steps are as follows:

1) Select a trusted server as a trusted third party. The terminals participating in model training (participants such as enterprises, universities, research institutes and individual users) download the shared initial model from the server, for example the image-classification neural network model inceptionV3;

2) Each participant trains the downloaded shared model on its locally stored image data. To improve the defense against adversarial examples, corresponding adversarial examples can be generated from the local images and input into the model being trained;

3) Each participant encrypts the intermediate results of the model (such as weight matrices), e.g. with homomorphic encryption, and uploads the encrypted intermediate results to the server via a security protocol;

4) The server fuses the intermediate results of all participants with a federated model fusion algorithm (e.g., the federated averaging algorithm FedAvg; Keith Bonawitz, Vladimir Ivanov, et al. Practical Secure Aggregation for Privacy-Preserving Machine Learning, arXiv:1611.04482 [cs.CR], 2016) to obtain an optimized shared model. Let $f_i(w) = \ell(x_i, y_i; w)$ denote the prediction loss of model parameters $w$ on data example $(x_i, y_i)$. Let $K$ denote the total number of participants and $P_k$ the set of indices of the data points held by participant $k$. Let $n_k = |P_k|$ denote the number of data samples of participant $k$, and let $n = \sum_{k=1}^{K} n_k$. The global objective can then be written as

$$f(w) = \sum_{k=1}^{K} \frac{n_k}{n} F_k(w), \qquad F_k(w) = \frac{1}{n_k} \sum_{i \in P_k} f_i(w).$$

In the $t$-th iteration, participant $k$ computes the local gradient $g_k = \nabla F_k(w_t)$ and the local update

$$w_{t+1}^{k} = w_t - \eta\, g_k,$$

where $\eta$ is the learning rate. Each participant encrypts its local update with homomorphic encryption, obtaining $[\![w_{t+1}^{k}]\!]$, and uploads it. The server obtains the federated model update for round $t$ by computing

$$[\![w_{t+1}]\!] = \bigoplus_{k=1}^{K} \frac{n_k}{n} \otimes [\![w_{t+1}^{k}]\!].$$

With additive homomorphic encryption, the server can perform this computation without decrypting any $[\![w_{t+1}^{k}]\!]$. The useful property of homomorphic encryption is that computing on ciphertexts gives the same result as encrypting the result of the same computation on plaintexts:

$$\bigoplus_{k=1}^{K} \frac{n_k}{n} \otimes [\![w_{t+1}^{k}]\!] = \Big[\!\!\Big[\sum_{k=1}^{K} \frac{n_k}{n}\, w_{t+1}^{k}\Big]\!\!\Big].$$

This prevents the server from seeing the intermediate results and using them to infer the participants' training data. When the current iteration is complete, the server sends the updated model parameters $w$ to each participant so that training can continue.
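The homomorphic aggregation in step 4) can be illustrated with a toy version of the Paillier cryptosystem, a well-known additively homomorphic scheme. This sketch is for exposition only, and several choices in it are assumptions:

- The patent does not name a specific scheme.
- The primes are far too small to be secure.
- Each weight is a scalar encoded as a fixed-point integer with a hypothetical `SCALE`.
- The integer weights $n_k$ replace the fractions $n_k/n$, with the division by $n$ done after decryption.
- A key holder other than the server performs the decryption.

```python
import math
import random

def keygen(p=104723, q=104729):
    """Toy Paillier key generation with g = n + 1 (insecure: the primes are tiny)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # with g = n + 1, L(g^lam mod n^2) = lam mod n
    return n, (lam, mu, n)

def encrypt(n, m):
    """Enc(m) = g^m * r^n mod n^2 with g = n + 1 and a random r coprime to n."""
    n2 = n * n
    r = random.randrange(1, n)
    while math.gcd(r, n) != 1:
        r = random.randrange(1, n)
    return (pow(n + 1, m, n2) * pow(r, n, n2)) % n2

def decrypt(sk, c):
    lam, mu, n = sk
    u = pow(c, lam, n * n)
    return ((u - 1) // n) * mu % n   # L(u) = (u - 1) / n

def add_enc(n, c1, c2):
    return (c1 * c2) % (n * n)       # Enc(a) * Enc(b) = Enc(a + b)

def scale_enc(n, c, k):
    return pow(c, k, n * n)          # Enc(a)^k = Enc(k * a)

def encode(n, x, scale):
    return round(x * scale) % n      # fixed point; negative values wrap around mod n

def decode(n, v, scale):
    return (v if v <= n // 2 else v - n) / scale

pk_n, sk = keygen()
SCALE = 10**6
local_weights = [0.25, -0.10, 0.40]  # one scalar weight per participant (illustrative)
sizes = [100, 300, 600]              # n_k for each participant
uploads = [encrypt(pk_n, encode(pk_n, w, SCALE)) for w in local_weights]

# Server side: combines ciphertexts only and never sees the plaintext weights
agg = encrypt(pk_n, 0)
for c, n_k in zip(uploads, sizes):
    agg = add_enc(pk_n, agg, scale_enc(pk_n, c, n_k))

# Key-holder side: decrypt once, then divide by n = sum(n_k)
fed_weight = decode(pk_n, decrypt(sk, agg), SCALE) / sum(sizes)
print(fed_weight)                    # 0.235
```

A real system would use a vetted cryptographic library with realistic key sizes and would encrypt every entry of each weight matrix, not a single scalar.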

Repeat steps 2)-4) until the result converges or the target condition is reached.

Specifically, consider improving the defense capability of the inceptionV3 model against adversarial examples.

Select a server trusted by all participants, or in each round randomly select one of the participants as the trusted third party. Depending on business needs, deploy inceptionV3 on the server in advance if required. Also agree in advance on the encryption method for the intermediate results and the security protocol for transmitting them, so that the intermediate results do not leak private data. At the start of training, each participant downloads the initial inceptionV3 model from the server; a participant that already has inceptionV3 locally does not need to download it. Once every party's model is ready, each party trains it on its local image data. It then encrypts the locally produced intermediate results, such as weight parameters, with the agreed encryption method and transmits them to the server with the agreed protocol. The server fuses the parties' partial models with an algorithm such as FedAvg and obtains an inceptionV3 model that is better than the initial one.

Step 2: Construct adversarial examples and use algorithms to find them quickly.

The specific implementation steps are as follows:

1) Collect and organize data and pool it centrally to form a dataset. For example, in the image domain of machine learning, the handwritten-digit dataset MNIST is built from data of NIST (the US National Institute of Standards and Technology). The MNIST training set contains digits handwritten by 250 different people, 50% of whom were high school students and 50% employees of the Census Bureau. The test set contains handwritten digits in the same proportions. Also in the image domain, CIFAR-10 is a small dataset for recognizing common objects, compiled by Alex Krizhevsky and Ilya Sutskever. Each of these datasets was created by first collecting the raw data in one place.

2) In a computer, an image is stored in binary form, pixel by pixel. A color image is the superposition of several color channels at each pixel, such as the three RGB (red, green, blue) channels, each with values in [0, 255]; the displayed color combines the three channel values. Even a simple image therefore contains a large amount of binary information.

Take the handwritten-digit dataset MNIST (Yann LeCun. http://yann.lecun.com/exdb/mnist/) as an example. MNIST contains a training set of 60,000 images and a test set of 10,000 images. Each image is a handwritten digit of 28*28 pixels and is flattened into a 1*784 vector that serves as the model input. The images correspond to the ten digits 0 to 9, and each label is converted into a 10-dimensional one-hot vector. For example, the label of an image of the digit 0 is [1,0,0,0,0,0,0,0,0,0]: the position corresponding to the digit in the image is 1 and all other positions are 0. Denoting an image by "x" and its label by "y", each example is represented as [x, y]. This gives a [60000, 784] training image matrix and a [60000, 10] label matrix.
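The preprocessing described here can be sketched with NumPy. Each 28*28 image is flattened into a 1*784 vector, and each label is one-hot encoded. The random array stands in for real MNIST images, which this sketch does not download. Rescaling pixels from [0, 255] to [0, 1] is an added assumption (common in practice), not a step stated in the text. `to_model_inputs` is a hypothetical helper name.

```python
import numpy as np

def to_model_inputs(images, labels, num_classes=10):
    """Flatten N x 28 x 28 grayscale images into N x 784 rows and one-hot encode the labels.
    Pixel values in [0, 255] are rescaled to [0, 1] (an assumption, common in practice)."""
    x = images.reshape(len(images), -1).astype(np.float32) / 255.0
    y = np.eye(num_classes, dtype=np.float32)[labels]
    return x, y

rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(5, 28, 28)).astype(np.uint8)  # stand-in for 5 MNIST images
labels = np.array([0, 3, 9, 1, 0])
x, y = to_model_inputs(images, labels)
print(x.shape, y.shape)                       # (5, 784) (5, 10)
print(y[0])                                   # one-hot label of the digit 0
```

Applied to the full training set, the same function would return an input matrix with 60,000 rows and 784 columns and a label matrix with 60,000 rows and 10 columns.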

3) An adversarial example can be obtained by solving the optimization problem

$$\min_{r}\; c\,|r| + \mathrm{loss}_f(x + r, l),$$

where $f$ is a classifier that maps a vector of image pixel values to a discrete set of labels and $\mathrm{loss}_f$ is the loss function associated with the classifier. $x + r$ is the image closest to $x$ that $f$ classifies as $l$, i.e., the adversarial example sought in an adversarial attack. $c$ is a parameter that controls the size of $|r|$. Solving this problem finds the adversarial example for a given image, for example by adding a perturbation $r$ to an MNIST image $x$ of the digit 0 so that the model recognizes the 0 as a 1.
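The optimization problem above can be approximated numerically for a simple model. This is an illustration built on several assumptions, not the method of Szegedy et al., who used box-constrained L-BFGS:

- The classifier $f$ is a hypothetical 3-class linear softmax model on 4 "pixels".
- $|r|$ is replaced by the squared Euclidean norm so that the objective is differentiable.
- $\mathrm{loss}_f$ is cross-entropy with respect to the target label $l$.
- The problem is solved by projected gradient descent that keeps $x + r$ in the pixel range [0, 1].

```python
import numpy as np

def softmax(z):
    e = np.exp(z - z.max())                   # shift for numerical stability
    return e / e.sum()

def targeted_perturbation(W, b, x, target, c=0.01, lr=0.1, steps=500):
    """Approximately minimise c*||r||^2 + CE(softmax(W(x + r) + b), target)
    by projected gradient descent, keeping x + r inside the pixel box [0, 1]."""
    r = np.zeros_like(x)
    onehot = np.eye(len(b))[target]
    for _ in range(steps):
        p = softmax(W @ (x + r) + b)
        grad = 2.0 * c * r + W.T @ (p - onehot)   # gradient of the objective w.r.t. r
        r = np.clip(x + r - lr * grad, 0.0, 1.0) - x
    return r

W = 5.0 * np.eye(3, 4)                        # hypothetical 3-class linear classifier on 4 pixels
b = np.zeros(3)
x = np.array([0.9, 0.1, 0.1, 0.5])
print(np.argmax(W @ x + b))                   # 0
r = targeted_perturbation(W, b, x, target=1)
print(np.argmax(W @ (x + r) + b))             # 1
print(np.round(x + r, 3))
```

Raising `c` trades attack success for a smaller perturbation. This is the role the text assigns to the parameter $c$.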

4) Adversarial examples occur with low probability, so they rarely appear in the training or test sets. However, they are densely distributed, so an adversarial example for an image can be found quickly with algorithms such as L-BFGS (limited-memory BFGS; Christian Szegedy, Wojciech Zaremba, et al. Intriguing Properties of Neural Networks. In ICLR, 2014.) and FGSM (fast gradient sign method; Goodfellow I, Shlens J, Szegedy C, et al. Explaining and Harnessing Adversarial Examples[J]. 2014.).
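FGSM, the second algorithm named above, perturbs an input in a single step: $x' = \mathrm{clip}_{[0,1]}\big(x + \epsilon\,\mathrm{sign}(\nabla_x J(\theta, x, y))\big)$. The sketch below applies it to a hypothetical randomly initialized linear softmax classifier over a flattened 28*28 input, standing in for a trained MNIST model. Because the cross-entropy of a linear model is convex in $x$, one signed step with no clipping active is guaranteed to increase the loss on the given label.

```python
import numpy as np

def loss_and_input_grad(W, b, x, label):
    """Softmax cross-entropy of a linear classifier and its gradient w.r.t. the input x."""
    z = W @ x + b
    z = z - z.max()                          # shift for numerical stability
    p = np.exp(z) / np.exp(z).sum()
    loss = -np.log(p[label])
    grad_x = W.T @ (p - np.eye(len(b))[label])
    return loss, grad_x

def fgsm(W, b, x, label, eps):
    """Fast gradient sign method: one step of size eps along sign(grad_x loss), then clip to [0, 1]."""
    _, g = loss_and_input_grad(W, b, x, label)
    return np.clip(x + eps * np.sign(g), 0.0, 1.0)

rng = np.random.default_rng(0)
W = 0.05 * rng.normal(size=(10, 784))        # hypothetical stand-in for a trained MNIST classifier
b = np.zeros(10)
x = rng.uniform(0.2, 0.8, size=784)          # hypothetical flattened 28x28 image
label = int(np.argmax(W @ x + b))            # attack the model's own prediction
x_adv = fgsm(W, b, x, label, eps=0.1)
loss_clean, _ = loss_and_input_grad(W, b, x, label)
loss_adv, _ = loss_and_input_grad(W, b, x_adv, label)
print(loss_clean, loss_adv, int(np.argmax(W @ x_adv + b)))
```

FGSM needs one gradient evaluation per image, whereas L-BFGS iterates. This is why FGSM is the cheaper way to generate many adversarial examples for training.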

In the present invention, the method combines federated learning with the training process of the neural network model. It resolves the dilemma that datasets cannot be shared because of privacy considerations and legal and regulatory restrictions, and it removes the burden of centralized data collection. It also makes the training set of the neural network model richer and more independent, overcoming the susceptibility to adversarial examples caused by an incomplete training set. The method thus improves the model's learning ability and its ability to resist adversarial-example attacks, reduces the effectiveness of such attacks, and enhances the defense capability and security of the neural network model.

The above are only preferred embodiments of the present invention. It should be noted that those of ordinary skill in the art can make several modifications and improvements without departing from the inventive concept of the present invention, and these all fall within the protection scope of the present invention.

Claims (7)

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202010618973.7A (CN111860832A) | 2020-07-01 | 2020-07-01 | A Federated Learning-Based Approach to Enhance the Defense Capability of Neural Networks |

Applications Claiming Priority (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202010618973.7A (CN111860832A) | 2020-07-01 | 2020-07-01 | A Federated Learning-Based Approach to Enhance the Defense Capability of Neural Networks |

Publications (1)

| Publication Number | Publication Date |
|---|---|
| CN111860832A | 2020-10-30 |

Family

ID=72989695

Family Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202010618973.7A, Pending (CN111860832A) | 2020-07-01 | 2020-07-01 | A Federated Learning-Based Approach to Enhance the Defense Capability of Neural Networks |

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN111860832A |

Cited By (23)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN112364908A (en)* | 2020-11-05 | 2021-02-12 | 浙江大学 | Decision tree-oriented longitudinal federal learning method |
| CN112364943A (en)* | 2020-12-10 | 2021-02-12 | 广西师范大学 | Federal prediction method based on federal learning |
| CN112507219A (en)* | 2020-12-07 | 2021-03-16 | 中国人民大学 | Personalized search system based on federal learning enhanced privacy protection |
| CN112560059A (en)* | 2020-12-17 | 2021-03-26 | 浙江工业大学 | Vertical federal model stealing defense method based on neural pathway feature extraction |
| CN112632620A (en)* | 2020-12-30 | 2021-04-09 | 支付宝(杭州)信息技术有限公司 | Federal learning method and system for enhancing privacy protection |
| CN112653752A (en)* | 2020-12-18 | 2021-04-13 | 重庆大学 | Block chain industrial Internet of things data sharing method based on federal learning |
| CN112668044A (en)* | 2020-12-21 | 2021-04-16 | 中国科学院信息工程研究所 | Privacy protection method and device for federal learning |
| CN113143286A (en)* | 2021-04-30 | 2021-07-23 | 广州大学 | Electrocardiosignal identification method, system, device and medium based on distributed learning |
| CN113204766A (en)* | 2021-05-25 | 2021-08-03 | 华中科技大学 | Distributed neural network deployment method, electronic device and storage medium |
| CN113268758A (en)* | 2021-06-17 | 2021-08-17 | 上海万向区块链股份公司 | Data sharing system, method, medium and device based on federal learning |
| CN113344221A (en)* | 2021-05-10 | 2021-09-03 | 上海大学 | Federal learning method and system based on neural network architecture search |
| CN113468521A (en)* | 2021-07-01 | 2021-10-01 | 哈尔滨工程大学 | Data protection method for federal learning intrusion detection based on GAN |
| CN113515812A (en)* | 2021-07-09 | 2021-10-19 | 东软睿驰汽车技术(沈阳)有限公司 | Automatic driving method, device, processing device and storage medium |
| CN113657611A (en)* | 2021-08-30 | 2021-11-16 | 支付宝(杭州)信息技术有限公司 | Method and device for jointly updating model |
| CN113726561A (en)* | 2021-08-18 | 2021-11-30 | 西安电子科技大学 | Business type recognition method for training convolutional neural network by using federal learning |
| CN113792873A (en)* | 2021-08-24 | 2021-12-14 | 浙江数秦科技有限公司 | Neural network model trusteeship training system based on block chain |
| CN113807157A (en)* | 2020-11-27 | 2021-12-17 | 京东科技控股股份有限公司 | Method, device and system for training neural network model based on federal learning |
| CN114489626A (en)* | 2020-11-13 | 2022-05-13 | 深圳前海微众银行股份有限公司 | Model generation method, device, device and storage medium |
| CN114781583A (en)* | 2022-03-07 | 2022-07-22 | 中国人民解放军战略支援部队信息工程大学 | A method to improve the adversarial robustness of deep neural network models |
| CN114978654A (en)* | 2022-05-12 | 2022-08-30 | 北京大学 | End-to-end communication system attack defense method based on deep learning |
| CN115374433A (en)* | 2022-04-29 | 2022-11-22 | 华南理工大学 | Federal learning confrontation sample attack detection method, system, equipment and computer readable medium |
| CN116894484A (en)* | 2023-06-29 | 2023-10-17 | 山东浪潮科学研究院有限公司 | A federated modeling method and system |
| CN117808694A (en)* | 2023-12-28 | 2024-04-02 | 中国人民解放军总医院第六医学中心 | Painless gastroscope image enhancement method and painless gastroscope image enhancement system under deep neural network |

Citations (6)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN108446765A (en)* | 2018-02-11 | 2018-08-24 | 浙江工业大学 | The multi-model composite defense method of sexual assault is fought towards deep learning |
| CN109698822A (en)* | 2018-11-28 | 2019-04-30 | 众安信息技术服务有限公司 | Combination learning method and system based on publicly-owned block chain and encryption neural network |
| US20190227980A1 (en)* | 2018-01-22 | 2019-07-25 | Google Llc | Training User-Level Differentially Private Machine-Learned Models |
| CN110443367A (en)* | 2019-07-30 | 2019-11-12 | 电子科技大学 | A kind of method of strength neural network model robust performance |
| CN110719158A (en)* | 2019-09-11 | 2020-01-21 | 南京航空航天大学 | Edge calculation privacy protection system and method based on joint learning |
| US20200125739A1 (en)* | 2018-10-19 | 2020-04-23 | International Business Machines Corporation | Distributed learning preserving model security |

- 2020

- 2020-07-01: Application CN202010618973.7A filed in CN, published as CN111860832A (Pending)


Cited By (35)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN112364908A (en)* | 2020-11-05 | 2021-02-12 | 浙江大学 | Vertical federated learning method for decision trees |
| CN114489626A (en)* | 2020-11-13 | 2022-05-13 | 深圳前海微众银行股份有限公司 | Model generation method, apparatus, device and storage medium |
| CN114489626B (en)* | 2020-11-13 | 2025-06-24 | 深圳前海微众银行股份有限公司 | Model generation method, device, equipment and storage medium |
| CN113807157A (en)* | 2020-11-27 | 2021-12-17 | 京东科技控股股份有限公司 | Method, device and system for training a neural network model based on federated learning |
| CN113807157B (en)* | 2020-11-27 | 2024-07-19 | 京东科技控股股份有限公司 | Method, device and system for training a neural network model based on federated learning |
| CN112507219B (en)* | 2020-12-07 | 2023-06-02 | 中国人民大学 | A Personalized Search System with Enhanced Privacy Protection Based on Federated Learning |
| CN112507219A (en)* | 2020-12-07 | 2021-03-16 | 中国人民大学 | Personalized search system with enhanced privacy protection based on federated learning |
| CN112364943B (en)* | 2020-12-10 | 2022-04-22 | 广西师范大学 | A Federated Prediction Method Based on Federated Learning |
| CN112364943A (en)* | 2020-12-10 | 2021-02-12 | 广西师范大学 | Federated prediction method based on federated learning |
| CN112560059A (en)* | 2020-12-17 | 2021-03-26 | 浙江工业大学 | Vertical federated model stealing defense method based on neural pathway feature extraction |
| CN112560059B (en)* | 2020-12-17 | 2022-04-29 | 浙江工业大学 | Vertical federated model stealing defense method based on neural pathway feature extraction |
| CN112653752A (en)* | 2020-12-18 | 2021-04-13 | 重庆大学 | Blockchain-based industrial Internet of Things data sharing method using federated learning |
| CN112668044A (en)* | 2020-12-21 | 2021-04-16 | 中国科学院信息工程研究所 | Privacy protection method and device for federated learning |
| CN112632620A (en)* | 2020-12-30 | 2021-04-09 | 支付宝(杭州)信息技术有限公司 | Federated learning method and system for enhancing privacy protection |
| CN112632620B (en)* | 2020-12-30 | 2022-08-26 | 支付宝(杭州)信息技术有限公司 | Federated learning method and system for enhancing privacy protection |
| CN113143286A (en)* | 2021-04-30 | 2021-07-23 | 广州大学 | Electrocardiogram signal recognition method, system, device and medium based on distributed learning |
| CN113344221A (en)* | 2021-05-10 | 2021-09-03 | 上海大学 | Federated learning method and system based on neural architecture search |
| CN113204766A (en)* | 2021-05-25 | 2021-08-03 | 华中科技大学 | Distributed neural network deployment method, electronic device and storage medium |
| CN113204766B (en)* | 2021-05-25 | 2022-06-17 | 华中科技大学 | Distributed neural network deployment method, electronic device and storage medium |
| CN113268758A (en)* | 2021-06-17 | 2021-08-17 | 上海万向区块链股份公司 | Data sharing system, method, medium and device based on federated learning |
| CN113268758B (en)* | 2021-06-17 | 2022-11-04 | 上海万向区块链股份公司 | Data sharing system, method, medium and device based on federated learning |
| CN113468521A (en)* | 2021-07-01 | 2021-10-01 | 哈尔滨工程大学 | GAN-based data protection method for federated learning intrusion detection |
| CN113468521B (en)* | 2021-07-01 | 2022-04-05 | 哈尔滨工程大学 | GAN-based data protection method for federated learning intrusion detection |
| CN113515812A (en)* | 2021-07-09 | 2021-10-19 | 东软睿驰汽车技术(沈阳)有限公司 | Automatic driving method, device, processing device and storage medium |
| CN113726561A (en)* | 2021-08-18 | 2021-11-30 | 西安电子科技大学 | Business type recognition method that trains a convolutional neural network using federated learning |
| CN113792873A (en)* | 2021-08-24 | 2021-12-14 | 浙江数秦科技有限公司 | Blockchain-based hosted training system for neural network models |
| CN113657611A (en)* | 2021-08-30 | 2021-11-16 | 支付宝(杭州)信息技术有限公司 | Method and device for jointly updating a model |
| CN114781583A (en)* | 2022-03-07 | 2022-07-22 | 中国人民解放军战略支援部队信息工程大学 | A method to improve the adversarial robustness of deep neural network models |
| CN114781583B (en)* | 2022-03-07 | 2024-12-27 | 中国人民解放军网络空间部队信息工程大学 | A method to improve the adversarial robustness of deep neural network models |
| CN115374433A (en)* | 2022-04-29 | 2022-11-22 | 华南理工大学 | Adversarial example attack detection method, system, device and computer-readable medium for federated learning |
| CN114978654B (en)* | 2022-05-12 | 2023-03-10 | 北京大学 | A deep learning-based defense method against attacks on end-to-end communication systems |
| CN114978654A (en)* | 2022-05-12 | 2022-08-30 | 北京大学 | Deep learning-based attack defense method for end-to-end communication systems |
| CN116894484A (en)* | 2023-06-29 | 2023-10-17 | 山东浪潮科学研究院有限公司 | A federated modeling method and system |
| CN117808694A (en)* | 2023-12-28 | 2024-04-02 | 中国人民解放军总医院第六医学中心 | Painless gastroscope image enhancement method and system based on a deep neural network |
| CN117808694B (en)* | 2023-12-28 | 2024-05-24 | 中国人民解放军总医院第六医学中心 | Painless gastroscope image enhancement method and system based on a deep neural network |

Similar Documents

| Publication | Title |
|---|---|
| CN111860832A (en) | A Federated Learning-Based Approach to Enhance the Defense Capability of Neural Networks |
| Wang et al. | Attrleaks on the edge: Exploiting information leakage from privacy-preserving co-inference |
| Zhang et al. | F-TPE: Flexible thumbnail-preserving encryption based on multi-pixel sum-preserving encryption |
| Xia et al. | Secure image LBP feature extraction in cloud-based smart campus |
| TW202205118A (en) | Picture classification method and apparatus for protecting data privacy |
| CN110659379B (en) | A Searchable Encrypted Image Retrieval Method Based on Deep Convolutional Network Features |
| Ambika et al. | Encryption-based steganography of images by multiobjective whale optimal pixel selection |
| CN112528064A (en) | Privacy-preserving encrypted image retrieval method and system |
| CN112487456A (en) | Federated learning model training method and system, electronic device and readable storage medium |
| CN112560059B (en) | Vertical federated model stealing defense method based on neural pathway feature extraction |
| CN115496227A (en) | Method and Application of Membership Inference Attack Model Training Based on Federated Learning |
| Kayani et al. | Privacy preserving content based image retrieval |
| Jin et al. | Efficient blind face recognition in the cloud |
| Mu | Deep leakage from gradients |
| Xu et al. | An image autonomous selection encryption algorithm based on the delay exponential logistic chaotic model |
| CN116628445A (en) | A privacy-preserving method for federated learning based on Cutmix data enhancement |
| Zhang et al. | BADFSS: Backdoor Attacks on Federated Self-Supervised Learning. |
| CN117709407A (en) | Data generation-based defense technique against membership inference attacks in federated distillation |
| CN117494183A (en) | Private data generation method and system based on knowledge distillation generative adversarial network model |
| Mao | [Retracted] Algorithm of Encrypting Digital Image Using Chaos Neural Network |
| Satre et al. | Quantum image cryptography based on discrete chaotic maps |
| Chen et al. | Adversarial representation sharing: A quantitative and secure collaborative learning framework |
| Wang et al. | Secure Content Based Image Retrieval Scheme Based on Deep Hashing and Searchable Encryption. |
| Narayana et al. | Medical image cryptanalysis using adaptive, lightweight neural network based algorithm for IoT based secured cloud storage |
| Dong | Computer Network Security Encryption Technology Based on Neural Network |

Legal Events

| Code | Title | Description |
|---|---|---|
| PB01 | Publication | |
| SE01 | Entry into force of request for substantive examination | |
| TA01 | Transfer of patent application right | Effective date of registration: 2022-06-28; Address after: No. 230, Waihuan West Road, Guangzhou University Town, Panyu, Guangzhou City, Guangdong Province, 510006; Applicant after: Guangzhou University; Applicant after: National University of Defense Technology; Address before: No. 230, Waihuan West Road, Guangzhou University Town, Panyu, Guangzhou City, Guangdong Province, 510006; Applicant before: Guangzhou University |
| RJ01 | Rejection of invention patent application after publication | Application publication date: 2020-10-30 |