CN111738085A - System construction method and device for simultaneous positioning and mapping of automatic driving - Google Patents

System construction method and device for simultaneous positioning and mapping of automatic driving

- Publication number

- CN111738085A

- Authority

- CN

- China

- Prior art keywords

- mapping

- automatic driving

- algorithm

- construction method

- system construction

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Granted

Classifications

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V30/00—Character recognition; Recognising digital ink; Document-oriented image-based pattern recognition

- G06V30/40—Document-oriented image-based pattern recognition

- G06V30/42—Document-oriented image-based pattern recognition based on the type of document

- G06V30/422—Technical drawings; Geographical maps

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F18/00—Pattern recognition

- G06F18/20—Analysing

- G06F18/22—Matching criteria, e.g. proximity measures

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V10/00—Arrangements for image or video recognition or understanding

- G06V10/40—Extraction of image or video features

- G06V10/44—Local feature extraction by analysis of parts of the pattern, e.g. by detecting edges, contours, loops, corners, strokes or intersections; Connectivity analysis, e.g. of connected components

- Y—GENERAL TAGGING OF NEW TECHNOLOGICAL DEVELOPMENTS; GENERAL TAGGING OF CROSS-SECTIONAL TECHNOLOGIES SPANNING OVER SEVERAL SECTIONS OF THE IPC; TECHNICAL SUBJECTS COVERED BY FORMER USPC CROSS-REFERENCE ART COLLECTIONS [XRACs] AND DIGESTS

- Y02—TECHNOLOGIES OR APPLICATIONS FOR MITIGATION OR ADAPTATION AGAINST CLIMATE CHANGE

- Y02T—CLIMATE CHANGE MITIGATION TECHNOLOGIES RELATED TO TRANSPORTATION

- Y02T10/00—Road transport of goods or passengers

- Y02T10/10—Internal combustion engine [ICE] based vehicles

- Y02T10/40—Engine management systems

Landscapes

- Engineering & Computer Science (AREA)

- Computer Vision & Pattern Recognition (AREA)

- Theoretical Computer Science (AREA)

- Physics & Mathematics (AREA)

- General Physics & Mathematics (AREA)

- Artificial Intelligence (AREA)

- Data Mining & Analysis (AREA)

- Multimedia (AREA)

- Bioinformatics & Cheminformatics (AREA)

- Bioinformatics & Computational Biology (AREA)

- Evolutionary Biology (AREA)

- Evolutionary Computation (AREA)

- Life Sciences & Earth Sciences (AREA)

- General Engineering & Computer Science (AREA)

- Image Analysis (AREA)

Abstract

Description

Technical field

The invention relates to the field of automatic driving, and in particular to a system construction method and device for realizing simultaneous positioning and mapping for automatic driving.

Background

Simultaneous localization and mapping (SLAM) is one of the core problems in autonomous driving research. SLAM that is highly robust, highly accurate, and real-time has significant application value in autonomous driving and related fields.

At present, research on SLAM for autonomous driving, in China and abroad, is still mainly based on static environments, and its computational efficiency is low. Some existing systems can provide true scale information for monocular visual SLAM maps, reduce the influence of unstable factors during visual localization, and improve stability when visual features are missing. In dynamic scenes, however, the tracking accuracy of such systems is extremely low, and tracking may fail altogether; moreover, because their computational efficiency is low, they cannot run when the vehicle is moving fast.

Constrained by the performance and power consumption of the computing platform, achieving real-time operation with limited resources has long been a difficult problem. In addition, traditional SLAM assumes a static environment and ignores the motion of objects in it. In real environments, walking people and passing vehicles change the scene dynamically, so the map built by the SLAM system cannot stay consistent over long periods, and vision-based features become unstable because of object motion. How to build highly robust, highly accurate, real-time SLAM with limited resources is therefore an urgent problem in intelligent driving.

Glossary:

DG-SLAM: SLAM based on deep learning and GPU parallel computing, an abbreviation of "SLAM Based on Deep Learning and GPU Parallel Computing".

Summary of the invention

To solve one of the above technical problems, the purpose of the present invention is to provide a system construction method and device for realizing simultaneous positioning and mapping for automatic driving.

The technical solution adopted by the present invention is:

A system construction method for realizing simultaneous positioning and mapping for automatic driving, wherein the system comprises three parallel threads: a target detection thread, a tracking thread, and a local mapping thread, and the tracking thread comprises the following steps:

after image data is acquired, detecting dynamic objects in the image data using the target detection thread;

extracting feature points of the image data using a feature extraction algorithm, the feature extraction algorithm and the target detection thread being computed in parallel;

according to the dynamic object detection result and the feature point extraction result, identifying and removing the feature points that belong to the dynamic objects, and matching the remaining feature points, which do not belong to dynamic objects, according to a preset matching algorithm;

obtaining an initial pose of the camera from the feature point matches between adjacent frames, and optimizing the camera pose by tracking the local map, thereby completing the localization of the camera;

wherein, while tracking the local map, a saturated kernel function is applied to the sum of squared two-norms of the error terms that is minimized for feature point projection matching.

Further, the formula in which the saturated kernel function is applied to the sum of squared two-norms of the error terms minimized for feature point projection matching is:

where f_i(x) is the residual between the coordinates of the point set P in the pixel coordinate system and the coordinates of the feature points of the current frame, S is the robust saturated kernel function, and, writing ||f_i(x)||2 = e:

where δ is the residual threshold.
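
The formula images did not survive in this text. As a reading aid only, one form consistent with the definitions above, and with the statement in the detailed description that the function value is δ² once the residual exceeds δ, is sketched below. Treating e as the two-norm of the residual (rather than its square) is an assumption; the notation "||f_i(x)||2" in the extracted text is ambiguous on this point.

```latex
\min_{x} \sum_{i} S\bigl(\lVert f_i(x) \rVert_2\bigr),
\qquad
S(e) =
\begin{cases}
e^{2}, & e \le \delta \\
\delta^{2}, & e > \delta
\end{cases}
```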

Further, optimizing the camera pose by tracking the local map comprises:

calculating, from the initial pose, the space of the current frame centered on the camera;

filtering the map points in that space using three criteria in parallel, and computing the point set P to be projection-matched against the current frame;

wherein the point set P is projected from the world coordinate system into the pixel coordinate system and projection-matched against the feature points of the current frame, and least-squares optimization is performed by bundle adjustment.

Further, filtering the map points in the space using three criteria in parallel comprises:

based on the first criterion, selecting the set S of local map points located in the space;

based on the second criterion, computing the angle between the current viewing ray v and the mean viewing direction n of the map point, and discarding the map point if v·n < cos(60°), which yields the point set T;

based on the third criterion, computing the distance d from the map point to the camera center, and discarding the map point if d lies outside the map point's scale-invariance range, which yields the point set W.
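
The three criteria can be illustrated with a minimal sketch. It runs the checks one after another on the CPU purely for readability, whereas the text describes them as evaluated in parallel. The dictionary keys, the `in_space` callback that stands in for the first criterion, and the convention that v points from the camera center to the map point are all assumptions made for this example.

```python
import math

def filter_map_points(points, cam_center, in_space, max_angle_deg=60.0):
    """Return sorted indices of map points that pass all three criteria.

    points: list of dicts with hypothetical keys
        'pos'    -> (x, y, z) position in world coordinates
        'normal' -> unit mean viewing direction n of the point
        'd_min', 'd_max' -> bounds of the scale-invariance range
    in_space: callable(pos) -> bool, a stand-in for the first criterion
    """
    cos_limit = math.cos(math.radians(max_angle_deg))
    S, T, W = set(), set(), set()
    for i, p in enumerate(points):
        # Viewing ray from the camera center to the map point (assumed convention).
        v = [a - b for a, b in zip(p["pos"], cam_center)]
        d = math.sqrt(sum(c * c for c in v))
        # Criterion 1: the point lies in the space of the current frame.
        if in_space(p["pos"]):
            S.add(i)
        # Criterion 2: keep the point unless v.n < cos(60 deg), with v normalized.
        if d > 0 and sum(a * b for a, b in zip(v, p["normal"])) / d >= cos_limit:
            T.add(i)
        # Criterion 3: the distance lies inside the scale-invariance range.
        if p["d_min"] <= d <= p["d_max"]:
            W.add(i)
    # Points that pass all three criteria.
    return sorted(S & T & W)
```

Because each point is tested independently of the others, the loop body maps naturally onto one GPU thread per map point.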

Further, the target detection thread comprises a target detection model; the target detection model is based on a deep learning algorithm and is obtained by training that algorithm on the target objects.

Further, the deep learning algorithm is the SSD algorithm, which uses VGG-16 as its base network, is built on a feed-forward convolutional neural network, performs detection separately on feature maps of different scales, and then applies non-maximum suppression to eliminate redundant duplicate boxes and obtain the final detection boxes.
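
The non-maximum suppression step can be sketched as the usual greedy procedure. The box format `(x1, y1, x2, y2)`, the IoU threshold of 0.5, and the function names are assumptions for illustration; the text does not state which threshold or variant the SSD model uses.

```python
def iou(a, b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: visit boxes by descending score, drop overlapping duplicates."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep box i only if it does not overlap too much with any kept box.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep
```

In a multi-class detector the same routine is typically applied per class, so that a pedestrian box does not suppress an overlapping vehicle box.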

Further, the target detection model is trained with the TensorFlow deep learning framework.

Further, the local mapping thread is built on ORB-SLAM.

Further, the threshold δ is 35.89.

Another technical solution adopted by the present invention is:

A system construction device for realizing simultaneous positioning and mapping for automatic driving, comprising:

at least one processor;

at least one memory for storing at least one program;

wherein, when the at least one program is executed by the at least one processor, the at least one processor implements the method described above.

The beneficial effects of the present invention are: the invention achieves higher trajectory tracking accuracy and faster computation, and supports simultaneous positioning and mapping for autonomous vehicles in dynamic environments.

Description of the drawings

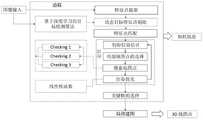

FIG. 1 is an overall flowchart of a method for constructing a DG-SLAM system for efficient simultaneous positioning and mapping for automatic driving, according to an embodiment of the present invention;

FIG. 2 is a flowchart of the SSD-based model according to an embodiment of the present invention;

FIG. 3 is a flowchart of the GPU-based parallel computing model according to an embodiment of the present invention;

FIG. 4 shows the detection results of the SSD algorithm on the KITTI dataset according to an embodiment of the present invention;

FIG. 5 compares the accuracy of the system constructed by the method of an embodiment of the present invention with that of the ORB-SLAM system on the KITTI dataset;

FIG. 6 compares the real-time performance of the system constructed by the method of an embodiment of the present invention with that of the ORB-SLAM system on the KITTI dataset.

Detailed description

Embodiments of the present invention are described in detail below, with examples illustrated in the accompanying drawings, in which the same or similar reference numerals denote the same or similar elements, or elements with the same or similar functions, throughout. The embodiments described below with reference to the drawings are exemplary; they serve only to explain the present invention and are not to be construed as limiting it.

In the description of the present invention, it should be understood that orientation terms such as up, down, front, rear, left, and right refer to the orientations or positional relationships shown in the drawings. They are used only to simplify the description; they do not indicate or imply that the device or element referred to must have a particular orientation or be constructed and operated in a particular orientation, and therefore are not to be construed as limiting the present invention.

In the description of the present invention, "several" means one or more and "a plurality" means two or more; "greater than", "less than", "exceeding", and the like are understood to exclude the stated number, while "above", "below", "within", and the like are understood to include it. "First" and "second", where used, serve only to distinguish technical features; they do not indicate or imply relative importance, the number of the technical features indicated, or their order.

In the description of the present invention, unless expressly defined otherwise, terms such as "arranged", "installed", and "connected" are to be understood broadly, and a person skilled in the art can reasonably determine their specific meaning in the present invention in light of the specific content of the technical solution.

Referring to FIG. 1, this embodiment provides a method for constructing a DG-SLAM system for efficient simultaneous positioning and mapping for automatic driving. The system consists of three parallel threads: a target detection thread, a tracking thread, and a local mapping thread. The tracking thread includes, but is not limited to, the following steps:

Step 1: In the data association part of the tracking thread, dynamic objects are detected with a target detection model based on SSD, chosen for its fast detection speed and high detection accuracy. As shown in FIG. 2, the algorithm uses VGG-16 as its base network, is built on a feed-forward convolutional neural network, performs detection separately on feature maps of different scales, and then applies non-maximum suppression to eliminate redundant duplicate boxes and obtain the final detection boxes. The model is obtained by training on the KITTI dataset with the TensorFlow deep learning framework. To better test the algorithm, this embodiment divides the KITTI dataset into four classes: vehicle, pedestrian, cyclist, and background. Of the 8000 labeled images, 6400 are used as the training set, 800 as the validation set, and 800 as the test set, yielding the target detection model used in this system. In this system, given the image data, the SSD-based target detection model first detects dynamic objects (such as pedestrians and vehicles) while, in parallel, a feature point extraction algorithm extracts the feature points of the image data; the dynamic object detection result and the feature point extraction result are then combined to remove the feature points of dynamic objects; finally, the remaining feature points are matched according to the feature point matching algorithm.
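
The removal of dynamic feature points in Step 1 can be sketched as a point-in-box test. The text does not say how membership is decided, so the sketch assumes axis-aligned detection boxes `(x1, y1, x2, y2)` in pixel coordinates and treats every keypoint inside any box as dynamic; the function name is hypothetical.

```python
def remove_dynamic_keypoints(keypoints, boxes):
    """Drop keypoints that fall inside any detection box of a dynamic object.

    keypoints: list of (x, y) pixel coordinates
    boxes: list of (x1, y1, x2, y2) boxes for detected dynamic objects
    """
    def inside(pt, box):
        x, y = pt
        x1, y1, x2, y2 = box
        return x1 <= x <= x2 and y1 <= y <= y2

    # Keep only the keypoints that lie outside every dynamic-object box.
    return [pt for pt in keypoints if not any(inside(pt, b) for b in boxes)]
```

A box-based test also discards static background points that happen to fall inside a box, which trades a few usable features for a simple and fast check.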

Step 2: As shown in FIG. 3, when the tracking thread selects map points for localization, the initial pose is obtained from the matched feature points, and the space I of the current frame, centered on the camera, is computed from the initial pose. Map points are then filtered using three criteria in parallel. checking1 (the first criterion): select the set S of local map points located in space I. checking2 (the second criterion): compute the angle between the current viewing ray v and the mean viewing direction n of the map point; if v·n < cos(60°), discard the point, which yields the point set T. checking3 (the third criterion): compute the distance d from the map point to the camera center; if d lies outside the map point's scale-invariance range, discard the point, which yields the point set W. In parallel, the keyframe images are projected onto the local map, the keyframes that share views with the current frame are searched, and the formula

S ∩ T ∩ W = P

gives the local map point set P to be projection-matched against the current frame. The point set P is projected from the world coordinate system into the pixel coordinate system and projection-matched against the feature points of the current frame, and least-squares optimization is performed by bundle adjustment.
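
The projection from the world coordinate system into the pixel coordinate system, and the residual that bundle adjustment minimizes, can be sketched as follows. A plain pinhole camera without distortion is assumed, as are the parameter names (`R`, `t`, `fx`, `fy`, `cx`, `cy`) and the sign convention "projected minus observed"; none of these are specified in the text.

```python
def project(point_w, R, t, fx, fy, cx, cy):
    """Project a world point to pixel coordinates with a pinhole model.

    R is a 3x3 rotation (list of rows) and t a translation, so that
    p_cam = R * p_world + t. Returns None for points behind the camera.
    """
    pc = [sum(R[r][c] * point_w[c] for c in range(3)) + t[r] for r in range(3)]
    if pc[2] <= 0:
        return None
    return (fx * pc[0] / pc[2] + cx, fy * pc[1] / pc[2] + cy)

def reprojection_residual(point_w, observed_uv, R, t, fx, fy, cx, cy):
    """Residual between the projected map point and the matched feature point."""
    uv = project(point_w, R, t, fx, fy, cx, cy)
    if uv is None:
        return None
    return (uv[0] - observed_uv[0], uv[1] - observed_uv[1])
```

The residual of each match depends only on that match and the current pose estimate, which is why the reprojection errors can be evaluated independently per point.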

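Step 3: During pose optimization in the tracking thread, the Saturated kernel function is applied to the sum of squared two-norms of the error terms being minimized:

where f_i(x) is the residual between the coordinates of the point set P in the pixel coordinate system and the coordinates of the feature points of the current frame, S is the robust saturated kernel function, and, writing ||f_i(x)||2 = e:

where δ is the residual threshold; in this embodiment δ = 35.89. When the residual is below 35.89 the function grows linearly; when the residual exceeds 35.89 the function value is fixed at δ², which amounts to capping the maximum gradient, handles outliers effectively, and keeps the system robust. In this embodiment each optimization process runs four rounds. The first two rounds use the robust kernel, to keep the error from diverging and, more importantly, to suppress the influence of mismatched pairs. The last two rounds disable the robust kernel, on the assumption that the first two rounds have largely suppressed the influence of mismatches. Each round runs 10 iterations. After each round, every feature point is classified as an inlier or an outlier against a preset error threshold. When optimization finishes, all matched outliers are deleted according to the decision criterion, and the inliers (observation count greater than 0) are counted; if there are more than 10 inliers, tracking is considered successful, otherwise tracking has failed. During pose optimization, the objective function is the sum of squared two-norms of the error terms. To keep the two-norm from growing too fast when an error is large, a kernel function is needed so that the error of one edge does not mask the others. Concretely, the original two-norm error measure is replaced by a slower-growing function, while smoothness is preserved to allow differentiation. The commonly used Huber robust kernel is nonlinear and therefore poorly suited to GPU parallel processing; the present invention instead exploits the fact that the saturated linear function is a linear equation, which accommodates GPU parallel acceleration without impairing the error optimization. This embodiment takes the result of each pose optimization in the localization part of the tracking thread as the new initial pose and repeats Steps 2 and 3, obtaining the latest pose each time, until the optimization result meets the set threshold.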
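
The capping behaviour described in Step 3 can be checked numerically with a small sketch. It assumes that e is the two-norm of the residual and that the kernel returns e² up to δ and δ² beyond it; the Huber form shown for comparison (e² up to δ, then 2δe − δ²) is one common scaling and is likewise an assumption, as are all function names.

```python
import math

DELTA = 35.89  # residual threshold stated for this embodiment

def saturated(e, delta=DELTA):
    """Saturated kernel: squared residual up to delta, constant delta^2 beyond it."""
    return e * e if e <= delta else delta * delta

def huber(e, delta=DELTA):
    """Huber-style kernel for comparison: keeps growing linearly beyond delta."""
    return e * e if e <= delta else 2.0 * delta * e - delta * delta

def total_cost(residual_norms, kernel=saturated):
    """Sum of kernel values over all residual norms (one per matched point)."""
    return sum(kernel(e) for e in residual_norms)

if __name__ == "__main__":
    norms = [1.0, 2.0, 1000.0]  # the last entry mimics a gross mismatch
    print(total_cost(norms, saturated), total_cost(norms, huber))
```

With the saturated kernel a gross mismatch contributes at most δ² to the cost, so a single bad match cannot dominate the sum, whereas the Huber-style cost still grows with the size of the outlier.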

This embodiment couples a deep-learning-based data association method into the data association algorithm: an SSD-based target detection algorithm detects dynamic objects in the environment, and dynamic feature points are removed before feature point matching, which reduces feature point mismatches. The workflow of this embodiment is as follows. Given input image data, the SSD target detection algorithm first detects dynamic objects (such as pedestrians and vehicles); FIG. 4 shows its detection results on the KITTI dataset. This algorithm runs in parallel with the feature point extraction algorithm. The dynamic object detection result and the feature point extraction result are combined to remove the feature points of dynamic objects, and the remaining feature points are then matched. The initial camera pose is then obtained by inter-frame matching, and the camera pose is optimized by tracking the local map. While tracking the local map, the current frame is mapped to a spatial region, and each local map point is checked for whether it lies within the field of view of the current frame. Because this step does not depend on the 2D data of the current frame, the selection of feature points to be optimized can be done by building a parallel computing model. To keep the two-norm from growing too fast when an error is large, a kernel function is needed so that the error of one edge does not mask the others. Therefore, after the feature points have been selected and matched, this embodiment applies the kernel function to the sum of squared two-norms of the error terms being minimized, computes the feature point reprojection errors in parallel, and completes the localization of the camera pose.
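
The idea of running detection and feature extraction side by side can be sketched with two worker threads. This is only a CPU-side illustration with placeholder callables; the embodiment describes GPU parallel computing, and `detect_objects`, `extract_features`, and `run_front_end` are hypothetical names.

```python
from concurrent.futures import ThreadPoolExecutor

def run_front_end(image, detect_objects, extract_features):
    """Run object detection and feature extraction concurrently on one frame.

    detect_objects: callable(image) -> list of boxes (placeholder for the detector)
    extract_features: callable(image) -> list of keypoints (placeholder extractor)
    """
    with ThreadPoolExecutor(max_workers=2) as pool:
        boxes_future = pool.submit(detect_objects, image)
        points_future = pool.submit(extract_features, image)
        # Both tasks are in flight here; result() waits for each to finish.
        boxes = boxes_future.result()
        points = points_future.result()
    return boxes, points
```

The two tasks share only the input image, so neither has to wait for the other until their results are combined to discard dynamic feature points.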

This embodiment was tested on KITTI 01 and compared with the ORB-SLAM system. In FIG. 5, RMSE (Root Mean Square Error) is the root mean square error of the system under test, a metric well suited to evaluating the accuracy of a SLAM system; the figure shows that this embodiment improves localization accuracy significantly over ORB-SLAM. As shown in FIG. 6, ORB-SLAM runs at an average of about 25 frames per second with unstable running time, whereas the SLAM algorithm of this embodiment averages more than 125 frames per second, more than 5 times the speed of ORB-SLAM, with stable running time. This embodiment therefore achieves highly robust, highly accurate, real-time SLAM.
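
For reference, a trajectory RMSE of the kind plotted in FIG. 5 can be computed as below. The sketch assumes the estimated and ground-truth trajectories are already time-associated and expressed in the same frame; the text does not describe the alignment procedure, and the function name is hypothetical.

```python
import math

def trajectory_rmse(estimated, ground_truth):
    """Root mean square of per-pose position errors between two trajectories.

    estimated, ground_truth: equal-length lists of (x, y, z) positions.
    """
    if len(estimated) != len(ground_truth) or not estimated:
        raise ValueError("trajectories must be non-empty and of equal length")
    squared = [
        sum((a - b) ** 2 for a, b in zip(p, q))
        for p, q in zip(estimated, ground_truth)
    ]
    return math.sqrt(sum(squared) / len(squared))
```

The frame-rate comparison in the same paragraph is a simple ratio: 125 frames per second against 25 frames per second gives a factor of 5.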

This embodiment also provides a system construction device for realizing simultaneous positioning and mapping for automatic driving, comprising:

at least one processor;

at least one memory for storing at least one program;

wherein, when the at least one program is executed by the at least one processor, the at least one processor implements the method described above.

The system construction device of this embodiment can execute the DG-SLAM system construction method for efficient simultaneous positioning and mapping for automatic driving provided by the method embodiment of the present invention, can execute any combination of the implementation steps of the method embodiment, and has the corresponding functions and beneficial effects of the method.

It will be understood that all or some of the steps and systems in the methods disclosed above may be implemented as software, firmware, hardware, or suitable combinations thereof. Some or all of the physical components may be implemented as software executed by a processor, such as a central processing unit, digital signal processor, or microprocessor, or as hardware, or as an integrated circuit, such as an application-specific integrated circuit. Such software may be distributed on computer-readable media, which may include computer storage media (or non-transitory media) and communication media (or transitory media). As is well known to those of ordinary skill in the art, the term computer storage media includes volatile and non-volatile, removable and non-removable media implemented in any method or technology for storing information (such as computer-readable instructions, data structures, program modules, or other data). Computer storage media include, but are not limited to, RAM, ROM, EEPROM, flash memory or other memory technology, CD-ROM, digital versatile disk (DVD) or other optical disk storage, magnetic cassettes, magnetic tape, magnetic disk storage or other magnetic storage devices, or any other medium that can be used to store the desired information and that can be accessed by a computer. In addition, as is well known to those of ordinary skill in the art, communication media typically embody computer-readable instructions, data structures, program modules, or other data in a modulated data signal such as a carrier wave or other transport mechanism, and may include any information delivery media.

The embodiments of the present invention have been described in detail above with reference to the accompanying drawings, but the present invention is not limited to these embodiments; within the knowledge of a person of ordinary skill in the art, various changes may be made without departing from the spirit of the present invention.

Claims (10)

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202010441208.2A CN111738085B (en) | 2020-05-22 | 2020-05-22 | System construction method and device for realizing automatic driving simultaneous positioning and mapping |

Publications (2)

| Publication Number | Publication Date |
|---|---|
| CN111738085A (en) | 2020-10-02 |
| CN111738085B (en) | 2023-10-24 |

Family

ID=72647642

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |
|---|---|---|---|
| CN202010441208.2A (Active) CN111738085B (en) | System construction method and device for realizing automatic driving simultaneous positioning and mapping | 2020-05-22 | 2020-05-22 |

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN111738085B (en) |

Citations (5)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN109631855A (en)* | 2019-01-25 | 2019-04-16 | 西安电子科技大学 | High-precision vehicle positioning method based on ORB-SLAM |
| CN109781092A (en)* | 2019-01-19 | 2019-05-21 | 北京化工大学 | A mobile robot positioning and mapping method in dangerous chemical accidents |
| US20190178654A1 (en)* | 2016-08-04 | 2019-06-13 | Reification Inc. | Methods for simultaneous localization and mapping (slam) and related apparatus and systems |
| CN109934862A (en)* | 2019-02-22 | 2019-06-25 | 上海大学 | A binocular vision SLAM method combining point and line features |
| CN110378345A (en)* | 2019-06-04 | 2019-10-25 | 广东工业大学 | Dynamic scene SLAM method based on YOLACT example parted pattern |

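Non-Patent Citations (3)

| Title |
|---|
| RAÚL MUR-ARTAL ET AL.: "ORB-SLAM: A Versatile and Accurate Monocular SLAM System", vol. 31, no. 5, pages 1152 - 1153* |
| 张威 (Zhang Wei): "基于物体语义信息的室内视觉SLAM研究" [Research on indoor visual SLAM based on object semantic information], vol. 2019, no. 2019, pages 45* |
| 禹鑫燚 (Yu Xinyi) et al.: "SLAM过程中的机器人位姿估计优化算法研究" [Research on optimization algorithms for robot pose estimation in SLAM], vol. 28, no. 8, pages 713* |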

Cited By (6)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| WO2022089577A1 (en)* | 2020-10-31 | 2022-05-05 | 华为技术有限公司 | Pose determination method and related device thereof |
| CN114445490A (en)* | 2020-10-31 | 2022-05-06 | 华为技术有限公司 | A pose determination method and related equipment |
| CN113485907A (en)* | 2021-05-21 | 2021-10-08 | 北京汽车研究总院有限公司 | Test method and device for automatic driving mapping result and computer equipment |
| CN114283199A (en)* | 2021-12-29 | 2022-04-05 | 北京航空航天大学 | A point-line fusion semantic SLAM method for dynamic scenes |
| CN114283199B (en)* | 2021-12-29 | 2024-06-11 | 北京航空航天大学 | Dynamic scene-oriented dotted line fusion semantic SLAM method |
| WO2025036037A1 (en)* | 2023-08-15 | 2025-02-20 | 浙江大学 | Real-time simultaneous localization and mapping system based on implicit representation |

Also Published As

| Publication number | Publication date |
|---|---|
| CN111738085B (en) | 2023-10-24 |

Legal Events

| Date | Code | Title | Description |
|---|---|---|---|
| | PB01 | Publication | |
| | SE01 | Entry into force of request for substantive examination | |
| | GR01 | Patent grant | |