CN111583502B - Renminbi (RMB) crown word number multi-label identification method based on deep convolutional neural network - Google Patents


- Publication number

- CN111583502B

- Authority

- CN

- China

- Prior art keywords

- layer

- image

- neural network

- model

- rmb

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Expired - Fee Related


Classifications

- G—PHYSICS

- G07—CHECKING-DEVICES

- G07D—HANDLING OF COINS OR VALUABLE PAPERS, e.g. TESTING, SORTING BY DENOMINATIONS, COUNTING, DISPENSING, CHANGING OR DEPOSITING

- G07D7/00—Testing specially adapted to determine the identity or genuineness of valuable papers or for segregating those which are unacceptable, e.g. banknotes that are alien to a currency

- G07D7/004—Testing specially adapted to determine the identity or genuineness of valuable papers or for segregating those which are unacceptable, e.g. banknotes that are alien to a currency using digital security elements, e.g. information coded on a magnetic thread or strip

- G07D7/0047—Testing specially adapted to determine the identity or genuineness of valuable papers or for segregating those which are unacceptable, e.g. banknotes that are alien to a currency using digital security elements, e.g. information coded on a magnetic thread or strip using checkcodes, e.g. coded numbers derived from serial number and denomination

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06N—COMPUTING ARRANGEMENTS BASED ON SPECIFIC COMPUTATIONAL MODELS

- G06N3/00—Computing arrangements based on biological models

- G06N3/02—Neural networks

- G06N3/04—Architecture, e.g. interconnection topology

- G06N3/045—Combinations of networks

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T7/00—Image analysis

- G06T7/30—Determination of transform parameters for the alignment of images, i.e. image registration

- G06T7/33—Determination of transform parameters for the alignment of images, i.e. image registration using feature-based methods

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V10/00—Arrangements for image or video recognition or understanding

- G06V10/20—Image preprocessing

- G06V10/25—Determination of region of interest [ROI] or a volume of interest [VOI]

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V30/00—Character recognition; Recognising digital ink; Document-oriented image-based pattern recognition

- G06V30/40—Document-oriented image-based pattern recognition

- G06V30/41—Analysis of document content

- G06V30/413—Classification of content, e.g. text, photographs or tables

- G—PHYSICS

- G07—CHECKING-DEVICES

- G07D—HANDLING OF COINS OR VALUABLE PAPERS, e.g. TESTING, SORTING BY DENOMINATIONS, COUNTING, DISPENSING, CHANGING OR DEPOSITING

- G07D7/00—Testing specially adapted to determine the identity or genuineness of valuable papers or for segregating those which are unacceptable, e.g. banknotes that are alien to a currency

- G07D7/20—Testing patterns thereon

- G07D7/2008—Testing patterns thereon using pre-processing, e.g. de-blurring, averaging, normalisation or rotation

- G—PHYSICS

- G07—CHECKING-DEVICES

- G07D—HANDLING OF COINS OR VALUABLE PAPERS, e.g. TESTING, SORTING BY DENOMINATIONS, COUNTING, DISPENSING, CHANGING OR DEPOSITING

- G07D7/00—Testing specially adapted to determine the identity or genuineness of valuable papers or for segregating those which are unacceptable, e.g. banknotes that are alien to a currency

- G07D7/20—Testing patterns thereon

- G07D7/2016—Testing patterns thereon using feature extraction, e.g. segmentation, edge detection or Hough-transformation

Landscapes

- Engineering & Computer Science (AREA)

- Physics & Mathematics (AREA)

- General Physics & Mathematics (AREA)

- Theoretical Computer Science (AREA)

- Computer Vision & Pattern Recognition (AREA)

- Multimedia (AREA)

- Artificial Intelligence (AREA)

- General Health & Medical Sciences (AREA)

- Biophysics (AREA)

- Evolutionary Computation (AREA)

- Computational Linguistics (AREA)

- Molecular Biology (AREA)

- Computing Systems (AREA)

- General Engineering & Computer Science (AREA)

- Data Mining & Analysis (AREA)

- Mathematical Physics (AREA)

- Software Systems (AREA)

- Biomedical Technology (AREA)

- Life Sciences & Earth Sciences (AREA)

- Health & Medical Sciences (AREA)

- Computer Security & Cryptography (AREA)

- Image Analysis (AREA)

- Inspection Of Paper Currency And Valuable Securities (AREA)

Abstract

Description

Translated from Chinese

Technical field

The present invention relates to the technical field of banknote recognition, and in particular to a multi-label identification method for Renminbi (RMB) serial numbers (crown word numbers) based on a deep convolutional neural network.

Background

The serial number of a banknote records its place in the issuance sequence and serves both to control the number of banknotes issued and to prevent counterfeiting. It can be thought of as the identity card of each banknote: banks and self-service financial equipment can record the serial numbers of incoming and outgoing banknotes, which supports evidence management and tracking of banknote circulation. Self-service financial equipment such as automatic teller machines and integrated deposit and withdrawal machines can also use the serial number to check whether a banknote is genuine. Accurate recognition of banknote serial numbers is therefore very important.

Existing methods for banknote serial number recognition, in China and abroad, include the following. One approach transfers the banknote image over USB to a host computer for processing; because it is limited by the USB transfer speed, its real-time performance is poor. Another performs serial number recognition on a DSP platform, but uses inefficient methods for edge finding, face and orientation recognition, localization and segmentation of the serial number region, and serial number recognition, so the recognition results and the robustness of the software are poor. For example, edge finding is carried out without removing outlier points, so the detected banknote edges are inaccurate, which degrades localization and recognition of the serial number. Likewise, face and orientation recognition uses coarse grid features, which seriously reduces the efficiency of the program.

The main drawbacks of these methods are low efficiency, poor recognition results, and a low serial number recognition rate.

Summary of the invention

To overcome the deficiencies of the prior art, the present invention provides a multi-label identification method for RMB serial numbers based on a deep convolutional neural network which, compared with traditional recognition methods, is fast, stable, and highly accurate.

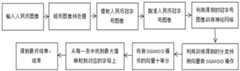

The multi-label identification method for RMB serial numbers based on a deep convolutional neural network comprises the following steps:

1) Preprocess the banknote image, including improving the brightness, extracting the serial number image, and registering the serial number image;

2) First locate the serial number coarsely using prior knowledge, then locate it precisely to obtain the RMB serial number image;

3) Scale all RMB serial number images to the same preset size;

4) Extract image features with the deep convolutional neural network; train the model to obtain prediction vectors, and save the model once it reaches a given accuracy;

5) In the prediction stage, feed the image into the deep convolutional neural network to extract image features;

6) Flatten the feature map and feed it into the fully connected layer to obtain the prediction vector;

7) Apply the Sigmoid operation to the prediction vector;

8) Split the prediction vector, after the Sigmoid operation, into ten strips, find the maximum value in each strip, and map it to the corresponding label vector to obtain the final classification result.

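Step 1 specifically includes: combining a top-hat transform with grayscale conversion to improve binarization of the banknote image; extracting the rectangular region containing the banknote to remove irrelevant background information; and registering the image with a homography matrix to correct tilt and remove perspective effects.

The image preprocessing of the first step specifically includes:

1) Establish the correspondence between the four corner coordinates of the banknote image before registration and those of the registered image;

2) Compute the homography matrix from the coordinate correspondences;

3) Use the homography matrix to find, for each point of the registered banknote image, the corresponding point in the banknote image before registration;

4) Assign values to the registered banknote image by bilinear interpolation.

Step 2 specifically includes: using prior knowledge to coarsely locate the rectangular region in the left 1/4 and bottom 1/3 of the registered image; then applying precise localization based on block-wise binarization, that is, dividing the coarsely located image into left and right blocks, binarizing each block with its own global threshold, and stitching the blocks back together for scan-based localization.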
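The two-stage localization of step 2 can be sketched roughly as below. This is a minimal illustration, not the patented implementation: the function name `locate_serial_number`, the use of the block mean as the global threshold, and the assumption that the serial number is dark ink on a lighter background are all hypothetical choices that the text does not specify.

```python
import numpy as np

def locate_serial_number(gray):
    """Return (top, bottom, left, right) of the serial number, or None.

    `gray` is a registered grayscale banknote image (2-D array).
    """
    h, w = gray.shape
    # Coarse step (prior knowledge): keep the left 1/4 and bottom 1/3.
    y0, x1 = h - h // 3, w // 4
    roi = gray[y0:, :x1]

    # Fine step: split into left and right blocks and binarize each
    # block with its own global threshold (assumed here: block mean).
    mid = roi.shape[1] // 2
    blocks = (roi[:, :mid], roi[:, mid:])
    binary = np.hstack([block < block.mean() for block in blocks])

    # Scan rows and columns of the stitched binary image for foreground.
    rows = np.flatnonzero(binary.any(axis=1))
    cols = np.flatnonzero(binary.any(axis=0))
    if rows.size == 0 or cols.size == 0:
        return None
    return (int(y0 + rows[0]), int(y0 + rows[-1] + 1),
            int(cols[0]), int(cols[-1] + 1))
```

Thresholding the two blocks separately lets each half adapt to its own illumination, which is presumably the motivation for the block-wise scheme; a production system would likely use a more robust threshold such as Otsu's method.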

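Step 4 specifically includes: in the training stage, feeding the normalized binary serial number images into the deep convolutional neural network, which learns feature vectors through training; flattening the feature map and feeding it into the fully connected layer to obtain the prediction vector; and training on the prediction vector and the label vector with the Sigmoid cross-entropy function to obtain the final model.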
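To make the training objective concrete, the sketch below encodes a ten-character serial number as a multi-hot label vector and evaluates a numerically stable Sigmoid cross-entropy against raw network outputs. The 36-symbol `ALPHABET` (digits plus uppercase letters) and both function names are assumptions made for illustration; the text does not state the character set or the exact loss implementation.

```python
import numpy as np

# Assumed character set; the source does not list the classes.
ALPHABET = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ"

def encode_serial(serial, alphabet=ALPHABET):
    """Multi-hot label: one strip of len(alphabet) entries per position."""
    label = np.zeros(len(serial) * len(alphabet))
    for pos, ch in enumerate(serial):
        label[pos * len(alphabet) + alphabet.index(ch)] = 1.0
    return label

def sigmoid_cross_entropy(logits, labels):
    """Mean of -[y*log(s(z)) + (1-y)*log(1-s(z))], in a stable form."""
    z = np.asarray(logits, dtype=np.float64)
    y = np.asarray(labels, dtype=np.float64)
    # max(z, 0) - z*y + log(1 + exp(-|z|)) avoids overflow for large |z|.
    return float(np.mean(np.maximum(z, 0) - z * y
                         + np.log1p(np.exp(-np.abs(z)))))
```

Because every output unit gets its own independent Sigmoid, one forward pass can label all ten positions at once, which is what makes the formulation multi-label.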

Step 5 specifically includes: in the prediction stage, feeding the image into the saved deep convolutional neural network model to extract features.

The structure of the deep convolutional neural network model is as follows:

The model begins with four layers: a convolution layer, a batch normalization layer, an activation layer, and a max pooling layer;

The input image size is (128, 64, 3); the convolution layer has a 7x7 kernel, a kernel depth of 64, and a stride of 2;

The batch normalization layer normalizes its input without changing the input size;

The activation layer adds nonlinearity to the network without changing the input size;

The max pooling layer uses a 3x3 window with a stride of 2; it reduces the size of the model, speeds up computation, and improves the robustness of the extracted features;

Next comes the bottleneck module, which contains nine layers: the first is a convolution layer, the second a batch normalization layer, the third an activation layer, the fourth a padding layer, the fifth a convolution layer, the sixth a batch normalization layer, the seventh an activation layer, the eighth a convolution layer, and the ninth a batch normalization layer;

A total of 16 bottleneck modules are stacked;

Next is the shortcut residual block: within the bottleneck module, a weighted connection spanning three layers links the first layer to the third layer, which effectively addresses the gradient divergence problem in deep networks. The weight of the shortcut path is set to 1;

Then comes the global average pooling layer, which reduces the number of parameters and thereby mitigates overfitting;

Finally comes the fully connected layer, which outputs highly refined features that are passed to the final classifier for classification.

Step 6 specifically includes: flattening the feature map and feeding it into the fully connected layer to obtain the prediction vector.

Step 7 specifically includes: applying the Sigmoid function to the prediction vector to obtain a prediction vector whose values lie in the range 0 to 1.

Step 8 specifically includes: because a serial number always has ten characters, the vector is split into 10 strips, the maximum value is found in each strip and mapped to the corresponding label vector, and the prediction result is then output.
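Steps 7 and 8 amount to a small decoding routine, sketched below under stated assumptions: the 36-symbol `ALPHABET` and the name `decode_prediction` are hypothetical, and the prediction vector is assumed to be laid out as ten consecutive strips of equal length.

```python
import numpy as np

# Assumed character set; the source does not list the classes.
ALPHABET = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ"

def decode_prediction(logits, alphabet=ALPHABET, positions=10):
    """Sigmoid, split into `positions` strips, arg-max per strip."""
    z = np.asarray(logits, dtype=np.float64)
    probs = 1.0 / (1.0 + np.exp(-z))               # step 7: values in (0, 1)
    strips = probs.reshape(positions, len(alphabet))  # step 8: ten strips
    return "".join(alphabet[i] for i in strips.argmax(axis=1))
```

Since each strip yields exactly one character, the whole serial number is read without segmenting the image into individual characters.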

Compared with the prior art, the beneficial effects of the present invention are:

1. The invention uses a homography-based banknote image registration method, so that input banknote images taken at different angles, against different backgrounds, and at different illumination levels and resolutions are all output as a uniform top view of the banknote.

2. The invention uses the image texture features of RMB banknotes together with the preprocessed, registered banknote image to quickly determine whether the banknote is face up or face down, and thus quickly locates the RMB serial number.

3. The invention no longer requires the tedious segmentation of the RMB serial number image, which greatly improves recognition efficiency; the character recognition accuracy reaches 99.84%.

附图说明Description of drawings

图1为本发明人民币冠字号识别系统框图;Fig. 1 is the block diagram of the identification system of RMB serial number of the present invention;

图2为本发明基于单应矩阵的纸币图像配准过程图;Fig. 2 is the banknote image registration process diagram based on the homography matrix of the present invention;

图3为本发明深度卷积神经网络结构图;Fig. 3 is the deep convolutional neural network structure diagram of the present invention;

图4为本发明提取人民币冠字号图像示例图;Fig. 4 is an example diagram of the present invention extracting the image of the renminbi serial number;

图5为本发明瓶颈模块结构图;Fig. 5 is the bottleneck module structure diagram of the present invention;

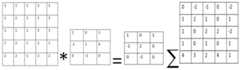

图6为本发明卷积计算过程图;Fig. 6 is the convolution calculation process diagram of the present invention;

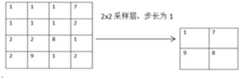

图7为本发明池化计算过程图;Fig. 7 is the pooling calculation process diagram of the present invention;

图8为本发明捷径残差块结构图。FIG. 8 is a structural diagram of the short-cut residual block of the present invention.

Detailed description

The present invention discloses a multi-label identification method for RMB serial numbers based on a deep convolutional neural network. Those skilled in the art may draw on the content herein and adapt the parameters as appropriate to implement it. It should be noted in particular that all similar substitutions and modifications are obvious to those skilled in the art and are deemed to be included in the present invention. The method and its application have been described by way of preferred embodiments, and those concerned can clearly modify, or appropriately change and combine, the methods and applications described herein without departing from the content, spirit, and scope of the invention in order to implement and apply its technology.

Example:

As shown in Figs. 1-8, a multi-label identification method for RMB serial numbers based on a deep convolutional neural network comprises the following steps. First, the banknote image is preprocessed, including correcting severe overexposure, extracting the banknote image, and registering the banknote image. The rectangular region containing the banknote is extracted to remove irrelevant background information; the image is registered with a homography matrix to correct tilt and remove perspective effects; whether the banknote is upside down is judged first from the left-right distribution of pixels in the binarized banknote image, and whether it is face up or face down is then judged from the color hue of the lower-left region. This preprocessing is well suited to the subsequent serial number localization and recognition and places few constraints on the input banknote image: at any angle, illumination level, or resolution, as long as the banknote is clearly recognizable to the eye, an upright banknote image can be output.

The serial number is located with a two-step method: the first step uses prior knowledge for coarse localization, and the second step locates the serial number precisely.

Finally, a deep convolutional neural network is trained on the images and used for prediction, achieving a high recognition rate.

To output a uniform top view of the banknote, a homography-based banknote image registration method is used to correct tilt and remove perspective effects. Let the banknote image before registration be A and the image obtained after registration be B, where B = HA and H is the homography matrix.

The banknote image registration proceeds as follows:

Establish the correspondence between the four corner coordinates of the original image and those of the registered image;

Compute the homography matrix H from the coordinate correspondences;

Use H to find, for each point in B, the corresponding point in A;

Assign values to B by bilinear interpolation.
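The four registration steps can be sketched with NumPy alone, as below. This is an illustrative sketch rather than the patent's code: the function names, the corner ordering (top-left, top-right, bottom-right, bottom-left, each as `(x, y)`), and the normalization h33 = 1 are assumptions, and corner detection itself is taken as given.

```python
import numpy as np

def homography_from_corners(src, dst):
    """Solve the 3x3 matrix H (h33 = 1) mapping four src points to dst."""
    rows, rhs = [], []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y]); rhs.append(u)
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y]); rhs.append(v)
    h = np.linalg.solve(np.array(rows, float), np.array(rhs, float))
    return np.append(h, 1.0).reshape(3, 3)

def register(image, corners, out_h, out_w):
    """Warp grayscale image A so its `corners` fill an out_h x out_w image B."""
    dst = [(0, 0), (out_w - 1, 0), (out_w - 1, out_h - 1), (0, out_h - 1)]
    H_inv = np.linalg.inv(homography_from_corners(corners, dst))

    # For every pixel of B, find the corresponding (sub-pixel) point in A.
    u, v = np.meshgrid(np.arange(out_w), np.arange(out_h))
    pts = H_inv @ np.stack([u.ravel(), v.ravel(), np.ones(u.size)])
    x = np.clip(pts[0] / pts[2], 0, image.shape[1] - 1)
    y = np.clip(pts[1] / pts[2], 0, image.shape[0] - 1)

    # Bilinear interpolation between the four neighbouring pixels of A.
    x0 = np.minimum(np.floor(x).astype(int), image.shape[1] - 2)
    y0 = np.minimum(np.floor(y).astype(int), image.shape[0] - 2)
    fx, fy = x - x0, y - y0
    img = image.astype(float)
    out = (img[y0, x0] * (1 - fx) * (1 - fy)
           + img[y0, x0 + 1] * fx * (1 - fy)
           + img[y0 + 1, x0] * (1 - fx) * fy
           + img[y0 + 1, x0 + 1] * fx * fy)
    return out.reshape(out_h, out_w)
```

Mapping backwards from B to A, rather than forwards, guarantees that every output pixel receives a value and is why interpolation is needed: the source point generally falls between pixel centres.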

In addition, based on the image texture of RMB banknotes, whether the banknote is upside down is judged from the distribution of pixel values in the left and right blocks of its binarized image, and whether it is face up or face down is then judged from the hue of the lower-left region.

The structure of the deep convolutional neural network model is as follows:

The model begins with four layers: a convolution layer, a batch normalization layer, an activation layer, and a max pooling layer.

The input image size is (128, 64, 3). A convolution operation extracts features from the input image to produce a convolution layer, with a 7x7 kernel, a kernel depth of 64, and a stride of 2; the convolution layer consists of 32 feature maps, with (7x7+1)x32 = 1600 parameters in total.
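The parameter arithmetic can be checked with a short helper. The function `conv_params` is hypothetical and uses the usual convention of one bias per output feature map. The first call reproduces the formula quoted in the text, which corresponds to a single input channel and 32 kernels; the second shows, purely for comparison, what the same convention gives for a 3-channel input with 64 kernels, the other figures mentioned in the same passage. Which configuration the authors intend is not stated.

```python
def conv_params(kernel_h, kernel_w, in_channels, out_channels, bias=True):
    """Trainable parameters of one convolution layer."""
    per_kernel = kernel_h * kernel_w * in_channels + (1 if bias else 0)
    return per_kernel * out_channels

# Formula quoted in the text: (7x7 + 1) x 32.
count_from_text = conv_params(7, 7, in_channels=1, out_channels=32)

# Assumed alternative: RGB input (3 channels) and a kernel depth of 64.
count_rgb_64 = conv_params(7, 7, in_channels=3, out_channels=64)

print(count_from_text, count_rgb_64)
```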

The batch normalization layer normalizes its input without changing the input size.

The activation layer adds nonlinearity to the model, likewise without changing the input size.

The max pooling layer uses a 3x3 window with a stride of 2; it reduces the size of the model, speeds up computation, and improves the robustness of the extracted features. After the max pooling operation, a 64x32x32 feature map is obtained.
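The spatial sizes through these first four layers depend on the padding scheme, which the text does not specify. The sketch below assumes "same" padding, where the output length is the input length divided by the stride and rounded up; the helper name is hypothetical. Under that assumption a (128, 64, 3) input leaves the stem as a 32x16 map with 64 channels, so the 64x32x32 figure quoted in the text may follow a different padding or axis convention.

```python
import math

def same_padding_output(size, stride):
    """Output length of a conv or pooling layer with 'same' padding."""
    return math.ceil(size / stride)

height, width = 128, 64            # input size from the text: (128, 64, 3)
for stride in (2, 2):              # 7x7 conv (stride 2), 3x3 max pool (stride 2)
    height = same_padding_output(height, stride)
    width = same_padding_output(width, stride)

stem_output = (height, width, 64)  # 64 channels = kernel depth of the conv layer
print(stem_output)
```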

Next comes the bottleneck module, which contains nine layers: the first is a convolution layer, the second a batch normalization layer, the third an activation layer, the fourth a padding layer, the fifth a convolution layer, the sixth a batch normalization layer, the seventh an activation layer, the eighth a convolution layer, and the ninth a batch normalization layer.

A total of 16 bottleneck modules are stacked.

Next is the shortcut residual block: within the bottleneck module, a weighted connection spanning three layers links the first layer to the third layer, which effectively addresses the gradient divergence problem in deep networks. The weight of the shortcut path is set to 1.

Then comes the global average pooling layer, which reduces the number of parameters and thereby mitigates overfitting.

Finally comes the fully connected layer, which outputs highly refined features that are passed to the classifier for classification.

Convolution operation: the two most common computations in a convolutional neural network are the convolution operation and the pooling operation. Convolution is in essence an integral operation that describes the relationship between the input and output of a linear time-invariant system: the output is obtained by convolving the input with a function that characterizes the system. When F(n) is a signal of finite length N and S(n) is a signal of finite length M, the main way to compute the convolution F(n)*S(n) is direct computation, which applies the definition of convolution. If F(n) and S(n) are both real signals, this requires MN multiplications, so the complexity of computing the convolution is O(MN).
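The multiplication count is easy to confirm with a direct implementation of the discrete definition, in which output sample k is the sum of F(i)·S(k − i) over all valid i. The function name and the explicit counter below are illustrative additions.

```python
import numpy as np

def direct_convolution(f, s):
    """Full linear convolution by definition; also counts multiplications."""
    n, m = len(f), len(s)
    out = np.zeros(n + m - 1)
    multiplications = 0
    for i in range(n):          # every sample of F ...
        for j in range(m):      # ... meets every sample of S exactly once
            out[i + j] += f[i] * s[j]
            multiplications += 1
    return out, multiplications

result, count = direct_convolution([1.0, 2.0, 3.0], [0.0, 1.0, 0.5])
print(result, count)
```

For N = M = 3 the loop performs 9 multiplications, matching the MN figure in the text.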

Image convolution: a trainable convolution kernel $x$ is convolved with the input image and a bias $b$ is added, giving the convolutional feature layer $f(xW_{ij} + b)$, where $f$ is the ReLU function.
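A single-channel version of this computation is sketched below. It follows the convention of most deep learning frameworks, which slide the kernel without flipping it (strictly a cross-correlation), and it uses no padding; both choices, along with the function name, are assumptions rather than details from the text.

```python
import numpy as np

def conv2d_relu(image, kernel, bias=0.0, stride=1):
    """Slide `kernel` over `image`, add `bias`, then apply ReLU."""
    kh, kw = kernel.shape
    out_h = (image.shape[0] - kh) // stride + 1
    out_w = (image.shape[1] - kw) // stride + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            top, left = i * stride, j * stride
            patch = image[top:top + kh, left:left + kw]
            out[i, j] = np.sum(patch * kernel) + bias
    return np.maximum(out, 0.0)   # ReLU: negative responses become 0
```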

Pooling operation: the convolution operation extracts the most basic features of the image. In theory these features could be used directly for classification, but they do not necessarily represent abstract concepts, and their data volume is large, which makes overfitting likely. A higher level of abstraction is therefore needed, namely the pooling operation, which acts as a second stage of feature extraction. Its benefits are that more feature information can be detected and the computational complexity is reduced.

Pooling scans a window over the tensor and reduces the number of elements by taking, for example, the maximum or the average of each window. If contiguous pixels in the image are chosen as the pooling region and only features produced by the same hidden unit are pooled, the pooling units are translation invariant, which is very important for recognition.
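A minimal max pooling routine over one feature map is shown below, using the 3x3 window and stride of 2 given earlier in the text as defaults. The function name and the absence of padding are assumptions.

```python
import numpy as np

def max_pool2d(feature_map, window=3, stride=2):
    """Keep the maximum of each window; no padding is applied."""
    h, w = feature_map.shape
    out_h = (h - window) // stride + 1
    out_w = (w - window) // stride + 1
    out = np.empty((out_h, out_w), dtype=feature_map.dtype)
    for i in range(out_h):
        for j in range(out_w):
            top, left = i * stride, j * stride
            out[i, j] = feature_map[top:top + window, left:left + window].max()
    return out

pooled = max_pool2d(np.arange(25).reshape(5, 5))
print(pooled)
```

Replacing `.max()` with `.mean()` would give average pooling, the other variant mentioned in the text.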

Bottleneck module: the bottleneck module contains three convolution layers. The first is a 1x1 convolution that reduces the number of channels, so that the second, 3x3 convolution layer operates on a lower-dimensional input, which improves computational efficiency. The third, 1x1 convolution layer restores the channel dimension. The remaining layers mainly serve to optimize the model.
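The saving from the 1x1, 3x3, 1x1 arrangement can be illustrated by counting convolution weights. The channel widths used here (256 reduced to 64 and restored to 256) are assumed for illustration only, since the text does not give them, and biases are ignored.

```python
def conv_weights(kernel, c_in, c_out):
    """Weights in a kernel x kernel convolution, ignoring biases."""
    return kernel * kernel * c_in * c_out

def bottleneck_weights(channels, reduced):
    """1x1 reduce, 3x3 at the reduced width, 1x1 restore."""
    return (conv_weights(1, channels, reduced)
            + conv_weights(3, reduced, reduced)
            + conv_weights(1, reduced, channels))

plain = conv_weights(3, 256, 256)          # one 3x3 layer at full width
bottleneck = bottleneck_weights(256, 64)   # assumed widths: 256 -> 64 -> 256
print(plain, bottleneck, round(plain / bottleneck, 2))
```

With these assumed widths the bottleneck uses roughly one eighth of the weights of a single full-width 3x3 layer.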

Shortcut residual block: as network depth increases, convolutional neural networks perform better and better, but training becomes more difficult. This is mainly because, in network training based on stochastic gradient descent, back-propagating the error signal through many layers very easily leads to vanishing gradients. Special weight initialization strategies, batch normalization, and similar methods can alleviate the problem, but once the model converges, the training error rises rather than falls as the network becomes deeper. The shortcut residual block is a good solution to the training difficulty caused by network depth.

The residual is computed as follows:

$$y = F(x, w_f)\cdot T(x, w_t) + x\cdot C(x, w_c)$$

A traditional convolutional neural network has neither the $T$ term nor the $C$ term. $T(x, w_t)$ is a nonlinear transform, called the transform gate, which controls the strength of the transformation. $C(x, w_c)$ is also a nonlinear transform, called the carry gate, which controls how much of the original input signal is retained. In other words, $y$ is a weighted combination of $F$ and $x$, with $T$ and $C$ controlling the weights of the two terms, where $T + C = 1$. The residual block makes training the model easier than training the original function.
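The gated formula and the fixed-weight shortcut described earlier (shortcut weight set to 1) can be compared in a few lines. Both function names are hypothetical, and `transform` and `gate` stand in for the learned mappings F and T, which are not specified further in the text.

```python
import numpy as np

def gated_residual(x, transform, gate):
    """y = F(x) * T(x) + x * C(x), with C = 1 - T so that T + C = 1."""
    t = gate(x)
    c = 1.0 - t
    return transform(x) * t + x * c

def plain_residual(x, transform):
    """Shortcut with a fixed weight of 1: y = F(x) + x."""
    return transform(x) + x

x = np.array([1.0, -2.0, 3.0])
double = lambda v: 2.0 * v      # stand-in for the learned transform F
half_open = lambda v: np.full_like(v, 0.5)
print(gated_residual(x, double, half_open), plain_residual(x, double))
```

When the gate is fully closed (T = 0) the block passes its input through unchanged, which is what lets very deep stacks fall back to an identity mapping during training.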

The invention uses a homography-based banknote image registration method, so that input banknote images taken at different angles, against different backgrounds, and at different illumination levels and resolutions are all output as a uniform top view of the banknote. The invention uses the image texture features of RMB banknotes together with the preprocessed, registered banknote image to quickly determine whether the banknote is face up or face down, and thus quickly locates the RMB serial number. The invention no longer requires the tedious segmentation of the RMB serial number image, which greatly improves recognition efficiency; the character recognition accuracy reaches 99.84%.

The above is only a preferred embodiment of the present invention, but the scope of protection of the invention is not limited to it. Any equivalent replacement or change made by a person skilled in the art, within the technical scope disclosed by the invention and according to its technical solution and inventive concept, shall fall within the scope of protection of the present invention.

Claims (3)

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202010381442.0A CN111583502B (en) | 2020-05-08 | 2020-05-08 | Renminbi (RMB) crown word number multi-label identification method based on deep convolutional neural network |

Applications Claiming Priority (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202010381442.0A CN111583502B (en) | 2020-05-08 | 2020-05-08 | Renminbi (RMB) crown word number multi-label identification method based on deep convolutional neural network |

Publications (2)

| Publication Number | Publication Date |
|---|---|
| CN111583502A (en) | 2020-08-25 |
| CN111583502B (en) | 2022-06-03 |

Family

ID=72113338

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |
|---|---|---|---|
| CN202010381442.0A, Expired - Fee Related, CN111583502B (en) | Renminbi (RMB) crown word number multi-label identification method based on deep convolutional neural network | 2020-05-08 | 2020-05-08 |

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN111583502B (en) |

Families Citing this family (5)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN113033635B (en)* | 2021-03-12 | 2024-05-14 | 中钞长城金融设备控股有限公司 | Method and device for detecting invisible graphics context of coin |
| CN113240643B (en)* | 2021-05-14 | 2024-11-19 | 广州广电运通金融电子股份有限公司 | A banknote quality detection method, system and medium based on multispectral image |
| CN114202759B (en)* | 2021-12-10 | 2025-09-12 | 江苏国光信息产业股份有限公司 | Multi-currency banknote serial number recognition method and device based on deep learning |
| CN116129448A (en)* | 2022-11-29 | 2023-05-16 | 中国银行股份有限公司 | Commemorative coin identification method and device |
| CN115984866B (en)* | 2022-12-03 | 2025-05-23 | 广州大有网络科技有限公司 | A calligraphy stroke intelligent extraction system and method |

Citations (3)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN105957238A (en)* | 2016-05-20 | 2016-09-21 | 聚龙股份有限公司 | Banknote management method and system |
| CN108320374A (en)* | 2018-02-08 | 2018-07-24 | 中南大学 | A kind of multinational paper money number character identifying method based on finger image |
| CN111046866A (en)* | 2019-12-13 | 2020-04-21 | 哈尔滨工程大学 | Method for detecting RMB crown word number region by combining CTPN and SVM |

Family Cites Families (14)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| JP3321267B2 (en)* | 1993-10-21 | 2002-09-03 | グローリー工業株式会社 | Mask Optimization Method Using Genetic Algorithm in Pattern Recognition |
| US7089218B1 (en)* | 2004-01-06 | 2006-08-08 | Neuric Technologies, Llc | Method for inclusion of psychological temperament in an electronic emulation of the human brain |
| CN102024262B (en)* | 2011-01-06 | 2012-07-04 | 西安电子科技大学 | Method for performing image segmentation by using manifold spectral clustering |
| CN102800148B (en)* | 2012-07-10 | 2014-03-26 | 中山大学 | RMB sequence number identification method |
| CN104036271B (en)* | 2014-06-11 | 2017-08-25 | 新达通科技股份有限公司 | The recognition methods of a kind of character and paper money number and device and ATM |
| CN106056751B (en)* | 2016-05-20 | 2019-04-12 | 聚龙股份有限公司 | The recognition methods and system of serial number |
| WO2018168521A1 (en)* | 2017-03-14 | 2018-09-20 | Omron Corporation | Learning result identifying apparatus, learning result identifying method, and program therefor |
| CN107025716B (en)* | 2017-06-05 | 2019-12-10 | 深圳怡化电脑股份有限公司 | Method and device for detecting contamination of paper money crown word number |
| CN107358575A (en)* | 2017-06-08 | 2017-11-17 | 清华大学 | A kind of single image super resolution ratio reconstruction method based on depth residual error network |
| CN108427921A (en)* | 2018-02-28 | 2018-08-21 | 辽宁科技大学 | A kind of face identification method based on convolutional neural networks |
| CN108734142A (en)* | 2018-05-28 | 2018-11-02 | 西南交通大学 | A kind of core in-pile component surface roughness appraisal procedure based on convolutional neural networks |
| CN110033021B (en)* | 2019-03-07 | 2021-04-06 | 华中科技大学 | Fault classification method based on one-dimensional multipath convolutional neural network |
| CN110276881A (en)* | 2019-05-10 | 2019-09-24 | 广东工业大学 | A Banknote Serial Number Recognition Method Based on Convolutional Recurrent Neural Network |
| CN110517277B (en)* | 2019-08-05 | 2022-12-06 | 西安电子科技大学 | SAR image segmentation method based on PCANet and high-order CRF |

- 2020-05-08: CN application CN202010381442.0A, patent CN111583502B (en), not active, Expired - Fee Related

Also Published As

| Publication number | Publication date |

|---|---|

| CN111583502A (en) | 2020-08-25 |

Similar Documents

| Publication | Title | Publication Date |

|---|---|---|

| CN111583502B (en) | Renminbi (RMB) crown word number multi-label identification method based on deep convolutional neural network | |

| CN111401372B (en) | A method for extracting and identifying image and text information from scanned documents | |

| Doush et al. | Currency recognition using a smartphone: Comparison between color SIFT and gray scale SIFT algorithms | |

| KR102207533B1 (en) | Bill management method and system | |

| CN110298376B (en) | An Image Classification Method of Bank Notes Based on Improved B-CNN | |

| CN109522908A (en) | Image significance detection method based on area label fusion | |

| CN112381775A (en) | Image tampering detection method, terminal device and storage medium | |

| CN118552973B (en) | Bill identification method, device, equipment and storage medium | |

| CN103345631B (en) | Image characteristics extraction, training, detection method and module, device, system | |

| CN106683073B (en) | A license plate detection method, camera and server | |

| CN110569782A (en) | A target detection method based on deep learning | |

| CN108197644A (en) | A kind of image-recognizing method and device | |

| CN109977899B (en) | A method and system for training, reasoning and adding new categories of item recognition | |

| CN110210297B (en) | Method for locating and extracting Chinese characters in customs clearance image | |

| CN109740572A (en) | A face detection method based on local color texture features | |

| CN110046617A (en) | A kind of digital electric meter reading self-adaptive identification method based on deep learning | |

| CN113688821A (en) | OCR character recognition method based on deep learning | |

| CN112560858B (en) | Character and picture detection and rapid matching method combining lightweight network and personalized feature extraction | |

| CN112365451A (en) | Method, device and equipment for determining image quality grade and computer readable medium | |

| CN110852311A (en) | Three-dimensional human hand key point positioning method and device | |

| Lv et al. | Research on plant leaf recognition method based on multi-feature fusion in different partition blocks | |

| CN114581928B (en) | A table recognition method and system | |

| CN119338827A (en) | Surface detection method and system for precision fasteners | |

| CN114444565B (en) | Image tampering detection method, terminal equipment and storage medium | |

| CN110991201B (en) | Bar code detection method and related device | |

Legal Events

| Date | Code | Title | Description |

|---|---|---|---|

| | PB01 | Publication | |

| | SE01 | Entry into force of request for substantive examination | |

| | GR01 | Patent grant | |

| | CF01 | Termination of patent right due to non-payment of annual fee | Granted publication date: 2022-06-03 |