CN111444801A - A real-time detection method for infrared target of unmanned aerial vehicle - Google Patents


- Publication number

- CN111444801A (application CN202010191052.7A)

- Authority

- CN

- China


- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Pending


Classifications

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V20/00—Scenes; Scene-specific elements

- G06V20/10—Terrestrial scenes

- G06V20/13—Satellite images

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06N—COMPUTING ARRANGEMENTS BASED ON SPECIFIC COMPUTATIONAL MODELS

- G06N3/00—Computing arrangements based on biological models

- G06N3/02—Neural networks

- G06N3/04—Architecture, e.g. interconnection topology

- G06N3/045—Combinations of networks

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06N—COMPUTING ARRANGEMENTS BASED ON SPECIFIC COMPUTATIONAL MODELS

- G06N3/00—Computing arrangements based on biological models

- G06N3/02—Neural networks

- G06N3/08—Learning methods

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V20/00—Scenes; Scene-specific elements

- G06V20/10—Terrestrial scenes

- G06V20/194—Terrestrial scenes using hyperspectral data, i.e. more or other wavelengths than RGB

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V2201/00—Indexing scheme relating to image or video recognition or understanding

- G06V2201/07—Target detection

Landscapes

- Engineering & Computer Science (AREA)

- Physics & Mathematics (AREA)

- Theoretical Computer Science (AREA)

- General Physics & Mathematics (AREA)

- Computing Systems (AREA)

- General Engineering & Computer Science (AREA)

- Biophysics (AREA)

- Computational Linguistics (AREA)

- Data Mining & Analysis (AREA)

- Evolutionary Computation (AREA)

- General Health & Medical Sciences (AREA)

- Molecular Biology (AREA)

- Artificial Intelligence (AREA)

- Biomedical Technology (AREA)

- Life Sciences & Earth Sciences (AREA)

- Mathematical Physics (AREA)

- Software Systems (AREA)

- Health & Medical Sciences (AREA)

- Astronomy & Astrophysics (AREA)

- Remote Sensing (AREA)

- Multimedia (AREA)

- Image Processing (AREA)

- Traffic Control Systems (AREA)

- Aiming, Guidance, Guns With A Light Source, Armor, Camouflage, And Targets (AREA)

Description

Technical Field

The present invention belongs to the technical field of real-time UAV monitoring, and in particular relates to a real-time detection method for infrared targets of unmanned aerial vehicles (UAVs).

Background Art

Military applications of UAVs have become increasingly mature and widespread. With the rapid development of flight control, communication, positioning and navigation technologies, many more application fields for UAVs have emerged, such as film and television aerial photography, recreational selfies, agricultural plant protection and traffic management. However, at night without light or in harsh weather, a UAV must detect targets accurately at long range, and devices that rely on visible-light illumination or low-light night vision are severely limited in these conditions.

An infrared thermal imaging system forms images from infrared radiation. It does not depend on a light source, is little affected by weather, has a long detection range and can recognize and detect targets in complete darkness at night, which gives it strong application value in search and rescue, military and driver-assistance applications. Thermal imaging gives UAVs much stronger capabilities for quickly finding people and locating heat sources at night, making them an important tool for post-disaster search and rescue, fire-source confirmation and routine police searches.

In the future, "intelligence" needs to be added to the combination of "thermal imaging + UAV". At present, a thermal imaging UAV is still only a data collection tool: its thermal images are processed either manually or by other machines, still at the stage of manual or automated post-processing, and the ideal of real-time intelligence has not yet been realized. The future trend should therefore be to load intelligent analysis functions directly onto the thermal imaging UAV, so that it can not only fly and see but also think. With this added intelligence, the UAV becomes both a collection tool and an analysis tool, and combining the two better supports scientific decision-making.

Most existing target detection techniques are designed for visible-light conditions, and there is little research or practice on target detection in complete darkness or in rain and fog. In addition, research on intelligent real-time target detection from the UAV perspective is still in its infancy, with few published results.

Summary of the Invention

The object of the present invention is to provide a real-time detection method for infrared targets of UAVs, addressing the shortcoming described above: most existing target detection techniques are designed for visible-light conditions, while target detection in complete darkness or in rain and fog lacks research and practice.

The technical solution adopted by the present invention is as follows: a real-time detection method for infrared targets of a UAV, comprising the following steps:

(1) Construct the IT-YOLO UAV infrared target detection network;

(2) Collect and build a UAV infrared target detection dataset;

(3) Train a real-time UAV infrared target detection model;

(4) Detect infrared targets with the UAV.

In the technical solution of the present application, the backbone feature extraction network of the lightweight Tiny-YOLOV3 target detection network is improved and its detection layer is replaced, yielding the IT-YOLO UAV infrared target detection network. Infrared imaging is used to collect a UAV infrared target detection dataset from the UAV's viewpoint, and a real-time UAV infrared target detection model is trained on it. The model is applied to detect targets in conditions that visible-light cameras and ordinary digital night-vision devices cannot handle, such as darkness at night and rain or fog. Clear infrared images of pedestrians and vehicles can be captured at a range of about 100 meters for real-time, high-precision target detection. This overcomes the shortcoming that most existing target detection techniques are designed for visible-light conditions, while target detection in complete darkness or in rain and fog lacks research and practice.

Preferably, in step (1), the IT-YOLO UAV infrared target detection network improves both the backbone network and the detection network of the lightweight Tiny-YOLOV3 target detection network. IT-YOLO keeps the basic structure of Tiny-YOLOV3. In view of the characteristics of infrared images, it extracts features from shallow convolutional layers to improve the detection of small infrared targets, uses single-channel convolution kernels to reduce computation, and uses a CenterNet-based detection structure in the detection part to reduce the false detection rate and increase detection speed.
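The saving from a single-channel first-layer kernel can be estimated with simple arithmetic. The sketch below assumes, as our own interpretation, that "single-channel" means the first layer reads one grayscale infrared channel instead of three RGB channels, and that this layer keeps the 3×3, 16-filter, stride-1 configuration of the standard Tiny-YOLOV3 first convolution at a 416×416 input. `conv_macs` is a hypothetical helper.

```python
def conv_macs(height: int, width: int, in_channels: int, out_channels: int, kernel: int) -> int:
    """Multiply-accumulate count of a stride-1, 'same'-padded 2-D convolution.

    Each output element needs kernel * kernel * in_channels multiplications,
    and there are height * width * out_channels output elements.
    """
    return height * width * out_channels * in_channels * kernel * kernel


# Assumed first-layer configuration: 3x3 kernels, 16 filters, 416x416 input.
rgb_macs = conv_macs(416, 416, in_channels=3, out_channels=16, kernel=3)
ir_macs = conv_macs(416, 416, in_channels=1, out_channels=16, kernel=3)

print(f"3-channel input: {rgb_macs:,} MACs")   # 74,760,192 MACs
print(f"1-channel input: {ir_macs:,} MACs")    # 24,920,064 MACs
print(f"reduction factor: {rgb_macs // ir_macs}x")
```

Under these assumptions the first layer's cost drops by a factor of three; the saving is confined to that layer, since later layers still receive 16 or more channels.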

Preferably, for feature extraction of distant, low-resolution small infrared targets, the shallow Conv4 layer of the Tiny-YOLOV3 network characterizes the semantic information of small infrared targets relatively effectively, whereas the receptive field of Conv3 is too small and that of Conv5 is too large, so that Conv5 picks up some background-noise interference. Therefore, to improve the detection of small infrared targets, the Maxpool3 layer of Tiny-YOLOV3 is replaced with a Conv4 layer and a Conv5 layer is added. In addition, an upsampling layer Upsample2 is added to the Tiny-YOLOV3 model: Conv7 is upsampled through Upsample2, and the Conv5 layer and Upsample2 are concatenated along the channel dimension, forming an additional feature layer in the feature pyramid. The convolution kernels in the first convolutional layer are then replaced with single-channel kernels to reduce computation and improve real-time performance. In the detection network, a CenterNet structure replaces the original YOLO detection layer, optimizing the detection method, reducing the false detection rate and further improving real-time performance. The result is the IT-YOLO UAV infrared target detection network.
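The new pyramid level combines an upsampled deep feature map with a shallower, higher-resolution one. Below is a minimal NumPy sketch of that fusion (nearest-neighbour 2× upsampling followed by channel-wise concatenation), assuming channel-first (C, H, W) arrays. The channel counts and the `conv7_like` / `conv5_like` names are hypothetical stand-ins, not values from the IT-YOLO configuration.

```python
import numpy as np


def upsample2x_nearest(x: np.ndarray) -> np.ndarray:
    """Nearest-neighbour 2x upsampling of a (C, H, W) feature map."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def fuse_levels(deep: np.ndarray, shallow: np.ndarray) -> np.ndarray:
    """Upsample `deep` by 2x and concatenate it with `shallow` along the channel axis."""
    up = upsample2x_nearest(deep)
    if up.shape[1:] != shallow.shape[1:]:
        raise ValueError(f"spatial mismatch: {up.shape[1:]} vs {shallow.shape[1:]}")
    return np.concatenate([up, shallow], axis=0)


rng = np.random.default_rng(0)
conv7_like = rng.standard_normal((128, 26, 26))  # hypothetical deep map
conv5_like = rng.standard_normal((64, 52, 52))   # hypothetical shallow map
fused = fuse_levels(conv7_like, conv5_like)
print(fused.shape)  # (192, 52, 52)
```

Concatenation (rather than addition) keeps both sources intact, so the following convolutions can weight the fine-grained shallow features and the semantically stronger deep features independently.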

Preferably, the feature pyramid network has three detection scales, with 13×13, 26×26 and 52×52 feature maps. Compared with the two scales of Tiny-YOLOV3 (13×13 and 26×26), the feature pyramid is extended to three detection scales (13×13, 26×26 and 52×52), and the CenterNet detection structure is used on all three scales.
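With a 416×416 input (the training resolution given for step (3)), the three scales correspond to downsampling strides of 32, 16 and 8. The short check below makes that arithmetic explicit; the stride values are inferred from the sizes rather than stated in the text.

```python
def grid_sizes(input_size: int = 416, strides: tuple[int, ...] = (32, 16, 8)) -> list[int]:
    """Return the side length of each detection grid for a square input."""
    sizes = []
    for stride in strides:
        if input_size % stride:
            raise ValueError(f"input size {input_size} is not divisible by stride {stride}")
        sizes.append(input_size // stride)
    return sizes


print(grid_sizes())                  # [13, 26, 52]  three scales (IT-YOLO)
print(grid_sizes(strides=(32, 16)))  # [13, 26]      two scales (Tiny-YOLOV3)
```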

Preferably, to further improve the detection accuracy of infrared targets in infrared images, improve real-time performance and reduce computation, the CenterNet detection structure is used on the three detection scales of the detection network, replacing the original anchor-box-based YOLO detection layer with an anchor-free approach. This makes the improved network better suited to detecting small infrared targets.

The basic idea of CenterNet is as follows. Anchor-box-based methods often produce a large number of incorrect bounding boxes because they lack additional supervision of the relevant cropped regions, and the original Tiny-YOLOV3 therefore needs a large number of anchor boxes in its detection layer. CenterNet, in contrast, is a single-stage keypoint detection model that represents each object as three keypoints: the center point and the top-left and bottom-right corners. This avoids generating a large number of anchor boxes, reducing computation and improving real-time performance while also improving detection precision and recall. The CenterNet used by IT-YOLO consists of two modules, cascade corner pooling and center pooling, which gather rich information from the top-left and bottom-right corners as well as more recognizable information from the central region.
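To give a sense of how many candidate boxes an anchor-based head evaluates, the sketch below counts anchor predictions per image, assuming three anchors per grid cell as in the standard Tiny-YOLOV3 configuration (six anchors split across its two scales). The counts are illustrative arithmetic, not measurements from this patent.

```python
def anchor_predictions(grid_sides: list[int], anchors_per_cell: int = 3) -> int:
    """Number of anchor-box predictions per image for square detection grids."""
    return sum(side * side * anchors_per_cell for side in grid_sides)


print(anchor_predictions([13, 26]))      # 2535   two scales
print(anchor_predictions([13, 26, 52]))  # 10647  three scales
```

Adding a 52×52 scale to an anchor-based head would roughly quadruple the number of candidate boxes that must be scored and filtered, which is part of the motivation for pairing the extra scale with an anchor-free detection structure.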

The center pooling module in CenterNet consists of two Conv-BN-ReLU layers, a left pooling layer, a right pooling layer, a top pooling layer and a bottom pooling layer. It serves the branch that predicts center keypoints, helping the center keypoint capture more of the object's central region and making the central region of a proposal easier to perceive. It is implemented by taking, at each position, the maximum responses in the horizontal and vertical directions and summing them. The cascade corner pooling module consists of two Conv-BN-ReLU layers, a left pooling layer, a Conv-BN layer and a top pooling layer; it extends the original corner pooling with the ability to perceive information inside the object. It predicts corners by combining the maximum responses along the object boundary and inside the object in the feature map.
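Center pooling can be sketched with directional cumulative maxima. In the NumPy sketch below (a single 2-D feature map per branch, with the Conv-BN-ReLU layers omitted), composing top and bottom pooling yields the maximum of each column, composing left and right pooling yields the maximum of each row, and the two are summed at every position. Feeding the same map to both branches in the usage line is a simplification; in CenterNet each branch has its own convolutional features.

```python
import numpy as np


def top_pool(f: np.ndarray) -> np.ndarray:
    """out[i, j] = max(f[i:, j]): running max scanned from the bottom row upwards."""
    return np.maximum.accumulate(f[::-1, :], axis=0)[::-1, :]


def bottom_pool(f: np.ndarray) -> np.ndarray:
    """out[i, j] = max(f[:i + 1, j]): running max scanned from the top row downwards."""
    return np.maximum.accumulate(f, axis=0)


def left_pool(f: np.ndarray) -> np.ndarray:
    """out[i, j] = max(f[i, j:]): running max scanned from the right column leftwards."""
    return np.maximum.accumulate(f[:, ::-1], axis=1)[:, ::-1]


def right_pool(f: np.ndarray) -> np.ndarray:
    """out[i, j] = max(f[i, :j + 1]): running max scanned from the left column rightwards."""
    return np.maximum.accumulate(f, axis=1)


def center_pool(vertical_feat: np.ndarray, horizontal_feat: np.ndarray) -> np.ndarray:
    """Sum of the column-wise and row-wise maxima passing through each position."""
    column_max = bottom_pool(top_pool(vertical_feat))   # max over the whole column
    row_max = right_pool(left_pool(horizontal_feat))    # max over the whole row
    return column_max + row_max


f = np.array([[1.0, 2.0],
              [3.0, 0.0]])
print(center_pool(f, f))
# [[5. 4.]
#  [6. 5.]]
```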

Preferably, when the CenterNet structure is used for detection, a scale-aware central region is used to adapt to objects of different sizes: it is relatively large for small objects and relatively small for large objects, and it determines whether a bounding box i is kept. Let ($tl_x$, $tl_y$) be the top-left corner of the box and ($br_x$, $br_y$) its bottom-right corner, and define a central region j whose top-left corner is ($ctl_x$, $ctl_y$) and bottom-right corner is ($cbr_x$, $cbr_y$). These coordinates satisfy

$$
\begin{aligned}
ctl_x &= \frac{(n+1)\,tl_x + (n-1)\,br_x}{2n}, & ctl_y &= \frac{(n+1)\,tl_y + (n-1)\,br_y}{2n},\\
cbr_x &= \frac{(n-1)\,tl_x + (n+1)\,br_x}{2n}, & cbr_y &= \frac{(n-1)\,tl_y + (n+1)\,br_y}{2n}
\end{aligned}
\tag{1}
$$

where n is odd and determines the size of the central region j: n = 3 when the bounding-box scale is less than 150, and n = 5 when it is greater than 150.
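Equation (1) and the n = 3 / n = 5 rule can be sketched as follows. Two points are assumptions: we take the box "scale" to be the square root of its area (so the 150 threshold corresponds to an area of 22,500), and we use the keep rule of the original CenterNet, in which a box is retained only if a detected center keypoint of the same class lies inside its central region. `Box`, `central_region` and `keep_box` are hypothetical names.

```python
import math
from typing import NamedTuple


class Box(NamedTuple):
    tl_x: float
    tl_y: float
    br_x: float
    br_y: float


def central_region(box: Box, scale_threshold: float = 150.0) -> Box:
    """Scale-aware central region of Equation (1)."""
    # Assumption: "scale" = sqrt(width * height); the text does not define it.
    scale = math.sqrt((box.br_x - box.tl_x) * (box.br_y - box.tl_y))
    n = 3 if scale < scale_threshold else 5  # larger boxes get a relatively smaller region
    d = 2 * n
    return Box(
        ((n + 1) * box.tl_x + (n - 1) * box.br_x) / d,
        ((n + 1) * box.tl_y + (n - 1) * box.br_y) / d,
        ((n - 1) * box.tl_x + (n + 1) * box.br_x) / d,
        ((n - 1) * box.tl_y + (n + 1) * box.br_y) / d,
    )


def keep_box(box: Box, center_x: float, center_y: float) -> bool:
    """Keep the box if the given center keypoint lies in its central region.

    The caller is responsible for passing a keypoint of the same class as the box.
    """
    region = central_region(box)
    return region.tl_x <= center_x <= region.br_x and region.tl_y <= center_y <= region.br_y


print(central_region(Box(0, 0, 90, 90)))    # Box(tl_x=30.0, tl_y=30.0, br_x=60.0, br_y=60.0)
print(central_region(Box(0, 0, 200, 200)))  # Box(tl_x=80.0, tl_y=80.0, br_x=120.0, br_y=120.0)
print(keep_box(Box(0, 0, 90, 90), 45, 45), keep_box(Box(0, 0, 90, 90), 10, 10))  # True False
```

For n = 3 the central region is the middle third of the box in each direction, and for n = 5 the middle fifth, which is how the rule gives small boxes a relatively larger region.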

Preferably, in step (2), a UAV carrying an infrared thermal imaging platform shoots at low and high altitudes at night, capturing clear, top-down infrared images suitable for recognition and detection. A total of 8000 infrared thermal images of night scenes from the UAV perspective are collected as the UAV infrared target detection dataset.

More preferably, the UAV infrared target detection dataset is divided into a training set and a test set at a ratio of 5:1, and the YOLO-MARK tool is used to annotate the two classes of targets to be detected: pedestrians and vehicles. For model training, all image samples in the dataset are resized to 416×416 pixels. Training uses mini-batches of 100 images, with the weights updated once per batch; the weight decay is set to 0.0005, the momentum to 0.9 and the initial learning rate to 0.001. The IT-YOLO network is trained for 20,000 iterations and a model is saved every 2,000 iterations. The average loss of the final model falls below 0.2, and the model with the highest accuracy is selected as the real-time UAV infrared target detection model.
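The training settings in this step can be collected into a small configuration object. The sketch below is a hypothetical container (the field and function names are ours, not those of the authors' TensorFlow code); it also derives the 5:1 split of the 8000 images and the iterations at which checkpoints are saved.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    input_size: int = 416          # images resized to 416x416
    batch_size: int = 100          # one weight update per batch of 100 images
    weight_decay: float = 0.0005
    momentum: float = 0.9
    learning_rate: float = 0.001   # initial value
    max_iterations: int = 20_000
    checkpoint_every: int = 2_000


def split_counts(total: int, train_parts: int = 5, test_parts: int = 1) -> tuple[int, int]:
    """Number of training and test images for a train:test ratio (rounded)."""
    n_train = round(total * train_parts / (train_parts + test_parts))
    return n_train, total - n_train


def checkpoint_iterations(cfg: TrainConfig) -> list[int]:
    """Iterations after which a model snapshot is saved."""
    return list(range(cfg.checkpoint_every, cfg.max_iterations + 1, cfg.checkpoint_every))


cfg = TrainConfig()
print(split_counts(8000))               # (6667, 1333)
print(checkpoint_iterations(cfg)[:3])   # [2000, 4000, 6000]
print(len(checkpoint_iterations(cfg)))  # 10 candidate models to compare
```

Because 8000 is not divisible by 6, a 5:1 split needs a rounding choice; the text does not say which one was used.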

The hardware platform for model training and testing is an Intel Core i7-8750H 2.2 GHz processor with 16 GB of RAM and a GeForce GTX 1080 8 GB graphics card; the software platform is Windows 10, TensorFlow 1.9.0, CUDA 9.2, Visual Studio 2017 and OpenCV 4.0.

Preferably, evaluation uses the average accuracy Mp, average false detection rate Mf, average missed detection rate Mm, average computation speed Mo and detection model size Mw. The rate metrics are calculated from the following counts: TP is the number of infrared targets correctly detected in an infrared image, FN the number of infrared targets not detected, FP the number of falsely detected infrared targets, and TN the number of infrared targets without false detections. Mo and Mw are obtained from actual testing and training. The Mp, Mf, Mm, Mo and Mw metrics are tested and the results analyzed.
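The metric formulas themselves do not survive in this text. As a stand-in, the sketch below uses common definitions that are our assumption, not the patent's: accuracy Mp = TP / (TP + FP), false detection rate Mf = FP / (TP + FP) and missed detection rate Mm = FN / (TP + FN), computed from counts summed over the test set. TN is not used in these definitions. `Counts` and `detection_metrics` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Counts:
    tp: int  # targets correctly detected
    fp: int  # false detections
    fn: int  # targets missed


def detection_metrics(counts: Counts) -> dict[str, float]:
    """Assumed definitions of Mp, Mf and Mm; returns 0.0 when a denominator is zero."""
    detections = counts.tp + counts.fp
    targets = counts.tp + counts.fn
    return {
        "Mp": counts.tp / detections if detections else 0.0,
        "Mf": counts.fp / detections if detections else 0.0,
        "Mm": counts.fn / targets if targets else 0.0,
    }


# 90 correct detections, 10 false alarms, 5 missed targets:
# Mp = 0.9, Mf = 0.1, Mm is about 0.053
print(detection_metrics(Counts(tp=90, fp=10, fn=5)))
```

Under these definitions Mp and Mf always sum to one; if the original specification defines Mf over a different denominator (for example one involving TN), that relationship would not hold.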

A 600-frame infrared video captured from the UAV perspective is used for actual testing, in which detected pedestrians and vehicles are identified.
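The average computation speed Mo over such a video can be measured as frames processed per second of wall-clock time. In the sketch below, `detect` stands for any per-frame inference callable (a hypothetical placeholder, not an API from the patent), and frames are passed pre-decoded so that video decoding stays outside the timed region.

```python
import time
from typing import Any, Callable, Sequence


def average_fps(frames: Sequence[Any], detect: Callable[[Any], Any]) -> float:
    """Average frames per second of `detect` over pre-decoded frames."""
    if not frames:
        raise ValueError("no frames to time")
    start = time.perf_counter()
    for frame in frames:
        detect(frame)
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed if elapsed > 0 else float("inf")


# Usage with a dummy detector on 600 placeholder frames:
fps = average_fps([None] * 600, lambda frame: None)
print(f"{fps:.0f} FPS")
```

For GPU inference, asynchronous execution means a real measurement should also synchronize the device before reading the clock and discard a few warm-up frames.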

Based on the actual test results, the method is compared with currently representative target detection networks using the same hardware and software platforms, dataset and training parameters as for model training and testing; the above metrics are tested and the results analyzed.

In summary, by adopting the above technical solution, the beneficial effects of the present invention are as follows:

1. The invention is applied to UAV target detection in conditions that visible-light cameras and ordinary digital night-vision devices cannot handle, such as darkness at night and weather such as rain and fog. Clear infrared images of pedestrians and vehicles can be captured at about 100 meters for real-time, high-precision target detection;

2. The IT-YOLO UAV infrared target detection network keeps the basic structure of Tiny-YOLOV3. In view of the characteristics of infrared images, it extracts shallow convolutional features to improve the detection of small infrared targets, uses single-channel convolution kernels to reduce computation, and uses a CenterNet-based detection structure to reduce the false detection rate and increase detection speed;

3. An additional feature layer is added to the feature pyramid, extending it from the two scales of Tiny-YOLOV3 (13×13 and 26×26) to three detection scales (13×13, 26×26 and 52×52). The kernels in the first convolutional layer are replaced with single-channel kernels to reduce computation and improve real-time performance, and in the detection network the CenterNet structure replaces the original YOLO detection layer, optimizing detection, reducing the false detection rate and further improving real-time performance. Together these changes form the IT-YOLO UAV infrared target detection network;

4. IT-YOLO adds a number of convolutional layers to the original Tiny-YOLOV3 network to extract low-level convolutional features and add a detection scale, and uses the CenterNet structure in the detection part to improve real-time performance and reduce the false detection rate. Because the network is deeper than the original, the trained model weights grow from 34 MB to 96 MB. IT-YOLO nevertheless remains a lightweight target detection network whose model size allows deployment on UAV onboard embedded systems and edge computing units;

5. At night, a UAV carrying an infrared thermal imaging platform captures images from the UAV's viewpoint at low and high altitudes to build the dataset. Pedestrian and vehicle targets are annotated for supervised learning and the data are divided into training and test sets, yielding an infrared target detection dataset suited to detecting ground pedestrians and vehicles from UAVs at night, from which a real-time UAV infrared target detection model is trained.

Description of Drawings

Fig. 1 is a flow chart of the real-time UAV infrared target detection method of the present invention;

Fig. 2 is a diagram of the Tiny-YOLOV3 network structure according to the present invention;

Fig. 3 is a diagram of the IT-YOLO network structure according to the present invention;

Fig. 4 is a diagram of the CenterNet network structure according to the present invention;

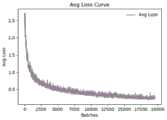

Fig. 5 shows the average loss during model training;

Fig. 6 shows real-time UAV infrared target detection test results;

Fig. 7 is a flow chart of the airborne thermal imaging target acquisition and detection platform.

Detailed Description of Embodiments

To make the objectives, technical solutions and advantages of the present invention clearer, the invention is described in further detail below with reference to the drawings and embodiments. It should be understood that the specific embodiments described here only explain the invention and do not limit it.

Example 1

As shown in Fig. 1, a real-time detection method for infrared targets of a UAV comprises the following steps:

(1) Construct the IT-YOLO UAV infrared target detection network;

(2) Collect and build a UAV infrared target detection dataset;

(3) Train a real-time UAV infrared target detection model;

(4) Detect infrared targets with the UAV.

In the technical solution of the present application, the backbone feature extraction network of the lightweight Tiny-YOLOV3 target detection network is improved and its detection layer is replaced, yielding the IT-YOLO UAV infrared target detection network. Infrared imaging is used to collect a UAV infrared target detection dataset from the UAV's viewpoint, and a real-time UAV infrared target detection model is trained on it. The model is applied to detect targets in conditions that visible-light cameras and ordinary digital night-vision devices cannot handle, such as darkness at night and rain or fog. Clear infrared images of pedestrians and vehicles can be captured at a range of about 100 meters for real-time, high-precision target detection. This overcomes the shortcoming that most existing target detection techniques are designed for visible-light conditions, while target detection in complete darkness or in rain and fog lacks research and practice.

Example 2

As shown in Figs. 1-4, on the basis of Example 1, in step (1) the IT-YOLO UAV infrared target detection network improves both the backbone network and the detection network of the lightweight Tiny-YOLOV3 target detection network. IT-YOLO keeps the basic structure of Tiny-YOLOV3. In view of the characteristics of infrared images, it extracts features from shallow convolutional layers to improve the detection of small infrared targets, uses single-channel convolution kernels to reduce computation, and uses a CenterNet-based detection structure in the detection part to reduce the false detection rate and increase detection speed.

For feature extraction of distant, low-resolution small infrared targets, the shallow Conv4 layer of the Tiny-YOLOV3 network characterizes the semantic information of small infrared targets relatively effectively, whereas the receptive field of Conv3 is too small and that of Conv5 is too large, so that Conv5 picks up some background-noise interference. Therefore, to improve the detection of small infrared targets, the Maxpool3 layer of Tiny-YOLOV3 is replaced with a Conv4 layer and a Conv5 layer is added. In addition, an upsampling layer Upsample2 is added to the Tiny-YOLOV3 model: Conv7 is upsampled through Upsample2, and the Conv5 layer and Upsample2 are concatenated along the channel dimension, forming an additional feature layer in the feature pyramid. The convolution kernels in the first convolutional layer are then replaced with single-channel kernels to reduce computation and improve real-time performance. In the detection network, a CenterNet structure replaces the original YOLO detection layer, optimizing the detection method, reducing the false detection rate and further improving real-time performance. The result is the IT-YOLO UAV infrared target detection network.

The feature pyramid network has three detection scales, with 13×13, 26×26 and 52×52 feature maps. Compared with the two scales of Tiny-YOLOV3 (13×13 and 26×26), the feature pyramid is extended to three detection scales (13×13, 26×26 and 52×52), and the CenterNet detection structure is used on all three scales.

To further improve the detection accuracy of infrared targets in infrared images, improve real-time performance and reduce computation, the CenterNet detection structure is used on the three detection scales of the detection network, replacing the original anchor-box-based YOLO detection layer with an anchor-free approach. This makes the improved network better suited to detecting small infrared targets.

When the CenterNet structure is used for detection, a scale-aware central region is used to adapt to objects of different sizes: it is relatively large for small objects and relatively small for large objects, and it determines whether a bounding box i is kept. Let ($tl_x$, $tl_y$) be the top-left corner of the box and ($br_x$, $br_y$) its bottom-right corner, and define a central region j whose top-left corner is ($ctl_x$, $ctl_y$) and bottom-right corner is ($cbr_x$, $cbr_y$). These coordinates satisfy

$$
\begin{aligned}
ctl_x &= \frac{(n+1)\,tl_x + (n-1)\,br_x}{2n}, & ctl_y &= \frac{(n+1)\,tl_y + (n-1)\,br_y}{2n},\\
cbr_x &= \frac{(n-1)\,tl_x + (n+1)\,br_x}{2n}, & cbr_y &= \frac{(n-1)\,tl_y + (n+1)\,br_y}{2n}
\end{aligned}
\tag{1}
$$

where n is odd and determines the size of the central region j: n = 3 when the bounding-box scale is less than 150, and n = 5 when it is greater than 150.

Example 3

As shown in Figs. 1-5, on the basis of Example 1, a UAV carrying an infrared thermal imaging platform shoots at low and high altitudes at night, capturing clear, top-down infrared images suitable for recognition and detection. A total of 8000 infrared thermal images of night scenes from the UAV perspective are collected as the UAV infrared target detection dataset.

The UAV infrared target detection dataset is divided into a training set and a test set at a ratio of 5:1, and the YOLO-MARK tool is used to annotate the two classes of targets to be detected: pedestrians and vehicles. For model training, all image samples in the dataset are resized to 416×416 pixels. Training uses mini-batches of 100 images, with the weights updated once per batch; the weight decay is set to 0.0005, the momentum to 0.9 and the initial learning rate to 0.001. The IT-YOLO network is trained for 20,000 iterations and a model is saved every 2,000 iterations. The average loss of the final model falls below 0.2, and the model with the highest accuracy is selected as the real-time UAV infrared target detection model.
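The weight decay, momentum and learning rate above interact in one update per batch. The NumPy sketch below shows one common formulation, momentum SGD with L2 weight decay added to the gradient; the text does not state exactly which form the authors' TensorFlow training used, so treat this as an assumption. `sgd_momentum_step` is a hypothetical name.

```python
import numpy as np


def sgd_momentum_step(weights, grad, velocity, lr=0.001, momentum=0.9, weight_decay=0.0005):
    """One momentum-SGD update with L2 weight decay folded into the gradient.

    Returns the updated (weights, velocity); the inputs are not modified in place.
    """
    velocity = momentum * velocity - lr * (grad + weight_decay * weights)
    return weights + velocity, velocity


w = np.array([1.0, -2.0])
v = np.zeros_like(w)
g = np.array([0.5, 0.0])  # hypothetical batch gradient
w, v = sgd_momentum_step(w, g, v)
print(w)
```

For the second weight, whose gradient is zero, only the decay term acts: it moves the weight toward zero by lr × weight_decay = 5 × 10⁻⁷ of its value on this first step, which is why weight decay behaves as a gentle regularizer.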

The hardware platform for model training and testing is an Intel Core i7-8750H 2.2 GHz processor with 16 GB of RAM and a GeForce GTX 1080 8 GB graphics card; the software platform is Windows 10, TensorFlow 1.9.0, CUDA 9.2, Visual Studio 2017 and OpenCV 4.0.

Five metrics are used for evaluation: the average accuracy Mp, the average false detection rate Mf, the average missed detection rate Mm, the average operation speed Mo, and the detection model size Mw. Mp, Mf, and Mm are computed from the following counts: TP is the number of infrared targets correctly detected in the infrared image, FN is the number of infrared targets not detected, FP is the number of infrared targets falsely detected, and TN is the number of infrared targets with no false detection. Mo and Mw are obtained from actual testing and training. All five metrics are measured and the results analyzed.
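The source does not spell out the formulas for Mp, Mf, and Mm in this text, so the sketch below assumes standard confusion-matrix definitions (accuracy, false-positive rate, false-negative rate) built from the four counts, and assumes "average" means a mean of per-image rates over the test images. `Counts`, `image_metrics`, and `average_metrics` are hypothetical names; treat the results as illustrative rather than as the patent's own definitions.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Counts:
    tp: int  # infrared targets correctly detected
    fn: int  # infrared targets not detected (missed)
    fp: int  # falsely detected infrared targets
    tn: int  # the quantity the source calls TN (no false detection)


def _ratio(num: int, den: int) -> float:
    # Guard against images with no targets and no detections.
    return num / den if den else 0.0


def image_metrics(c: Counts) -> Dict[str, float]:
    """Per-image rates under ASSUMED standard confusion-matrix definitions."""
    return {
        "Mp": _ratio(c.tp + c.tn, c.tp + c.tn + c.fp + c.fn),  # accuracy
        "Mf": _ratio(c.fp, c.fp + c.tn),  # false-detection (false-positive) rate
        "Mm": _ratio(c.fn, c.tp + c.fn),  # missed-detection (false-negative) rate
    }


def average_metrics(per_image: List[Counts]) -> Dict[str, float]:
    """Mean of each per-image rate over the test images (averaging scheme assumed)."""
    if not per_image:
        raise ValueError("need at least one image")
    rows = [image_metrics(c) for c in per_image]
    return {k: sum(r[k] for r in rows) / len(rows) for k in ("Mp", "Mf", "Mm")}
```

For example, an image with TP = 8, FN = 2, FP = 1, TN = 9 gives Mp = 0.85, Mf = 0.1, and Mm = 0.2 under these assumed definitions.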

Example 4

As shown in Figures 1-6, on the basis of Example 3, a 600-frame infrared video captured from the UAV's viewpoint is used for a practical test in which pedestrians and vehicles are detected and recognized. The results are shown in Figure 6.
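The average operation speed Mo on a test video such as the 600-frame clip can be measured with a simple timing loop. In this sketch, `measure_fps` and the `detect` callable are hypothetical stand-ins for the trained network's inference call; frames could come from, for example, OpenCV's `cv2.VideoCapture(...).read()`, but any iterable works. Excluding a few warm-up frames from timing is an assumed convention, not something the source specifies.

```python
import time
from typing import Any, Callable, Iterable


def measure_fps(detect: Callable[[Any], Any], frames: Iterable[Any],
                warmup: int = 0) -> float:
    """Average frames per second of `detect` over `frames`.

    The first `warmup` frames are processed but excluded from timing.
    """
    frames = list(frames)
    for frame in frames[:warmup]:
        detect(frame)
    timed = frames[warmup:]
    if not timed:
        raise ValueError("no frames left to time after warm-up")
    start = time.perf_counter()
    for frame in timed:
        detect(frame)
    elapsed = time.perf_counter() - start
    # A perfectly instantaneous detector would give elapsed == 0.
    return len(timed) / elapsed if elapsed > 0 else float("inf")
```

Materializing the frames into a list keeps decoding time out of the measurement, at the cost of holding the whole clip in memory.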

Based on the practical test results, IT-YOLO is compared with currently representative object detection networks. Using the same hardware and software platforms, dataset, and training parameters as for model training and testing, the metrics above are measured for each network and the results analyzed. The results are shown in Table 1.

Table 1 Comparative analysis of overall performance test results

As Table 1 shows, the average detection rate of the IT-YOLO infrared target detection network reaches 95% of that of the YOLOV3 network, and exceeds that of the SSD300×300 network by 24%, the RetinaNet-50-500 network by 1%, and the Tiny-YOLOV3 network by 25%. Its average false detection rate is 5% lower than that of SSD300×300, 9% lower than RetinaNet-50-500, 10% lower than YOLOV3, and 6% lower than Tiny-YOLOV3. Its average missed detection rate is 6% higher than that of YOLOV3, but 19% lower than SSD300×300, 1% lower than RetinaNet-50-500, and 20% lower than Tiny-YOLOV3. Its average operation speed exceeds that of SSD300×300 by 68 FPS, RetinaNet-50-500 by 74 FPS, YOLOV3 by 60 FPS, and Tiny-YOLOV3 by 19 FPS. Its model weights occupy 96 MB, far less than those of SSD300×300, RetinaNet-50-500, and YOLOV3, though more than those of Tiny-YOLOV3; however, the detection accuracy of Tiny-YOLOV3 is insufficient for detecting infrared targets from a UAV.
Therefore, IT-YOLO meets the requirements of real-time target detection for UAV airborne thermal imaging systems in terms of detection accuracy, real-time performance, and model size, and is suitable for deployment on the latest Edge-TPU-type edge computing embedded devices.

Example 5

As shown in Figure 7, a UAV carrying an infrared thermal imaging detection module performs front-end infrared image acquisition. The system is intended for completely dark nighttime environments with no light source, and moderate rain or fog does not impair target detection and recognition. Because the thermal imager outputs a single-channel signal in AV format, a data acquisition board converts it into a single-channel digital image format. On the UAV platform, an embedded edge computing module such as the Google Edge TPU runs the lightweight deep-learning-based object detection network.
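The front-end conversion described above, from a digitized thermal-imager frame to a network input, might look like the following NumPy sketch. Assumptions: the capture board may deliver either a 2-D frame or a 3-channel frame with the grey level replicated, so 3-channel frames are averaged to one channel; a nearest-neighbour resize to 416×416 stands in for a library call such as `cv2.resize`; and, because Edge TPU models typically take 8-bit quantized inputs, `quantize_uint8` maps the [0, 1] image using a scale and zero point that would in practice be read from the compiled model's input details. All function names are hypothetical.

```python
import numpy as np


def to_single_channel(frame: np.ndarray) -> np.ndarray:
    """Return a 2-D array; a 3-channel frame is averaged across channels."""
    if frame.ndim == 2:
        return frame
    if frame.ndim == 3:
        return frame.mean(axis=2)
    raise ValueError(f"unexpected frame shape {frame.shape}")


def resize_nearest(img: np.ndarray, size: int = 416) -> np.ndarray:
    """Nearest-neighbour resize of a 2-D array to size x size."""
    h, w = img.shape
    rows = np.arange(size) * h // size  # source row for each output row
    cols = np.arange(size) * w // size  # source column for each output column
    return img[rows[:, None], cols[None, :]]


def preprocess(frame: np.ndarray, size: int = 416) -> np.ndarray:
    """Frame -> float32 tensor of shape (1, size, size, 1) scaled to [0, 1]."""
    gray = to_single_channel(frame).astype(np.float32)
    resized = resize_nearest(gray, size)
    return (resized / 255.0)[np.newaxis, :, :, np.newaxis]


def quantize_uint8(x: np.ndarray, scale: float, zero_point: int) -> np.ndarray:
    """Affine quantization q = round(x / scale) + zero_point, clipped to uint8."""
    q = np.round(x / scale) + zero_point
    return np.clip(q, 0, 255).astype(np.uint8)
```

Keeping one channel matches the single-channel digital format the text describes; if the deployed network expects three channels, the grey plane would simply be repeated.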

In Figure 5 of this application, "Avg Loss Curve" is the average loss curve of model training, "Avg Loss" is the average training loss, and "Batches" is the number of training batches.
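How the per-batch "Avg Loss" in Figure 5 is averaged is not stated. As an assumption, the sketch below uses the exponential moving average that Darknet-based YOLO training commonly reports (avg = 0.9 · avg + 0.1 · loss, seeded with the first batch loss), plus a hypothetical helper that finds the first batch at which the smoothed loss drops below the 0.2 level mentioned for the final model. Both function names are illustrative.

```python
from typing import List, Optional, Sequence


def average_loss_curve(losses: Sequence[float], alpha: float = 0.1) -> List[float]:
    """Exponentially smoothed loss, one value per batch (smoothing rule assumed)."""
    curve: List[float] = []
    avg: Optional[float] = None
    for loss in losses:
        # Seed with the first loss, then blend each new batch loss in.
        avg = loss if avg is None else (1 - alpha) * avg + alpha * loss
        curve.append(avg)
    return curve


def first_batch_below(curve: Sequence[float], threshold: float = 0.2) -> Optional[int]:
    """1-based batch number where the smoothed loss first drops below threshold."""
    for i, value in enumerate(curve):
        if value < threshold:
            return i + 1
    return None
```

The smoothing hides batch-to-batch noise, which is why such curves are usually plotted instead of the raw per-batch loss.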

The above descriptions are only preferred embodiments of the present invention and are not intended to limit it. Any modification, equivalent replacement, or improvement made within the spirit and principles of the present invention shall fall within its scope of protection.

Claims (10)

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202010191052.7A (CN111444801A) | 2020-03-18 | 2020-03-18 | A real-time detection method for infrared target of unmanned aerial vehicle |

Publications (1)

| Publication Number | Publication Date |
|---|---|
| CN111444801A | 2020-07-24 |

Family ID: 71653366

Family Applications (1)

| Application Number | Priority Date | Filing Date | Title | Status |
|---|---|---|---|---|
| CN202010191052.7A (CN111444801A) | 2020-03-18 | 2020-03-18 | A real-time detection method for infrared target of unmanned aerial vehicle | Pending |

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN111444801A |

Cited By (9)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN112016449A* | 2020-08-27 | 2020-12-01 | 西华大学 | Vehicle identification and detection method based on deep learning |
| CN112052802A* | 2020-09-09 | 2020-12-08 | 上海工程技术大学 | Front vehicle behavior identification method based on machine vision |
| CN112071058A* | 2020-08-14 | 2020-12-11 | 深延科技(北京)有限公司 | Road traffic monitoring and vehicle abnormity, contraband and fire detection method and system based on deep learning |
| CN112686340A* | 2021-03-12 | 2021-04-20 | 成都点泽智能科技有限公司 | Dense small target detection method based on deep neural network |
| CN112767297A* | 2021-02-05 | 2021-05-07 | 中国人民解放军国防科技大学 | Infrared unmanned aerial vehicle group target simulation method based on image derivation under complex background |
| CN113688748A* | 2021-08-27 | 2021-11-23 | 武汉大千信息技术有限公司 | Fire detection model and method |
| CN114140405A* | 2021-11-22 | 2022-03-04 | 国网河南省电力公司 | Insulator infrared image detection method based on CenterNet network |
| CN115240069A* | 2022-07-19 | 2022-10-25 | 大连理工大学 | A real-time obstacle detection method in foggy scene |
| CN115482479A* | 2022-10-24 | 2022-12-16 | 云南师范大学 | Method, system, electronic device and storage medium for detecting tiny target by unmanned aerial vehicle |

Citations (4)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| US20180284743A1* | 2016-05-09 | 2018-10-04 | StrongForce IoT Portfolio 2016, LLC | Methods and systems for industrial internet of things data collection for vibration sensitive equipment |
| CN109508710A* | 2018-10-23 | 2019-03-22 | 东华大学 | Based on the unmanned vehicle night-environment cognitive method for improving YOLOv3 network |
| CN110334661A* | 2019-07-09 | 2019-10-15 | 国网江苏省电力有限公司扬州供电分公司 | Infrared Power Transmission and Transformation Abnormal Hot Spot Target Detection Method Based on Deep Learning |
| US20190339688A1* | 2016-05-09 | 2019-11-07 | Strong Force Iot Portfolio 2016, Llc | Methods and systems for data collection, learning, and streaming of machine signals for analytics and maintenance using the industrial internet of things |

- 2020-03-18: CN application CN202010191052.7A (CN111444801A), status: Pending

Non-Patent Citations (4)

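| Title |
|---|
| ZHENG YANG et al.: "Detecting Small Objects in Urban Settings Using SlimNet Model", 《IEEE TRANSACTIONS ON GEOSCIENCE AND REMOTE SENSING》* |
| 刘军 et al.: "Real-time vehicle detection and tracking based on an enhanced Tiny YOLOV3 algorithm", 《农业工程学报》* |
| 易诗 et al.: "Nighttime wild hare monitoring method based on infrared thermal imaging and improved YOLOV3", 《农业工程学报》* |
| 王殿伟 et al.: "Improved YOLOv3 pedestrian detection algorithm for infrared video images", 《西安邮电大学学报》* |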

Cited By (12)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN112071058A* | 2020-08-14 | 2020-12-11 | 深延科技(北京)有限公司 | Road traffic monitoring and vehicle abnormity, contraband and fire detection method and system based on deep learning |
| CN112016449A* | 2020-08-27 | 2020-12-01 | 西华大学 | Vehicle identification and detection method based on deep learning |
| CN112052802A* | 2020-09-09 | 2020-12-08 | 上海工程技术大学 | Front vehicle behavior identification method based on machine vision |
| CN112052802B* | 2020-09-09 | 2024-02-20 | 上海工程技术大学 | Machine vision-based front vehicle behavior recognition method |
| CN112767297A* | 2021-02-05 | 2021-05-07 | 中国人民解放军国防科技大学 | Infrared unmanned aerial vehicle group target simulation method based on image derivation under complex background |
| CN112767297B* | 2021-02-05 | 2022-09-23 | 中国人民解放军国防科技大学 | Infrared unmanned aerial vehicle group target simulation method based on image derivation under complex background |
| CN112686340A* | 2021-03-12 | 2021-04-20 | 成都点泽智能科技有限公司 | Dense small target detection method based on deep neural network |
| CN113688748A* | 2021-08-27 | 2021-11-23 | 武汉大千信息技术有限公司 | Fire detection model and method |
| CN113688748B* | 2021-08-27 | 2023-08-18 | 武汉大千信息技术有限公司 | Fire detection model and method |
| CN114140405A* | 2021-11-22 | 2022-03-04 | 国网河南省电力公司 | Insulator infrared image detection method based on CenterNet network |
| CN115240069A* | 2022-07-19 | 2022-10-25 | 大连理工大学 | A real-time obstacle detection method in foggy scene |
| CN115482479A* | 2022-10-24 | 2022-12-16 | 云南师范大学 | Method, system, electronic device and storage medium for detecting tiny target by unmanned aerial vehicle |

Similar Documents

| Publication | Title |
|---|---|
| CN111444801A | A real-time detection method for infrared target of unmanned aerial vehicle |
| CN110660222B | Intelligent environment-friendly electronic snapshot system for black-smoke road vehicle |
| CN108647655B | Low-altitude aerial image power line foreign object detection method based on light convolutional neural network |
| CN109255286B | Optical fast detection and recognition method of UAV based on deep learning network framework |
| CN108109385B | A vehicle identification and dangerous behavior identification system and method for preventing external breakage of power lines |
| CN106462737A | Systems and methods for haziness detection |
| CN110070530A | A kind of powerline ice-covering detection method based on deep neural network |
| CN118015490B | A method, system and electronic device for detecting small targets in unmanned aerial image |
| CN116363532A | Traffic target detection method for UAV images based on attention mechanism and reparameterization |
| CN116846059A | Edge detection system for power grid inspection and monitoring |
| CN118115952B | All-weather detection method and system for unmanned aerial vehicle image under urban low-altitude complex background |
| CN112633114A | Unmanned aerial vehicle inspection intelligent early warning method and device for building change event |
| CN119152390A | Unmanned aerial vehicle aerial photography small target detection method based on improvement YOLOv8 |
| CN114358178A | Airborne thermal imaging wild animal species classification method based on YOLOv5 algorithm |
| CN119478858A | A radar and video information fusion coding method for vehicle overload warning |
| CN117351351A | Mobile terminal multifunctional pavement damage detection method and system |
| CN119942379B | A UAV infrared small target detection method based on attention mechanism |
| CN119716839A | CN-YOLOv-based high-precision detection method for multi-mode data fusion anti-unmanned aerial vehicle |
| CN119559378A | An improved YOLOv8 vehicle-to-vehicle OCC system target recognition method |
| CN118691804A | Target detection method, electronic device and storage medium |
| CN117422867B | Moving object detection method based on multi-frame images |
| Zhang et al. | Small Target Detection Algorithm for UAV Based on Improved YOLOv5 |
| CN118072163A | Neural network-based method and system for detecting illegal occupation of territorial cultivated land |
| CN114550016B | Unmanned aerial vehicle positioning method and system based on context information perception |
| CN116503833A | Urban high-complexity detection scene-based vehicle illegal parking detection method |

Legal Events

| Code | Title | Description |
|---|---|---|
| PB01 | Publication | |
| SE01 | Entry into force of request for substantive examination | |
| RJ01 | Rejection of invention patent application after publication | Application publication date: 20200724 |