CN111431996A - Method, apparatus, device and medium for resource configuration - Google Patents


- Publication number

- CN111431996A (application CN202010203430.9A)

- Authority

- CN

- China

- Prior art keywords

- service

- resource

- services

- usage information

- cluster

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Granted



Classifications

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04L—TRANSMISSION OF DIGITAL INFORMATION, e.g. TELEGRAPHIC COMMUNICATION

- H04L67/00—Network arrangements or protocols for supporting network services or applications

- H04L67/01—Protocols

- H04L67/10—Protocols in which an application is distributed across nodes in the network

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04L—TRANSMISSION OF DIGITAL INFORMATION, e.g. TELEGRAPHIC COMMUNICATION

- H04L67/00—Network arrangements or protocols for supporting network services or applications

- H04L67/2866—Architectures; Arrangements

- H04L67/30—Profiles

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04L—TRANSMISSION OF DIGITAL INFORMATION, e.g. TELEGRAPHIC COMMUNICATION

- H04L67/00—Network arrangements or protocols for supporting network services or applications

- H04L67/50—Network services

- H04L67/51—Discovery or management thereof, e.g. service location protocol [SLP] or web services

Landscapes

- Engineering & Computer Science (AREA)

- Computer Networks & Wireless Communication (AREA)

- Signal Processing (AREA)

- Debugging And Monitoring (AREA)

- Data Exchanges In Wide-Area Networks (AREA)

Abstract

Description

Technical Field

The embodiments of the present disclosure relate mainly to computer technology, and in particular to the field of artificial intelligence.

Background

With the development of Internet technology, service providers usually deploy multiple services on a cluster of service nodes to ease management and improve resource utilization. A service node cluster, sometimes called a server cluster, allows a group of independent servers to be managed as a single system. The resources of a service node cluster are shared by the multiple services deployed on it, and each service is typically configured with a corresponding amount of resources. However, the resource requirements of a service may change while it is running, so the resource configuration of these services on the service node cluster needs to be adjusted.

Summary

According to embodiments of the present disclosure, a scheme for resource configuration is provided.

In a first aspect of the present disclosure, a method for resource configuration is provided. The method includes acquiring service usage information corresponding to a plurality of services deployed on a service node cluster. The service usage information is related to the expected traffic volume of the corresponding service. The method also includes acquiring resource usage information corresponding to the plurality of services. The resource usage information indicates the current resource usage rate of the corresponding service. The method further includes using a resource configuration prediction model to determine the resource configuration of the plurality of services in the service node cluster, based on the service usage information and the resource usage information.

In a second aspect of the present disclosure, an apparatus for resource configuration is provided. The apparatus includes a first information acquisition module configured to acquire service usage information corresponding to a plurality of services deployed on a service node cluster. The service usage information is related to the expected traffic volume of the corresponding service. The apparatus further includes a second information acquisition module configured to acquire resource usage information corresponding to the plurality of services. The resource usage information indicates the current resource usage rate of the corresponding service. The apparatus further includes a configuration determination module. It is configured to use a resource configuration prediction model to determine the resource configuration of the plurality of services in the service node cluster, based on the service usage information and the resource usage information.

In a third aspect of the present disclosure, an electronic device is provided. It comprises one or more processors and a storage apparatus for storing one or more programs. When the one or more programs are executed by the one or more processors, they cause the one or more processors to implement the method according to the first aspect of the present disclosure.

In a fourth aspect of the present disclosure, a computer-readable storage medium is provided, having stored thereon a computer program which, when executed by a processor, implements the method according to the first aspect of the present disclosure.

It should be understood that the content described in this Summary is not intended to identify key or essential features of the embodiments of the present disclosure, nor is it intended to limit the scope of the present disclosure. Other features of the present disclosure will become readily understood from the following description.

Description of Drawings

The above and other features, advantages and aspects of the embodiments of the present disclosure will become more apparent from the following detailed description taken in conjunction with the accompanying drawings. In the drawings, the same or similar reference numbers denote the same or similar elements, wherein:

FIG. 1 shows a schematic diagram of an environment in which multiple embodiments of the present disclosure can be applied;

FIG. 2 shows a block diagram of a system for resource configuration according to some embodiments of the present disclosure;

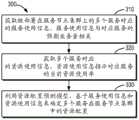

FIG. 3 shows a flowchart of a method for resource configuration according to some embodiments of the present disclosure;

FIG. 4 shows a block diagram of an apparatus for resource configuration according to some embodiments of the present disclosure; and

FIG. 5 shows a block diagram of a device capable of implementing multiple embodiments of the present disclosure.

Detailed Description

Embodiments of the present disclosure will be described in more detail below with reference to the accompanying drawings. Certain embodiments of the present disclosure are shown in the drawings. However, the present disclosure may be implemented in various forms and should not be construed as limited to the embodiments set forth herein. Rather, these embodiments are provided for a more thorough and complete understanding of the present disclosure. The drawings and embodiments of the present disclosure are for exemplary purposes only and are not intended to limit its scope of protection.

In the description of embodiments of the present disclosure, the term "comprising" and similar terms should be understood as open-ended inclusion, that is, "including but not limited to". The term "based on" should be understood as "based at least in part on". The terms "one embodiment" and "the embodiment" should be understood as "at least one embodiment". The terms "first", "second" and so on may refer to different or identical objects. Other explicit and implicit definitions may also be included below.

As used herein, a "model" can learn the association between corresponding inputs and outputs from training data. After training is complete, it can generate a corresponding output for a given input. A model can be generated using machine learning techniques. Deep learning is a class of machine learning algorithms that uses multiple layers of processing units to process inputs and provide corresponding outputs. A "model" may also be referred to herein as a "machine learning model", "learning model", "machine learning network" or "learning network"; these terms are used interchangeably herein.

Machine learning generally comprises three phases: a training phase, a testing phase and an application phase (also called the inference phase).

- In the training phase, a given model is trained iteratively on a large amount of training data. Training continues until the model can consistently draw inferences from the training data similar to those that human intelligence can make. Through training, the model can be regarded as learning the association from input to output (also called the input-to-output mapping), and the parameter values of the trained model are determined.
- In the testing phase, test inputs are applied to the trained model to check whether it provides the correct outputs, which determines the model's performance.
- In the application phase, the model processes actual inputs using the parameter values obtained in training to determine the corresponding outputs.

As mentioned above, multiple services can be deployed on a service node cluster and share its resources. FIG. 1 shows an example of such a service deployment environment 100, in which multiple embodiments of the present disclosure can be applied.

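As shown in FIG. 1, the service node cluster 110 includes a plurality of service nodes 112-1, 112-2, ..., 112-N, where N is an integer greater than 1. For ease of description, these service nodes are sometimes referred to collectively or individually as service nodes 112. A service group 120 can be deployed in the service node cluster 110. The service group 120 includes a plurality of services 122-1, 122-2, ..., 122-M, where M is an integer greater than 1. For ease of description, these services are sometimes referred to collectively or individually as services 122.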

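A service node 112 in the service node cluster 110 may be any physical or virtual device with computing capability. Examples of the service node 112 include, but are not limited to:

- servers, mainframe computers and minicomputers;
- edge computing nodes and virtual machines;
- multiprocessor systems;
- distributed computing systems that include any of the above systems or devices.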

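The services 122 deployed to the service node cluster 110 may be of various types. Examples of the services 122 include, but are not limited to, search services, web browsing services, online shopping services, file upload services and image processing services. The services 122 may be provided and deployed by a service provider. In some environments, a service 122 is also referred to as an application.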

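The service nodes 112 and services 122 described above are only specific examples. Other types of service nodes may be used, and other types of services deployed, as needed. The scope of embodiments of the present disclosure is not limited in this respect.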

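After deployment, the services 122 on the service node cluster 110 can be accessed and used by users 130-1, ..., 130-P via clients 140-1, ..., 140-P, where P is an integer greater than or equal to 1. These users are sometimes referred to collectively or individually as users 130, and these clients as clients 140. A user 130 can send a request to a service 122 via a client 140, and the service 122 responds to the request to provide the corresponding service to the user 130.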

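To support service provisioning, each service 122 is configured with a certain amount of resources in the service node cluster 110. In some cases, the resource demand of one or more services 122 changes dynamically. The resource configuration of the services 122 in the service node cluster 110 therefore sometimes needs to be adjusted.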

In traditional solutions, the resource configuration of a service is usually adjusted manually by operation and maintenance personnel, or by following simple rules set by technicians, or both. For example, a rule may state the following:

- if the traffic of a service reaches 10,000 QPS (queries per second), configure 1000 processing units for it;
- if the traffic reaches 12,000 QPS, configure 1300 processing units.

These traditional solutions require human involvement, which incurs substantial labor overhead. The accuracy of such rules depends heavily on human experience, and a limited number of rules may not cover all the situations that different services can encounter.
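The rule-based approach described above can be sketched as a small threshold table. This is a minimal illustration of the traditional baseline, not part of the disclosed scheme. The `RULES` table and the `units_for_traffic` function are hypothetical names, and the two thresholds come from the example in the text.

```python
# Hypothetical rule table in the style of the traditional approach:
# (minimum QPS, processing units to configure), sorted by ascending threshold.
RULES = [(10_000, 1000), (12_000, 1300)]


def units_for_traffic(qps: float, default_units: int = 0) -> int:
    """Return the units of the highest rule whose QPS threshold is reached."""
    units = default_units
    for threshold, rule_units in RULES:
        if qps >= threshold:
            units = rule_units
    return units


print(units_for_traffic(11_000))  # falls under the 10,000 QPS rule
```

Any traffic below the lowest threshold falls through to the default. This shows the coverage problem noted above: a finite rule table has nothing to say about situations its authors did not anticipate.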

For these reasons, the adjustment period for service resource configuration is currently set relatively long, so resource adjustment lags behind demand. In addition, some current implementations allocate a large resource margin to each service to avoid shortages. This in turn leads to low resource utilization in the service node cluster.

According to embodiments of the present disclosure, a scheme for resource configuration is proposed. In this scheme, a machine-learning-based resource configuration prediction model dynamically determines the resource configuration of multiple services in a service node cluster. The model determines the configuration from two kinds of information for each service:

- service usage information, which is related to the expected traffic volume of the service;
- resource usage information, which indicates the current resource usage rate of the service.

With the help of the machine learning model, the scheme enables more flexible, dynamic and accurate resource configuration.

Embodiments of the present disclosure will be described in detail below with reference to the accompanying drawings.

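FIG. 2 shows a block diagram of a resource configuration system 200 according to some embodiments of the present disclosure. In the example of FIG. 2, a computing device 210 uses a resource configuration prediction model 212 to determine and adjust the resource configuration of multiple services. For ease of discussion, resource configuration is described with reference to the environment 100 of FIG. 1. Accordingly, the computing device 210 determines and adjusts the resource configuration of the services 122 in the service node cluster 110.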

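In the system 200, the computing device 210 may be any electronic device with computing capability, whether mobile, fixed or portable. Examples of the computing device 210 include, but are not limited to:

- servers, server computers, mainframe computers and minicomputers;
- edge computing nodes;
- personal computers and handheld or laptop devices;
- mobile devices, such as mobile phones, personal digital assistants (PDAs) and media players;
- multiprocessor systems;
- distributed computing systems that include any of the above systems or devices.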

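In operation, the computing device 210 acquires service usage information 220-1, 220-2, 220-3, ..., 220-M and resource usage information 230-1, 230-2, 230-3, ..., 230-M corresponding to the services 122-1 to 122-M. For ease of discussion, these are referred to collectively or individually as service usage information 220 and resource usage information 230. In embodiments of the present disclosure, the service usage information 220 and resource usage information 230 are used to determine the corresponding resource configurations of the services 122.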

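The service usage information 220 is related to the expected traffic volume of the corresponding service 122. It can be used to predict the traffic the service 122 is likely to receive over a future period. Because traffic volume usually determines how many resources a service 122 consumes, the service usage information 220 helps determine the resource configuration of the service 122 accurately. It may include any information that can be used to determine or predict the expected traffic of the service 122.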

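In some embodiments, for one or more services 122, the service usage information 220 may include hot event information related to that service 122. When a hot event occurs, users 130 may send more requests to the service 122, increasing its traffic. When the event ends, traffic may fall. The hot event information can therefore indicate the occurrence and the end of the corresponding hot event.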

In some embodiments, the traffic of different types of services may be sensitive to different types of hot events.

- For a news browsing service or a search service, hot event information may include the appearance of breaking news, or the arrival of a specific time period or holiday. For example, people may tend to browse news in the morning and evening, and on holidays have more free time to browse news and search for content of interest.
- For an online shopping service, hot event information may include the start and end of promotions, the arrival of holidays, and so on.
- Other types of services may have other traffic-related hot events.

In addition to or instead of hot event information, the service usage information 220 for one or more services 122 may include the current traffic of the service 122. Current traffic can also serve as a reference for predicting subsequent traffic.

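In some cases, the traffic of a service 122 may also be affected by the traffic of one or more other services. These other services may be services 122 deployed in the service node cluster 110, or services deployed on other service nodes or service node clusters. To account for such interactions, in some embodiments the service usage information 220 of a service 122 may also include the service usage information of other services whose traffic is related to that of the service 122.

The correlation may be positive or negative:

- Positive: growth in another service's traffic increases the traffic of the service 122.
- Negative: growth in another service's traffic decreases the traffic of the service 122.

For example, if traffic to a news browsing service is expected to grow, traffic to a search service may grow with it. Referring to the service usage information of other services allows the expected usage of the current service to be predicted more accurately.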
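The sketch below shows one way to turn the service usage information for a single service into model input. It flattens a hot-event flag and the current traffic, plus the same two values for each related service, into a numeric feature vector. The `ServiceUsage` class, its fields and `usage_features` are hypothetical, because the disclosure does not specify an encoding. The sketch also does not hard-code whether a correlation with another service is positive or negative; the model is left to learn that.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ServiceUsage:
    """Hypothetical container for one service's service usage information."""
    name: str
    hot_event_active: bool   # is a related hot event currently ongoing?
    current_qps: float       # current traffic volume
    related: List[str] = field(default_factory=list)  # services with correlated traffic


def usage_features(svc: ServiceUsage, all_usage: Dict[str, ServiceUsage]) -> List[float]:
    """Flatten service usage information into a numeric feature vector."""
    features = [1.0 if svc.hot_event_active else 0.0, svc.current_qps]
    # Append the hot-event flag and traffic of every related service, in the
    # listed order, so a model can learn positive or negative correlations.
    for other_name in svc.related:
        other = all_usage[other_name]
        features += [1.0 if other.hot_event_active else 0.0, other.current_qps]
    return features
```

Using the search/news example from the text: if `search` lists `news` as related, the news service's hot-event flag and traffic are appended to the search service's features.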

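Besides service usage information, the resource usage of the services 122 also affects how resources are allocated to them. The resource usage information 230 can indicate the usage rate of the resources currently configured for a service 122. The resources that support the operation of a service 122 may include:

- processing resources, such as central processing units (CPUs), graphics processing units (GPUs), processing cores and controllers;
- additionally or alternatively, storage resources, such as memory, cache and non-transitory storage device resources;
- network bandwidth resources, which support network access or inter-device access by the service 122;
- file descriptor resources, which support file access by the service 122;
- and so on.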

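Depending on the configured resources, the resource usage information 230 may include the processing resource usage rate, storage resource usage rate, bandwidth resource usage rate and/or file descriptor usage rate of each service 122. The usage rate of a resource type can be expressed in either of two ways: as the amount of that resource used, or as the proportion of the used amount to the total amount of that resource currently allocated to the service. The scope of embodiments of the present disclosure is not limited in this respect.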
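One way to express a usage rate is as the fraction of a service's currently allocated resource that is in use, which is a simple ratio. The sketch below assumes per-resource `used` and `allocated` dictionaries with hypothetical keys such as `cpu_cores` and `fds`; `usage_rates` is likewise a hypothetical name.

```python
from typing import Dict


def usage_rates(used: Dict[str, float], allocated: Dict[str, float]) -> Dict[str, float]:
    """Per-resource usage rate of one service: used amount / allocated amount.

    Resource types with zero allocation are reported as 0.0 instead of
    raising ZeroDivisionError.
    """
    rates = {}
    for resource, total in allocated.items():
        amount = used.get(resource, 0.0)
        rates[resource] = amount / total if total > 0 else 0.0
    return rates


print(usage_rates({"cpu_cores": 6, "fds": 2500}, {"cpu_cores": 8, "fds": 10000}))
```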

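Some possible examples of the service usage information 220 and resource usage information 230 are given above. In other embodiments, the service usage information 220 and/or resource usage information 230 may also include other types of information.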

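The computing device 210 can collect the service usage information 220 of each service 122 from various channels or sources. It can determine the resource usage information 230 by monitoring the operating status of the services 122 deployed in the service node cluster 110. The service usage information 220 and/or resource usage information 230 may be time-dependent, and the computing device 210 can continuously detect and update them.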

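After acquiring the service usage information 220 and resource usage information 230, the computing device 210 uses the resource configuration prediction model 212 to determine the resource configuration 214 of the services 122 in the service node cluster 110 from this information.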

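The resource configuration prediction model 212 is configured to predict resource configurations. Its input is the service usage information and resource usage information of a service, and its output is the resources expected to be configured for that service. The model 212 may be any type of machine learning or deep learning model, or a combination of several models. Examples include, but are not limited to:

- support vector machine (SVM) models;
- Bayesian models;
- random forest models;
- deep learning/neural network models, such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs).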

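To make such predictions, the resource configuration prediction model 212 must first be trained. Training data can come from two sources:

- In the initial stage, the service usage information and resource usage information of services deployed in various service node clusters, together with the corresponding resource configurations, can be collected.
- Alternatively or additionally, experienced technicians can provide the required training data.

Once trained, the model 212 can be deployed and used. Training may be performed by the computing device 210 or by one or more other computing devices.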
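The disclosure lists SVMs, Bayesian models, random forests and neural networks as candidate models but does not fix one. To show the train-then-predict flow in a self-contained way, the sketch below substitutes a deliberately simple k-nearest-neighbour regressor, which is not one of the listed models. It maps a feature vector to a configured resource amount. `KNNConfigPredictor` is a hypothetical name. A practical model would at least normalise features, since raw traffic values dominate the Euclidean distance here.

```python
import math
from typing import List, Sequence, Tuple


class KNNConfigPredictor:
    """Minimal k-nearest-neighbour regressor used as a stand-in prediction model.

    Each training sample maps a feature vector (service usage information plus
    resource usage information) to the amount of one resource that was configured.
    """

    def __init__(self, k: int = 3):
        self.k = k
        self.samples: List[Tuple[Sequence[float], float]] = []

    def fit(self, features: List[Sequence[float]], targets: List[float]) -> "KNNConfigPredictor":
        # "Training" for k-NN simply stores the labelled samples.
        self.samples = list(zip(features, targets))
        return self

    def predict(self, x: Sequence[float]) -> float:
        # Average the targets of the k samples closest to x (Euclidean distance).
        nearest = sorted(self.samples, key=lambda s: math.dist(s[0], x))[: self.k]
        return sum(t for _, t in nearest) / len(nearest)


# Features: [hot event flag, current QPS, CPU usage rate]; target: processing units.
X = [[0.0, 1000.0, 0.5], [0.0, 2000.0, 0.6], [1.0, 4000.0, 0.9], [1.0, 4200.0, 0.95]]
y = [100, 200, 400, 420]
model = KNNConfigPredictor(k=2).fit(X, y)
print(model.predict([1.0, 4100.0, 0.9]))
```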

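By processing the input service usage information 220 and resource usage information 230, the resource configuration prediction model 212 can determine a corresponding resource configuration 214 for each service 122. In some embodiments, the determined resource configuration 214 can indicate specifically how the various types of resources provided by the service node cluster 110 are allocated among the services 122. Depending on the resource types a service 122 needs, its allocation may include processing resources, storage resources, network bandwidth resources and/or file descriptors. The model 212 can determine whether the resources of each service 122 need to be scaled out or scaled in. It can also output the predicted amount of each resource for each service 122.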
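Whether a service's resources should be scaled out or in can be decided by comparing the predicted amounts with the current allocation. The sketch below assumes a hypothetical `tolerance` band so that small fluctuations do not cause churn; the disclosure itself does not specify such a band. `scaling_decision` is likewise a hypothetical name.

```python
from typing import Dict


def scaling_decision(current: Dict[str, float], predicted: Dict[str, float],
                     tolerance: float = 0.05) -> Dict[str, str]:
    """Label each predicted resource of one service as scale_out, scale_in or keep.

    A relative change within +/- tolerance of the current amount is 'keep'.
    """
    decisions = {}
    for resource, new_amount in predicted.items():
        old_amount = current.get(resource, 0)
        if old_amount == 0:
            # Nothing allocated yet: any positive prediction means scaling out.
            decisions[resource] = "scale_out" if new_amount > 0 else "keep"
            continue
        change = (new_amount - old_amount) / old_amount
        if change > tolerance:
            decisions[resource] = "scale_out"
        elif change < -tolerance:
            decisions[resource] = "scale_in"
        else:
            decisions[resource] = "keep"
    return decisions


print(scaling_decision({"cpu": 100, "mem": 200}, {"cpu": 130, "mem": 150}))
```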

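In some embodiments, the resource configuration prediction model 212 predicts the resource configuration 214 one service 122 at a time. These per-service predictions can be executed sequentially or in parallel, for example by running multiple identical instances of the model 212 concurrently.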
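Per-service prediction can run sequentially or concurrently. The sketch below uses the standard library's `concurrent.futures.ThreadPoolExecutor` to apply one prediction callable to every service. `predict_all` is a hypothetical helper. Sharing a single model callable across threads simplifies the "multiple identical model instances" described in the text. A CPU-bound model in CPython would more likely run in separate processes or as model replicas.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Sequence


def predict_all(model_predict: Callable[[Sequence[float]], float],
                inputs: Dict[str, Sequence[float]],
                parallel: bool = True) -> Dict[str, float]:
    """Apply a per-service prediction function to every service.

    `inputs` maps service name -> feature vector. Results are keyed by service
    name whether the predictions run sequentially or in a thread pool.
    """
    names = list(inputs)
    if not parallel:
        return {name: model_predict(inputs[name]) for name in names}
    with ThreadPoolExecutor() as pool:
        # pool.map preserves input order, so results line up with `names`.
        results = pool.map(model_predict, (inputs[name] for name in names))
        return dict(zip(names, results))
```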

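In some embodiments, the resource configuration prediction model 212 determines the resource configuration 214 either periodically or in response to a predetermined triggering event.

- Periodic: the computing device 210 runs a prediction every few hours, every day or every several days.
- Event-triggered: the computing device 210 monitors the current traffic and/or resource usage rate of one or more services 122. If either exceeds its threshold, the device triggers the model 212 to re-determine the resource configuration.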
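The two triggering modes, a fixed period and a threshold breach, can be combined into a single check. The sketch below uses hypothetical metric names and thresholds, with timestamps in plain seconds. `should_repredict` is a hypothetical name.

```python
from typing import Dict


def should_repredict(now: float, last_run: float, period_s: float,
                     metrics: Dict[str, float], thresholds: Dict[str, float]) -> bool:
    """Decide whether to re-run the resource configuration prediction.

    Triggers when the fixed period has elapsed since the last run, or when any
    monitored metric (traffic or a usage rate) exceeds its threshold.
    """
    if now - last_run >= period_s:
        return True
    return any(metrics.get(name, 0.0) > limit for name, limit in thresholds.items())


# Hourly schedule, plus an early trigger if traffic exceeds 10,000 QPS.
print(should_repredict(1000, 0, 3600, {"qps": 12_000}, {"qps": 10_000}))
```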

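In some embodiments, the resource configuration 214 of the services 122 must also respect the total amount of resources the service node cluster 110 can provide, so that resources are not over-allocated. This constraint can be applied by the model 212 while it determines the resource configuration 214. Alternatively, the computing device 210 can adjust the configuration after the model outputs it.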
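The disclosure says only that the cluster's total capacity is enforced either inside the model or by adjusting its output. One simple post-processing option, assumed here for illustration, is proportional scaling. When the requests for a resource type exceed the cluster total, every service's request is shrunk by the same factor. `fit_to_capacity` is a hypothetical name.

```python
from typing import Dict


def fit_to_capacity(requests: Dict[str, float], capacity: float) -> Dict[str, float]:
    """Scale per-service requests for one resource type to fit the cluster total.

    Requests that already fit are returned unchanged; otherwise each request is
    multiplied by capacity / total_requested.
    """
    total = sum(requests.values())
    if total <= capacity:
        return dict(requests)
    factor = capacity / total
    return {svc: amount * factor for svc, amount in requests.items()}


print(fit_to_capacity({"search": 600, "news": 200}, 400))
```

Proportional scaling treats all services equally. Where some services must not be squeezed, a priority-aware scheme would be a natural alternative.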

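The resource configuration 214 determined by the computing device 210 can be used to modify the configuration of the service nodes 112 in the service node cluster 110. The process is as follows:

- The computing device 210 generates a cluster configuration for the service node cluster 110 based on the determined resource configuration 214.
- The cluster configuration indicates how the resources on each service node 112 are allocated so as to conform to the resource configuration 214.
- The computing device 210 provides the cluster configuration to the service node cluster 110, configuring it to provide resources to the services 122.

In this way, the resource configuration 214 can take effect quickly.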
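The disclosure does not specify how the cluster configuration maps each service's allocation onto individual nodes. The sketch below takes one assumed approach: it fills nodes greedily, placing the largest service first, and lets a service's allocation span several nodes. `place_services` and the node names are hypothetical.

```python
from typing import Dict


def place_services(allocations: Dict[str, int],
                   node_capacity: Dict[str, int]) -> Dict[str, Dict[str, int]]:
    """Greedily fill nodes with per-service allocations of one resource type.

    allocations: service -> units required; node_capacity: node -> free units.
    Returns node -> {service: units}. Raises ValueError if capacity runs out.
    """
    free = dict(node_capacity)
    plan: Dict[str, Dict[str, int]] = {node: {} for node in free}
    # Place the largest services first to reduce fragmentation.
    for svc, need in sorted(allocations.items(), key=lambda kv: -kv[1]):
        for node in free:
            if need <= 0:
                break
            take = min(need, free[node])
            if take > 0:
                plan[node][svc] = plan[node].get(svc, 0) + take
                free[node] -= take
                need -= take
        if need > 0:
            raise ValueError(f"insufficient capacity for service {svc!r}")
    return plan


print(place_services({"search": 6, "news": 3}, {"node-1": 5, "node-2": 5}))
```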

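As the resource configuration prediction model 212 determines the resource configuration of the services 122 on the service node cluster 110, the results can be kept for retraining. In some embodiments, the determined resource configuration 214 is stored in association with the corresponding service usage information 220 and resource usage information 230. These records can be kept in a storage system or database accessible to the computing device that performs retraining. That device may be the computing device 210 or one or more other computing devices. Accumulating training data while the model 212 is in use allows it to be further optimized through retraining, making its output more accurate. The retrained model 212 can then be provided to the computing device 210 for continued use.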
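Storing each decided configuration together with the inputs that produced it yields labelled examples for later retraining. The disclosure leaves the storage system or database unspecified; this sketch assumes an append-only JSON Lines file instead. The function and field names are hypothetical.

```python
import json
import time
from typing import Dict, List


def record_for_retraining(path: str, service: str, service_usage: List[float],
                          resource_usage: Dict[str, float],
                          configuration: Dict[str, float]) -> None:
    """Append one (inputs, decided configuration) record as a JSON line."""
    record = {
        "timestamp": time.time(),
        "service": service,
        "service_usage": service_usage,
        "resource_usage": resource_usage,
        "configuration": configuration,
    }
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")


def load_training_records(path: str) -> List[dict]:
    """Read all stored records back, e.g. to build a retraining dataset."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]
```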

Embodiments of the present disclosure use a machine-learning-based model that accounts for several factors affecting a service's resource requirements. This brings the following benefits:

- The resource configuration of a service node cluster can be adjusted automatically and dynamically. The cluster responds quickly to the services' resource requirements and avoids delayed scale-out or scale-in.
- Because the configuration can be adjusted dynamically, excessive redundant resources no longer need to be reserved for each service 122. This improves the overall resource utilization of the service node cluster 110 and avoids waste.
- The trained resource configuration prediction model 212 can be applied flexibly to multiple service node clusters, to determine and adjust the resource configuration of the services deployed on them.

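FIG. 3 shows a flowchart of a method 300 for resource configuration according to some embodiments of the present disclosure. The method 300 can be implemented by the computing device 210 of FIG. 2.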

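The method 300 comprises three blocks:

- At block 310, the computing device 210 acquires service usage information corresponding to a plurality of services deployed on a service node cluster. The service usage information is related to the expected traffic of the corresponding service.
- At block 320, the computing device 210 acquires resource usage information corresponding to the plurality of services. The resource usage information indicates the current resource usage rate of the corresponding service.
- At block 330, the computing device 210 uses a resource configuration prediction model to determine the resource configuration of the plurality of services in the service node cluster, based on the service usage information and the resource usage information.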
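Blocks 310 to 330 of method 300 compose into a short pipeline: collect both kinds of information for each service, then feed them to the prediction model. The callables standing in for the information sources and the model are hypothetical placeholders. Concatenating the two feature lists is an assumed input format, not one the disclosure specifies.

```python
from typing import Callable, Dict, List


def configure_resources(
    services: List[str],
    get_service_usage: Callable[[str], List[float]],   # block 310
    get_resource_usage: Callable[[str], List[float]],  # block 320
    predict: Callable[[List[float]], float],           # block 330 (prediction model)
) -> Dict[str, float]:
    """Sketch of method 300: gather both kinds of information, then predict."""
    service_usage = {s: get_service_usage(s) for s in services}
    resource_usage = {s: get_resource_usage(s) for s in services}
    # The model input for each service is its service usage features followed
    # by its resource usage features.
    return {s: predict(service_usage[s] + resource_usage[s]) for s in services}
```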

In some embodiments, acquiring the service usage information includes the following. For a specific service among the plurality of services, at least one of these items is determined as its service usage information:

- hot event information related to the specific service;
- the current traffic of the specific service;
- service usage information of at least one other service whose traffic is related to that of the specific service.

In some embodiments, the resource usage information indicates at least one of the following: processing resource usage rate, storage resource usage rate, network bandwidth resource usage rate, and file descriptor usage rate.

In some embodiments, determining the resource configuration includes determining how at least one of the following resources of the service node cluster is allocated among the plurality of services: processing resources, storage resources, network bandwidth resources and file descriptors.

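In some embodiments, the method 300 further includes the following steps:

- generating a cluster configuration for the service node cluster based on the determined resource configuration;
- providing the cluster configuration to the service node cluster, to configure the cluster to provide resources to the plurality of services.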

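In some embodiments, the method 300 further includes storing the determined resource configuration in association with the service usage information and resource usage information, for use in retraining the resource configuration prediction model.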

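FIG. 4 shows a schematic block diagram of an apparatus 400 for resource configuration according to some embodiments of the present disclosure. The apparatus 400 may be included in the computing device 210 of FIG. 2, or implemented as the computing device 210.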

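As shown in FIG. 4, the apparatus 400 includes three modules:

- A first information acquisition module 410, configured to acquire service usage information corresponding to a plurality of services deployed on a service node cluster. The service usage information is related to the expected traffic of the corresponding service.
- A second information acquisition module 420, configured to acquire resource usage information corresponding to the plurality of services. The resource usage information indicates the current resource usage rate of the corresponding service.
- A configuration determination module 430, configured to use a resource configuration prediction model to determine the resource configuration of the plurality of services in the service node cluster, based on the service usage information and resource usage information.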

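In some embodiments, the first information acquisition module 410 includes a specific-service information acquisition module. For a specific service among the plurality of services, this module is configured to determine at least one of the following as the service's usage information:

- hot event information related to the specific service;
- the current traffic of the specific service;
- service usage information of at least one other service whose traffic is related to that of the specific service.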

In some embodiments, the resource usage information indicates at least one of the following: processing resource usage rate, storage resource usage rate, network bandwidth resource usage rate, and file descriptor usage rate.

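In some embodiments, the configuration determination module 430 includes an allocation determination module. This module is configured to determine how at least one of the following resources of the service node cluster is allocated among the plurality of services: processing resources, storage resources, network bandwidth resources and file descriptors.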

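In some embodiments, the apparatus 400 further includes two modules:

- a cluster configuration determination module, configured to generate a cluster configuration for the service node cluster based on the determined resource configuration;
- a configuration provisioning module, configured to provide the cluster configuration to the service node cluster, to configure the cluster to provide resources to the plurality of services.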

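In some embodiments, the apparatus 400 further includes a storage module configured to store the determined resource configuration in association with the service usage information and resource usage information, for use in retraining the resource configuration prediction model.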

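FIG. 5 shows a schematic block diagram of an example device 500 that can be used to implement embodiments of the present disclosure. The device 500 can implement the service node 112 of FIG. 1 or the computing device 210 of FIG. 2. It can also be included in the service node 112 or the computing device 210.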

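As shown, the device 500 includes a computing unit 501. The computing unit 501 can perform various appropriate actions and processes according to computer program instructions stored in a read-only memory (ROM) 502, or loaded from a storage unit 508 into a random access memory (RAM) 503. The RAM 503 can also store the various programs and data required for operating the device 500. The computing unit 501, the ROM 502 and the RAM 503 are connected to one another via a bus 504, and an input/output (I/O) interface 505 is also connected to the bus 504.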

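Multiple components of the device 500 are connected to the I/O interface 505:

- an input unit 506, such as a keyboard or mouse;
- an output unit 507, such as various types of displays and speakers;
- a storage unit 508, such as a magnetic disk or optical disc;
- a communication unit 509, such as a network card, modem or wireless communication transceiver.

The communication unit 509 allows the device 500 to exchange information/data with other devices over a computer network such as the Internet and/or various telecommunication networks.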

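The computing unit 501 may be any of various general-purpose and/or special-purpose processing components with processing and computing capabilities. Some examples of the computing unit 501 include, but are not limited to:

- central processing units (CPUs) and graphics processing units (GPUs);
- dedicated artificial intelligence (AI) computing chips;
- computing units that run machine learning model algorithms;
- digital signal processors (DSPs);
- any suitable processors, controllers and microcontrollers.

The computing unit 501 performs the methods and processes described above, such as the method 300. For example, in some embodiments the method 300 may be implemented as a computer software program tangibly embodied in a machine-readable medium, such as the storage unit 508. In some embodiments, part or all of the computer program may be loaded and/or installed onto the device 500 via the ROM 502 and/or the communication unit 509. When the computer program is loaded into the RAM 503 and executed by the computing unit 501, one or more steps of the method 300 described above can be performed. Alternatively, in other embodiments, the computing unit 501 may be configured to perform the method 300 in any other suitable manner, for example by means of firmware.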

The functions described above may be performed, at least in part, by one or more hardware logic components. For example, and without limitation, exemplary types of hardware logic components that may be used include:

- Field Programmable Gate Arrays (FPGAs);
- Application Specific Integrated Circuits (ASICs);
- Application Specific Standard Products (ASSPs);
- Systems on Chip (SOCs);
- Complex Programmable Logic Devices (CPLDs);
- and so on.

Program code for implementing the methods of the present disclosure may be written in any combination of one or more programming languages. The program code may be provided to a processor or controller of a general-purpose computer, special-purpose computer or other programmable data processing apparatus. When executed by the processor or controller, it causes the functions/operations specified in the flowcharts and/or block diagrams to be implemented. The program code may execute entirely on the machine or partly on the machine. It may also execute partly on the machine as a stand-alone software package and partly on a remote machine, or entirely on the remote machine or server.

In the context of the present disclosure, a machine-readable medium may be a tangible medium that can contain or store a program for use by or in connection with an instruction execution system, apparatus or device. The machine-readable medium may be a machine-readable signal medium or a machine-readable storage medium. It may include, but is not limited to, electronic, magnetic, optical, electromagnetic, infrared or semiconductor systems, apparatuses or devices, or any suitable combination of the foregoing. More specific examples of machine-readable storage media include:

- an electrical connection based on one or more wires;
- a portable computer disk or a hard disk;
- random access memory (RAM);
- read-only memory (ROM);
- erasable programmable read-only memory (EPROM or flash memory);
- optical fiber;
- portable compact disc read-only memory (CD-ROM);
- optical storage devices or magnetic storage devices;
- any suitable combination of the foregoing.

Furthermore, although operations are depicted in a particular order, this should not be understood as requiring either of the following to achieve desirable results:

- that such operations be performed in the particular order shown or in sequential order;
- that all illustrated operations be performed.

Under certain circumstances, multitasking and parallel processing may be advantageous. Likewise, although the above discussion contains several specific implementation details, these should not be construed as limiting the scope of the present disclosure. Certain features described in the context of separate embodiments can also be implemented in combination in a single implementation. Conversely, various features described in the context of a single implementation can also be implemented in multiple implementations, separately or in any suitable subcombination.

Although the subject matter has been described in language specific to structural features and/or methodological acts, the subject matter defined in the appended claims is not necessarily limited to the specific features or acts described above. Rather, the specific features and acts described above are merely example forms of implementing the claims.

Claims (14)

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202010203430.9A (CN111431996B) | 2020-03-20 | 2020-03-20 | Method, apparatus, device and medium for resource configuration |

Applications Claiming Priority (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202010203430.9A (CN111431996B) | 2020-03-20 | 2020-03-20 | Method, apparatus, device and medium for resource configuration |

Publications (2)

| Publication Number | Publication Date |
|---|---|
| CN111431996A | 2020-07-17 |
| CN111431996B | 2022-08-09 |

Family

ID=71549975

Family Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202010203430.9A (Active, CN111431996B) | 2020-03-20 | 2020-03-20 | Method, apparatus, device and medium for resource configuration |

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN111431996B |

Cited By (7)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN112231054A* | 2020-10-10 | 2021-01-15 | 苏州浪潮智能科技有限公司 | Method and device for deploying multi-model inference service based on k8s cluster |
| CN113254213A* | 2021-06-08 | 2021-08-13 | 苏州浪潮智能科技有限公司 | Service computing resource allocation method, system and device |
| CN114785760A* | 2022-05-07 | 2022-07-22 | 阿里巴巴(中国)有限公司 | Service preheating method, equipment, medium and product |
| CN115220915A* | 2022-07-15 | 2022-10-21 | 中国电信股份有限公司 | Server control method and device, storage medium and electronic equipment |
| CN118170566A* | 2024-05-13 | 2024-06-11 | 中国电信股份有限公司 | Message processing method oriented to edge equipment and related equipment |
| WO2025102702A1* | 2023-11-14 | 2025-05-22 | 华为云计算技术有限公司 | Resource supply solution determination method, apparatus and computing device cluster |
| CN120596284A* | 2025-08-06 | 2025-09-05 | 北京火山引擎科技有限公司 | Cluster resource allocation method, device and product for artificial intelligent service |

Citations (12)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN102317917A* | 2011-06-30 | 2012-01-11 | 华为技术有限公司 | Hot field virtual machine cpu dispatching method and virtual machine system (vms) |
| CN104484222A* | 2014-12-31 | 2015-04-01 | 北京天云融创软件技术有限公司 | Virtual machine dispatching method based on hybrid genetic algorithm |
| CN106959894A* | 2016-01-11 | 2017-07-18 | 北京京东尚科信息技术有限公司 | Resource allocation methods and device |
| CN108023759A* | 2016-10-28 | 2018-05-11 | 腾讯科技(深圳)有限公司 | Adaptive resource regulating method and device |
| CN108259376A* | 2018-04-24 | 2018-07-06 | 北京奇艺世纪科技有限公司 | The control method and relevant device of server cluster service traffics |
| US20180219735A1* | 2015-07-28 | 2018-08-02 | British Telecommunications Public Limited Company | Network function virtualization |
| US20180278541A1* | 2015-12-31 | 2018-09-27 | Huawei Technologies Co., Ltd. | Software-Defined Data Center and Service Cluster Scheduling and Traffic Monitoring Method Therefor |
| CN108683720A* | 2018-04-28 | 2018-10-19 | 金蝶软件(中国)有限公司 | A kind of container cluster service configuration method and device |
| CN108874640A* | 2018-05-07 | 2018-11-23 | 北京京东尚科信息技术有限公司 | A kind of appraisal procedure and device of clustering performance |
| CN109271257A* | 2018-10-11 | 2019-01-25 | 郑州云海信息技术有限公司 | A kind of method and apparatus of virtual machine (vm) migration deployment |
| CN109412829A* | 2018-08-30 | 2019-03-01 | 华为技术有限公司 | A kind of prediction technique and equipment of resource distribution |
| CN109672795A* | 2018-11-14 | 2019-04-23 | 平安科技(深圳)有限公司 | Call center resource management method and device, electronic equipment, storage medium |

- 2020-03-20: CN application CN202010203430.9A filed (CN111431996B, status: Active)


Non-Patent Citations (3)

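| Title |
|---|
| Abhinandan S. Prasad et al.: "Optimal Resource Configuration of Complex Services in the Cloud", 2017 17th IEEE/ACM International Symposium on Cluster, Cloud and Grid Computing (CCGRID)* |
| Cui Guangzhang et al.: "Improvement of Resource Scheduling Strategy for Container Cloud", Computer & Digital Engineering* |
| Xu Yabin et al.: "Resource Allocation Method for PaaS Platform Based on Demand Prediction", Journal of Computer Applications* |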

Cited By (11)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN112231054A (en)* | 2020-10-10 | 2021-01-15 | 苏州浪潮智能科技有限公司 | Method and device for deploying multi-model inference service based on k8s cluster |
| CN112231054B (en)* | 2020-10-10 | 2022-07-08 | 苏州浪潮智能科技有限公司 | Multi-model inference service deployment method and device based on k8s cluster |
| CN113254213A (en)* | 2021-06-08 | 2021-08-13 | 苏州浪潮智能科技有限公司 | Service computing resource allocation method, system and device |
| CN113254213B (en)* | 2021-06-08 | 2021-10-15 | 苏州浪潮智能科技有限公司 | A service computing resource configuration method, system and device |
| WO2022257301A1 (en)* | 2021-06-08 | 2022-12-15 | 苏州浪潮智能科技有限公司 | Method, system and apparatus for configuring computing resources of service |
| CN114785760A (en)* | 2022-05-07 | 2022-07-22 | 阿里巴巴(中国)有限公司 | Service preheating method, equipment, medium and product |
| CN115220915A (en)* | 2022-07-15 | 2022-10-21 | 中国电信股份有限公司 | Server control method and device, storage medium and electronic equipment |
| CN115220915B (en)* | 2022-07-15 | 2024-02-02 | 中国电信股份有限公司 | Server control method, device, storage medium and electronic equipment |
| WO2025102702A1 (en)* | 2023-11-14 | 2025-05-22 | 华为云计算技术有限公司 | Resource supply solution determination method, apparatus and computing device cluster |
| CN118170566A (en)* | 2024-05-13 | 2024-06-11 | 中国电信股份有限公司 | Message processing method oriented to edge equipment and related equipment |
| CN120596284A (en)* | 2025-08-06 | 2025-09-05 | 北京火山引擎科技有限公司 | Cluster resource allocation method, device and product for artificial intelligent service |

Also Published As

| Publication number | Publication date |
|---|---|
| CN111431996B (en) | 2022-08-09 |

Similar Documents

| Publication | Title |
|---|---|
| CN111431996B (en) | Method, apparatus, device and medium for resource configuration |
| US11507430B2 (en) | Accelerated resource allocation techniques |
| Smith et al. | Federated multi-task learning |
| CN111258767B (en) | Cloud computing resource intelligent distribution method and device for complex system simulation application |
| US11443228B2 (en) | Job merging for machine and deep learning hyperparameter tuning |
| TW201820165A (en) | Server and cloud computing resource optimization method thereof for cloud big data computing architecture |
| CN110058936A (en) | Method, device and computer program product for determining the amount of dedicated processing resources |
| Chen et al. | Heterogeneous semi-asynchronous federated learning in internet of things: A multi-armed bandit approach |
| CN114579294B (en) | Container elastic expansion system supporting service load surge prediction in cloud-native environment |
| CN109614227A (en) | Task resource allocation method, apparatus, electronic device, and computer-readable medium |
| CN111527734B (en) | Node traffic ratio prediction method and device |
| CN115913967A (en) | A Microservice Elastic Scaling Method Based on Resource Demand Prediction in Cloud Environment |
| WO2020206699A1 (en) | Predicting virtual machine allocation failures on server node clusters |
| US20220083378A1 (en) | Hybrid scheduling method for deep learning workloads, and computing apparatus with hybrid scheduling |
| CN112615795A (en) | Flow control method and device, electronic equipment, storage medium and product |
| US11630719B1 (en) | System and method for potential system impairment detection |
| CN118093097B (en) | Data storage cluster resource scheduling method, device, electronic equipment and medium |
| US11907191B2 (en) | Content based log retrieval by using embedding feature extraction |
| US11853187B1 (en) | System and method for remote management of data processing systems |
| CN118796372A (en) | Microservice resource scheduling method and device, electronic device and storage medium |
| CN113515524B (en) | A method and device for automatically and dynamically allocating nodes in a distributed cache access layer |
| CN116432754A (en) | Artificial intelligence reasoning acceleration method and system |
| US11435926B2 (en) | Method, device, and computer program product for managing storage system |
| CN116166427A (en) | Automatic capacity expansion and contraction method, device, equipment and storage medium |
| CN114764353A (en) | ML to ML orchestration system and method for Information Handling System (IHS) full system optimization |

Legal Events

| Code | Title |
|---|---|
| PB01 | Publication |
| SE01 | Entry into force of request for substantive examination |
| GR01 | Patent grant |