CN109947429B - Data processing method and device - Google Patents

- Publication number

- CN109947429B (application CN201910190563.4A)

- Authority

- CN

- China

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Active

Landscapes

- Stored Programmes (AREA)

- Information Retrieval, Db Structures And Fs Structures Therefor (AREA)

Description

Technical Field

Embodiments of the present invention relate to the technical field of data processing, and in particular to a data processing method and apparatus.

Background

Spark is a general-purpose computing engine for large-scale data processing. It is open source and well suited to the iterative algorithms used in data mining and machine learning; besides supporting interactive queries, it also optimizes iterative workloads.

However, in the prior art each Spark version usually has a preset default delimiter and cannot process data that uses a different delimiter. For example, when the Spark version is CDH 5.5.0, Spark recognizes only its default delimiter. If the data to be processed uses a delimiter other than Spark's default, Spark cannot correctly recognize the data and therefore cannot process it.

Summary of the Invention

Embodiments of the present invention provide a data processing method and apparatus that solve the prior-art problem that each Spark version usually has a preset default delimiter and cannot process data that uses a non-default delimiter.

In one aspect, an embodiment of the present invention provides a data processing method applied to the computing engine Spark. The method includes:

upon detecting that the default delimiter applied by Spark cannot split the data to be processed, obtaining a preset delimiter library of Spark;

traversing the delimiters in the preset delimiter library to obtain, among them, a target delimiter that matches the data to be processed, where the target delimiter is a delimiter that successfully splits part of the data to be processed and satisfies a preset verification rule; and

setting the target delimiter as the default delimiter of Spark.

In another aspect, an embodiment of the present invention provides a data processing apparatus applied to the computing engine Spark. The apparatus includes:

a monitoring module, configured to obtain a preset delimiter library of Spark upon detecting that the default delimiter applied by Spark cannot split the data to be processed;

a traversal module, configured to traverse the delimiters in the preset delimiter library and obtain, among them, a target delimiter that matches the data to be processed, where the target delimiter is a delimiter that successfully splits part of the data to be processed and satisfies a preset verification rule; and

a setting module, configured to set the target delimiter as the default delimiter of Spark.

In another aspect, an embodiment of the present invention further provides an electronic device including a memory, a processor, a bus, and a computer program stored in the memory and executable on the processor, where the processor, when executing the program, implements the steps of the above data processing method.

In yet another aspect, an embodiment of the present invention further provides a non-transitory computer-readable storage medium storing a computer program which, when executed by a processor, implements the steps of the above data processing method.

With the data processing method and apparatus provided by the embodiments of the present invention, when it is detected that the default delimiter applied by Spark cannot split the data to be processed, the preset delimiter library of Spark is obtained; the delimiters in the library are traversed to obtain the target delimiter that matches the data to be processed, that is, the delimiter actually used in that data; and the target delimiter is set as Spark's default delimiter. Spark can then recognize and process the data, which improves Spark's generality and data processing efficiency.

Description of Drawings

To describe the technical solutions in the embodiments of the present invention or in the prior art more clearly, the drawings needed to describe them are briefly introduced below. Evidently, the drawings described below show only some embodiments of the present invention, and a person of ordinary skill in the art may derive other drawings from them without creative effort.

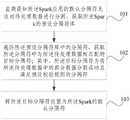

FIG. 1 is a schematic flowchart of a data processing method according to an embodiment of the present invention;

FIG. 2 is a schematic flowchart of a data processing method according to another embodiment of the present invention;

FIG. 3 is a schematic flowchart of an example of an embodiment of the present invention;

FIG. 4 is a schematic structural diagram of a data processing apparatus according to an embodiment of the present invention;

FIG. 5 is a schematic structural diagram of a server according to an embodiment of the present invention.

Detailed Description

To make the technical problems to be solved, the technical solutions, and the advantages of the present invention clearer, they are described in detail below with reference to the drawings and specific embodiments. In the following description, specific details such as particular configurations and components are provided only to help a comprehensive understanding of the embodiments. Those skilled in the art will therefore appreciate that various changes and modifications can be made to the embodiments described here without departing from the scope and spirit of the invention. Descriptions of well-known functions and constructions are omitted for clarity and conciseness.

It should be understood that references throughout the specification to "an embodiment" or "one embodiment" mean that a particular feature, structure, or characteristic described in connection with the embodiment is included in at least one embodiment of the present invention. Thus, occurrences of "in an embodiment" or "in one embodiment" throughout the specification do not necessarily refer to the same embodiment. Furthermore, the particular features, structures, or characteristics may be combined in any suitable manner in one or more embodiments.

In the various embodiments of the present invention, it should be understood that the sequence numbers of the following processes do not imply an order of execution. The execution order of each process is determined by its function and internal logic, and the numbering places no limitation on the implementation of the embodiments.

In the embodiments provided in this application, it should be understood that "B corresponding to A" means that B is associated with A and that B can be determined from A. Determining B from A does not mean that B is determined from A alone; B may also be determined from A and/or other information.

FIG. 1 is a schematic flowchart of a data processing method according to an embodiment of the present invention.

As shown in FIG. 1, the data processing method provided by this embodiment is applied to the computing engine Spark and specifically includes the following steps:

Step 101: upon detecting that the default delimiter applied by Spark cannot split the data to be processed, obtain the preset delimiter library of Spark.

The data to be processed may be log data or other data.

A delimiter serves two purposes: it splits the data while the data to be processed is being recognized or read, and it marks where text is separated, for example where a new row or a new column begins.

When Spark recognizes the data to be processed, it splits the data with a delimiter. Because each Spark version usually has a preset default delimiter, if that default differs from the delimiter carried in the data, Spark cannot split the data and therefore cannot recognize or process it.

Therefore, if it is detected that Spark cannot split the data to be processed with its default delimiter (for example, the data Spark reads comes back as one continuous string with no field breaks), the preset delimiter library of Spark is obtained, and the delimiters in that library are called to split the data to be processed.
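The detection condition in step 101 can be sketched as follows. This is a minimal illustration, assuming one record per line, a tab as the engine's default delimiter, and that "cannot split" means the split does not produce the expected number of fields (a record that comes back as a single piece is the typical case). The class and method names are hypothetical, not Spark APIs.

```java
import java.util.regex.Pattern;

/** Hypothetical helper for step 101: does the default delimiter fail on this record? */
class DefaultDelimiterCheck {

    /**
     * Returns true when splitting with the default delimiter does not yield the
     * expected number of fields, e.g. the record comes back as one unbroken piece.
     */
    static boolean defaultDelimiterFails(String record, String defaultDelimiter, int expectedFields) {
        // Pattern.quote makes the delimiter literal; limit -1 keeps trailing empty fields.
        int fields = record.split(Pattern.quote(defaultDelimiter), -1).length;
        return fields != expectedFields;
    }

    public static void main(String[] args) {
        String assumedDefault = "\t"; // assumption: tab as the engine's default delimiter
        System.out.println(defaultDelimiterFails("attr1,attr2,attr3", assumedDefault, 3));   // true
        System.out.println(defaultDelimiterFails("attr1\tattr2\tattr3", assumedDefault, 3)); // false
    }
}
```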

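Step 102: traverse the delimiters in the preset delimiter library and obtain, among them, the target delimiter that matches the data to be processed, where the target delimiter is a delimiter that successfully splits part of the data to be processed and satisfies the preset verification rule.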

The delimiters in the preset delimiter library are traversed in a loop until the target delimiter is found; the target delimiter is the delimiter actually used in the data to be processed.

Specifically, each delimiter in the preset delimiter library is used to split part of the data to be processed. If the delimiter splits that part successfully, further data is selected from the data to be processed to verify the delimiter, and once the preset verification rule is satisfied, the delimiter is determined to be the target delimiter.

Optionally, the preset verification rule may limit the number of verifications, for example by specifying a minimum verification count threshold, which may be obtained by deep learning.
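A minimal sketch of the traversal in step 102, assuming that a split "succeeds" when it yields an expected number of fields (the criterion the FIG. 2 embodiment uses) and that the minimum verification count is passed in as a plain integer. `DelimiterTraversal` and `findTarget` are hypothetical names.

```java
import java.util.List;
import java.util.Optional;
import java.util.regex.Pattern;

/** Hypothetical sketch of step 102: traverse the library until one delimiter passes verification. */
class DelimiterTraversal {

    // A split "succeeds" when it yields exactly the expected number of fields (criterion from FIG. 2).
    static boolean splits(String record, String delimiter, int expectedFields) {
        return record.split(Pattern.quote(delimiter), -1).length == expectedFields;
    }

    static Optional<String> findTarget(List<String> library, List<String> records,
                                       int expectedFields, int minVerifications) {
        for (String candidate : library) {
            int verified = 0;
            for (String record : records) {
                if (!splits(record, candidate, expectedFields)) {
                    break; // this candidate fails; try the next one
                }
                verified++;
                if (verified >= minVerifications) {
                    return Optional.of(candidate); // preset verification rule satisfied
                }
            }
        }
        return Optional.empty(); // no delimiter in the library matched
    }

    public static void main(String[] args) {
        List<String> library = List.of("/", "|", ",");
        List<String> records = List.of("a,b,c", "d,e,f", "g,h,i");
        System.out.println(findTarget(library, records, 3, 3)); // Optional[,]
    }
}
```

Candidates are tried in library order and the first one that reaches the threshold is returned, so the order of the library acts as a tie-breaker.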

Step 103: set the target delimiter as the default delimiter of Spark.

After the target delimiter is determined, it is set as Spark's default delimiter, for example by adding it to Spark's default delimiter library, which contains Spark's default delimiter. Spark can then recognize and process the current data, and when Spark later encounters data containing the target delimiter again, it can process that data as well.
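One way to picture "adding the target delimiter to Spark's default delimiter library" is an ordered in-memory list whose first entry is the current default. This is a hypothetical sketch: the source does not specify how the library is stored, and `DefaultDelimiterLibrary` is not a Spark class.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical in-memory default delimiter library; the first entry is the current default. */
class DefaultDelimiterLibrary {
    private final List<String> delimiters = new ArrayList<>();

    DefaultDelimiterLibrary(String builtInDefault) {
        delimiters.add(builtInDefault);
    }

    /** Steps 103/204: make the target the default while keeping earlier delimiters known. */
    void setDefault(String target) {
        delimiters.remove(target); // avoid duplicates if it was added before
        delimiters.add(0, target); // the newest target becomes the default
    }

    String currentDefault() {
        return delimiters.get(0);
    }

    boolean knows(String delimiter) {
        return delimiters.contains(delimiter);
    }

    public static void main(String[] args) {
        DefaultDelimiterLibrary library = new DefaultDelimiterLibrary("\t");
        library.setDefault(",");
        System.out.println(library.currentDefault()); // ,
    }
}
```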

In the above embodiment of the present invention, when it is detected that the default delimiter applied by Spark cannot split the data to be processed, the preset delimiter library of Spark is obtained; the delimiters in the library are traversed to obtain the target delimiter that matches the data, that is, the delimiter actually used in it; and the target delimiter is set as Spark's default delimiter, so that Spark can recognize and process the data, improving Spark's generality and data processing efficiency. This embodiment thus solves the prior-art problem that each Spark version usually has a preset default delimiter and cannot process data with non-default delimiters.

FIG. 2 is a schematic flowchart of a data processing method according to another embodiment of the present invention.

As shown in FIG. 2, the data processing method provided by this embodiment is applied to the computing engine Spark and specifically includes the following steps:

Step 201: upon detecting that the default delimiter applied by Spark cannot split the data to be processed, obtain the preset delimiter library of Spark.

The delimiter splits the data to be processed when it is recognized or read, marking where text is separated or where a new row or column begins. The data to be processed may be log data or other data.

When Spark recognizes the data to be processed, it splits the data with a delimiter. Because each Spark version usually has a preset default delimiter, if that default differs from the delimiter carried in the data, Spark cannot split the data and therefore cannot recognize or process it.

Therefore, when it is detected that Spark cannot split the data to be processed with its default delimiter (for example, the data read is one continuous string with no field breaks), the preset delimiter library of Spark is obtained, and the delimiters in that library are called to split the data.

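Step 202: for each delimiter in the preset delimiter library, split, in turn, multiple groups of target data in the data to be processed that meet a preset string-length requirement, and obtain the cumulative number of consecutive successful splits, where the cumulative count is incremented by one each time a group of target data is split successfully.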

For each delimiter, part of the data to be processed is randomly extracted as target data. The extracted target data must meet the preset string-length requirement, so that strings too short to be split are avoided.

After a group of target data is extracted, it is split first. If the split succeeds, the cumulative count is incremented by one, new target data is extracted from the remaining data to be processed, and splitting continues. If the split fails, the extraction loop stops and the delimiter is excluded. The cumulative count is therefore the number of consecutive successful splits.

Step 203: if the cumulative count satisfies the preset verification rule, determine the delimiter to be the target delimiter that matches the data to be processed.

If the cumulative count satisfies the preset verification rule, the delimiter is determined to be the target delimiter. Optionally, the preset verification rule may limit the cumulative count, for example by specifying a minimum cumulative count threshold, which may be obtained by deep learning.
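Steps 202 and 203 can be sketched as a counter over successive groups of target data: each successful split adds one, the first failure excludes the candidate, and the candidate qualifies once the count reaches the threshold. The method names and the -1 sentinel for an excluded delimiter are illustrative assumptions, and "success" again means the field count equals an expected attribute count.

```java
import java.util.Iterator;
import java.util.List;
import java.util.regex.Pattern;

/** Hypothetical sketch of steps 202-203: consecutive successful splits for one candidate. */
class ConsecutiveSplitCounter {

    /** Returns the consecutive-success count (capped at threshold), or -1 on the first failure. */
    static int countConsecutiveSuccesses(Iterator<String> targets, String candidate,
                                         int attrCount, int threshold) {
        int cumulative = 0;
        while (cumulative < threshold && targets.hasNext()) {
            String target = targets.next();
            if (target.split(Pattern.quote(candidate), -1).length != attrCount) {
                return -1; // split failed: stop the loop and exclude this delimiter
            }
            cumulative++; // one more group split successfully
        }
        return cumulative;
    }

    /** Step 203: the candidate is the target delimiter once the count reaches the threshold. */
    static boolean isTargetDelimiter(Iterator<String> targets, String candidate,
                                     int attrCount, int threshold) {
        return countConsecutiveSuccesses(targets, candidate, attrCount, threshold) >= threshold;
    }

    public static void main(String[] args) {
        List<String> targets = List.of("a;b;c", "d;e;f", "g;h;i");
        System.out.println(isTargetDelimiter(targets.iterator(), ";", 3, 3)); // true
        System.out.println(isTargetDelimiter(targets.iterator(), ",", 3, 3)); // false
    }
}
```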

Step 204: set the target delimiter as the default delimiter of Spark.

After the target delimiter is determined, it is set as Spark's default delimiter, for example by adding it to Spark's default delimiter library, which contains Spark's default delimiter. Spark can then recognize and process the current data, and when Spark later encounters data containing the target delimiter again, it can process that data as well.

Optionally, in the above embodiment of the present invention, the step of splitting, in turn, the multiple groups of target data that meet the preset string-length requirement includes:

for each group of target data, obtaining a preset attribute value of the target data; and

splitting the target data with the delimiter: if the number of resulting fields equals the preset attribute value, the split succeeds; otherwise, it fails.

The preset attribute value is the number of attributes contained in the target data, where each attribute corresponds to one type of data (one column). When Spark splits the data to be processed, values of different attributes are separated by the delimiter, so a field count equal to the preset attribute value indicates a successful split, and any other count indicates a failed one.
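The success test above compares a field count with the preset attribute value. A sketch of that comparison in Java follows. It also shows why a direct call in the style of `log_data.split(SPLIT_CHAR_TMP).length` needs care: `String.split` treats its argument as a regular expression (so delimiters such as `|` or `.` misbehave) and drops trailing empty fields. Quoting the delimiter with `Pattern.quote` and passing a limit of -1 avoids both. The class name is hypothetical.

```java
import java.util.regex.Pattern;

/** Hypothetical check: a split succeeds when the field count equals the preset attribute value. */
class FieldCountCheck {

    /** Mirrors log_data.split(SPLIT_CHAR_TMP).length: regex semantics, trailing empties dropped. */
    static int naiveFieldCount(String data, String delimiter) {
        return data.split(delimiter).length;
    }

    /** Literal delimiter, trailing empty fields kept (limit -1). */
    static int literalFieldCount(String data, String delimiter) {
        return data.split(Pattern.quote(delimiter), -1).length;
    }

    static boolean splitSucceeds(String data, String delimiter, int attrCount) {
        return literalFieldCount(data, delimiter) == attrCount;
    }

    public static void main(String[] args) {
        System.out.println(naiveFieldCount("a.b.c", "."));   // 0: "." is a regex matching any char
        System.out.println(literalFieldCount("a.b.c", ".")); // 3
        System.out.println(naiveFieldCount("a,b,", ","));    // 2: trailing empty field dropped
        System.out.println(splitSucceeds("a,b,", ",", 3));   // true
    }
}
```

Keeping the trailing empty field matters here: a row whose last column is empty still has ATTR_COUNT attributes and should count as a successful split.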

As an example, when the data to be processed is log data and Java is used, a new class SplitCharUtils and the methods getSplitLibrary and GetRealSplitChar are first created in the Spark source code.

Specifically, in the first step, a new class SplitCharUtils is created in the Spark source code.

This class defines three variables, ATTR_COUNT, SPLIT_CHAR_TMP, and SPLIT_CHAR, and one static constant, SUCCESS_COUNT = 1000, which is the minimum verification threshold specified by the preset verification rule.

ATTR_COUNT indicates the number of attributes of the source data table (that is, the data to be processed) that Spark is currently reading; SPLIT_CHAR_TMP holds the delimiter currently taken from Spark's preset delimiter library; and SPLIT_CHAR holds the target delimiter of the data currently being read.

In the second step, a new method getSplitLibrary is created in the Spark source code. It reads the split_library table, which stores the delimiters of the preset delimiter library, and returns them as a List.

In the third step, a new method GetRealSplitChar is created that takes one parameter, log_data, identifying the log data currently obtained. At the start of the method, a variable success_count_tmp = 0 is defined to count how many times SPLIT_CHAR_TMP has been verified successfully.

Once these are in place, when Spark reads log data it can first call getSplitLibrary to obtain the collection of delimiters, then traverse the collection, taking each delimiter in turn and assigning it to the variable SPLIT_CHAR_TMP.

Spark then passes SPLIT_CHAR_TMP to the split function, log_data.split(SPLIT_CHAR_TMP).length, to obtain data_attr_count, the number of fields produced when the current log data is split by SPLIT_CHAR_TMP;

it then compares data_attr_count with ATTR_COUNT, the number of attributes of the source data table read by the current Spark task. If data_attr_count equals ATTR_COUNT, success_count_tmp is incremented by 1; otherwise, success_count_tmp is reset to 0.

When a check fails, Spark takes the next delimiter in the preset delimiter library and verifies it in the same way, until some delimiter satisfies success_count_tmp = SUCCESS_COUNT. The current delimiter SPLIT_CHAR_TMP is then assigned to SPLIT_CHAR; that is, SPLIT_CHAR is the target delimiter of the log data read by this task.
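Putting the three steps together, a sketch of `SplitCharUtils` might look like the following. It keeps the identifiers from the text (`ATTR_COUNT`, `SPLIT_CHAR_TMP`, `SPLIT_CHAR`, `SUCCESS_COUNT = 1000`, `getSplitLibrary`, `GetRealSplitChar`, `success_count_tmp`, `data_attr_count`) but rests on two assumptions the source does not state: the `split_library` table is replaced by a hard-coded list with hypothetical contents, and `log_data` is taken to be a list of log lines so that each line counts as one verification.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

/**
 * Sketch of SplitCharUtils. Assumptions: split_library is replaced by a hard-coded
 * list, and log_data is a list of log lines, one verification per line.
 */
class SplitCharUtils {
    /** Minimum number of consecutive successful checks, as given in the text. */
    static final int SUCCESS_COUNT = 1000;

    // Names follow the text rather than Java naming conventions.
    int ATTR_COUNT;        // attribute count of the source table being read
    String SPLIT_CHAR_TMP; // candidate delimiter currently under test
    String SPLIT_CHAR;     // target delimiter once a candidate passes

    SplitCharUtils(int attrCount) {
        this.ATTR_COUNT = attrCount;
    }

    /** Stand-in for reading the split_library table; contents are hypothetical. */
    List<String> getSplitLibrary() {
        return List.of("/", "|", "\t", ";", ",");
    }

    /** Returns the verified target delimiter, or null if no library entry passes. */
    String GetRealSplitChar(List<String> log_data) {
        for (String candidate : getSplitLibrary()) {
            SPLIT_CHAR_TMP = candidate;
            int success_count_tmp = 0;
            for (String line : log_data) {
                int data_attr_count = line.split(Pattern.quote(SPLIT_CHAR_TMP), -1).length;
                if (data_attr_count != ATTR_COUNT) {
                    success_count_tmp = 0; // mirrors the reset in the text; try the next candidate
                    break;
                }
                success_count_tmp++;
                if (success_count_tmp == SUCCESS_COUNT) {
                    SPLIT_CHAR = SPLIT_CHAR_TMP; // verification rule satisfied
                    return SPLIT_CHAR;
                }
            }
        }
        return null;
    }

    public static void main(String[] args) {
        List<String> logData = new ArrayList<>();
        for (int i = 0; i < SUCCESS_COUNT; i++) {
            logData.add("attr" + i + ",value,flag");
        }
        System.out.println(new SplitCharUtils(3).GetRealSplitChar(logData)); // ,
    }
}
```

With fewer than SUCCESS_COUNT lines, even the correct delimiter cannot reach the threshold, so the method returns null; how the real implementation handles small inputs is not specified in the text.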

Optionally, in the above embodiment of the present invention, the step of splitting, in turn, the multiple groups of target data that meet the preset string-length requirement includes:

randomly extracting, from the data to be processed, data that meets the preset string-length requirement as the current group of target data, and splitting the current group with the delimiter; and

if the current group of target data is split successfully, randomly extracting, from the data to be processed other than the current group, data that meets the preset string-length requirement as a new group of target data.

That is, a group of data is first randomly extracted as the current group of target data and split. If the split succeeds, the extraction loop continues: data meeting the preset string-length requirement is randomly extracted as a new group of target data, and the cumulative count is recorded. If the split fails, the loop stops and the delimiter is excluded.
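Drawing each new group "from data other than the current group" amounts to sampling without replacement. A hypothetical sketch: records shorter than a minimum length are filtered out first (standing in for the preset string-length requirement, whose actual value the text does not give), the rest are shuffled once, and each call to `next()` removes one record so it can never be drawn again. Implementing `Iterator<String>` lets the sampler feed any loop that consumes target data one group at a time.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.Iterator;
import java.util.List;
import java.util.NoSuchElementException;
import java.util.Random;

/** Hypothetical sampler: random groups of target data, never drawing a group twice. */
class TargetDataSampler implements Iterator<String> {
    private final List<String> remaining = new ArrayList<>();

    TargetDataSampler(List<String> data, int minLength, Random random) {
        for (String s : data) {
            if (s.length() >= minLength) { // preset string-length requirement (assumed form)
                remaining.add(s);
            }
        }
        Collections.shuffle(remaining, random); // random order; pass a seeded Random to reproduce it
    }

    @Override
    public boolean hasNext() {
        return !remaining.isEmpty();
    }

    @Override
    public String next() {
        if (remaining.isEmpty()) {
            throw new NoSuchElementException("no target data left");
        }
        return remaining.remove(remaining.size() - 1); // removed, so it is never drawn again
    }

    public static void main(String[] args) {
        TargetDataSampler sampler = new TargetDataSampler(
                List.of("x", "a,b,c", "d,e,f", "g,h,i"), 3, new Random(7));
        while (sampler.hasNext()) {
            System.out.println(sampler.next()); // the three long records, in shuffled order
        }
    }
}
```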

Optionally, in the above embodiment, the preset verification rule includes that the number of consecutive successful splits is greater than or equal to a preset value.

This preset value is the minimum verification threshold and can be configured in advance.

Optionally, in the above embodiment, the step of splitting the target data with the delimiter includes:

assigning the delimiter to a preset split function and splitting the target data with that function.

The preset split function splits the target data with the delimiter; in the example above it may be log_data.split(SPLIT_CHAR_TMP).length, and success_count_tmp records the number of successful verifications.

Optionally, in the above embodiment, the step of setting the target delimiter as Spark's default delimiter includes:

adding a configuration file for the target delimiter to Spark's delimiter configuration files.

For example, a configuration file for the target delimiter, such as sparkConfig.properties, is added to the Spark directory to change Spark's default delimiter.

Note that if the data to be processed actually uses two or more target delimiters, multiple sparkConfig.properties files can be configured under the Spark directory, each corresponding to a different delimiter.
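Persisting the target delimiter can be sketched with `java.util.Properties`, which escapes special characters such as tabs when storing and restores them when loading. The key name `split.char` and the one-sub-directory-per-delimiter layout are assumptions made for illustration; the text names only the file sparkConfig.properties and does not define its contents.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.UncheckedIOException;
import java.io.Writer;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Properties;

/** Hypothetical sketch: store and load a detected delimiter in a properties file. */
class DelimiterConfigFile {
    /** Assumed key name; this is not a documented Spark setting. */
    static final String KEY = "split.char";

    static void write(Path file, String delimiter) {
        Properties props = new Properties();
        props.setProperty(KEY, delimiter);
        try {
            Path parent = file.toAbsolutePath().getParent();
            if (parent != null) {
                Files.createDirectories(parent);
            }
            try (Writer out = Files.newBufferedWriter(file, StandardCharsets.UTF_8)) {
                props.store(out, "target delimiter"); // store() escapes tabs and other special chars
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    static String read(Path file) {
        Properties props = new Properties();
        try (Reader in = Files.newBufferedReader(file, StandardCharsets.UTF_8)) {
            props.load(in); // load() reverses the escaping done by store()
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props.getProperty(KEY);
    }

    public static void main(String[] args) throws IOException {
        Path base = Files.createTempDirectory("spark-conf");
        // One sparkConfig.properties per delimiter, each in its own (hypothetical) sub-directory.
        Path comma = base.resolve("comma").resolve("sparkConfig.properties");
        Path tab = base.resolve("tab").resolve("sparkConfig.properties");
        write(comma, ",");
        write(tab, "\t");
        System.out.println(read(comma).equals(",") && read(tab).equals("\t")); // true
    }
}
```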

Specifically, referring to FIG. 3 and again using Java as an example, the data processing method mainly includes the following steps:

Step 301: after receiving a command to read log data, Spark determines ATTR_COUNT, the number of attributes of the data table in the log data, from the data in the Hive table.

The data in the Hive table has been split using the delimiter the log data actually uses. Optionally, when reading the Hive table, Spark can obtain ATTR_COUNT, the number of table attributes reported as "from deserializer", through the "desc table_name" command.

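Step 302: after obtaining ATTR_COUNT, Spark matches the delimiters in its preset delimiter library, namely the database table split_library, one by one against the currently obtained data to determine the target delimiter.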

For example, suppose the current log data is "attr1,attr2,attr3" and Spark has determined from the Hive table that ATTR_COUNT = 3.

The first delimiter Spark takes from split_library may be "/". Splitting the log data with "/" yields an array of length 1, which does not match ATTR_COUNT = 3, so Spark takes the next delimiter in split_library.

The second delimiter taken is ",". Splitting the log data with "," yields an array of length 3, which matches ATTR_COUNT = 3, so "," is tentatively taken as the current task's SPLIT_CHAR, and further data is fetched to verify ",".

Specifically, during verification SPLIT_CHAR_TMP is set to ",", and the new target data is "attr4,attr5,attr6". Spark splits this data directly with SPLIT_CHAR_TMP; the resulting array length is again 3, matching ATTR_COUNT = 3. If the check passes every time over a certain number of iterations, the actual delimiter of the current Spark task is determined to be ",", and the system delimiter SPLIT_CHAR is set to ",".
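The worked example can be reproduced directly. The two records, the candidates "/" and ",", and ATTR_COUNT = 3 come from the text; the source does not say how many verification rounds are required, so this sketch simply checks both records. Class and method names are hypothetical.

```java
import java.util.List;
import java.util.regex.Pattern;

/** Reproduces the worked example: "/" fails, "," passes on both records. */
class WorkedExample {
    static int fieldCount(String record, String delimiter) {
        return record.split(Pattern.quote(delimiter), -1).length;
    }

    /** First library delimiter whose split of every record yields attrCount fields, else null. */
    static String firstMatching(List<String> library, List<String> records, int attrCount) {
        for (String candidate : library) {
            boolean allMatch = true;
            for (String record : records) {
                int n = fieldCount(record, candidate);
                System.out.println("\"" + candidate + "\" on " + record + " -> " + n + " field(s)");
                if (n != attrCount) {
                    allMatch = false;
                    break; // mismatch: move on to the next delimiter in split_library
                }
            }
            if (allMatch) {
                return candidate;
            }
        }
        return null;
    }

    public static void main(String[] args) {
        int ATTR_COUNT = 3;
        List<String> splitLibrary = List.of("/", ",");
        List<String> records = List.of("attr1,attr2,attr3", "attr4,attr5,attr6");
        System.out.println("SPLIT_CHAR = \"" + firstMatching(splitLibrary, records, ATTR_COUNT) + "\"");
    }
}
```

Running `main` prints one line per check ("/" gives 1 field on the first record, "," gives 3 fields on both records) and finishes with `SPLIT_CHAR = ","`.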

Optionally, SPLIT_CHAR is stored in the system's static storage area as a global variable of the current task; the task can read it at any time, and it is cleared when the task ends. This ensures that the delimiter is recalculated for every Spark task, which improves system reliability.
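The per-task lifetime of SPLIT_CHAR can be sketched as a static field that is cleared in a `finally` block when the task body returns. This is a hypothetical illustration of the behavior described, not Spark code; if several tasks share one JVM concurrently, a `ThreadLocal` or a value passed through the task context would be safer than a single static field.

```java
import java.util.function.Supplier;

/** Hypothetical holder for SPLIT_CHAR as a task-scoped global value. */
final class TaskSplitChar {
    private static volatile String splitChar; // the "static storage area" of the running task

    private TaskSplitChar() {}

    static void set(String delimiter) {
        splitChar = delimiter;
    }

    static String get() {
        return splitChar;
    }

    /** Runs a task body, then clears SPLIT_CHAR so the next task must recompute it. */
    static <T> T runTask(Supplier<T> body) {
        try {
            return body.get();
        } finally {
            splitChar = null; // cleared when the task ends, even if the body throws
        }
    }

    public static void main(String[] args) {
        String used = runTask(() -> {
            set(",");    // delimiter detected during this task
            return get();
        });
        System.out.println(used + " " + get()); // prints ", null"
    }
}
```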

Step 303: Add the configuration of the target delimiter to Spark's delimiter configuration file.

In this solution, once the delimiter actually used by the log data has been determined, Spark can change its current default delimiter to the identified delimiter.

Optionally, in the above embodiment of the present invention, after the step of adding the configuration of the target delimiter, the method further includes:

Acquiring the source identifier of the data table in the data to be processed, and recording the correspondence between the source identifier and the configuration file.

That is, after the configuration is added, the source identifier of the data table (in the data to be processed) that corresponds to the target delimiter is obtained, and the correspondence between that source identifier and the configuration file is recorded. When the data table with this source identifier is processed again later, the correct delimiter can be selected directly from the correspondence.

In addition, when the data to be processed contains two or more delimiters, the delimiters can be looked up quickly through this correspondence.

Further, in the above embodiment of the present invention, the method further includes:

When reading the data to be processed, acquiring the identifier of the data table included in the data to be processed; and

Calling, according to the correspondence, the configuration file corresponding to the identifier.

That is, when the data to be processed is read, the identifier of the data table it contains is obtained first, and the corresponding configuration file is then called according to the correspondence. Taking Java as the example again: after the correspondence between data-table identifiers and configuration files has been added in Spark, the delimiter actually used in the log data can be recorded under the name of the data table to which the current log data belongs. When Spark starts reading and parsing the log data, it first loads the configuration file, then looks up the entry by the name of the log data, and assigns the delimiter found there to the parameter SPLIT_CHAR. Spark then reads and parses the log data according to SPLIT_CHAR.
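The correspondence between data-table names and delimiters can be sketched with `java.util.Properties`. This assumes, beyond what the source states, that the delimiter configuration file is a properties file whose keys are table names and whose values are the detected delimiters. The class `DelimiterConfig` and its methods are hypothetical.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.UncheckedIOException;
import java.util.Properties;
import java.util.regex.Pattern;

// Hypothetical table-name -> delimiter store backed by java.util.Properties.
final class DelimiterConfig {
    private final Properties delimiterByTable = new Properties();

    /** Loads the configuration, e.g. from a FileReader over the config file. */
    DelimiterConfig(Reader source) {
        try {
            delimiterByTable.load(source); // decodes escapes such as \t in values
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Records the correspondence between a table identifier and its delimiter. */
    void record(String tableName, String delimiter) {
        delimiterByTable.setProperty(tableName, delimiter);
    }

    /** Resolves SPLIT_CHAR for a table, falling back to the given default. */
    String splitCharFor(String tableName, String defaultSplitChar) {
        return delimiterByTable.getProperty(tableName, defaultSplitChar);
    }

    /** Splits one line of the given table with its resolved SPLIT_CHAR. */
    String[] parse(String tableName, String line, String defaultSplitChar) {
        String splitChar = splitCharFor(tableName, defaultSplitChar);
        return line.split(Pattern.quote(splitChar), -1);
    }
}
```

For configuration lines such as `access_log=,` and `click_log=\t`, `splitCharFor("click_log", ...)` returns a tab character because `Properties.load` decodes the `\t` escape, and a table with no entry falls back to the supplied default. `record` only updates the in-memory copy; writing it back to the file (for example with `Properties.store`) is left out of the sketch.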

In the above embodiment of the present invention, when monitoring finds that the default delimiter of the Spark application cannot split the data to be processed, Spark's preset delimiter library is obtained; the delimiters in the library are traversed to obtain the target delimiter that matches the data to be processed, i.e., the delimiter actually used in that data; and the target delimiter is set as Spark's default delimiter. Spark can then recognize and process the data, which improves Spark's generality and its data-processing efficiency.

The data processing method provided by the embodiments of the present invention has been described above. The data processing apparatus provided by the embodiments of the present invention is described below with reference to the accompanying drawings.

As shown in FIG. 4, an embodiment of the present invention further provides a data processing apparatus applied to the computing engine Spark. The apparatus includes:

A monitoring module 401, configured to obtain Spark's preset delimiter library when monitoring detects that the default delimiter of the Spark application cannot split the data to be processed.

The data to be processed may be log data or other data.

The delimiter serves two purposes: it splits the data while the data to be processed is being recognized or read, and it marks the position where text is separated, for example the start of a new row or a new column.

When Spark parses the data to be processed, it splits the data with a delimiter. Each Spark version usually has a preset default delimiter; if that default differs from the delimiter actually carried in the data, Spark cannot split the data and therefore cannot recognize or process it.

Therefore, if it is detected that Spark cannot split the data to be processed with its default delimiter (for example, the data Spark reads appears as one continuous run with no splits), Spark's preset delimiter library is obtained and its delimiters are applied to split the data to be processed.
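One way to express the monitoring condition, as a sketch only: the hypothetical helper `defaultDelimiterFails` treats the default delimiter as unusable when it splits none of the sampled records, i.e. every record comes back as a single continuous field. Checking a sample rather than the full data set, and the single-field criterion itself, are assumptions rather than details given in the source.

```java
import java.util.List;
import java.util.regex.Pattern;

// Hypothetical monitoring helper; not a Spark API.
final class DefaultDelimiterMonitor {
    private DefaultDelimiterMonitor() {}

    /**
     * True when the default delimiter splits none of the sampled records, i.e.
     * each record comes back as one continuous field. An empty sample gives false.
     */
    static boolean defaultDelimiterFails(List<String> sampledRecords, String defaultDelimiter) {
        if (sampledRecords.isEmpty()) {
            return false; // nothing observed, so no failure is reported
        }
        for (String record : sampledRecords) {
            if (record.split(Pattern.quote(defaultDelimiter), -1).length > 1) {
                return false; // the default delimiter occurs in the data
            }
        }
        return true; // every record is a single unsplit field
    }
}
```

For comma-separated records such as `"attr1,attr2,attr3"` checked against a tab default, the helper returns `true`, which is the point at which the preset delimiter library would be loaded and traversed.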

A traversal module 402, configured to traverse the delimiters in the preset delimiter library and obtain, from among them, a target delimiter that matches the data to be processed, where the target delimiter is a delimiter that successfully splits part of the data to be processed and satisfies a preset verification rule.

The delimiters in the preset delimiter library are traversed in a loop until the target delimiter, i.e., the delimiter actually used in the data to be processed, is found. Specifically, each delimiter in the library is used to split part of the data to be processed; if it splits that part successfully, other data from the data to be processed is selected to verify the delimiter further, and once the preset verification rule is satisfied, the delimiter is determined to be the target delimiter. Optionally, the preset verification rule may limit the number of verifications, for example by specifying a minimum verification-count threshold, which may be obtained by means of deep learning.

A setting module 403, configured to set the target delimiter as Spark's default delimiter.

After the target delimiter is determined, it is set as Spark's default delimiter, for example by adding it to Spark's default delimiter library, which contains Spark's default delimiters. Spark can then recognize and process the current data to be processed, and can also process data containing the target delimiter when it encounters such data again later.

Optionally, in this embodiment of the present invention, the traversal module 402 includes:

A splitting submodule, configured to, for each delimiter in the preset delimiter library, split in turn multiple groups of target data in the data to be processed that meet a preset string-length requirement, and obtain the cumulative number of consecutive successful splits, where the cumulative count is incremented by one each time a group of target data is split successfully; and

A verification submodule, configured to determine the delimiter as the target delimiter matching the data to be processed if the cumulative count satisfies the preset verification rule.

Optionally, in this embodiment of the present invention, the splitting submodule is configured to:

For each group of target data, obtain the preset attribute value of the target data, i.e., the expected number of attributes, like ATTR_COUNT in the example above; and

Split the target data with the delimiter; the split succeeds if the number of resulting fields equals the preset attribute value.

Optionally, in this embodiment of the present invention, the splitting submodule is configured to:

Randomly extract, from the data to be processed, data meeting the preset string-length requirement as the current group of target data, and split the current group of target data with the delimiter; and

If the current group of target data is split successfully, randomly extract again, from the data to be processed other than the current group, data meeting the preset string-length requirement as a new group of target data.

Optionally, in this embodiment of the present invention, the preset verification rule includes that the number of consecutive successful splits is greater than or equal to a preset value.

Optionally, in this embodiment of the present invention, the splitting submodule is configured to:

Assign the delimiter to a preset split function, and split the target data with the preset split function.
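The splitting and verification submodules can be sketched together as follows. The assumptions are: each record is one group of target data, the preset string-length requirement is a minimum length `minLength`, the preset attribute value is `attrCount`, the preset verification rule is "consecutive successes >= `threshold`", and a single failed split rejects the delimiter. Random extraction without reuse is implemented by shuffling the eligible records once. The names `SplitVerifier`, `SPLIT_FUNCTION` and `isTargetDelimiter` are hypothetical.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Random;
import java.util.function.BiFunction;
import java.util.regex.Pattern;

// Hypothetical sketch of the splitting and verification submodules.
final class SplitVerifier {
    private SplitVerifier() {}

    /** The "preset split function": the delimiter is passed in as a parameter. */
    static final BiFunction<String, String, String[]> SPLIT_FUNCTION =
            (text, delimiter) -> text.split(Pattern.quote(delimiter), -1);

    static boolean isTargetDelimiter(List<String> data, String delimiter, int attrCount,
                                     int minLength, int threshold, Random random) {
        // Keep only data meeting the length requirement, then shuffle so that each
        // group is drawn at random and never reused (sampling without replacement).
        List<String> candidates = new ArrayList<>();
        for (String record : data) {
            if (record.length() >= minLength) {
                candidates.add(record);
            }
        }
        Collections.shuffle(candidates, random);

        int consecutiveSuccesses = 0; // the cumulative count of consecutive successes
        for (String group : candidates) {
            if (SPLIT_FUNCTION.apply(group, delimiter).length != attrCount) {
                return false; // assumption: one failed split rejects this delimiter
            }
            consecutiveSuccesses++;
            if (consecutiveSuccesses >= threshold) {
                return true; // preset verification rule satisfied
            }
        }
        return false; // ran out of eligible data before reaching the threshold
    }
}
```

Passing a seeded `Random` makes the sampling order reproducible in tests. The outcome depends on that order only when some eligible records split correctly and others do not.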

Optionally, in this embodiment of the present invention, the setting module 403 includes:

A configuration submodule, configured to add the configuration of the target delimiter to Spark's delimiter configuration file.

Optionally, in this embodiment of the present invention, the apparatus further includes:

A recording module, configured to acquire the source identifier of the data table in the data to be processed, and record the correspondence between the source identifier and the configuration file.

Optionally, in this embodiment of the present invention, the apparatus further includes:

A calling module, configured to acquire, when the data to be processed is read, the identifier of the data table included in the data to be processed; and

call, according to the correspondence, the configuration file corresponding to the identifier.

In the above embodiment of the present invention, when the monitoring module 401 detects that the default delimiter of the Spark application cannot split the data to be processed, Spark's preset delimiter library is obtained; the traversal module 402 traverses the delimiters in the library to obtain the target delimiter matching the data to be processed, i.e., the delimiter actually used in that data; and the setting module 403 sets the target delimiter as Spark's default delimiter. Spark can then recognize and process the data, which improves Spark's generality and its data-processing efficiency.

In another aspect, an embodiment of the present invention further provides an electronic device, including a memory, a processor, a bus, and a computer program stored in the memory and executable on the processor. When the processor executes the program, the steps of the above data processing method are implemented.

As an example, when the electronic device is a server, FIG. 5 illustrates a schematic diagram of the physical structure of the server.

As shown in FIG. 5, the server may include a processor 510, a communications interface 520, a memory 530, and a communication bus 540, where the processor 510, the communications interface 520, and the memory 530 communicate with one another through the communication bus 540. The processor 510 can call logic instructions in the memory 530 to perform the following method:

When monitoring detects that the default delimiter of the Spark application cannot split the data to be processed, obtaining Spark's preset delimiter library;

Traversing the delimiters in the preset delimiter library and obtaining, from among them, a target delimiter that matches the data to be processed, where the target delimiter is a delimiter that successfully splits part of the data to be processed and satisfies a preset verification rule; and

Setting the target delimiter as Spark's default delimiter.

In addition, when the logic instructions in the memory 530 are implemented as software functional units and sold or used as an independent product, they may be stored in a computer-readable storage medium. Based on this understanding, the technical solution of the present invention in essence, or the part of it that contributes to the prior art, or part of the technical solution, may be embodied as a software product. The computer software product is stored in a storage medium and includes several instructions for causing a computer device (a personal computer, a server, a network device, or the like) to perform all or some of the steps of the methods described in the embodiments of the present invention. The storage medium includes any medium that can store program code, such as a USB flash drive, a removable hard disk, a read-only memory (ROM), a random access memory (RAM), a magnetic disk, or an optical disc.

In yet another aspect, an embodiment of the present invention further provides a non-transitory computer-readable storage medium storing a computer program which, when executed by a processor, implements the steps of the above data processing method. Details are not repeated here.

The apparatus embodiments described above are merely illustrative. Units described as separate components may or may not be physically separate, and components shown as units may or may not be physical units; that is, they may be located in one place or distributed across multiple network units. Some or all of the modules may be selected according to actual needs to achieve the objectives of the solution of this embodiment. Those of ordinary skill in the art can understand and implement it without creative effort.

From the description of the above embodiments, those skilled in the art can clearly understand that each embodiment may be implemented by software plus a necessary general-purpose hardware platform, or of course by hardware. Based on this understanding, the above technical solution in essence, or the part that contributes to the prior art, may be embodied as a software product. The computer software product may be stored in a computer-readable storage medium, such as a ROM/RAM, a magnetic disk, or an optical disc, and includes several instructions for causing a computer device (a personal computer, a server, a network device, or the like) to perform the methods described in the embodiments or in certain parts of the embodiments.

Finally, it should be noted that the above embodiments are only intended to illustrate the technical solutions of the present invention, not to limit them. Although the present invention has been described in detail with reference to the foregoing embodiments, those of ordinary skill in the art should understand that the technical solutions described in the foregoing embodiments may still be modified, or some of their technical features may be equivalently replaced, and such modifications or replacements do not cause the essence of the corresponding technical solutions to depart from the spirit and scope of the technical solutions of the embodiments of the present invention.

Claims (9)

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN201910190563.4A (CN109947429B) | 2019-03-13 | 2019-03-13 | Data processing method and device |

Publications (2)

| Publication Number | Publication Date |
|---|---|
| CN109947429A | 2019-06-28 |
| CN109947429B | 2022-07-26 |

Family ID: 67009699

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN109947429B |

Legal Events

| Date | Code | Title | Description |
|---|---|---|---|
| | PB01 | Publication | |
| | SE01 | Entry into force of request for substantive examination | |
| | GR01 | Patent grant | |