CN108181989B - Video data-based gesture control method and device, and computing device - Google Patents


- Publication number

- CN108181989B (application number CN201711477666.6A)

- Authority

- CN

- China

- Prior art keywords

- tracking

- gesture

- detection

- image frame

- area


- Legal status (as listed by Google Patents; an assumption, not a legal conclusion)

- Active


Classifications

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F3/00—Input arrangements for transferring data to be processed into a form capable of being handled by the computer; Output arrangements for transferring data from processing unit to output unit, e.g. interface arrangements

- G06F3/01—Input arrangements or combined input and output arrangements for interaction between user and computer

- G06F3/017—Gesture based interaction, e.g. based on a set of recognized hand gestures

Landscapes

- Engineering & Computer Science (AREA)

- General Engineering & Computer Science (AREA)

- Theoretical Computer Science (AREA)

- Human Computer Interaction (AREA)

- Physics & Mathematics (AREA)

- General Physics & Mathematics (AREA)

- Image Analysis (AREA)

- User Interface Of Digital Computer (AREA)

Description

Technical field

The present invention relates to the field of image processing, and in particular to a video data-based gesture control method and device, and a computing device.

Background art

With the development of science and technology, image acquisition equipment and intelligent control technologies are steadily improving. At present, most intelligent hardware is controlled through preset control rules. For example, a user can set the control rule "automatically turn on the air conditioner at 18:00 every day" for an air conditioner. As another example, the user can set the rule "automatically turn on when the distance between the user's mobile phone and the front door is detected to be less than a preset distance". Such rules allow intelligent hardware to be controlled according to the user's needs, which is convenient for the user.

However, in the course of implementing the present invention, the inventor found that the prior art cannot capture video data of the user, let alone control intelligent hardware according to the user information contained in captured video data. As a result, the control of intelligent hardware is not flexible or intelligent enough.

Summary of the invention

In view of the above problems, the present invention is proposed to provide a video data-based gesture control method and device, and a computing device, that overcome the above problems or at least partially solve them.

According to one aspect of the present invention, a video data-based gesture control method is provided, comprising:

each time the tracking result currently output by a tracker for the video data is obtained, determining, according to the tracking result, the gesture tracking area included in the current tracking image frame;

acquiring, from the detection results already output by a detector for the video data, the detection result with the latest output time, and determining the gesture type included in that detection result;

determining a control rule according to the gesture type included in the detection result with the latest output time and the gesture tracking area included in the current tracking image frame;

sending a control instruction corresponding to the control rule to preset intelligent hardware.
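A minimal Python sketch of these four steps, assuming each detection result carries its output time, a gesture type, and a hand box, and each tracking result carries a box. The class names, the `(x, y, w, h)` box layout, and the `decide_rule`/`send` callbacks are hypothetical stand-ins, not an interface defined by the patent.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x, y, w, h); hypothetical layout


@dataclass
class DetectionResult:
    output_time: float  # when the detector produced this result
    gesture_type: str
    hand_box: Box


@dataclass
class TrackingResult:
    frame_index: int
    gesture_box: Box


def latest_detection(results: List[DetectionResult]) -> Optional[DetectionResult]:
    """Pick the detection result with the latest output time (None if there is none)."""
    return max(results, key=lambda r: r.output_time, default=None)


def on_tracking_result(
    tracking: TrackingResult,
    detections: List[DetectionResult],
    decide_rule: Callable[[str, Box], Optional[str]],
    send: Callable[[str], None],
) -> Optional[str]:
    # Gesture tracking area of the current tracking image frame.
    area = tracking.gesture_box
    # Gesture type from the newest detection, which may lag behind the tracker.
    det = latest_detection(detections)
    if det is None:
        return None  # nothing detected yet, so no gesture type is known
    # Combine gesture type and tracking area into a control rule.
    rule = decide_rule(det.gesture_type, area)
    # Forward the matching instruction to the smart device.
    if rule is not None:
        send(rule)
    return rule
```

Note that the gesture type comes from whichever detection finished last, not necessarily one computed on the current tracking frame.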

Optionally, the step of determining the control rule according to the gesture type included in the detection result with the latest output time and the gesture tracking area included in the current tracking image frame specifically includes:

determining the gesture tracking area included in the previous tracking image frame of the current tracking image frame;

determining a hand motion trajectory according to the gesture tracking area included in the previous tracking image frame and the gesture tracking area included in the current tracking image frame;

determining the corresponding control rule according to the gesture type included in the detection result with the latest output time and the hand motion trajectory.
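One simple way to turn two consecutive gesture tracking areas into a trajectory is to compare their centres. The sketch below assumes `(x, y, w, h)` boxes in image coordinates and reduces the trajectory to a coarse direction; the `min_shift` dead zone and the direction labels are illustrative assumptions, since the patent does not say how the trajectory is represented.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x, y, w, h); hypothetical layout


def center(box: Box) -> Tuple[float, float]:
    x, y, w, h = box
    return (x + w / 2.0, y + h / 2.0)


def motion_direction(prev: Box, curr: Box, min_shift: float = 20.0) -> str:
    """Classify hand motion between two tracking frames by centre displacement.

    min_shift (pixels) is a hypothetical dead zone that filters out jitter.
    """
    (px, py), (cx, cy) = center(prev), center(curr)
    dx, dy = cx - px, cy - py
    if max(abs(dx), abs(dy)) < min_shift:
        return "still"
    if abs(dx) >= abs(dy):
        return "right" if dx > 0 else "left"
    # Image y grows downward, so a positive dy means the hand moved down.
    return "down" if dy > 0 else "up"
```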

Optionally, the step of determining the corresponding control rule according to the gesture type included in the detection result with the latest output time and the hand motion trajectory specifically includes:

querying a preset gesture control library and determining, according to the gesture control library, the control rule corresponding to the gesture type included in the detection result with the latest output time and the hand motion trajectory;

where the gesture control library stores control rules corresponding to various gesture types and/or hand motion trajectories.
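Because the library may key rules on gesture type alone or on gesture type plus trajectory ("and/or"), a lookup can try the more specific key first and fall back to the gesture-only key. This is a sketch assuming a plain dictionary; the entries reuse the example rules from the embodiment (OK turns the air conditioner on, a fist turns it off, a flat palm moved right or left changes speaker volume), while the key format and rule strings are hypothetical.

```python
from typing import Dict, Optional, Tuple

# Hypothetical library: keys are (gesture_type, trajectory); None means "any trajectory".
GESTURE_CONTROL_LIBRARY: Dict[Tuple[str, Optional[str]], str] = {
    ("ok", None): "air_conditioner:power_on",
    ("fist", None): "air_conditioner:power_off",
    ("flat_palm", "right"): "speaker:volume_up",
    ("flat_palm", "left"): "speaker:volume_down",
}


def lookup_rule(gesture_type: str, trajectory: Optional[str]) -> Optional[str]:
    """Prefer a rule keyed on gesture + trajectory, else fall back to gesture alone."""
    rule = GESTURE_CONTROL_LIBRARY.get((gesture_type, trajectory))
    if rule is None:
        rule = GESTURE_CONTROL_LIBRARY.get((gesture_type, None))
    return rule
```

A flat palm that is not moving matches no rule in this table, so no instruction would be sent.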

Optionally, the tracker extracts one frame from the video data every first preset interval as the current tracking image frame, and outputs a tracking result corresponding to the current tracking image frame;

the detector extracts one frame from the video data every second preset interval as the current detection image frame, and outputs a detection result corresponding to the current detection image frame;

where the second preset interval is greater than the first preset interval.
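The effect of the two intervals is that the fast tracker visits frames more often than the slower detector. The sketch below expresses both intervals in frames, which is an assumption (the intervals could equally be times), and simply marks which frames each component processes.

```python
from typing import List, Tuple


def schedule(num_frames: int, track_every: int, detect_every: int) -> List[Tuple[int, bool, bool]]:
    """Return (frame_index, tracked, detected) for each frame.

    track_every / detect_every stand in for the first / second preset intervals,
    measured in frames here (an assumption).
    """
    if not 0 < track_every < detect_every:
        raise ValueError("the second interval must exceed the first")
    return [(i, i % track_every == 0, i % detect_every == 0) for i in range(num_frames)]
```

For example, `schedule(7, 2, 4)` tracks frames 0, 2, 4 and 6 but detects only frames 0 and 4; the tracker fills in the gesture position between detections.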

Optionally, before the step of acquiring the detection result with the latest output time from the detection results already output by the detector for the video data, the method further includes:

determining whether the gesture tracking area included in the current tracking image frame is a valid area;

if so, performing the step of acquiring the detection result with the latest output time from the detection results already output by the detector for the video data, and the subsequent steps.

Optionally, the step of determining whether the gesture tracking area included in the current tracking image frame is a valid area specifically includes:

determining, by a preset hand classifier, whether the gesture tracking area included in the current tracking image frame is a hand area;

if so, determining that the gesture tracking area included in the current tracking image frame is a valid area; if not, determining that it is an invalid area.
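The validity check crops the tracked area and asks the hand classifier whether it contains a hand. In this sketch the frame is a nested list of grayscale pixels and the classifier is any callable returning a hand score in [0, 1]; both, and the 0.5 threshold, are assumptions, since the patent does not specify the classifier.

```python
from typing import Callable, List, Tuple

Box = Tuple[int, int, int, int]  # (x, y, w, h) in pixels; hypothetical layout
Image = List[List[int]]          # grayscale rows; stand-in for a real frame type


def crop(frame: Image, box: Box) -> Image:
    x, y, w, h = box
    return [row[x:x + w] for row in frame[y:y + h]]


def is_valid_area(frame: Image, box: Box,
                  hand_score: Callable[[Image], float],
                  threshold: float = 0.5) -> bool:
    """Return True (valid area) if the hand classifier judges the tracked crop to be a hand."""
    patch = crop(frame, box)
    if not patch or not patch[0]:
        return False  # box fell outside the frame: treat as invalid
    return hand_score(patch) >= threshold
```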

Optionally, when the gesture tracking area included in the current tracking image frame is an invalid area, the method further includes:

acquiring the detection result output by the detector after the tracking result, and determining the hand detection area included in that detection result;

providing the hand detection area included in the detection result output after the tracking result to the tracker, so that the tracker outputs subsequent tracking results according to that hand detection area.

Optionally, when the gesture tracking area included in the current tracking image frame is a valid area, the method further includes:

providing the valid area to the detector, so that the detector outputs subsequent detection results according to the valid area.
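The two branches form a feedback loop between the components: a valid tracking area guides the detector, while an invalid one makes the tracker restart from a fresh detection. A minimal sketch of that routing, with a hypothetical `Feedback` record and box layout:

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Box = Tuple[int, int, int, int]  # hypothetical (x, y, w, h)


@dataclass
class Feedback:
    tracker_seed: Optional[Box] = None  # set when the tracker must restart from a detection
    detector_roi: Optional[Box] = None  # set when the detector may search near the valid area


def route_feedback(tracked_box: Box, valid: bool, later_detection_box: Optional[Box]) -> Feedback:
    """Valid area: hand it to the detector. Invalid area: reseed the tracker from the
    hand detection area of a detection result output after this tracking result."""
    if valid:
        return Feedback(detector_roi=tracked_box)
    return Feedback(tracker_seed=later_detection_box)
```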

Optionally, the step in which the detector outputs subsequent detection results according to the valid area specifically includes:

determining the detection range in the current detection image frame according to the valid area;

predicting, according to the detection range, the detection result corresponding to the current detection image frame by a neural network algorithm;

where the detection result includes a gesture detection area and a gesture type.
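One plausible way to derive the detection range is to enlarge the valid area around its centre and clip it to the frame, so the neural network only processes that region. The enlargement factor of 2 is an assumption meant to leave room for the hand to move between the tracked frame and the later detection frame; the network itself is not shown.

```python
from typing import Tuple

Box = Tuple[int, int, int, int]  # (x, y, w, h); hypothetical layout


def detection_range(valid_area: Box, frame_w: int, frame_h: int, scale: float = 2.0) -> Box:
    """Enlarge the valid tracking area around its centre and clip it to the frame."""
    x, y, w, h = valid_area
    cx, cy = x + w / 2.0, y + h / 2.0
    nw, nh = w * scale, h * scale
    left = max(0, int(cx - nw / 2))
    top = max(0, int(cy - nh / 2))
    right = min(frame_w, int(cx + nw / 2))
    bottom = min(frame_h, int(cy + nh / 2))
    return (left, top, right - left, bottom - top)
```

For a 20×20 area at (40, 40) in a 200×100 frame this yields the 40×40 range at (30, 30); near the frame edge the range is clipped rather than shifted.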

Optionally, before the method is performed, the following steps are further included:

determining the hand detection area included in the detection result already output by the detector;

providing the hand detection area included in the detection result already output by the detector to the tracker, so that the tracker outputs subsequent tracking results according to that hand detection area.

Optionally, the step in which the tracker currently outputs the tracking result corresponding to the video data specifically includes:

the tracker determining whether the gesture tracking area included in the previous tracking image frame of the current tracking image frame is a valid area;

if so, outputting the tracking result corresponding to the current tracking image frame according to the gesture tracking area included in the previous tracking image frame;

if not, outputting the tracking result corresponding to the current tracking image frame according to the hand detection area provided by the detector.
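The tracker's choice of starting point can be written as a small function. When there is no previous tracking area at all, it falls back to the detector's hand area, which also covers the initialization step described just above. The argument names and box layout are hypothetical.

```python
from typing import Optional, Tuple

Box = Tuple[int, int, int, int]  # hypothetical (x, y, w, h)


def tracker_seed(prev_area: Optional[Box], prev_valid: bool,
                 detector_area: Optional[Box]) -> Optional[Box]:
    """Choose where the tracker starts in the current tracking image frame.

    Previous tracking area valid: continue from it.
    Otherwise (including the very first frame): use the detector's hand area.
    Returns None when neither is available, i.e. tracking must wait for a detection.
    """
    if prev_area is not None and prev_valid:
        return prev_area
    return detector_area
```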

According to another aspect of the present invention, a video data-based gesture control device is provided, comprising:

a first determination module, adapted to determine, each time the tracking result currently output by the tracker for the video data is obtained, the gesture tracking area included in the current tracking image frame according to the tracking result;

a second determination module, adapted to acquire, from the detection results already output by the detector for the video data, the detection result with the latest output time, and to determine the gesture type included in that detection result;

a third determination module, adapted to determine a control rule according to the gesture type included in the detection result with the latest output time and the gesture tracking area included in the current tracking image frame;

a sending module, adapted to send a control instruction corresponding to the control rule to the preset intelligent hardware.

Optionally, the third determination module is specifically adapted to:

determine the gesture tracking area included in the previous tracking image frame of the current tracking image frame;

determine a hand motion trajectory according to the gesture tracking area included in the previous tracking image frame and the gesture tracking area included in the current tracking image frame;

determine the corresponding control rule according to the gesture type included in the detection result with the latest output time and the hand motion trajectory.

Optionally, the third determination module is specifically adapted to:

query a preset gesture control library and determine, according to the gesture control library, the control rule corresponding to the gesture type included in the detection result with the latest output time and the hand motion trajectory;

where the gesture control library stores control rules corresponding to various gesture types and/or hand motion trajectories.

Optionally, the tracker extracts one frame from the video data every first preset interval as the current tracking image frame, and outputs a tracking result corresponding to the current tracking image frame;

the detector extracts one frame from the video data every second preset interval as the current detection image frame, and outputs a detection result corresponding to the current detection image frame;

where the second preset interval is greater than the first preset interval.

Optionally, the device further includes a judgment module, adapted to:

determine whether the gesture tracking area included in the current tracking image frame is a valid area;

if so, perform the step of acquiring the detection result with the latest output time from the detection results already output by the detector for the video data, and the subsequent steps.

Optionally, the judgment module is specifically adapted to:

determine, by a preset hand classifier, whether the gesture tracking area included in the current tracking image frame is a hand area;

if so, determine that the gesture tracking area included in the current tracking image frame is a valid area; if not, determine that it is an invalid area.

Optionally, when the gesture tracking area included in the current tracking image frame is an invalid area, the judgment module is further adapted to:

acquire the detection result output by the detector after the tracking result, and determine the hand detection area included in that detection result;

provide the hand detection area included in the detection result output after the tracking result to the tracker, so that the tracker outputs subsequent tracking results according to that hand detection area.

Optionally, when the gesture tracking area included in the current tracking image frame is a valid area, the judgment module is further adapted to:

provide the valid area to the detector, so that the detector outputs subsequent detection results according to the valid area.

Optionally, the judgment module is specifically adapted to:

determine the detection range in the current detection image frame according to the valid area;

predict, according to the detection range, the detection result corresponding to the current detection image frame by a neural network algorithm;

where the detection result includes a gesture detection area and a gesture type.

Optionally, the device further includes:

a fourth determination module, adapted to determine the hand detection area included in the detection result already output by the detector;

a providing module, adapted to provide the hand detection area included in the detection result already output by the detector to the tracker, so that the tracker outputs subsequent tracking results according to that hand detection area.

Optionally, the first determination module is specifically adapted such that:

the tracker determines whether the gesture tracking area included in the previous tracking image frame of the current tracking image frame is a valid area;

if so, the tracking result corresponding to the current tracking image frame is output according to the gesture tracking area included in the previous tracking image frame;

if not, the tracking result corresponding to the current tracking image frame is output according to the hand detection area provided by the detector.

According to yet another aspect of the present invention, a computing device is provided, including a processor, a memory, a communication interface, and a communication bus, where the processor, the memory, and the communication interface communicate with one another through the communication bus;

the memory stores at least one executable instruction, which causes the processor to perform the operations corresponding to the above video data-based gesture control method.

According to still another aspect of the present invention, a computer storage medium is provided, storing at least one executable instruction that causes a processor to perform the operations corresponding to the above video data-based gesture control method.

The video data-based gesture control method and device, and the computing device, provided by the present invention determine the gesture tracking area included in the current tracking image frame according to the tracking result, use the detector to determine the gesture type included in the detection result with the latest output time, determine a control rule according to that gesture type and the gesture tracking area included in the current tracking image frame, and then send a control instruction corresponding to the control rule to the preset intelligent hardware, thereby controlling it. This approach can therefore control intelligent hardware promptly according to the detected gesture type. In short, it captures video data of the user and controls the intelligent hardware according to the user information contained in the captured video data, which makes the control of intelligent hardware flexible and intelligent.

The above description is only an overview of the technical solutions of the present invention. To make the technical means of the present invention clearer so that they can be implemented according to the description, and to make the above and other objects, features, and advantages of the present invention more apparent, specific embodiments of the present invention are given below.

Description of the drawings

Various other advantages and benefits will become apparent to those of ordinary skill in the art upon reading the following detailed description of the preferred embodiments. The drawings only serve to illustrate the preferred embodiments and are not to be regarded as limiting the invention. Throughout the drawings, the same components are denoted by the same reference numerals. In the drawings:

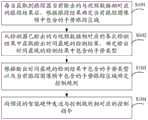

FIG. 1 shows a flowchart of a video data-based gesture control method according to an embodiment of the present invention;

FIG. 2 shows a flowchart of a video data-based gesture control method according to another embodiment of the present invention;

FIG. 3 shows a functional block diagram of a video data-based gesture control device according to an embodiment of the present invention;

FIG. 4 shows a schematic structural diagram of a computing device according to an embodiment of the present invention.

Detailed description

Exemplary embodiments of the present disclosure are described in more detail below with reference to the accompanying drawings. Although the drawings show exemplary embodiments of the present disclosure, it should be understood that the disclosure may be embodied in various forms and should not be limited by the embodiments set forth here. Rather, these embodiments are provided so that the present disclosure will be understood more thoroughly and its scope fully conveyed to those skilled in the art.

FIG. 1 shows a flowchart of a video data-based gesture control method according to an embodiment of the present invention. As shown in FIG. 1, the method specifically includes the following steps:

Step S101: each time the tracking result currently output by the tracker for the video data is obtained, determine the gesture tracking area included in the current tracking image frame according to the tracking result.

Specifically, while the video is playing, one frame of the video data may be acquired for tracking every few frames or every preset time interval, according to the preset frame rate. For example, if 30 frames are played per second, one frame may be acquired for tracking every 2 frames or every 80 milliseconds. Alternatively, every frame of the video may be tracked. The way frames are acquired can be chosen according to the processing speed of the tracker and the desired tracking accuracy: if the tracker is fast, every frame of the video can be tracked to achieve higher accuracy; if the tracker is slow and the accuracy requirement is low, one frame may be acquired for tracking every few frames. Those skilled in the art can choose according to the actual situation, and the options are not enumerated here. After the tracking result currently output by the tracker for the video data is obtained, the gesture tracking area included in the current tracking image frame is determined according to the tracking result. Here, the current tracking image frame is the frame currently acquired for tracking. In this step, the gesture tracking area in the current tracking image frame can be determined based on the previous frame or the previous several frames of the current tracking image frame.
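Sampling by time rather than by frame count does not give a uniform frame stride. The sketch below, assuming frame i is displayed from i/fps seconds onward, lists which frames are picked when sampling once every `interval_ms`; at 30 fps and 80 ms the gaps alternate between 2 and 3 frames.

```python
import math
from typing import List


def sampled_frames(fps: float, duration_s: float, interval_ms: float) -> List[int]:
    """Indices of the frames picked when sampling once every interval_ms."""
    if interval_ms <= 0:
        raise ValueError("interval_ms must be positive")
    n_frames = int(fps * duration_s)
    picked: List[int] = []
    t = 0.0
    while True:
        idx = math.floor(t * fps / 1000.0)  # frame on screen at time t (ms)
        if idx >= n_frames:
            break
        if not picked or idx != picked[-1]:  # skip repeats when interval < frame time
            picked.append(idx)
        t += interval_ms
    return picked
```

`sampled_frames(30, 1.0, 80)` starts `[0, 2, 4, 7, ...]` and picks 13 of the 30 frames in one second; an interval shorter than one frame time (about 33 ms here) degenerates to tracking every frame.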

Step S102: acquire the detection result with the latest output time from the detection results already output by the detector for the video data, and determine the gesture type included in that detection result.

The detection result with the latest output time may correspond to the current tracking image frame being tracked by the tracker, or to one of the tracking image frames preceding it. That is, detection may run in step with tracking, or lag behind it. Once the detection result with the latest output time has been acquired, the gesture type it includes is determined. The gesture type can be any of various gestures, static or dynamic, such as an "OK" gesture made with one hand.
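Because the detector may lag behind the tracker, one common arrangement (assumed here, not prescribed by the patent) is to run detection on its own thread and keep only its newest output in a shared slot that the tracking loop reads without waiting. `LatestResult` is a hypothetical helper built on the standard `threading.Lock`.

```python
import threading
from typing import Generic, Optional, Tuple, TypeVar

T = TypeVar("T")


class LatestResult(Generic[T]):
    """Holds only the most recently output detection result.

    The (slow) detector calls publish(); the (fast) tracking loop calls latest()
    and never blocks on a new detection, so it reuses the newest one, which may
    belong to an earlier frame than the one being tracked.
    """

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._value: Optional[Tuple[int, T]] = None

    def publish(self, frame_index: int, result: T) -> None:
        with self._lock:
            # Each publish is later in time than the previous one,
            # so the newest output simply replaces the old one.
            self._value = (frame_index, result)

    def latest(self) -> Optional[Tuple[int, T]]:
        with self._lock:
            return self._value
```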

Step S103: determine the control rule according to the gesture type included in the detection result with the latest output time and the gesture tracking area included in the current tracking image frame.

Specifically, the control rule may be determined according to the gesture type included in the detection result with the latest output time and the gesture tracking area included in the current tracking image frame. Alternatively, the corresponding rule may be determined according to that gesture type and/or the hand motion trajectory derived from the gesture tracking areas in the current tracking image frame and the previous tracking image frame. A control rule applies the corresponding control to the intelligent hardware according to the gesture type, optionally combined with the hand motion trajectory. For example, an "OK" gesture turns on the air conditioner and a clenched fist turns it off; or a flat palm combined with moving the hand to the right increases the volume of a speaker or mobile phone, while a flat palm combined with moving the hand to the left decreases it. Other types of control rules are also possible and are not described one by one here. Because detection is slower than tracking, not every frame can be detected; this step nevertheless tracks the gesture position in every frame quickly and determines the control rule from the gesture type in the detection result with the latest output time together with the gesture tracking area in the current tracking image frame.

Step S104: send a control instruction corresponding to the control rule to the preset intelligent hardware.

After the control rule is determined, a control instruction corresponding to it is sent to the preset intelligent hardware. The intelligent hardware may be an air conditioner, a speaker, a refrigerator, or other intelligent hardware.

The video data-based gesture control method provided by this embodiment determines the gesture tracking area included in the current tracking image frame according to the tracking result, uses the detector to determine the gesture type included in the detection result with the latest output time, determines a control rule according to that gesture type and the gesture tracking area included in the current tracking image frame, and then sends a control instruction corresponding to the control rule to the preset intelligent hardware, thereby controlling it. This approach can therefore control intelligent hardware promptly according to the detected gesture type. In short, it captures video data of the user and controls the intelligent hardware according to the user information contained in the captured video data, which makes the control of intelligent hardware flexible and intelligent.

FIG. 2 shows a flowchart of a video-data-based gesture control method according to another embodiment of the present invention. As shown in FIG. 2, the method includes the following steps:

Step S201: determine the hand detection area included in a detection result already output by the detector.

The detection result already output by the detector may be the one for the first frame to be detected. This allows the tracker to be initialized quickly and improves efficiency. Alternatively, it may be the detection result for a single N-th frame to be detected, or the results for the first N consecutive frames, where N is a natural number greater than 1. Combining the results of several frames locates the hand detection area more accurately.
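The patent does not say how the results of N frames are combined. A minimal sketch, assuming boxes are `(x, y, width, height)` tuples and the fusion rule is a plain average (both are illustrative assumptions), could look like this:

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x, y, width, height): assumed box format

def fuse_detections(boxes: List[Box]) -> Box:
    """Average the hand boxes from N detection results into one initial box.

    Plain averaging is an illustrative assumption; the source does not specify
    how multi-frame detection results are combined.
    """
    if not boxes:
        raise ValueError("need at least one detection result")
    n = len(boxes)
    # Average each coordinate (x, y, w, h) independently across the N boxes.
    return tuple(sum(b[i] for b in boxes) / n for i in range(4))
```

With a single box this reduces to the first-frame initialization described above. Averaging more boxes smooths out jitter between individual detections.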

In this embodiment, the detection result for the first frame to be detected is used as the example. This first frame may be the first frame played in the video, the second frame of the video, and so on. The tracker needs a target before it can be initialized. So, when the first frame to be detected is acquired, the detector is used to find the area where the hand is located in that frame. That area is taken as the hand detection area included in the detector's output. The detector may locate the hand by a neural network prediction algorithm or by other methods; the present invention does not limit this.

Step S202: provide the hand detection area included in the detection result already output by the detector to the tracker, so that the tracker outputs subsequent tracking results based on that hand detection area.

The hand detection area included in the detector's output is a relatively accurate estimate of where the hand is. Providing it to the tracker initializes the tracker and gives it a target. The tracker can then output subsequent tracking results based on that area. Because consecutive video frames are coherent, the tracker can use the hand detection area from an already-detected image to locate the hand quickly in later images.
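The patent does not name a tracking algorithm. The sketch below uses simple template matching as a stand-in to show why frame coherence makes tracking cheap. It crops the detector's hand box as a template, then searches only a small window around the previous box in the next frame. The function names, the `radius` parameter, and the sum-of-squared-differences cost are all illustrative assumptions.

```python
from typing import List, Tuple

Frame = List[List[int]]          # grayscale image as rows of pixel values
Box = Tuple[int, int, int, int]  # (x, y, width, height)

def crop(frame: Frame, box: Box) -> Frame:
    """Cut the box region out of the frame (used to build the template)."""
    x, y, w, h = box
    return [row[x:x + w] for row in frame[y:y + h]]

def ssd(a: Frame, b: Frame) -> int:
    """Sum of squared differences between two equally sized patches."""
    return sum((p - q) ** 2 for ra, rb in zip(a, b) for p, q in zip(ra, rb))

def track(frame: Frame, template: Frame, prev_box: Box, radius: int = 4) -> Box:
    """Search a small window around prev_box for the patch most like the template."""
    x0, y0, w, h = prev_box
    height, width = len(frame), len(frame[0])
    best_box, best_cost = prev_box, None
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            x, y = x0 + dx, y0 + dy
            if x < 0 or y < 0 or x + w > width or y + h > height:
                continue  # candidate window falls outside the frame
            cost = ssd(crop(frame, (x, y, w, h)), template)
            if best_cost is None or cost < best_cost:
                best_box, best_cost = (x, y, w, h), cost
    return best_box
```

In use, `template = crop(first_frame, detector_box)` initializes the tracker, and each later frame calls `track(frame, template, last_box)`. The search stays local because the hand moves only slightly between frames.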

Step S203: each time a tracking result corresponding to the video data is obtained from the tracker, determine the gesture tracking area included in the current tracking image frame according to the tracking result.

To improve tracking accuracy and reduce the error rate, the tracker performs a check each time it outputs a tracking result. It checks whether the gesture tracking area in the previous tracking image frame (the one preceding the current tracking image frame) is a valid area. If it is, the tracker outputs the tracking result for the current tracking image frame based on that gesture tracking area. If not, it outputs the result based on the hand detection area provided by the detector. Invalid previous tracking image frames are thus filtered out before the current frame is tracked. This improves tracking accuracy and efficiency and shortens tracking time.
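The rule above is a two-way choice of starting region. A minimal sketch follows; the function name and argument layout are hypothetical.

```python
from typing import Optional, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height)

def seed_region(prev_tracked: Optional[Box], prev_valid: bool, detector_box: Box) -> Box:
    """Pick the region the tracker starts from for the current tracking image frame."""
    if prev_tracked is not None and prev_valid:
        return prev_tracked  # previous gesture tracking area is valid: continue from it
    return detector_box      # otherwise fall back to the detector's hand detection area
```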

In this step, the tracker may extract one frame from the video data at every first preset interval as the current tracking image frame, and output the corresponding tracking result. The current tracking image frame is the frame currently acquired for tracking. The first preset interval may be set according to a preset frame rate, defined by the user, or set in other ways. For example, if 30 frames are captured per second, the first preset interval may be any of the following:

- the duration of 2 frames;
- a fixed 80 milliseconds;
- the interval between two consecutive frames.

Each time a tracking result corresponding to the video data is obtained from the tracker, the gesture tracking area in the current tracking image frame is determined from that result.
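The interval arithmetic in the example above can be checked with a small helper. At 30 frames per second one frame lasts about 33.3 ms, so "every 2 frames" is about 66.7 ms, and the fixed 80 ms option corresponds to 2.4 frames. The helper names are illustrative.

```python
def interval_ms(fps: float, every_n_frames: float) -> float:
    """Duration in milliseconds of every_n_frames frames at the given capture rate."""
    return 1000.0 * every_n_frames / fps

def sampled_indices(total_frames: int, every_n_frames: int) -> list:
    """Indices of the frames picked when sampling every n-th frame, starting at 0."""
    return list(range(0, total_frames, every_n_frames))
```

For example, `interval_ms(30, 2)` gives roughly 66.7, and `sampled_indices(10, 2)` gives `[0, 2, 4, 6, 8]`.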

Step S204: determine whether the gesture tracking area included in the current tracking image frame is a valid area.

When the hand moves very quickly, the tracker may fail to keep up with it or may lock onto the wrong position. The gesture tracking area in the current tracking image frame is then wrong, that is, invalid. Therefore, each time a frame is tracked, its gesture tracking area must be checked for validity.

Specifically, a preset hand classifier may judge whether the gesture tracking area in the currently tracked image frame is a hand area. If the area contains a hand that the classifier recognizes, it is a hand area. If it contains no hand, or only a small part of one that the classifier cannot recognize, it is not a hand area. A hand area is determined to be a valid area; any other area is determined to be an invalid area. The hand classifier may be a binary tree classifier or another kind of hand classifier. It may be obtained by training a hand recognition model on hand feature data and/or non-hand feature data. The data of the gesture tracking area is then fed into the model, and the model's output determines whether the area is a hand area. If step S204 finds that the gesture tracking area is not valid, steps S205 and S206 are performed; otherwise, steps S207 to S2010 are performed.
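The validity check can be sketched as cropping the gesture tracking area and thresholding a classifier score. The classifier interface (patch in, hand probability out) and the 0.5 threshold are assumptions; the patent only requires some trained hand classifier.

```python
from typing import Callable, List, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height)
Frame = List[List[int]]          # grayscale image as rows of pixels
# Hypothetical classifier interface: patch -> probability that it shows a hand
HandClassifier = Callable[[Frame], float]

def is_valid_area(frame: Frame, box: Box, classifier: HandClassifier,
                  threshold: float = 0.5) -> bool:
    """Treat the gesture tracking area as valid only if the classifier sees a hand in it."""
    x, y, w, h = box
    patch = [row[x:x + w] for row in frame[y:y + h]]
    if not patch or not patch[0]:
        return False  # empty crop: the box lies outside the frame
    return classifier(patch) >= threshold
```

Any trained model can be plugged in as `classifier`. A binary tree classifier, as the text suggests, would simply be wrapped to return a score.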

Step S205: acquire the detection result output by the detector after the tracking result, and determine the hand detection area included in it.

Once the gesture tracking area in the current tracking image frame is judged invalid, the detection result output by the detector after the tracking result is acquired. The hand detection area included in that detection result is then determined.

The detector runs in parallel with the tracker. In a concrete implementation, a detection thread may perform the detector's function and a tracking thread may perform the tracker's function.

- The tracking thread extracts one frame from the video data at every first preset interval as the current tracking image frame, and outputs the corresponding tracking result.
- The detection thread extracts one frame at every second preset interval as the current detection image frame, and outputs the corresponding detection result.

The second preset interval is greater than the first, so the tracking thread runs faster than the detection thread. For example, the tracker may take one frame every 2 frames while the detector takes one frame every 10 frames. The tracking thread can therefore follow the moving hand quickly and compensate for the slower detection thread.
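A minimal sketch of the two parallel workers uses Python's standard `threading` module. The frame steps (2 and 10) come from the example above. The workers only record which frame indices they would process; the real tracking and detection work is left out as a placeholder.

```python
import threading
from typing import Dict, List

def run_parallel(num_frames: int, track_every: int = 2,
                 detect_every: int = 10) -> Dict[str, List[int]]:
    """Run a tracking thread and a detection thread over the same frame indices."""
    processed: Dict[str, List[int]] = {"track": [], "detect": []}
    lock = threading.Lock()

    def worker(name: str, step: int) -> None:
        for idx in range(0, num_frames, step):
            # Placeholder for tracking or detection on frame idx.
            with lock:
                processed[name].append(idx)

    threads = [
        threading.Thread(target=worker, args=("track", track_every)),
        threading.Thread(target=worker, args=("detect", detect_every)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait for both workers to finish
    return processed
```

Over 30 frames the tracker visits 15 frames and the detector visits 3 (frames 0, 10, 20). This is the 5:1 ratio that lets tracking bridge the gaps between detections.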

Step S206: provide the hand detection area included in the detection result output after the tracking result to the tracker, so that the tracker outputs subsequent tracking results based on that hand detection area.

Once the gesture tracking area is judged invalid, the detector may immediately supply a hand detection area to the tracker. However, detection is slower than tracking, so a detection result output after the tracking result may not be available yet. There may therefore be some delay before the detector can provide its hand detection area. When that area arrives, it is provided to the tracker to reinitialize it. The tracker then outputs subsequent tracking results based on it, and steps S203 to S2010 are performed.
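The waiting behavior can be sketched as picking the first detection emitted after the invalid tracking result. Returning `None` means the detector has not caught up yet. Representing detections as `(output_time, box)` pairs is an assumption made for illustration.

```python
from typing import List, Optional, Tuple

Box = Tuple[int, int, int, int]   # (x, y, width, height)
Detection = Tuple[float, Box]     # (output_time, hand_box), in emission order

def reinit_box(detections: List[Detection], tracking_time: float) -> Optional[Box]:
    """Return the hand box of the first detection output after tracking_time.

    None means no such detection exists yet, so the tracker has to wait.
    """
    for out_time, box in detections:
        if out_time > tracking_time:
            return box
    return None
```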

Step S207: provide the valid area to the detector, so that the detector outputs subsequent detection results based on the valid area.

The valid area may come from one of two sources. It may be the valid area in the current tracking image frame. It may also be the valid areas in several tracking image frames that lie after the current detection image frame and before the current tracking image frame. Here, the current detection image frame is the frame the detector is currently processing. For example, suppose the tracker is at frame 10 while the detector is at frame 2. The valid area may then be that of frame 10, or those of several frames between frame 2 and frame 10.

In one implementation, the tracker provides the valid area of every tracking image frame it obtains to the detector. Because the detector runs at a lower frequency than the tracker, it can use the valid areas of several tracking image frames when processing the current detection image frame. By analyzing the motion trend and/or speed of those valid areas, it can locate the hand detection area more precisely. In another implementation, the tracker selects one frame out of every M consecutive tracking image frames and provides that frame's valid area to the detector. M is a natural number greater than 1, determined from the tracking frequency and the detection frequency. For example, if the tracker tracks once every 2 frames and the detector detects once every 10 frames, M may be 5.

Specifically, the detector determines a detection range in the current detection image frame according to the valid area. Within that range, it predicts the detection result for the current detection image frame by a neural network algorithm. The detection result includes a gesture detection area and a gesture type. The detection range may be the same as the valid area, larger than it, or even smaller than it; those skilled in the art can choose its size according to the actual situation. Within this range, the neural network algorithm can predict the detection result relatively accurately. The detection range covers only a local area of the whole image. Providing the valid area to the detector therefore speeds up detection, improves efficiency, and shortens the delay.
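A small sketch can illustrate the two calculations above: choosing M from the two frequencies, and deriving the detection range from the valid area. The scale factor (1.5 by default) and the center-scaling rule are assumptions. The text allows a range that is equal (scale 1.0), larger (scale > 1), or smaller (scale < 1) than the valid area.

```python
from typing import Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height)

def frames_per_handoff(track_every: int, detect_every: int) -> int:
    """M: tracking frames per detection, e.g. 10 // 2 = 5 (at least 1)."""
    return max(1, detect_every // track_every)

def detection_range(valid: Box, frame_w: int, frame_h: int, scale: float = 1.5) -> Box:
    """Scale the valid area about its center by `scale` and clip it to the frame."""
    x, y, w, h = valid
    cx, cy = x + w / 2, y + h / 2
    nw, nh = w * scale, h * scale
    left = max(0, int(round(cx - nw / 2)))
    top = max(0, int(round(cy - nh / 2)))
    right = min(frame_w, int(round(cx + nw / 2)))
    bottom = min(frame_h, int(round(cy + nh / 2)))
    return (left, top, right - left, bottom - top)
```

For example, a 20×20 valid area at (40, 40) becomes a 30×30 range at (35, 35). Only that crop would be passed to the detection network.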

Step S208: from the detection results corresponding to the video data that the detector has already output, obtain the one with the latest output time, and determine the gesture type it contains.

Specifically, as described in step S203, the tracker extracts one frame from the video data at every first preset interval as the current tracking image frame and outputs the corresponding tracking result. The detector likewise extracts one frame at every second preset interval as the current detection image frame and outputs the corresponding detection result. The second preset interval is greater than the first. It may be set according to a preset frame rate, defined by the user, or set in other ways. For example, at 30 frames per second, if the first preset interval is 2 frames, the second may be 10 frames; other values are also possible. The tracking and detection threads work simultaneously, but tracking is faster. Suppose the gesture changes little but the hand's position changes. The detector may then fail to locate the hand in time, whereas the tracker can locate it quickly. The image can thus be processed promptly according to the detected gesture.

After the valid area has been provided to the detector, step S208 obtains the detection result with the latest output time and determines its gesture type. In the course of making the invention, the inventors found that video frame rates are high, so a hand gesture usually stays the same over several consecutive frames. In this embodiment, the gesture type in the latest detection result (the detector's most recent output) is therefore taken as the gesture type of the area the tracker is following. This exploits both the tracker's speed and the detector's accuracy; the tracker alone may not determine the specific gesture type in time. For example, suppose the tracker has reached frame 8 and the detector has just output the result for frame 5. The gesture type in frame 5 is then taken directly as the gesture type in frame 8.
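The fusion step pairs the most recent detector label with the tracker's current box. The sketch below follows the frame 8 / frame 5 example. The `DetectionResult` record and the gesture labels are illustrative assumptions, not structures named in the patent.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height)

@dataclass
class DetectionResult:
    output_time: float  # when the detector emitted this result
    frame_index: int    # which frame the result was computed on
    gesture_type: str   # e.g. "ok", "fist", "palm" (illustrative labels)

def current_gesture(detections: List[DetectionResult],
                    tracked_box: Box) -> Optional[Tuple[str, Box]]:
    """Pair the latest-output gesture type with the current tracking frame's box."""
    if not detections:
        return None  # nothing detected yet
    latest = max(detections, key=lambda d: d.output_time)
    return latest.gesture_type, tracked_box
```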

Step S209: determine the control rule according to the gesture type included in the detection result with the latest output time and the gesture tracking area included in the current tracking image frame.

Optionally, the control rule may be determined from the movement of the hand as well as from the gesture type. This requires determining the hand motion trajectory. Combining static gestures with hand movements allows the intelligent hardware to be controlled in more varied and more precise ways. The procedure is as follows:

1. Determine the gesture tracking area in the previous tracking image frame(s) of the current tracking image frame; there may be one or several such frames.
2. Determine the hand motion trajectory from those areas and the gesture tracking area in the current tracking image frame.
3. Determine the control rule from the gesture type in the detection result with the latest output time and the hand motion trajectory.

For step 3, a preset gesture control library may be queried. The library stores control rules corresponding to various gesture types and/or hand motion trajectories. A control rule controls the intelligent hardware according to the gesture type alone, or together with the hand motion trajectory. For example, an "OK" gesture may turn the air conditioner on and a clenched fist may turn it off. Likewise, a flat palm moving right may turn up the volume of a speaker or mobile phone, and a flat palm moving left may turn it down. Other types of control rules are also possible and are not listed here.
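A minimal sketch of the trajectory-plus-library lookup follows. It takes the trajectory from the centers of the tracked boxes (oldest first) and reduces it to a net left/right/none direction. The 10-pixel threshold, the library keys, and the rule names are hypothetical and only mirror the examples in the text.

```python
from typing import Dict, List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height)

def center(box: Box) -> Tuple[float, float]:
    x, y, w, h = box
    return (x + w / 2, y + h / 2)

def motion_direction(boxes: List[Box], min_shift: float = 10.0) -> str:
    """Classify the net horizontal motion across the tracked boxes (oldest first)."""
    if len(boxes) < 2:
        return "none"
    dx = center(boxes[-1])[0] - center(boxes[0])[0]
    if dx >= min_shift:
        return "right"
    if dx <= -min_shift:
        return "left"
    return "none"

# Hypothetical gesture control library: (gesture type, motion) -> control rule
GESTURE_LIBRARY: Dict[Tuple[str, str], str] = {
    ("ok", "none"): "air_conditioner_on",
    ("fist", "none"): "air_conditioner_off",
    ("palm", "right"): "volume_up",
    ("palm", "left"): "volume_down",
}

def lookup_rule(gesture: str, boxes: List[Box]) -> Optional[str]:
    """Return the control rule for this gesture and trajectory, or None if unmapped."""
    return GESTURE_LIBRARY.get((gesture, motion_direction(boxes)))
```

Keying the library on (gesture, motion) pairs keeps static gestures and movement gestures in one table. A static gesture is simply the "none" motion entry.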

Step S2010: send a control instruction corresponding to the control rule to the preset intelligent hardware.

After the control rule is determined, a control instruction corresponding to it is sent to the preset intelligent hardware. The hardware may be an air conditioner, a speaker, a refrigerator, or other intelligent hardware. The control instruction may be, for example, an instruction to turn off the air conditioner or to adjust the volume.

In the method of this embodiment, the hand detection area in a detection result already output by the detector is determined first and provided to the tracker. This initializes the tracker and gives it a target to track. Next, the gesture tracking area in the current tracking image frame is determined from the tracking result and checked for validity.

- If it is not valid, the detection result output by the detector after the tracking result is acquired. Its hand detection area is provided to the tracker, which is reinitialized and outputs subsequent tracking results from it.
- If it is valid, the detection result with the latest output time is obtained and its gesture type is determined. The control rule is determined from that gesture type and the current gesture tracking area. A corresponding control instruction is then sent to the preset intelligent hardware to control it.

With this method, detection does not have to be run on every frame, which improves efficiency and reduces processing time. Because tracking and detection run simultaneously, gesture-based image processing is more accurate and the error rate is lower. The intelligent hardware can therefore be controlled more accurately and promptly according to the gesture type and the trend of hand motion.

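FIG. 3 shows a functional block diagram of a video-data-based gesture control apparatus according to an embodiment of the present invention. As shown in FIG. 3, the apparatus includes a fourth determining module 301, a providing module 302, a first determining module 303, a judging module 304, a second determining module 305, a third determining module 306, and a sending module 307. The first determining module 303 is adapted to, each time a tracking result corresponding to the video data is obtained from the tracker, determine the gesture tracking area included in the current tracking image frame according to the tracking result;

The second determining module 305 is adapted to obtain, from the detection results corresponding to the video data that the detector has output, the detection result with the latest output time, and to determine the gesture type included in it;

The third determining module 306 is adapted to determine the control rule according to the gesture type included in the detection result with the latest output time and the gesture tracking area included in the current tracking image frame;

The sending module 307 is adapted to send a control instruction corresponding to the control rule to the preset intelligent hardware.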

In another embodiment, the third determining module 306 is specifically adapted to:

determine the gesture tracking area included in the previous tracking image frame corresponding to the current tracking image frame;

determine the hand motion trajectory according to the gesture tracking area included in the previous tracking image frame and the gesture tracking area included in the current tracking image frame;

determine the corresponding control rule according to the gesture type included in the detection result with the latest output time and the hand motion trajectory.

Optionally, the third determining module 306 is specifically adapted to:

query a preset gesture control library and determine, according to the library, the control rule corresponding to the gesture type included in the detection result with the latest output time and the hand motion trajectory;

where the gesture control library stores control rules corresponding to various gesture types and/or hand motion trajectories.

Optionally, the tracker extracts one frame from the video data at every first preset interval as the current tracking image frame, and outputs the tracking result corresponding to the current tracking image frame;

the detector extracts one frame from the video data at every second preset interval as the current detection image frame, and outputs the detection result corresponding to the current detection image frame;

where the second preset interval is greater than the first preset interval.

Optionally, the apparatus further includes a judging module 304, adapted to:

determine whether the gesture tracking area included in the current tracking image frame is a valid area;

if so, perform the step of obtaining the detection result with the latest output time from the detection results corresponding to the video data that the detector has output, together with the subsequent steps.

Optionally, the judging module 304 is specifically adapted to:

Determine, by means of a preset hand classifier, whether the gesture tracking area included in the current tracking image frame is a hand area;

If so, determine that the gesture tracking area included in the current tracking image frame is a valid area; if not, determine that it is an invalid area.
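The validity check can be sketched as a score threshold on the cropped tracking area. The text does not specify the hand classifier. Here it is a caller-supplied callable (`hand_score`) that returns a score in [0, 1].

The following are all assumptions for illustration:

- the 0.5 threshold;
- the grayscale nested-list frame;
- the `(x, y, width, height)` box format.

```python
from typing import Callable, List, Tuple

Box = Tuple[int, int, int, int]   # (x, y, width, height), hypothetical format
Frame = List[List[int]]           # grayscale frame stored as rows of pixels


def crop(frame: Frame, box: Box) -> Frame:
    """Cut the tracking area out of the frame."""
    x, y, w, h = box
    return [row[x:x + w] for row in frame[y:y + h]]


def is_valid_area(frame: Frame, box: Box,
                  hand_score: Callable[[Frame], float],
                  threshold: float = 0.5) -> bool:
    """Return True when the classifier judges the tracking area to be a hand."""
    x, y, w, h = box
    if w <= 0 or h <= 0:
        return False  # a degenerate tracking area can never be a hand area
    return hand_score(crop(frame, box)) >= threshold
```

In practice `hand_score` would wrap a trained binary classifier. Any callable works for exercising the control flow.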

Optionally, when the gesture tracking area included in the current tracking image frame is an invalid area, the judging module 304 is further adapted to:

Acquire the detection result output by the detector after the tracking result, and determine the hand detection area included in that detection result;

Provide the hand detection area included in the detection result output after the tracking result to the tracker, so that the tracker outputs subsequent tracking results according to that hand detection area.
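The recovery path for an invalid tracking area can be sketched as "take the first detection emitted after the failed tracking result and reseed the tracker with its hand area". The `Detection` record and the use of a timestamp to order results are illustrative assumptions. So is the choice of the earliest such detection.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height), hypothetical format


@dataclass
class Detection:
    output_time: float  # when the detector emitted this result
    hand_box: Box       # hand detection area in the detection frame


def reinit_box_after(detections: List[Detection],
                     tracking_time: float) -> Optional[Box]:
    """Return the hand area of the first detection output after tracking_time."""
    later = [d for d in detections if d.output_time > tracking_time]
    if not later:
        return None  # nothing newer yet; wait for the detector
    return min(later, key=lambda d: d.output_time).hand_box
```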

Optionally, when the gesture tracking area included in the current tracking image frame is a valid area, the judging module 304 is further adapted to:

Provide the valid area to the detector, so that the detector outputs subsequent detection results according to the valid area.

Optionally, the judging module 304 is specifically adapted to:

Determine the detection range in the current detection image frame according to the valid area;

Predict, according to the detection range and by means of a neural network algorithm, the detection result corresponding to the current detection image frame;

The detection result includes a gesture detection area and a gesture type.
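One way to derive a detection range from the valid area is to enlarge the area around its center and clip it to the frame. The neural network then only needs to examine that region. The expansion factor `scale`, the box format and both helper names are hypothetical. The patent does not say how the range is computed, and the network itself is not shown.

```python
from typing import Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height), hypothetical format


def detection_range(valid_box: Box, frame_w: int, frame_h: int,
                    scale: float = 2.0) -> Box:
    """Enlarge the valid area by `scale` around its center, clipped to the frame."""
    x, y, w, h = valid_box
    cx, cy = x + w / 2.0, y + h / 2.0
    nw, nh = w * scale, h * scale
    x0 = max(0, int(round(cx - nw / 2)))
    y0 = max(0, int(round(cy - nh / 2)))
    x1 = min(frame_w, int(round(cx + nw / 2)))
    y1 = min(frame_h, int(round(cy + nh / 2)))
    return (x0, y0, x1 - x0, y1 - y0)


def to_frame_coords(box_in_range: Box, det_range: Box) -> Box:
    """Map a gesture detection area predicted inside the range back to frame coordinates."""
    bx, by, bw, bh = box_in_range
    rx, ry, _, _ = det_range
    return (bx + rx, by + ry, bw, bh)
```

Restricting detection to this range is what makes the tracker's valid area useful to the detector. The network sees a smaller crop centered on the last known hand position.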

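Optionally, the apparatus further includes:

A fourth determining module 301, adapted to determine the hand detection area included in the detection results already output by the detector;

A providing module 302, adapted to provide the hand detection area included in the detection results already output by the detector to the tracker, so that the tracker outputs subsequent tracking results according to that hand detection area.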

Optionally, the first determining module 303 is specifically adapted to:

The tracker determines whether the gesture tracking area included in the previous tracking image frame corresponding to the current tracking image frame is a valid area;

If so, output the tracking result corresponding to the current tracking image frame according to the gesture tracking area included in the previous tracking image frame;

If not, output the tracking result corresponding to the current tracking image frame according to the hand detection area provided by the detector.
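The tracker's choice of starting area reduces to a simple branch. It uses the previous tracking area when that area was valid, and otherwise falls back to the hand detection area supplied by the detector.

This is a hypothetical helper that only shows the selection logic. The tracking algorithm that would consume the seed is not specified in the text and is not shown.

```python
from typing import Optional, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height), hypothetical format


def tracking_seed(prev_box: Optional[Box], prev_valid: bool,
                  detector_box: Optional[Box]) -> Tuple[Optional[Box], str]:
    """Pick the area the tracker starts from for the current tracking frame.

    Returns the chosen box and a label naming its source.
    """
    if prev_valid and prev_box is not None:
        return prev_box, "previous_tracking_frame"
    # Previous area was invalid or missing: restart from the detector's hand area.
    return detector_box, "detector"
```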

For the specific working principles of the above modules, reference may be made to the descriptions of the corresponding steps in the method embodiments; they are not repeated here.

FIG. 4 shows a schematic structural diagram of a computing device according to an embodiment of the present invention; the specific embodiments of the present invention do not limit the specific implementation of the computing device.

As shown in FIG. 4, the computing device may include a processor 402, a communications interface 404, a memory 406, and a communication bus 408.

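Where:

The processor 402, the communications interface 404, and the memory 406 communicate with one another through the communication bus 408.

The communications interface 404 is used to communicate with network elements of other devices, such as clients or other servers.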

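The processor 402 is configured to execute a program 410, and may specifically perform the relevant steps in the above embodiments of the video data-based gesture control method.

Specifically, the program 410 may include program code, and the program code includes computer operation instructions.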

The processor 402 may be a central processing unit (CPU), an application-specific integrated circuit (ASIC), or one or more integrated circuits configured to implement the embodiments of the present invention. The one or more processors included in the computing device may be of the same type, such as one or more CPUs, or of different types, such as one or more CPUs and one or more ASICs.

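The memory 406 is used to store the program 410. The memory 406 may include high-speed RAM, and may also include non-volatile memory, such as at least one disk storage device.

The program 410 may specifically be used to cause the processor 402 to perform the following operations: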

Each time the tracking result corresponding to the video data currently output by the tracker is obtained, determine the gesture tracking area included in the current tracking image frame according to the tracking result;

Obtain, from the detection results corresponding to the video data that the detector has output, the detection result with the latest output time, and determine the gesture type included in it;

Determine a control rule according to the gesture type included in the detection result with the latest output time and the gesture tracking area included in the current tracking image frame;

Send a control instruction corresponding to the control rule to the preset intelligent hardware.
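The four operations above can be tied together in a per-tracking-result handler. This is a minimal sketch under stated assumptions:

- results carry an `output_time` used to find the latest detection;
- rule determination is a caller-supplied function (`determine_rule`);
- sending the instruction to the intelligent hardware is a caller-supplied callback (`send_instruction`).

All names are hypothetical. No real device API is implied.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height), hypothetical format


@dataclass
class TrackingResult:
    output_time: float
    gesture_box: Box  # gesture tracking area in the current tracking frame


@dataclass
class DetectionResult:
    output_time: float
    gesture_type: str


def on_tracking_result(
    tracking: TrackingResult,
    detections: List[DetectionResult],
    determine_rule: Callable[[str, Box], Optional[str]],
    send_instruction: Callable[[str], None],
) -> Optional[str]:
    """Handle one tracking result; return the control rule applied, if any."""
    # Step 1: gesture tracking area of the current tracking image frame.
    gesture_area = tracking.gesture_box
    if not detections:
        return None  # the detector has not produced any result yet
    # Step 2: the detection result with the latest output time supplies the gesture type.
    latest = max(detections, key=lambda d: d.output_time)
    # Step 3: control rule from the gesture type and the tracking area.
    rule = determine_rule(latest.gesture_type, gesture_area)
    # Step 4: send the corresponding control instruction.
    if rule is not None:
        send_instruction(rule)
    return rule
```

Because the detector runs less often than the tracker, the handler reuses the most recent gesture type for every new tracking result. The position comes from the faster tracker.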

In an optional manner, the program 410 may be further used to cause the processor 402 to perform the following operations:

Determine the gesture tracking area included in the previous tracking image frame corresponding to the current tracking image frame;

Determine the hand motion trajectory according to the gesture tracking area included in the previous tracking image frame and the gesture tracking area included in the current tracking image frame;

Determine the corresponding control rule according to the gesture type included in the detection result with the latest output time and the hand motion trajectory.

In an optional manner, the program 410 may be further used to cause the processor 402 to perform the following operations:

Query a preset gesture control library, and determine, according to the gesture control library, the control rule corresponding to the gesture type included in the detection result with the latest output time and the hand motion trajectory;

The gesture control library is used to store control rules corresponding to various gesture types and/or hand motion trajectories.

In an optional manner, the program 410 may be further used to cause the processor 402 to perform the following operations: the tracker extracts one image frame from the video data at every first preset interval as the current tracking image frame, and outputs a tracking result corresponding to the current tracking image frame;

The detector extracts one image frame from the video data at every second preset interval as the current detection image frame, and outputs a detection result corresponding to the current detection image frame;

The second preset interval is greater than the first preset interval.

In an optional manner, the program 410 may be further used to cause the processor 402 to perform the following operations:

Determine whether the gesture tracking area included in the current tracking image frame is a valid area;

If so, perform the step of obtaining, from the detection results corresponding to the video data that the detector has output, the detection result with the latest output time, together with the subsequent steps.

In an optional manner, the program 410 may be further used to cause the processor 402 to perform the following operations:

Determine, by means of a preset hand classifier, whether the gesture tracking area included in the current tracking image frame is a hand area;

If so, determine that the gesture tracking area included in the current tracking image frame is a valid area; if not, determine that it is an invalid area.

In an optional manner, the program 410 may be further used to cause the processor 402 to perform the following operations:

Acquire the detection result output by the detector after the tracking result, and determine the hand detection area included in that detection result;

Provide the hand detection area included in the detection result output after the tracking result to the tracker, so that the tracker outputs subsequent tracking results according to that hand detection area.

In an optional manner, the program 410 may be further used to cause the processor 402 to perform the following operations:

Provide the valid area to the detector, so that the detector outputs subsequent detection results according to the valid area.

In an optional manner, the program 410 may be further used to cause the processor 402 to perform the following operations:

Determine the detection range in the current detection image frame according to the valid area;

Predict, according to the detection range and by means of a neural network algorithm, the detection result corresponding to the current detection image frame;

The detection result includes a gesture detection area and a gesture type.

In an optional manner, the program 410 may be further used to cause the processor 402 to perform the following operations:

Determine the hand detection area included in the detection results already output by the detector;

Provide the hand detection area included in the detection results already output by the detector to the tracker, so that the tracker outputs subsequent tracking results according to that hand detection area.

In an optional manner, the program 410 may be further used to cause the processor 402 to perform the following operations:

The tracker determines whether the gesture tracking area included in the previous tracking image frame corresponding to the current tracking image frame is a valid area;

If so, output the tracking result corresponding to the current tracking image frame according to the gesture tracking area included in the previous tracking image frame;

If not, output the tracking result corresponding to the current tracking image frame according to the hand detection area provided by the detector.

The algorithms and displays provided herein are not inherently related to any particular computer, virtual system, or other device. Various general-purpose systems may also be used with the teachings herein. The structure required to construct such systems is apparent from the above description. Furthermore, the present invention is not directed to any particular programming language. It should be understood that the invention described herein may be implemented in various programming languages, and that the above descriptions of a specific language are given to disclose the best mode of carrying out the invention.

Numerous specific details are set forth in the description provided herein. It will be understood, however, that embodiments of the invention may be practiced without these specific details. In some instances, well-known methods, structures, and techniques have not been shown in detail so as not to obscure the understanding of this description.

Similarly, it should be understood that, in order to streamline the disclosure and aid in understanding one or more of the various inventive aspects, various features of the invention are sometimes grouped together into a single embodiment, figure, or description thereof in the above description of exemplary embodiments of the invention. However, this method of disclosure should not be interpreted as reflecting an intention that the claimed invention requires more features than are expressly recited in each claim. Rather, as the following claims reflect, inventive aspects lie in less than all features of a single foregoing disclosed embodiment. Thus, the claims following the detailed description are hereby expressly incorporated into this detailed description, with each claim standing on its own as a separate embodiment of the invention.