CN103268153A - Human-computer interaction system and interaction method based on computer vision in demonstration environment - Google Patents


- Publication number

- CN103268153A (application CN2013102123622)

- Authority

- CN

- China

- Prior art keywords

- gesture

- human body

- human

- effect display

- display module


- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Granted

Landscapes

- User Interface Of Digital Computer (AREA)

Description

Translated from Chinese

Technical field

The present invention belongs to the field of human-computer interaction and, specifically, relates to a computer-vision-based human-computer interaction system and interaction method for a demonstration environment.

Background

A demonstration is a form of presentation in which content is shown on a screen or by projection and introduced through a talk. A demonstration environment is the environment in which a demonstration takes place. With the rapid spread of video capture devices, vision-based user behavior analysis and natural interaction technology are becoming increasingly important and can be widely applied in smart TVs, motion-sensing interaction, teaching demonstrations, and similar settings. A typical scenario: the demonstration systems in common use today rely on control devices such as keyboards, mice, and wireless remote controls, which still limit how natural and convenient human-computer interaction can be. A more natural way of interacting would clearly be attractive and of practical value. Computer-vision-based human-computer interaction can address these problems; however, existing interaction of this kind generally requires the controller to face the screen and to keep the body away from objects behind it. Such spatial requirements are clearly unsuitable for a presenter in a demonstration environment. In addition, to avoid gesture misrecognition, the controller's body movements, especially those of the hands, are heavily constrained.

Summary of the invention

Technical problem: The technical problem to be solved by the present invention is to provide a computer-vision-based human-computer interaction system for a demonstration environment. The system recognizes the presenter's typical gestures by visual means and applies them to the demonstration, so that, from the standpoint of visual recognition, it supports natural interaction between the presenter and the demonstration equipment. On the one hand, the presenter can face the audience while presenting the content; on the other hand, transition effects between displayed content and other animation effects can be determined dynamically by the presenter's gestures, which reduces the time spent preparing display effects in advance and improves the presenter's efficiency. The invention also provides an interaction method for this system; the method is simple and controls the switching of demonstration content through the presenter's gestures.

Technical solution: To solve the above technical problem, the present invention adopts the following technical solution:

A computer-vision-based human-computer interaction system for a demonstration environment comprises a demonstration screen, a computer, a visual sensor, and a human body. The human body and the demonstration screen are located within the field of view of the visual sensor; the human body is located in front of the demonstration screen; the back or the side of the human body faces the demonstration screen; and the computer contains a gesture recognition module and an effect display module.

The gesture recognition module controls the connection and disconnection between the computer and the visual sensor, obtains data from the visual sensor, analyzes the received data, generates the corresponding gesture control instruction, and passes the gesture control instruction to the effect display module.

The effect display module creates and draws the graphical interface, reads in or draws the content the user presents, provides the set of gesture instructions from which the user can choose, accepts the gesture control instructions sent by the gesture recognition module, and displays the demonstration content corresponding to each gesture control instruction.

The effect display module in the computer draws the demonstration content on the demonstration screen shown to the audience. The visual sensor captures the information within its field of view as visual information and transmits it to the gesture recognition module of the computer. The gesture recognition module receives and analyzes the visual information and generates the corresponding gesture control instruction, which it passes to the effect display module. The effect display module then switches the demonstration content on the demonstration screen according to the received gesture control instruction.

Further, the effect display module also accepts control instructions entered through computer accessories and displays the demonstration content corresponding to those instructions.

The interaction method of the above computer-vision-based human-computer interaction system for a demonstration environment comprises the following steps:

Step 1: Define the standard motion patterns of the human gesture control actions, and store each standard motion pattern together with its recognition criteria in the gesture recognition module. Assign a gesture instruction to the standard motion pattern of each gesture control action, compile each gesture instruction and its corresponding demonstration effect into an instruction-effect lookup table, and store that table in the effect display module.

Step 2: Install the system. Install the visual sensor so that the human body and the demonstration screen are within its field of view. Connect the signal output of the visual sensor to the signal input of the computer's gesture recognition module, the signal output of the gesture recognition module to the signal input of the effect display module, and the signal output of the effect display module to the signal input of the demonstration screen. The demonstration screen displays the demonstration content and the transition animations.

Step 3: Human-computer interaction. The human body makes a gesture in front of the demonstration screen. The visual sensor captures the information within its field of view and passes it to the gesture recognition module, which continuously analyzes that information and determines whether the gesture it contains belongs to the standard motion patterns of the gesture control actions defined in Step 1. If it does, the gesture recognition module converts the information into a gesture instruction and passes it to the effect display module, which changes the content on the demonstration screen according to the instruction-effect lookup table. If it does not, the gesture recognition module may or may not record the result, and it either does not communicate with the effect display module or notifies it that there is currently no valid gesture; in both cases the effect display module performs no operation on the demonstration content.

Beneficial effects: Compared with the prior art, the present invention has the following beneficial effects:

(1) Transition effects between displayed content and other animation effects are determined dynamically by the presenter's gestures, which reduces the time spent preparing display effects in advance and improves the presenter's efficiency. In the prior art, a presentation setup usually consists of a presenter, a screen, and a computer. During the talk the presenter has to go to the computer to switch the demonstration content, or rely on an extra accessory such as a remote control. Moreover, the content to switch to and the manner of switching are set in advance and cannot be changed dynamically to suit the talk. The human-computer interaction system of the present invention comprises a demonstration screen, a computer, a visual sensor, and a human body; the human body is the presenter. The human body is located in front of the demonstration screen, with its back or side facing the screen. The visual sensor captures the information within its field of view as visual information and transmits it to the gesture recognition module, which receives and analyzes the visual information and generates the corresponding gesture control instruction. The effect display module switches the demonstration content on the demonstration screen according to the received gesture control instruction. In this way the presenter switches the content on the demonstration screen through gesture control actions while speaking, without interrupting the talk. The interaction system of the present invention thus improves the presenter's efficiency.

(2) The invention helps keep the talk coherent. In the interaction system of the present invention, the presenter does not need to stop speaking to switch content; a gesture control action is enough to switch what is shown on the demonstration screen, so the presenter switches content while continuing to speak. In the system of the present invention, the spatial relationship between the human body and the devices suits a demonstration environment, and the control gestures do not restrict the body language a presenter needs for a normal talk. Taking into account the audience present in a demonstration environment, the invention keeps the transition effect of the demonstration content consistent with the gesture, so that the audience perceives the presenter's content changes as fluid, which helps the presenter deliver a better presentation.

(3) The presenter faces the audience while presenting. In prior-art human-computer interaction, the human body usually has to face the screen in order to interact. With the system and method of the present invention, the presenter faces the audience while speaking and can continue to face the audience while switching demonstration content; the interaction does not require facing the screen. Existing computer-vision-based interaction systems often cannot judge some gestures because they fail to recognize the whole human body. The technical solution of this patent allows the human body to be relatively close to the background. Gestures in the system of the present invention can be performed with the user's palm pressed flat against the screen, which amounts to zero distance from the background.

Description of the drawings

Figure 1 is a flowchart of the interaction method of the present invention.

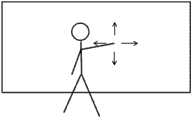

Figure 2 is a schematic diagram of the human gesture in Embodiment 1 of the present invention.

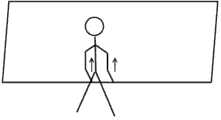

Figure 3 is a schematic diagram of the human gestures in Embodiment 2 of the present invention.

Figure 4 is a schematic diagram of the human gesture in Embodiment 3 of the present invention.

Detailed description

The technical solution of the present invention is described in detail below with reference to the embodiments.

The computer-vision-based human-computer interaction system of the present invention for a demonstration environment comprises a demonstration screen, a computer, a visual sensor, and a human body. The visual sensor is preferably one that provides depth data, such as Microsoft's Kinect for Windows. The field of view of the visual sensor is a conical region with the sensor at its apex, and it covers all or part of the demonstration screen. The human body and the demonstration screen are located within the field of view of the visual sensor; the human body is located in front of the demonstration screen; the back or the side of the human body faces the demonstration screen; and the computer contains a gesture recognition module and an effect display module. The gesture recognition module controls the connection and disconnection between the computer and the visual sensor, obtains data from the visual sensor, analyzes the received data, generates the corresponding gesture control instruction, and passes the gesture control instruction to the effect display module. The effect display module creates and draws the graphical interface, reads in or draws the content the user presents, provides the set of gesture instructions from which the user can choose, accepts the gesture control instructions sent by the gesture recognition module, and displays the demonstration content corresponding to each gesture control instruction. The effect display module draws and displays the demonstration content corresponding to the gesture control instruction, and operates other presentation software, such as Microsoft PowerPoint, according to the control instruction in order to switch the demonstration content.

The system works as follows. The effect display module in the computer draws the demonstration content on the demonstration screen shown to the audience. The visual sensor captures the information within its field of view as visual information and transmits it to the gesture recognition module of the computer. The gesture recognition module receives and analyzes the visual information and generates the corresponding gesture control instruction, which it passes to the effect display module. The effect display module then switches the demonstration content on the demonstration screen according to the received gesture control instruction.
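The data flow between the two modules can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the patent's implementation: the class layout, the `analyze` callback, and the instruction names `next_page` and `previous_page` are hypothetical, and a real analyzer would process sensor frames rather than the dictionaries used here as stand-ins.

```python
from typing import Callable, Dict, List, Optional


class EffectDisplayModule:
    """Holds the demonstration content and reacts to gesture instructions."""

    def __init__(self, pages: List[str]) -> None:
        self.pages = pages
        self.index = 0
        self.received: List[str] = []  # every instruction that reached this module

    def handle(self, instruction: str) -> None:
        self.received.append(instruction)
        # Hypothetical instruction names; the index is clamped to the valid range.
        if instruction == "next_page":
            self.index = min(self.index + 1, len(self.pages) - 1)
        elif instruction == "previous_page":
            self.index = max(self.index - 1, 0)


class GestureRecognitionModule:
    """Analyzes visual information and forwards valid instructions only."""

    def __init__(self, analyze: Callable[[Dict], Optional[str]],
                 display: EffectDisplayModule) -> None:
        self.analyze = analyze
        self.display = display

    def on_visual_information(self, frame: Dict) -> None:
        instruction = self.analyze(frame)
        # No valid gesture: the effect display module is simply not contacted.
        if instruction is not None:
            self.display.handle(instruction)


def stub_analyzer(frame: Dict) -> Optional[str]:
    """Stand-in for real gesture analysis: reads a precomputed label."""
    return frame.get("gesture")
```

Keeping the recognition logic behind a single callback means the display side never needs to know how a gesture was detected, which mirrors the separation of the two modules in the text.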

The above human-computer interaction system may further include a projector, in which case the demonstration screen is a projection screen. The projection screen and the projector together transmit and display the demonstration content. The signal output of the effect display module of the computer is connected to the signal input of the projector, and the output of the projector faces the projection screen.

Of course, the demonstration screen may also simply be a display. In that case the signal input of the display is connected to the signal output of the effect display module of the computer. The display may be of the LED, CRT, or plasma type, among others. The content shown on the demonstration screen is multimedia content comprising one or any combination of text, pictures, video, and music.

Further, the effect display module also accepts control instructions entered through computer accessories and displays the demonstration content corresponding to those instructions. Computer accessories include one or any combination of a keyboard, mouse, pointing stick, touchpad, and remote control. The demonstration content in the interaction system of the present invention can therefore be switched not only by human gestures but also by control instructions entered through computer accessories, which broadens the ways in which content switching can be controlled.

As shown in Figure 1, the interaction method of the above computer-vision-based human-computer interaction system for a demonstration environment comprises the following steps:

Step 1: Define the standard motion patterns of the human gesture control actions, and store each standard motion pattern together with its recognition criteria in the gesture recognition module. Assign a gesture instruction to the standard motion pattern of each gesture control action, compile each gesture instruction and its corresponding demonstration effect into an instruction-effect lookup table, and store that table in the effect display module.

Step 2: Install the system. Install the visual sensor so that the human body and the demonstration screen are within its field of view. Connect the signal output of the visual sensor to the signal input of the computer's gesture recognition module, the signal output of the gesture recognition module to the signal input of the effect display module, and the signal output of the effect display module to the signal input of the demonstration screen. The demonstration screen displays the demonstration content and the transition animations.

Step 3: Human-computer interaction. The human body makes a gesture in front of the demonstration screen. The visual sensor captures the information within its field of view and passes it to the gesture recognition module, which continuously analyzes that information and determines whether the gesture it contains belongs to the motion patterns of the gesture control actions defined in Step 1. If it does, the gesture recognition module converts the information into a gesture instruction and passes it to the effect display module, which changes the content on the demonstration screen according to the instruction-effect lookup table. If it does not, the gesture recognition module may or may not record the result, and it either does not communicate with the effect display module or notifies it that there is currently no valid gesture; in both cases the effect display module performs no operation on the demonstration content.

Further, in Step 1 the motion patterns of the human gesture control actions can be defined in many ways. This patent prefers four motion patterns: move left, move right, move up, and move down. When these four motion patterns are defined, a gesture judgment zone is also defined in front of the demonstration screen, within the field of view of the visual sensor. Accordingly, in the human-computer interaction of Step 3, the gesture recognition module analyzes the information from the visual sensor as follows:

Step 101) Estimate the position of the human hand using a computer vision algorithm.

Step 102) Determine whether the hand is within the gesture judgment zone. Define the region within n meters in front of the demonstration screen as the gesture judgment zone, and measure the distance h (in meters) from the hand to the demonstration screen. If h ≤ n, the hand is inside the gesture judgment zone; proceed to Step 103). If h > n, the hand is outside the zone and the hand movement does not belong to the standard motion patterns of the gesture control actions defined in Step 1. n is preferably 0.05 to 0.5.

Step 103) Set the thresholds for hand movement: D1 upward, D2 downward, D3 leftward, and D4 rightward. Then determine whether the distance the hand's trajectory travels from its start point to its end point in each of the four directions reaches the threshold for that direction. If a threshold is reached, the hand movement belongs to the motion patterns of the gesture control actions defined in Step 1; if no threshold is reached, it does not. If thresholds are reached in two or more directions, the direction whose threshold was reached first is taken as the standard motion pattern defined in Step 1. Preferably, D1 = D2 = 0.15H and D3 = D4 = 0.12H, where H is the height of the human body.
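Steps 102) and 103) can be sketched as two small Python functions. This is an illustrative sketch, not the patented implementation. The function names are hypothetical, the default n = 0.3 is just one value inside the preferred range, the trajectory is assumed to be a list of (x, y) hand positions in meters with x increasing to the right and y increasing upward, and the fixed tie-break order used when two thresholds are reached in the same sample is an assumption, since the text only says that the first threshold reached wins.

```python
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) in meters; x to the right, y upward (assumed)


def in_gesture_zone(h: float, n: float = 0.3) -> bool:
    """Step 102: the hand is inside the zone when its distance h to the
    screen is at most n meters (the text prefers n between 0.05 and 0.5)."""
    return 0.0 <= h <= n


def classify_direction(trajectory: Sequence[Point],
                       body_height: float) -> Optional[str]:
    """Step 103: return the first direction whose threshold is reached,
    or None when no threshold is reached."""
    if len(trajectory) < 2:
        return None
    # Preferred thresholds from the text: D1 = D2 = 0.15 H, D3 = D4 = 0.12 H.
    thresholds = {
        "up": 0.15 * body_height,
        "down": 0.15 * body_height,
        "left": 0.12 * body_height,
        "right": 0.12 * body_height,
    }
    x0, y0 = trajectory[0]
    for x, y in trajectory[1:]:
        dx, dy = x - x0, y - y0
        travelled = {"up": dy, "down": -dy, "left": -dx, "right": dx}
        # Assumed tie-break: if two thresholds are reached in the same sample,
        # the earlier entry in this fixed order wins.
        for direction in ("up", "down", "left", "right"):
            if travelled[direction] >= thresholds[direction]:
                return direction
    return None
```

For a presenter 1.7 m tall, the thresholds work out to about 0.255 m vertically and 0.204 m horizontally, so a sideways sweep of roughly 21 cm is classified as soon as it crosses the horizontal threshold, even if the hand later also rises past the vertical one.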

This patent uses a computer vision algorithm to estimate the position of the human hand. Such algorithms belong to the prior art; one example is the algorithm disclosed in the paper "Real-time human pose recognition in parts from single depth images" (CVPR '11: Proceedings of the 2011 IEEE Conference on Computer Vision and Pattern Recognition, pages 1297-1304). One method of estimating the hand position in Step 101) is described below:

(1011) Run a computer vision algorithm on the visual sensor data to obtain the positions of the joints of the human body, including the hands, and extract the three-dimensional hand position for subsequent analysis and calculation. The algorithm may be an existing computer vision algorithm, such as the one provided in the Kinect SDK for Windows.

(1012) If the algorithm in (1011) cannot compute the hand position from the current data, or the result has low confidence, discard the result of (1011) and go to (1013). Otherwise proceed to Step 102) and determine whether the hand is within the judgment zone.

(1013) Use a computer vision algorithm to separate the human body from the background in the visual sensor data. The algorithm may be an existing computer vision algorithm, such as the one provided in the Kinect SDK for Windows. Analyze the outermost positions on the left and right sides of the human body obtained in (1012), that is, the two outermost points on the left and right in the horizontal direction after interference points have been removed, and take one of them as the hand position (the left one is preferred). Use the spatial position of that point as an approximation of the spatial position of the hand, then proceed to Step 102) and determine whether the hand is within the judgment zone.

The above is only one specific algorithm; those skilled in the art may use other existing algorithms, as long as the position of the human hand can be estimated.
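The fallback logic of (1011) to (1013) can be sketched as follows. This is a hedged illustration only: the `HandEstimate` type, the `min_confidence` cutoff, and the way interference points are removed (dropping the `trim` most extreme points on each side) are assumptions, because the text names neither a confidence value nor a specific outlier filter. "Left" is taken here to mean the smallest x coordinate.

```python
from typing import NamedTuple, Optional, Sequence, Tuple

Point3 = Tuple[float, float, float]  # (x, y, z) in sensor coordinates (assumed)


class HandEstimate(NamedTuple):
    """Hypothetical container for a skeleton tracker's hand joint."""
    position: Point3
    confidence: float


def estimate_hand_position(skeleton_hand: Optional[HandEstimate],
                           body_points: Sequence[Point3],
                           min_confidence: float = 0.5,
                           trim: int = 1,
                           prefer_left: bool = True) -> Optional[Point3]:
    # (1011)/(1012): use the tracked hand joint when it exists and is trusted.
    if skeleton_hand is not None and skeleton_hand.confidence >= min_confidence:
        return skeleton_hand.position
    # (1013): otherwise approximate the hand by the outermost body point.
    if len(body_points) <= 2 * trim:
        return None  # not enough segmented points to trim and still pick one
    ordered = sorted(body_points, key=lambda p: p[0])
    # Assumed outlier removal: drop the `trim` extreme points on each side.
    kept = ordered[trim:len(ordered) - trim]
    return kept[0] if prefer_left else kept[-1]
```

Returning `None` when neither source yields a position lets the caller treat the frame as containing no valid gesture.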

In prior-art human-computer interaction devices, the human body usually has to face the screen in order to interact. In the present invention, by contrast, the back or the side of the human body faces the screen. The presenter does not need to face the screen when switching content by gesture during the talk, which makes it easy to control the switching of on-screen content with gestures while speaking. The human-computer interaction scenario of the present invention is a demonstration environment in which a presenter is addressing an audience. While speaking, the presenter uses a great deal of body language to support the explanation and uses gestures to switch the content on the demonstration screen.

Embodiments are given below.

Embodiment 1: Window opening

As shown in Figure 2, the interaction system and interaction method of the present invention are used to perform a window-opening operation. The standard motion pattern of the window-opening gesture control action is: the presenter extends both hands in a direction parallel to the demonstration screen, keeping a certain vertical spacing between them and holding them 5 to 100 cm from the demonstration screen, and then moves the hands apart, one upward and one downward.

The gesture recognition module continuously computes on and analyzes the information captured by the visual sensor, using a computer vision algorithm to obtain the positions of the hands and the torso. A hand is considered extended when it is d meters away from the torso in the horizontal direction; d is preferably 1. Once the gesture recognition module has recognized three stages in sequence, namely (i) the presenter extends the first hand, (ii) the presenter extends the second hand in the same direction as the first, and (iii) the two hands open upward and downward, it sends the gesture instruction for the window-opening action to the effect display module. The effect display module receives this information and, according to the instruction-effect lookup table, controls the display effect on the demonstration screen: as the hands open upward and downward, the current demonstration content is split along a horizontal line into an upper and a lower part, which move toward the top and bottom of the screen respectively while the demonstration screen reveals the next content. The transition ends when the split content has moved completely to the edges of the demonstration screen.
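The three-stage recognition can be modeled as a small state machine. The sketch below is an assumption-laden illustration rather than the patent's algorithm: the `Frame` layout, the class name, and in particular `open_delta` (how much the vertical gap between the hands must grow before the hands count as opened) are hypothetical, since the text gives no value for the opening distance. Only d, the horizontal offset at which a hand counts as extended, comes from the text.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Frame:
    torso_x: float
    hand_a: Tuple[float, float]  # (x, y) of one hand, meters
    hand_b: Tuple[float, float]  # (x, y) of the other hand, meters


def _side(hand_x: float, torso_x: float, d: float) -> int:
    """0 if the hand is not extended, else +1 or -1 for the side it extends to."""
    offset = hand_x - torso_x
    if abs(offset) < d:
        return 0
    return 1 if offset > 0 else -1


class WindowOpenDetector:
    def __init__(self, d: float = 1.0, open_delta: float = 0.3) -> None:
        self.d = d                    # extension threshold from the text
        self.open_delta = open_delta  # assumed growth of the vertical gap
        self.stage = 0                # 0: idle, 1: one hand out, 2: both hands out
        self.start_gap = 0.0

    def update(self, frame: Frame) -> bool:
        """Feed one frame; returns True when the window-opening gesture completes."""
        side_a = _side(frame.hand_a[0], frame.torso_x, self.d)
        side_b = _side(frame.hand_b[0], frame.torso_x, self.d)
        extended = (side_a != 0) + (side_b != 0)
        both_same_side = side_a != 0 and side_a == side_b
        gap = abs(frame.hand_a[1] - frame.hand_b[1])

        if extended == 0:
            self.stage = 0
        elif self.stage == 0:
            if extended == 1:          # stage (i): first hand extended
                self.stage = 1
        elif self.stage == 1:
            if both_same_side:         # stage (ii): second hand, same direction
                self.stage, self.start_gap = 2, gap
            elif extended == 2:        # second hand went the other way: reset
                self.stage = 0
        elif self.stage == 2:
            if not both_same_side:
                self.stage = 0
            elif gap - self.start_gap >= self.open_delta:  # stage (iii)
                self.stage = 0
                return True
        return False
```

Because the detector resets whenever the stages occur out of order, extending both hands at the same moment or in opposite directions does not trigger the transition.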

Example 2: Up, down, left, and right push-pull actions

The basic control actions used while a presentation is playing are movements in four directions: up, down, left, and right. Each direction corresponds to the direction in which the presentation content moves when it is switched. Up and left switch to the next item of presentation content; right and down switch to the previous item.

As shown in Fig. 3, the up, down, left, and right push-pull actions are performed using the interaction system and interaction method of the present invention. When performing them, the speaker keeps the body as parallel to the screen as possible and at least 20 cm away from it. To switch pages, the speaker places a hand in the gesture judgment area, which lies within 10 cm of the screen, and performs the up, down, left, or right push-pull action.

The standard movement patterns of the gesture control actions are as follows. Move up: the hand enters the gesture judgment area, moves upward, and leaves the area once the movement is complete. Move down: the hand enters the gesture judgment area, moves downward, and leaves the area once the movement is complete. Move left: the hand enters the gesture judgment area, moves to the left, and leaves the area once the movement is complete. Move right: the hand enters the gesture judgment area, moves to the right, and leaves the area once the movement is complete.

The gesture recognition module continuously computes and analyzes the information captured by the vision sensor. When it determines that the gesture contained in this information matches one of the preset movement patterns of the gesture control actions, it passes the corresponding gesture instruction to the effect display module. The effect display module receives the gesture instruction and, according to the instruction-effect lookup table, performs the corresponding switching operation on the content of the presentation screen.
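The hand-off between the two modules can be pictured as a dictionary lookup. The following is a minimal sketch, assuming hypothetical instruction names (`"MOVE_UP"`, `"MUSIC_ON"`, and so on) and a hypothetical `EffectDisplay` class; the patent only states that a lookup table maps each gesture instruction to a display effect.

```python
class EffectDisplay:
    """Toy effect display module driven by an instruction-effect lookup table."""

    def __init__(self, slide_count: int):
        self.slide_count = slide_count
        self.index = 0               # currently shown slide
        self.music_playing = False
        # Instruction-effect lookup table (instruction names are assumptions).
        self.table = {
            "MOVE_UP": self.next_slide,
            "MOVE_LEFT": self.next_slide,
            "MOVE_DOWN": self.previous_slide,
            "MOVE_RIGHT": self.previous_slide,
            "MUSIC_ON": self.music_on,
            "MUSIC_OFF": self.music_off,
        }

    def next_slide(self):
        self.index = min(self.index + 1, self.slide_count - 1)

    def previous_slide(self):
        self.index = max(self.index - 1, 0)

    def music_on(self):
        self.music_playing = True

    def music_off(self):
        self.music_playing = False

    def handle(self, instruction: str) -> bool:
        """Apply the effect for an instruction; return False if it is unknown."""
        effect = self.table.get(instruction)
        if effect is None:
            return False             # no valid gesture: leave the content unchanged
        effect()
        return True
```

Keeping the mapping in one table means a new gesture only needs a new entry, and an unrecognised instruction leaves the presentation untouched.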

The display effect on the presentation screen is as follows: according to the direction of hand movement (an oblique movement is assigned, by its angle, to whichever of the four directions straight up, straight down, straight left, and straight right is closest), the current presentation content moves toward the screen edge in the direction of the hand, while the next presentation content moves in from the opposite side until it covers the entire screen. When the hand pushes up or to the left, the screen switches to the next slide; when the hand pushes down or to the right, it switches to the previous slide.
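Assigning an oblique movement to the nearest of the four directions amounts to dividing the plane into four 90-degree sectors. The sketch below is one way to do it, assuming a displacement `(dx, dy)` with x pointing right and y pointing up; the function name `snap_direction` and the `SWITCH` table are illustrative and not taken from the patent.

```python
import math


def snap_direction(dx: float, dy: float):
    """Return the closest of "up", "down", "left", "right" for a displacement.

    Assumes x grows to the right and y grows upward; returns None when the
    hand has not moved at all.
    """
    if dx == 0 and dy == 0:
        return None
    angle = math.degrees(math.atan2(dy, dx)) % 360   # 0 = right, 90 = up
    if angle < 45 or angle >= 315:
        return "right"
    if angle < 135:
        return "up"
    if angle < 225:
        return "left"
    return "down"


# Up and left advance the presentation; down and right go back.
SWITCH = {"up": "next", "left": "next", "down": "previous", "right": "previous"}
```

A movement at exactly 45 degrees is equally close to two directions; this sketch arbitrarily resolves such ties toward the sector that starts at that angle.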

When placing a hand in the gesture judgment area in front of the screen for a downward action, the speaker should put the hand into the area at its highest point, rather than entering the area first and then raising the hand to the highest point. The next action may be performed only after the current action is finished and the page switch is complete.

Example 3: Music on

As shown in Fig. 4, a music-on operation is performed using the interaction system and interaction method of the present invention.

The standard movement pattern of the music-on gesture control action is as follows: the presenter stands directly in front of the vision sensor with both hands hanging naturally, then slowly raises both hands at the same time, at least 10 cm in front of the chest, up to shoulder level.

The gesture recognition module continuously computes and analyzes the information captured by the vision sensor, using a computer vision algorithm to obtain the positions of the hands and the torso. A hand is regarded as extended when it is offset from the torso by 10 cm in the horizontal direction. Once the module finds that the person has passed in order through three stages, namely both hands hanging naturally, both hands extended in front of the chest and raised together, and both hands at or above shoulder height, it sends the gesture instruction for music on to the effect display module. On receiving the instruction, the effect display module looks it up in the instruction-effect lookup table, finds the music-on effect, and performs the music-on operation. The resulting effect is that a predefined audio file is played.
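The three ordered stages can be checked by labelling each frame and matching the labels against an expected sequence. This is a rough sketch under assumptions: the `Pose` fields, the label names, and the rule that "hanging naturally" means "not extended and below the shoulder" are simplifications introduced here; only the 10 cm extension threshold and the shoulder-height test come from the text.

```python
from dataclasses import dataclass

EXTEND_M = 0.10  # a hand counts as extended at 10 cm from the torso (from the text)


@dataclass
class Pose:
    """Per-frame measurements (hypothetical layout, metres)."""
    hand_forward: tuple[float, float]   # horizontal offset of (left, right) hand from the torso
    hand_height: tuple[float, float]    # height of (left, right) hand
    shoulder_height: float


def classify(p: Pose) -> str:
    """Label a frame as "down", "extended_low", "raised", or "other"."""
    extended = all(f >= EXTEND_M for f in p.hand_forward)
    above = all(h >= p.shoulder_height for h in p.hand_height)
    below = all(h < p.shoulder_height for h in p.hand_height)
    if extended and above:
        return "raised"
    if extended and below:
        return "extended_low"
    if below and not any(f >= EXTEND_M for f in p.hand_forward):
        return "down"                   # simplified stand-in for "hanging naturally"
    return "other"


MUSIC_ON = ("down", "extended_low", "raised")


def matches(poses, stages=MUSIC_ON) -> bool:
    """Return True if the frames pass through `stages` in order."""
    stage = 0                           # number of stages matched so far
    for p in poses:
        label = classify(p)
        if label == stages[stage]:
            stage += 1
            if stage == len(stages):
                return True
        elif stage > 0 and label == stages[stage - 1]:
            continue                    # still holding the current stage
        else:
            stage = 1 if label == stages[0] else 0
    return False
```

Because `matches` takes the stage tuple as a parameter, the same routine could check other ordered gestures by passing a different sequence of labels.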

Example 4: Music stop

The standard movement pattern of the music-stop gesture control action is as follows: the presenter stands directly in front of the vision sensor with both hands at or above shoulder height, then slowly lowers both hands at the same time, at least 10 cm in front of the chest, until they hang naturally.

The gesture recognition module continuously computes and analyzes the information captured by the vision sensor, using a computer vision algorithm to obtain the positions of the hands and the torso. A hand is regarded as extended when it is offset from the torso by 10 cm in the horizontal direction. Once the gesture recognition module recognizes that the person has passed in order through three stages, namely both hands at or above shoulder height, both hands extended in front of the chest and lowered together, and both hands hanging naturally, it sends the gesture instruction for music stop to the effect display module. On receiving the gesture instruction, the effect display module looks it up in the instruction-effect lookup table, finds the music-stop effect, and performs the music-stop operation. The resulting effect is that any audio currently playing is stopped.

Claims (10)

- A computer-vision-based human-computer interaction system in a demonstration environment, characterized in that the system comprises a presentation screen, a computer, a vision sensor, and a human body; the human body and the presentation screen are located within the field of view of the vision sensor; the human body is located in front of the presentation screen, with its back or side facing the presentation screen; and the computer comprises a gesture recognition module and an effect display module. The gesture recognition module is used to control the connection and disconnection between the computer and the vision sensor, to obtain data from the vision sensor, to analyze the received data and generate the corresponding gesture control instruction, and to pass the gesture control instruction to the effect display module. The effect display module is used to create and draw the graphical interface, to read in or draw the content presented by the user, to provide a set of gesture instructions for the user to choose from, to accept the gesture control instructions sent by the gesture recognition module, and to display the presentation content corresponding to each gesture control instruction. The effect display module draws the presentation content on the presentation screen for the audience to watch; the vision sensor captures the information within its field of view as visual information and passes it to the gesture recognition module of the computer; the gesture recognition module receives and analyzes this visual information, generates the corresponding gesture control instruction, and passes it to the effect display module; and the effect display module switches the presentation content on the presentation screen according to the received gesture control instruction.

- The computer-vision-based human-computer interaction system in a demonstration environment according to claim 1, characterized in that it further comprises a projector; the presentation screen is a projection screen; the signal output of the effect display module of the computer is connected to the signal input of the projector; and the output of the projector faces the projection screen.

- The computer-vision-based human-computer interaction system in a demonstration environment according to claim 1, characterized in that the presentation screen is a display screen whose signal input is connected to the signal output of the effect display module of the computer.

- The computer-vision-based human-computer interaction system in a demonstration environment according to claim 1, characterized in that the effect display module is also used to accept control instructions entered through a computer accessory device and to display the presentation content corresponding to such a control instruction.

- The computer-vision-based human-computer interaction system in a demonstration environment according to claim 4, characterized in that the computer accessory device comprises one or any combination of a keyboard, a mouse, a TrackPoint, a touchpad, and a remote control.

- The computer-vision-based human-computer interaction system in a demonstration environment according to claim 1, characterized in that the vision sensor is a vision sensor that provides depth data.

- The computer-vision-based human-computer interaction system in a demonstration environment according to claim 1, characterized in that the presentation content comprises one or any combination of text, pictures, video, and music.

- An interaction method for the computer-vision-based human-computer interaction system in a demonstration environment according to claim 1, characterized in that the interaction method comprises the following steps:
  - Step 1: set the standard movement patterns of the gesture control actions, and store each standard movement pattern and its corresponding discrimination criterion in the gesture recognition module; assign a gesture instruction to the standard movement pattern of each gesture control action, compile each gesture instruction and its corresponding presentation effect into an instruction-effect lookup table, and store the table in the effect display module.
  - Step 2: install the system: install the vision sensor so that the human body and the presentation screen are within its field of view; connect the signal output of the vision sensor to the signal input of the gesture recognition module of the computer, the signal output of the gesture recognition module to the signal input of the effect display module, and the signal output of the effect display module to the signal input of the presentation screen; the presentation screen displays the presentation content and the animation effects of switching.
  - Step 3: human-computer interaction: the human body makes a gesture in front of the presentation screen; the vision sensor captures the information within its field of view and passes it to the gesture recognition module; the gesture recognition module continuously analyzes the information passed by the vision sensor and judges whether the gesture contained in it matches a standard movement pattern of the gesture control actions set in Step 1. If so, the information is converted into a gesture instruction and passed to the effect display module, which receives it and, according to the instruction-effect lookup table, changes the content shown on the presentation screen accordingly. If not, the gesture recognition module may or may not record the result, and either does not communicate with the effect display module or notifies it that there is currently no valid gesture, and the effect display module performs no operation on the presentation content.

- The interaction method for the computer-vision-based human-computer interaction system in a demonstration environment according to claim 8, characterized in that, in Step 1, the movement patterns of the gesture control actions are set to move left, move right, move up, and move down, and a gesture judgment area is set in front of the presentation screen, the gesture judgment area lying within the field of view of the vision sensor.

- The interaction method for the computer-vision-based human-computer interaction system in a demonstration environment according to claim 9, characterized in that, in Step 3, the process by which the gesture recognition module analyzes the information passed by the vision sensor comprises the following steps:
  - Step 101) Compute the position of the hand using a computer vision algorithm.
  - Step 102) Analyze whether the hand is inside the gesture judgment area: the region within n meters in front of the presentation screen is set as the gesture judgment area, and the distance from the hand to the presentation screen is computed as h meters. If h ≤ n, the hand is inside the gesture judgment area and the process proceeds to step 103). If h > n, the hand is outside the gesture judgment area, and the hand action does not match a standard movement pattern of the gesture control actions set in Step 1.
  - Step 103) Set the threshold for upward hand movement to D1, for downward movement to D2, for leftward movement to D3, and for rightward movement to D4, and then judge whether the distance moved by the gesture trajectory, from its start point to its end point, in each of the four directions (up, down, left, and right) reaches the threshold of that direction. If a threshold is reached, the hand action matches a movement pattern of the gesture control actions set in Step 1; if no threshold is reached, it does not. If the thresholds of two or more directions are reached, the direction whose threshold is reached first is taken as the standard movement pattern of the gesture control action set in Step 1.
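Steps 102) and 103) of the last claim can be illustrated with a short routine that walks along the hand trajectory. It is a sketch under assumptions rather than the claimed implementation: the sample layout `(x, y, h)`, the function name `detect_direction`, the choice to restart the trajectory whenever the hand leaves the gesture judgment area, and the order used to break ties within a single sample are all hypothetical.

```python
def detect_direction(track, n, d_up, d_down, d_left, d_right):
    """Return "up", "down", "left", "right", or None for a hand trajectory.

    track: iterable of (x, y, h) samples in metres, with x growing to the
           right, y growing upward, and h the distance from hand to screen.
    n:     depth of the gesture judgment area in front of the screen.
    d_*:   thresholds D1 (up), D2 (down), D3 (left), D4 (right).
    """
    start = None
    for x, y, h in track:
        if h > n:
            start = None            # step 102: hand is outside the judgment area
            continue
        if start is None:
            start = (x, y)          # first sample inside the area is the start point
            continue
        dx, dy = x - start[0], y - start[1]
        # Step 103: compare the displacement with each directional threshold.
        # Samples are visited in time order, so the first threshold reached wins;
        # ties within one sample are resolved in the order listed (an assumption).
        checks = (
            (dy >= d_up, "up"),
            (-dy >= d_down, "down"),
            (-dx >= d_left, "left"),
            (dx >= d_right, "right"),
        )
        for reached, name in checks:
            if reached:
                return name
    return None
```

For example, with a 10 cm judgment area one might call `detect_direction(track, n=0.10, d_up=0.3, d_down=0.3, d_left=0.3, d_right=0.3)`; the 0.3 m thresholds are placeholder values, since the claim leaves D1 to D4 unspecified.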

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN201310212362.2A (CN103268153B) | 2013-05-31 | 2013-05-31 | Human-computer interaction system and interaction method based on computer vision in demonstration environment |

Publications (2)

| Publication Number | Publication Date |
|---|---|
| CN103268153A | 2013-08-28 |
| CN103268153B | 2016-07-06 |

Family

ID=49011788

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |
|---|---|---|---|
| CN201310212362.2A (CN103268153B), Expired - Fee Related | Human-computer interaction system and interaction method based on computer vision in demonstration environment | 2013-05-31 | 2013-05-31 |

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN103268153B (en) |

Legal Events

| Date | Code | Title | Description |
|---|---|---|---|
| | C06 | Publication | |
| | PB01 | Publication | |
| | C10 | Entry into substantive examination | |
| | SE01 | Entry into force of request for substantive examination | |
| | C14 | Grant of patent or utility model | |
| | GR01 | Patent grant | |
| | CF01 | Termination of patent right due to non-payment of annual fee | Granted publication date: 2016-07-06; termination date: 2018-05-31 |