CN102360421B - Face identification method and system based on video streaming - Google Patents


- Publication number: CN102360421B (application CN201110316170.7A)

- Authority: CN (China)

- Prior art keywords: face, image, key, face image, recognized

- Legal status: Expired - Fee Related (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)


Landscapes

- Image Analysis (AREA)

- Image Processing (AREA)


Description

Technical field

The invention relates to the field of simulation technology, and in particular to a face recognition method and system based on a video stream.

Background

Face recognition is a biometric technology that identifies people from their facial features. Compared with other, more mature biometric methods such as fingerprint and DNA testing, it is non-intrusive, low-cost, and highly interactive, and it makes after-the-fact tracking easy, so it has been a research hotspot for many years. Face recognition covers a series of related techniques: cameras or video cameras collect images or video streams containing faces, faces are automatically detected and tracked in the images, and the detected faces then go through face image acquisition, face localization, recognition preprocessing, storage, and comparison, so that different people can be identified.

Video-stream-based face recognition takes face video as input and performs recognition or verification against a database of still face images. A video stream usually carries more information than a single image: it contains multiple frames of the same person, the face images are continuous in time and space, the three-dimensional face structure can be estimated from motion, and high-resolution images can be recovered from low-resolution ones. This extra information also helps prevent spoofing attacks that work against still-image recognition.

Because video-stream-based face recognition can exploit this extra information, it has advantages over traditional still-image face recognition and has attracted researchers working on criminal identification, immigration control, domestic robots, and similar applications.

Summary of the invention

To solve the above technical problems, embodiments of the present invention provide a face recognition method and system based on a video stream. The technical solution is as follows:

A face recognition method based on a video stream, comprising:

receiving a video stream to be recognized, collected by a video capture device;

performing face detection on each frame of the video stream to be recognized, to determine the face images to be recognized;

locating the feature points of each frame of face image to be recognized;

determining key face images among the face images to be recognized according to their color histograms;

determining key feature points among the feature points of each key face image;

preprocessing the determined key face images to reduce the influence of image geometry and illumination on them;

determining the face recognition result for the video stream from a weighted combination of the similarities between the key feature points in each key face image and the corresponding feature points of each face model in a face image database.

Correspondingly, the present invention also provides a face recognition system based on a video stream, comprising:

a video receiving module, configured to receive the video stream to be recognized, collected by a video capture device;

a face detection module, configured to perform face detection on each frame of the video stream to be recognized, to determine the face images to be recognized;

a feature point locating module, configured to locate the feature points of each frame of face image to be recognized;

a key frame determination module, configured to determine key face images among the face images to be recognized according to their color histograms;

a key point determination module, configured to determine key feature points among the feature points of each key face image;

a preprocessing module, configured to preprocess the determined key face images to reduce the influence of image geometry and illumination on them;

a result determination module, configured to determine the face recognition result for the video stream from a weighted combination of the similarities between the key feature points in each key face image and the corresponding feature points of each face model in the face image database.

In the technical solution provided by the embodiments of the present invention, face detection and feature point localization are first performed on the video stream collected by the video capture device. The color histograms of the face images are then used to select key face images, which are preprocessed to reduce the influence of geometry and illumination, and their key feature points are determined. Finally, the recognition result is determined from the weighted similarities between the key feature points and each face model in the face image database. By combining color-histogram-based key frame detection, key feature point selection, and similarity weighting, the solution aims to eliminate the recognition bias caused by the video capture environment and to perform face recognition quickly and effectively.

附图说明Description of drawings

为了更清楚地说明本发明实施例或现有技术中的技术方案,下面将对实施例或现有技术描述中所需要使用的附图作简单的介绍,显而易见地,下面描述中的附图仅仅是本发明的一些实施例,对于本领域普通技术人员来讲,在不付出创造性劳动性的前提下,还可以根据这些附图获得其他的附图。In order to more clearly illustrate the technical solutions in the embodiments of the present invention or the prior art, the following will briefly introduce the drawings that need to be used in the description of the embodiments or the prior art. Obviously, the accompanying drawings in the following description are only These are some embodiments of the present invention. For those skilled in the art, other drawings can also be obtained according to these drawings without any creative effort.

图1为本发明实施例所提供的一种基于视频流的人脸识别方法的第一种流程图;Fig. 1 is the first flow chart of a kind of face recognition method based on video stream provided by the embodiment of the present invention;

图2为本发明实施例所提供的一种基于视频流的人脸识别方法的第二种流程图;FIG. 2 is a second flow chart of a video stream-based face recognition method provided by an embodiment of the present invention;

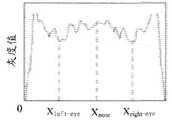

图3为本发明实施例中对人脸垂直积分投影的示意图;Fig. 3 is the schematic diagram to the face vertical integral projection in the embodiment of the present invention;

图4为本发明实施例中对人脸水平积分投影的示意图;Fig. 4 is the schematic diagram of integral projection to face level in the embodiment of the present invention;

图5为本发明实施例中对人脸垂直积分投影的第二种示意图;Fig. 5 is the second schematic diagram of the vertical integral projection of the face in the embodiment of the present invention;

图6为本发明实施例中图像旋转示意图;Fig. 6 is a schematic diagram of image rotation in an embodiment of the present invention;

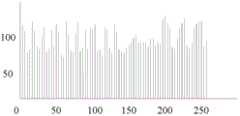

图7为本发明实施例中关键人脸图像均衡化前的直方图示意图;Fig. 7 is a schematic diagram of a histogram before equalization of a key face image in an embodiment of the present invention;

图8为本发明实施例中对关键人脸图像均衡化后的直方图示意图;FIG. 8 is a schematic diagram of a histogram after equalization of a key face image in an embodiment of the present invention;

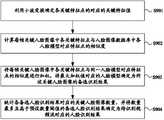

图9为本发明实施例所提供的一种基于视频流的人脸识别方法第三种流程图;FIG. 9 is a third flow chart of a video stream-based face recognition method provided by an embodiment of the present invention;

图10为本发明实施例所提供的一种基于视频流的人脸识别系统的结构示意图。FIG. 10 is a schematic structural diagram of a video stream-based face recognition system provided by an embodiment of the present invention.

Detailed description of the embodiments

Embodiments of the present invention provide a face recognition method and system based on a video stream, which perform fast and effective face recognition using video streams collected in ordinary application scenarios. The face recognition method is introduced first.


The technical solutions in the embodiments of the present invention are described clearly and completely below with reference to the accompanying drawings. The described embodiments are only some, not all, of the embodiments of the present invention. All other embodiments obtained by a person of ordinary skill in the art based on these embodiments without creative effort fall within the protection scope of the present invention.

As shown in Fig. 1, a face recognition method based on a video stream may include:

S101: receive the video stream to be recognized, collected by a video capture device.

Video capture devices generally include video cameras, video recorders, LD video players, and the like. They are installed at specific positions in the areas where video needs to be captured. When the collected video stream needs to be analyzed, the device is simply connected to the corresponding analysis and processing equipment.

S102: perform face detection on each frame of the video stream to be recognized, to determine the face images to be recognized.

A given frame of the received video stream may contain no face, a face that occupies only a small part of the frame, several unrelated faces, a complete face, and so on. In other words, not every frame carries usable information, so face detection is run on every frame, and only frames that contain a complete face occupying a sufficient proportion of the image are extracted for subsequent recognition.

As shown in Fig. 2, performing face detection on each frame of the video stream to be recognized may specifically include:

S201: convert each frame of the video stream to grayscale and apply histogram equalization;

S202: perform multi-scale, multi-feature face detection on each processed frame;

S203: merge, across features, the detection results obtained at each scale of each frame;

S204: merge, across scales, the multi-feature merged results of each frame;

S205: take the multi-scale merged results that satisfy a preset face-scale threshold as the face images to be recognized.

In this process, after each frame of the video stream has been converted to grayscale and histogram-equalized, multi-scale, multi-feature face detection is performed on each frame to obtain detection results for different scales and different features. "Multi-scale, multi-feature" means that each frame is examined at every scale, and at each scale detection is carried out with several different features. The detection results are then merged across features and across scales to remove discrepancies between individual results, so that each frame ends up with a single merged result. If the merged result contains too little face information, the frame is not suitable for face recognition; therefore, only multi-scale merged results that satisfy a preset face-scale threshold are taken as face images to be recognized. The face-scale threshold can be set according to the actual situation and is not limited here.
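Of the detection steps, only S201 is specified in enough detail to sketch. The NumPy sketch below assumes 8-bit RGB frames, ITU-R BT.601 luma weights for the gray conversion, and textbook global equalization through the cumulative histogram; the patent does not say which variants it uses, and the multi-scale, multi-feature detector of S202 to S205 is not sketched.

```python
import numpy as np


def to_grayscale(rgb: np.ndarray) -> np.ndarray:
    """Convert an H x W x 3 uint8 RGB frame to 8-bit gray (BT.601 luma weights, an assumption)."""
    weights = np.array([0.299, 0.587, 0.114])
    gray = rgb[..., :3].astype(np.float64) @ weights
    return np.clip(np.round(gray), 0, 255).astype(np.uint8)


def equalize_histogram(gray: np.ndarray) -> np.ndarray:
    """Global histogram equalization of an 8-bit gray image via its cumulative histogram."""
    hist = np.bincount(gray.ravel(), minlength=256)
    cdf = np.cumsum(hist)
    cdf_min = cdf[np.nonzero(cdf)[0][0]]  # cumulative count at the darkest occupied level
    total = gray.size
    if total == cdf_min:  # a single gray level: nothing to stretch
        return gray.copy()
    # Map each level so that the occupied range spreads over 0..255.
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[gray]
```

In a pipeline, each decoded frame would pass through `to_grayscale` and then `equalize_histogram` before being handed to the face detector.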

S103: locate the feature points of each frame of face image to be recognized.

A face consists of the eyes, nose, mouth, chin, and other parts, and differences in the shape, size, and arrangement of these parts are what make faces distinguishable. Accurately locating these organs is therefore a crucial step in the whole recognition process. Because the eyes are among the most prominent facial features, once they are located precisely, the other organs such as the eyebrows, mouth, and nose can be located fairly accurately from their typical spatial relationship to the eyes.

In this embodiment, the feature points of the face image to be recognized can be located from the peaks and troughs produced by different integral projections. Integral projection comes in two forms, vertical and horizontal. Let $f(x, y)$ denote the gray value of the image at $(x, y)$. Over the region $[x_1, x_2] \times [y_1, y_2]$, the horizontal integral projection $M_h(y)$ and the vertical integral projection $M_v(x)$ are:

$$M_h(y) = \sum_{x=x_1}^{x_2} f(x, y)$$

$$M_v(x) = \sum_{y=y_1}^{y_2} f(x, y)$$

That is, the horizontal integral projection sums the gray values of all pixels in a row, and the vertical integral projection sums the gray values of all pixels in a column; the resulting curves are then plotted.
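A direct NumPy transcription of the two projections follows. It assumes the image is stored as a 2-D array indexed `f[y, x]` (row `y`, column `x`) and that the region bounds are inclusive; both are conventions chosen for the sketch.

```python
import numpy as np


def horizontal_projection(f: np.ndarray, x1: int, x2: int, y1: int, y2: int) -> np.ndarray:
    """M_h(y): sum of f(x, y) over x in [x1, x2], one value per row y in [y1, y2]."""
    region = f[y1:y2 + 1, x1:x2 + 1].astype(np.int64)  # rows are y, columns are x
    return region.sum(axis=1)


def vertical_projection(f: np.ndarray, x1: int, x2: int, y1: int, y2: int) -> np.ndarray:
    """M_v(x): sum of f(x, y) over y in [y1, y2], one value per column x in [x1, x2]."""
    region = f[y1:y2 + 1, x1:x2 + 1].astype(np.int64)
    return region.sum(axis=0)
```

Casting to `int64` before summing avoids overflow when an 8-bit image is accumulated over many pixels.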

Before locating the feature points, the image contrast can be increased so that the projection curves show clear gray-level changes; for example, the image can be binarized with an adaptive threshold method before the integral projections are computed.
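The text does not say which adaptive threshold method is meant. As one common choice, the sketch below compares each pixel with the mean of its `block` × `block` neighbourhood, computed with an integral image; `block` and `c` are hypothetical parameters. Pixels clearly darker than their surroundings (eyes, brows, mouth) become 0 and the rest 255.

```python
import numpy as np


def adaptive_threshold(gray: np.ndarray, block: int = 15, c: float = 5.0) -> np.ndarray:
    """Mean-based adaptive binarization: 255 if pixel > local mean - c, else 0."""
    if block % 2 != 1:
        raise ValueError("block must be odd")
    img = gray.astype(np.float64)
    r = block // 2
    padded = np.pad(img, r, mode="edge")  # replicate borders so every window is full
    # Integral image with a leading zero row/column: ii[a, b] = sum(padded[:a, :b]).
    ii = np.zeros((padded.shape[0] + 1, padded.shape[1] + 1))
    ii[1:, 1:] = padded.cumsum(axis=0).cumsum(axis=1)
    h, w = img.shape
    # Sum of the block x block window centred on each original pixel.
    window_sum = (ii[block:block + h, block:block + w]
                  - ii[0:h, block:block + w]
                  - ii[block:block + h, 0:w]
                  + ii[0:h, 0:w])
    local_mean = window_sum / (block * block)
    return np.where(img > local_mean - c, 255, 0).astype(np.uint8)
```

The integral image makes the cost independent of the window size, which suits the per-frame processing of a video stream.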

For example, Fig. 3(a), Fig. 3(b), and Fig. 3(c) show vertical integral projections of face images. The curves show that the gray level changes sharply at the left and right boundaries of the face, which correspond to the two large troughs on either side of each curve. It is therefore sufficient to locate these two trough points $x_1$ and $x_2$ and crop the region $[x_1, x_2]$ along the horizontal axis from the face image to be recognized; this locates the left and right boundaries of the face. After the boundaries are located, the face image is binarized and its horizontal and vertical integral projections are computed; the results are shown in Fig. 4 and Fig. 5, respectively. From prior knowledge of face images, the eyebrows and eyes are dark regions lying close together, and they correspond to the first two minima of the horizontal integral projection curve.

As shown in Fig. 4, the first minimum gives the vertical position of the eyebrows, denoted $y_{brow}$; the second gives the vertical position of the eyes, $y_{eye}$; the third gives the vertical position of the nose, $y_{nose}$; and the fourth gives the vertical position of the mouth, $y_{mouth}$. Similarly, as shown in Fig. 5, two minima appear on either side of the central symmetry axis of the face image; they give the horizontal positions of the left and right eyes, denoted $x_{left\text{-}eye}$ and $x_{right\text{-}eye}$. The eyebrows share the horizontal positions of the eyes, and the mouth and nose are located horizontally at $(x_{left\text{-}eye} + x_{right\text{-}eye})/2$.
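The minimum-picking rules above can be sketched as follows. This illustration rests on assumptions the patent does not state: the input is a binarized face already cropped to $[x_1, x_2]$, with dark features at 0 and background at 255; a "minimum" is a strict local minimum (plateaus are ignored); and when several minima fall on one side of the symmetry axis, the deepest one is taken as the eye.

```python
import numpy as np


def local_minima(curve) -> list:
    """Indices i where curve[i] is strictly lower than both neighbours."""
    c = np.asarray(curve, dtype=np.float64)
    idx = np.where((c[1:-1] < c[:-2]) & (c[1:-1] < c[2:]))[0] + 1
    return idx.tolist()


def locate_features(binary_face: np.ndarray) -> dict:
    """Rough feature coordinates from the projection minima of a binarized face."""
    mh = binary_face.sum(axis=1)  # horizontal projection: one value per row y
    mv = binary_face.sum(axis=0)  # vertical projection: one value per column x
    rows = local_minima(mh)
    if len(rows) < 4:
        raise ValueError("fewer than four row minima; localization failed")
    y_brow, y_eye, y_nose, y_mouth = rows[:4]  # top-to-bottom order from the text
    centre = binary_face.shape[1] // 2  # assumed central symmetry axis
    cols = local_minima(mv)
    left = [x for x in cols if x < centre]
    right = [x for x in cols if x > centre]
    if not left or not right:
        raise ValueError("no eye minimum on one side of the symmetry axis")
    x_left = min(left, key=lambda x: mv[x])  # deepest minimum on each side
    x_right = min(right, key=lambda x: mv[x])
    return {
        "y_brow": y_brow, "y_eye": y_eye, "y_nose": y_nose, "y_mouth": y_mouth,
        "x_left_eye": x_left, "x_right_eye": x_right,  # eyebrows share these x values
        "x_mouth_nose": (x_left + x_right) / 2,
    }
```

Real face images produce noisy curves, so a practical version would usually smooth the projections before searching for minima.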

The way the feature points are located is not limited to the method described in this embodiment.

S104: determine key face images among the face images to be recognized according to their color histograms.

In the video to be recognized, the same person appears in many frames, and one frame may contain many people. Pose, illumination changes, frontal versus profile views, scale changes, expression changes, and similar factors make image quality vary from frame to frame. To improve recognition accuracy and efficiency, a subset of higher-quality images is selected for subsequent recognition; these selected frames are defined as key face images. A video used for face recognition usually contains many frames, and processing every face image would inevitably reduce the system's efficiency and real-time performance. Moreover, face video contains many redundant frames, including frames with excessive rotation or uneven illumination; locating features in such frames is very difficult and may fail altogether, which directly lowers the overall recognition rate of the system.

In this embodiment, the key frame images among the face images to be recognized are determined from their color histograms.

A color histogram describes how often each pixel color occurs in an image and serves as an estimate of the color probability distribution. Given a digital image $I$, its color histogram vector $H$ can be expressed as:

$$H = \left(h[c_1], h[c_2], \ldots, h[c_N]\right)$$

where $h[c_k]$ is the probability that the $k$-th color occurs in the image:

$$h[c_k] = \frac{1}{N_1 N_2} \sum_{i=0}^{N_1-1} \sum_{j=0}^{N_2-1} \begin{cases} 1, & I(i, j) = c_k \\ 0, & \text{otherwise} \end{cases}$$

Here the image has $N_1$ rows and $N_2$ columns, and $I(i, j)$ is the pixel value at point $(i, j)$.
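A sketch of the histogram computation follows. Counting every 24-bit color separately would give about 16.7 million bins, so the code assumes (this is not from the patent) that each channel is quantized into `bins_per_channel` levels, giving $N$ = `bins_per_channel`³ colors for an RGB image; $h[c_k]$ is then the fraction of the $N_1 N_2$ pixels that fall into color $c_k$.

```python
import numpy as np


def color_histogram(image: np.ndarray, bins_per_channel: int = 8) -> np.ndarray:
    """Normalized color histogram H = (h[c_1], ..., h[c_N]), N = bins_per_channel ** channels."""
    img = np.asarray(image)
    if img.ndim == 2:  # gray image: treat as a single channel
        img = img[..., None]
    n1, n2, channels = img.shape
    # Quantize each 8-bit channel to 0 .. bins_per_channel - 1.
    q = (img.astype(np.int64) * bins_per_channel) // 256
    # Combine the per-channel levels into a single color index c_k.
    codes = np.zeros((n1, n2), dtype=np.int64)
    for ch in range(channels):
        codes = codes * bins_per_channel + q[..., ch]
    counts = np.bincount(codes.ravel(), minlength=bins_per_channel ** channels)
    return counts / (n1 * n2)  # each entry is the fraction of pixels with that color
```

Coarser quantization makes the histogram less sensitive to small lighting changes, at the cost of discriminating fewer colors.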

The color histogram captures the global color information of an image, and every image has a corresponding color histogram. Let $G$ and $H$ be the color histogram vectors of the two images to be compared, let $N$ be the number of color levels in the images, and let $g_k$ and $h_k$ be the frequencies of the $k$-th color level in the histograms $G$ and $H$, respectively. The similarity of the two images can then be measured by the Euclidean distance between their color histograms, a smaller distance meaning more similar images:

$$d(G, H) = \sqrt{\sum_{k=1}^{N} \left(g_k - h_k\right)^2} \qquad (5)$$

As an important visual feature, the color histogram can effectively distinguish objects of different structures, sizes, and shapes, and color-based comparison is simple and fast, which matters especially for systems with real-time requirements. On this basis, the present invention proposes a color-histogram-based key frame extraction algorithm for face video: before feature extraction and recognition, face key frames are detected so that redundant frames are not processed, which greatly improves system performance. The specific process is as follows:

Select a good-quality frame in the face video sequence as the standard face image, and compute its color histogram $H$ as the standard face color histogram;

Obtain the face images to be recognized from the face video sequence frame by frame, compute the color histogram $G_i$ of each, and compute its Euclidean distance to the standard histogram using formula (5);

Set a distance threshold $T$ ($0 \le T \le 1$). All face images to be recognized whose Euclidean distance is less than $T$ are taken as key face images, and those whose distance is greater than $T$ are non-key face images. The value of $T$ controls the number and quality of the selected key face images.
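The three steps above can be sketched as follows, assuming the histograms are already normalized vectors of equal length; the comparison uses a strict "<" as in the text. How the standard frame is chosen is left to the caller, since the patent only says it should be of good quality.

```python
import numpy as np


def histogram_distance(g, h) -> float:
    """Euclidean distance between two color-histogram vectors, formula (5)."""
    g = np.asarray(g, dtype=np.float64)
    h = np.asarray(h, dtype=np.float64)
    return float(np.sqrt(np.sum((g - h) ** 2)))


def select_key_frames(frame_histograms, standard_histogram, threshold: float) -> list:
    """Indices of frames whose distance to the standard histogram is below T."""
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold T must lie in [0, 1]")
    return [
        i for i, g in enumerate(frame_histograms)
        if histogram_distance(g, standard_histogram) < threshold
    ]
```

Lowering `threshold` keeps fewer but more standard-looking frames, which is the quantity/quality trade-off the text attributes to $T$.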

Selecting key face images from the face images to be recognized before subsequent recognition effectively improves processing efficiency and makes the system more practical.

S105: determine key feature points among the feature points of each key face image.

Feature points on different parts of the face differ in their value for face recognition; not all feature points are equally discriminative. For example, experimental results show that feature points on the eyebrows and mouth discriminate better than those on the eyes, nose, and facial contour. It is therefore necessary to determine the key feature points among the feature points of each key face image in order to recognize faces effectively.
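The method's final step combines per-point similarities by weighting, but this section gives neither the weights nor the similarity measure. The sketch below is hypothetical throughout: the weight values only mirror the ordering reported above (eyebrows and mouth above eyes, nose, and contour), similarity is taken as 1 / (1 + Euclidean distance) between feature descriptors, and frame scores are averaged before the best-scoring face model is chosen.

```python
import numpy as np

# Hypothetical weights: the source only reports that eyebrow and mouth points are more
# discriminative than eye, nose and contour points; these numbers are illustrative.
WEIGHTS = {"brow": 0.3, "mouth": 0.3, "eye": 0.2, "nose": 0.1, "contour": 0.1}


def point_similarity(p, q) -> float:
    """Hypothetical similarity in (0, 1]: 1 / (1 + Euclidean distance between descriptors)."""
    diff = np.asarray(p, dtype=np.float64) - np.asarray(q, dtype=np.float64)
    return 1.0 / (1.0 + float(np.linalg.norm(diff)))


def recognise(key_frames, models, weights=WEIGHTS):
    """Pick the face model with the highest weighted similarity averaged over key frames.

    key_frames: list of {point_name: descriptor}, one dict per key face image.
    models: {person_id: {point_name: descriptor}} from the face image database.
    """
    if not key_frames:
        raise ValueError("no key face images to compare")
    scores = {}
    for person, model in models.items():
        total = 0.0
        for frame in key_frames:
            # Weighted sum over the key feature points present in both frame and model.
            total += sum(
                w * point_similarity(frame[name], model[name])
                for name, w in weights.items()
                if name in frame and name in model
            )
        scores[person] = total / len(key_frames)
    best = max(scores, key=scores.get)
    return best, scores
```

Averaging over key frames lets a single poor frame pull the score down only partially, which is one plausible reading of using several key frames per video.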

S106: preprocess the determined key face images to reduce the influence of image geometry and illumination on them.

Geometric normalization is applied to the determined key frames: the face image is translated, rotated, and filtered to reduce the recognition bias caused by image geometry and to facilitate feature extraction and recognition. The geometric normalization of a key face image can proceed as follows:

Assume that the coordinates of the two eyes in the key face image are $E_l(x_l, y_l)$ and $E_r(x_r, y_r)$, that the distance between the eye centers is $d$, and that the angle between the line through the two eyes and the x-axis is $\theta$; $\theta$ is the angle by which the face is rotated.
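The quantities $d$ and $\theta$ follow directly from the two eye coordinates. A minimal sketch, assuming the eye positions are plain `(x, y)` tuples in image coordinates; `math.atan2` is used so that the angle keeps its sign whether the right eye is higher or lower than the left.

```python
import math


def eye_geometry(left_eye, right_eye):
    """Return (d, theta): inter-eye distance and angle (radians) of the eye line vs the x-axis."""
    (xl, yl), (xr, yr) = left_eye, right_eye
    d = math.hypot(xr - xl, yr - yl)  # Euclidean distance between eye centers
    theta = math.atan2(yr - yl, xr - xl)  # signed angle of the eye line
    return d, theta
```

For eyes at (0, 0) and (3, 4), this gives d = 5.0 and θ = atan2(4, 3), about 0.927 rad.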

According to prior knowledge of facial proportions, the center position $C(x_c, y_c)$ of the face is:

The face is then rotated about $C$ by the angle $\theta$. The rotated coordinates $(x', y')$ are computed as:

$$
\begin{aligned}
x' &= x_c + \cos\theta\,(x - x_c) + \sin\theta\,(y - y_c) \\
y' &= y_c - \sin\theta\,(x - x_c) + \cos\theta\,(y - y_c)
\end{aligned}
\tag{9}
$$

A schematic diagram of key-frame image rotation is shown in Figure 6.
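The rotation step can be sketched on landmark coordinates as follows. This is a minimal Python illustration, not the patent's implementation, and it rests on several assumptions:

- The helper names are hypothetical.
- $\theta$ is taken as `atan2(y_r - y_l, x_r - x_l)`.
- The rotation center is assumed to be the midpoint between the eyes, because the prior-knowledge formula for $C(x_c, y_c)$ is not reproduced in this text.

The mapping uses the signs of a proper rotation by $-\theta$, which brings both eyes onto the same horizontal line.

```python
import math


def eye_axis_angle(left_eye, right_eye):
    """Angle theta between the line through the eyes and the x-axis."""
    (xl, yl), (xr, yr) = left_eye, right_eye
    return math.atan2(yr - yl, xr - xl)


def rotate_point(x, y, center, theta):
    """Rotate (x, y) about `center` by -theta, so a line at angle theta becomes horizontal."""
    xc, yc = center
    c, s = math.cos(theta), math.sin(theta)
    x_new = xc + c * (x - xc) + s * (y - yc)
    y_new = yc - s * (x - xc) + c * (y - yc)
    return x_new, y_new


def align_eyes(left_eye, right_eye):
    """Return the rotated eye positions and the inter-eye distance d."""
    theta = eye_axis_angle(left_eye, right_eye)
    # Assumption: the eye midpoint stands in for the face center C.
    center = ((left_eye[0] + right_eye[0]) / 2, (left_eye[1] + right_eye[1]) / 2)
    new_left = rotate_point(left_eye[0], left_eye[1], center, theta)
    new_right = rotate_point(right_eye[0], right_eye[1], center, theta)
    d = math.dist(left_eye, right_eye)  # a rotation preserves this distance
    return new_left, new_right, d


new_left, new_right, d = align_eyes((30, 40), (70, 60))
print(new_left, new_right, round(d, 3))
```

To rotate a whole image rather than points, the same mapping would typically be inverted and evaluated for every output pixel, with the source value obtained by interpolation.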

It can be understood that rotated images may differ in size, so the face images must also be normalized to a uniform size. Size normalization is usually implemented by scaling, and the specific process can be as follows:

The pixel coordinates are transformed so that the pixels of the input image are mapped to the output image. Let the scaling ratio along the x-axis be $R_x$ and along the y-axis be $R_y$. In homogeneous coordinates, the transformation matrix for image scaling is:

$$
\begin{bmatrix} x' \\ y' \\ 1 \end{bmatrix}
=
\begin{bmatrix} R_x & 0 & 0 \\ 0 & R_y & 0 \\ 0 & 0 & 1 \end{bmatrix}
\begin{bmatrix} x \\ y \\ 1 \end{bmatrix}
$$

When an image is enlarged, a pixel in the output image may have no corresponding pixel in the source image, so its value must be interpolated. Common interpolation methods include nearest-neighbor, bilinear, and bicubic interpolation:

- Nearest-neighbor interpolation assigns each output pixel the gray value of the closest source pixel. It is simple and very cheap to compute, but the transformed gray levels show obvious discontinuities.
- Bicubic interpolation is highly accurate but computationally expensive.
- Bilinear interpolation is therefore commonly used as a compromise.

Suppose the points $(x_0, y_0)$, $(x_0, y_1)$, $(x_1, y_0)$, and $(x_1, y_1)$ are the four vertices of a rectangular region in the image, and the point $(x, y)$ lies inside this rectangle. The gray value at $(x, y)$ is then computed as follows:

$$
\begin{aligned}
f(x, y_0) &= f(x_0, y_0) + \frac{x - x_0}{x_1 - x_0}\left[f(x_1, y_0) - f(x_0, y_0)\right] \\
f(x, y_1) &= f(x_0, y_1) + \frac{x - x_0}{x_1 - x_0}\left[f(x_1, y_1) - f(x_0, y_1)\right] \\
f(x, y) &= f(x, y_0) + \frac{y - y_0}{y_1 - y_0}\left[f(x, y_1) - f(x, y_0)\right]
\end{aligned}
\tag{11}
$$
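As an illustration, size normalization with bilinear interpolation can be sketched in Python with NumPy. This is a sketch under stated assumptions:

- The function names are hypothetical.
- The inverse-mapping scheme is an assumption: each output pixel $(u, v)$ is sampled at $(u / R_x, v / R_y)$ in the source image, clamped to the image border.

On an integer pixel grid $x_1 - x_0 = y_1 - y_0 = 1$, so the fractions in formula (11) reduce to the offsets `dx` and `dy`.

```python
import numpy as np


def bilinear_sample(img, x, y):
    """Gray value at real-valued (x, y) via formula (11); x is the column, y the row."""
    img = np.asarray(img, dtype=np.float64)  # float avoids uint8 wrap-around
    h, w = img.shape
    x = min(max(x, 0.0), w - 1.0)  # clamp to the image border
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(np.floor(x)), int(np.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    dx, dy = x - x0, y - y0  # (x - x0)/(x1 - x0) with x1 - x0 = 1
    f_xy0 = img[y0, x0] + dx * (img[y0, x1] - img[y0, x0])  # interpolate along row y0
    f_xy1 = img[y1, x0] + dx * (img[y1, x1] - img[y1, x0])  # interpolate along row y1
    return f_xy0 + dy * (f_xy1 - f_xy0)                     # then along y


def resize_bilinear(img, out_h, out_w):
    """Scale a grayscale image to (out_h, out_w) using inverse mapping."""
    src = np.asarray(img, dtype=np.float64)
    h, w = src.shape
    rx, ry = out_w / w, out_h / h  # scaling ratios R_x and R_y
    out = np.empty((out_h, out_w))
    for v in range(out_h):
        for u in range(out_w):
            out[v, u] = bilinear_sample(src, u / rx, v / ry)
    return out
```

The double loop keeps the correspondence with formula (11) explicit. A production version would normally vectorize the sampling.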

Furthermore, illumination is one of the main factors affecting face recognition results, so its influence on the face image must be eliminated. In this embodiment, histogram equalization can be used for illumination preprocessing. It changes the gray level of each pixel, and thereby the histogram of the image, so that overly bright images become darker and overly dark images become brighter.

The gray-level histogram counts how often (by number or frequency) each gray value occurs among the pixels of an image. It is a function of the gray value and one of the simplest and most useful tools in digital image processing. It describes the gray-level content of an image, namely the number of pixels having each gray value. The histogram of any image carries considerable information, and some types of images can be completely described by their histograms.

The specific processing may include the following:

Assume that a grayscale image has $n$ pixels in total and that $n_k$ of them have gray level $r_k$. Then $n_k/n$ is the probability of occurrence of gray level $r_k$, and computing this probability for every gray level yields the gray-level histogram of the image.

Let the variable $r$ denote the gray level of a pixel, normalized so that $0 \le r \le 1$, where $r = 0$ represents black and $r = 1$ represents white. For a given image, the gray level each pixel takes in $[0, 1]$ can be treated as random. Thus $r$ is a random variable, and the gray-level distribution of the original image can be represented by its probability density function $P_r(r)$. This distribution reveals the gray-level characteristics of the image:

- If most pixels have gray values close to 0, the whole image is dark.
- If most pixels have gray values close to 1, the whole image is bright.

The gray values of many images are non-uniformly distributed, and images whose gray values are concentrated in a small interval are very common.

The purpose of histogram equalization is to make the histogram of the processed image flat. In that case every gray level occurs with the same frequency, so the gray levels follow a uniform probability distribution, which improves the subjective quality of the image. It is therefore necessary to find a transformation $s = T(r)$ such that the gray-level histogram after transformation is flatter than before. The cumulative distribution function of $r$ can be used as the transformation function, namely:

$$ s = T(r) = \int_0^r P_r(w)\,dw $$

where $w$ is the integration variable and $\int_0^r P_r(w)\,dw$ is the cumulative distribution function of $r$. Using $r_k$ to denote the discrete gray values and $P_r(r_k)$ in place of $P_r(r)$:

$$ P_r(r_k) = \frac{n_k}{n}, \qquad 0 \le r_k \le 1,\; k = 0, 1, \dots, L-1 $$

where $n_k$ is the number of pixels with gray level $r_k$, $n$ is the total number of pixels in the image, and $L$ is the number of gray levels in the image.

The discrete form of the transformation function is:

$$ s_k = T(r_k) = \sum_{j=0}^{k} P_r(r_j) = \sum_{j=0}^{k} \frac{n_j}{n}, \qquad k = 0, 1, \dots, L-1 $$

Taking an arbitrary face image as an example, Figure 7 shows it before histogram equalization and Figure 8 after. After equalization:

- the illumination intensity of the image is compensated;
- the dynamic range of gray values increases;
- the gray-level distribution becomes more uniform;
- the overall contrast of the image is enhanced.
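The discrete transformation $s_k = \sum_{j \le k} n_j / n$ can be written directly as a lookup table. The following is a minimal NumPy sketch that assumes an 8-bit image ($L = 256$) and maps $s_k \in [0, 1]$ back to integer gray levels by rounding $s_k (L - 1)$. The function name and that rounding step are assumptions, not taken from the source.

```python
import numpy as np

L = 256  # assumption: 8-bit grayscale input


def equalize_histogram(gray):
    """Histogram equalization using s_k = sum_{j<=k} n_j / n."""
    gray = np.asarray(gray, dtype=np.uint8)
    n = gray.size                                     # total number of pixels
    n_k = np.bincount(gray.ravel(), minlength=L)      # pixel count for each gray level r_k
    p_r = n_k / n                                     # P_r(r_k) = n_k / n
    s_k = np.cumsum(p_r)                              # discrete transfer function T(r_k)
    lut = np.round(s_k * (L - 1)).astype(np.uint8)    # map s_k in [0, 1] to 0..L-1
    return lut[gray]                                  # apply the lookup table per pixel
```

Because $s_k$ is non-decreasing, the mapping preserves the ordering of gray levels while spreading frequently occurring levels apart.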

S107, determining the face recognition result for the video stream to be recognized according to the weighted similarities between the key feature points in each frame of key face image and the corresponding feature points of each face model in the face image database.

It can be understood that, before face recognition is performed, a face image database should first be built through feature training to provide the criterion for recognition.

During feature training, a deformable two-dimensional grid is used as a template to represent the face. A two-dimensional grid is extracted over the face region and can be regarded as a two-dimensional topological graph. For each node of the graph, feature information is computed and assigned to the node, yielding a labeled graph. Specifically:

1. Points at distinctive positions on the face image (such as the eyebrows, eyes, nose, and chin) are selected as feature points.
2. The feature points are filtered with Gabor filters, and the Gabor coefficients are extracted to form feature vectors.
3. Each face is represented by a face graph consisting of its feature points and their corresponding feature vectors, which is stored in the database.

For example, a training library of 1,000 people contains the raw facial feature-point data of those 1,000 people. Points at distinctive positions on each face image (such as the eyebrows, eyes, nose tip, and chin) are taken as feature points. These points are filtered with Gabor filters, and the extracted Gabor coefficients form feature vectors that make up a face graph. The face graph of each face, containing its feature points and their feature vectors, is stored in the training library.

As shown in Figure 9, determining the face recognition result for the video stream to be recognized, according to the weighted similarities between the key feature points in each frame of key face image and the corresponding feature points of each face model in the face image database, may specifically include:

S901, determining the key feature value corresponding to each key feature point by means of the wavelet transform;

Feature extraction is a crucial step in face recognition. Whether the extracted feature information is stable, reliable, and sufficient affects the final recognition rate of the system, especially when the face image is subject to changes in illumination, expression, or pose, or to other interference.

The two-dimensional Gabor wavelet transform offers locality, orientation selectivity, and frequency selectivity. It can accurately extract local features of different orientations, frequencies, and scales from face images and has a certain robustness to interference, so it is widely used for facial feature extraction. The two-dimensional Gabor wavelet can be expressed as:

$$ \psi_j(\vec{x}) = \frac{\|\vec{k}_j\|^2}{\sigma^2} \exp\!\left(-\frac{\|\vec{k}_j\|^2 \|\vec{x}\|^2}{2\sigma^2}\right) \left[\exp\!\left(i\,\vec{k}_j \cdot \vec{x}\right) - \exp\!\left(-\frac{\sigma^2}{2}\right)\right] $$

where the wave vector $\vec{k}_j$ is

$$ \vec{k}_j = \begin{pmatrix} k_v \cos\varphi_u \\ k_v \sin\varphi_u \end{pmatrix} $$

In these formulas:

- $\vec{x} = (x, y)$ is the image coordinate of a given position.
- $\vec{k}_j$, also called the center frequency of the filter, has magnitude $k_v$, and its orientation $\varphi_u$ reflects the directional selectivity of the filter.
- In natural images, the factor $\|\vec{k}_j\|^2/\sigma^2$ compensates for the frequency-dependent attenuation of the energy spectrum.
- $\exp\!\left(-\|\vec{k}_j\|^2\|\vec{x}\|^2/(2\sigma^2)\right)$ is the Gaussian envelope that constrains the plane wave.
- $\exp(i\,\vec{k}_j\cdot\vec{x})$ is a complex-valued plane wave. Its real part is the cosine plane wave $\cos(\vec{k}_j\cdot\vec{x})$, and its imaginary part is the sine plane wave $\sin(\vec{k}_j\cdot\vec{x})$.
- The term $\exp(-\sigma^2/2)$ is called DC-component compensation. It removes the influence of the image's DC component on the two-dimensional Gabor wavelet, so that the transform is unaffected by the absolute gray level of the image and insensitive to illumination changes.

The two-dimensional Gabor filter is a complex function whose real and imaginary parts can be expressed as:

$$
\begin{aligned}
\operatorname{Re}\,\psi_j(\vec{x}) &= \frac{\|\vec{k}_j\|^2}{\sigma^2} \exp\!\left(-\frac{\|\vec{k}_j\|^2 \|\vec{x}\|^2}{2\sigma^2}\right) \left[\cos\!\left(\vec{k}_j \cdot \vec{x}\right) - \exp\!\left(-\frac{\sigma^2}{2}\right)\right] \\
\operatorname{Im}\,\psi_j(\vec{x}) &= \frac{\|\vec{k}_j\|^2}{\sigma^2} \exp\!\left(-\frac{\|\vec{k}_j\|^2 \|\vec{x}\|^2}{2\sigma^2}\right) \sin\!\left(\vec{k}_j \cdot \vec{x}\right)
\end{aligned}
$$

The two-dimensional Gabor wavelet transform describes the gray-level features of the region around a given point $\vec{x}$ of image $I$. Filtering image $I$ can be implemented by convolving the family of Gabor functions with the image:

$$ J_j(\vec{x}) = \int I(\vec{x}\,')\,\psi_j(\vec{x} - \vec{x}\,')\,d^2\vec{x}\,' $$

The two-dimensional Gabor filter is a band-pass filter with good resolution in both the spatial and frequency domains. Its parameters define how it samples these two domains and determine how well it represents the signal.

An image is usually described with a Gabor filter bank containing multiple center frequencies and orientations. The choices of $k_v$ and $\varphi_u$ determine how the two-dimensional Gabor wavelets sample frequency and orientation, respectively, and the parameter $\sigma$ determines the filter bandwidth. Experiments by Lades show that, for images of size 128×128, the best results are obtained with a maximum center frequency $k_{max} = \pi/2$ and $\sigma = 2\pi$.

Because image texture is randomly oriented, $\varphi_u$ ranges from 0 to $2\pi$; given the symmetry of the Gabor filter, its effective range is 0 to $\pi$. To describe local image features, this embodiment filters the image with 40 Gabor filters formed from 5 center frequencies and 8 orientations. The parameters $k_v$ and $\varphi_u$ take the values:

$$ k_v = \frac{k_{max}}{f^{v}}, \qquad \varphi_u = \frac{u\pi}{8} $$

where $f$ is a spacing factor that limits the distance between kernels in the frequency domain, $v \in \{0, 1, 2, 3, 4\}$, $u \in \{0, 1, 2, 3, 4, 5, 6, 7\}$, and $j = u + 8v$.
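The 40-filter bank and the per-point convolution can be sketched in Python with NumPy as follows. This sketch relies on the following choices:

- It uses $k_{max} = \pi/2$ and $\sigma = 2\pi$ as stated above.
- It assumes a spacing factor $f = \sqrt{2}$, a common choice in the Gabor-wavelet literature that this text does not specify.
- It assumes a 65×65 kernel window, which truncates the widest envelope ($v = 4$) at about two standard deviations.
- The function names are hypothetical.

The result of `gabor_jet` is the vector of 40 complex coefficients ("jet") at one feature point.

```python
import numpy as np

K_MAX = np.pi / 2        # maximum center frequency k_max
SIGMA = 2 * np.pi        # bandwidth parameter sigma
F = np.sqrt(2)           # assumption: spacing factor f


def gabor_kernel(v, u, size=65):
    """Complex 2-D Gabor kernel psi_j for scale v and orientation u."""
    k = K_MAX / F ** v                         # k_v = k_max / f^v
    phi = u * np.pi / 8                        # phi_u = u * pi / 8
    kx, ky = k * np.cos(phi), k * np.sin(phi)  # components of the wave vector k_j
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1]
    envelope = (k ** 2 / SIGMA ** 2) * np.exp(-(k ** 2) * (x ** 2 + y ** 2) / (2 * SIGMA ** 2))
    wave = np.exp(1j * (kx * x + ky * y)) - np.exp(-SIGMA ** 2 / 2)  # DC-compensated plane wave
    return envelope * wave


def gabor_bank(size=65):
    """40 kernels (5 scales x 8 orientations); list index j = u + 8v."""
    return [gabor_kernel(v, u, size) for v in range(5) for u in range(8)]


def gabor_jet(gray, px, py, bank):
    """Responses J_j at pixel (column px, row py) for every kernel in the bank."""
    half = bank[0].shape[0] // 2
    padded = np.pad(np.asarray(gray, dtype=float), half, mode="edge")
    # Window centered on (px, py); padding shifts every index by `half`.
    patch = padded[py:py + 2 * half + 1, px:px + 2 * half + 1]
    # J(x) = sum_x' I(x') psi(x - x'), i.e. the patch times the flipped kernel.
    return np.array([np.sum(patch * kern[::-1, ::-1]) for kern in bank])
```

In elastic-graph style matching, the magnitudes $|J_j|$ are often used for comparison because they vary smoothly with small displacements.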

S902, calculating the similarity between each key feature point in each frame of key face image and the corresponding feature point of each face model in the face image database;

S903, weighting the similarities between the key feature points in each frame of key face image and the corresponding feature points of the same face model, and determining the face model with the largest weighted value as the candidate recognition result for that key face image;

S904, counting the number of key face images corresponding to each candidate recognition result, and determining the candidate result with the largest count, provided that count exceeds a preset count threshold, as the face recognition result for the video stream to be recognized.

The above processing works as follows:

1. For each frame of key face image, the similarity between each key feature point and the corresponding feature point of every preset face model is computed.
2. The similarities of all key feature points in the frame with respect to the same face model are weighted, and the face model with the largest weighted value is taken as that frame's candidate recognition result.
3. The number of key face images supporting each candidate is counted. The candidate with the largest count that also exceeds the preset count threshold is taken as the face recognition result for the video stream.

It can be understood that, in practical applications, the captured video stream does not necessarily contain any face model from the face image database. In that case one candidate may still receive the most key face images. However, if its count is close to those of the other candidates, this indicates that the video stream may not contain a face from the database. A count threshold is therefore set to improve recognition accuracy.
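Steps S902 to S904 can be sketched in Python as below. The passage does not specify the similarity measure or the weight values, so this sketch makes the following assumptions:

- It uses the amplitude similarity commonly applied to Gabor jets: the normalized dot product of coefficient magnitudes.
- Each key feature point gets a hypothetical weight, for example larger weights for eyebrow and mouth points in line with the observation under S105.

The names `frame_candidate` and `recognize_stream` are illustrative only.

```python
from collections import Counter

import numpy as np


def jet_similarity(jet_a, jet_b):
    """Assumed similarity: cosine between the magnitudes of two Gabor jets."""
    a, b = np.abs(jet_a), np.abs(jet_b)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def frame_candidate(frame_jets, models, weights):
    """S902-S903: weighted similarity per model; best model id for one key frame."""
    best_id, best_score = None, -np.inf
    for model_id, model_jets in models.items():
        score = sum(weights[p] * jet_similarity(jet, model_jets[p])
                    for p, jet in frame_jets.items())
        if score > best_score:
            best_id, best_score = model_id, score
    return best_id


def recognize_stream(key_frames, models, weights, count_threshold):
    """S904: vote over key frames; accept the winner only above the count threshold."""
    votes = Counter(frame_candidate(f, models, weights) for f in key_frames)
    if not votes:
        return None
    best_id, count = votes.most_common(1)[0]
    return best_id if count > count_threshold else None  # None: no database match
```

Here `frame_jets` and each entry of `models` are assumed to be dictionaries mapping a key-feature-point name to its jet. Returning `None` models the case described above, where no candidate collects enough key frames.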

From the description of the above method embodiments, those skilled in the art can clearly understand that the present invention can be implemented by software plus a necessary general-purpose hardware platform, or by hardware, although in many cases the former is the better implementation. Based on this understanding, the technical solution of the present invention, in essence or in the part that contributes to the prior art, can be embodied as a software product. The computer software product is stored in a storage medium and includes instructions that cause a computer device (a personal computer, a server, a network device, etc.) to perform all or part of the steps of the methods described in the embodiments of the present invention. The storage medium includes any medium capable of storing program code, such as read-only memory (ROM), random access memory (RAM), a magnetic disk, or an optical disc.

Corresponding to the above method embodiment, an embodiment of the present invention also provides a face recognition system based on a video stream, which, as shown in Figure 10, may include:

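Video receiving module 110, configured to receive the video stream to be recognized captured by a video capture device;

Face detection module 120, configured to perform face detection on each frame of the video stream to be recognized to determine the face images to be recognized;

Feature point localization module 130, configured to locate the feature points corresponding to each frame of face image to be recognized;

Key frame determination module 140, configured to determine the key face images among the face images to be recognized according to their color histograms;

Key point determination module 150, configured to determine the key feature points among the feature points of each frame of key face image;

Preprocessing module 160, configured to perform image preprocessing on the determined key face images to reduce the influence of image geometry and illumination on them;

Result determination module 170, configured to determine the face recognition result for the video stream to be recognized according to the weighted similarities between the key feature points in each frame of key face image and the corresponding feature points of each face model in the face image database.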

The face detection module includes:

An equalization processing unit, configured to perform grayscale conversion and histogram equalization on each frame of the video stream to be recognized;

A face detection unit, configured to perform multi-scale, multi-feature face detection on each processed frame;

A multi-feature merging unit, configured to merge the multi-feature detection results at each scale of each frame;

A multi-scale merging unit, configured to merge, across scales, the multi-feature merging results of each frame;

A result determination unit, configured to determine the multi-scale merging results that satisfy a preset face-size threshold as the face images to be recognized.

The feature point localization module includes:

A contrast enhancement unit, configured to enhance the contrast of the face image to be recognized using an adaptive threshold method;

A feature point localization unit, configured to locate the feature points of the face image to be recognized using the vertical and horizontal integral projection methods.
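The integral projection method is not detailed in this passage. The sketch below only illustrates the general idea, under these assumptions:

- The eyes lie on the darkest row in the upper half of the face, i.e. the minimum of the horizontal integral projection (row sums).
- The eye columns are the darkest columns in the left and right halves of a narrow band around that row, i.e. minima of the vertical integral projection (column sums).
- The adaptive-threshold contrast step is omitted.
- The function name and the `band` width are hypothetical.

```python
import numpy as np


def locate_eyes(gray, band=2):
    """Rough eye localization by integral projections (illustrative heuristic)."""
    g = np.asarray(gray, dtype=float)
    h, w = g.shape
    # Horizontal integral projection: sum of gray values along each row.
    row_proj = g.sum(axis=1)
    eye_row = int(np.argmin(row_proj[: h // 2]))  # darkest row in the upper half
    # Vertical integral projection, restricted to a band around the eye row.
    strip = g[max(eye_row - band, 0): eye_row + band + 1]
    col_proj = strip.sum(axis=0)
    left_col = int(np.argmin(col_proj[: w // 2]))              # darkest column, left half
    right_col = w // 2 + int(np.argmin(col_proj[w // 2:]))     # darkest column, right half
    return eye_row, left_col, right_col
```

On real faces the eyebrows can produce a competing minimum above the eyes, which is one reason contrast enhancement and further feature-specific rules usually accompany projection-based localization.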

The key frame determination module includes:

A standard determination unit, configured to determine a standard face image to be recognized and to calculate the color histogram of its face region;

A color histogram determination unit, configured to calculate the color histogram of the face region of each frame of face image to be recognized;

A histogram difference calculation unit, configured to calculate the Euclidean distance between the color histogram of the current face image to be recognized and that of the standard face image to be recognized;

A key frame determination unit, configured to determine whether the Euclidean distance is less than a preset distance threshold. If so, the current face image to be recognized is determined to be a key face image; otherwise it is determined to be a non-key face image.
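The key frame test can be sketched in Python with NumPy. The text specifies only a color histogram of the face region, compared with that of the standard face image by Euclidean distance. The following details are assumptions, as are the function names:

- the RGB color space;
- 16 bins per channel;
- normalizing the concatenated histogram to sum to 1;
- the threshold value.

```python
import numpy as np


def color_histogram(face_rgb, bins=16):
    """Concatenated per-channel histogram of an RGB face region, normalized to sum to 1."""
    face = np.asarray(face_rgb)
    hists = [np.histogram(face[..., c], bins=bins, range=(0, 256))[0] for c in range(3)]
    hist = np.concatenate(hists).astype(float)
    return hist / hist.sum()


def is_key_face(face_rgb, standard_hist, distance_threshold):
    """Key face image if its histogram lies within the Euclidean distance threshold."""
    distance = np.linalg.norm(color_histogram(face_rgb) - standard_hist)
    return bool(distance < distance_threshold)
```

Normalizing the histogram makes the distance independent of the face-region size, so detections at different scales can be compared against the same threshold.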

The preprocessing module includes:

A geometric normalization unit, configured to translate, rotate, and/or filter the key face images to achieve geometric normalization;

An illumination preprocessing unit, configured to apply histogram equalization to the key face images processed above to achieve illumination preprocessing.

The result determination module includes:

A feature value calculation unit, configured to determine the key feature value corresponding to each key feature point by means of the wavelet transform;

A similarity calculation unit, configured to calculate the similarity between each key feature point in each frame of key face image and the corresponding feature point of each face model in the face image database;

A candidate result determination unit, configured to weight the similarities between the key feature points in each frame of key face image and the corresponding feature points of the same face model, and to determine the face model with the largest weighted value as the candidate recognition result for that key face image;

A recognition result determination unit, configured to count the number of key face images corresponding to each candidate recognition result, and to determine the candidate result with the largest count that also exceeds a preset threshold as the face recognition result for the video stream to be recognized.

Since the device or system embodiments essentially correspond to the method embodiments, reference may be made to the relevant parts of the method embodiments. The device or system embodiments described above are merely illustrative. Units described as separate components may or may not be physically separate, and components shown as units may or may not be physical units; that is, they may be located in one place or distributed across multiple network elements. Some or all of the modules may be selected according to actual needs to achieve the objectives of this embodiment. Those of ordinary skill in the art can understand and implement this without creative effort.

In the embodiments provided by the present invention, it should be understood that the disclosed systems, devices, and methods can be implemented in other ways without departing from the spirit and scope of the present application. The present embodiments are merely illustrative examples and should not be taken as limiting; the specific content given should not limit the purpose of the present application. For example, the division into units or subunits is only a division of logical functions, and other divisions are possible in an actual implementation, such as combining multiple units or subunits. In addition, multiple units or components may be combined or integrated into another system, or some features may be omitted or not implemented.

In addition, the described systems, devices, and methods, and the schematic diagrams of the different embodiments, may be combined or integrated with other systems, modules, techniques, or methods within the scope of the present application. Furthermore, the mutual coupling, direct coupling, or communication connections shown or discussed may be indirect couplings or communication connections through interfaces, devices, or units, and may be electrical, mechanical, or in other forms.

The foregoing is only a specific embodiment of the present invention. It should be noted that those of ordinary skill in the art can make improvements and modifications without departing from the principle of the present invention, and these improvements and modifications should also be regarded as falling within the protection scope of the present invention.

Claims (8)

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN201110316170.7A (CN102360421B) | 2011-10-19 | 2011-10-19 | Face identification method and system based on video streaming |


Publications (2)

| Publication Number | Publication Date |
|---|---|
| CN102360421A | 2012-02-22 |
| CN102360421B | 2014-05-28 |

Family ID: 45585748

Family Applications (1)

| Application Number | Status | Priority Date | Filing Date | Title |
|---|---|---|---|---|
| CN201110316170.7A (CN102360421B) | Expired - Fee Related | 2011-10-19 | 2011-10-19 | Face identification method and system based on video streaming |

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN102360421B (en) |

Cited By (2)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN105279496A (en)* | 2015-10-26 | 2016-01-27 | 浙江宇视科技有限公司 | Human face recognition method and apparatus |
| RU2712417C1 (en)* | 2019-02-28 | 2020-01-28 | Публичное Акционерное Общество "Сбербанк России" (Пао Сбербанк) | Method and system for recognizing faces and constructing a route using augmented reality tool |

Families Citing this family (55)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| CN103294986B (en)* | 2012-03-02 | 2019-04-09 | 汉王科技股份有限公司 | A kind of recognition methods of biological characteristic and device |

| CN104349045B (en)* | 2013-08-09 | 2019-01-15 | 联想(北京)有限公司 | A kind of image-pickup method and electronic equipment |

| CN103809759A (en)* | 2014-03-05 | 2014-05-21 | 李志英 | Face input method |

| CN104008370B (en)* | 2014-05-19 | 2017-06-13 | 清华大学 | A kind of video face identification method |

| CN103955719A (en)* | 2014-05-20 | 2014-07-30 | 中国科学院信息工程研究所 | Filter bank training method and system and image key point positioning method and system |

| GB2528330B (en)* | 2014-07-18 | 2021-08-04 | Unifai Holdings Ltd | A method of video analysis |

| CN105631391B (en)* | 2014-11-05 | 2019-03-22 | 联芯科技有限公司 | A kind of image processing method and system for realizing eyes amplification |

| CN104376334B (en)* | 2014-11-12 | 2018-05-29 | 上海交通大学 | A kind of pedestrian comparison method of multi-scale feature fusion |

| CN104581047A (en)* | 2014-12-15 | 2015-04-29 | 苏州福丰科技有限公司 | Three-dimensional face recognition method for supervisory video recording |

| CN104794464B (en)* | 2015-05-13 | 2019-06-07 | 上海依图网络科技有限公司 | A kind of biopsy method based on relative priority |

| CN104899575A (en)* | 2015-06-19 | 2015-09-09 | 南京大学 | Human body assembly dividing method based on face detection and key point positioning |

| CN105046227B (en)* | 2015-07-24 | 2018-07-31 | 上海依图网络科技有限公司 | A kind of key frame acquisition methods for portrait video system |

| CN106407984B (en)* | 2015-07-31 | 2020-09-11 | 腾讯科技(深圳)有限公司 | Target object identification method and device |

| CN105893922A (en)* | 2015-08-11 | 2016-08-24 | 乐视体育文化产业发展(北京)有限公司 | Bicycle unlocking method and device and bicycle |

| CN106570445B (en)* | 2015-10-13 | 2019-02-05 | 腾讯科技(深圳)有限公司 | A kind of characteristic detection method and device |

| CN105234940A (en)* | 2015-10-23 | 2016-01-13 | 上海思依暄机器人科技有限公司 | Robot and control method thereof |

| CN105426829B (en)* | 2015-11-10 | 2018-11-16 | 深圳Tcl新技术有限公司 | Video classification methods and device based on facial image |

| CN106856063A (en)* | 2015-12-09 | 2017-06-16 | 朱森 | A kind of new teaching platform |

| CN105631419B (en)* | 2015-12-24 | 2019-06-11 | 浙江宇视科技有限公司 | Face recognition method and device |

| CN105809107B (en)* | 2016-02-23 | 2019-12-03 | 深圳大学 | Single sample face recognition method and system based on facial feature points |

| CN105628996A (en)* | 2016-03-25 | 2016-06-01 | 胡荣 | Electric Energy Meter Based on Image Processing |

| CN106022313A (en)* | 2016-06-16 | 2016-10-12 | 湖南文理学院 | Scene-automatically adaptable face recognition method |

| CN106250825A (en)* | 2016-07-22 | 2016-12-21 | 厚普(北京)生物信息技术有限公司 | A kind of at the medical insurance adaptive face identification system of applications fields scape |

| CN106326853B (en)* | 2016-08-19 | 2020-05-15 | 厦门美图之家科技有限公司 | Face tracking method and device |

| CN106326981B (en)* | 2016-08-31 | 2019-03-26 | 北京光年无限科技有限公司 | Robot automatically creates the method and device of individualized virtual robot |

| CN106326980A (en)* | 2016-08-31 | 2017-01-11 | 北京光年无限科技有限公司 | Robot and method for simulating human facial movements by robot |

| CN107066932A (en)* | 2017-01-16 | 2017-08-18 | 北京龙杯信息技术有限公司 | The detection of key feature points and localization method in recognition of face |

| CN106919898A (en)* | 2017-01-16 | 2017-07-04 | 北京龙杯信息技术有限公司 | Feature modeling method in recognition of face |

| CN106951866A (en)* | 2017-03-21 | 2017-07-14 | 北京深度未来科技有限公司 | A kind of face authentication method and device |

| CN108932254A (en)* | 2017-05-25 | 2018-12-04 | 中兴通讯股份有限公司 | A kind of detection method of similar video, equipment, system and storage medium |

| CN107633209B (en)* | 2017-08-17 | 2018-12-18 | 平安科技(深圳)有限公司 | Electronic device, the method for dynamic video recognition of face and storage medium |

| CN107633564A (en)* | 2017-08-31 | 2018-01-26 | 深圳市盛路物联通讯技术有限公司 | Monitoring method and Internet of Things server based on image |

| CN108038422B (en)* | 2017-11-21 | 2021-12-21 | 平安科技(深圳)有限公司 | Camera device, face recognition method and computer-readable storage medium |

| CN108228742B (en)* | 2017-12-15 | 2021-10-22 | 深圳市商汤科技有限公司 | Face duplicate checking method and device, electronic equipment, medium and program |

| CN108615256B (en)* | 2018-03-29 | 2022-04-12 | 西南民族大学 | Human face three-dimensional reconstruction method and device |

| CN108898051A (en)* | 2018-05-22 | 2018-11-27 | 广州洪森科技有限公司 | Face recognition method and system based on video stream |

| CN108629335A (en)* | 2018-06-05 | 2018-10-09 | 华东理工大学 | Adaptive method for selecting key facial feature points |

| CN110688872A (en)* | 2018-07-04 | 2020-01-14 | 北京得意音通技术有限责任公司 | Lip-based person identification method, device, program, medium, and electronic apparatus |

| CN109190474B (en)* | 2018-08-01 | 2021-07-20 | 南昌大学 | Human animation key frame extraction method based on pose saliency |

| CN109214157A (en)* | 2018-08-16 | 2019-01-15 | 安徽超清科技股份有限公司 | Embedded intelligent face recognition identity authentication system based on a robot platform |

| CN109190561B (en)* | 2018-09-04 | 2022-03-22 | 四川长虹电器股份有限公司 | Face recognition method and system in video playing |

| CN109350965B (en)* | 2018-10-09 | 2019-10-29 | 苏州好玩友网络科技有限公司 | Game control method, device and terminal for mobile terminals |

| CN109360284A (en)* | 2018-10-16 | 2019-02-19 | 菏泽学院 | Intelligent student attendance management system and method |

| CN109584276B (en)* | 2018-12-04 | 2020-09-25 | 北京字节跳动网络技术有限公司 | Key point detection method, device, equipment and readable medium |

| CN109670440B (en)* | 2018-12-14 | 2023-08-08 | 央视国际网络无锡有限公司 | Giant panda face identification method and device |

| CN111258406A (en)* | 2019-03-19 | 2020-06-09 | 李华 | Mobile terminal power consumption management device |

| CN110148092B (en)* | 2019-04-16 | 2022-12-13 | 无锡海鸿信息技术有限公司 | Machine-vision-based method for analyzing the sitting posture and emotional state of teenagers |

| CN112307817B (en)* | 2019-07-29 | 2024-03-19 | 中国移动通信集团浙江有限公司 | Face liveness detection method, device, computing equipment and computer storage medium |

| CN110688977B (en)* | 2019-10-09 | 2022-09-20 | 浙江中控技术股份有限公司 | Industrial image identification method and device, server and storage medium |

| CN111144326B (en)* | 2019-12-28 | 2023-10-27 | 神思电子技术股份有限公司 | Human face anti-re-recognition method for man-machine interaction |

| CN112102150B (en)* | 2020-01-07 | 2022-03-18 | 杭州鸭梨互动网络科技有限公司 | Adaptive short video content enhancement system |

| CN111783681B (en)* | 2020-07-02 | 2024-08-13 | 深圳市万睿智能科技有限公司 | Large-scale face library identification method, system, computer equipment and storage medium |

| CN112487904A (en)* | 2020-11-23 | 2021-03-12 | 成都尽知致远科技有限公司 | Video image processing method and system based on big data analysis |

| US12406528B2 (en)* | 2023-01-27 | 2025-09-02 | Avago Technologies International Sales Pte. Limited | Face recognition systems and methods for media playback devices |

| CN116665024A (en)* | 2023-06-07 | 2023-08-29 | 浙江久婵物联科技有限公司 | Image processing system and method in face recognition |

Citations (2)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| US7706576B1 (en)* | 2004-12-28 | 2010-04-27 | Avaya Inc. | Dynamic video equalization of images using face-tracking |

| CN102214291A (en)* | 2010-04-12 | 2011-10-12 | 云南清眸科技有限公司 | Method for quickly and accurately detecting and tracking human face based on video sequence |

- 2011-10-19: CN application CN201110316170.7A (granted as CN102360421B); status: Expired - Fee Related


Non-Patent Citations (2)

| Title |

|---|

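| Li Xintao (李新涛) et al. Video-stream face recognition combining weighted similarity values and similarity voting. Journal of Hefei University of Technology (Natural Science), 2011, Vol. 34, No. 2, abstract. |

| Video-stream face recognition combining weighted similarity values and similarity voting; Li Xintao (李新涛) et al.; Journal of Hefei University of Technology (Natural Science); 2011-02-28; Vol. 34, No. 2; abstract* |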

Cited By (3)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| CN105279496A (en)* | 2015-10-26 | 2016-01-27 | 浙江宇视科技有限公司 | Human face recognition method and apparatus |

| CN105279496B (en)* | 2015-10-26 | 2019-10-18 | 浙江宇视科技有限公司 | Method and device for face recognition |

| RU2712417C1 (en)* | 2019-02-28 | 2020-01-28 | Публичное Акционерное Общество "Сбербанк России" (Пао Сбербанк) | Method and system for recognizing faces and constructing a route using augmented reality tool |

Also Published As

| Publication number | Publication date |

|---|---|

| CN102360421A (en) | 2012-02-22 |

Similar Documents

| Publication | Publication Date | Title |

|---|---|---|

| CN102360421B (en) | | Face identification method and system based on video streaming |

| CN112686812B (en) | | Bank card tilt correction detection method, device, readable storage medium and terminal |

| CN110363116B (en) | | Irregular face correction method, system and medium based on GLD-GAN |

| CN110490158B (en) | | Robust face alignment method based on multistage model |

| CN113591968A (en) | | Infrared weak and small target detection method based on asymmetric attention feature fusion |

| Wang et al. | | Background-driven salient object detection |

| WO2015149534A1 (en) | | Gabor binary pattern-based face recognition method and device |

| CN105205480A (en) | | Complex scene human eye locating method and system |

| CN103020992B (en) | | Video image saliency detection method based on motion-color association |

| CN107977661B (en) | | Region-of-interest detection method based on FCN and low-rank sparse decomposition |

| CN103020985B (en) | | Video image saliency detection method based on field-quantity analysis |

| CN111126240A (en) | | A three-channel feature fusion face recognition method |

| CN107633226A (en) | | Human action tracking and recognition method and system |

| CN111368742B (en) | | Reconstruction and identification method and system of double yellow traffic marking lines based on video analysis |

| CN103440035A (en) | | Gesture recognition system in three-dimensional space and recognition method thereof |

| CN106650606A (en) | | Matching and processing method of face image and face image model construction system |

| CN103034865A (en) | | Extraction method of visual salient regions based on multiscale relative entropy |

| Ma et al. | | Msma-net: An infrared small target detection network by multiscale super-resolution enhancement and multilevel attention fusion |

| CN106296632B (en) | | Salient target detection method based on amplitude spectrum analysis |

| Proenca et al. | | Iris recognition: An analysis of the aliasing problem in the iris normalization stage |

| CN104616034B (en) | | A smoke detection method |

| Sulaiman et al. | | Building precision: Efficient Encoder–Decoder networks for remote sensing based on aerial RGB and LiDAR data |

| US10115195B2 (en) | | Method and apparatus for processing block to be processed of urine sediment image |

| CN111127355A (en) | | A method for fine completion of defect optical flow graph and its application |

| CN114463379A (en) | | Method and device for dynamic capture of video key points |

Legal Events

| Date | Code | Title | Description |

|---|---|---|---|

| | C06 | Publication | |

| | PB01 | Publication | |

| | C10 | Entry into substantive examination | |

| | SE01 | Entry into force of request for substantive examination | |

| | C14 | Grant of patent or utility model | |

| | GR01 | Patent grant | |

| | CF01 | Termination of patent right due to non-payment of annual fee | Granted publication date: 2014-05-28; termination date: 2017-10-19 |