CN102289948A - Multi-characteristic fusion multi-vehicle video tracking method under highway scene - Google Patents


- Publication number

- CN102289948A

- Authority

- CN

- China

- Prior art keywords

- vehicle

- frame

- tracking

- video

- sequence

- Prior art date

- Legal status

- Granted


Landscapes

- Image Analysis (AREA)

Abstract

Description

Technical Field

The invention belongs to the field of image processing, specifically intelligent transportation, and relates in particular to a method for video tracking of multiple vehicles in a highway surveillance scene.

Background Art

In recent years, rapid economic growth has driven rapid development of road traffic and a sharp rise in the number of motor vehicles. As a result, many road traffic problems have emerged, such as frequent congestion and traffic accidents, posing new challenges to traffic management. To address the problems caused by the rapid growth of ground transportation, developed countries have invested heavily in funds and personnel and launched large-scale research on intelligent road transportation, giving research on the Intelligent Transportation System (ITS) a prominent position. Many countries have drawn up long-term plans for developing intelligent transportation systems, and some ITS technologies that have been successfully developed have produced good results and benefits after being put into service. Moving-vehicle detection and tracking is a core technology of computer-vision-based intelligent transportation systems and one of the basic problems of computer vision.

Moving-vehicle tracking depends on accurate vehicle detection. Current video-based methods for detecting moving vehicles mainly include background subtraction, temporal differencing, gray-level feature methods, optical flow, and Gaussian background modeling.

Moving-vehicle tracking amounts to establishing correspondences between consecutive image frames based on features such as position, velocity, shape, texture and color. Methods for tracking moving objects fall roughly into four categories: feature-based, region-based, contour-based and model-based methods.

Feature-Based Tracking

Feature-based tracking extracts typical features of the whole vehicle or of parts of the vehicle from the images and matches these features across the image sequence.

Region-Based Tracking

Small-region tracking is a typical form of region-based visual tracking. Its basic principle is to segment each frame into regions using image features and to track the target by matching these regions between adjacent frames.

Contour-Based Tracking

The core idea of active-contour tracking is to represent a moving target by a closed curve. Driven by various constraint functions, the curve deforms along two directions and gradually adapts to the real target in the image, so that targets can be retrieved or tracked against complex backgrounds.

Model-Based Tracking

Typical model-based methods build a three-dimensional model of the target through measurement or other computer vision techniques and, during tracking, locate and identify the target by matching parameters such as the model and its projection. Because they use the three-dimensional contour or surface information of the object, these methods are inherently very robust and, under occlusion and interference, achieve results that other methods can hardly match. They do, however, have drawbacks of their own.

Summary of the Invention

The purpose of the invention is to overcome the deficiencies of the prior art. It provides a multi-vehicle tracking method that detects vehicles with an improved Gaussian mixture model and then tracks them by multi-feature fusion, combining block modeling, color modeling and position modeling. The method is highly robust to the difficult tracking problems that arise on highways, such as data association and occlusion among multiple vehicles.

The purpose of the invention is achieved through the following steps:

Step 1. A surveillance camera with a fixed field of view acquires video images of vehicles traveling on the highway.

Step 2. For each frame of the input video captured by the surveillance camera, vehicles are detected and located in the image using improved Gaussian mixture modeling, as follows:

2-1. Improved Gaussian mixture modeling is applied to the road region of the video. The improved model introduces a parameter that records the number of valid pixels in the current frame that match the model. The background update rate is defined in terms of this count and of α, where α denotes the update rate of the background parameters in basic Gaussian mixture modeling.

2-2. After improved Gaussian mixture modeling, the input video image becomes a binary image in which white pixels mark the positions of foreground objects. Using 4-neighborhood merging of connected regions, white connected regions that match the size characteristics of a vehicle are marked with rectangular search boxes in the original video image. This gives the vehicle locations in the video.

Step 3. Multiple features of each moving vehicle are extracted for video vehicle tracking. Specifically, the grayscale, HSV color and position information of the rectangular search box containing the vehicle are extracted.

Step 4. Detection results in different video frames are matched to decide whether they belong to the same vehicle, and mutual occlusion of vehicles in the image is handled, as follows:

4-1. Definition of vehicle similarity. The i-th vehicle detection in frame t is represented by four items: the center position of the rectangular search box containing the vehicle, the width and length of that box, the HSV color feature of the box, and the grayscale feature of the box. The j-th vehicle detection in frame t-1 is represented by the same four items for its own rectangular search box.

Each feature is modeled with a Gaussian kernel function. This yields an HSV color similarity function $S_c$, a position similarity function $S_p$ and a grayscale similarity function $S_g$. The vehicle similarity function $S$ combines the three, with $w_c$, $w_p$ and $w_g$ as the weight functions of $S_c$, $S_p$ and $S_g$ in $S$.

4-2. Initialization. All vehicles detected in the current frame form the sequence of vehicles to be matched. If the current frame is the first frame after the improved Gaussian mixture model has been established, that frame's to-be-matched sequence is used to initialize the existing tracked-vehicle sequence.

4-3. Tracking:

Three thresholds are preset.

4-3-1. Each vehicle in the to-be-matched sequence of frame t is compared with every vehicle in the existing tracked-vehicle sequence. This finds the vehicle j with the largest similarity to the i-th to-be-matched vehicle of frame t. If this maximum similarity is greater than or equal to the similarity threshold, vehicle j of frame t-1 is judged to appear at the position of vehicle i in frame t, and the vehicle information is updated. If the maximum similarity is below the threshold, vehicle i of frame t is added to the vehicle buffer sequence as a possible new vehicle in the video.

4-3-2. All vehicles in the vehicle buffer sequence are counted. If a vehicle appears in the buffer sequence for a preset number of consecutive frames, it is regarded as a new vehicle in the video. It is added to the existing tracked-vehicle sequence, and processing returns to step 4-3-1. If a vehicle fails to appear for a preset number of consecutive frames, it is regarded as having left the monitored area and is deleted from both the vehicle buffer sequence and the existing tracked-vehicle sequence.

A vehicle target in frame t may have a position similarity above a preset threshold with each of two or more vehicles in frame t-1. In that case, the target corresponds to two or more vehicle models from the previous frame, so mutual occlusion between vehicles is declared and block tracking is used to eliminate the effect of the occlusion on tracking.

The block tracking method works as follows. The rectangular search box of a vehicle from the previous frame is divided into blocks according to a block model, and the HSV color feature of each block is extracted. Each block is labeled with the offset $(\Delta x_k, \Delta y_k)$ of its center from the center of the original vehicle search box. Here $\Delta x_k$ is the distance in the $x$ direction, $\Delta y_k$ is the distance in the $y$ direction, $k = 1, \dots, N$, and $N$ is the number of blocks. Each block is color-matched within the rectangular search box of the vehicle target in the current frame t. The $m$ blocks with the largest color-matching values then jointly determine where the center of the previous-frame vehicle lies inside the search box of the occluded target. The vehicle's search box in the current frame is obtained from the size of its search box in the previous frame. In this way, several mutually occluding vehicles can be separated.

In step 4-1, interlaced (every-other-line) extraction is applied to the current foreground image when computing the HSV color similarity function.

Compared with the prior art, the invention has the following advantages:

For background modeling, Gaussian mixture modeling is highly resistant to illumination changes and image noise, which helps extract vehicle foreground information accurately.

For target association in multi-vehicle tracking, the invention fuses multiple kinds of information. Tracking several features jointly improves both tracking accuracy and resistance to interference.

The invention solves the problem of targets being lost easily when vehicles occlude and merge with one another. It achieves a high vehicle tracking success rate and has a wide range of applications.

Brief Description of the Drawings

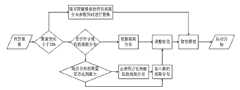

Fig. 1 is the overall flowchart of the method.

Fig. 2 is the flowchart of Gaussian mixture modeling.

Fig. 3 is the model diagram of position modeling.

Fig. 4 is the block model diagram used in block tracking.

Detailed Description of the Embodiments

The invention is further described below with reference to the drawings and specific embodiments.

Fig. 1 shows the technical flowchart of the vehicle tracking algorithm.

1. Video capture

A surveillance camera with a fixed field of view samples the road scene at fixed time intervals to obtain a continuous sequence of scene images. To ensure accurate vehicle detection and tracking, the sampling interval should be shorter than 0.1 s, that is, the frame rate should exceed 10 frames per second.

2. Vehicle detection

The method models the background with an improved Gaussian mixture. A Gaussian mixture model is a weighted sum of a finite number of Gaussian functions, and $K$ Gaussian models are defined for each pixel. The Gaussian mixture modeling procedure is shown in Fig. 2.

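To make pixel colors robust to changes in brightness, the HSV color space is used. It separates hue from saturation and brightness, which reduces the influence of illumination changes. With $R, G, B \in [0, 1]$, $C_{\max} = \max(R, G, B)$ and $C_{\min} = \min(R, G, B)$, the conversion is

$$\begin{aligned} V &= C_{\max}, \qquad S = \begin{cases} \dfrac{C_{\max} - C_{\min}}{C_{\max}}, & C_{\max} \neq 0 \\ 0, & C_{\max} = 0 \end{cases} \\ H &= 60^\circ \times \begin{cases} \dfrac{G - B}{C_{\max} - C_{\min}} \bmod 6, & C_{\max} = R \\ \dfrac{B - R}{C_{\max} - C_{\min}} + 2, & C_{\max} = G \\ \dfrac{R - G}{C_{\max} - C_{\min}} + 4, & C_{\max} = B \end{cases} \end{aligned} \tag{1}$$

with $H = 0$ when $C_{\max} = C_{\min}$.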
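The robustness argument can be checked numerically. The minimal Python sketch below uses the standard-library `colorsys.rgb_to_hsv`, which returns H, S and V scaled to [0, 1]. Halving every RGB channel is used here as a crude stand-in for a global brightness drop: hue and saturation stay the same and only V is halved. The helper name `to_hsv` is illustrative.

```python
import colorsys


def to_hsv(rgb):
    """Convert an 8-bit (R, G, B) triple to (H, S, V), each in [0, 1]."""
    r, g, b = (channel / 255.0 for channel in rgb)
    return colorsys.rgb_to_hsv(r, g, b)


bright = to_hsv((200, 100, 50))  # a reddish-orange surface in daylight
dim = to_hsv((100, 50, 25))      # the same surface at half the brightness

print(bright)  # hue and saturation equal those of `dim`
print(dim)     # only V (the last component) is halved
```

A real illumination change is rarely a pure scaling, so this only illustrates the idealized case that motivates the choice of HSV.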

The Gaussian mixture model is initialized with the mean and variance of each pixel, computed over an initial segment of $N_0$ video frames:

$$\mu_0 = \frac{1}{N_0} \sum_{t=1}^{N_0} X_t, \qquad \sigma_0^2 = \frac{1}{N_0} \sum_{t=1}^{N_0} \left( X_t - \mu_0 \right)^2 \tag{2}$$

After initialization, the model parameters are updated with the pixels of each new frame. Each new pixel value is first checked against the model. At time $t$, the input pixel feature $X_t$ matches the $k$-th Gaussian component when

$$M_{k,t} = \begin{cases} 1, & \left| X_t - \mu_{k,t-1} \right| \le D\, \sigma_{k,t-1} \\ 0, & \text{otherwise} \end{cases} \tag{3}$$

$D$ is a confidence parameter, set to 3 in this algorithm. If $\left| X_t - \mu_{k,t-1} \right| > D\, \sigma_{k,t-1}$, then $M_{k,t} = 0$. The input pixel does not match the background Gaussian mixture model, so it is a foreground pixel and the corresponding mixture parameters are not updated. If $M_{k,t} = 1$, $X_t$ matches the Gaussian mixture model and the corresponding parameters are updated. The weight, mean and variance are updated as follows:

$$\omega_{k,t} = (1 - \alpha)\, \omega_{k,t-1} + \alpha\, M_{k,t} \tag{4}$$

$$\mu_{k,t} = (1 - \rho)\, \mu_{k,t-1} + \rho\, X_t \tag{5}$$

$$\sigma_{k,t}^2 = (1 - \rho)\, \sigma_{k,t-1}^2 + \rho\, (X_t - \mu_{k,t})^{\mathsf T} (X_t - \mu_{k,t}) \tag{6}$$

where $\rho = \alpha\, \eta(X_t \mid \mu_{k,t-1}, \sigma_{k,t-1})$ is the update rate, $\eta$ is the Gaussian probability density, and $\alpha$ is the background parameter update rate.
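The match test with confidence parameter D = 3 and the weight, mean and variance updates can be illustrated for a single grayscale pixel. The update rules follow the standard Stauffer–Grimson form. The Python sketch below makes several simplifications: each pixel carries one scalar intensity instead of an HSV vector, components are tested in stored order, and there is no component replacement or weight-based background ranking. As the text describes, an unmatched pixel leaves the mixture untouched. The class name `PixelGMM` and its parameters are hypothetical.

```python
import math

D = 3.0  # confidence parameter; the text sets D = 3


class PixelGMM:
    """Mixture of 1-D Gaussians for one grayscale pixel (illustrative sketch)."""

    def __init__(self, means, variances, weights, alpha=0.01):
        self.mu = list(means)        # component means mu_k
        self.var = list(variances)   # component variances sigma_k^2
        self.w = list(weights)       # component weights omega_k, summing to 1
        self.alpha = alpha           # background parameter update rate

    def update(self, x):
        """Test x against the components and update on a match; return True if matched."""
        # Match test: first component with |x - mu_k| <= D * sigma_k
        matched = next(
            (k for k in range(len(self.mu))
             if abs(x - self.mu[k]) <= D * math.sqrt(self.var[k])),
            None,
        )
        if matched is None:
            return False  # foreground pixel: mixture parameters are left unchanged
        # Weights move toward the indicator M_k (1 for the matched component, else 0)
        for k in range(len(self.w)):
            m_k = 1.0 if k == matched else 0.0
            self.w[k] = (1.0 - self.alpha) * self.w[k] + self.alpha * m_k
        k = matched
        # rho = alpha * eta(x | mu_k, sigma_k), with eta the Gaussian density
        eta = math.exp(-0.5 * (x - self.mu[k]) ** 2 / self.var[k]) / math.sqrt(
            2.0 * math.pi * self.var[k]
        )
        rho = self.alpha * eta
        # Mean update, then variance update using the freshly updated mean
        self.mu[k] = (1.0 - rho) * self.mu[k] + rho * x
        self.var[k] = (1.0 - rho) * self.var[k] + rho * (x - self.mu[k]) ** 2
        return True


pixel = PixelGMM(means=[100.0, 200.0], variances=[25.0, 25.0], weights=[0.7, 0.3])
matched = pixel.update(102.0)    # |102 - 100| <= 3 * 5, so component 0 matches
unmatched = pixel.update(150.0)  # 50 grey levels from both means: foreground
```

With α = 0.01, the matched mean moves only about 0.0015 grey levels toward 102, because ρ = α·η is tiny. This illustrates the slow convergence of the fixed-rate model discussed in the text.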

In the traditional Gaussian mixture model, the background update speed depends on the update rate $\alpha$. To suppress noise in the video and keep the model stable, $\alpha$ is usually set small. With a small $\alpha$, however, the mean and variance of the background model converge slowly, and the model takes a long time to adapt to changes in the environment. An adaptive update rate is therefore important. To address these problems, the invention improves the update process of background modeling as follows:

(1) First, the algorithm introduces a counter $c$ that records the number of valid pixels in the current frame that match a given model, and uses $c$ to compute the background update rate. $c$ is initialized to 1 and increases as more valid pixels are matched at each update. While $c$ is small, the update rate is large, which speeds up convergence of the model parameters during updating. As $c$ grows, the update rate gradually decreases and the model becomes stable.
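The text does not spell out the formula that links the matched-pixel counter (called `c` here) to the update rate. The sketch below therefore assumes one common choice from the adaptive-mixture literature: use 1/c while few pixels have matched and never go below the basic rate α, i.e. rate = max(α, 1/c). This reproduces the behavior described, large while c is small and decreasing toward α as c grows, but it is an assumption and not the patent's formula. The functions compare how quickly a running mean settles under the adaptive rate and under the fixed rate.

```python
def adaptive_rate(c, alpha):
    """Assumed form of the improved update rate: 1/c, floored at alpha."""
    return max(alpha, 1.0 / c)


def running_mean(samples, alpha, adaptive=True):
    """Update a mean with either the adaptive rate or the fixed rate alpha."""
    mu = 0.0
    c = 1  # matched-pixel counter, initialized to 1 as in the text
    for x in samples:
        rate = adaptive_rate(c, alpha) if adaptive else alpha
        mu = (1.0 - rate) * mu + rate * x
        c += 1  # one more matched sample
    return mu


fast = running_mean([10.0, 20.0, 30.0], alpha=0.01)                  # close to 20.0
slow = running_mean([10.0, 20.0, 30.0], alpha=0.01, adaptive=False)  # about 0.6
```

With rate 1/c, the update reproduces the exact arithmetic mean of the samples seen so far, which explains the fast start. Once 1/c falls below α, the model behaves like the classic fixed-rate mixture.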

(2) To prevent slow-moving objects from being absorbed into the background, the algorithm counts the consecutive frames for which a pixel has been classified as background and compares this count with a preset frame threshold. While the count is below the threshold, the pixel is still treated as a foreground pixel belonging to a slow-moving object, even if its pixel model has already become a background model because its weight has increased. Only when the count reaches the threshold, i.e. the pixel model has been classified as background continuously for long enough, is the pixel regarded as a background pixel.
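The slow-object rule amounts to a per-pixel run-length counter. The sketch below is one illustrative reading of the text. `model_says_background` is a hypothetical per-frame flag that records whether the pixel's mixture currently classifies it as background. `min_frames` stands for the preset frame threshold, whose value the source does not give.

```python
def persistent_labels(model_says_background, min_frames):
    """Label one pixel frame by frame, requiring a run of background frames."""
    labels = []
    run = 0  # consecutive frames for which the model has said "background"
    for is_bg in model_says_background:
        run = run + 1 if is_bg else 0
        # Below the threshold the pixel is still treated as a slow-moving object.
        labels.append("background" if is_bg and run >= min_frames else "foreground")
    return labels


labels = persistent_labels([True, True, True, False, True], min_frames=3)
```

A single foreground frame resets the run, so a vehicle that stops briefly and then moves on is never absorbed into the background.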

After improved Gaussian mixture modeling, the input image becomes a binary image in which white pixels mark foreground object regions. The image is then scanned from top to bottom and from left to right, and regions of connected white pixels are identified with a 4-neighborhood merging strategy. Connected regions whose size falls outside the upper and lower limits for vehicle size are discarded, which also suppresses interference from shadows. The remaining regions give the vehicle locations, which are marked with rectangular search boxes.
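The 4-neighborhood merging and size filtering can be sketched with a breadth-first flood fill. This minimal pure-Python version assumes the binary image is a list of rows of 0/1 values and that "vehicle size" means pixel area. `min_area` and `max_area` are hypothetical stand-ins for the lower and upper size limits, and shadow handling is not modeled.

```python
from collections import deque


def vehicle_boxes(mask, min_area, max_area):
    """Find 4-connected white regions and keep those of plausible vehicle size.

    mask: list of rows of 0/1 values. Returns (x_min, y_min, x_max, y_max)
    boxes in top-to-bottom, left-to-right scan order.
    """
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    boxes = []
    for y in range(h):  # scan top to bottom, left to right
        for x in range(w):
            if not mask[y][x] or seen[y][x]:
                continue
            seen[y][x] = True
            queue = deque([(y, x)])
            area, x0, y0, x1, y1 = 0, x, y, x, y
            while queue:  # breadth-first flood fill of one region
                cy, cx = queue.popleft()
                area += 1
                x0, x1 = min(x0, cx), max(x1, cx)
                y0, y1 = min(y0, cy), max(y1, cy)
                # 4-neighborhood: up, down, left, right (no diagonals)
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if min_area <= area <= max_area:  # discard regions of non-vehicle size
                boxes.append((x0, y0, x1, y1))
    return boxes


mask = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 0, 0],
    [0, 0, 1, 1, 1],
]
boxes = vehicle_boxes(mask, min_area=2, max_area=10)
```

The isolated pixel at the right edge of the second row is rejected by the area limit, while the two larger blobs survive as boxes.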

3. Feature extraction

The HSV color, grayscale and position features of the rectangular search box containing each vehicle are extracted and stored for video vehicle tracking.

(1) HSV color extraction:

Color-based tracking requires a reliable model of the color feature. The algorithm models the color information of the vehicle blocks detected by Gaussian mixture modeling with kernel density estimation, using a Gaussian kernel.

Given a sample space $\{x_i\}$, the color of each pixel $x_i$ is represented by a three-dimensional vector $(h_i, s_i, v_i)$. $\sigma_h$, $\sigma_s$ and $\sigma_v$ denote the bandwidths of $h$, $s$ and $v$, respectively.
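The text does not give the exact form of the color similarity, so the sketch below only illustrates the kernel-density idea under an assumption. The color similarity of two boxes is taken as the mean value of a product Gaussian kernel, with per-channel bandwidths σh, σs and σv, over all pixel pairs. Hue is treated as circular, which is an added detail, and HSV values are assumed to lie in [0, 1]. The default bandwidths are arbitrary.

```python
import math


def gaussian_kernel(d, sigma):
    """1-D Gaussian kernel with bandwidth sigma evaluated at offset d."""
    return math.exp(-0.5 * (d / sigma) ** 2) / (math.sqrt(2.0 * math.pi) * sigma)


def color_similarity(pixels_a, pixels_b, sigmas=(0.05, 0.1, 0.1)):
    """Mean product-kernel value over all pairs of HSV pixels (h, s, v in [0, 1])."""
    sigma_h, sigma_s, sigma_v = sigmas
    total = 0.0
    for ha, sa, va in pixels_a:
        for hb, sb, vb in pixels_b:
            dh = abs(ha - hb)
            dh = min(dh, 1.0 - dh)  # hue wraps around: 0.99 is close to 0.0
            total += (gaussian_kernel(dh, sigma_h)
                      * gaussian_kernel(sa - sb, sigma_s)
                      * gaussian_kernel(va - vb, sigma_v))
    return total / (len(pixels_a) * len(pixels_b))


red_a = [(0.00, 0.8, 0.6), (0.02, 0.8, 0.6)]
red_b = [(0.99, 0.8, 0.6)]  # red again, just across the hue wrap-around
blue = [(0.66, 0.8, 0.6)]
same = color_similarity(red_a, red_b)
different = color_similarity(red_a, blue)
```

The cost grows with the product of the two pixel counts, which is one reason to subsample the image first, as the interlaced extraction in the text does.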

(2) Grayscale feature extraction:

Grayscale features are extracted in the same way as HSV color features, with operations in HSV space replaced by operations in grayscale space.

(3) Position feature extraction:

The position coordinates are points on the central axis of the foreground vehicle's rectangular search box; the top, middle and bottom points are used. The position similarity function is built from the differences of these point coordinates between the previous and current frames. The motion vector is written $\mathbf{d} = (d_1, d_2, d_3)$, as shown in Fig. 3.

4. Vehicle tracking:

The key to multi-vehicle tracking is data association. The known tracked-vehicle sequence established in the previous frame must be related to the sequence of to-be-matched vehicles found in the current frame, confirming which vehicle in one sequence and which vehicle in the other are the same tracking model.

The algorithm uses the color, grayscale and position features of the target region. Tracking is achieved by matching these features between video frames, and each match is decided by maximum similarity.

4-1. Similarity definition

4-1-1. HSV color similarity function:

(7)

The kernel function is Gaussian:

$$K(x) = \frac{1}{\sqrt{2\pi}\, \sigma} \exp\!\left( -\frac{x^2}{2\sigma^2} \right) \tag{8}$$

with variances for the individual color channels

$$\sigma_h^2, \qquad \sigma_s^2, \qquad \sigma_v^2 \tag{9}$$

4-1-2. The grayscale similarity function is defined like the HSV color similarity function, with the operation space changed from HSV space to grayscale space.

4-1-3. For the position feature, a Kalman filter first predicts the vehicle's position in the current frame from its position in the previous frame. The predicted position is then compared with the vehicle position extracted in the current frame. When two vehicle models are far apart, the same vehicle moves only a relatively small distance between adjacent frames, so the position similarity function plays the dominant role and yields good tracking. During tracking, each tracked vehicle evaluates the position similarity function against its own model from the previous time step and also against the models of the other vehicles.
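The text does not specify the Kalman model. The sketch below assumes a constant-velocity model run independently on each image axis, with state (position, velocity), position-only measurements, and illustrative noise levels `q` and `r`. All of these are assumptions. The sketch shows the predict step that produces the expected position, which is then compared with the detected box.

```python
class AxisKalman:
    """Constant-velocity Kalman filter for one image axis (state: position, velocity)."""

    def __init__(self, pos, q=1.0, r=4.0):
        self.x = [pos, 0.0]                    # state estimate [position, velocity]
        self.P = [[100.0, 0.0], [0.0, 100.0]]  # state covariance (large = uncertain)
        self.q, self.r = q, r                  # process and measurement noise

    def predict(self, dt=1.0):
        """Propagate the state one step and return the predicted position."""
        p, v = self.x
        self.x = [p + dt * v, v]
        P = self.P
        # P <- F P F^T + Q with F = [[1, dt], [0, 1]] and Q = q * I
        p00 = P[0][0] + dt * (P[0][1] + P[1][0]) + dt * dt * P[1][1] + self.q
        p01 = P[0][1] + dt * P[1][1]
        p10 = P[1][0] + dt * P[1][1]
        p11 = P[1][1] + self.q
        self.P = [[p00, p01], [p10, p11]]
        return self.x[0]

    def update(self, z):
        """Correct the state with a measured position z (H = [1, 0])."""
        P = self.P
        s = P[0][0] + self.r                # innovation covariance
        k0, k1 = P[0][0] / s, P[1][0] / s   # Kalman gain
        y = z - self.x[0]                   # innovation
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        self.P = [[(1.0 - k0) * P[0][0], (1.0 - k0) * P[0][1]],
                  [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]


kf = AxisKalman(pos=0.0)
for z in [10.0, 20.0, 30.0, 40.0, 50.0]:  # vehicle center moving 10 px per frame
    kf.predict()
    kf.update(z)
predicted = kf.predict()  # expected position in the next frame, close to 60
```

One filter per axis, one for x and one for y, is the simplest choice. A joint 4-state filter would also capture correlations between the axes.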

The motion vector is $\mathbf{d} = (d_1, d_2, d_3)$, where $d_1$, $d_2$ and $d_3$ are the displacements of the top, middle and bottom axis points. The three components are mutually independent, so the position similarity function $S_p$ can be expressed as

$$S_p = p(d_1)\, p(d_2)\, p(d_3) \tag{10}$$

where

$$p(d_j) = \frac{1}{\sqrt{2\pi}\, \sigma} \exp\!\left( -\frac{d_j^2}{2\sigma^2} \right), \qquad j = 1, 2, 3 \tag{11}$$

and $\sigma$ is determined by the distance the vehicle model moves between the two frames.
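The product of three Gaussian factors can be sketched as follows, under stated assumptions. Boxes are (x0, y0, x1, y1) tuples, and the three points lie on the vertical center line. Each displacement is the Euclidean distance between a predicted point and an observed point. σ is supplied by the caller, because the text ties it to the inter-frame motion distance but gives no formula. The Gaussian's normalizing constant is dropped so that a perfect match scores 1. For a fixed σ this constant is the same for every candidate, so dropping it does not change which candidate ranks highest.

```python
import math


def axis_points(box):
    """Top, middle and bottom points on the vertical center line of (x0, y0, x1, y1)."""
    x0, y0, x1, y1 = box
    cx = (x0 + x1) / 2.0
    return [(cx, y0), (cx, (y0 + y1) / 2.0), (cx, y1)]


def position_similarity(predicted_box, observed_box, sigma):
    """Product of three independent (unnormalized) Gaussian factors, one per axis point."""
    score = 1.0
    for (px, py), (ox, oy) in zip(axis_points(predicted_box), axis_points(observed_box)):
        d_sq = (px - ox) ** 2 + (py - oy) ** 2  # squared displacement of one point
        score *= math.exp(-d_sq / (2.0 * sigma ** 2))
    return score


box = (10, 20, 30, 60)
same = position_similarity(box, box, sigma=5.0)
near = position_similarity(box, (12, 22, 32, 62), sigma=5.0)
far = position_similarity(box, (40, 80, 60, 120), sigma=5.0)
```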

The overall similarity function $S$ combines the HSV color similarity function $S_c$, the position similarity function $S_p$ and the grayscale similarity function $S_g$. $w_c$, $w_p$ and $w_g$ are the weight functions of $S_c$, $S_p$ and $S_g$, respectively.
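The text calls $w_c$, $w_p$ and $w_g$ weight functions but does not define them. The minimal sketch below assumes the simplest case, a normalized weighted sum with constant weights. The default weights are illustrative only.

```python
def fused_similarity(s_color, s_pos, s_gray, weights=(0.4, 0.4, 0.2)):
    """Combine S_c, S_p and S_g as a normalized weighted sum (assumed form)."""
    w_c, w_p, w_g = weights
    total = w_c + w_p + w_g
    return (w_c * s_color + w_p * s_pos + w_g * s_gray) / total
```

The three scores may live on very different scales; an unnormalized kernel-density value can exceed 1. In that case each term would probably need rescaling to a common range before weighting.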

4-2. Initialization. All vehicles detected in the current frame form the sequence of vehicles to be matched. If the current frame is the first frame after the improved Gaussian mixture model has been established, that frame's to-be-matched sequence is used to initialize the existing tracked-vehicle sequence.

4-3. Tracking:

Three thresholds are preset.

4-3-1. Each vehicle in the to-be-matched sequence of frame t is compared with every vehicle in the existing tracked-vehicle sequence. This finds the vehicle j with the largest similarity to the i-th to-be-matched vehicle of frame t. If this maximum similarity is greater than or equal to the similarity threshold, vehicle j of frame t-1 is judged to appear at the position of vehicle i in frame t, and the vehicle information is updated. If the maximum similarity is below the threshold, vehicle i of frame t is added to the vehicle buffer sequence as a possible new vehicle in the video.

4-3-2. All vehicles in the vehicle buffer sequence are counted. If a vehicle appears in the buffer sequence for a preset number of consecutive frames, it is regarded as a new vehicle in the video. It is added to the existing tracked-vehicle sequence, and processing returns to step 4-3-1. If a vehicle fails to appear for a preset number of consecutive frames, it is regarded as having left the monitored area and is deleted from both the vehicle buffer sequence and the existing tracked-vehicle sequence.
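Steps 4-2 to 4-3-2 can be sketched as a greedy association loop. This is one possible reading of the text and rests on several assumptions. `similarity` is any function that returns larger values for better matches, such as a fused score. `t_sim` is the similarity threshold, and `n_confirm` and `n_lost` stand for the two preset consecutive-frame counts. Buffered candidates are matched against later detections with the same similarity test. As in the text, one track may be claimed by more than one detection, since there is no one-to-one constraint. All class and parameter names are hypothetical.

```python
from dataclasses import dataclass
from itertools import count


@dataclass
class Track:
    tid: int
    feat: object     # latest detection features
    hits: int = 1    # consecutive frames seen (meaningful while buffered)
    misses: int = 0  # consecutive frames not seen


class Associator:
    def __init__(self, similarity, t_sim, n_confirm, n_lost):
        self.sim, self.t_sim = similarity, t_sim
        self.n_confirm, self.n_lost = n_confirm, n_lost
        self.tracks, self.buffer = [], []
        self._ids = count()
        self._initialized = False

    def _best(self, det, pool):
        """Return the entry of `pool` most similar to `det`, and its score."""
        best, best_s = None, float("-inf")
        for tr in pool:
            s = self.sim(det, tr.feat)
            if s > best_s:
                best, best_s = tr, s
        return best, best_s

    def step(self, detections):
        """Process one frame of detections; return the ids of confirmed tracks."""
        if not self._initialized:  # step 4-2: first frame seeds the track list
            self.tracks = [Track(next(self._ids), d) for d in detections]
            self._initialized = True
            return [t.tid for t in self.tracks]
        seen = set()
        for det in detections:
            tr, s = self._best(det, self.tracks)  # step 4-3-1
            if tr is not None and s >= self.t_sim:
                tr.feat, tr.misses = det, 0
                seen.add(tr.tid)
                continue
            # No track is similar enough: try buffered candidates not yet hit this frame.
            free = [b for b in self.buffer if b.tid not in seen]
            cand, s = self._best(det, free)
            if cand is not None and s >= self.t_sim:
                cand.feat, cand.hits, cand.misses = det, cand.hits + 1, 0
            else:
                cand = Track(next(self._ids), det)
                self.buffer.append(cand)
            seen.add(cand.tid)
        for tr in self.tracks + self.buffer:  # step 4-3-2 bookkeeping
            if tr.tid not in seen:
                tr.misses += 1
                tr.hits = 0
        confirmed = [b for b in self.buffer if b.hits >= self.n_confirm]
        self.tracks += confirmed
        self.buffer = [b for b in self.buffer
                       if b.hits < self.n_confirm and b.misses < self.n_lost]
        self.tracks = [t for t in self.tracks if t.misses < self.n_lost]
        return [t.tid for t in self.tracks]


def near(a, b):
    """Toy 1-D similarity: detections within 5 px count as the same vehicle."""
    return 1.0 if abs(a - b) <= 5 else 0.0


assoc = Associator(near, t_sim=0.5, n_confirm=2, n_lost=2)
frames = [[0.0], [3.0, 100.0], [6.0, 102.0], [9.0], [12.0]]
history = [assoc.step(f) for f in frames]
# history == [[0], [0], [0, 1], [0, 1], [0]]
```

In the toy run, vehicle 1 is buffered in frame 2, confirmed in frame 3 and dropped after two missed frames.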

A vehicle target in frame t may have a position similarity above a preset threshold with each of two or more vehicles in frame t-1. In that case, the target corresponds to two or more vehicle models from the previous frame, so mutual occlusion between vehicles is declared and block tracking is used to eliminate the effect of the occlusion on tracking.

The block tracking method works as follows. The rectangular search box of a vehicle from the previous frame is divided into blocks according to a block model (as shown in Fig. 4), and the HSV color feature of each block is extracted. Each block is labeled with the offset $(\Delta x_k, \Delta y_k)$ of its center from the center of the original vehicle search box. Here $\Delta x_k$ is the distance in the $x$ direction, $\Delta y_k$ is the distance in the $y$ direction, $k = 1, \dots, N$, and $N$ is the number of blocks. Each block is color-matched within the rectangular search box of the vehicle target in the current frame t. The $m$ blocks with the largest color-matching values then jointly determine where the center of the previous-frame vehicle lies inside the search box of the occluded target. The vehicle's search box in the current frame is obtained from the size of its search box in the previous frame. In this way, several mutually occluding vehicles can be separated.
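The block-tracking step can be sketched as block matching followed by center voting. To keep the example dependency-free, it makes several assumptions that are not in the source. Images are lists of rows of grey values, standing in for the per-block HSV feature. The block model is a plain rows × cols grid, whereas the layout in Fig. 4 may differ. Color matching is replaced by an exhaustive sum-of-absolute-differences search over the occluded search box. Boxes are (x, y, w, h) tuples, and `m` is the number of best-matching blocks that vote. Function names are hypothetical.

```python
def crop(img, x, y, w, h):
    """Return the w x h patch whose top-left corner is (x, y)."""
    return [row[x:x + w] for row in img[y:y + h]]


def sad(a, b):
    """Sum of absolute differences between two equally sized patches."""
    return sum(abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb))


def block_track(prev_img, prev_box, cur_img, search_box, rows=2, cols=2, m=3):
    """Estimate a vehicle's box inside an occluded search box by block voting."""
    x, y, w, h = prev_box
    bw, bh = w // cols, h // rows
    cx, cy = x + w / 2.0, y + h / 2.0  # center of the previous-frame box
    sx, sy, sw, sh = search_box
    votes = []
    for r in range(rows):
        for c in range(cols):
            bx, by = x + c * bw, y + r * bh
            patch = crop(prev_img, bx, by, bw, bh)
            # offset (dx, dy) of the block center from the box center
            dx, dy = bx + bw / 2.0 - cx, by + bh / 2.0 - cy
            best = None
            for ty in range(sy, sy + sh - bh + 1):
                for tx in range(sx, sx + sw - bw + 1):
                    cost = sad(patch, crop(cur_img, tx, ty, bw, bh))
                    if best is None or cost < best[0]:
                        # a match here implies a vehicle center at match center minus offset
                        best = (cost, tx + bw / 2.0 - dx, ty + bh / 2.0 - dy)
            votes.append(best)
    votes.sort(key=lambda v: v[0])  # lowest cost = best match
    top = votes[:m]
    ex = sum(v[1] for v in top) / len(top)
    ey = sum(v[2] for v in top) / len(top)
    # reuse the previous-frame box size around the estimated center
    return (ex - w / 2.0, ey - h / 2.0, w, h)


W = H = 10
prev = [[0] * W for _ in range(H)]
cur = [[0] * W for _ in range(H)]
for r in range(4):
    for c in range(4):
        prev[1 + r][1 + c] = 10 + 4 * r + c  # vehicle box (1, 1, 4, 4)
        cur[2 + r][3 + c] = 10 + 4 * r + c   # same vehicle moved to (3, 2)
for yy in range(4, 6):
    for xx in range(5, 7):
        cur[yy][xx] = 99  # another vehicle hides the bottom-right block
est = block_track(prev, (1, 1, 4, 4), cur, (0, 0, W, H))
```

The hidden block matches poorly and is outvoted. The three visible blocks agree on the new center, so the box is recovered even though a quarter of the vehicle is covered.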

Vehicles involved in an occlusion are then marked as occluded in the existing vehicle model sequence until they separate in some later frame. Occlusion separation is declared when the motion-model function values between a vehicle model marked as occluded in the previous frame and several vehicle models in the current frame exceed a preset threshold. Color-model similarity is then used to decide which stored, known tracking model each separated model corresponds to, and the occlusion mark is cleared after matching.

5. Improving the running efficiency of the algorithm

The current foreground image and the stored images are subsampled by interlaced extraction to reduce the amount of data. To balance accuracy against computation, the following rule is used: images whose resolution is already small are not subsampled, so that they are not distorted, and only higher-resolution images are subsampled. Image processing is performed on the subsampled images. Once the tracking result for the current frame has been obtained, the rectangle marking the tracked position is scaled by a factor of two to map it back onto the original image. This preserves the original tracking performance while greatly improving the running efficiency of the algorithm.
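The subsampling rule can be sketched as follows. The sketch assumes that "interlaced extraction" keeps every other row and every other column, which is what scaling the result box by two in both directions implies. It also assumes that "already small" is judged against a hypothetical size limit `max_small`, since the text gives no concrete resolution.

```python
def subsample(img, max_small=(320, 240)):
    """Return (image, scale). Images no larger than max_small (w, h) are kept as-is."""
    h, w = len(img), len(img[0])
    if w <= max_small[0] and h <= max_small[1]:
        return img, 1
    # keep every other row and every other column
    return [row[::2] for row in img[::2]], 2


def box_to_original(box, scale):
    """Map an (x, y, w, h) box found on the subsampled image back to full size."""
    x, y, w, h = box
    return (x * scale, y * scale, w * scale, h * scale)


frame = [[10 * r + c for c in range(8)] for r in range(6)]  # 8 x 6 toy image
small, scale = subsample(frame, max_small=(4, 4))
kept, same_scale = subsample(frame, max_small=(8, 6))
full_box = box_to_original((1, 1, 2, 1), scale)
```

Mapping back by a factor of two is only accurate to about one pixel, which is usually acceptable for a vehicle-sized search box.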

Claims (2)

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN201110257707 (CN102289948B) | 2011-09-02 | 2011-09-02 | Multi-characteristic fusion multi-vehicle video tracking method under highway scene |


Publications (2)

| Publication Number | Publication Date |
|---|---|
| CN102289948A | 2011-12-21 |
| CN102289948B | 2013-06-05 |

Family ID: 45336325

Family Applications (1)

| Application Number | Status | Priority Date | Filing Date | Title |
|---|---|---|---|---|
| CN 201110257707 (CN102289948B) | Expired - Fee Related | 2011-09-02 | 2011-09-02 | Multi-characteristic fusion multi-vehicle video tracking method under highway scene |

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN102289948B (en) |


Citations (3)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| US20090309966A1 (en)* | 2008-06-16 | 2009-12-17 | Chao-Ho Chen | Method of detecting moving objects |
| CN101800890A (en)* | 2010-04-08 | 2010-08-11 | 北京航空航天大学 | Multiple vehicle video tracking method in expressway monitoring scene |
| CN101976504A (en)* | 2010-10-13 | 2011-02-16 | 北京航空航天大学 | Multi-vehicle video tracking method based on color space information |

- 2011-09-02: application CN 201110257707 filed (CN102289948B); status: not active, Expired - Fee Related


Cited By (34)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN102663357A (en)* | 2012-03-28 | 2012-09-12 | 北京工业大学 | Color characteristic-based detection algorithm for stall at parking lot |
| CN102862574A (en)* | 2012-09-21 | 2013-01-09 | 上海永畅信息科技有限公司 | Method for realizing active safety of vehicle on the basis of smart phone |
| CN102862574B (en)* | 2012-09-21 | 2015-08-19 | 上海永畅信息科技有限公司 | The method of vehicle active safety is realized based on smart mobile phone |
| CN102915115A (en)* | 2012-09-25 | 2013-02-06 | 上海华勤通讯技术有限公司 | Method for adjusting display frame by eye |
| CN103136935B (en)* | 2013-01-11 | 2015-04-15 | 东南大学 | Method for tracking sheltered vehicles |
| CN103136935A (en)* | 2013-01-11 | 2013-06-05 | 东南大学 | Method for tracking sheltered vehicles |
| CN103473566A (en)* | 2013-08-27 | 2013-12-25 | 东莞中国科学院云计算产业技术创新与育成中心 | Multi-scale-model-based vehicle detection method |
| CN103473566B (en)* | 2013-08-27 | 2016-09-14 | 东莞中国科学院云计算产业技术创新与育成中心 | A kind of vehicle checking method based on multiple dimensioned model |
| CN103679214A (en)* | 2013-12-20 | 2014-03-26 | 华南理工大学 | Vehicle detection method based on online area estimation and multi-feature decision fusion |
| CN103679214B (en)* | 2013-12-20 | 2017-10-20 | 华南理工大学 | Vehicle checking method based on online Class area estimation and multiple features Decision fusion |
| CN104318761A (en)* | 2014-08-29 | 2015-01-28 | 华南理工大学 | Highway-scene-based detection and vehicle detection tracking optimization method |
| CN106446824A (en)* | 2016-09-21 | 2017-02-22 | 防城港市港口区思达电子科技有限公司 | Vehicle detection and tracking method |
| CN106780539A (en)* | 2016-11-30 | 2017-05-31 | 航天科工智能机器人有限责任公司 | Robot vision tracking |
| CN106780539B (en)* | 2016-11-30 | 2019-08-20 | 航天科工智能机器人有限责任公司 | Robot Vision Tracking Method |
| CN108460968A (en)* | 2017-02-22 | 2018-08-28 | 中兴通讯股份有限公司 | A kind of method and device obtaining traffic information based on car networking |
| CN109426791A (en)* | 2017-09-01 | 2019-03-05 | 深圳市金溢科技股份有限公司 | A kind of polynary vehicle match method of multi-site, server and system |
| CN109426791B (en)* | 2017-09-01 | 2022-09-16 | 深圳市金溢科技股份有限公司 | Multi-site and multi-vehicle matching method, server and system |
| CN109359518A (en)* | 2018-09-03 | 2019-02-19 | 惠州学院 | A kind of infrared video moving object recognition method, system and alarm device |
| CN109472767A (en)* | 2018-09-07 | 2019-03-15 | 浙江大丰实业股份有限公司 | Stage lamp miss status analysis system |
| CN109472767B (en)* | 2018-09-07 | 2022-02-08 | 浙江大丰实业股份有限公司 | Stage lamp missing state analysis system |
| CN111260929A (en)* | 2018-11-30 | 2020-06-09 | 西安宇视信息科技有限公司 | Vehicle tracking abnormity detection method and device |
| CN110264493B (en)* | 2019-06-17 | 2021-06-18 | 北京影谱科技股份有限公司 | A method and device for tracking multi-target objects in motion state |
| CN110264493A (en)* | 2019-06-17 | 2019-09-20 | 北京影谱科技股份有限公司 | A kind of multiple target object tracking method and device under motion state |
| CN110542908B (en)* | 2019-09-09 | 2023-04-25 | 深圳市海梁科技有限公司 | Laser radar dynamic object sensing method applied to intelligent driving vehicle |
| CN110542908A (en)* | 2019-09-09 | 2019-12-06 | 阿尔法巴人工智能(深圳)有限公司 | laser radar dynamic object perception method applied to intelligent driving vehicle |
| CN111597871A (en)* | 2020-03-27 | 2020-08-28 | 广州杰赛科技股份有限公司 | Vehicle tracking method and device, terminal equipment and computer storage medium |
| CN111882582B (en)* | 2020-07-24 | 2021-10-08 | 广州云从博衍智能科技有限公司 | Image tracking correlation method, system, device and medium |
| CN111882582A (en)* | 2020-07-24 | 2020-11-03 | 广州云从博衍智能科技有限公司 | Image tracking correlation method, system, device and medium |
| CN113516690A (en)* | 2020-10-26 | 2021-10-19 | 阿里巴巴集团控股有限公司 | Image detection method, device, device and storage medium |
| CN112382104A (en)* | 2020-11-13 | 2021-02-19 | 重庆盘古美天物联网科技有限公司 | Roadside parking management method based on vehicle track analysis |
| CN112532938B (en)* | 2020-11-26 | 2021-08-31 | 武汉宏数信息技术有限责任公司 | A video surveillance system based on big data technology |
| CN112532938A (en)* | 2020-11-26 | 2021-03-19 | 武汉宏数信息技术有限责任公司 | Video monitoring system based on big data technology |
| CN112597830A (en)* | 2020-12-11 | 2021-04-02 | 国汽(北京)智能网联汽车研究院有限公司 | Vehicle tracking method, device, equipment and computer storage medium |
| CN114241398A (en)* | 2022-02-23 | 2022-03-25 | 深圳壹账通科技服务有限公司 | Vehicle damage assessment method, device, equipment and storage medium based on artificial intelligence |

Also Published As

| Publication number | Publication date |
|---|---|
| CN102289948B (en) | 2013-06-05 |

Similar Documents

| Publication | Title |
|---|---|
| CN102289948B (en) | Multi-characteristic fusion multi-vehicle video tracking method under highway scene |
| CN104134222B (en) | Traffic flow monitoring image detecting and tracking system and method based on multi-feature fusion |
| CN103164858B (en) | Adhesion crowd based on super-pixel and graph model is split and tracking |
| Wang et al. | Review on vehicle detection based on video for traffic surveillance |
| CN101950426B (en) | A vehicle relay tracking method in a multi-camera scene |
| CN102044151B (en) | Night vehicle video detection method based on illumination visibility recognition |
| CN106952286B (en) | Object Segmentation Method Based on Motion Saliency Map and Optical Flow Vector Analysis in Dynamic Background |
| CN104091348B (en) | The multi-object tracking method of fusion marked feature and piecemeal template |
| CN104978567B (en) | Vehicle checking method based on scene classification |
| CN107563310B (en) | A method of illegal lane change detection |
| KR101191308B1 (en) | Road and lane detection system for intelligent transportation system and method therefor |
| CN103150903B (en) | Video vehicle detection method for adaptive learning |
| Timofte et al. | Combining traffic sign detection with 3D tracking towards better driver assistance |
| CN102592454A (en) | Intersection vehicle movement parameter measuring method based on detection of vehicle side face and road intersection line |
| CN105260699A (en) | Lane line data processing method and lane line data processing device |
| CN111860509B (en) | A two-stage method for accurate extraction of unconstrained license plate regions from coarse to fine |
| CN105930833A (en) | Vehicle tracking and segmenting method based on video monitoring |
| CN104835147A (en) | Method for detecting crowded people flow in real time based on three-dimensional depth map data |
| CN101364347A (en) | A video-based detection method for vehicle control delays at intersections |
| CN103268470A (en) | Real-time statistics method of video objects based on arbitrary scenes |
| CN110969131B (en) | A method of counting subway people flow based on scene flow |
| CN103700106A (en) | Distributed-camera-based multi-view moving object counting and positioning method |
| CN103577832B (en) | A kind of based on the contextual people flow rate statistical method of space-time |
| CN107742306A (en) | A Moving Target Tracking Algorithm in Intelligent Vision |
| WO2017161544A1 (en) | Single-camera video sequence matching based vehicle speed measurement method and system |

Legal Events

| Code | Title | Description |
|---|---|---|
| C06 | Publication | |
| PB01 | Publication | |
| C10 | Entry into substantive examination | |
| SE01 | Entry into force of request for substantive examination | |
| C14 | Grant of patent or utility model | |
| GR01 | Patent grant | |
| CF01 | Termination of patent right due to non-payment of annual fee | Granted publication date: 2013-06-05; termination date: 2014-09-02 |
| EXPY | Termination of patent right or utility model | |