CN101867813B - Multi-view video coding method oriented for interactive application - Google Patents

Multi-view video coding method oriented for interactive applicationDownload PDFInfo

- Publication number

- CN101867813B CN101867813BCN 201010155912CN201010155912ACN101867813BCN 101867813 BCN101867813 BCN 101867813BCN 201010155912CN201010155912CN 201010155912CN 201010155912 ACN201010155912 ACN 201010155912ACN 101867813 BCN101867813 BCN 101867813B

- Authority

- CN

- China

- Prior art keywords

- viewpoint

- frame

- key frame

- mode

- macroblock

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Expired - Fee Related

Links

- 238000000034methodMethods0.000titleclaimsabstractdescription33

- 230000002452interceptive effectEffects0.000titleclaimsabstractdescription23

- 230000033001locomotionEffects0.000claimsabstractdescription123

- 239000013598vectorSubstances0.000claimsabstractdescription44

- 230000003044adaptive effectEffects0.000claimsabstractdescription14

- 230000011218segmentationEffects0.000claimsdescription3

- 238000004364calculation methodMethods0.000claimsdescription2

- 230000002708enhancing effectEffects0.000claimsdescription2

- 230000006835compressionEffects0.000abstractdescription16

- 238000007906compressionMethods0.000abstractdescription16

- 230000009191jumpingEffects0.000abstract1

- 238000010586diagramMethods0.000description9

- 238000005516engineering processMethods0.000description7

- 230000002123temporal effectEffects0.000description7

- 230000003068static effectEffects0.000description4

- 230000005540biological transmissionEffects0.000description3

- 238000005457optimizationMethods0.000description3

- 238000005192partitionMethods0.000description3

- 238000004458analytical methodMethods0.000description1

- 230000009286beneficial effectEffects0.000description1

- 230000008878couplingEffects0.000description1

- 238000010168coupling processMethods0.000description1

- 238000005859coupling reactionMethods0.000description1

- 230000000694effectsEffects0.000description1

- 230000003993interactionEffects0.000description1

- 238000012544monitoring processMethods0.000description1

- 238000011084recoveryMethods0.000description1

- 238000009877renderingMethods0.000description1

Images

Landscapes

- Compression Or Coding Systems Of Tv Signals (AREA)

Abstract

Description

Translated fromChinese技术领域technical field

本发明涉及到多视点视频信号的编码压缩方法,尤其是涉及基于运动信息跳过编码的面向交互式应用的视频信号压缩方法。The invention relates to a method for encoding and compressing multi-viewpoint video signals, in particular to an interactive application-oriented video signal compression method based on motion information skipping encoding.

背景技术Background technique

多视点视频是当前多媒体领域的研究热点。作为FTV(自由视点电视)、3DTV(三维电视)等三维音视频应用中的核心技术,多视点视频技术旨在解决3D交互式视频的压缩、交互、存储和传输等问题。多视点视频信号是由相机阵列对实际场景进行拍摄得到的一组视频信号,它能提供拍摄场景不同角度的视频图像信息,利用其中的一个或多个视频信息可以合成任意视点的信息,使用户在观看时可以任意改变视点或者视角,以实现对同一场景进行的多方位体验。因此,多视点视频将广泛应用于面向带宽与高密度存储介质的交互式多媒体应用领域,如数字娱乐、远程监控、远程教育等。Multi-view video is a research hotspot in the field of multimedia. As the core technology in 3D audio and video applications such as FTV (Free Viewpoint Television) and 3DTV (3D Television), multi-viewpoint video technology aims to solve problems such as compression, interaction, storage and transmission of 3D interactive video. The multi-viewpoint video signal is a group of video signals obtained by shooting the actual scene by the camera array. It can provide video image information from different angles of the shooting scene, and use one or more of the video information to synthesize the information of any viewpoint. The point of view or angle of view can be changed arbitrarily when watching, so as to realize the multi-directional experience of the same scene. Therefore, multi-viewpoint video will be widely used in interactive multimedia applications for bandwidth and high-density storage media, such as digital entertainment, remote monitoring, and distance education.

多视点视频系统可以进行多视点视频信号的采集、编码压缩、传输、接收、解码、显示等,而其中多视点视频信号的编码压缩是整个系统的核心部分。一方面,多视点视频信号存在着数据量巨大,不利于网络传输和存储,如何高效地压缩多视点视频数据是其应用面临的一个重要挑战。另一方面,多视点视频是一种具有立体感和交互操作功能的视频序列,在保证视频高压缩率同时,也要关注其交互式性能,使系统具有灵活的随机访问、部分解码和绘制等性能。The multi-view video system can collect, code and compress, transmit, receive, decode, and display multi-view video signals, among which the code and compression of multi-view video signals is the core part of the whole system. On the one hand, multi-view video signals have a huge amount of data, which is not conducive to network transmission and storage. How to efficiently compress multi-view video data is an important challenge for its application. On the other hand, multi-view video is a video sequence with stereoscopic and interactive operation functions. While ensuring high video compression rate, attention should also be paid to its interactive performance, so that the system has flexible random access, partial decoding and rendering, etc. performance.

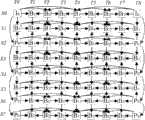

利用多视点视频信号的时间相关性、视点间相关性,采用运动补偿预测、视差补偿预测是进行多视点视频信号编码压缩的基本思路。目前大量的研究集中于寻找一种能最大限度地去除多视点视频序列时间上和视点间冗余的编码方案,如图2所示的基于空间-时间分层B帧的多视点视频编码方案。但是对于大多数多视点视频序列而言,虽然存在时间相关性和视点间相关性,但时间相关性却占了主导地位,所以对于基于空间-时间的分层B帧预测结构,在关键帧图像利用视点间预测关系的情况下,再对非关键帧进行视点间预测对压缩效率改善不明显,并且反过来会大大增加编码复杂度以及在解码端由于视点间的密切耦合会大大降低了视点的随机访问性能。Utilizing the time correlation and inter-view correlation of multi-view video signals, motion compensation prediction and parallax compensation prediction are the basic ideas for coding and compressing multi-view video signals. At present, a lot of research is focused on finding a coding scheme that can remove the temporal and inter-view redundancy of multi-view video sequences to the greatest extent, such as the multi-view video coding scheme based on space-time layered B frames as shown in Figure 2 . However, for most multi-view video sequences, although there are temporal correlations and inter-view correlations, the temporal correlations are dominant, so for the space-time based hierarchical B-frame prediction structure, in the key frame image In the case of using the inter-view prediction relationship, inter-view prediction for non-key frames will not improve the compression efficiency significantly, and in turn will greatly increase the coding complexity and greatly reduce the view at the decoding end due to the close coupling between the views. Random access performance.

另外,多视点视频序列除了具有相似的视频内容外,还具有相似的运动特性,即其运动信息同样具有高度的空间相关性,特别对运动剧烈的区域,其运动信息的空间相关性要大于时间相关性。运动信息跳过模式利用了这个原理,即通过全局视差矢量找到相邻视点中当前宏块的对应宏块,并导出对应宏块的运动信息作为当前宏块的运动信息。运动信息跳过模式对传统的运动补偿预测进行优化。当运动信息跳过模式为当前编码宏块的最佳编码模式时,只需要用一个模式标志位表明,而不需要对残差进行编码,从而能减少需要传输的比特数,提高压缩效率。然而通过全局视差有时不能得到当前编码宏块的最佳运动匹配信息。In addition, in addition to similar video content, multi-view video sequences also have similar motion characteristics, that is, their motion information also has a high degree of spatial correlation, especially for areas with intense motion, the spatial correlation of their motion information is greater than that of time Correlation. The motion information skip mode uses this principle, that is, finds the corresponding macroblock of the current macroblock in the adjacent view through the global disparity vector, and derives the motion information of the corresponding macroblock as the motion information of the current macroblock. Motion information skip mode optimizes traditional motion compensated prediction. When the motion information skipping mode is the best coding mode for the currently coded macroblock, only one mode flag bit needs to be used to indicate it, and there is no need to code the residual, so that the number of bits to be transmitted can be reduced and the compression efficiency can be improved. However, sometimes the best motion matching information of the current coded macroblock cannot be obtained through the global disparity.

针对以上问题,本发明采用一种面向交互式应用的多视点视频编码方法,在保证高压缩效率的条件下获得了低复杂度利低时延随机访问性能。In view of the above problems, the present invention adopts a multi-viewpoint video coding method oriented to interactive applications, and obtains random access performance with low complexity and low delay under the condition of ensuring high compression efficiency.

发明内容Contents of the invention

技术问题:本发明所要解决的技术是提供一种面向交互式应用的多视点视频编码方法,在保证高压缩率的同时,改善多视点视频的交互式性能。Technical problem: The technology to be solved by the present invention is to provide a multi-view video coding method for interactive applications, which can improve the interactive performance of multi-view video while ensuring a high compression rate.

技术方案:本发明面向交互式应用的多视点视频编码方法,包括以下步骤:Technical solution: the present invention is oriented to interactive application multi-viewpoint video encoding method, comprising the following steps:

步骤1:多视点视频序列分为基本视点和增强视点,每个视点序列都分为关键帧和非关键帧,选择中间视点为基本视点,并确定各视频序列关键帧的视点间参考关系,Step 1: Multi-view video sequences are divided into basic viewpoints and enhanced viewpoints, each viewpoint sequence is divided into key frames and non-key frames, select the intermediate viewpoint as the basic viewpoint, and determine the reference relationship between viewpoints of key frames of each video sequence,

步骤2:基本视点不参考其它视点,关键帧采用帧内预测编码方法;非关键帧选择本视点内的时间方向帧为参考帧,进行运动补偿预测编码,Step 2: The basic viewpoint does not refer to other viewpoints, the key frame adopts the intra-frame predictive coding method; the non-key frame selects the time direction frame in this viewpoint as the reference frame, and performs motion compensation predictive coding,

步骤3:增强视点的关键帧参考其它视点的关键帧,采用帧内预测编码方法或视差补偿预测法进行编码,同时计算出与参考视点的关键帧之间的全局视差,Step 3: Enhancing the key frames of the viewpoint with reference to the key frames of other viewpoints, using the intra-frame prediction coding method or the parallax compensation prediction method to encode, and calculating the global disparity between the key frames of the reference viewpoint,

步骤4:对增强视点的非关键帧,利用前后相邻两个关键帧图像的全局视差进行插值计算得到每个非关键帧图像与参考视点同一时刻非关键帧之间的全局视差,Step 4: For the non-key frame of the enhanced viewpoint, the global parallax between each non-key frame image and the non-key frame at the same moment of the reference viewpoint is calculated by interpolation using the global disparity of two adjacent key frame images,

步骤5:增强视点的非关键帧不进行视差补偿预测,只进行运动补偿预测,在传统的H.264的宏块编码模式的基础上,根据运动信息的视点间高度相关性原理,采用自适应运动矢量精细化的运动信息跳过编码对图像进行编码,Step 5: The non-key frame of the enhanced viewpoint does not perform parallax compensation prediction, but only performs motion compensation prediction. On the basis of the traditional H.264 macroblock coding mode, according to the principle of high correlation between viewpoints of motion information, adaptive The refined motion information of the motion vector skips the encoding to encode the image,

步骤6:增强视点非关键帧编码后设置每个宏块的运动信息跳过模式标志位,并写入码流发送到解码端。Step 6: Set the motion information skip mode flag bit of each macroblock after encoding the non-key frame of the enhanced view, write the code stream and send it to the decoding end.

步骤7:在解码端进行图像重建,首先根据判断当前帧图像类型,对参考视点进行部分解码,然后再解码当前帧图像。Step 7: Perform image reconstruction at the decoding end. First, according to the judgment of the image type of the current frame, partially decode the reference viewpoint, and then decode the image of the current frame.

对多视点视频序列分为基本视点和增强视点,基本视点不参考其它视点,增强视点可参考其它视点,包括基本视点或其它增强视点。Multi-viewpoint video sequences are divided into basic viewpoint and enhanced viewpoint. The basic viewpoint does not refer to other viewpoints, but the enhanced viewpoint can refer to other viewpoints, including the basic viewpoint or other enhanced viewpoints.

所述步骤4中对增强视点的非关键帧,利用前后相邻两个关键帧图像的全局视差进行插值计算得到每个非关键帧图像与参考视点同一时刻非关键帧之间的全局视差:前后相邻两个关键帧图像,即为当前图像组的第一帧以及下一个图像组的第一帧,全局视差的插值计算是以当前非关键帧在图像组中的序列号为依据的。In the

所述步骤5中增强视点的非关键帧根据运动信息的视点间高度相关性原理,采用自适应运动矢量精细化的运动信息跳过模式对图像进行编码:首先通过全局视差找到当前编码宏块在参考视点中的对应宏块,并导出对应宏块的运动信息,包括宏块分割模式、运动矢量等,作为当前宏块的候选编码模式和运动矢量;然后对当前宏块和对应宏块进行图像区域判断,决定是否扩大搜索范围,即是否要把参考视点中对应宏块的8个相邻宏块的编码模式和运动矢量,作为当前宏块的候选编码模式和运动矢量;最后利用所有候选编码模式和运动矢量,在本视点内进行运动补偿预测。In the

自适应运动矢量精细化的运动信息跳过模式对当前宏块和对应宏块进行图像区域判断,决定是否扩大搜索范围:如果当前宏块通过传统运动补偿预测后得到的最佳编码模式以及参考视点的对应宏块的编码模式都属于背景静态模式,则不扩大搜索范围,即将对应宏块的运动信息作为当前编码宏块的运动信息;否则则扩大搜索范围。The motion information skip mode of adaptive motion vector refinement judges the image area of the current macroblock and the corresponding macroblock, and decides whether to expand the search range: if the current macroblock obtains the best coding mode and reference viewpoint through traditional motion compensation prediction If the encoding modes of the corresponding macroblocks belong to the background static mode, the search range is not expanded, that is, the motion information of the corresponding macroblock is used as the motion information of the currently coded macroblock; otherwise, the search range is expanded.

在解码端进行图像重建,首先根据判断帧图像类型,对参考视点进行部分解码,然后再解码当前图像:要解码关键帧图像,要先对其参考视点的关键帧进行完全解码;要解码非关键帧图像,只需先对其参考视点的对应帧进行解析得到参考帧的运动信息,不需要对参考视点的对应帧进行完全解码。For image reconstruction at the decoding end, first, according to the judgment frame image type, the reference viewpoint is partially decoded, and then the current image is decoded: to decode the key frame image, it is necessary to fully decode the key frame of the reference viewpoint; to decode the non-key frame image For a frame image, it is only necessary to analyze the corresponding frame of the reference viewpoint to obtain the motion information of the reference frame, and it is not necessary to completely decode the corresponding frame of the reference viewpoint.

有益效果:本发明针对多视点视频的交互式应用,提出了一种面对交互式应用的多视点视频编码方法,修改了帧图像视点间的预测关系,并采用一种自适应运动矢量精细化的运动信息跳过模式,优化非关键帧图像的运动补偿预测的性能。Beneficial effects: the present invention proposes a multi-view video encoding method for interactive applications aimed at the interactive application of multi-view video, modifies the prediction relationship between the viewpoints of frame images, and adopts an adaptive motion vector refinement method The motion information skip mode optimizes the performance of motion compensated prediction for non-key frame images.

与现有技术相比,本发明的优点在于在保证高压缩效率的前提下,优化多视点视频随机访问性能,支持快速的视点切换,从而提高多视点视频的交互式性能。Compared with the prior art, the present invention has the advantages of optimizing the random access performance of multi-viewpoint video and supporting fast viewpoint switching under the premise of ensuring high compression efficiency, thereby improving the interactive performance of multi-viewpoint video.

附图说明Description of drawings

图1为多视点视频系统示意图;Fig. 1 is a schematic diagram of a multi-viewpoint video system;

图2为空间-时间分层B帧多视点视频预测结构示意图;Fig. 2 is a schematic structural diagram of space-time layered B-frame multi-viewpoint video prediction;

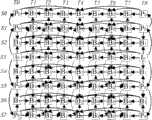

图3为本发明的面向交互视应用的多视点视频预测结构示意图;FIG. 3 is a schematic structural diagram of multi-viewpoint video prediction for interactive view applications according to the present invention;

图4为运动信息跳过模式预测编码示意图;FIG. 4 is a schematic diagram of motion information skip mode predictive coding;

图5为H.264/AVC标准中可变宏块分割图;Figure 5 is a variable macroblock segmentation diagram in the H.264/AVC standard;

图6为运动信息跳过模式的自适应运动矢量精细化算法的流程图;Fig. 6 is the flowchart of the adaptive motion vector refinement algorithm of motion information skip mode;

图7为自适应运动矢量精细化算法示意图;Fig. 7 is a schematic diagram of an adaptive motion vector refinement algorithm;

图8为增强视点的解码流程图;Fig. 8 is a decoding flowchart of an enhanced viewpoint;

图9为测试用例Ballroom在不同多视点视频编码方法下的率失真曲线;Figure 9 is the rate-distortion curve of the test case Ballroom under different multi-view video coding methods;

图10为测试用例Racel在不同多视点视频编码方法下的率失真曲线。FIG. 10 is the rate-distortion curves of the test case Racel under different multi-view video coding methods.

方法一为基于空间-时间分层B帧的多视点视频编码方案,The first method is a multi-view video coding scheme based on space-time layered B frames,

方法二为图3中对非关键帧不进行视点间预测的多视点视频编码方案。The second method is a multi-view video coding scheme in which inter-view prediction is not performed on non-key frames in FIG. 3 .

方法三为图3中对非关键帧应用原始运动信息跳过模式。The third method is to apply the original motion information skipping mode to the non-key frames in FIG. 3 .

方法四为图3中对非关键帧应用基于自适应运动矢量精细化的运动信息跳过模式。The fourth method is to apply a motion information skip mode based on adaptive motion vector refinement to non-key frames in FIG. 3 .

具体实施方式Detailed ways

以下结合附图实施例对本发明作进一步详细描述,这里以8×8多视点图像组结构为例(如图3所示,每个图像组共有8个视点、8个时刻,共64帧)。The present invention will be further described in detail below in conjunction with the embodiments of the accompanying drawings. Here, the structure of an 8×8 multi-viewpoint image group is taken as an example (as shown in FIG. 3 , each image group has 8 viewpoints, 8 moments, and a total of 64 frames).

参照图1,多视点视频编码中,由具有N个摄像机的多视点采集系统从不同角度拍摄同一场景得到的一组视频,即N路原始视点;N路原始视点视频经过多视点视频编解码系统后得到N路恢复视点。多视点视频编解码系统中,发送端通过多视点视频编码器,在保证信息质量和传输安全的情况下,编码压缩数据,传输到接收端后,由多视点视频解码器解码。Referring to Figure 1, in multi-viewpoint video coding, a group of videos obtained by shooting the same scene from different angles by a multi-viewpoint acquisition system with N cameras, that is, N-channel original viewpoints; N-channel original viewpoint videos pass through the multi-viewpoint video encoding and decoding system Afterwards, N-way recovery viewpoints are obtained. In the multi-view video codec system, the sending end uses the multi-view video encoder to encode and compress the data while ensuring the information quality and transmission security, and after being transmitted to the receiving end, it is decoded by the multi-view video decoder.

参照图3,给出了本发明的一种面向交互式应用的图像组GOP长度为8的多视点视频预测结构示意图。首先选择中间视点为基本视点;其次,确定关键帧图像视点间的参考关系为P-P-B-I-B-P-B-P,则此多视点视频序列的编码顺序为S4-S2-S3-S1-S6-S5-S8-S7;而对非关键帧只进行运动补偿预测法,在本视点内的参考关系采用分层B帧预测结构单视点内的预测关系,在视点间根据对应的关键帧图像的视点间参考关系,利用视点间参考图像的运动信息依赖性原理,运用运动信息跳过模式编码视点图像(如图3中虚线所示),模式标志位为motion_skip_flag。编码后获得的当前图像的运动信息都存储在运动信息缓存区中作为后续图像编码的信息参考。Referring to FIG. 3 , it is a schematic structural diagram of a multi-viewpoint video prediction structure for an interactive application-oriented GOP of pictures GOP length of 8 according to the present invention. First select the middle viewpoint as the basic viewpoint; secondly, determine the reference relationship between the viewpoints of the key frame images as P-P-B-I-B-P-B-P, then the encoding sequence of this multi-viewpoint video sequence is S4-S2-S3-S1-S6-S5-S8-S7; Non-key frames only perform motion compensation prediction method, and the reference relationship in this viewpoint adopts the prediction relationship in a single viewpoint of the hierarchical B-frame prediction structure, and uses the inter-viewpoint reference relationship between viewpoints according to the inter-viewpoint reference relationship of the corresponding key frame image The motion information dependence principle of the image uses the motion information skip mode to encode the viewpoint image (as shown by the dotted line in Figure 3), and the mode flag is motion_skip_flag. The motion information of the current image obtained after encoding is stored in the motion information buffer as an information reference for subsequent image encoding.

参照图4,给出了运动信息跳过模式的预测编码示意图。运动信息跳过模式主要分成两个步骤:Referring to FIG. 4 , a schematic diagram of predictive coding in the motion information skip mode is given. The motion information skip mode is mainly divided into two steps:

(1)通过GDV找到相邻视点的对应宏块。(1) Find the corresponding macroblocks of adjacent viewpoints through GDV.

(2)从这个对应宏块中拷贝其运动信息为当前宏块的运动信息,包括宏块分割模式、运动矢量以及图像参考索引号。(2) Copy the motion information of the corresponding macroblock as the motion information of the current macroblock, including macroblock partition mode, motion vector and image reference index number.

非关键帧处的全局视差则是对前后相邻的两个关键帧的全局视差加权平均得到的,如式(1)所示。The global disparity at the non-key frame is obtained by weighting the global disparity of two adjacent key frames, as shown in formula (1).

其中,GDVahead和GDVbehind为当前非关键帧前后相邻两个关键帧的全局视差矢量,POCcur、POCahead和POCbehind分别表示当前非关键帧、前关键帧和后关键帧在时间轴上的图像序列号。Among them, GDVahead and GDVbehind are the global disparity vectors of two adjacent key frames before and after the current non-key frame, and POCcur , POCahead and POCbehind represent the current non-key frame, front key frame and rear key frame on the time axis respectively. image serial number.

图5为H.264/AVC标准中可变宏块分割技术。一般来说,大尺寸的宏块编码模式通常用于背景静态区域,如P_Skip、B_skip、Direct、Inter 16×16编码模式。而小尺寸的宏块编码模式通常用于前景运动区域,如Inter 16×8、Inter 8×16、Inter 8×8等编码模式。则我们称P_Skip、B_skip、Direct以及Inter 16×16编码模式为背景静态模式,其他编码模式则为前景运动模式。FIG. 5 shows the variable macroblock segmentation technology in the H.264/AVC standard. Generally speaking, large-size macroblock coding modes are usually used in background static areas, such as P_Skip, B_skip, Direct,

图6为运动信息跳过模式的自适应运动矢量精细化算法的流程图,该算法的主要步骤为:Fig. 6 is the flowchart of the adaptive motion vector refinement algorithm of motion information skip mode, and the main steps of this algorithm are:

1)对非关键帧图像进行传统的运动补偿预测,其中当前宏块的运动矢量预测值MVP为其相邻宏块的运动矢量的中值,通过率失真最优化技术得到最佳的编码宏块模式MODEcur_opt和运动矢量MVcur_opt。1) Perform traditional motion compensation prediction on non-key frame images, where the motion vector predictorMVP of the current macroblock is the median value of the motion vectors of its adjacent macroblocks, and the best coding macro is obtained through rate-distortion optimization technology Block mode MODEcur_opt and motion vector MVcur_opt .

通过全局视差得到的参考视点的对应宏块,提取该对应宏块的运动信息,即宏块编码模式MODEco以及运动矢量MVco。从参考视点中得到当前宏块的候选编码模式和运动矢量。若MODEcur_opt和MODEco相同且都属于背景静态模式,则可判断通过全局视差所导出的运动信息是准确的,则对应宏块导出的编码模式MODEco和MVco直接作为当前宏块的候选编码模式和运动矢量;否则认为通过全局视差所得到的运动信息是不精确的,此时则扩大搜索窗口,提取对应宏块的MODEco和MVco,同时将该对应宏块的8个相邻宏块的编码模式和MV提取出来,作为当前宏块的候选编码模式和运动矢量。对于一些编码模式,每个宏块被分割成多个子块,则每个子块拥有各自的运动矢量,假如所得到的对应宏块的宏块分割模式为16×8模式,则当前宏块也被分割成两个16×8,并分别提取各自对应的运动矢量,其他模式也是依此类推。The corresponding macroblock of the reference view obtained by the global disparity is used to extract the motion information of the corresponding macroblock, that is, the macroblock coding mode MODEco and the motion vector MVco . Candidate coding modes and motion vectors of the current macroblock are obtained from the reference view. If MODEcur_opt and MODEco are the same and both belong to the background static mode, it can be judged that the motion information derived from the global parallax is accurate, and the coding modes MODEco and MVco derived from the corresponding macroblock are directly used as candidate coding for the current macroblock mode and motion vector; otherwise, it is considered that the motion information obtained through the global parallax is inaccurate, at this time, the search window is enlarged, and the MODEco and MVco of the corresponding macroblock are extracted, and the 8 adjacent macroblocks of the corresponding macroblock are simultaneously The encoding mode and MV of the block are extracted as the candidate encoding mode and motion vector of the current macroblock. For some encoding modes, each macroblock is divided into multiple sub-blocks, and each sub-block has its own motion vector. If the obtained macroblock partition mode of the corresponding macroblock is 16×8 mode, the current macroblock is also divided into Divide into two 16×8, and extract their corresponding motion vectors, and so on for other modes.

2)对得到的每个候选编码模式以及其对应的运动矢量,分别在时间参考帧中进行运动估计找到一个匹配块,最后根据率失真最优化技术确定运动信息跳过模式的最佳编码模式MODEms_opt和MVms_opt。2) For each candidate encoding mode and its corresponding motion vector obtained, perform motion estimation in the temporal reference frame to find a matching block, and finally determine the best encoding mode MODE for the motion information skip mode according to the rate-distortion optimization technologyms_opt and MVms_opt .

通过以上方法得到运动信息跳过模式的最佳编码模式MODEms_opt和MVms_opt后,再根据率失真最优化技术,与传统的运动补偿预测所得的MODEcur_opt和MVcur_opt进行比较得到当前宏块所用的最终的最优编码模式和运动矢量。After obtaining the best encoding modes MODEms_opt and MVms_opt of the motion information skip mode through the above method, and then according to the rate-distortion optimization technology, compare it with the MODEcur_opt and MVcur_opt obtained by the traditional motion compensation prediction to obtain the current macroblock. The final optimal coding mode and motion vectors.

图7为自适应运动矢量精细化算法示意图。IS,T中为视点S在T时刻的非关键帧,ISref,T为IS,T在其参考视点Sref中同一时刻的参考帧。IS,T中当前编码宏块(xi,yi),通过全局视差矢量GDV(xG,yG)找到ISref,T中的对应宏块(xi+xG,yi+yG)后,经过自适应运动矢量精细化算法得到当前编码宏块的最佳运动信息匹配宏块为宏块(xi+xG+Δxi,yi+yG+Δyi),则视差偏移量为ΔDi(Δxi,Δyi),若当前编码宏块的最佳运动信息匹配块为其对应宏块(xi+xG,yi+yG),则视差偏移量ΔDi设为0。把每个宏块的视差偏移量传输到解码端,用于图像的重建。FIG. 7 is a schematic diagram of an adaptive motion vector refinement algorithm. IS,T is the non-key frame of the viewpoint S at the moment T, and ISref,T is the reference frame of IS,T at the same moment in its reference viewpoint Sref . IS, the current coded macroblock (xi, y i) in T,find ISref, the corresponding macroblock in T (xi +xG , y i +y) through the global disparity vector GDV (x G,yG)G ), through the adaptive motion vector refinement algorithm, the best motion information matching macroblock of the current coded macroblock is obtained as the macroblock (xi +xG +Δxi , yi +yG +Δyi ), then the parallax The offset is ΔDi(Δxi, Δyi), if the best motion information matching block of the current coded macroblock is its corresponding macroblock (xi +xG , yi +yG ), then the disparity offset ΔDi is set to 0. The disparity offset of each macroblock is transmitted to the decoding end for image reconstruction.

图8为增强视点的解码流程图。解码当前图像,首先要判断帧图像类型,当当前帧为关键帧时,要对其参考帧进行解码;当当前帧为非关键帧时,不需要对其参考帧进行解码,只需要对其进行解析,通过全局视差矢量GDV以及视差偏移量ΔD找到对应宏块,并从存放运动信息缓存区中得到对应宏块的运动信息。解码后得到的当前图像的运动信息存储在运动信息缓存区中作为后续图像解码的信息参考。Fig. 8 is a flow chart of decoding enhanced view. To decode the current image, you must first determine the frame image type. When the current frame is a key frame, you need to decode its reference frame; when the current frame is a non-key frame, you don’t need to decode its reference frame, just decode it For analysis, the corresponding macroblock is found through the global disparity vector GDV and the disparity offset ΔD, and the motion information of the corresponding macroblock is obtained from the motion information buffer. The motion information of the current image obtained after decoding is stored in the motion information buffer area as an information reference for subsequent image decoding.

以下就本实施例进行多视点视频编码的性能进行说明:The following describes the performance of multi-viewpoint video coding in this embodiment:

1)面向交互式应用的多视点视频编码方法的率失真性能1) Rate-distortion performance of multi-view video coding methods for interactive applications

图9和图10分别为测试用例Ballroom和Racel在不同多视点视频编码方法下的率失真曲线,其横坐标和纵坐标分别表示8个视频序列的平均比特率和平均信噪比。其中JMVM为基于空间-时间分层B帧的多视点编码方案(如图2所示),JMVM_AP为图3中只对关键帧进行视点间预测的编码方案,JMVM_MS为图3中对非关键帧应用原始的运动信息跳过模式,JMVM_AFMS则是应用了采用自适应运动矢量精细化的运动信息跳过模式。JMVM_AP中所有视点都不对非关键帧图像进行视点间预测,JMVM中只有对B视点中的非关键帧图像进行视点间预测,而JMVM_MS和JMVM_AFMA中的运动信息跳过模式应用于所有增强视点的非关键帧图像。Figure 9 and Figure 10 are the rate-distortion curves of the test cases Ballroom and Racel under different multi-view video coding methods, and the abscissa and ordinate respectively represent the average bit rate and average signal-to-noise ratio of the eight video sequences. Among them, JMVM is a multi-view coding scheme based on space-time layered B frames (as shown in Figure 2), JMVM_AP is a coding scheme that only performs inter-view prediction on key frames in Figure 3, and JMVM_MS is a coding scheme for non-key frames in Figure 3 The original motion information skip mode is applied, and JMVM_AFMS applies the motion information skip mode using adaptive motion vector refinement. All viewpoints in JMVM_AP do not perform inter-view prediction on non-key frame images, JMVM only performs inter-view prediction on non-key frame images in B viewpoint, and the motion information skip mode in JMVM_MS and JMVM_AFMA is applied to non-key frame images of all enhanced viewpoints. Keyframe images.

由图9可知,由于Ballroom视频序列运动较平缓、时域相关性大,视点间的参考关系对非关键帧图像的编码性能影响不大,所以这4种编码方案的压缩性能相差不大。而对于Racel视频序列,由于镜头移动、运动剧烈且存在时间全局运动,导致时间预测的有效性差。因此,视点间的参考关系以及它的准确性对压缩性能是至关重要的。从图10可知,不采用非关键帧视点间参考关系的JMVM_AP的压缩性能最差,而本发明所提的JMVM_AFMS能得到当前编码宏块更为匹配的运动矢量,与JMVM_MS相比编码性能改善明显,在相同的比特率条件下其平均PSNR最大增加了0.2dB,与JMVM相比最大增加了0.35dB。因此,本发明所提算法对于Ballroom等运动缓慢的视频序列,其压缩性能接近基于空间-时间分层B帧的多视点编码方案,而对于racel等运动剧烈的视频序列其压缩性能优于基于空间-时间分层B帧的多视点视频编码方案。It can be seen from Figure 9 that since the motion of the Ballroom video sequence is relatively gentle and the temporal correlation is large, the reference relationship between viewpoints has little effect on the coding performance of non-key frame images, so the compression performance of these four coding schemes is not much different. However, for Racel video sequences, the effectiveness of temporal prediction is poor due to camera movement, violent motion, and temporal global motion. Therefore, the reference relationship between viewpoints and its accuracy are crucial to the compression performance. It can be seen from Figure 10 that the compression performance of JMVM_AP that does not use the reference relationship between non-key frame viewpoints is the worst, while the JMVM_AFMS proposed by the present invention can obtain a motion vector that is more suitable for the current coded macroblock, and the coding performance is significantly improved compared with JMVM_MS , the average PSNR increases by 0.2dB at the same bit rate, and increases by 0.35dB compared with JMVM. Therefore, the algorithm proposed in the present invention has a compression performance close to the multi-view coding scheme based on space-time layered B frames for slow-moving video sequences such as Ballroom, and its compression performance is better than that based on spatial - A multi-view video coding scheme for temporally layered B-frames.

2)面向交互式应用的多视点视频编码方法的随机访问性能2) Random access performance of multi-view video coding method for interactive applications

为了评价多视点视频的随机访问性能,我们使用FAV和FMAX来分别表示随机访问一帧所需要解码的平均和最大帧数。假设现要访问(i,j)处的帧,其随机访问代价FAV和FMAX可分别用式(2)和式(3)定义。In order to evaluate the random access performance of multi-view video, we use FAV and FMAX to denote the average and maximum number of frames that need to be decoded to randomly access a frame, respectively. Assuming that the frame at (i, j) is to be accessed now, its random access cost FAV and FMAX can be defined by formula (2) and formula (3) respectively.

FMAX=max{xi,j|0<i≤n,0<j≤m} (3)FMAX =max{xi, j |0<i≤n, 0<j≤m} (3)

其中,n为一个GOP包含的帧数,m为总的视点数。xi,j表示访问该帧之前所必须要解码的帧数,pi,j为用户选择观看该帧的概率,通常pi,j=1/(n×m)。Among them, n is the number of frames contained in a GOP, and m is the total number of viewpoints. xi,j represents the number of frames that must be decoded before accessing the frame, pi,j is the probability that the user chooses to watch the frame, usually pi,j =1/(n×m).

由于运动信息跳过模式不影响随机访问性能,所以本发明所提的编码方案JMVM_AFMS与JMVM_AP具有相同的随机访问性能。表1给出了本发明所提编码方案和基于空间-时间分层B帧的多视点编码方案在随机访问性能方面的比较。从表1可知本发明所提的编码方法的随机访问性能明显优于JMVM,平均提高了36.6%/44.4%。Since the motion information skipping mode does not affect the random access performance, the coding scheme JMVM_AFMS and JMVM_AP proposed in the present invention have the same random access performance. Table 1 shows the comparison of random access performance between the coding scheme proposed by the present invention and the multi-view coding scheme based on space-time layered B frames. It can be seen from Table 1 that the random access performance of the encoding method proposed by the present invention is obviously better than that of JMVM, with an average increase of 36.6%/44.4%.

表1 随机访问性能比较Table 1 Random access performance comparison

综上所述,与现有技术相比,本发明的优点在于在保证高压缩效率的前提下,优化多视点视频随机访问性能,支持快速的视点切换,从而提高多视点视频的交互式性能。To sum up, compared with the prior art, the present invention has the advantages of optimizing the random access performance of multi-viewpoint video and supporting fast viewpoint switching under the premise of ensuring high compression efficiency, thereby improving the interactive performance of multi-viewpoint video.

Claims (2)

Translated fromChinesePriority Applications (1)

| Application Number | Priority Date | Filing Date | Title |

|---|---|---|---|

| CN 201010155912CN101867813B (en) | 2010-04-23 | 2010-04-23 | Multi-view video coding method oriented for interactive application |

Applications Claiming Priority (1)

| Application Number | Priority Date | Filing Date | Title |

|---|---|---|---|

| CN 201010155912CN101867813B (en) | 2010-04-23 | 2010-04-23 | Multi-view video coding method oriented for interactive application |

Publications (2)

| Publication Number | Publication Date |

|---|---|

| CN101867813A CN101867813A (en) | 2010-10-20 |

| CN101867813Btrue CN101867813B (en) | 2012-05-09 |

Family

ID=42959339

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |

|---|---|---|---|

| CN 201010155912Expired - Fee RelatedCN101867813B (en) | 2010-04-23 | 2010-04-23 | Multi-view video coding method oriented for interactive application |

Country Status (1)

| Country | Link |

|---|---|

| CN (1) | CN101867813B (en) |

Families Citing this family (13)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| CN107820082B9 (en)* | 2011-10-18 | 2021-07-20 | 株式会社Kt | Video signal decoding method |

| EP3657795A1 (en)* | 2011-11-11 | 2020-05-27 | GE Video Compression, LLC | Efficient multi-view coding using depth-map estimate and update |

| EP3657796A1 (en) | 2011-11-11 | 2020-05-27 | GE Video Compression, LLC | Efficient multi-view coding using depth-map estimate for a dependent view |

| EP3739886A1 (en) | 2011-11-18 | 2020-11-18 | GE Video Compression, LLC | Multi-view coding with efficient residual handling |

| CN103379348B (en)* | 2012-04-20 | 2016-11-16 | 乐金电子(中国)研究开发中心有限公司 | A View Synthesis Method, Device, and Encoder When Encoding Depth Information |

| WO2014107853A1 (en)* | 2013-01-09 | 2014-07-17 | Mediatek Singapore Pte. Ltd. | Methods for disparity vector derivation |

| WO2014163458A1 (en)* | 2013-04-05 | 2014-10-09 | 삼성전자주식회사 | Method for determining inter-prediction candidate for interlayer decoding and encoding method and apparatus |

| WO2015006899A1 (en)* | 2013-07-15 | 2015-01-22 | Mediatek Singapore Pte. Ltd. | A simplified dv derivation method |

| CN105612746A (en)* | 2013-10-11 | 2016-05-25 | 瑞典爱立信有限公司 | Brake caliper for a disk brake |

| WO2017020807A1 (en)* | 2015-07-31 | 2017-02-09 | Versitech Limited | Method and system for global motion estimation and compensation |

| JP6824579B2 (en)* | 2017-02-17 | 2021-02-03 | 株式会社ソニー・インタラクティブエンタテインメント | Image generator and image generation method |

| CN113949873B (en)* | 2021-10-15 | 2024-12-06 | 北京奇艺世纪科技有限公司 | Video encoding method, device and electronic equipment |

| WO2024077616A1 (en)* | 2022-10-14 | 2024-04-18 | Oppo广东移动通信有限公司 | Coding and decoding method and coding and decoding apparatus, device, and storage medium |

Family Cites Families (3)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| WO2007035042A1 (en)* | 2005-09-21 | 2007-03-29 | Samsung Electronics Co., Ltd. | Apparatus and method for encoding and decoding multi-view video |

| CN100438632C (en)* | 2006-06-23 | 2008-11-26 | 清华大学 | Method for encoding interactive video in multiple viewpoints |

| CN101668205B (en)* | 2009-09-25 | 2011-04-20 | 南京邮电大学 | Self-adapting down-sampling stereo video compressed coding method based on residual error macro block |

- 2010

- 2010-04-23CNCN 201010155912patent/CN101867813B/ennot_activeExpired - Fee Related

Also Published As

| Publication number | Publication date |

|---|---|

| CN101867813A (en) | 2010-10-20 |

Similar Documents

| Publication | Publication Date | Title |

|---|---|---|

| CN101867813B (en) | Multi-view video coding method oriented for interactive application | |

| CN111971960B (en) | Method for processing image based on inter prediction mode and apparatus therefor | |

| CN102308585B (en) | Multi-view video encoding/decoding method and device | |

| CN102055982B (en) | Coding and decoding methods and devices for three-dimensional video | |

| CN100415002C (en) | Coding and compression method of multi-mode and multi-viewpoint video signal | |

| CN101540926B (en) | Stereoscopic Video Coding and Decoding Method Based on H.264 | |

| CN102017627B (en) | Multiview Video Coding Using Depth-Based Disparity Estimation | |

| WO2019184639A1 (en) | Bi-directional inter-frame prediction method and apparatus | |

| CN101617537A (en) | Be used to handle the method and apparatus of vision signal | |

| CN104704835A (en) | Apparatus and method for motion information management in video coding | |

| CN103037218B (en) | Multi-view stereoscopic video compression and decompression method based on fractal and H.264 | |

| CN103051894B (en) | A kind of based on fractal and H.264 binocular tri-dimensional video compression & decompression method | |

| CN101895749B (en) | Quick parallax estimation and motion estimation method | |

| CN101243692A (en) | Method and device for encoding multi-view video | |

| CN101404766A (en) | Multi-view point video signal encoding method | |

| Xiang et al. | A novel error concealment method for stereoscopic video coding | |

| CN101568038B (en) | Multi-viewpoint error resilient coding scheme based on disparity/movement joint estimation | |

| CN101222640A (en) | Method and device for determining reference frame | |

| CN105704497B (en) | Coding unit size fast selection algorithm towards 3D-HEVC | |

| CN101986713A (en) | View synthesis-based multi-viewpoint error-resilient encoding frame | |

| Xiang et al. | Auto-regressive model based error concealment scheme for stereoscopic video coding | |

| CN101272496A (en) | A mode selection method for 264 video with reduced resolution transcoding | |

| CN103338369A (en) | A three-dimensional video coding method based on the AVS and a nerve network | |

| Liu et al. | Low-delay view random access for multi-view video coding | |

| CN101511016B (en) | Improved process for multi-eyepoint video encode based on HHI layered B frame predict structure |

Legal Events

| Date | Code | Title | Description |

|---|---|---|---|

| C06 | Publication | ||

| PB01 | Publication | ||

| C10 | Entry into substantive examination | ||

| SE01 | Entry into force of request for substantive examination | ||

| C14 | Grant of patent or utility model | ||

| GR01 | Patent grant | ||

| EE01 | Entry into force of recordation of patent licensing contract | Application publication date:20101020 Assignee:Jiangsu Nanyou IOT Technology Park Ltd. Assignor:NANJING University OF POSTS AND TELECOMMUNICATIONS Contract record no.:2016320000208 Denomination of invention:Multi-view video coding method oriented for interactive application Granted publication date:20120509 License type:Common License Record date:20161110 | |

| LICC | Enforcement, change and cancellation of record of contracts on the licence for exploitation of a patent or utility model | ||

| EC01 | Cancellation of recordation of patent licensing contract | Assignee:Jiangsu Nanyou IOT Technology Park Ltd. Assignor:NANJING University OF POSTS AND TELECOMMUNICATIONS Contract record no.:2016320000208 Date of cancellation:20180116 | |

| EC01 | Cancellation of recordation of patent licensing contract | ||

| CF01 | Termination of patent right due to non-payment of annual fee | Granted publication date:20120509 | |

| CF01 | Termination of patent right due to non-payment of annual fee |