CN101751549B - Tracking methods for moving objects - Google Patents


- Publication number

- CN101751549B (application number CN200810179785.8A)

- Authority

- CN

- China

- Prior art keywords

- mobile object

- appearance model

- mobile

- images

- database


- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Expired - Fee Related

Landscapes

- Image Analysis (AREA)

Abstract

Description

Translated from Chinese

Technical field

The invention relates to an image processing method, and in particular to a method for tracking a moving object.

Background

Visual surveillance technology has grown steadily in importance in recent years. Since the September 11 attacks in particular, more and more surveillance cameras have been installed in all kinds of places. Traditional surveillance, however, usually relies on human operators watching the feeds, or the footage is simply kept on storage devices for later review. As more cameras are installed, more manpower is needed, so automated surveillance systems assisted by computer vision have come to play an increasingly important role.

A visual surveillance system analyzes the behavior of moving objects in the monitored scene, which may be a trajectory, a posture, or other features, in order to detect abnormal events and notify security personnel so that they can respond. Many basic topics in visual surveillance, such as background subtraction, moving object detection and tracking, and shadow removal, have already been studied extensively in the literature. In recent years the focus has shifted to higher-level event detection, such as behavior analysis, abandoned object detection, loitering detection, and crowd detection. Given the current strong demand in the surveillance market, automated and intelligent behavior analysis is expected to see great demand and business opportunities.

Loitering detection refers to detecting one or more moving objects that appear continuously and repeatedly within a monitored area during a given period of time. For example, a streetwalker or beggar may linger on a street corner, a graffiti artist may linger by a wall, a person with suicidal intent may pace on a platform, or a drug dealer may loiter in a subway station waiting to meet customers.

However, because the cameras of a visual surveillance system have a limited field of view and cannot fully cover the path along which a loiterer moves, the system loses its target once the loiterer leaves the monitored area and can no longer follow the loiterer's movements. In particular, when a loiterer leaves and then returns, re-identifying that person and associating them with their earlier behavior is the bottleneck facing current loitering detection technology.

Summary of the invention

In view of this, the invention provides a method for tracking a moving object that combines the spatial information and the appearance model of the moving object across multiple images, so that the moving path of the object in the images can be tracked continuously.

The invention proposes a method for tracking a moving object. The method includes detecting the moving object in multiple consecutive images to obtain its spatial information in each image, extracting the appearance features of the moving object in each image to build an appearance model of the moving object, and finally combining the spatial information and the appearance model to track the moving path of the moving object in the images.

In an embodiment of the invention, the step of detecting the moving object in the consecutive images further includes determining whether each detected object qualifies as a moving object to be tracked, and filtering out the detected objects that do not. One way to make this determination is to check whether the area of the rectangular region enclosing the object is greater than a first preset value; when the area is greater than the first preset value, the object enclosed by the rectangular region is determined to be a moving object. Another way is to check whether the aspect ratio of the rectangular region is greater than a second preset value; when the aspect ratio is greater than the second preset value, the object enclosed by the rectangular region is determined to be a moving object.

In an embodiment of the invention, the step of extracting the appearance features of the moving object in each image to build its appearance model includes first dividing the rectangular region into multiple blocks and extracting the color distribution of each block, then recursively taking the median of the color distribution of each block to build a binary tree that describes the color distribution, and finally selecting the color distributions of the branches of the binary tree as the feature vector of the appearance model of the moving object.

In an embodiment of the invention, the step of dividing the rectangular region into blocks includes dividing it in a fixed ratio into a head block, a body block, and a lower-limb block, and the color distribution of the head block is ignored when the color distributions are extracted. The color distribution may consist of color features in the red-green-blue (RGB) color space or the hue-saturation-intensity (HSI) color space.

In an embodiment of the invention, after the step of detecting the moving object in the consecutive images to obtain its spatial information in each image, the method further includes using the spatial information to track the moving path of the moving object and accumulating the dwell time of the moving object in the images.

In an embodiment of the invention, after the step of accumulating the dwell time of the moving object in the images, the method further includes determining whether the dwell time exceeds a first preset time. Only when the dwell time exceeds the first preset time are the appearance features of the moving object extracted to build its appearance model, and the spatial information and the appearance model combined to track the moving path of the moving object in the images.

In an embodiment of the invention, the step of combining the spatial information and the appearance model to track the moving path of the moving object includes first using the spatial information to compute the prior probability that corresponding moving objects in two adjacent images are spatially related, and using the appearance information to compute the similarity of the corresponding moving objects in the two adjacent images, and then combining the prior probability and the similarity in a Bayesian tracker to determine the moving path of the moving object across the adjacent images.

In an embodiment of the invention, when the dwell time is determined to exceed the first preset time, the method further includes recording the dwell time and the appearance model of the moving object in a database. This includes associating the appearance model of the moving object with the appearance models in the database to determine whether the appearance model of the moving object has already been recorded. If it has, only the dwell time of the moving object is recorded in the database; otherwise, both the dwell time and the appearance model of the moving object are recorded in the database.

In an embodiment of the invention, the step of associating the appearance model of the moving object with the appearance models in the database includes computing a first distance between appearance models built for the same moving object at two different points in time to establish a first distance distribution, computing a second distance between the appearance models of two moving objects in each image to establish a second distance distribution, and then finding the boundary between the first and second distance distributions to serve as the criterion for distinguishing appearance models.

In an embodiment of the invention, after the step of recording the dwell time and the appearance model of the moving object in the database, the method further includes analyzing the time series of the moving object in the database to determine whether the moving object matches a loitering event. One criterion is whether the moving object has appeared continuously in the images for longer than a second preset time; if so, the moving object is determined to match a loitering event. Another criterion is whether the time interval during which the moving object is absent from the images is shorter than a third preset time; if so, the moving object is determined to match a loitering event.

Based on the above, the invention builds appearance models of visitors and combines Bayesian tracking, database management, and adaptive threshold learning to continuously monitor moving objects entering the scene, which solves the problem that a moving object cannot be detected continuously once it leaves the scene and later returns. In addition, the invention can automatically detect a visitor's loitering event based on time conditions on the visitor's appearances in the scene.

To make the above features and advantages of the invention easier to understand, embodiments are described in detail below with reference to the accompanying drawings.

附图说明Description of drawings

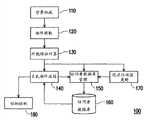

图1是依照本发明一实施例所绘示的移动物体追踪的系统架构的示意图。FIG. 1 is a schematic diagram of a system architecture for moving object tracking according to an embodiment of the present invention.

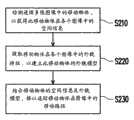

图2是依照本发明一实施例所绘示的移动物体的追踪方法的流程图。FIG. 2 is a flowchart of a method for tracking a moving object according to an embodiment of the present invention.

图3(a)、(b)及(c)是依照本发明一实施例所绘示的移动物体的外貌模型的示意图。3( a ), ( b ) and ( c ) are schematic diagrams of appearance models of moving objects according to an embodiment of the present invention.

图4是依照本发明一实施例所绘示的色彩分布的二元树的示意图。FIG. 4 is a schematic diagram of a binary tree of color distribution according to an embodiment of the present invention.

图5是依照本发明一实施例所绘示的贝式物件追踪方法的流程图。FIG. 5 is a flowchart of a method for tracking a Bayesian object according to an embodiment of the present invention.

图6是依照本发明一实施例所绘示的访问者数据库的管理方法的流程图。FIG. 6 is a flow chart of a method for managing a visitor database according to an embodiment of the present invention.

图7(a)、(b)及(c)是依照本发明一实施例所绘示的适应性阈值更新方法的示意图。7(a), (b) and (c) are schematic diagrams of an adaptive threshold updating method according to an embodiment of the present invention.

图8是依照本发明一实施例所绘示的适应性阈值计算的图例。FIG. 8 is a diagram illustrating adaptive threshold calculation according to an embodiment of the present invention.

[Description of main reference numerals]

100: Tracking system

110: Background subtraction

120: Moving object extraction

130: Appearance feature calculation

140: Bayesian object tracking

150: Visitor database management

160: Visitor database

170: Adaptive threshold update

180: Loitering event detection

S210-S230: Steps of the method for tracking a moving object according to an embodiment of the invention

S510-S570: Steps of the Bayesian object tracking method according to an embodiment of the invention

S610-S670: Steps of the visitor database management method according to an embodiment of the invention

Detailed description

The invention establishes an unsupervised loitering detection technique. The system automatically learns specific parameters from events in the monitored scene, builds an appearance model for each visitor entering the scene, and analyzes and associates that model with a visitor database. By comparing against the historical records, tracking remains uninterrupted even when a visitor leaves the scene and later re-enters it. Finally, loitering events are detected using loitering rules defined in advance. To make the content of the invention clearer, the following embodiments are given as examples by which the invention can actually be implemented.

FIG. 1 is a schematic diagram of a system architecture for moving object tracking according to an embodiment of the invention. Referring to FIG. 1, the tracking system 100 of this embodiment first applies background subtraction 110 to detect the moving objects in multiple consecutive images. Because the target tracked in this embodiment is a moving object with a complete shape (for example, a pedestrian), the next step uses simple conditions to filter out detected objects that cannot be such a target. This is moving object extraction 120.

For each extracted moving object, this embodiment first calculates its appearance features 130, then uses a tracker based on Bayesian decision to track the moving object continuously 140, and builds the appearance model of the object from the appearance features of the same moving object obtained from multiple images. The tracking system 100 also maintains a visitor database 150 in memory. The visitor database management 160 uses the result of the adaptive threshold update 170 to compare and associate the appearance features of the currently extracted moving object with the appearance models in the visitor database 150. If the moving object can be associated with a person in the visitor database 150, the moving object has visited the scene before; otherwise, the moving object is added to the visitor database 150. Finally, loitering events 180 are detected using time conditions on the visitor's appearances in the scene as the decision criterion. While tracking moving objects, the tracking system 100 can use the distribution of moving objects in the scene as samples and automatically learn how to distinguish different visitors, which serves as the basis for associating appearance models. A further embodiment below describes the detailed flow of the method for tracking a moving object.

FIG. 2 is a flowchart of a method for tracking a moving object according to an embodiment of the invention. Referring to FIG. 2, this embodiment tracks a moving object entering the monitored scene in order to build its appearance model, and compares the model with the data in a visitor database kept in system memory to determine whether the moving object has appeared before, so that the moving object can be tracked continuously. The detailed steps are as follows:

First, the moving object is detected in multiple consecutive images to obtain its spatial information in each image (step S210). The detection technique first builds a background image and subtracts it from the current image to obtain the foreground. Connected-component labeling is then applied to the foreground obtained from background subtraction to mark each connected region, and each region is recorded as the smallest rectangular region that encloses it, $b = \{r_{left}, r_{top}, r_{right}, r_{bottom}\}$, where $r_{left}$, $r_{top}$, $r_{right}$ and $r_{bottom}$ denote the left, top, right, and bottom boundaries of the rectangular region in the image.
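Step S210 can be sketched roughly as follows. This is a minimal illustration on grayscale images stored as nested lists, not the patent's implementation: the function names, the fixed difference threshold of 30, and the choice of 4-connectivity are all assumptions made for the example.

```python
from collections import deque


def foreground_mask(frame, background, threshold=30):
    """Mark pixels whose absolute difference from the background exceeds threshold.

    The threshold value is an assumption chosen for illustration.
    """
    return [[abs(p - b) > threshold for p, b in zip(frow, brow)]
            for frow, brow in zip(frame, background)]


def bounding_boxes(mask):
    """Label 4-connected foreground regions and return their minimal enclosing rectangles."""
    height = len(mask)
    width = len(mask[0]) if height else 0
    seen = [[False] * width for _ in range(height)]
    boxes = []
    for y in range(height):
        for x in range(width):
            if not mask[y][x] or seen[y][x]:
                continue
            # Breadth-first search over one connected region, tracking its extent.
            left, top, right, bottom = x, y, x, y
            queue = deque([(x, y)])
            seen[y][x] = True
            while queue:
                cx, cy = queue.popleft()
                left, right = min(left, cx), max(right, cx)
                top, bottom = min(top, cy), max(bottom, cy)
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    if (0 <= nx < width and 0 <= ny < height
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            boxes.append({"left": left, "top": top, "right": right, "bottom": bottom})
    return boxes
```

Each returned dictionary plays the role of the rectangle b in the text; a production system would more likely rely on an image-processing library for both steps.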

It is worth noting that many factors can produce foreground, while the object of interest here is a foreground object containing a single moving object. This embodiment therefore takes pedestrians as an example and further determines whether each moving object is a pedestrian, filtering out the moving objects that are not. The filtering uses, for example, the following two conditions. The first condition checks whether the area of the rectangular region is greater than a first preset value; when it is, the moving object enclosed by the rectangular region is judged to be a pedestrian, which filters out noise and fragmented objects. The second condition checks whether the aspect ratio of the rectangular region is greater than a second preset value; when it is, the moving object enclosed by the rectangular region is judged to be a pedestrian, which filters out blocks in which several people overlap as well as large areas of noise.
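The two filtering conditions can be sketched as a single predicate. The preset values below and the definition of aspect ratio as height divided by width are assumptions for illustration; the text does not give concrete numbers, and requiring both conditions together is one reading of it.

```python
def is_pedestrian_candidate(box, min_area=400, min_aspect_ratio=1.5):
    """Return True when a rectangle passes both the area and the aspect-ratio test.

    min_area stands in for the first preset value and min_aspect_ratio for the
    second preset value; both numbers are hypothetical.
    """
    width = box["right"] - box["left"] + 1
    height = box["bottom"] - box["top"] + 1
    area = width * height
    aspect_ratio = height / width  # assumed: height over width, so upright shapes score high
    return area > min_area and aspect_ratio > min_aspect_ratio
```

A tall, narrow rectangle passes, while a tiny rectangle (noise) or a wide one (several people side by side) is rejected.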

The next step is to extract the appearance features of the moving object in each image to build its appearance model (step S220). In detail, the invention proposes a new appearance description that takes color structure into account and derives more meaningful appearance features through a loose body segmentation. Loose body segmentation means dividing the rectangular region enclosing a pedestrian into multiple blocks and extracting the color distribution of each block. For example, the rectangular region can be divided in the ratio 2:4:4 into a head block, a body block, and a lower-limb block, corresponding to the pedestrian's head, body, and lower limbs. Because the color features of the head are easily affected by the direction the person faces and are not very discriminative, the information in the head block can be ignored.
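The 2:4:4 split can be sketched as below. The function name, the dictionary layout, and the use of integer division to place the block boundaries are assumptions for illustration.

```python
def split_body_regions(box, ratios=(2, 4, 4)):
    """Split a pedestrian rectangle vertically into head, body, and lower-limb blocks."""
    top, bottom = box["top"], box["bottom"]
    height = bottom - top + 1
    total = sum(ratios)
    # First row of the body block and first row of the lower-limb block.
    head_end = top + height * ratios[0] // total
    body_end = top + height * (ratios[0] + ratios[1]) // total

    def region(y0, y1):
        return {"left": box["left"], "top": y0, "right": box["right"], "bottom": y1}

    return {
        "head": region(top, head_end - 1),
        "body": region(head_end, body_end - 1),
        "legs": region(body_end, bottom),
    }
```

Only the `body` and `legs` blocks would feed the appearance model, since the head block is ignored.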

For example, FIGS. 3(a), 3(b) and 3(c) are schematic diagrams of the appearance model of a moving object according to an embodiment of the invention. FIG. 3(a) shows a pedestrian image, that is, a rectangular region that has passed the two filtering conditions above and may be called a pedestrian candidate P; FIG. 3(b) shows its corresponding connected component. After the connected component is labeled, the median of the color distribution is taken recursively from the body block and the lower-limb block shown in FIG. 3(c), and a binary tree is built to describe the color distribution.

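FIG. 4 is a schematic diagram of a binary tree of a color distribution according to an embodiment of the invention. Referring to FIG. 4, M denotes the median of one color distribution in the body block or the lower-limb block, and ML and MH are the medians of the two color distributions obtained by splitting that distribution at M; the branches MLL, MLH, MHL, and MHH follow by analogy. The color distribution may be any color feature in the red-green-blue (RGB) color space or the hue-saturation-intensity (HSI) color space, or even a color feature of another color space; no limitation is imposed here. For convenience of description, this embodiment uses the RGB color space and builds a binary tree with three levels of color distribution, which forms a 24-dimensional feature vector.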
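The recursive median tree can be sketched as follows. One reading that yields 24 dimensions is to keep the four third-level medians (MLL, MLH, MHL, MHH) for each of the three RGB channels in each of the two blocks (4 x 3 x 2 = 24); that reading, the ordering of the vector, and the function names are assumptions for illustration.

```python
from statistics import median


def leaf_medians(values, depth=3):
    """Split values at the median recursively and return the medians of the last level.

    With depth=3 the result corresponds to [MLL, MLH, MHL, MHH]. values must be non-empty.
    """
    m = median(values)
    if depth == 1:
        return [m]
    low = [v for v in values if v <= m]
    # Fall back to the lower half when every value equals the maximum.
    high = [v for v in values if v > m] or low
    return leaf_medians(low, depth - 1) + leaf_medians(high, depth - 1)


def appearance_feature(body_pixels, legs_pixels):
    """Build a 24-dimensional feature from (R, G, B) pixel tuples of the two blocks."""
    feature = []
    for pixels in (body_pixels, legs_pixels):
        for channel in range(3):
            feature.extend(leaf_medians([p[channel] for p in pixels]))
    return feature
```

For the values 1 through 8, `leaf_medians` returns the four quartile-like medians 1.5, 3.5, 5.5 and 7.5.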

After the spatial information and the appearance model of the moving object are obtained, this embodiment combines the two to track the moving path of the moving object in the images (step S230). Tracking is achieved with a moving object tracking method based on Bayesian decision. The method considers the appearance and position of the moving objects in two adjacent images and uses Bayesian decision to make the best association between them; this is moving object tracking.

In detail, suppose that at time t, object detection and appearance model building yield a list containing n pedestrian candidate rectangles.

FIG. 5 is a flowchart of a Bayesian object tracking method according to an embodiment of the invention. Referring to FIG. 5, in the learning stage this embodiment first provides a visitor hypothesis list M (step S510). The list M contains multiple visitor hypotheses that have been tracked over consecutive time and associated.

Next, for each visitor hypothesis in the visitor hypothesis list M, the method checks whether the length of time the hypothesis has stayed in the images (the time it has been tracked) exceeds a first preset time $L_1$ (step S520). If the length is shorter than $L_1$, the hypothesis is considered to be still in the learning stage, and pedestrian candidates in adjacent frames are associated through spatial correlation alone (step S530). For example, if the rectangular region belonging to a visitor hypothesis spatially overlaps the rectangular region of a pedestrian candidate in the current frame, the visitor hypothesis is updated by adding that pedestrian candidate to it.
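The learning-stage association by spatial overlap alone can be sketched as below. Representing a visitor hypothesis as a list of rectangles and taking the first overlapping candidate are simplifying assumptions; the names are hypothetical.

```python
def rectangles_overlap(a, b):
    """Return True when two rectangles share at least one pixel."""
    return (a["left"] <= b["right"] and b["left"] <= a["right"]
            and a["top"] <= b["bottom"] and b["top"] <= a["bottom"])


def associate_by_overlap(hypotheses, candidates):
    """Append to each hypothesis the first candidate overlapping its latest rectangle.

    hypotheses is a list of tracks, each track a list of rectangles.
    Returns the candidates that were not associated with any hypothesis.
    """
    unmatched = list(candidates)
    for track in hypotheses:
        for candidate in unmatched:
            if rectangles_overlap(track[-1], candidate):
                track.append(candidate)
                unmatched.remove(candidate)
                break  # one candidate per hypothesis per frame
    return unmatched
```

Candidates left unmatched would typically seed new visitor hypotheses, although the text shown here does not spell that step out.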

Next, in the association stage, in which the length of the visitor hypothesis is greater than the first preset time $L_1$, the hypothesis is in a stably tracked state. At this point not only spatial correlation but also the appearance features of the object are considered, and a Bayesian decision is used to associate the visitor hypothesis with a pedestrian candidate (step S540). In detail, this step uses the spatial information to compute the prior probability that corresponding moving objects in two adjacent images are spatially related, uses the appearance information to compute the similarity of the corresponding moving objects in the two adjacent images, and then combines the prior probability and the similarity in the Bayesian tracker to determine whether the visitor hypothesis and the pedestrian candidate are associated. For example, Equation (1) is the discriminant function of the Bayesian decision.

Here one similarity function gives the probability of the pedestrian candidate given that it belongs to the visitor hypothesis, while the other covers the opposite case, giving the probability given that the candidate does not belong to the hypothesis. Therefore, if BD is greater than 1, the decision favors the candidate belonging to the hypothesis, and the two are associated. If the similarity function in Equation (1) is expressed as a multivariate normal distribution $N(\mu, \Sigma^2)$, it takes the form shown in Equation (2).

where $\mu$ and $\Sigma$ are the mean and the covariance matrix of the past $L_1$ feature values, computed as follows:

where

$$\sigma_{xy} = (f_x - \mu_x)(f_y - \mu_y) \qquad (5)$$
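The mean and covariance of a window of feature vectors can be sketched as follows. Averaging the products from Equation (5) over the window with a divisor of n (rather than n - 1) is an assumption, and the function name is hypothetical.

```python
def mean_and_covariance(features):
    """Return the mean vector and covariance matrix of a list of feature vectors.

    Each covariance entry averages the products (f_x - mu_x)(f_y - mu_y) from
    Equation (5) over the window; the divisor n is an assumption.
    """
    n = len(features)
    d = len(features[0])
    mu = [sum(f[x] for f in features) / n for x in range(d)]
    cov = [[sum((f[x] - mu[x]) * (f[y] - mu[y]) for f in features) / n
            for y in range(d)]
           for x in range(d)]
    return mu, cov
```

In the tracker, `features` would hold the past $L_1$ feature vectors of one visitor hypothesis.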

The similarity function for the opposite case is represented here by a uniform distribution. The prior probability $p(C_H)$ and its complement, on the other hand, reflect prior knowledge about the occurrence of the event; here that prior knowledge is mapped to spatial correlation. In other words, the closer the pedestrian candidate is to the visitor hypothesis, the larger the prior probability assigned. The two priors can be expressed through an exponential function of distance, as shown in Equations (6) and (7).

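where $\sigma_D$ is a user-controlled parameter that can be adjusted according to the speed of the moving objects in the scene. Taking the spatial correlation and appearance features above into account, the method determines whether the visitor hypothesis is associated with a pedestrian candidate (step S550). In the update stage, if the candidate and the hypothesis were judged to be associated in the preceding stage, the candidate is added to the hypothesis, and the visitor hypothesis is updated accordingly.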
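The decision of step S550 can be sketched as a comparison of two weighted likelihoods. Since Equations (1), (2), (6) and (7) are not reproduced in this text, every concrete form below is an assumption: a prior of exp(-D^2 / sigma_D^2) with its complement for the opposite case, a Gaussian likelihood with diagonal covariance, and a constant uniform density. The function names are hypothetical.

```python
import math


def gaussian_density_diag(f, mu, var):
    """Density of a Gaussian with diagonal covariance (a simplifying assumption)."""
    log_p = 0.0
    for fx, mx, vx in zip(f, mu, var):
        log_p += -0.5 * math.log(2 * math.pi * vx) - (fx - mx) ** 2 / (2 * vx)
    return math.exp(log_p)


def should_associate(f, mu, var, distance, sigma_d, uniform_density):
    """Return True when the Bayesian decision ratio exceeds 1.

    The ratio is evaluated as a product comparison so that a zero
    complement prior (distance == 0) does not cause a division by zero.
    """
    prior_same = math.exp(-(distance ** 2) / (sigma_d ** 2))  # assumed form
    prior_other = 1.0 - prior_same                            # assumed complement
    same = prior_same * gaussian_density_diag(f, mu, var)
    other = prior_other * uniform_density
    return same > other
```

A candidate that is close to the hypothesis and whose features sit near the model mean is associated; one whose features are far from the mean is not, even at a small distance.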

To recognize the appearance of visitors entering and leaving the scene, and thereby analyze the behavior and timing of the same pedestrian's entries and exits, the invention provides a visitor database that records each visitor's appearance model and visit times. The management flow of this visitor database is shown in FIG. 6 and described below:

First, a new visitor hypothesis is added in the tracker (step S610), and it is determined whether the length of this visitor hypothesis has reached an integer multiple of a second preset time $L_2$ (step S620). When it reaches $L_2$, the mean feature vector and covariance matrix are computed from its past $L_2$ appearance features, and a Gaussian function is used to describe the appearance model at that moment, $V = \{N(\mu, \Sigma), \{s\}\}$ (step S630), where $\{s\}$ is a sequence of constants recording the times at which the appearance model was built. Next, it is determined whether the length of the visitor hypothesis equals $L_2$ (step S640) and whether its appearance model is associated with a visitor appearance model recorded in the visitor database (step S650). If the visitor is similar to one or more visitor appearance models in the database (that is, the distance between the two is less than a threshold T), the visitor has visited the scene before. The visitor is then associated with the closest model $V_k$, and the visitor appearance model in the visitor database is updated through Equations (8), (9), and (10) (step S660).

where $\sigma^2(x, y)$ denotes element (x, y) of the covariance matrix, and u and v are the time-series lengths of the two appearance models, used as the update weights. Because $V_i$ is a newly built appearance model at this point, its v value is 1. Conversely, if $V_i$ cannot be associated with any appearance model in the visitor database, it represents a newly observed visitor, and its appearance model and time stamp are added to the visitor database (step S670). The distance between two appearance models (each a d-dimensional Gaussian distribution, $N_1(\mu_1, \Sigma_1)$ and $N_2(\mu_2, \Sigma_2)$) is computed with the following distance formula:

$$D(V(N_1), V(N_2)) = \frac{D_{KL}(N_1 \| N_2) + D_{KL}(N_2 \| N_1)}{2} \qquad (11)$$

where $D_{KL}$ denotes the Kullback-Leibler divergence between the two Gaussian distributions.
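The symmetric distance of Equation (11) can be sketched as below. To stay free of matrix libraries, the sketch restricts each Gaussian to a diagonal covariance, which is a simplification and not what the text specifies; for that case the Kullback-Leibler divergence reduces to a per-dimension sum. The function names are hypothetical.

```python
import math


def kl_divergence_diag(mu1, var1, mu2, var2):
    """KL divergence D_KL(N1 || N2) for Gaussians with diagonal covariances."""
    return 0.5 * sum(
        v1 / v2 + (m2 - m1) ** 2 / v2 - 1.0 + math.log(v2 / v1)
        for m1, v1, m2, v2 in zip(mu1, var1, mu2, var2)
    )


def appearance_distance(mu1, var1, mu2, var2):
    """Symmetric distance of Equation (11): the mean of the two KL divergences."""
    return 0.5 * (kl_divergence_diag(mu1, var1, mu2, var2)
                  + kl_divergence_diag(mu2, var2, mu1, var1))
```

The distance is zero for identical models and grows as the means separate or the variances diverge, which is the behavior the threshold T relies on.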

It is worth noting that if the length of the visitor hypothesis is two or more times $L_2$, it has already been associated with the visitor database, and it suffices to keep updating its corresponding appearance model in the visitor database through Equation (8).

The threshold T mentioned above serves as the basis for deciding whether two appearance models are associated. When the distance between two appearance models is greater than T, the two models are judged to come from different visitors; conversely, if the distance is less than T, the two are judged to be associated and therefore to belong to the same visitor.
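The association of a new appearance model with the visitor database (steps S650 to S670) can be sketched as follows. The record layout, the function name, and the pluggable `distance` callable are assumptions; the sketch only appends visit times on a match and leaves out the model update of Equations (8) to (10), which are not reproduced in this text.

```python
def associate_with_database(new_entry, database, threshold, distance):
    """Associate new_entry with the closest database entry, or add it as a new visitor.

    new_entry and database items are dicts with keys "model" and "times".
    Returns the index of the database entry that now represents the visitor.
    """
    best_index, best_distance = None, float("inf")
    for index, entry in enumerate(database):
        d = distance(new_entry["model"], entry["model"])
        if d < best_distance:
            best_index, best_distance = index, d
    if best_index is not None and best_distance < threshold:
        # Returning visitor: record the new visit times on the existing entry.
        database[best_index]["times"].extend(new_entry["times"])
        return best_index
    # Newly observed visitor: store the model together with its time stamps.
    database.append({"model": new_entry["model"], "times": list(new_entry["times"])})
    return len(database) - 1
```

In practice `distance` would be the symmetric divergence of Equation (11) and `threshold` the learned value T.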

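To compute the most suitable threshold T, the invention proposes an unsupervised learning strategy that lets the system learn and update automatically from the video in order to obtain the best appearance discrimination. The strategy considers two kinds of events. Event A occurs when the same visitor is tracked continuously and stably. As shown in FIGS. 7(a) and 7(b), once a visitor has been stably tracked by the system for a duration of $2L_2$, there is sufficient confidence that the two appearance models built over that period belong to the same visitor.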

When the numbers of event A and event B samples collected by the system reach a certain amount, they are analyzed statistically. As shown in FIG. 8, the feature value of event A is the distance between appearance models built for the same visitor at two different points in time, so its values lie close to zero and are tightly concentrated. The feature value of event B is the distance between the appearance models of two different objects, so its values lie farther from zero and are more widely spread. By computing the mean and standard deviation of each of the two events and representing each set of distance data by a normal distribution, the first distance distribution and the second distance distribution are established. Equation (13) then gives the best boundary between the first and second distance distributions, which serves as the threshold T for distinguishing appearance models.
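One way to place a boundary between the two normal distributions is to take the point between their means where the two densities are equal. Equation (13) is not reproduced in this text, so this equal-density criterion is an assumption rather than the patent's formula; the function names are hypothetical.

```python
import math


def normal_pdf(x, mu, sigma):
    """Density of a one-dimensional normal distribution."""
    return math.exp(-((x - mu) ** 2) / (2 * sigma ** 2)) / (sigma * math.sqrt(2 * math.pi))


def equal_density_threshold(mu_a, sigma_a, mu_b, sigma_b):
    """Return the point where the two densities cross, preferring one between the means."""
    # Equating the log densities gives a quadratic a*x^2 + b*x + c = 0.
    a = 1 / (2 * sigma_a ** 2) - 1 / (2 * sigma_b ** 2)
    b = mu_b / sigma_b ** 2 - mu_a / sigma_a ** 2
    c = (mu_a ** 2 / (2 * sigma_a ** 2) - mu_b ** 2 / (2 * sigma_b ** 2)
         + math.log(sigma_a / sigma_b))
    midpoint = (mu_a + mu_b) / 2
    if abs(a) < 1e-12:
        # Equal standard deviations: the crossing is the midpoint of the means.
        return -c / b if b != 0 else midpoint
    root = math.sqrt(max(b * b - 4 * a * c, 0.0))
    lo, hi = min(mu_a, mu_b), max(mu_a, mu_b)
    between = [x for x in ((-b + root) / (2 * a), (-b - root) / (2 * a)) if lo <= x <= hi]
    # Fall back to the midpoint when no crossing lies between the means.
    return between[0] if between else midpoint
```

With event A distances concentrated near zero and event B distances spread farther out, the returned value sits closer to the event A mean, reflecting its smaller spread.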

Finally, the invention further applies the appearance model and dwell time recorded for each visitor in the visitor database to loitering detection. It suffices to analyze the time series {s} recorded by each visitor's appearance model in the visitor database, using the following conditions (14) and (15) to decide whether the visitor is loitering:

$$s_t - s_1 > \alpha \tag{14}$$

$$s_i - s_{i-1} < \beta, \quad 1 < i \le t \tag{15}$$

Condition (14) states that the visitor has been present in the scene for longer than a preset time α, measured from the first appearance to the current detection. Condition (15) states that every interval between consecutive detections of the visitor is shorter than a preset time β.
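Conditions (14) and (15) translate directly into a check over the recorded time series. The sketch below assumes the timestamps are stored as an ascending list of numbers in a single unit such as seconds; the function name `is_loitering` is hypothetical.

```python
def is_loitering(times, alpha, beta):
    """Apply conditions (14) and (15) to an ascending list of timestamps.

    times: detection times s_1 .. s_t for one visitor.
    alpha: minimum total presence, condition (14).
    beta:  maximum allowed gap between consecutive detections, condition (15).
    """
    if len(times) < 2:
        # A single detection cannot span more than a non-negative alpha.
        return False
    # Condition (14): s_t - s_1 > alpha
    long_enough = times[-1] - times[0] > alpha
    # Condition (15): s_i - s_{i-1} < beta for every 1 < i <= t
    no_long_gap = all(
        later - earlier < beta for earlier, later in zip(times, times[1:])
    )
    return long_enough and no_long_gap
```

For example, detections at 0, 5, 10, 15 and 20 seconds with α = 15 and β = 6 satisfy both conditions, whereas a series with a 25-second gap fails condition (15) even though the total span is long.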

In summary, the moving object tracking method of the invention combines moving object tracking, visitor database management, and adaptive threshold learning. It builds an appearance model from the appearance features of a moving object across multiple images and compares that model with the data in a visitor database held in system memory, so that tracking of a visitor continues without interruption. Even if a visitor leaves the monitored scene and later re-enters, the visitor can still be successfully associated with his or her earlier behavior, which helps the person monitoring the scene to detect abnormal behavior early and respond to it.

Although the invention has been disclosed above by way of embodiments, they are not intended to limit the invention. Those skilled in the art may make minor changes and refinements without departing from the spirit and scope of the invention, so the scope of protection of the invention is defined by the appended claims.

Claims (21)

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN200810179785.8A CN101751549B (en) | 2008-12-03 | 2008-12-03 | Tracking methods for moving objects |

Publications (2)

| Publication Number | Publication Date |
|---|---|
| CN101751549A (en) | 2010-06-23 |
| CN101751549B (en) | 2014-03-26 |

Family

ID=42478515

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |
|---|---|---|---|
| CN200810179785.8A (Expired - Fee Related) CN101751549B (en) | Tracking methods for moving objects | 2008-12-03 | 2008-12-03 |

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN101751549B (en) |

Families Citing this family (11)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN103324906B (en)* | 2012-03-21 | 2016-09-14 | 日电(中国)有限公司 | A kind of method and apparatus of legacy detection |
| CN107992198B (en)* | 2013-02-06 | 2021-01-05 | 原相科技股份有限公司 | Optical pointing system |
| CN103824299B (en)* | 2014-03-11 | 2016-08-17 | 武汉大学 | A kind of method for tracking target based on significance |
| CN105574511B (en)* | 2015-12-18 | 2019-01-08 | 财团法人车辆研究测试中心 | Adaptive object classification device with parallel framework and method thereof |
| CN107305378A (en)* | 2016-04-20 | 2017-10-31 | 上海慧流云计算科技有限公司 | A kind of method that image procossing follows the trail of the robot of object and follows the trail of object |
| CN108205643B (en)* | 2016-12-16 | 2020-05-15 | 同方威视技术股份有限公司 | Image matching method and device |
| CN110032917A (en)* | 2018-01-12 | 2019-07-19 | 杭州海康威视数字技术股份有限公司 | A kind of accident detection method, apparatus and electronic equipment |
| CN109117721A (en)* | 2018-07-06 | 2019-01-01 | 江西洪都航空工业集团有限责任公司 | A kind of pedestrian hovers detection method |
| TWI697868B (en)* | 2018-07-12 | 2020-07-01 | 廣達電腦股份有限公司 | Image object tracking systems and methods |
| CN109102669A (en)* | 2018-09-06 | 2018-12-28 | 广东电网有限责任公司 | A kind of transformer substation auxiliary facility detection control method and its device |
| CN111815671B (en)* | 2019-04-10 | 2023-09-15 | 曜科智能科技(上海)有限公司 | Target quantity counting method, system, computer device and storage medium |

Citations (3)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN1141427A (en)* | 1995-12-29 | 1997-01-29 | 西安交通大学 | Method for measuring moving articles based on pattern recognition |
| CN1766928A (en)* | 2004-10-29 | 2006-05-03 | 中国科学院计算技术研究所 | A kind of motion object center of gravity track extraction method based on the dynamic background sport video |
| CN101170683A (en)* | 2006-10-27 | 2008-04-30 | 松下电工株式会社 | Target moving object tracking device |

Family Cites Families (2)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| US6295367B1 (en)* | 1997-06-19 | 2001-09-25 | Emtera Corporation | System and method for tracking movement of objects in a scene using correspondence graphs |
| JP3880759B2 (en)* | 1999-12-20 | 2007-02-14 | 富士通株式会社 | Moving object detection method |

- 2008-12-03: CN application CN200810179785.8A, patent CN101751549B (en), not active (Expired - Fee Related)

Also Published As

| Publication number | Publication date |

|---|---|

| CN101751549A (en) | 2010-06-23 |

Similar Documents

| Publication | Title |
|---|---|
| CN101751549B (en) | Tracking methods for moving objects |
| US8243990B2 (en) | Method for tracking moving object |
| KR101375583B1 (en) | Object Density Estimation in Video |
| CN100585656C (en) | A rule-based all-weather intelligent video analysis and monitoring method |
| CN102982313B (en) | The method of Smoke Detection |
| JP4759988B2 (en) | Surveillance system using multiple cameras |
| CN102311039B (en) | Monitoring device |
| CN107230267B (en) | Intelligence In Baogang Kindergarten based on face recognition algorithms is registered method |
| US20120274777A1 (en) | Method of tracking an object captured by a camera system |
| JP2007334623A (en) | Face authentication device, face authentication method, and entrance / exit management device |
| CN105335701B (en) | A kind of pedestrian detection method based on HOG Yu D-S evidence theory multi-information fusion |
| CN107491749B (en) | Method for detecting global and local abnormal behaviors in crowd scene |
| CN109829382B (en) | Abnormal target early warning tracking system and method based on intelligent behavior characteristic analysis |
| CN110781844B (en) | Security patrol monitoring method and device |
| CN107766823A (en) | Anomaly detection method in video based on key area feature learning |
| CN107016361A (en) | Recognition methods and device based on video analysis |
| CN106297305A (en) | A kind of combination license plate identification and the fake-licensed car layer detection method of path optimization |
| CN104809742A (en) | Article safety detection method in complex scene |
| Yu et al. | Multiple-Level Distillation for Video Fine-Grained Accident Detection |
| JP6739115B6 (en) | Risk determination program and system |
| CN115147921B (en) | Multi-domain information fusion-based key region target abnormal behavior detection and positioning method |
| CN117636240A (en) | A public video surveillance system based on big data |
| CN117238027A (en) | Method for identifying and tracking gait in real time |
| CN113326740B (en) | Improved double-flow traffic accident detection method |
| Huang et al. | Unsupervised pedestrian re-identification for loitering detection |

Legal Events

| Code | Title | Description |
|---|---|---|
| C06 | Publication | |
| PB01 | Publication | |
| C10 | Entry into substantive examination | |
| SE01 | Entry into force of request for substantive examination | |
| GR01 | Patent grant | |
| CF01 | Termination of patent right due to non-payment of annual fee | Granted publication date: 20140326 |