- Notifications

You must be signed in to change notification settings - Fork326

Intelligent Router for Mixture-of-Models

License

vllm-project/semantic-router

Folders and files

| Name | Name | Last commit message | Last commit date | |

|---|---|---|---|---|

Repository files navigation

Latest News 🔥

- [2025/12/15] New Blog:Token-Level Truth: Real-Time Hallucination Detection for Production LLMs 🚪

- [2025/11/19] New Blog:Signal-Decision Driven Architecture: Reshaping Semantic Routing at Scale 🧠

- [2025/11/03]Our paperCategory-Aware Semantic Caching for Heterogeneous LLM Workloads published 📝

- [2025/10/21] We announced the2025 Q4 Roadmap: Journey to Iris 📅.

- [2025/10/12]Our paperWhen to Reason: Semantic Router for vLLM accepted by NeurIPS 2025 MLForSys 🧠.

- [2025/10/08] We announced the integration withvLLM Production Stack Team 👋.

- [2025/10/01] We supported to deploy onKubernetes 🌊.

- [2025/09/01] We released the project officially:vLLM Semantic Router: Next Phase in LLM inference 🚀.

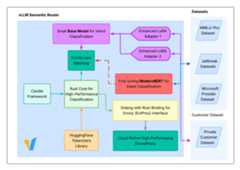

AMixture-of-Models (MoM) router that intelligently directs OpenAI API requests to the most suitable models or LoRA adapters from a defined pool based onSemantic Understanding of the request's intent (Complexity, Task, Tools).

Conceptually similar to Mixture-of-Experts (MoE) which liveswithin a model, this system selects the bestentire model for the nature of the task.

As such, the overall inference accuracy is improved by using a pool of models that are better suited for different types of tasks:

The router is implemented in two ways:

- Golang (with Rust FFI based on thecandle rust ML framework)

- PythonBenchmarking will be conducted to determine the best implementation.

Select the tools to use based on the prompt, avoiding the use of tools that are not relevant to the prompt so as to reduce the number of prompt tokens and improve tool selection accuracy by the LLM.

Automatically inject specialized system prompts based on query classification, ensuring optimal model behavior for different domains (math, coding, business, etc.) without manual prompt engineering.

Cache the semantic representation of the prompt so as to reduce the number of prompt tokens and improve the overall inference latency.

Detect PII in the prompt, avoiding sending PII to the LLM so as to protect the privacy of the user.

Detect if the prompt is a jailbreak prompt, avoiding sending jailbreak prompts to the LLM so as to prevent the LLM from misbehaving. Can be configured globally or at the category level for fine-grained security control.

Get up and running in seconds with our interactive setup script:

bash ./scripts/quickstart.sh

This command will:

- 🔍 Check all prerequisites automatically

- 📦 Install HuggingFace CLI if needed

- 📥 Download all required AI models (~1.5GB)

- 🐳 Start all Docker services

- ⏳ Wait for services to become healthy

- 🌐 Show you all the endpoints and next steps

For detailed installation and configuration instructions, see theComplete Documentation.

For comprehensive documentation including detailed setup instructions, architecture guides, and API references, visit:

👉Complete Documentation at Read the Docs

The documentation includes:

- Installation Guide - Complete setup instructions

- System Architecture - Technical deep dive

- Model Training - How classification models work

- API Reference - Complete API documentation

- Dashboard - vLLM Semantic Router Dashboard

For questions, feedback, or to contribute, please join#semantic-router channel in vLLM Slack.

We host bi-weekly community meetings to sync up with contributors across different time zones:

- First Tuesday of the month: 9:00-10:00 AM EST (accommodates US EST, EU, and Asia Pacific contributors)

- Third Tuesday of the month: 1:00-2:00 PM EST (accommodates US EST and California contributors)

- Meeting Recordings:YouTube

Join us to discuss the latest developments, share ideas, and collaborate on the project!

If you find Semantic Router helpful in your research or projects, please consider citing it:

@misc{semanticrouter2025, title={vLLM Semantic Router}, author={vLLM Semantic Router Team}, year={2025}, howpublished={\url{https://github.com/vllm-project/semantic-router}},}We opened the project at Aug 31, 2025. We love open source and collaboration ❤️

We are grateful to our sponsors who support us:

AMD provides us with GPU resources andROCm™ Software for training and researching the frontier router models, enhancing e2e testing, and building online models playground.

About

Intelligent Router for Mixture-of-Models

Topics

Resources

License

Code of conduct

Contributing

Uh oh!

There was an error while loading.Please reload this page.

Stars

Watchers

Forks

Releases

Packages0

Uh oh!

There was an error while loading.Please reload this page.