
Official page of Struct-MDC (RA-L'22 with IROS'22); Depth completion from Visual-SLAM using point & line features


url-kaist/Struct-MDC


Official page of "Struct-MDC: Mesh-Refined Unsupervised Depth Completion Leveraging Structural Regularities from Visual SLAM", accepted to IEEE RA-L'22 & IROS'22.

- Depth completion from Visual(-inertial) SLAM using point & line features.

- Code (including source code, utility code for visualization) & Dataset will be finalized & released soon!

- Version info
  - (04/20) Docker image has been uploaded.
  - (04/21) Dataset has been uploaded.
  - (04/21) Visual-SLAM module (modified UV-SLAM) has been uploaded.
  - (11/01) Data generation tools have been uploaded.
  - (12/14) Visual-SLAM module has been modified.
  - (12/22) Struct-MDC PyTorch code has been uploaded.

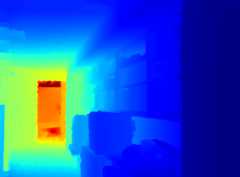

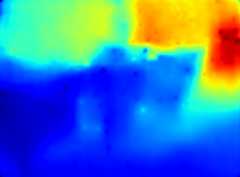

- 3D Depth estimation results

- 2D Depth estimation results: ground truth vs. baseline vs. Struct-MDC (ours)

(You can skip the Visual-SLAM module part if you just want to use the NYUv2, VOID, and PLAD datasets.)

- Common
  - Ubuntu 18.04
- Visual-SLAM module
  - ROS melodic
  - OpenCV 3.2.0 (under 3.4.1)
  - Ceres Solver-1.14.0
  - Eigen-3.3.9
  - CDT library

        git clone https://github.com/artem-ogre/CDT.git
        cd CDT
        mkdir build && cd build
        cmake -DCDT_USE_AS_COMPILED_LIBRARY=ON -DCDT_USE_BOOST=ON ..
        cmake --build . && cmake --install .
        sudo make install

- Depth completion module
  - Python 3.7.7
  - PyTorch 1.5.0 (you can easily reproduce an equivalent environment using our docker image)
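If you set up the depth completion environment by hand rather than through the docker image, a quick version check can catch mismatches early. The following is a minimal sketch, not part of the repository: the helper names `version_matches` and `check_environment` are hypothetical, and the expected versions are simply the ones listed above.

```python
import sys

# Versions taken from the dependency list above.
EXPECTED_PYTHON = (3, 7)
EXPECTED_TORCH = "1.5.0"


def version_matches(found: str, expected: str) -> bool:
    """Compare release strings, ignoring local build suffixes such as '+cu101'."""
    return found.split("+")[0] == expected


def check_environment() -> list:
    """Return a list of warnings; an empty list means the versions match."""
    warnings = []
    major, minor = sys.version_info[:2]
    if (major, minor) != EXPECTED_PYTHON:
        warnings.append(
            f"Python {major}.{minor} found, "
            f"README lists {EXPECTED_PYTHON[0]}.{EXPECTED_PYTHON[1]}"
        )
    try:
        import torch  # imported lazily so the check also runs without PyTorch
    except ImportError:
        warnings.append("PyTorch is not installed")
    else:
        found = str(torch.__version__)
        if not version_matches(found, EXPECTED_TORCH):
            warnings.append(f"PyTorch {found} found, README lists {EXPECTED_TORCH}")
    return warnings


if __name__ == "__main__":
    for message in check_environment():
        print("warning:", message)
```

A mismatch does not necessarily mean the code will fail; the sketch only reports differences from the versions listed above.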

(You can skip the Visual-SLAM module part if you just want to use the NYUv2, VOID, and PLAD datasets.)

Visual-SLAM module

- For the visual-SLAM module, we modified UV-SLAM, which is implemented in a ROS environment.
- Make sure that your catkin workspace has the following cmake args: -DCMAKE_BUILD_TYPE=Release

      cd ~/$(PATH_TO_YOUR_ROS_WORKSPACE)/src
      git clone --recursive https://github.com/url-kaist/Struct-MDC
      cd ..
      catkin build
      source ~/$(PATH_TO_YOUR_ROS_WORKSPACE)/devel/setup.bash

Depth completion module

- Our depth completion module is based on the popular deep-learning framework PyTorch.
- For your convenience, we share our environment as a Docker image. We assume that you have already installed Docker. For Docker installation, please refer here.

      # pull our docker image into your local machine
      docker pull zinuok/nvidia-torch:latest
      # run the image, mounting our source
      docker run -it --gpus "device=0" -v $(PATH_TO_YOUR_LOCAL_FOLDER):/workspace zinuok/nvidia-torch:latest bash

- Any issues found will be updated in this section.
- If you find any other issues, please post them on the Issues tab. We'll do our best to resolve them.

Datasets

- We used three datasets to verify the performance of our proposed method.
- We share our modified datasets, which also include the line features from UV-SLAM and have already been pre-processed. For testing our method, please use the modified ones.

Pre-trained weights

- We provide our pre-trained network, which is the same as the one used in the paper.
- Please download the weights from the table below and put them into 'Struct-MDC_src/pretrained'.
- There are two files for each dataset, containing the pre-trained weights for:
  - Depth network: used at training and evaluation time.
  - Pose network: used only at training time (for supervision).

PLAD

- This is our proposed dataset, which has point & line feature depth from UV-SLAM.
- Each sequence was acquired in various indoor / outdoor man-made environments.
- The PLAD dataset has been slightly modified from the paper's version. We are trying to optimize each sequence and reproduce the results on this dataset. The dataset is always available, but it will be continuously updated with version information, so please keep track of our updated instructions.

For more details on each dataset we used, please refer to our paper.

| Dataset | ref. link | train/eval data | ROS bag | DepthNet weight | PoseNet weight |
|---|---|---|---|---|---|
| VOID | original | void-download | void-raw | void-depth | void-pose |
| NYUv2 | pre-processed | nyu-download | - | nyu-depth | nyu-pose |
| PLAD | proposed! | plad-download | plad-raw | plad-depth | plad-pose |

Using our pre-trained network, you can run our network and verify that the performance matches the results in our paper.

    # move the pre-trained weights to the following folder:
    cd Struct-MDC/Struct-MDC_src/
    mv $(PATH_TO_PRETRAINED_WEIGHTS) ./pretrained/{plad | nyu | void}/struct_MDC_model/
    # link raw dataset
    tar -xvzf {PLAD: PLAD_v2 | VOID: void_parsed_line | NYUV2: nyu_v2_line}.tar.gz
    ln -s $(PATH_TO_DATASET_FOLDER) ./data/
    # data preparation
    python3 setup/setup_dataset_{nyu | void | plad}.py
    # running
    bash bash/run_structMDC_{nyu | void | plad}_pretrain.sh
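The brace placeholders in the commands above, such as {nyu | void | plad}, stand for one choice per dataset. As a minimal sketch, the hypothetical helper below (not part of the repository) expands them into the concrete command sequence for a single dataset. The archive and script names are copied from the commands above; the function name, its arguments, and the use of `shlex.quote` for path quoting are assumptions made for illustration.

```python
import shlex

# Archive base names per dataset, copied from the tar command above.
ARCHIVES = {
    "plad": "PLAD_v2",
    "void": "void_parsed_line",
    "nyu": "nyu_v2_line",
}


def pretrained_eval_commands(dataset, weights_path, dataset_dir):
    """Return the shell commands for evaluating one dataset, in order.

    `dataset` is one of 'plad', 'void', 'nyu'. The two paths fill the
    $(PATH_TO_PRETRAINED_WEIGHTS) and $(PATH_TO_DATASET_FOLDER) placeholders.
    """
    if dataset not in ARCHIVES:
        raise ValueError(f"unknown dataset {dataset!r}; expected one of {sorted(ARCHIVES)}")
    weights = shlex.quote(str(weights_path))
    data = shlex.quote(str(dataset_dir))
    return [
        "cd Struct-MDC/Struct-MDC_src/",
        f"mv {weights} ./pretrained/{dataset}/struct_MDC_model/",
        f"tar -xvzf {ARCHIVES[dataset]}.tar.gz",
        f"ln -s {data} ./data/",
        f"python3 setup/setup_dataset_{dataset}.py",
        f"bash bash/run_structMDC_{dataset}_pretrain.sh",
    ]


if __name__ == "__main__":
    # Example paths are placeholders, not real locations.
    for command in pretrained_eval_commands("void", "/tmp/weights", "/data/void_parsed_line"):
        print(command)
```

The helper only prints the commands, so you can review them before running anything. The README does not state the directory in which the archive should be extracted, so the `tar` line is reproduced as written.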

- Evaluation results

| | MAE | RMSE | < 1.05 | < 1.10 | < 1.25^3 |
|---|---|---|---|---|---|
| paper | 1170.303 | 1481.583 | 4.567 | 8.899 | 67.071 |
| modified | - | - | - | - | - |
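For readers unfamiliar with the column names, the sketch below shows how such metrics are commonly defined in the depth estimation literature: MAE and RMSE over valid pixels, and the percentage of pixels whose ratio max(pred/gt, gt/pred) falls below a threshold. This is an assumption about what the columns mean, not the repository's evaluation code; the function name `depth_metrics` and the rule of skipping non-positive values are illustrative choices.

```python
import math


def depth_metrics(pred, gt, thresholds=(1.05, 1.10, 1.25 ** 3)):
    """Compute MAE, RMSE, and threshold accuracies (in percent).

    `pred` and `gt` are flat sequences of depth values. Pixels with a
    non-positive ground truth or prediction are skipped (an assumption,
    mirroring the usual 'valid pixel' mask and avoiding division by zero).
    """
    pairs = [(p, g) for p, g in zip(pred, gt) if p > 0 and g > 0]
    if not pairs:
        raise ValueError("no valid pixels to evaluate")
    n = len(pairs)
    mae = sum(abs(p - g) for p, g in pairs) / n
    rmse = math.sqrt(sum((p - g) ** 2 for p, g in pairs) / n)
    # Symmetric ratio: 1.0 means a perfect prediction for that pixel.
    ratios = [max(p / g, g / p) for p, g in pairs]
    accuracy = {t: 100.0 * sum(r < t for r in ratios) / n for t in thresholds}
    return mae, rmse, accuracy


if __name__ == "__main__":
    # Toy values; the third pixel has no ground truth and is ignored.
    mae, rmse, accuracy = depth_metrics([1000.0, 2000.0, 3000.0], [1000.0, 2120.0, 0.0])
    print(mae, rmse, accuracy)
```

Under these definitions, lower is better for MAE and RMSE, and higher is better for the threshold columns.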

You can also train the network from scratch using your own data.

In this case, however, you have to prepare the data in the same format as ours, using the following procedure:

(Since our data structure, dataloader, and pre-processing code templates follow our baseline, KBNet, you can also refer to the authors' link. We thank the KBNet authors.)

    # data preparation
    cd Struct-MDC_src
    mkdir data
    ln -s $(PATH_TO_{NYU | VOID | PLAD}_DATASET_ROOT) data/
    python3 setup/setup_dataset_{nyu | void | plad}.py
    # train
    bash bash/train_{nyu | void | plad}.sh
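The `mkdir data` and `ln -s` steps above can also be done from Python, which makes them safe to re-run. This is a minimal sketch under stated assumptions: `link_dataset` is a hypothetical helper, not part of the repository, and it mirrors `ln -s <root> data/` by creating a link named after the last component of the dataset root.

```python
from pathlib import Path


def link_dataset(dataset_root, src_dir="Struct-MDC_src"):
    """Create <src_dir>/data/<name> as a symlink to `dataset_root`.

    Equivalent in spirit to `mkdir data && ln -s <dataset_root> data/`,
    but does nothing if the link (or a same-named entry) already exists.
    """
    root = Path(dataset_root)
    data_dir = Path(src_dir) / "data"
    data_dir.mkdir(parents=True, exist_ok=True)
    link = data_dir / root.name
    if link.is_symlink() or link.exists():
        return link  # already prepared; leave it untouched
    link.symlink_to(root.resolve(), target_is_directory=True)
    return link
```

After linking, the setup and training scripts are run exactly as in the commands above.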

- Only if you want to make your own dataset from UV-SLAM depth estimation, follow "Post-process your own Dataset".

If you use the algorithm in an academic context, please cite the following publication:

    @article{jeon2022struct,
      title={Struct-MDC: Mesh-Refined Unsupervised Depth Completion Leveraging Structural Regularities From Visual SLAM},
      author={Jeon, Jinwoo and Lim, Hyunjun and Seo, Dong-Uk and Myung, Hyun},
      journal={IEEE Robotics and Automation Letters},
      volume={7},
      number={3},
      pages={6391--6398},
      year={2022},
      publisher={IEEE}
    }

    @article{lim2022uv,
      title={UV-SLAM: Unconstrained Line-based SLAM Using Vanishing Points for Structural Mapping},
      author={Lim, Hyunjun and Jeon, Jinwoo and Myung, Hyun},
      journal={IEEE Robotics and Automation Letters},
      year={2022},
      publisher={IEEE},
      volume={7},
      number={2},
      pages={1518-1525},
      doi={10.1109/LRA.2022.3140816}
    }

- Visual-SLAM module

We use UV-SLAM, which is based on VINS-MONO, as our baseline code. Many thanks to H. Lim, Dr. Qin Tong, Prof. Shen, and others.

- Depth completion module

We use KBNet as our baseline code. Many thanks to W. Alex and S. Stefano.

The source code is released under the GPLv3 license.

We are still working on improving the code reliability.

For any technical issues, please contact Jinwoo Jeon (zinuok@kaist.ac.kr).
