Interpretable Machine Learning and Statistical Inference with Accumulated Local Effects (ALE)

License

Unknown, MIT licenses found

Accumulated Local Effects (ALE) were initially developed as a model-agnostic approach for global explanations of the results of black-box machine learning algorithms (Apley, Daniel W., and Jingyu Zhu. ‘Visualizing the effects of predictor variables in black box supervised learning models.’ Journal of the Royal Statistical Society Series B: Statistical Methodology 82.4 (2020): 1059-1086, doi:10.1111/rssb.12377). ALE has two primary advantages over other approaches like partial dependency plots (PDP) and SHapley Additive exPlanations (SHAP): its values are not affected by the presence of interactions among variables in a model and its computation is relatively rapid. This package reimplements the algorithms for calculating ALE data and develops highly interpretable visualizations for plotting these ALE values. It also extends the original ALE concept to add bootstrap-based confidence intervals and ALE-based statistics that can be used for statistical inference.

For more details, see Okoli, Chitu. 2023. “Statistical Inference Using Machine Learning and Classical Techniques Based on Accumulated Local Effects (ALE).” arXiv. doi:10.48550/arXiv.2310.09877.
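To make the idea of accumulating local effects concrete, here is a minimal base-R sketch of a 1D ALE calculation for one numeric variable. It is an illustration only, not the `{ale}` package's implementation: the function name `ale_1d_sketch()`, its arguments, and the synthetic `toy` data are all hypothetical, and it assumes a model with a standard `predict(model, newdata = ...)` method.

```r
# Illustrative 1D ALE sketch in base R; NOT the {ale} package's implementation.
# ale_1d_sketch() and its arguments are hypothetical names for this example.
ale_1d_sketch <- function(model, data, x_col, n_bins = 10) {
  x <- data[[x_col]]

  # Bin edges at quantiles, so each bin holds a similar number of rows
  edges <- unique(
    quantile(x, probs = seq(0, 1, length.out = n_bins + 1), names = FALSE)
  )
  bin <- cut(x, breaks = edges, include.lowest = TRUE, labels = FALSE)
  n_per_bin <- tabulate(bin, nbins = length(edges) - 1)

  # Local effect of each row: change in prediction when x moves from the
  # lower to the upper edge of its own bin, all other columns held fixed
  lower <- data
  upper <- data
  lower[[x_col]] <- edges[bin]
  upper[[x_col]] <- edges[bin + 1]
  delta <- predict(model, newdata = upper) - predict(model, newdata = lower)

  # Average the local effects within each bin, then accumulate across bins
  bin_means <- as.numeric(
    tapply(delta, factor(bin, levels = seq_along(n_per_bin)), mean)
  )
  bin_means[is.na(bin_means)] <- 0  # empty bins contribute no change
  accumulated <- c(0, cumsum(bin_means))

  # Centre so that the row-weighted average effect is zero
  bin_mid <- (accumulated[-length(accumulated)] + accumulated[-1]) / 2
  centred <- accumulated - sum(bin_mid * n_per_bin) / sum(n_per_bin)

  data.frame(x = edges, ale = centred)
}

# Synthetic data (an assumption for illustration): x2 is correlated with x1
set.seed(1)
n <- 500
toy <- data.frame(x1 = runif(n))
toy$x2 <- toy$x1 + rnorm(n, sd = 0.1)
toy$y <- 2 * toy$x1 + 3 * toy$x2 + rnorm(n, sd = 0.1)

fit <- lm(y ~ x1 + x2, data = toy)
res <- ale_1d_sketch(fit, toy, "x1")
```

Because the toy model is linear, the resulting ALE curve for `x1` is a straight line whose slope equals the fitted `x1` coefficient, even though `x1` and `x2` are strongly correlated. For real analyses, use the package's `ALE()` constructor shown later in this README.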

The `{ale}` package defines four main `{S7}` classes:

- `ALE`: data for 1D ALE (single variables) and 2D ALE (two-way interactions). ALE values may be bootstrapped with ALE statistics calculated.
- `ModelBoot`: bootstrap results for an entire model, not just the ALE values. This function returns the bootstrapped model statistics and coefficients as well as the bootstrapped ALE values. This is the appropriate approach for models that have not been cross-validated.
- `ALEPlots`: stores ALE plots generated from either `ALE` or `ModelBoot` with convenient `print()`, `plot()`, and `get()` methods.
- `ALEpDist`: a distribution object for calculating the p-values for the ALE statistics of an `ALE` object.

You can obtain direct help for any of the package’s user-facing functions with the R `help()` function, e.g., `help(ale)`. However, the most detailed documentation is found in the website for the most recent development version. There you can find several articles, including the introductory vignette and the vignette on ALE-based statistics for statistical inference and effect sizes.

You can obtain the official releases from CRAN:

```r
install.packages('ale')
```

The CRAN releases are extensively tested and should have relatively few bugs. However, this package is still in beta stage. For the `{ale}` package, that means that there will occasionally be new features with changes in the function interface that might break the functionality of earlier versions. Please excuse us for this as we move towards a stable version that flexibly meets the needs of the broadest user base.

To get the most recent features, you can install the development version of the package from GitHub with:

```r
# install.packages('pak')
pak::pak('tripartio/ale')
```

The development version in the main branch of GitHub is always thoroughly checked. However, the documentation might not be fully up-to-date with the functionality.

We will give two demonstrations of how to use the package: first, a simple demonstration of ALE plots, and second, a more sophisticated demonstration suitable for statistical inference with p-values. For both demonstrations, we begin by fitting a GAM model. We assume that this is a final deployment model that needs to be fitted to the entire dataset.

```r
library(dplyr)
#>
#> Attaching package: 'dplyr'
#> The following objects are masked from 'package:stats':
#>
#>     filter, lag
#> The following objects are masked from 'package:base':
#>
#>     intersect, setdiff, setequal, union

# Load diamonds dataset with some cleanup
diamonds <- ggplot2::diamonds |>
  filter(!(x == 0 | y == 0 | z == 0)) |>
  # https://lorentzen.ch/index.php/2021/04/16/a-curious-fact-on-the-diamonds-dataset/
  distinct(
    price, carat, cut, color, clarity,
    .keep_all = TRUE
  ) |>
  rename(
    x_length = x,
    y_width = y,
    z_depth = z,
    depth_pct = depth
  )
```

```r
# Create a GAM model with flexible curves to predict diamond price
# Smooth all numeric variables and include all other variables
# Fit the model on the full dataset, since this is the final deployment model.
gam_diamonds <- mgcv::gam(
  price ~ s(carat) + s(depth_pct) + s(table) +
    s(x_length) + s(y_width) + s(z_depth) +
    cut + color + clarity,
  data = diamonds
)
```

For the simple demonstration, we directly create ALE data with the `ALE()` function and then plot the `ggplot` plot objects.

```r
library(ale)
#>
#> Attaching package: 'ale'
#> The following object is masked from 'package:base':
#>
#>     get

# For speed, these examples use retrieve_rds() to load pre-created objects
# from an online repository.
# To run the code yourself, execute the code blocks directly.
serialized_objects_site <- "https://github.com/tripartio/ale/raw/main/download"

# Create ALE data
ale_gam_diamonds <- retrieve_rds(
  # For speed, load a pre-created object by default.
  c(serialized_objects_site, 'ale_gam_diamonds.0.5.2.rds'),
  {
    # To run the code yourself, execute this code block directly.
    ALE(gam_diamonds, data = diamonds)
  }
)
# saveRDS(ale_gam_diamonds, file.choose())

# Plot the ALE data
plot(ale_gam_diamonds) |>
  print(ncol = 2)
```

For an explanation of these basic features, see the introductory vignette.

The statistical functionality of the `{ale}` package is rather slow because it typically involves 100 bootstrap iterations and sometimes 1,000 random simulations. Even though most functions in the package implement parallel processing by default, such procedures still take some time. So, this statistical demonstration gives you downloadable objects for a rapid demonstration.

First, we need to create a p-value distribution object so that the ALE statistics can be properly distinguished from random effects.

```r
# Create p_value distribution object
p_dist_gam_diamonds_readme <- retrieve_rds(
  # For speed, load a pre-created object by default.
  c(serialized_objects_site, 'p_dist_gam_diamonds_readme.0.5.2.rds'),
  {
    # Rather slow because it retrains the model 100 times.
    # To run the code yourself, execute this code block directly.
    ALEpDist(
      gam_diamonds, diamonds,
      # Normally should be default 1000, but just 100 for a quicker demo.
      rand_it = 100
    )
  }
)
# saveRDS(p_dist_gam_diamonds_readme, file.choose())
```
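The idea of distinguishing a real effect from random effects by repeatedly retraining the model can be illustrated with a small base-R sketch. This is not how `ALEpDist()` is implemented. It assumes a simplified procedure in which a pure-noise variable is added to the data, the model is refitted, and an effect statistic is recorded for the noise variable on each iteration. The helper `effect_stat()` (an absolute `lm` coefficient) is a hypothetical stand-in for the ALE statistics, and the data are synthetic.

```r
# Illustrative random-variable p-value sketch; NOT the ALEpDist() implementation.
# effect_stat() is a hypothetical stand-in for an ALE-based effect statistic.
set.seed(42)
n <- 200
d <- data.frame(x = rnorm(n))
d$y <- 1.5 * d$x + rnorm(n)  # synthetic data with a genuine effect of x

# Stand-in effect statistic: absolute coefficient of the named term
effect_stat <- function(fit, term) abs(unname(coef(fit)[term]))

# Statistic observed for the real predictor
observed <- effect_stat(lm(y ~ x, data = d), "x")

# Retrain the model rand_it times, each time with a fresh pure-noise variable,
# and record the statistic that the noise variable achieves by chance
rand_it <- 100
random_stats <- replicate(rand_it, {
  d_rand <- d
  d_rand$random_var <- rnorm(n)
  effect_stat(lm(y ~ x + random_var, data = d_rand), "random_var")
})

# Empirical p-value: share of random statistics at least as large as observed
p_value <- mean(random_stats >= observed)
```

The retraining on every iteration is what makes this kind of procedure slow, which is why the demonstration above loads a pre-created object by default.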

Now we can create bootstrapped ALE data and see some of the differences in the plots of bootstrapped ALE with p-values:

```r
# Create ALE data with p-values
ale_gam_diamonds_stats_readme <- retrieve_rds(
  # For speed, load a pre-created object by default.
  c(serialized_objects_site, 'ale_gam_diamonds_stats_readme.0.5.2.rds'),
  {
    # To run the code yourself, execute this code block directly.
    ALE(
      gam_diamonds,
      # generate ALE for all 1D variables and the carat:clarity 2D interaction
      x_cols = list(d1 = TRUE, d2 = 'carat:clarity'),
      data = diamonds,
      p_values = p_dist_gam_diamonds_readme,
      # Usually at least 100 bootstrap iterations, but just 10 here for a faster demo
      boot_it = 10
    )
  }
)
# saveRDS(ale_gam_diamonds_stats_readme, file.choose())

# Create an ALEPlots object for fine-tuned plotting
ale_plots <- plot(ale_gam_diamonds_stats_readme)

# Plot 1D ALE plots
ale_plots |>
  # Only select 1D ALE plots.
  # Use subset() instead of get() to keep the special ALEPlots object
  # plot and print functionality.
  subset(list(d1 = TRUE)) |>
  print(ncol = 2)
```

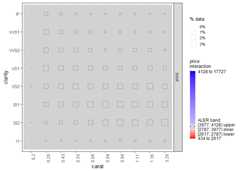

```r
# Plot a selected 2D plot
ale_plots |>
  # get() retrieves a specific desired plot
  get('carat:clarity')
```

For a detailed explanation of how to interpret these plots, see the vignette on ALE-based statistics for statistical inference and effect sizes.

If you find a bug, please report it on GitHub. Always include a minimal reproducible example with your usage requests. If you cannot include your own dataset in the question, then use one of the built-in datasets to frame your help request: `var_cars` or `census`. You may also use `ggplot2::diamonds` for a larger sample.

If you find this package useful, I would appreciate it if you would cite the appropriate sources as follows, depending on what aspects you use.

Apley, Daniel W., and Jingyu Zhu (2020). “Visualizing the effects of predictor variables in black box supervised learning models.” Journal of the Royal Statistical Society Series B: Statistical Methodology 82, no. 4: 1059-1086.

Okoli, Chitu (2023). “Statistical inference using machine learning and classical techniques based on accumulated local effects (ALE).” arXiv preprint arXiv:2310.09877.

Okoli, Chitu (2024). “Model-Agnostic Interpretability: Effect Size Measures from Accumulated Local Effects (ALE)”. INFORMS Workshop on Data Science 2024. Seattle.

Okoli, Chitu ([year of package version used]). “ale: Interpretable Machine Learning and Statistical Inference with Accumulated Local Effects (ALE)”. R software package version [enter version number]. https://CRAN.R-project.org/package=ale.
