
Implementation of paper - YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors


roboflow/yolov7


- Integrated into Hugging Face Spaces 🤗 using Gradio. Try out the Web Demo.

MS COCO

| Model | Test size | AP (test) | AP50 (test) | AP75 (test) | Batch 1 speed | Batch 32 average time |
|---|---|---|---|---|---|---|
| YOLOv7 | 640 | 51.4% | 69.7% | 55.9% | 161 fps | 2.8 ms |
| YOLOv7-X | 640 | 53.1% | 71.2% | 57.8% | 114 fps | 4.3 ms |
| YOLOv7-W6 | 1280 | 54.9% | 72.6% | 60.1% | 84 fps | 7.6 ms |
| YOLOv7-E6 | 1280 | 56.0% | 73.5% | 61.2% | 56 fps | 12.3 ms |
| YOLOv7-D6 | 1280 | 56.6% | 74.0% | 61.8% | 44 fps | 15.0 ms |
| YOLOv7-E6E | 1280 | 56.8% | 74.4% | 62.1% | 36 fps | 18.7 ms |
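The two speed columns measure different things: batch 1 speed is throughput when images are processed one at a time, while the batch 32 column is an average time when 32 images are processed together. Converting fps to milliseconds per image puts both on the same scale. This small sketch uses the values from the table and assumes the batch 32 figure is a per-image time, which is how the column reads but is not stated explicitly.

```python
# Rows copied from the MS COCO table: (model, batch 1 fps, batch 32 average time in ms).
ROWS = [
    ("YOLOv7", 161, 2.8),
    ("YOLOv7-X", 114, 4.3),
    ("YOLOv7-W6", 84, 7.6),
    ("YOLOv7-E6", 56, 12.3),
    ("YOLOv7-D6", 44, 15.0),
    ("YOLOv7-E6E", 36, 18.7),
]


def fps_to_ms(fps: float) -> float:
    """Convert frames per second to milliseconds per frame."""
    return 1000.0 / fps


def batching_speedup(fps_batch1: float, ms_batch32: float) -> float:
    """Ratio of batch 1 latency to the (assumed per-image) batch 32 time."""
    return fps_to_ms(fps_batch1) / ms_batch32


if __name__ == "__main__":
    for name, fps, ms32 in ROWS:
        print(f"{name:<11} batch 1: {fps_to_ms(fps):5.2f} ms/img  "
              f"batch 32: {ms32:5.2f} ms/img  "
              f"speedup x{batching_speedup(fps, ms32):.2f}")
```

For YOLOv7 this gives roughly 6.2 ms per image at batch 1 versus 2.8 ms at batch 32, i.e. batching more than doubles throughput under that reading of the column.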

Docker environment (recommended)


# create the docker container; you can increase the shared memory size if you have more
nvidia-docker run --name yolov7 -it -v your_coco_path/:/coco/ -v your_code_path/:/yolov7 --shm-size=64g nvcr.io/nvidia/pytorch:21.08-py3

# apt install required packages
apt update
apt install -y zip htop screen libgl1-mesa-glx

# pip install required packages
pip install seaborn thop

# go to code folder
cd /yolov7
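Inside the container, a quick way to confirm that the pip packages installed above (seaborn and thop) are importable is `importlib.util.find_spec`. This is a hypothetical convenience check, not part of the repository; the module list is an assumption you can extend.

```python
import importlib.util

# Module names for the pip packages installed above; extend as needed.
REQUIRED = ["seaborn", "thop"]


def missing_modules(names):
    """Return the subset of top-level module names that cannot be found."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED)
    if missing:
        print("missing:", ", ".join(missing))
    else:
        print("all required modules are importable")
```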

Testing

yolov7.pt yolov7x.pt yolov7-w6.pt yolov7-e6.pt yolov7-d6.pt yolov7-e6e.pt

python test.py --data data/coco.yaml --img 640 --batch 32 --conf 0.001 --iou 0.65 --device 0 --weights yolov7.pt --name yolov7_640_val

You will get the results:

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.51206
Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.69730
Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.55521
Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.35247
Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.55937
Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.66693
Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.38453
Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.63765
Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.68772
Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.53766
Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.73549
Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.83868

To measure accuracy, download COCO-annotations for Pycocotools to ./coco/annotations/instances_val2017.json.
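The pycocotools summary above is plain text, so when comparing several evaluation runs it can help to pull the numbers into a dictionary. The sketch below is a hypothetical helper (not part of the repository) that parses lines in exactly the format printed above with a regular expression; the key layout `(metric, iou, area, max_dets)` is an arbitrary choice.

```python
import re

# Matches one pycocotools summary line, e.g.
# "Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.51206"
LINE_RE = re.compile(
    r"Average (?:Precision|Recall)\s+\((AP|AR)\)\s+@\[\s*IoU=([\d.:]+)\s*\|\s*"
    r"area=\s*(\w+)\s*\|\s*maxDets=\s*(\d+)\s*\]\s*=\s*([-\d.]+)"
)


def parse_coco_summary(text):
    """Return {(metric, iou, area, max_dets): value} for every summary entry found."""
    results = {}
    for metric, iou, area, max_dets, value in LINE_RE.findall(text):
        results[(metric, iou, area, int(max_dets))] = float(value)
    return results
```

Because `findall` does not rely on line breaks, the helper also works on a log where the twelve lines were run together.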

Data preparation

bash scripts/get_coco.sh

- Download MS COCO dataset images (train, val, test) and labels. If you have previously used a different version of YOLO, we strongly recommend that you delete the train2017.cache and val2017.cache files and redownload the labels.
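The cache cleanup recommended above can be scripted. This is a minimal sketch with a hypothetical helper name; it assumes the dataset sits under a directory you pass as `root` and deletes every `train2017.cache` and `val2017.cache` file found anywhere below it.

```python
from pathlib import Path

# Label cache files left behind by other YOLO versions that should be regenerated.
STALE_CACHES = ("train2017.cache", "val2017.cache")


def remove_stale_caches(root):
    """Delete stale label cache files under `root`; return the removed paths."""
    removed = []
    for name in STALE_CACHES:
        for path in Path(root).rglob(name):
            if path.is_file():
                path.unlink()
                removed.append(path)
    return removed
```

Usage, assuming the dataset was downloaded into `coco/`: call `remove_stale_caches("coco")` once before training and let the training script rebuild the caches.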

Single GPU training

# train p5 models
python train.py --workers 8 --device 0 --batch-size 32 --data data/coco.yaml --img 640 640 --cfg cfg/training/yolov7.yaml --weights '' --name yolov7 --hyp data/hyp.scratch.p5.yaml

# train p6 models
python train_aux.py --workers 8 --device 0 --batch-size 16 --data data/coco.yaml --img 1280 1280 --cfg cfg/training/yolov7-w6.yaml --weights '' --name yolov7-w6 --hyp data/hyp.scratch.p6.yaml
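P5 and P6 models differ in their largest output stride: a P5 head ends at pyramid level 5 (stride 2^5 = 32) and a P6 head at level 6 (stride 2^6 = 64). The training size passed with `--img` should be a multiple of that stride, which 640 and 1280 both are. The sketch below is an independent illustration of that rounding-up rule, not the repository's own size check; `valid_img_size` is a hypothetical name.

```python
import math

# Largest output stride per model family: P5 heads end at 2**5, P6 heads at 2**6.
MAX_STRIDE = {"p5": 32, "p6": 64}


def round_to_stride(img_size: int, stride: int) -> int:
    """Round img_size up to the nearest multiple of stride."""
    return math.ceil(img_size / stride) * stride


def valid_img_size(img_size: int, family: str) -> int:
    """Return a stride-compatible input size, reporting when it had to change."""
    stride = MAX_STRIDE[family]
    new_size = round_to_stride(img_size, stride)
    if new_size != img_size:
        print(f"--img {img_size} is not a multiple of {stride}; using {new_size}")
    return new_size
```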

Multiple GPU training

# train p5 models
python -m torch.distributed.launch --nproc_per_node 4 --master_port 9527 train.py --workers 8 --device 0,1,2,3 --sync-bn --batch-size 128 --data data/coco.yaml --img 640 640 --cfg cfg/training/yolov7.yaml --weights '' --name yolov7 --hyp data/hyp.scratch.p5.yaml

# train p6 models
python -m torch.distributed.launch --nproc_per_node 8 --master_port 9527 train_aux.py --workers 8 --device 0,1,2,3,4,5,6,7 --sync-bn --batch-size 128 --data data/coco.yaml --img 1280 1280 --cfg cfg/training/yolov7-w6.yaml --weights '' --name yolov7-w6 --hyp data/hyp.scratch.p6.yaml
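In the multi-GPU commands, `--batch-size 128` is read here as the total across all processes, so each GPU gets 128 / 4 = 32 images for the P5 command and 128 / 8 = 16 for the P6 command, the same per-GPU sizes as the single-GPU commands. The sketch below only does that arithmetic; rejecting totals that do not divide evenly is an assumption about how the trainer behaves, and both helper names are hypothetical.

```python
def device_count(device_arg: str) -> int:
    """Count devices in a --device string such as '0,1,2,3'."""
    return len([d for d in device_arg.split(",") if d.strip()])


def per_device_batch(total_batch: int, num_devices: int) -> int:
    """Split a total batch size evenly across devices (one process per GPU)."""
    if num_devices < 1:
        raise ValueError("need at least one device")
    if total_batch % num_devices:
        raise ValueError(
            f"--batch-size {total_batch} is not divisible by {num_devices} devices")
    return total_batch // num_devices
```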

Transfer learning

yolov7_training.pt yolov7x_training.pt yolov7-w6_training.pt yolov7-e6_training.pt yolov7-d6_training.pt yolov7-e6e_training.pt

Single GPU finetuning for custom dataset

# finetune p5 models
python train.py --workers 8 --device 0 --batch-size 32 --data data/custom.yaml --img 640 640 --cfg cfg/training/yolov7-custom.yaml --weights 'yolov7_training.pt' --name yolov7-custom --hyp data/hyp.scratch.custom.yaml

# finetune p6 models
python train_aux.py --workers 8 --device 0 --batch-size 16 --data data/custom.yaml --img 1280 1280 --cfg cfg/training/yolov7-w6-custom.yaml --weights 'yolov7-w6_training.pt' --name yolov7-w6-custom --hyp data/hyp.scratch.custom.yaml
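`data/custom.yaml` describes your own dataset. Assuming it follows the same layout as `data/coco.yaml` (`train` and `val` image locations, `nc` for the number of classes, `names` for the class labels), a minimal file can be generated as below. The paths and class names are placeholders, `write_data_yaml` is a hypothetical helper, and the class count in the matching `cfg/training/*-custom.yaml` presumably has to agree with `nc`.

```python
import json
from pathlib import Path


def write_data_yaml(path, train, val, names):
    """Write a minimal YOLO-style dataset YAML with train/val/nc/names keys."""
    lines = [
        f"train: {train}",
        f"val: {val}",
        f"nc: {len(names)}",
        # A JSON array of strings is also a valid YAML flow sequence,
        # so labels containing spaces or colons stay properly quoted.
        "names: " + json.dumps(list(names)),
    ]
    Path(path).write_text("\n".join(lines) + "\n")
    return lines
```

For example, `write_data_yaml("data/custom.yaml", "../custom/images/train", "../custom/images/val", ["person", "traffic light"])` writes a two-class description.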

On video:

python detect.py --weights yolov7.pt --conf 0.25 --img-size 640 --source yourvideo.mp4

On image:

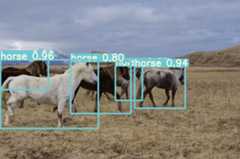

python detect.py --weights yolov7.pt --conf 0.25 --img-size 640 --source inference/images/horses.jpg
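`--img-size 640` is not a plain stretch to 640 x 640. Assuming the usual YOLO letterbox preprocessing, the frame is scaled with its aspect ratio preserved and then padded; with minimal ("auto") padding it is only padded up to the next multiple of the stride. The sketch below computes the scale and padding for a given frame size. It is an illustrative re-derivation, not the repository's letterbox function, and stride 32 is an assumption for P5 models.

```python
def letterbox_params(height, width, new_size=640, stride=32, auto=True):
    """Return (scale, (new_h, new_w), (pad_top, pad_bottom, pad_left, pad_right))."""
    # Largest scale at which the whole frame fits inside new_size x new_size.
    scale = min(new_size / height, new_size / width)
    new_h, new_w = round(height * scale), round(width * scale)
    pad_h, pad_w = new_size - new_h, new_size - new_w
    if auto:
        # Minimal padding: only enough to reach a multiple of the stride.
        pad_h, pad_w = pad_h % stride, pad_w % stride
    top, left = pad_h // 2, pad_w // 2
    return scale, (new_h, new_w), (top, pad_h - top, left, pad_w - left)
```

For a 1280 x 720 video frame this gives a scale of 0.5 and a 640 x 360 resized image; auto padding adds 12 rows above and below (384 rows in total), while full padding would add 140 on each side.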

Pytorch to ONNX (in-line conversion and inference with OpenVINO™ Torch-ORT)

In-line conversion to ONNX: https://github.com/pytorch/ort/blob/main/torch_ort_inference/docs/usage.md#additional-apis

Inference:

python detect_ort.py --weights /content/yolov7/runs/best.pt --conf 0.25 --img-size 640 --source UWH-6/test/images/DJI_0021_mp4-32_jpg.rf.0d9b746d8896d042b55a14c8303b4f36.jpg

Pytorch to CoreML (and inference on MacOS/iOS)

Pytorch to ONNX with NMS (and inference)

python export.py --weights yolov7-tiny.pt --grid --end2end --simplify \
        --topk-all 100 --iou-thres 0.65 --conf-thres 0.35 --img-size 640 640 --max-wh 640
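The `--end2end` export puts post-processing into the graph, driven by the three flags above: detections below `--conf-thres` are dropped, overlapping boxes above `--iou-thres` are suppressed, and at most `--topk-all` are kept. The plain-Python sketch below illustrates that greedy, per-class non-maximum suppression with the same defaults. It is a simplified stand-in for reading purposes, not the exported operator, and boxes are assumed to be `(x1, y1, x2, y2)`.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(detections, conf_thres=0.35, iou_thres=0.65, topk=100):
    """Greedy per-class NMS over (box, score, class_id) tuples.

    Defaults mirror the export flags: --conf-thres 0.35,
    --iou-thres 0.65 and --topk-all 100.
    """
    # Drop low-confidence detections, then visit the rest best-first.
    candidates = sorted(
        (d for d in detections if d[1] >= conf_thres),
        key=lambda d: d[1], reverse=True)
    kept = []
    for box, score, cls in candidates:
        # Keep the box unless a kept box of the same class overlaps it too much.
        if all(k[2] != cls or iou(k[0], box) <= iou_thres for k in kept):
            kept.append((box, score, cls))
            if len(kept) == topk:
                break
    return kept
```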

Pytorch to TensorRT with NMS (and inference)

wget https://github.com/WongKinYiu/yolov7/releases/download/v0.1/yolov7-tiny.pt
python export.py --weights ./yolov7-tiny.pt --grid --end2end --simplify --topk-all 100 --iou-thres 0.65 --conf-thres 0.35 --img-size 640 640
git clone https://github.com/Linaom1214/tensorrt-python.git
python ./tensorrt-python/export.py -o yolov7-tiny.onnx -e yolov7-tiny-nms.trt -p fp16
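An engine with NMS built in typically returns fixed-size, padded outputs. TensorRT's EfficientNMS plugin, for example, emits `num_dets`, `det_boxes`, `det_scores` and `det_classes`, and only the first `num_dets` entries per image are valid. Assuming the engine built above follows that layout (check the output names of your own engine), post-processing reduces to slicing, as in this sketch over plain Python lists; `unpad_detections` is a hypothetical helper.

```python
def unpad_detections(num_dets, boxes, scores, classes):
    """Keep only the valid rows of padded NMS outputs, per image.

    num_dets: [batch][1] counts; boxes: [batch][topk][4];
    scores, classes: [batch][topk]. Returns one list of
    (box, score, class_id) tuples per image.
    """
    results = []
    for n, b, s, c in zip(num_dets, boxes, scores, classes):
        count = int(n[0])
        # Rows past `count` are padding and carry no detections.
        results.append([(tuple(b[i]), s[i], int(c[i])) for i in range(count)])
    return results
```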

Pytorch to TensorRT another way


wget https://github.com/WongKinYiu/yolov7/releases/download/v0.1/yolov7-tiny.pt
python export.py --weights yolov7-tiny.pt --grid --include-nms
git clone https://github.com/Linaom1214/tensorrt-python.git
python ./tensorrt-python/export.py -o yolov7-tiny.onnx -e yolov7-tiny-nms.trt -p fp16

# Or use trtexec to convert ONNX to TensorRT engine
/usr/src/tensorrt/bin/trtexec --onnx=yolov7-tiny.onnx --saveEngine=yolov7-tiny-nms.trt --fp16

Tested with: Python 3.7.13, Pytorch 1.12.0+cu113

Pose estimation

See keypoint.ipynb.

Instance segmentation

See instance.ipynb.

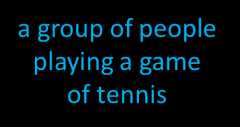

Citation

@article{wang2022yolov7,
  title={{YOLOv7}: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors},
  author={Wang, Chien-Yao and Bochkovskiy, Alexey and Liao, Hong-Yuan Mark},
  journal={arXiv preprint arXiv:2207.02696},
  year={2022}
}

YOLOv7-semantic & YOLOv7-panoptic & YOLOv7-caption

Acknowledgements

- https://github.com/AlexeyAB/darknet

- https://github.com/WongKinYiu/yolor

- https://github.com/WongKinYiu/PyTorch_YOLOv4

- https://github.com/WongKinYiu/ScaledYOLOv4

- https://github.com/Megvii-BaseDetection/YOLOX

- https://github.com/ultralytics/yolov3

- https://github.com/ultralytics/yolov5

- https://github.com/DingXiaoH/RepVGG

- https://github.com/JUGGHM/OREPA_CVPR2022

- https://github.com/TexasInstruments/edgeai-yolov5/tree/yolo-pose
