Uh oh!

There was an error while loading.Please reload this page.

- Notifications

You must be signed in to change notification settings - Fork11.9k

ENH: add neon intrinsics to fft radix2&4#17231

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to ourterms of service andprivacy statement. We’ll occasionally send you account related emails.

Already on GitHub?Sign in to your account

Uh oh!

There was an error while loading.Please reload this page.

Conversation

mattip commentedSep 3, 2020

It would be nice if this could be done with the universal intrinsics rather than redefining new macros. |

DumbMice commentedSep 4, 2020 • edited

Loading Uh oh!

There was an error while loading.Please reload this page.

edited

Uh oh!

There was an error while loading.Please reload this page.

I could try to use universal intrinsic in the way I learned to do in#16969 and compare their benchmark results. However, there are intrinsics, specifically |

mattip commentedSep 4, 2020

@seiko2plus do we have a plan for intrinsics that are CPU-specific? There are two alternatives (you probably already thought about this):

|

Qiyu8 commentedSep 4, 2020

The best way is provide a normal intrinsics if we met CPU-specific intrinsics , As we can see in#17049 , |

DumbMice commentedSep 4, 2020

Sorry, I forgot BTW@mattip, these new macros using neon intrinsics with prefix |

seiko2plus commentedSep 4, 2020 • edited

Loading Uh oh!

There was an error while loading.Please reload this page.

edited

Uh oh!

There was an error while loading.Please reload this page.

@mattip, sure we have spacial data types npyv_{type}x2 and npyv_{type}x3 to be used with de&interleaving intrinsics and they're pretty compatible with neon intrinsics. we may need later to add data type npyv_{type}x4 to handle four channels.

Here are tips to implement de&interleaving intrinsics for two 64bit channels:

#ifndefNPY_SIMD#error "Not a standalone header"#endif#ifndef_NPY_SIMD_NEON_INTERLEAVE_H#define_NPY_SIMD_NEON_INTERLEAVE_H#ifNPY_SIMD_F64NPY_FINLINEnpyv_f64x2npyv_load_deinterleave_f64x2(constdouble*ptr){returnvld2q_f64(ptr); }NPY_FINLINEvoidnpyv_store_interleave_f64x2(double*ptr,npyv_f64x2a){vst2q_f64(ptr,a); }#endif// NPY_SIMD_F64#endif

#ifndefNPY_SIMD#error "Not a standalone header"#endif#include"memory.h"// load*#include"reorder.h"// npyv_zip*#ifndef_NPY_SIMD_{EXTENSION_NAME}_INTERLEAVE_H#define_NPY_SIMD_{EXTENSION_NAME}_INTERLEAVE_HNPY_FINLINEnpyv_f64x2npyv_load_deinterleave_f64x2(constdouble*ptr){npyv_f64a=npyv_load_f64(ptr);npyv_f64b=npyv_load_f64(ptr+npyv_nlanes_f64);returnnpyv_zip_f64(a,b);}NPY_FINLINEvoidnpyv_store_interleave_f64x2(double*ptr,npyv_f64x2a){npyv_f64x2zip=npyv_zip_f64(a.val[0],a.val[1]);npyv_store_f64(ptr,zip.val[0]);npyv_store_f64(ptr+npyv_nlanes_f64,zip.val[1]);}#endif// _NPY_SIMD_{EXTENSION_NAME}_INTERLEAVE_H

NPY_FINLINEnpyv_f64x2npyv_load_deinterleave_f64x2(constdouble*ptr){npyv_f64ab0=npyv_load_f64(ptr);npyv_f64ab1=npyv_load_f64(ptr+npyv_nlanes_f64);npyv_f64x2ab,swap_halves=npyv_combine_f64(ab0,ab1);ab.val[0]=_mm256_unpacklo_pd(swap_halves.val[0],swap_halves.val[1]);ab.val[1]=_mm256_unpackhi_pd(swap_halves.val[0],swap_halves.val[1]);returnab;}

NPY_FINLINEnpyv_f64x2npyv_load_deinterleave_f64x2(constdouble*ptr){npyv_f64ab0=npyv_load_f64(ptr);npyv_f64ab1=npyv_load_f64(ptr+npyv_nlanes_f64);npyv_f64x2ab;ab.val[0]=_mm512_permutex2var_pd(ab0,_mm512_setr_epi64(0,2,4,6,8,10,12,14),ab1);ab.val[1]=_mm512_permutex2var_pd(ab0,_mm512_setr_epi64(1,3,5,7,9,11,13,15),ab1);returnab;} EDIT: // forget adding .val :), thanks@DumbMice!. |

numpy/fft/_pocketfft.c Outdated

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others.Learn more.

| #ifdefNPY_HAVE_NEON | |

| #include"lowlevel_strided_loops.h" | |

| #include"arm_neon.h" | |

| /* align var to alignment */ | |

| #defineLOOP_BLOCK_ALIGN_VAR(var,type,alignment)\ | |

| npy_intpi,peel=npy_aligned_block_offset(var,sizeof(type),\ | |

| alignment,n);\ | |

| for(i=0;i<peel;i++) | |

| #defineLOOP_BLOCKED(type,vsize)\ | |

| for(;i<npy_blocked_end(peel,sizeof(type),vsize,n);\ | |

| i+= (vsize /sizeof(type))) | |

| #defineLOOP_BLOCKED_END\ | |

| for (;i<n;i++) | |

| #endif |

No need to handle the offset of the non-aligned part, for the following reasons

- You're dealing with 64bit load, it will not gonna make a difference even with old machines and it will not worst to add new npyv intrinsics to handle alignment memory access for interleaving/deinterleaving.

- NEON/ASIMD doesn't have intrinsics to handle the aligned load.

- Except for stream write(non-temporal) in the case of x86, modern machines and compilers can effectively handle non-aligned

memory access even with the lowest memory alignments(1/2/4).

DumbMice commentedSep 6, 2020 • edited

Loading Uh oh!

There was an error while loading.Please reload this page.

edited

Uh oh!

There was an error while loading.Please reload this page.

@seiko2plus Thank you for your tips! I added de&interleaving intrinsics to universal intrinsics and used them in pocketfft. Only need to change There are some subtleties in adding multiply-subtract intrinsics. For neon intrinsics, |

seiko2plus left a comment•

seiko2plus left a comment• edited

Loading Uh oh!

There was an error while loading.Please reload this page.

edited

Uh oh!

There was an error while loading.Please reload this page.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others.Learn more.

add new intrinsic for negating multiply add

seiko2plus commentedSep 6, 2020 • edited

Loading Uh oh!

There was an error while loading.Please reload this page.

edited

Uh oh!

There was an error while loading.Please reload this page.

My bad I wrote that comment in a hurry, thanks for correcting me.

|

Uh oh!

There was an error while loading.Please reload this page.

seiko2plus commentedSep 6, 2020 • edited

Loading Uh oh!

There was an error while loading.Please reload this page.

edited

Uh oh!

There was an error while loading.Please reload this page.

@DumbMice, please wait let me review my suggestion |

seiko2plus commentedSep 6, 2020

@DumbMice, I'm going to release a pull request implementing fma3 intrinsics to remove any confusion just give me several hours. |

seiko2plus commentedSep 6, 2020 • edited

Loading Uh oh!

There was an error while loading.Please reload this page.

edited

Uh oh!

There was an error while loading.Please reload this page.

DumbMice commentedSep 8, 2020 • edited

Loading Uh oh!

There was an error while loading.Please reload this page.

edited

Uh oh!

There was an error while loading.Please reload this page.

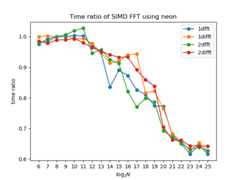

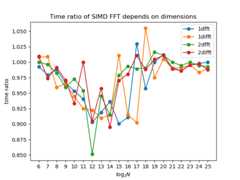

@seiko2plus Thanks so much for your help. All macros expanded as raw universal intrinsics as required. Benchmark ComparisonSimilar performances are achieved. I might update with benchmarks on x86 systems later. |

DumbMice commentedSep 9, 2020

Benchmark on x86Compared to which on arm machine, performances have insignificant changes on x86 machine. Not sure if this is supposed to happen. Builds and benchmark processes on both systems are:

System Info

Size Change

|

mattip commentedSep 9, 2020

If I understand the graphs correctly, SIMD on NEON only begins to really pay off for n>1_000_000 (log2n>20) or so? Performance on x86 before this PR used intrinsics, right?, so I would hope there is no change. |

DumbMice commentedSep 9, 2020 • edited

Loading Uh oh!

There was an error while loading.Please reload this page.

edited

Uh oh!

There was an error while loading.Please reload this page.

Yes. I suppose this is expected. The whole array will be iterated log(n) times, but at each time the array will be dissected into smaller and smaller subarrays, where SIMD intrinsics take place. I suppose this is the nature of fft's nlog(n) complexity.

I believe pocketfft is implemented with pure C. No intrinsics are used before this commit. I suppose an improvement is expected on x86? |

mattip commentedSep 9, 2020

Perhaps SIMD is competing with the compiler. |

charris commentedSep 9, 2020

Cache usage was historically the dominate factor in fft timings, not sure how that holds up on modern architectures. NumPy fft is also float64, float32 might benefit more. |

seiko2plus commentedSep 9, 2020 • edited

Loading Uh oh!

There was an error while loading.Please reload this page.

edited

Uh oh!

There was an error while loading.Please reload this page.

Please could you provide 'bench_fft.py?

The compiler can easily auto-vectorize these kinda loops, plus you're "not" using fused intrinsics since you're using NPYV under the domain of the default baseline features( Please could you re-run your benchmarks on x86 with |

seiko2plus commentedSep 9, 2020 • edited

Loading Uh oh!

There was an error while loading.Please reload this page.

edited

Uh oh!

There was an error while loading.Please reload this page.

especially complex float32 since the compiler will struggling in auto-vectorize interleave/deinterleave single-precision. |

Uh oh!

There was an error while loading.Please reload this page.

DumbMice commentedSep 10, 2020

Add bench-fft.py as required.

I used the following command for benchmark: Maybe your are right? Compilers are smart enough to auto-vectorize operations on f64 arrays using x86 intrinsics? |

Qiyu8 commentedSep 10, 2020

I think that the correct commands is:

|

DumbMice commentedSep 10, 2020

@Qiyu8 Thanks for your advice, but benchmark still shows no improvements on x86. In build log, both EXT and CLIB OPTMIZATION have Not sure how to check By comparing _pocketfft.o.d in build dir, I can confirm that header files in simd/avx folder are included when testing on fft-neon branch, and they are not included on master branch. So I think this might prove the compiler's efficiency in optimizing x86 codes. |

seiko2plus commentedSep 10, 2020

Thank you for your report, this issue should be fixed by#17284. To test python runtests.py --cpu-baseline="avx2 fma3" -t numpy/core/tests/test_fft.pyTo benchmark python3 runtests.py --cpu-baseline="avx2 fma3" --bench-compare master bench_fft |

DumbMice commentedSep 29, 2020 • edited

Loading Uh oh!

There was an error while loading.Please reload this page.

edited

Uh oh!

There was an error while loading.Please reload this page.

To help see the result faster, I've applied changes in#17284 in order to properly benchmark on my x86 machines locally. A reduction of time ~ 10% is shown when log2(N) is between 10 and 14. No improvement is seen elsewhere. Note: Benchmarking bench_fft.py could be memory-consumming, and my laptop has 2x8G memory. |

mattip commentedDec 16, 2020

We discussed this in the latest triage meeting and the feeling was to close this in favor of SciPy's implementation. NumPy as FFT as a "textbook implementation", if people want a faster FFT they should use SciPy. If you feel strongly that this position is wrong, let us know. One interesting thing to try would be to use the NumPy 1.20 Universal SIMD framework in Scipy, maybe it would provide a good reason to break the USIMD code out into a separate library. |

pocketfft

NumPy's fft uses pocketfft, and I added neon intrinsics to radix 2 and radix 4 components (cmplx input) for better performance on arm machine. Although scipy's fft is often recommended as the more developed module to be used, still there might be values in improving numpy's own fft.

Benchmarks

Simple benchmards were done on 1darray of size N and 2darray (N,4) filled with 1.+1j. Compared to master branch, time ratios from this commit are given below, and optimization is promising for large arrays.

Comments

If you think this is worth doing it, I could proceed to othere compoents (radix3,5...) as well.