
This is a PyTorch reimplementation of Influence Functions from the ICML2017 best paper: Understanding Black-box Predictions via Influence Functions by Pang Wei Koh and Percy Liang.


nimarb/pytorch_influence_functions


The reference implementation can be found here: link.

Influence functions help you debug the results of your deep learning model in terms of the dataset. When testing for a single test image, you can calculate which training images had the largest influence on the classification outcome. Thus, you can easily find mislabeled images in your dataset, or compress your dataset slightly to the images most influential for your individual test dataset. That can increase prediction accuracy, reduce training time, and reduce memory requirements. For more details please see the original paper linked here.

Influence functions can of course also be used for data other than images, as long as you have a supervised learning problem.
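For orientation, the quantity being approximated can be written as follows. This restates the formulation from the original paper. Mapping its two factors onto the names `s_test` and `grad_z` used later in this README is an assumption based on those names, not something verified against the code.

$$
\mathcal{I}_{\text{up,loss}}(z, z_{\text{test}}) = -\nabla_\theta L(z_{\text{test}}, \hat\theta)^\top \, H_{\hat\theta}^{-1} \, \nabla_\theta L(z, \hat\theta),
\qquad
H_{\hat\theta} = \frac{1}{n} \sum_{i=1}^{n} \nabla_\theta^2 L(z_i, \hat\theta)
$$

Here $z$ is a training sample, $z_{\text{test}}$ a test sample, $\hat\theta$ the trained parameters, and $n$ the training dataset size. Under the assumption above, `s_test` corresponds to $H_{\hat\theta}^{-1} \nabla_\theta L(z_{\text{test}}, \hat\theta)$ and `grad_z` to $\nabla_\theta L(z, \hat\theta)$.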

- Python 3.6 or later

- PyTorch 1.0 or later

- NumPy 1.12 or later

To run the tests, further requirements are:

- torchvision 0.3 or later

- PIL

You can either install this package directly through pip:

```
pip3 install --user pytorch-influence-functions
```

Or you can clone the repo and

- import it as a package after it's in your `PYTHONPATH`.

- install it using `python setup.py install`

- install it using `python setup.py develop` (if you want to edit the code)

Calculating the influence of the individual samples of your training dataset on the final predictions is straightforward.

The most barebones way of getting the code to run is like this:

```python
import pytorch_influence_functions as ptif

# Supplied by the user:
model = get_my_model()
trainloader, testloader = get_my_dataloaders()

ptif.init_logging()
config = ptif.get_default_config()

influences, harmful, helpful = ptif.calc_img_wise(config, model, trainloader, testloader)

# do something with influences/harmful/helpful
```

Here, `config` contains default values for the influence function calculation which can of course be changed. For details and examples, look here.

The precision of the output can be adjusted by using more iterations and/or more recursions when approximating the influence.
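To illustrate why more recursions improve precision, here is a minimal pure-Python sketch of one common form of the recursive inverse-Hessian-vector-product estimate. It assumes the update rule `h <- v + (1 - damp) * h - (H h) / scale` and a small explicit Hessian; the function name `inverse_hvp` and the toy values are hypothetical and not taken from this package, which works with stochastic mini-batch estimates instead.

```python
def inverse_hvp(hessian, v, recursion_depth=500, damp=0.01, scale=25.0):
    """Approximate (H + damp * scale * I)^-1 v with a fixed-point recursion."""
    n = len(v)
    h = list(v)  # start the estimate at v
    for _ in range(recursion_depth):
        # Hessian-vector product with the current estimate
        hv = [sum(hessian[i][j] * h[j] for j in range(n)) for i in range(n)]
        h = [v[i] + (1.0 - damp) * h[i] - hv[i] / scale for i in range(n)]
    # The fixed point is scale * (H + damp * scale * I)^-1 v, so rescale at the end
    return [x / scale for x in h]


# Toy 2x2 positive-definite "Hessian"; the values are made up for illustration
H = [[2.0, 0.0], [0.0, 4.0]]
v = [1.0, 1.0]

# Exact solution of (H + damp * scale * I) x = v, with damp * scale = 0.25
exact = [1.0 / (2.0 + 0.25), 1.0 / (4.0 + 0.25)]

shallow = inverse_hvp(H, v, recursion_depth=10)
deep = inverse_hvp(H, v, recursion_depth=500)

error_shallow = max(abs(a - b) for a, b in zip(shallow, exact))
error_deep = max(abs(a - b) for a, b in zip(deep, exact))
print(error_shallow, error_deep)
```

In this toy setting the error shrinks geometrically with the number of recursions. With a real model the Hessian-vector products are noisy, so averaging several independent runs is an additional way to reduce the error.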

`config` is a dict which contains the parameters used to calculate the influences. You can get the default `config` by calling `ptif.get_default_config()`.

I recommend changing the following parameters to your liking. The list below is divided into parameters affecting the calculation and parameters affecting everything else.

- `save_pth`: Default `None`, folder in which to save the `s_test` and `grad_z` files if saving is desired

- `outdir`: folder name to which the result json files are written

- `log_filename`: Default `None`, if set the output will be logged to this file in addition to `stdout`.

- `seed`: Default = 42, random seed for numpy, random, pytorch

- `gpu`: Default = -1, `-1` for calculation on the CPU, otherwise the GPU id

- `calc_method`: Default = img_wise, choose between the two calculation methods outlined here.

- `DataLoader` object for the desired dataset: `train_loader` and `test_loader`

- `test_sample_start_per_class`: Default = False, per class index from where to start to calculate the influence function. If `False`, it will start from `0`. This is useful if you want to calculate the influence function of a whole test dataset and manually split the calculation up over multiple threads/machines/gpus. Then, you can start at various points in the dataset.

- `test_sample_num`: Default = False, number of samples per class starting from the `test_sample_start_per_class` to calculate the influence function for. E.g. if your dataset has 10 classes and you set this value to `1`, then the influence functions will be calculated for `10 * 1` test samples, one per class. If `False`, calculates the influence for all images.
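The per-class selection described by these two parameters can be pictured with the following sketch. `select_test_indices` is a hypothetical helper written for this illustration; it is not the package's code, and the order in which the package visits samples may differ.

```python
from collections import defaultdict


def select_test_indices(labels, start_per_class=False, num_per_class=False):
    """Per class, pick `num_per_class` test indices starting at `start_per_class`."""
    start = 0 if start_per_class is False else start_per_class
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    selected = []
    for label in sorted(by_class):
        # False means "no limit": take everything from `start` onwards
        stop = None if num_per_class is False else start + num_per_class
        selected.extend(by_class[label][start:stop])
    return selected


labels = [0, 1, 0, 1, 0, 1]  # made-up test labels with two classes

# Splitting the work manually: each worker handles one sample per class
first_worker = select_test_indices(labels, start_per_class=0, num_per_class=1)
second_worker = select_test_indices(labels, start_per_class=1, num_per_class=1)
everything = select_test_indices(labels)
```

With these made-up labels the first worker handles indices 0 and 1, the second handles 2 and 3, and leaving both parameters at `False` selects every test sample.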

- `recursion_depth`: Default = 5000, recursion depth for the `s_test` calculation. Greater recursion depth improves precision.

- `r`: Default = 1, number of `s_test` calculations to take the average of. Averaging over a greater `r` improves precision.

- Combined, the original paper suggests that `recursion_depth * r` should equal the training dataset size. Thus `r = 10` and `recursion_depth = 5000` are valid for CIFAR-10 with a training dataset size of 50000 items; note that this means raising `r` from the default of 1 listed above.

- `damp`: Default = 0.01, damping factor during `s_test` calculation.

- `scale`: Default = 25, scaling factor during `s_test` calculation.
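As a small worked example of the `recursion_depth * r` rule of thumb, the sketch below derives `recursion_depth` from a training set size and a chosen `r`. The helper `recursion_depth_for` is hypothetical, and a plain dict stands in for the real config, using only the two key names listed above.

```python
def recursion_depth_for(train_size, r):
    """Pick recursion_depth so that recursion_depth * r is at least train_size."""
    # Ceiling division, so the product never falls short of train_size
    return -(-train_size // r)


# Plain dict standing in for the config returned by the package
config = {"recursion_depth": 5000, "r": 1}

# CIFAR-10 has 50000 training items; average over 10 s_test estimates
config["r"] = 10
config["recursion_depth"] = recursion_depth_for(50000, config["r"])
print(config)
```

For other datasets, only the training set size passed to the helper changes.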

This package offers two modes of computation to calculate the influence functions. The first mode is called `calc_img_wise`, during which the two values `s_test` and `grad_z` for each training image are computed on the fly when calculating the influence of that single image. The algorithm then moves on to the next image.

The second mode is called `calc_all_grad_then_test` and calculates the `grad_z` values for all images first and saves them to disk. Then, it calculates all `s_test` values and saves those to disk. Subsequently, the algorithm calculates the influence functions for all images by reading both values from disk and calculating the influence based on them. This can take significant amounts of disk space (100s of GBs) but with a fast SSD can speed up the calculation significantly, as no duplicate calculations take place. This is the case because `grad_z` has to be calculated twice, once for the first approximation in `s_test` and once to combine with the `s_test` vector to calculate the influence.

Most importantly, however, `s_test` is only dependent on the test sample(s). While one `grad_z` is used to estimate the initial value of the Hessian during the `s_test` calculation, this is insignificant. `grad_z`, on the other hand, is only dependent on the training sample. Thus, in the `calc_img_wise` mode, we throw away all `grad_z` calculations even if we could reuse them for all subsequent `s_test` calculations, which could potentially number in the tens of thousands. However, as stated above, keeping the `grad_z`s only makes sense if they can be loaded from disk or kept in RAM faster than they can be calculated on the fly.

TL;DR: The recommended way is using `calc_img_wise` unless you have a crazy fast SSD, lots of free storage space, and want to calculate the influences on the prediction outcomes of an entire dataset or even >1000 test samples.
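The trade-off between the two modes can be sketched with toy vectors. The code below is not the package's implementation: the function names are hypothetical, the gradient is a stand-in, and combining the two vectors as `-dot(s_test, grad_z) / n` is an assumption about the sign and normalisation. It only counts how often a `grad_z` has to be computed in each mode.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def influences_on_the_fly(s_tests, train_samples, grad_fn):
    """Recompute grad_z for every (test sample, training sample) pair."""
    n = len(train_samples)
    return [[-dot(s, grad_fn(z)) / n for z in train_samples] for s in s_tests]


def influences_cached(s_tests, train_samples, grad_fn):
    """Compute every grad_z once and reuse it for all test samples."""
    n = len(train_samples)
    grads = [grad_fn(z) for z in train_samples]  # stands in for saving to disk
    return [[-dot(s, g) / n for g in grads] for s in s_tests]


calls = {"count": 0}


def toy_grad(z):
    # Stand-in for the loss gradient at training sample z; counts its calls
    calls["count"] += 1
    return [z, 2.0 * z]


train = [1.0, 2.0, 3.0]  # made-up training samples
s_tests = [[1.0, 0.0], [0.0, 1.0]]  # one made-up s_test vector per test sample

calls["count"] = 0
on_the_fly = influences_on_the_fly(s_tests, train, toy_grad)
calls_on_the_fly = calls["count"]

calls["count"] = 0
cached = influences_cached(s_tests, train, toy_grad)
calls_cached = calls["count"]

print(calls_on_the_fly, calls_cached)
```

Both variants return the same values. The cached one needs one gradient per training sample instead of one per pair, which only pays off when storing and reloading the gradients is cheaper than recomputing them.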

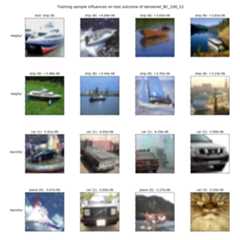

Visualised, the output can look like this:

The test image on the top left is the test image for which the influences were calculated. To get the correct test outcome of `ship`, the Helpful images from the training dataset were the most helpful, whereas the Harmful images were the most harmful. Here, we used CIFAR-10 as the dataset. The model was ResNet-110. The numbers above the images show the actual influence value which was calculated.

The next figure shows the same but for a different model, DenseNet-100/12. Thus, we can see that different models learn more from different images.

Influences is a dict/json containing the influences calculated for all training data samples for each test data sample. The dict structure looks similar to this:

```json
{
    "0": {
        "label": 3,
        "num_in_dataset": 0,
        "time_calc_influence_s": 129.6417362689972,
        "influence": [-0.00016939856868702918, 4.3426321099104825e-06, -9.501376189291477e-05, ... ],
        "harmful": [31527, 5110, 47217, ... ],
        "helpful": [5287, 22736, 3598, ... ]
    },
    "1": {
        "label": 8,
        "num_in_dataset": 1,
        "time_calc_influence_s": 121.8709237575531,
        "influence": [3.993639438704122e-06, 3.454859779594699e-06, -3.5805194329441292e-06, ...
```

Harmful is a list of numbers, which are the IDs of the training data samples ordered by harmfulness. If the influence function is calculated for multiple test images, the harmfulness is ordered by average harmfulness to the prediction outcome of the processed test samples.

Helpful is a list of numbers, which are the IDs of the training data samples ordered by helpfulness. If the influence function is calculated for multiple test images, the helpfulness is ordered by average helpfulness to the prediction outcome of the processed test samples.
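A result with this structure could be inspected along the following lines. The miniature JSON is invented for the illustration and is not meant to imply a sign convention. The helper `top_k` is hypothetical and assumes that position `i` of the `influence` list belongs to the training sample with ID `i`.

```python
import json

# Made-up miniature result using the same keys as the structure shown above
raw = """
{
    "0": {
        "label": 3,
        "num_in_dataset": 0,
        "time_calc_influence_s": 1.5,
        "influence": [-0.2, 0.05, 0.3, -0.01],
        "harmful": [0, 3, 1, 2],
        "helpful": [2, 1, 3, 0]
    }
}
"""


def top_k(entry, key, k):
    """Return the first k training IDs stored under `key` with their influence values."""
    # Assumption: influence[i] is the value for the training sample with ID i
    return [(i, entry["influence"][i]) for i in entry[key][:k]]


results = json.loads(raw)
for test_id, entry in results.items():
    print(test_id, "most harmful:", top_k(entry, "harmful", 2))
    print(test_id, "most helpful:", top_k(entry, "helpful", 2))
```

The returned IDs can then be used to look up the corresponding training samples, for example to check them for wrong labels.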

- make variable names etc. dataset independent

- remove all dataset name checks from the code

- ability to disable shell output eg for `display_progress` from the config

- add proper result plotting support

- add a dataloader for training on the most influential samples only

- add some visualisation of the outcome

- add recreation of some graphs of the original paper to verify implementation

- allow custom save name for the influence json

- make the config a class, so that it can readjust itself, for example when the `r` and `recursion_depth` values can be lowered without big impact

- check killing data augmentation!?

- in `calc_influence_function.py` in `load_s_test`, `load_grad_z` don't hard code the filenames

- integrate mypy type annotations (static type checking)

- Use multiprocessing to calc the influence

- use `r"doc"` docstrings like pytorch
