hwalsuklee/numpy-neuralnet-exercise


All code and slides are based on the online book neuralnetworkanddeeplearning.com.

example1.py through example8.py are implemented using only numpy and share the same architecture: a simple multilayer perceptron (MLP) with one hidden layer.
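
As a rough illustration of that architecture, here is a minimal numpy-only sketch of a forward pass through an MLP with one hidden layer. It is not taken from the example scripts: the layer sizes (784 inputs and 10 outputs, as for MNIST), the sigmoid activation, and the names `init_params` and `forward` are assumptions made for this sketch.

```python
import numpy as np

def sigmoid(z):
    """Element-wise logistic function."""
    return 1.0 / (1.0 + np.exp(-z))

def init_params(n_in, n_hidden, n_out, seed=0):
    """Hypothetical helper: small random weights, zero biases."""
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.standard_normal((n_hidden, n_in)) / np.sqrt(n_in),
        "b1": np.zeros((n_hidden, 1)),
        "W2": rng.standard_normal((n_out, n_hidden)) / np.sqrt(n_hidden),
        "b2": np.zeros((n_out, 1)),
    }

def forward(params, x):
    """Forward pass; x has shape (n_in, batch_size)."""
    a1 = sigmoid(params["W1"] @ x + params["b1"])   # hidden activations
    a2 = sigmoid(params["W2"] @ a1 + params["b2"])  # output activations
    return a1, a2

# Assumed sizes: 784 input pixels, 30 hidden units, 10 classes.
params = init_params(784, 30, 10)
x = np.random.default_rng(1).random((784, 5))  # a batch of 5 fake inputs
hidden, output = forward(params, x)
print(hidden.shape, output.shape)
```

Each column of `x` is one input vector, so the whole batch goes through the network in two matrix products.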

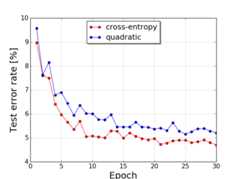

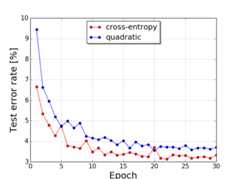

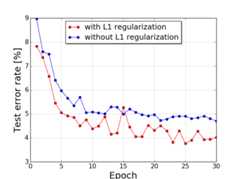

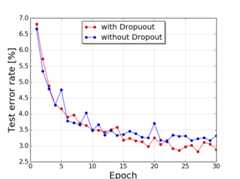

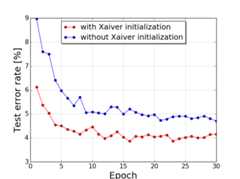

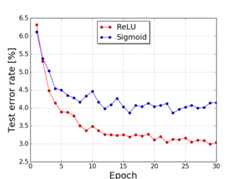

In the following results, n is the number of units in the hidden layer.
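
To give a sense of what changing n means for model size, the sketch below counts the trainable parameters of a one-hidden-layer MLP. The input size of 784 and output size of 10 are assumptions (typical for MNIST), and `mlp_param_count` is a hypothetical helper, not a function from this repository.

```python
def mlp_param_count(n_hidden, n_in=784, n_out=10):
    """Weights plus biases of an MLP with a single hidden layer."""
    weights = n_in * n_hidden + n_hidden * n_out
    biases = n_hidden + n_out
    return weights + biases

for n in (30, 100):
    print(n, mlp_param_count(n))
# 30 23860
# 100 79510
```

Under these assumptions, going from n=30 to n=100 roughly triples the number of parameters.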

(Seven pairs of result plots, each shown side by side for n=30 and n=100; images not available.)

There are also good resources for numpy-only implementations, and a launcher for each resource is provided.

| Resource | Launcher |
|---|---|
| neuralnetworkanddeeplearning.com | launcher_package1.py |
| Stanford CS231 lectures | launcher_package2.py |

The code in the tf_code_mnist folder is a CNN implementation.

ch6_summary.pdf contains the related slides.

| Command | Description | MNIST acc. |
|---|---|---|
| train --model v0 | model v0 : BASE LINE + Softmax Layer + Cross Entropy Loss | 97.80% |
| train --model v1 | model v1 : model v0 + 1 Convolutional/Pooling Layers | 98.78% |
| train --model v2 | model v2 : model v1 + 1 Convolutional/Pooling Layers | 99.06% |
| train --model v3 | model v3 : model v2 + ReLU | 99.23% |
| train --model v4 | model v4 : model v3 + Data Augmentation | 99.37% |
| train --model v5 | model v5 : model v4 + 1 Fully-Connected Layer | 99.43% |
| train --model v6 | model v6 : model v5 + Dropout | 99.60% |
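
Model v0 in the table combines a softmax layer with a cross-entropy loss. The following is a small numpy sketch of that combination, written only to illustrate the idea; it is not the code from tf_code_mnist, and the function names and toy inputs are assumptions.

```python
import numpy as np

def softmax(logits):
    """Row-wise softmax; subtracting the row max avoids overflow."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

def cross_entropy(probs, labels):
    """Mean negative log-probability of the correct class.

    probs: (batch, classes) array of probabilities
    labels: (batch,) array of integer class indices
    """
    n = probs.shape[0]
    # The small constant guards against log(0).
    return -np.log(probs[np.arange(n), labels] + 1e-12).mean()

# Toy batch: two samples, three classes.
logits = np.array([[2.0, 1.0, 0.1],
                   [0.0, 0.0, 0.0]])
labels = np.array([0, 2])

probs = softmax(logits)
loss = cross_entropy(probs, labels)
print(probs.sum(axis=1), loss)
```

The second sample has equal logits, so its predicted distribution is uniform and its contribution to the loss is log(3).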

About

Implementation of key concepts of neural networks via numpy
